Abstract

Computer-mediated communication via text messaging and Social Networking Services (SNS) has become increasingly popular, and many studies now use large amounts of such data to analyze user information and opinions and to recognize emotions. Current methods for recognizing emotions in dialogues rely on analyzing emotion keywords or vocabulary, and most dialogue data are labeled with a single emotion. Datasets labeled with multiple emotions have recently emerged, but most of them are in English. Accurate emotion recognition requires a method that recognizes the various emotions in a single sentence, and multi-emotion recognition research on Korean dialogue datasets is also needed. Because dialogues are exchanges between speakers, one speaker's feelings may be changed by the words of the other, and feelings, once generated, may last for a long time. Emotions are expressed not only through vocabulary but also indirectly through the course of the dialogue. To improve emotion recognition performance, it is therefore necessary to analyze the Emotional Association in Dialogues (EAD) so as to reflect the various factors that induce emotions. In this paper, we propose a more accurate emotion recognition method that overcomes the limitations of single-emotion recognition. We implement Intrinsic Emotion Recognition (IER) to understand the meaning of dialogue and recognize complex emotions. In addition, dialogues are classified according to their characteristics, and the correlations between IER results are analyzed to derive and apply EAD. To verify the usefulness of the proposed technique, IER with EAD is tested and evaluated. In this evaluation, the proposed method achieved the best Micro-F1, at 74.8%. Applying EAD to IER, as proposed in this paper, can improve the accuracy and performance of emotion recognition in dialogues.

1. Introduction

Due to the COVID-19 pandemic, more and more people communicate through Social Networking Services (SNS), such as Twitter and Facebook, rather than face-to-face. In online dialogues and communication, however, it is often difficult to assess the other party's emotions [1,2]. In modern analytics research, emotion recognition, which identifies emotions by analyzing social issues or personal opinions, is an important field [3,4]. Affective computing concerns understanding human emotions through computers, and many researchers are actively developing new methods for it.

In affective computing, many researchers have argued that classifying a sentence as multi-emotion is more effective than classifying it as single-emotion [5]. Multi-emotion recognition in dialogue data is an important task for understanding users' detailed emotions. One related study applied the Attentive RNN in Dialogues (DialogueRNN), a modified recurrent neural network (RNN), to the multi-modal, multi-label emotion, intensity, and sentiment dialogue data set (MEISD) and found that multi-emotion recognition was more accurate on text messages than on the other inputs [6]. Another study recognized multi-emotion sentences in Semantic Evaluation-2018 (SemEval-2018) using attention-based convolutional neural networks (AttnConvnet) [7]. Attempts to recognize multiple emotions in various ways continue, but most of these studies use English datasets. Korean dialogue datasets are mostly labeled with a single emotion, so research on Korean multi-emotion datasets and sentences is needed.

Unlike ordinary text, dialogue data consist of messages exchanged back and forth between speakers, much like an in-person conversation. In computer-mediated dialogues, the sender and the receiver sometimes interpret a message differently, which can lead to unexpected misunderstandings. Successful dialogues involve a transparent exchange of emotions. The emotions of the participants may change in response to the words of others, and an emotion, once aroused, may persist for a long time. Emotion recognition in dialogues should therefore reflect not only emotionally charged words but also the various factors that induce emotions through association.

Various studies have attempted to identify speakers' intentions in dialogue data. Speakers convey intentions through the words they choose and the way they construct their sentences. For example, the same sentence may express different emotions depending on the flow of the dialogue, and in a sarcastic sentence the emotion expressed may be the opposite of the meaning of the individual words. To understand the emotions of the current utterance, it is therefore necessary to analyze its relationship to the speakers' previous utterances and the emotions they contained. In other words, understanding the context of the dialogue and tracking how emotional states change is an important first step in analyzing intentions.

In this paper, we therefore propose a method that overcomes the limitations of single-emotion recognition in dialogue and recognizes emotions more accurately. After training Intrinsic Emotion Recognition (IER), which recognizes multiple emotions in dialogues, we apply IER to derive the Emotional Association in Dialogues (EAD). The paper is organized as follows. Section 2 reviews research on emotion recognition in dialogues and on multi-emotion recognition. Section 3 proposes IER considering EAD: to improve emotion recognition in dialogues, we classify dialogue data according to their characteristics, apply IER to the dialogues, derive EAD by analyzing the IER results, and describe IER with EAD applied. Section 4 presents the experimental results for IER considering EAD, and Section 5 gives our conclusions and directions for future research.

2. Related Work

2.1. Emotion Recognition in Dialogues

In text emotion recognition, emotions are classified for the current input data. More accurate recognition should also take into account past memories, the subject of an emotion, and personality or disposition [8]. In the past, emotion was mostly determined by extracting emotion keywords. However, because such methods discard much of the syntactic and semantic information contained in sentences, they limit a machine's ability to recognize complex human thoughts and emotions. Moreover, because keyword-based methods determine emotions only from keywords extracted from sentences, they are sensitive to the fact that linguistic expressions differ between individuals, and people can express inner emotional states in varied and intricate ways. With advances in natural language processing, recent work therefore analyzes emotions using the relationships between keywords and phrases, rather than judging emotions only from keywords that denote them [9].

Among studies on emotion recognition in dialogues, [10,11] pointed out that emotion classification can depend on context and addressed this problem using lexical features together with the dialogue context of each utterance. However, the more the context is expanded, the more lexical features accumulate, and unless a large amount of training data is available, a serious data sparseness problem emerges. The authors of [12] addressed this scarcity of data by using a word embedding model trained on a large raw corpus for emotion classification. Although this partly solved the problem, the dialogue history was not reflected structurally in the classification model, so feature engineering that included the dialogue context still had to be repeated several times.

The CNN model of [13] performed well in sentence and document classification using the simple operations of convolution and pooling. The LSTM model in [14] performed well not only in machine translation but also on various other problems, because it learns and generates features that take the order of sequential input into account. The model in [15] performed well in dialogue act classification by using a Recurrent Neural Network (RNN) and a CNN to classify short texts that occur in sequence, such as sentences in documents or utterances in dialogues. Various methods for recognizing emotions in dialogue have thus been proposed, but because they recognize only a single emotion per sentence, they struggle to recognize emotions accurately. To overcome this, it is necessary to identify the Emotional Association in Dialogues (EAD) and analyze its meaning so that detailed and diverse emotions can be recognized.

2.2. Multi-Emotion Recognition

Multi-emotion recognition classifies data in which an instance can take n values at once rather than a single value. These n values can be treated as a vector and are usually expressed as binary values. Unlike single-label classification, multi-label classification is essential for emotion analysis because an instance can belong to several of the n classes simultaneously [16]. Multi-emotion recognition arose from the absence of a clear criterion for classifying emotions: instead of recognizing a sentence as single-emotion, methods that recognize multiple emotions have been proposed [5]. The authors of [17] argued that the accuracy of single-emotion classification is difficult to improve, because a sentence classified as single-emotion is assigned to only one of several emotion classes even though it actually contains various emotions. To demonstrate this, they proposed a multi-emotion classification technique that predicts readers' emotions toward news articles. The study in [18] noted that most emotion classification studies focus on single-emotion classification and ignore the coexistence of other emotions, and proposed a deep learning-based approach to multi-emotion classification of Twitter data.
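To make the binary vector representation described above concrete, the following minimal sketch encodes a set of emotions as an n-dimensional multi-hot vector. The class list reuses the seven emotion classes of the data sets described in Section 4; their order and the function name `multi_hot` are illustrative assumptions, not taken from the paper.

```python
# The seven emotion classes of the data sets (order chosen for illustration).
EMOTIONS = ["fear", "surprise", "angry", "sad", "neutral", "happy", "disgust"]


def multi_hot(labels, classes=EMOTIONS):
    """Encode a set of emotion labels as a binary vector of length n = len(classes)."""
    unknown = set(labels) - set(classes)
    if unknown:
        raise ValueError(f"unknown emotion labels: {sorted(unknown)}")
    # 1 if the class is present in the label set, 0 otherwise.
    return [1 if c in labels else 0 for c in classes]


# Single-emotion label: exactly one position is set.
print(multi_hot({"neutral"}))            # [0, 0, 0, 0, 1, 0, 0]
# Multi-emotion label: one sentence can carry surprise and happiness at once.
print(multi_hot({"surprise", "happy"}))  # [0, 1, 0, 0, 0, 1, 0]
```

A single-emotion data set corresponds to the special case in which every vector has exactly one 1.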

Among studies that use datasets labeled with multiple emotions, Ref. [6] used the Multimodal Multi-Label Emotion, Intensity, and Sentiment Dialogue dataset (MEISD) with a modified Recurrent Neural Network (RNN), recognizing multi-emotion sentences with the Attentive RNN in Dialogues (DialogueRNN). It showed that multi-emotion recognition was more accurate for text than for audio and video. The authors of Ref. [7] used Semantic Evaluation-2018 (SemEval-2018) to recognize multi-emotion sentences with Attention-based Convolutional Neural Networks (AttnConvnet), which combine attention and convolution. Their model follows the two-step process humans use when analyzing sentences: first understanding the meaning of a sentence, then classifying its emotions. The study in Ref. [19] classified multi-emotion sentences by applying content-based methods (word and character n-grams) to tweets and showed that content-based word unigrams outperform the other methods. However, most of these studies used English data; a multi-classification study [18] using a Korean dialogue data set is also under way, but more diverse studies are needed. To improve emotion recognition performance, it is therefore necessary to understand the meaning of dialogue by identifying the characteristics of Korean dialogue and recognizing the multiple emotions contained in sentences. A method is also needed that recognizes multi-emotion sentences and the emotions intrinsic in a dialogue.

3. Intrinsic Emotion Recognition Considering the Emotional Association in Dialogues

In this chapter, we propose Intrinsic Emotion Recognition (IER) considering Emotional Association in Dialogues (EAD) to recognize emotions accurately and overcome the limitations of single-emotion recognition in dialogues. To improve emotion recognition in dialogues, dialogue data between two speakers are classified, according to the characteristics of the dialogue, into the Speaker Level (SL), the 1-turn Dialogue Level (1-turn DL), and the multi-turn DL, and IER is applied to each type to recognize the emotions of the dialogues. EAD is then derived through emotion analysis between sentences and dialogues, and IER with EAD applied is described.

3.1. Proposed Method

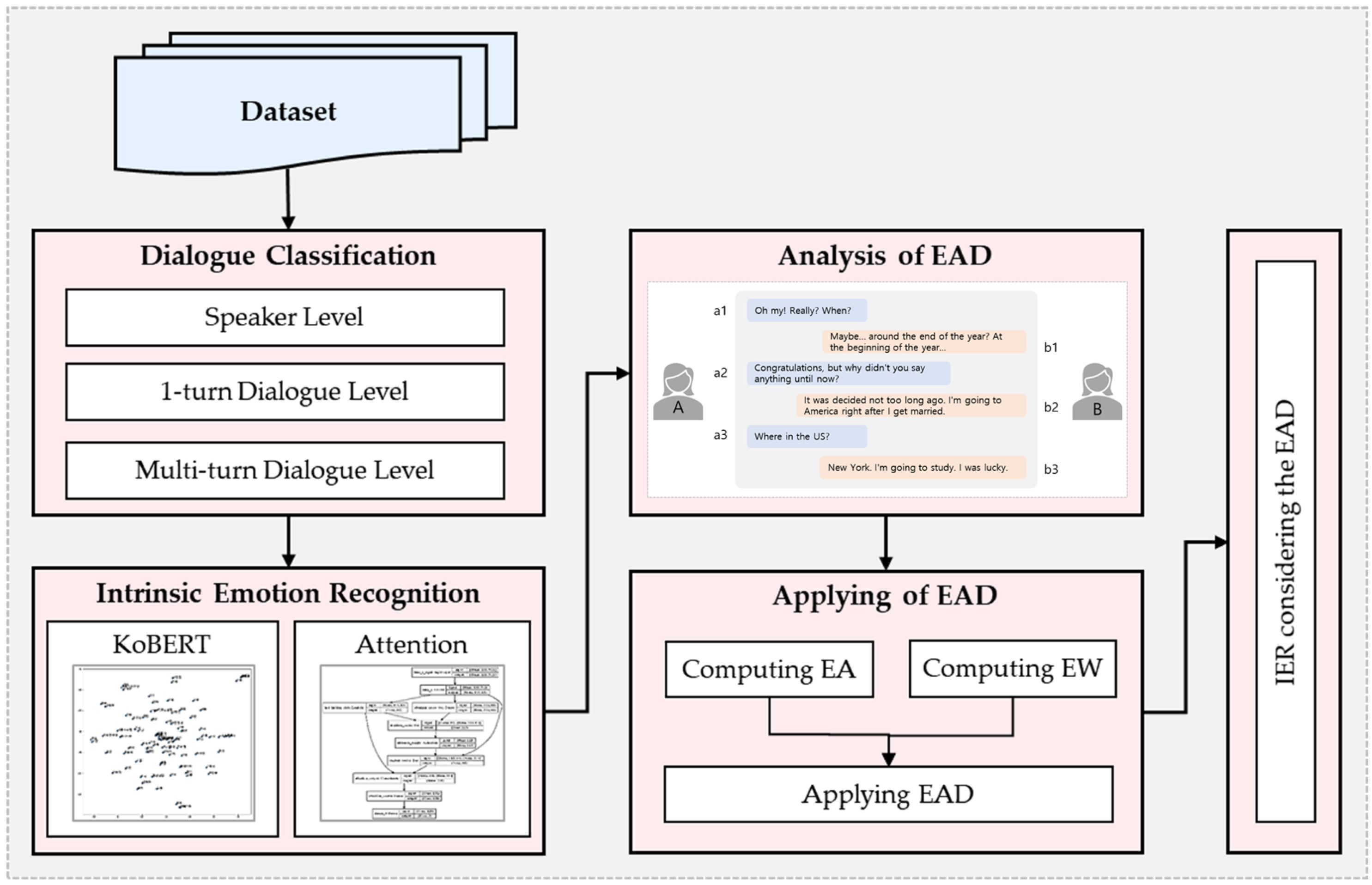

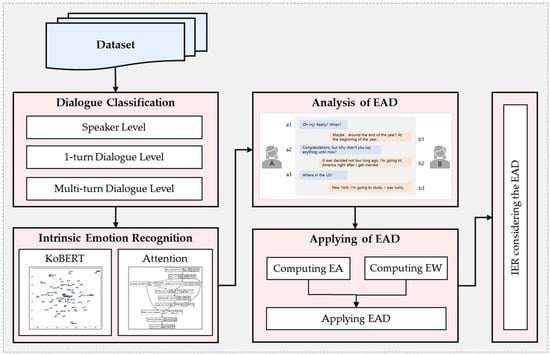

This section describes the IER process considering the proposed EAD. Figure 1 shows the configuration diagram of the proposed method, which consists of four stages: dialogue classification, IER, IER in Dialogue, and EAD application. In the dialogue classification stage, dialogue data between two speakers are classified into SL, 1-turn DL, and multi-turn DL according to the characteristics of the dialogue. In the IER stage, multi-emotion sentences are recognized. In the IER in Dialogue stage, IER is applied to the dialogues to recognize multi-emotion sentences. In the EAD application stage, EAD is derived by analyzing the emotional association between sentences and dialogues, and IER is performed with EAD applied.

Figure 1.

Configuration diagram of the proposed method.

3.2. Dialogue Classification

This section describes how dialogue data are classified into three types of dialogue text, SL, 1-turn DL, and multi-turn DL, according to their characteristics. Dialogue data between two speakers can be divided into three types: first, the utterances of a single speaker (Speaker Level: SL); second, a single exchange between the two speakers (1-turn Dialogue Level: 1-turn DL); and third, the continuous exchange between the two speakers (Multi-turn Dialogue Level: multi-turn DL). In this paper, the dialogue data set is classified into these three types and used for the study. Table 1 shows the classification process of the dialogue data set. For dialogue data between two speakers A and B, SL groups the utterances of speaker A and of speaker B separately, 1-turn DL is a single exchange between A and B, and multi-turn DL covers all exchanges between A and B.
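The three dialogue types can be sketched as follows, assuming a dialogue is a list of (speaker, utterance) tuples from speakers "A" and "B" and that units are formed by joining utterances with a space. The keys mirror the notation used later for the EA tables ([b1, B], [a1, AB1], [a1, AB]); the function name and the joining rule are hypothetical, not the authors' code.

```python
from collections import defaultdict


def classify_dialogue(turns):
    """Split a two-speaker dialogue into SL, 1-turn DL, and multi-turn DL units.

    turns: list of (speaker, utterance) tuples with speakers "A" and "B".
    Returned keys (hypothetical, modeled on the EA table notation):
      "a1", "b1", ...  -> individual sentences
      "A", "B"         -> Speaker Level (all utterances of one speaker)
      "AB1", "AB2", .. -> 1-turn DL (i-th A utterance with i-th B utterance)
      "AB"             -> multi-turn DL (the whole exchange)
    """
    by_speaker = defaultdict(list)
    units = {}
    for speaker, text in turns:
        by_speaker[speaker].append(text)
        # Sentence label, e.g. the 2nd utterance of speaker A -> "a2".
        units[f"{speaker.lower()}{len(by_speaker[speaker])}"] = text

    # Speaker Level: concatenate each speaker's utterances.
    for speaker, texts in by_speaker.items():
        units[speaker] = " ".join(texts)

    # 1-turn DL: pair the i-th utterance of A with the i-th utterance of B.
    for i, (a, b) in enumerate(zip(by_speaker["A"], by_speaker["B"]), start=1):
        units[f"AB{i}"] = f"{a} {b}"

    # Multi-turn DL: the entire dialogue in its original order.
    units["AB"] = " ".join(text for _, text in turns)
    return units


units = classify_dialogue([("A", "hi"), ("B", "hello"), ("A", "how are you"), ("B", "fine")])
print(units["AB1"])  # hi hello
```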

Table 1.

Classification process of the dialogue data set.

3.3. Intrinsic Emotion Recognition

In this section, we describe a method that recognizes intrinsic emotions through multi-emotion recognition in sentences, for more accurate emotion recognition. Owing to the nature of the data, there were many non-standard words and many sentences with missing word spacing. As preprocessing, neologisms and profanity were therefore converted into standard words using a dictionary that maps non-standard words to standard words. Morpheme analysis was then performed to decompose each text string into morpheme units. Because model accuracy varies with the morpheme analyzer used, an analyzer suited to the characteristics of the data should be chosen. Since the data used in this paper were poorly spaced, we used the Okt morpheme analyzer, which handles sentences without spacing well compared with other morpheme analyzers.
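The dictionary-based normalization step can be sketched as below. The three dictionary entries are hypothetical examples of common Korean non-standard forms, not the paper's dictionary. Matching is done on substrings, longest key first, because the data often lack spacing; the trade-off is that a short key can occasionally match inside an unrelated word.

```python
import re

# Hypothetical excerpt of a non-standard -> standard dictionary.
NORMALIZATION_DICT = {
    "넘": "너무",     # contraction of "very"
    "ㄱㅅ": "감사",   # abbreviation of "thanks"
    "ㅇㅋ": "오케이",  # abbreviation of "okay"
}


def normalize(text, mapping=NORMALIZATION_DICT):
    """Replace non-standard words in `text` with their standard forms.

    Longer keys are tried first so a short key never pre-empts a longer,
    overlapping one.
    """
    if not mapping:
        return text
    keys = sorted(mapping, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(k) for k in keys))
    return pattern.sub(lambda m: mapping[m.group(0)], text)


print(normalize("넘 좋아"))  # 너무 좋아
```

The normalized text would then go to the morpheme analyzer (for example, KoNLPy's `Okt().morphs`), which is omitted here because it requires KoNLPy and a Java runtime.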

Next, sentence embeddings were learned using KoBERT, a Korean natural language processing model and one of many models derived from the pretrained embedding model BERT. The input sentence is tokenized with BertTokenizer, and each token is converted into an integer index in the model's vocabulary (‘input ids’). The pretrained KoBERT was trained on a corpus of 5 M sentences (54 M words) and has a vocabulary of 8002 tokens. It accepts up to 512 input tokens, and its output vector size is 768 [20].
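What converting tokens into ‘input ids’ involves for a BERT-style encoder can be sketched without any library: add the [CLS] and [SEP] special tokens, look each token up in the vocabulary, truncate to the 512-token limit, pad, and build an attention mask. The toy vocabulary and its ids are hypothetical; the real KoBERT vocabulary has 8002 entries with its own special-token ids, and subword segmentation is done by the model's tokenizer.

```python
# Toy vocabulary with hypothetical ids; a real KoBERT vocabulary has 8002 entries.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "오늘": 4, "기분": 5, "좋아": 6}
MAX_LEN = 512  # maximum number of input tokens accepted by the encoder


def encode(tokens, vocab=VOCAB, max_len=MAX_LEN):
    """Map subword tokens to input ids with [CLS]/[SEP], truncation, and padding."""
    # Reserve two positions for the special tokens before truncating.
    body = tokens[: max_len - 2]
    pieces = ["[CLS]", *body, "[SEP]"]
    # Tokens missing from the vocabulary fall back to [UNK].
    input_ids = [vocab.get(t, vocab["[UNK]"]) for t in pieces]
    # 1 marks real tokens, 0 marks padding that attention should ignore.
    attention_mask = [1] * len(input_ids)
    pad = max_len - len(input_ids)
    input_ids += [vocab["[PAD]"]] * pad
    attention_mask += [0] * pad
    return input_ids, attention_mask


ids, mask = encode(["오늘", "기분", "좋아", "짱"], max_len=8)
print(ids)   # [2, 4, 5, 6, 1, 3, 0, 0]
print(mask)  # [1, 1, 1, 1, 1, 1, 0, 0]
```

Unlike a bag-of-words count vector, the ids keep token order, which the encoder needs to produce contextual embeddings.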

For multi-emotion recognition, the vectors learned through sentence embedding and the emotion classes are used to train a deep learning model. The model is designed to mitigate the vanishing gradient problem. The input data are the collected sentences, with a maximum of 113 words per sentence. The sentence embeddings form a three-dimensional input (number of sentences, word length, embedding vector) with 512 dimensions. An attention layer is placed on the output of the LSTM so that predictions remain possible even for long sentences, with 128 units. In the output layer, to predict multiple emotions, the number of outputs of the Dense layer is set to the number of emotions, and softmax is used as the activation function [21]. Adam was used as the optimizer and categorical_crossentropy as the loss. Training was set to 30 epochs, but overfitting occurred beyond 20 epochs; to reduce training time, the EarlyStopping and ModelCheckpoint callbacks were used and the best model was saved. The multi-emotion recognition result reveals the various emotions intrinsic in a sentence.
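The attention step on top of the LSTM can be illustrated with a dependency-free sketch: each LSTM hidden state is scored against a context vector, the scores are normalized with softmax, and the weighted sum becomes the sentence representation fed to the Dense/softmax output layer. Dot-product scoring, the context vector, and all numbers are assumptions; the paper does not specify its attention formulation, and in practice these would be trainable layers in a deep learning framework.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, context):
    """Dot-product attention over LSTM outputs.

    hidden_states: one vector per time step (e.g. 128-dim LSTM outputs).
    context: a query vector of the same size (learned in a real model).
    Returns (pooled vector, attention weights).
    """
    scores = [sum(h_i * c_i for h_i, c_i in zip(h, context)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return pooled, weights


def dense_softmax(x, weight_rows, biases):
    """Output layer: one weight row per emotion class, followed by softmax."""
    logits = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight_rows, biases)]
    return softmax(logits)


pooled, weights = attention_pool([[2.0, 0.0], [0.0, 0.0]], [1.0, 0.0])
probs = dense_softmax(pooled, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

Because the output layer uses softmax, the emotion scores sum to 1, so the multi-emotion result reads as a distribution over emotions rather than as independent per-class probabilities.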

3.4. Intrinsic Emotion Recognition in Dialogues

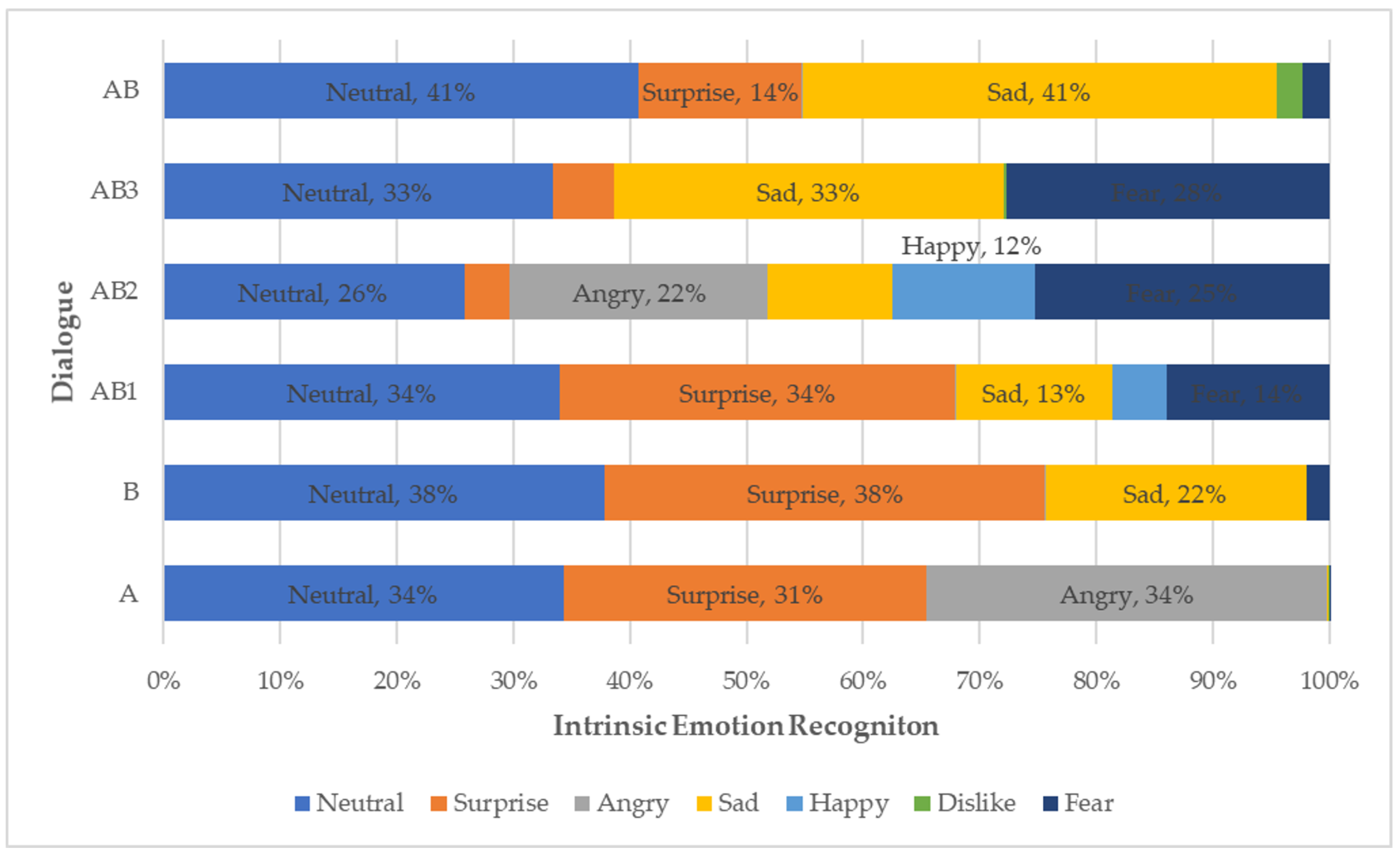

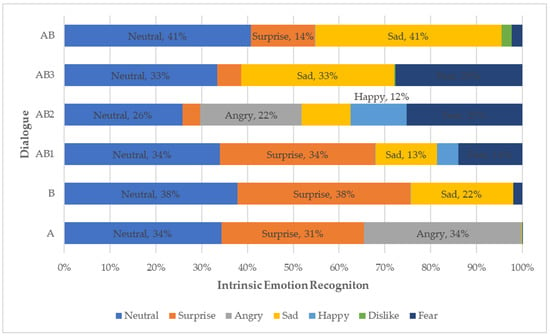

In this section, multi-emotion sentences are predicted by applying IER to the three types of dialogue classified above, SL, 1-turn DL, and multi-turn DL [22]. Table 2 shows, for each sentence, the emotion labeled in the data set and the IER result, and Table 3 shows the results of applying IER to dialogues. Figure 2 shows the ratio of emotions in each dialogue as a result of IER. Given a data set labeled with a single emotion per sentence, IER can recognize multiple emotions, and given dialogues classified into the three types, it can recognize multi-emotion sentences, as shown in Table 3. Figure 2 shows how much of each emotion is intrinsic in the dialogue.
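One plausible way to obtain a per-dialogue emotion ratio like the one shown in Figure 2 is to sum the IER score of each emotion over the units of a dialogue and divide by the overall total. The aggregation rule and the function name are assumptions for illustration; the paper does not state how the ratio was computed.

```python
EMOTIONS = ["fear", "surprise", "angry", "sad", "neutral", "happy", "disgust"]


def emotion_ratio(ier_results, classes=EMOTIONS):
    """Share of each emotion across a list of IER score vectors (one per unit)."""
    # Sum each emotion's score over all units of the dialogue.
    totals = [sum(scores[k] for scores in ier_results) for k in range(len(classes))]
    grand_total = sum(totals)
    if grand_total == 0:
        return {c: 0.0 for c in classes}
    return {c: t / grand_total for c, t in zip(classes, totals)}


ratio = emotion_ratio([
    [0, 0.5, 0, 0, 0.5, 0, 0],  # e.g. IER result of one unit (toy values)
    [0, 0.5, 0, 0, 0, 0.5, 0],  # e.g. IER result of another unit (toy values)
])
print(ratio["surprise"])  # 0.5
```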

Table 2.

Sentence emotion and IER result.

Table 3.

IER results in dialogue.

Figure 2.

IER results in dialogue emotion ratio.

3.5. Analysis of Emotional Association in Dialogues

In this section, we describe the correlation analysis of EAD between the IER results of sentences and of dialogue sentences, and the resulting correlation coefficients. Correlation measures how closely two variables are linearly related, here the IER results of a sentence and of a dialogue, and correlation analysis examines how closely they vary together. Pearson's correlation coefficient was used for the analysis. The coefficient ranges from -1 to 1; an absolute value between 0 and 0.4 indicates a weak correlation, a value between 0.4 and 0.7 is usually considered a correlation, and a value between 0.7 and 1.0 is considered a strong correlation [23]. In this paper, the IER correlation coefficient between a sentence and a dialogue is defined as the Emotional Association (EA). Table 4 ranks the sentence and dialogue pairs with a strong EA of 0.7 or higher. The EA of [b1, B] is the highest, indicating that the first sentence of speaker B has the greatest influence on speaker B's emotions. Next, the high EA of [a1, AB1] indicates that speaker A's emotions strongly affect the first 1-turn DL. These results show that, in a dialogue, a speaker's previous emotions affect the next utterances and emotions are exchanged between speakers.
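The EA computation can be sketched as Pearson's r between two IER score vectors, together with the strength bands quoted in this section and a ranking of pairs whose EA is 0.7 or higher, as in Table 4. Treating each IER result as a vector over the emotion classes, and the toy scores below, are assumptions; the paper does not state over which observations the correlation was computed.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length score vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two vectors of the same length (>= 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant vector")
    return cov / (sx * sy)


def strength(r):
    """Bands from the text on |r|: < 0.4 weak, 0.4 to 0.7 correlated, >= 0.7 strong."""
    a = abs(r)
    if a >= 0.7:
        return "strong"
    if a >= 0.4:
        return "correlated"
    return "weak"


def rank_strong_pairs(ier, pairs, threshold=0.7):
    """Compute EA for (sentence, dialogue) pairs and rank those with EA >= threshold."""
    scored = [((s, d), pearson(ier[s], ier[d])) for s, d in pairs]
    strong = [item for item in scored if item[1] >= threshold]
    return sorted(strong, key=lambda item: item[1], reverse=True)


# Toy IER score vectors (hypothetical) keyed with the table notation.
ier = {"b1": [0.1, 0.7, 0.2], "B": [0.2, 0.6, 0.2], "a1": [0.6, 0.1, 0.3]}
ranking = rank_strong_pairs(ier, [("b1", "B"), ("a1", "B")])
```

Here only ("b1", "B") survives the 0.7 threshold; the ("a1", "B") pair correlates negatively.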

Table 4.

Ranking of sentence and dialogue with an EA of 0.7 or higher.

3.6. Application of Emotional Association in Dialogues

In this section, the EA analysis method is applied to the entire dialogue, the EA formula is derived through correlation, and the process of applying it is described. The entire dialogue data set is divided into sentences and dialogues, and the EA analysis method is applied. The Emotional Association in Dialogues (EAD) is determined by extracting only the strong correlations of 0.7 or higher among the EA of sentences and dialogues and, following the order of sentences, summing only the correlation coefficients whose Emotion Weights (EW) are 0.4 or higher. Table 5 shows the seven highest EA values in the entire dialogue data set. Because [b2, AB2] has the highest EA, speaker B's utterance has the greatest effect on emotion in the second 1-turn DL. Next, the high EA of [a1, AB] means that the first utterance of speaker A strongly influences the emotion of the entire dialogue. EW is derived for each sentence position through EA analysis of the entire dialogue.

Table 5.

EA ranked 1st to 7th in the data set.

Table 6 shows the EW for each sentence position, derived as the median EA of that position over the entire dialogue data set. The median is used because it is the middle value of the data and is more robust than the mean when extreme values are present. The EW analysis shows that the first sentence of a dialogue has the greatest correlation with the emotion of the entire dialogue. It also shows that a speaker's emotion affects the next utterance and that emotions change in response to the other speaker's emotions. Sentences ‘a1’ and ‘b2’ have significant EA with the entire dialogue: the EA of ‘a1’ is the largest, followed by that of ‘b2’. Sentences ‘a2’, ‘a3’, and ‘b3’ have very weak EA with the entire dialogue, and sentence ‘b1’ has a negative EA. Therefore, EW is applied to the entire dialogue and to sentences ‘a1’ and ‘b2’, which have significant EA.
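Deriving EW as the median EA per sentence position can be sketched with the standard library. The input layout (a dict from position label to the EA values of that position across dialogues) and the toy numbers are assumptions and do not reproduce Table 6; the 0.4 cut-off is the EW threshold stated in the text.

```python
from statistics import median


def emotion_weights(ea_by_position, min_weight=0.4):
    """Derive EW per sentence position as the median EA over all dialogues.

    ea_by_position: dict mapping a position label (e.g. "a1", "b2") to the
    list of EA values between that sentence and its whole dialogue.
    Returns (all medians, positions whose median reaches min_weight).
    """
    weights = {pos: median(values) for pos, values in ea_by_position.items() if values}
    applied = {pos: w for pos, w in weights.items() if w >= min_weight}
    return weights, applied


weights, applied = emotion_weights({
    "a1": [0.9, 0.8, 0.2],    # one outlying 0.2 barely moves the median
    "b1": [-0.3, -0.1, 0.0],  # negative association, never applied
    "b2": [0.5, 0.6, 0.4],
})
print(applied)  # {'a1': 0.8, 'b2': 0.5}
```

For "a1" the mean would be about 0.63 while the median stays at 0.8, which illustrates why the median is preferred when extreme values are present.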

Table 6.

EW of dialogue in sentence order.

Emotional Association in Dialogues (EAD) is derived by combining EA and EW for each sentence-dialogue pair, as follows. If the EA between a sentence and a dialogue is 0.7 or higher, the multiple emotion scores of the sentence are multiplied by EA; if EW is 0.4 or higher, EW is added, and the result is stored as EAD. To allow the IER result of a sentence to be compared with the result of applying EAD, the original IER value is kept unchanged and the EAD is added to it. If EA is below 0.7 or EW is below 0.4, EAD is not applied and is set to 0. The following expression is used to derive EAD. The derived EAD is applied to all sentences and dialogues: after the correlation between each sentence and dialogue is analyzed, if EA is 0.7 or higher and EW is 0.4 or higher, the IER result is multiplied by EA, EW is added, and the value is added to the IER of the sentence and stored. Therefore, applying EAD, the combination of EA and EW, to emotion recognition in dialogues makes it possible to recognize dialogue emotions more accurately and thereby improves emotion recognition performance.
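The EAD rules described in this section can be written as a short sketch. It follows one reading of the prose, in which both thresholds must be met and EW is added to every emotion score of the EA-scaled IER result; the authors' exact expression may combine the terms differently, and the function names are hypothetical.

```python
def ead_term(ier, ea, ew, ea_min=0.7, ew_min=0.4):
    """EAD contribution of one sentence-dialogue pair.

    If EA >= ea_min and EW >= ew_min, each emotion score is multiplied by EA
    and EW is added; otherwise the contribution is 0 for every emotion.
    """
    if ea < ea_min or ew < ew_min:
        return [0.0] * len(ier)
    return [p * ea + ew for p in ier]


def apply_ead(ier, pairs):
    """Add the EAD terms of all (EA, EW) pairs to the unchanged IER scores."""
    result = list(ier)  # keep the original IER values for comparison
    for ea, ew in pairs:
        result = [r + t for r, t in zip(result, ead_term(ier, ea, ew))]
    return result


# First pair qualifies (EA 0.9, EW 0.5); second is skipped (EA 0.6 < 0.7).
scores = apply_ead([0.2, 0.8], [(0.9, 0.5), (0.6, 0.5)])
```

With these toy values the first pair adds roughly [0.68, 1.22], so the adjusted scores are about [0.88, 2.02]; comparing them with the unchanged IER values is what lets IER and IER+EAD be evaluated side by side.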

4. Experiment and Evaluation

In this chapter, IER and IER with EAD are tested and evaluated to demonstrate the effectiveness of the proposed method. The data set used in the experiment is described, and the performance of IER is evaluated by measuring Macro-F1 and Micro-F1, which are multi-class performance evaluation measures. Finally, the performance of IER with EAD is evaluated using emotional similarity.

4.1. Experiment Data

In this section, the data set used to test the proposed method is described. To recognize the emotions of dialogue text, we used open-source data sets provided by AI-HUB [24]: the ‘single dialogue data set (DS-A) containing Korean emotion information’ and the ‘continuous dialogue data set (DS-B) containing Korean emotion information’. Both data sets were constructed by web crawling SNS posts, comments, and dialogues and labeling each selected sentence with a single emotion class. DS-A has the fields ‘Sentence’ and ‘Emotion’ and consists of 38,594 sentences, each labeled with one of seven emotion classes: fear, surprise, angry, sad, neutral, happy, and disgust. DS-B has the fields ‘Dialog’, ‘Utterance’, and ‘Emotion’ and consists of 55,600 sentences in 10,000 dialogues, each labeled with one of the same seven emotion classes.

Table 7 shows the number of data for each emotion class. The class imbalance of DS-B is more severe than that of DS-A. In this paper, the two data sets are merged to obtain enough training data for emotion recognition. The merged total is also imbalanced across emotion classes. The most frequent class is ‘Neutral’, with 48,616 samples (51.6%), and the least frequent is ‘Fear’, with 5566 samples (5.9%). With imbalanced data, precision, recall, and F1-score may be low even when accuracy is high. To address this, we balanced every emotion class to 5500 samples, based on ‘Fear’, the smallest class: 5500 samples were randomly extracted for each of the seven emotions, for a total of 38,500 samples.
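The balancing step is random undersampling: group the samples by emotion and randomly keep the same number from each class. A minimal sketch follows; the function name and the fixed seed are assumptions, since the paper does not report them.

```python
import random
from collections import defaultdict


def undersample(samples, per_class=5500, seed=42):
    """Randomly keep `per_class` samples of every emotion class.

    samples: list of (sentence, emotion) pairs.
    Raises ValueError if a class has fewer than `per_class` samples.
    """
    by_class = defaultdict(list)
    for sentence, emotion in samples:
        by_class[emotion].append((sentence, emotion))
    rng = random.Random(seed)  # fixed seed for a reproducible subset
    balanced = []
    for emotion, items in sorted(by_class.items()):
        if len(items) < per_class:
            raise ValueError(f"class {emotion!r} has only {len(items)} samples")
        balanced.extend(rng.sample(items, per_class))
    rng.shuffle(balanced)  # avoid class-ordered batches during training
    return balanced
```

With seven classes of 5500 samples each, this yields 38,500 samples, matching the total reported above.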

Table 7.

Number of data by emotion class.

4.2. Experiment Evaluation Method

This section describes the experimental evaluation method used to assess the accuracy of the proposed IER. Performance is compared using Macro-F1 and Micro-F1, multi-class evaluation measures that average F1-scores in different ways. A multi-class evaluation expresses, for each class, whether a sample is predicted to belong to it (or the probability, between 0 and 1, that it does), and thus repeats binary classification once per class. Mean-F1 averages the F1-scores computed for each sample (row). Macro-F1 computes an F1-score for each class and averages them with equal weight, regardless of class frequency. Micro-F1 counts, for every (sample, class) pair, whether it is a TP, TN, FP, or FN and computes a single F1-score from the pooled counts, so each class is weighted according to its frequency. In this paper, Macro-F1 and Micro-F1 are used as multi-class evaluation methods for multi-label emotions. With K emotion classes and TP_k, FP_k, and FN_k the counts for class k, they are calculated as follows:

$$P_k = \frac{TP_k}{TP_k + FP_k}, \qquad R_k = \frac{TP_k}{TP_k + FN_k}, \qquad \text{Macro-F1} = \frac{1}{K}\sum_{k=1}^{K} \frac{2 P_k R_k}{P_k + R_k}$$

$$\text{Micro-F1} = \frac{2 \sum_{k} TP_k}{2 \sum_{k} TP_k + \sum_{k} FP_k + \sum_{k} FN_k}$$
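The two averages can be computed from scratch as follows, using the standard definitions of Macro-F1 and Micro-F1 for single-label multi-class predictions. The function name and the example labels are illustrative; a library implementation (for example scikit-learn's `f1_score` with `average="macro"` or `average="micro"`) would normally be used instead.

```python
from collections import Counter


def f1_scores(y_true, y_pred, classes):
    """Return (macro_f1, micro_f1) for single-label multi-class predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but the prediction was wrong
            fn[t] += 1  # true class t was missed

    def f1(tp_, fp_, fn_):
        # Equivalent to 2PR / (P + R); defined as 0 when there is nothing to score.
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Macro-F1: unweighted mean of the per-class F1-scores.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in classes) / len(classes)
    # Micro-F1: pool TP, FP, and FN over all classes, then compute one F1-score.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro


macro, micro = f1_scores(
    ["happy", "happy", "sad", "fear"],
    ["happy", "sad", "sad", "sad"],
    ["happy", "sad", "fear"],
)
```

Here Macro-F1 is 7/18 (about 0.389) because the missed ‘fear’ class counts fully, while Micro-F1 is 0.5. In the single-label case every error is counted once as an FP and once as an FN, so Micro-F1 equals accuracy.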

4.3. Analysis of Experiment Results

In this section, we analyze the experimental results of the proposed method through experiments and performance evaluations of IER and of IER with EAD. We evaluate the performance of the model used and compare it with other models. For the analysis, IER and IER with EAD are compared: EAD was derived by applying IER to the dialogues, and the emotions of the dialogues were then recognized with IER.

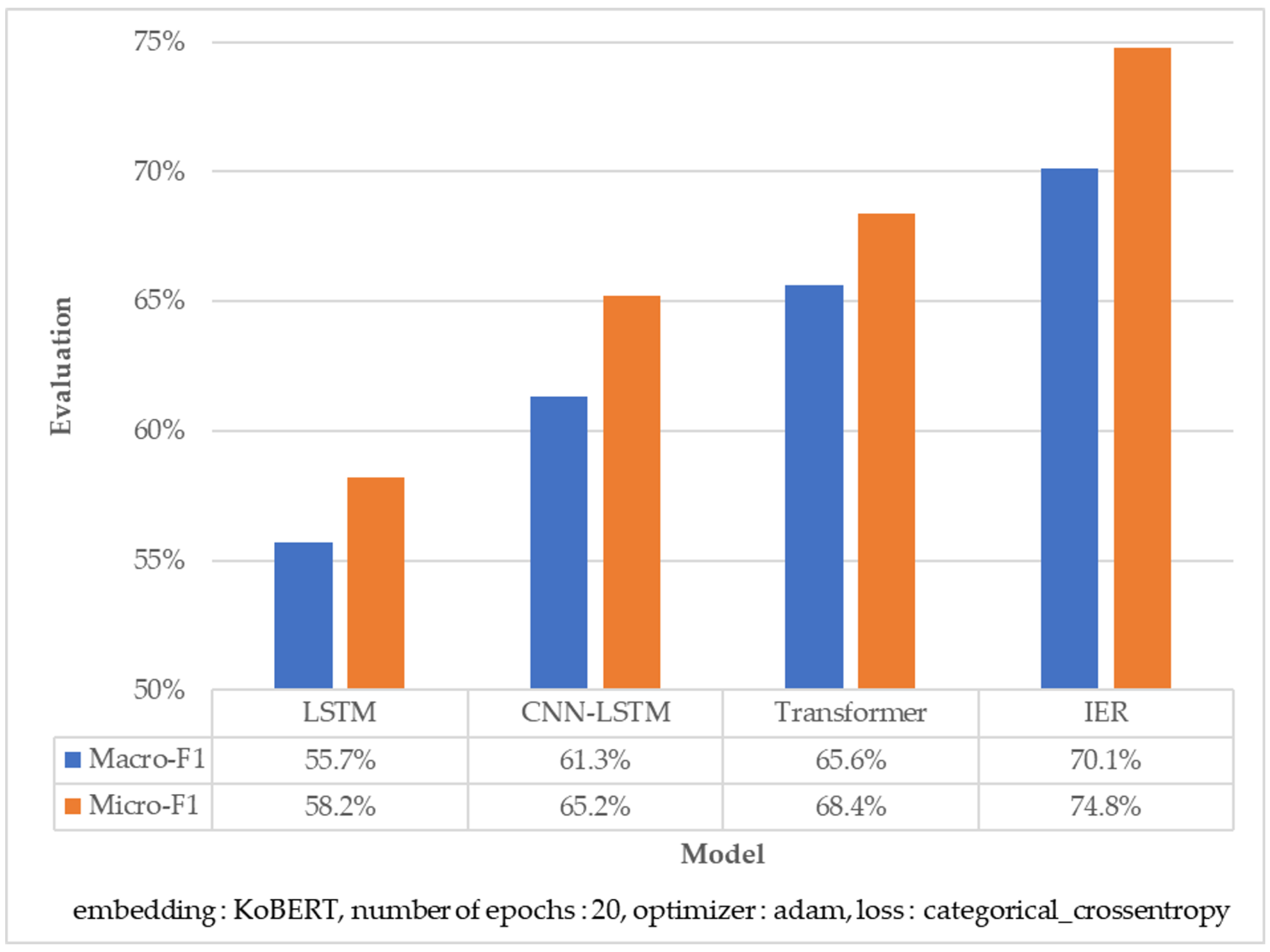

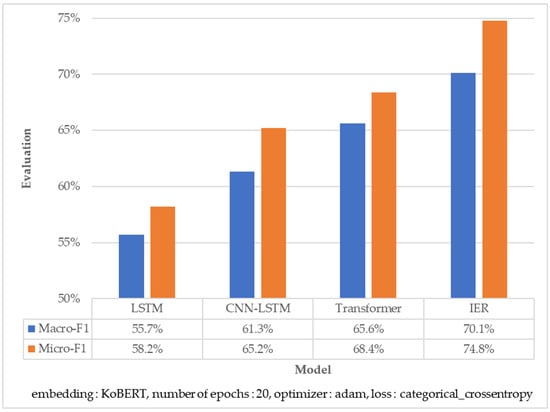

To verify the proposed IER, we compare models using the multi-class evaluation measures Macro-F1 and Micro-F1. Figure 3 compares the Macro-F1 and Micro-F1 of the LSTM, CNN-LSTM, and Transformer models with those of the proposed IER. The proposed IER achieves the best Macro-F1 and Micro-F1: its performance is 16.6% and 9.6% higher than that of the LSTM and CNN-LSTM models, respectively, and 6.4% higher than that of the Transformer model. We attribute the best performance of the proposed method to the attention model, which captures the dependencies between words better and is therefore effective in analyzing correlations in dialogue. Collecting more training data and improving the model could further improve performance.

Figure 3.

Macro-F1 and Micro-F1 comparison by model.

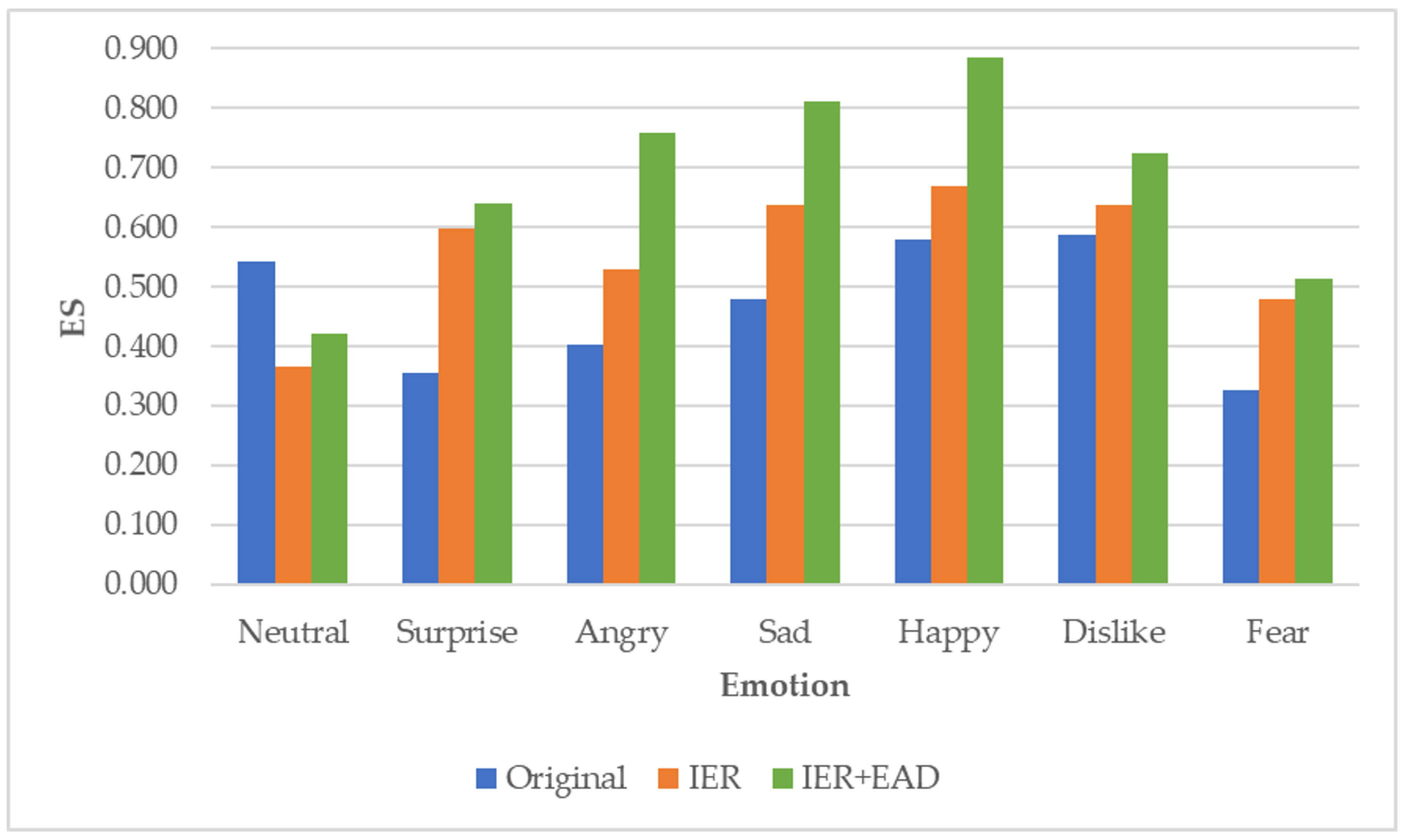

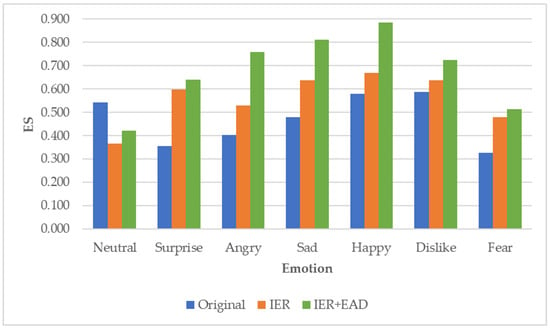

Next, to verify IER with EAD, we compare the similarity between emotions and sentences for the original data labels (Original), IER, and IER+EAD, in which EAD is applied to IER. The similarity of a sentence is the sum of the similarities between an emotion and the words in the sentence, measured with cosine similarity, which is often used to compare emotions. Cosine similarity is the cosine of the angle between two vectors. Figure 4 compares the emotional similarity (ES) of sentences. Comparing Original, IER, and IER+EAD, the proposed IER+EAD has the highest similarity, and IER has a higher similarity than Original. This means that IER recognizes more diverse and detailed emotions than Original, and that IER with the proposed EAD recognizes the emotions of dialogue most accurately.
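The emotional similarity measure described here can be sketched as the sum of cosine similarities between an emotion vector and the vector of each word in a sentence. The paper does not say which embeddings represent emotions and words, so the two-dimensional toy vectors and the function names below are assumptions.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def emotional_similarity(emotion_vec, word_vecs):
    """ES of a sentence: sum of cosine similarities between an emotion and each word."""
    return sum(cosine(emotion_vec, w) for w in word_vecs)


# Toy vectors: one aligned word, one orthogonal word, one word at 45 degrees.
es = emotional_similarity([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

The result is 1 + 0 + 1/sqrt(2), about 1.707. Because ES is a sum rather than a mean, longer sentences tend to score higher, so comparisons are most meaningful over the same sentences, as in the Original, IER, and IER+EAD comparison.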

Figure 4.

Comparison of ES of sentences.

Finally, we compare the emotion recognition results of the data set labels (Emotion), IER, and IER+EAD using dialogue examples. Table 8 shows example dialogue sentences, and Table 9 compares the emotion class labeled in the data set with the results of IER and the proposed IER+EAD. In the data set, each sentence is labeled with a single emotion and most sentences are labeled ‘Neutral’, whereas IER recognizes multi-emotion sentences. Applying EAD shows that the emotion of the first sentence had the greatest influence on the emotion of the entire dialogue, and the emotions felt most strongly across the dialogue, ‘Surprise’ and ‘Happy’, were recognized most often. Therefore, recognizing multiple emotions in each sentence through IER and then applying EAD, which reflects the flow of emotions throughout the dialogue, recognizes emotions more accurately than assigning a single emotion to each sentence and thus improves emotion recognition performance.

Table 8.

Examples of dialogue sentences.

Table 9.

Comparison of emotion recognition results.

5. Conclusions and Suggestions

In this paper, we proposed Intrinsic Emotion Recognition (IER) considering Emotional Association in Dialogues (EAD) to overcome the limitations of single-emotion recognition in dialogues and recognize emotions more accurately. As data, we used the ‘single dialogue data set containing Korean emotion information’ and the ‘continuous dialogue data set containing Korean emotion information’ provided by AI-HUB. Because the data were imbalanced across emotion classes, each class was balanced to 5500 samples. As preprocessing, non-standard language was converted into standard language. After morpheme analysis with Okt, the sentences in the data set were vectorized as embeddings learned with KoBERT. The learned vectors and emotion classes were then used to train IER with an attention model. Although the data set consists of sentences labeled with a single emotion, IER can recognize multiple emotions in a sentence. In addition, dialogue data were classified into SL, 1-turn DL, and multi-turn DL according to their characteristics, and IER was applied to recognize the multiple emotions present in the dialogues. EAD was determined through emotion analysis between sentences and dialogues, and applying it was shown to improve emotion recognition performance.

Comparing the performance of the proposed method using Macro-F1 and Micro-F1, which are multi-class evaluation metrics, showed that the proposed IER performed best, with a Micro-F1 of 74.8%. The IER+EAD method was found to be the best for recognizing the emotions of dialogues. With the proposed method, data imbalance was addressed, the type of dialogue text was identified, and an appropriate processing method was applied to each type. The IER results revealed various emotional changes within sentences, indicating that more accurate emotion recognition is possible. In addition, IER+EAD makes it possible to understand the meaning of a dialogue and to recognize multiple emotions in a sentence beyond its representative emotion, enabling more accurate emotion recognition. These fine-grained changes in emotion make it possible to identify the relationships between emotions in a dialogue and to improve the performance of emotion recognition.
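Because Macro-F1 and Micro-F1 can diverge substantially on imbalanced multi-label data, a small sketch of both may help when interpreting the reported scores. Micro-F1 pools true positives, false positives, and false negatives over all emotion classes before computing F1, so frequent classes dominate; Macro-F1 computes F1 per class and then averages, so every class counts equally. The function names `f1_from_counts` and `micro_macro_f1` and the toy labels below are hypothetical; scikit-learn's `f1_score` with `average="micro"` or `average="macro"` on a binary indicator matrix should compute the same quantities.

```python
from typing import List, Sequence, Set, Tuple


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); defined as 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(
    y_true: Sequence[Set[str]],
    y_pred: Sequence[Set[str]],
    labels: List[str],
) -> Tuple[float, float]:
    """Return (micro_f1, macro_f1) for multi-label predictions given as label sets."""
    pairs = list(zip(y_true, y_pred))
    per_label_f1 = []
    total_tp = total_fp = total_fn = 0
    for label in labels:
        tp = sum(1 for t, p in pairs if label in t and label in p)
        fp = sum(1 for t, p in pairs if label not in t and label in p)
        fn = sum(1 for t, p in pairs if label in t and label not in p)
        per_label_f1.append(f1_from_counts(tp, fp, fn))
        total_tp += tp
        total_fp += fp
        total_fn += fn
    micro = f1_from_counts(total_tp, total_fp, total_fn)  # pool counts, then F1
    macro = sum(per_label_f1) / len(per_label_f1)         # F1 per class, then average
    return micro, macro


if __name__ == "__main__":
    labels = ["Happy", "Surprise", "Sad"]
    y_true = [{"Happy"}, {"Happy", "Surprise"}, {"Sad"}, {"Happy"}]
    y_pred = [{"Happy"}, {"Happy"}, {"Happy"}, {"Happy", "Surprise"}]
    micro, macro = micro_macro_f1(y_true, y_pred, labels)
    print(f"Micro-F1 = {micro:.3f}, Macro-F1 = {macro:.3f}")  # Micro-F1 = 0.600, Macro-F1 = 0.286
```

On this toy data the classifier handles the frequent ‘Happy’ label well but misses ‘Surprise’ and ‘Sad’ entirely, so Micro-F1 (0.600) stays well above Macro-F1 (0.286).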

However, emotion analysis was limited because the daily dialogues consisted only of short texts. More accurate emotion recognition should be possible if more data are collected and the model is sufficiently trained on dialogue data from more diverse fields. Emotion-labeled daily dialogue datasets are required for training in various fields, and collecting additional data and rebuilding the model is expected to improve emotion recognition performance. This study can contribute to improving the performance of emotion recognition in dialogue. In addition, AI that accurately recognizes emotions can be applied to dialogue interfaces that interact directly with humans, with uses in fields such as counseling and therapy, emotional engineering, emotional marketing, and emotional education. In future research, we plan to develop a deep learning model that recognizes multiple emotions in continuous dialogue by explicitly reflecting intrinsic emotions, and to build a dialogue system that predicts continuous emotions.

Author Contributions

Conceptualization, methodology, M.-J.L., J.-H.S.; validation, formal analysis, M.-J.L., J.-H.S. and M.-H.Y.; investigation, resources, data curation, M.-J.L., M.-H.Y.; writing—original draft preparation, M.-J.L.; visualization, supervision, project administration, M.-J.L., J.-H.S. and M.-H.Y.; funding acquisition, J.-H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a research fund from Chosun University, 2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Available online: https://aihub.or.kr/keti_data_board/language_intelligence (accessed on 15 December 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yoon, G.-M. Performance Improvement of Movie Recommendation System Using Genetic Algorithm and Adjusting Artificial Neural Network Parameters. J. KINGComput. 2014, 10, 56–64.

- Seo, J.; Park, J. Data Filtering and Redistribution for Improving Performance of Collaborative Filtering. J. KINGComput. 2021, 17, 13–22.

- Son, G.; Lee, W.; Han, K.; Kyeong, S. The study of feature vector generation and emotion recognition using EEG signals. J. KINGComput. 2020, 16, 72–79.

- Lim, M.; Shin, J. Continuous Emotion Recognition Method applying Emotion Dimension. 2021 Spring Conf. KISM 2021, 10, 173–175.

- Shin, D.-W.; Lee, Y.-S.; Jang, J.-S.; Rim, H.-C. Using CNN-LSTM for Effective Application of Dialogue Context to Emotion Classification. In Proceedings of the Annual Conference on Human and Language Technology, Pusan, Republic of Korea, 7–8 October 2016; pp. 141–146.

- Firdaus, M.; Chauhan, H.; Ekbal, A.; Bhattacharyya, P. MEISD: A multimodal multi-label emotion, intensity and sentiment dialogue dataset for emotion recognition and sentiment analysis in dialogues. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 4441–4453.

- Kim, Y.; Lee, H.; Jung, K. AttnConvnet at SemEval-2018 task 1: Attention-based convolutional neural networks for multi-label emotion classification. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 141–145.

- Zeng, X.; Chen, Q.; Chen, S.; Zuo, J. Emotion label enhancement via emotion wheel and lexicon. Math. Probl. Eng. 2021.

- Lim, M. A Study on the Expressionless Emotion Analysis for Improvement of Face Expression Recognition. Master’s Thesis, Chosun University, Gwangju, Republic of Korea, 2017.

- Hasegawa, T.; Kaji, N.; Yoshinaga, N.; Toyoda, M. Predicting and eliciting addressee’s emotion in online dialogue. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; Volume 1, pp. 964–972.

- Kang, S.-W.; Park, H.-M.; Seo, J.-Y. Emotion classification of user’s utterance for a dialogue system. Korean J. Cogn. Sci. 2010, 21, 459–480.

- Shin, D.-W.; Lee, Y.-S.; Jang, J.-S.; Lim, H.-C. Emotion Classification in Dialogues Using Embedding Features. In Proceedings of the 27th Annual Conference on Human and Language Technology, Jeonju, Republic of Korea, 16–17 October 2015; pp. 109–114.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014; pp. 1746–1751.

- Plutchik, R. A general psychoevolutionary theory of emotion. In Emotion: Theory, Research, and Experience. Theor. Emot. 1980, 1, 3–33.

- Lee, J.; Dernoncourt, F. Sequential Short-Text Classification with Recurrent and Convolutional Neural Networks. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, San Diego, CA, USA, 12–17 June 2016; pp. 515–520.

- Won, J.; Lee, K. Multi-Label Classification Approach to Effective Aspect-Mining. Inf. Syst. Rev. 2020, 22, 81–97.

- Mohammed, J.; Moreno, A. A deep learning-based approach for multi-label emotion classification in tweets. Appl. Sci. 2019, 9, 1123.

- Lim, Y.; Kim, S.; Jang, J.; Shin, S.; Jung, M. KE-T5-Based Text Emotion Classification in Korean Dialogues. In Proceedings of the 33rd Annual Conference on Human & Cognitive Language Technology, Online, 14–15 October 2021; pp. 496–497.

- Ameer, I.; Ashraf, N.; Sidorov, G.; Adorno, H.G. Multi-label emotion classification using content-based features in Twitter. Comput. Sist. 2020, 24, 1159–1164.

- Hong, T. A Method of Video Contents Similarity Measurement Based on Text-Image Embedding. Doctoral Dissertation, Chosun University, Gwangju, Republic of Korea, 2022.

- Lim, M.; Yi, M.; Kim, P.; Shin, J. Multi-label Emotion Recognition Technique considering the Characteristics of Unstructured Dialogue Data. Mob. Inf. Syst. 2022.

- Lim, M. Multi-Label Emotion Recognition Model Applying Correlation of Conversation Context. Doctoral Dissertation, Chosun University, Gwangju, Republic of Korea, 2022.

- Lim, M.; Kim, S.; Shin, J. Association Prediction Method Using Correlation Analysis between Fine Dust and Medical Subjects. Smart Media J. 2018, 7, 22–28.

- Cognitive Technology-Language Intelligence. Available online: https://aihub.or.kr/keti_data_board/language_intelligence (accessed on 2 June 2021).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).