Abstract

The continuously evolving world of cloud computing presents new challenges in resource allocation as distributed systems struggle with overloaded conditions. In this regard, we introduce OptiDJS+, an enhanced dynamic Johnson sequencing algorithm designed to handle resource scheduling challenges in cloud computing settings. OptiDJS+ builds on the dynamic Johnson sequencing algorithm and adapts it to the demands of modern cloud infrastructures. It uses optimization algorithms, heuristic approaches, and adaptive mechanisms to improve resource allocation, workload distribution, and task scheduling. To obtain the best performance, the strategy relies on historical data, dynamic resource reconfiguration, and adaptation to changing workloads, which it achieves through real-time monitoring and machine learning. It takes factors such as load balance and makespan into account. In this work, we outline the design philosophy, implementation specifics, and empirical assessment of OptiDJS+. Through rigorous testing and benchmarking against state-of-the-art scheduling algorithms, we show the better performance and resilience of OptiDJS+ in terms of response times, resource utilization, and scalability. The outcomes underline its success in reducing resource contention and raising overall service quality in cloud computing environments. In contexts where there is distributed overloading, OptiDJS+ offers a significant advancement in the search for effective resource scheduling solutions. Its versatility, optimization capabilities, and improved decision-making procedures make it a viable tool for tackling the resource allocation issues that cloud service providers and consumers encounter daily. We believe that OptiDJS+ opens the way for more dependable and effective cloud computing ecosystems, assisting in the full realization of cloud technologies’ promises across a range of application areas.
To apply the OptiDJS+ Johnson sequencing algorithm to cloud computing task scheduling, we provide a two-step procedure. After examining the relationships between the jobs, we generate a Gantt chart. The Gantt chart is then transformed into a two-machine OptiDJS+ Johnson sequencing problem by assigning tasks to servers. The OptiDJS+ dynamic Johnson sequencing approach is then used to minimize the makespan and find the best sequence of operations on each server. Through extensive simulations and testing, we compare the performance of our proposed OptiDJS+ dynamic Johnson sequencing approach with two servers against that of current scheduling techniques. The results demonstrate that our technique greatly improves performance in terms of makespan reduction and resource utilization. The proposed approach also scales well and is effective at resolving challenging task-scheduling problems in cloud computing environments.

1. Introduction

Cloud computing has demonstrated its significance in the development of science and technology in the modern world. With cloud technology, customers access resources on a “pay per use” basis, after which they can examine the resources to which they have been granted access. Different cloud models use different scheduling methods and virtual machine (VM) allocation processes. It can be challenging for service providers to adjust their resource supply to match demand. Consequently, resource management is crucial for cloud service providers. To increase system efficiency as the workload grows, better resource management is needed. The capacity workflow is completed through the coordination of resource allocation, dynamic resource allocation, and strategic planning, depending on the terms of the service level agreement with the Internet service provider (ISP). Cloud computing, with its unmatched scalability, flexibility, and cost-effectiveness for both businesses and individuals, has emerged as a paradigm shift in the field of information technology. In this era of pervasive digitalization, cloud computing services have become crucial to a broad range of applications, from data processing and storage to machine learning, Internet of Things deployments, and more. While the dynamic and elastic nature of cloud systems has many benefits, it also presents substantial scheduling and resource management issues. In particular, the widespread saturation of cloud resources is a critical issue that calls for creative solutions [1].

Resource scheduling in the context of cloud computing is the problem of allocating computing resources, such as CPU, memory, and storage, to a variety of tasks or workloads. These tasks may originate from various users or programs, each with its own priorities and requirements. In conventional static systems, resource allocation can be controlled by static configurations and well-defined policies. The cloud environment, however, is anything but constant. Variable workloads, differing user needs, and erratic resource contention are its defining characteristics. These elements combine to make optimal resource scheduling a challenging and ever-changing task. Minimizing the makespan, which is the total amount of time needed to complete a collection of tasks, is one of the crucial elements of resource scheduling. In cloud computing, reducing the makespan is essential for achieving high resource utilization, meeting service level agreements (SLAs), and ensuring user satisfaction. Dynamic Johnson sequencing (DJS) has long been acknowledged as a successful solution to the makespan reduction challenge. DJS, which was first created for parallel machines in manufacturing, has been adapted for cloud resource scheduling. It is well known for being easy to use and for producing results that are close to optimal in terms of time.

Even though DJS is well known and efficient, the constantly changing world of cloud computing calls for novel methods of resource scheduling. Heterogeneous resources, virtualization techniques, and the need for dynamic responses to shifting workloads all characterize contemporary cloud settings. Addressing them requires new scheduling algorithms that include real-time monitoring, heuristics, and optimization approaches. To this end, we present OptiDJS+, a new enhanced dynamic Johnson sequencing algorithm designed for effective resource scheduling in distributed overloading scenarios in cloud computing settings. To satisfy the requirements of modern cloud infrastructures, OptiDJS+ expands on the foundation and functionality of the well-established DJS algorithm [2].

The key features of OptiDJS+ are as follows:

- Advanced optimization techniques: OptiDJS+ incorporates state-of-the-art optimization techniques to intelligently allocate resources, ensuring an optimal makespan and improved resource utilization.

- Heuristic methods: to adapt to dynamic workloads, OptiDJS+ leverages heuristic methods for on-the-fly decision-making, optimizing resource allocation based on real-time demand.

- Adaptive scheduling: in the face of varying workloads, OptiDJS+ dynamically reconfigures resource allocation strategies, maintaining high efficiency and adaptability.

- Real-time monitoring: OptiDJS+ continuously monitors resource usage and workload patterns, enabling timely adjustments to resource allocation and task sequencing.

- Makespan minimization: a primary objective of OptiDJS+ is the minimization of the makespan, thereby optimizing resource usage and ensuring timely task completion.

- Load balancing: OptiDJS+ prioritizes load balancing to distribute tasks evenly across available resources, preventing resource contention and improving system stability.

- Fault tolerance: in the event of resource failures or disruptions, OptiDJS+ employs fault-tolerant strategies to ensure uninterrupted task execution and maintain service reliability.

We present a thorough examination of OptiDJS+ in this work, describing its design ideas, implementation details, and empirical assessments. We demonstrate the higher performance and robustness of OptiDJS+ through in-depth testing against modern scheduling techniques [3]. Our findings demonstrate its effectiveness in reducing resource contention, cutting down on the makespan, and boosting overall service quality in cloud computing environments. The development of OptiDJS+ marks a significant advancement in the search for effective resource scheduling solutions in distributed, overloaded scenarios. Its versatility, optimization capabilities, and improved decision-making procedures make it an effective tool for tackling the resource allocation issues that cloud service providers and consumers confront. We believe that OptiDJS+ will aid in the creation of more robust, effective, and user-centric cloud ecosystems across a variety of application domains as cloud technologies continue to advance and define the future of computing.

Scheduling issues in cloud computing provide a variety of difficulties. First of all, efficient scheduling is challenging due to the dynamic nature of cloud resources. Meeting service-level agreements (SLAs), optimizing resource use, and balancing task distribution are ongoing issues. Another level of complexity is added by the need to ensure equity between users and apps while taking into account various resource requirements. Data scheduling presents continual issues due to security and privacy considerations as well as the necessity for real-time adaptation to handle shifting workloads. Automation, new scheduling methods, and machine learning strategies are all being investigated more and more as solutions to these problems. To optimize cloud resource allocation and improve overall system efficiency, a thorough grasp of these difficulties is necessary.

By addressing resource scheduling issues in the cloud computing environment, “OptiDJS+: A Next-Generation Enhanced Dynamic Johnson Sequencing Algorithm for Efficient Resource Scheduling in Distributed Overloading Within Cloud Computing Environment” displays its relevance. Utilizing the dynamic Johnson sequencing method, it maximizes resource allocation and increases efficiency—two factors that are crucial for handling overloaded conditions. This scalability guarantees that resources are deployed efficiently during periods of high demand, hence lowering operating costs and providing a stable level of performance. Users gain from a better resource allocation process, which leads to increased service quality and minimal disruptions, making OptiDJS+ a helpful tool for optimizing cloud-based services and applications within the constantly changing cloud computing ecosystem.

1.1. Objective of the Study

Previously, we discussed FCFS, max–min round-robin, Johnson sequencing, and OptiDJS+ Johnson sequencing on a multi-server computer. This study proposes a novel methodology that will speed up cloud task processing. Given a specified sample of tasks or jobs, the total execution time of each job may be calculated using a Gantt chart. The experimental analysis tables display each task’s overall execution time. With the help of the OptiDJS+ technique, the system’s total turnaround time (TAT), average waiting time (AWT), mean number of tasks in the queue, mean number of tasks in the system, average waiting time of tasks in the queue, and average waiting time of tasks in the system may all be decreased.

1.2. Problem Statement

Due to the dynamic and elastic nature of cloud infrastructures, resource scheduling in modern cloud computing systems is a major difficulty. Distributed resource overloading is a serious problem since it results in inefficient resource use, longer response times, and possible SLA violations. Although successful, existing scheduling methods, such as the well-known dynamic Johnson sequencing (DJS) algorithm, might not be able to handle the complexity of contemporary cloud systems. These challenges include the requirement for real-time adaptation, diverse resources, virtualization technologies, and shifting workloads [4]. The current issue is the effective distribution of cloud resources across several jobs or workloads, each of which has unique requirements, priorities, and features. To ensure equitable resource allocation, fault tolerance, and service quality, the main goal is to reduce the makespan, which is the sum of the times necessary for each activity to be completed. A next-generation scheduling algorithm that can make use of sophisticated optimization strategies, heuristic approaches, machine learning, and real-time monitoring to adapt to the changing demands of cloud computing is urgently required to solve this issue [5].

1.3. Major Contributions

- The DHJS method, which aims to maximize both efficiency and cost-effectiveness, is frequently used to optimize difficult engineering project scheduling issues. In this study, we offer a technique for greatly enhancing resource availability in the context of parallel processing in a cloud computing environment.

- The Johnson Bayes design principle, which is essential for rational task sequencing, is adhered to in this study’s proposal of a two-tiered technique to improve work scheduling performance.

- A preset collection of varied virtual machines (VMs) is ultimately created as a result. The provisioning of virtual resources can be sped up by using certain VM settings, especially when tasks are being scheduled. Dynamic task allocation is supported at the secondary level by certain VMs that advise dynamic task-scheduling methods. Comparing their efficacy to current approaches, empirical test findings show that they are more successful at managing resource demands and enhancing cloud scheduling performance. One of the most significant difficulties in the field of cloud computing is task scheduling.

- The second significant benefit is the decrease in resource management expenses for numerous tenant user infrastructures situated inside a uniformly distributed data center.

1.4. Paper Organization

We looked at numerous unique scheduling algorithms to identify which characteristics should be considered and which ones should be ignored in a given system. The literature review is arranged using a variety of viewpoints. In Section 2, we present a comprehensive examination of the various scheduling techniques published in the literature during the last 10 years. Using the approach, applications, and parameter-based metrics that were employed, the prior work scheduling initiatives are categorized in Section 3 for easy reference. Section 4 offers a summary and suggestions for more research.

2. Related Work

In distributed computing, task scheduling may be the main concern. Scheduling strategies aim to cut down on waiting periods, and the use of the cloud is expanded through task scheduling to maximize its advantages. Several scheduling algorithms are used to choose a suitable order in which the tasks are to be completed and to shorten the overall task execution time [6]. Some scheduling algorithms designed for the cloud environment differ from conventional scheduling algorithms, which do not apply to cloud frameworks because the cloud is a distributed environment made up of heterogeneous systems [2]. To help in VM selection for application scheduling, Ref. [3] developed a novel hybrid multi-objective heuristic technique that combines the non-dominated sorting genetic algorithm-II (NSGA-II) and the gravitational search algorithm (GSA). There is no VM-spanning scheduling method [7,8]. NSGA-II can expand the search area through exploration, whereas GSA can exploit a good solution to locate the best answer and keep the algorithm from becoming trapped during the optimization process [9]. This hybrid algorithm aims to schedule a larger number of jobs with the least overall energy usage while obtaining the shortest response time and lowest price. There is no scheduling algorithm that works with several VMs [10].

Ref. [11] uses the modified ant colony optimization for load balancing (MACOLB) approach to allocate incoming tasks to the virtual machines (VMs). Jobs are allocated to the VMs according to their processing capacity while keeping the VM workloads balanced (i.e., tasks are distributed in decreasing order, starting with the most powerful VM, and so on). MACOLB is used to shorten the execution time, enhance system load balancing, and choose the optimal resource allocation for batch processes on the public cloud. The biggest flaw in this technique, however, is thought to be the sharing between VMs. To tackle the VM scheduling problem and optimize performance, the join-the-shortest-queue (JSQ) routing method and the Myopic Max Weight scheduling strategy were developed [12] after abandoning this assumption. The idea separates VMs into several groups, each of which corresponds to a certain resource pool, such as the CPU, storage, and space [13]. The user-requested VM type is taken into account as the incoming requests are distributed among the virtual servers based on JSQ, which routes requests to the virtual servers with the shortest queue lengths [14,15,16]. Theoretical studies show that the policies in [17,18] may be effectively made throughput-optimal by choosing appropriately large frame lengths. The simulation results show that the policies are also capable of producing useful latency outcomes [19].

To schedule different workloads, several heuristic algorithms have been developed and put to use in the public cloud, together with an optimal fog-cloud offloading system for massive data optimization across diverse IoT networks [20]. The first come, first served (FCFS) algorithm, the min–max algorithm, the min–min algorithm, and the Suffrage algorithm are among the most significant heuristic approaches. Other important developments include opportunistic load balancing and min–min opportunistic load balancing, shortest task first, balance scheduling (BS), greedy scheduling, and sequence scheduling (SS) [21]. In addition, a scheduling approach that takes constraints into account can be applied. In this situation, the ratio of tasks that are accepted increases, while the ratio of tasks that fail decreases. Modified RRs (MRRs) are suited for high availability, minimize starvation, and minimize delay, in addition to being fair and avoidable [22]. By using a more sophisticated RR scheduling approach, resource usage was increased in [23]. The algorithmic approach proposed in [24] offers better turnaround time and response time compared to existing frequency adaptive divided sequencing (BATS) or enhanced differential evolution algorithm (IDEA) systems. The workloads consist of real scientific workflows from CyberShake and Epigenomics. The public cloud will benefit from efficient resource management. For scheduling algorithms in an offline cloud context, deep reinforcement learning approaches are recommended [25]. Since they deal almost exclusively with CPU and memory-related characteristics, DeepRM and DeepRM2 are modified to address resource scheduling concerns. The scheduling strategy used in this instance is a combination of the shortest job first (SJF), longest job first (LJF), tries, and random approaches. This paper argues in favor of applying reinforcement learning strategies to similar optimization issues [26].

Guaranteeing QoS in real-time resource allocation is difficult. The current situation is compared with statistical and appraised data. Then, based on a matching historical situation, the resources are allocated in the best or nearly the best way possible. In this case, the unused spectrum is distributed using novel design methodologies and supervised machine learning techniques [27]. Classification mining techniques are widely applied in a variety of contexts, including text, image, multimedia, traffic, medicine, big data, and other application scenarios. The implementation of axis-parallel binary DTC is described in [28] using a parallelized structure that significantly reduces the resources needed in terms of space. Sequence classification was initially discussed in some papers using rules made up of interesting patterns found in a set of labeled episodes and corresponding class labels. The authors assessed a pattern’s interest in a certain class of sequences by combining the coherence and support of the pattern. They outlined two different strategies for creating classifiers and employed the patterns they identified to create a trustworthy classification model. The structures that the program discovers properly represent the patterns and have been found to outperform other machine learning methods for training sets. For automatic text categorization, a Bayesian classification approach employing class-specific properties was proposed in [29].

Table 1 summarizes several scheduling techniques and their main emphasis on particular scheduling characteristics. Each technique is labeled with a “Yes” or “No” to indicate whether it focuses on a particular scheduling issue. Some scheduling algorithms, such as the genetic algorithm, round-robin, priority-based job-scheduling algorithm, Dens, and multi-homing, place a strong emphasis on factors like “Makespan” (reducing the maximum completion time) and “Completion Time.” On the other hand, techniques like simulated annealing and reinforcement learning are more geared toward “Total Processing Time” and “Completion Time.” In addition, there are techniques with a combined focus on various scheduling elements, such as round-robin (different example), min–min, and MaxMin. These indications give users information about the benefits and considerations of each scheduling technique, allowing them to select the one that best suits their needs and goals [30].

Table 1.

Research gap findings in related literature based on parameters used in proposed method for overcoming the gap.

After an analysis of Table 1, the following points are observed and addressed in our proposed method:

- Approaches for measuring clouds include batch, interactive, and real-time approaches. Batch systems allow the forecasting of throughput and turnaround times. To grade responsiveness and fairness, a live, interactive system that keeps track of deadlines might be used. The market and performance are the key topics of the third category.

- For task and execution mapping, several rules are taken into consideration since performance-based execution time optimization is the primary goal. The only aspect that counts in a market system is pricing. Two market-based scheduling techniques, the backtrack algorithm and the genetic algorithm, are built on top of it. Static scheduling allows for the usage of any conventional scheduling technique, including round-robin, min–min, and FCFS. Dynamic scheduling may use any heuristic technique, including genetic algorithms and particle swarm optimization.

- In this type of task-based scheduling, which is widely used for repeating tasks, the processing time is updated as each task is finished. In dynamic scheduling, the number of jobs, the location of the machines, and the allocation of resources are not fixed, and the arrival time of the jobs is unknown before submission.

3. Methodology

In a distributed computing environment, various kinds of user-specific tasks must be handled. These jobs should be sequenced in the best possible order to reduce waiting times under the current queuing system. The dynamic heuristic Johnson sequencing algorithm uses a dynamic quantum, (mid burst time of the tasks + maximum burst time)/2, as the time quantum in round-robin scheduling. The Johnson sequencing algorithm is then used to determine the execution sequence. The jobs in the queue are then all scheduled using three server machines, M1, M2, and M3, with J = 1, 2, and 3. Next, we look for the lowest value in the task matrix. According to its processing time matrix, the job that requires the machine with the shortest processing time is carried out first. When machine M1 completes a given job, machine M2 begins executing the remaining tasks [31].
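The dynamic quantum described above can be sketched in a few lines. This is a minimal illustration that assumes "mid burst time" means the median of the burst times; the function name `dynamic_quantum` and the sample values are hypothetical and do not come from the original study.

```python
from statistics import median


def dynamic_quantum(burst_times):
    """Return (median burst time + maximum burst time) / 2.

    Assumption: the "mid burst time" in the text is read as the median.
    """
    if not burst_times:
        raise ValueError("burst_times must not be empty")
    return (median(burst_times) + max(burst_times)) / 2


# Hypothetical burst times (in arbitrary time units) for five queued tasks.
bursts = [4, 9, 2, 7, 6]
print(dynamic_quantum(bursts))  # median 6, maximum 9 -> 7.5
```

Because the quantum is recomputed from the tasks currently in the queue, it grows when long tasks arrive, which reduces the number of round-robin context switches compared with a fixed quantum.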

The queuing model is employed to determine waiting lines. The fundamental model is specified by the service process, the arrival process, and the maximum capacity in terms of the number of places and servers. It is assumed that each job is served according to an exponential distribution, while user requests arrive at the server according to a Poisson distribution. The M/M/c/K queuing model is used to construct a non-preemptive system that has five capacity slots and takes into consideration the two SCs and the waiting position. The authors of one study have integrated the queuing model and the task-scheduling approach into a single document. Inter-arrival times are independent, identically distributed variables with an exponential distribution [32].

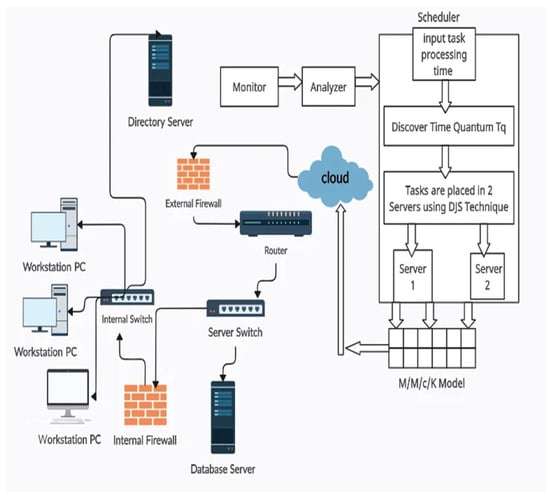

3.1. System Design of Dynamic Johnson Sequencing

In this study, the system model design is represented schematically. The scheduling phase and the queuing model are depicted in this diagram. We use the DJS algorithm to optimize the job order during scheduling. The queuing component, which determines the waiting times, employs the M/M/c/K model. Our overall design is illustrated in Figure 1, where numerous clients ask the broker for platform- or infrastructure-based, storage-based, resource-based, and software-based services [33]. Access management is carried out after identifying the capabilities of the agent, which acts as an intermediary service. SLA resource and user details are reported to the service provider after customer access authorization. The monitor gathers all source data and tasks from the user for a predetermined amount of time. The analyzer module determines the available resources. If the resources are available, requests are sent, and more SLA service is supplied as necessary [34]. The DJS scheduler module arranges the jobs and determines the best order in which to execute them. It also helps to lower the average waiting time. The M/M/c/K queuing system, which is discussed later, is used to dispatch these jobs. By using services and costs efficiently, system share is maximized, complexity is limited, and delays are also decreased [35,36].

Figure 1.

System design of DJS using M/M/c/K Queuing system.

3.2. Queuing Model for a Distributed Computing Environment

To calculate waiting lines, the queuing model is used. The basic model is specified by the service process, the arrival process, and the maximum capacity in terms of the number of places and servers. It is assumed that each job is processed according to an exponential distribution, while user requests arrive at the server according to a Poisson distribution. A non-preemptive system is built with the M/M/c/K queuing model, taking into account the two SCs and the waiting position, with five places of capacity. The job-scheduling method and the queuing model have been combined in a single study by its authors. In Kendall’s notation, the arrival process has inter-arrival times that are independent, identically distributed variables following an exponential distribution. Service times are likewise exponentially distributed. For client arrivals, the arrival pattern and the Poisson distribution are taken into consideration. In this instance, both the rate parameter and the interval after the task’s complete execution are taken into account.

Here, τ is the time between arrivals, and E[τ] is its mean. The parameters are given in Equation (1):

$$\lambda = \frac{1}{E[\tau]} \tag{1}$$

where λ is the arrival rate and E[τ] is the average inter-arrival time.

The service time is measured from the beginning to the end of a service. We assume that service times are also exponentially distributed. Equation (2) considers the customers’ service times and denotes the average or mean service time as E(S).

A queuing system’s arrival rate (λ) is calculated using Equation (1). The arrival rate indicates how rapidly tasks or clients enter the system. It is calculated as the reciprocal of the average inter-arrival time (E[τ]), which denotes the typical time interval between successive arrivals. For efficient resource allocation and system performance analysis in a variety of applications, including telecommunications, computer networks, and manufacturing, it is essential to determine the rate at which tasks enter a service system [37].

In a queuing system, Equation (2) calculates the mean or average service time (E(S)). The mean service time is the length of time it typically takes to complete a task or serve a customer. It is calculated by adding up the service times of all of the system’s customers and then dividing that amount by the number of customers (n). Since it reveals the typical processing speed of tasks, this equation is crucial for determining the effectiveness of a service system. The service rate (μ) in a queuing system is determined by using Equation (3) [38]. The service rate is the rate at which the system completes tasks or serves customers. It is calculated as the reciprocal of the mean service time (E(S)). By measuring how rapidly jobs can be processed during the service periods, this equation aids in evaluating the capacity and effectiveness of the service system. A greater service rate implies faster task processing and possibly lower queue wait times [39].
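The rate definitions described for Equations (1) to (3) can be illustrated with a short sketch: the arrival rate is the reciprocal of the mean inter-arrival time, and the service rate is the reciprocal of the mean service time. The function names and the sample measurements below are assumptions made for illustration only.

```python
def arrival_rate(inter_arrival_times):
    """Arrival rate: reciprocal of the mean inter-arrival time."""
    mean_gap = sum(inter_arrival_times) / len(inter_arrival_times)
    return 1.0 / mean_gap


def service_rate(service_times):
    """Service rate: reciprocal of the mean service time E(S)."""
    mean_service = sum(service_times) / len(service_times)
    return 1.0 / mean_service


# Hypothetical measurements in seconds.
gaps = [2, 4, 6]       # time between successive arrivals
services = [1, 2, 3]   # time spent serving each task

print(arrival_rate(gaps))      # mean gap 4 s -> 0.25 tasks per second
print(service_rate(services))  # mean service 2 s -> 0.5 tasks per second
```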

Equation (4) determines the probability that a queuing system with several servers (S) is idle (P0), that is, the probability that there are no tasks or clients in the system. The equation takes into account the various system states, including the case in which all servers are in use, and is expressed in terms of the arrival rate (λ), service rate (μ), and server count (S). The result is a critical statistic for assessing system effectiveness and calculating resource utilization. Optimizing resource allocation and raising overall system performance require an understanding of the probability that the system is idle. The average number of tasks or clients waiting in the queue (Lq) in a queuing system with several servers (S) is determined by using Equation (5), which considers the number of servers, the service rate, and the arrival rate. The equation gives the average queue size, providing data on the efficiency of task processing and the length of system wait times. For queue management and system performance optimization, Lq stands for the number of jobs that are queued up and waiting to be served.

Equation (6) determines the average number of jobs or customers in the whole system (Ls), which includes both those waiting in the queue and those presently being served. It combines the average number of jobs being actively processed (λ/μ) with the average queue size (Lq). Ls offers a thorough overview of the system’s workload and resource usage, assisting in system design and capacity planning. To balance queue management with service rate optimization, this equation helps evaluate the overall effectiveness and performance of queuing systems. The average wait time for jobs or customers in the queue (Wq) is calculated using Equation (7), by dividing the average queue size (Lq) by the arrival rate (λ). Wq stands for the average length of time a job has to wait before being served. Understanding and reducing queue-related delays in service systems depend on this equation. It helps in improving customer satisfaction, resource allocation efficiency, and system performance optimization [40]. The average time that jobs or customers spend in the whole system (Ws) is calculated by using Equation (8), by adding the inverse of the service rate (1/μ) to the average queue wait time (Wq). Ws represents the average amount of time a task spends in the system, including both active service time and queue time. The system’s overall effectiveness and customer experience must be evaluated using this equation. In addition to reducing waiting times and guaranteeing effective resource use, it aids in understanding and optimizing job flow. Service quality and system performance are both enhanced by lowering Ws [41].
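As a rough illustration of the quantities P0, Lq, Ls, Wq, and Ws, the sketch below evaluates them for an M/M/c/K queue by summing the standard textbook steady-state probabilities. It is not the authors' implementation. One assumption is worth stating loudly: because a finite-capacity system rejects arrivals when full, the sketch uses the effective arrival rate λ(1 − P_K) in Little's law, which is the usual textbook treatment and is not stated in the text. The names `mmck_metrics` and `QueueMetrics` and the example rates are hypothetical.

```python
from dataclasses import dataclass
from math import factorial


@dataclass
class QueueMetrics:
    p0: float       # probability that the system is empty
    p_block: float  # probability that the system is full (arrival rejected)
    lq: float       # mean number of tasks waiting in the queue
    ls: float       # mean number of tasks in the system
    wq: float       # mean waiting time in the queue
    ws: float       # mean time in the system


def mmck_metrics(lam, mu, c, k):
    """Steady-state metrics of an M/M/c/K queue (c servers, capacity k)."""
    if lam <= 0 or mu <= 0:
        raise ValueError("rates must be positive")
    if c < 1 or k < c:
        raise ValueError("need 1 <= c <= k")
    a = lam / mu  # offered load
    # Unnormalised state probabilities for n = 0..k tasks in the system.
    weights = []
    for n in range(k + 1):
        if n < c:
            weights.append(a**n / factorial(n))
        else:
            weights.append(a**n / (factorial(c) * c ** (n - c)))
    total = sum(weights)
    probs = [w / total for w in weights]
    p_block = probs[k]
    lq = sum((n - c) * p for n, p in enumerate(probs) if n > c)
    # Assumption: Little's law with the effective (admitted) arrival rate.
    lam_eff = lam * (1 - p_block)
    ls = lq + lam_eff / mu
    wq = lq / lam_eff
    ws = wq + 1 / mu
    return QueueMetrics(probs[0], p_block, lq, ls, wq, ws)


# Two servers and five places of capacity, with hypothetical rates.
print(mmck_metrics(lam=3.0, mu=2.0, c=2, k=5))
```

Summing the state probabilities directly avoids the special case that closed-form expressions need when the per-server utilization equals one.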

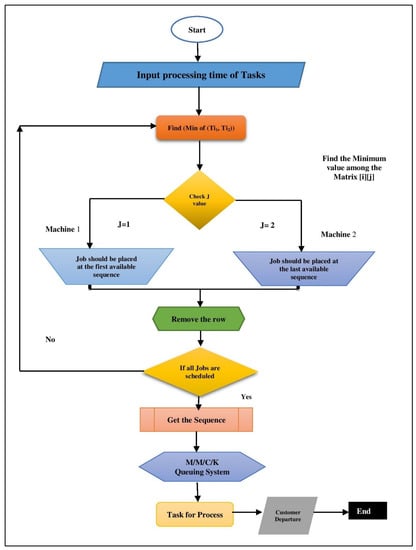

3.3. Johnson Sequencing Algorithm Flowchart

The Johnson sequencing rule, commonly referred to as Johnson's rule, is a scheduling technique used in production and manufacturing contexts to optimize the sequencing of jobs or tasks that must each be processed on two machines, first on machine 1 and then on machine 2, so as to reduce the makespan or total completion time. Finding the most effective sequence for processing these jobs on the two machines is the main goal of Johnson's rule.

A brief explanation of Johnson’s rule is provided below:

Timeframes for job processing: Determine how long each job or task takes on each of the two machines. The sequencing procedure depends on this information.

Partition the jobs: Compare the two processing times of each job. Jobs whose processing time on machine 1 is no greater than their processing time on machine 2 form the first group; the remaining jobs form the second group.

Sort each group: Rank the first group in non-decreasing order of processing time on machine 1, and rank the second group in non-increasing order of processing time on machine 2 [42].

Organize the jobs: The first group followed by the second group gives the best order for the two machines to process the jobs. To schedule the jobs, go through this order, beginning with the job at the top of the list.

When one needs to minimize the time it takes to execute a series of operations across several computers or when there are two machines with varied processing speeds, Johnson’s rule comes in very handy. Overall completion time can be reduced by sequencing the tasks in the most effective sequence to increase productivity and resource utilization in manufacturing or production operations [43].
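The steps above can be sketched as follows. This is a minimal illustration of the classical two-machine Johnson's rule, not the authors' implementation; the function name `johnson_order` is hypothetical, and the sample processing times are assumed values derived from the In–Out times reported later in Table 5.

```python
def johnson_order(times):
    """Return a Johnson's-rule job order for two machines.

    times maps a job name to (time on machine 1, time on machine 2).
    """
    # Group 1: machine-1 time is no greater than machine-2 time.
    first = [job for job, (m1, m2) in times.items() if m1 <= m2]
    # Group 2: all remaining jobs.
    second = [job for job, (m1, m2) in times.items() if m1 > m2]
    first.sort(key=lambda job: times[job][0])                 # ascending machine-1 time
    second.sort(key=lambda job: times[job][1], reverse=True)  # descending machine-2 time
    return first + second


# Assumed processing times in ms, derived from Table 5 for illustration.
sample = {
    "J1": (0.08, 0.11),
    "J2": (0.13, 0.14),
    "J3": (0.20, 0.16),
    "J4": (0.28, 0.14),
    "J5": (0.13, 0.36),
}
print(johnson_order(sample))  # ['J1', 'J2', 'J5', 'J3', 'J4']
```

Python's sort is stable, so jobs with equal keys (here J2 and J5 on machine 1) keep their input order.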

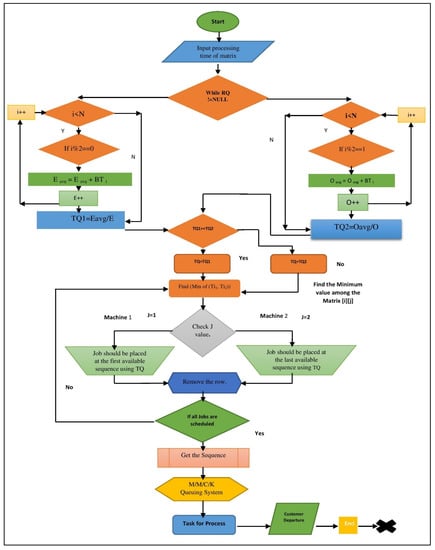

3.4. Dynamic Johnson Sequencing Algorithm Flowchart

The dynamic Johnson sequencing algorithm (DJS) is a combination of dynamic even–odd round-robin scheduling and the Johnson sequencing algorithm; see Figure 2 and Figure 3. First, the jobs that arrive in the ready queue are stored in a dynamic array. Then, we calculate the average burst time of the tasks at even positions and the average burst time of the tasks at odd positions. If the average for the even positions is greater than the average for the odd positions, the even-position average is taken as the time quantum (Tq), and vice versa. After that, the Johnson sequencing algorithm is applied to find the execution sequence. Then, according to that time quantum, all of the tasks in the queue are scheduled by using two server machines, M1 and M2, with J = 1, 2, 3,… Next, we find the minimum value in the processing time matrix of the tasks. The machine with the minimum processing time is considered first, and the corresponding task executes first according to the processing time matrix. When a specific task has been executed by machine M1, machine M2 starts executing the remaining processing time of that task. After the last task has been executed on machine M2, all tasks are complete. In this way, all of the tasks are executed according to the in-time and out-time of each specific task. When all tasks are scheduled, they are passed through the M/M/c/K model. After passing through the M/M/c/K system, the executed tasks are handed over to the customer.
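The time quantum selection described above can be sketched as below. This is only an illustration under stated assumptions: positions are counted from 0 as in Algorithm 1, the function name `even_odd_time_quantum` is hypothetical, and the burst times in the usage line are made-up values.

```python
def even_odd_time_quantum(burst_times):
    """Pick Tq as the larger of the even-position and odd-position averages."""
    even = burst_times[0::2]   # positions 0, 2, 4, ...
    odd = burst_times[1::2]    # positions 1, 3, 5, ...
    if not even:
        raise ValueError("the ready queue is empty")
    avg_even = sum(even) / len(even)
    # With a single job there is no odd position; fall back to 0.
    avg_odd = sum(odd) / len(odd) if odd else 0.0
    return avg_even if avg_even > avg_odd else avg_odd


# Illustrative burst times: even positions average 6, odd positions average 4.
print(even_odd_time_quantum([4, 6, 8, 2]))  # 6.0
```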

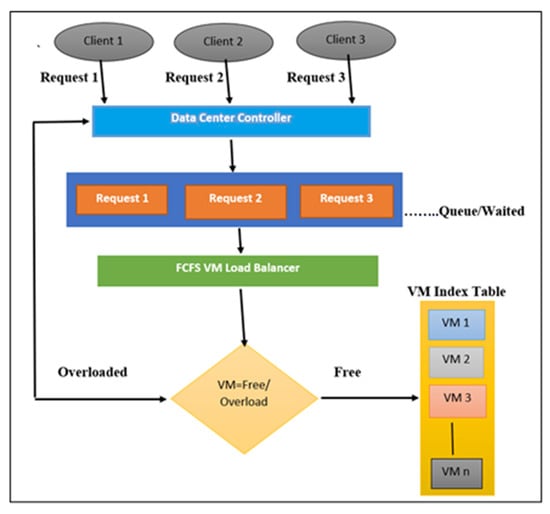

3.5. FCFS Algorithm Flowchart

In the first-come, first-served (FCFS) scheduling method, the job that arrives first in the ready queue is carried out first, followed by the others in their order of arrival. FCFS is commonly used in operating systems when a large number of activities are scheduled for execution and no priority information is applied. It is a non-preemptive scheduling technique, as seen in Figure 4: once a job has started, it runs to completion before the next job in the queue is executed [44].

Figure 2.

Flow chart of Johnson Sequencing Algorithm.

Figure 3.

Flowchart showing OptiDJS+ algorithm for optimisation of multiple task allocation.

Figure 4 shows the FCFS flowchart while the DJS algorithm is presented in Algorithm 1.

Figure 4.

Flow chart of FCFS Algorithm.

| Algorithm 1. Dynamic Johnson Sequencing (DJS) |
|---|
| 1. Input: (b11, b21), (b12, b22), ..., (b1n, b2n) |
| 2. Output: an optimal schedule σ |
| Step 1 // n = number of jobs and Bt = job burst time |
| 3. arr[] = Bt of all tasks waiting in the ready queue |
| 4. for i = 0, 2, 4, ... up to n − 1: Sum1 = Sum1 + Bt(i) |
| 5. for j = 1, 3, 5, ... up to n − 1: Sum2 = Sum2 + Bt(j) |
| 6. Tqe = Sum1 / (number of even positions); Tqo = Sum2 / (number of odd positions); if Tqe > Tqo then Tq = Tqe else Tq = Tqo |
| 7. // Assign Tq to jobs 1 to n in the queue: for i = 1 to n, Ji → Tq |
| 8. J1 ← {Jj ∈ J: b1j <= b2j} |
| 9. J2 ← {Jj ∈ J: b1j > b2j} |
| Step 2 |
| 10. Place the tasks in J1 in non-decreasing order of b1j and label this sequence σ(1) |
| 11. Place the tasks in J2 in non-increasing order of b2j |
| 12. Label this sequence σ(2) |
| Step 3 |
| 13. σ ← (σ(1) followed by σ(2)) |
| 14. Return σ |

4. Experimental Analysis

The number of tasks in this work is 5, and the processing times for each task are entered into the matrix shown in Table 2. A simulated cloud computing environment is used to represent a real-world cloud computing situation and to evaluate the suggested strategy. Detailed data center setups are provided for the specially designed simulation experiments, including the size, functionality, and number of hosts, processing elements, and data centers. Each part of the data center applies its own throughput, storage, and space allocation policies for hosts and virtual machines. Each entry of the matrix in Table 2 gives the execution length of one of the five tasks. The diversity in the simulated scenario was influenced by data size, task loads, the bandwidth connecting storage and computing resources, and the number of files needed for each job. The number of data files required by each job was drawn at random between x and y, the minimum and maximum values, respectively, where x is assumed to be 1. Hence, a task can require between one and y files. Files must be at least 100 MB in size. For distribution, each data file was replicated across several storage systems. In this experimental analysis, FCFS, max–min, Johnson sequencing, and dynamic Johnson sequencing using two machines were applied to the data in Table 2. After the average waiting time, total turnaround time, Lq, Ls, Wq, and Ws were calculated using the M/M/c/K model, it was observed that DJS using two servers produced better performance than the other algorithms [45].

Table 2.

Processing time Matrix.

After this matrix is obtained, DJS is applied to obtain the optimized sequence, and then M/M/c/K is used to model the waiting queues, as displayed in Figure 1. In the cloud computing service model, users submit requests for execution to a service provider. Therefore, it is the duty of the cloud service provider to schedule the varied demands and control services effectively [46]. To the authors' understanding, the majority of current work schedules jobs only after they have been added to an existing task list. However, the true process of task planning and resource management starts with how the service provider handles tasks as they arrive.

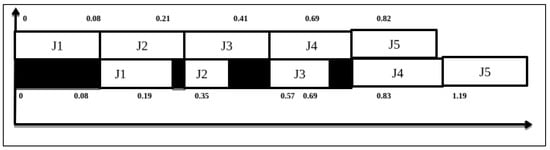

4.1. Experimental Analysis of FCFS Using Two Machines

In the FCFS algorithm, the burst time of every job is divided across the two machines. As indicated in Table 3, the jobs in the ready queues of both servers are carried out in order of arrival according to their IN–OUT times. Table 4 shows that task J1 executes on machine 1 with an INTIME of 0 ms and an OUTTIME of 0.08 ms. When task J1 finishes on machine 1, it runs on machine 2 from 0.08 to 0.19 ms. All other tasks are executed in the same fashion. Equations (1) and (2) are used to determine the mean service time for the FCFS scheduling method with two machines. The mean service time and the average service rate may be estimated once the service time of each process has been read from the Gantt chart in Figure 5. Here, we see that the execution of tasks J1 to J5 spanned 0 to 1.19 ms, which is longer than with the DJS method. The findings are computed using Equations (1)–(8), and the results are given in Table 5, Table 6, Table 7, Table 8, Table 9, Table 10 and Table 11 [47].
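The In–Out bookkeeping described above can be sketched as follows. This is a minimal illustration, assuming that every job runs on machine 1 and then on machine 2 and that each machine handles one job at a time; the function name `two_machine_schedule` is hypothetical, and the processing times are assumed values derived from the In–Out tables in this section.

```python
def two_machine_schedule(order, times):
    """Compute (job, in1, out1, in2, out2) rows for a fixed job order."""
    rows = []
    m1_free = 0.0   # time at which machine 1 becomes free
    m2_free = 0.0   # time at which machine 2 becomes free
    for job in order:
        p1, p2 = times[job]
        in1, out1 = m1_free, m1_free + p1
        in2 = max(out1, m2_free)    # wait for both the job and machine 2
        out2 = in2 + p2
        rows.append((job, in1, out1, in2, out2))
        m1_free, m2_free = out1, out2
    return rows


# Assumed processing times in ms (machine 1, machine 2), for illustration.
times = {
    "J1": (0.08, 0.11),
    "J2": (0.13, 0.14),
    "J3": (0.20, 0.16),
    "J4": (0.28, 0.14),
    "J5": (0.13, 0.36),
}
fcfs_rows = two_machine_schedule(["J1", "J2", "J3", "J4", "J5"], times)
for job, in1, out1, in2, out2 in fcfs_rows:
    print(job, round(in1, 2), round(out1, 2), round(in2, 2), round(out2, 2))
```

With these assumed inputs and arrival order J1 to J5, the last job leaves machine 2 at about 1.19 ms, in line with the FCFS range quoted above.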

Table 3.

Initial Processing Time of different tasks.

Table 4.

In–Out time of Two Machines of different tasks in FCFS.

Figure 5.

Gantt chart of FCFS Algorithm.

Service time and service rate calculation:

Here, Service Time = Completion Time − Arrival Time

For Job J1: (0.19 − 0) = 0.19

Job J2: (0.35 − 0.08) = 0.27

Job J3: (0.87 − 0.34) = 0.53

Job J4: (1.01 − 0.54) = 0.47

Job J5: (0.71 − 0.21) = 0.50.

So, the mean service time is E(S) = (0.19 + 0.27 + 0.53 + 0.47 + 0.50)/5 = 0.392 ms, and the service rate will be

μ = 1/(E(S)) = 1/0.392 = 2.551 jobs per ms
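The arithmetic above can be checked with a short script. This is a sketch only; the helper name `mean_service_time` is hypothetical, and the (arrival, completion) pairs are the values listed in the calculation above.

```python
def mean_service_time(intervals):
    """Average of (completion - arrival) over all jobs."""
    return sum(done - arrived for arrived, done in intervals) / len(intervals)


# (arrival time, completion time) in ms for J1 to J5, as listed above.
fcfs_intervals = [(0, 0.19), (0.08, 0.35), (0.34, 0.87), (0.54, 1.01), (0.21, 0.71)]

e_s = mean_service_time(fcfs_intervals)   # mean service time E(S)
mu = 1 / e_s                              # service rate, jobs per ms
print(round(e_s, 3), round(mu, 3))        # 0.392 2.551
```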

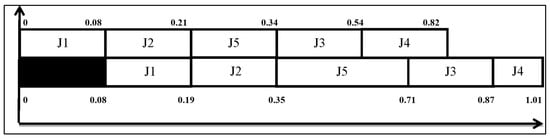

4.2. Experimental Analysis of Johnson Sequencing Using Two Machines

After this matrix is obtained, the Johnson sequencing algorithm is applied to obtain the optimized sequence, and M/M/c/K is used to model the waiting queues, as shown in the Gantt chart in Figure 6. With reference to Table 3, a task is a set of instructions that is being carried out, and a process is a running instance of such instructions or programs. Processes move through several phases depending on where they are in the execution cycle. One process may contain many threads that carry out various tasks. Computers typically use batch processing to finish high-volume, repetitive data procedures, such as those in Table 3. When applied to individual data transactions, many data processing operations, like backups, filtering, and sorting, may be computationally expensive and inefficient.

Figure 6.

Gantt chart of Johnson Sequencing Algorithm.

According to Table 5, each task is carried out on the first machine and then on the second machine.

Table 5.

In–Out time of Two Machines of different tasks in the Johnson Sequencing Algorithm.

| Tasks | Machine 1 IN TIME (ms) | Machine 1 OUT TIME (ms) | Machine 2 IN TIME (ms) | Machine 2 OUT TIME (ms) |
|---|---|---|---|---|
| J1 | 0 | 0.08 | 0.08 | 0.19 |
| J2 | 0.08 | 0.21 | 0.21 | 0.35 |
| J5 | 0.21 | 0.34 | 0.35 | 0.71 |
| J3 | 0.34 | 0.54 | 0.71 | 0.87 |
| J4 | 0.54 | 0.82 | 0.87 | 1.01 |

The mean service time and average service rate can be calculated once the service times for each of the processes shown in Figure 6 are determined. Table 5 follows Johnson's rule for the case of two machines. The total burst time of each task is distributed between the two machines. Among the two machines, we find the task with the smallest processing time, and that task is put in the queue first for execution. Here, we can see from Table 3 that task J1 has the smallest processing time, i.e., 0.08 ms, on machine 1. So, J1 will execute first. After that, task J2 has the next smallest processing time, i.e., 0.13 ms on machine 1. So, task J2 will execute after task J1, and so on. The task execution sequence is therefore J1-J2-J5-J3-J4. In this application of the Johnson sequencing technique using two machines, the mean service time is calculated using Equations (1) and (2) [48], with the service time of each process read from the Gantt chart in Figure 6. Here, we noticed that the execution of tasks J1 to J5 spanned 0 to 1.01 ms, which is shorter than the 1.19 ms obtained with FCFS using two machines. Equations (1)–(8) are used to calculate the findings, and the results are shown in Table 9 [49,50].

Now, using the Gantt chart in Figure 6, we may determine the average service rate following the Johnson sequencing method.

Service time and service rate calculation in milliseconds:

For Job J1: (0.19 − 0) = 0.19

Job J2: (0.35 − 0.08) = 0.27

Job J5: (0.71 − 0.21) = 0.50

Job J3: (0.87 − 0.34) = 0.53

Job J4: (1.01 − 0.54) = 0.47

Now, let us find the average of the service times of all of the jobs, known as E(S).

E(S) = (0.19 + 0.27 + 0.50 + 0.53 + 0.47)/5 = 0.392 ms

Here, we need to find the value of µ.

µ = 1/(E(S)) = 1/0.392 = 2.551 jobs per ms

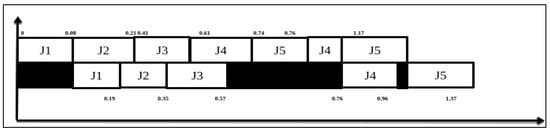

4.3. Experimental Analysis of Max–Min Using Two Machines

A variant of the standard round-robin scheduling method used in operating systems for CPU scheduling is called max–min round-robin. By favoring processes with shorter execution durations while preserving the essential elements of round-robin scheduling, we can ensure equitable resource allocation.

The max–min round-robin algorithm’s core principle is that giving processes with shorter execution times more chances to execute can result in a more equitable distribution of CPU time. It tries to avoid starving processes by regularly altering the time quantum depending on waiting periods (see Table 6).

While max–min round-robin can provide fairness, it is crucial to keep in mind that it may not always be the most effective scheduling algorithm in terms of total system throughput or reaction time. The ideal scheduling algorithm will depend on the particular demands and traits of the system and the processes it will be managing.

Table 6.

In–Out time of Two Machines of different tasks in Max–Min.

| Tasks | Machine 1 IN TIME (ms) | Machine 1 OUT TIME (ms) | Machine 2 IN TIME (ms) | Machine 2 OUT TIME (ms) |
|---|---|---|---|---|
| J1 | 0 | 0.08 | 0.08 | 0.19 |
| J2 | 0.08 | 0.21 | 0.21 | 0.35 |
| J3 | 0.21 | 0.41 | 0.41 | 0.57 |
| J4 | 0.41 | 0.61 | 0.76 | 0.96 |
| J5 | 0.61 | 0.74 | 1.17 | 1.37 |
| J4 | 0.74 | 0.76 | IDLE | IDLE |
| J5 | 1.01 | 1.17 | IDLE | IDLE |

Now, using a Gantt chart like the one in Figure 7, we may determine the average service rate following the max–min sequencing method.

Figure 7.

Gantt chart of Max–Min Algorithm.

Service time and service rate calculation in milliseconds is given as follows:

For Job J1: (0.19 − 0) = 0.19, for Job J2: (0.46 − 0.19) = 0.27, for Job J3: (0.82 − 0.46) = 0.36, for Job J4: (1.18 − 0.76) = 0.42, and for Job J5: (1.67 − 1.06) = 0.61. Now, the average service time of all the jobs, known as E(S), is calculated as:

E(S) = (0.19 + 0.27 + 0.36 + 0.42 + 0.61)/5 = 0.37 ms.

Since the value of µ is given in terms of E(S), it can be calculated as:

µ = 1/(E(S)) = 1/0.37 = 2.7 jobs per ms.

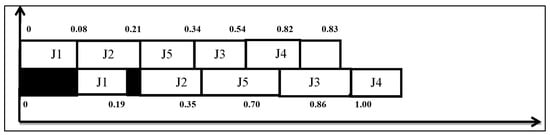

4.4. Experimental Analysis of Dynamic Johnson Sequencing Using Two Machines

After the service times for each of the processes indicated in the Gantt chart in Figure 8 are known, the mean service time and average service rate may be determined. Johnson's rule must be modified if there are three machines instead of only two, and an optimal solution is then guaranteed only under additional assumptions. Here, each task must be processed by the two machines, M1 and M2, in that sequence. The jobs are provided along with an estimated processing time. The task groups, and the jobs inside each task group, are ordered by combining even–odd round-robin with the Johnson sequencing assignment approach. Each task group is distinct. The Gantt chart (Figure 8) shows how job activities on each machine change over time. For each machine, the chart consists of horizontal bars that show the occupied and idle periods for a given schedule. It may be used to verify the makespan, machine idle time, and task waiting times of the schedule. Although it is a useful presentation tool, a Gantt chart does not offer a systematic method for improving schedules; the analyst must rely on intuition to construct a better one. The DJS schedule is given in Table 7. The total burst duration of each task is split across the two servers. The job with the shortest processing time among the two servers is identified and placed first in the queue for execution. Table 7 shows that task J1 has the shortest processing time on machine 1, at 0.08 ms. Thus, J1 will run first. Following that, task J2 has the next shortest processing time on machine 1, at 0.13 ms, so it runs second. Task J5 runs next, followed by tasks J3 and J4, and the portion of J5 that exceeds the time quantum runs last. Thus, the order of task execution is J1-J2-J5-J3-J4-J5. In this application of the dynamic Johnson sequencing method with two machines, the mean service time is calculated using Equations (1) and (2), with the service time of each process read from the Gantt chart in Figure 8. Here, we noticed that the execution of tasks J1 to J5 spanned 0 to 1.00 ms, which is shorter than with FCFS (1.19 ms), Johnson sequencing (1.01 ms), and max–min (1.37 ms). Equations (1)–(8) are used to calculate the findings, and the results are shown in Table 7, Table 8, Table 9 and Table 10.

Figure 8.

Gantt chart of Dynamic Johnson Sequencing Algorithm.

Table 7.

In–Out time of Two Machines of different tasks in the Dynamic Johnson Sequencing Algorithm (DJS).

| Tasks | Machine 1 IN TIME (ms) | Machine 1 OUT TIME (ms) | Machine 2 IN TIME (ms) | Machine 2 OUT TIME (ms) |
|---|---|---|---|---|
| J1 | 0 | 0.08 | 0.08 | 0.19 |
| J2 | 0.08 | 0.21 | 0.21 | 0.35 |
| J5 | 0.21 | 0.34 | 0.35 | 0.70 |
| J3 | 0.34 | 0.54 | 0.70 | 0.86 |
| J4 | 0.54 | 0.82 | 0.86 | 1.00 |
| J5 | 0.82 | 0.83 | IDLE | IDLE |

Further, we can design a Gantt chart for the dynamic Johnson sequencing algorithm using this time quantum with the even–odd round-robin algorithm.

Now, using the Gantt chart in Figure 8, we may determine the average service rate in accordance with the dynamic Johnson sequencing method.

Service time and service rate calculation:

For Job J1: (0.19 − 0) = 0.19, for Job J2: (0.35 − 0.08) = 0.27, for Job J5: (0.70 − 0.21) + (0.83 − 0.82) = 0.50, for Job J3: (0.86 − 0.34) = 0.52, and for Job J4: (1.00 − 0.54) = 0.46. Now, the average service time of all the jobs, known as E(S), is calculated as:

E(S) = (0.19 + 0.27 + 0.50 + 0.52 + 0.46)/5 = 0.388 ms

Here, we need to find the value of µ, which is given in terms of E(S); thus:

µ = 1/(E(S)) = 1/0.388 = 2.577 jobs per ms

Table 8 through Table 12 are connected: they give the solutions of the corresponding equations for comparing the DJS algorithm with the FCFS, max–min, and Johnson sequencing methods using a multi-server machine. In this case, the DJS algorithm reduces the service time and the average waiting time. The variables Lq, Ls, Wq, and Ws are linked through Equations (1)–(8). The average queue length and the number of jobs in the system are shown in Figure 8, Figure 9, Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14. The service rate is µ = 1/E(S). From the average arrival rate (λ) and the average service time E(S), obtained from Table 7, Table 8, Table 9 and Table 10, we can calculate the probability (P0) that the system is idle using Equation (4). The value of P0 is then used in Equation (5) to calculate the average number of jobs in the queue (Lq). The average number of jobs in the system (Ls) is then calculated from Lq using Equation (6). Equation (7) is then used to compute the average waiting time of tasks in the queue (Wq), and Equation (8) is used to obtain the average time that tasks spend in the system (Ws). In this result set, we can see that the values of Lq, Ls, Wq, and Ws are lowest for dynamic Johnson sequencing using two server machines, as shown in Table 11. Table 8 is for FCFS; Table 9 is for Johnson sequencing using two machines; Table 10 is for max–min sequencing using two servers; and Table 11 is for DJS scheduling using two servers.

Table 12.

t-Test Results for Lq in terms of Mean, Std. Deviation and Std. Error of the Mean.

Table 8.

Results of using FCFS Algorithm using 2 Servers.

| Arrival rate | Lq | Ls | Wq | Ws |
|---|---|---|---|---|
| λ = 2 | 0.127 | 0.860 | 0.058 | 0.428 |
| λ = 3 | 0.502 | 1.715 | 0.164 | 0.534 |
| λ = 4 | 1.872 | 3.380 | 0.447 | 0.817 |
| λ = 5 | 11.972 | 12.990 | 2.198 | 2.568 |

Table 9.

Results of using Johnson Sequencing Algorithm using 2 Servers.

| Arrival rate | Lq | Ls | Wq | Ws |
|---|---|---|---|---|
| λ = 2 | 0.133 | 0.923 | 0.063 | 0.441 |
| λ = 3 | 0.563 | 1.876 | 0.18 | 0.558 |
| λ = 4 | 2.133 | 3.756 | 0.51 | 0.888 |
| λ = 5 | 16.532 | 18.619 | 3.285 | 3.663 |

Table 10.

Results of using Max–Min Algorithm using 2 Servers.

| Arrival rate | Lq | Ls | Wq | Ws |
|---|---|---|---|---|
| λ = 2 | 0.682 | 1.472 | 0.347 | 0.693 |
| λ = 3 | 1.532 | 2.482 | 0.497 | 0.873 |
| λ = 4 | 2.243 | 3.497 | 0.552 | 0.898 |
| λ = 5 | 17.567 | 19.226 | 4.095 | 40.443 |

Table 11.

Results of using Dynamic Johnson Sequencing Algorithm using 2 Servers.

| Arrival rate | Lq | Ls | Wq | Ws |
|---|---|---|---|---|
| λ = 2 | 0.047 | 0.744 | 0.025 | 0.377 |
| λ = 3 | 0.431 | 1.476 | 0.141 | 0.448 |
| λ = 4 | 1.382 | 2.733 | 0.337 | 0.695 |
| λ = 5 | 4.908 | 6.473 | 0.950 | 1.386 |

5. Result and Discussion

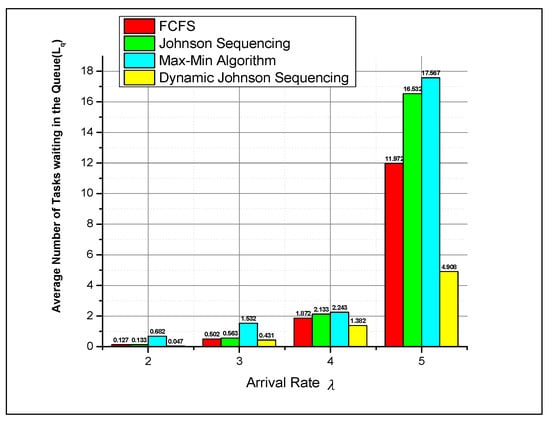

Figure 9 displays the average number of jobs in the queue (Lq) as a function of the average arrival rate. Compared with FCFS, Johnson sequencing, and max–min scheduling, dynamic Johnson sequencing using two machines has a reduced task waiting count. The metrics employed in this analysis were the best, worst, average, and standard deviation values, and the best outcomes are displayed. The results show that for a 100-task size, DJS matched or outperformed all the other algorithms. DJS's advantage became more obvious with larger task sizes, which likely makes it the best option for workloads of more than 100 tasks. The mean, best, and worst makespans illustrate more fully how effective DJS is, as shown in Figure 9.

Figure 9.

Experimental analysis of the average number of jobs in the queue (Lq).

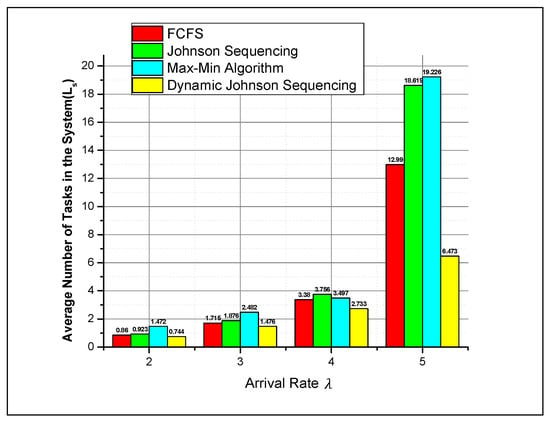

The average number of tasks in the system (Ls) is displayed in Figure 10. It can be observed that dynamic Johnson sequencing has a lower task count than Johnson sequencing, max–min, and FCFS task scheduling. The conventional DE method employs a fixed positive value for the scaling factor that remains constant throughout the optimization process. Therefore, if the population variance is significant and the value of this scaling factor is also high, the step size will cause the solution to diverge from the current answer. This may occur even at the beginning of the optimization phase, where it encourages the algorithm to scan the search space thoroughly for promising regions that may contain the best-so-far solution. As the iteration count increases, the population diversity may decline while the scaling factor remains the same, as shown in Figure 10.

Figure 10.

Experimental analysis of the average number of jobs in the system (Ls).

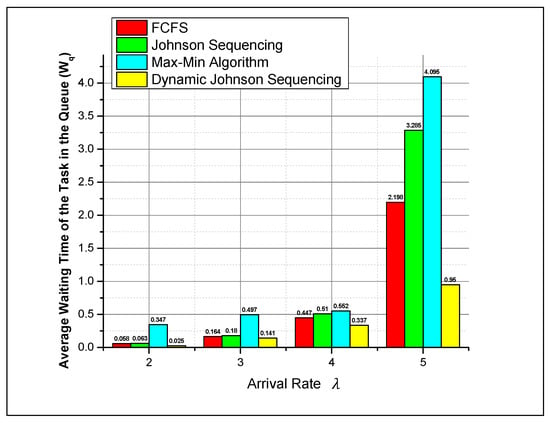

The average waiting time for jobs in the queue (Wq) is displayed in Figure 11. Here, we can see that dynamic Johnson sequencing has a shorter queue waiting time than Johnson sequencing and FCFS task scheduling. Because paired mapping is not covered in the existing literature, the suggested technique cannot be compared directly with published results; we therefore ran the DJS, FCFS, max–min, and Johnson sequencing algorithms under the same conditions to compare them. Although waiting time is correlated with the number of clouds in each dataset, it is important to highlight that the correlation is not obvious. The suggested approach, which allocates cloud resources by workload matching, can increase cloud resources quickly, as shown in Figure 11.

Figure 11.

Experimental analysis of the average waiting time of the jobs in the queue (Wq).

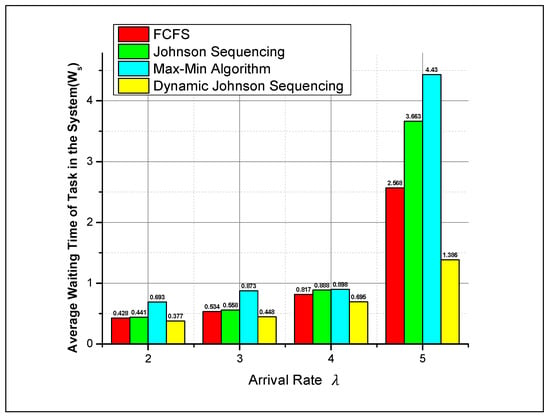

Figure 12 shows the average time that jobs spend in the system (Ws). Here, we can see that max–min, FCFS, and Johnson sequencing task scheduling have longer waiting times than dynamic Johnson sequencing (DJS). A dynamic Johnson sequencing algorithm was created that takes into consideration the properties of multiprocessor scheduling by combining Johnson's rule with the genetic algorithm. To speed up the algorithm's convergence, new crossover and mutation procedures were developed. Johnson's rule was used to optimize each machine's schedule throughout the decoding process. The effectiveness of dynamic heuristic Johnson sequencing was compared to that of the list scheduling approach and an upgraded list scheduling methodology using simulations.

Figure 12.

Experimental analysis of the average waiting time of the jobs in the system (Ws).

6. Statistical Analysis Using t-Test

Table 12, Table 13, Table 14, Table 15, Table 16, Table 17, Table 18, Table 19, Table 20, Table 21, Table 22 and Table 23 present the results of paired t-tests conducted to determine whether the results obtained using the proposed algorithm, DJS, differ significantly from those obtained using the other algorithms, including Johnson sequencing using two servers, with the same stopping criteria for all task instances. The tables report the mean, std. deviation, std. error of the mean, correlation, p-value, and t-test value. A difference is considered significant when the p-value is lower than the significance level α = 0.05. The p-values for nearly all of the datasets, as shown in the tables below, were smaller than this α value, demonstrating a significant improvement of the proposed DJS method over the other algorithms used for comparison. It follows that DJS performs better than the alternative job-scheduling algorithms on the metrics tested.
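The mechanics of a paired t-test can be sketched with the Python standard library. This is an illustration only: the inputs behind Tables 12–23 are not reproduced here, so the Lq columns of Table 8 (FCFS) and Table 11 (DJS) are used purely as sample input, and the function name `paired_t` is hypothetical. The p-value lookup from the t distribution is omitted.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(sample_a, sample_b):
    """Return (t statistic, degrees of freedom, mean difference)."""
    if len(sample_a) != len(sample_b):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(sample_a, sample_b)]
    n = len(diffs)
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / sqrt(n)   # standard error of the mean difference
    return mean_diff / std_err, n - 1, mean_diff


# Sample input: Lq for arrival rates 2 to 5 (Table 8 and Table 11).
fcfs_lq = [0.127, 0.502, 1.872, 11.972]
djs_lq = [0.047, 0.431, 1.382, 4.908]

t_stat, dof, mean_diff = paired_t(fcfs_lq, djs_lq)
print(t_stat, dof, mean_diff)
```

The t statistic would then be compared against the t distribution with `dof` degrees of freedom to obtain the p-value, for example with a statistics package.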

Table 13.

t-Test Results for Lq in terms of Correlation and p-Value.

Table 14.

t-Test Results for Lq in terms of Paired Differences.

Table 15.

t-Test Results for Ls in terms of Mean, Std. Deviation and Std. Error of the Mean.

Table 16.

t-Test Results for Ls in terms of Correlation and p-Value.

Table 17.

t-Test Results for Ls in terms of Paired Differences.

Table 18.

t-Test Results for Wq in terms of Mean, Std. Deviation and Std. Error of the Mean.

Table 19.

t-Test Results for Wq in terms of Correlation and p-Value.

Table 20.

t-Test Results for Wq in terms of Paired Differences.

Table 21.

t-Test Results for Ws in terms of Mean, Std. Deviation and Std. Error of the Mean.

Table 22.

t-Test Results for Ws in terms of Correlation and p-Value.

Table 23.

t-Test Results for Ws in terms of Paired Differences.

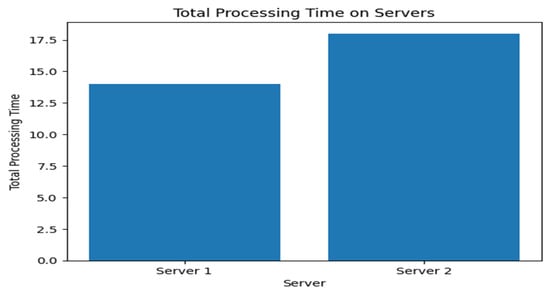

Figure 13 shows how workloads are split between servers 1 and 2 to balance the overall processing time, giving each server a total processing time of 16 units. The tasks allocated to each server are also shown.

Figure 13.

Total processing comparison between servers.

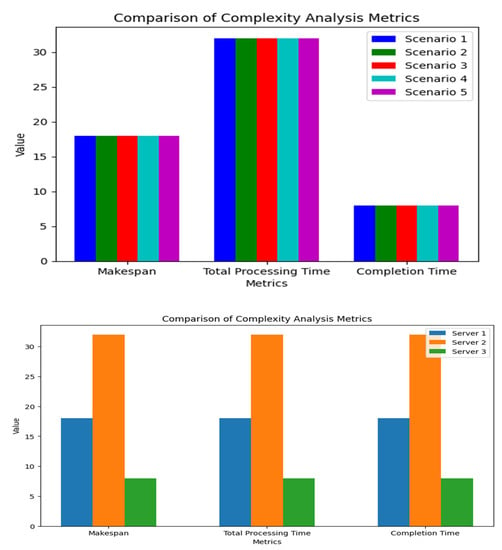

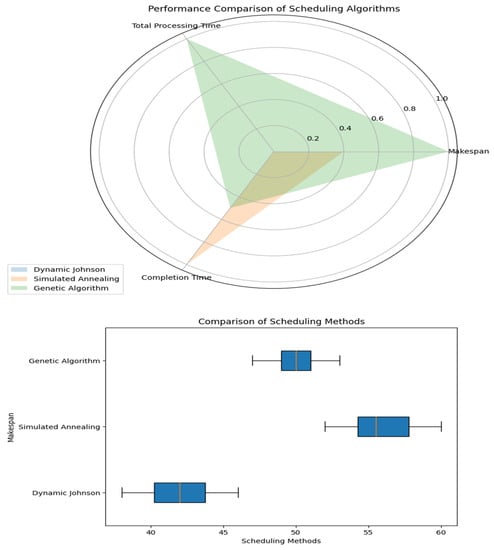

Figure 14 compares DJS with two other techniques (Method A and Method B). The code behind it creates a comparison graph for the makespan, total processing time, and completion time. It also performs a paired t-test between DJS and Method A and visualizes the resulting p-value. The p-value for the paired t-test between Scenario 1 and Scenario 2 is displayed in a bar chart created with the visualize_p_value function. A red dashed line in the graph marks a significance level of 0.05. By extending the visualize_p_value function as necessary, one can add more scenarios and their p-values to the chart. We ran a paired t-test between Scenario 1 and Scenario 2 and reported the results, including the t-statistic and p-value; the program may be extended to carry out t-tests for other pairs of scenarios. The effectiveness of three scheduling techniques (genetic algorithm, simulated annealing, and dynamic Johnson) is shown via a radar chart across three metrics (makespan, total processing time, and completion time). To provide unbiased comparisons across metrics, the data were normalized. Once the angles for the chart were computed, the data were plotted with plt.polar and ax.fill to produce the radar chart. To finish the visualization, the labels, title, and legend were added. The radar chart allows the performance of the scheduling algorithms to be compared visually across the metrics. Because each algorithm is drawn as a distinctly colored region, it is easy to observe which approach outperforms the others.
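The normalization step mentioned above is not specified in detail, so the following sketch assumes a simple scaling of each metric by its largest value across the methods. The function name `normalize_by_max` and all numbers are hypothetical and serve only to show the shape of the data that a radar chart would receive.

```python
def normalize_by_max(scores):
    """Scale every metric to the range (0, 1] by dividing by its maximum."""
    metrics = list(next(iter(scores.values())).keys())
    peak = {m: max(row[m] for row in scores.values()) for m in metrics}
    return {
        name: {m: row[m] / peak[m] for m in metrics}
        for name, row in scores.items()
    }


# Hypothetical raw values, for illustration only (not results from the paper).
raw = {
    "Genetic algorithm": {"makespan": 40.0, "total_processing": 90.0, "completion": 50.0},
    "Simulated annealing": {"makespan": 50.0, "total_processing": 80.0, "completion": 60.0},
    "Dynamic Johnson": {"makespan": 30.0, "total_processing": 60.0, "completion": 45.0},
}
normalized = normalize_by_max(raw)
for name, row in normalized.items():
    print(name, {m: round(v, 2) for m, v in row.items()})
```

After this step every metric shares the same scale, so no single metric dominates the plotted area.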

Figure 14.

Overall Result of Complexity Analysis between Proposed Method and Two Mainstream Scheduling Methods in Cloud.

7. Conclusions

In conclusion, OptiDJS+ represents a substantial development in the field of resource scheduling in cloud computing settings, particularly when dealing with distributed overloading scenarios. As the successor to the dynamic Johnson sequencing (DJS) algorithm, it builds on that algorithm's fundamental concepts to maximize scheduling effectiveness and resource utilization. OptiDJS+ helps cloud-based systems handle rising resource demands effectively by adjusting to dynamic workloads and reallocating resources intelligently.

Modern optimization methods, real-time flexibility, and the incorporation of machine learning algorithms for predictive resource allocation are some of the key features of OptiDJS+. These developments allow OptiDJS+ to reduce the makespan, optimize task sequencing, and raise system performance as a whole. Furthermore, OptiDJS+ strongly emphasizes load balancing and fault tolerance, two essential elements of cloud computing. By maximizing resource allocation and reducing the makespan, OptiDJS+ also provides the potential for considerable cost reductions in data center infrastructure. Both cloud service providers and end users gain from this since it equates to more effective resource management and greater resource availability.

A crucial component of resource management and optimization for cloud-based applications and services is task scheduling. Meeting performance goals, being cost-effective, and guaranteeing a positive user experience all depend critically on good resource allocation and job execution. Here are some salient conclusions:

- Resource utilization: When compared to FCFS, Johnson sequencing, and max–min Johnson sequencing, DJS task scheduling maximizes the use of cloud resources, such as virtual machines and storage, by allocating tasks to available resources based on their requirements and priorities.

- Performance enhancement: When compared to FCFS, Johnson sequencing, and max–min Johnson sequencing, DJS scheduling algorithms have lower reaction times, higher throughput, and lower latency, which can enhance the overall performance of cloud applications.

- Cost management: Task scheduling techniques that effectively allocate resources and reduce over-provisioning can aid in cost control. For businesses trying to optimize their cloud schedule, this is especially crucial. As a result, DJS scheduling techniques are more cost-effective than FCFS, Johnson sequencing, and max–min Johnson sequencing.

- Load balancing: Task scheduling aids load balancing by spreading load evenly across the available resources, avoiding resource bottlenecks and making sure that no resource is overloaded. DJS balances the load so that incoming jobs are completed within a time quantum that is estimated using the even–odd round-robin scheduling approach.

- Fault tolerance: By intelligently migrating jobs in the event of resource failure or degradation, the DJS scheduling approach may improve the fault tolerance and dependability of cloud systems.

- Complexity: Because of the dynamic nature of cloud resources, a wide range of workloads, and various user expectations, scheduling in a cloud environment can be challenging. To manage this complexity, an advanced dynamic Johnson sequencing technique is required.

- Compliance with QoS and SLAs: To ensure that applications satisfy performance guarantees and provide the anticipated quality of service, task scheduling must take into account QoS requirements and service-level agreements (SLAs). In terms of QoS and SLA compliance, DJS is therefore the best option among the methods compared.

As a result, efficient task scheduling in cloud computing is a complex undertaking that calls for in-depth knowledge of application needs, resource availability, and dynamic workload characteristics. To optimize resource utilization, boost performance, and offer affordable and dependable cloud services, cloud providers, researchers, and organizations must keep creating cutting-edge scheduling algorithms and tools.
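The makespan and utilization comparison between FCFS and Johnson sequencing summarized in the conclusions above can be illustrated with a small example. The following is a minimal sketch, assuming the classic two-machine Johnson's rule rather than the OptiDJS+ extensions themselves; the task names, the processing times, and the helper functions `johnson_order`, `makespan`, and `server2_utilization` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# (task name, time on server 1, time on server 2); hypothetical values
Job = Tuple[str, float, float]


def johnson_order(jobs: List[Job]) -> List[Job]:
    """Order jobs with the classic Johnson's rule for two servers in series."""
    # Jobs that are quicker on server 1 go first, shortest server-1 time first.
    front = sorted((j for j in jobs if j[1] <= j[2]), key=lambda j: j[1])
    # Remaining jobs go last, longest server-2 time first.
    back = sorted((j for j in jobs if j[1] > j[2]), key=lambda j: j[2], reverse=True)
    return front + back


def makespan(sequence: List[Job]) -> float:
    """Return the time at which the last job leaves server 2."""
    end1 = end2 = 0.0
    for _, t1, t2 in sequence:
        end1 += t1                    # server 1 processes jobs back to back
        end2 = max(end1, end2) + t2   # server 2 waits for the job and for itself
    return end2


def server2_utilization(sequence: List[Job]) -> float:
    """Fraction of the makespan during which server 2 is busy."""
    return sum(t2 for _, _, t2 in sequence) / makespan(sequence)


# Arrival (FCFS) order of five hypothetical tasks.
jobs: List[Job] = [("T1", 5, 2), ("T2", 1, 6), ("T3", 9, 7), ("T4", 3, 8), ("T5", 10, 4)]
ordered = johnson_order(jobs)

print([name for name, _, _ in ordered])  # ['T2', 'T4', 'T3', 'T5', 'T1']
print(makespan(jobs))                    # FCFS makespan: 34.0
print(makespan(ordered))                 # Johnson makespan: 30.0
print(server2_utilization(ordered))      # 0.9
```

For this instance, reordering the same five tasks shortens the makespan from 34 to 30 time units and raises the utilization of the second server from about 0.79 to 0.9. The sketch covers only the static two-server case; the dynamic and adaptive behavior attributed to DJS and OptiDJS+ is not modeled here.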

Author Contributions

Methodology, P.B., S.R. and U.M.M.; Software, P.B.; Validation, P.B., S.R., S.K.P., P.C., A.S. and N.K.S.; Formal analysis, P.B., U.M.M. and S.K.P.; Investigation, N.K.S.; Resources, U.M.M.; Writing—original draft, P.B. and U.M.M.; Writing—review & editing, S.R., S.K.P., P.C., A.S. and N.K.S.; Supervision, S.R., S.K.P., P.C., A.S. and N.K.S.; Funding acquisition, U.M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. However, the APC was funded by Umar Muhammad Modibbo of the Operations Research Department of Modibbo Adama University, Yola, Nigeria.

Conflicts of Interest

The authors declare no conflict of interest.
