SiamUT: Siamese Unsymmetrical Transformer-like Tracking

Abstract

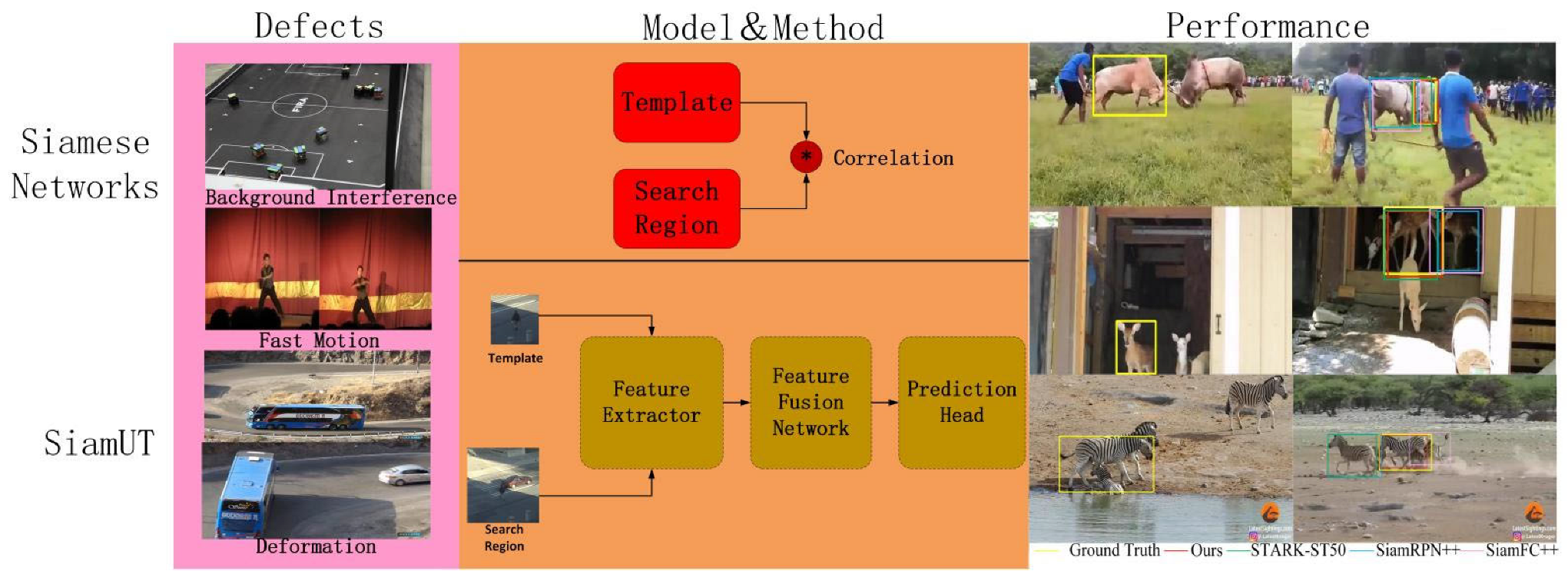

1. Introduction

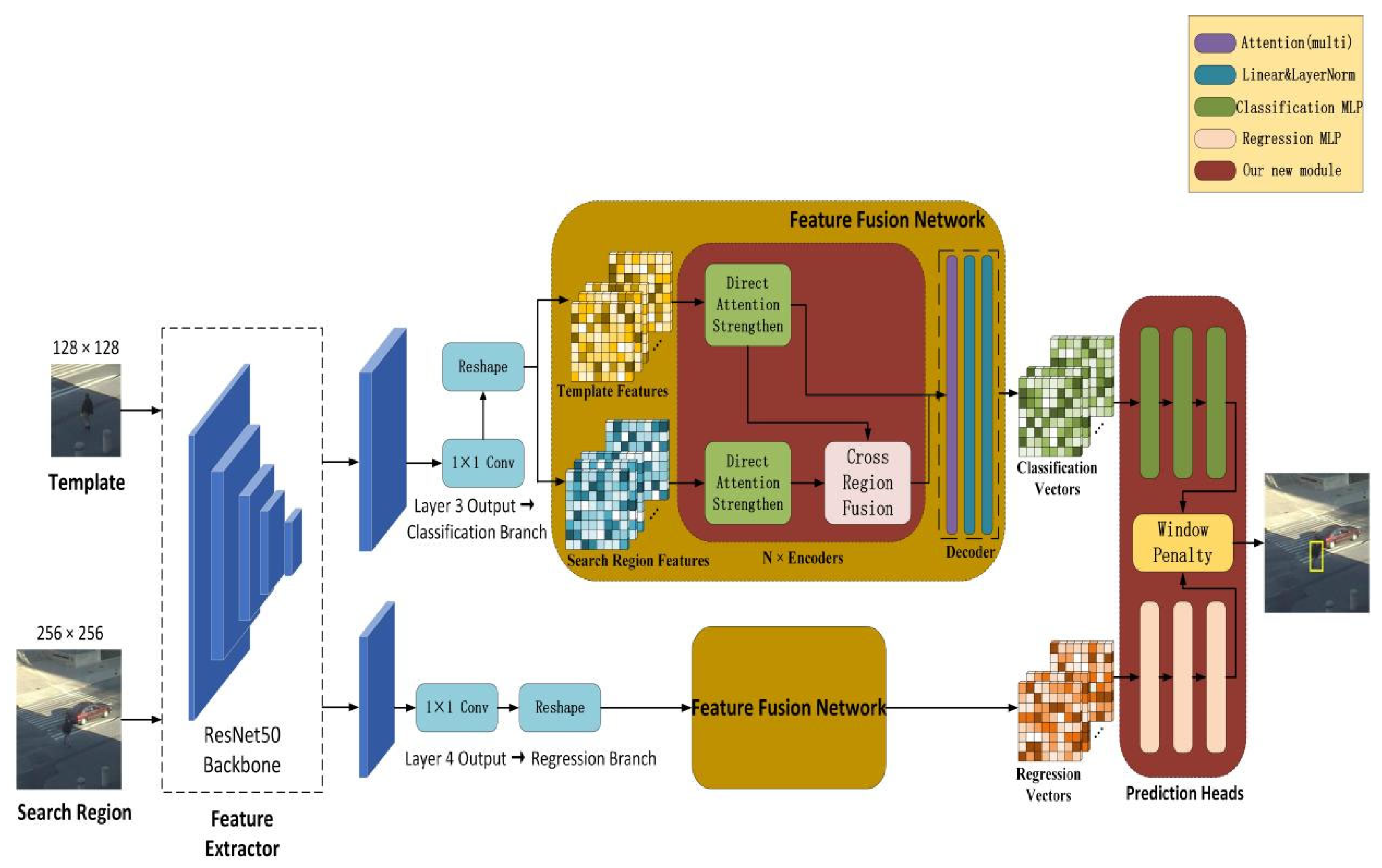

- We propose a transformer-like feature fusion network built purely on attention mechanisms, which fuses the template and search-region features in place of a cross-correlation operation;

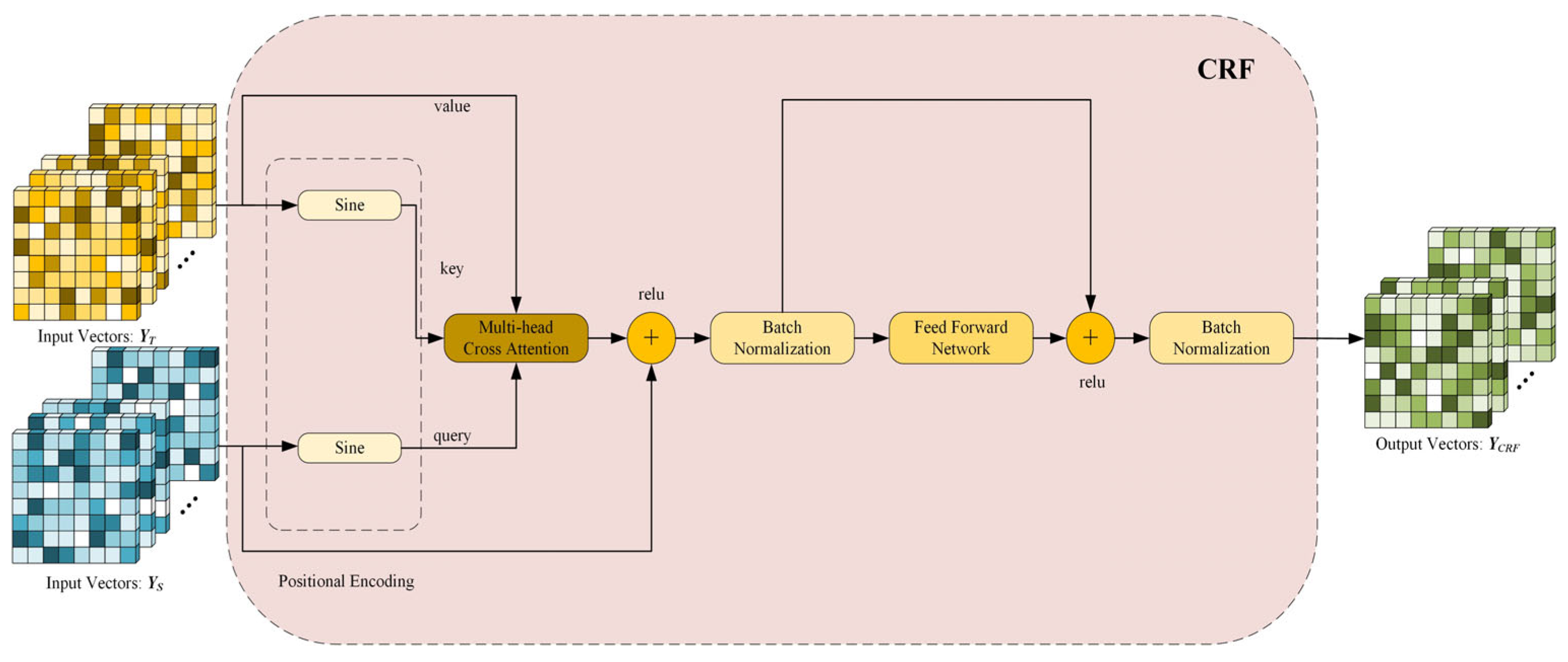

- We develop two specialized attention modules, a direct attention strengthening (DAS) module based on self-attention and a cross-region fusion (CRF) module based on cross-attention, which enable the tracker to focus on useful information and establish long-term feature associations;

- We propose decoupled prediction heads for classification and regression, together with a two-layer output mechanism that refines the output of the preceding attention map. Furthermore, we replace the standard GIoU loss with the DIoU loss, which is better suited to single-object tracking.
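The contributions above replace cross-correlation with attention: instead of sliding the template over the search region as a fixed kernel, every search location weights every template location adaptively. A minimal NumPy sketch of that idea follows, assuming single-head scaled dot-product attention (Vaswani et al. [13]), a residual self-attention step standing in for DAS, a search-to-template cross-attention step standing in for CRF, and two independent linear projections standing in for the decoupled heads. The class name `AttentionFusionSketch`, the feature-map sizes, and the random weights are hypothetical; the sketch illustrates the mechanism, not SiamUT's actual layer configuration.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along `axis`.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    # Scaled dot-product attention: each query row becomes a weighted mix of value rows.
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return weights @ v


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    # Per-token normalization (no learned scale/shift, for brevity).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


class AttentionFusionSketch:
    """Hypothetical fusion block: self-attention enhancement, then cross-attention fusion."""

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        names = ["sa_q", "sa_k", "sa_v", "ca_q", "ca_k", "ca_v"]
        # Random projections stand in for learned weights.
        self.w = {n: rng.normal(0.0, dim ** -0.5, (dim, dim)) for n in names}

    def enhance(self, x: np.ndarray) -> np.ndarray:
        # Self-attention within one region, with a residual connection (DAS-like role).
        w = self.w
        return layer_norm(x + attention(x @ w["sa_q"], x @ w["sa_k"], x @ w["sa_v"]))

    def fuse(self, search: np.ndarray, template: np.ndarray) -> np.ndarray:
        # Cross-attention (CRF-like role): search tokens query template keys/values.
        w = self.w
        mixed = attention(search @ w["ca_q"], template @ w["ca_k"], template @ w["ca_v"])
        return layer_norm(search + mixed)

    def __call__(self, template: np.ndarray, search: np.ndarray) -> np.ndarray:
        return self.fuse(self.enhance(search), self.enhance(template))


rng = np.random.default_rng(1)
dim = 32
template = rng.normal(size=(8 * 8, dim))    # flattened 8x8 template feature map (assumed size)
search = rng.normal(size=(16 * 16, dim))    # flattened 16x16 search feature map (assumed size)
fused = AttentionFusionSketch(dim)(template, search)

# Decoupled heads (hypothetical): independent projections for classification and box regression.
cls_logits = fused @ rng.normal(size=(dim, 1))  # one foreground score per search token
box_params = fused @ rng.normal(size=(dim, 4))  # four box values per search token
print(fused.shape, cls_logits.shape, box_params.shape)  # (256, 32) (256, 1) (256, 4)
```

Because the search tokens act as queries, the fused output keeps the search-region resolution (256 tokens here), so per-location classification and regression heads can be applied to it directly.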

2. Related Works

2.1. Siamese Networks Based on Cross-Correlation Operation

2.2. Transformer and Transformer-like Networks

3. Model

3.1. Feature Extractor

3.2. Feature Fusion Network

3.3. Prediction Heads and Training Loss

4. Experiments

4.1. Implementations

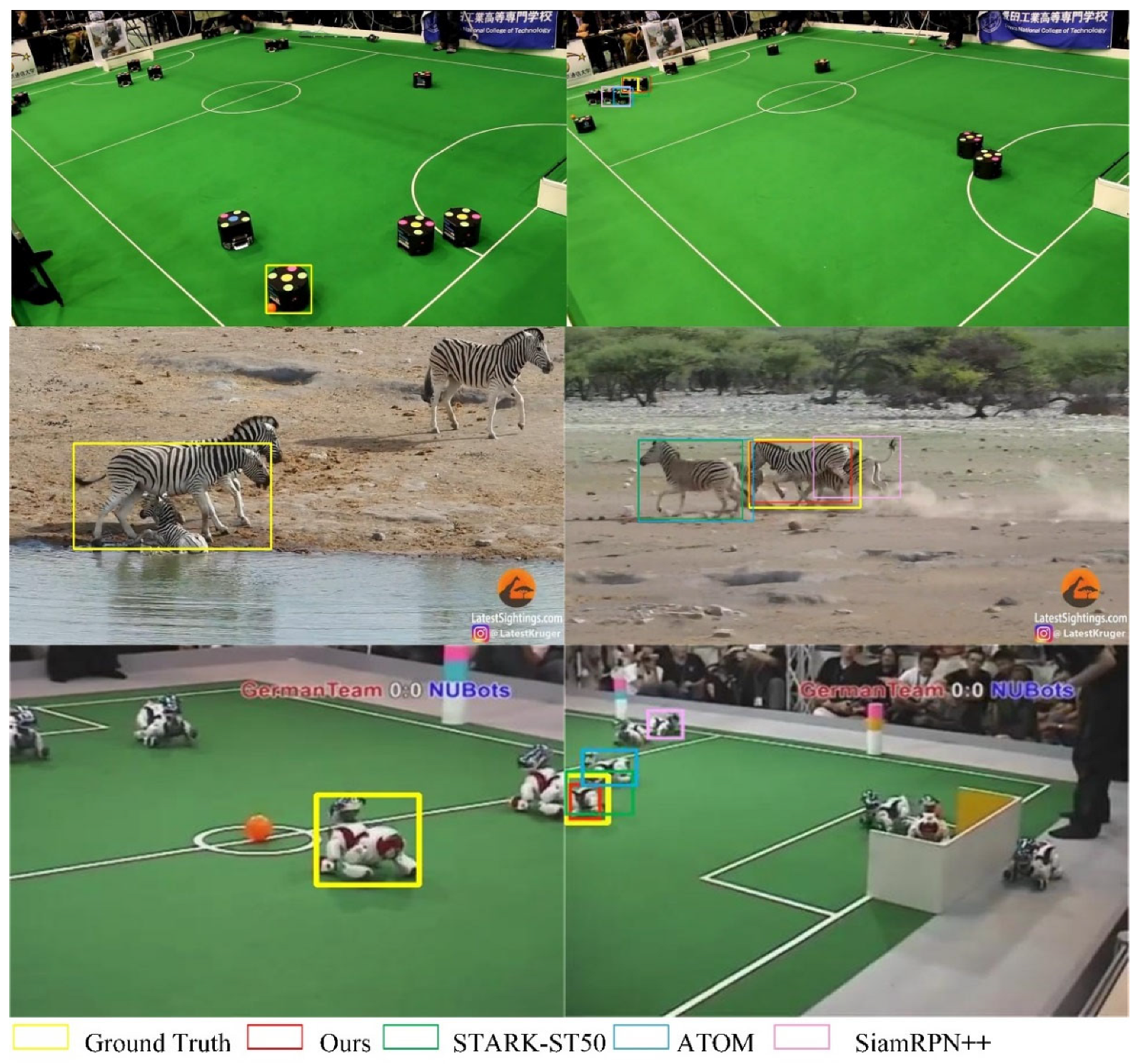

4.2. Evaluation

4.3. Ablation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wu, Y.; Lim, J.; Yang, M.-H. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

2. Zhang, X.; Chen, J.; Yuan, J.; Chen, Q.; Wang, J.; Wang, X.; Han, S.; Chen, X.; Pi, J.; Yao, K.; et al. CAE v2: Context autoencoder with CLIP target. arXiv 2022, arXiv:2211.09799.

3. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. arXiv 2019, arXiv:1812.11703.

4. Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. SiamFC++: Towards robust and accurate visual tracking with target estimation guidelines. arXiv 2020, arXiv:1911.06188.

5. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-Aware Anchor-Free Tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

6. Zheng, L.; Tang, M.; Chen, Y.; Wang, J.; Lu, H. Learning Feature Embeddings for Discriminant Model Based Tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

7. Choi, J.; Kwon, J.; Lee, K.M. Deep meta learning for real-time target-aware visual tracking. arXiv 2019, arXiv:1712.09153.

8. Du, F.; Liu, P.; Zhao, W.; Tang, X. Correlation-Guided Attention for Corner Detection Based Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

9. Yu, Y.; Xiong, Y.; Huang, W.; Scott, M.R. Deformable siamese attention networks for visual object tracking. arXiv 2020, arXiv:2004.06711.

10. Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without Bells and Whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

11. Sadeghian, A.; Alahi, A.; Savarese, S. Tracking the Untrackable: Learning to Track Multiple Cues with Long-Term Dependencies. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

12. Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the fairness of detection and re-identification in multiple object tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

14. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 15–16 October 2016.

15. Held, D.; Thrun, S.; Savarese, S. Learning to Track at 100 fps with Deep Regression Networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016.

16. Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

17. Tao, R.; Gavves, E.; Smeulders, A.W. Siamese Instance Search for Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1420–1429.

18. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

19. Wang, Q.; Gao, J.; Xing, J.; Zhang, M.; Hu, W. DCFNet: Discriminant correlation filters network for visual tracking. arXiv 2017, arXiv:1704.04057.

20. Wang, Q.; Teng, Z.; Xing, J.; Gao, J.; Hu, W.; Maybank, S. Learning Attentions: Residual Attentional Siamese Network for High Performance Online Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4854–4863.

21. Wang, Q.; Zhang, M.; Xing, J.; Gao, J.; Hu, W.; Maybank, S.J. Do Not Lose the Details: Reinforced Representation Learning for High Performance Visual Tracking. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018. Available online: https://eprints.bbk.ac.uk (accessed on 16 July 2023).

22. Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware siamese networks for visual object tracking. arXiv 2018, arXiv:1808.06048.

23. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

24. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. arXiv 2019, arXiv:1811.07628.

25. Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. arXiv 2019, arXiv:1904.07220.

26. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2019, arXiv:1810.04805.

27. Luscher, C.; Beck, E.; Irie, K.; Kitza, M.; Michel, W.; Zeyer, A.; Schluter, R.; Ney, H. RWTH ASR Systems for LibriSpeech: Hybrid vs attention. arXiv 2019, arXiv:1905.03072.

28. Synnaeve, G.; Xu, Q.; Kahn, J.; Grave, E.; Likhomanenko, T.; Pratap, V.; Sriram, A.; Liptchinsky, V.; Collobert, R. End-to-end ASR: From supervised to semi-supervised learning with modern architectures. arXiv 2019, arXiv:1911.08460.

29. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

30. Lin, T.-Y.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014.

31. Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. arXiv 2021, arXiv:2203.13533.

32. Fu, Z.; Fu, Z.; Liu, Q.; Cai, W.; Wang, Y. SparseTT: Visual Tracking with Sparse Transformers. arXiv 2022, arXiv:2205.03776.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

34. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012.

35. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. arXiv 2016, arXiv:1608.06993.

36. Lin, L.; Fan, H.; Zhang, Z.; Xu, Y.; Ling, H. SwinTrack: A Simple and Strong Baseline for Transformer Tracking. arXiv 2021, arXiv:2112.00995.

37. Zhang, Z.; Lin, Y.; Liu, Z.; Li, P.; Sun, M.; Zhou, J. MoEfication: Transformer Feed-forward Layers are Mixtures of Experts. arXiv 2022, arXiv:2110.01786.

38. Dedieu, A.; Lázaro-Gredilla, M.; George, D. Sample-Efficient L0-L2 Constrained Structure Learning of Sparse Ising Models. arXiv 2020, arXiv:2012.01744.

39. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

40. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A High-Quality Benchmark for Large-Scale Single Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

41. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

42. Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. TrackingNet: A large-scale dataset and benchmark for object tracking in the wild. arXiv 2018, arXiv:1803.10794.

43. Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. arXiv 2021, arXiv:2103.17154.

44. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

45. Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010.

46. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2018, arXiv:1711.05101.

47. Zhang, D.; Zheng, Z.; Jia, R.; Li, M. Visual Tracking via Hierarchical Deep Reinforcement Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2021.

48. Chen, B.; Wang, D.; Li, P.; Wang, S.; Lu, H. Real-Time ‘Actor-Critic’ Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

49. Voigtlaender, P.; Luiten, J.; Torr, P.H.S.; Leibe, B. Siam R-CNN: Visual Tracking by Redetection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

50. Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese Fully Convolutional Classification and Regression for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

51. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

52. Staniszewski, M.; Foszner, P.; Kostorz, K.; Michalczuk, A.; Wereszczyński, K.; Cogiel, M.; Golba, D.; Wojciechowski, K.; Polański, A. Application of Crowd Simulations in the Evaluation of Tracking Algorithms. Sensors 2020, 20, 4960.

53. Ciampi, L.; Messina, N.; Falchi, F.; Gennaro, C.; Amato, G. Virtual to Real Adaptation of Pedestrian Detectors. Sensors 2020, 20, 5250.

| Trackers | AUC (%) | PNorm (%) |
|---|---|---|
| SiamRPN++ [3] | 49.6 | 56.9 |
| SiamFC++ [4] | 54.4 | 62.3 |
| PACNet [47] | 55.3 | 62.8 |
| Ocean [5] | 56.0 | 65.1 |
| DiMP50 [48] | 56.9 | 64.3 |
| TransT [31] | 64.7 | 73.8 |
| Siam R-CNN [49] | 64.8 | 72.2 |
| STARK-ST50 [43] | 66.1 | 76.3 |
| Ours | 66.5 | 75.5 |

| Trackers | AO (%) | SR0.5 (%) | SR0.75 (%) |
|---|---|---|---|
| SiamRPN++ [3] | 51.7 | 61.6 | 32.5 |
| SiamFC++ [4] | 59.5 | 69.5 | 47.9 |
| SiamCAR [50] | 56.9 | 67.0 | 41.5 |
| Ocean [5] | 61.1 | 72.1 | 47.3 |
| DiMP50 [48] | 63.4 | 73.8 | 54.3 |
| TransT [31] | 66.2 | 75.5 | 58.7 |
| Siam R-CNN [49] | 64.9 | 72.8 | 59.7 |
| STARK-ST50 [43] | 68.0 | 77.7 | 62.3 |
| Ours | 67.5 | 76.5 | 60.3 |
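The tables report success AUC (LaSOT, TrackingNet) and AO/SR (GOT-10k). As a rough guide to what these numbers measure, a minimal NumPy sketch follows: AO is the mean per-frame IoU, SR_t is the fraction of frames whose IoU exceeds t, and success AUC averages the success rate over overlap thresholds in [0, 1]. The helper names and the choice of 21 evenly spaced thresholds are assumptions; the official benchmark toolkits [40,41,42] differ in details (for example, how frames without a valid ground-truth box are handled), so reported numbers should come from those toolkits.

```python
import numpy as np


def iou_xywh(pred, gt) -> np.ndarray:
    """Frame-wise IoU for boxes given as (x, y, w, h) rows."""
    a = np.asarray(pred, dtype=float)
    b = np.asarray(gt, dtype=float)
    x1 = np.maximum(a[:, 0], b[:, 0])
    y1 = np.maximum(a[:, 1], b[:, 1])
    x2 = np.minimum(a[:, 0] + a[:, 2], b[:, 0] + b[:, 2])
    y2 = np.minimum(a[:, 1] + a[:, 3], b[:, 1] + b[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    return inter / union


def average_overlap(ious: np.ndarray) -> float:
    # AO: mean IoU over all frames.
    return float(np.mean(ious))


def success_rate(ious: np.ndarray, threshold: float) -> float:
    # SR_t: fraction of frames whose IoU exceeds the threshold t.
    return float(np.mean(ious > threshold))


def success_auc(ious: np.ndarray, num_thresholds: int = 21) -> float:
    # Success-plot AUC: mean success rate over evenly spaced thresholds in [0, 1].
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    return float(np.mean([success_rate(ious, t) for t in thresholds]))


pred = [[0, 0, 10, 10], [0, 0, 10, 10], [5, 5, 10, 10]]
gt = [[0, 0, 10, 10], [5, 0, 10, 10], [20, 20, 10, 10]]
ious = iou_xywh(pred, gt)                  # per-frame IoU: 1.0, 1/3, 0.0
print(round(average_overlap(ious), 4))     # 0.4444
print(round(success_rate(ious, 0.5), 4))   # 0.3333
print(round(success_auc(ious), 4))         # 0.4286
```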

| Trackers | AUC (%) | PNorm (%) |
|---|---|---|
| SiamRPN++ [3] | 73.3 | 80.0 |
| SiamFC++ [4] | 75.4 | 80.0 |
| SiamAttn [9] | 75.2 | 81.7 |
| CGACD [8] | 71.1 | 81.0 |
| DiMP50 [48] | 74.0 | 80.1 |
| TransT [31] | 81.4 | 86.7 |
| Siam R-CNN [49] | 81.2 | 85.4 |
| STARK-ST50 [43] | 81.3 | 86.1 |
| Ours | 82.4 | 87.0 |

| Attribute | SiamRPN++ [3] | STARK-ST50 [43] | ATOM [24] | DiMP [48] | Ocean [5] | Ours |
|---|---|---|---|---|---|---|
| Partial Occlusion | 46.5 | 58.2 | 47.4 | 51.5 | 50.9 | 62.1 |
| Full Occlusion | 37.4 | 52.4 | 41.8 | 51.0 | 42.3 | 55.5 |
| Deformation | 53.2 | 62.8 | 52.2 | 57.1 | 62.5 | 66.9 |
| Background Clutter | 44.9 | 55.0 | 45.0 | 48.8 | 54.3 | 56.4 |

| Method (√ = component used; components: Transformer, DAS and CRF, Two-Layer Output, Decoupling Prediction Heads, GIoU, DIoU) | LaSOT AUC (%) | LaSOT PNorm (%) | TrackingNet AUC (%) | TrackingNet PNorm (%) |
|---|---|---|---|---|
| √ √ | 64.2 | 73.7 | 81.1 | 86.8 |
| √ √ | 65.1 | 74.0 | 81.6 | 86.6 |
| √ √ √ | 63.0 | 71.3 | 80.7 | 85.1 |
| √ √ √ | 63.9 | 73.1 | 80.9 | 86.2 |
| √ √ √ √ | 66.3 | 75.5 | 82.3 | 86.8 |
| √ √ √ √ | 66.5 | 75.5 | 82.4 | 87.0 |
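The ablation compares GIoU and DIoU as the box-regression loss. A minimal NumPy sketch of both losses follows, based on the formulations of Rezatofighi et al. [51] and Zheng et al. [39], with boxes assumed to be in (x1, y1, x2, y2) format. The function names are hypothetical and this is not SiamUT's training code. The example uses two predictions that both lie inside the ground-truth box with the same IoU, the case in which the GIoU penalty term vanishes.

```python
import numpy as np


def _pairwise_terms(pred, gt):
    """Row-wise IoU, union, and enclosing-box corners for (x1, y1, x2, y2) boxes."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    # Intersection rectangle (clipped to zero when the boxes do not overlap).
    ix1 = np.maximum(pred[:, 0], gt[:, 0])
    iy1 = np.maximum(pred[:, 1], gt[:, 1])
    ix2 = np.minimum(pred[:, 2], gt[:, 2])
    iy2 = np.minimum(pred[:, 3], gt[:, 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area_p = (pred[:, 2] - pred[:, 0]) * (pred[:, 3] - pred[:, 1])
    area_g = (gt[:, 2] - gt[:, 0]) * (gt[:, 3] - gt[:, 1])
    union = area_p + area_g - inter
    # Smallest box C enclosing both boxes.
    enclosing = (np.minimum(pred[:, 0], gt[:, 0]), np.minimum(pred[:, 1], gt[:, 1]),
                 np.maximum(pred[:, 2], gt[:, 2]), np.maximum(pred[:, 3], gt[:, 3]))
    return pred, gt, inter / union, union, enclosing


def giou_loss(pred, gt) -> np.ndarray:
    # L_GIoU = 1 - IoU + |C \ (A u B)| / |C|
    _, _, iou, union, (cx1, cy1, cx2, cy2) = _pairwise_terms(pred, gt)
    area_c = (cx2 - cx1) * (cy2 - cy1)
    return 1.0 - iou + (area_c - union) / area_c


def diou_loss(pred, gt) -> np.ndarray:
    # L_DIoU = 1 - IoU + rho^2(b, b_gt) / c^2
    pred, gt, iou, _, (cx1, cy1, cx2, cy2) = _pairwise_terms(pred, gt)
    # Squared distance between the two box centers.
    rho2 = (((pred[:, 0] + pred[:, 2]) - (gt[:, 0] + gt[:, 2])) ** 2
            + ((pred[:, 1] + pred[:, 3]) - (gt[:, 1] + gt[:, 3])) ** 2) / 4.0
    # Squared diagonal length of the enclosing box C.
    c2 = (cx2 - cx1) ** 2 + (cy2 - cy1) ** 2
    return 1.0 - iou + rho2 / c2


gt = [[0, 0, 4, 4], [0, 0, 4, 4]]
pred = [[0, 0, 2, 2],   # inside the ground truth, pushed into a corner
        [1, 1, 3, 3]]   # inside the ground truth, centered
print(giou_loss(pred, gt).tolist())  # [0.75, 0.75]
print(diou_loss(pred, gt).tolist())  # [0.8125, 0.75]
```

When one box encloses the other, the enclosing box C equals the larger box, so the GIoU penalty is zero and GIoU reduces to IoU; the DIoU center-distance term still separates the off-center prediction, which is the motivation Zheng et al. give for DIoU.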

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Yang, L.; Zhou, H.; Yuan, G.; Xia, M.; Chen, D.; Shi, Z.; Chen, E. SiamUT: Siamese Unsymmetrical Transformer-like Tracking. Electronics 2023, 12, 3133. https://doi.org/10.3390/electronics12143133

AMA Style: Yang L, Zhou H, Yuan G, Xia M, Chen D, Shi Z, Chen E. SiamUT: Siamese Unsymmetrical Transformer-like Tracking. Electronics. 2023; 12(14):3133. https://doi.org/10.3390/electronics12143133

Chicago/Turabian Style: Yang, Lingyu, Hao Zhou, Guowu Yuan, Mengen Xia, Dong Chen, Zhiliang Shi, and Enbang Chen. 2023. "SiamUT: Siamese Unsymmetrical Transformer-like Tracking" Electronics 12, no. 14: 3133. https://doi.org/10.3390/electronics12143133

APA Style: Yang, L., Zhou, H., Yuan, G., Xia, M., Chen, D., Shi, Z., & Chen, E. (2023). SiamUT: Siamese Unsymmetrical Transformer-like Tracking. Electronics, 12(14), 3133. https://doi.org/10.3390/electronics12143133