1. Introduction

In recent years, amid global public health events, wearing a mask has proven to be one of the most effective ways to prevent the spread of viruses [1,2]. However, many people become infected because they neglect to wear masks, which places additional burden on the public healthcare system. Mask-wearing detection has therefore become a hot topic in computer vision. To ensure safety in specific public places, mask-wearing detection technology has been widely used in many scenarios, such as hospitals, nursing homes, and schools. Mask-wearing detection uses deep learning techniques to automatically detect and identify masks on human faces, and it has become a crucial means of helping to control the spread of disease. By automatically identifying who is wearing a mask in public places, workplaces, and other social settings, mask-wearing regulations can be enforced effectively. Much in-depth research has already been carried out on face mask detection. Khandelwal et al. [3] proposed a mask classification technique using the MobileNetv2 classifier, which classifies images into two classes: faces with masks and faces without masks. Puja et al. [4] combined mask-wearing detection with artificial intelligence to check whether a person is wearing a mask through existing monitoring systems and neural network algorithms. He et al. [5] proposed a face-mask detection algorithm based on HSV and HOG features and a support vector machine (SVM) to routinely check whether faces are wearing masks. In the past few years, deep learning and object detection technology have achieved remarkable results in many fields. However, mask-wearing detection still faces challenges, such as overly dense targets in complex scenes and partial occlusion. Accurately handling mask-wearing detection in complex scenarios is therefore of great theoretical importance and practical value. This study proposes an improved YOLOv7 mask-wearing detection model to improve the performance of mask-wearing detection.

Deep learning is an essential branch of machine learning. According to Sutskever et al. [6], deep learning can simulate the operation of the human brain by building neural networks that learn autonomously. Deep learning has been widely applied to robot vision [7], natural language processing [8], speech signal processing [9], and other fields. A commonly used deep learning model is the convolutional neural network (CNN) [10], which consists of multiple convolutional and pooling layers and uses sparse (local) connectivity. Through layer-by-layer computation, rich feature information can be extracted from images for target classification and regression tasks. The local connectivity and parameter sharing of CNNs make feature extraction more efficient.
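The local connectivity and parameter sharing mentioned above can be made concrete with a minimal pure-Python sketch of a single-channel, stride-1 convolution without padding. This is a simplification: real CNN layers add multiple channels, bias, padding, and stride. The helper `param_counts` is hypothetical and only compares the weights of one shared k × k kernel with those of a fully connected mapping between the same input and output sizes.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def conv2d_single_channel(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D cross-correlation, as computed in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Local connectivity: output (i, j) only sees a kh x kw window of the input.
            # Parameter sharing: the same kernel weights are reused at every (i, j).
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def param_counts(h: int, w: int, k: int) -> Tuple[int, int]:
    """Weights needed to map an h x w map to an (h-k+1) x (w-k+1) map (bias ignored)."""
    conv_params = k * k  # one shared kernel
    dense_params = (h * w) * ((h - k + 1) * (w - k + 1))  # every input to every output
    return conv_params, dense_params
```

For a 32 × 32 input and a 3 × 3 kernel, `param_counts(32, 32, 3)` gives 9 shared weights versus 921,600 for a dense layer, which illustrates the efficiency gain described in the paragraph.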

Object detection is mainly used for object classification and localization. Object detection algorithms based on deep learning are primarily divided into two categories [11]. The first is two-stage object detection algorithms, such as the R-CNN series [12,13,14,15]; the second is one-stage object detection algorithms, such as RetinaNet [16] and the YOLO series [17,18,19,20,21,22]. The first step of a two-stage algorithm is to take the input image and extract candidate regions; the second step is to perform CNN-based classification and recognition on those regions. Two-stage algorithms can achieve high accuracy but have low detection speed. A one-stage algorithm performs deep sampling at different scales on each region of the image, then extracts image features and classifies objects, outputting the class and bounding box of each target directly from the image. Deep sampling is a technique for handling targets of different scales that introduces multiple receptive field sizes into the network. For example, feature pyramid networks [23] achieve multi-scale object detection by introducing multiple feature pyramid layers of different scales, thereby improving overall detection performance. Although one-stage algorithms have relatively high computational efficiency and classification speed, their uniform and dense sampling poses certain challenges for training and fitting the model.
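As an illustration of how a feature pyramid merges scales, the sketch below implements only the top-down pathway of an FPN-style neck on single-channel maps, assuming nearest-neighbour 2× upsampling and element-wise addition. The 1 × 1 lateral convolutions and 3 × 3 smoothing convolutions of a full FPN are omitted, and the function names are hypothetical. With YOLOv7's head sizes, the levels would be 80 × 80, 40 × 40, and 20 × 20.

```python
from typing import List

Matrix = List[List[float]]


def upsample_nearest_2x(x: Matrix) -> Matrix:
    """Nearest-neighbour 2x upsampling: each value is copied into a 2x2 block."""
    out: Matrix = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally sized maps."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def fpn_top_down(levels: List[Matrix]) -> List[Matrix]:
    """levels[0] is the finest map and levels[-1] the coarsest; each level is 2x
    smaller than the previous one. Returns merged maps in the same fine-to-coarse order."""
    merged = [levels[-1]]  # the coarsest level has nothing above it
    for lateral in reversed(levels[:-1]):
        # Coarse, semantically strong features are upsampled and added to finer ones.
        merged.append(add(lateral, upsample_nearest_2x(merged[-1])))
    return list(reversed(merged))
```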

This paper adopts a one-stage algorithm for mask-wearing detection after comprehensively considering performance and complexity. Specifically, one-stage algorithms offer high processing speed and low computational complexity, and they predict objects directly from the image without a complex region proposal process, which is very important for real-time applications and for processing large amounts of data. Because mask-wearing detection requires real-time performance, a one-stage algorithm is chosen. This paper treats mask detection as an end-to-end object detection task in order to obtain accurate mask detection results.

Mask-wearing images in complex scenes often suffer from overly dense targets and local occlusion, which make target features hard to identify and make it difficult for traditional object detection algorithms to achieve satisfactory results. YOLOv7 not only meets real-time detection requirements but is also well suited for deployment in real environments. This paper presents a new mask-wearing detection model, YOLOv7-CPCSDSA, which builds on YOLOv7 and combines the CPC structure, the SD structure, and the SA mechanism [24].

The contributions of this paper are as follows:

- (1) This paper validates and analyzes the model through comparison and ablation experiments. With YOLOv7 as the base model, a comprehensive evaluation across various metrics demonstrates the superiority of YOLOv7-CPCSDSA in balancing accuracy and speed.

- (2) The CPC structure is added to YOLOv7, which makes better use of computing power, effectively extracts spatial features, and reduces computational redundancy and memory access.

- (3) The SD structure is added to YOLOv7 to enhance the detailed information and the receptive field range of the feature map.

- (4) The SA mechanism is incorporated into YOLOv7, which lets the model focus on local information in the image at a lower computational cost and improves the model's accuracy.

The rest of the paper is organized as follows:

Section 2 outlines the related work in relevant fields.

Section 3 presents the network structure of YOLOv7 and YOLOv7-CPCSDSA, and introduces the principles of CPC, SD, and SA modules.

Section 4 introduces the experimental environment, experimental datasets, experimental parameter settings, comparative experiments, ablation experiments, and visualization.

Section 5 summarizes the advantages and disadvantages of this work and discusses directions for further research.

2. Related Work

In recent years, both one-stage and two-stage algorithms have achieved remarkable results in object detection research. Ge et al. [25] proposed a CNN model based on LLE-CNNs that can recognize faces, including faces wearing masks. The model consists of proposal, embedding, and verification modules and achieves an average precision of 76.4%. Jiang et al. [26] proposed a multi-scale face detection algorithm based on Faster R-CNN that addresses the difficulty of recognizing small and medium-scale faces. Although the model achieves high precision in face detection, its structure is relatively complex, and its performance on small targets is limited. Loey et al. [27] proposed a deep learning-based mask-wearing detection model that uses YOLOv2 combined with ResNet50 for feature extraction and introduces attention to improve performance on mask-wearing detection tasks. Experimental results show that the model outperforms other algorithms in accuracy; however, its generalization ability needs improvement. Su et al. [28] proposed an improved YOLOv3 mask-wearing detection algorithm that combines transfer learning with an EfficientNet backbone, which reduces the number of network parameters and improves mask-wearing detection accuracy. Wu et al. [29] presented FMD-YOLO, a mask-wearing detection model that combines a deep residual network with the Res2Net module in its feature enhancement network and uses an enhanced path aggregation network for feature fusion. In addition, the model adopts a localization loss during training and the Non-Maximum Suppression (NMS) technique during inference to optimize detection efficiency and accuracy, resulting in a superior mAP. Kumar et al. [30] proposed an improved mask detection model based on YOLOv4-tiny that adds a spatial pyramid pooling module and an additional detection layer for small objects. K-means++ clustering is used to determine the best initial anchor box sizes for faster and more accurate regression, the CIoU loss function is used, and the mAP of the improved network is 6.6% higher than that of YOLOv4-tiny. Zhao et al. [31] presented an improved YOLOv4-based face mask detection algorithm that introduces an attention mechanism module at an appropriate network level to focus on key facial feature points of masked faces and reduce irrelevant information. Features are extracted from images using a path aggregation network and a feature pyramid. The experimental results indicate better performance when the Convolutional Block Attention Module (CBAM) is inserted before YOLOv4's three heads and within the neck network. Guo et al. [32] proposed the YOLOv5-CBD model, which fuses a coordinate attention mechanism with a weighted bidirectional feature pyramid network to improve detection accuracy; however, this model focuses on recognizing the mask itself. Wang et al. [33] proposed a mask detection technique based on the YOLO-GBC network to address incorrect identification and high missed-detection rates of existing mask detection algorithms in real scenarios. The network adopts a global attention mechanism and content-aware reassembly of features, enabling it to extract key information while retaining global features, and it achieves varying degrees of improvement in both accuracy and recall. The main problem with the above methods is their limited ability to express features in complex scenarios, and their detection accuracy needs further improvement.
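The anchor-box clustering step mentioned for Kumar et al. [30] is commonly performed with k-means over box widths and heights, using 1 − IoU as the distance. The sketch below is a generic version of that idea with k-means++ seeding, not a reproduction of their code; the function names, iteration count, and seed are illustrative assumptions.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float]  # (width, height) of a ground-truth box


def wh_iou(a: Box, b: Box) -> float:
    """IoU of two boxes that share the same centre, so only width/height matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def distance(a: Box, b: Box) -> float:
    return 1.0 - wh_iou(a, b)


def kmeanspp_init(boxes: List[Box], k: int, rng: random.Random) -> List[Box]:
    centers = [rng.choice(boxes)]
    while len(centers) < k:
        # k-means++: sample the next centre with probability proportional to D(x)^2.
        d2 = [min(distance(b, c) for c in centers) ** 2 for b in boxes]
        if sum(d2) == 0:  # every box already coincides with a centre
            centers.append(rng.choice(boxes))
            continue
        centers.append(rng.choices(boxes, weights=d2, k=1)[0])
    return centers


def anchor_kmeans(boxes: List[Box], k: int, iters: int = 50, seed: int = 0) -> List[Box]:
    rng = random.Random(seed)
    centers = kmeanspp_init(boxes, k, rng)
    for _ in range(iters):
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for b in boxes:
            # Assign each box to the centre with the highest IoU (lowest 1 - IoU).
            idx = min(range(k), key=lambda i: distance(b, centers[i]))
            clusters[idx].append(b)
        new_centers: List[Box] = []
        for i, members in enumerate(clusters):
            if not members:  # keep the old centre if a cluster becomes empty
                new_centers.append(centers[i])
                continue
            new_centers.append((sum(w for w, _ in members) / len(members),
                                sum(h for _, h in members) / len(members)))
        if new_centers == centers:
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])  # small to large anchors
```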

3. Models

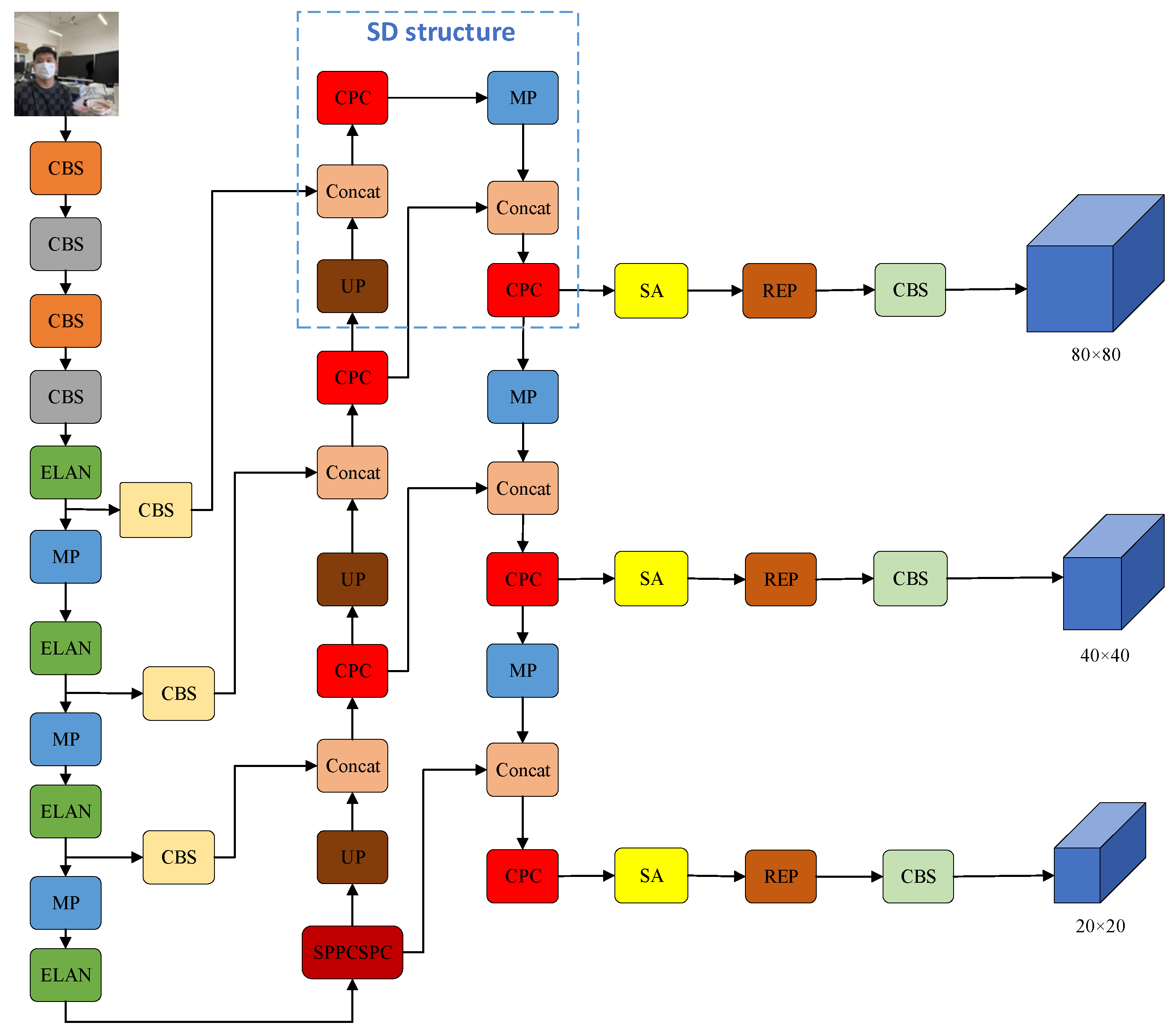

3.1. YOLOv7

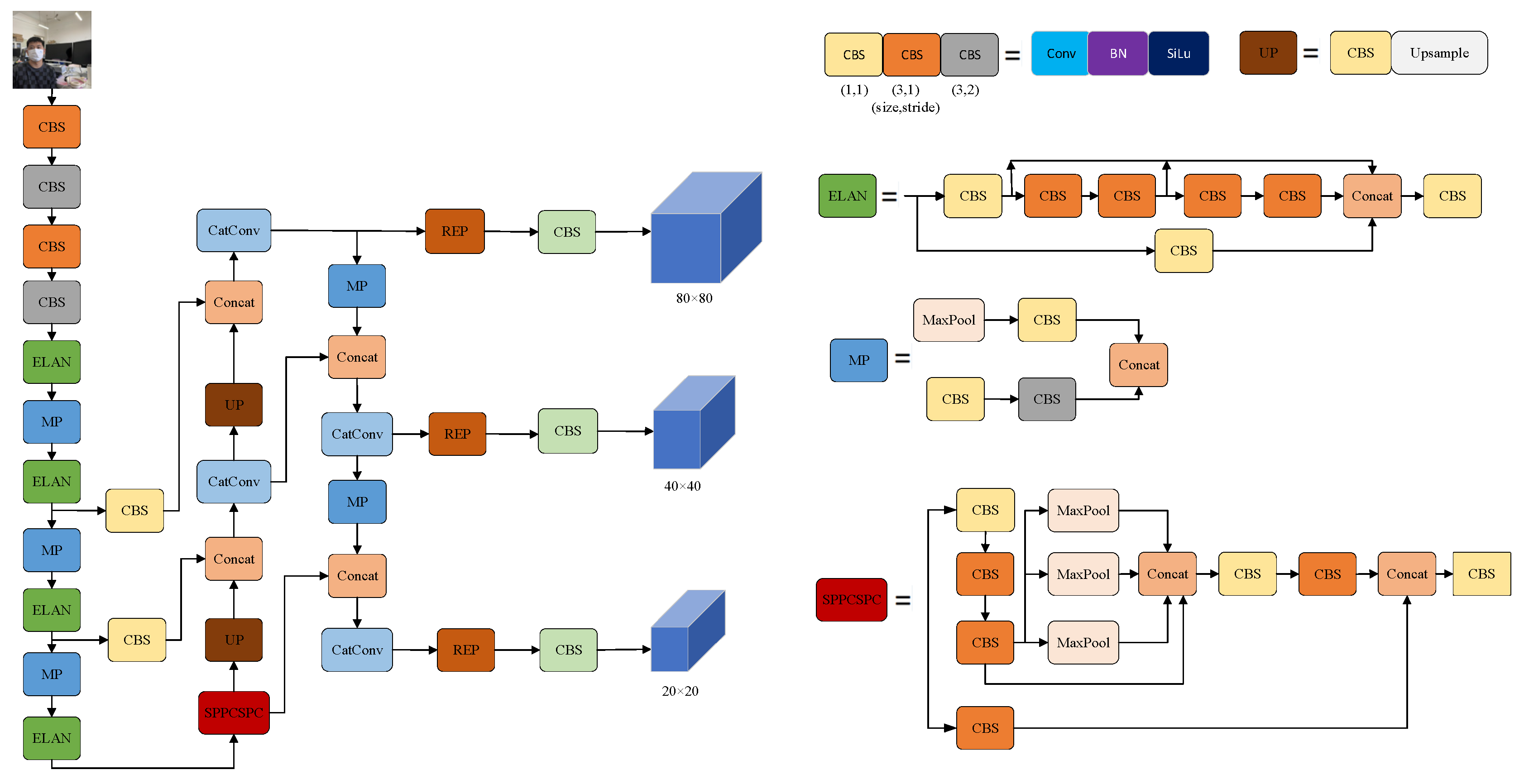

The basic architecture of YOLOv7 includes the input, backbone, and head. The input stage first preprocesses the image and resizes it to a 640 × 640 RGB image. The backbone, which consists of CBS, ELAN, and MP modules, performs feature extraction on the input image. The head then adopts multi-scale feature fusion, which retains more details and helps improve the model's ability to represent targets. YOLOv7 has three detection heads: the fused features are passed to the output layer, which predicts the location and category of each target and generates the corresponding bounding boxes. The detection heads output three feature scales, 20 × 20, 40 × 40, and 80 × 80, which detect large, medium, and small targets, respectively. YOLOv7 applies the NMS algorithm to the prediction results to remove redundant bounding boxes so that each target corresponds to only one bounding box. YOLOv7 uses SiLU as its activation function. The structure diagram of YOLOv7 is shown in Figure 1.
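A minimal sketch of two steps described above: the output grid sizes implied by a 640 × 640 input (assuming the usual YOLO strides of 32, 16, and 8) and greedy, class-agnostic NMS. The IoU threshold of 0.45 is only an illustrative default, and the function names are hypothetical; a full detector's post-processing typically also applies a confidence threshold and per-class suppression, which are left out here.

```python
from typing import List, Sequence, Tuple

BoxXYXY = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def grid_sizes(input_size: int = 640, strides: Sequence[int] = (32, 16, 8)) -> List[int]:
    """Side length of each detection head's output grid (stride values assumed)."""
    return [input_size // s for s in strides]


def iou(a: BoxXYXY, b: BoxXYXY) -> float:
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[BoxXYXY], scores: List[float], iou_thr: float = 0.45) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it by more than
    iou_thr, and repeat. Returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep
```

For example, two heavily overlapping detections of the same face (IoU ≈ 0.68) collapse to the higher-scoring one, while a distant detection is kept.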

3.2. CPC

PConv [34] is an efficient convolutional neural network structure that can improve network performance and efficiency. PConv takes advantage of the redundancy in feature maps, applying regular Conv to only some of the input channels while leaving the rest unchanged.

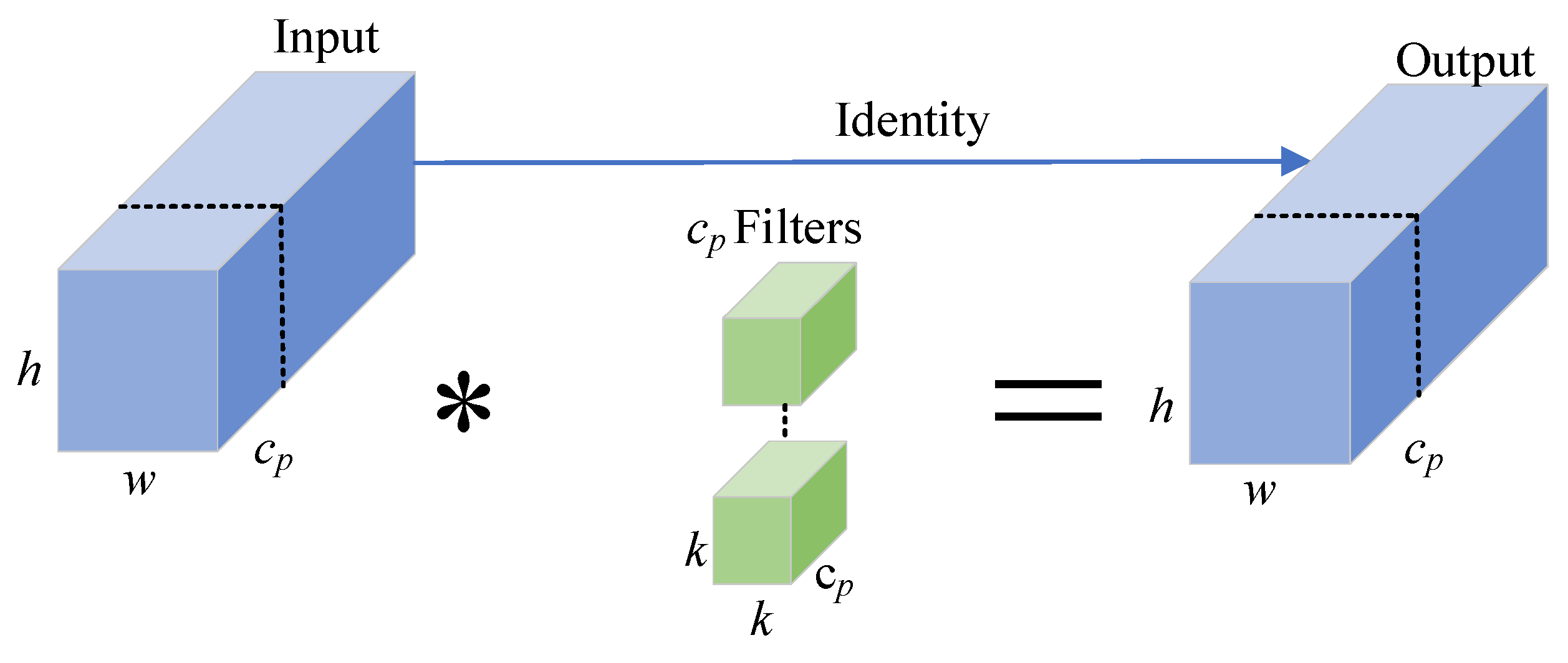

Figure 2 shows the feature redundancy map. PConv reduces the amount of redundant calculations and memory accesses, has lower floating point operations (FLOPs) than regular Conv, and can extract spatial features more efficiently.

Figure 3 shows the schematic diagram of PConv. PConv applies a regular Conv for spatial feature extraction on a portion of the input channels, leaving the remaining channels unaffected. Here, $c$ represents the number of channels of the input feature map, and $c_p$ represents the number of channels used for spatial feature extraction within PConv. The input and output feature maps have the same number of channels, and $*$ represents the convolution operation.
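To show what convolving only part of the channels means, here is a pure-Python sketch of a PConv-style layer: a zero-padded, stride-1 convolution is applied to the first c_p of the c input channels, and the remaining c − c_p channels are copied through unchanged, so the output again has c channels. Taking the first c_p channels as the convolved subset, omitting bias, and the helper names are assumptions of this sketch.

```python
from typing import List

Channel = List[List[float]]
FeatureMap = List[Channel]  # shape: [channels][height][width]


def conv2d_same(x: FeatureMap, weight: List[List[Channel]]) -> FeatureMap:
    """Stride-1 convolution with zero padding so H and W are preserved.
    weight has shape [out_channels][in_channels][k][k] with odd k."""
    k = len(weight[0][0])
    pad = k // 2
    h, w = len(x[0]), len(x[0][0])
    out: FeatureMap = []
    for w_out in weight:
        plane = [[0.0] * w for _ in range(h)]
        for c_in, kern in enumerate(w_out):
            for i in range(h):
                for j in range(w):
                    for u in range(k):
                        for v in range(k):
                            y, z = i + u - pad, j + v - pad
                            if 0 <= y < h and 0 <= z < w:  # zero padding outside
                                plane[i][j] += x[c_in][y][z] * kern[u][v]
        out.append(plane)
    return out


def partial_conv(x: FeatureMap, weight: List[List[Channel]]) -> FeatureMap:
    """PConv-style sketch: convolve the first c_p channels, pass the rest through.
    c_p is inferred from the number of input channels in `weight`."""
    c_p = len(weight[0])
    assert len(weight) == c_p, "the convolved part keeps c_p in and c_p out"
    convolved = conv2d_same(x[:c_p], weight)
    untouched = [[row[:] for row in ch] for ch in x[c_p:]]
    return convolved + untouched
```

With c = 4 and c_p = 1 (a ratio of 1/4), only one of the four channels is actually convolved.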

The choice of $c_p$ can be guided by the following factors. First, observe the degree of redundancy between the channels of the input feature map: if the redundancy between channels is large, performing the computation on only some channels reduces computation and memory access. Second, a balance must be found between accuracy and computational efficiency according to performance requirements, because a smaller $c_p$ lowers the cost but may decrease accuracy. The optimal value of $c_p$ can be selected through experiments and validation so as to maintain reasonable accuracy while reducing computational redundancy and memory access. In this paper, $c_p$ is set to $c/4$, that is, the partial ratio $r = c_p/c = 1/4$.

The FLOPs of PConv are only $h \times w \times k^2 \times c_p^2$, where $h$ is the height and $w$ is the width of the feature map, and $k$ is the height and width of the filters. Since $r = c_p/c = 1/4$, the FLOPs of PConv are only $1/16$ of those of a regular Conv ($h \times w \times k^2 \times c^2$). In addition, the memory access of PConv is small, namely $h \times w \times 2c_p + k^2 \times c_p^2 \approx h \times w \times 2c_p$. Because $r = 1/4$, the memory access of PConv is only about $1/4$ of that of a regular Conv ($h \times w \times 2c$). This is because PConv only needs to read and process the data of some channels, whereas a regular convolution must read and process the data of all channels. Therefore, PConv helps reduce both FLOPs and memory access.
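The cost ratios quoted above can be checked with plain arithmetic. The sketch below assumes the cost model used for PConv [34] (FLOPs of h·w·k²·c² for a regular conv whose input and output have c channels, and memory access of h·w·2c + k²·c²) and a hypothetical 80 × 80 feature map with 256 channels and a 3 × 3 kernel. Exact fractions are used so the ratios are not blurred by floating-point rounding.

```python
from fractions import Fraction


def conv_flops(h: int, w: int, k: int, channels: int) -> int:
    """FLOPs of a k x k conv whose input and output both have `channels` channels."""
    return h * w * k * k * channels * channels


def conv_memory(h: int, w: int, k: int, channels: int) -> int:
    """Memory access: read + write the h x w activations plus the k*k*channels^2 weights."""
    return h * w * 2 * channels + k * k * channels * channels


def pconv_vs_conv(h: int, w: int, k: int, c: int, r: Fraction = Fraction(1, 4)) -> dict:
    c_p = int(c * r)  # channels that PConv actually convolves
    return {
        "c_p": c_p,
        "flops_ratio": Fraction(conv_flops(h, w, k, c_p), conv_flops(h, w, k, c)),
        # Activation term only: this is the h*w*2c_p approximation.
        "activation_memory_ratio": Fraction(h * w * 2 * c_p, h * w * 2 * c),
        # Full formula, including the (much smaller) weight term.
        "memory_ratio": Fraction(conv_memory(h, w, k, c_p), conv_memory(h, w, k, c)),
    }


stats = pconv_vs_conv(80, 80, 3, 256)
print(stats["c_p"], stats["flops_ratio"], stats["activation_memory_ratio"],
      float(stats["memory_ratio"]))
```

With r = 1/4, the FLOPs ratio is exactly 1/16 and the activation term of the memory access is exactly 1/4. Including the weight term, the memory ratio for this hypothetical shape is about 0.22, so the 1/4 figure is an approximation that holds when the h·w·2c term dominates.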

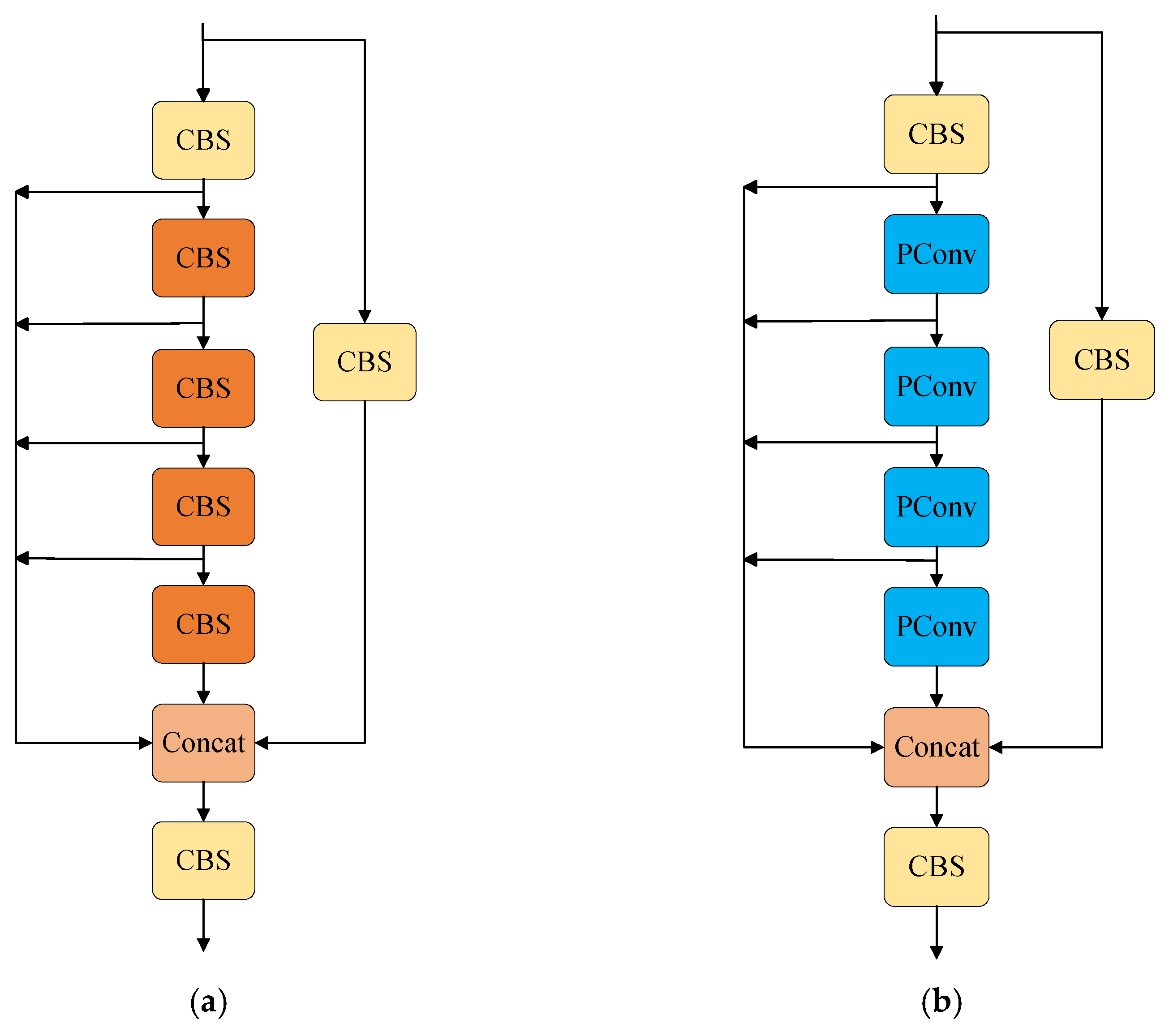

Replacing some of the convolutions in the YOLOv7 CatConv module with PConv yields the CPC structure, which effectively reduces redundant computation and memory access while maintaining good feature extraction capability. Because the output feature maps of PConv have the same number of channels as its input feature maps, PConv integrates seamlessly into CatConv. The structure of the CatConv module is shown in Figure 4a, and that of the CPC module is shown in Figure 4b.

3.3. SD

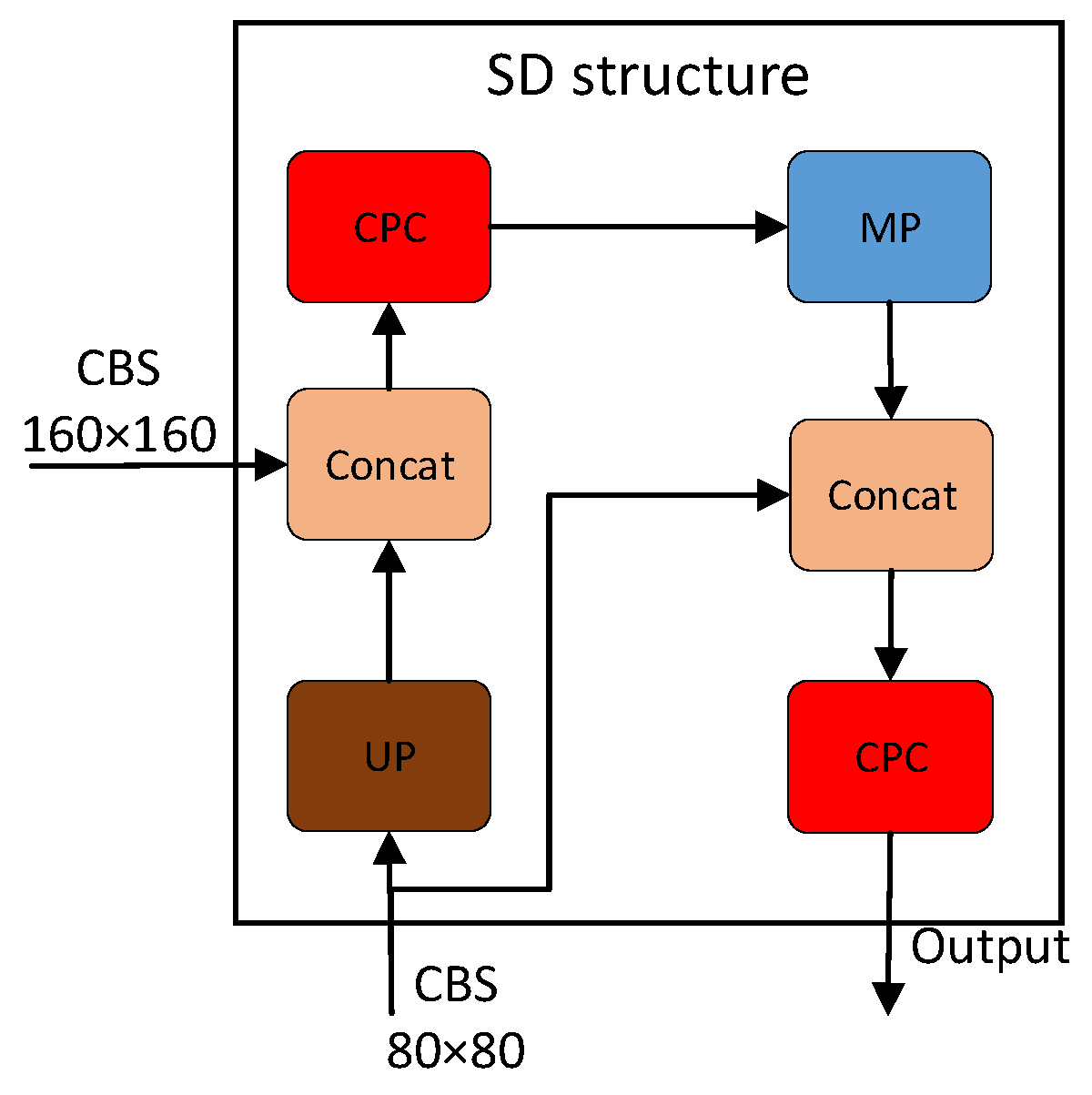

In YOLOv7, small targets are captured only at the 80 × 80 feature scale. Small targets may contain finer details and structural information that smaller feature maps may not adequately capture. Using larger feature maps can increase the range of the receptive field and thereby improve the ability to detect and recognize small targets. Larger feature maps can also cover wider context information, enabling the model to understand the surrounding environment of small targets more comprehensively.

Therefore, this paper designs the SD structure to better capture small targets. The input to the SD structure is an 80 × 80 feature map. Its scale is first expanded to 160 × 160 by an upsampling operation, and the result is combined with a 160 × 160 feature map from the backbone; this step increases the detailed information and the receptive field range of the feature map. CPC enables the network to better learn the information in the feature map and helps maintain convergence when the model is scaled. The feature map is then reduced back to 80 × 80 by a MaxPooling operation, which shrinks the spatial size while retaining the main features, thus controlling network complexity and transferring information from a higher scale to a lower scale. The SD structure is shown in Figure 5.
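The data flow of the SD structure can be sketched on nested lists as follows. This is a shape-level sketch under stated assumptions: nearest-neighbour 2× upsampling, channel-wise concatenation as the combination step, 2 × 2 max pooling with stride 2, and an identity function standing in for the CPC block (the hypothetical `mix` parameter), since the exact CPC layers are not reproduced here.

```python
from typing import Callable, List

Channel = List[List[float]]
FeatureMap = List[Channel]  # [channels][height][width]


def upsample_nearest_2x(x: FeatureMap) -> FeatureMap:
    """Double H and W by copying each value into a 2x2 block (e.g. 80x80 -> 160x160)."""
    out: FeatureMap = []
    for ch in x:
        plane: Channel = []
        for row in ch:
            wide = [v for v in row for _ in range(2)]
            plane.extend([wide, list(wide)])
        out.append(plane)
    return out


def maxpool_2x2(x: FeatureMap) -> FeatureMap:
    """2x2 max pooling with stride 2, halving H and W (e.g. 160x160 -> 80x80)."""
    return [[[max(ch[i][j], ch[i][j + 1], ch[i + 1][j], ch[i + 1][j + 1])
              for j in range(0, len(ch[0]), 2)]
             for i in range(0, len(ch), 2)]
            for ch in x]


def sd_block(neck_80: FeatureMap, backbone_160: FeatureMap,
             mix: Callable[[FeatureMap], FeatureMap] = lambda f: f) -> FeatureMap:
    """Upsample -> concatenate with the backbone map -> mix (stand-in for CPC) -> max-pool."""
    up = upsample_nearest_2x(neck_80)
    fused = up + backbone_160  # channel-wise concatenation at 160 x 160
    return maxpool_2x2(mix(fused))
```

The output keeps the 80 × 80 size of the input while carrying channels that were fused at 160 × 160.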

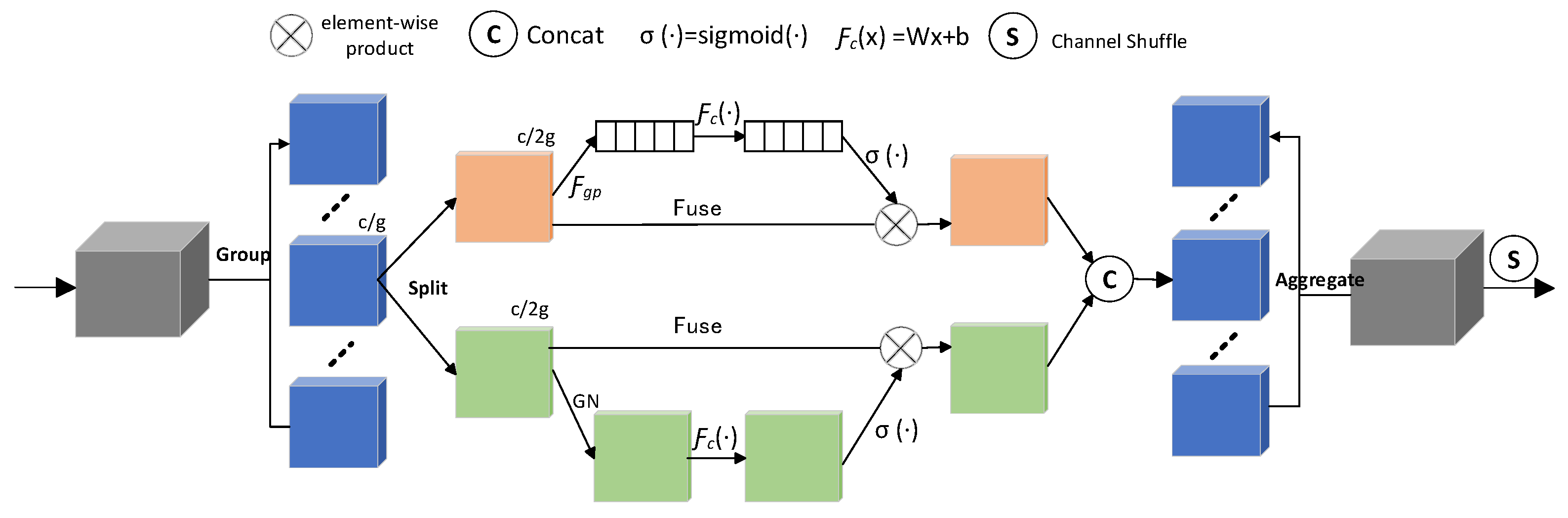

3.4. SA

The SA module focuses on the important information in the input feature map to improve the model's performance. Compared with CBAM, the SA module introduces channel shuffle, which further enhances the diversity of attention features. Channel shuffle, together with the combination of channel and spatial attention, gives the SA module better generalization ability in various complex scenarios. The SA structure is shown in Figure 6.

The SA module mainly consists of three parts: feature grouping, attention fusion, and feature aggregation. The input feature map $X \in \mathbb{R}^{C \times H \times W}$ is divided into $G$ groups along the channel dimension, expressed as $X = [X_1, \ldots, X_G]$ with $X_k \in \mathbb{R}^{C/G \times H \times W}$, where each sub-feature $X_k$ gradually captures a specific semantic response during training, which makes feature extraction more accurate and detailed.

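Then, $X_k$ is split into two branches along the channel dimension, and the two sub-features are denoted as $X_{k1}, X_{k2} \in \mathbb{R}^{C/2G \times H \times W}$. The channel attention branch exploits the interrelationships between channels to generate a channel attention map. It uses global average pooling (GAP) to generate channel-wise statistics $s \in \mathbb{R}^{C/2G \times 1 \times 1}$ that embed global information; $s$ is calculated by shrinking $X_{k1}$ over the spatial dimensions $H \times W$:

$$s = \mathcal{F}_{gp}(X_{k1}) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} X_{k1}(i, j) \tag{1}$$

where $H$ is the height, $W$ is the width, and $\mathcal{F}_{gp}$ denotes GAP.

A compact feature is then created through a simple gating mechanism with sigmoid activation $\sigma$. The output of the channel attention branch, $X'_{k1}$, is formulated as:

$$X'_{k1} = \sigma(\mathcal{F}_c(s)) \cdot X_{k1} = \sigma(W_1 s + b_1) \cdot X_{k1} \tag{2}$$

There are only two transformation parameters in Formula (2), namely $W_1 \in \mathbb{R}^{C/2G \times 1 \times 1}$ and $b_1 \in \mathbb{R}^{C/2G \times 1 \times 1}$.

The spatial attention branch captures the spatial dependencies between features to generate a spatial attention map. It applies group normalization (GN) to $X_{k2}$ and then uses $\mathcal{F}_c(\cdot)$ to enhance the representation of the input. The output of the spatial attention branch, $X'_{k2}$, is formulated as:

$$X'_{k2} = \sigma(W_2 \cdot \mathrm{GN}(X_{k2}) + b_2) \cdot X_{k2} \tag{3}$$

where the parameters are $W_2 \in \mathbb{R}^{C/2G \times 1 \times 1}$ and $b_2 \in \mathbb{R}^{C/2G \times 1 \times 1}$.

The outputs of the two branches are concatenated: $X'_k = [X'_{k1}, X'_{k2}] \in \mathbb{R}^{C/G \times H \times W}$. Channel shuffle is then adopted to realize cross-group information exchange, and the final output of the SA module has the same size as the input feature map.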
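The following pure-Python sketch traces one SA forward pass on a single feature map. It assumes that the per-channel parameters W1, b1, W2, b2 (each of length C/2G) are shared across groups, that group normalization uses one channel per group with no affine terms of its own, and that the final channel shuffle uses 2 groups; these choices and all function names are assumptions of the sketch, not details confirmed by this paper.

```python
import math
from typing import List, Sequence

Channel = List[List[float]]
FeatureMap = List[Channel]  # [channels][height][width]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def gap(ch: Channel) -> float:
    """Global average pooling: mean over the H x W spatial positions."""
    return sum(map(sum, ch)) / (len(ch) * len(ch[0]))


def norm_channel(ch: Channel, eps: float = 1e-5) -> Channel:
    """Group norm with one channel per group: zero mean, unit variance over H x W."""
    mu = gap(ch)
    var = sum((v - mu) ** 2 for row in ch for v in row) / (len(ch) * len(ch[0]))
    return [[(v - mu) / math.sqrt(var + eps) for v in row] for row in ch]


def channel_shuffle(x: list, groups: int) -> list:
    """Reshape channels to (groups, C/groups), transpose, and flatten."""
    per = len(x) // groups
    return [x[g * per + i] for i in range(per) for g in range(groups)]


def shuffle_attention(x: FeatureMap, G: int, w1: Sequence[float], b1: Sequence[float],
                      w2: Sequence[float], b2: Sequence[float]) -> FeatureMap:
    half = len(x) // (2 * G)  # channels per branch: C / 2G
    out: FeatureMap = []
    for k in range(G):
        xk = x[k * 2 * half:(k + 1) * 2 * half]
        xk1, xk2 = xk[:half], xk[half:]
        # Channel branch (Formula (2)): one sigmoid gate per channel from its GAP value.
        for c, ch in enumerate(xk1):
            gate = sigmoid(w1[c] * gap(ch) + b1[c])
            out.append([[gate * v for v in row] for row in ch])
        # Spatial branch: a sigmoid gate per position computed from the normalized map.
        for c, ch in enumerate(xk2):
            n = norm_channel(ch)
            out.append([[sigmoid(w2[c] * nv + b2[c]) * v for nv, v in zip(nrow, row)]
                        for nrow, row in zip(n, ch)])
    # Concatenate all groups, then shuffle with 2 groups to mix the two branches.
    return channel_shuffle(out, 2)
```

With all parameters set to zero, every gate equals 0.5, which makes it easy to see that the output has the same shape as the input and that only the channel order changes through the shuffle.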

3.5. YOLOv7-CPCSDSA

Figure 7 shows the structure of YOLOv7-CPCSDSA. The backbone network follows that of YOLOv7 and is used for feature extraction. Replacing some of the convolutions in the YOLOv7 CatConv module with PConv yields the CPC structure, which reduces redundant computation and memory access. This combination significantly reduces the number of parameters and FLOPs and improves computational efficiency. The SD structure, shown in the blue box in Figure 7, enables the model to better capture small targets and improves detection accuracy for dense objects. Adding SA lets the model focus on local information in the image at a lower computational cost, increases the detail of the output features, and improves the model's accuracy.

5. Conclusions

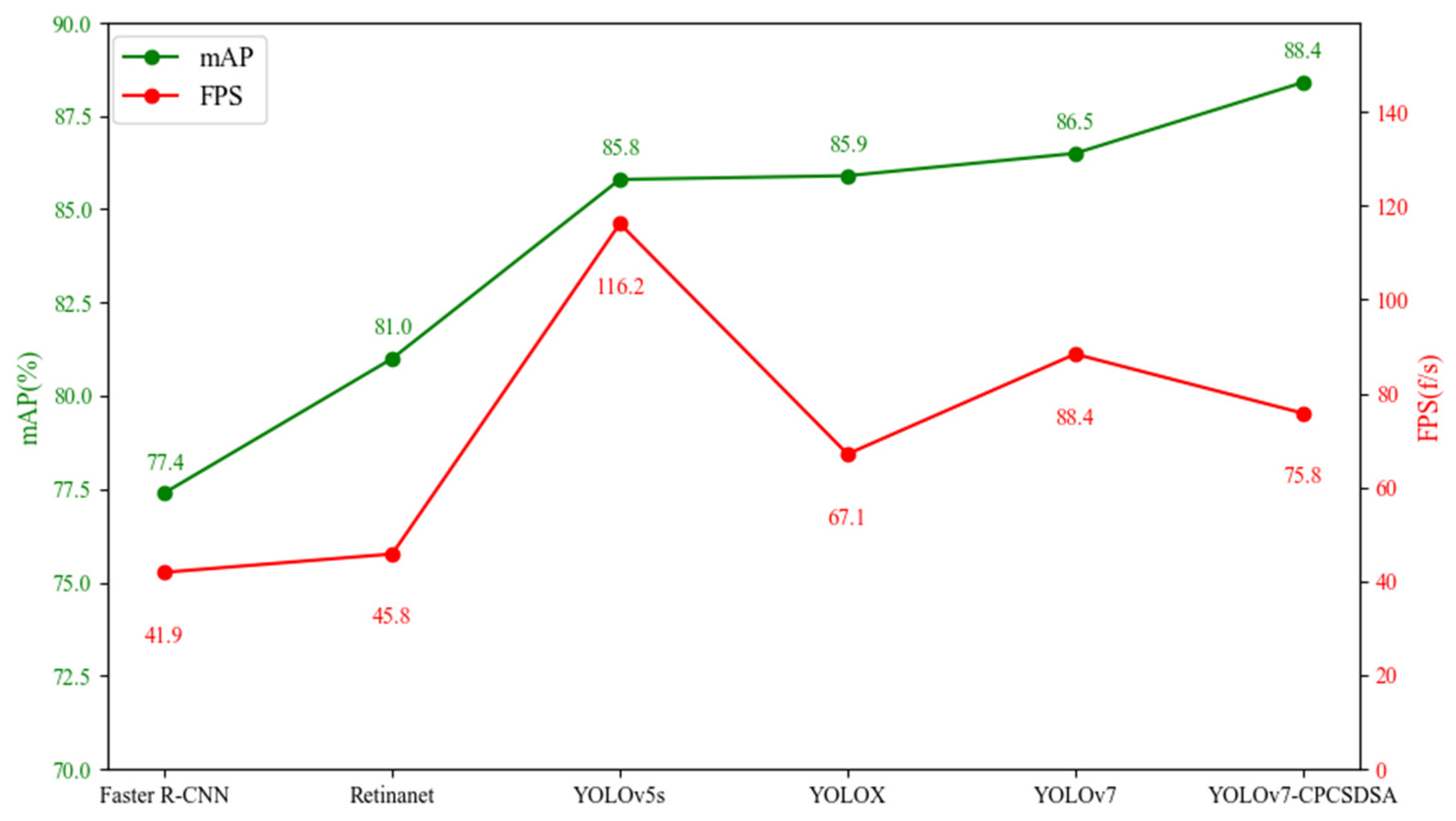

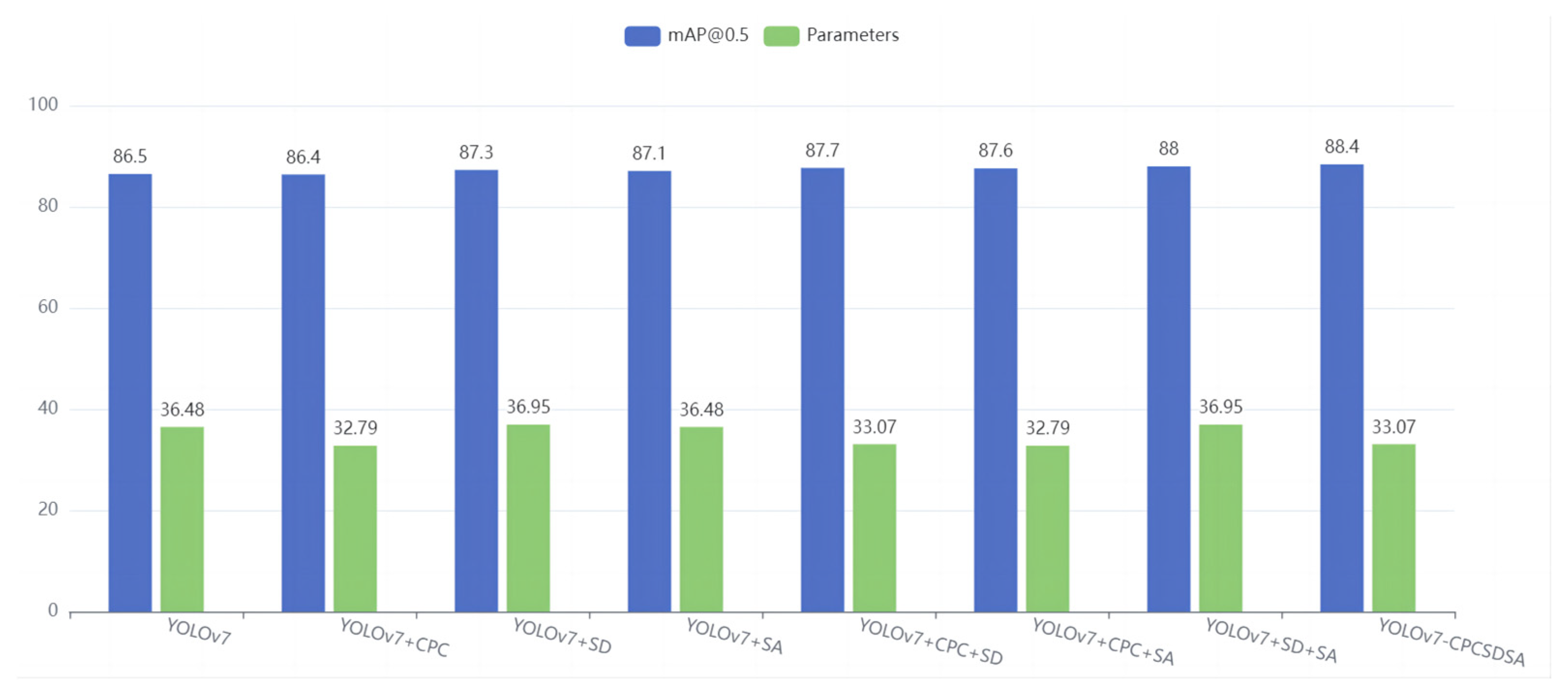

This paper proposes YOLOv7-CPCSDSA, a model for mask-wearing detection in complex scenarios. In the YOLOv7 network, the CPC structure is formed by replacing some of the convolutions in CatConv with PConv. This structure reduces computational redundancy, memory access, and model parameters, thus improving the computational efficiency of the network. The SD structure is added to optimize the model for dense targets; it better captures small targets and improves detection accuracy for dense targets. SA is added to the network to focus on locally important information in the image at a lower computational cost and to increase the detail in the output features.

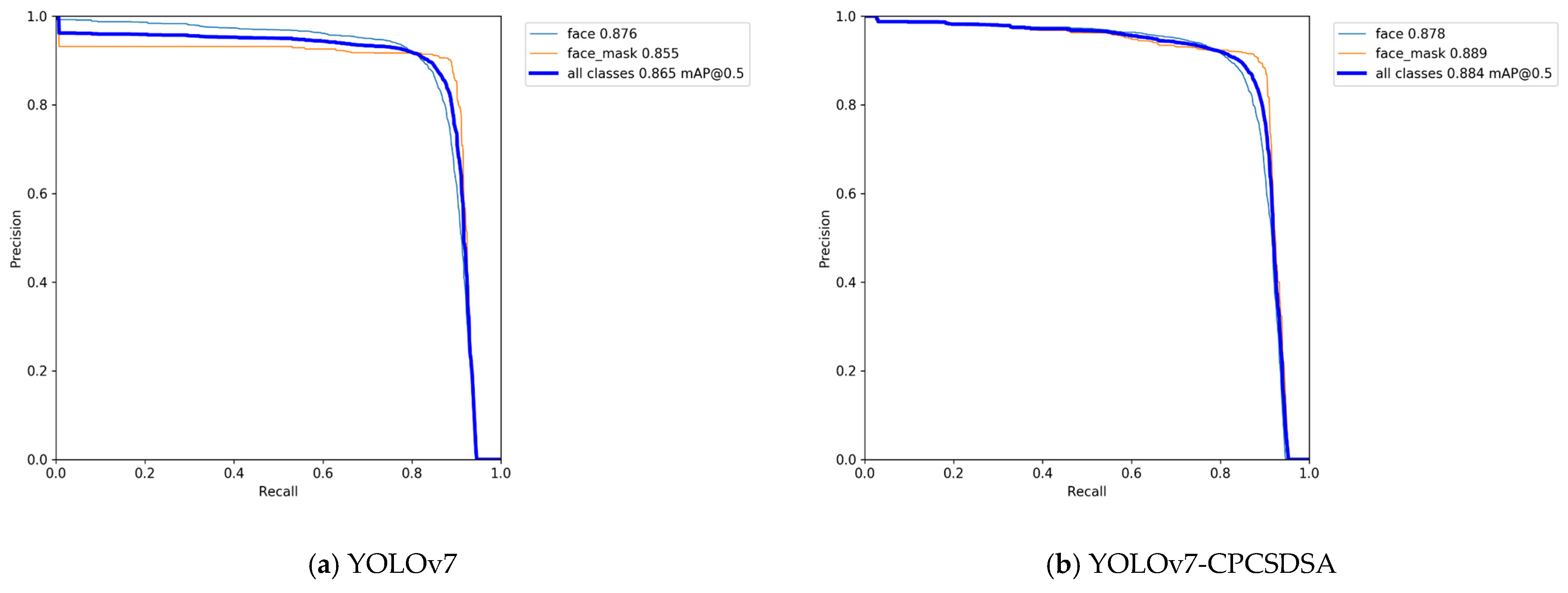

On the dataset used in this paper, YOLOv7-CPCSDSA achieves higher mAP@0.5 and mAP@0.5:0.95 than YOLOv7 with fewer parameters. The comparison experiments show that the mAP of the proposed model is higher than that of other classical models, and the ablation experiments show that each module improves detection performance. The visualization results show that YOLOv7-CPCSDSA performs well in complex scenarios. However, the FPS of this model is lower than that of YOLOv7.

YOLOv7-CPCSDSA also has limitations related to the dataset. The dataset may mainly contain samples from specific populations or samples collected in specific environments, which may affect the model's generalization ability in complex scenarios. The model also has performance limitations: it may be difficult to detect masks accurately under certain lighting conditions, and occlusion may pose a challenge for accurate detection, resulting in potential false positives or false negatives.

YOLOv7-CPCSDSA can be used for real-time monitoring of public places, such as airports, railway stations, shopping malls, and schools. It can also be applied to other fields, such as healthcare and industrial safety, for example to detect whether patients and medical staff in hospitals are wearing masks correctly and to monitor whether factory workers are adhering to safety regulations. However, YOLOv7-CPCSDSA still has shortcomings when masks are severely occluded. Future research can focus on further optimizing the algorithm to improve the accuracy and robustness of mask-wearing detection.