Abstract

Scene text detection is a fundamental task in image processing with extensive application value. Segmentation-based methods offer excellent post-processing algorithms but rely on time-consuming feature processing. Real-time semantic segmentation methods use lightweight backbone networks for fast feature extraction and aggregation but lack effective post-processing methods. Pure convolutional networks improve model performance by redesigning key components. Combining the advantages of these three types of methods, we propose a Pure Convolutional Bilateral Segmentation Network (PCBSNet) for real-time natural scene text detection. First, we construct a bilateral feature extraction backbone network to significantly improve detection speed. The low detail extraction branch captures spatial information, while the efficient semantic extraction branch accurately captures semantic features through a series of micro designs. Second, we build an efficient attention aggregation module to guide the efficient and adaptive aggregation of features from the two branches. The fused feature map then undergoes feature enhancement to obtain a more accurate and reliable feature representation. Finally, we use differentiable binarization post-processing to construct text instance boundaries. To evaluate the effectiveness of the proposed model, we compared it with mainstream lightweight models on three datasets: ICDAR2015, MSRA-TD500, and CTW1500. The F-measure scores were 82.9%, 82.8%, and 78.9%, respectively, at inference speeds of 59.1, 94.3, and 75.5 FPS. We also conducted extensive ablation experiments on the ICDAR2015 dataset to validate the proposed improvements. The results indicate that the proposed model significantly improves inference speed while enhancing accuracy and is competitive with other advanced detection methods. However, the detection performance of PCBSNet on curved text still needs to be improved.

1. Introduction

Object detection is an important research direction in computer vision that aims to identify and locate objects in images. In practice, object detection covers a wide range of tasks, such as vehicle trajectory extraction [1], single-object perception [2], precision agriculture detection [3], and scene text detection. Among these, scene text detection is a fundamental problem and can be applied to many real-world tasks, such as license plate recognition [4], scene analysis [5], and autonomous driving [6]. The accuracy and real-time performance of scene text detection are of significant importance for the subsequent tasks of text recognition and scene understanding.

In recent years, significant progress has been made in scene text detection with the development of Convolutional Neural Networks (CNNs) [7]. Researchers have proposed various scene text detection methods based on CNNs, including regression-based and segmentation-based approaches. Regression-based scene text detection directly regresses text instance boundaries based on object detection methods [8], which enables fast detection and avoids error accumulation in multiple stages. However, this method has limited text representation forms (axis-aligned rectangles or rotated rectangles) and weak generalization capability. It struggles to produce satisfactory results when detecting arbitrary-shaped text, which greatly affects the performance of subsequent text recognition tasks. On the other hand, segmentation-based scene text detection locates text instances by pixel-level classification, allowing for accurate representation of arbitrarily shaped text. It has become the mainstream method in scene text detection. However, segmentation-based methods face challenges in complex post-processing and inefficient feature processing, making it difficult to simultaneously achieve high accuracy and inference speed.

The complexity of post-processing arises from the fact that most post-processing algorithms prioritize accuracy over speed. To address this issue, DBNet (Differentiable Binarization Network) [9] introduces differentiable binarization, which replaces the non-differentiable binarization operation with an approximate step function. Because this function can be trained jointly with the segmentation network, it speeds up the otherwise slow post-processing stage.

The reason for the inefficient feature processing methods is that segmentation-based methods are typically designed for general object detection, where high accuracy heavily relies on the performance of the backbone network for feature processing, often neglecting computational costs. For example, CRAFT [10] introduces additional computations on high-resolution feature maps using U-Net [11], resulting in reduced model speed. Pixel-Anchor [12] uses dilated convolutions [13] to remove downsampling operations, leading to high memory usage and computational complexity. ABCNet [14] employs Residual Networks (ResNets) [15] that require substantial computational resources for training and inference when the network structure is deep. TextFuseNet [16] utilizes Feature Pyramid Networks (FPNs) [17] to fuse multi-scale features in a bottom-up manner, incurring significant overhead.

In order to balance the accuracy and inference speed of the scene text detection model while meeting the requirements of real-time applications, we have constructed a Pure Convolutional Bilateral Segmentation Network (PCBSNet). The most critical issue we addressed was how to overcome the inefficient feature processing methods of existing approaches while avoiding accuracy loss.

The post-processing part of DBNet [9] maintains a high inference speed, but its ResNet + FPN feature extraction and fusion are time-consuming. A lightweight backbone can significantly improve feature extraction speed, but accuracy inevitably decreases because of the fewer network layers. We therefore introduce the design structure of ConvNeXt [18] into the backbone network of BiSeNet V2 [19] to construct the Bilateral Feature Extraction (BFE) module, which replaces the ResNet feature extractor. This significantly improves inference speed while minimizing accuracy loss. The feature fusion part of BiSeNet V2 [19] adopts a simple Bilateral Guided Aggregation (BGA) module, which ignores the difference in importance between the features of the two branches. Therefore, we embed the Triplet Attention Fusion (TAF) [20] and Triple Channel-Spatial Attention (TCSA) modules into the BGA module to construct the Efficient Attention Aggregation (EAA) module, allowing the network to focus more on important semantic information. We also replace the DWConv (Depthwise Convolution) in the BGA module with the faster PConv (Partial Convolution) to better meet real-time requirements. Finally, we use Coordinate Attention (CA) [21] to design the Feature Enhancement (FE) module, which aggregates input features along the horizontal and vertical directions separately to capture position-sensitive information and long-range channel dependencies, compensating for the semantic bias introduced during fusion. To summarize, the main contributions of this paper are as follows:

- We abandoned the inefficient feature extraction method of DBNet and used an improved Bilateral Feature Extraction module as a replacement for ResNet18 in the feature extraction part of PCBSNet. This improves the efficiency of feature capture and enables the implementation of a lightweight network.

- We embedded attention mechanisms in the fusion part of BiSeNet V2, creating the Efficient Attention Aggregation module. This module guides the network to efficiently and adaptively fuse both detail information and semantic information.

- We designed a Feature Enhancement module for the fused features to obtain more accurate and reliable feature representations.

- We evaluated our proposed PCBSNet for real-time scene text detection on the ICDAR2015, MSRA-TD500, and CTW1500 datasets, comparing it with existing mainstream lightweight methods. The experimental results demonstrate that our approach achieves a good balance between accuracy and real-time performance.

The rest of the paper is organized as follows. Section 2 provides an overview of the related work in scene text detection and the techniques used in our research. Section 3 presents a detailed description of our proposed real-time scene text detection network, PCBSNet. Section 4 presents extensive experiments and provides a detailed analysis of the results. Section 5 concludes the paper, summarizes the findings, and suggests future research directions.

2. Related Work

Semantic segmentation-based methods have become one of the mainstream approaches for scene text detection. These methods extract features using a backbone network to obtain pixel-level label predictions and employ post-processing algorithms to aggregate pixels for text detection. For instance, PAN (Pixel Aggregation Network) [22] utilizes a ResNet backbone network to extract features and introduces the Feature Pyramid Enhancement Module (FPEM) and the Feature Fusion Module (FFM) to improve feature fusion accuracy. The Pixel Aggregation (PA) post-processing method is used to aggregate text pixels. However, simple pixel aggregation may struggle with detecting adjacent text instances. To address this, PSENet (Progressive Scale Expansion Network) [23] employs ResNet + FPN backbone network feature extraction and proposes a progressive scale expansion post-processing algorithm based on a breadth-first search to accurately separate adjacent text instances. These post-processing methods involving expansion strategies are complex and computationally expensive. To tackle this issue, DBNet [9] introduces differentiable binarization (DB), which trains the binarization process along with the segmentation network to significantly improve post-processing efficiency. However, this method struggles to handle text within text instances. Therefore, FCENet (Fourier Contour Embedding Networks) [24] utilizes compact Fourier feature vectors to approximate text contours and employs Fourier inverse transformation as a post-processing step to accurately depict text contours and achieve correct text detection within text instances. Although these algorithms have achieved good performance in scene text detection, their effectiveness highly relies on feature processing and post-processing procedures. Additionally, these methods employ deep networks for feature extraction, which, while achieving desired accuracy, often come with long inference times that hinder real-time applications.

Considering the importance of real-time performance in scene text detection, researchers have recently explored the use of real-time semantic segmentation approaches for efficient feature processing, aiming to significantly reduce inference time. Real-time semantic segmentation employs simple multi-branch networks to extract different semantic features from images, thereby improving feature processing efficiency. For example, BiSeNet V1 and V2 [19,25] adopt a dual-branch structure for feature capturing and design parallel context and spatial paths to balance segmentation accuracy and inference speed. STDC [26] removes the spatial path of BiSeNet V1 [25] and enhances the base model by incorporating detail prediction. BiSeNet V3 [27] also eliminates the spatial path of BiSeNet V1 [25] and applies traditional edge detection techniques in this field, introducing coordinate feature refinement and aggregation modules to achieve the best trade-off between speed and accuracy. However, these methods often suffer from incomplete feature extraction when the text information in the image is complex, leading to missed or false detections.

To address this limitation, enhancing the network structure becomes crucial. The idea of designing network structures to enhance model detection performance originated from the Vision Transformer (ViT) [28]. ViT replaces convolutional operations with self-attention mechanisms, enabling extensive information interaction and global context capture. However, the large number of parameters in ViT makes it challenging to apply to real-time tasks. To overcome this, recent studies have attempted to reproduce several key success factors of ViT using convolutional operations, including long-range modeling, dynamic weights, robustness to distortion, and higher-order spatial interactions. For example, GFNet [29] combines 2D-FFT (Fast Fourier Transform) and 2D-IFFT (Inverse Fast Fourier Transform) to dynamically learn long-range spatial dependencies. RepLKNet [30] employs a very large 31 × 31 convolution kernel to increase the effective receptive field, together with small convolution kernels for re-parameterization to alleviate distortion. HorNet [31] designs the Recursive Gated Convolution (RGConv) to explicitly model high-order interactions between any two spatial positions. ConvNeXt [18] incorporates the micro-design of Swin Transformer [32] into convolutional networks to explore the limits achievable with purely convolutional networks. ConvNeXt V2 [33] proposed a fully convolutional masked autoencoder framework based on ConvNeXt [18] and introduced the Global Response Normalization (GRN) layer, achieving a remarkable accuracy of 76.7% on the ImageNet dataset.

Through an in-depth investigation of the aforementioned methods and by leveraging their respective advantages, we propose a PCBSNet for real-time scene text detection.

3. Methods

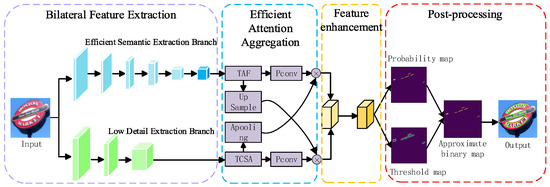

The structure of the proposed PCBSNet model is shown in Figure 1. First, the image is fed into the BFE module, where detail and semantic information are captured by the Low Detail Extraction Branch (LDEB) and the Efficient Semantic Extraction Branch (ESEB), respectively. The output feature maps of the two branches are adaptively aggregated by the EAA module, and the aggregated features enter the FE module to obtain a more accurate and reliable feature representation. Finally, an approximate binary map is obtained through DB post-processing and serves as the final prediction map.

Figure 1.

The diagram illustrates PCBSNet. The purple box represents the bilateral feature extraction module. The blue box represents the efficient attention aggregation module. The yellow box represents the feature enhancement module. The red box represents the post-processing step.

3.1. Bilateral Feature Extraction

This section introduces the proposed BFE module. Traditional segmentation-based text detection methods often use ResNet [15] for feature extraction, which is inefficient and inadequate for real-time detection tasks. To address this issue, we integrated the design of ConvNeXt [18] into the bilateral feature extraction network of BiSeNet V2 [19]. We design the LDEB and ESEB to capture target contour details and semantic information, respectively. We replaced the ResNet [15] backbone with BFE, significantly reducing the network parameters and computational complexity, thus achieving a lightweight improvement of the network.

3.1.1. Low Detail Extraction Branch

The LDEB is responsible for extracting spatial information from the image for text detection. Low-level details play a crucial role in semantic segmentation, as they capture the relationships between each pixel and its neighboring pixels, as well as the relationships between each pixel and all other pixels in the entire image, forming the semantic information of the image.

In order to capture rich spatial information while maintaining high inference speed, we have designed the LDEB using a VGG [34] network structure instead of a memory-intensive residual connection structure. We stacked three layers of the VGG network, with channel numbers set as 64, 128, and 256, respectively, to ensure a larger spatial size and channel capacity.

For the input image $IM$, the detail feature $F_d$ is obtained through the LDEB. The calculation process is as follows:

$$F_d = \mathrm{LDEB}(IM)$$

where $\mathrm{LDEB}(\cdot)$ represents passing the feature through the LDEB.

3.1.2. Efficient Semantic Extraction Branch

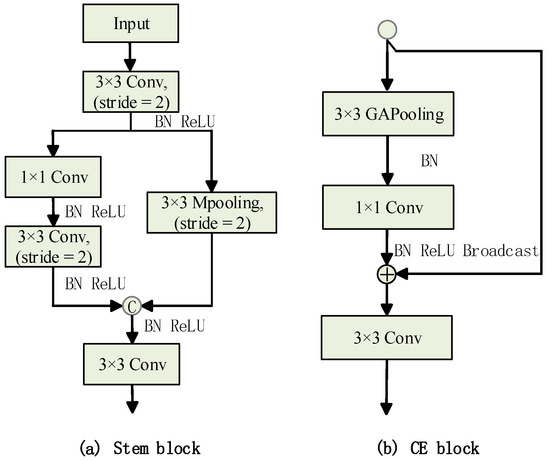

In parallel with the LDEB, the ESEB is designed to capture high-level semantics, which is characterized by a deep level, large receptive field, and low channel capacity. In the first stage, the Stem block is used to expand the receptive field using the fast downsampling strategy, and the structure is shown in Figure 2a. The final stage uses CE blocks, with global average pooling and residual connections effectively embedding global context information, as shown in Figure 2b. The remaining details of the middle layer will be explained in detail below.

Figure 2.

The structure of the Stem block (a) and CE block (b).

With the breakthrough development of pure convolutional networks, it can be observed that the effectiveness of BiSeNet V2 [19] is partly attributable to the Gather-and-Expansion (GE) layer of its high-level semantic branch, which resembles the inverted bottleneck structure of ConvNeXt [18], as shown in Figure 3a–c. Compared with a standard convolutional network, the GE1 and GE2 blocks obtain a larger receptive field by stacking convolution layers. A design similar to ConvNeXt [18] can give convolutional networks stronger feature expression capabilities for capturing high-level semantics. The more efficient the semantic extraction branch is, the more complete the semantic extraction and the higher the accuracy of scene text detection.

Figure 3.

Comparison of the GE block (a,b), the ConvNeXt block (c), and the PCB block proposed in this work (d,e).

Following the design principles of ConvNeXt [18], a pure convolutional bilateral (PCB) block was constructed on the basis of GE. The main improvements include:

- (1) Broader Channels: The original channel capacity of the pure convolutional network BiSeNet V2 [19] is (16, 32, 64, 128), which is one-fourth of ResNet18 (64, 128, 256, 512). In comparison, ConvNeXt [18] expands the channel numbers to (96, 192, 384, 768). Considering the potential misdetections and omissions of text instances, richer semantic information is needed. Therefore, PCBSNet enlarges the channel numbers to (32, 64, 128, 256), which is one-third of ConvNeXt [18].

- (2) Agile Convolutions: DWConv [35] has a visible effect on reducing FLOPs, but replacing conventional convolutions with DWConv leads to a significant drop in accuracy. Research [36] has found that increasing the network width to compensate for accuracy typically results in higher memory access. PCBSNet employs PConv [37] with a ratio of 1/4 as a substitute for DWConv, optimizing the cost by leveraging feature map redundancy.

- (3) Larger Convolution Kernel Sizes: Since VGG, mainstream CNNs usually adopt smaller convolution kernels like 3 × 3 and 5 × 5. However, ConvNeXt [18] achieves saturated performance by using a 7 × 7 convolution. In the GE1 block of the BiSeNet V2 [19] network, a 5 × 5 receptive field is achieved by stacking two 3 × 3 convolutions, but it is still smaller than that of the ConvNeXt block. PCBSNet replaces the second 3 × 3 convolution in the stacked GE1 block with a 5 × 5 convolution, expanding the receptive field to 7 × 7, matching the receptive field of ConvNeXt [18].

- (4) More Suitable Activation Function: Most existing models attach a batch normalization layer (BN) and a ReLU (Rectified Linear Unit) layer after each convolutional layer. However, research [37] has shown that this model structure does not achieve the best performance for networks with smaller channel numbers. Considering the lightweight and high-performance aspects, PCBSNet uses GELU (Gaussian Error Linear Unit) as a replacement for ReLU to improve model convergence without increasing computational cost.
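The receptive-field arithmetic in item (3) can be checked with a short sketch. It assumes stride-1, undilated convolutions applied in sequence, for which the receptive field grows by k − 1 per layer; the function name `stacked_receptive_field` is illustrative and not taken from the paper.

```python
def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1, undilated convolutions applied in sequence."""
    rf = 1
    for k in kernel_sizes:
        rf += k - 1  # each k x k layer widens the field by k - 1 pixels
    return rf


ge1_rf = stacked_receptive_field([3, 3])    # two stacked 3 x 3 convolutions (GE1)
pcb_rf = stacked_receptive_field([3, 5])    # second 3 x 3 replaced by a 5 x 5
convnext_rf = stacked_receptive_field([7])  # a single 7 x 7 convolution

print(ge1_rf, pcb_rf, convnext_rf)  # 5 7 7
```

Under these assumptions, swapping the second 3 × 3 kernel for a 5 × 5 kernel is enough to reach the 7 × 7 receptive field of a single ConvNeXt-style convolution.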

The improved PCB1 and PCB2 are shown in Figure 3d,e. In PCB1, a 3 × 3 convolution aggregates features and expands them to a high-dimensional space, PConv efficiently processes the high-dimensional features, and a 1 × 1 convolution serves as a projection layer that maps the output back to a low-dimensional space. In PCB2, where the stride is 2, two PConv layers are used to further expand the receptive field, and one PConv is used as a shortcut.

For the input image $IM$, the intermediate feature $F_{stem}$ is first obtained through the Stem block of the ESEB, followed by the feature $F_{pcb}$ obtained through the proposed PCB block, and finally the semantic feature $F_s$ obtained through the CE block. The calculation process is as follows:

$$F_{stem} = \mathrm{Stem}(IM)$$

$$F_{pcb} = \mathrm{PCB}^{8}(F_{stem})$$

$$F_s = \mathrm{CE}(F_{pcb})$$

where $\mathrm{Stem}(\cdot)$ represents passing the input feature through the Stem block, $\mathrm{PCB}^{8}(\cdot)$ represents passing the intermediate feature through the PCB block 8 times, and $\mathrm{CE}(\cdot)$ represents passing the intermediate feature through the CE block.

The details of the final backbone network are shown in Table 1. The stage represents different stages of feature extraction, opr represents the operation, k represents the maximum kernel size in that operation, c represents the number of channels, s represents the stride, and r represents the number of repetitions.

Table 1.

Details of the backbone network.

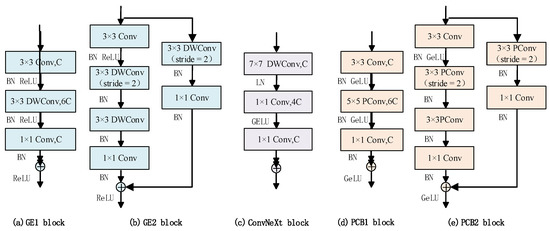

3.2. Efficient Attention Aggregation

This section introduces the EAA module proposed in this paper. BiSeNet V2 [19] incorporates a BGA layer to fuse complementary information from two branches, where the BGA utilizes the contextual information from the high-level semantic branch to guide the feature response of the low-level detail branch. However, the simple guidance overlooks the difference in the importance of the two types of information, and the BGA employs DWConv with unexpanded channel capacity, which inevitably leads to a decrease in accuracy. To allow the model to focus more efficiently and adaptively on important semantic information and to fuse the output features of the two branches, we propose the EAA module. Building upon the BGA, the EAA module introduces TAF [20] and TCSA, while replacing the DWConv with PConv. The structure of the EAA module is illustrated in Figure 4.

Figure 4.

Structure of the Efficient Attention Aggregation module.

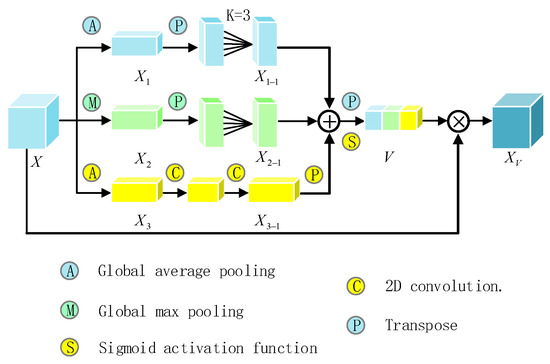

3.2.1. Triplet Attention Fusion

The ESEB is characterized by a large channel capacity and low resolution. The large channel capacity contains rich semantic information, and establishing channel relationships is beneficial for focusing on important semantic features between channels. The low resolution contains limited spatial information, and establishing spatial relationships can easily lead to pixel confusion. In view of this, this paper adopted the TAF module [20] to establish channel relationships for ESEB. Compared with other state-of-the-art channel attention modules, TAF has fewer parameters and lower computational complexity, which is in line with the goal of constructing a lightweight network. Its structure is shown in Figure 5.

Figure 5.

The structure of the TAF module.

For a given input semantic feature $X \in \mathbb{R}^{C \times H \times W}$, one global max pooling and two global average pooling operations over the spatial dimensions are performed to obtain the global feature vectors $v_1$, $v_2$, and $v_3$ of size $C \times 1 \times 1$. The calculation process is as follows:

$$v_1 = \mathrm{GAP}(X), \quad v_2 = \mathrm{GMP}(X), \quad v_3 = \mathrm{GAP}(X)$$

where $\mathrm{GAP}(\cdot)$ represents the global average pooling operation and $\mathrm{GMP}(\cdot)$ represents the global max pooling operation.

The global feature vectors $v_1$ and $v_2$ are transposed and then passed through a 1D convolution to obtain $u_1$ and $u_2$. Both paths share the same convolution kernel to enhance generalization while reducing the number of parameters. The calculation process is as follows:

$$u_1 = \mathrm{C1D}_3(v_1^{T}), \quad u_2 = \mathrm{C1D}_3(v_2^{T})$$

where $(\cdot)^{T}$ represents the transpose operation and $\mathrm{C1D}_3(\cdot)$ represents a 1D convolution operation with a kernel size of 3.

The global feature vector $v_3$ is passed through a 2D convolution to capture the channel attention vector $u_3$ after reshaping the dimensions. The calculation process is as follows:

$$u_3 = \delta(\mathrm{C2D}_1(v_3))$$

where $\mathrm{C2D}_1(\cdot)$ represents a 2D convolution operation with a kernel size of 1, and $\delta$ represents the ReLU function.

After the fusion of $u_1$, $u_2$, and $u_3$, the channel attention vector $V$ is obtained through activation and dimension reshaping operations. The calculation process is as follows:

$$V = \sigma(u_1 \oplus u_2 \oplus u_3)$$

where $\sigma$ represents the Sigmoid function and $\oplus$ represents element-wise addition.

The original feature map $X$ is element-wise multiplied with the channel attention vector $V$ to obtain the enhanced semantic feature $X'$. The calculation process is as follows:

$$X' = X \times V$$

where $\times$ represents element-wise multiplication.
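The TAF steps described above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the reference implementation of [20]: it assumes the average-pooled and max-pooled vectors feed the two shared 1D-convolution paths and that a second average-pooled vector feeds the 1 × 1 2D convolution. The names `taf_sketch`, `kernel_1d`, and `w_2d`, and the example weights, are hypothetical.

```python
import numpy as np


def conv1d_same(v, kernel):
    """1D cross-correlation along the channel axis with zero padding."""
    pad = len(kernel) // 2
    padded = np.pad(v, pad)
    return np.array([np.dot(padded[i:i + len(kernel)], kernel)
                     for i in range(len(v))])


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def taf_sketch(x, kernel_1d, w_2d):
    """x: (C, H, W); kernel_1d: (3,) shared kernel; w_2d: (C, C) 1x1-conv weights."""
    v_avg = x.mean(axis=(1, 2))             # global average pooling -> (C,)
    v_max = x.max(axis=(1, 2))              # global max pooling -> (C,)
    u_avg = conv1d_same(v_avg, kernel_1d)   # both 1D paths share one kernel
    u_max = conv1d_same(v_max, kernel_1d)
    # A 1x1 2D convolution on a (C, 1, 1) tensor is a matrix-vector product.
    u_2d = np.maximum(w_2d @ v_avg, 0.0)    # followed by ReLU
    attn = sigmoid(u_avg + u_max + u_2d)    # channel attention vector, (C,)
    return x * attn[:, None, None], attn    # reweight every channel


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4, 4))
out, attn = taf_sketch(x, np.array([0.25, 0.5, 0.25]), 0.1 * np.eye(8))
print(out.shape, attn.shape)
```

The output has the same shape as the input, and each channel is scaled by a weight in (0, 1), which is what allows the module to emphasize some channels without changing the feature map resolution.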

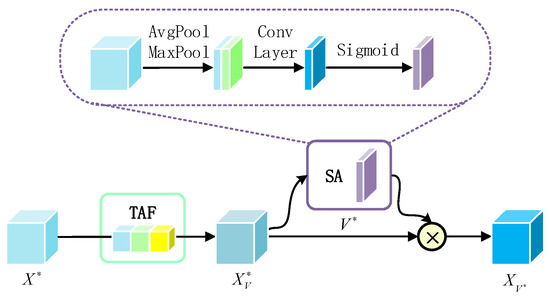

3.2.2. Triple Channel Spatial Attention

The LDEB has a higher resolution and larger channel capacity. The high resolution contains rich spatial information, while the large channel capacity contains rich semantic information. Establishing spatial and channel relationships is beneficial for capturing critical detail features. Drawing inspiration from the design architecture of CBAM [38], we proposed the TCSA module to establish spatial and channel relationships for LDEB. Its structure is shown in Figure 6.

Figure 6.

The structure of the TCSA.

Formally, for the input detail feature $F_d$, the TAF module is embedded to capture the enhanced detail feature $\hat{F}_d$ of the feature map $F_d$. The calculation process is as follows:

$$\hat{F}_d = \mathrm{TAF}(F_d)$$

where $\mathrm{TAF}(\cdot)$ represents the TAF operation.

The enhanced detail feature $\hat{F}_d$ is passed through spatial attention (SA), resulting in the spatial attention vector $M_s$. The calculation process is as follows:

$$M_s = \mathrm{SA}(\hat{F}_d)$$

where $\mathrm{SA}(\cdot)$ represents the spatial attention operation, which is built on a convolution operation with a kernel size of $k \times k$.

The enhanced detail feature $\hat{F}_d$ is element-wise multiplied with the spatial attention vector $M_s$ to obtain the final enhanced detail feature $F_d'$. The calculation process is as follows:

$$F_d' = \hat{F}_d \times M_s$$

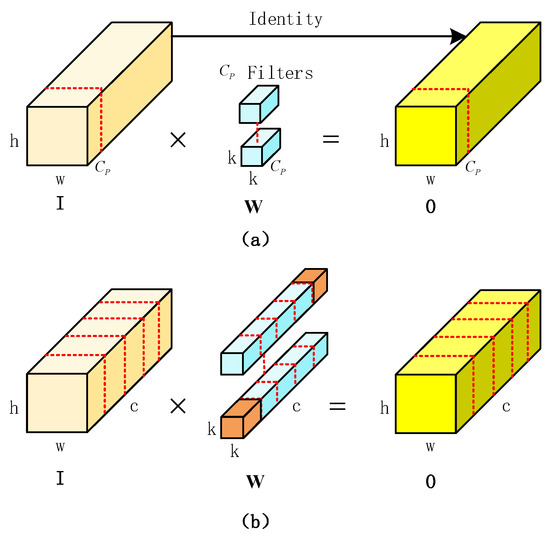

3.2.3. Partial Convolution

PConv [37] replaces DWConv [35] and reduces redundant calculations and memory access, effectively reducing the parameters and computational complexity of the network. DWConv first performs feature extraction on individual input channels and then applies pointwise convolution to change the channel count. On the other hand, PConv only performs a convolution of partial channels, further incorporating pointwise convolution into PConv to form a T-shaped receptive field.
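A minimal NumPy sketch of the partial convolution idea follows: a regular 3 × 3 convolution is applied to the first fraction of the channels, and the remaining channels are passed through untouched. The helper names `conv3x3_same` and `partial_conv` and the random weights are illustrative assumptions; the appended pointwise convolution is omitted for brevity.

```python
import numpy as np


def conv3x3_same(x, weight):
    """Naive 3x3 convolution with zero padding.

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3).
    """
    c_out = weight.shape[0]
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c_out, h, w))
    for i in range(h):
        for j in range(w):
            patch = padded[:, i:i + 3, j:j + 3]  # (C_in, 3, 3)
            out[:, i, j] = np.tensordot(weight, patch, axes=3)
    return out


def partial_conv(x, weight, ratio=0.25):
    """Convolve only the first ratio * C channels; copy the rest unchanged."""
    c_p = int(x.shape[0] * ratio)
    out = x.copy()
    out[:c_p] = conv3x3_same(x[:c_p], weight)
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 5))       # 8 channels, ratio 1/4 -> 2 convolved
weight = rng.standard_normal((2, 2, 3, 3))
y = partial_conv(x, weight)
print(y.shape, np.array_equal(y[2:], x[2:]))
```

Only the convolved slice costs any multiply-accumulate operations, which is where the savings relative to a full convolution come from.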

As shown in Figure 7, for the input $I \in \mathbb{R}^{c \times h \times w}$, DWConv applies $c$ convolution kernels of size $k \times k$ to compute the output $O \in \mathbb{R}^{c \times h \times w}$, for a total of $h \times w \times k^2 \times c$ FLOPs. When a pointwise convolution is appended and the channel count is widened to $c'$ to compensate for accuracy, the number of memory accesses increases to approximately $h \times w \times 2c'$. PConv, in contrast, applies a regular convolution for spatial feature extraction to only $c_p$ of the input channels while keeping the rest unchanged. Its FLOPs are therefore only $h \times w \times k^2 \times c_p^2$; with a partial ratio of $r = c_p / c = 1/4$, this is $1/16$ of the FLOPs of a regular convolution. PConv also has lower memory access, approximately $h \times w \times 2c_p$.
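The cost comparison can be made concrete with a small calculator. It uses the usual multiply-accumulate counts for a regular convolution, a depthwise convolution, and a partial convolution on $c_p = r \cdot c$ channels; the feature map size and channel count in the example are hypothetical values chosen only for illustration.

```python
def conv_flops(h, w, k, c):
    """Regular k x k convolution with c input and c output channels."""
    return h * w * k * k * c * c


def dwconv_flops(h, w, k, c):
    """Depthwise convolution: one k x k filter per channel."""
    return h * w * k * k * c


def pconv_flops(h, w, k, c, ratio=0.25):
    """Partial convolution: a regular convolution on c_p = ratio * c channels."""
    c_p = int(c * ratio)
    return h * w * k * k * c_p * c_p


h, w, k, c = 80, 80, 3, 128  # hypothetical feature map and channel count
regular = conv_flops(h, w, k, c)
depthwise = dwconv_flops(h, w, k, c)
partial = pconv_flops(h, w, k, c)
print(regular // partial)  # 16
```

With a partial ratio of 1/4, the convolved slice has one quarter of the input channels and one quarter of the output channels, so the cost falls by a factor of 16 relative to a regular convolution of the same kernel size.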

Figure 7.

Computation processes for Depthwise Separable Convolution and Partial Convolution. (a) represents the computation process for Partial Convolution, and (b) represents the computation process for Depthwise Separable Convolution.

The guidance semantic feature $S_g$ and the interactive semantic feature $S_i$ are obtained from the enhanced semantic feature $F_s'$ (the TAF output) through PConv and upsampling operations, respectively. The calculation process is as follows:

$$S_g = \mathrm{PConv}(F_s'), \quad S_i = \mathrm{Up}(F_s')$$

where $\mathrm{PConv}(\cdot)$ represents the PConv operation and $\mathrm{Up}(\cdot)$ represents the upsampling operation.

The guidance detail feature $D_g$ and the interaction detail feature $D_i$ are obtained from the enhanced detail feature $F_d'$ (the TCSA output) through PConv and average pooling operations, respectively. The calculation process is as follows:

$$D_g = \mathrm{PConv}(F_d'), \quad D_i = \mathrm{AP}(F_d')$$

where $\mathrm{AP}(\cdot)$ represents the general average pooling operation.

$D_g$ guides $S_i$ through element-wise multiplication to generate the final detail feature $D_f$, while $S_g$ guides $D_i$ through element-wise multiplication to generate the final semantic feature $S_f$. The calculation process is as follows:

$$D_f = D_g \times S_i, \quad S_f = S_g \times D_i$$

The final $D_f$ and $S_f$ are added element by element, and the fused feature $F$ is then obtained through a convolution to eliminate aliasing effects. The calculation process is as follows:

$$F = \mathrm{Conv}(D_f \oplus S_f)$$

where $\mathrm{Conv}(\cdot)$ represents the convolution operation.

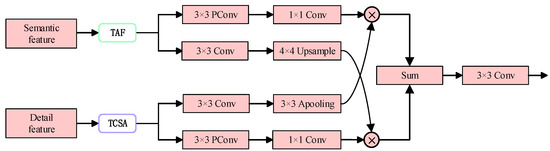

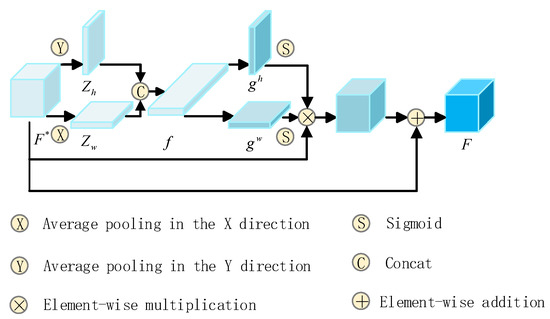

3.3. Feature Enhancement

In the processing by the EAA module, different branch feature maps may exhibit semantic differences, leading to inaccurate localization of text instances. To address this issue, we propose the FE Module, as illustrated in Figure 8. This module utilizes CA [21] to aggregate input features along the horizontal and vertical directions separately. It aims to capture position-sensitive information and channel long-range dependencies, compensating for the semantic discrepancy during the fusion process.

Figure 8.

The structure of the FE.

For a given fused feature $F \in \mathbb{R}^{C \times H \times W}$, the projection features $z^w$ and $z^h$ along the horizontal and vertical axes, respectively, are obtained by performing average pooling using pooling kernels of size (H, 1) and (1, W). The calculation process is as follows:

$$z^w = \mathrm{AP}_{(H,1)}(F), \quad z^h = \mathrm{AP}_{(1,W)}(F)$$

where $\mathrm{AP}_{(H,1)}(\cdot)$ represents the average pooling operation along the vertical axis and $\mathrm{AP}_{(1,W)}(\cdot)$ represents the average pooling operation along the horizontal axis.

The features $z^w$ and $z^h$ are concatenated along the spatial direction and then compressed in the channel dimension through a convolution operation to generate the intermediate feature $f$. The calculation process is as follows:

$$f = \mathrm{Conv}([z^w, z^h])$$

where $[\cdot, \cdot]$ represents the concatenation operation between two tensors and $\mathrm{Conv}(\cdot)$ represents the convolution operation.

The intermediate feature $f$ is split along the spatial dimension into two separate tensors, $f^w$ and $f^h$. The convolution operation is applied to transform $f^w$ and $f^h$ into the same number of channels as the input $F$. The calculation process is as follows:

$$g^w = \mathrm{Conv}(f^w), \quad g^h = \mathrm{Conv}(f^h)$$

After the FE module, the final feature map is obtained. The calculation process is as follows:

where represents the element-wise addition.
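The directional pooling, spatial concatenation, and split steps of the FE module can be sketched in NumPy as below. This is a shape-level illustration only: the channel-compressing and channel-restoring convolutions are omitted, and the function names `directional_pooling` and `split_back` are hypothetical.

```python
import numpy as np


def directional_pooling(x):
    """x: (C, H, W). Returns both pooled descriptors and their concatenation."""
    z_h = x.mean(axis=2, keepdims=True)  # average over width  -> (C, H, 1)
    z_w = x.mean(axis=1, keepdims=True)  # average over height -> (C, 1, W)
    # Bring both descriptors to a (C, L, 1) layout, then join along the
    # spatial axis so a single convolution can process them together.
    stacked = np.concatenate([z_h, z_w.transpose(0, 2, 1)], axis=1)
    return z_h, z_w, stacked             # stacked: (C, H + W, 1)


def split_back(stacked, h):
    """Undo the concatenation: first h rows are height-indexed, rest width-indexed."""
    f_h = stacked[:, :h, :]                     # (C, H, 1)
    f_w = stacked[:, h:, :].transpose(0, 2, 1)  # (C, 1, W)
    return f_h, f_w


x = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)
z_h, z_w, stacked = directional_pooling(x)
f_h, f_w = split_back(stacked, h=3)
print(z_h.shape, z_w.shape, stacked.shape)
```

Because each descriptor keeps one spatial axis intact, the attention weights derived from them can encode where along the height and width a response occurs, which a single global pooling step would discard.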

3.4. Post-Processing

3.4.1. Differentiable Binarization

Traditional binarization is non-differentiable, which means it cannot directly be used for backpropagation and updating the network. PCBSNet uses a DB module, which allows for backpropagation and network updates. The expression for the module is as follows:

$$\hat{B}_{i,j} = \frac{1}{1 + e^{-K (P_{i,j} - T_{i,j})}}$$

where $\hat{B}_{i,j}$ is the approximate binary mapping for pixel (i, j), $P_{i,j}$ is the probability map indicating the probability of pixel (i, j) being text, and $T_{i,j}$ is the adaptive threshold learned from the network for pixel (i, j). $K$ is the amplification factor, set to 50, which is used to amplify the gradients during backpropagation.
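As a quick numerical illustration, the sketch below evaluates the differentiable binarization function in its standard DBNet [9] form, 1 / (1 + exp(−K (P − T))), for single pixel values. The function name and the sample probabilities and thresholds are illustrative assumptions.

```python
import math


def differentiable_binarization(p, t, k=50.0):
    """Approximate step function: near 1 when p > t, near 0 when p < t."""
    return 1.0 / (1.0 + math.exp(-k * (p - t)))


for p, t in [(0.8, 0.3), (0.5, 0.5), (0.2, 0.3)]:
    print(p, t, round(differentiable_binarization(p, t), 4))
```

With K = 50, a margin of only 0.1 between the probability and the threshold already pushes the output close to 0 or 1, so the function behaves like hard thresholding while remaining differentiable.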

3.4.2. Loss Function

The loss function $L$ consists of the probability map loss $L_s$, the binary map loss $L_b$, and the threshold map loss $L_t$, and is computed as follows:

$$L = L_s + \alpha \times L_b + \beta \times L_t$$

where $\alpha$ and $\beta$ are hyperparameters that determine the importance of each component of the loss. In this study, $\alpha$ was set to 1 and $\beta$ was set to 10.

$L_s$ and $L_b$ are calculated using binary cross-entropy (BCE) loss, addressing the issue of imbalanced positive and negative samples through hard example mining.

$L_t$ is defined as the L1 distance between the threshold map label $y^*$ and the predicted value $x^*$, and it is computed as follows:

$$L_t = \sum_{i \in R_d} \left| y_i^* - x_i^* \right|$$

where $R_d$ is the set of pixel indices inside the dilated text polygon.
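The weighted combination of the three loss terms can be sketched in plain Python. This assumes the DBNet-style form in which the binary map loss is weighted by 1 and the threshold map loss by 10; hard example mining is omitted, and the helper names and sample values are hypothetical.

```python
import math


def bce(preds, labels, eps=1e-7):
    """Mean binary cross-entropy over a flat list of pixels."""
    total = 0.0
    for x, y in zip(preds, labels):
        x = min(max(x, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(x) + (1.0 - y) * math.log(1.0 - x))
    return total / len(preds)


def l1(preds, labels):
    """Sum of absolute differences over a flat list of pixels."""
    return sum(abs(y - x) for x, y in zip(preds, labels))


def total_loss(l_s, l_b, l_t, alpha=1.0, beta=10.0):
    """Probability map loss + alpha * binary map loss + beta * threshold map loss."""
    return l_s + alpha * l_b + beta * l_t


l_s = bce([0.9, 0.2], [1.0, 0.0])  # probability map term
l_b = bce([0.8, 0.1], [1.0, 0.0])  # binary map term
l_t = l1([0.2, 0.7], [0.0, 1.0])   # threshold map term
print(total_loss(l_s, l_b, l_t))
```

The larger weight on the threshold term compensates for the L1 distance typically being numerically smaller than the cross-entropy terms.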

4. Experiment

In this section, we validate the proposed PCBSNet through a series of experiments conducted on four publicly available datasets. These experiments aim to demonstrate its effectiveness and feasibility in terms of performance.

4.1. Dataset

The four publicly available datasets used in the experiments are described below.

SynthText [39]: It is a large-scale synthetic text detection dataset consisting of approximately 800,000 images. The dataset provides annotations for text instances in the form of words, characters, and text lines. In the experiments, this dataset was used for model pre-training.

ICDAR2015 [40]: This dataset focuses on multi-oriented text detection. It contains 1500 images, with 1000 images in the training set and 500 images in the test set. The text in the images is small in size and mainly composed of English and digits. The text regions are annotated with rectangular boxes represented by four vertices. The dataset has complex backgrounds and significant variations in text scales. It is commonly used for evaluating text detection algorithms. In the experiments, this dataset was used for model fine-tuning and algorithm performance validation.

MSRA-TD500 [41]: This dataset was also designed for multi-oriented text detection. It includes 500 images, with 300 images in the training set and 200 images in the test set. The dataset consists of English and Chinese text, and the text instances are annotated at the line level using four-coordinate rectangles.

CTW1500 [42]: This dataset focuses on curved text in multiple languages. It consists of 1000 training images and 500 test images. The annotations are in the form of polygons with 14 points.

These datasets are widely used in the field of text detection and provide diverse challenges to evaluate the performance and effectiveness of the proposed PCBSNet model.

4.2. Experimental Settings

The model was trained on an NVIDIA GeForce RTX 3090 GPU. For the backbone network, BiSeNet V2 [19] was pretrained on SynthText [39] for 100,000 iterations. During fine-tuning, the batch size was set to 8, and the model was trained for 1200 epochs. The Adam optimizer [43] was used for parameter optimization with an initial learning rate of 0.007, weight decay of 0.0001, and momentum of 0.9. The learning rate was adjusted at each iteration using the formula $lr = lr_0 \times \left(1 - \frac{iter}{max\_iter}\right)^{power}$, where $lr_0$ is the initial learning rate, $iter$ is the current iteration, $power$ was set to 0.9, and $max\_iter$ is the maximum number of iterations. The training samples were resized to 640 × 640 pixels.
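The learning rate schedule described above can be sketched as a polynomial ("poly") decay. The snippet assumes the common form in which the initial rate is multiplied by one minus the training progress, raised to `power`; the function name and the total iteration count used in the demonstration are hypothetical.

```python
def poly_lr(initial_lr, iteration, max_iterations, power=0.9):
    """Polynomial decay: initial_lr * (1 - iteration / max_iterations) ** power."""
    return initial_lr * (1.0 - iteration / max_iterations) ** power

# Print the rate at a few points of a hypothetical 1000-iteration run
max_iter = 1000
for it in (0, 250, 500, 750, 1000):
    print(it, poly_lr(0.007, it, max_iter))
```

The rate starts at 0.007, decreases monotonically, and reaches zero at the final iteration.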

Data augmentation was applied during the training phase, including random rotation, changes in brightness and saturation, random flipping, and random cropping.

To account for different experimental environments, the ablation experiments provided the locally reproduced experimental data for the BiSeNet V2 [19] baseline model, while the parameter experiments provided the locally reproduced experimental data for the DBNet [9] baseline model.

4.3. Evaluation Indicators

To evaluate the detection performance of the model, four commonly used text detection evaluation metrics were employed in this paper. FPS represents the number of frames processed per second, indicating real-time capability. A higher FPS indicates better real-time performance. Recall (R) measures the proportion of valid ground truth data correctly detected and is calculated using Formula (31). Precision (P) measures the proportion of correctly detected text out of all detected text instances and is calculated using Formula (32). The F-measure combines Precision and Recall and is calculated using Formula (33).

$$R = \frac{TP}{TP + FN} \tag{31}$$

$$P = \frac{TP}{TP + FP} \tag{32}$$

$$F = \frac{2 \times P \times R}{P + R} \tag{33}$$

In the formulas, TP represents the total number of text regions correctly predicted by the model, FP represents the total number of incorrectly predicted text regions, and FN represents the total number of text regions that were not predicted.
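For illustration, the three accuracy metrics can be computed from the counts TP, FP, and FN as in the sketch below, which assumes the standard definitions of Precision, Recall, and the harmonic-mean F-measure. The function names and the example counts are hypothetical; the final lines check the F-measure against the Precision and Recall reported for the proposed model on ICDAR2015 (88.0% and 78.4%).

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f_measure) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f_measure = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f_measure

def f_measure_from_pr(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

# Hypothetical counts: 8 correct detections, 2 false alarms, 2 misses
p, r, f = detection_metrics(tp=8, fp=2, fn=2)
print(p, r, f)

# Consistency check with the reported ICDAR2015 Precision and Recall
f_icdar = f_measure_from_pr(88.0, 78.4)
print(round(f_icdar, 1))  # 82.9
```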

4.4. Ablation Experiments

To validate the effectiveness of the improvements in the ESEB, EAA module, and the overall model, we conducted extensive ablation experiments on the ICDAR2015 dataset. In the table, bold font indicates the best results for each metric. P, R, F, and FPS represent precision, recall, F-measure, and frames per second, respectively.

4.4.1. Efficient Semantic Extraction Branch Ablation Experiment

The ablation experiments for the ESEB involved the design of five comparative models on the ICDAR2015 dataset:

- (1) BVSE: Baseline model with the semantic extraction branch from BiSeNet V2 [19].
- (2) BVSE + W-C: ESEB with a wide range of channels.
- (3) BVSE + W-C + P: ESEB with wider channels and PConv.
- (4) BVSE + W-C + P + B-K: ESEB with wide channels, PConv, and large convolution kernels.
- (5) ESEB: ESEB with wide channels, PConv, large convolution kernels, and GELU activation function.

These models were designed to evaluate the effectiveness of the ESEB by gradually incorporating different enhancements to the semantic extraction branch.

The experimental results are shown in Table 2 and demonstrate the impact of different enhancements on the ESEB. Compared to the baseline model, BVSE + W-C achieved a 0.7% improvement in the F-measure. BVSE + W-C + P still improved on the baseline by 0.5% but was 0.2% lower than BVSE + W-C. BVSE + W-C + P + B-K, built upon BVSE + W-C + P, further improved the F-measure by 0.8%. When the ReLU activation function in BVSE + W-C + P + B-K was replaced with the GELU function, which is suitable for lightweight networks, the resulting ESEB showed improved convergence and achieved the best F-measure of 80.7%. These results indicate that the proposed ESEB effectively captures the semantic features of text in images while accelerating the inference speed of the model and enhancing its real-time performance. The decrease in the F-measure observed for BVSE + W-C + P can be attributed to the replacement of DWConv with PConv, which led to insufficient feature extraction.

Table 2.

Experimental results of Efficient Semantic Extraction Branch ablation.

4.4.2. Efficient Attention Aggregation Module Ablation Experiment

The experimental design for the ablation study on the EAA module included seven comparative models:

- (1) BVBGA: Baseline model with the bidirectional guided aggregation module of BiSeNet V2 [19].
- (2) BVBGA + S-T: EAA module with semantic feature embedding and TAF.
- (3) BVBGA + D-TC: EAA module with detail feature embedding and TCSA.
- (4) BVBGA + S-TC: EAA module with semantic feature embedding and TCSA.
- (5) BVBGA + D-T: EAA module with detail feature embedding and TAF.
- (6) BVBGA + P: EAA module that replaces DWConv with PConv.
- (7) EAA: EAA module with semantic feature embedding and TAF, detail feature embedding and TCSA, and replacement of DWConv with PConv.

These models were designed to evaluate the effectiveness of different enhancements in the EAA module.

The experimental results are shown in Table 3. BVBGA + S-T establishes channel relationships for semantic features, capturing key semantic information. Compared to the BVBGA baseline, it improved the F-measure by 1.2%. BVBGA + D-TC establishes channel and spatial relationships for detail features, capturing critical details. Compared to the BVBGA baseline, it improved the F-measure by 0.9%. BVBGA + S-TC adds spatial attention on top of BVBGA + S-T, which causes confusion in semantic encoding. As a result, the F-measure decreased by 0.3% compared to BVBGA + S-T. BVBGA + D-T removes spatial attention from BVBGA + D-TC, leading to inadequate capture of detail features. Compared to BVBGA + D-TC, the F-measure decreased by 0.3%. BVBGA + P replaces DWConv with PConv in the baseline model, significantly improving the speed but decreasing the F-measure slightly, by 0.2%. The EAA module incorporates the best-performing improvements: semantic feature embedding with TAF, detail feature embedding with TCSA, and the replacement of DWConv with PConv. It achieved an F-measure of 81.1%. Based on the experimental results, the proposed EAA module effectively aggregates detail information and semantic information, improving the robustness of the model.

Table 3.

Experimental results of efficient attention aggregation module ablation.

4.4.3. Overall Ablation Experiment

The experimental design for the overall ablation study included four comparative models:

- (1) BV: The baseline model based on BiSeNet V2 [19].
- (2) ESEB: A model where the original semantic branch was replaced with the ESEB.
- (3) ESEB + EAA: A model where the original semantic branch was replaced with the ESEB and the EAA module was added.
- (4) ESEB + EAA + FS: A model where the original semantic branch was replaced with the ESEB, and both the EAA module and the FE module were added.

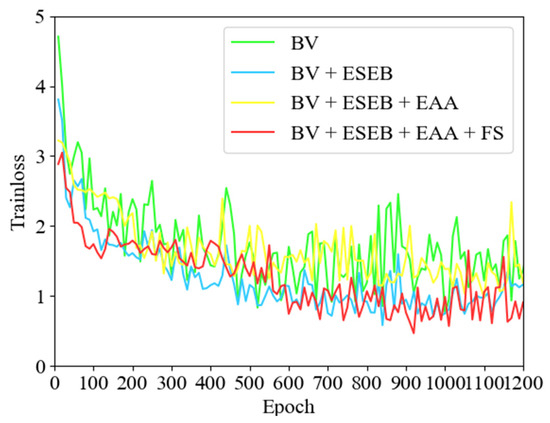

The experimental results are shown in Table 4. BV + ESEB replaces the original semantic branch with the ESEB, which improves the semantic extraction efficiency. It improved the F-measure of the baseline model by 1.6% while slightly increasing the inference speed (by 0.04). BV + ESEB + EAA further replaces the original BGA module with the EAA module. By efficiently integrating the features from the dual branches using attention mechanisms and PConv adaptive guidance, the F-measure improved by an additional 1.0%, while the inference speed slightly decreased. BV + ESEB + EAA + FS enhances the fused features to obtain more accurate feature representations. As a result, the F-measure further improved by 1.2%, reaching 82.9% in total.

Table 4.

Overall ablation experimental results.

Figure 9 shows the loss curves of the different models in the overall ablation experiment.

Figure 9.

Overall ablation experiment loss chart.

4.4.4. Parameter Experiment

The parameter experiment used three comparative models:

- (1) Res + FPN: Network model using ResNet18 + FPN as adopted by DBNet [9].
- (2) BV + BGA: Network model using Bilateral Network + BGA as adopted by BiSeNet V2 [19].
- (3) Ours: Network model proposed in this paper.

The experimental results are shown in Table 5. Compared to the traditional feature processing mode of ResNet18 + FPN, the dual-branch structure of BiSeNet V2 [19] had a parameter size of only 3.48 M, resulting in a significant improvement in inference speed. However, there was a slight decrease in the three evaluation metrics. The proposed model achieved the best values in all three evaluation metrics. It has 4.43 M more parameters than BiSeNet V2 [19] (7.91 M in total), which is still far fewer than ResNet18 + FPN.

Table 5.

Parameter experiment results.

Figure 10 displays the partial detection results and corresponding binary images of the proposed model on the three datasets.

Figure 10.

Partial detection result map and corresponding binary map.

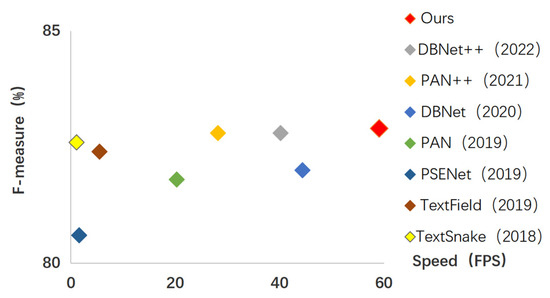

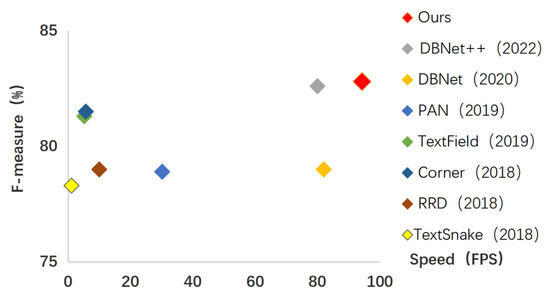

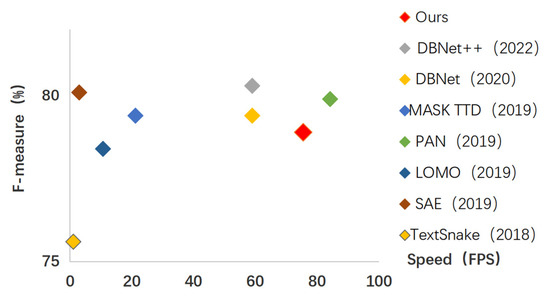

4.5. Comparative Experiment

To further validate the effectiveness of the proposed detection method, comparisons were made with recent mainstream lightweight methods on the ICDAR2015 [40], MSRA-TD500 [41], and CTW1500 [42] datasets. The segmentation-based detection methods all use ResNet18 as the backbone network without deformable convolutions. The comparative results on the three datasets are shown in Figure 11, Figure 12 and Figure 13. The experiments were conducted using the SynthText [39] dataset for pre-training, followed by 1200 epochs of fine-tuning. The detailed experimental results are presented in Table 6, Table 7 and Table 8.

Figure 11.

Comparison of mainstream methods on ICDAR2015.

Figure 12.

Comparison of mainstream methods on MSRA-TD500.

Figure 13.

Comparison of mainstream methods on CTW1500.

According to the experimental results shown in Table 6, the proposed PCBSNet benefits from its lightweight strategies and significantly improves the inference speed, achieving a state-of-the-art (SOTA) speed of 59.1 FPS on ICDAR2015, a dataset that contains small and low-resolution text instances. However, due to the abundance of small text instances in this dataset, our feature extraction capability was slightly inadequate, so some small text instances may be missed, and the model reached only the third-best Precision of 88.0%. Of the methods with the best Precision and the best Recall, DBNet++ [44] and PAN [22], respectively, the former has a complex model that overfits the training data, resulting in high Precision and low Recall, while the latter has a simple model that fails to capture complex data patterns and relationships, leading to more false positives and thus trading Precision for Recall. As a result, neither of these methods achieved SOTA results in terms of the F-measure. In contrast, our PCBSNet, with its effective fusion mechanism and feature enhancement, achieved a SOTA F-measure of 82.9%.

According to the experimental results shown in Table 7, our proposed PCBSNet achieves SOTA Precision, F-measure, and FPS when dealing with smaller datasets like MSRA-TD500, reaching 90.0%, 82.8%, and 94.3 frames per second, respectively. The MSRA-TD500 dataset contains larger text instances with high data quality and a balanced distribution of positive and negative samples, eliminating the need for deep convolutional networks like ResNet used by DBNet++ [44] or VGG used by Corner [45]. Our lightweight dual-branch approach effectively extracts semantic information and detailed information and combines them through attention fusion, resulting in accurate segmentation outcomes.

According to the experimental results shown in Table 8, PCBSNet falls short of SOTA results on curved text datasets like CTW1500, achieving only the second-best speed of 75.5 FPS and the third-best Precision of 83.8%. The reason for the high Precision but low Recall is that our lightweight PCBSNet lacks the feature representation capability required to adapt to the complexity and diversity of curved text, which typically necessitates more complex models. For example, LOMO [46] achieved the best Precision by employing ResNet for comprehensive feature extraction and FPN for multi-scale fusion, but it falls short in terms of Recall, while TextSnake [47] achieved the best Recall by treating curved text as a shape composed of continuous line segments for accurate segmentation but sacrifices Precision by incorrectly segmenting a large portion of non-curved text.


Based on the aforementioned experimental results, our proposed PCBSNet, with its lightweight backbone for real-time semantic segmentation, demonstrates outstanding inference speed compared to other methods, fulfilling the requirement for real-time applications. On the ICDAR2015 [40] and MSRA-TD500 [41] datasets, PCBSNet achieved accurate segmentation results for text of various orientations, scales, and arbitrary shapes, particularly for high-quality text. It also performed competitively on small text and low-contrast text. However, challenges still persist when dealing with curved text, as observed in the CTW1500 [42] dataset. Due to the adaptation to quadrilateral text annotations, the model struggles to accurately capture the boundary features of curved text, resulting in false detections. Through experimental analysis, PCBSNet exhibited good scalability when faced with horizontal text such as advertisements, book pages, and newspapers, as well as vertical text such as signs, banners, and store names. It also showed versatility in detecting text in multiple languages, including English and Chinese. Moreover, thanks to its excellent inference speed, PCBSNet was capable of handling text detection tasks in various real-time application scenarios where a quick response is crucial.

Table 6.

ICDAR2015 comparative experimental results.

| Model | P (%) | R (%) | F (%) | FPS | Params |
|---|---|---|---|---|---|
| TextSnake [47] | 84.9 | 80.4 | 82.6 | 1.1 | 19.1 M |
| TextField [48] | 84.3 | 80.5 | 82.4 | 5.5 | 17 M+ |
| PSENet [23] | 88.2 | 74.2 | 80.6 | 1.6 | 28.6 M |
| PAN [22] | 79.3 | 84.0 | 81.8 | 20.3 | 14 M+ |
| DBNet [9] | 86.8 | 77.7 | 82.0 | 44.4 | 11.4 M |
| PAN++ [49] | 85.9 | 79.9 | 82.8 | 28.2 | - |
| DBNet++ [44] | 90.1 | 75.1 | 82.8 | 40.2 | 12.4 M |
| Ours | 88.0 | 78.4 | 82.9 | 59.1 | 7.91 M |

Table 7.

MSRA-TD500 comparative experimental results.

| Model | P (%) | R (%) | F (%) | FPS | Params |
|---|---|---|---|---|---|
| TextField [48] | 87.4 | 75.9 | 81.3 | 5.2 | 32.7 M |
| TextSnake [47] | 83.2 | 73.9 | 78.3 | 1.1 | 19.1 M |
| RRD [50] | 87.0 | 73.0 | 79.0 | 10.0 | - |
| Corner [45] | 87.6 | 76.2 | 81.5 | 5.7 | 45.2 M |
| PAN [22] | 80.7 | 77.3 | 78.9 | 30.2 | 14 M+ |
| DBNet [9] | 85.7 | 73.2 | 79.0 | 82.0 | 11.4 M |
| DBNet++ [44] | 89.7 | 76.5 | 82.6 | 80.0 | 12.4 M |
| Ours | 90.0 | 76.7 | 82.8 | 94.3 | 7.91 M |

Table 8.

CTW1500 comparative experimental results.

| Model | P (%) | R (%) | F (%) | FPS | Params |
|---|---|---|---|---|---|
| TextSnake [47] | 67.9 | 85.3 | 75.6 | 1.1 | 19.1 M |
| SAE [51] | 82.7 | 77.8 | 80.1 | 3 | 27.8 M |
| MASK TTD [52] | 79.7 | 79.0 | 79.4 | - | 28 M+ |
| PAN [22] | 82.7 | 77.4 | 79.9 | 84.2 | 14 M+ |
| LOMO [46] | 89.2 | 69.9 | 78.4 | - | - |
| DBNet [9] | 82.4 | 76.6 | 79.4 | 59 | 11.4 M |
| DBNet++ [44] | 84.8 | 76.3 | 80.3 | 59 | 12.4 M |
| Ours | 83.8 | 74.5 | 78.9 | 75.5 | 7.91 M |

5. Conclusions

To address the real-time challenges of text detection in natural scenes, we proposed an efficient and accurate real-time scene text detection model based on a pure convolutional bilateral segmentation network. The model significantly improves feature extraction efficiency by constructing a lightweight dual-branch architecture and then efficiently and adaptively fuses the dual-branch output features through an efficient attention aggregation module. Additionally, a coordinate attention mechanism was introduced after feature fusion to construct a feature enhancement module, alleviating the distortion that arises in the fusion process. A series of experiments conducted on the ICDAR2015, MSRA-TD500, and CTW1500 datasets demonstrated that the proposed PCBSNet achieves a good balance between accuracy and real-time performance. However, like most methods, PCBSNet still has room for improvement when dealing with curved text.

In the future, we will improve the fast downsampling strategy used in the low-level detail branch to find more convenient ways to capture low-level information and further enhance the real-time performance of the model. We will also explore whether expanding the number of channels in the PCB blocks sixfold is the optimal structure, and conduct experiments to find the best structure for improving scene text detection performance.

Author Contributions

Conceptualization, Q.X. and M.Z.; methodology, Y.Y.; software, Z.L.; validation, Z.L. and Y.Y.; formal analysis, M.Z.; investigation, Q.X.; resources, Y.Y.; data curation, Z.L.; writing—original draft preparation, Z.L.; writing—review and editing, Y.Y.; visualization, Z.L.; supervision, M.Z.; project administration, Q.X.; funding acquisition, Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Inner Mongolia, Grant Number 2021LHMS06009, Research Science Institute of Colleges and Universities in Inner Mongolia, Grant Number NJZZ21004, and the Natural Science Foundation of Inner Mongolia, Grant Number 2021MS06031.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An automated driving systems data acquisition and analytics platform. Transp. Res. Part C Emerg. Technol. 2023, 151, 104120.

2. Meng, Z.; Xia, X.; Xu, R.; Liu, W.; Ma, J. HYDRO-3D: Hybrid Object Detection and Tracking for Cooperative Perception Using 3D LiDAR. IEEE Trans. Intell. Veh. 2023.

3. Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting tassels in RGB UAV imagery with improved YOLOv5 based on transfer learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

4. Shi, H.; Zhao, D. License Plate Recognition System Based on Improved YOLOv5 and GRU. IEEE Access 2023, 11, 10429–10439.

5. Yin, X.; Zuo, Z.; Tian, S.; Liu, C. Text detection, tracking and recognition in video: A comprehensive survey. IEEE Trans. Image Process. 2016, 25, 2752–2773.

6. Zhu, Y.; Liao, M.; Yang, M.; Liu, W. Cascaded segmentation-detection networks for text-based traffic sign detection. IEEE Trans. Intell. Transp. Syst. 2018, 19, 209–219.

7. He, T.; Tian, Z.; Huang, W.; Shen, C.; Qiao, Y.; Sun, C. An end-to-end textspotter with explicit alignment and attention. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5020–5029.

8. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. Scrdet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8231–8240.

9. Liao, M.; Wan, Z.; Yao, C.; Chen, K.; Bai, X. Real-time scene text detection with differentiable binarization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11474–11481.

10. Baek, Y.; Lee, B.; Han, D.; Yun, S.; Lee, H. Character Region Awareness for Text Detection; IEEE: Piscataway, NJ, USA, 2019.

11. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

12. Li, Y.; Yu, Y.; Li, Z.; Lin, Y.; Xu, M.; Li, J.; Zhou, X. Pixel-Anchor: A Fast Oriented Scene Text Detector with Combined Networks. arXiv 2018, arXiv:1811.07432.

13. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

14. Liu, Y.; Chen, H.; Shen, C.; He, T.; Jin, L.; Wang, L. ABCNet: Real-Time Scene Text Spotting with Adaptive Bezier-Curve Network. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020.

15. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

16. Ye, J.; Chen, Z.; Liu, J.; Du, B. TextFuseNet: Scene Text Detection with Richer Fused Features. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence and Seventeenth Pacific Rim International Conference on Artificial Intelligence IJCAI-PRICAI-20, Yokohama, Japan, 7–15 January 2021.

17. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

18. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

19. Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

20. Wu, Y.; Wang, G.; Wang, Z.; Wang, H.; Li, Y. Triplet attention fusion module: A concise and efficient channel attention module for medical image segmentation. Biomed. Signal Process. Control 2023, 82, 104515.

21. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13713–13722.

22. Wang, W.; Xie, E.; Song, X.; Zang, Y.; Wang, W.; Lu, T.; Yu, G.; Shen, C. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8440–8449.

23. Wang, W.; Xie, E.; Li, X.; Hou, W.; Lu, T.; Yu, G.; Shao, S. Shape robust text detection with progressive scale expansion network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9336–9345.

24. Zhu, Y.; Chen, J.; Liang, L.; Kuang, Z.; Jin, L.; Zhang, W. Fourier contour embedding for arbitrary-shaped text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual Conference, 19–25 June 2021; pp. 3123–3131.

25. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

26. Hu, Z.; Chen, J.; Luo, Y.; Zhang, Y. STDC-SLAM: A Real-Time Semantic SLAM Detect Object by Short-Term Dense Concatenate Network. IEEE Access 2022, 10, 129419–129428.

27. Tsai, T.H.; Tseng, Y.W. BiSeNet V3: Bilateral segmentation network with coordinate attention for real-time semantic segmentation. Neurocomputing 2023, 532, 33–42.

28. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

29. Rao, Y.; Zhao, W.; Zhu, Z.; Lu, J.; Zhou, J. Global Filter Networks for Image Classification. Adv. Neural Inf. Process. Syst. 2021, 34, 980–993.

30. Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling Up Your Kernels to 31x31: Revisiting Large Kernel Design in CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

31. Rao, Y.; Zhao, W.; Tang, Y.; Zhou, J.; Lim, S.-N.; Lu, J. Hornet: Efficient high-order spatial interactions with recursive gated convolutions. arXiv 2022, arXiv:2207.14284.

32. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual Conference, 11–17 October 2021; pp. 10012–10022.

33. Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. Convnext v2: Co-designing and scaling convnets with masked autoencoders. arXiv 2023, arXiv:2301.00808.

34. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

35. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

36. Guo, M.-H.; Lu, C.-Z.; Liu, Z.-N.; Cheng, M.-M.; Hu, S.-M. Visual attention network. arXiv 2022, arXiv:2202.09741.

37. Chen, J.; Kao, S.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. arXiv 2023, arXiv:2303.03667.

38. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

39. Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic Data for Text Localisation in Natural Images. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: Piscataway, NJ, USA, 2016.

40. Karatzas, D.; Gomez-Bigorda, L.; Nicolaou, A.; Ghosh, S.; Bagdanov, A.; Iwamura, M.; Matas, J.; Neumann, L.; Chandrasekhar, V.; Lu, S.; et al. ICDAR 2015 competition on robust reading. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1156–1160.

41. Yao, C.; Bai, X.; Liu, W.; Ma, Y.; Tu, Z. Detecting texts of arbitrary orientations in natural images. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1083–1090.

42. Liu, Y.; Jin, L.; Zhang, S.; Luo, C.; Zhang, S. Curved scene text detection via transverse and longitudinal sequence connection. Pattern Recognit. 2019, 90, 337–345.

43. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

44. Liao, M.; Zou, Z.; Wan, Z.; Yao, C.; Bai, X. Real-time scene text detection with differentiable binarization and adaptive scale fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 919–931.

45. Lyu, P.; Yao, C.; Wu, W.; Yan, S.; Bai, X. Multi-oriented scene text detection via corner localization and region segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7553–7563.

46. Zhang, C.; Liang, B.; Huang, Z.; En, M.; Han, J.; Ding, E.; Ding, X. Look more than once: An accurate detector for text of arbitrary shapes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

47. Long, S.; Ruan, J.; Zhang, W.; He, X.; Wu, W.; Yao, C. Textsnake: A flexible representation for detecting text of arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2018; pp. 20–36.

48. Xu, Y.; Wang, Y.; Zhou, W.; Wang, Y.; Yang, Z.; Bai, X. Textfield: Learning a deep direction field for irregular scene text detection. IEEE Trans. Image Process. 2019, 28, 5566–5579.

49. Wang, W.; Xie, E.; Li, X.; Liu, X.; Liang, D.; Yang, Z.; Lu, T.; Shen, C. Pan++: Towards efficient and accurate end-to-end spotting of arbitrarily-shaped text. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5349–5367.

50. Liao, M.; Zhu, Z.; Shi, B.; Xia, G.-S.; Bai, X. Rotation-sensitive regression for oriented scene text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

51. Tian, Z.; Shu, M.; Lyu, P.; Li, R.; Zhou, C.; Shen, X.; Jia, J. Learning shape-aware embedding for scene text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4234–4243.

52. Liu, Y.; Jin, L.; Fang, C. Arbitrarily shaped scene text detection with a mask tightness text detector. IEEE Trans. Image Process. 2019, 29, 2918–2930.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).