Abstract

Recent advances in artificial intelligence have led to significant improvements in object detection. Researchers have focused on improving object detection in challenging environments because of its potential for practical applications. Deep learning has been successful in image classification and target detection and has a wide range of applications, including vehicle detection. However, object detection models trained on high-quality images often perform poorly under adverse weather conditions such as fog and rain. In this paper, we propose an improved vehicle detection method that combines weather classification, de-hazing with the dark channel prior (DCP), and a Faster R-CNN detector. The proposed method first classifies the weather in the image, preprocesses the image with DCP according to the classification result, and then performs vehicle detection on the preprocessed image using Faster R-CNN. Experiments on images captured in various weather conditions demonstrate the effectiveness of the proposed method.

1. Introduction

Although numerous target detection methods have been developed for vehicle detection in real-world scenarios, detecting vehicles under adverse weather conditions such as rain, snow, and fog remains a significant challenge. These conditions degrade the visibility of vehicles, making it difficult for detection systems to identify them accurately. In foggy weather, for example, visibility is greatly reduced, which impairs the system's ability to identify vehicles reliably. As a result, detection algorithms can perform poorly, which poses safety risks, particularly in fog.

To address these challenges, it is important to incorporate techniques that improve the accuracy of vehicle detection under difficult conditions. In this paper, we present a convolutional neural network (CNN)-based model that first classifies the weather in an image. Recognizing the weather allows the system to adapt its processing to different conditions. Images classified as severe weather, such as fog or low light, are then preprocessed with the dark channel prior (DCP) [1] to remove haze and restore contrast, and the remaining noise is reduced with BM3D (Section 3.2). This preprocessing step can improve the visibility and clarity of the image, making it easier for the detection system to identify vehicles accurately.

After preprocessing, an improved Faster R-CNN [2] model performs vehicle detection on the preprocessed images. Faster R-CNN is a successor of R-CNN (Region-based Convolutional Neural Network) that is considerably faster and more efficient, which has made it a popular detector for near-real-time applications. We further modify Faster R-CNN to improve its performance on small targets. By combining this improved Faster R-CNN with weather classification and image preprocessing, we aim to improve the accuracy and reliability of vehicle detection under difficult weather conditions.

The main contributions of this study are as follows:

- We propose a method to improve vehicle recognition in adverse weather conditions by introducing weather classification and a de-hazing module;

- We propose a modified Faster R-CNN for improved small object detection;

- Through experiments, we show that the proposed vehicle detection model can improve the performance of vehicle detection.

2. Related Work

Deep learning techniques have been successful in various weather classification and recognition tasks, and some studies indicate that they outperform traditional machine learning methods [3,4,5,6]. Architectures such as ResNet and GoogLeNet have been used in this context, typically by training them on weather datasets and applying the trained models to recognize the weather in images. In some studies, existing architectures were modified before training; for example, an AlexNet-based model for recognizing sunny and foggy weather achieved a normalized accuracy of 85% [7]. Other studies have addressed the classification of more weather categories [8], for example using GoogLeNet on a dataset containing four weather types, or using a region selection and concurrency model to classify six weather categories. These approaches demonstrate the potential of deep learning for weather classification and recognition, but also highlight the need for further research in this area.

The physical-model recovery approach to image defogging models the degradation process of foggy images and reverses it to recover a clear image [9,10,11]. This approach tends to produce more natural defogged images with minimal loss of information. Examples include the dark channel prior (DCP) method and the DehazeNet image recovery method. The DCP method, proposed by Kaiming He et al. [12], is based on the observation that in most local patches of haze-free outdoor images, at least one color channel contains some pixels with very low intensity. Combining this prior with the haze imaging model, the thickness of the haze can be estimated and used to remove the haze from the input image and recover a high-quality, clear image. The dark channel of an image is obtained by taking, for each pixel, the minimum intensity over the three color channels and then the minimum over a local patch centered on that pixel. In haze-free images, the low intensities in the dark channel are mainly caused by shadows, colorful objects or surfaces, and dark objects or surfaces.
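The dark channel definition above can be sketched in NumPy as follows. This is a minimal illustration, assuming an RGB image stored as a float array in [0, 1] with shape (H, W, 3); the function name `dark_channel`, the edge-replicating border handling, and the 15 × 15 default patch (the size used in He et al.'s paper) are our choices, not necessarily the settings used in this work.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image: np.ndarray, patch: int = 15) -> np.ndarray:
    """Dark channel of an (H, W, 3) float image.

    Step 1: per-pixel minimum over the R, G, B channels.
    Step 2: minimum filter over a patch x patch window centred on each pixel.
    """
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    per_pixel_min = image.min(axis=2)                   # min over color channels
    r = patch // 2
    padded = np.pad(per_pixel_min, r, mode="edge")      # replicate image borders
    windows = sliding_window_view(padded, (patch, patch))  # shape (H, W, patch, patch)
    return windows.min(axis=(-2, -1))                   # min over the local patch


# A clear-looking image whose blue channel is close to zero, and the same image
# after uniform haze (scattering model with t = 0.6 and airlight A = 0.9).
rng = np.random.default_rng(0)
clear = rng.uniform(0.2, 1.0, size=(32, 32, 3))
clear[..., 2] *= 0.05
hazy = 0.6 * clear + 0.4 * 0.9
print(dark_channel(clear).mean(), dark_channel(hazy).mean())  # haze lifts the dark channel
```

The contrast between the two printed means is the prior in action: the dark channel stays near zero for the clear image and rises toward the airlight contribution once haze is added.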

Faster R-CNN introduced the region proposal network (RPN) and integrated feature extraction, candidate box extraction, bounding box regression, and classification into a single network, resulting in significant improvements in overall performance [13]. It is designed to efficiently identify and classify objects within an image while also accurately determining their positions. The model takes an image as input and uses a CNN (in the original design, the VGG16 network) to extract features and create a feature map. The RPN is then applied to the feature map to generate a set of candidate regions, or regions of interest (ROIs), which are projected onto the last feature map of the CNN. An ROI pooling layer converts each ROI into a fixed-size feature map, which is passed through two fully connected layers; the result is classified with a softmax classifier, and the box is refined by a bounding-box regressor trained with the Smooth L1 loss. Faster R-CNN can process images of arbitrary size and has demonstrated good performance in object detection tasks.
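The ROI pooling step, which turns regions of different sizes into fixed-size inputs for the fully connected layers, can be sketched as below. This is a simplified single-ROI illustration with integer feature-map coordinates and max pooling over a grid of bins; the function name `roi_max_pool` and the 7 × 7 default output (the size used with VGG16 in the original Fast/Faster R-CNN) are assumptions for illustration. Real implementations also batch many ROIs, map image coordinates to the feature map through the backbone stride, and often use bilinear RoIAlign instead.

```python
import numpy as np


def roi_max_pool(feature_map: np.ndarray, roi, output_size=(7, 7)) -> np.ndarray:
    """Max-pool one region of interest to a fixed output size.

    feature_map: array of shape (C, H, W).
    roi: (x1, y1, x2, y2) in feature-map coordinates, inclusive integers.
    Returns an array of shape (C, out_h, out_w) regardless of the ROI size.
    """
    x1, y1, x2, y2 = roi
    region = feature_map[:, y1:y2 + 1, x1:x2 + 1]
    rh, rw = region.shape[1:]
    ph, pw = output_size
    out = np.empty((feature_map.shape[0], ph, pw), dtype=feature_map.dtype)
    for i in range(ph):
        # Bin boundaries: floor for the start, ceil for the end; every bin
        # covers at least one cell even when the ROI is smaller than the grid.
        h0 = int(np.floor(i * rh / ph))
        h1 = max(int(np.ceil((i + 1) * rh / ph)), h0 + 1)
        for j in range(pw):
            w0 = int(np.floor(j * rw / pw))
            w1 = max(int(np.ceil((j + 1) * rw / pw)), w0 + 1)
            out[:, i, j] = region[:, h0:h1, w0:w1].max(axis=(1, 2))
    return out


fmap = np.arange(16, dtype=float).reshape(1, 4, 4)
print(roi_max_pool(fmap, (0, 0, 3, 3), (2, 2))[0])  # max of each 2 x 2 quadrant: 5, 7, 13, 15
```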

3. Proposed Method

In practical applications, various kinds of interference can degrade the quality of images captured for vehicle detection. A common source is adverse weather, such as haze and rain, which can significantly reduce the clarity and information content of the images. Haze is a particular problem because it obscures object information and makes it difficult to detect targets in the images.

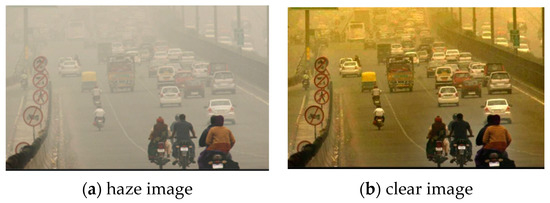

Image de-hazing techniques can be used to preprocess the captured images to improve their quality and restore information, as shown in Figure 1a,b.

Figure 1.

An example of (a) original haze image, and (b) de-hazed image.

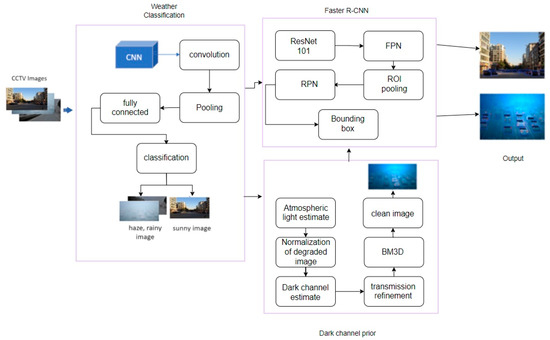

These techniques are particularly valuable for recovering the information contained in hazy images and for achieving accurate object detection. The proposed vehicle detection method is illustrated in Figure 2. Weather classification is first performed to determine whether de-hazing preprocessing is required. If the image is classified as hazy, it is de-hazed using DCP. The improved Faster R-CNN model is then applied to the de-hazed image for vehicle detection. DCP preprocessing and the improved Faster R-CNN model are intended to improve the recognition accuracy of the system; their effect is evaluated in Section 4.

Figure 2.

Overall structure of proposed method.
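The control flow of Figure 2 can be summarized in a few lines. In this sketch every stage is passed in as a hypothetical callable (`classify_weather`, `dehaze`, `denoise`, `detector` are placeholder names, not the authors' code), and we assume that only the "foggy" label of the four-class weather dataset triggers preprocessing.

```python
from typing import Any, Callable, Iterable

# Assumption: of the dataset's classes (sunny, rainy, snowy, foggy),
# only "foggy" is routed through de-hazing.
HAZE_LABELS = frozenset({"foggy"})


def detect_vehicles(
    image: Any,
    classify_weather: Callable[[Any], str],  # hypothetical VGG16 weather classifier
    dehaze: Callable[[Any], Any],            # hypothetical DCP de-hazing step
    denoise: Callable[[Any], Any],           # hypothetical BM3D denoising step
    detector: Callable[[Any], Any],          # hypothetical improved Faster R-CNN
    haze_labels: Iterable[str] = HAZE_LABELS,
) -> Any:
    """Classify the weather, preprocess hazy images only, then detect."""
    label = classify_weather(image)
    if label in set(haze_labels):
        image = denoise(dehaze(image))       # DCP first, then BM3D
    return detector(image)


# Toy stand-ins that record which stages ran.
result = detect_vehicles("frame", lambda im: "foggy",
                         lambda im: im + "+dcp", lambda im: im + "+bm3d",
                         lambda im: [im])
print(result)  # ['frame+dcp+bm3d']
```

Injecting the stages as callables keeps the routing logic independent of any particular classifier, de-hazing, or detector implementation.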

3.1. Weather Classification

Section 2 reviewed methods for weather image recognition, including traditional machine learning approaches and more recent deep learning techniques. Deep learning in particular has shown promising results for weather classification and recognition, with some studies demonstrating its superiority to traditional methods. However, our review of existing weather image datasets found that they are rarely relevant to traffic scenarios and cover only a limited number of weather categories. To address these issues, we created a weather dataset that includes images of sunny, rainy, snowy, and foggy weather. We selected 3000 images from the COCO dataset and added 2000 images that were collected with a web crawler from online repositories such as the Baidu Image Library and then augmented.

To train and build the weather image recognition model, we implemented a convolutional neural network (CNN) based on the VGG16 model using the TensorFlow Keras library. The model was developed in PyCharm, and a PyQt5 window was used to display the classification result for each image. If the image was classified as good weather, it was passed directly to Faster R-CNN for vehicle detection. If the image contained haze, it was first preprocessed with the dark channel prior method to improve its clarity and then passed to Faster R-CNN for vehicle detection.

3.2. DCP-Based De-Hazing Algorithm

Particulate matter in the atmosphere, such as haze and rain, scatters light and blurs the captured image. This can significantly reduce the accuracy of object detection and classification algorithms. To mitigate these effects, noise reduction and de-hazing techniques can be applied to remove the influence of particulate matter and improve overall image quality. A key component of these techniques is physical-model-based restoration, which is grounded in the principles of haze formation and uses a physical degradation model to estimate how each factor contributes to image blur. Applying such a model can restore image clarity and improve the performance of recognition algorithms in detecting and classifying objects.

The dark channel prior (DCP) algorithm removes haze from images based on the theory of atmospheric scattering. The algorithm, proposed by Kaiming He et al., computes the dark channel image by minimum filtering and then uses the refined transmittance and the estimated atmospheric light to recover the clear image. The atmospheric scattering model is

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

In Equation (1), x denotes the pixel coordinates, J(x) is the haze-free (clear) image, I(x) is the hazy image captured by the camera, t(x) ∈ [0, 1] is the atmospheric transmittance, and A is the global atmospheric light. The model describes how light is scattered by particulate matter in the atmosphere: the captured image is the sum of the direct attenuation term J(x)t(x), the part of the scene light that reaches the camera without being scattered, and the airlight term A(1 − t(x)), the atmospheric light scattered toward the camera. Because t(x) varies from pixel to pixel, the model captures how scattered light degrades each part of the image to a different degree.

To de-haze an image, that is, to recover the clear image J(x) from the hazy image I(x), the atmospheric transmittance t(x) must be estimated. When the atmosphere is homogeneous, t(x) decays exponentially with the distance d(x) between the object and the observer, at a rate given by the atmospheric scattering coefficient β, as in Equation (2):

$$t(x) = e^{-\beta d(x)} \tag{2}$$
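Equations (1) and (2) together describe a forward model that turns a clear image and a depth map into a hazy image; the same model is commonly used to synthesize hazy training images. The sketch below is an illustration only: the function names, β = 0.5, the depth values, and the gray airlight are arbitrary assumptions.

```python
import numpy as np


def transmission_from_depth(depth: np.ndarray, beta: float) -> np.ndarray:
    """Eq. (2): t(x) = exp(-beta * d(x)) for a homogeneous atmosphere."""
    return np.exp(-beta * depth)


def add_haze(clear: np.ndarray, depth: np.ndarray, beta: float,
             airlight: np.ndarray) -> np.ndarray:
    """Eq. (1): I(x) = J(x) t(x) + A (1 - t(x)).

    clear:    (H, W, 3) haze-free image J with values in [0, 1]
    depth:    (H, W) scene depth d(x)
    airlight: (3,) global atmospheric light A
    """
    t = transmission_from_depth(depth, beta)[..., None]  # broadcast over channels
    return clear * t + airlight * (1.0 - t)


J = np.full((2, 2, 3), 0.2)
d = np.array([[0.0, 1.0], [5.0, 50.0]])
I = add_haze(J, d, beta=0.5, airlight=np.array([0.9, 0.9, 0.9]))
print(I[..., 0])
```

At zero depth the pixel keeps its clear value (0.2); as depth grows, t(x) shrinks and the pixel converges to the airlight (0.9), which is why distant vehicles are the hardest to see in fog.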

DCP first estimates t(x) from the dark channel of the hazy image normalized by the atmospheric light (the per-pixel minimum over the color channels, further minimized over a local patch) and then recovers J(x) by inverting Equation (1). This approach has been shown to be effective in improving the clarity of hazy images and is widely used in image processing applications. However, its performance depends on how well the prior holds for a given scene (it can fail on bright regions, such as white objects, whose color resembles the atmospheric light) and on the accuracy of the transmittance and atmospheric light estimates. There is therefore room to improve and optimize the DCP algorithm for better de-hazing performance.
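A compact sketch of the DCP recovery step follows, assuming a float RGB image in [0, 1]. It uses the constants reported by He et al. (ω = 0.95 to keep a little haze for depth perception, lower bound t0 = 0.1 on the transmittance) but omits their transmission refinement (soft matting or guided filtering). The atmospheric light is estimated from the brightest 0.1% of dark-channel pixels; He et al. take the brightest input pixel among these, and averaging them, as done here, is a common simplification. The function names are ours, and this is not the modified DCP used in this work, whose details are not given.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _min_filter(x: np.ndarray, patch: int) -> np.ndarray:
    """Minimum over a patch x patch window with edge-replicated borders."""
    r = patch // 2
    return sliding_window_view(np.pad(x, r, mode="edge"), (patch, patch)).min(axis=(-2, -1))


def estimate_airlight(image: np.ndarray, patch: int = 15, top_fraction: float = 0.001) -> np.ndarray:
    """Average input color over the brightest top_fraction of dark-channel pixels."""
    dark = _min_filter(image.min(axis=2), patch)
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    return image.reshape(-1, 3)[idx].mean(axis=0)


def dehaze_dcp(image: np.ndarray, patch: int = 15, omega: float = 0.95,
               t0: float = 0.1, airlight=None) -> np.ndarray:
    """Recover J by inverting Eq. (1): J = (I - A) / max(t, t0) + A."""
    A = estimate_airlight(image, patch) if airlight is None else np.asarray(airlight, float)
    t = 1.0 - omega * _min_filter((image / A).min(axis=2), patch)  # coarse transmission
    t = np.maximum(t, t0)[..., None]                                # avoid division blow-up
    return np.clip((image - A) / t + A, 0.0, 1.0)


# Synthetic check: a scene obeying the prior (blue channel = 0), hazed with
# uniform t = 0.6 and known A; with omega = 1 the recovery is exact.
rng = np.random.default_rng(1)
J = rng.uniform(0.0, 1.0, size=(20, 20, 3))
J[..., 2] = 0.0
A = np.array([0.9, 0.9, 0.9])
I = J * 0.6 + A * 0.4
recovered = dehaze_dcp(I, omega=1.0, airlight=A)
print(np.abs(recovered - J).max())
```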

Noise reduction is an important aspect of image processing, because noise can significantly degrade captured images, particularly in low-light conditions. One classical and powerful noise reduction algorithm is block-matching and 3D filtering (BM3D) [14], which is particularly effective at removing additive white Gaussian noise (AWGN). "Additive" means that the noise is added to the original signal (y = x + n); "Gaussian" means that the noise amplitude follows a Gaussian distribution; and "white" means that the noise has a flat (constant) power spectral density across all frequencies.
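The three properties of AWGN can be checked numerically. The sketch below (function name and σ = 0.1 are illustrative assumptions) adds zero-mean Gaussian noise to an image and compares the average power in a low-frequency and a high-frequency band of the 2D spectrum; for white noise the ratio is close to 1.

```python
import numpy as np


def add_awgn(image: np.ndarray, sigma: float, seed: int = 0) -> np.ndarray:
    """Additive white Gaussian noise: y = x + n, n ~ N(0, sigma^2) i.i.d. per pixel."""
    rng = np.random.default_rng(seed)
    return image + rng.normal(0.0, sigma, size=image.shape)


noise = add_awgn(np.zeros((256, 256)), sigma=0.1)   # pure noise: x = 0
power = np.abs(np.fft.fft2(noise)) ** 2             # power spectrum
low = power[1:65, 1:65].mean()                      # low-frequency band
high = power[64:128, 64:128].mean()                 # high-frequency band
print(noise.mean(), noise.std(), low / high)        # ~0, ~0.1, ~1 (flat spectrum)
```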

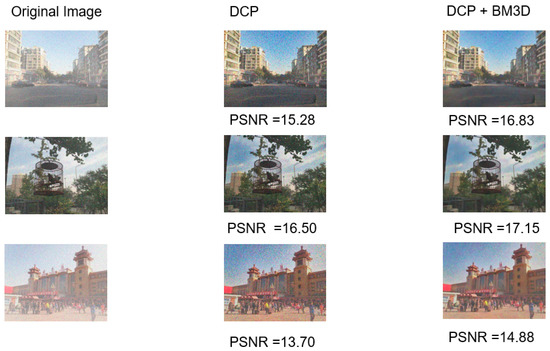

In this study, we used the BM3D denoising algorithm to enhance the clarity of the de-hazed image obtained with DCP. BM3D reduces noise in the image so that the processed image more accurately represents the original scene, which can improve the performance of subsequent object detection. We evaluated the denoising with the peak signal-to-noise ratio (PSNR), which is computed from the mean squared difference in pixel intensity between the original and denoised images and expressed in decibels; a higher PSNR indicates a closer match.
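For reference, PSNR is 10·log10(MAX² / MSE), where MAX is the largest possible pixel value (1.0 for float images in [0, 1], 255 for 8-bit images). A minimal NumPy sketch, with `psnr` as an illustrative name:

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


ref = np.full((4, 4), 0.5)
print(psnr(ref, ref + 0.1))  # a uniform error of 0.1 on a [0, 1] scale gives about 20 dB
```

Because PSNR depends only on the mean squared error, it rewards pixel-level fidelity but does not directly measure perceived contrast, so it is best read alongside visual comparisons such as Figure 3.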

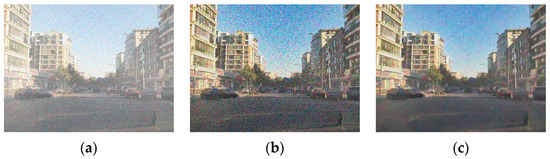

Figure 3 shows an example of preprocessing a hazy image with DCP and BM3D. We modified the DCP method and combined it with the BM3D denoising algorithm to improve the clarity of hazy images. The modified DCP method was effective in recovering color and contrast in hazy images, and adding BM3D further improved the overall quality of the processed images. This modified DCP method is particularly useful for top-view images, such as those commonly used in vehicle detection tasks.

Figure 3.

An example of preprocessing haze image using DCP and BM3D: (a) Original image, (b) preprocessed by DCP, (c) preprocessed by DCP + BM3D.

3.3. Improved Faster R-CNN-Based Vehicle Detection

In the field of vehicle detection, it is common for vehicle targets to be characterized by low pixel counts and high levels of background complexity. These factors can lead to challenges in accurately detecting and classifying vehicle targets using the Faster R-CNN network model, including false detections and missed detections. False detections may occur due to insufficient depth in the feature extraction process, leading to incorrect classification of the target. On the other hand, missed detections may be caused by a lack of breadth in the feature extraction process. To address these issues, this study presents an improved version of the Faster R-CNN network model.

The original Faster R-CNN uses the VGG16 network for feature extraction; we replaced it with the ResNet101 [15] network to increase the depth of target feature extraction and improve the accuracy of target classification. Another purpose of using ResNet101 was to reduce the number of computations and parameters, thereby decreasing model computation time. In addition, the residual (identity shortcut) connections of ResNet101 mitigate the vanishing gradient problem.
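Why residual connections help can be illustrated with a toy, fully connected residual unit y = ReLU(x + F(x)). ResNet101's actual blocks are convolutional "bottleneck" units (1×1, 3×3, and 1×1 convolutions with batch normalization); the sketch below, with illustrative names and random small weights, only shows the core idea: with an identity shortcut, a deep stack of blocks with near-zero weights still passes the signal (and therefore the gradient) through, whereas the same stack without shortcuts collapses toward zero.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """y = ReLU(x + F(x)) with F(x) = W2 ReLU(W1 x).

    Before the final ReLU, dy/dx = I + dF/dx: the identity term lets
    gradients reach earlier layers even when F's weights are small.
    """
    return relu(x + w2 @ relu(w1 @ x))


rng = np.random.default_rng(0)
x = np.abs(rng.normal(size=8))
weights = [(0.01 * rng.normal(size=(8, 8)), 0.01 * rng.normal(size=(8, 8)))
           for _ in range(50)]

res, plain = x.copy(), x.copy()
for w1, w2 in weights:
    res = residual_block(res, w1, w2)      # with identity shortcut
    plain = relu(w2 @ relu(w1 @ plain))    # same layers, no shortcut
print(np.linalg.norm(res) / np.linalg.norm(x), np.linalg.norm(plain))
```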

The final feature map produced by the VGG16 network has low spatial resolution, which limits the geometric information available for target detection. Shallow layers, in contrast, retain more geometric information but lack sufficient semantic features for classification. This is especially problematic for small target detection. To address these issues, we implemented a feature pyramid network (FPN) [16] so that the details of small objects are adequately captured. Targets of different sizes in the image have different features, so we used the feature pyramid to handle simple targets with shallow features and complex targets with deep features; in other words, large feature maps are used for simple targets and small feature maps for complex targets. The VGG16 and ResNet network structures are compared in Table 1.
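In the original FPN paper, each region proposal is assigned to a pyramid level by its size with k = ⌊k0 + log2(√(wh)/224)⌋, where k0 = 4 and k is clamped to the available levels P2 to P5 (strides 4 to 32). Smaller regions therefore read from larger, higher-resolution maps. The sketch below implements that published rule; whether this work used exactly these constants is not stated, so treat them as assumptions.

```python
import math


def fpn_level(width: float, height: float, k0: int = 4, canonical: float = 224.0,
              k_min: int = 2, k_max: int = 5) -> int:
    """Pyramid level for an ROI of size width x height (FPN assignment rule)."""
    k = math.floor(k0 + math.log2(math.sqrt(width * height) / canonical))
    return max(k_min, min(k_max, k))  # clamp to the levels that exist


print([fpn_level(s, s) for s in (20, 56, 112, 224, 448, 1000)])  # [2, 2, 3, 4, 5, 5]
```

A 20 × 20 vehicle is routed to P2, the highest-resolution level, which is the mechanism behind FPN's improvement on small targets.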

Table 1.

VGG16 and ResNet network structures.

Applying the improved Faster R-CNN model without preprocessing showed that it detects vehicles effectively in clear images, but its performance on hazy images is impaired because haze obscures vehicle information. To address this issue, we applied the dark channel prior algorithm to the hazy images to enhance their visibility before running the improved Faster R-CNN model. The results demonstrated that DCP improves the performance of the Faster R-CNN model in detecting vehicles in hazy images. We attribute this to the dark channel capturing the difference between hazy and clear images in individual color channels, which other enhancement algorithms may not exploit.

4. Experiments

We evaluated the performance of the proposed vehicle detection method under haze conditions using CCTV images. The weather dataset, consisting of images from the standard COCO dataset and images gathered from online repositories, was used for training and testing. These images came from diverse sources, including aerial views, camera captures, news footage, traffic accidents, and in-car video recorders, and were taken from multiple angles to depict complex scenes. Our aim was to assess the effectiveness of the detection algorithm under adverse weather conditions, specifically haze. By testing the algorithm on CCTV images under hazy conditions, we aimed to identify potential challenges or limitations in detecting vehicles in such conditions, as well as ways to improve detection accuracy.

4.1. Weather Classification

In the experiments, we used the Keras deep learning framework on TensorFlow to construct and train the model, with the VGG16 architecture as the weather recognition network. The VGG16 model was trained on the training split of the weather dataset, with input images resized to 224 × 224 pixels. Training used a batch size of 48 for 100 epochs. In total, 4000 images were used for training and 1000 images for testing. Half of the training images were taken in hazy weather conditions.
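A VGG16-based weather classifier with the settings stated above (224 × 224 inputs, four weather classes, batch size 48, 100 epochs) might be set up in Keras as sketched below. The classification head (global average pooling, a 256-unit dense layer, dropout 0.5), the Adam optimizer, and the loss are our assumptions; the text does not specify them, nor whether ImageNet-pretrained weights were used. `train_ds` and `test_ds` are hypothetical dataset names.

```python
import math

import tensorflow as tf

NUM_CLASSES = 4            # sunny, rainy, snowy, foggy
IMAGE_SIZE = (224, 224)    # input size stated in the text
BATCH_SIZE = 48
EPOCHS = 100
STEPS_PER_EPOCH = math.ceil(4000 / BATCH_SIZE)  # 4000 training images per epoch


def build_weather_classifier(num_classes: int = NUM_CLASSES, weights=None) -> tf.keras.Model:
    """VGG16 convolutional base plus a small softmax head (head is an assumption).

    weights=None builds an untrained network; weights="imagenet" would start
    from pretrained filters (downloaded on first use).
    """
    base = tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=IMAGE_SIZE + (3,))
    x = tf.keras.layers.GlobalAveragePooling2D()(base.output)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.5)(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(base.input, outputs)
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",  # integer class labels
                  metrics=["accuracy"])
    return model


model = build_weather_classifier()
# Training, assuming hypothetical tf.data datasets of (image, label) pairs:
# model.fit(train_ds.batch(BATCH_SIZE), validation_data=test_ds.batch(BATCH_SIZE),
#           epochs=EPOCHS)
```

With 4000 training images and a batch size of 48, one epoch is ⌈4000 / 48⌉ = 84 steps.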

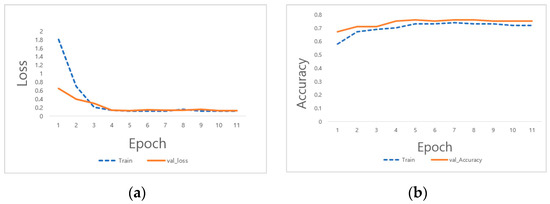

Using the weather images in the test set, we measured the classification accuracy of the model and thereby verified the usefulness of the proposed weather dataset. In addition, tuning the training parameters reduced the loss of the model and improved its test accuracy. The loss and accuracy of the model during training are shown in Figure 4. The final accuracy of the weather classification on the test dataset was 0.70.

Figure 4.

The loss and accuracy of the weather classification in training: (a) Loss, (b) Accuracy.

4.2. The Effect of DCP + BM3D

Applying noise reduction after DCP de-hazing improved the clarity of the preprocessed images. As shown in Figure 5, the preprocessing changed the appearance of the images, making them more visually appealing. In addition, when the peak signal-to-noise ratio (PSNR) was used to measure the similarity between the original and preprocessed images, the noise reduction step yielded a higher PSNR value, indicating a closer match between the two images. These results suggest that the noise reduction step is effective in improving the quality of the preprocessed images.

Figure 5.

Comparison of peak signal-to-noise ratio (PSNR) of DCP and improved model.

4.3. Performance of Improved Faster R-CNN

This study employed a deep convolutional neural network with a ResNet101 architecture to extract high-dimensional features while avoiding vanishing gradients. The residual network recognized images faster than the VGG16 model while keeping a comparable number of model parameters, thus reducing the computational cost of network training. Our experiments showed that ResNet101 effectively captured the semantic content of the input images, improving the accuracy of vehicle classification and reducing false positives.

The FPN combines the high spatial resolution of low-level features with the strong semantic information of high-level features, and it improves the breadth of image feature extraction by fusing features from different layers. Its top-down pathway propagates high-level semantic information to the higher-resolution levels, which improved the detection of occluded vehicles. Because the FPN also operates on larger feature maps, it obtains more information about small targets, which partly solved the problem of missed detections. Table 2 shows the performance of the proposed improved Faster R-CNN (IFRCNN), which uses ResNet101 and FPN. This comparison was performed mainly for vehicle detection in ordinary (non-hazy) images from the COCO dataset.
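The top-down fusion described above can be sketched with NumPy: each backbone map C_k passes through a lateral 1×1 convolution (here a channel-mixing matrix), and the coarser pyramid level is upsampled by 2 (nearest neighbour) and added. This follows the FPN paper's construction but omits its 3×3 smoothing convolution on each merged map; the function names and the small channel and spatial sizes in the usage example are illustrative assumptions (ResNet's real C2 to C5 have 256 to 2048 channels).

```python
import numpy as np


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def lateral(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution as a channel-mixing matrix: (C_out, C_in) applied to (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def fpn_top_down(features, lateral_weights):
    """Build [P2, ..., P5] from backbone maps [C2, ..., C5] (finest first).

    P5 = lateral(C5); P_k = lateral(C_k) + upsample2x(P_{k+1}).
    """
    outputs = [lateral(features[-1], lateral_weights[-1])]
    for c, w in zip(reversed(features[:-1]), reversed(lateral_weights[:-1])):
        outputs.append(lateral(c, w) + upsample2x(outputs[-1]))
    return outputs[::-1]  # finest level first


rng = np.random.default_rng(0)
chans, sizes = (8, 16, 32, 64), (32, 16, 8, 4)   # toy C2..C5 shapes
C = [rng.normal(size=(c, s, s)) for c, s in zip(chans, sizes)]
W = [rng.normal(size=(4, c)) for c in chans]     # every level projected to 4 channels
P = fpn_top_down(C, W)
print([p.shape for p in P])
```

Every pyramid level ends up with the same number of channels, so one shared detection head can run on all of them, while the finest level keeps the resolution needed for small vehicles.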

Table 2.

The accuracy comparison of different models on the COCO dataset.

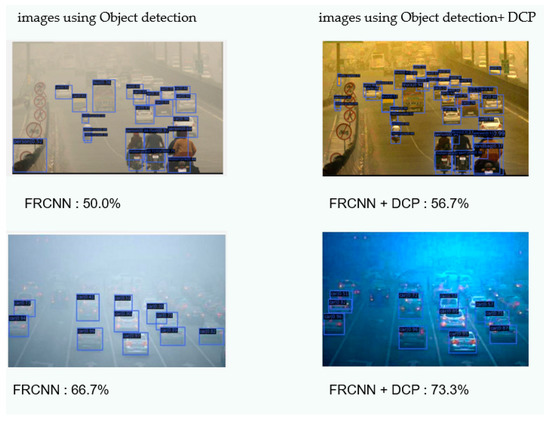

4.4. Overall Vehicle Detection Accuracy of Proposed Model

The performance of the vehicle detection algorithm was evaluated with and without image de-hazing. The results, shown in Figure 6, indicate that de-hazing improved the accuracy of vehicle detection, although some false and missed detections remained. In particular, the proposed model detected more small objects. Detection accuracy was lower for images with many targets than for images with few targets, possibly because distant targets are harder to recognize. The proposed model also detected faster, which we attribute to the preprocessing reducing the computation time required for training on the dataset.

Figure 6.

An example that shows the effectiveness of the proposed model.

Table 3 shows the overall object detection accuracy of the base model and our proposed model. In terms of average precision, performance improved for several object classes (person, bus, car, and motorcycle), and the improvement was most noticeable for smaller objects. The mean average precision (mAP) improved by 1.2% over the base model, which we attribute to the use of image de-hazing and the improved detection model.

Table 3.

Object detection accuracy of the base model and our proposed model.
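For readers less familiar with the metric, the sketch below computes per-class average precision from ranked detections using PASCAL VOC-style all-point interpolation of the precision-recall curve, and mAP as the mean over classes. It assumes each detection has already been matched to ground truth (marked true or false positive at some IoU threshold). COCO's official metric instead uses 101-point interpolation and averages over IoU thresholds 0.50:0.05:0.95; the text does not state which protocol produced Table 3, so this is an illustration rather than the evaluation code used.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth: int) -> float:
    """All-point interpolated AP for one class."""
    if num_ground_truth <= 0:
        raise ValueError("need at least one ground-truth object")
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")  # highest score first
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp, cum_fp = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = cum_tp / num_ground_truth
    precision = cum_tp / (cum_tp + cum_fp)
    # Sentinels, then the monotone precision envelope (max precision at recall >= r).
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum precision times recall increment wherever recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(per_class: dict) -> float:
    """mAP: mean of per-class APs; values are (scores, is_tp, num_gt) tuples."""
    return float(np.mean([average_precision(*v) for v in per_class.values()]))


detections = {
    "car": ([0.9, 0.8, 0.7], [1, 0, 1], 2),  # AP = 5/6
    "bus": ([0.95, 0.6], [1, 1], 2),         # AP = 1
}
print(mean_average_precision(detections))
```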

5. Conclusions

In this study, we proposed a method for improving the accuracy of vehicle detection with the Faster R-CNN model in adverse weather conditions, specifically fog. To improve detection performance, we first classified the weather in each image and preprocessed the images classified as hazy to make detection more accurate.

To address the challenges posed by foggy weather, we preprocessed the input images with a de-fogging algorithm. We used the dark channel prior (DCP) algorithm, a physical-model recovery method and one of the most efficient de-hazing algorithms, and we used BM3D to further enhance the image by reducing noise. The results demonstrated that de-hazing improved detection accuracy.

To address the issues of false and missed detections, the original Faster R-CNN model was improved by replacing the VGG16 network with the ResNet101 network for feature extraction and implementing the Feature Pyramid Network (FPN) to enhance the breadth of feature extraction, particularly for small objects. The improved Faster R-CNN model was then tested for vehicle detection, with an accuracy of 96.83%.

The overall mean average precision of the proposed model (DCP + IFRCNN) in object detection improved by 1.2% compared to the base model. This gain comes from de-hazing the input images with the dark channel prior method and reducing their noise with BM3D, and from building the improved Faster R-CNN with ResNet101 and a feature pyramid network.

There are still areas for improvement in this method. Currently, the improved Faster R-CNN model does not run in real time, and there is a trade-off between accuracy and speed. In addition, the dataset used in this study was relatively small and limited in both size and number of weather categories, so expanding it would help to build a more comprehensive weather classification model.

Our research focused on vehicle detection in CCTV images. The approach could be extended to ground vehicle detection and identification from unmanned aerial vehicles (UAVs) [17], to using information from multiple vehicles and infrastructure sensors to improve detection accuracy [18], and to weakly supervised detection methods [19]. These are possible directions for future research.

Author Contributions

Conceptualization and methodology, E.T.; writing—original draft preparation, E.T.; writing—review and editing, J.K.; supervision, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (2021R1A2C2008414) and also supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2023-2020-0-01789) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

2. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

3. Mudroch, M.; Pechac, P.; Grábner, M.; Kvicera, V. Classification and Prediction of Lower Troposphere Layers Influence on RF Propagation Using Artificial Neural Networks. In Proceedings of the International Conference on Advances in Neuro-information Processing, Auckland, New Zealand, 25–28 November 2008; Springer: Berlin/Heidelberg, Germany, 2008.

4. Zhong, Z.; Donghong, L.; Shuang, L. Salient Dual Activations Aggregation for Ground-Based Cloud Classification in Weather Station Networks. IEEE Access 2018, 6, 59173–59181.

5. Li, Q.; Kong, Y.; Xia, S.M. A method of weather recognition based on outdoor images. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; pp. 510–516.

6. Shi, J.; Zhu, H.; Han, Y. Outdoor weather classification. Comput. Syst. Appl. 2018, 27, 259–263.

7. Elhoseiny, M.; Huang, S.; Elgammal, A. Weather classification with deep convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015.

8. Zhu, Z.; Li, Z.; Qu, P.; Zhou, K.; Zhang, J. Extreme Weather Recognition Using Convolutional Neural Networks. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016.

9. Koschmieder, H. Theorie der horizontalen Sichtweite. Beitr. Phys. Freien Atmos. 1924, 12, 33–53.

10. Ying, Z.; Li, G.; Ren, Y.; Wang, R.; Wang, W. A New Image Contrast Enhancement Algorithm Using Exposure Fusion Framework. In Proceedings of the 17th International Conference on Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; Springer: Cham, Switzerland, 2017; pp. 36–46.

11. Nayar, S.K.; Narasimhan, S.G. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 820–827.

12. Li, X.M. Image enhancement algorithm based on Retinex theory. Comput. Appl. Res. 2005, 22, 235–237.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

14. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

15. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

16. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

17. Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting Tassels in RGB UAV Imagery With Improved YOLOv5 Based on Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

18. Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An automated driving systems data acquisition and analytics platform. Transp. Res. Part C Emerg. Technol. 2023, 151, 104120.

19. Shao, F.; Chen, L.; Shao, J.; Ji, W.; Xiao, S.; Ye, L.; Zhuang, Y.; Xiao, J. Deep Learning for Weakly-Supervised Object Detection and Localization: A Survey. Neurocomputing 2022, 496, 192–207.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).