MGFCTFuse: A Novel Fusion Approach for Infrared and Visible Images

Abstract

1. Introduction

- (1) In the encoding stage, detail and background information are insufficiently exploited, so both are under-represented in the fusion results.

- (2) In the decoding stage, the lack of information exchange between different features causes some essential feature information to be lost in the fused image.

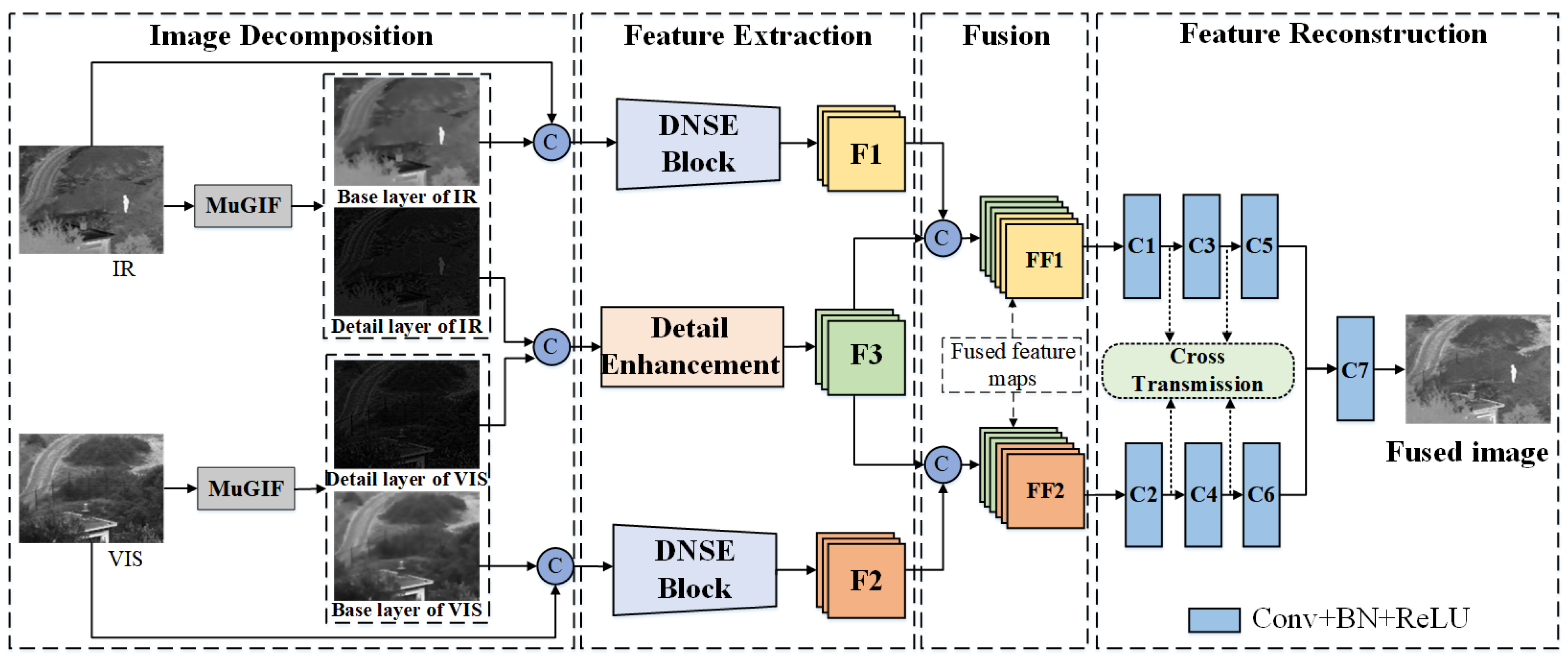

- We propose an image decomposition method based on mutually guided image filtering (MuGIF) that splits each source image into a base layer and a detail layer. Each base layer is concatenated with its corresponding source image to form a base image, and the infrared and visible detail layers are concatenated to form a detail image; these images serve as the inputs to the subsequent feature extraction.

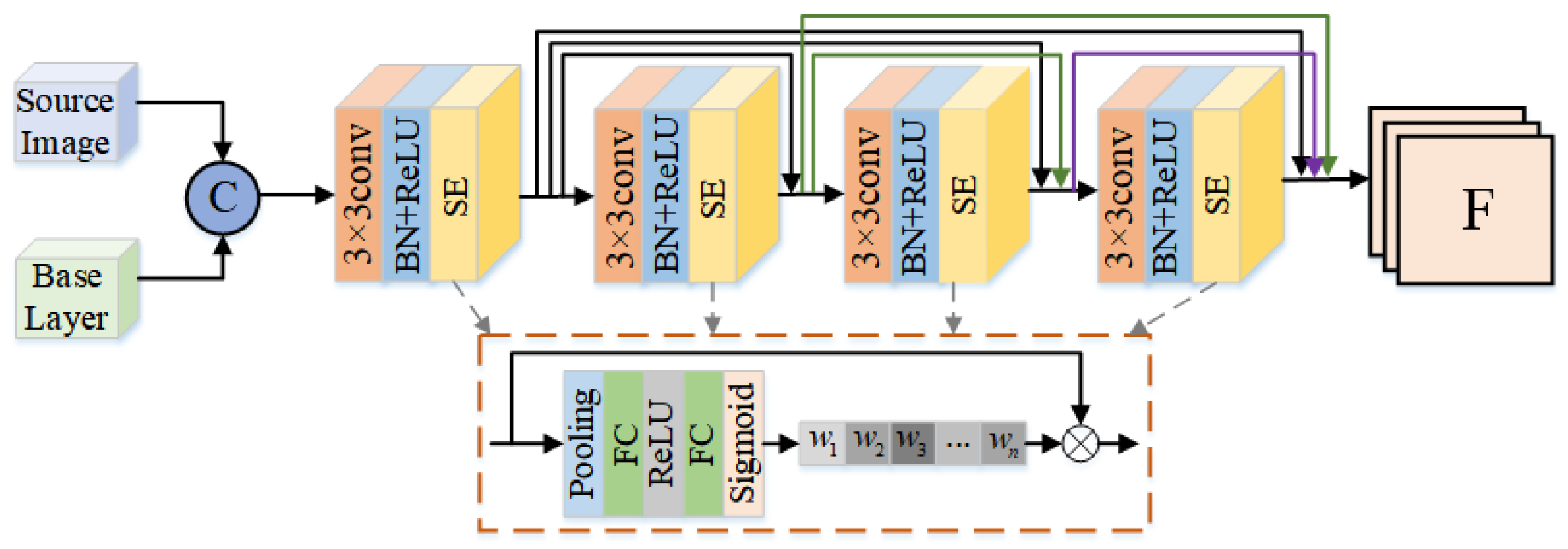

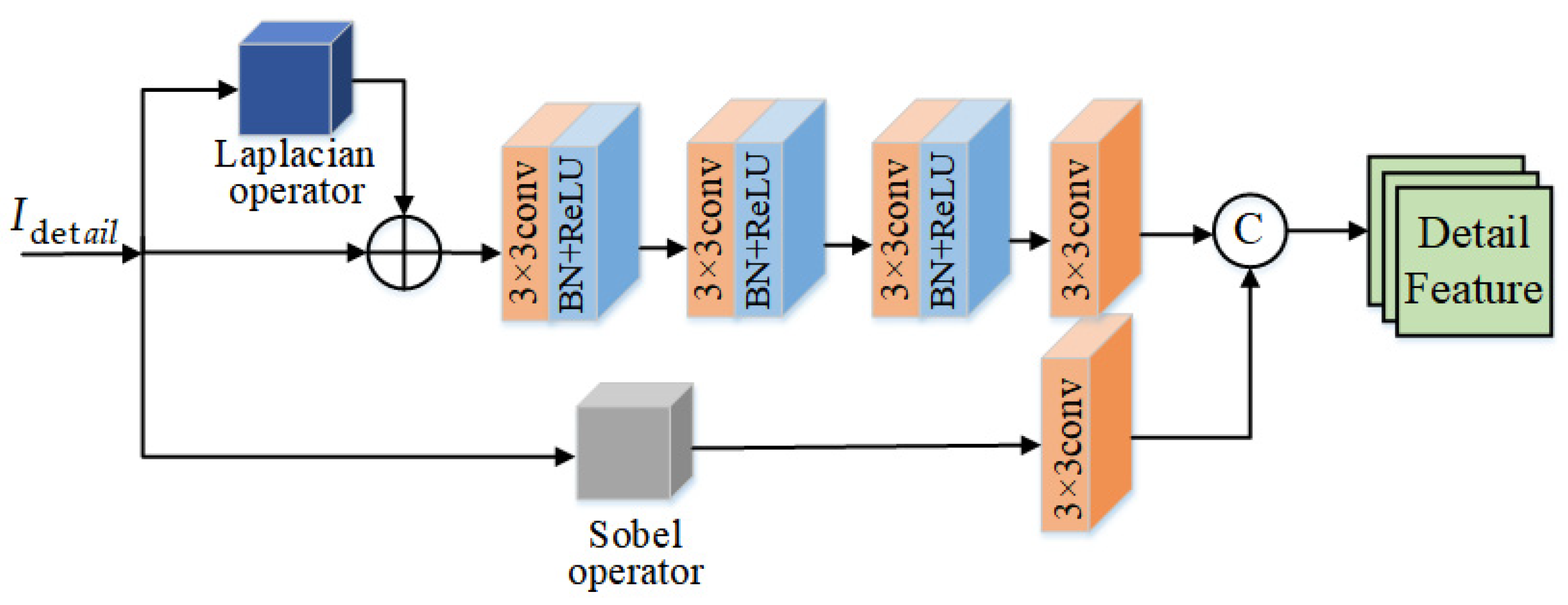

- We design an SE-based DenseNet module (DNSE Block) to extract features from the base images; it refines features along the channel dimension and strengthens feature propagation. In addition, we design a detail enhancement module to enhance contrast and texture details.

- We propose a dual-branch feature reconstruction network based on cross-transmission, which strengthens information exchange and integration across levels and thereby improves the quality of image fusion.

2. Mutually Guided Image Filtering
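Mutually guided image filtering (MuGIF), introduced by Guo et al. (listed in the references), jointly smooths a target image $T$ and a reference image $R$ so that each keeps mainly the structures it shares with the other. As we understand the original formulation, it minimizes $\alpha_t \mathcal{R}(T,R) + \beta_t\|T-T_0\|_2^2 + \alpha_r \mathcal{R}(R,T) + \beta_r\|R-R_0\|_2^2$, where the relative structure $\mathcal{R}(T,R)=\sum_i\sum_{d\in\{h,v\}} |\nabla_d T_i| / |\nabla_d R_i|$ is approximated by $(\nabla_d T_i)^2 / (\max(|\nabla_d T_i|,\epsilon)\max(|\nabla_d R_i|,\epsilon))$, so each update becomes a sparse linear solve (alternating reweighted least squares). The sketch below illustrates that scheme under these assumptions; the function names, the default weights, `eps`, and the fixed iteration count are hypothetical choices, not the settings used in MGFCTFuse or in the reference MuGIF implementation.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def forward_diff_ops(h, w):
    """Sparse forward-difference operators for a row-major (h, w) image."""
    def d1(n):
        # (n-1) x n matrix computing x[k+1] - x[k]
        return sp.diags([-np.ones(n - 1), np.ones(n - 1)], [0, 1], shape=(n - 1, n))
    dh = sp.kron(sp.eye(h), d1(w)).tocsr()  # horizontal differences
    dv = sp.kron(d1(h), sp.eye(w)).tocsr()  # vertical differences
    return dh, dv


def reweighted_step(x, guide, x0, alpha, beta, ops, eps):
    """One reweighted least-squares update of x, with weights from x and its guide."""
    n = x.size
    lap = sp.csr_matrix((n, n))
    for d in ops:
        # |grad x| / |grad guide| is approximated by (grad x)^2 * weight
        weight = 1.0 / (np.maximum(np.abs(d @ x), eps) * np.maximum(np.abs(d @ guide), eps))
        lap = lap + d.T @ sp.diags(weight) @ d
    system = (beta * sp.eye(n, format="csr") + alpha * lap).tocsc()
    return spsolve(system, beta * x0)


def mugif(t0, r0, alpha_t=0.01, alpha_r=0.01, beta_t=1.0, beta_r=1.0,
          eps=0.01, iters=10):
    """Hypothetical MuGIF sketch: jointly smooth target t0 and reference r0."""
    h, w = t0.shape
    ops = forward_diff_ops(h, w)
    t0v = np.asarray(t0, dtype=float).ravel()
    r0v = np.asarray(r0, dtype=float).ravel()
    t, r = t0v.copy(), r0v.copy()
    for _ in range(iters):
        # Gauss-Seidel style: r is updated with the freshly computed t
        t = reweighted_step(t, r, t0v, alpha_t, beta_t, ops, eps)
        r = reweighted_step(r, t, r0v, alpha_r, beta_r, ops, eps)
    return t.reshape(h, w), r.reshape(h, w)
```

Because each update solves $(\beta I + \alpha L)x = \beta x_0$ with a graph Laplacian $L$, every output pixel is a convex combination of input pixels, so the filtered images stay within the input range. How MGFCTFuse pairs target and reference (for example, infrared as target and visible as reference) is not stated in this section and should be treated as an assumption.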

3. Proposed MGFCTFuse

3.1. Network Structure

3.2. Details of the Network Architecture

3.2.1. Image Decomposition

3.2.2. Feature Extraction

- (1) DNSE Block

- (2) Detail Enhancement
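The layer table at the end of this document lists four 3 × 3 convolutions per DNSE branch (Conv1_ir/vi to Conv4_ir/vi) with input widths of 2, 16, 32, and 48 channels. Those widths match dense connectivity: each layer after the first sees the concatenation of all earlier 16-channel outputs. The NumPy forward pass below is a minimal sketch under that reading. The placement of the squeeze-and-excitation (SE) recalibration, its reduction ratio (a hypothetical `reduction=4`), and the choice to output the 64-channel concatenation of all four layers are assumptions not stated in this section; the weights are random placeholders, not trained parameters.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """'Same' 3x3 convolution (cross-correlation) on a (C, H, W) array."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.broadcast_to(bias[:, None, None], (weight.shape[0], h, w)).copy()
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx],
                             padded[:, dy:dy + h, dx:dx + w])
    return out


def relu(x):
    return np.maximum(x, 0.0)


def se_recalibrate(x, w1, w2):
    """Squeeze-and-excitation: global average pool, FC-ReLU-FC-sigmoid, channel rescale."""
    squeezed = x.mean(axis=(1, 2))                                 # (C,)
    excited = 1.0 / (1.0 + np.exp(-(w2 @ relu(w1 @ squeezed))))  # (C,), in (0, 1)
    return x * excited[:, None, None]


def init_dnse(rng, in_ch=2, growth=16, reduction=4):
    """Hypothetical random weights; channel widths follow the layer table."""
    convs = []
    for k in range(4):
        c_in = in_ch if k == 0 else growth * k   # 2, 16, 32, 48
        convs.append((rng.normal(0, 0.1, (growth, c_in, 3, 3)), np.zeros(growth)))
    c_out = growth * 4                           # 64 channels after concatenation
    se = (rng.normal(0, 0.1, (c_out // reduction, c_out)),
          rng.normal(0, 0.1, (c_out, c_out // reduction)))
    return convs, se


def dnse_forward(x, params):
    """Dense block: each later layer sees the concatenation of all earlier outputs."""
    convs, (w1, w2) = params
    feats = [relu(conv3x3(x, *convs[0]))]
    for weight, bias in convs[1:]:
        feats.append(relu(conv3x3(np.concatenate(feats, axis=0), weight, bias)))
    return se_recalibrate(np.concatenate(feats, axis=0), w1, w2)
```

A trainable version would replace the NumPy loops with a deep-learning framework's convolution layers; the sketch only makes the dense channel bookkeeping and the SE rescaling concrete.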

3.2.3. Feature Fusion

3.2.4. Feature Reconstruction
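The layer table at the end of this document lists, for each of the two reconstruction branches (FF1 and FF2), convolutions whose input widths double at Conv2 (128 = 2 × 64) and Conv3 (64 = 2 × 32). One reading consistent with those widths and with the idea of cross-transmission is that each branch concatenates its own previous output with the other branch's output before the next convolution. The NumPy sketch below encodes that reading. Merging the two 32-channel branch outputs by element-wise addition before Conv4_F (32 → 1) is our assumption, as are the random placeholder weights and all helper names.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """'Same' 3x3 convolution (cross-correlation) on a (C, H, W) array."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.broadcast_to(bias[:, None, None], (weight.shape[0], h, w)).copy()
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx],
                             padded[:, dy:dy + h, dx:dx + w])
    return out


def relu(x):
    return np.maximum(x, 0.0)


def layer(rng, c_in, c_out):
    return rng.normal(0, 0.05, (c_out, c_in, 3, 3)), np.zeros(c_out)


def init_ct(rng):
    """Hypothetical weights for both branches; widths taken from the layer table."""
    def branch():
        return [layer(rng, 192, 64), layer(rng, 128, 32), layer(rng, 64, 32)]
    return {"ff1": branch(), "ff2": branch(), "out": layer(rng, 32, 1)}


def ct_reconstruct(ff1, ff2, params):
    """Dual-branch reconstruction with cross-transmission after Conv1 and Conv2."""
    a, b = ff1, ff2
    for k in range(3):
        a_new = relu(conv3x3(a, *params["ff1"][k]))
        b_new = relu(conv3x3(b, *params["ff2"][k]))
        if k < 2:
            # Cross-transmission: each branch also receives the other's features.
            a, b = (np.concatenate([a_new, b_new], axis=0),
                    np.concatenate([b_new, a_new], axis=0))
        else:
            a, b = a_new, b_new
    return relu(conv3x3(a + b, *params["out"]))  # assumed additive merge, then Conv4_F
```

The final ReLU mirrors the activation listed for Conv4_F in the table; everything else about how the branches interact is an interpretation of the channel widths, not a confirmed description of the authors' network.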

3.3. Loss Function

| Algorithm 1: MGFCTFuse |

| Input: infrared image (IR), visible image (VIS) |

| Step1: Image decomposition |

| do: Apply MuGIF to the source images to obtain the base layers B1 and B2, respectively. |

| then: Obtain the detail layers D1 and D2, respectively. |

| Step2: Image concatenation |

| Each base layer is concatenated with its source image, and the two detail layers are concatenated with each other; the results are the inputs to the feature extraction network. |

| Step3: Feature extraction |

| The DNSE Block and the detail enhancement module extract features from the concatenated images, yielding features F1, F2, and F3, respectively. |

| Step4: Feature fusion |

| The extracted features are fused to obtain fusion feature maps FF1 and FF2. |

| Step5: Feature reconstruction |

| A dual-branch feature reconstruction network based on cross-transmission is applied to FF1 and FF2 to obtain the fused image. |

| Output: Fused image |
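Steps 1 and 2 of Algorithm 1, together with the first contribution listed in the Introduction, can be sketched in NumPy as follows. To keep the example self-contained, a simple box (mean) filter stands in for MuGIF; it is a different, non-guided smoother and only illustrates the data flow. Taking each detail layer as the residual between the source image and its base layer is our assumption (a common two-scale convention), as are the function names and the channel-first (C, H, W) layout. Each resulting array has two channels, which matches the input width of 2 listed for Conv1_ir/vi and Conv1_L in the layer table.

```python
import numpy as np


def box_smooth(img, radius=3):
    """Stand-in for MuGIF: mean filter over a (2r+1)x(2r+1) window, edge-padded."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Summed-area table with a leading zero row/column gives every window sum in O(1).
    sat = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = (sat[k:k + h, k:k + w] - sat[:h, k:k + w]
             - sat[k:k + h, :w] + sat[:h, :w])
    return total / (k * k)


def decompose_and_concatenate(ir, vis, smooth=box_smooth):
    """Return the IR and VIS base images and the shared detail image, each (2, H, W)."""
    b1, b2 = smooth(ir), smooth(vis)   # base layers B1, B2
    d1, d2 = ir - b1, vis - b2         # detail layers D1, D2 (assumed residuals)
    base_ir = np.stack([b1, ir])       # base layer + its source image
    base_vis = np.stack([b2, vis])
    detail = np.stack([d1, d2])        # IR and VIS detail layers together
    return base_ir, base_vis, detail
```

Passing a different `smooth` callable (for example, a MuGIF implementation that returns the filtered target) swaps the decomposition filter without changing the concatenation logic.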

4. Experiments and Analysis

4.1. Dataset and Parameter Settings

4.2. Experimental Analysis

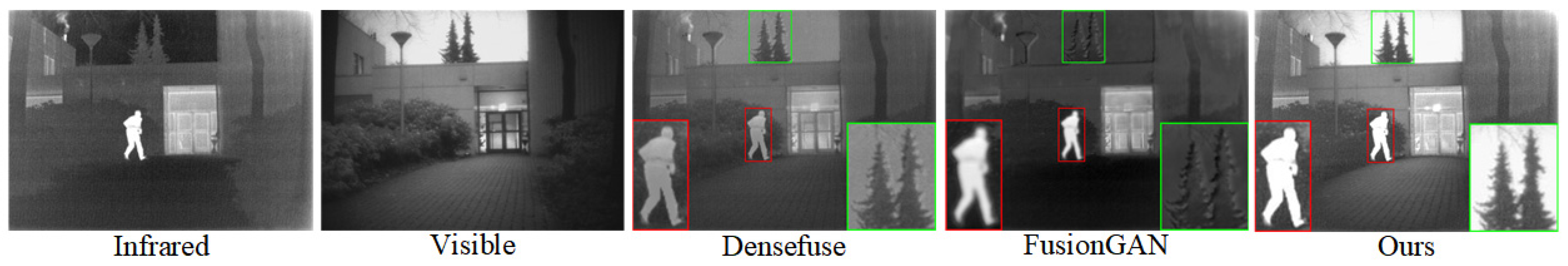

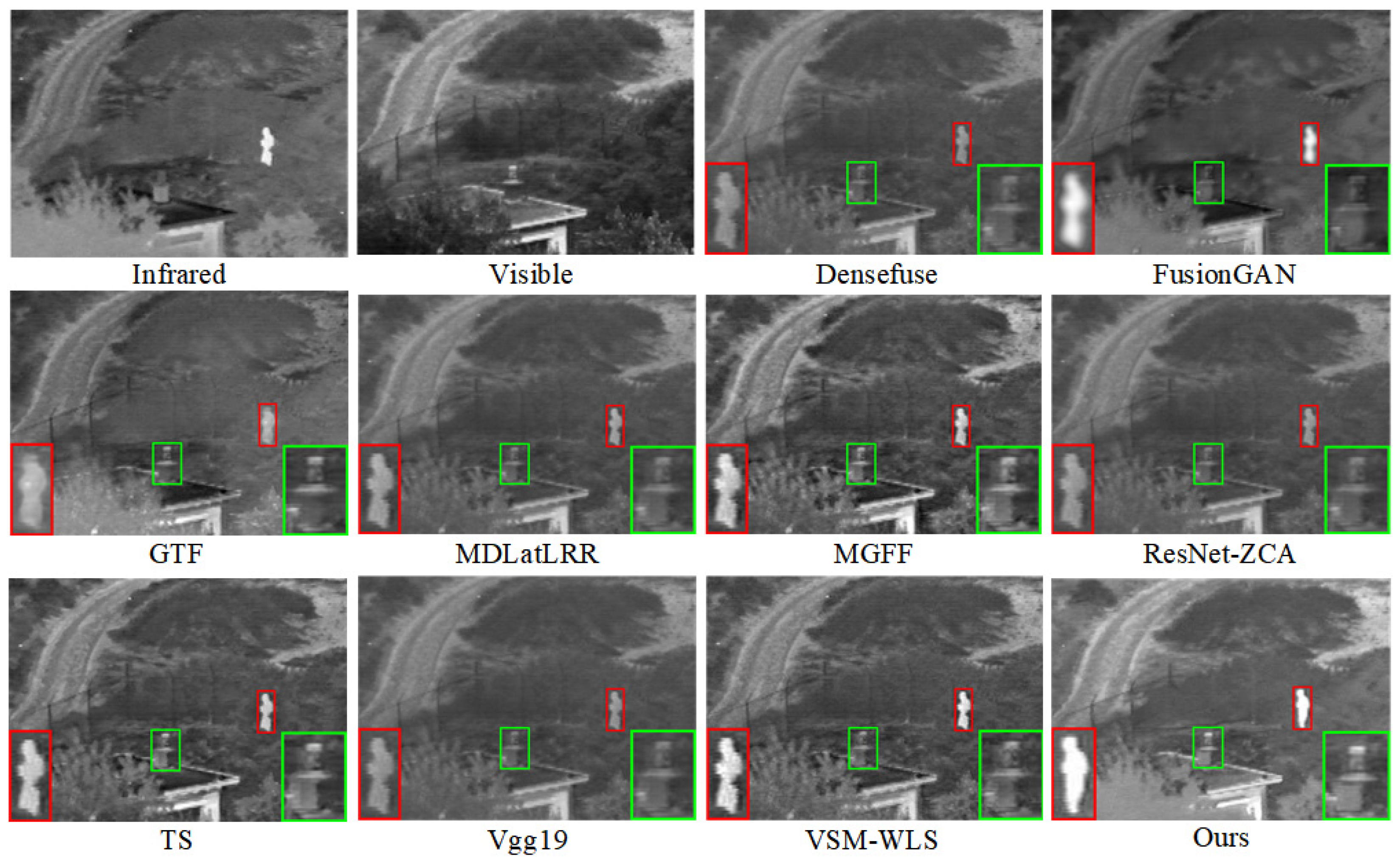

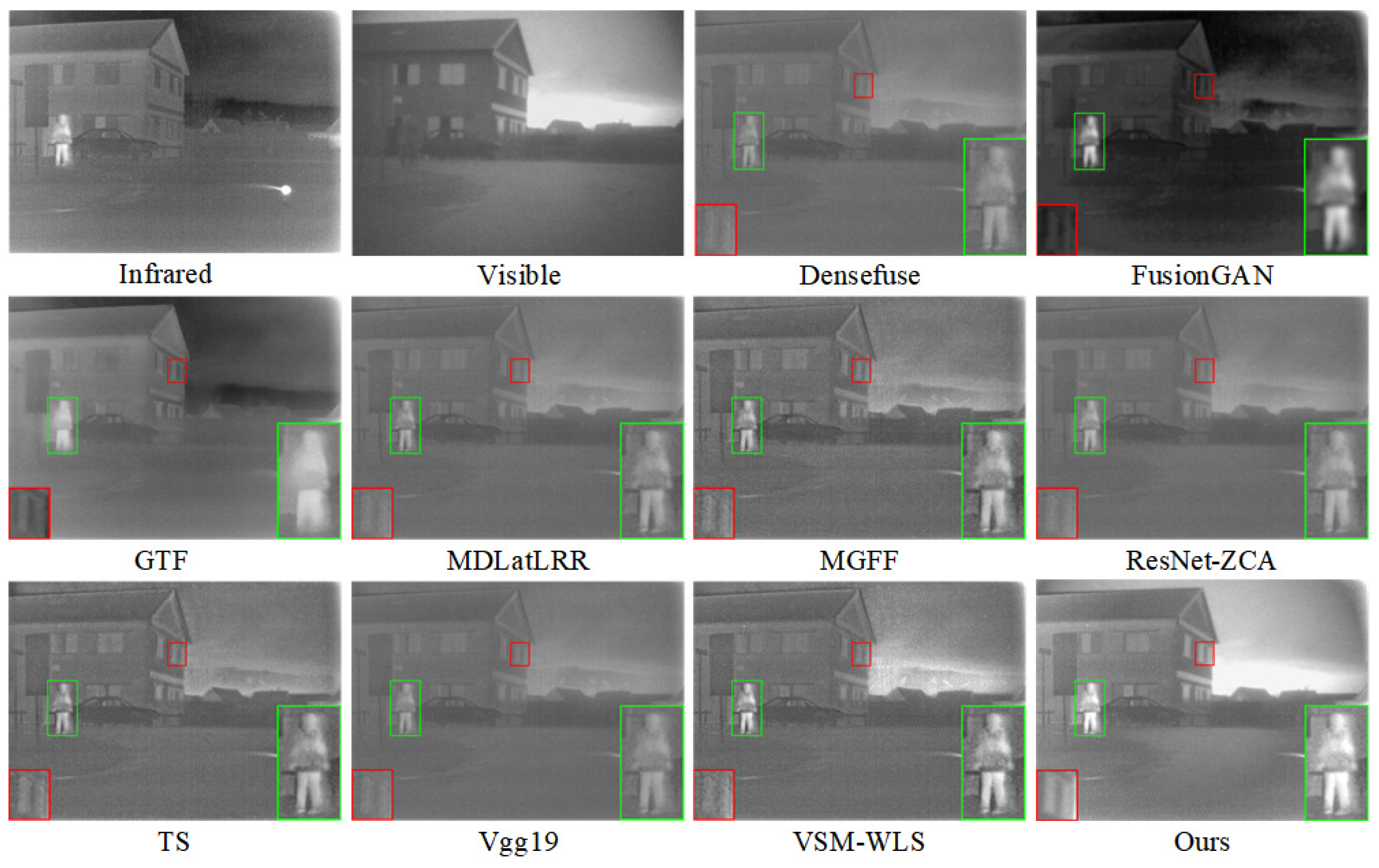

4.2.1. Subjective Evaluation

4.2.2. Objective Analysis

- (1) EN measures the amount of information contained in an image. The larger its value, the more information the fused image carries from the source images. It is defined as follows:

  $$EN = -\sum_{i=0}^{L-1} p_i \log_2 p_i$$

  where $L$ is the number of gray levels and $p_i$ is the proportion of pixels with gray level $i$.

- (2) SD characterizes how widely pixel values are dispersed around the mean and thus reflects the distribution and contrast of the image. The larger its value, the higher the image contrast and the better the fusion effect. It is defined as follows:

  $$SD = \sqrt{\frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W}\left(I(i,j)-\mu\right)^2}$$

  where $I(i,j)$ denotes the gray value of image $I$ at pixel $(i,j)$, the size of $I$ is $H \times W$, and $\mu$ is the mean gray value of $I$.

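- (3) MI measures how much information the fused image obtains from the source images. The larger the value, the more information is retained and the better the fusion quality. The mutual information between two images $A$ and $B$ is defined as follows:

  $$MI_{A,B} = \sum_{a,b} P_{A,B}(a,b)\log_2\frac{P_{A,B}(a,b)}{P_A(a)\,P_B(b)}$$

  where $P_A(a)$ and $P_B(b)$ are the marginal histograms of $A$ and $B$, and $P_{A,B}(a,b)$ is their joint histogram. For fusion, the metric is the sum of the mutual information between the fused image and each source image.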

- (4) AG measures the gradient information of the fused image and thus reflects its detail and texture to some extent. It is defined as follows:

  $$AG = \frac{1}{(W-1)(H-1)}\sum_{i=1}^{W-1}\sum_{j=1}^{H-1}\sqrt{\frac{\left(F(i+1,j)-F(i,j)\right)^2+\left(F(i,j+1)-F(i,j)\right)^2}{2}}$$

  where $W$ and $H$ denote the width and height of the fused image, respectively, and $F(i,j)$ is the pixel value at position $(i,j)$.

- (5) VIF evaluates information fidelity; the larger its value, the better the subjective visual quality of the image. It is computed in four steps, and the result can be written in the simplified form:

  $$VIF = \frac{VID}{VIND}$$

  where $VID$ and $VIND$ denote the visual information that the fused image extracts from the source images with and without distortion, respectively.
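The EN, SD, MI, and AG metrics translate directly into NumPy using their standard forms: EN as $-\sum_i p_i \log_2 p_i$ over the gray-level histogram, SD as the root-mean-square deviation from the mean, fusion MI as the sum of the mutual information between the fused image and each source, and AG as the mean of $\sqrt{(\Delta_x^2 + \Delta_y^2)/2}$ over forward differences. The sketch below assumes 8-bit grayscale images (256 gray levels) and base-2 logarithms; the function names are hypothetical, and VIF is omitted because its four-step computation is too long for a short example. Published evaluation toolboxes differ in such details (log base, AG normalization), so values from this sketch may not reproduce the reported table exactly.

```python
import numpy as np


def entropy(img, levels=256):
    """EN = -sum_i p_i log2 p_i over the gray-level histogram (integer image)."""
    hist = np.bincount(img.ravel(), minlength=levels) / img.size
    p = hist[hist > 0]
    return float(-(p * np.log2(p)).sum())


def std_dev(img):
    """SD: root-mean-square deviation of gray values from their mean."""
    x = img.astype(float)
    return float(np.sqrt(((x - x.mean()) ** 2).mean()))


def mutual_info(a, b, levels=256):
    """MI between two integer images from their joint and marginal histograms."""
    joint = np.zeros((levels, levels))
    np.add.at(joint, (a.ravel(), b.ravel()), 1)
    joint /= joint.sum()
    pa, pb = joint.sum(axis=1), joint.sum(axis=0)
    nz = joint > 0
    return float((joint[nz] * np.log2(joint[nz] / np.outer(pa, pb)[nz])).sum())


def fusion_mi(ir, vis, fused):
    """Fusion MI: information the fused image shares with each source image."""
    return mutual_info(ir, fused) + mutual_info(vis, fused)


def avg_gradient(img):
    """AG with forward differences over the (H-1) x (W-1) grid."""
    f = img.astype(float)
    gx = f[:-1, 1:] - f[:-1, :-1]   # horizontal difference
    gy = f[1:, :-1] - f[:-1, :-1]   # vertical difference
    return float(np.sqrt((gx ** 2 + gy ** 2) / 2).mean())
```

For a half-black, half-white 8-bit image these functions give EN = 1 bit and SD = 127.5, and the MI of an image with itself equals its entropy, which makes for quick sanity checks before running them on real fusion results.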

4.2.3. Running Efficiency Analysis

4.3. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Luo, Y.; Wang, X.; Wu, Y.; Shu, C. Infrared and Visible Image Homography Estimation Using Multiscale Generative Adversarial Network. Electronics 2023, 12, 788.

- Ji, J.; Zhang, Y.; Lin, Z.; Li, Y.; Wang, C. Fusion of Infrared and Visible Images Based on Optimized Low-Rank Matrix Factorization with Guided Filtering. Electronics 2022, 11, 2003.

- Li, G.; Lin, Y.; Qu, X. An infrared and visible image fusion method based on multi-scale transformation and norm optimization. Inf. Fusion 2021, 71, 109–129.

- Tu, Z.; Li, Z.; Li, C.; Lang, Y.; Tang, J. Multi-interactive dual-decoder for RGB-thermal salient object detection. IEEE Trans. Image Process. 2021, 30, 5678–5691.

- Nagarani, N.; Venkatakrishnan, P.; Balaji, N. Unmanned Aerial vehicle’s runway landing system with efficient target detection by using morphological fusion for military surveillance system. Comput. Commun. 2020, 151, 463–472.

- Dinh, P. Combining gabor energy with equilibrium optimizer algorithm for multi-modality medical image fusion. Biomed. Signal Process. Control. 2021, 68, 102696.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Hao, S.; He, T.; Ma, X.; An, B.; Hu, W.; Wang, F. NOSMFuse: An infrared and visible image fusion approach based on norm optimization and slime mold architecture. Appl. Intell. 2022, 53, 5388–5401.

- Bavirisetti, D.; Dhuli, R. Fusion of infrared and visible sensor images based on anisotropic diffusion and Karhunen-Loeve transform. IEEE Sens. J. 2015, 16, 203–209.

- Li, S.; Yang, B.; Hu, J. Performance comparison of different multi-resolution transforms for image fusion. Inf. Fusion 2011, 12, 74–84.

- Zhou, Z.; Dong, M.; Xie, X.; Gao, Z. Fusion of infrared and visible images for night-vision context enhancement. Appl. Opt. 2016, 55, 6480–6490.

- Wang, J.; Peng, J.; Feng, X.; He, G.; Fan, J. Fusion method for infrared and visible images by using non-negative sparse representation. Infrared Phys. Technol. 2014, 67, 477–489.

- Liu, Y.; Chen, X.; Ward, R.; Wang, Z. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886.

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Liu, C.; Qi, Y.; Ding, W. Infrared and visible image fusion method based on saliency detection in sparse domain. Infrared Phys. Technol. 2017, 83, 94–102.

- Bavirisetti, D.; Dhuli, R. Two-scale image fusion of visible and infrared images using saliency detection. Infrared Phys. Technol. 2016, 76, 52–64.

- Zhang, X.; Ma, Y.; Fan, F.; Zhang, Y.; Huang, J. Infrared and visible image fusion via saliency analysis and local edge-preserving multi-scale decomposition. J. Opt. Soc. Am. A 2017, 34, 1400–1410.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Kong, W.; Lei, Y.; Zhao, H. Adaptive fusion method of visible light and infrared images based on non-subsampled shearlet transform and fast non-negative matrix factorization. Infrared Phys. Technol. 2014, 67, 161–172.

- Ibrahim, R.; Alirezaie, J.; Babyn, P. Pixel level jointed sparse representation with RPCA image fusion algorithm. In Proceedings of the 2015 38th International Conference on Telecommunications and Signal Processing (TSP), Prague, Czech Republic, 9–11 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 592–595.

- Li, J.; Huo, H.; Li, C.; Wang, R.; Sui, C. Multigrained attention network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5002412.

- Yue, J.; Fang, L.; Xia, S.; Deng, Y.; Ma, J. Dif-fusion: Towards high color fidelity in infrared and visible image fusion with diffusion models. arXiv 2023, arXiv:2301.08072.

- Ram, P.; Sai, S.; Venkatesh, B. Deepfuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4714–4722.

- Li, H.; Wu, X. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Liu, Y.; Chen, X.; Cheng, J.; Peng, H.; Wang, Z. Infrared and visible image fusion with convolutional neural networks. Int. J. Wavelets Multiresolution Inf. Process. 2018, 16, 1850018.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995.

- Guo, X.; Li, Y.; Ma, J. Mutually guided image filtering. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1283–1290.

- Hu, J.; Shen, L.; Albanie, S. Squeeze-and-excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Hou, R. VIF-Net: An Unsupervised Framework for Infrared and Visible Image Fusion. IEEE Trans. Comput. Imaging 2020, 6, 640–651.

- Toet, A. The TNO multiband image data collection. Data Brief 2017, 15, 249–251.

- Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83–84, 79–92.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

- Ma, J.; Chen, C.; Li, C.; Huang, J. Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 2016, 31, 100–109.

- Li, H.; Wu, X.; Kittler, J. MDLatLRR: A novel decomposition method for infrared and visible image fusion. IEEE Trans. Image Process. 2020, 29, 4733–4746.

- Bavirisetti, D.; Xiao, G.; Zhao, J.; Dhuli, R.; Liu, G. Multi-scale guided image and video fusion: A fast and efficient approach. Circuits Syst. Signal Process. 2019, 38, 5576–5605.

- Li, H.; Wu, X.; Durrani, T. Infrared and visible image fusion with ResNet and zero-phase component analysis. Infrared Phys. Technol. 2019, 102, 103039.

- Li, H.; Wu, X.; Kittler, J. Infrared and visible image fusion using a deep learning framework. In Proceedings of the 2018 24th international conference on pattern recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2705–2710.

- Roberts, J.; Van, J.; Ahmed, F. Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J. Appl. Remote Sens. 2008, 2, 023522.

- Rao, Y. In-fibre Bragg grating sensors. Meas. Sci. Technol. 1997, 8, 355.

- Qu, G.; Zhang, D.; Yan, P. Information measure for performance of image fusion. Electron. Lett. 2002, 38, 1.

- Cui, G.; Feng, H.; Xu, Z.; Li, Q.; Chen, Y. Detail preserved fusion of visible and infrared images using regional saliency extraction and multi-scale image decomposition. Opt. Commun. 2015, 341, 199–209.

- Han, Y.; Cai, Y.; Cao, Y.; Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 2013, 14, 127–135.

| Part | Block | Layer | Kernel Size | Input Channels | Output Channels | Activation |
|---|---|---|---|---|---|---|
| Feature extraction | DNSE Block | Conv1_ir/vi | 3 × 3 | 2 | 16 | ReLU |
| | | Conv2_ir/vi | 3 × 3 | 16 | 16 | ReLU |
| | | Conv3_ir/vi | 3 × 3 | 32 | 16 | ReLU |
| | | Conv4_ir/vi | 3 × 3 | 48 | 16 | ReLU |
| | Detail Enhancement | Conv1_L | 3 × 3 | 2 | 16 | ReLU |
| | | Conv2_L | 3 × 3 | 16 | 32 | ReLU |
| | | Conv3_L | 3 × 3 | 32 | 64 | ReLU |
| | | Conv4_L | 3 × 3 | 64 | 64 | - |
| | | Conv1_S | 3 × 3 | 1 | 64 | - |
| Feature reconstruction | CT Block | Conv1_FF1/FF2 | 3 × 3 | 192 | 64 | ReLU |
| | | Conv2_FF1/FF2 | 3 × 3 | 128 | 32 | ReLU |
| | | Conv3_FF1/FF2 | 3 × 3 | 64 | 32 | ReLU |
| | | Conv4_F | 3 × 3 | 32 | 1 | ReLU |

| Algorithm | EN | SD | MI | AG | VIF |
|---|---|---|---|---|---|
| Densefuse | 6.0519 | 23.6291 | 2.3142 | 2.4781 | 0.6475 |
| FusionGAN | 6.3162 | 26.8713 | 2.1156 | 2.3614 | 0.5995 |
| GTF | 6.7116 | 33.6217 | 2.2149 | 3.2213 | 0.6127 |
| MDLatLRR | 6.2946 | 21.7386 | 2.2301 | 2.4591 | 0.6653 |
| MGFF | 6.6215 | 32.4519 | 1.5219 | 4.3147 | 0.7111 |
| ResNet-ZCA | 6.3736 | 25.9146 | 2.2214 | 2.6610 | 0.6984 |
| TS | 6.6413 | 27.5519 | 1.5549 | 3.8497 | 0.7958 |
| Vgg19 | 6.2910 | 23.1463 | 2.0017 | 2.5479 | 0.6664 |
| VSM-WLS | 6.6619 | 36.4002 | 2.2242 | 4.7649 | 0.8126 |
| Ours | 6.8796 | 38.4795 | 3.7378 | 3.3831 | 0.8922 |

| Dataset | Densefuse | FusionGAN | GTF | MDLatLRR | MGFF | ResNet-ZCA | TS | Vgg19 | VSM-WLS | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| TNO | 0.091 | 1.571 | 6.715 | 5.397 | 0.362 | 1.481 | 0.759 | 2.746 | 2.054 | 0.048 |
| MSRS | 0.085 | 1.329 | 6.809 | 4.948 | 0.231 | 1.6328 | 0.846 | 3.215 | 1.541 | 0.077 |
| RoadScene | 0.064 | 1.112 | 6.569 | 5.165 | 0.134 | 1.390 | 0.669 | 3.672 | 1.088 | 0.058 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, S.; Li, J.; Ma, X.; Sun, S.; Tian, Z.; Cao, L. MGFCTFuse: A Novel Fusion Approach for Infrared and Visible Images. Electronics 2023, 12, 2740. https://doi.org/10.3390/electronics12122740
