A Cross-Modality Person Re-Identification Method Based on Joint Middle Modality and Representation Learning

Abstract

1. Introduction

- (1) We use a Middle Modality Generator (MMG) to generate middle modality images by projecting visible (VIS) and infrared (IR) images into a unified feature space. The middle modality images and the original images are then jointly fed into a two-stream parameter-sharing network for feature extraction to reduce the modality differences.

- (2) We use a multi-granularity pooling strategy that combines global and local features to improve the representation learning capability of the model.

- (3) We jointly optimize the model with the distribution consistency loss, the label smoothing cross-entropy loss, and the hetero-center triplet loss to reduce the intra-class distance and accelerate model convergence.
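To make the label smoothing cross-entropy loss named in contribution (3) concrete, the following is a minimal sketch for a single sample. It assumes the commonly used formulation in which the true class receives the target 1 − ε + ε/K and every other class receives ε/K, where K is the number of identities. The function name and the default ε = 0.1 are illustrative assumptions and are not taken from this paper.

```python
import math


def label_smoothing_cross_entropy(logits, target, epsilon=0.1):
    """Cross-entropy between softmax(logits) and a smoothed one-hot target.

    Assumed formulation (not confirmed by the paper): the true class gets
    1 - epsilon + epsilon / K and every other class gets epsilon / K.
    """
    num_classes = len(logits)
    # Numerically stable log-softmax.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(z - max_logit) for z in logits)
    )
    log_probs = [z - log_sum_exp for z in logits]
    loss = 0.0
    for k, log_p in enumerate(log_probs):
        smoothed = epsilon / num_classes
        if k == target:
            smoothed += 1.0 - epsilon
        loss -= smoothed * log_p
    return loss


# With epsilon = 0 the loss reduces to the standard cross-entropy.
plain = label_smoothing_cross_entropy([10.0, 0.0, 0.0], target=0, epsilon=0.0)
smooth = label_smoothing_cross_entropy([10.0, 0.0, 0.0], target=0, epsilon=0.1)
```

For a confident and correct prediction, the smoothed loss is larger than the plain cross-entropy, which is the intended regularizing effect: the model is discouraged from driving the true-class probability all the way to one.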

2. Related Work

2.1. Single-Modality Person Re-Identification

2.2. Cross-Modality Person Re-Identification

3. Methods

3.1. Overall Network Structure

- (1)

- A Middle Modality Generator (MMG) [10] maps the VIS and IR images to a unified feature space via the encoder and decoder to generate the middle modality images, and then distributes the generated middle modality images consistently via Distribution Consistency Loss (DCL) [10] to reduce the difference between the different modality images.

- (2)

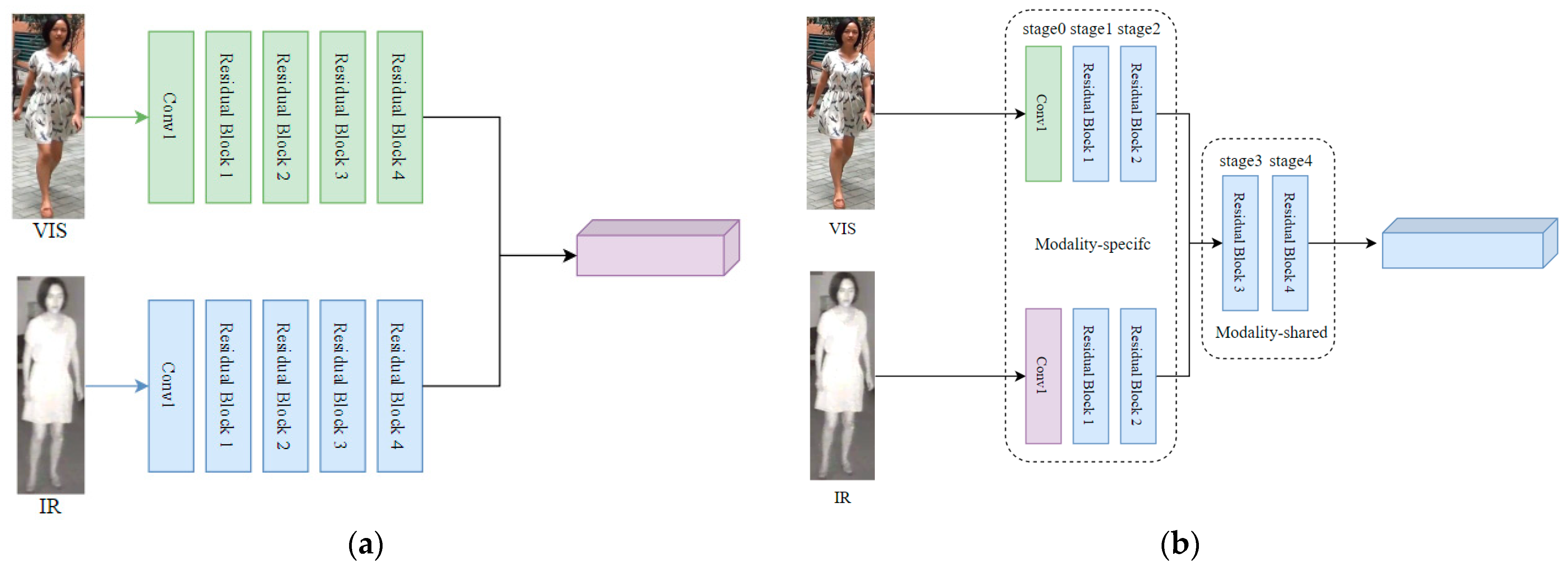

- In this paper, we use ResNet50 as the base network of a two-stream network with parameter sharing [5], and the first convolutional layer and the first two residual blocks of ResNet50 as the feature extractor to extract the independent features of each modality, and the last two residual blocks as the feature embedders for weight sharing to further reduce the modality differences.

- (3)

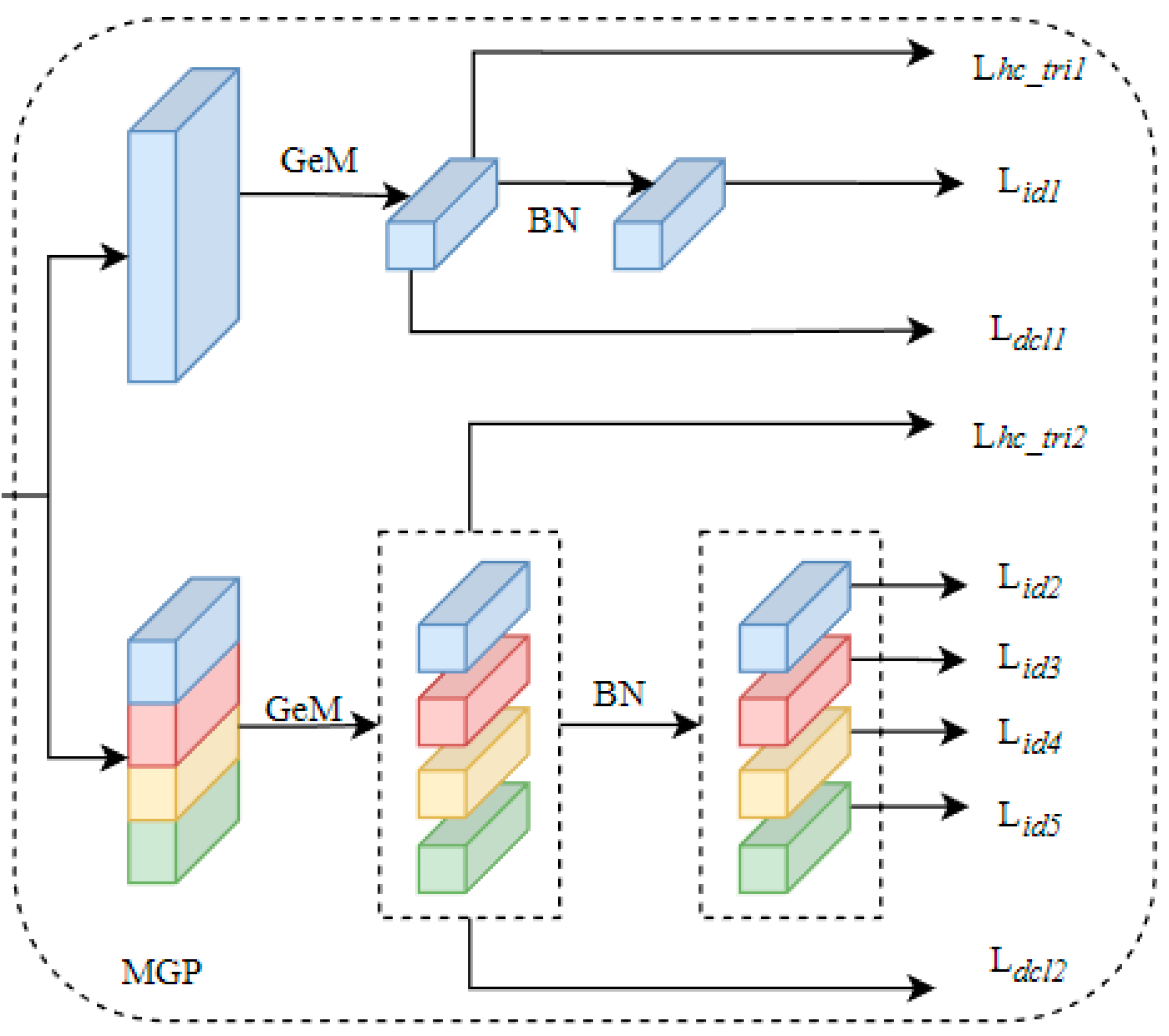

- A Multi-granularity Pooling (MGP) strategy combines global features and local features to enhance the correlation between features; the pooling method adopts Generalized Mean Pooling (GeM), which pays more attention to image detail information to improve the representation learning capability of the model.

- (4)
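The multi-granularity pooling described in item (3) can be sketched as follows. GeM pooling computes the generalized mean (mean of x^p, then the p-th root) over a set of non-negative activations: p = 1 gives average pooling, and large p approaches max pooling. The split into equal horizontal parts, the number of parts, the exponent p = 3, and all function names are assumptions made for illustration; the paper's actual partitioning is not given in this section.

```python
def gem_pool(values, p=3.0, eps=1e-6):
    """Generalized mean of non-negative activations (clamped at eps)."""
    clamped = [max(v, eps) for v in values]
    return (sum(v ** p for v in clamped) / len(clamped)) ** (1.0 / p)


def multi_granularity_pool(feature_map, num_parts=2, p=3.0):
    """Pool a feature map into one global and several local descriptors.

    feature_map: list of channels, each an H x W list of lists.
    Returns (global_feature, part_features), where global_feature has one
    value per channel and part_features holds one such vector per
    horizontal part (hypothetical partitioning scheme).
    """
    height = len(feature_map[0])
    if height % num_parts != 0:
        raise ValueError("height must be divisible by num_parts")
    rows_per_part = height // num_parts

    # Global branch: pool every spatial position of each channel.
    global_feature = [
        gem_pool([v for row in channel for v in row], p)
        for channel in feature_map
    ]

    # Local branch: pool each horizontal stripe separately.
    part_features = []
    for part in range(num_parts):
        start = part * rows_per_part
        stop = start + rows_per_part
        part_features.append([
            gem_pool([v for row in channel[start:stop] for v in row], p)
            for channel in feature_map
        ])
    return global_feature, part_features


# One channel, 2 x 2 map: the upper stripe is weak, the lower one strong.
global_feat, part_feats = multi_granularity_pool([[[1.0, 1.0], [3.0, 3.0]]])
```

In this toy input the global GeM value lies above the arithmetic mean of 2, because larger activations are weighted more heavily when p > 1, while the two local descriptors keep the upper and lower stripes apart.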

3.2. Middle Modality Generator

3.3. The Design of a Two-Stream Network with Parameter Sharing

3.4. Multi-Granularity Pooling Strategy

3.5. The Design of Joint Loss Function

3.5.1. Distributional Consistency Loss

3.5.2. Label Smoothing Cross-Entropy Loss

3.5.3. Hetero-Center Triplet Loss

3.5.4. Joint Loss

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Experimental Environment and Parameter Settings

4.3. Comparison with State-of-the-Art Methods

- (1) The X-modality-based method [9] uses an auxiliary modality to reduce the modality differences, but it generates middle modality images only from VIS images. In contrast, the method proposed in this paper maps both the VIS and IR images into a unified space to generate middle modality images, which further reduces the modality differences.

- (2) The main task of representation-learning-based methods is to extract more discriminative features. In this paper, we combine global and local features to improve the representation learning capability of the model, which performs better than the DDAG method [28], which focuses only on global features.

- (3) The main task of metric-learning-based methods is to map the learned features to a new space and then reduce the intra-class distance with a loss function. In this paper, the distribution consistency loss, the label smoothing cross-entropy loss, and the hetero-center triplet loss jointly optimize the model, which gives better results than the methods that use only HC [4] or HcTri [5].
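The hetero-center triplet loss mentioned in item (3) operates on per-identity feature centers rather than on individual samples. The sketch below assumes the two-modality formulation in which each modality center of an identity is an anchor, the same identity's center in the other modality is the positive, and the closest center of any other identity (in either modality) is the negative. The margin of 0.3, the dictionary-based input layout, and the function names are illustrative assumptions; how the middle modality images enter the loss in this paper is not specified in this section and is left out.

```python
import math


def center(features):
    """Element-wise mean of a list of equal-length feature vectors."""
    dim = len(features[0])
    return [sum(f[d] for f in features) / len(features) for d in range(dim)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hetero_center_triplet_loss(vis_feats, ir_feats, margin=0.3):
    """Assumed center-based triplet loss over two modalities.

    vis_feats / ir_feats: dict mapping identity -> list of feature vectors.
    Both dicts must share the same identities, and at least two identities
    are needed so that every anchor has a negative center.
    """
    ids = sorted(vis_feats)
    vis_centers = {i: center(vis_feats[i]) for i in ids}
    ir_centers = {i: center(ir_feats[i]) for i in ids}

    loss = 0.0
    for i in ids:
        # Negatives: centers of all other identities, in both modalities.
        negatives = [
            c for j in ids if j != i
            for c in (vis_centers[j], ir_centers[j])
        ]
        pos_dist = euclidean(vis_centers[i], ir_centers[i])
        for anchor in (vis_centers[i], ir_centers[i]):
            hardest_neg = min(euclidean(anchor, n) for n in negatives)
            loss += max(0.0, margin + pos_dist - hardest_neg)
    return loss


# Well-separated identities with aligned modality centers give zero loss.
separated = hetero_center_triplet_loss(
    {0: [[0.0, 0.0], [0.0, 0.0]], 1: [[10.0, 0.0]]},
    {0: [[0.0, 0.1]], 1: [[10.0, 0.1]]},
)
```

Because only one center per identity and modality is compared, the number of triplets grows with the number of identities in the batch rather than with the number of samples, which is the usual motivation for center-based variants.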

4.4. Ablation Study

4.4.1. Two-Stream Parameter-Sharing Experiments

4.4.2. Multi-Granularity Pooling Experiments

4.4.3. Joint Loss Function Experiments

4.4.4. Ablation Experiments

5. Conclusions

- In this paper, the middle modality images and the original images are jointly input to a two-stream parameter-sharing network for feature extraction, and the extracted features are then directly concatenated. In the future, we will design a more reasonable parameter-sharing network that focuses on pedestrian feature alignment and avoids introducing noise.

- In this paper, we use a multi-granularity pooling strategy that combines global and local features to improve the representation learning capability of the model. Later, we will study how local features are partitioned and design a better combination of global and local features to further improve the model’s representation learning capability.

- In this paper, we jointly optimize the model with the distribution consistency loss, the label smoothing cross-entropy loss, and the hetero-center triplet loss, but the model converges slowly during training. In the future, we will optimize the loss function so that it converges faster.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Ming, Z.; Zhu, M.; Wang, X.; Zhu, J.; Cheng, J.; Gao, C.; Yang, Y.; Wei, X. Deep learning-based person re-identification methods: A survey and outlook of recent works. Image Vis. Comput. 2022, 119, 104394.

- [2] Yaghoubi, E.; Kumar, A.; Proença, H. SSS-PR: A short survey of surveys in person re-identification. Pattern Recognit. Lett. 2021, 143, 50–57.

- [3] Wu, A.; Zheng, W.S.; Yu, H.X.; Gong, S.; Lai, J. RGB-infrared cross-modality person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5380–5389.

- [4] Zhu, Y.; Yang, Z.; Wang, L.; Zhao, S.; Hu, X.; Tao, D. Hetero-center loss for cross-modality person re-identification. Neurocomputing 2020, 386, 97–109.

- [5] Liu, H.; Tan, X.; Zhou, X. Parameter sharing exploration and hetero-center triplet loss for visible-thermal person re-identification. IEEE Trans. Multimed. 2020, 23, 4414–4425.

- [6] Ye, M.; Lan, X.; Li, J.; Yuen, P. Hierarchical discriminative learning for visible thermal person re-identification. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, AAAI 2018, New Orleans, LA, USA, 2–7 February 2018; pp. 7501–7508.

- [7] Dai, P.; Ji, R.; Wang, H.; Wu, Q.; Huang, Y. Cross-modality person re-identification with generative adversarial training. In Proceedings of the IJCAI: International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; Volume 1, p. 6.

- [8] Wang, G.; Zhang, T.; Cheng, J.; Liu, S. RGB-infrared cross-modality person re-identification via joint pixel and feature alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3623–3632.

- [9] Li, D.; Wei, X.; Hong, X.; Gong, Y. Infrared-visible cross-modal person re-identification with an X modality. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 4610–4617.

- [10] Zhang, Y.; Yan, Y.; Lu, Y.; Wang, H. Towards a unified middle modality learning for visible-infrared person re-identification. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 788–796.

- [11] Sun, Y.; Qi, K.; Chen, W.; Xiong, W.; Li, P.; Liu, Z. Fusional Modality and Distribution Alignment Learning for Visible-Infrared Person Re-Identification. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; IEEE: New York, NY, USA, 2022; pp. 3242–3248.

- [12] Geng, M.; Wang, Y.; Xiang, T.; Tian, Y. Deep transfer learning for person re-identification. arXiv 2016, arXiv:1611.05244.

- [13] Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- [14] Kalayeh, M.M.; Basaran, E.; Gökmen, M.; Kamasak, M.E.; Shah, M. Human semantic parsing for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1062–1071.

- [15] Zhao, H.; Tian, M.; Sun, S.; Shao, J.; Yan, J.; Yi, S.; Wang, X.; Tang, X. Spindle Net: Person re-identification with human body region guided feature decomposition and fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1077–1085.

- [16] Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- [17] Varior, R.R.; Haloi, M.; Wang, G. Gated siamese convolutional neural network architecture for human re-identification. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 791–808.

- [18] Hermans, A.; Beyer, L.; Leibe, B. In defense of the triplet loss for person re-identification. arXiv 2017, arXiv:1703.07737.

- [19] Chen, W.; Chen, X.; Zhang, J.; Huang, K. Beyond triplet loss: A deep quadruplet network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 403–412.

- [20] Xia, D.; Liu, H.; Xu, L.; Wang, L. Visible-infrared person re-identification with data augmentation via cycle-consistent adversarial network. Neurocomputing 2021, 443, 35–46.

- [21] Almahairi, A.; Rajeshwar, S.; Sordoni, A.; Bachman, P.; Courville, A. Augmented CycleGAN: Learning many-to-many mappings from unpaired data. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 195–204.

- [22] Luo, H.; Gu, Y.; Liao, X.; Lai, S.; Jiang, W. Bag of tricks and a strong baseline for deep person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- [23] Wang, G.; Yuan, Y.; Chen, X.; Li, J.; Zhou, X. Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 274–282.

- [24] Munaro, M.; Fossati, A.; Basso, A.; Menegatti, E.; Van Gool, L. One-shot person re-identification with a consumer depth camera. In Person Re-Identification; Springer: London, UK, 2014; pp. 161–181.

- [25] Hao, Y.; Wang, N.; Li, J.; Gao, X. HSME: Hypersphere manifold embedding for visible thermal person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8385–8392.

- [26] Wang, Z.; Wang, Z.; Zheng, Y.; Chuang, Y.Y.; Satoh, S.I. Learning to reduce dual-level discrepancy for infrared-visible person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 618–626.

- [27] Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- [28] Ye, M.; Shen, J.; Crandall, D.J.; Shao, L.; Luo, J. Dynamic dual-attentive aggregation learning for visible-infrared person re-identification. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVII 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 229–247.

- [29] Fu, C.; Hu, Y.; Wu, X.; Shi, H.; Mei, T.; He, R. CM-NAS: Cross-modality neural architecture search for visible-infrared person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11823–11832.

- [30] Liu, H.; Chai, Y.; Tan, X.; Li, D.; Zhou, X. Strong but simple baseline with dual-granularity triplet loss for visible-thermal person re-identification. IEEE Signal Process. Lett. 2021, 28, 653–657.

- [31] Zhang, Q.; Lai, C.; Liu, J.; Huang, N.; Han, J. FMCNet: Feature-level modality compensation for visible-infrared person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 7349–7358.

| Methods | RegDB VtI R-1 | RegDB VtI mAP | RegDB ItV R-1 | RegDB ItV mAP | SYSU-MM01 All Search R-1 | SYSU-MM01 All Search mAP | SYSU-MM01 Indoor Search R-1 | SYSU-MM01 Indoor Search mAP |
|---|---|---|---|---|---|---|---|---|
| HCML [6] | 24.44 | 20.08 | 21.70 | 22.24 | 14.32 | 16.16 | 24.52 | 30.08 |
| HSME [25] | 41.34 | 38.82 | 40.67 | 37.50 | 20.68 | 23.12 | - | - |
| D2RL [26] | 43.40 | 44.10 | - | - | 28.90 | 29.20 | - | - |
| AliGAN [8] | 57.90 | 53.60 | 56.30 | 53.40 | 42.40 | 40.70 | 45.90 | 54.30 |
| HC [4] | - | - | - | - | 56.96 | 54.95 | 59.74 | 64.91 |
| HcTri [5] | 91.05 | 83.28 | 89.30 | 81.46 | 61.68 | 57.51 | 63.41 | 68.17 |
| X-modality [9] | 62.21 | 60.18 | - | - | 49.92 | 50.73 | - | - |
| DDAG [28] | 69.34 | 63.46 | 68.06 | 61.80 | 54.75 | 53.02 | 61.02 | 67.98 |
| AGW [27] | 70.05 | 66.37 | - | - | 47.50 | 47.65 | 54.17 | 62.97 |
| CM-NAS [29] | 84.54 | 80.32 | 82.57 | 78.31 | 61.99 | 60.02 | 67.01 | 72.95 |
| DGTL [30] | 83.92 | 73.78 | 81.59 | 71.65 | 57.34 | 55.13 | 63.11 | 69.20 |
| FMCNet [31] | 89.12 | 84.43 | 88.38 | 83.86 | 66.34 | 62.51 | 68.15 | 74.09 |
| Ours | 94.18 | 86.54 | 91.16 | 83.67 | 71.27 | 68.11 | 77.64 | 81.06 |
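The R-1 and mAP columns in the comparison above are the standard retrieval metrics for re-identification. As a minimal sketch, Rank-k accuracy is the fraction of queries with at least one correct gallery match among the k nearest results, and mAP is the mean over queries of the average precision of the ranked gallery list. The function name and input layout are assumptions for illustration, and the camera-based gallery filtering used by dataset-specific protocols such as that of SYSU-MM01 is deliberately omitted.

```python
def rank_k_and_map(dist, query_ids, gallery_ids, k=1):
    """Return (Rank-k accuracy, mAP) from a query x gallery distance matrix.

    dist[q][g] is the distance between query q and gallery item g.
    Queries without any correct gallery match are skipped.
    """
    hits = 0
    average_precisions = []
    for q, row in enumerate(dist):
        # Gallery indices sorted from nearest to farthest.
        order = sorted(range(len(row)), key=row.__getitem__)
        matches = [gallery_ids[g] == query_ids[q] for g in order]
        if not any(matches):
            continue
        hits += any(matches[:k])
        found = 0
        precisions = []
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precisions.append(found / rank)
        average_precisions.append(sum(precisions) / len(precisions))
    if not average_precisions:
        raise ValueError("no query has a correct match in the gallery")
    n = len(average_precisions)
    return hits / n, sum(average_precisions) / n


# Two queries of identity 1 against a gallery with identities 1, 2, 1.
rank1, mean_ap = rank_k_and_map(
    dist=[[0.1, 0.9, 0.5], [0.8, 0.2, 0.3]],
    query_ids=[1, 1],
    gallery_ids=[1, 2, 1],
)
```

In this toy case the first query ranks both correct items first, while the second query ranks a wrong identity first, so Rank-1 is 0.5 and mAP reflects the lower average precision of the second ranking.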

| Experiments | Modality-Specific Feature Extractor | Modality-Shared Feature Embedder | RegDB R-1 | RegDB mAP | SYSU-MM01 R-1 | SYSU-MM01 mAP |
|---|---|---|---|---|---|---|
| 1 | stage 0 | stage 1–stage 4 | 89.94 | 83.25 | 67.68 | 64.82 |
| 2 | stage 0–stage 1 | stage 2–stage 4 | 90.24 | 83.36 | 68.85 | 66.15 |
| 3 | stage 0–stage 2 | stage 3–stage 4 | 90.31 | 83.48 | 69.53 | 66.90 |
| 4 | stage 0–stage 3 | stage 4 | 86.86 | 80.22 | 64.32 | 62.54 |

| Experiments | Options checked (Multi-Granularity / GAP / GeM) | RegDB R-1 | RegDB mAP | SYSU-MM01 R-1 | SYSU-MM01 mAP |
|---|---|---|---|---|---|
| 1 | ✓ | 89.94 | 83.25 | 67.68 | 64.82 |
| 2 | ✓ | 90.60 | 84.10 | 67.94 | 65.27 |
| 3 | ✓ ✓ | 89.68 | 83.24 | 69.47 | 66.37 |
| 4 | ✓ ✓ | 90.03 | 83.78 | 68.54 | 66.74 |

| Experiments | DCL-LS-T | DCL-LS-HCT | RegDB R-1 | RegDB mAP | SYSU-MM01 R-1 | SYSU-MM01 mAP |
|---|---|---|---|---|---|---|
| 1 | ✓ | | 89.94 | 83.25 | 67.68 | 64.82 |
| 2 | | ✓ | 91.68 | 83.48 | 69.11 | 65.87 |

| Baseline | PS | MGP | DCL-LS-HCT | All Search R-1 | All Search R-10 | All Search R-20 | All Search mAP | Indoor Search R-1 | Indoor Search R-10 | Indoor Search R-20 | Indoor Search mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | | 67.68 | 94.94 | 98.45 | 64.82 | 74.26 | 98.09 | 99.70 | 78.49 |
| ✓ | ✓ | | | 69.54 | 96.04 | 98.91 | 66.90 | 76.69 | 98.72 | 99.75 | 80.82 |
| ✓ | | ✓ | | 69.47 | 95.95 | 98.86 | 66.37 | 76.18 | 98.14 | 99.63 | 80.24 |
| ✓ | | | ✓ | 69.11 | 95.53 | 98.46 | 65.87 | 75.59 | 98.15 | 99.53 | 79.63 |
| ✓ | ✓ | ✓ | ✓ | 71.27 | 96.11 | 98.72 | 68.11 | 77.64 | 98.16 | 99.42 | 81.06 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, L.; Guan, Z.; Dai, X.; Gao, H.; Lu, Y. A Cross-Modality Person Re-Identification Method Based on Joint Middle Modality and Representation Learning. Electronics 2023, 12, 2687. https://doi.org/10.3390/electronics12122687
