A Multiformalism-Based Model for Performance Evaluation of Green Data Centres

Abstract

1. Introduction

- Storage: Servers are responsible for storing vast amounts of data, from mission-critical business data to personal photos and videos. Without servers, data centres would not be able to store and manage the enormous amount of data that is generated every day.

- Processing: Servers also provide the processing power required to perform complex operations on the data stored in the data centre. This includes running applications, analysing data, and performing calculations.

- Scalability: Servers can be easily scaled up or down depending on the needs of the data centre. As the amount of data grows, additional servers can be added to the data centre to handle the increased workload.

- Reliability: Servers are designed to be highly reliable and available. They are built with redundant components and are monitored 24/7 to ensure they are functioning correctly.

- Security: Servers are also responsible for ensuring the security of the data stored in the data centre. They provide features like encryption, access controls, and firewalls to protect against unauthorised access and cyber threats.

2. Related Work

2.1. Hybrid ARM/INTEL Architectures

2.2. Modelling Data Centres

2.3. Multiformalism Modelling

3. Case Study

3.1. Introduction

3.2. A Green Data Centre

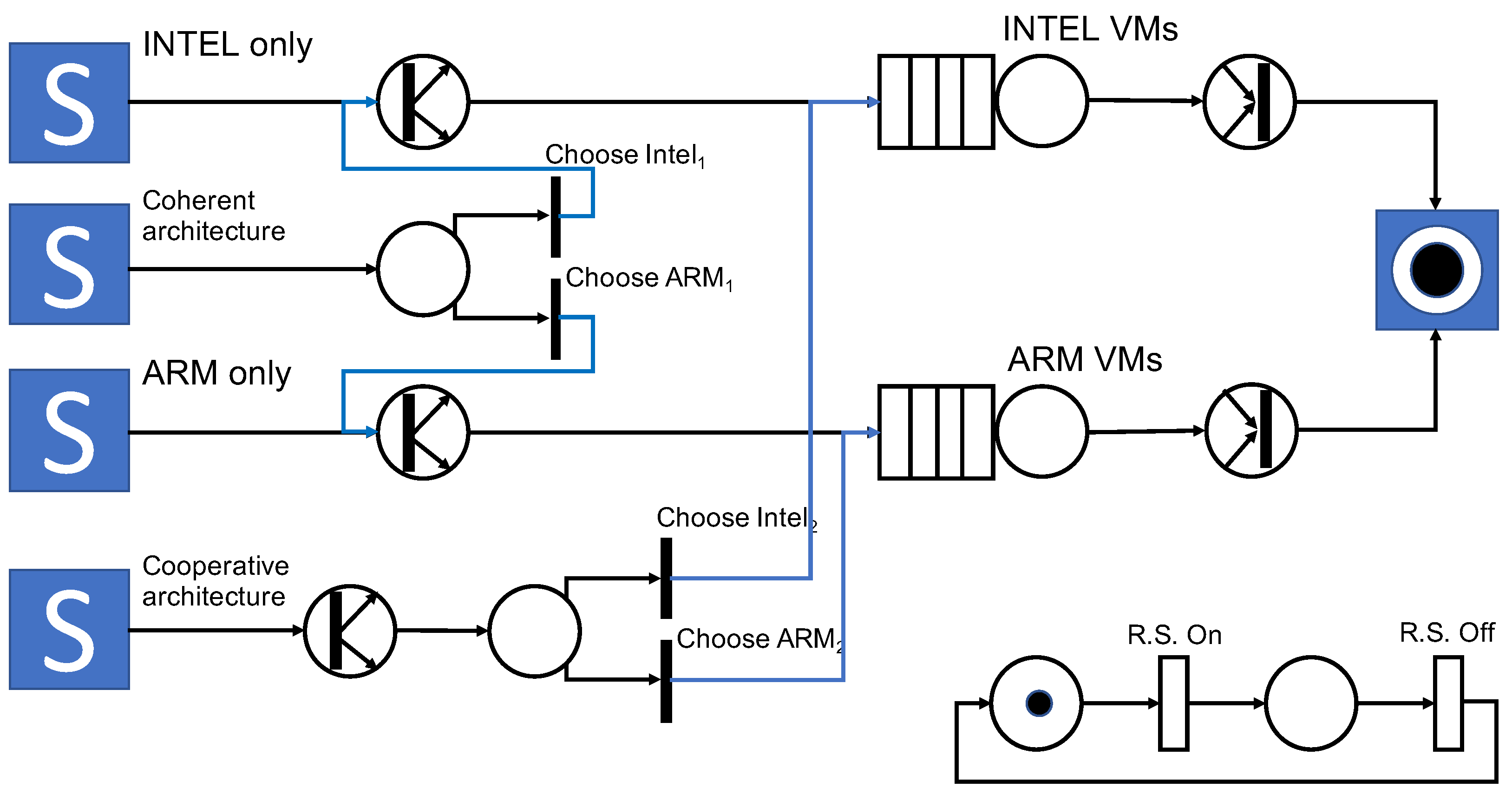

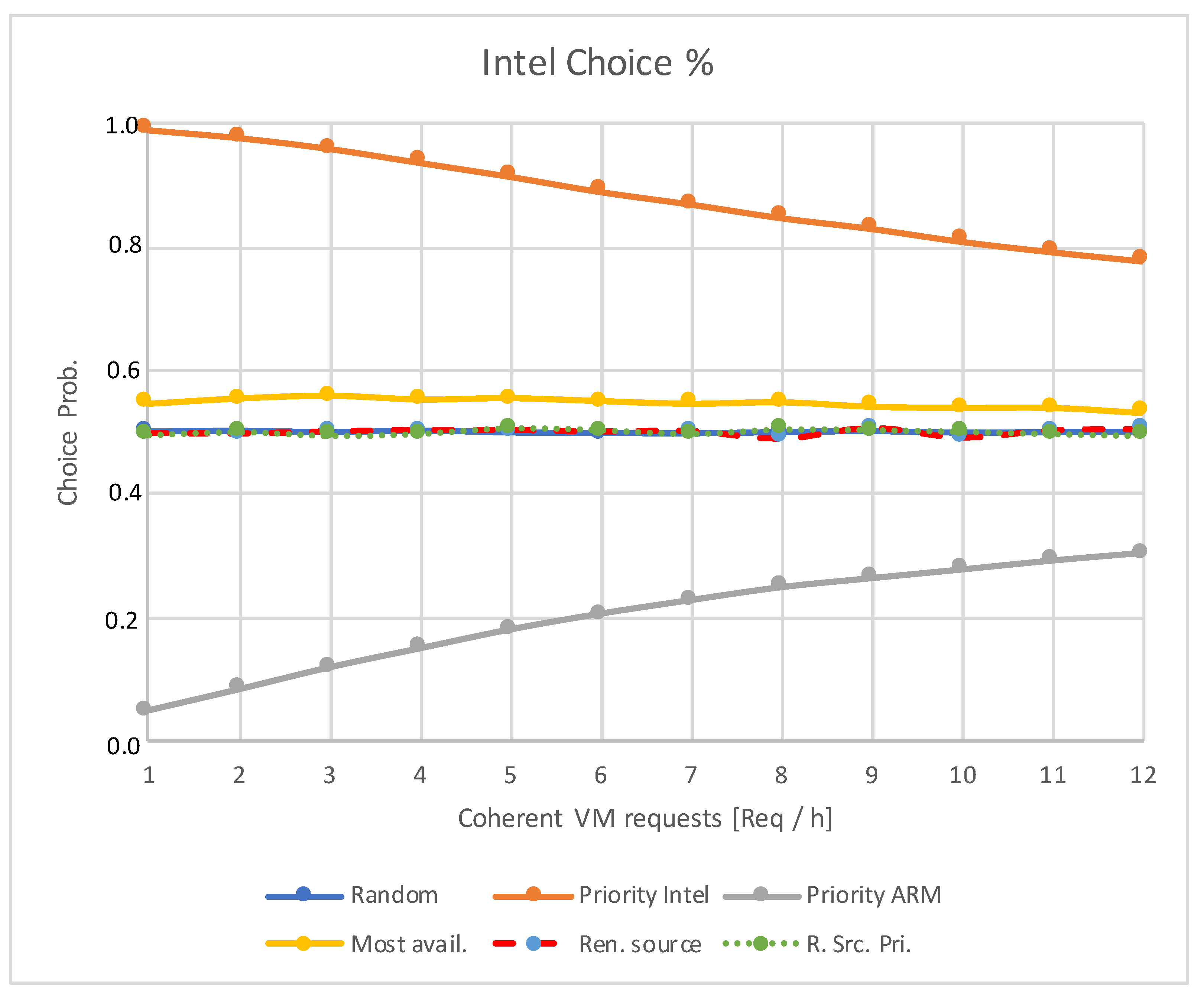

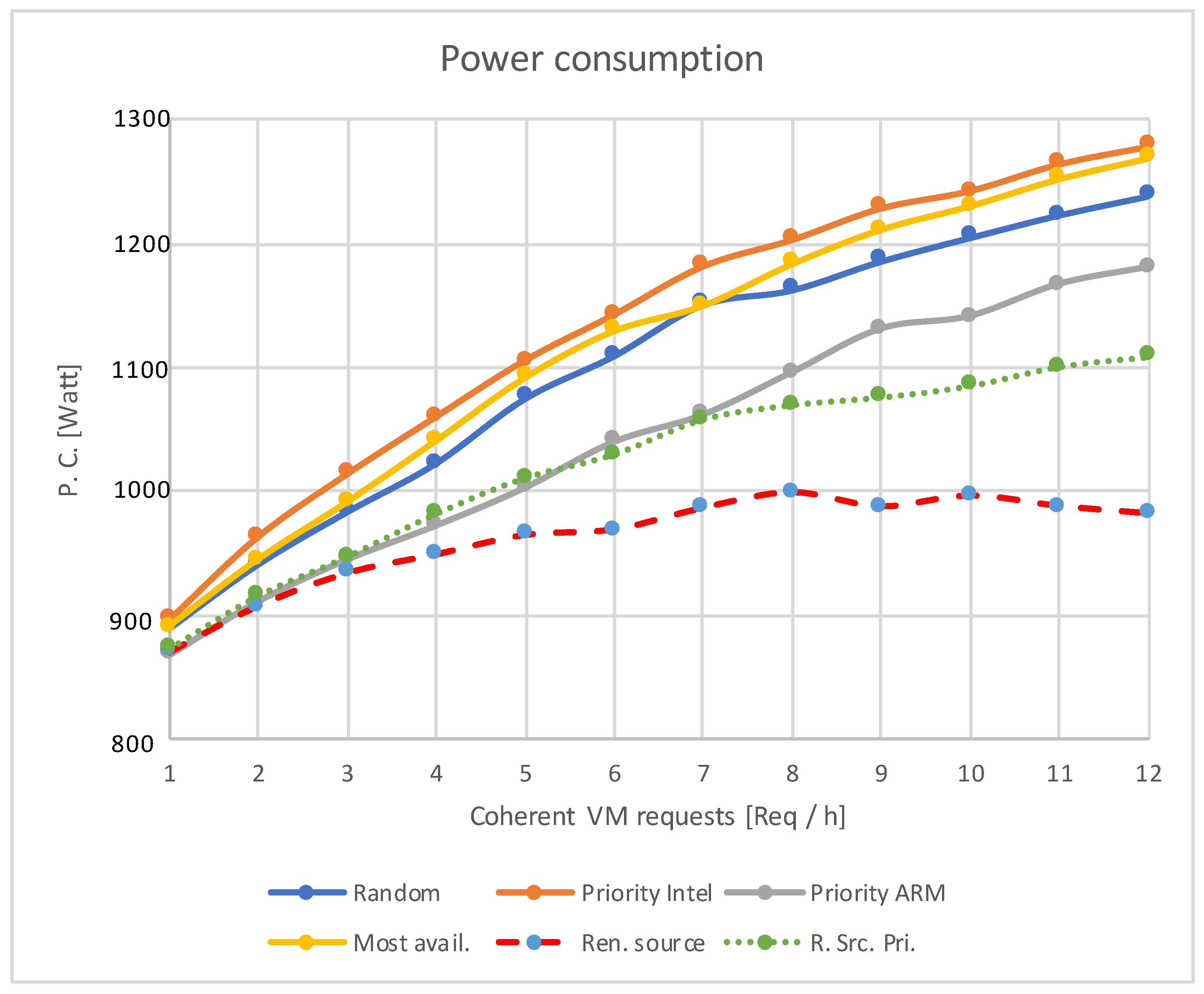

- INTEL priority and ARM priority: these policies take a VM of the preferred type whenever one is available, regardless of how many VMs of the other type are free; otherwise, they fall back to the other architecture.

- Random policy: if VMs of both types are available, one type is chosen at random with equal probability. If a resource type is not available, the policy selects the other one.

- A policy that selects the resource with the most availability (if there are more ARM-based than INTEL-based VMs, then ARM-VMs will be chosen, and vice versa when there are more INTEL-based VMs).

- A further policy considers the added solar panel. It then selects the INTEL architecture when the renewable source is providing energy, and it reroutes the VMs to the ARM architecture when only the main source of electricity is available.

- The last policy is a mixture of three of the previous ones. When the renewable source is available, it gives priority to INTEL VMs. However, if there are no more INTEL VMs available, it executes a VM on an ARM architecture. Conversely, when no renewable energy is available, this policy gives priority to ARM VMs, resorting to INTEL VMs only if the former are all in use.
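The six policies listed above can be sketched as a single selection function. This is a minimal illustration and not the authors' implementation: the names `Policy`, `choose_architecture`, and `_prefer` are hypothetical, returning `None` when no admissible VM is free is an assumption about how an unserved request is represented, and the random tie-break in the most-available policy is taken from the model description in Section 4.

```python
import random
from enum import Enum


class Policy(Enum):
    INTEL_PRIORITY = "intel_priority"
    ARM_PRIORITY = "arm_priority"
    RANDOM = "random"
    MOST_AVAILABLE = "most_available"
    RENEWABLE_STRICT = "renewable_strict"      # solar-aware, no fallback
    RENEWABLE_FALLBACK = "renewable_fallback"  # solar-aware with fallback


def _prefer(first, second, free):
    """Return `first` if it has a free VM, else `second` if it has one, else None."""
    if free[first] > 0:
        return first
    if free[second] > 0:
        return second
    return None


def choose_architecture(policy, free_intel, free_arm, renewable_on=False, rng=random):
    """Pick "INTEL", "ARM", or None (assumption: no admissible VM is free)."""
    free = {"INTEL": free_intel, "ARM": free_arm}
    if policy is Policy.INTEL_PRIORITY:
        return _prefer("INTEL", "ARM", free)
    if policy is Policy.ARM_PRIORITY:
        return _prefer("ARM", "INTEL", free)
    if policy is Policy.RANDOM:
        if free_intel > 0 and free_arm > 0:
            return rng.choice(["INTEL", "ARM"])  # equal probabilities
        return _prefer("INTEL", "ARM", free)     # only one type (or none) left
    if policy is Policy.MOST_AVAILABLE:
        if free_intel == free_arm:
            # Tie: random choice, unless nothing is free at all.
            return rng.choice(["INTEL", "ARM"]) if free_intel > 0 else None
        return "INTEL" if free_intel > free_arm else "ARM"
    if policy is Policy.RENEWABLE_STRICT:
        target = "INTEL" if renewable_on else "ARM"
        return target if free[target] > 0 else None
    if policy is Policy.RENEWABLE_FALLBACK:
        if renewable_on:
            return _prefer("INTEL", "ARM", free)
        return _prefer("ARM", "INTEL", free)
    raise ValueError(f"unknown policy: {policy}")
```

For example, `choose_architecture(Policy.RENEWABLE_FALLBACK, 0, 4, renewable_on=True)` falls back to ARM because no INTEL VM is free, whereas the strict variant returns `None` in the same state.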

4. The Multiformalism Model

Introduction

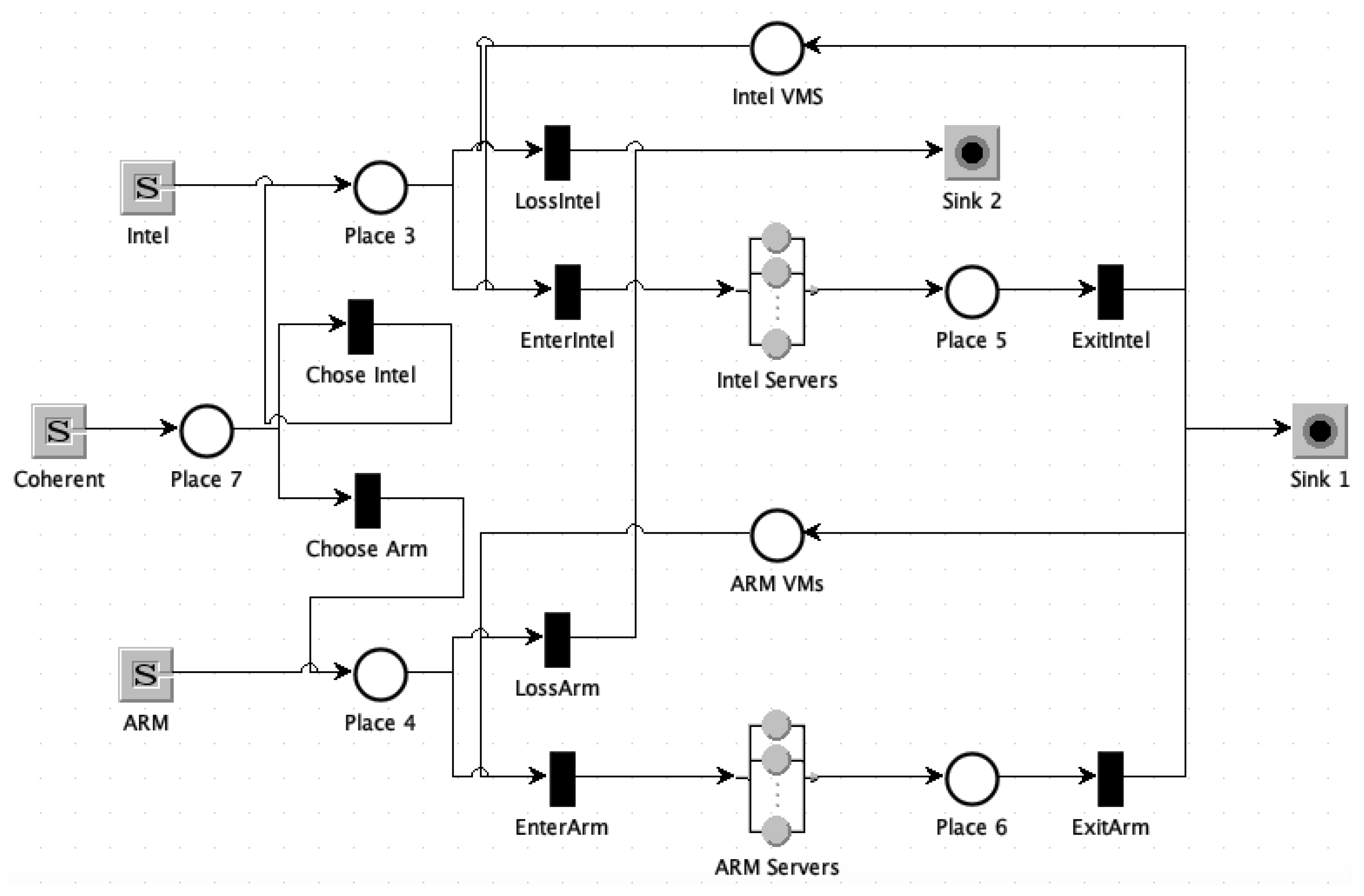

- In the INTEL (resp. ARM) priority policy, the transition selecting an INTEL (resp. ARM) VM fires if there are INTEL (resp. ARM) virtual machines available; otherwise, the transition selecting the other architecture fires.

- In the random policy, whenever VMs of both types are available, the two selection transitions fire randomly with equal probabilities. However, if one of the resources is missing, only the transition for the other resource will fire.

- In the most available resource policy, only the transition belonging to the type with more VMs is enabled. If both architectures are characterised by the same number of VMs, the choice is random.

- The fifth policy alternates between the two priority policies based on the state of the renewable source (submodel in the bottom right of Figure 1). In particular, it inhibits the selection of the ARM architecture when the renewable source is on: in this way, VMs are routed to the INTEL architecture. Conversely, when the renewable source is off, the selection of INTEL VMs is inhibited.

- The last policy is realised by duplicating the immediate transitions that perform the choice, implementing both the INTEL and ARM priority policies. The inhibitor arc allows the proper pair of transitions to be enabled, according to the state of the renewable source.
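The role of the inhibitor arcs in the fifth policy can be illustrated with a small enabling check. This is only a sketch under stated assumptions: the place names (`waiting`, `free_intel`, `free_arm`, `renewable_on`, `renewable_off`) and transition names (`select_intel`, `select_arm`) are hypothetical and do not come from the model in the figure; the enabling rule itself is the standard one for Petri nets with inhibitor arcs.

```python
from dataclasses import dataclass, field


@dataclass
class Transition:
    """An immediate transition with ordinary input arcs and inhibitor arcs."""
    name: str
    inputs: dict = field(default_factory=dict)    # place name -> tokens required
    inhibitors: set = field(default_factory=set)  # places that must hold no tokens

    def enabled(self, marking):
        """Enabled iff every input place has enough tokens and every
        inhibitor place is empty."""
        has_inputs = all(marking.get(p, 0) >= n for p, n in self.inputs.items())
        not_inhibited = all(marking.get(p, 0) == 0 for p in self.inhibitors)
        return has_inputs and not_inhibited


# Fifth policy (hypothetical names): ARM selection is inhibited while the
# renewable source is on, INTEL selection while it is off.
select_intel = Transition("select_intel", {"waiting": 1, "free_intel": 1}, {"renewable_off"})
select_arm = Transition("select_arm", {"waiting": 1, "free_arm": 1}, {"renewable_on"})


def enabled_names(transitions, marking):
    """Names of the transitions enabled in the given marking."""
    return [t.name for t in transitions if t.enabled(marking)]


source_on = {"waiting": 1, "free_intel": 2, "free_arm": 2,
             "renewable_on": 1, "renewable_off": 0}
source_off = dict(source_on, renewable_on=0, renewable_off=1)

print(enabled_names([select_intel, select_arm], source_on))
print(enabled_names([select_intel, select_arm], source_off))
```

With a token in the `renewable_on` place only the INTEL selection is enabled, and moving the token to `renewable_off` enables only the ARM selection, which mirrors the routing described for the fifth policy.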

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Issue | Risk |
|---|---|
| Cooling and energy consumption | High electricity bills and environmental concerns |
| Security | Unauthorised access |
| Maintenance and repair | Time-consuming and possibly expensive process |
| Capacity and scalability | Significant investments in new hardware and infrastructure |
| Disaster recovery | Profit loss without a proper recovery plan and robust architecture |

| Component | Description |
|---|---|
| Servers | The physical computer systems that perform data processing and storage. |
| Networking equipment | Includes switches, routers, firewalls, and other components connecting servers to each other and to the external world. |
| Storage devices | Used to store vast amounts of data, such as hard disk drives (HDDs), solid-state drives (SSDs), and possibly tape drives. |
| Power and cooling systems | Data centres require a large amount of power to work. As a result, they generate a significant amount of heat; therefore, cooling systems are necessary to prevent overheating. |
| Backup and redundancy systems | To ensure that data are available on a 24/7 basis, data centres often have backup power supplies, backup networking equipment, and redundant storage devices and servers. |
| Physical security | Stored data contain sensitive and valuable information, so physical security measures, such as biometric access controls, should be used. |
| Management software | Data centres require software to manage and monitor the networking equipment, servers, and storage devices. |

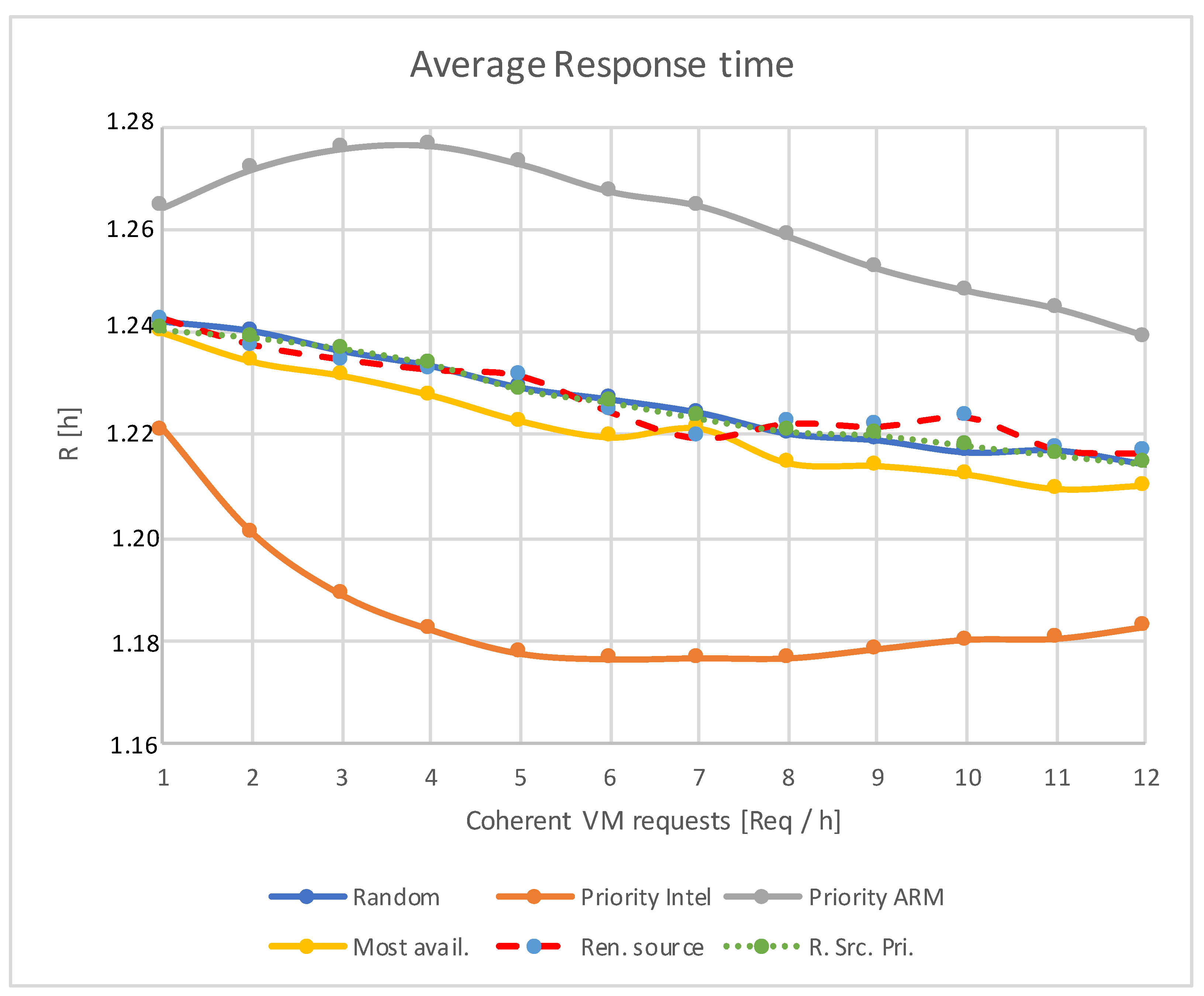

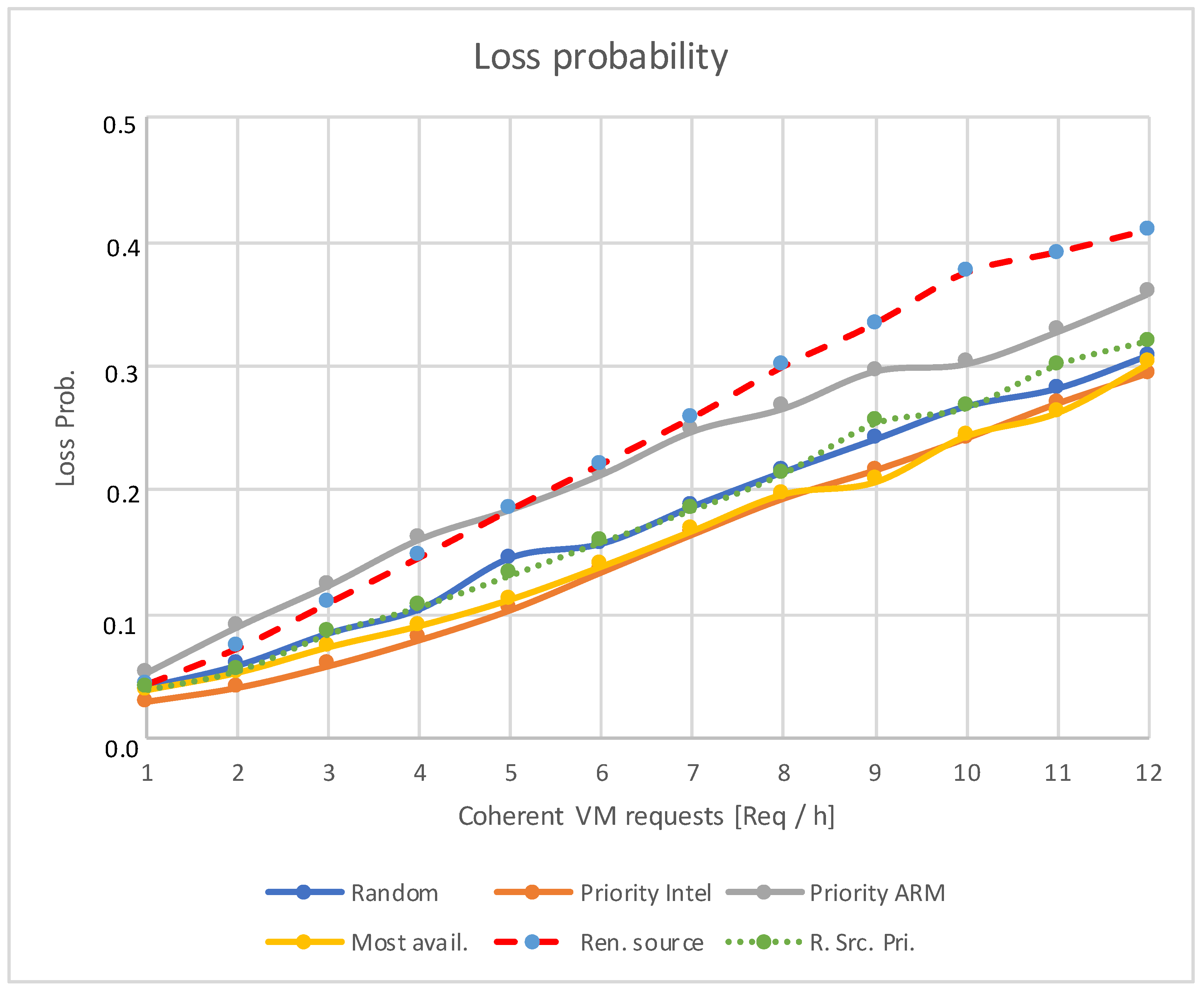

| Performance Index | Description |
|---|---|
| Probability of choosing one architecture over another | Models the situation in which INTEL servers are not available to satisfy the demand and, consequently, ARM servers are allocated. |
| Power consumption | The power consumption, which depends on the chosen policy. For example, if INTEL servers are preferred, the energy consumption tends to be higher. |
| Response time | The total time a request spends in the system. |
| Loss probability | The probability of losing access to a VM. |

| Parameter | Value |
|---|---|
| Arrival rate: INTEL | 4 VM/h |
| Arrival rate: ARM | 4 VM/h |
| Arrival rate: Coherent | between 1 and 12 VM/h |
| Service time: INTEL job on INTEL VM | 1 h |
| Service time: ARM job on ARM VM | 1.5 h |
| Service time: job (coherent) on INTEL VM | 1 h |
| Service time: job (coherent) on ARM VM | 1.5 h |
| Average ON time of renewable source | 12 h |
| Average OFF time of renewable source | 12 h |
| Baseline power consumption of all the VMs | 600 W |
| Utilisation-dependent power consumption per INTEL VM | 150 W |
| Utilisation-dependent power consumption per ARM VM | 80 W |
| Number of ARM VMs | 10 |
| Number of INTEL VMs | 10 |
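To give a feel for the parameter values, the following sketch computes two back-of-the-envelope quantities. Both rest on assumptions that are not stated in the table: `power_watts` assumes that total power is the baseline plus a fixed increment per busy VM, and `erlang_b` treats each architecture in isolation as a classic loss system (Poisson arrivals, no queue, no coherent jobs, no allocation policy), which is a much simpler setting than the multiformalism model. The function names are hypothetical.

```python
def power_watts(busy_intel, busy_arm, baseline=600.0, per_intel=150.0, per_arm=80.0):
    """Assumed linear power model: baseline plus a fixed cost per busy VM."""
    return baseline + per_intel * busy_intel + per_arm * busy_arm


def erlang_b(offered_load, servers):
    """Blocking probability of an M/M/c/c loss system (Erlang B formula),
    computed with the standard numerically stable recursion."""
    blocking = 1.0  # with zero servers every request is lost
    for k in range(1, servers + 1):
        blocking = offered_load * blocking / (k + offered_load * blocking)
    return blocking


# Offered load = arrival rate x mean service time (native jobs only).
intel_load = 4 * 1.0  # 4 VM/h x 1 h   = 4 Erlang
arm_load = 4 * 1.5    # 4 VM/h x 1.5 h = 6 Erlang

print("Power with all 20 VMs busy (W):", power_watts(10, 10))
print("Isolated INTEL loss probability:", erlang_b(intel_load, 10))
print("Isolated ARM loss probability:", erlang_b(arm_load, 10))
```

Under the assumed linear model, the power drawn ranges from 600 W with all VMs idle to 2900 W with all 20 VMs busy. The Erlang B values are only a rough reference point, since coherent jobs and the allocation policies couple the two pools.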

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barbierato, E.; Manini, D.; Gribaudo, M. A Multiformalism-Based Model for Performance Evaluation of Green Data Centres. Electronics 2023, 12, 2169. https://doi.org/10.3390/electronics12102169