Automatic Modulation Classification Based on CNN and Multiple Kernel Maximum Mean Discrepancy

Abstract

1. Introduction

2. Related Work

2.1. Signal Model

2.2. Multiple Kernel Variant of Maximum Mean Discrepancies (MK-MMD)
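
The quantity named in this heading can be illustrated with a NumPy sketch that estimates the squared MK-MMD between a batch of source features and a batch of target features. It follows the multi-kernel formulation of Long et al. [34]: the combined kernel is a weighted sum of Gaussian base kernels, k = Σ_u β_u k_u. Because the squared MMD is linear in the kernel, the squared MK-MMD is the same weighted sum of per-kernel squared MMDs. Several choices here are assumptions made for this example only: the five bandwidths in `sigmas`, the equal weights β_u, and the quadratic-time biased estimator. The five-kernel count only echoes the CNN-5K-MMD label in the result tables. This is not the authors' implementation.

```python
import numpy as np


def gaussian_kernel_matrix(x: np.ndarray, y: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian (RBF) kernel exp(-||x_i - y_j||^2 / (2 * sigma^2)) for every pair of rows."""
    # Pairwise squared Euclidean distances, shape (len(x), len(y)).
    sq_dists = (
        np.sum(x**2, axis=1)[:, None]
        + np.sum(y**2, axis=1)[None, :]
        - 2.0 * (x @ y.T)
    )
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negatives caused by round-off
    return np.exp(-sq_dists / (2.0 * sigma**2))


def mk_mmd2(source, target, sigmas=(0.25, 0.5, 1.0, 2.0, 4.0), weights=None) -> float:
    """Biased (V-statistic) estimate of the squared MK-MMD between two feature batches.

    Assumption: Gaussian base kernels with hypothetical bandwidths `sigmas` and,
    unless `weights` is given, equal kernel weights that sum to one.
    """
    if weights is None:
        weights = [1.0 / len(sigmas)] * len(sigmas)
    total = 0.0
    for beta, sigma in zip(weights, sigmas):
        k_ss = gaussian_kernel_matrix(source, source, sigma)
        k_tt = gaussian_kernel_matrix(target, target, sigma)
        k_st = gaussian_kernel_matrix(source, target, sigma)
        # Squared MMD for one kernel: E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)]
        total += beta * (k_ss.mean() + k_tt.mean() - 2.0 * k_st.mean())
    return float(total)


# Usage with synthetic 16-dimensional "features" (illustrative data only).
rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(128, 16))
tgt_same = rng.normal(0.0, 1.0, size=(128, 16))   # same distribution as src
tgt_shift = rng.normal(1.0, 1.0, size=(128, 16))  # mean shifted by 1 in every dimension
print(mk_mmd2(src, tgt_same), mk_mmd2(src, tgt_shift))
```

Identical batches give exactly zero, and a mean shift between the batches raises the estimate. In DAN-style adaptation networks [34], a term of this form is computed on hidden-layer features and added to the source classification loss as a penalty.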

3. Methodology

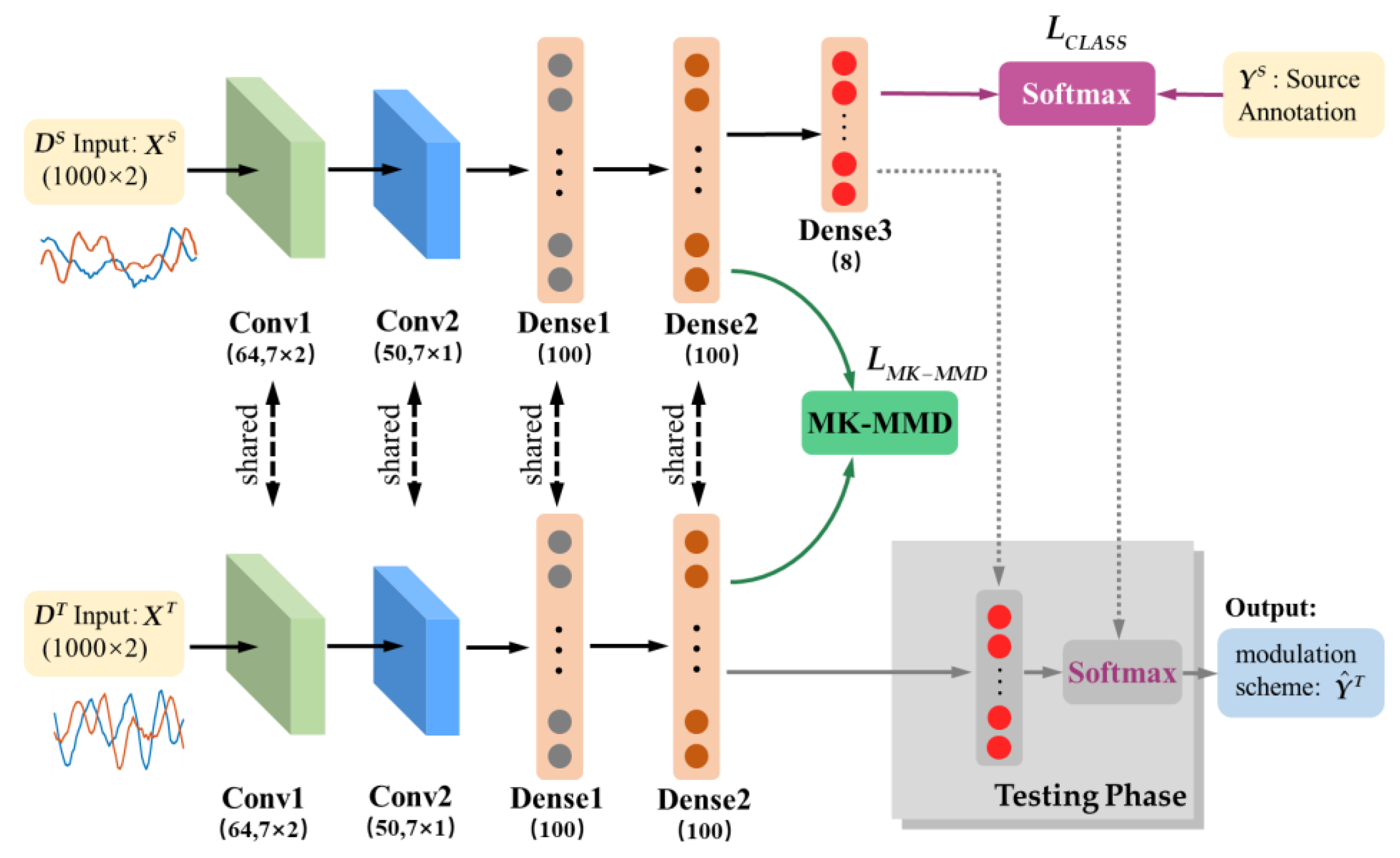

3.1. The Proposed CNN-MK-MMD Network for AMC

3.2. Loss Function and Training Strategy

4. Experiments and Result Analysis

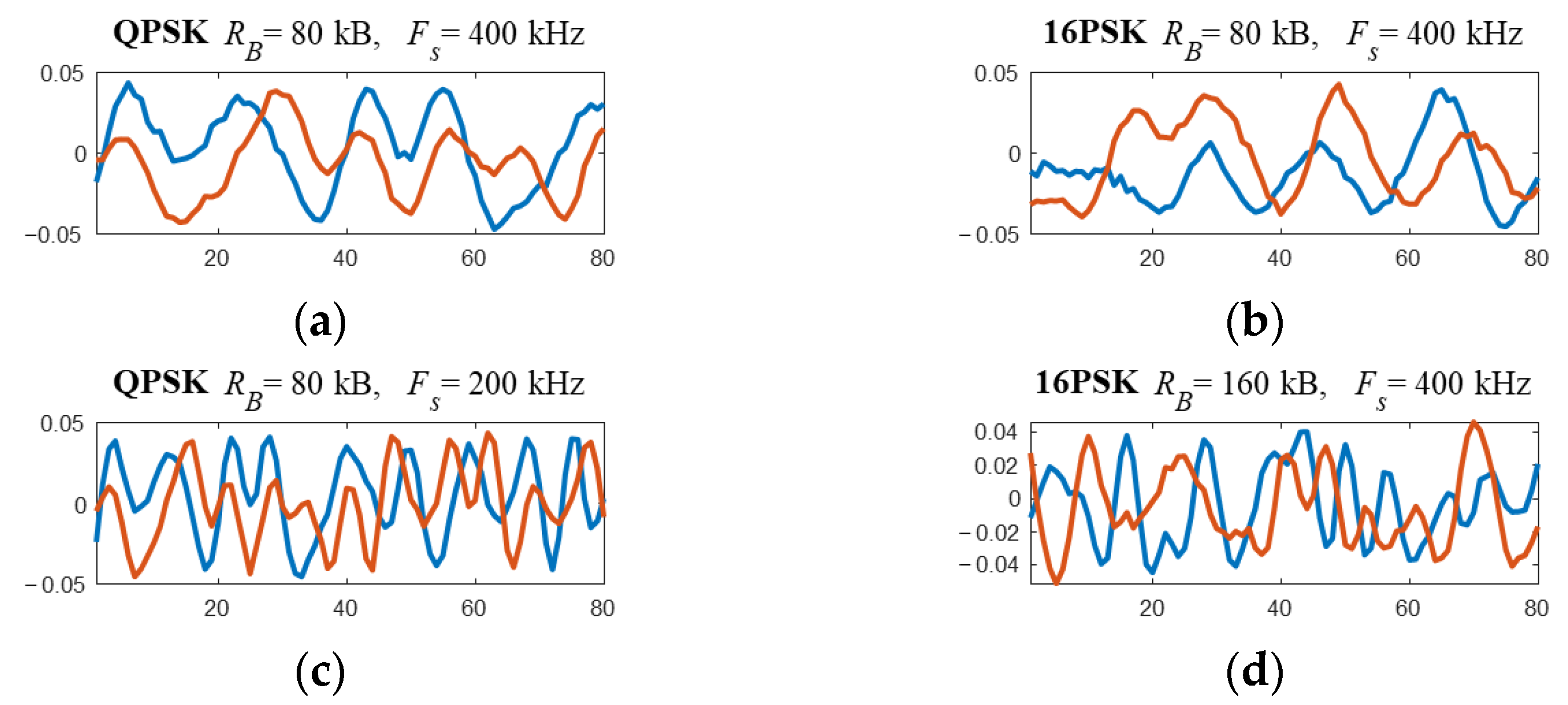

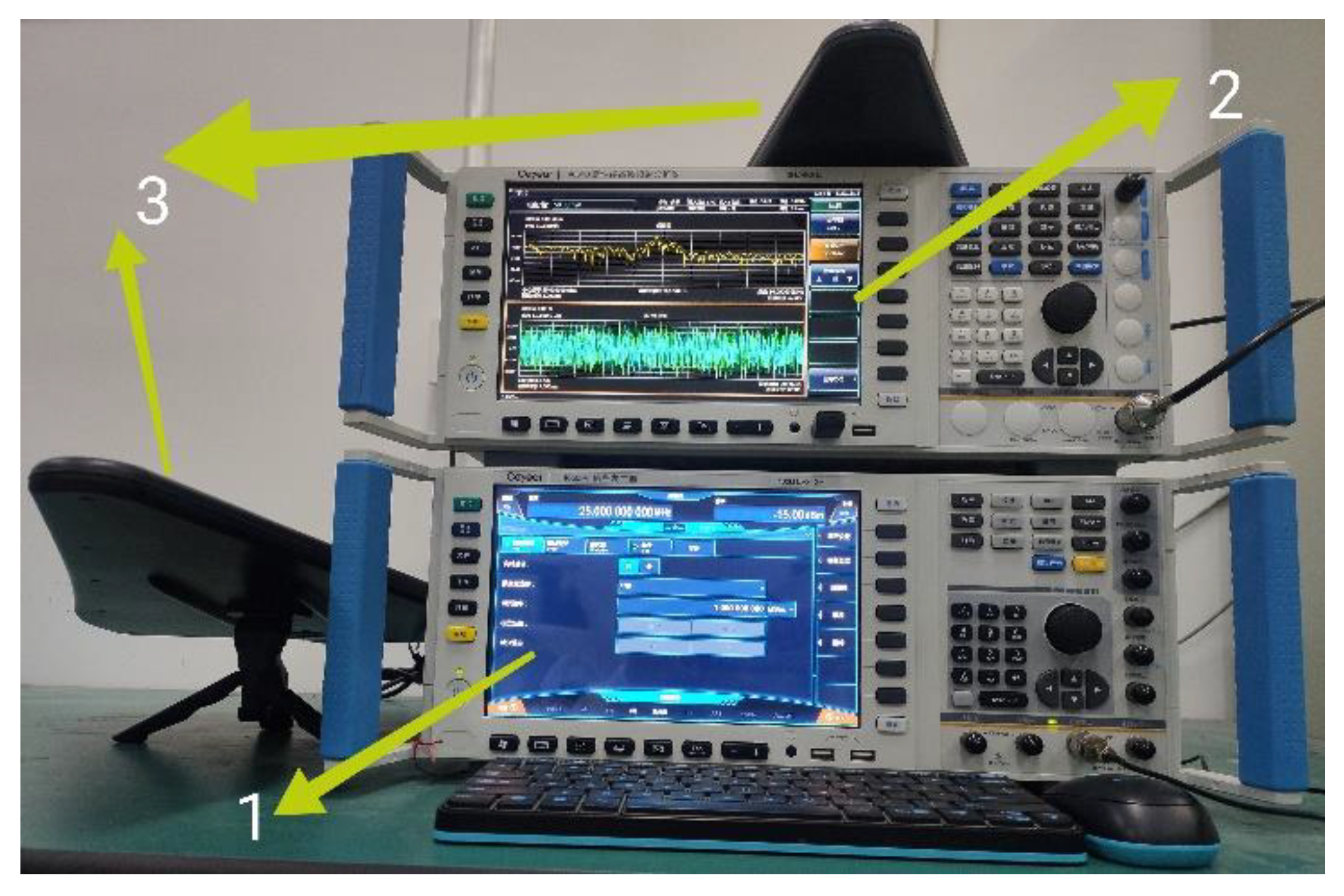

4.1. Dataset and Experimental Settings

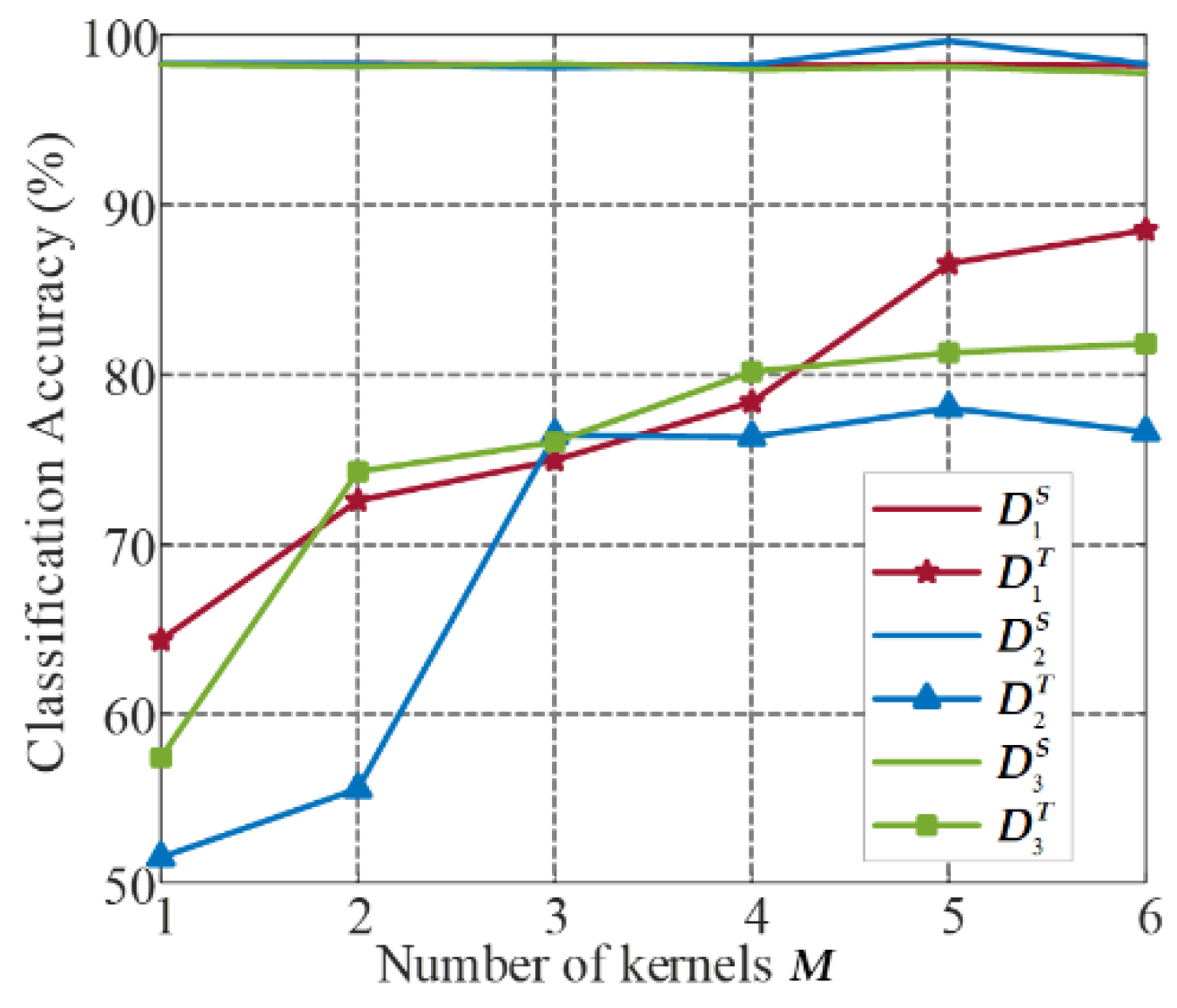

4.2. Determination of Number of Kernels

4.3. Performance Comparison with State-of-the-Art Methods

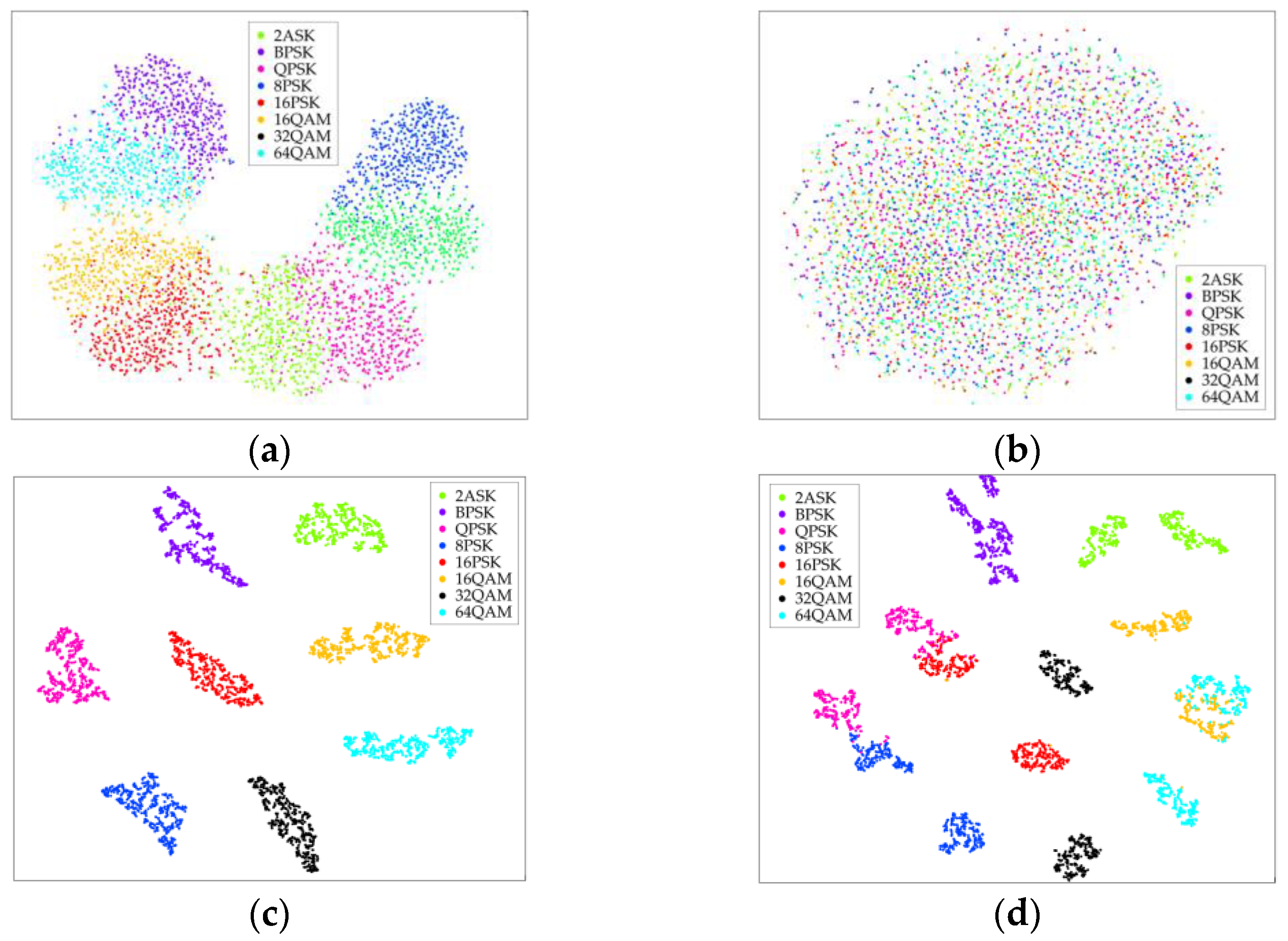

4.4. Visualization of Confusion Matrix and Feature Distribution

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Dobre, O.A.; Abdi, A.; Bar-Ness, Y.; Su, W. Survey of automatic modulation classification techniques: Classical approaches and new trends. IET Commun. 2007, 1, 137–156.
2. Huang, S.; Yao, Y.; Wei, Z.; Feng, Z.; Zhang, P. Automatic modulation classification of overlapped sources using multiple cumulants. IEEE Trans. Veh. Technol. 2017, 66, 6089–6101.
3. Han, L.; Gao, F.; Li, Z.; Dobre, O.A. Low complexity automatic modulation classification based on order-statistics. IEEE Trans. Wirel. Commun. 2017, 16, 400–411.
4. Jafar, N.; Paeiz, A.; Farzaneh, A. Automatic modulation classification using modulation fingerprint extraction. J. Syst. Eng. Electron. 2021, 32, 799–810.
5. Wu, H.; Hua, X. A review of digital signal modulation methods based on wavelet transform. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020; pp. 1123–1128.
6. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
7. Tang, Y.; Eliasmith, C. Deep networks for robust visual recognition. In Proceedings of the 27th International Conference on International Conference on Machine Learning, Omnipress, Haifa, Israel, 21–24 June 2010; pp. 1055–1062.
8. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.
9. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.-R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97.
10. Dahl, G.E.; Yu, D.; Deng, L.; Acero, A. Context-dependent pre-trained deep neural networks for large-vocabulary speech recognition. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 30–42.
11. Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent trends in deep learning based natural language processing [Review Article]. IEEE Comput. Intell. Mag. 2018, 13, 55–75.
12. O’Shea, T.J.; Corgan, J.; Clancy, T.C. Convolutional radio modulation recognition networks. Commun. Comput. Inf. Sci. 2016, 629, 213–226.
13. O’Shea, T.J.; Roy, T.; Clancy, T.C. Over-the-air deep learning based radio signal classification. IEEE J. Sel. Top. Signal Process. 2018, 12, 168–179.
14. Zhang, Z.; Luo, H.; Wang, C.; Gan, C.; Xiang, Y. Automatic modulation classification using CNN-LSTM based dual-stream structure. IEEE Trans. Veh. Technol. 2020, 69, 13521–13531.
15. Zhang, F.; Luo, C.; Xu, J.; Luo, Y.; Zheng, F.-C. Deep learning based automatic modulation recognition: Models, datasets, and challenges. Digit. Signal Process. 2022, 129, 103650.
16. Zhou, R.; Liu, F.; Gravelle, C.W. Deep learning for modulation recognition: A survey with a demonstration. IEEE Access 2020, 8, 67366–67376.
17. Liu, Y.; Liu, Y.; Yang, C. Modulation recognition with graph convolutional network. IEEE Wirel. Commun. Lett. 2020, 9, 624–627.
18. Krzyston, J.; Bhattacharjea, R.; Stark, A. Complex-valued convolutions for modulation recognition using deep learning. In Proceedings of the 2020 IEEE International Conference on Communications Workshops (ICC Workshops), Dublin, Ireland, 7–11 June 2020; pp. 1–6.
19. Zeng, Y.; Zhang, M.; Han, F.; Gong, Y.; Zhang, J. Spectrum analysis and convolutional neural network for automatic modulation recognition. IEEE Wirel. Commun. Lett. 2019, 8, 929–932.
20. Peng, S.; Jiang, H.; Wang, H.; Alwageed, H.; Zhou, Y.; Sebdani, M.M.; Yao, Y.D. Modulation classification based on signal constellation diagrams and deep learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 718–727.
21. Huang, S.; Jiang, Y.; Gao, Y.; Feng, Z.; Zhang, P. Automatic modulation classification using contrastive fully convolutional network. IEEE Wirel. Commun. Lett. 2019, 8, 1044–1047.
22. Kumar, Y.; Sheoran, M.; Jajoo, G.; Yadav, S.K. Automatic modulation classification based on constellation density using deep learning. IEEE Commun. Lett. 2020, 24, 1275–1278.
23. Liu, M.; Liao, G.; Zhao, N.; Song, H.; Gong, F. Data-driven deep learning for signal classification in industrial cognitive radio networks. IEEE Trans. Ind. Inform. 2021, 17, 3412–3421.
24. Zhang, M.; Zeng, Y.; Han, Z.D.; Gong, Y. Automatic modulation recognition using deep learning architectures. IEEE Int. Work. Sign. P 2018, 281–285.
25. Wang, Y.; Liu, M.; Yang, J.; Gui, G. Data-driven deep learning for automatic modulation recognition in cognitive radios. IEEE Trans. Veh. Technol. 2019, 68, 4074–4077.
26. West, N.E.; O’Shea, T.J. Deep architectures for modulation recognition. In Proceedings of the 2017 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Baltimore, MD, USA, 6–9 March 2017; pp. 1–6.
27. Ghasemzadeh, P.; Hempel, M.; Sharif, H. GS-QRNN: A high-efficiency automatic modulation classifier for cognitive radio IoT. IEEE Internet Things J. 2022, 9, 9467–9477.
28. Ghasemzadeh, P.; Banerjee, S.; Hempel, M.; Sharif, H. A novel deep learning and polar transformation framework for an adaptive automatic modulation classification. IEEE Trans. Veh. Technol. 2020, 69, 13243–13258.
29. Chang, S.; Huang, S.; Zhang, R.; Feng, Z.; Liu, L. Multitask-learning-based deep neural network for automatic modulation classification. IEEE Internet Things J. 2022, 9, 2192–2206.
30. Perenda, E.; Rajendran, S.; Bovet, G.; Pollin, S.; Zheleva, M. Learning the unknown: Improving modulation classification performance in unseen scenarios. In Proceedings of the IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021.
31. Bu, K.; He, Y.; Jing, X.; Han, J. Adversarial transfer learning for deep learning based automatic modulation classification. IEEE Signal Process. Lett. 2020, 27, 880–884.
32. Wang, Q.; Du, P.; Yang, J.; Wang, G.; Lei, J.; Hou, C. Transferred deep learning based waveform recognition for cognitive passive radar. Signal Process. 2019, 155, 259–267.
33. Xu, Y.; Li, D.; Wang, Z.; Guo, Q.; Xiang, W. A deep learning method based on convolutional neural network for automatic modulation classification of wireless signals. Wirel. Netw. 2018, 25, 3735–3746.
34. Long, M.S.; Cao, Y.; Wang, J.M.; Jordan, M.I. Learning transferable features with deep adaptation networks. Proc. Mach. Learn. Res. 2015, 37, 97–105.
35. Sun, B.C.; Saenko, K. Deep CORAL: Correlation alignment for deep domain adaptation. Lect. Notes Comput. Sci. 2016, 9915, 443–450.
36. Inoue, N.; Furuta, R.; Yamasaki, T.; Aizawa, K. Cross-domain weakly-supervised object detection through progressive domain adaptation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5001–5009.
37. Tang, Y.; Wang, J.; Gao, B.; Dellandrea, E.; Gaizauskas, R.; Chen, L. Large scale semi-supervised object detection using visual and semantic knowledge transfer. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2119–2128.
38. Zhang, J.; Li, W.; Ogunbona, P. Joint geometrical and statistical alignment for visual domain adaptation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5150–5158.
39. Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2962–2971.
40. Han, Z.; Gui, X.J.; Sun, H.; Yin, Y.; Li, S. Towards accurate and robust domain adaptation under multiple noisy environments. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 1–18.
41. Gallego, A.J.; Calvo-Zaragoza, J.; Fisher, R.B. Incremental unsupervised domain-adversarial training of neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4864–4878.
42. Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep subdomain adaptation network for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1713–1722.
43. Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153.
44. Patel, V.M.; Gopalan, R.; Li, R.; Chellappa, R. Visual domain adaptation: A survey of recent advances. IEEE Signal Process. Mag. 2015, 32, 53–69.
45. Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.P.; Schölkopf, B.; Smola, A.J. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 2006, 22, e49–e57.
46. Sun, B.; Feng, J.; Saenko, K.J. Return of frustratingly easy domain adaptation. arXiv 2015, arXiv:1511.05547.
47. Long, M.; Zhu, H.; Wang, J.; Jordan, M.I.J. Deep transfer learning with joint adaptation networks. arXiv 2016, arXiv:1605.06636.
48. O’Shea, T.J.; West, N. Radio machine learning dataset generation with GNU radio. In Proceedings of the GNU Radio Conference, Boulder, CO, USA, 12–16 September 2016.
49. van der Maaten, L.J.P.; Hinton, G.E. Visualizing high-dimensional data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Methods | Model | Dataset Type | Variation Setting (DS → DT) | No. of Models | Improved Domain |
|---|---|---|---|---|---|
| Feature Extraction | RN [13] | Both | Synthetic → Real | 2 | Target |
| | STN-ResNeXt [30] | Synthetic | Fs: 1.5k → 1k and 2k | 1 | Both |
| Fine-Tuning | ATLA [31] | Synthetic | Fs: Fs → Fs/2 | 2 | Target |
| | TCNN-BL [32] | Synthetic | Fs: 1M → 0.5M | 2 | Target |
| | CNNs-TR [33] | Real | SNR variation | 2 | Target |

| Number | Device Name | Model Number |
|---|---|---|
| 1 | Signal generator | Ceyear 1465D-V (Ceyear Technologies Co., Ltd., Qingdao, China) |
| 2 | Spectrum analyzer | Ceyear 4051B (Ceyear Technologies Co., Ltd., Qingdao, China) |
| 3 | Antenna | HyperLOG3080X (Aaronia, Germany) |

| Dataset | Symbol Rate R_B (Baud) | Sampling Frequency F_S (Hz) | No. of Points per Symbol α (F_S/R_B) |
|---|---|---|---|
| Source dataset 1 | 80k | 400k | 6.250 |
| Target dataset 1 | 120k | 400k | 4.167 |
| Source dataset 2 | 120k | 400k | 4.167 |
| Target dataset 2 | 160k | 400k | 3.125 |
| Source dataset 3 | 80k | 400k | 6.250 |
| Target dataset 3 | 80k | 200k | 3.125 |

| Methods | Target Labels Needed | No. of Models | Parameters (MB) | Training Time (s/epoch) |
|---|---|---|---|---|
| CNN | N | 1 | 8.73 | 1.628 |
| CNN-TR [32,33] | Y | 2 | 8.73 | 1.625 |
| CNN-STN [30] | N | 1 | 10.11 | 1.731 |
| CNN-CORAL [35] | N | 1 | 8.73 | 1.639 |
| CNN-1K-MMD | N | 1 | 8.73 | 1.653 |
| CNN-5K-MMD | N | 1 | 8.73 | 1.659 |

| Methods | Source 1 | Target 1 | Task 1 Mean | Source 2 | Target 2 | Task 2 Mean | Source 3 | Target 3 | Task 3 Mean | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 99.20% | 29.69% | 64.45% | 97.64% | 36.82% | 67.23% | 97.50% | 21.25% | 59.38% | 63.68% |
| CNN-TR [32,33] | 15.70% | 96.71% | 56.21% | 23.26% | 78.50% | 50.88% | 21.25% | 79.79% | 50.52% | 52.54% |
| CNN-STN [30] | 97.62% | 45.38% | 71.50% | 90.50% | 46.38% | 68.44% | 98.25% | 41.00% | 69.63% | 69.86% |
| CNN-CORAL [35] | 98.29% | 70.54% | 84.42% | 87.39% | 50.32% | 68.86% | 98.29% | 52.25% | 75.27% | 76.18% |
| CNN-1K-MMD | 98.25% | 64.29% | 81.27% | 98.29% | 51.29% | 74.79% | 98.25% | 57.25% | 77.75% | 77.94% |
| CNN-5K-MMD | 98.25% | 86.61% | 92.43% | 98.21% | 77.36% | 87.79% | 98.21% | 80.11% | 89.16% | 89.79% |
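
The task means and overall averages in the accuracy table above follow from the six per-domain accuracies by plain arithmetic. Each task mean averages one source/target pair, and the overall average is the mean of all six. A short Python check can confirm this. It assumes the per-domain columns run Source 1, Target 1, Source 2, Target 2, Source 3, Target 3. It uses `Decimal` with round-half-up so that exact halves such as 64.445 round the way the table does; binary floats with `round()` can round them down.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-domain accuracies (%) copied from the accuracy table, in the assumed order
# Source 1, Target 1, Source 2, Target 2, Source 3, Target 3.
ACCURACY = {
    "CNN": ("99.20", "29.69", "97.64", "36.82", "97.50", "21.25"),
    "CNN-TR": ("15.70", "96.71", "23.26", "78.50", "21.25", "79.79"),
    "CNN-STN": ("97.62", "45.38", "90.50", "46.38", "98.25", "41.00"),
    "CNN-CORAL": ("98.29", "70.54", "87.39", "50.32", "98.29", "52.25"),
    "CNN-1K-MMD": ("98.25", "64.29", "98.29", "51.29", "98.25", "57.25"),
    "CNN-5K-MMD": ("98.25", "86.61", "98.21", "77.36", "98.21", "80.11"),
}

CENT = Decimal("0.01")


def to_cent(x: Decimal) -> Decimal:
    """Round to two decimal places, half up (exact decimal arithmetic, no float error)."""
    return x.quantize(CENT, rounding=ROUND_HALF_UP)


def summarize(accs):
    """Return the three source/target task means and the overall six-domain mean."""
    values = [Decimal(a) for a in accs]
    task_means = [to_cent((values[i] + values[i + 1]) / 2) for i in range(0, 6, 2)]
    overall = to_cent(sum(values) / len(values))
    return task_means, overall


for method, accs in ACCURACY.items():
    task_means, overall = summarize(accs)
    print(method, [str(m) for m in task_means], str(overall))
```

Running it reproduces every task mean and overall average reported in the table.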

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, N.; Liu, Y.; Ma, L.; Yang, Y.; Wang, H. Automatic Modulation Classification Based on CNN and Multiple Kernel Maximum Mean Discrepancy. Electronics 2023, 12, 66. https://doi.org/10.3390/electronics12010066
