Voltage Based Electronic Control Unit (ECU) Identification with Convolutional Neural Networks and Walsh–Hadamard Transform

Abstract

1. Introduction

2. Related Work

3. Methodology and Materials

3.1. SAE J1939 and ISO 11898

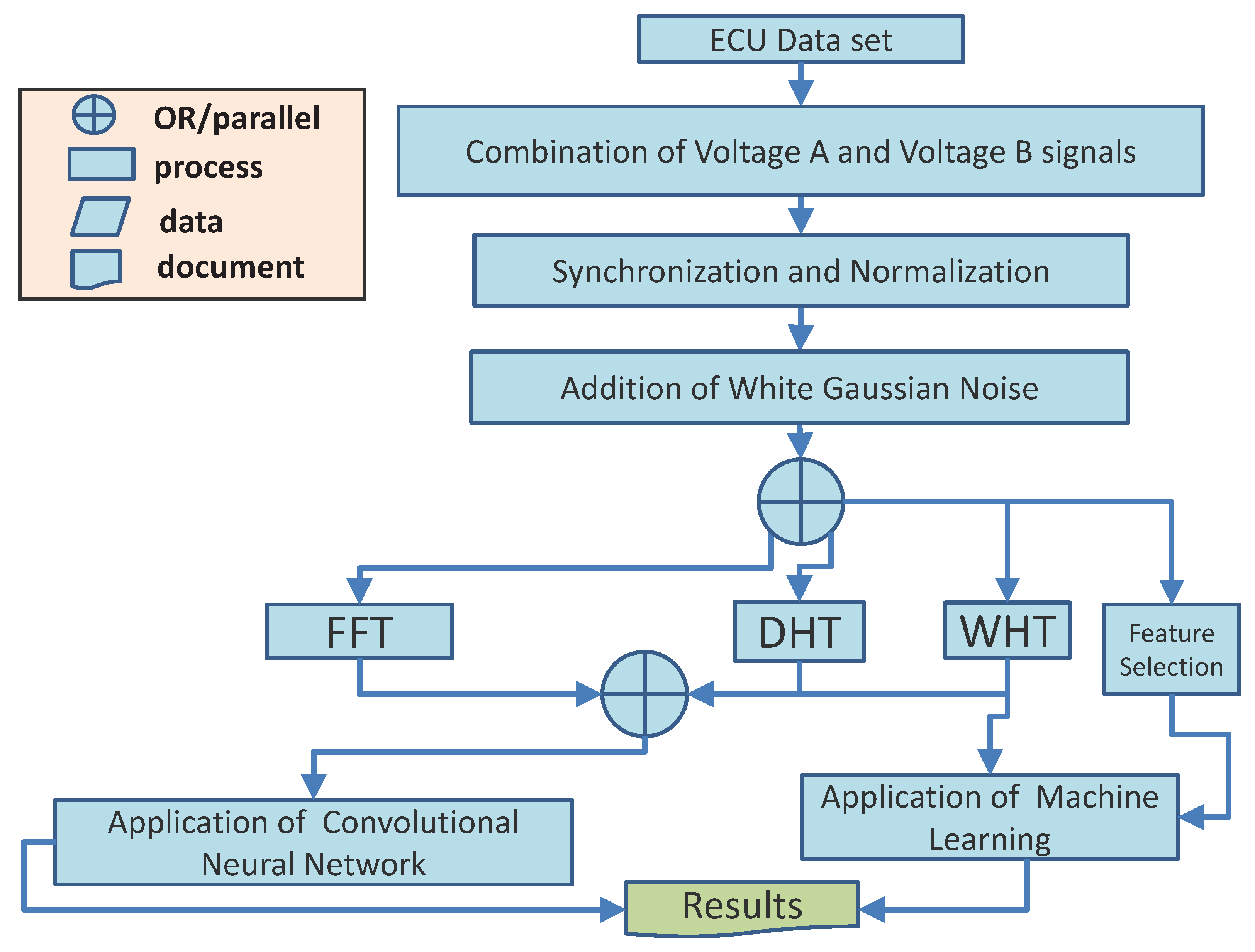

3.2. Workflow

- In the first step, the ECU data set (described in detail in Section 3.3) is processed to combine the Voltage A and Voltage B signals, because the approach proposed in this study is based on both signals. As shown in Section 6 (Results), a comparison with data sets composed of only Voltage A or only Voltage B is also performed, and the combination of the two signals provides a higher identification performance.

- The signals are synchronized and normalized to ensure that the approach is based solely on the shape of the signals and not on other factors such as differences in timing (e.g., based on the evaluation of the skew). In this way, the proposed approach is robust against spoofing attacks based on timing/skew.

- To emulate the presence of noise in the vehicle environment, and in particular in the in-vehicle network to which the ECU is connected, Additive White Gaussian Noise (AWGN) is added to the signals in the data set at different SNR levels. The 'awgn' MATLAB function from the signal processing toolbox is used for this purpose.
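The first three workflow steps can be illustrated with a minimal NumPy sketch. It is not the author's MATLAB code. Several choices are assumptions made only for illustration: the two voltage traces are combined by concatenation, normalization is zero mean and unit variance, the noise power is derived from the measured power of the input signal, and the voltage traces themselves are synthetic stand-ins. The function names `normalize`, `combine`, and `add_awgn` are hypothetical.

```python
import numpy as np

def normalize(x):
    """Scale a trace to zero mean and unit variance (assumed normalization)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    std = x.std()
    return centered / std if std > 0 else centered

def combine(volt_a, volt_b):
    """Concatenate the normalized Voltage A and Voltage B traces (assumed scheme)."""
    return np.concatenate([normalize(volt_a), normalize(volt_b)])

def add_awgn(x, snr_db, rng):
    """Add white Gaussian noise at the requested SNR in dB.

    The noise power is computed from the measured power of x.
    """
    x = np.asarray(x, dtype=float)
    signal_power = np.mean(x ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return x + rng.normal(0.0, np.sqrt(noise_power), size=x.shape)

# Synthetic stand-ins for one pair of recorded traces (not real ECU data).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 2048)
volt_a = 2.5 + np.tanh(40.0 * (t - 0.5))
volt_b = 2.5 - np.tanh(40.0 * (t - 0.5))

clean = combine(volt_a, volt_b)
noisy = add_awgn(clean, snr_db=10.0, rng=rng)

# Empirical SNR of the noisy signal, to check it is close to the target.
measured_snr_db = 10.0 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
```

Repeating `add_awgn` with `snr_db` set to 30, 10, and 0 would produce the three noise conditions reported in the results table.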

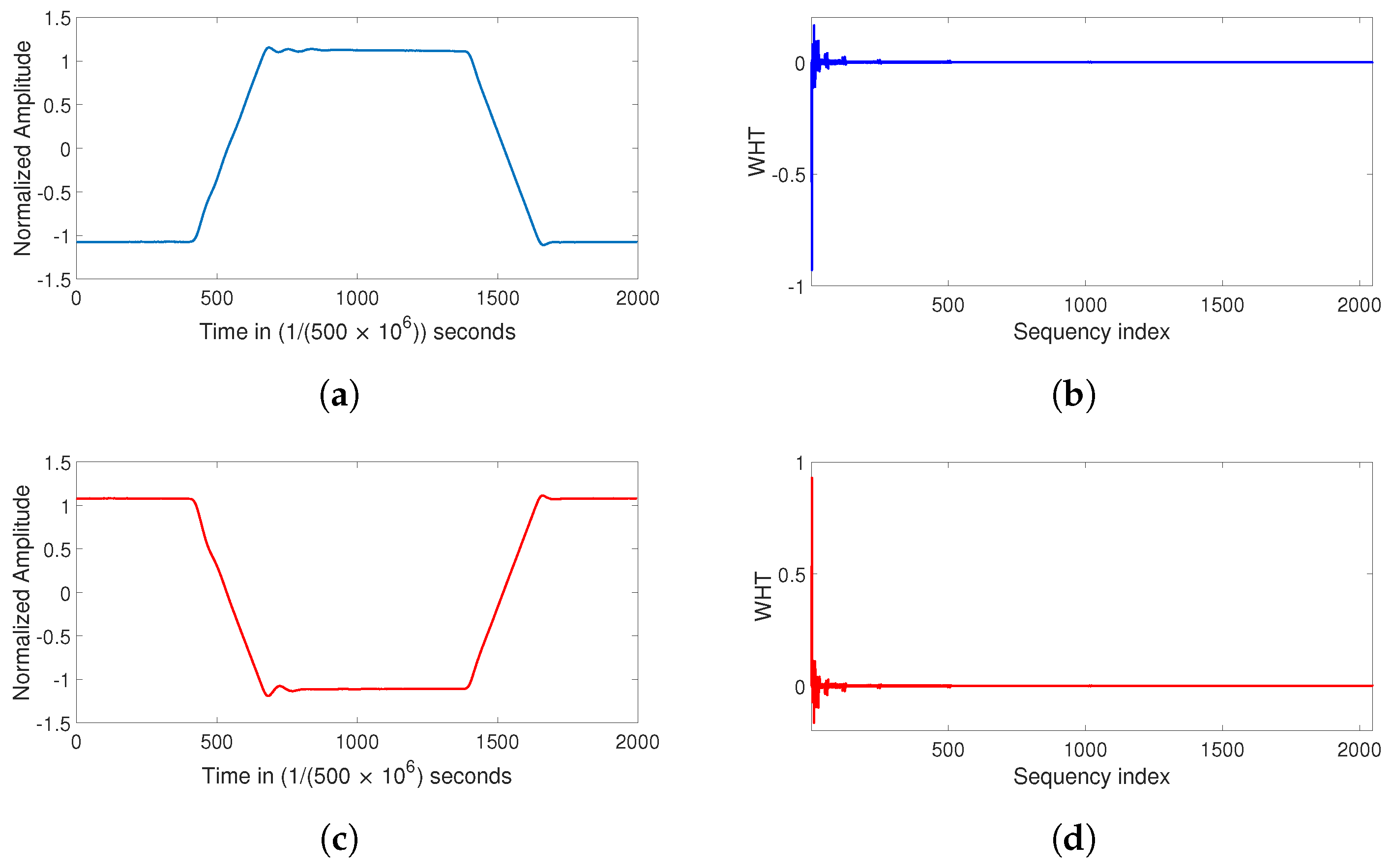

- The combination of the Voltage A and Voltage B signals with the added noise is submitted to different transforms: WHT, FFT, and DHT. To make the comparison consistent (since both WHT and DHT produce real values), only the amplitude component of the FFT is used in the analysis. Further results, not presented in this paper for space reasons, show that the identification accuracy obtained with only the amplitude component of the FFT is significantly higher than that obtained with either the phase component of the FFT or the combination of the amplitude and phase components. For this reason, only the amplitude of the FFT is used in the rest of this study. The FFT is used because it is a common choice in the literature for physical layer authentication [6,20]. The DHT was chosen because it is another Fourier-related transform that, like the WHT, maps a real signal to a real signal. In addition, it has been used successfully [21] for sensor physical layer authentication based on voltage signals in the automotive sector. All of these transforms are implemented and executed in parallel (as shown in Figure 1).

- In addition, statistical features are computed on the original time domain signal to generate a feature space for classification. A feature-based approach was also used on the same data set in [5], and it is also common in physical layer authentication [20]. This paper uses the following statistical features: mean, variance, skewness, kurtosis, Shannon entropy, and maximum value. They are chosen because they are related to features already used in the literature for physical layer identification [5,13,20] and because they are efficient to compute. The statistical features are computed on the Voltage A and Voltage B signals.

- A CNN is used to process the output of the WHT, the FFT (amplitude), the DHT, and the original time domain representation. In contrast, machine learning algorithms (i.e., Decision Tree and K-Nearest Neighbor) are used for the feature-based approach and for a significantly reduced subset of the WHT output.
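The parallel feature-extraction branches described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation used in the study. The WHT is computed in natural (Hadamard) order without scaling, the DHT is derived from the FFT as the real part minus the imaginary part, and the Shannon entropy is estimated from a histogram whose bin count (32) is an arbitrary choice. The function names `fwht`, `dht`, and `stat_features` are hypothetical.

```python
import numpy as np

def fwht(x):
    """Fast Walsh-Hadamard transform, natural order, unnormalized.

    The input length must be a power of two.
    """
    a = np.array(x, dtype=float)
    n = a.size
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        # Butterfly between the two halves of every block of length 2*h.
        a = a.reshape(-1, 2, h)
        a = np.stack([a[:, 0] + a[:, 1], a[:, 0] - a[:, 1]], axis=1)
        h *= 2
    return a.reshape(n)

def dht(x):
    """Discrete Hartley transform via the FFT: H[k] = Re(X[k]) - Im(X[k])."""
    spectrum = np.fft.fft(np.asarray(x, dtype=float))
    return spectrum.real - spectrum.imag

def stat_features(x, bins=32):
    """Mean, variance, skewness, kurtosis, Shannon entropy (bits), maximum.

    Assumes a non-constant signal, so that the variance is positive.
    """
    x = np.asarray(x, dtype=float)
    mu, var = x.mean(), x.var()
    z = (x - mu) / np.sqrt(var)
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / x.size
    entropy = -np.sum(p * np.log2(p))
    return np.array([mu, var, np.mean(z ** 3), np.mean(z ** 4), entropy, x.max()])

x = np.array([1, 0, 1, 0, 0, 1, 1, 0], dtype=float)
wht = fwht(x)                     # -> [4, 2, 0, -2, 0, 2, 0, 2]
fft_amp = np.abs(np.fft.fft(x))   # amplitude component only, phase discarded
hart = dht(x)
feats = stat_features(x)
```

All three transforms return real-valued vectors of the same length as the input, which is what allows the same CNN input layout to be reused across branches. The six statistical features form a much smaller vector, which is consistent with their use with Decision Tree and K-Nearest Neighbor classifiers.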

3.3. Electronic Control Unit Data Set

4. Transforms Used in This Study

4.1. The Walsh–Hadamard Transform

4.2. The Fast Fourier Transform

4.3. The Discrete Hartley Transform

5. Deep Learning and Machine Learning Algorithms

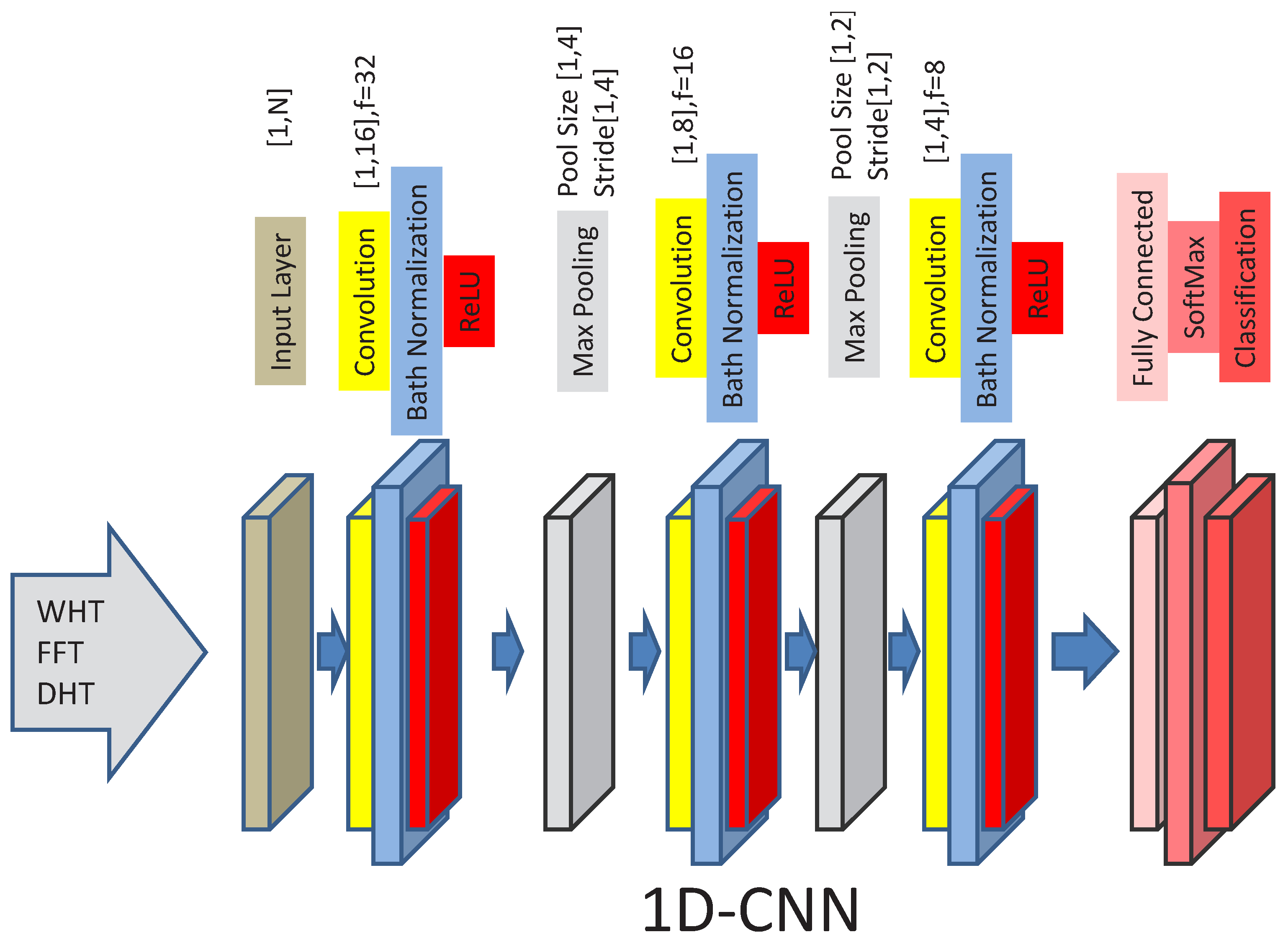

5.1. Convolutional Neural Network Architecture and Parameters

5.2. Long Short-Term Memory (LSTM)

5.3. K-Nearest Neighbor

5.4. Decision Tree

5.5. Training and Test Data Set Composition

5.6. Evaluation Metrics

5.7. Computing Platform

6. Results

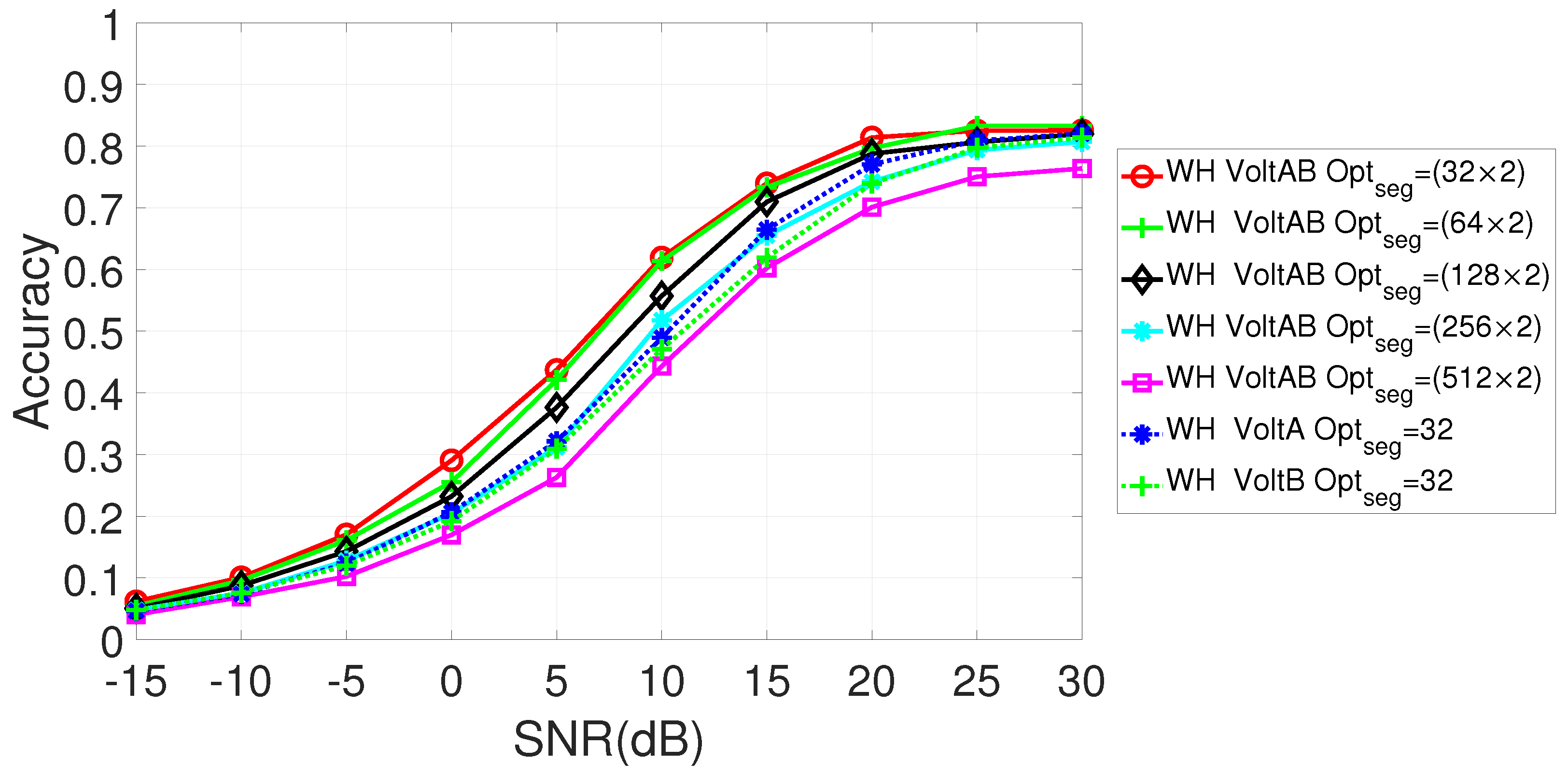

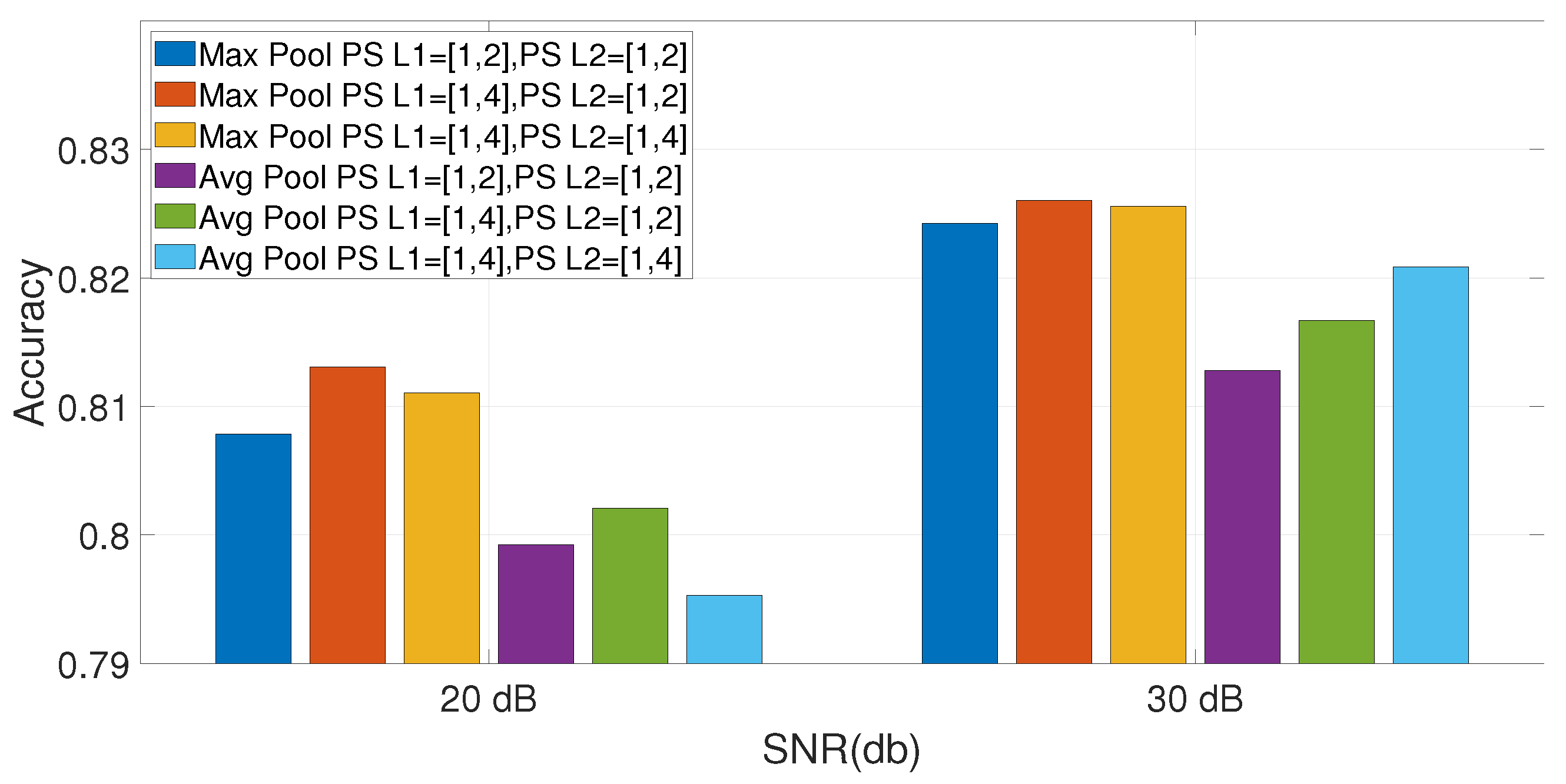

6.1. Parameters Optimization

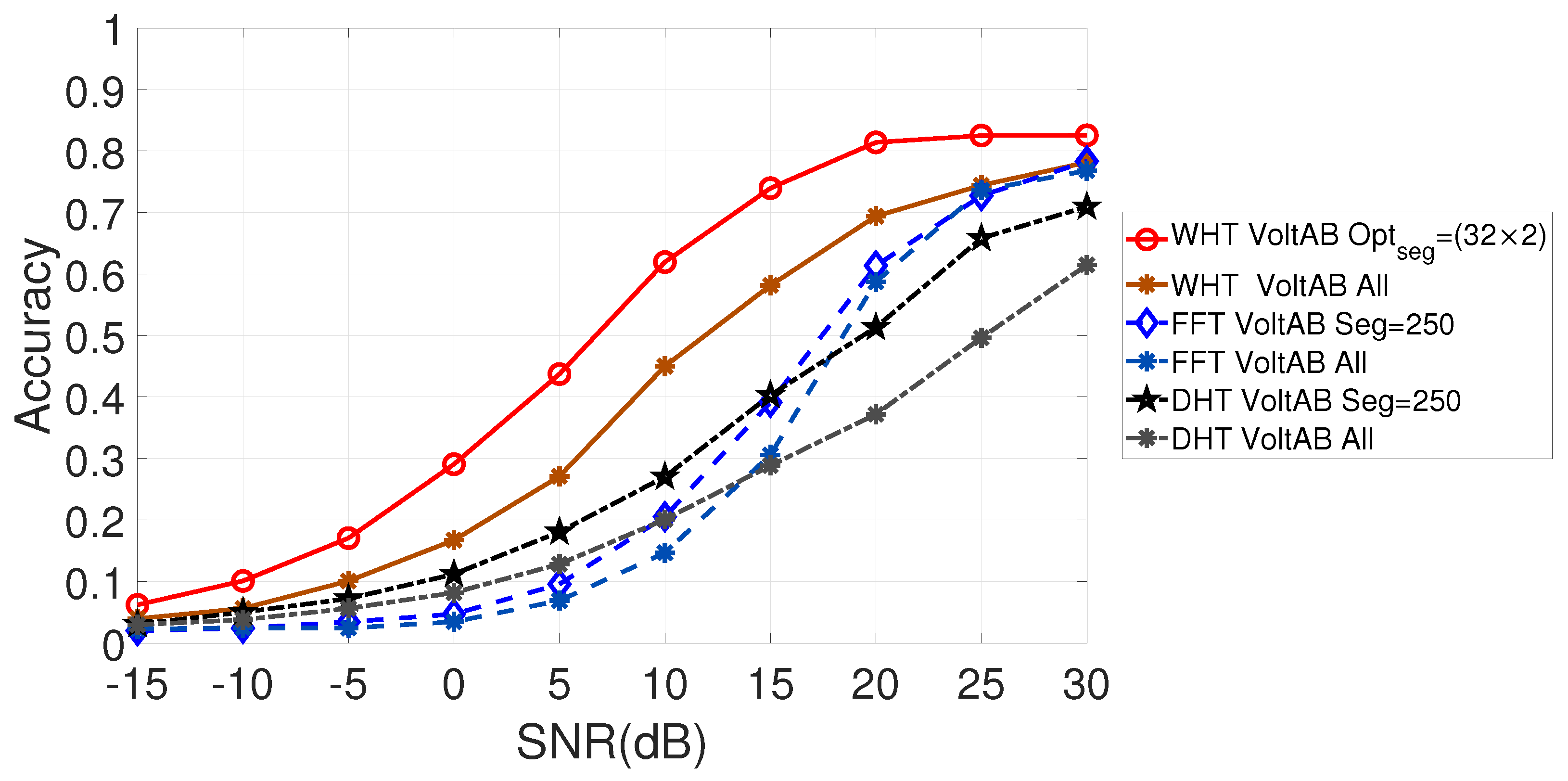

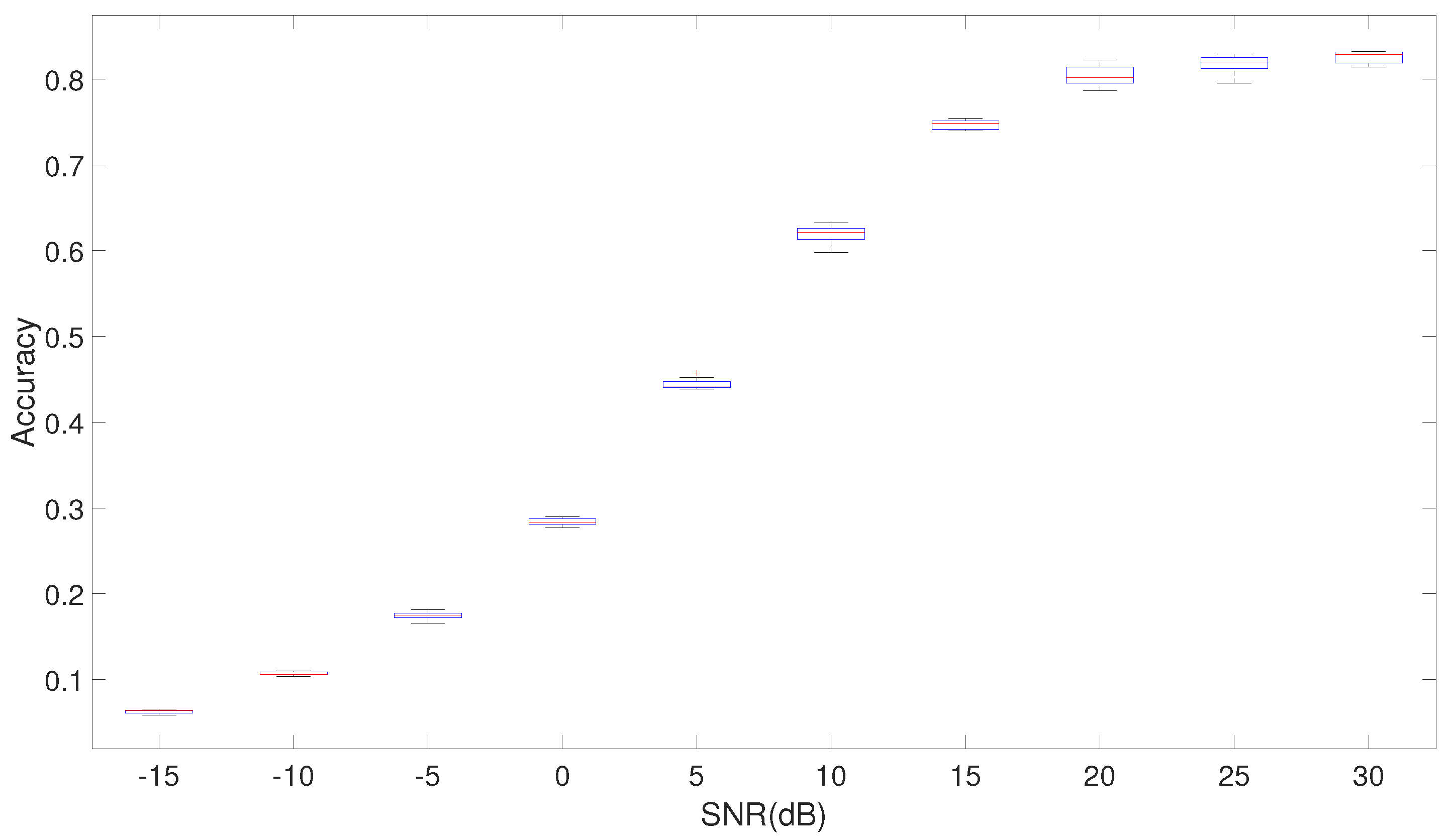

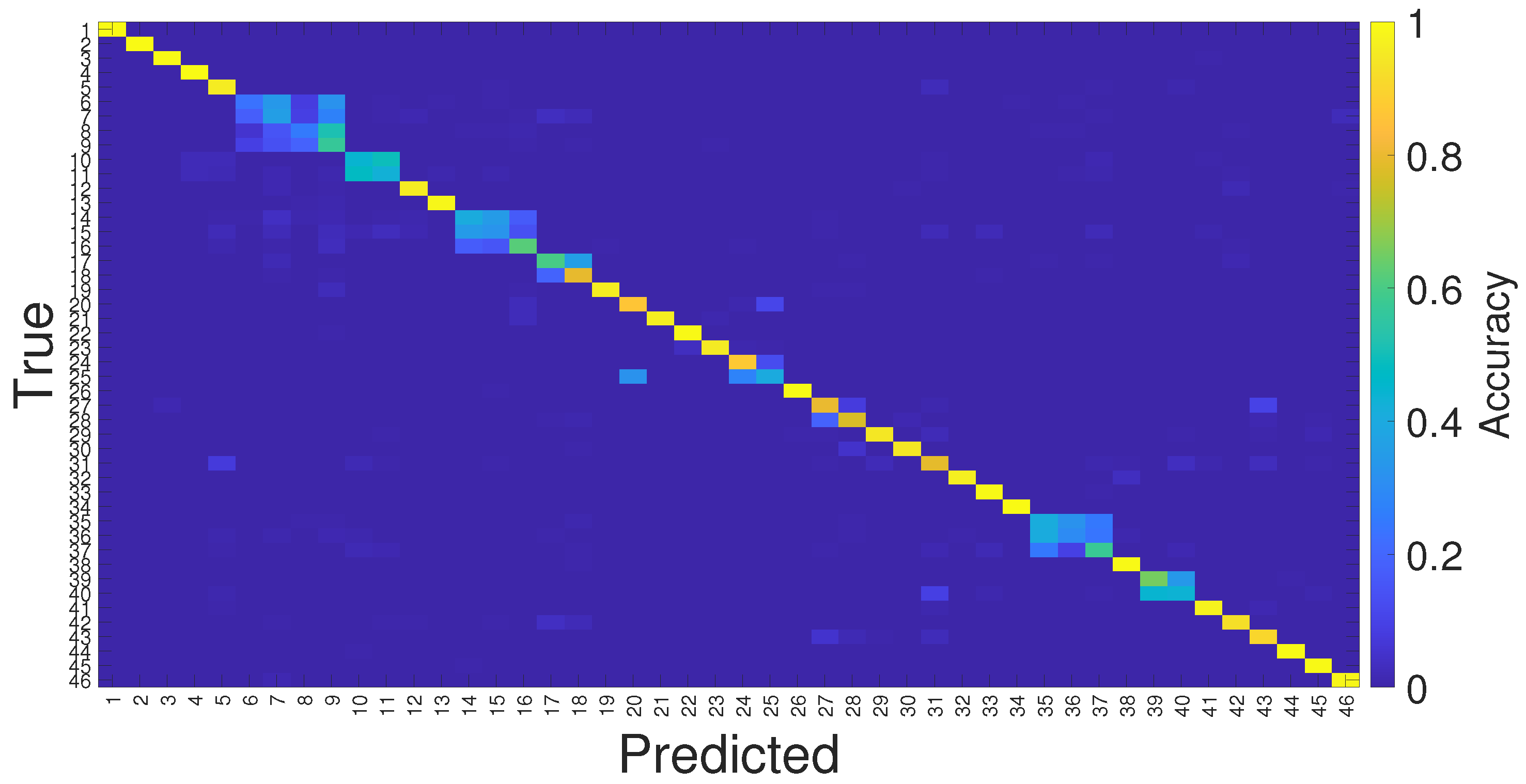

6.2. Comparison of the Approaches

7. Conclusions and Future Developments

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DFT | Discrete Fourier Transform |
| DHT | Discrete Hartley Transform |
| DT | Decision Tree |
| ECU | Electronic Control Unit |
| FFT | Fast Fourier Transform |
| FN | False Negative |
| FP | False Positive |
| IRL | Initial Learning Rate |
| KNN | K-Nearest Neighbor |
| LSTM | Long Short-Term Memory |
| ReLU | Rectified Linear Unit |
| SNR | Signal-to-Noise Ratio |
| TN | True Negative |
| TP | True Positive |
| WHT | Walsh–Hadamard Transform |

References

1. Miller, C.; Valasek, C. Remote Exploitation of an Unaltered Passenger Vehicle; Black Hat: San Francisco, CA, USA, 2015.

2. Ebert, C.; Favaro, J. Automotive software. IEEE Softw. 2017, 34, 33–39.

3. Petit, J.; Shladover, S.E. Potential cyberattacks on automated vehicles. IEEE Trans. Intell. Transp. Syst. 2014, 16, 546–556.

4. Jeong, W.; Han, S.; Choi, E.; Lee, S.; Choi, J.W. CNN-based adaptive source node identifier for controller area network (CAN). IEEE Trans. Veh. Technol. 2020, 69, 13916–13920.

5. Popa, L.; Groza, B.; Jichici, C.; Murvay, P.S. ECUPrint—Physical Fingerprinting Electronic Control Units on CAN Buses Inside Cars and SAE J1939 Compliant Vehicles. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1185–1200.

6. Soltanieh, N.; Norouzi, Y.; Yang, Y.; Karmakar, N.C. A review of radio frequency fingerprinting techniques. IEEE J. Radio Freq. Identif. 2020, 4, 222–233.

7. Jian, T.; Rendon, B.C.; Ojuba, E.; Soltani, N.; Wang, Z.; Sankhe, K.; Gritsenko, A.; Dy, J.; Chowdhury, K.; Ioannidis, S. Deep learning for RF fingerprinting: A massive experimental study. IEEE Internet Things Mag. 2020, 3, 50–57.

8. Zhao, Y.; Xun, Y.; Liu, J. ClockIDS: A Real-time Vehicle Intrusion Detection System Based on Clock Skew. IEEE Internet Things J. 2022, 9, 15593–15606.

9. Cho, K.T.; Shin, K.G. Fingerprinting electronic control units for vehicle intrusion detection. In Proceedings of the 25th USENIX Security Symposium (USENIX Security 16), Austin, TX, USA, 10–12 August 2016; pp. 911–927.

10. Ying, X.; Sagong, S.U.; Clark, A.; Bushnell, L.; Poovendran, R. Shape of the cloak: Formal analysis of clock skew-based intrusion detection system in controller area networks. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2300–2314.

11. Choi, W.; Joo, K.; Jo, H.J.; Park, M.C.; Lee, D.H. Voltageids: Low-level communication characteristics for automotive intrusion detection system. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2114–2129.

12. Choi, W.; Jo, H.J.; Woo, S.; Chun, J.Y.; Park, J.; Lee, D.H. Identifying ecus using inimitable characteristics of signals in controller area networks. IEEE Trans. Veh. Technol. 2018, 67, 4757–4770.

13. Kneib, M.; Huth, C. Scission: Signal characteristic-based sender identification and intrusion detection in automotive networks. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 787–800.

14. Yang, Y.; Duan, Z.; Tehranipoor, M. Identify a spoofing attack on an in-vehicle CAN bus based on the deep features of an ECU fingerprint signal. Smart Cities 2020, 3, 17–30.

15. Pan, H.; Badawi, D.; Chen, C.; Watts, A.; Koyuncu, E.; Cetin, A.E. Deep Neural Network With Walsh-Hadamard Transform Layer for Ember Detection During a Wildfire. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18 June 2022; pp. 257–266.

16. Badawi, D.; Agambayev, A.; Ozev, S.; Cetin, A.E. Real-time low-cost drift compensation for chemical sensors using a deep neural network with hadamard transform and additive layers. IEEE Sensors J. 2021, 21, 17984–17994.

17. SAE J1939-21; Data Link Layer. SAE International: Warrendale, PA, USA, 2001.

18. Hell, M.M. The physical layer in the CAN FD world-The update. In Proceedings of the Introduction to the Controller Area Network Conference, Washington, DC, USA, 23–25 January 2015; pp. 1–2.

19. Othman, H.; Aji, Y.; Fakhreddin, F.; Al-Ali, A. Controller area networks: Evolution and applications. In Proceedings of the 2006 2nd International Conference on Information & Communication Technologies, Damascus, Syria, 24–28 April 2006; IEEE: New York, NY, USA, 2006; Volume 2, pp. 3088–3093.

20. Reising, D.; Cancelleri, J.; Loveless, T.D.; Kandah, F.; Skjellum, A. Radio identity verification-based IoT security using RF-DNA fingerprints and SVM. IEEE Internet Things J. 2020, 8, 8356–8371.

21. Baldini, G.; Giuliani, R.; Gemo, M. Mitigation of Odometer Fraud for In-Vehicle Security Using the Discrete Hartley Transform. In Proceedings of the 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 28–31 October 2020; IEEE: New York, NY, USA, 2020; pp. 0479–0485.

22. Beauchamp, K.G. Applications of Walsh and Related Functions, with an Introduction to Sequency Theory; Academic Press: Cambridge, MA, USA, 1984; Volume 2.

23. Fino, B.J.; Algazi, V.R. Unified matrix treatment of the fast Walsh-Hadamard transform. IEEE Trans. Comput. 1976, 25, 1142–1146.

24. Hosseini-Nejad, H.; Jannesari, A.; Sodagar, A.M. Data compression in brain-machine/computer interfaces based on the Walsh–Hadamard transform. IEEE Trans. Biomed. Circuits Syst. 2013, 8, 129–137.

25. Sneha, P.; Sankar, S.; Kumar, A.S. A chaotic colour image encryption scheme combining Walsh–Hadamard transform and Arnold–Tent maps. J. Ambient Intell. Humaniz. Comput. 2020, 11, 1289–1308.

26. Elliott, D.F.; Rao, K.R. Fast Transforms Algorithms, Analyses, Applications; Elsevier: Amsterdam, The Netherlands, 1983.

27. Bracewell, R.N. Discrete hartley transform. JOSA 1983, 73, 1832–1835.

28. Waibel, A.; Hanazawa, T.; Hinton, G.; Shikano, K.; Lang, K.J. Phoneme recognition using time-delay neural networks. IEEE Trans. Acoust. Speech Signal Process. 1989, 37, 328–339.

29. Zhang, W.; Tanida, J.; Itoh, K.; Ichioka, Y. Shift-invariant pattern recognition neural network and its optical architecture. In Proceedings of the Annual Conference of the Japan Society of Applied Physics, Tokyo, Japan, 24–26 August 1988; pp. 2147–2151.

30. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

31. Scherer, D.; Müller, A.; Behnke, S. Evaluation of pooling operations in convolutional architectures for object recognition. In Proceedings of the International Conference on Artificial Neural Networks, Thessaloniki, Greece, 15–18 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 92–101.

32. Zafar, A.; Aamir, M.; Mohd Nawi, N.; Arshad, A.; Riaz, S.; Alruban, A.; Dutta, A.K.; Almotairi, S. A Comparison of Pooling Methods for Convolutional Neural Networks. Appl. Sci. 2022, 12, 8643.

33. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

34. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

35. Loh, W.Y. Classification and regression trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

| ID | Vehicle Model | ID | Vehicle Model | ID | Vehicle Model | ID | Vehicle Model |
|---|---|---|---|---|---|---|---|
| 1 | Dacia Duster | 13 | Ford Ecosport | 25 | Ford Kuga | 37 | Hyundai i20 |
| 2 | Dacia Duster | 14 | Ford Fiesta | 26 | Ford Kuga | 38 | Hyundai ix35 |
| 3 | Dacia Duster | 15 | Ford Fiesta | 27 | Honda Civic | 39 | Hyundai ix35 |
| 4 | Dacia Logan | 16 | Ford Fiesta | 28 | Honda Civic | 40 | Hyundai ix35 |
| 5 | Dacia Logan | 17 | Ford Fiesta | 29 | Honda Civic | 41 | Hyundai ix35 |
| 6 | Dacia Logan | 18 | Ford Fiesta | 30 | Honda Civic | 42 | Hyundai ix35 |
| 7 | Dacia Logan | 19 | Ford Fiesta | 31 | Honda Civic | 43 | Opel Corsa |
| 8 | Dacia Logan | 20 | Ford Kuga | 32 | Hyundai i20 | 44 | Opel Corsa |
| 9 | Dacia Logan | 21 | Ford Kuga | 33 | Hyundai i20 | 45 | Opel Corsa |
| 10 | Ford Ecosport | 22 | Ford Kuga | 34 | Hyundai i20 | 46 | Opel Corsa |
| 11 | Ford Ecosport | 23 | Ford Kuga | 35 | Hyundai i20 | 47 | - |
| 12 | Ford Ecosport | 24 | Ford Kuga | 36 | Hyundai i20 | 48 | - |

| Approach | Accuracy | F-Score |
|---|---|---|
| SNR = 30 dB | | |
| CNN WHT | 0.826 | 0.810 |
| CNN WHT | 0.835 | 0.820 |
| CNN WHT All | 0.781 | 0.780 |
| CNN FFT Seg = 250 * 2 | 0.783 | 0.781 |
| CNN FFT All | 0.768 | 0.764 |
| CNN DHT Seg = 250 * 2 | 0.709 | 0.710 |
| CNN DHT All | 0.614 | 0.614 |
| CNN Time domain All (A+B) [4] | 0.771 | 0.765 |
| LSTM WHT [14] | 0.811 | 0.807 |
| DT WHT | 0.803 | 0.800 |
| KNN WHT | 0.770 | 0.760 |
| DT Stat.Feat. (inspired by [5,13]) | 0.635 | 0.628 |
| KNN Stat.Feat. (inspired by [5,13]) | 0.537 | 0.528 |
| SNR = 10 dB | | |
| CNN WHT | 0.619 | 0.608 |
| CNN WHT | 0.613 | 0.597 |
| CNN WHT All | 0.450 | 0.449 |
| CNN FFT Seg = 250 * 2 | 0.205 | 0.203 |
| CNN FFT All | 0.146 | 0.146 |
| CNN DHT Seg = 250 * 2 | 0.270 | 0.263 |
| CNN DHT All | 0.200 | 0.194 |
| CNN Time domain All (A+B) [4] | 0.292 | 0.286 |
| LSTM WHT [14] | 0.572 | 0.568 |
| DT WHT | 0.500 | 0.498 |
| KNN WHT | 0.346 | 0.344 |
| DT Stat.Feat. (inspired by [5,13]) | 0.06 | 0.06 |
| KNN Stat.Feat. (inspired by [5,13]) | 0.04 | 0.04 |
| SNR = 0 dB | | |
| CNN WHT | 0.290 | 0.264 |
| CNN WHT | 0.255 | 0.242 |
| CNN WHT All | 0.167 | 0.168 |
| CNN FFT Seg = 250 * 2 | 0.047 | 0.045 |
| CNN FFT All | 0.034 | 0.034 |
| CNN DHT Seg = 250 * 2 | 0.111 | 0.104 |
| CNN DHT All | 0.081 | 0.079 |
| CNN Time domain All [4] | 0.132 | 0.129 |
| LSTM WHT [14] | 0.270 | 0.262 |
| DT WHT | 0.240 | 0.237 |
| KNN WHT | 0.128 | 0.118 |
| DT Stat.Feat. (inspired by [5,13]) | 0.02 | 0.02 |
| KNN Stat.Feat. (inspired by [5,13]) | 0.02 | 0.02 |

| Approach | Time |
|---|---|
| CNN WHT | 1 |
| CNN WHT | 1.034 |
| CNN Time Voltage A | 1.332 |
| CNN Time Voltage B | 1.298 |
| CNN Time Voltage A+B | 1.971 |
| DT WHT | 0.712 |
| KNN WHT | 0.546 |
| DT Feature Based | 0.459 |
| KNN Feature Based | 0.32 |


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Baldini, G. Voltage Based Electronic Control Unit (ECU) Identification with Convolutional Neural Networks and Walsh–Hadamard Transform. Electronics 2023, 12, 199. https://doi.org/10.3390/electronics12010199
