Abstract

Palmprint recognition has matured considerably, with many promising algorithms proposed and applied in various authentication scenarios. However, these approaches are designed and tested assuming that registration and probe images are captured under the same illumination, so their performance is expected to degrade in cross-spectral settings. We confirm this by testing the cross-spectral performance of the extended binary orientation co-occurrence vector (E-BOCV), which proves unsatisfactory and demonstrates the need for a dedicated algorithm. To achieve cross-spectral palmprint recognition through image-to-image translation, we make two contributions. First, we introduce a scheme for evaluating images of different spectra, which provides a reliable basis for determining the translation direction. Second, we propose a palmprint translation convolutional neural network (PT-net). Tested on NIR-to-blue translation on the PolyU multispectral dataset, PT-net achieves a relative 91% reduction in Top-1 error with E-BOCV as the recognition framework.

1. Introduction

Palmprint recognition has long been an active research topic. Compared with fingerprints, palmprints cover a much larger area and contain more texture features, which makes recognition more stable. Palmprint recognition has been widely applied in security-sensitive scenarios, such as identification in hospitals and banks. In civilian applications, low-resolution palmprint images of about 150 dpi are typically used. Many algorithms have been proposed to exploit these abundant features, from EigenPalm [1] to the double-orientation code (DOC) approach [2].

In most cases, the palmprint images used for registration and recognition are collected with the same type of equipment; in other words, they are captured in the same spectrum. However, palm images captured in different spectra differ substantially. Images captured under blue and green light contain only surface textures, whereas those captured under near-infrared (NIR) and red light also reveal the veins underneath. Therefore, when the registration and validation images come from two different spectral domains, a drop in recognition performance can be expected. In this paper, we refer to this task as cross-spectral palmprint recognition.

Recently, researchers have addressed this problem by taking advantage of powerful convolutional neural networks (CNNs). PalmGAN [3] used CycleGAN [4] to translate palm images and a Deep Hash Network (DHN) for identification. Shao et al. [5] introduced an autoencoder to extract features shared across domains for verification. However, these methods have limitations because they attempt to solve two problems simultaneously: the overall performance depends both on how well the feature gap between spectra is closed and on the performance of the recognition algorithm itself.

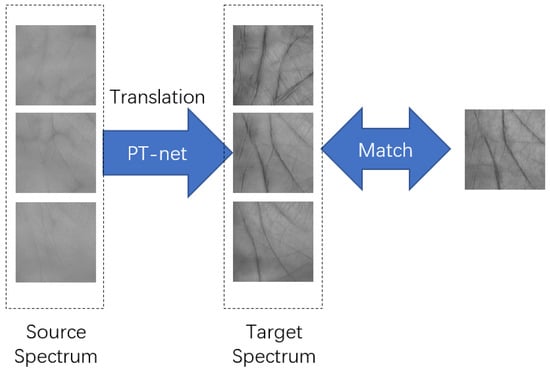

Zhu et al. [6] proposed a low-rank canonical correlation analysis method to construct a unified subspace for images of different spectra. In contrast, we focus on removing the feature gap between multispectral palmprint images and formulate the problem as palmprint image translation. The advantage of this formulation is that it can be integrated into any palmprint recognition system without altering the existing recognition algorithm. The proposed recognition framework is shown in Figure 1. For translation, we propose the palmprint translation network (PT-net), a convolutional neural network. For recognition, we adopt E-BOCV [7], a widely used method with high performance. For convenience, we use “A2B” to denote the translation task in which images of spectrum A are the source and images of spectrum B are the target.

Figure 1.

Proposed cross-spectral recognition process.

Our contributions are described as follows:

- (1) We propose a scheme to evaluate the relative relationship between palmprint spectra, which guides the choice of translation direction.

- (2) We propose a novel palmprint translation network (PT-net). Experiments show that PT-net considerably improves cross-spectral recognition accuracy.

- (3) We discuss training details, including the data alignment method and the setting of the loss-function hyperparameter.

2. Related Work

2.1. Palmprint Recognition

Palmprint recognition algorithms exploit various kinds of palmprint information [8]. For example, principal line features are used in [9], while subspace features extracted with principal component analysis (PCA) are used in EigenPalm [1]. In addition, Morales, Ferrer, and Kumar proposed a method based on the scale-invariant feature transform (SIFT), which is robust to illumination variation and rotation [10].

Orientation features have proven promising in subsequent studies. PalmCode, proposed by Zhang et al. [11], applied a normalized Gabor filter to the palmprint image and encoded the filtered image for verification. Competitive Code [12] adopted six Gabor filters with different orientations and used angular matching for fast verification. The Binary Orientation Co-occurrence Vector (BOCV) proposed by Guo et al. [13] preserved all the information in the responses of the six Gabor filters by encoding each of them in binary form. BOCV is robust to slight rotation and relatively fast because matching uses binary operations. E-BOCV [7] further masks out fragile bits, making it more robust.
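To make the orientation-coding idea concrete, the following is a minimal NumPy sketch of a BOCV-style encoder. It filters an ROI with six oriented, even-symmetric Gabor filters, binarizes each response into one bit plane, and compares two codes with a normalized Hamming distance. The filter parameters and the thresholding rule (bit set where the response is negative) are assumptions for illustration. The sketch also omits the shift-tolerant matching of BOCV and the fragile-bit masking of E-BOCV.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_kernel(theta, size=17, sigma=3.0, wavelength=8.0):
    """Even-symmetric (real) Gabor kernel at orientation theta, in radians.
    size/sigma/wavelength are illustrative assumptions, not BOCV's values."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + y_r ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.cos(2.0 * np.pi * x_r / wavelength)
    return kernel - kernel.mean()  # zero DC: flat regions give ~0 response


def filter_same(image, kernel):
    """'Same'-size filtering with reflect padding. The kernel is point-symmetric,
    so this sliding-window correlation equals convolution."""
    pad = kernel.shape[0] // 2
    windows = sliding_window_view(np.pad(image, pad, mode="reflect"), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def bocv_code(image, n_orient=6):
    """One binary plane per orientation; a bit is 1 where the response is
    negative (assumed rule: palm lines are darker than the surrounding skin)."""
    thetas = np.arange(n_orient) * np.pi / n_orient
    responses = np.stack([filter_same(image, gabor_kernel(t)) for t in thetas])
    return responses < 0


def hamming_distance(code_a, code_b):
    """Fraction of disagreeing bits over all planes (0 = identical codes)."""
    return np.count_nonzero(code_a != code_b) / code_a.size
```

Because every plane is binary, matching reduces to XOR-and-count operations, which is the source of the speed advantage mentioned above.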

In recent years, convolutional neural networks (CNNs) have achieved great success in computer vision tasks such as classification and object detection, and CNN-based palmprint recognition approaches are emerging rapidly. Minaee and Wang [14] used predefined convolution filters to extract features and a trained support vector machine (SVM) to match palmprints. Liu and Sun [15] proposed a CNN-based method that adopts the AlexNet structure for feature extraction and the Hausdorff distance for matching.

Because a single dataset contains a limited amount of data, it is impractical to train both a palm translation network and a recognition CNN on mutually exclusive training sets. Therefore, for convenience of experimentation, we adopt E-BOCV, a training-free recognition method with high performance, as the recognition method in our framework.

2.2. Image-to-Image Translation

In recent years, image-to-image translation has become widespread, thanks to the rise of deep learning and generative adversarial networks (GANs). Pix2Pix [16] has been shown to be effective in various image-to-image translation tasks, such as synthesizing photos from label maps and objects from edge maps; however, it requires paired ground-truth images for training. Subsequently, Zhu et al. proposed CycleGAN [4], which achieves high-quality translation without paired training data. Building on Coupled GAN, Liu et al. [17] proposed an unsupervised image translation framework based on a shared latent space assumption.

The face is another biometric trait widely used for identification, and matching face images captured under different lighting conditions is challenging. To match NIR and visible light (VIS) face images, Juefei-Xu et al. developed a method that reconstructs VIS images in the NIR domain and vice versa [18]. A system capable of heterogeneous cross-spectral and cross-distance face matching was reported in [19], in which high-quality face images are restored from degraded probe images. He et al. introduced an inpainting component and a warping procedure to synthesize VIS faces for recognition [20].

Compared with face recognition, palmprint recognition relies more heavily on texture. Therefore, methods designed for cross-spectral face recognition may not be suitable for palmprints.

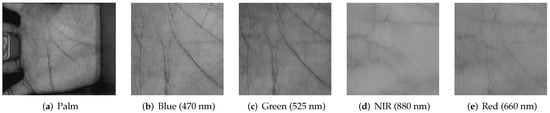

2.3. Multispectral Palmprint Dataset

The PolyU multispectral dataset [21] is a commonly used database. It contains images of 500 palms from 250 people captured under four illuminations (blue, green, red, and NIR), with 6000 images per illumination. The images were captured in two sessions about 9 days apart. Because region of interest (ROI) extraction is commonly part of preprocessing, ROI images extracted with the algorithm described in [11] are also released. An example from the dataset is shown in Figure 2.

Figure 2.

Example from the PolyU multispectral palmprint dataset: (a) a whole-palm image; (b–e) region of interest (ROI) images in different spectra.

CASIA-MS-PalmprintV1 [22] is a multispectral palmprint database built by the Chinese Academy of Sciences’ Institute of Automation (CASIA). It contains 7200 palm images of 100 people captured under 6 spectral illuminations. Because the collection device has no pegs, palm postures and positions are not restricted. Consequently, images of the same palm taken under different illuminations in the same session are not aligned.

There are also other multispectral datasets, such as the semi-uncontrolled and uncontrolled palmprint databases established by Shao et al. [3]. Because these datasets differ in modality, and because of space limitations, we conduct all experiments in this paper on the PolyU multispectral dataset.

3. Method

In this section, we first illustrate the importance of palmprint translation. Then, to determine which spectrum should serve as the source, we propose a comparison method based on StarGAN, a successful multi-domain image-to-image translation framework. Finally, we present PT-net, which is designed for palmprint translation.

3.1. Necessity of Palmprint Translation

E-BOCV [7] is a successful palmprint recognition method in which Gabor filters along six orientations extract features and fragile bits are eliminated, making it both robust and accurate. Although it performs well when all palmprint images are in the same spectrum, its performance is questionable when the registration and verification images are captured in different spectra, i.e., in the cross-spectral task. Therefore, we conduct a test to determine whether palm image translation is necessary.

In this test, images of all 500 palms in the PolyU multispectral dataset [21] are used, and the ROIs are extracted following [11]. The first image of the first session is used for registration, while all images of the second session are used as probes. The corresponding Top-1 errors are listed in Table 1. For convenience, we denote the Top-1 error with registration spectrum A and verification spectrum B as “Error-A2B”. As the table shows, the Top-1 errors of cross-spectral recognition are always higher than those of single-spectral recognition, which is expected because the palmprint recognition algorithm is designed for the single-spectrum setting. Specifically, Error-Blue2Green and Error-Green2Blue are 1.00% and 0.83%, respectively, which are relatively low compared with the others. These results are reasonable because images captured under blue and green light look similar and reveal similar surface texture features. In contrast, Error-NIR2Blue, Error-NIR2Green, Error-Green2NIR, and Error-Blue2NIR are all above 20%, which is unacceptably high and indicates a large feature gap between these spectrum pairs. Interestingly, the Top-1 errors involving the red spectrum are only moderate, which can be attributed to red images containing both texture and vein information.
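For readers reproducing Table 1, the Top-1 error can be read as the fraction of probe images whose nearest registered template belongs to a different palm. The sketch below assumes a precomputed probe-by-gallery distance matrix (for example, E-BOCV matching distances, where smaller means more similar) with one registered template per palm. The function and variable names are hypothetical, and the toy numbers are illustrative only.

```python
import numpy as np


def top1_error(distances, gallery_ids, probe_ids):
    """distances[i, j]: matching distance between probe i and gallery template j
    (smaller = more similar). Returns the fraction of probes whose closest
    gallery template has a different identity."""
    distances = np.asarray(distances)
    predicted = np.asarray(gallery_ids)[np.argmin(distances, axis=1)]
    return float(np.mean(predicted != np.asarray(probe_ids)))


# Toy example: 3 registered palms, 4 probes; the last probe (palm 2)
# is closest to palm 0's template and is therefore misidentified.
d = np.array([[0.10, 0.40, 0.45],
              [0.42, 0.12, 0.44],
              [0.41, 0.43, 0.15],
              [0.20, 0.38, 0.35]])
print(top1_error(d, gallery_ids=[0, 1, 2], probe_ids=[0, 1, 2, 2]))  # 0.25
```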

Table 1.

Top-1 error of cross-spectral E-BOCV without palmprint translation. RegSpec denotes the spectrum used in registration and RecSpec the spectrum used in recognition.

Because the domain gap between NIR and blue is large, we choose the NIR–blue spectrum pair as our primary translation pair.

3.2. Translation Direction

As mentioned in the Introduction, another problem is to determine the translation direction. In order to achieve a better verification performance, it is intuitive to choose a translation direction where the image translation performs better.

The key consideration in choosing the translation direction is that source-domain images should retain more information in the target domain after translation. The PolyU dataset, for example, has images from four domains, which form six spectrum pairs. To assess the spectrum pairs fairly, we propose an evaluation framework based on a single translation model, StarGAN [23]. StarGAN is a generative adversarial network for multi-domain image-to-image translation that has proven effective for facial attribute transfer and other tasks. After StarGAN is trained on the multispectral palmprint images, the translation quality of the different spectrum pairs is compared. In this process, the structural similarity index (SSIM) [24] between the translated images and their counterparts in the target spectrum is used as the evaluation metric. SSIM compares local luminance, contrast, and structure rather than raw pixel differences, which makes it suitable for evaluating translation quality when reconstructing texture and other structural information matters most. However, as the experiment in Section 4.1 shows, StarGAN's performance for cross-spectral recognition is unsatisfactory; thus, it is used only to determine the translation direction.
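The direction rule can be sketched as follows: compute a mean SSIM for each translated/ground-truth pair, then keep the direction with the higher score. This is a minimal NumPy sketch, not the evaluation code used in this work. It uses a 7 × 7 uniform window for brevity, whereas Wang et al. [24] use an 11 × 11 Gaussian window. It assumes images scaled to [0, 1]. The `mean_ssim` dictionary and its scores are hypothetical placeholders, not the values in Table 2.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x, y, data_range=1.0, win=7):
    """Mean SSIM over all win x win windows (uniform weighting)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2  # stabilising constants from Wang et al.
    c2 = (0.03 * data_range) ** 2
    wx = sliding_window_view(x, (win, win))
    wy = sliding_window_view(y, (win, win))
    mu_x = wx.mean(axis=(-2, -1))
    mu_y = wy.mean(axis=(-2, -1))
    var_x = wx.var(axis=(-2, -1))
    var_y = wy.var(axis=(-2, -1))
    cov = (wx * wy).mean(axis=(-2, -1)) - mu_x * mu_y
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (var_x + var_y + c2)
    return float(np.mean(luminance * contrast_structure))


def better_direction(mean_ssim, a, b):
    """Pick the translation direction with the higher mean SSIM for spectra a, b."""
    return f"{a}2{b}" if mean_ssim[(a, b)] >= mean_ssim[(b, a)] else f"{b}2{a}"


# Hypothetical scores, for illustration only.
scores = {("NIR", "Blue"): 0.62, ("Blue", "NIR"): 0.55}
print(better_direction(scores, "Blue", "NIR"))  # NIR2Blue
```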

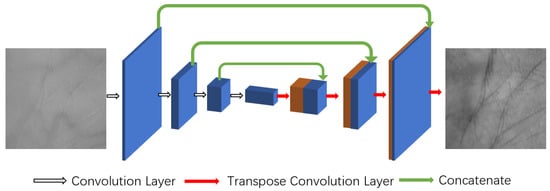

3.3. Palmprint Translation Network

Unlike the existing methods [3,5], and because we define the problem differently, we adopt a CNN instead of a GAN. The proposed palmprint translation network (PT-net) uses a U-Net-like [25] structure similar to that of [16], but with fewer layers and filters, so PT-net is much shallower and has fewer parameters. The overall network structure is shown in Figure 3.

Figure 3.

PT-net structure.

In the proposed network, leaky ReLU and batch normalization are applied after each convolutional layer. In addition, the input data are normalized to the range [−1, 1], and a tanh activation is applied to the network output to keep it in the same range.
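The input and output scaling can be sketched as follows. This minimal example assumes 8-bit grayscale ROIs. It maps pixel values linearly to [−1, 1] before they enter the network and maps tanh outputs back to displayable pixel values afterward. The helper names are hypothetical.

```python
import numpy as np


def to_network_range(img_uint8):
    """Map 8-bit pixel values [0, 255] linearly to [-1, 1]."""
    return img_uint8.astype(np.float32) / 127.5 - 1.0


def from_network_range(output):
    """Map tanh outputs in [-1, 1] back to 8-bit pixel values."""
    return np.clip(np.round((output + 1.0) * 127.5), 0, 255).astype(np.uint8)
```

Because tanh saturates at ±1, the network output can never leave the range used for the normalized targets, so no additional clipping is needed during training.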

The loss function is introduced below. First, as in other image manipulation methods, a pixel-wise reconstruction loss is adopted, as shown in Equation (1).

where I is the output of the network and stands for the ground truth target image.

Line and orientation features are critical to palmprint recognition algorithms [8]. Inspired by the strong feature extraction performance of Gabor filters with different orientations, a Gabor loss was introduced in [26] to minimize the gap between the orientation features of target images and those of translated images. The Gabor loss has been shown to effectively preserve texture and orientation features during translation. It can be expressed as follows:

in which denotes the convolution result using the Gabor filter with the orientation of [11], and M and N denote the height and width of the images, respectively. The total loss is therefore

where is a hyperparameter controlling the trade-off between the overall and line reconstruction.
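Equations (1)–(3) are not reproduced in this text, so the following NumPy sketch only illustrates their structure under stated assumptions. It assumes an L1 pixel loss for Equation (1) and, for Equation (2), a mean absolute difference of Gabor responses averaged over the M × N pixels and all orientations. Equation (3) is their weighted sum, with the hypothetical parameter `lam` playing the role of the trade-off hyperparameter. The Gabor parameters are also assumptions. In actual training, the same computation would be expressed in a differentiable framework such as TensorFlow.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_bank(n_orient=6, size=17, sigma=3.0, wavelength=8.0):
    """Zero-mean, even-symmetric Gabor kernels at n_orient orientations."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    bank = []
    for k in range(n_orient):
        theta = k * np.pi / n_orient
        x_r = x * np.cos(theta) + y * np.sin(theta)
        y_r = -x * np.sin(theta) + y * np.cos(theta)
        g = np.exp(-(x_r ** 2 + y_r ** 2) / (2 * sigma ** 2)) * np.cos(2 * np.pi * x_r / wavelength)
        bank.append(g - g.mean())
    return bank


def filter_same(img, kernel):
    """'Same'-size filtering with reflect padding (kernel is point-symmetric)."""
    pad = kernel.shape[0] // 2
    windows = sliding_window_view(np.pad(img, pad, mode="reflect"), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def pixel_loss(output, target):
    """Assumed L1 reconstruction term (Equation (1))."""
    return float(np.mean(np.abs(output - target)))


def gabor_loss(output, target, bank):
    """Assumed Gabor term: mean |response difference| over M x N pixels and orientations."""
    diffs = [np.mean(np.abs(filter_same(output, k) - filter_same(target, k))) for k in bank]
    return float(np.mean(diffs))


def total_loss(output, target, lam=0.5, bank=None):
    """Weighted sum of the two terms; lam is the trade-off hyperparameter."""
    bank = gabor_bank() if bank is None else bank
    return pixel_loss(output, target) + lam * gabor_loss(output, target, bank)
```

Setting `lam=0` recovers the pure pixel loss, which corresponds to the "no Gabor loss" baseline in Section 4.4.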

4. Experiments

4.1. Comparison of Spectral Pairs

As described in Section 3.2, the spectra are treated as different domain attributes in StarGAN [23]. For this palmprint translation task, we randomly split the 500 palms in the PolyU dataset into 400 for training and 100 for testing, and use the original StarGAN implementation. Figure 4 shows example translation results.
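Splitting by palm rather than by image ensures that no test palm is seen during training. A minimal sketch of such a 400/100 split follows. The seed and function name are hypothetical, and the exact randomization used in the experiments is not specified in the text.

```python
import numpy as np


def split_palms(palm_ids, n_train=400, seed=0):
    """Randomly partition unique palm identities into disjoint train/test sets."""
    rng = np.random.default_rng(seed)
    ids = rng.permutation(np.unique(palm_ids))
    return set(ids[:n_train].tolist()), set(ids[n_train:].tolist())


train_palms, test_palms = split_palms(list(range(500)))
print(len(train_palms), len(test_palms))  # 400 100
```

All images of a palm (every spectrum and both sessions) then follow that palm into its assigned subset.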

Figure 4.

StarGAN results: (a) the NIR input palmprint image; (b–e) the results translated to the blue, green, NIR, and red spectra.

The SSIM values between the translated ROIs and the ground truth are listed in Table 2. A higher SSIM indicates that translation in that direction is more feasible, which makes SSIM a useful guideline for choosing the translation direction. For example, given images in the blue and NIR spectra, NIR should be chosen as the source domain and blue as the target, because the SSIM of NIR-to-blue translation is higher.

Table 2.

SSIM results of the spectrum-pair evaluation using StarGAN. The higher value of each pair is shown in bold.

This experiment also supports our analysis in Section 3.1. The blue and green spectra contain similar information, resulting in similar translation quality. NIR performs best as the source, which may be attributed to its containing both texture and vein features. The red spectrum is intermediate: as a source, it outperforms green and blue but not NIR.

As shown in Figure 4, although the translated images generally resemble the target spectrum, the results are still unsatisfactory: the subcutaneous veins present in the NIR input remain visible in the visible-spectrum outputs. As a generic translation network, StarGAN does not exploit aligned image pairs, and more accurate translation may be achievable with alignment.

We also test the recognition performance after StarGAN-based “NIR2Blue” translation, using the following protocol. For registration, images of all 500 palms are used; specifically, the first ROI image of the first session in the target spectrum. For recognition, only source-spectrum images from the test set, which contains 100 palms, are used; specifically, all six palmprint images from the second session serve as probes. The resulting Top-1 error is 15.29%. Although StarGAN translation helps to some extent, the error remains high, which further suggests that palmprint translation requires a specifically designed network.

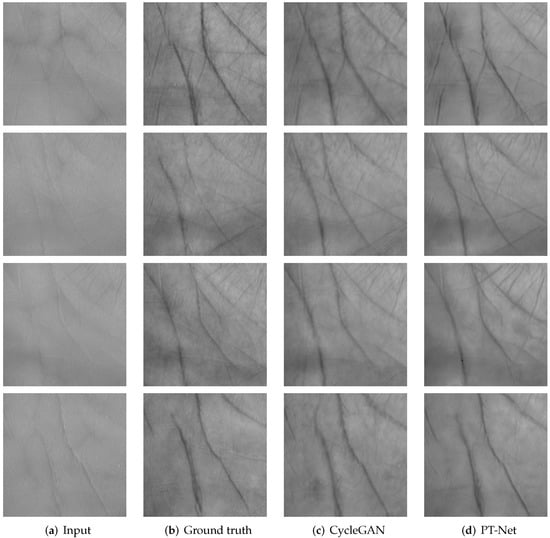

4.2. Recognition after Translation

In this work, we focus on the “NIR2Blue” translation task, which is challenging because the large domain gap described above leads to poor performance in plain recognition (see Table 1).

The training/test split is the same as in Section 4.1. Data augmentation consists of random crops of size 64 and random horizontal and vertical flips. The whole training pipeline is implemented in TensorFlow [27]. PT-net is trained for 400 epochs with the Adam optimizer and a linear learning rate scheduler whose initial learning rate is set to 1 × 10. As explained above, the Top-1 error is evaluated on the translated test set.
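Because PT-net is trained with pixel-wise supervision, any crop or flip must be applied identically to the NIR input and its blue target. Otherwise, the pair would no longer correspond pixel by pixel. The following minimal NumPy sketch assumes square 64 × 64 crops and a flip probability of 0.5 for each axis. The function name is hypothetical.

```python
import numpy as np


def paired_augment(src, tgt, crop=64, rng=None):
    """Random crop plus random horizontal/vertical flips, applied identically
    to an aligned (source, target) pair, e.g. NIR input and blue target."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = src.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    s = src[top:top + crop, left:left + crop]
    t = tgt[top:top + crop, left:left + crop]
    if rng.random() < 0.5:  # horizontal flip of both images
        s, t = s[:, ::-1], t[:, ::-1]
    if rng.random() < 0.5:  # vertical flip of both images
        s, t = s[::-1, :], t[::-1, :]
    return s, t
```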

Because our formulation of the cross-spectral recognition problem differs from that of [3], a fair comparison is not straightforward. PalmGAN also includes a deep hashing network to re-identify palms, whereas we focus mainly on spectrum translation. Therefore, for comparison, we reimplement the translation part of PalmGAN, which is CycleGAN [4]. Sample translation results are shown in Figure 5, and recognition results are listed in Table 3. PT-net achieves a Top-1 error of 3.33%, a relative improvement of 16.7% over CycleGAN, with fewer parameters and a simpler training scheme.

Figure 5.

Translation results of CycleGAN and PT-net.

Table 3.

Results of NIR2Blue recognition. PT-net refers to PT-net trained with aligned data, introduced in Section 4.3.

4.3. Data Alignment

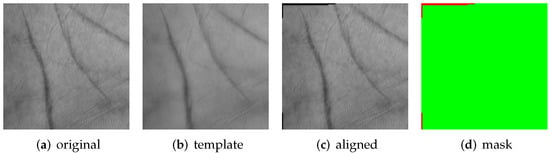

In addition, we find that even though images in different spectra are collected within a short time, the small interval between captures can still cause minor palm deformation or displacement, so the images may not be well aligned. Because PT-net is trained under pixel-wise supervision, data misalignment leads to blurry translation. To alleviate this problem, DIS optical flow [28] is used to warp the training data in the target domain, i.e., the blue spectrum. Because of the large domain gap between images captured in the NIR and blue spectra, aligning the training pairs directly is infeasible. Thus, we first train a PT-net with the original data and then align each blue-spectrum image to its counterpart, namely the output of the trained PT-net. A new PT-net is then trained with the aligned data. After warping, some areas around the border are filled with invalid black pixels (see Figure 6), which should be masked out during training. The final loss function is:

where M is the mask consisting of zeros and ones, and ⊙ denotes the Hadamard (element-wise) product. An example of a generated mask is shown in Figure 6.
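The warping-and-masking step can be sketched as follows, assuming a dense flow field is already available (for instance, estimated with a DIS implementation such as the one in OpenCV). The flow is assumed to use the backward convention `warped(y, x) = image(y + dy, x + dx)` with nearest-neighbour sampling, and pixels that sample outside the image are marked invalid in the mask. Normalizing the masked L1 loss by the number of valid pixels is an assumption, since the exact form of the equation above is not reproduced here. All names are hypothetical.

```python
import numpy as np


def warp_with_mask(image, flow):
    """Backward-warp `image` with a dense flow (H, W, 2) holding (dx, dy),
    using nearest-neighbour sampling. Returns the warped image (invalid
    pixels set to 0) and a float mask (1 = valid, 0 = outside the image)."""
    h, w = image.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.rint(xs + flow[..., 0]).astype(int)
    src_y = np.rint(ys + flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    warped = np.zeros_like(image)
    warped[valid] = image[src_y[valid], src_x[valid]]
    return warped, valid.astype(float)


def masked_l1(output, target, mask):
    """L1 loss over valid pixels only: sum(M * |I - T|) / sum(M)."""
    return float(np.sum(mask * np.abs(output - target)) / max(np.sum(mask), 1.0))
```

Pixels outside the mask contribute neither to the loss nor to its normalization, so the black border left by warping cannot be learned as a target.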

Figure 6.

Example of data alignment. The template is the output of PT-net trained with the original PolyU dataset. (a) Original; (b) template; (c) aligned; (d) mask. In (d), ones are shown as green pixels and zeros as red pixels. Even when the two images are severely misaligned, our method can effectively align the data.

As shown in row 5 of Table 3, the error rate is reduced by 1.5 percentage points after applying data alignment. To further investigate the effect of data misalignment and of the alignment method, we compare the performance of different models when the displacement problem is more severe. We apply random affine transformations to the training data, consisting of random rotations in the range of −5 to 5 degrees and random translations within a 3-pixel range. Without image alignment, the performance of CycleGAN and PT-net drops dramatically, with Top-1 error rates of 17% and 21.5%, respectively. The degradation of CycleGAN may be due to the increased uncertainty of translation, whereas the degradation of PT-net can be attributed simply to incorrect (misaligned) supervision targets. After the jittered data are aligned, the error rate of PT-net drops to 6.83%, which illustrates the effectiveness of image alignment. The related numbers are shown in Table 4. Note that CycleGAN does not require paired, aligned data, so data alignment is not applied in its training.
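The jitter described above can be sketched as a random 2 × 3 affine matrix, the form accepted by, for example, OpenCV's `warpAffine`. This minimal sketch assumes that the rotation is about the image centre, that the angle is drawn uniformly from [−5°, 5°], and that "a 3-pixel range" means a shift drawn uniformly from [−3, 3] pixels along each axis. These are interpretations, not the documented implementation.

```python
import numpy as np


def random_jitter_matrix(h, w, max_deg=5.0, max_shift=3.0, rng=None):
    """2x3 affine matrix: rotation about the image centre by a random angle in
    [-max_deg, max_deg] degrees, then a random shift in [-max_shift, max_shift] px."""
    rng = np.random.default_rng() if rng is None else rng
    angle = np.deg2rad(rng.uniform(-max_deg, max_deg))
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    c, s = np.cos(angle), np.sin(angle)
    centre = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    rot = np.array([[c, -s], [s, c]])
    # Maps a point p to R (p - centre) + centre + t.
    offset = centre - rot @ centre + np.array([tx, ty])
    return np.hstack([rot, offset[:, None]])
```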

Table 4.

Results of different models trained with jittered data.

4.4. Gabor Loss

To confirm that the Gabor loss is effective in PT-net training, we also present in Table 5 the results of models trained with different in Equation (3). Aligned data are used in these experiments. The recognition performance is best with set to 0.5, reaching a Top-1 error rate of 1.83%, a relative reduction of 91% compared with the original E-BOCV.
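This paper reports both absolute changes (percentage points) and relative reductions, and the two can differ considerably. The short sketch below shows both calculations, using the 3.33% and 1.83% PT-net Top-1 errors quoted in Sections 4.2 and 4.4 purely as arithmetic inputs. The helper names are hypothetical.

```python
def relative_reduction(baseline_error, new_error):
    """Relative decrease (baseline - new) / baseline, as a fraction."""
    return (baseline_error - new_error) / baseline_error


def absolute_reduction(baseline_error, new_error):
    """Absolute decrease, in the same units as the inputs (percentage points)."""
    return baseline_error - new_error


print(round(absolute_reduction(3.33, 1.83), 2))  # 1.5 (percentage points)
print(round(relative_reduction(3.33, 1.83), 3))  # 0.45 (about 45% relative)
```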

Table 5.

Comparison of different ; 0 means no Gabor loss.

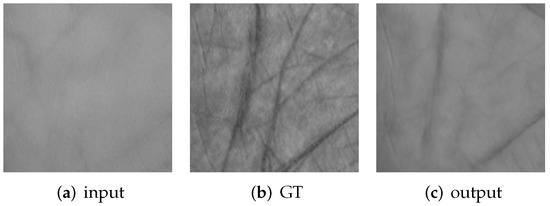

4.5. Failure Cases

Figure 7 shows one of the failure cases. The input NIR image shows less texture than the others, possibly because of an illumination glitch, which leads to a blurry translation result.

Figure 7.

Failure case example. GT denotes the ground truth image in blue spectrum.

5. Conclusions

In this paper, we break down the cross-spectral palmprint recognition problem into palmprint image-to-image translation and recognition. Accordingly, we propose a framework comprising translation direction determination and palmprint translation using PT-net. Based on StarGAN, we propose a method that evaluates the two possible translation directions of a spectrum pair and selects the easier one, reducing the difficulty of translation. For palmprint translation, we introduce PT-net with a Gabor loss. Experiments show that our framework performs well in NIR-to-blue translation, reducing the Top-1 error rate by a relative 91%.

Author Contributions

Conceptualization, Y.M.; methodology, Y.M. and Z.G.; writing—original draft preparation, Y.M.; writing—review and editing, Z.G.; supervision, Z.G.; funding acquisition, Z.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by the Natural Science Foundation of China (NSFC) (No. 61772296).

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: https://www4.comp.polyu.edu.hk/~biometrics/MultispectralPalmprint/MSP.htm/.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Lu, G.; Zhang, D.; Wang, K. Palmprint recognition using eigenpalms features. Pattern Recognit. Lett. 2003, 24, 1463–1467.

- Fei, L.; Xu, Y.; Tang, W.; Zhang, D. Double-orientation code and nonlinear matching scheme for palmprint recognition. Pattern Recognit. 2016, 49, 89–101.

- Shao, H.; Zhong, D.; Li, Y. PalmGAN for Cross-Domain Palmprint Recognition. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 1390–1395.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Shao, H.; Zhong, D.; Du, X. Cross-Domain Palmprint Recognition Based on Transfer Convolutional Autoencoder. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1153–1157.

- Zhu, Q.; Xu, N.; Zhang, Z.; Guan, D.; Wang, R.; Zhang, D. Cross-spectral palmprint recognition with low-rank canonical correlation analysis. Multimed. Tools Appl. 2020, 79, 33771–33792.

- Zhang, L.; Li, H.; Niu, J. Fragile bits in palmprint recognition. IEEE Signal Process. Lett. 2012, 19, 663–666.

- Kong, A.; Zhang, D.; Kamel, M. A survey of palmprint recognition. Pattern Recognit. 2009, 42, 1408–1418.

- Huang, D.S.; Jia, W.; Zhang, D. Palmprint verification based on principal lines. Pattern Recognit. 2008, 41, 1316–1328.

- Morales, A.; Ferrer, M.A.; Kumar, A. Towards contactless palmprint authentication. IET Comput. Vis. 2011, 5, 407–416.

- Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

- Kong, A.K.; Zhang, D. Competitive coding scheme for palmprint verification. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR 2004), Cambridge, UK, 23–26 August 2004; Volume 1, pp. 520–523.

- Guo, Z.; Zhang, D.; Zhang, L.; Zuo, W. Palmprint verification using binary orientation co-occurrence vector. Pattern Recognit. Lett. 2009, 30, 1219–1227.

- Minaee, S.; Wang, Y. Palmprint recognition using deep scattering convolutional network. arXiv 2016, arXiv:1603.09027.

- Liu, D.; Sun, D. Contactless palmprint recognition based on convolutional neural network. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1363–1367.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Liu, M.Y.; Breuel, T.; Kautz, J. Unsupervised image-to-image translation networks. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 700–708.

- Juefei-Xu, F.; Pal, D.K.; Savvides, M. NIR-VIS heterogeneous face recognition via cross-spectral joint dictionary learning and reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 141–150.

- Kang, D.; Han, H.; Jain, A.K.; Lee, S.W. Nighttime face recognition at large standoff: Cross-distance and cross-spectral matching. Pattern Recognit. 2014, 47, 3750–3766.

- He, R.; Cao, J.; Song, L.; Sun, Z.; Tan, T. Cross-spectral face completion for nir-vis heterogeneous face recognition. arXiv 2019, arXiv:1902.03565.

- PolyU Multispectral Palmprint Dataset. Available online: https://www4.comp.polyu.edu.hk/~biometrics/MultispectralPalmprint/MSP.htm/ (accessed on 8 April 2019).

- CASIA-MS-PalmprintV1 Collected by the Chinese Academy of Sciences’ Institute of Automation (CASIA). Available online: http://biometrics.idealtest.org/ (accessed on 8 April 2019).

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8789–8797.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Chen, S.; Chen, S.; Guo, Z.; Zuo, Y. Low-resolution palmprint image denoising by generative adversarial networks. Neurocomputing 2019, 358, 275–284.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, 2015. Software. Available online: tensorflow.org (accessed on 8 April 2019).

- Kroeger, T.; Timofte, R.; Dai, D.; Van Gool, L. Fast optical flow using dense inverse search. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 471–488.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).