Generating Synthetic Images for Healthcare with Novel Deep Pix2Pix GAN

Abstract

1. Introduction

2. Related Works

3. Methods

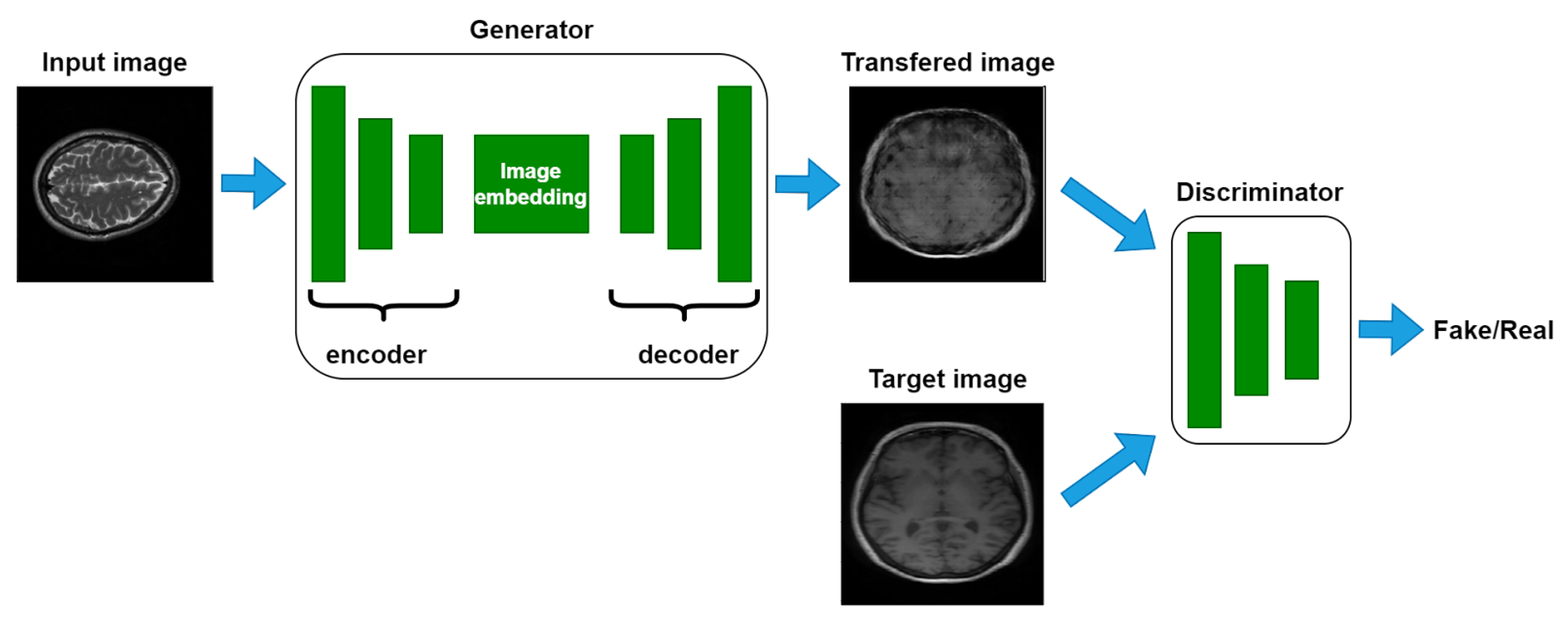

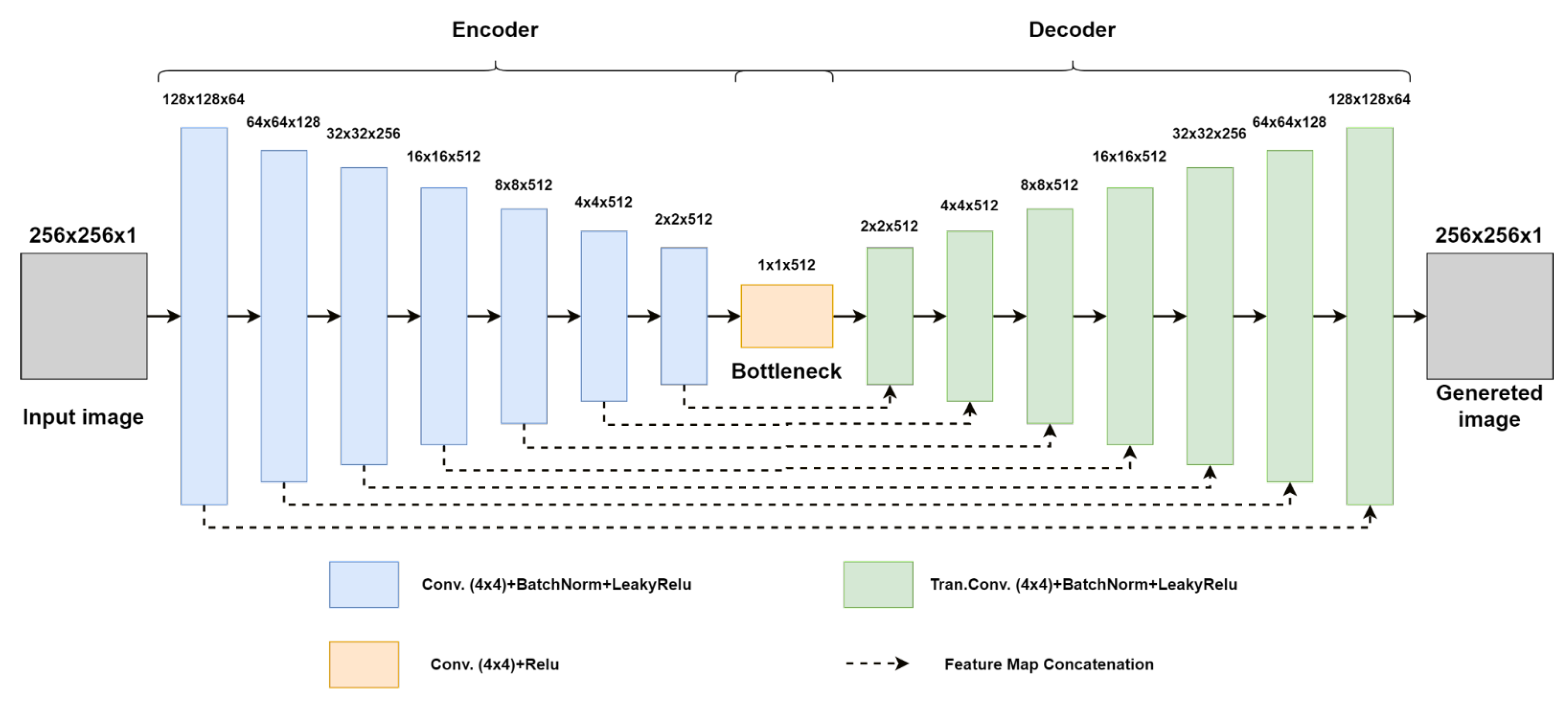

3.1. GAN Neural Network

3.2. CycleGAN

3.3. Pix2Pix GAN

| Algorithm 1. Pseudocode for training the Pix2Pix GAN. k is the number of network updates per training iteration. |
|---|
| 1: for number of training… do |
| 2: for k steps… do |
| 3: Sample a minibatch of m samples from the data distribution. |
| 4: Sample a minibatch of m noise samples from the noise prior. |
| 5: Sample a minibatch of m samples from the data-generating distribution. |
| 6: Update the discriminator by ascending its stochastic gradient: |
| 7: end for |
| 8: Sample a minibatch of m noise samples from the noise prior. |
| 9: Update the generator by descending its stochastic gradient: |
| 10: end for |
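The alternating schedule of Algorithm 1 (k discriminator updates, then one generator update) can be illustrated on a toy scalar problem. The gradient expressions for steps 6 and 9 did not survive in the listing, so this sketch assumes the standard GAN objective of Goodfellow et al., with the discriminator ascending the mean of log D(x) + log(1 − D(G(z))) and the generator descending the mean of log(1 − D(G(z))). It draws real data once per discriminator step and omits the conditional input that Pix2Pix uses. The function `train_toy_gan`, the linear generator, the logistic discriminator, and the N(4, 0.5) data distribution are all hypothetical choices for illustration, not the networks used in this work.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(iterations=200, k=1, m=16, lr=0.05, seed=0):
    """Alternate k discriminator steps with one generator step.

    Assumed toy models (not from the paper):
      generator      G(z) = a * z + b
      discriminator  D(x) = sigmoid(w * x + c)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0
    w, c = 0.1, 0.0
    d_updates = 0
    g_updates = 0

    for _ in range(iterations):
        for _ in range(k):
            # Minibatch of real samples (assumed data distribution).
            real = [rng.gauss(4.0, 0.5) for _ in range(m)]
            # Minibatch of noise samples, mapped through the generator.
            noise = [rng.gauss(0.0, 1.0) for _ in range(m)]
            fake = [a * z + b for z in noise]

            # Gradient of mean[log D(x) + log(1 - D(G(z)))] w.r.t. w and c.
            grad_w = (sum((1.0 - sigmoid(w * x + c)) * x for x in real)
                      - sum(sigmoid(w * g + c) * g for g in fake)) / m
            grad_c = (sum(1.0 - sigmoid(w * x + c) for x in real)
                      - sum(sigmoid(w * g + c) for g in fake)) / m

            # Discriminator ascends its objective.
            w += lr * grad_w
            c += lr * grad_c
            d_updates += 1

        # Fresh noise minibatch for the generator step.
        noise = [rng.gauss(0.0, 1.0) for _ in range(m)]
        d_fake = [sigmoid(w * (a * z + b) + c) for z in noise]

        # Gradient of mean[log(1 - D(G(z)))] w.r.t. a and b.
        grad_a = sum(-d * w * z for d, z in zip(d_fake, noise)) / m
        grad_b = sum(-d * w for d in d_fake) / m

        # Generator descends its objective.
        a -= lr * grad_a
        b -= lr * grad_b
        g_updates += 1

    return {"a": a, "b": b, "w": w, "c": c,
            "d_updates": d_updates, "g_updates": g_updates}


if __name__ == "__main__":
    print(train_toy_gan())
```

Setting `k` above 1 gives the discriminator several updates per generator update, which is the only role k plays in the loop.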

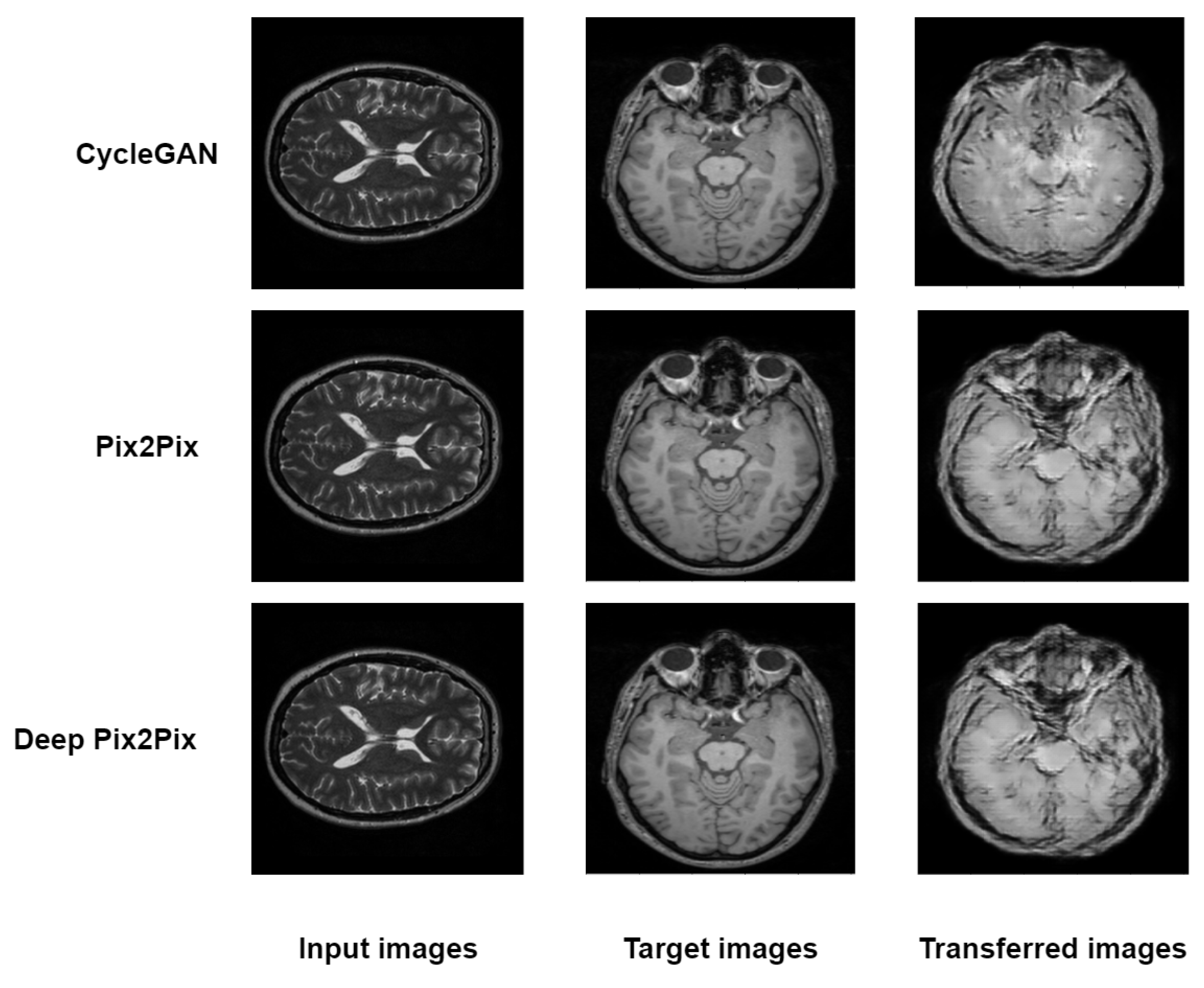

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | MAE | PSNR | SSIM |
|---|---|---|---|
| Cycle GAN | 0.059 | 59.56 | 0.0344 |
| Pix2Pix GAN | 0.057 | 60.51 | 0.354 |
| Deep Pix2Pix GAN | 0.038 | 61.32 | 0.391 |
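For readers unfamiliar with the metrics in the table, the following is a minimal sketch of how MAE and PSNR are commonly computed between a reference image and a synthesized one, using the usual definition PSNR = 10 log10(MAX² / MSE). The pixel range used in the evaluation is not stated here, so `max_value` is an assumed parameter, and the helper names `mae` and `psnr` are hypothetical. Images are treated as flat sequences of pixel intensities. SSIM is omitted because it requires windowed local statistics.

```python
import math


def mae(reference, generated):
    """Mean absolute error between two equally sized pixel sequences."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(r - g) for r, g in zip(reference, generated)) / len(reference)


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB; max_value is the assumed pixel peak."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [0.0, 0.5, 1.0, 0.25]
    gen = [0.1, 0.4, 0.9, 0.35]
    print(mae(ref, gen), psnr(ref, gen, max_value=1.0))
```

Lower MAE and higher PSNR both indicate a closer match to the reference, which is the direction of improvement the table reports for Deep Pix2Pix GAN.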


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aljohani, A.; Alharbe, N. Generating Synthetic Images for Healthcare with Novel Deep Pix2Pix GAN. Electronics 2022, 11, 3470. https://doi.org/10.3390/electronics11213470
