1. Introduction

Integrated development in information technologies have lead to the emergence of cyber-physical systems (CPS). CPS is a modern control system that deeply integrates computation, communication, control technologies (3C) with physical systems. It monitors and controls the physical processes through computing system in real time. As one of the core technologies in industry 4.0 at the current stage, CPS now has a wide application prospect in many fields, including aerospace, civil infrastructure energy, intelligent manufacturing, intelligent transportation, new materials, etc. [

1]. Mass-deployed wireless network in CPS has facilitated production mode and lifestyle, but it has meanwhile brought security risks [

2,

3,

4]. Cyber attackers are able to destroy the physical control process of the system through various attack methods. Frequent CPS safety accidents in recent years have indicated that it is of great significance to explore the security issue of CPS under cyber attacks.

Cyber attacks have become one of the main threats to CPS security. Investigating their characteristics is helpful for better protection of CPS. Typical attacks include denial-of-service (DoS) attack [

5,

6], deception attack [

7,

8] and replay attack [

9,

10]. Among them, the DoS attack is the easiest to implement, but its destructive power is considerable. DoS attacks have the ability to block the data communication between system components by physical layer congestion or transmission layer flooding without any information disclosure and channel monitoring. To measure the damage on CPS performance caused by a DoS attack, the state estimation technique is a common method which reflects the internal control information of system [

11,

12]. Thus, the research task of this paper mainly focuses on the issue of secure state estimation in CPS under DoS attack.

Current studies mainly consider the secure state estimation of CPS under DoS attack from three perspectives: sensor, attacker and the game between two sides. From the perspective of sensors, reasonable and effective defending strategies should be studied to minimize the impact of state estimation caused by DoS attacks. To solve the CPS security problem under continuous random DoS attacks, Ref. [

13] designed a dynamic output feedback controller to provide control inputs data. To achieve stable control for the CPS under DoS jamming attack, controllers with state feedback and output feedback were designed in [

14] based on a passive attack-tolerant mechanism. Directed against DoS attacks with different energy levels, a control method combined active and passive attack tolerance strategies was proposed in [

15], which could resist the impact of DoS attacks on the CPS effectively. From the perspective of attacker, some researchers considered how to design attack strategies to deteriorate the performance of the system as much as possible. An optimal attack strategy was proposed based on local Kalman filtering in [

16,

17] when DoS attack energy was limited. The secure state estimation problem of CPS with two subsystems was researched in [

18], where the optimal attack scheduling was proposed. The works in [

19,

20] considered the optimal power allocation of DoS attacker, and the multi-agent issue was involved. Furthermore, the goals of both sides are opposite under cyber attacks. The combat between system defender and attacker could be regarded as a game process. The study in [

21] considered the remote state estimation problem of CPS under DoS attack based on signal-to-interference-plus-noise ratio using the two-player game method. In [

22], a static cooperative game was formulated for the collaboration among multiple DoS attackers, while a non-cooperative game described the competition among the multiple controllers. On the basis of above research, this paper addresses the issue within the unified framework from three different perspectives to develop a universal solution.

The above literature mostly adopts the methods of cybernetics or game theory. CPSs in reality are mostly complex nonlinear systems, which are complicated in terms of constructing the model and designing the control law. Reinforcement learning (RL) provides an innovative approach to addressing this problem. With the rapid development of artificial intelligence in recent years, RL algorithms have been widely used in many fields due to their model-free learning mechanism [

23,

24,

25,

26]. The extension of the RL method on secure state estimation problem in CPS is reasonable as the attack and defense issue of CPS under cyber attacks could be described by a Markov decision process (MDP). Relevant studies have proven the practicability [

27,

28,

29,

30]. In [

27], the action-state function in RL framework was trained to find the optimal energy management strategy of a hybrid electric system.

Q-learning, as a typical off-policy RL algorithm, was modified in [

29] to find the Nash equilibrium policies between sensors and attackers. The problem of CPS against DoS attack with reliable channel and unreliable channel was addressed in [

30], where the RL method was proved to find the optimal policy selection problem of attacker and sensor. In the framework of RL, the action selection strategy is an important component.

-greedy [

31] and Ant-TD [

32] combine both probabilistic and greedy rules to balance exploration and exploitation. EFR-ESO [

33] could solve the high-dimensional selection problem when dealing with complex systems. In this paper,

-greedy is chosen as the main action selection strategy due to its simplicity and versatility.

Furthermore, RL algorithms are divided into on-policy and off-policy due to different iteration patterns. Normally, on-policy algorithms focus on stability, while off-policy algorithms emphasize efficiency [

34]. To investigate the effect of different policy iteration patterns on algorithm performance, this paper applies the framework of two classical RL algorithms:

Q-learning (off-policy) and SARSA (on-policy), to the security issue of CPS against DoS attack. The confrontation game process between the attacker and the defender under cyber attack are modeled as an MDP, and then the corresponding RL method is proposed in this paper.

The research task of this paper is to estimate the secure state of CPS based on RL under a DoS attack. Firstly, the interactive combat process of CPS defender and DoS attacker are established comprehensively, where the energy constraints of sensor and attacker are taken into account. Moreover, the Kalman filter is involved as the evaluation standard of CPS security issues. Then, the transition of state estimation error covariance against the DoS attack is characterized as an MDP to lay a foundation for RL algorithm design. The framework of Q-learning and SARSA, which are the representatives of off-policy and on-policy algorithms, respectively, is introduced into secure state estimation. The contributions of this paper are the following:

(i) Kalman filter is combined with reinforcement learning to estimate the secure state of CPS under cyber attacks. The state estimation error covariance is selected as the state in reinforcement learning algorithm, and its transition is described as a Markov decision process. The output of this artificial intelligence method could guide the sensor or attacker to find optimal countermeasure;

(ii) Based on the framework of Q-learning and SARSA, the secure state estimation algorithm is designed with reinforcement learning from three different perspectives: defender, attacker and the game of both opposite sides;

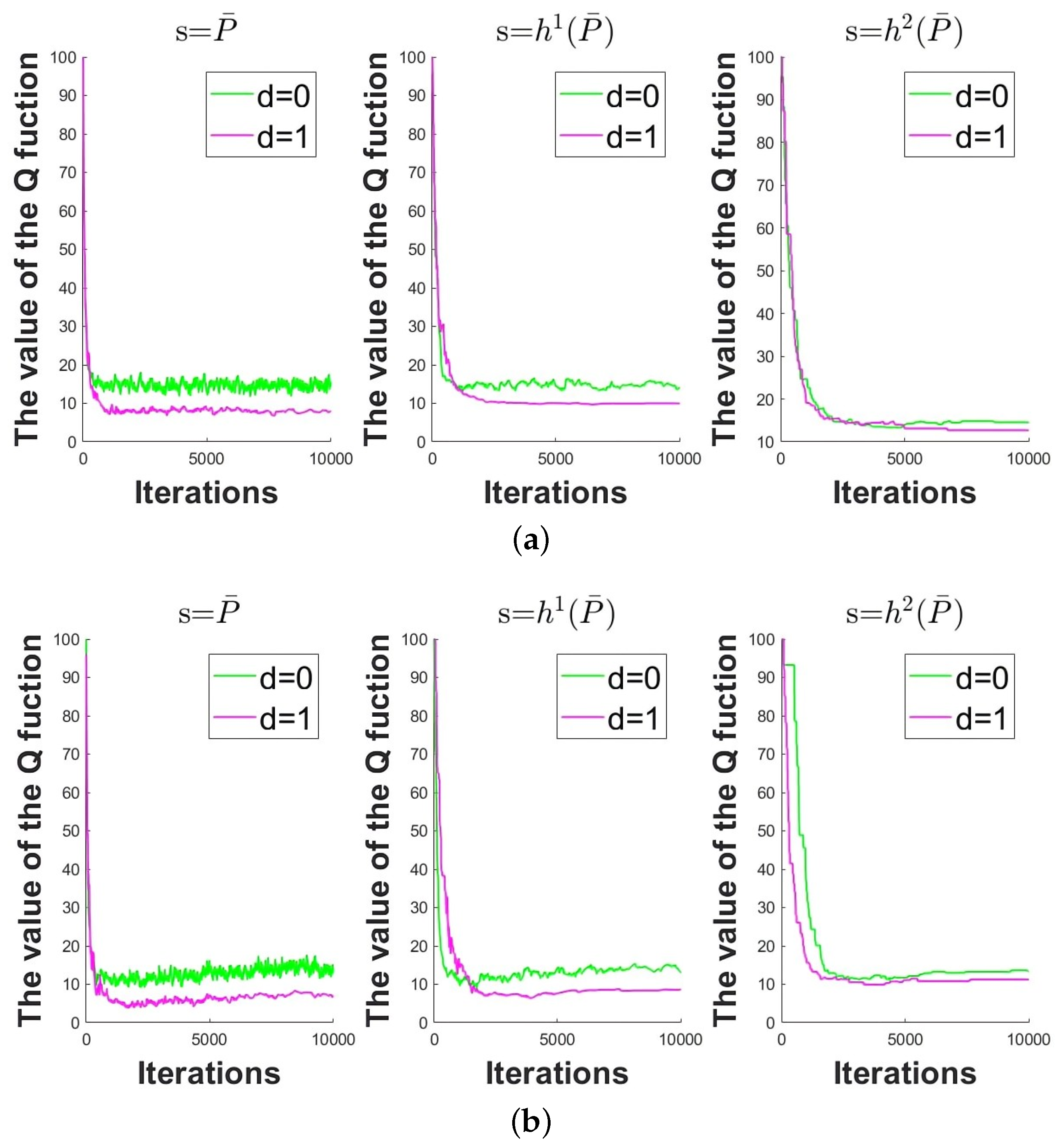

(iii) Through comparative analysis of the two algorithms, the differences between Q-learning and SARSA are verified. It is demonstrated that the SARSA method is a little more conservative than the Q-learning method due to the lower value in the Q-table.

The rest of this paper is organized as follows.

Section 2 introduces the relevant theoretical background, including two types of RL algorithms and the concept of two-player zero-sum game. In

Section 3, the framework of CPS under DoS attack is formed, where the CPS structure and DoS attack model are established. In

Section 4, an MDP is set up to describes the RL problem under the interactive game process between sensor and attacker. Based on the framework of

Q-learning and SARSA, the RL algorithms are designed to find the optimal policy and the Nash equilibrium policy.

Section 5 presents the simulation results and proves the effectiveness of the algorithms. Different algorithms are compared and analyzed according to numerical values of

Q-table.

Section 6 presents conclusions on the research task and achievements of the whole paper and identifies the future research direction.

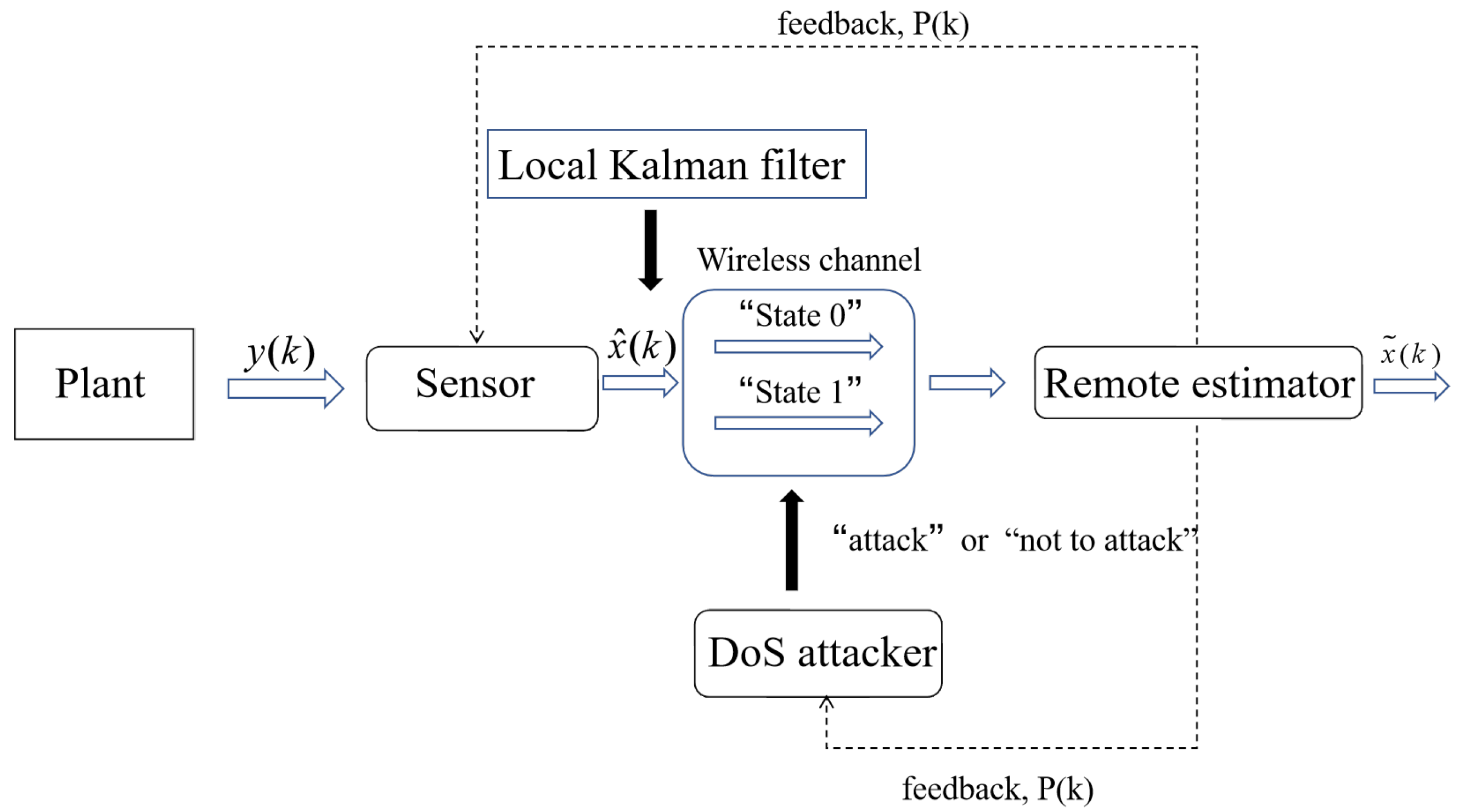

4. Reinforcement Learning Methods Finding Optimal Policies

An MDP is established to describe the RL problem of CPS against DoS attack. The elements of the MDP are as follows:

State: Denote the estimation error covariance of remote estimators as the state variable. at time is decided by , and . S, as the set of state in RL problem, is denoted by .

Action: The sensor needs to select the channel in “state 0” or “state 1” to send the packet of the local estimation data to the remote estimator, . The DoS attacker needs to choose whether to attack the channel or not, . Denote A as the action sets in RL problems, .

State transition rule: The estimation of the remote estimator will depend on its current state and the actions of sensors and attackers. The next state of the estimator

is obtained by the following formula:

Reward function: The reward function, as an assessment of the current state and action behaviors, is defined as follows:

where

is the trace of the matrix

. The reward function is designed based on the principle that the sensor’s goal to minimize the estimated error covariance while the attacker’s goal is to maximize it. The cost constraints of the sensor and the attacker are also considered.

Discount factor: characterizes the feature that sensor and attacker‘s focus more on current rewards than future ones. Meanwhile, it ensures the convergence of the Q-function.

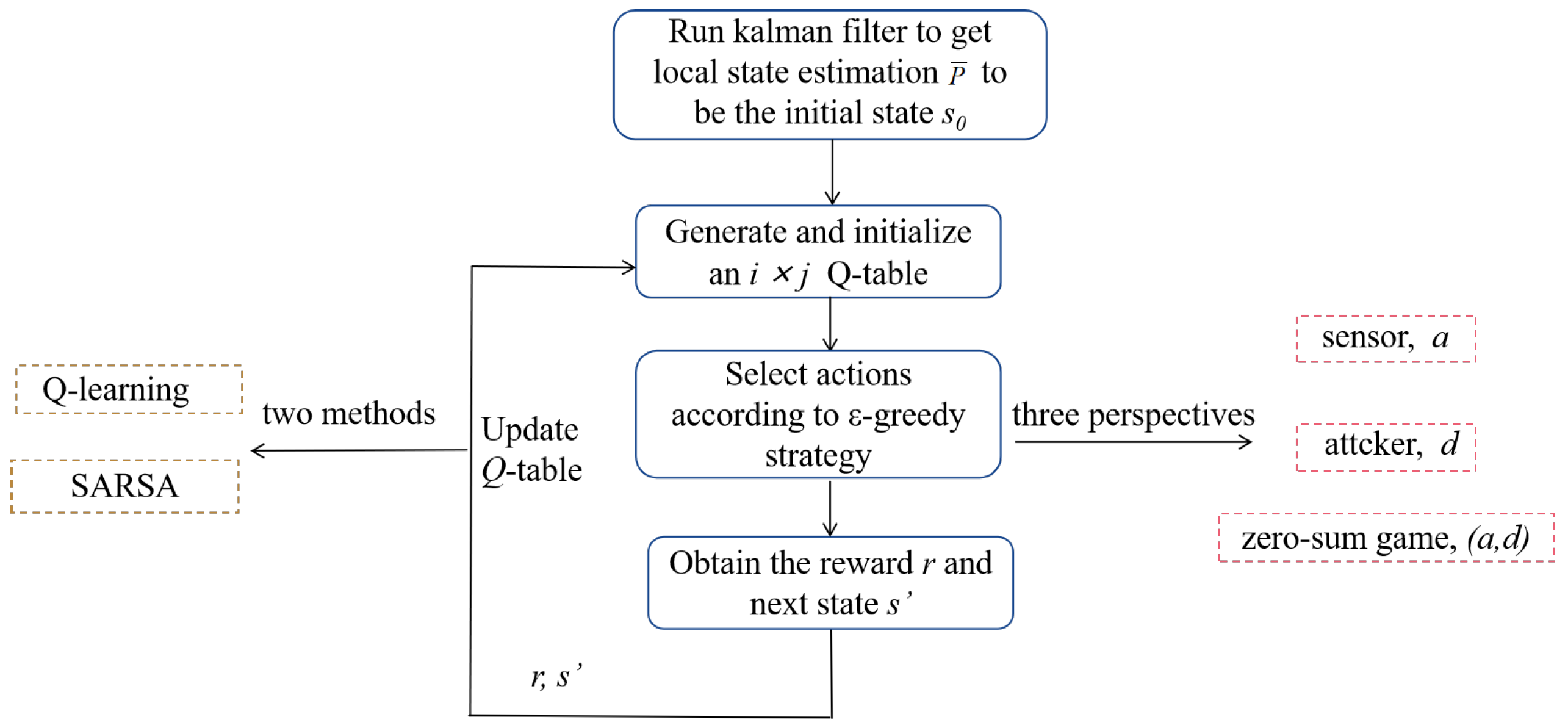

Consider the problems from three perspectives: sensor, attacker and the game between sensor and attacker. The algorithm is designed based on the framework of Q-learning and SARSA, respectively. The overall framework of the algorithm is universal, which is described as follows:

(i) Given the system parameters, the estimation error covariance of the steady state is obtained by running the Kalman filter. will be regarded as the initial state s of RL algorithm;

(ii) Create an Q table and initialize it, where i is the number of states and j is the number of actions;

(iii) Select actions according to the current state and the -greedy strategy;

(iv) Obtain the instant reward and the next state according to the reward function and state transition rule of MDP;

(v) Update Q-table and the state.

After a sufficient number of time steps, the Q-table eventually converges. According to the convergent Q-table, the optimal strategy of sensor or attacker and Nash equilibrium strategy could be obtained.

Remark 3. The ϵ-greedy strategy is described as follows: when an agent makes a decision, it selects an unknown action randomly with a probability of ϵ, and the leaving 1−ϵ probability follows a greedy strategy (like choosing the action with the highest value in the Q-table). The ϵ-greedy strategy achieves a good balance between “exploration” and “exploitation”. “exploration” refers to trying an action that has not yet been attempted and “exploitation” refers to choosing the next action from a known action and which may maximize the total payoff in the long run. The balance between them is the key to determining whether the RL system is able to obtain the optimal solution. The general ϵ-greedy strategy converges slowly and is not suitable for non-stationary distribution. To improve it, this paper sets ϵ to a large value at the beginning of training and lets it decay gradually over time.

The action selection strategy and Q-table update method differ from different perspectives, which will be addressed in detail in the following sections.

4.1. Optimal Policy for the Sensor

From the perspective of sensors, the sensor selects actions according to the -greedy strategy, while the attacker selects actions with a uniform random strategy. As the purpose of the sensor is to reduce the estimated error covariance mostly to maintain system stability, it selects the action with the lowest Q-value in the 1- probability of -greedy strategy. As the design of the reward function includes the influence of the attack behavior on the system estimation error, the attacker’s action selection strategy also needs to be determined: the attacker selects the action with a uniform random strategy.

Based on

Q-learning, the update rule of

Q-table is as follows:

where the action used to update the

Q-table is selected based on the greedy strategy, that is, the action with the lowest

Q-value under the state s’. This action is only used to update the

Q-table and will not be actually executed.

Based on SARSA, the update rule of

Q table is as follows:

which indicates that the agent’s strategy for selecting actions is the same as that for updating the

Q-table.

The algorithm flow is conducted according to

Figure 3 when the action strategy and the update rule of

Q-table are determined. The

Q-table converges through sufficient time steps. According to the convergent

Q-table, the sensor’s optimal policy is to choose the sensor action with the largest value in each state.

4.2. Optimal Policy for the Attacker

In contrast to the sensor, the attacker’s goal is to maximize the estimated error covariance to destroy the system performance. Based on the -greedy strategy, attacker select an action randomly with a probability of , and selects the action of the highest Q-value with a probability of 1-. Meanwhile, the sensor selects actions according to the uniform random strategy.

Based on

Q-learning, the update rule of

Q-table is as follows:

where the action used to update the

Q-table is the action with the highest

Q-value under the state s’.

Based on the SARSA, the update rule of

Q-table is as follows:

where the action

corresponding to the next state

used to update

Q-table is the action selected by the

-greedy strategy, and will be executed at the next time step, i.e.,

.

For the attacker, the RL algorithm is run in

Figure 3 when the sensor strategy is set as random. After a certain time of iterative learning, the

Q-table could converge to be relatively stable. The optimal policy for the attacker is to choose the action with lowest value in each states.

4.3. Nash Equilibrium Policy of the Sensor and Attacker

Under the framework of two-player zero-sum game, the sensor and attacker select actions according to a modified -greedy strategy combining uniform random and max-min and min-max criterion together, which will be addressed in detail as follows.

For the sensor, it selects unknown actions randomly with the probability of . In the remaining probability 1-, firstly, it needs to consider the constraints in Remark 1: when , choosing the channel in “state 1” is the only choice for sensor, i.e., . In other cases, the sensor selects actions based on the max-min criterion: the attacker chooses the action with the highest value in each sensor’s action selection case, which means the worst situation to sensor. Among the chosen actions, the sensor continues to select the actions with the relatively lowest values, thus achieving the best results from the worst case for the sensor.

From the perspective of the attacker, there are no constraints for the attacker. The attacker selects an unknown action with the probability of and selects the action according to min-max criterion with the probability of 1 1-: the sensor selects the actions of lowest values in each attacker’s action selection case, which means the worst situation to attacker. Among the chosen actions, the attacker continues to select the actions with the relatively highest values, thus achieving the best results from the worst case for the attacker.

Based on

Q-learning, the update rule of

Q-table is as follows:

where the action pair used to update the

Q-table is selected according to the max-min criterion under state

.

Based on SARSA, the update rule of

Q-table is as follows:

where the action pair used to update the

Q-table is from the action selection process, and will be executed in the next time step, i.e.,

.

The each line of the

Q-table could be converted to a strategy matrix where the action sets of sensor and attacker constitute the rows and columns of the matrix. When the

Q-table converges, the Nash equilibrium is solved if the sensor’s action obtained by max-min criterion and the attacker’s action obtained by min-max criterion are matched. Algorithm 1 describes the flow to find Nash equilibrium policy, which is displayed as follows.

| Algorithm 1 RL method to find Nash equilibrium policy |

Input: CPS parameters A, C; cost c and cd; learning rate α, discount factor ρ and exploration rate ε

Output: Converged Q-table, Nash equilibrium policy.

Initialize: Initial state , initialize Q-table, initialize time step .

- 1:

whiledo - 2:

if then - 3:

Choose actions randomly; - 4:

else - 5:

Find the optimal actions obtained according to max-min and min-max criterion. - 6:

end if - 7:

Observe the reward by (15). - 8:

Observe the next state according to (14). - 9:

Update the Q-table according to (20 or 21). - 10:

- 11:

- 12:

end while - 13:

Return the final Q-table. - 14:

Observe the Nash equilibrium policy.

|