Abstract

Person re-identification (ReID) is the problem of cross-camera target retrieval. Extracting robust and discriminative features is the key to correctly associating targets across cameras. Models based on convolutional neural networks (CNNs) can extract robust image features, but they move from local to global information only gradually, by stacking convolution layers. In contrast, a vision transformer (ViT) captures global information from the first layer and can therefore extract more powerful features. This paper proposes an unsupervised domain adaptive person re-identification model (ViTReID) based on the vision transformer. It uses the weights of a ViT model trained on ImageNet as the pre-training weights and a transformer encoder as the feature extraction network, which compensates for some shortcomings of CNN models. The network is optimized with a combined loss function made up of the cross-entropy, triplet, and center loss functions. In addition, the person’s head is trained as a local feature and combined with the global feature of the whole body, which strengthens the head feature information. The experimental results show that ViTReID exceeds the baseline method (SSG) by 14% (Market1501 → MSMT17) in mean average precision (mAP). In MSMT17 → Market1501, ViTReID is 1.2% higher in rank-1 (R1) accuracy than a state-of-the-art method (SPCL); in PersonX → MSMT17, the mAP is 3.1% higher than that of the MMT-dbscan method, and in PersonX → Market1501, the mAP is 1.5% higher than that of the MMT-dbscan method.

1. Introduction

Person re-identification (ReID) is a prominent research topic in computer vision. It is widely regarded as a sub-problem of image retrieval. It mainly involves the use of computer vision technology to judge whether there is a specific person in the image or video, i.e., to retrieve the picture of a pedestrian across devices given a monitored pedestrian image. ReID technology can make up for the visual angle limitation of the current fixed cameras and can be combined with person detection and person tracking technology. It is widely used in intelligent security, video surveillance, and many other fields. Due to the different scenes in real life, ReID also faces different challenges, such as changes in pedestrian appearance brought on by various cameras, the loss of pedestrian features due to target occlusion, and diminished differentiation due to close clothing color matching between different pedestrians. For a long time, CNN-based methods have dominated ReID research [1,2,3,4,5].

The effective receptive field of a CNN is far smaller than its theoretical receptive field, which prevents the network from fully using context information when capturing features. Although the receptive field can be expanded by stacking more convolution layers, this makes the model bloated and sharply increases the amount of computation, contrary to the original goals of simplicity and practicality. The transformer network does not have this limitation [6]. Its advantage is that it uses attention to capture global context information, establish long-distance dependencies on the target, and extract more powerful features. In addition, the transformer transfers better than the CNN: a model pre-trained on large datasets can be easily transferred to small datasets.

This paper makes the following three improvements to address the feature loss caused by target occlusion and the reduced discrimination caused by similar clothing colors among different pedestrians. (1) In Section 3.3, the transformer encoder replaces ResNet [7] as the feature extraction network for unsupervised domain adaptive ReID, so that the global information of the image is better exploited; ViT [8] is thus applied to unsupervised domain adaptive person re-identification. (2) In Section 3.2, to mine the potential similarity from the whole to the part, the grouping idea of the SSG method [3] is adapted: the person’s head is trained as a local branch alongside the global branch of the whole person. (3) In Section 3.4, to make each class more compact, the center loss function [9] proposed for face recognition is introduced and combined with the cross-entropy and triplet loss functions [10] into a combined loss function used to update the network.

2. Related Work

At present, person re-identification has two primary directions: one is a supervised learning method with labeled data training, and the other is an unsupervised learning method without labeled data. Unsupervised learning is usually divided into sub-problems to study, such as the unsupervised domain adaptive model and the semi-supervised model.

In supervised person re-identification, methods based on local features extract the features of individual regions of the image and use multiple local features as the final feature [1,2,11,12,13]. Methods based on video sequences use a continuous series of person images for re-identification, or search for one sequence with another sequence [14,15,16]. GAN-based methods [17] use a GAN to generate image samples and augment the training data [18,19,20,21]. Because there is data bias between the labeled source domain and the unlabeled target domain, a ReID model trained on the source domain often performs poorly in the target domain. At the same time, because annotated large-scale datasets are expensive to acquire, research on person re-identification is gradually shifting to unsupervised domain adaptive (UDA) methods [22].

Unlike fully unsupervised learning, which uses no labeled source domain, domain adaptive learning for person re-identification trains on labeled source domain data. In recent years, the dominant unsupervised domain adaptive approach to person re-identification has been the clustering-based pseudo-label algorithm. The main challenge of this algorithm is improving the accuracy of the pseudo-labels and reducing the impact of noisy pseudo-labels. Representative algorithms such as SSG [3] exploit the potential similarity of unlabeled target domain data (from global to local), automatically construct multiple clusters from different feature groups, and assign labels to these independent clusters; these labels are then used as pseudo-labels to supervise training. This assignment and training process is repeated alternately until the model converges. PAST [23] proposes a self-training strategy that gradually strengthens the model by alternating between a conservative stage and a promoting stage. In addition, to improve the reliability of the selected triplet samples, a ranking-based triplet loss function [10] is introduced into the conservative stage; it is a label-free objective function based on the similarity between data pairs. MMT [4] uses a mutual mean-teaching framework to refine pseudo-labels. The core idea is to use more robust “soft” labels to refine the pseudo-labels online. AD-Cluster [24] uses a GAN [21] to generate new sample data, feeds all data (new and old) into a CNN for feature extraction and re-clustering, and is iteratively optimized with a max–min scheme (maximizing the diversity of the generated samples and minimizing the intra-class distance).
SPCL [5] proposes using all source domain and target domain data in training. It uses a hybrid memory model that treats all source domain classes, target domain clusters, and un-clustered outlier instances of the target domain as equal categories for training supervision, and it applies momentum updates to dynamically update the prototypes of these three kinds of categories in the hybrid memory. MAR [25] introduces soft multilabels for unsupervised ReID and designs a weighted combination of three loss functions to train the model on them. DG-Net++ [26] proposes a cross-domain, cycle-consistent image generation method that decomposes the source and target images into three latent spaces (including a shared appearance space that captures ID-related features) through the corresponding encoders, so that disentangling and domain adaptation are solved jointly and promote each other. D-MMD [27] performs UDA in the dissimilarity space: a D-MMD loss aligns the pair-wise distances of the two domains and is optimized by gradient descent with a relatively small batch size. Other studies build on adversarial discriminative domain adaptation and explore the impact of domain-invariant pedestrian attributes (e.g., hair length, clothing style, and color) on person re-identification [28]. A style transfer-based approach (STReID) converts source domain images to the style of the target domain and trains on the converted data together with the source domain data to obtain better cross-domain performance [29]. The camera penalty learning (CPL) method adds a camera-ID penalty to the traditional triplet loss to reduce the pseudo-label noise caused by cross-camera target retrieval [30].

The transformer [6] emerged in the field of machine translation. It is an attention-based encoder–decoder architecture that uses the attention mechanism to speed up model training and to automatically capture the correlations between different positions of the input sequence. It is highly parallelizable and therefore trains quickly. The transformer has shown its potential throughout natural language processing and, more recently, in computer vision. The Conformer [31] is a hybrid network structure that combines convolution operations with a self-attention mechanism to enhance feature representation. It relies on a feature coupling unit (FCU) to interactively fuse local and global feature representations at different resolutions. ViT [8] shows that the transformer scales well (the larger the model, the better the results), that increasing the number of parameters further brings better results, and that a pure transformer can perform well directly as a feature extraction network for computer vision tasks. In supervised person re-identification, TransReID [32] demonstrated the effectiveness of the transformer in 2021. It uses a transformer alone as the backbone network to extract features, rather than a CNN (such as ResNet [7]) or a CNN + transformer hybrid [31], and it achieves state-of-the-art (SOTA) results.

3. Proposed Method

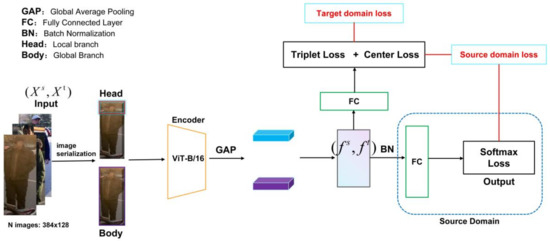

Figure 1 shows the overall framework. Given the strong representation and feature extraction ability of the transformer’s attention mechanism, this paper proposes an unsupervised domain adaptive method (ViTReID) based on the transformer, composed of three parts. First, the weights of a transformer network pre-trained on ImageNet [33] are used as the pre-training weights (see Section 3.1). Second, the transformer encoder replaces the traditional CNN as the feature extraction network. Finally, the network is updated by stochastic gradient descent (SGD) and optimized with a combined loss function made up of the triplet loss, center loss, and cross-entropy loss. The inputs are the training images of the source and target domains. The ViTReID network is trained in two branches (see Section 3.2): the local branch (head) and the global branch (body). The source and target domain data are passed through the feature extraction network (see Section 3.3) and global average pooling (GAP) to obtain the feature vectors of the source and target domains, respectively. GAP acts as a structural regularizer that helps prevent overfitting while also making use of global information. Finally, the feature vectors are passed through the fully connected (FC) layer and optimized by the loss functions in the source and target domains to obtain the final weights (see Section 3.4). The feature has a size of 1 × 1 × 768 after feature extraction, and the FC layer also outputs a 768-dimensional feature vector.

Figure 1. Diagram of the ViTReID network structure.

3.1. Pre-Training Weight

For application in industrial scenes, the weights of the base ViT model pre-trained on the large ImageNet dataset [33] are used as the pre-training weights. Because a transformer model pre-trained on large datasets achieves better results, the backbone of the pre-trained model is the transformer base model, with the image size set to 384 × 128, the embedding dimension set to 768, and the number of attention heads set to 12. We use the SSG model with a ResNet50 backbone as the baseline and compare it with the ViTReID model. ViTReID adopts the standard transformer paradigm and fully follows the pre-training–fine-tuning process: the model is first trained on the large ImageNet dataset, and once the low-level feature extractor performs well, it is applied to the person re-identification task, where the low-level feature extractor is retained and the upper layers are fine-tuned. The optimizer is SGD, the learning rate is set to 3 × 10⁻⁴, the weight decay is set to 5 × 10⁻⁴, and the number of epochs is set to 120.
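The settings listed in this section can be gathered into a small configuration object, which also makes the derived quantities explicit. The sketch below is illustrative only: the class name `TrainConfig` and the 16 × 16 patch size are assumptions (the text states the image size, embedding dimension, head count, and optimizer settings, but not the patch size).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values stated in Section 3.1
    image_height: int = 384
    image_width: int = 128
    embed_dim: int = 768
    num_heads: int = 12
    learning_rate: float = 3e-4
    weight_decay: float = 5e-4
    epochs: int = 120
    # Assumption: ViT-Base style 16 x 16 patches (not stated in the text)
    patch_size: int = 16

    def num_patches(self) -> int:
        """Number of non-overlapping patches (tokens) per image."""
        rows = self.image_height // self.patch_size
        cols = self.image_width // self.patch_size
        return rows * cols

    def head_dim(self) -> int:
        """Feature dimension handled by each attention head."""
        return self.embed_dim // self.num_heads


cfg = TrainConfig()
print(cfg.num_patches(), cfg.head_dim())
```

Under the assumed patch size, a 384 × 128 image yields 24 × 8 patches, and each of the 12 heads works on a 64-dimensional slice of the 768-dimensional embedding.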

3.2. Head Branch Training

For local branch training, the feature grouping idea proposed by SSG [3] divides the person’s features into upper and lower parts. Features based on clothing change with the external environment or the monitoring equipment, which lowers the accuracy of the extracted features. The head and facial features extracted through head feature training are more robust and more discriminative. Therefore, this paper uses head information as the local feature.

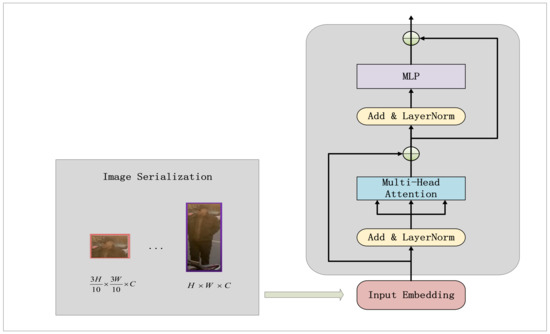

To construct the head branch of ViTReID, we first serialize each pedestrian image in the dataset: the image is divided horizontally into two parts, and only the top three-tenths is taken as the head. Next, the tokens generated from the image sequences of the whole image and the head image are input to the ViT/B-384 backbone for feature extraction, as shown in Figure 2, to obtain the whole-body feature map and the head feature map. Global average pooling (GAP) is then applied to the body feature map and the head feature map to obtain two feature vectors, one for the body and one for the head. We repeat the above steps for each image to generate the two sets of feature vectors, as shown in Equation (1).
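The two operations in this step, cutting out the head region and pooling a feature map into a single vector, can be sketched in plain Python. The function names `crop_head` and `global_average_pool` are hypothetical, the image is represented as a list of pixel rows, and it is assumed that the head occupies the top three-tenths of the rows.

```python
def crop_head(image, head_ratio=0.3):
    """Keep the top `head_ratio` fraction of the rows of an image.

    `image` is a list of pixel rows; the head is assumed to be at the top.
    """
    head_rows = int(round(len(image) * head_ratio))
    return image[:head_rows]


def global_average_pool(tokens):
    """Average a list of equal-length token vectors into one feature vector."""
    count = len(tokens)
    dim = len(tokens[0])
    return [sum(token[d] for token in tokens) / count for d in range(dim)]


# Toy example: a 10-row "image" and a feature map with two tokens.
image = [[row] * 4 for row in range(10)]
head = crop_head(image)
body_vector = global_average_pool([[1.0, 2.0], [3.0, 4.0]])
```

In the real pipeline the pooled vectors would come from the backbone's token outputs rather than from toy lists; the sketch only shows the shape of the computation.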

Figure 2. Feature extraction network.

3.3. Feature Extraction Network

In the feature extraction stage of the network, ViTReID uses a transformer encoder (ViT) as the feature extraction network to replace the traditional CNN or recurrent neural network (RNN) feature extraction network. The network is built entirely on the attention mechanism and consists of multiple self-attention layers and MLPs. The structure of the feature extraction network is shown in Figure 2.

To make the network more suitable for person re-identification tasks, the transformer network structure is improved accordingly, and the lightweight transformer network structure is adopted to reduce the complexity of the network. The specific structure comprises a multi-head self-attention (MSA) mechanism and an MLP module. LayerNorm (LN) is applied before each module and residual connection is applied after each module. The MLP module contains two fully connected layers with the GELU nonlinear activation function. The implementation of the transformer encoder feature extraction network structure is shown in Equations (2)–(5).

The image is divided into N slices (patches), which form the input sequence. A learnable class token is prepended to the input sequence. Each slice is mapped to a D-dimensional embedding by the linear projection function f, and a position encoding is added to retain location information. Within each layer of the transformer encoder, the sequence first passes through the multi-head self-attention layer and then through the MLP layer, with LayerNorm applied to the input of each. The output of the last encoder layer, after a final LayerNorm operation, is the output of the transformer encoder.
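For reference, the pre-norm encoder of the original ViT [8], which matches the description in this section, can be written as follows. The symbols ($x_{\mathrm{class}}$, $x_p^i$, $E_{\mathrm{pos}}$, $z_l$, $L$) follow the conventions of the ViT paper and are assumed here; this is a sketch consistent with the text, not a reproduction of Equations (2)–(5).

```latex
\begin{aligned}
z_0  &= [\,x_{\mathrm{class}};\ f(x_p^1);\ f(x_p^2);\ \dots;\ f(x_p^N)\,] + E_{\mathrm{pos}}, \\
z'_l &= \mathrm{MSA}(\mathrm{LN}(z_{l-1})) + z_{l-1}, \qquad l = 1, \dots, L, \\
z_l  &= \mathrm{MLP}(\mathrm{LN}(z'_l)) + z'_l, \qquad\quad\ l = 1, \dots, L, \\
y    &= \mathrm{LN}(z_L^0).
\end{aligned}
```

Here $z_L^0$ denotes the class-token position of the last layer's output, so $y$ is the image representation passed on to the loss functions.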

The specific implementation of the network is as follows. First, the 384 × 128 input image is embedded, and positional encodings are computed: sine encoding is used at even positions and cosine encoding at odd positions, and the resulting position vector is added to retain location information. Next, the embedded sequence is linearly mapped to three matrices, Q (Query), K (Key), and V (Value), which pass through the self-attention module to produce a weighted feature vector, namely Attention, as shown in Equation (6).
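The sine and cosine position encoding can be illustrated with a short sketch. It assumes the formulation of the original transformer [6], in which "even" and "odd" refer to the index of the feature dimension and the frequency base is 10000; neither detail is stated in the text, and the function name is hypothetical.

```python
import math


def sinusoidal_position_encoding(num_positions, dim, base=10000.0):
    """Build a table of shape [num_positions][dim].

    Even feature indices use sine and odd feature indices use cosine,
    with each sine/cosine pair sharing one frequency (assumed, as in [6]).
    """
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(dim):
            pair_index = i // 2
            angle = pos / (base ** (2 * pair_index / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


# Toy example: 4 positions with an 8-dimensional encoding.
encoding = sinusoidal_position_encoding(4, 8)
```

The resulting table is added element-wise to the embedded token sequence, so every token carries information about where it came from in the image.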

The three matrices have the same dimensions. The dot products of Q and K are scaled by the square root of the dimension of K, and the softmax function is then applied to obtain the self-attention weights. To limit the computational burden of the network, the number of attention heads is set to 12, and the final output is obtained after passing through the multi-head attention layer. The output is then sent to the next module of the encoder, the MLP module, which has two fully connected layers. The first layer uses the GELU activation function, and the second layer is linear, as shown in Equation (7).
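The attention computation described here is the standard scaled dot-product form, softmax(QKᵀ / √d_k) V, where d_k (a conventional symbol, assumed here) is the dimension of K. The following plain-Python sketch shows that computation and a simple split into heads. It omits the learned projection matrices that produce Q, K, and V and the output projection, and all function names are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


def attention(q, k, v):
    """Scaled dot-product attention; returns (output, attention weights)."""
    d_k = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def split_heads(x, num_heads):
    """Slice the feature dimension of x into `num_heads` equal parts."""
    head_dim = len(x[0]) // num_heads
    return [[row[h * head_dim:(h + 1) * head_dim] for row in x]
            for h in range(num_heads)]


def multi_head_attention(q, k, v, num_heads):
    """Run attention per head and concatenate the head outputs per token."""
    heads = zip(split_heads(q, num_heads), split_heads(k, num_heads),
                split_heads(v, num_heads))
    outputs = [attention(qh, kh, vh)[0] for qh, kh, vh in heads]
    return [sum((head[t] for head in outputs), []) for t in range(len(q))]


# Toy example: two tokens, four features, two heads.
tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
mixed = multi_head_attention(tokens, tokens, tokens, num_heads=2)
```

With 12 heads and a 768-dimensional embedding, each head would attend over a 64-dimensional slice in the same way.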

where W is the weight and b is the bias. This feed-forward network, that is, fully connected layer 1 → GELU → dropout → fully connected layer 2 → dropout, is a standard MLP structure.
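A minimal sketch of this two-layer block, which in symbols can be written as W₂ · GELU(W₁x + b₁) + b₂, is given below. Dropout is left out because it is the identity at inference time; the exact GELU (based on the error function) is used, and the function names and toy weights are illustrative rather than taken from the paper.

```python
import math


def gelu(x):
    """Exact GELU: x times the standard normal CDF evaluated at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x, weight, bias):
    """Fully connected layer; `weight` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def mlp(x, w1, b1, w2, b2):
    """Fully connected layer 1 -> GELU -> fully connected layer 2."""
    hidden = [gelu(h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


# Toy example: identity first layer, summing second layer.
output = mlp([1.0, -1.0],
             w1=[[1.0, 0.0], [0.0, 1.0]], b1=[0.0, 0.0],
             w2=[[1.0, 1.0]], b2=[0.5])
```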

3.4. STC Combined Loss

To train more discriminative features, the combination of the cross-entropy loss, triplet loss, and center loss is taken as the loss function of the whole network. The cross-entropy loss function is used for the final classification learning, and the triplet loss and center loss are used for metric learning.

Softmax + cross-entropy loss function is used as ID loss, as shown in Equation (8).

where n is the number of samples in each training batch, and each sample consists of an input image and its category label. After softmax classification, the network outputs the predicted probability that the image belongs to its labeled class. The predicted probability distribution is the distribution learned by the trained model. To bring the predicted probability distribution as close as possible to the actual probability distribution, the cross-entropy loss function is used to reduce the difference between the two distributions.
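The ID loss can be sketched as a softmax followed by the negative log-probability of the labeled class, averaged over the batch. Averaging (rather than summing) over the n samples is an assumption, and the function name is hypothetical.

```python
import math


def softmax_cross_entropy(logits_batch, labels):
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    total = 0.0
    for logits, label in zip(logits_batch, labels):
        # log-sum-exp with the maximum subtracted for numerical stability
        peak = max(logits)
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum_exp - logits[label]
    return total / len(labels)


# Toy example: an undecided prediction versus a confident, correct one.
uncertain = softmax_cross_entropy([[0.0, 0.0]], [0])
confident = softmax_cross_entropy([[5.0, 0.0]], [0])
```

The undecided two-class prediction costs log 2, while the confident correct prediction costs far less, which is the behavior the loss is meant to reward.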

The triplet loss function is used to shorten the distance between the same IDs and expand the distance between different IDs. The clustering effect achieved by triplet loss is shown in Equation (9).

where d(·) denotes the Euclidean distance between two vectors and α denotes the margin. The margin prevents the trivial solution in which both distances are zero; to achieve this goal, we minimize the triplet loss function shown in Equation (10).

The online training method is used. In each iteration, p identities are selected, each contributing k pictures, forming a mini-batch of p × k images. Hard or easy examples can then be selected within this mini-batch to form the loss.

Therefore, the triplet loss function in this paper can be set as shown in Equation (11).

where f is the global feature representation, <a, p, n> is a triplet, a is the anchor, p is a positive sample, and n is a negative sample; p and a belong to the same category, and n and a belong to different categories. The effect is to reduce the distance between a and p and to increase the distance between a and n. Triplet loss is suited to training on samples with subtle differences: the network is optimized so that the distance between same-identity samples a and p is smaller than the distance between different-identity samples a and n. Same-identity samples are images of one person (ID), and different-identity samples are images of different people.
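One common way to realize online triplet selection inside a mini-batch is batch-hard mining: for each anchor, take the farthest positive and the closest negative. The sketch below uses that strategy as an assumption (the text says only that hard or easy examples can be selected), and the margin of 0.3 is an illustrative value, not one reported in the paper.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def batch_hard_triplet_loss(features, labels, margin=0.3):
    """Mean of max(0, d(a, hardest p) - d(a, hardest n) + margin) over anchors."""
    losses = []
    for i, anchor in enumerate(features):
        positives = [euclidean(anchor, features[j])
                     for j in range(len(features))
                     if j != i and labels[j] == labels[i]]
        negatives = [euclidean(anchor, features[j])
                     for j in range(len(features))
                     if labels[j] != labels[i]]
        if not positives or not negatives:
            continue  # anchor has no valid triplet in this batch
        losses.append(max(0.0, max(positives) - min(negatives) + margin))
    return sum(losses) / len(losses) if losses else 0.0


# Toy batches with 2 identities and 2 images each.
separated = batch_hard_triplet_loss(
    [[0.0, 0.0], [0.0, 1.0], [5.0, 0.0], [5.0, 1.0]], [0, 0, 1, 1])
overlapping = batch_hard_triplet_loss(
    [[0.0, 0.0], [0.0, 1.0], [0.0, 0.5], [0.0, 1.5]], [0, 0, 1, 1])
```

When the identities are well separated the loss is zero; when they interleave, every anchor violates the margin and contributes to the loss.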

To improve the discriminative ability of the features, this paper supplements the local features of the head and introduces the center loss function, which preserves the separability of the data while further reducing the differences within each class, thereby strengthening the head features without weakening the global features. The center loss function is shown in Equation (12).

The center loss function introduces two hyperparameters: α and a weighting coefficient. The weighting coefficient sets the penalty strength of the center loss, and α controls the learning rate of the class centers. Each class has a center, which changes as the features change; in general, the class centers are updated in each mini-batch. For data with a complex distribution, such as head data, it is especially important to maintain intra-class compactness while keeping the feature space separable between classes. Because the intra-class variation of the same person can be larger than the inter-class variation, only by maintaining intra-class compactness can robust decisions be made for samples with considerable intra-class variation. The center loss enforces this intra-class compactness.
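The center loss and its per-mini-batch center update can be sketched as follows. The sketch follows the formulation of the original center loss paper [9] as an assumption: the loss is half the sum of squared distances to the class centers, and each center moves toward the mean of its class members at a rate set by α. The value α = 0.5 and the function names are illustrative.

```python
def center_loss(features, labels, centers):
    """Half the sum of squared distances between features and their class centers."""
    return 0.5 * sum(
        sum((x - c) ** 2 for x, c in zip(feature, centers[label]))
        for feature, label in zip(features, labels)
    )


def update_centers(features, labels, centers, alpha=0.5):
    """Move each class center toward its mini-batch members (rate `alpha`)."""
    updated = {cls: list(center) for cls, center in centers.items()}
    for cls, center in centers.items():
        members = [f for f, y in zip(features, labels) if y == cls]
        if not members:
            continue  # class absent from this mini-batch: center unchanged
        for d in range(len(center)):
            delta = sum(center[d] - f[d] for f in members) / (1 + len(members))
            updated[cls][d] = center[d] - alpha * delta
    return updated


# Toy example: one class with two samples and a center at the origin.
features = [[1.0, 0.0], [3.0, 0.0]]
labels = [0, 0]
centers = {0: [0.0, 0.0]}
loss_before = center_loss(features, labels, centers)
new_centers = update_centers(features, labels, centers)
loss_after = center_loss(features, labels, new_centers)
```

After one update the center has moved toward the samples and the loss has dropped, which is the intra-class compactness effect described above.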

Finally, ViTReID combines the center loss, triplet loss, and cross-entropy loss functions to form the STC combined loss function, as shown in Equation (13).
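One plausible way to write this combination is given below. It assumes that the three terms are summed and that only the center loss carries a weighting coefficient, written $\lambda$ here; the symbol names and the choice of weights are assumptions, since the text does not state them.

```latex
L_{\mathrm{STC}} = L_{\mathrm{ID}} + L_{\mathrm{Tri}} + \lambda\, L_{\mathrm{C}}
```

Here $L_{\mathrm{ID}}$ is the softmax cross-entropy loss, $L_{\mathrm{Tri}}$ is the triplet loss, and $L_{\mathrm{C}}$ is the center loss.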

4. Experiments and Results

4.1. Datasets

The mainstream public datasets used for training and evaluation of person re-identification models include Market1501 [34], MSMT17 [18], and PersonX [35]. Market1501 and MSMT17 are commonly used datasets for person re-identification tasks. MSMT17 has the most image data and is more challenging. PersonX is a synthetic dataset, which is quite different from the domain of real data, and there will be much room for improvement in the algorithm. Our experiments are tested on public person re-identification datasets, including Market1501, MSMT17, and PersonX datasets. Table 1 gives detailed information corresponding to each dataset.

Table 1. Experimental datasets.

Six cameras were used to collect the Market1501 [34] dataset. The dataset contains 32,668 annotated bounding boxes of 1501 identities. Each annotated identity appears in at least two cameras so that cross-camera search can be performed. The deformable part model (DPM) [36] is used as the person detector. Each identity can have multiple images under each camera, so during cross-camera search each identity has multiple queries and multiple ground truths.

MSMT17 [18] is a large dataset that is closer to real scenes, covering multiple scenes and time periods. It was collected with a network of 15 cameras on a campus, including 12 outdoor cameras and 3 indoor cameras. Four days with different weather conditions within one month were selected to collect the raw surveillance video. Three hours of video were collected each day, covering three time periods: morning, noon, and afternoon. The total length of the raw video is therefore 180 h (4 days × 3 h × 15 cameras). With Faster RCNN [37] as the person detector, three annotators reviewed the detected bounding boxes and annotated person labels over two months. Finally, 126,441 bounding boxes of 4101 persons were obtained.

The PersonX [35] synthetic dataset is based on Unity and contains 1266 manual person models, including 547 women and 719 men, covering various types of clothing, walking postures, ages, body shapes, and skin colors. It can provide different backgrounds, character actions, clothing accessories, etc., for different modeling applications according to specific tasks. At the same time, this dataset has a viewpoint label. The dataset can reduce the dependence on manually collecting and labeling data. It can generate a large amount of data, which is cheaper, faster, and more accurate than manually marking data. It can generate data that are difficult to capture in the real world (such as visual content in traffic conflict areas). It can generate data (such as occlusion and edge conditions) that do not often occur in nature but are essential for training. It can reduce security and privacy issues.

4.2. Evaluation Protocol

Rank-k [38] and mAP are used as evaluation metrics in the experiments. The re-identification task aims to find, across different cameras, the targets most similar to the query. The dataset contains a query set and a test set (or gallery set). When a person image from the query set is input, the most similar person images are retrieved from the gallery set. In practice, the trained network is run in a forward pass to extract the features of the last FC layer, and for the feature of each query image, its similarity with all images in the gallery set is computed.

Rank-k is the proportion of queries for which an image with the same ID as the query appears among the first k images sorted by similarity. We computed rank-k accuracy for k = 1, 5, and 10, i.e., whether an image with the same label as the query image can be found among the first 1, 5, and 10 images of the sorted test set. The mAP is a commonly used evaluation metric in object detection and multi-label image classification tasks. We take it as an essential measure of the quality of the ReID model.
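The two metrics can be made concrete with a small sketch that ranks a gallery by Euclidean distance and then computes rank-k accuracy and mAP over a set of queries. The function names are hypothetical, and the sketch is simplified: it does not filter out gallery images taken by the same camera as the query, which standard ReID evaluation protocols usually do.

```python
import math


def rank_gallery(query_feature, gallery_features, gallery_ids):
    """Return gallery IDs sorted by Euclidean distance to the query (closest first)."""
    distances = [
        math.sqrt(sum((q - g) ** 2 for q, g in zip(query_feature, feature)))
        for feature in gallery_features
    ]
    order = sorted(range(len(gallery_ids)), key=lambda i: distances[i])
    return [gallery_ids[i] for i in order]


def average_precision(ranked_ids, query_id):
    """Mean of the precision values at each position where a correct ID occurs."""
    hits, precisions = 0, []
    for position, gallery_id in enumerate(ranked_ids, start=1):
        if gallery_id == query_id:
            hits += 1
            precisions.append(hits / position)
    return sum(precisions) / hits if hits else 0.0


def evaluate(queries, ks=(1, 5, 10)):
    """`queries` is a list of (query_id, ranked_gallery_ids); returns (rank-k, mAP)."""
    correct = {k: 0 for k in ks}
    aps = []
    for query_id, ranked_ids in queries:
        for k in ks:
            if query_id in ranked_ids[:k]:
                correct[k] += 1
        aps.append(average_precision(ranked_ids, query_id))
    total = len(queries)
    return {k: correct[k] / total for k in ks}, sum(aps) / total


# Toy example with two queries.
ranking = rank_gallery([0.0, 0.0], [[3.0, 0.0], [1.0, 0.0], [2.0, 0.0]], [10, 20, 30])
rank_k, mean_ap = evaluate([(1, [2, 1, 3, 1]), (7, [7, 8])])
```

In the toy example, the first query finds its first match at position 2, so it counts toward rank-5 but not rank-1, and its average precision is (1/2 + 2/4) / 2 = 0.5.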

4.3. Comparison with State-of-the-Art Methods

We compared the model with the unsupervised domain adaptive person re-identification method based on CNN. The experimental results are shown in Table 2. It can be seen from the table that using the ViTReID model has increased the mAP by 14% and the rank-1 accuracy (R1) by 22.6% compared with the baseline method (SSG) on the datasets from Market1501 to MSMT17. On MSMT17 to Market1501, ViTReID is similar to SPCL in mAP, but R1 increases by 1.2% and the rank-5 accuracy (R5) and rank-10 accuracy (R10) increase by 0.5% and 0.6% respectively. This is because the ViTReID method proposed in this paper adopts the ViT-based feature extraction network and head branch training, which can help the model learn more global context information to establish a long-distance dependence on the target and make the model have better robustness and discriminant features.

Table 2. Performance comparison of unsupervised domain adaptive methods.

We also conducted experiments with the synthetic PersonX dataset as the source domain and the Market1501 and MSMT17 datasets as the target domains. The experimental results are shown in Table 3. The results of MMT-dbscan [4] were obtained with the authors’ code for MMT [4]. Despite the domain gap between synthetic and real data, when PersonX is used as the source domain and the model is transferred to the real MSMT17 and Market1501 target domains, ViTReID is more accurate than MMT-dbscan (as shown in Table 3), indicating that ViTReID generalizes better. Compared with the MMT-dbscan method, ViTReID improves mAP by 3.1% and R1 by 7.7% on PersonX to MSMT17. On PersonX to Market1501, the mAP of the ViTReID method is increased by 1.5%, and R1 is also increased by 0.8%.

Table 3. Experimental results of PersonX synthetic dataset to pedestrian real dataset.

4.4. Ablation Study

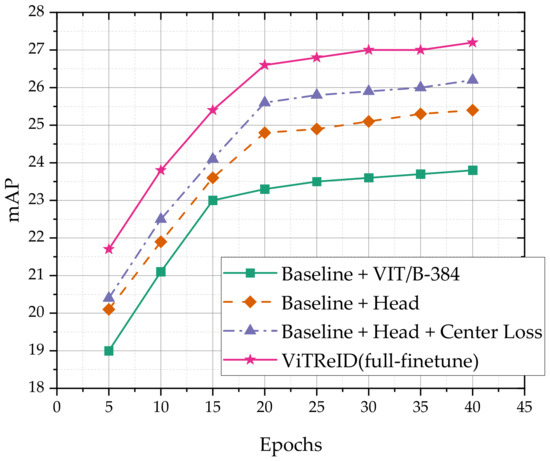

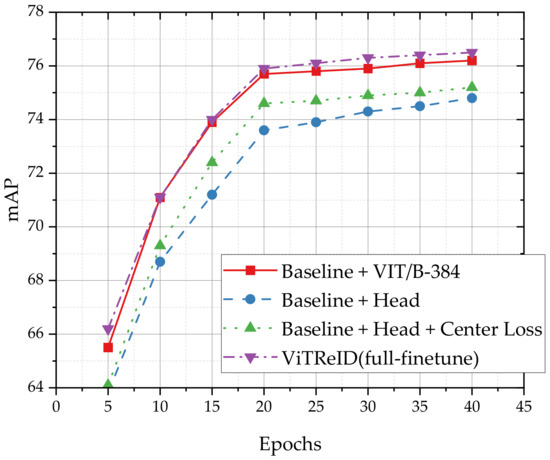

To explore the impact of the individual components and parameters on our algorithm, several settings are compared to analyze how they affect performance. All settings use the same ViT/B-384 backbone network and differ in the loss terms and branches that are enabled (see Table 4). The change in mAP as the number of epochs increases is shown in Figure 3 and Figure 4.

Table 4. Comparison of method performance with different components (the fine-tuned setting adds RandomGrayscale data augmentation).

Figure 3. mAP change of Market1501 → MSMT17.

Figure 4. mAP change of MSMT17 → Market1501.

Based on the experimental results, we first changed the feature extraction network of the baseline to cope with the variation of pedestrian features under different cameras and the partial feature loss caused by target occlusion. Because ViTReID uses ViT, which learns long-range dependencies more robustly, as the backbone network, it is stronger than the CNN-based model and improves the mAP by nearly 10% from Market1501 to MSMT17. This shows that the transformer captures global features better than the CNN model. We then changed the local branch structure of the baseline to address the reduced discrimination caused by similar clothing colors and features among different targets. The head features include the most representative discriminative features in pedestrian information, so adding them improves the mAP by nearly 2%, which shows that the head branch plays a significant role. After the center loss function is added to the head branch, the mAP improves by nearly 1% again, indicating that the center loss helps with the more complex head feature extraction. From MSMT17 to Market1501, the results also improve considerably after using the ViT feature extraction network, head branch training, and fine-tuning steps such as data augmentation.

As can be seen from Figure 3 and Figure 4, both from the large dataset (MSMT17) to the small dataset (Market1501) and from the small dataset to the large dataset, mAP increases significantly as the number of training epochs increases. However, the improvement is larger from the small dataset to the large dataset and smaller from the large dataset to the small dataset. This suggests that using large datasets as the source domain, or directly using large datasets for unsupervised training, is practical for the transformer model and gives considerably better results than the CNN model.

5. Conclusions

This paper proposes an unsupervised domain adaptive person re-identification method (ViTReID) based on the transformer. It introduces ViT as the feature extraction network together with a head branch based on the feature grouping idea. Long-distance dependencies are built from the global context information obtained by the feature extraction network, and the discriminative power of local (head) features is improved by grouping local and global features. These operations improve the accuracy of the ViTReID network but also increase its complexity, so that training and inference are currently limited to offline settings. Experiments show that ViTReID achieves good results on several popular datasets. Thanks to its parallel computation and self-attention mechanism, the transformer can also yield a more interpretable model. With the rapid development of image classification, object detection, and semantic segmentation in recent years, the transformer has great potential in ReID tasks and is likely to become a mainstream research direction, for example in applications of person re-identification to public safety, new retail, and intelligent transportation. In future work, we plan to improve the attention mechanism of the transformer network with MLP-like methods, use a better loss function and the Adam optimization algorithm, and further subdivide the head features to obtain a more efficient network.

Author Contributions

Conceptualization, X.Y. and S.D.; methodology, S.D.; validation, X.Y., S.D. and W.Z.; formal analysis, X.Y. and W.Z.; investigation, W.S. and H.T.; resources, X.Y. and S.D.; data curation, X.Y. and S.D.; writing—original draft preparation, S.D.; writing—review and editing, X.Y. and S.D.; visualization, S.D.; supervision, X.Y., W.S. and H.T.; project administration, S.D.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (62072170), the Innovation Platform Open Fund Project of Hunan Province Department of Education (18k110, 20k048), and the 13th ‘Five-Year Plan’ of Educational Science in Hunan Province (xjk18cxx014).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to the data provider’s request for confidentiality of the data and results.

Acknowledgments

We thank the teachers and students of our institute for their support of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Sun, J. AlignedReID: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- Fu, Y.; Wei, Y.; Wang, G.; Zhou, Y.; Shi, H.; Huang, T.S. Self-similarity grouping: A simple unsupervised cross domain adaptation approach for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6112–6121.

- Ge, Y.; Chen, D.; Li, H. Mutual mean-teaching: Pseudo label refinery for unsupervised domain adaptation on person re-identification. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26 April–1 May 2020.

- Ge, Y.; Zhu, F.; Chen, D.; Zhao, R. Self-paced contrastive learning with hybrid memory for domain adaptive object re-ID. Adv. Neural Inf. Process. Syst. 2020, 33, 11309–11321.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Houlsby, N. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A Discriminative Feature Learning Approach for Deep Face Recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 499–515.

- Hermans, A.; Beyer, L.; Leibe, B. In defense of the triplet loss for person re-identification. arXiv 2017, arXiv:1703.07737.

- Zhang, Z.; Si, T.; Liu, S. Integration Convolutional Neural Network for Person Re-Identification in Camera Networks. IEEE Access 2018, 6, 36887–36896.

- Fan, X.; Luo, H.; Zhang, X.; He, L.; Zhang, C.; Jiang, W. SCPNet: Spatial-channel parallelism network for joint holistic and partial person re-identification. In Proceedings of the Asian Conference on Computer Vision (ACCV), Perth, WA, Australia, 2–6 December 2018; pp. 19–34.

- Su, C.; Li, J.; Zhang, S.; Xing, J.; Gao, W.; Tian, Q. Pose-driven deep convolutional model for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3960–3969.

- Liu, H.; Jie, Z.; Jayashree, K.; Qi, M.; Jiang, J.; Yan, S.; Feng, J. Video-based person re-identification with accumulative motion context. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2788–2802.

- Li, Y.; Zhuo, L.; Li, J.; Zhang, J.; Liang, X.; Tian, Q. Video-Based Person Re-identification by Deep Feature Guided Pooling. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1454–1461.

- Song, G.; Leng, B.; Liu, Y.; Hetang, C.; Cai, S. Region-based quality estimation network for large-scale person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018; pp. 7347–7354.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled samples generated by GAN improve the person re-identification baseline in vitro. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3754–3762.

- Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer GAN to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 79–88.

- Deng, W.; Zheng, L.; Ye, Q.; Kang, G.; Yang, Y.; Jiao, J. Image-image domain adaptation with preserved self-similarity and domain-dissimilarity for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 994–1003.

- Qian, X.; Fu, Y.; Xiang, T.; Wang, W.; Qiu, J.; Wu, Y.; Xue, X. Pose-normalized image generation for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 650–667.

- Song, L.; Wang, C.; Zhang, L.; Du, B.; Zhang, Q.; Huang, C.; Wang, X. Unsupervised domain adaptive re-identification: Theory and practice. Pattern Recognit. 2020, 102, 107173.

- Zhang, X.; Cao, J.; Shen, C.; You, M. Self-training with progressive augmentation for unsupervised cross-domain person re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 8222–8231.

- Zhai, Y.; Lu, S.; Ye, Q.; Shan, X.; Chen, J.; Ji, R.; Tian, Y. Ad-cluster: Augmented discriminative clustering for domain adaptive person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 9021–9030.

- Yu, H.X.; Zheng, W.S.; Wu, A.; Guo, X.; Gong, S.; Lai, J.H. Unsupervised person re-identification by soft multilabel learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 2148–2157.

- Zou, Y.; Yang, X.; Yu, Z.; Kumar, B.V.K.; Kautz, J. Joint disentangling and adaptation for cross-domain person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 87–104.

- Mekhazni, D.; Bhuiyan, A.; Ekladious, G.; Granger, E. Unsupervised domain adaptation in the dissimilarity space for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 159–174.

- Zhu, X.; Morerio, P.; Murino, V. Unsupervised domain-adaptive person re-identification based on attributes. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, China, 22–25 September 2019; pp. 4110–4114.

- Chong, Y.W.; Peng, C.W.; Zhang, J.J.; Pan, S.M. Style transfer for unsupervised domain-adaptive person re-identification. Neurocomputing 2021, 422, 314–321.

- Zhu, X.; Li, Y.; Sun, J. Unsupervised domain adaptive person re-identification via camera penalty learning. Multimed. Tools Appl. 2021, 80, 15215–15232.

- Peng, Z.; Huang, W.; Gu, S.; Xie, L.; Wang, Y.; Jiao, J.; Ye, Q. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 367–376.

- He, S.; Luo, H.; Wang, P.; Wang, F.; Li, H.; Jiang, W. TransReID: Transformer-based object re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 15013–15022.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami Beach, FL, USA, 20–26 June 2009; pp. 248–255.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-identification: A Benchmark. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Bai, S.; Tang, P.; Torr, P.H.S.; Latecki, L.J. Re-Ranking via Metric Fusion for Object Retrieval and Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 740–749.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhong, Z.; Zheng, L.; Cao, D.; Li, S. Re-ranking person re-identification with k-reciprocal encoding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1318–1327.

- Zhong, Z.; Zheng, L.; Luo, Z.; Li, S.; Yang, Y. Invariance matters: Exemplar memory for domain adaptive person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 598–607.

- Wang, D.; Zhang, S. Unsupervised person re-identification via multi-label classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10981–10990.

- Yang, Q.; Yu, H.X.; Wu, A.; Zheng, W.S. Patch-Based Discriminative Feature Learning for Unsupervised Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3628–3637.

- Wu, A.; Zheng, W.; Lai, J. Unsupervised Person Re-Identification by Camera-Aware Similarity Consistency Learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6921–6930.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).