An Empirical Comparison of Pen-Testing Tools for Detecting Web App Vulnerabilities

Abstract

1. Introduction

- We have conducted a literature review on the work done by various researchers in the area of web application pen-testing.

- We have also studied the various tools used for PEN-testing in terms of their performance, vulnerability detection, test coverage, etc.

- We have proposed an enhanced benchmarking framework for web application pen-testing tools.

2. Background

3. Problem Statement

4. Related Work

5. Research Methodology

5.1. Selection of Top 6 Tools

5.2. Design of a Framework for Evaluation Criteria

- Scanner Scoring System: The selected criteria will be kept in mind while benchmarking the top 6 web application PEN-testing tools. We use the proposed score system in [10] to evaluate the tools. Furthermore, each key metric has a point system of up to 5 points.

- Criteria and Metric Selection: The used benchmarking metrics and criteria for tool evaluation are presented as follows:

- Tool Type: A tool offers one or both of the following interfaces:
  - Graphic user interface (GUI);
  - Command-line interface (CLI).

  Most pen-testers prefer a GUI to a CLI when PEN-testing web applications. Score for tool type:
  - 1: only CLI or only GUI;
  - 2: both CLI and GUI.

- Penetration Testing Level: Recent scanning tools can grasp web application sessions and detect variations in web application source code. Most automated web application PEN-testing tools only use the black box test method in authenticated scans. Score for the type of penetration test:
  - 1: black box testing only;
  - 2: black box and gray box testing;
  - 3: black box, gray box, and white box testing.

- Crawling Types: There are two types of crawling: passive and active. The active crawl is the first step before active scanning and catalogs the links it finds. The passive crawl, however, is better for coverage. Score for crawling ability:
  - 1: only passive crawler or only active crawler;
  - 2: both active and passive crawlers.

- Number of URLs Covered: Web application crawling is part of the information-gathering stage in the PEN-testing process [10]. In this stage, a penetration tester would like to gather as much information as possible about the web application. Crawler coverage is measured by the number of URLs crawled by the scanner; the more URLs the scanner covers, the higher the score. Score for covered URLs:
  - 1: less than 25% coverage;
  - 2: 25% to 50% coverage;
  - 3: 50% to 70% coverage;
  - 4: 70% to 90% coverage;
  - 5: more than 90% coverage.

- Scanning Time: Automated tools are developed so that penetration testers can cover more of a large web application in as little time as possible. Therefore, the time taken is important for scanner evaluation. Score for scanning time:
  - 1: more than 6 h;
  - 2: more than 3 h;
  - 3: more than 2 h;
  - 4: more than 45 min;
  - 5: less than 30 min.

- Types of Scan: There are two types of scans in web application PEN-testing: passive and active. In this metric, a scanner that offers both active and passive options scores higher than one that offers only one of them. Score for scan type:
  - 1: only active scan or only passive scan;
  - 2: active and passive scan;
  - 3: active, passive, or policy scan.

- Reporting Features: Recent scanners can format reports according to the compliance policy that the penetration tester needs to analyze. Examples of such standards are OWASP Top 10 and HIPAA. The standard reporting formats include HTML, PDF, and XML. Compliance policy reports are more concise and easier for the penetration tester to analyze. Score for reporting features:
  - 0: HTML, PDF, and XML reports;
  - 1: compliance standards reports such as OWASP Top 10 and HIPAA.

- Added Features: Some automated tools have add-ons and extension features that improve the scanner performance in vulnerability detection. Most penetration testers take advantage of these features. Score for add-ons and extension features:
  - 0: no add-ons and extension features;
  - 1: with add-ons and extension features.

- Configuration Effortlessness: A previous article [10] defined three levels of configuration (difficult, hard, and easy). The difficult level has prerequisites, such as server and database configuration, that must be met before the scanner can be launched; hard requires some dependencies before tool installation; easy has no prerequisites for launching the scan. Score for configuration level:
  - 1: difficult: prerequisites such as server and database configuration are needed to launch the scanner;
  - 2: hard: some dependencies are needed for installation;
  - 3: easy: plug-and-play, out-of-the-box, ready-to-use application.

- Scans Logging Option: The logs are essential in PEN-testing to monitor and detect thousands of requests and responses. Logging these processes is important to retrieve them when needed. Some automated tools provide these options to store logs in formats such as txt, csv, html, or xml [10]. Score for scan logs:
  - 0: no scan log option;
  - 1: scan log option.

- Tool Cost: The cost of the tool is an important factor in choosing the right tool. More features at a low cost are an essential consideration for penetration testers and organizations. In addition, some frameworks perform better because of their brand and the continued development that their price supports.

- OWASP Top 10 Vulnerabilities Coverage: The OWASP Top 10 vulnerabilities are essential for evaluation: many organizations and penetration testers use penetration testing tools to cover the top 10 vulnerabilities in their web applications and protect their assets from known vulnerabilities. Developers and software testers are also trying to avoid these top 10 vulnerabilities. This metric evaluates the share of vulnerabilities covered out of the total existing vulnerabilities in the OWASP benchmark. Score for vulnerabilities coverage:
  - 1: less than 25% coverage;
  - 2: 25% to 50% coverage;
  - 3: 50% to 70% coverage;
  - 4: 70% to 90% coverage;
  - 5: more than 90% coverage.

- Pause and Resume Scans: The ability to pause and resume the scan from the same point is a strength of the scanner, and it helps the pen-tester reduce the time spent rescanning the web application. Score for pause and resume scans:
  - 0: no ability to pause and resume scans;
  - 1: the ability to only pause or only resume scans;
  - 2: the ability to pause and resume scans.

- Number of Test Cases Generated: This evaluates the number of test cases produced by a web application security scanner in a scanning session [13]. Score for the number of test cases generated:
  - 1: less than 100 test cases;
  - 2: 200–300 test cases;
  - 3: 500–700 test cases;
  - 4: 800–1000 test cases;
  - 5: more than 1000 test cases.

- Automation Level: In this metric, we evaluate the scanner's ability to automate the scan without manual involvement from the penetration tester. Score for automation level:
  - 1: 100% tester involvement needed;
  - 2: 80% tester involvement needed;
  - 3: 70% tester involvement needed;
  - 4: 50% tester involvement needed;
  - 5: less than 30% tester involvement needed.

- Number of False Positives: A false positive is a vulnerability reported by the scanner that does not actually exist in the OWASP benchmark. A lower false-positive percentage is more helpful for penetration testers.
  - False positive formula (as applied in Appendix A): $\mathrm{FP\%} = \frac{\mathrm{FP}}{\text{number of test cases generated}} \times 100$
  - Score for false positive number:
    - 1: greater than 50%;
    - 2: greater than 30%;
    - 3: less than 30%.

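- Number of True Positives: A true positive is a real vulnerability in the OWASP benchmark that the scanner detects correctly. It is the most important metric in the vulnerability detection criteria.
  - True positive formula (as applied in Appendix A): $\mathrm{TP\%} = \frac{\mathrm{TP}}{N} \times 100$, where $N$ is the number of test cases generated (Burp Suite Professional, Qualys WAS) or the number of expected vulnerabilities in the OWASP benchmark (Fortify WebInspect).
  - Score for true positive number:
    - 1: less than 10%;
    - 2: up to 25%;
    - 3: up to 50%;
    - 4: 50% and higher.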

- Youden’s Index: The Youden index was proposed to evaluate the performance of analytical (diagnostic) tests [11]. The Youden equation outputs a value between −1 and 1, with the following reference points:
  - {1} means that the scanner detected all the vulnerabilities with no false-positive vulnerabilities;
  - {−1} means that the scanner detected only false-positive vulnerabilities and no actual vulnerabilities;
  - {0} means that the tool outputs the same expected result from the web application (FP, TP).

  The formula of the Youden index [11] and our scoring system are as follows:
  - Youden index formula: $J = \text{sensitivity} + \text{specificity} - 1 = \mathrm{TPR} - \mathrm{FPR}$
  - Score for Youden’s index:
    - 1: only FP, no TP {−1};
    - 2: same expected {0};
    - 3: detected vulnerabilities absolutely {1}.
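As a rough illustration of the index described above, the sketch below computes Youden's J from confusion-matrix counts using the standard definition (true-positive rate minus false-positive rate). The function name and the sample counts are hypothetical and are not taken from the benchmark results.

```python
def youden_index(tp: int, fn: int, fp: int, tn: int) -> float:
    """Return Youden's J = TPR - FPR (equivalently sensitivity + specificity - 1)."""
    vulnerable = tp + fn  # test cases that contain a real vulnerability
    safe = fp + tn        # test cases that contain no vulnerability
    if vulnerable == 0 or safe == 0:
        raise ValueError("need at least one vulnerable and one safe test case")
    tpr = tp / vulnerable  # share of real vulnerabilities that were reported
    fpr = fp / safe        # share of safe test cases that were wrongly flagged
    return tpr - fpr


# Hypothetical counts, chosen only to hit the three reference points.
print(youden_index(tp=10, fn=0, fp=0, tn=10))  # 1.0: all real issues, no false alarms
print(youden_index(tp=0, fn=10, fp=10, tn=0))  # -1.0: only false alarms
print(youden_index(tp=5, fn=5, fp=5, tn=5))    # 0.0: hit rate equals false-alarm rate
```

Values between the reference points are possible; how they map onto the 1 to 3 score is not specified in the list above, so the sketch stops at the index itself.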

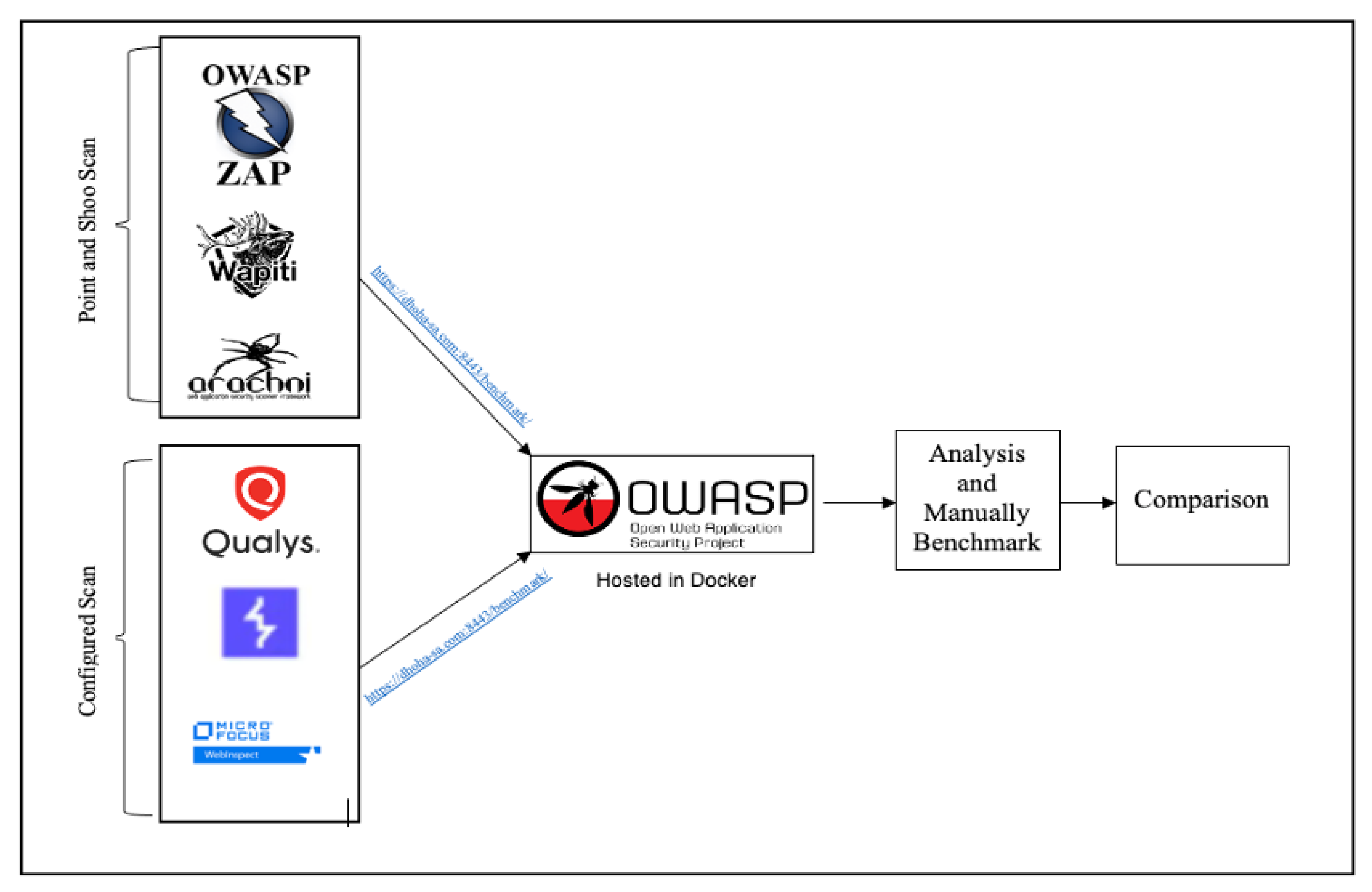
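The banded scores in Section 5.2 (URL coverage, scanning time, false positives, true positives) can be read as small lookup functions. The following is a minimal sketch, not the authors' implementation: all function names are hypothetical, and the treatment of boundary values (for example exactly 50% coverage) and of scan times between 30 and 45 min is an assumption, because the score lists do not define them. The sample figures come from Appendix A.

```python
def percent(part: float, whole: float) -> float:
    """Return part/whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


def coverage_score(pct: float) -> int:
    """1-5 score for URL coverage; boundary values go to the higher band (assumption)."""
    if pct > 90:
        return 5
    if pct >= 70:
        return 4
    if pct >= 50:
        return 3
    if pct >= 25:
        return 2
    return 1


def scanning_time_score(minutes: float) -> int:
    """1-5 score for scan duration; 30-45 min is scored 4 (assumption, undefined in the list)."""
    if minutes > 6 * 60:
        return 1
    if minutes > 3 * 60:
        return 2
    if minutes > 2 * 60:
        return 3
    if minutes >= 30:
        return 4
    return 5


def false_positive_score(pct: float) -> int:
    """1-3 score for the false-positive percentage; exactly 30% is scored 2 (assumption)."""
    if pct > 50:
        return 1
    if pct >= 30:
        return 2
    return 3


def true_positive_score(pct: float) -> int:
    """1-4 score for the true-positive percentage; upper bounds are inclusive (assumption)."""
    if pct >= 50:
        return 4
    if pct > 25:
        return 3
    if pct >= 10:
        return 2
    return 1


# Figures reported in Appendix A.
print(coverage_score(percent(4979, 5500)))       # Qualys WAS URL coverage -> 5
print(scanning_time_score(15))                   # Fortify WebInspect, 15 min -> 5
print(false_positive_score(percent(3, 3235)))    # Burp Suite Professional -> 3
print(true_positive_score(percent(56, 3235)))    # Burp Suite Professional -> 1
```

Appendix A also awards 0 points when a tool produces no result for a metric (for example, no crawled URLs); that case is outside the listed bands and is not modeled here.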

6. Experimental Setup

6.1. Environment Setup

6.2. Evaluation Approach

- Download the OWASP Benchmark Project in docker.

- Register DNS with GoDaddy to make the OWASP Benchmark Project publicly available.

- Set up port forwarding on our home router.

- Download the top 6 tools; each tool has its own required environment, as detailed in Table 3.

- Set the configuration for each tool depending on the type of pre-scan selected.

- Start attacking the OWASP Benchmark Project with the tools.

- Generate the results in XML format.

- Put the results into the OWASP Benchmark Project.

- Run the score calculator provided by the OWASP Benchmark Project against the XML reports for the tools.

- Take the score results and start our manual benchmarking using our proposed framework.

- Compare the tools after the overall benchmarking.
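The final comparison step can be sketched as a simple ranking over the total scores reported in Appendix A. This is only an illustration: `rank_tools` is a hypothetical helper, and breaking ties alphabetically is an assumption rather than part of the framework.

```python
# Total scores as reported in the "Total score" rows of Appendix A.
TOTAL_SCORES = {
    "OWASP ZAP": 32,
    "Burp Suite Professional": 38,
    "Qualys WAS": 36,
    "Fortify WebInspect": 32,
    "Arachni": 15,
    "Wapiti3": 20,
}


def rank_tools(totals: dict[str, int]) -> list[tuple[str, int]]:
    """Sort tools by total score, highest first; ties are ordered by name (assumption)."""
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


for position, (tool, score) in enumerate(rank_tools(TOTAL_SCORES), start=1):
    print(f"{position}. {tool}: {score}")
```

Because Tool Cost carries no points, the ranking reflects only the scored metrics; cost still has to be weighed separately when choosing a tool.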

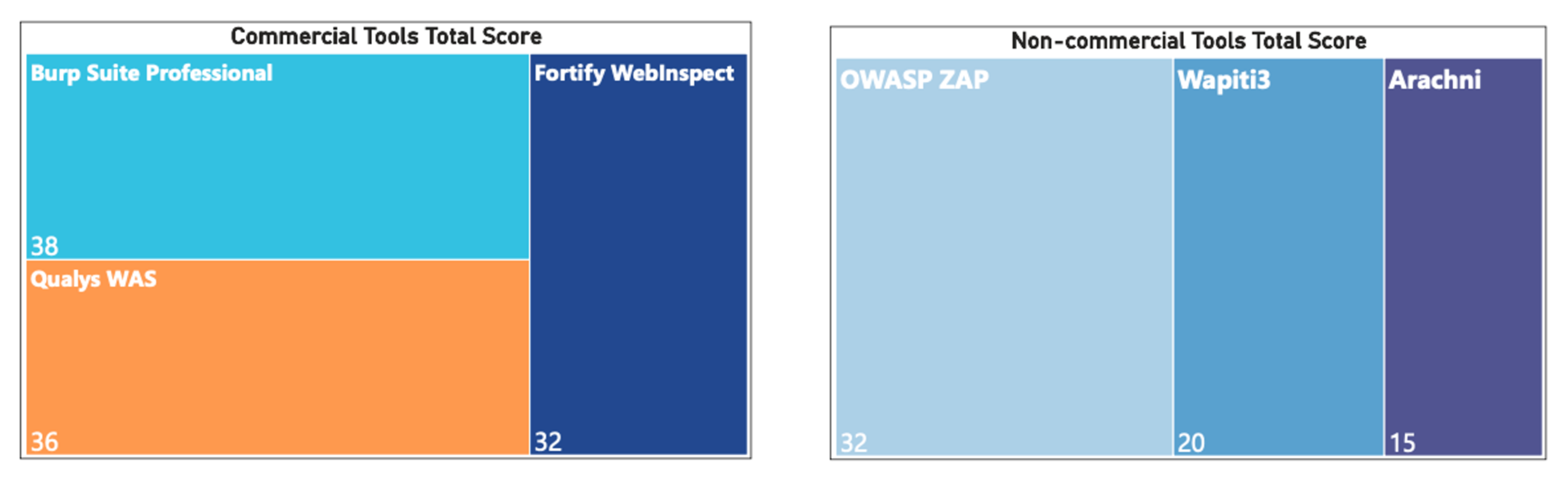

7. Result

7.1. Case One: Commercial Tools

7.2. Case Two: Non-Commercial Tools

8. Discussion

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | GUI, as expected |
| Penetration Testing Level | 1 | 1 point for only black box test method | It uses only the black box test method |
| Crawling types | 2 | 2 points for active crawler and passive crawler | It uses both active crawler and passive crawler in the same scan |
| Number of URLs covered | 5 | 5 points for more than 90% coverage | The OWASP benchmark has nearly 5500 URLs, and ZAP discovered 30,369 URLs, which covers the whole benchmark project |
| Scanning Time | 1 | 1 point for more than 6 h | The scan time was over 7 h |
| Type of Scan | 3 | 3 points for active and passive or policy scan | It uses both active and passive scan modes |
| Reporting Features | 0 | 0 for only HTML or PDF or XML reports | It produces only standard reports |
| Added Features | 1 | 1 point for add-ons and extension features | It has an up-to-date Marketplace for installing add-ons |
| Configuration Effortlessness | 3 | 3 points for easy | Its configuration is easy (plug-and-play), an out-of-the-box, ready-to-use application |
| Scans Logging Option | 1 | 1 point for scan log option | It logs all requests and responses during the scan |
| Tool Cost | Free Tool | | |
| OWASP Top 10 Vulnerabilities Coverage | 1 | 1 point for less than 25% coverage | The scan results only covered 1% of the Cross-Site Scripting category; the benchmarking result for the rest was 0% |
| Pause and Resume Scans | 2 | 2 points for the ability to pause and resume scans | OWASP ZAP can pause and resume the scan |
| Number of test cases generated | 5 | 5 points for more than 1000 test cases | OWASP ZAP generated 8799 test cases in one scan, which is more than 1000 test cases |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 0 | 0 for none | The scan results only covered 1% of the Cross-Site Scripting category; the benchmarking result for the rest was 0%. Therefore, there are no FP vulnerabilities |
| Number of True Positives | 1 | 1 point for less than 10% | The scan results only covered 1% of the Cross-Site Scripting category; the benchmarking result for the rest was 0%. Therefore, there are no FP vulnerabilities, and the TP number is 3, which is less than 10% |
| Youden’s Index | 0 | 0 for none | The benchmark did not detect any FP; therefore, there is no calculation for Youden’s Index |
| Total score | 32 | | |
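The total in the last row of the table above can be cross-checked by summing the per-metric scores. A minimal sketch: the dictionary simply transcribes the Score column, and Tool Cost is left out because it carries no points; the variable name is hypothetical.

```python
# Score column of the OWASP ZAP table (Tool Cost omitted: it has no numeric score).
zap_scores = {
    "Tool Type": 1,
    "Penetration Testing Level": 1,
    "Crawling types": 2,
    "Number of URLs covered": 5,
    "Scanning Time": 1,
    "Type of Scan": 3,
    "Reporting Features": 0,
    "Added Features": 1,
    "Configuration Effortlessness": 3,
    "Scans Logging Option": 1,
    "OWASP Top 10 Vulnerabilities Coverage": 1,
    "Pause and Resume Scans": 2,
    "Number of test cases generated": 5,
    "Automation level": 5,
    "Number of False Positives": 0,
    "Number of True Positives": 1,
    "Youden's Index": 0,
}

total = sum(zap_scores.values())
print(total)  # 32, matching the "Total score" row
```

The same check applies to the other tool tables in this appendix.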

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | GUI, as expected |
| Penetration Testing Level | 1 | 1 point for only black box test method | It uses only the black box test method |
| Crawling types | 2 | 2 points for active crawler and passive crawler | It uses both active crawler and passive crawler |
| Number of URLs covered | 3 | 3 points for 50% to 70% coverage | It crawled 3231 URLs, which is 58% of the benchmark URLs |
| Scanning Time | 1 | 1 point for more than 6 h | It took over 12 h |
| Type of Scan | 3 | 3 points for active and passive or policy scan | It uses both active and passive scan modes |
| Reporting Features | 0 | 0 for only HTML or PDF or XML reports | It uses only standard reports |
| Added Features | 1 | 1 point for add-ons and extension features | The pen-tester can configure the scanner specifications and download add-ons as needed |
| Configuration Effortlessness | 3 | 3 points for easy | Its configuration is easy (plug-and-play), an out-of-the-box, ready-to-use application |
| Scans Logging Option | 1 | 1 point for scan log option | It logs all requests and responses during the scan |
| Tool Cost | USD 399 per year | | |
| OWASP Top 10 Vulnerabilities Coverage | 4 | 4 points for 70% to 90% coverage | In one scan, Burp Suite Professional covered 5% Command Injection, 8% Cross-Site Scripting, 3% Insecure Cookie, 4% LDAP Injection, 3% Path Traversal, 8% SQL Injection, and 7% XPath Injection. The scanner covers 70% of the OWASP Top 10. |
| Pause and Resume Scans | 2 | 2 points for the ability to pause and resume scans | Burp Suite Professional can pause and resume the scan |
| Number of test cases generated | 5 | 5 points for more than 1000 test cases | Burp Suite Professional generated 3235 test cases in one scan, which is more than 1000 test cases |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 3 | 3 points for less than 30% | The number of FP vulnerabilities in Burp Suite Professional is 3; 3/3235 × 100 = 0.09%, which is less than 30% |
| Number of True Positives | 1 | 1 point for less than 10% | The TP number is 56; 56/3235 × 100 = 1.73%, which is less than 10% |
| Youden’s Index | 2 | 2 points for same expected {0} | The Youden index for Burp Suite Professional is 0.0%, which means that the tool outputs the same expected result from the web application (FP, TP) |
| Total score | 38 | | |

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | GUI, as expected |
| Penetration Testing Level | 3 | 3 points for black box/gray box/white box test methods | It uses all test methods |
| Crawling types | 1 | 1 point for only passive crawler or only active crawler | It uses only an active crawler |
| Number of URLs covered | 5 | 5 points for more than 90% coverage | Qualys WAS crawled 4979 URLs, which is 90.5% of 5500 URLs |
| Scanning Time | 1 | 1 point for more than 6 h | The scan took 24 h and did not cover the whole site |
| Type of Scan | 1 | 1 point for only active scan or only passive scan | It uses only active scan modes such as discovery scan and vulnerability scan |
| Reporting Features | 1 | 1 point for compliance standards report | It uses compliance standard reports |
| Added Features | 0 | 0 for no add-ons and extension features | Qualys WAS does not use add-ons |
| Configuration Effortlessness | 3 | 3 points for easy | Its configuration is easy (plug-and-play), an out-of-the-box, ready-to-use application |
| Scans Logging Option | 1 | 1 point for scan log option | It logs all scans with a date filtering option |
| Tool Cost | USD 30,000 per year to cover WAS and VM Security | | |
| OWASP Top 10 Vulnerabilities Coverage | 2 | 2 points for 25% to 50% coverage | Qualys WAS covered the following categories in one scan: 24% Command Injection, 38% Cross-Site Scripting, 53% Insecure Cookie, 32% SQL Injection. Qualys WAS covered 40% of the OWASP Top 10 Vulnerabilities. |
| Pause and Resume Scans | 2 | 2 points for the ability to pause and resume scans | Qualys WAS can pause and resume the scan |
| Number of test cases generated | 3 | 3 points for 500–700 test cases | Qualys WAS generated 591 test cases in one scan |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 3 | 3 points for less than 30% | The number of FP vulnerabilities in Qualys WAS is 3; 3/591 × 100 = 0.50%, which is less than 30% |
| Number of True Positives | 2 | 2 points for up to 25% | The TP number is 233; 233/591 × 100 = 39.4% |
| Youden’s Index | 2 | 2 points for same expected {0} | The Youden index for Qualys WAS is 0.14%, which means that the tool outputs the same expected result from the web application (FP, TP) |
| Total score | 36 | | |

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | GUI, as expected |
| Penetration Testing Level | 1 | 1 point for only black box test method | It uses the black box method |
| Crawling types | 1 | 1 point for only passive crawler or only active crawler | It uses an active crawler |
| Number of URLs covered | 3 | 3 points for 50% to 70% coverage | Fortify WebInspect crawled 3598 URLs, which is 65% of 5500 URLs |
| Scanning Time | 5 | 5 points for less than 30 min | The scan took 15 min |
| Type of Scan | 3 | 3 points for active and passive or policy scan | It uses active and passive scan modes and can also scan by policy |
| Reporting Features | 1 | 1 point for compliance standards report | It uses standard HTML and PDF reports and also reports by the required policy |
| Added Features | 1 | 1 point for add-ons and extension features | It has simulated attack tools for SQL injection, an HTTP editor, a server analyzer, a web proxy, a traffic viewer, and SWFscan. They are available during the scan, manually and automatically. |
| Configuration Effortlessness | 1 | 1 point for difficult | It requires dependencies such as SQL Server for installation |
| Scans Logging Option | 1 | 1 point for scan log option | It logs all scans with their results and can generate reports from the logs |
| Tool Cost | USD 24,000.00 per year | | |
| OWASP Top 10 Vulnerabilities Coverage | 1 | 1 point for less than 25% coverage | It detected only one SSL cipher vulnerability; the rest of the reported vulnerabilities were best-practice findings. Coverage of the OWASP Top 10 Vulnerabilities might be 1% or less |
| Pause and Resume Scans | 2 | 2 points for the ability to pause and resume scans | Fortify WebInspect can pause and resume the scan |
| Number of test cases generated | 5 | 5 points for more than 1000 test cases | It sent 68,730 attacks in one scan, which is more than 1000 test cases |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 0 | 0 for none | The scan results only covered 1% of SSL cipher; the benchmarking result for the rest was 0%. Therefore, there are no FP vulnerabilities. |
| Number of True Positives | 1 | 1 point for less than 10% | The scan results only covered 1% of SSL cipher; the benchmarking result for the rest was 0%. The TP number is 261 (261/2741 × 100), which is 9.5%, less than 10%. The number of expected vulnerabilities from the OWASP benchmark project is 2741. |
| Youden’s Index | 0 | 0 for none | The benchmark did not detect any FP; therefore, there is no calculation for Youden’s Index |
| Total score | 32 | | |

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | GUI, in a recent newer version |
| Penetration Testing Level | 1 | 1 point for only black box test method | It uses only the black box method |
| Crawling types | 1 | 1 point for only passive crawler or only active crawler | It uses an active crawler |
| Number of URLs covered | 0 | 0 for none | Arachni did not crawl |
| Scanning Time | 1 | 1 point for more than 6 h | The scan took over 48 h |
| Type of Scan | 1 | 1 point for only active scan or only passive scan | It uses only active scan modes |
| Reporting Features | 0 | 0 for only HTML or PDF or XML reports | It uses standard HTML and PDF reports |
| Added Features | 0 | 0 for no add-ons and extension features | No features |
| Configuration Effortlessness | 1 | 1 point for difficult | It needs Java JRE and a PostgreSQL server for installation |
| Scans Logging Option | 1 | 1 point for scan log option | It logs all scans but without the results |
| Tool Cost | Free Tool | | |
| OWASP Top 10 Vulnerabilities Coverage | 0 | 0 for none | Arachni did not detect any vulnerability. This issue was present in version 0.5.12 in paper [10], and the updated version v1.5.1 did not patch the issue as we observed it. |
| Pause and Resume Scans | 2 | 2 points for the ability to pause and resume scans | Arachni can pause and resume the scan |
| Number of test cases generated | 1 | 1 point for less than 100 test cases | Arachni generated nearly 50 test cases in one scan, which is less than 100 test cases |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 0 | 0 for none | Arachni did not detect any vulnerability; therefore, there are no FP vulnerabilities |
| Number of True Positives | 0 | 0 for none | Arachni did not detect any vulnerability; therefore, there are no FP or TP vulnerabilities |
| Youden’s Index | 0 | 0 for none | The benchmark did not detect any FP; therefore, there is no calculation for Youden’s Index |
| Total score | 15 | | |

| Metric | Score | Score Details | Score Reason |
|---|---|---|---|
| Tool Type | 1 | 1 point for only command-line interface (CLI) or only graphic user interface (GUI) | CLI, as expected |
| Penetration Testing Level | 1 | 1 point for only black box test method | It uses only the black box method |
| Crawling types | 1 | 1 point for only passive crawler or only active crawler | It uses an active crawler |
| Number of URLs covered | 1 | 1 point for less than 25% coverage | It crawled 621 URLs, which is 11% of 5500 URLs |
| Scanning Time | 1 | 1 point for more than 6 h | The scan took over 21 h |
| Type of Scan | 1 | 1 point for only active scan or only passive scan | It uses only active scan modes |
| Reporting Features | 0 | 0 for only HTML or PDF or XML reports | It uses standard HTML and PDF reports |
| Added Features | 0 | 0 for no add-ons and extension features | None |
| Configuration Effortlessness | 1 | 1 point for difficult | It requires dependencies such as Java JRE, Python 3.x, httpx, BeautifulSoup, yaswfp, tld, Mako, and httpx-socks for installation |
| Scans Logging Option | 0 | 0 for no scan logging option | No logs |
| Tool Cost | Free Tool | | |
| OWASP Top 10 Vulnerabilities Coverage | 2 | 2 points for 25% to 50% coverage | It covered the following categories in one scan: 9% Command Injection, 9% Cross-Site Scripting, 1% Path Traversal, 3% SQL Injection. Wapiti3 covered 40% of the OWASP Top 10 Vulnerabilities. |
| Pause and Resume Scans | 0 | 0 for no ability to pause and resume scans | Wapiti3 cannot pause or resume the scan |
| Number of test cases generated | 3 | 3 points for 500–700 test cases | Wapiti3 generated 621 test cases in one scan |
| Automation level | 5 | 5 points for less than 30% tester involvement needed | No need for pen-tester interaction |
| Number of False Positives | 0 | 0 for none | No FP vulnerabilities seen in the benchmark results |
| Number of True Positives | 1 | 1 point for less than 10% | The TP number is 42, which is less than 10% |
| Youden’s Index | 2 | 2 points for same expected {0} | The Youden index for Wapiti3 is 0.03%, which means that the tool outputs the same expected result from the web application (FP, TP) |
| Total score | 20 | | |

References

- Im, J.; Yoon, J.; Jin, M. Interaction Platform for Improving Detection Capability of Dynamic Application Security Testing. In Proceedings of the 14th International Joint Conference on e-Business and Telecommunications, Madrid, Spain, 26–28 July 2017; pp. 474–479.
- Li, J. Vulnerabilities mapping based on OWASP-SANS: A survey for static application security testing (SAST). Ann. Emerg. Technol. Comput. (AETiC) 2020, 4, 1–8.
- OWASP Top 10:2021. 2021. Available online: https://owasp.org/Top10/ (accessed on 14 November 2021).
- Pan, Y. Interactive application security testing. In Proceedings of the 2019 International Conference on Smart Grid and Electrical Automation (ICSGEA), Xiangtan, China, 10–11 August 2019; pp. 558–561.
- Meyers, B.S.; Almassari, S.F.; Keller, B.N.; Meneely, A. Examining Penetration Tester Behavior in the Collegiate Penetration Testing Competition. ACM Trans. Softw. Eng. Methodol. 2022, 31, 1–25.
- Scanlon, T.P. Fundamentals of Application Security Testing Tools; Carnegie Mellon University: Pittsburgh, PA, USA, 2021.
- Antunes, N.; Vieira, M. Assessing and Comparing Vulnerability Detection Tools for Web Services: Benchmarking Approach and Examples. IEEE Trans. Serv. Comput. 2015, 8, 269–283.
- Mburano, B.; Si, W. Evaluation of web vulnerability scanners based on OWASP benchmark. In Proceedings of the 2018 26th International Conference on Systems Engineering (ICSEng), Sydney, NSW, Australia, 18–20 December 2018; pp. 1–6.
- Vats, P.; Mandot, M.; Gosain, A. A Comprehensive Literature Review of Penetration Testing & Its Applications. In Proceedings of the 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 4–5 June 2020; pp. 674–680.
- Shebli, H.M.Z.A.; Beheshti, B.D. A study on penetration testing process and tools. In Proceedings of the 2018 IEEE Long Island Systems, Applications and Technology Conference (LISAT), Farmingdale, NY, USA, 4 May 2018.
- Yadav, D.; Gupta, D.; Singh, D.; Kumar, D.; Sharma, U. Vulnerabilities and Security of Web Applications. In Proceedings of the 2018 4th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 14–15 December 2018.
- Zuehlke, A.K. An Analysis of Tools, Techniques, and Mathematics Involved in a Penetration Test. Doctoral Dissertation, Appalachian State University, Boone, NC, USA, 2017.
- Amankwah, R.; Chen, J.; Kudjo, P.K.; Towey, D. An empirical comparison of commercial and open-source web vulnerability scanners. Softw. Pract. Exp. 2020, 50, 1842–1857.
- Saeed, F.A.; Elgabar, E.A. Assessment of open source web application security scanners. J. Theor. Appl. Inf. Technol. 2014, 61, 281–287.
- Shah, M.P. Comparative Analysis of the Automated Penetration Testing Tools. Master’s Thesis, National College of Ireland, Dublin, Ireland, 2020.
- Syaikhuddin, M.M.; Anam, C.; Rinaldi, A.R.; Conoras, M.E.B. Conventional Software Testing Using White Box Method. In Kinetik: Game Technology, Information System, Computer Network, Computing, Electronics, and Control; Universitas Muhammadiyah Malang: Kota Malang, Indonesia, 2018; pp. 65–72.
- Seng, L.K.; Ithnin, N.; Said, S.Z.M. The approaches to quantify web application security scanners quality: A review. Int. J. Adv. Comput. Res. 2018, 8, 285–312.
- Bacudio, A.G.; Yuan, X.; Bill Chu, B.T.; Jones, M. An Overview of Penetration Testing. Int. J. Netw. Secur. Appl. 2011, 3, 19–38.
- Goutam, A.; Tiwari, V. Vulnerability Assessment and Penetration Testing to Enhance the Security of Web Application. In Proceedings of the 2019 4th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 21–22 November 2019.
- Alazmi, S.; De Leon, D.C. A Systematic Literature Review on the Characteristics and Effectiveness of Web Application Vulnerability Scanners. IEEE Access 2022, 10, 33200–33219.
- Qiu, X.; Wang, S.; Jia, Q.; Xia, C.; Xia, Q. An automated method of penetration testing. In Proceedings of the 2014 IEEE Computers, Communications and IT Applications Conference, Beijing, China, 20–22 October 2014.
- Shanley, A.; Johnstone, M. Selection of penetration testing methodologies: A comparison and evaluation. In Proceedings of the 13th Australian Information Security Management Conference, Perth, Australia, 30 November–2 December 2015.
- Dalalana Bertoglio, D.; Zorzo, A.F. Overview and open issues on penetration test. J. Braz. Comput. Soc. 2017, 23, 2.
- Kritikos, K.; Magoutis, K.; Papoutsakis, M.; Ioannidis, S. A survey on vulnerability assessment tools and databases for cloud-based web applications. Array 2019, 3–4, 100011.
- Mirjalili, M.; Nowroozi, A.; Alidoosti, M. A survey on web penetration test. Adv. Comput. Sci. Int. J. 2014, 3, 107–121.

| Tool Name | Tool Type | License | Version | Last Update | Price |
|---|---|---|---|---|---|
| OWASP ZAP | Proxy | Apache License Version 2.0 | 2.11.0 | October 2021 | Free |
| Burp Suite Professional | Proxy | Commercial | 2021.9.1 | October 2021 | USD 399 per year |
| Qualys WAS | Scanner | Commercial | 8.12.55-1 | Self-updating | USD 30,000 per year |
| Arachni | Scanner | Arachni Public Source License Version 1.0 | 1.5.1 | November 2017 | Free |
| Wapiti3 | Scanner | GNU General Public License version 2 | 3.0.5 | May 2021 | Free |
| Fortify WebInspect | Scanner | Commercial | 21.2.0 | December 2021 | USD 24,000 per year |

| Criteria | Metric | Score Range |
|---|---|---|
| Test coverage | Test Coverage | 1–5 |
| | Pen-testing Level | 1–3 |
| | Number of URLs covered | 1–5 |
| Attack coverage | Number of test cases generated | 1–5 |
| Efficiency | Scanning Time | 1–5 |
| Vulnerability detection | OWASP Top 10 Vulnerabilities Coverage | 1–5 |
| | Number of False Positives | 1–3 |
| | Number of True Positives | 1–4 |
| | Youden Index | 1–3 |
| | Automation level | 1–5 |
| Other New | Crawling types | 1–2 |
| | Added features | 0–1 |
| | Reporting Features | 0–1 |
| | Configuration Effortlessness | 1–3 |
| | Scans Logging Option | 0–1 |
| | Tool Cost | NA |
| | Tool Type | NA |
| | Scan Type | 1–3 |
| | Pause and Resume Scans | 0–2 |

| Tool Name | Tool-Hosted Environment | Attack Route |
|---|---|---|
| OWASP ZAP | MacBook Pro, 2.3 GHz 8-Core Intel Core i9, RAM 64 GB 2667 MHz DDR4 | External |
| Burp Suite Professional | MacBook Pro, 2.3 GHz 8-Core Intel Core i9, RAM 64 GB 2667 MHz DDR4 | External |
| Qualys WAS | Cloud based | External |
| Arachni | MacBook Pro, 2.3 GHz 8-Core Intel Core i9, RAM 64 GB 2667 MHz DDR4 | External |
| Wapiti3 | Kali Linux hosted in Parallels on MacBook Pro, 2.3 GHz 8-Core Intel Core i9, RAM 64 GB 2667 MHz DDR4 | External |
| Fortify WebInspect | Windows 10 Pro hosted in Parallels on MacBook Pro, 2.3 GHz 8-Core Intel Core i9, RAM 64 GB 2667 MHz DDR4 | External |

| Burp Suite Professional | Qualys WAS | Fortify WebInspect |
|---|---|---|
| 70% | 40% | 1% |

| Wapiti3 | OWASP ZAP | Arachni |
|---|---|---|
| 40% | 1% | 0% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Albahar, M.; Alansari, D.; Jurcut, A. An Empirical Comparison of Pen-Testing Tools for Detecting Web App Vulnerabilities. Electronics 2022, 11, 2991. https://doi.org/10.3390/electronics11192991
