Abstract

To improve the generation quality of image style transfer, this paper proposes a light progressive attention adaptive instance normalization (LPAdaIN) model that combines the adaptive instance normalization (AdaIN) layer with the convolutional block attention module (CBAM). In the model structure, first, a lightweight autoencoder is built; using fewer network layers reduces the information lost during encoding and alleviates the distortion of the stylized image structure. Second, an AdaIN layer is progressively applied after each of three relu layers in the encoder to obtain fine-grained stylized feature maps. Third, the CBAM is added between the last AdaIN layer and the decoder, ensuring that the main objects in the stylized image remain clearly visible. In the model optimization, a reconstruction loss is designed to constrain the decoder more precisely, improving its ability to decode stylized images and refining their structure. Visual comparison with five classical style transfer models shows that LPAdaIN applies the texture of the style image to the content image more finely, producing stylized images in which the main objects are clearly visible and the structure is maintained. In terms of quantitative metrics, LPAdaIN achieves good results in running speed and structural similarity.

1. Introduction

Image style transfer, which is widely used in artistic creation, industrial design, film and television entertainment, and other fields, is the process of rendering content images with the style of style images, such as their color and texture. With the application of deep learning techniques to style transfer tasks, many researchers have explored the diversity and speed of style transfer, as well as the quality and application scenarios of stylized images. Deep learning-based style transfer can be divided into optimization-based methods and feed-forward methods. Optimization-based methods stylize only one image at a time by optimizing a white noise image; therefore, their application is relatively limited. The more widely used feed-forward methods optimize the parameters of a neural network to obtain a style transfer model, which can stylize images in batches.

Among the optimization-based style transfer methods, Gatys et al. [1,2] employed deep neural networks to encode the content and style representations of an image and calculated the content loss and the style loss to optimize a white noise image to achieve style transfer. To improve the transfer speed, Kolkin et al. [3] used the relaxed earth mover's distance to calculate the style loss, which was empirically easy to optimize and yielded good results. In terms of improving the quality of stylized images, Xie et al. [4] introduced the classic total variation regularization, which effectively suppressed the noise generated in the style transfer process and improved the visual quality of the stylized image. Nikolai et al. [5] adopted the dual form of central moment discrepancy (CMD) to minimize the difference between the target style and the feature distribution of the output image and achieved a visually better transfer of many artistic styles. As artistic style transfer methods, the above basic methods can only transfer color and texture to the content image, so the stylized image resembles an art painting. To improve the photorealism of the outputs of the neural style transfer algorithm, Luan et al. [6] added a new loss term to the optimization objective to better preserve local structures in the content photo. Kim et al. [7] proposed a method that incorporates deformation targets, derived from domain-agnostic point matching between content and style images, into the objective of style transfer.

Among the feed-forward style transfer methods, Johnson et al. [8] proposed real-time style transfer (RTST), which realized single style transfer by encoding a style in the model parameters through training. To realize multi-style transfer, Dumoulin et al. [9] introduced a conditional instance normalization (CIN) layer that allows the network to encode 32 styles and their interpolations. To achieve arbitrary style transfer, Huang et al. [10] proposed an adaptive instance normalization (AdaIN) layer, which aligns the mean and variance of the content feature maps to those of the style feature maps to produce the stylized feature maps. Without training a specific style transfer network, Li et al. [11] proposed whitening and coloring transforms (WCT), which are integrated into the feed-forward procedure to match the statistical distributions and correlations between the intermediate features of content and style. Arbitrary style transfer models among the feed-forward methods are more efficient, do not require online training, and are more convenient to deploy in applications. However, the quality of stylized images obtained by arbitrary style transfer models decreases slightly. To solve this problem, Yao et al. [12] proposed the attention-aware multi-stroke (AAMS) model, which employs self-attention as a residual to obtain the attention map. The attention map expands the participation of salient regions, keeps the correlation between distant regions, and maintains the structure of the main areas in the stylized image. Liu et al. [13] proposed the adaptive attention normalization model (AdaAttn), which takes both shallow and deep features into account when calculating the attention weight. AdaAttn aligns the attention-weighted mean and variance of content feature maps and style feature maps on a per-point basis. In addition, a local feature loss is used in AdaAttn to help the model improve the transfer quality. Shen et al. [14] added an edge detection network to the neural style transfer model to extract the edge contour of the content image and achieved a refined representation of the overall structure of the stylized image.

Current style transfer models mainly focus on the diversity of transfer styles. When dealing with the complex structure of the content image and the artistic abstraction of the style image, most of these models still process the semantic patterns of the style image in a unified way, which leads to a rough stylized image texture, unclear main objects, and a distorted structure. Although some style transfer models have been proposed to improve the quality of stylized images, most of them are complex and require additional computational cost. In order to reduce computational consumption and obtain stylized images with artistic beauty, this paper proposes a feed-forward arbitrary style transfer model named the light progressive attention adaptive instance normalization (LPAdaIN) model. LPAdaIN is a three-branch model that combines the convolutional attention mechanism CBAM with the AdaIN layer and includes a master branch for style transfer and two auxiliary branches for image reconstruction. It transfers the global style while retaining local structure, makes the style distribution more delicate and the main objects visible, and thus produces high-quality stylized images.

The main contributions of this work are:

- In the construction of the model structure, first, the influence of different network depths on style transfer effects is compared, and a lightweight autoencoder with shallow network layers and an AdaIN layer is designed as a base style transfer model to improve the running speed of the model and alleviate the distortion of the stylized image structure. Second, the strategy of progressively applying the AdaIN layer is adopted in the master branch to achieve the fully fine-grained transfer of style textures. Third, a CBAM module is embedded before the decoder of the master branch to reduce the loss of important information and ensure that the main objects in the stylized image are clearly visible.

- In the optimization of the model, a new optimization objective named reconstruction loss is proposed. In auxiliary branches, the per-pixel loss on the image is computed in a supervised manner to assist the optimization of the decoder training.

- Experiments and comparisons with other models are provided to demonstrate the validity of the proposed LPAdaIN model. The LPAdaIN achieves better performance in terms of stylized image style texture refinement, the visibility of the main objects, and structure preservation.

2. Preliminary

2.1. Adaptive Instance Normalization Layer

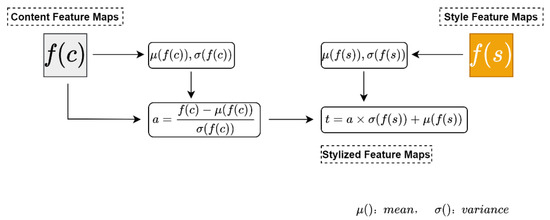

Feed-forward style transfer methods encode the style in model parameters through training; however, the training can only make the model learn one style. Dumoulin et al. [9] conjectured that the single style transfer model cannot fully utilize its capacity: only a part of the parameters in the model represents the style information, and single style transfer models of different styles can share some parameters. They proposed the CIN layer, which enables multi-style transfer by learning multiple pairs of affine parameters, representing multiple styles, at the instance normalization layer. Inspired by the CIN, Huang et al. [10] applied the AdaIN layer to replace the mean and variance of content feature maps with the mean and variance of style feature maps to obtain stylized feature maps, so as to achieve arbitrary style transfer. Unlike CIN, AdaIN has no learnable affine parameters. Instead, it adaptively computes the affine parameters from the style image. Content feature maps $f(c)$ and style feature maps $f(s)$ are extracted from the content image and the style image using pre-trained VGG-19 [15], and both are then input to an AdaIN layer. The flow chart of the AdaIN layer is shown in Figure 1. First, the mean of $f(c)$ is subtracted from $f(c)$ and the result is divided by the variance of $f(c)$, which erases the style and yields the feature maps $a$. Second, $a$ is multiplied by the variance of $f(s)$ and the mean of $f(s)$ is added to obtain the stylized feature maps, as in Equation (1).

$$\mathrm{AdaIN}(f(c), f(s)) = \sigma(f(s)) \left( \frac{f(c) - \mu(f(c))}{\sigma(f(c))} \right) + \mu(f(s)) \tag{1}$$

where $\mu(\cdot)$ and $\sigma(\cdot)$ are the mean and variance of the feature maps, respectively. For a clearer description, $t$ is used to denote the stylized feature maps, as in Equation (2).

$$t = \mathrm{AdaIN}(f(c), f(s)) \tag{2}$$

Figure 1. Flow chart of AdaIN.

A randomly initialized decoder $g$ maps the stylized feature maps $t$ back to the stylized image $I_{cs}$ in the image space, as in Equation (3).

$$I_{cs} = g(t) \tag{3}$$
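The AdaIN operation described in this subsection can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `channel_stats` and `adain`, the `(C, H, W)` array layout, and the small constant `eps` are assumptions made for illustration. Following [10], the normalization divides by the per-channel standard deviation, which is the quantity this paper refers to as the variance.

```python
import numpy as np

def channel_stats(feat, eps=1e-5):
    """Per-channel mean and standard deviation of a (C, H, W) feature map."""
    c = feat.shape[0]
    flat = feat.reshape(c, -1)
    mean = flat.mean(axis=1).reshape(c, 1, 1)
    std = np.sqrt(flat.var(axis=1) + eps).reshape(c, 1, 1)  # eps avoids division by zero
    return mean, std

def adain(content_feat, style_feat, eps=1e-5):
    """Align the channel-wise statistics of content_feat to those of style_feat."""
    c_mean, c_std = channel_stats(content_feat, eps)
    s_mean, s_std = channel_stats(style_feat, eps)
    normalized = (content_feat - c_mean) / c_std  # erase the style of the content features
    return normalized * s_std + s_mean            # re-scale and shift with the style statistics

# Toy feature maps; the spatial sizes of content and style may differ.
rng = np.random.default_rng(0)
f_c = rng.normal(0.0, 1.0, size=(4, 8, 8))
f_s = rng.normal(3.0, 2.0, size=(4, 16, 16))
t = adain(f_c, f_s)
```

After the call, `t` keeps the spatial layout of `f_c`, while its per-channel mean and spread match those of `f_s`.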

2.2. Attention Mechanism

The attention mechanism originates from the study of human visual perception. Due to the bottleneck of information processing, humans will focus on salient regions and ignore other information when observing objects. Inspired by this, some scholars have applied the attention mechanism to the field of deep learning. The commonly used attention mechanisms include channel attention [16,17] and spatial attention [17].

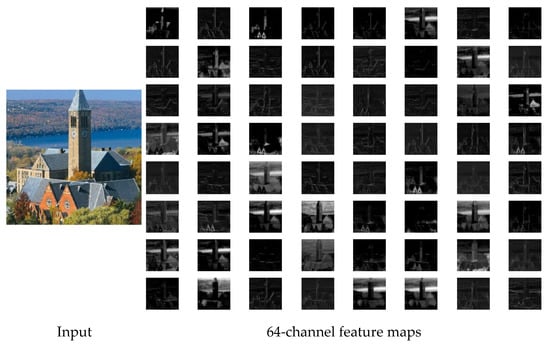

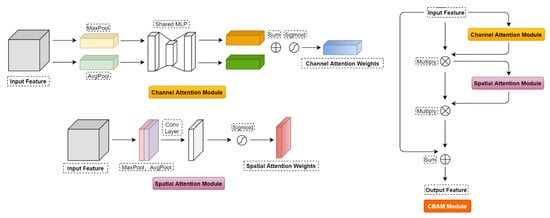

An image has multiple channels when it is encoded into the intermediate feature layers. Figure 2 visualizes the 64-channel feature maps extracted by the relu1-1 layer of pre-trained VGG-19 [15]. The contour information of the object is obvious in some channel feature maps, while it is rather blurry in others. The channel attention mechanism squeezes the spatial dimension of the input feature maps to obtain a one-dimensional vector of channel attention weights, which are learned through the loss function. It assigns larger weights to the effective channels and thereby enhances the channels that carry a large amount of information. Specifically, as shown in Figure 3, feature maps of size H × W × C are input into the channel attention module, where H, W, and C are the height, width, and number of channels of the feature maps, respectively. To aggregate spatial information, both average-pooling and max-pooling operations are applied to the input feature maps to generate two different spatial context descriptors of size 1 × 1 × C. Both descriptors are then forwarded to a shared multi-layer perceptron (MLP) to produce two feature vectors, which are merged using element-wise summation. The final channel attention weights are obtained by applying the sigmoid activation function.

Figure 2. The 64-channel feature maps extracted by relu1-1 in VGG-19.

Figure 3. Attention mechanism.

Similarly, the spatial attention mechanism squeezes the channel dimension of the input feature maps to obtain a two-dimensional map of spatial attention weights, which are learned through the loss function. It assigns larger weights to the effective regions, so that the object structure in these regions is preserved. Specifically, as shown in Figure 3, feature maps of size H × W × C are input into the spatial attention module. Max pooling and average pooling along the channel axis are performed to obtain two feature maps of size H × W × 1, which are then concatenated to generate an efficient feature descriptor. The final spatial attention weights are generated by applying a convolution layer to the concatenated feature descriptor, followed by the sigmoid activation function.

The convolutional block attention module (CBAM) [17] is a simple and effective attention module for feed-forward convolutional neural networks. As shown in Figure 3, the input feature maps first pass through the channel attention module to generate channel attention weights, which are multiplied with the input feature maps element-wise along the channel dimension to generate the channel attention feature maps. The channel attention feature maps are then input into the spatial attention module to generate spatial attention weights, which are multiplied element-wise with the channel attention feature maps along the spatial dimension to generate the spatial attention feature maps. Finally, the spatial attention feature maps and the original input feature maps are connected by residual summation to obtain the final output feature maps.
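The data flow of the channel attention, spatial attention, and CBAM steps described above can be sketched in NumPy as follows. This is an illustrative sketch rather than the implementation used in the paper: the weights are random instead of learned, the reduction ratio of 2 and the 7 × 7 kernel size are assumptions (the text does not state them), and all function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """x: (C, H, W). Shared two-layer MLP with weights w1: (C//r, C), w2: (C, C//r)."""
    avg = x.mean(axis=(1, 2))                      # (C,) average-pooled descriptor
    mx = x.max(axis=(1, 2))                        # (C,) max-pooled descriptor
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)   # shared MLP with a ReLU in between
    return sigmoid(mlp(avg) + mlp(mx))             # (C,) channel weights in (0, 1)

def conv2d_same(x, kernel):
    """Naive zero-padded convolution. x: (Cin, H, W), kernel: (Cin, k, k) -> (H, W)."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(x, kernel):
    """Pool along the channel axis, concatenate, convolve, and squash to (0, 1)."""
    avg = x.mean(axis=0, keepdims=True)            # (1, H, W)
    mx = x.max(axis=0, keepdims=True)              # (1, H, W)
    descriptor = np.concatenate([avg, mx], axis=0) # (2, H, W)
    return sigmoid(conv2d_same(descriptor, kernel))  # (H, W) spatial weights

def cbam(x, w1, w2, kernel):
    ca = channel_attention(x, w1, w2)
    x_c = x * ca[:, None, None]                    # channel attention feature maps
    sa = spatial_attention(x_c, kernel)
    x_s = x_c * sa[None, :, :]                     # spatial attention feature maps
    return x + x_s                                 # residual summation with the input

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 6, 6))
w1 = rng.normal(size=(4, 8)) * 0.1                 # reduction ratio r = 2 (assumed)
w2 = rng.normal(size=(8, 4)) * 0.1
kernel = rng.normal(size=(2, 7, 7)) * 0.1          # 7 x 7 kernel (assumed)
out = cbam(x, w1, w2, kernel)
```

The output has the same shape as the input, so such a module can be placed between two existing layers without changing the surrounding network.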

The attention mechanism requires the participation of the target domain, and the attention weights are obtained through loss optimization. The self-attention mechanism [18] is a variant of the attention mechanism that reduces the dependence on external information and is a better choice to capture the internal correlations of data or features. The self-attention mechanism can obtain attention weights by calculating the internal elements of the source domain. It does not require the target domain to participate in the calculation of loss optimization. Attention-aware multi-stroke (AAMS) [12] used the self-attention mechanism with a sparse loss on the self-attention feature map to encourage the self-attention encoder to pay more attention to small and salient regions rather than the entire image, therefore effectively protecting the structure of the saliency area in the stylized image.

3. Proposed Method

3.1. Algorithm of the Proposed Method

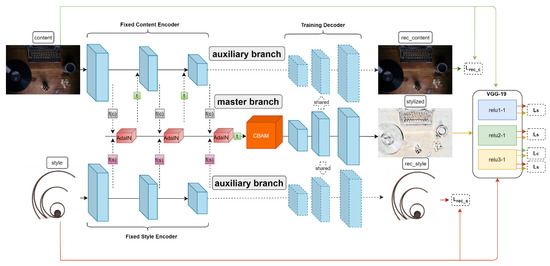

In order to realize style transfer with texture refinement and structure preservation, the AdaIN layer and the CBAM are combined to construct a lightweight progressive attention adaptive instance normalization model, LPAdaIN, as shown in Algorithm 1. It consists of the content encoder, the style encoder and the decoder. The structure of LPAdaIN is shown in Figure 4, with one master branch and two auxiliary branches. The task of the master branch is style transfer, using the content encoder and style encoder of the two auxiliary branches to encode the content image and the style image into the content feature maps f(c) and the style feature maps f(s), respectively. The AdaIN layer is progressively applied after the relu layer to achieve the final stylized feature maps t with a fine-grained texture. Attention mechanism CBAM is added between the last AdaIN layer and the decoder to reduce the loss of important information and ensure that the main objects in the stylized image are clearly visible. Finally, the decoder is used to decode the stylized feature maps back to the stylized image. The tasks of the two auxiliary branches are image reconstruction and sharing the decoder with the master branch. Two encoders are used to encode the content image and style image into the content feature maps and style feature maps, respectively. Then, the decoder is used to decode the content feature maps and style feature maps back to the reconstructed content image and the reconstructed style image.

Figure 4. LPAdaIN network structure diagram.

The training of the model is constrained by the content loss, the style loss, and the reconstruction loss. Specifically, the master branch trains the decoder through the content loss and the style loss. The auxiliary branches train the decoder through the reconstruction loss. Since the master branch shares the decoder with the auxiliary branches, this co-training allows the model decoder to be more fully trained, refining the stylized image structure. In addition, since the encoder can well extract image feature maps using pre-trained VGG-19 [15], the parameters of two encoders are not updated during model training and only the parameters of the decoder are updated to reduce the model training time.

| Algorithm 1: LPAdaIN Algorithm. |
| --- |
| Step 1: Input a pair of content image Ic and style image Is |
| Step 2: In Master Branch |
| Step 2.1: for Ic and Is |
| Step 2.1.1: use relu1-1 layer to extract f(c) and f(s), use AdaIN to get t |
| Step 2.1.2: use relu2-1 layer to extract f(c) and f(s), use AdaIN to get t |
| Step 2.1.3: use relu3-1 layer to extract f(c) and f(s), use AdaIN to get t |
| Step 2.1.4: use CBAM to enhance important information |
| Step 2.1.5: feed t to decoder, then get stylized image Ics |
| Step 2.1.6: calculate content loss and style loss, then optimize decoder |
| Step 2.2: end for |
| Step 3: In Auxiliary Branch One |
| Step 3.1: for Ic |
| Step 3.1.1: use relu3-1 layer to extract f(c) |
| Step 3.1.2: feed f(c) to decoder, then get reconstructed content image Ic_rec |
| Step 3.1.3: calculate content reconstruction loss, then optimize decoder |
| Step 3.2: end for |
| Step 4: In Auxiliary Branch Two |
| Step 4.1: for Is |
| Step 4.1.1: use relu3-1 layer to extract f(s) |
| Step 4.1.2: feed f(s) to decoder, then get reconstructed style image Is_rec |
| Step 4.1.3: calculate style reconstruction loss, then optimize decoder |
| Step 4.2: end for |
| Step 5: Output stylized image Ics |
| Step 6: end |

3.2. LPAdaIN Architecture

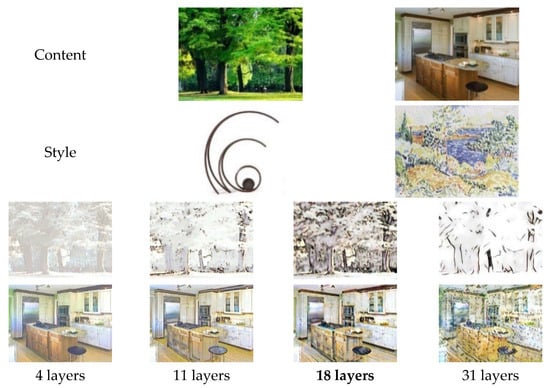

In the network structure design of the model, first, the influence of the number of autoencoder network layers on style transfer effects is analyzed through experiments. Four types of autoencoders with different network layers are set up for training. The encoder uses pre-trained VGG-19 [15], and the depth is, respectively, relu1-1 layer (four layers); relu2-1 layer (11 layers); relu3-1 layer (18 layers); and relu4-1 layer (31 layers). The decoder structure is symmetrical with the encoder structure. An AdaIN layer is used between the encoder and the decoder for style rendering. Style transfer effects are shown in Figure 5. It can be seen that when the number of autoencoder network layers is small, only color information is transferred without texture information. When the number of autoencoder network layers is large, the stylized image structure is prone to distortion. In addition, the more layers in the autoencoder network, the longer the training and testing time. In order to balance the style transfer effects and training time, the first 18 layers of pre-trained VGG-19 are used as the content encoder and the style encoder, and a decoder is constructed with a symmetrical structure. In this scheme, the stylized image contains color and texture information while maintaining good structure, and the training time is moderate. In this paper, the convolutional layers in the autoencoder all use 3 × 3 convolution kernels, which can capture the detailed statistics of natural images and reduce the number of parameters.

Figure 5. Comparison of style transfer effects of autoencoders with different network layers.

A single application of the AdaIN layer for style rendering results in the insufficient distribution of style information in the stylized image. Therefore, the AdaIN layer is progressively applied to make the style transfer more sufficient. As shown in Figure 4, each AdaIN layer is applied after the relu1-1, relu2-1, and relu3-1 layers, respectively, in the encoder. The input feature maps of each AdaIN layer are incremented in the channel dimension, which are 64, 128, and 256, respectively. In the multi-scale channel dimension, through the AdaIN layer, the mean and variance of the style feature maps are used to replace the mean and variance of the content feature maps to obtain stylized feature maps. Stylized feature maps t are used as content feature maps and encoded in the content encoder again. The next AdaIN layer would render style information to the content feature maps again, so that the style can be evenly and finely applied to them.
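The progressive strategy described above can be sketched as a loop in which the stylized feature maps of one stage are re-encoded as the content input of the next stage. The sketch below only illustrates this control flow: `make_stage` is a hypothetical stand-in for a VGG-19 block (a random 1 × 1 convolution with ReLU and optional 2 × 2 average pooling), not the pre-trained encoder, and only the channel widths 64, 128, and 256 are taken from the text.

```python
import numpy as np

def adain(c, s, eps=1e-5):
    """AdaIN on (C, H, W) arrays, using the per-channel mean and standard deviation."""
    ax = (1, 2)
    c_mean, c_std = c.mean(axis=ax, keepdims=True), np.sqrt(c.var(axis=ax, keepdims=True) + eps)
    s_mean, s_std = s.mean(axis=ax, keepdims=True), np.sqrt(s.var(axis=ax, keepdims=True) + eps)
    return (c - c_mean) / c_std * s_std + s_mean

def make_stage(rng, c_in, c_out, downsample):
    """Hypothetical stand-in for one encoder block: optional 2x2 average pool, 1x1 conv, ReLU."""
    weight = rng.normal(0.0, 1.0 / np.sqrt(c_in), size=(c_out, c_in))
    def stage(x):
        if downsample:
            c, h, w = x.shape
            x = x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
        return np.maximum(np.einsum("oc,chw->ohw", weight, x), 0.0)
    return stage

def progressive_adain(content, style, stages):
    """Apply AdaIN after every stage; the stylized maps t are encoded again as content."""
    t, s = content, style
    for stage in stages:
        f_c, s = stage(t), stage(s)   # encode the current content and style inputs
        t = adain(f_c, s)             # render the style at this scale
    return t

rng = np.random.default_rng(0)
stages = [
    make_stage(rng, 3, 64, downsample=False),    # plays the role of relu1-1
    make_stage(rng, 64, 128, downsample=True),   # plays the role of relu2-1
    make_stage(rng, 128, 256, downsample=True),  # plays the role of relu3-1
]
content = rng.normal(size=(3, 32, 32))
style = rng.normal(size=(3, 32, 32))
t = progressive_adain(content, style, stages)
```

In this sketch the style branch is encoded without modification, while the content branch carries the stylized maps forward, which is one reading of the re-encoding step described in the text.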

Since the encoding step in style transfer loses important information of the image, the recognizability of the main objects in the stylized image is low. An attention mechanism can alleviate this problem by making the model training process focus on significant channels and spatial regions. In this paper, the CBAM module is added between the last AdaIN layer and the decoder; that is, the CBAM module can be treated as a part of the decoder. The CBAM module is placed here for four main reasons:

(1) The pre-trained VGG-19 is used as the encoder. The parameters of the encoder are fixed during training, and only the decoder is trained, which greatly saves training time. If the CBAM module were embedded in the encoder, the encoder would also need to be trained in order to learn accurate spatial and channel attention weights, which would greatly increase the training time.

(2) The AdaIN layer has certain disadvantages: when it erases the style of the content image, part of the outline structure is erased as well. Because there are multiple AdaIN layers in the encoder, a CBAM module applied after the relu1-1 or relu2-1 layer could enhance the salient information at that position, but the subsequent style-erasing operation in the next AdaIN layer would again destroy the structure and the salient information.

(3) Generally speaking, information about the input image is lost during the encoding process, and the attention mechanism is needed to enhance important information. The decoding process is itself a process of information enhancement and does not need the attention mechanism.

(4) At the position between the last AdaIN layer and the decoder, the input of the CBAM is the fine and fully stylized output of the last AdaIN layer, and the number of channels is the largest. Applying the CBAM module here therefore allows the weights of the different channels and spatial regions to be learned with higher reliability.

3.3. Training with Improved Loss Function

Style transfer needs to retain the content of the content image and obtain the style of the style image, and there is no target stylized image as a real label. The per-pixel loss function of the image cannot be selected, and only the perceptual loss function on image feature maps can be calculated to train the model. On the selection of feature layers for calculating loss, the content loss measures the degree of preservation of the contour structure of the stylized image. The feature maps of the deeper feature layers extract the image contour structure. Therefore, the content loss is calculated directly on the feature maps of the deeper feature layers of the stylized image and content image. The style loss measures the similarity between the color and texture in the stylized image and the style image. The image style information of each feature layer can be extracted by correlation transformation. Therefore, the style loss is calculated on the feature maps after the transformation of multiple feature layers of the stylized image and style image. So far, the correlation transformations that can extract the style information of the feature maps include calculating the Gram matrix of the feature maps, calculating the mean and variance of the feature maps, and calculating the histogram of the feature maps.

However, detailed information is lost when the image is encoded to the feature layers. Therefore, the perceptual loss calculated at the feature layers cannot fully measure the stylized image quality, and it cannot optimize the decoder perfectly. In contrast, content images and style images can be used as ground truth labels for the reconstructed content images and reconstructed style images, so a per-pixel reconstruction loss on the images can be calculated in a supervised manner to optimize the decoder training. Therefore, the perceptual loss, including the content loss and the style loss, is calculated in the master branch, while the per-pixel reconstruction loss is calculated in the auxiliary branches. The decoder is jointly optimized by both to realize the structure refinement of the stylized images.

In the master branch, we use the stylized image feature maps and content image feature maps extracted from the relu3-1 layer in the model to calculate L2 loss as the content loss. The content loss is shown in Equation (4).

$$L_c = \lVert f(I_{cs}) - f(I_c) \rVert_2 \tag{4}$$

where $f(\cdot)$ represents the feature maps extraction of the image with the relu3-1 layer, $I_c$ is the content image, $I_s$ is the style image, and $I_{cs}$ is the stylized image.

Different from the method of calculating the style loss with the Gram matrix of style feature maps [1,2], the AdaIN layer is adopted, so the mean and variance are used to measure the style information. We directly use the relu1-1 layer, relu2-1 layer, and relu3-1 layer in the model to extract the stylized image feature maps and the style image feature maps. Their mean and variance are used to calculate the style loss. The style loss is shown in Equation (5).

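$$L_s = \sum_{i=1}^{3} \left( \lVert \mu(\phi_i(I_{cs})) - \mu(\phi_i(I_s)) \rVert_2 + \lVert \sigma(\phi_i(I_{cs})) - \sigma(\phi_i(I_s)) \rVert_2 \right) \tag{5}$$

where $\phi_i$ ($i = 1, 2, 3$) represents the image feature maps extracted by the relu$i$-1 layer of the VGG-19 encoder, $\mu$ is the mean of the feature maps, and $\sigma$ is the variance of the feature maps.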
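The two perceptual losses can be illustrated with the following NumPy sketch. It rests on stated assumptions: the L2 loss is implemented as a mean squared error, which is one common reading (an unnormalized Euclidean norm would be another), the spread statistic is the standard deviation as in [10], and the function names are hypothetical. The feature maps are assumed to be already extracted as `(C, H, W)` arrays.

```python
import numpy as np

def content_loss(feat_stylized, feat_content):
    """Distance between relu3-1 features of the stylized and content images (MSE here)."""
    return float(np.mean((feat_stylized - feat_content) ** 2))

def mean_std(feat, eps=1e-5):
    """Per-channel mean and standard deviation of a (C, H, W) feature map."""
    mean = feat.mean(axis=(1, 2))
    std = np.sqrt(feat.var(axis=(1, 2)) + eps)
    return mean, std

def style_loss(feats_stylized, feats_style):
    """Sum over layers of the mismatch in channel-wise mean and spread."""
    loss = 0.0
    for f_cs, f_s in zip(feats_stylized, feats_style):
        m_cs, s_cs = mean_std(f_cs)
        m_s, s_s = mean_std(f_s)
        loss += np.mean((m_cs - m_s) ** 2) + np.mean((s_cs - s_s) ** 2)
    return float(loss)

# Shuffling spatial positions keeps the channel statistics but changes the layout.
rng = np.random.default_rng(0)
feat = rng.normal(size=(4, 8, 8))
perm = rng.permutation(64)
feat_shuffled = feat.reshape(4, -1)[:, perm].reshape(4, 8, 8)
l_style = style_loss([feat_shuffled], [feat])
l_content = content_loss(feat_shuffled, feat)
```

The toy example shows why the two losses are complementary: a spatial shuffle leaves the style loss at essentially zero, because the channel statistics are unchanged, while the content loss becomes clearly positive.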

In the auxiliary branches, a per-pixel reconstruction loss is designed to optimize the decoder. The content reconstruction loss is calculated on the reconstructed content image and the content image. The style reconstruction loss is calculated on the reconstructed style image and the style image. Since the decoder is shared, if the reconstructed image quality of the auxiliary branches is improved, the ability of the decoder to decode the image will be enhanced and the quality of the stylized image of the master branch will be improved. The reconstruction loss $L_{rec}$ is divided into the content reconstruction loss $L_{c\_rec}$ and the style reconstruction loss $L_{s\_rec}$, as shown in Equation (6).

$$L_{rec} = L_{c\_rec} + L_{s\_rec}, \qquad I_{c\_rec} = g(f(c)), \qquad I_{s\_rec} = g(f(s)) \tag{6}$$

where $f(c)$ is the content feature maps, $f(s)$ is the style feature maps, $g$ is the decoder, $I_{c\_rec}$ is the reconstructed content image, and $I_{s\_rec}$ is the reconstructed style image; $L_{c\_rec}$ and $L_{s\_rec}$ are the per-pixel losses between $I_{c\_rec}$ and $I_c$ and between $I_{s\_rec}$ and $I_s$, respectively.

The total loss function is shown in Equation (7). The total loss is used to constrain the network to learn appropriate parameters and then perform the style transfer with texture refinement and structure preservation.

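$$L = \lambda_c L_c + \lambda_s L_s + \lambda_r L_{rec} \tag{7}$$

where $\lambda_c$, $\lambda_s$, and $\lambda_r$ are the weight coefficients of $L_c$, $L_s$, and $L_{rec}$, respectively.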
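A minimal sketch of how the reconstruction loss and the weighted total loss could be combined is given below. Several points are assumptions: the per-pixel loss is taken to be a mean squared error (the text only says "per-pixel"), the default weights 1, 10, and 100 are the values reported in Section 4.1, and their assignment to the content, style, and reconstruction terms in that order is a guess. The function names are hypothetical.

```python
import numpy as np

def reconstruction_loss(img_c_rec, img_c, img_s_rec, img_s):
    """Per-pixel loss of both auxiliary branches (mean squared error assumed)."""
    l_c_rec = np.mean((img_c_rec - img_c) ** 2)   # content reconstruction term
    l_s_rec = np.mean((img_s_rec - img_s) ** 2)   # style reconstruction term
    return float(l_c_rec + l_s_rec)

def total_loss(l_content, l_style, l_rec, weights=(1.0, 10.0, 100.0)):
    """Weighted sum of the three losses; the order of the weights is an assumption."""
    w_c, w_s, w_r = weights
    return w_c * l_content + w_s * l_style + w_r * l_rec

rng = np.random.default_rng(0)
img_c = rng.uniform(size=(3, 16, 16))
img_s = rng.uniform(size=(3, 16, 16))
# A perfect decoder would reproduce its inputs, giving a zero reconstruction loss.
l_rec_perfect = reconstruction_loss(img_c, img_c, img_s, img_s)
l_rec_noisy = reconstruction_loss(img_c + 0.1, img_c, img_s, img_s)
loss = total_loss(1.0, 1.0, 1.0)
```

Because all three terms update the same shared decoder, a large weight on the reconstruction term pushes the decoder toward faithful image reconstruction.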

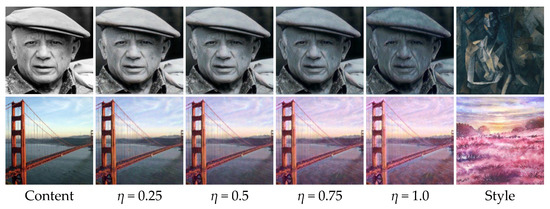

In the style transfer model, the gradient of each layer is calculated by backpropagation to update the model parameters. The AdaIN layer is used to align the feature distributions, and the reconstruction loss is added to the overall loss function. By using Equation (2), the mean and variance of the content feature maps are replaced by the mean and variance of the style feature maps to obtain the stylized feature maps. Then, the stylized feature maps are decoded back to the image space to achieve style transfer. As shown in Equation (8), the balance between content and style can also be controlled by setting a weight coefficient $\alpha$ between the content feature maps $f(c)$ and the stylized feature maps $t$ obtained in Equation (2).

$$I_{cs} = g\big((1 - \alpha)\, f(c) + \alpha\, t\big) \tag{8}$$

where $g$ is the decoder. As shown in Figure 6, a transition between content-similarity and style-similarity can be observed by changing $\alpha$ from 0 to 1.
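The content-style trade-off can be sketched as a linear interpolation in feature space before decoding. The parameter name `alpha` and the function names are hypothetical, the decoder is omitted, and the interpolation form follows the trade-off used in [10].

```python
import numpy as np

def adain(c, s, eps=1e-5):
    """AdaIN on (C, H, W) arrays, using the per-channel mean and standard deviation."""
    ax = (1, 2)
    c_mean, c_std = c.mean(axis=ax, keepdims=True), np.sqrt(c.var(axis=ax, keepdims=True) + eps)
    s_mean, s_std = s.mean(axis=ax, keepdims=True), np.sqrt(s.var(axis=ax, keepdims=True) + eps)
    return (c - c_mean) / c_std * s_std + s_mean

def blend_features(f_c, f_s, alpha):
    """Interpolate between content features (alpha = 0) and stylized features (alpha = 1)."""
    t = adain(f_c, f_s)
    return (1.0 - alpha) * f_c + alpha * t   # this result would be passed to the decoder

rng = np.random.default_rng(0)
f_c = rng.normal(0.0, 1.0, size=(4, 8, 8))
f_s = rng.normal(2.0, 3.0, size=(4, 8, 8))
blends = {alpha: blend_features(f_c, f_s, alpha) for alpha in (0.0, 0.5, 1.0)}
```

With `alpha = 0` the decoder would receive the unmodified content features, and with `alpha = 1` it would receive the fully stylized features.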

Figure 6. Content–style trade-off.

4. Experimental Results and Discussion

4.1. Implementation Details

The experiments are implemented in PyTorch using the PyCharm IDE. The graphics card is an NVIDIA GeForce RTX 2080 Ti with 12 GB of video memory, and the processor is a Core i7-9700K with 32 GB of RAM. Our network is trained using MS-COCO [19] as content images and a dataset of paintings, mostly collected from WikiArt [20], as style images, following the setting of [10]. Each dataset contains roughly 80,000 training examples. The Adam optimizer [21] is used with a batch size of eight content–style image pairs for 40,000 iterations, and the learning rate is set to $1 \times 10^{-4}$. During training, each image is rescaled so that its smaller dimension is 512 pixels while the aspect ratio is preserved, and is then randomly cropped to 256 × 256 pixels. Since our network is fully convolutional, it can be applied to images of any size during testing. Our encoder contains the first few layers of the VGG-19 model [15] pre-trained on the ImageNet dataset [22]. The decoder is symmetric to the encoder structure. The reluX-1 layers (X = 1, 2, 3) in the encoder are used to compute the perceptual loss. The content and style images reconstructed by the decoder, together with the corresponding input content and style images, are used to calculate the reconstruction loss. In Equation (7), the three weight coefficients are set to 1, 10, and 100, respectively, to balance the losses.
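The training-time preprocessing described above (rescale the smaller side to 512 pixels, then take a random 256 × 256 crop) can be sketched without any image library as follows. The nearest-neighbor resize is a dependency-free stand-in for whatever interpolation the original pipeline uses, and all function names are hypothetical.

```python
import numpy as np

def resized_shape(h, w, target=512):
    """Output size when the smaller side is scaled to `target`, preserving aspect ratio."""
    scale = target / min(h, w)
    return max(target, round(h * scale)), max(target, round(w * scale))

def resize_nearest(img, new_h, new_w):
    """Nearest-neighbor resize of an (H, W, C) array; a stand-in for real interpolation."""
    h, w = img.shape[:2]
    rows = np.minimum((np.arange(new_h) * h / new_h).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) * w / new_w).astype(int), w - 1)
    return img[rows][:, cols]

def random_crop(img, size, rng):
    """Random size x size crop of an (H, W, C) array."""
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)  # toy 480 x 640 image
new_h, new_w = resized_shape(*image.shape[:2])
resized = resize_nearest(image, new_h, new_w)
patch = random_crop(resized, 256, rng)
```

Each training pair would apply this procedure independently to the content image and to the style image.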

4.2. Results Analysis

4.2.1. Qualitative Evaluations

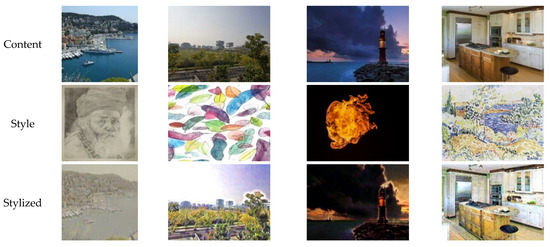

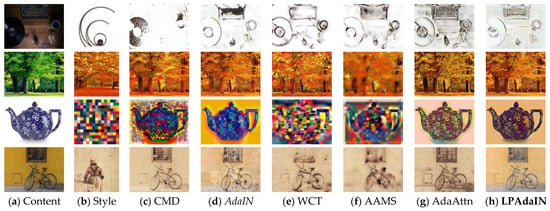

LPAdaIN combines the progressive application of the AdaIN layer with the attention mechanism and the improved loss function. The stylized results for various content/style pairs are shown in Figure 7. The stylized images have a good visual quality and look naturally smooth without artifacts. As an arbitrary style transfer model, LPAdaIN is compared with the optimization-based style transfer method CMD, the commonly used arbitrary style transfer models AdaIN and WCT, and the attention-based models AAMS and AdaAttn, as shown in Figure 8. In Figure 8c, obtained with CMD, the main objects in the stylized images in the first and third rows are difficult to identify, while the stylized images in the second and fourth rows maintain a better structure and the main objects can be identified. In Figure 8d, there are artifacts and structural distortions in the stylized images, and the main objects in the stylized image of the first row are unrecognizable. In the stylized images of Figure 8e, the texture strokes are too heavy, and the semantic information of the objects in the first and third rows is difficult to identify. In the stylized images of Figure 8f, the structure of the salient region is well maintained and the main objects can be identified, but the background area is blurred. In the stylized images of Figure 8g, the overall content structure remains good, but there are still minor artifacts. Overall, the stylized images in Figure 8c–g all suffer from the problem of rough texture. Figure 8h shows the stylized results of our model LPAdaIN. The texture is finely distributed, the main objects are clearly visible, the structure and the original semantic information are well maintained, and the visual effect is more reasonable.

Figure 7. Style transfer effects of the LPAdaIN model.

Figure 8. Style transfer effects comparisons of different models.

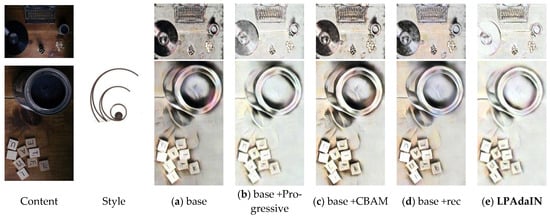

In order to verify the effectiveness of each module proposed in this paper, each module is separately added to the basic network for training. The style transfer model of the master branch and the image reconstruction model of the auxiliary branches are obtained. In Figure 9, the squares with letters in the content image are defined as the main objects. As shown in Figure 9a, in the style transfer model of the master branch obtained by using the basic lightweight network training, the stylized images still retain some style information of the content images, with artifacts and a rough texture, and the low visibility of the main objects. As shown in Figure 9b, progressively applying the AdaIN layer makes the stylization more fully refined and reduces the artifacts. However, the main objects become more blurred due to the destruction of the image structure by the AdaIN layer. As shown in Figure 9c, the attention mechanism is added to improve the structure of the salient region, the artifacts are reduced, and the main objects are clearer, but the stylization is insufficient. As shown in Figure 9d, adding reconstruction loss refines the stylized image structure. The visibility of the main objects is very high, but there is still the problem of insufficient stylization. When all the modules are used together, we can obtain the fine-texture transfer results of the structure preservation. As shown in Figure 9e, the style rendering of color and texture in the stylized image is fine and sufficient, the main objects are clearly visible, and the structure is well maintained.

Figure 9.

Style transfer effects of each module. “base” represents the basic lightweight network only using one AdaIN layer after the relu3-1 layer; “Progressive” represents the strategy of progressively applying the AdaIN layer; “CBAM” represents the attention module; “rec” represents the reconstruction loss. “LPAdaIN” represents a model that incorporates all modules presented in this article.

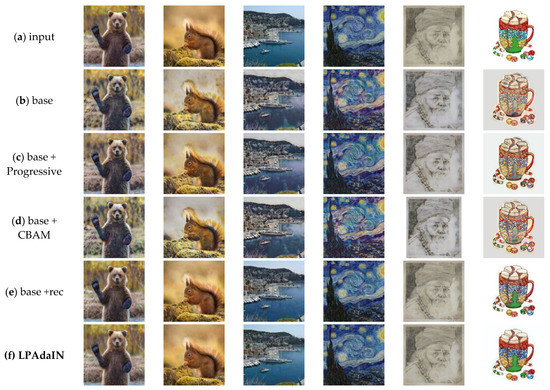
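The AdaIN operation that the "base" and "Progressive" variants rely on can be sketched as follows. This is a minimal illustration in plain Python, assuming the standard AdaIN definition (the per-channel mean and standard deviation of the content features are aligned to those of the style features). The function names, the `eps` constant, and the list-of-flattened-channels layout are assumptions made for this example and are not taken from the authors' implementation.

```python
import math

def channel_stats(values, eps=1e-5):
    """Return (mean, std) of one flattened feature channel."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    # eps keeps the division in adain_channel stable for flat channels.
    return mean, math.sqrt(var + eps)

def adain_channel(content, style, eps=1e-5):
    """Normalize one content channel, then rescale it with the style statistics."""
    c_mean, c_std = channel_stats(content, eps)
    s_mean, s_std = channel_stats(style, eps)
    return [s_std * (v - c_mean) / c_std + s_mean for v in content]

def adain(content_feat, style_feat, eps=1e-5):
    """Apply AdaIN channel by channel; each feature map is a list of flattened channels."""
    return [adain_channel(c, s, eps) for c, s in zip(content_feat, style_feat)]

# Toy feature maps with two channels of four values each (illustrative only).
content_feat = [[0.0, 1.0, 2.0, 3.0], [10.0, 10.0, 12.0, 12.0]]
style_feat = [[5.0, 7.0, 9.0, 11.0], [-1.0, 1.0, -1.0, 1.0]]
stylized = adain(content_feat, style_feat)
```

In this sketch the spatial arrangement of the content values is kept and only the channel statistics change. In a progressive scheme, such an operation would presumably be repeated on the features of several encoder stages rather than applied once.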

To further verify the effectiveness of the reconstruction loss, we compare the image reconstruction effects of the auxiliary branches' image reconstruction models, each trained with one module added separately, as shown in Figure 10. Figure 10a shows the input real images. As shown in Figure 10b–d, images reconstructed by the models trained without the reconstruction loss are relatively blurry with artifacts, and some areas differ in color from the input real images. As shown in Figure 10c,d, applying the progressive AdaIN layer and the CBAM module improves the image reconstruction quality. The blurring is reduced, but the color is still inconsistent with the input real images. With the image reconstruction models trained with the reconstruction loss, the reconstructed images are clearer and their color is closer to that of the input real images, as shown in Figure 10e,f. This shows that the reconstruction loss enhances the ability of the decoder to reconstruct images.

Figure 10.

Image reconstruction effects of each module. “base” represents the basic lightweight network only using one AdaIN layer after the relu3-1 layer; “Progressive” represents the strategy of progressively applying the AdaIN layer; “CBAM” represents the attention module; “rec” represents the reconstruction loss. “LPAdaIN” represents a model that incorporates all modules presented in this article.

4.2.2. Quantitative Evaluations

In this paper, the models are objectively evaluated and compared in terms of the number of parameters, structural similarity (SSIM), peak signal-to-noise ratio (PSNR) and stylization time.

The numbers of parameters of the proposed model and of the other style transfer models are shown in Table 1. The proposed LPAdaIN model is based on a lightweight autoencoder structure; therefore, the number of parameters is significantly reduced compared with other style transfer models. Adding a CBAM module slightly increases the number of parameters, while adding the progressive AdaIN layers and the reconstruction loss has no effect on the number of parameters.

Table 1.

Number of parameters of different models.
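The following rough count illustrates why a CBAM module adds only a small number of parameters, while AdaIN layers and a loss term add none. It is a sketch under stated assumptions: 256 input channels, a channel-attention reduction ratio of 16, a 7×7 spatial-attention kernel, and bias-free attention layers are the commonly used CBAM defaults and are not values reported in this paper. The helper names are hypothetical.

```python
def conv2d_params(in_ch, out_ch, kernel, bias=True):
    """Learnable parameters of one 2D convolution layer."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)

def cbam_params(channels, reduction=16, spatial_kernel=7):
    """Approximate parameter count of one CBAM block (assumed default settings)."""
    hidden = channels // reduction
    # Channel attention: shared two-layer MLP, written as two bias-free 1x1 convs.
    channel_att = (conv2d_params(channels, hidden, 1, bias=False)
                   + conv2d_params(hidden, channels, 1, bias=False))
    # Spatial attention: one conv over the stacked average- and max-pooled maps.
    spatial_att = conv2d_params(2, 1, spatial_kernel, bias=False)
    return channel_att + spatial_att

def adain_params():
    """AdaIN only aligns feature statistics, so it has no learnable weights."""
    return 0

cbam_extra = cbam_params(256)              # 8290 under the assumptions above
conv_reference = conv2d_params(256, 256, 3)  # 590080 for one 3x3 conv layer
print(cbam_extra, conv_reference, adain_params())
```

Under these assumptions the attention block costs less than 2% of a single 3×3 convolution with 256 input and output channels, which is consistent with the slight increase described above.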

The goal of style transfer is to apply the style of the style image to the content image to generate a stylized image that remains consistent with the content image in semantic structure. SSIM measures the similarity of two images, so it can be used to measure how well a stylized image preserves structure. The closer the SSIM value is to 1, the more similar the structures. For the CMD, AdaIN, WCT, AAMS and AdaAttn models, the models with each module proposed in this paper, and the LPAdaIN model, we calculate the average structural similarity between multiple stylized images and their corresponding content images. As shown in Table 2, each module proposed in this paper improves the SSIM. The LPAdaIN model has a significant SSIM improvement compared with other models. The CMD model is an optimization-based method, which is computationally expensive and achieves good transfer effects only when appropriate weights are set for different images. Therefore, the LPAdaIN model maintains a better stylized image structure with a low computational cost.

Table 2.

Structural similarity comparison between stylized images obtained by different style transfer models and content images.
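As an illustration of the metric, the sketch below computes a simplified, single-window SSIM between two grayscale images given as flat lists of pixel values, and averages it over several image pairs. It is an assumption-laden simplification: the usual SSIM is computed over local sliding windows and then averaged, and the windowing used for Table 2 is not stated here. The constants follow the common choice C1 = (0.01·L)² and C2 = (0.03·L)² for a dynamic range L. The function names are hypothetical.

```python
def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM of two equally sized grayscale images (flat lists)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Small constants that stabilize the ratio when means or variances are near zero.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def mean_ssim(pairs, data_range=255.0):
    """Average SSIM over (stylized, content) image pairs."""
    return sum(global_ssim(s, c, data_range) for s, c in pairs) / len(pairs)

content = [0.0, 64.0, 128.0, 255.0]
inverted = [255.0 - v for v in content]
print(global_ssim(content, content))   # identical images give the maximum score
print(global_ssim(content, inverted))  # inverted structure gives a much lower score
```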

PSNR is often used to measure signal reconstruction quality in image compression and other fields. The higher the value, the better the quality of the reconstructed image. To measure the effectiveness of the reconstruction loss, that is, whether it enhances the image reconstruction capability of the decoder, SSIM and PSNR between the images reconstructed by the auxiliary branches and the input images are calculated for the models trained with each module added separately and for the LPAdaIN model. As shown in Table 3, progressively applying the AdaIN layer and adding the CBAM module slightly improve SSIM and PSNR. SSIM and PSNR are greatly improved with the addition of the reconstruction loss. This shows that the reconstruction loss helps the decoder to reconstruct images with high quality.

Table 3.

Structural similarity and peak signal-to-noise ratio comparison between the input images and the images reconstructed by the LPAdaIN model and its ablated variants.
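For reference, PSNR can be computed from the mean squared error (MSE) between a reconstructed image and its input as 10·log10(MAX² / MSE), where MAX is the largest possible pixel value. The sketch below assumes 8-bit images (MAX = 255) stored as flat lists of pixel values; the function name and the toy data are illustrative only.

```python
import math

def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    n = len(reference)
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstructed)) / n
    if mse == 0:
        # Identical images: the ratio is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

reference = [10.0, 20.0, 30.0, 40.0]
reconstructed = [11.0, 19.0, 31.0, 39.0]  # every pixel is off by one, so MSE = 1
print(round(psnr(reference, reconstructed), 2))
```

Because the error appears in the denominator, halving the per-pixel error raises the PSNR by about 6 dB, which is why a decoder that reconstructs its input more faithfully scores higher.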

In terms of execution time, the above models are compared on images with different pixel sizes. As shown in Table 4, the proposed LPAdaIN model uses a lightweight autoencoder with fewer parameters and applies the AdaIN layer for style rendering, which reduces the execution time.

Table 4.

Comparison of style transfer time of different models (seconds).
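A timing comparison of this kind can be sketched with a small harness such as the one below. The `stylize` callable, the warm-up and repeat counts, and the placeholder `dummy_stylize` function are all assumptions for illustration; the measurement protocol behind Table 4 is not described in this section. When a model runs on a GPU, the device would also need to be synchronized before each clock reading.

```python
import time

def time_stylization(stylize, content, style, warmup=1, repeats=5):
    """Return the average wall-clock seconds per call of `stylize`."""
    for _ in range(warmup):
        stylize(content, style)  # warm-up calls are excluded from the measurement
    start = time.perf_counter()
    for _ in range(repeats):
        stylize(content, style)
    return (time.perf_counter() - start) / repeats

def dummy_stylize(content, style):
    """Placeholder standing in for a real style transfer model."""
    return [[c + s for c, s in zip(c_row, s_row)]
            for c_row, s_row in zip(content, style)]

# Measure the placeholder at two image sizes, mirroring a per-resolution comparison.
for size in (64, 128):
    image = [[0.5] * size for _ in range(size)]
    seconds = time_stylization(dummy_stylize, image, image)
    print(f"{size}x{size}: {seconds:.6f} s")
```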

5. Conclusions

In this paper, the LPAdaIN model is proposed to achieve style transfer. In the construction of the model structure, first, a lightweight autoencoder is used to reduce the information loss in the encoding process and alleviate the distortion of the stylized image structure. The number of parameters is reduced to improve the running speed. Second, AdaIN layers are progressively applied for multiple stylizations to make the color and texture distribution in the stylized image more complete and finer. Third, the attention mechanism CBAM is used to focus on significant channels and spatial regions during model training, which reduces the loss of important information and makes the significant objects in the stylized images clearly visible. In the optimization of the model, a per-pixel reconstruction loss, calculated in a supervised manner, is introduced. The reconstruction loss improves the decoder's ability to reconstruct the stylized image with more precise constraints, and thereby refines the stylized image structure. Experiments show that, compared with the other models, the proposed LPAdaIN model runs faster, the texture distribution in its stylized images is more natural and delicate, the main objects are more clearly visible, and the structure is better maintained.

Author Contributions

Conceptualization, Q.Z. and X.L.; methodology, Q.Z. and X.L.; validation, Q.Z., X.L., H.B. and J.S.; writing—original draft preparation, Q.Z.; writing—review and editing, X.L., J.S. and H.B.; data curation, C.C.; supervision, X.L.; funding acquisition, X.L. and H.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61801159 and 61571174.

Data Availability Statement

The dataset used in this research is publicly available at https://cocodataset.org/#download (accessed on 21 May 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Piscataway, NJ, USA, 27–30 June 2016; pp. 2414–2423.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Controlling perceptual factors in neural style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3985–3993.

- Kolkin, N.; Jason, S.; Gregory, S. Style transfer by relaxed optimal transport and self-similarity. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10051–10060.

- Xie, B.; Wang, N.; Fan, Y.W. Correlation alignment total variation model and algorithm for style transfer. J. Image Graph. 2020, 25, 0241–0254.

- Nikolai, K.; Jan, D.W.; Konrad, S. In the light of features distributions: Moment matching for neural style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 9382–9391.

- Luan, F.J.; Paris, S.; Shechtman, E.; Bala, K. Deep photo style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4990–4998.

- Kim, S.S.Y.; Kolkin, N.; Salavon, J.; Shakhnarovich, G. Deformable style transfer. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 246–261.

- Johnson, J.; Alahi, A.; Li, F.F. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 694–711.

- Dumoulin, V.; Shlens, J.; Kudlur, M. A learned representation for artistic style. arXiv 2017, arXiv:1610.07629.

- Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1501–1510.

- Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M.H. Universal style transfer via features transforms. In Proceedings of the Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 386–396.

- Yao, Y.; Ren, J.Q.; Xie, X.S.; Liu, W.; Liu, Y.J.; Wang, J. Attention-aware multi-stroke style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1467–1475.

- Liu, S.H.; Lin, T.W.; He, D.L.; Li, F.; Wang, M.L.; Li, X.; Sun, Z.X.; Li, Q.; Ding, E. Adaattn: Revisit attention mechanism in arbitrary neural style transfer. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6649–6658.

- Shen, Y.; Yang, Q.; Chen, X.P.; Fan, Y.B.; Zhang, H.G.; Wang, L. Structure refinement neural style transfer. J. Electron. Inf. Technol. 2021, 43, 2361–2369.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Peter, S.; Jakob, U.; Ashish, V. Self-attention with relative position representations. arXiv 2018, arXiv:1803.02155.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Nichol, K. Painter by Numbers, Wikiart. Available online: https://www.kaggle.com/c/painter-by-numbers/ (accessed on 20 November 2021).

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).