A Content-Aware Non-Local Means Method for Image Denoising

Abstract

1. Introduction

2. Effect of Image Content on the NLM Smoothing Parameter
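For reference, the standard NLM estimate of Buades et al. shows where the smoothing parameter $h$ enters. Here $v$ is the noisy image, $\mathcal{N}_i$ is the patch centred at pixel $i$, $\|\cdot\|_{2,a}^{2}$ is a Gaussian-weighted squared Euclidean distance with kernel width $a$, and the sums run over the search window. This is the notation of that original work, not necessarily the notation of this paper.

$$
\mathrm{NL}[v](i)=\sum_{j} w(i,j)\,v(j),\qquad
w(i,j)=\frac{1}{Z(i)}\exp\!\left(-\frac{\|v(\mathcal{N}_i)-v(\mathcal{N}_j)\|_{2,a}^{2}}{h^{2}}\right),\qquad
Z(i)=\sum_{j}\exp\!\left(-\frac{\|v(\mathcal{N}_i)-v(\mathcal{N}_j)\|_{2,a}^{2}}{h^{2}}\right).
$$

A small $h$ concentrates the weights on nearly identical patches (weak smoothing, residual noise), while a large $h$ flattens them (strong smoothing, blurred detail). This trade-off is why the best value of $h$ can depend on image content.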

3. Content-Aware Smoothing Parameter Selection via Hessian Matrix Analysis
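A minimal sketch of the per-pixel Hessian eigenvalue analysis named in this section's title, assuming central finite differences from `numpy.gradient` and no Gaussian pre-smoothing (multiscale methods such as Frangi et al.'s vesselness filter first smooth the image at a chosen scale). The function name `hessian_eigenvalues` is hypothetical, and the step that combines the eigenvalues with the Canny operator into an adaptive smoothing parameter is not reproduced, because the surviving text does not specify it.

```python
import numpy as np


def hessian_eigenvalues(img):
    """Per-pixel eigenvalues (lam1, lam2) of the 2x2 Hessian, ordered |lam1| >= |lam2|.

    Assumption: plain finite differences (numpy.gradient), no scale-space smoothing.
    """
    img = np.asarray(img, dtype=float)
    # First derivatives along rows (y, axis 0) and columns (x, axis 1).
    gy, gx = np.gradient(img)
    # Second derivatives: differentiate each first derivative again.
    hyy, hyx = np.gradient(gy)
    hxy, hxx = np.gradient(gx)
    # Symmetrize the mixed term; finite differences need not match exactly at borders.
    hxy = 0.5 * (hxy + hyx)
    # Closed-form eigenvalues of the symmetric matrix [[hxx, hxy], [hxy, hyy]].
    mean = 0.5 * (hxx + hyy)
    root = np.sqrt(0.25 * (hxx - hyy) ** 2 + hxy ** 2)
    a, b = mean + root, mean - root
    # Order by magnitude, the usual convention in Hessian-based structure filters.
    swap = np.abs(b) > np.abs(a)
    lam1 = np.where(swap, b, a)
    lam2 = np.where(swap, a, b)
    return lam1, lam2
```

Eigenvalues of large magnitude indicate strong local curvature (ridges, edges, texture), while two near-zero eigenvalues indicate a locally flat or linearly varying region; a content-aware rule could map these cases to different smoothing strengths.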

4. Representing Euclidean Distance of Patches in Terms of Statistical Features
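One standard way to express the squared Euclidean distance between two patches through statistical features is the identity below. It assumes the features are the patch mean, population variance and covariance; the surviving text does not give the paper's exact formulation. For patches $p$ and $q$ of $n$ pixels with means $\mu_p,\mu_q$, variances $\sigma_p^2,\sigma_q^2$ and covariance $\sigma_{pq}$, write $p_i-q_i=(\mu_p-\mu_q)+(p_i-\mu_p)-(q_i-\mu_q)$, square, and sum; the cross terms vanish because deviations from a mean sum to zero:

$$
\|p-q\|_2^2=\sum_{i=1}^{n}(p_i-q_i)^2=n\left[(\mu_p-\mu_q)^2+\sigma_p^2+\sigma_q^2-2\sigma_{pq}\right].
$$

Since $|\sigma_{pq}|\le\sigma_p\sigma_q$ (Cauchy–Schwarz), this gives a lower bound that depends only on means and standard deviations:

$$
\|p-q\|_2^2\ge n\left[(\mu_p-\mu_q)^2+(\sigma_p-\sigma_q)^2\right].
$$

Two patches that lie far apart in the (mean, standard deviation) plane therefore cannot be close in Euclidean distance, which is one reason a histogram over these two features can be used to preselect candidate patches.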

5. Search Strategy for Similar Patches Based on a 2D Histogram

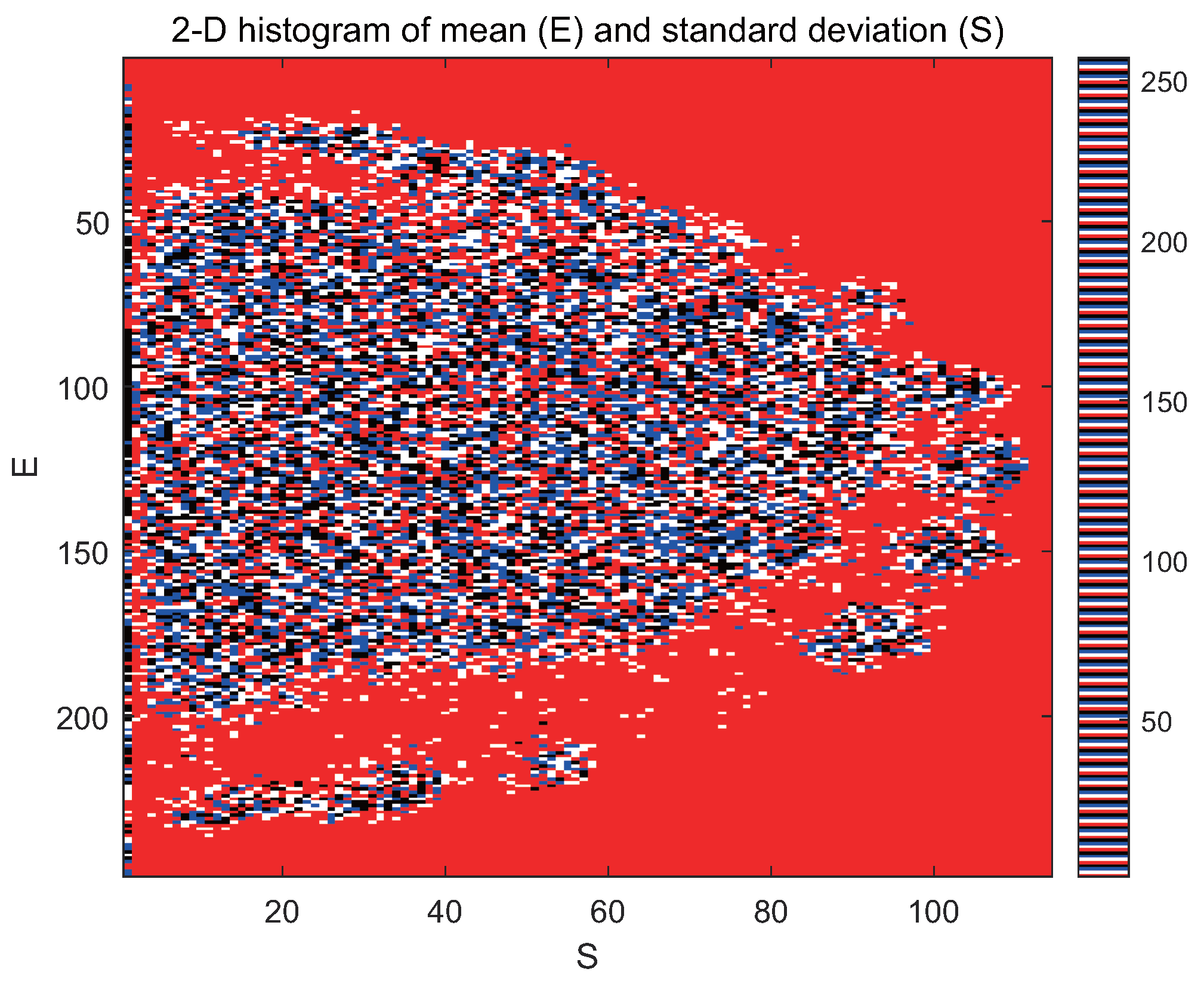

5.1. 2D Histogram to Represent Statistical Features

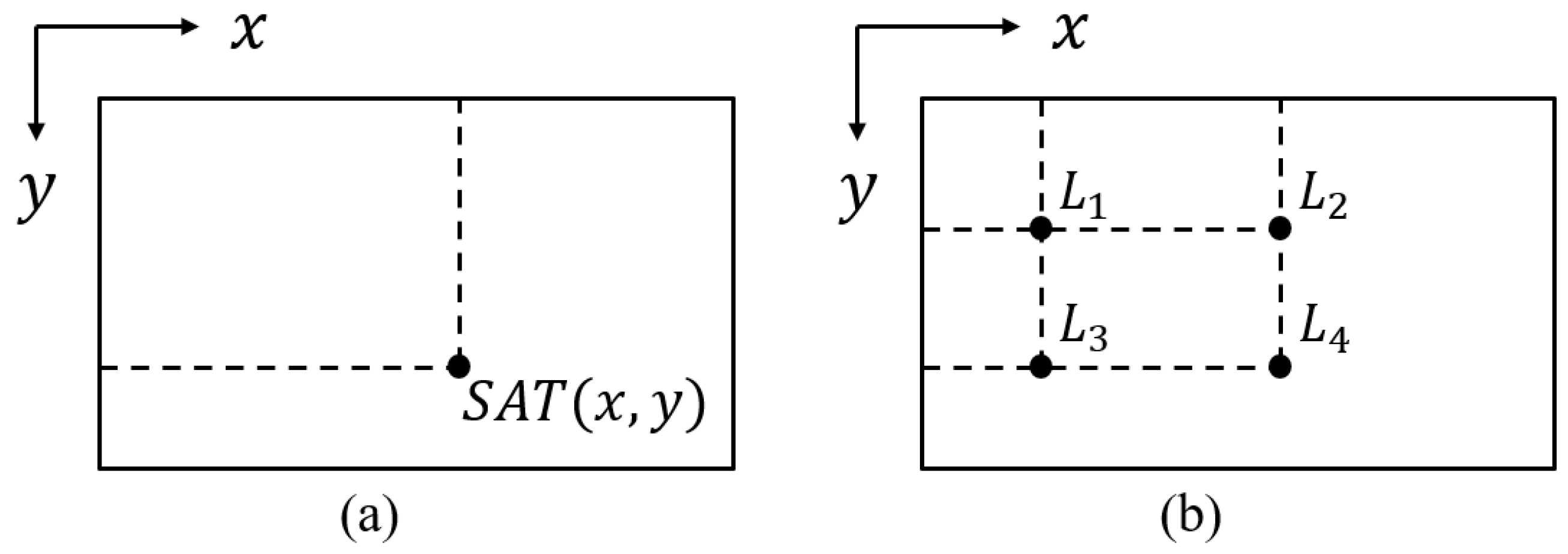
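A minimal sketch of a 2D histogram over patch statistics, assuming the two statistical features are the patch mean and standard deviation. The function name `patch_mean_std_histogram`, the default patch size and the bin count are illustrative choices, not values taken from the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def patch_mean_std_histogram(img, patch=7, bins=32):
    """Joint histogram of patch means and standard deviations (valid patch positions only)."""
    img = np.asarray(img, dtype=float)
    # Every patch x patch window: shape (H - patch + 1, W - patch + 1, patch, patch).
    windows = sliding_window_view(img, (patch, patch))
    means = windows.mean(axis=(-2, -1))
    stds = windows.std(axis=(-2, -1))
    # counts[i, j] = number of patches whose mean falls in bin i and whose std falls in bin j.
    counts, mean_edges, std_edges = np.histogram2d(means.ravel(), stds.ravel(), bins=bins)
    return counts, mean_edges, std_edges, means, stds
```

A patch's bin can be located with `np.digitize` on the returned edges; patches in the same or neighbouring bins then form a natural candidate set for the similarity search.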

5.2. Summed-Area Table for Fast Searching of Similar Patches
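A summed-area table (Crow, 1984) stores cumulative sums so that the total over any axis-aligned rectangle costs four lookups. The sketch below uses hypothetical helper names (`summed_area_table`, `rect_sum`, `count_in_window`) and assumes the table is built over the 2D histogram counts, so that the number of patches within a rectangular (mean, std) range around a reference bin is read in constant time. The half-width `k` stands in for the paper's threshold rule, which is not reproduced here.

```python
import numpy as np


def summed_area_table(a):
    """Return S with S[i, j] = a[:i, :j].sum(); S has one extra leading zero row and column."""
    a = np.asarray(a)
    s = np.zeros((a.shape[0] + 1, a.shape[1] + 1), dtype=np.result_type(a.dtype, np.int64))
    s[1:, 1:] = a.cumsum(axis=0).cumsum(axis=1)
    return s


def rect_sum(s, r0, r1, c0, c1):
    """Sum of a[r0:r1, c0:c1] by inclusion-exclusion on four corners of S."""
    return s[r1, c1] - s[r0, c1] - s[r1, c0] + s[r0, c0]


def count_in_window(s, i, j, k):
    """Sum of the underlying array within +/-k bins of bin (i, j), clipped at the borders."""
    rows, cols = s.shape[0] - 1, s.shape[1] - 1
    return rect_sum(s, max(0, i - k), min(rows, i + k + 1),
                    max(0, j - k), min(cols, j + k + 1))
```

Building the table is linear in the number of bins, and every subsequent window query is constant time, so repeated candidate counts for many reference patches stay cheap regardless of window size.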

5.3. Threshold for Searching Patches

- (1) Analyze the eigenvalues of the Hessian matrix at each pixel, then use the Canny operator to obtain an adaptive filtering parameter.

- (2) Represent the Euclidean distance between patches in terms of statistical features.

- (3) Build a 2D histogram from the statistical features.

- (4) Search for similar patches in the 2D histogram with a regular threshold, using a summed-area table.

- (5) Complete the denoising with a patchwise process such as NLM.
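The five steps above can be sketched end to end as follows. This is a minimal, brute-force illustration under stated assumptions, not the authors' implementation: the statistical features are assumed to be the patch mean and standard deviation, candidates are preselected with fixed thresholds `t_mean` and `t_std` (hypothetical parameters) checked directly rather than through the 2D histogram and summed-area table, the patch distance is unweighted, and `h` is a single fixed value instead of the Hessian/Canny-based adaptive parameter.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def nlm_with_preselection(noisy, h, patch=5, search=7, t_mean=None, t_std=None):
    """Pixelwise NLM whose candidates are first filtered by patch mean/std.

    patch and search should be odd. t_mean / t_std are hypothetical fixed
    thresholds; None disables that test.
    """
    img = np.asarray(noisy, dtype=float)
    r, s = patch // 2, search // 2
    # Reflect-pad so every pixel has a full patch; patches[y, x] is centred on (y, x).
    patches = sliding_window_view(np.pad(img, r, mode="reflect"), (patch, patch))
    means = patches.mean(axis=(-2, -1))
    stds = patches.std(axis=(-2, -1))
    H, W = img.shape
    out = np.empty_like(img)
    for y in range(H):
        for x in range(W):
            y0, y1 = max(0, y - s), min(H, y + s + 1)
            x0, x1 = max(0, x - s), min(W, x + s + 1)
            # Preselection: keep only candidates whose statistics are close to the reference.
            keep = np.ones((y1 - y0, x1 - x0), dtype=bool)
            if t_mean is not None:
                keep &= np.abs(means[y0:y1, x0:x1] - means[y, x]) <= t_mean
            if t_std is not None:
                keep &= np.abs(stds[y0:y1, x0:x1] - stds[y, x]) <= t_std
            cand = patches[y0:y1, x0:x1][keep]  # (k, patch, patch)
            vals = img[y0:y1, x0:x1][keep]      # centre pixels of the kept patches
            # Unweighted mean squared patch distance, then NLM exponential weights.
            d2 = ((cand - patches[y, x]) ** 2).mean(axis=(-2, -1))
            w = np.exp(-d2 / h ** 2)
            # The reference patch always survives (distance 0), so w.sum() >= 1.
            out[y, x] = np.dot(w, vals) / w.sum()
    return out
```

Filtering before computing distances is where the savings come from: patches rejected by the cheap statistics test never enter the full patch comparison. Because the reference patch always passes its own test, the normalizing sum is never zero.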

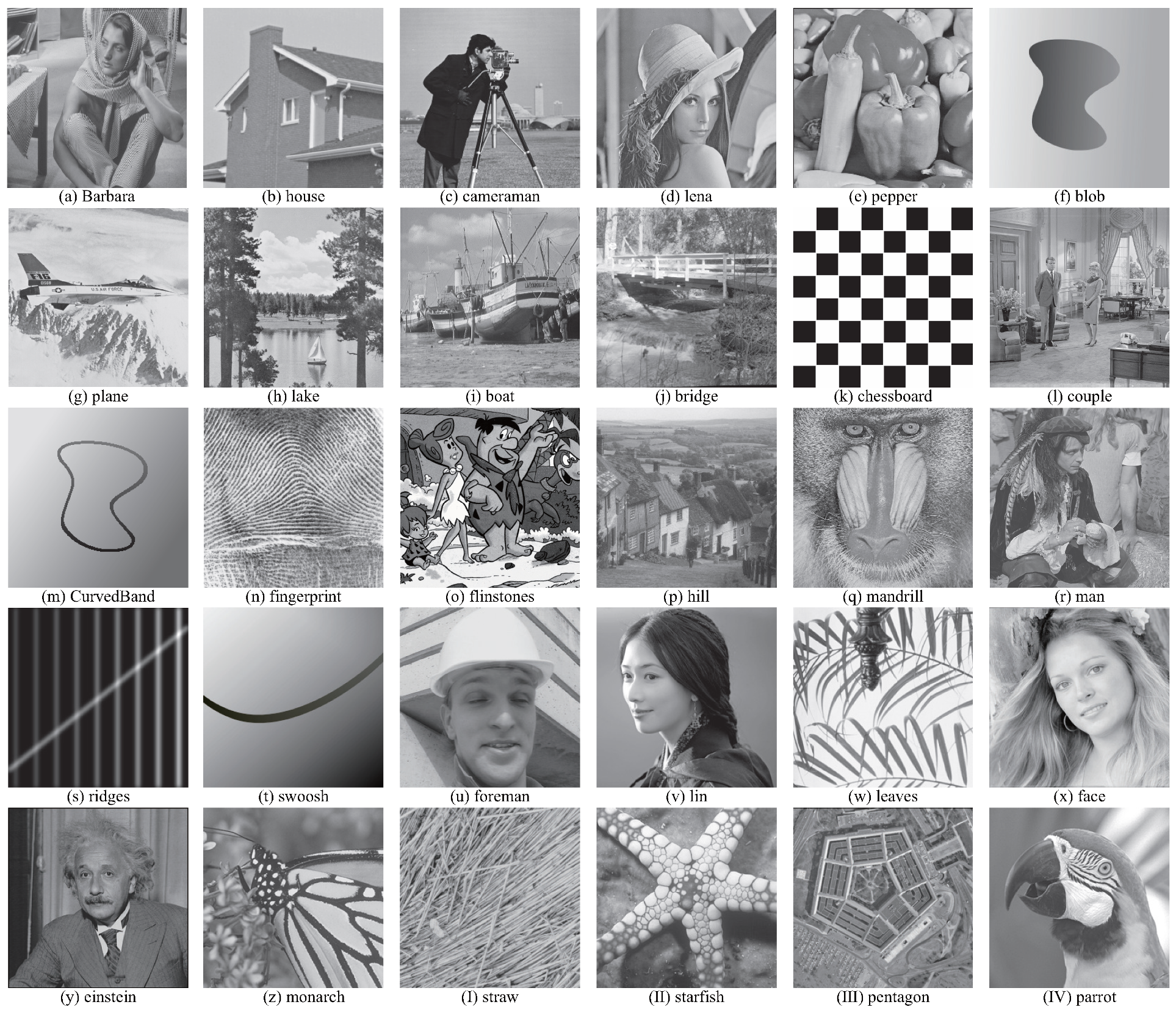

6. Experimental Results

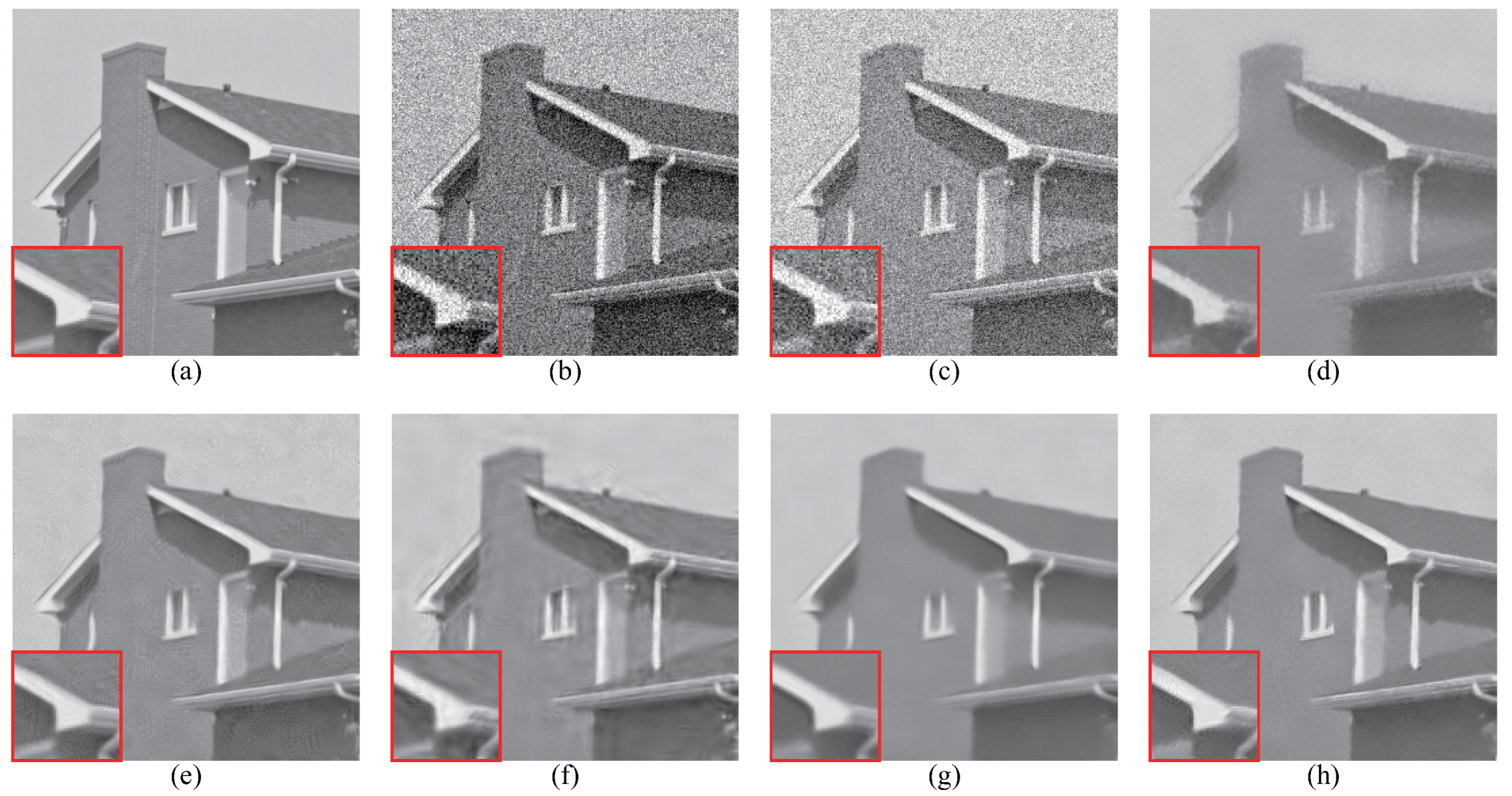

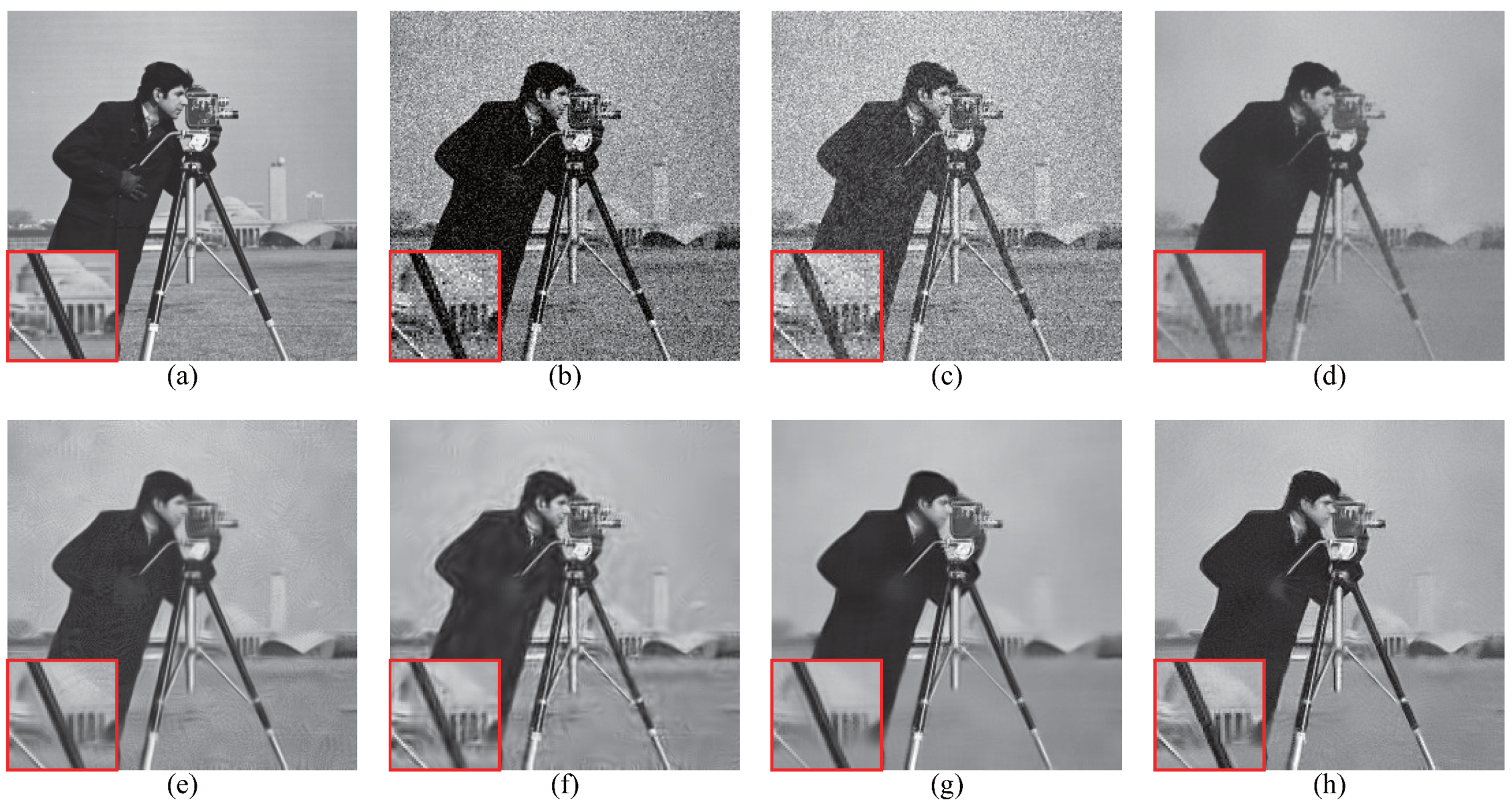

6.1. Image with Smooth Regions

6.2. Image with Complex Regions

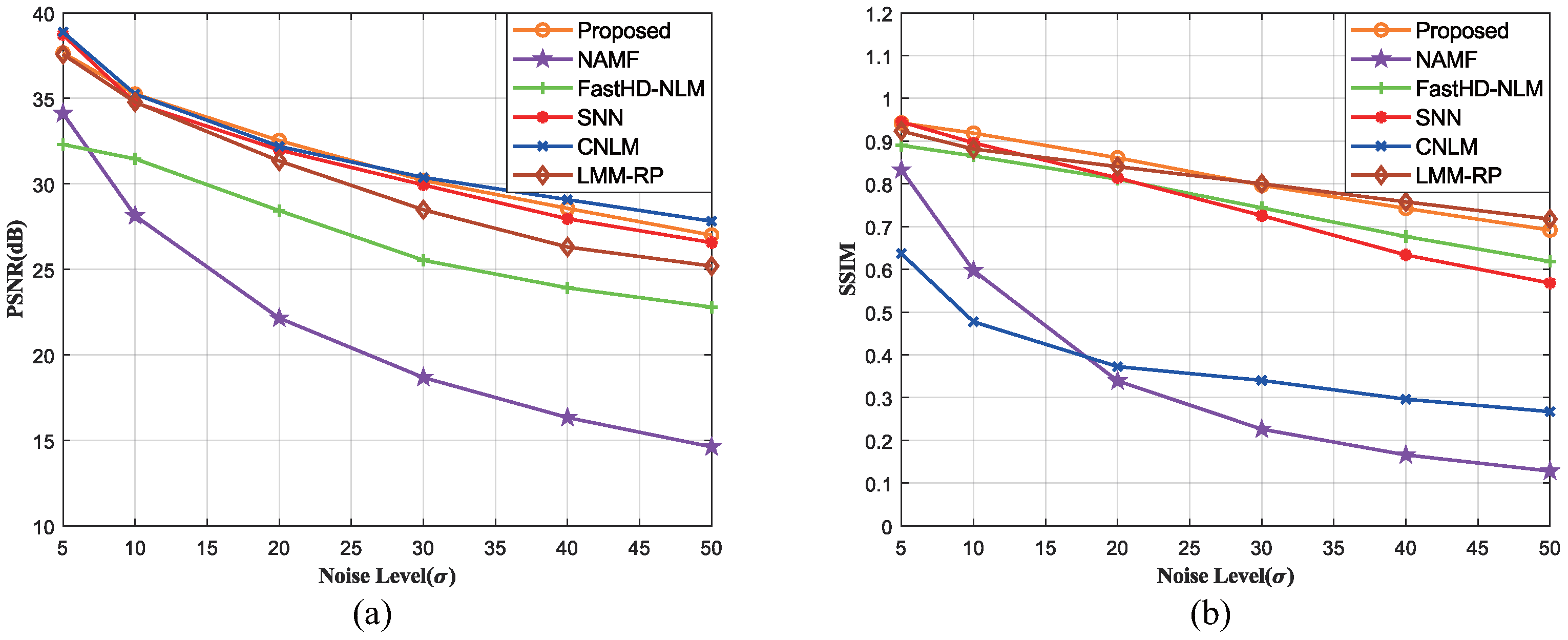

6.3. Overall Comparison

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Buades, A.; Coll, B.; Morel, J. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 60–65.

- Alkinani, M.H.; El-Sakka, M.R. Patch-based models and algorithms for image denoising: A comparative review between patch-based images denoising methods for additive noise reduction. EURASIP J. Image Video Process. 2017, 2017, 58.

- Buades, A.; Coll, B.; Morel, J. A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 2005, 4, 490–530.

- Deledalle, C.; Duval, V.; Salmon, J. Non-local methods with shape-adaptive patches (NLM-SAP). J. Math. Imaging Vis. 2012, 43, 103–120.

- Yin, R.; Gao, T.; Lu, Y.M.; Daubechies, I. A tale of two bases: Local-nonlocal regularization on image patches with convolution framelets. SIAM J. Imaging Sci. 2017, 10, 711–750.

- Guo, Q.; Zhang, C.; Zhang, Y.; Liu, H. An efficient SVD-based method for image denoising. IEEE Trans. Circ. Syst. Video 2015, 26, 868–880.

- Yousif, O.; Ban, Y. Improving urban change detection from multitemporal SAR images using PCA-NLM. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2032–2041.

- Cai, S.; Kang, Z.; Yang, M.; Xiong, X.; Peng, C.; Xiao, M. Image denoising via improved dictionary learning with global structure and local similarity preservations. Symmetry 2018, 10, 167.

- Cai, S.; Liu, K.; Yang, M.; Tang, J.; Xiong, X.; Xiao, M. A new development of non-local image denoising using fixed-point iteration for non-convex ℓp sparse optimization. PLoS ONE 2018, 13, e208503.

- Mahmoudi, M.; Sapiro, G. Fast image and video denoising via nonlocal means of similar neighborhoods. IEEE Signal Proc. Lett. 2005, 12, 839–842.

- Tristán-Vega, A.; García-Pérez, V.; Aja-Fernández, S.; Westin, C. Efficient and robust nonlocal means denoising of MR data based on salient features matching. Comput. Methods Programs Biomed. 2012, 105, 131–144.

- Duval, V.; Aujol, J.; Gousseau, Y. A bias-variance approach for the nonlocal means. SIAM J. Imaging Sci. 2011, 4, 760–788.

- Dauwe, A.; Goossens, B.; Luong, H.Q.; Philips, W. A fast non-local image denoising algorithm. In Proceedings of the Image Processing: Algorithms and Systems VI, San Jose, CA, USA, 28–29 January 2008; pp. 324–331.

- Brox, T.; Kleinschmidt, O.; Cremers, D. Efficient nonlocal means for denoising of textural patterns. IEEE Trans. Image Process. 2008, 17, 1083–1092.

- Zeng, W.; Du, Y.; Hu, C. Noise suppression by discontinuity indicator controlled non-local means method. Multimed. Tools Appl. 2017, 76, 13239–13253.

- Verma, R.; Pandey, R. Grey relational analysis based adaptive smoothing parameter for non-local means image denoising. Multimed. Tools Appl. 2018, 77, 25919–25940.

- Panigrahi, S.K.; Gupta, S.; Sahu, P.K. Curvelet-based multiscale denoising using non-local means & guided image filter. IET Image Process. 2018, 12, 909–918.

- Frangi, A.F.; Niessen, W.J.; Vincken, K.L.; Viergever, M.A. Multiscale vessel enhancement filtering. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Cambridge, MA, USA, 11–13 October 1998; pp. 130–137.

- Lindeberg, T. Scale-Space Theory in Computer Vision; Springer Science & Business Media: Berlin, Germany, 2013; Volume 256.

- Starck, J.; Elad, M.; Donoho, D.L. Image decomposition: Separation of texture from piecewise smooth content. In Wavelets: Applications in Signal and Image Processing X; SPIE: Bellingham, WA, USA, 2003; pp. 571–582.

- Deledalle, C.; Denis, L.; Tupin, F. How to compare noisy patches? Patch similarity beyond Gaussian noise. Int. J. Comput. Vis. 2012, 99, 86–102.

- Crow, F.C. Summed-area tables for texture mapping. In Proceedings of the 11th Annual Conference on Computer Graphics and Interactive Techniques, Minneapolis, MN, USA, 23–27 July 1984; pp. 207–212.

- Zhang, H.; Zhu, Y.; Zheng, H. NAMF: A nonlocal adaptive mean filter for removal of salt-and-pepper noise. Math. Probl. Eng. 2021, 2021, 4127679.

- Nair, P.; Chaudhury, K.N. Fast high-dimensional bilateral and nonlocal means filtering. IEEE Trans. Image Process. 2018, 28, 1470–1481.

- Frosio, I.; Kautz, J. Statistical nearest neighbors for image denoising. IEEE Trans. Image Process. 2018, 28, 723–738.

- Yamanappa, W.; Sudeep, P.V.; Sabu, M.K.; Rajan, J. Non-local means image denoising using Shapiro-Wilk similarity measure. IEEE Access 2018, 6, 66914–66922.

- Nguyen, M.P.; Chun, S.Y. Bounded self-weights estimation method for non-local means image denoising using minimax estimators. IEEE Trans. Image Process. 2017, 26, 1637–1649.

- Weber, A.G. The USC-SIPI Image Database: Version 5. 2006. Available online: http://sipi.usc.edu/database/ (accessed on 1 July 2022).

| Images | Std. Dev. | FastHD-NLM | SNN | CNLM | LMM-RP | NAMF | Proposed |
|---|---|---|---|---|---|---|---|
| Barbara | 5 | 25.63/0.90 | 37.83/0.96 | 38.28/0.82 | 37.49/0.95 | 34.13/0.88 | 35.92/0.95 |
| | 10 | 26.40/0.87 | 33.09/0.92 | 34.55/0.74 | 33.79/0.92 | 28.12/0.70 | 33.88/0.93 |
| | 20 | 24.83/0.80 | 30.60/0.85 | 30.67/0.65 | 29.03/0.83 | 22.13/0.46 | 30.68/0.87 |
| | 30 | 23.55/0.73 | 28.53/0.77 | 28.54/0.58 | 26.36/0.76 | 18.72/0.33 | 28.69/0.79 |
| | 40 | 22.47/0.67 | 26.79/0.69 | 26.76/0.50 | 24.73/0.69 | 16.38/0.24 | 26.62/0.72 |
| | 50 | 21.88/0.63 | 25.28/0.61 | 25.19/0.45 | 23.75/0.65 | 14.65/0.19 | 25.31/0.65 |
| Pepper | 5 | 28.92/0.89 | 37.45/0.95 | 37.30/0.83 | 36.79/0.94 | 29.82/0.87 | 34.34/0.94 |
| | 10 | 29.17/0.87 | 31.94/0.91 | 33.75/0.75 | 32.99/0.90 | 26.79/0.69 | 32.61/0.91 |
| | 20 | 26.02/0.81 | 29.15/0.83 | 30.10/0.67 | 28.76/0.83 | 21.98/0.44 | 29.82/0.85 |
| | 30 | 22.89/0.72 | 27.45/0.76 | 27.87/0.60 | 26.08/0.78 | 18.75/0.32 | 27.91/0.79 |
| | 40 | 21.05/0.65 | 25.97/0.70 | 26.28/0.55 | 24.19/0.73 | 16.58/0.24 | 26.28/0.73 |
| | 50 | 19.78/0.59 | 24.54/0.64 | 24.71/0.49 | 22.86/0.69 | 15.04/0.19 | 25.05/0.68 |
| Lena | 5 | 31.79/0.95 | 37.84/0.94 | 37.68/0.94 | 37.58/0.93 | 34.02/0.84 | 36.63/0.93 |
| | 10 | 31.32/0.92 | 34.00/0.89 | 34.54/0.89 | 34.29/0.89 | 28.09/0.61 | 34.63/0.90 |
| | 20 | 28.59/0.86 | 31.48/0.81 | 30.75/0.78 | 30.43/0.83 | 22.18/0.34 | 31.61/0.84 |
| | 30 | 26.30/0.80 | 29.52/0.73 | 28.38/0.67 | 28.30/0.78 | 18.87/0.22 | 29.61/0.77 |
| | 40 | 24.58/0.74 | 27.96/0.66 | 26.56/0.56 | 26.95/0.75 | 16.63/0.16 | 28.13/0.76 |
| | 50 | 23.42/0.70 | 26.59/0.61 | 25.99/0.49 | 26.01/0.73 | 15.08/0.12 | 27.06/0.75 |
| Plane | 5 | 31.27/0.91 | 38.37/0.95 | 39.33/0.96 | 35.70/0.94 | 33.08/0.90 | 37.79/0.96 |
| | 10 | 31.92/0.90 | 33.08/0.90 | 35.66/0.93 | 33.94/0.91 | 30.49/0.85 | 34.00/0.92 |
| | 20 | 28.96/0.85 | 30.44/0.82 | 32.09/0.89 | 31.07/0.85 | 27.45/0.83 | 29.90/0.88 |
| | 30 | 26.16/0.81 | 28.70/0.73 | 30.01/0.85 | 29.04/0.80 | 24.67/0.77 | 30.35/0.86 |
| | 40 | 24.17/0.77 | 27.17/0.64 | 28.55/0.76 | 27.51/0.77 | 22.45/0.73 | 28.75/0.82 |
| | 50 | 22.89/0.74 | 25.80/0.56 | 27.63/0.72 | 26.34/0.74 | 19.85/0.48 | 27.68/0.80 |
| Lake | 5 | 29.03/0.84 | 36.04/0.93 | 36.68/0.94 | 35.76/0.92 | 32.55/0.91 | 32.39/0.91 |
| | 10 | 29.17/0.82 | 30.28/0.85 | 32.94/0.86 | 31.75/0.85 | 30.11/0.85 | 31.14/0.88 |
| | 20 | 26.54/0.75 | 28.26/0.77 | 29.76/0.80 | 27.60/0.76 | 25.45/0.74 | 28.81/0.82 |
| | 30 | 24.41/0.70 | 26.91/0.70 | 27.91/0.72 | 25.17/0.69 | 22.65/0.68 | 27.99/0.78 |
| | 40 | 22.74/0.65 | 25.63/0.62 | 26.67/0.69 | 23.81/0.64 | 18.65/0.52 | 26.68/0.67 |
| | 50 | 21.54/0.61 | 24.46/0.55 | 25.35/0.70 | 22.92/0.61 | 15.42/0.42 | 25.60/0.61 |
| Flinstones | 5 | 25.42/0.83 | 35.52/0.94 | 35.84/0.95 | 35.15/0.93 | 34.16/0.92 | 31.32/0.91 |
| | 10 | 26.72/0.84 | 29.89/0.88 | 31.77/0.89 | 31.41/0.88 | 30.11/0.88 | 30.19/0.89 |
| | 20 | 25.02/0.79 | 27.10/0.83 | 28.31/0.82 | 27.74/0.83 | 26.89/0.81 | 28.35/0.85 |
| | 30 | 22.29/0.72 | 25.98/0.78 | 26.32/0.80 | 24.81/0.76 | 22.24/0.69 | 26.46/0.80 |
| | 40 | 20.13/0.65 | 24.80/0.72 | 24.77/0.74 | 22.46/0.70 | 18.12/0.42 | 24.81/0.75 |
| | 50 | 18.58/0.58 | 22.70/0.67 | 23.38/0.70 | 20.67/0.63 | 15.65/0.26 | 23.53/0.69 |
| Hill | 5 | 28.21/0.79 | 35.35/0.93 | 35.76/0.91 | 35.52/0.94 | 34.57/0.93 | 31.85/0.95 |
| | 10 | 27.44/0.74 | 29.15/0.81 | 31.47/0.87 | 30.46/0.82 | 30.12/0.80 | 30.07/0.84 |
| | 20 | 24.78/0.62 | 27.46/0.73 | 28.06/0.73 | 25.99/0.63 | 25.42/0.68 | 28.52/0.76 |
| | 30 | 23.05/0.53 | 26.81/0.65 | 26.41/0.68 | 24.06/0.54 | 22.15/0.42 | 26.87/0.64 |
| | 40 | 22.07/0.47 | 24.88/0.57 | 25.41/0.57 | 23.10/0.49 | 17.87/0.36 | 25.54/0.62 |
| | 50 | 21.51/0.44 | 23.71/0.51 | 24.67/0.52 | 22.58/0.46 | 15.55/0.30 | 24.80/0.57 |
| Monach | 5 | 28.31/0.92 | 36.30/0.96 | 37.54/0.97 | 36.95/0.97 | 34.12/0.90 | 32.68/0.96 |
| | 10 | 29.03/0.91 | 31.84/0.90 | 31.37/0.94 | 32.69/0.94 | 28.14/0.74 | 31.31/0.94 |
| | 20 | 26.41/0.85 | 27.35/0.78 | 27.36/0.89 | 28.46/0.88 | 22.16/0.52 | 28.81/0.88 |
| | 30 | 23.59/0.79 | 24.70/0.67 | 26.23/0.85 | 25.96/0.82 | 18.74/0.39 | 27.05/0.82 |
| | 40 | 21.04/0.71 | 22.84/0.58 | 25.70/0.70 | 24.31/0.77 | 16.47/0.32 | 25.63/0.77 |
| | 50 | 19.21/0.64 | 21.25/0.50 | 24.46/0.67 | 22.52/0.70 | 14.71/0.26 | 24.63/0.71 |

| Method / Standard Deviation | 5 | 10 | 20 | 30 | 40 | 50 |
|---|---|---|---|---|---|---|
| Proposed | 34.91/0.96 | 33.17/0.93 | 30.26/0.87 | 28.20/0.80 | 26.68/0.75 | 25.44/0.69 |
| NAMF | 34.39/0.89 | 28.41/0.74 | 22.49/0.53 | 19.08/0.41 | 16.74/0.33 | 15.00/0.28 |
| FastHD-NLM | 31.29/0.91 | 31.09/0.88 | 28.20/0.79 | 25.71/0.72 | 24.04/0.65 | 22.98/0.61 |
| SNN | 37.17/0.96 | 32.54/0.90 | 28.09/0.77 | 25.51/0.66 | 23.68/0.57 | 22.24/0.50 |
| CNLM | 38.12/0.89 | 33.45/0.86 | 29.58/0.82 | 27.41/0.78 | 25.80/0.74 | 24.70/0.69 |
| LMM-RP | 38.33/0.97 | 34.21/0.93 | 29.82/0.85 | 27.29/0.78 | 25.64/0.73 | 24.44/0.68 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fang, S.; Wu, J.; Wu, S. A Content-Aware Non-Local Means Method for Image Denoising. Electronics 2022, 11, 2898. https://doi.org/10.3390/electronics11182898
