Abstract

Remote sensing images of ground objects are difficult to annotate, and building a hyperspectral dataset requires substantial resources. To address these problems, this paper proposes a method with low requirements on dataset scale that corrects the inter-class differences of hyperspectral images and eliminates redundant information in spatial–spectral features. First, the algorithm introduces the spatial information of hyperspectral images into the classification task through superpixels defined by entropy rate segmentation, which not only reduces spatial redundancy but also uses the similarity of spatial neighbors to increase the differences between classes and reduce the differences within classes. Second, guided by the observation that objects of the same class show similar spectral fluctuation trends, a feature extraction method based on the fast Fourier transform is proposed to alleviate spectral feature redundancy and further reduce intra-class differences. Finally, to verify the improvement over a traditional classification method, the idea was applied to the traditional SVM algorithm, and experiments were carried out on the PaviaU and Indian Pines hyperspectral datasets. The results show that the proposed ERS–FFT–SVM algorithm achieves significantly higher classification accuracy than the traditional classification algorithms and can perform small-sample classification of hyperspectral images.

1. Introduction

Hyperspectral images (HSIs) contain both spatial information of ground objects and rich spectral information, which provides reliable spectral fingerprint features for ground object classification [1,2,3]. HSIs have broad application prospects in many fields, such as crop growth assessment [4,5], mineral identification and mapping [6], and meteorological monitoring and early warning systems [7]. However, due to differences in reflection, the internal noise of the detector, atmospheric correction errors, and other factors, the spectral characteristics of objects of the same class can differ, i.e., HSIs exhibit the phenomenon of “same object and different spectrum” [8]. In addition, HSIs have high-dimensional spectral features in which adjacent spectral bands carry similar information, so they are prone to spectral redundancy, the curse of dimensionality, and the “Hughes phenomenon” [9]. These intra-class differences and the redundancy of spectral features greatly increase the difficulty of classification. To achieve high-precision ground object classification, large-scale labeled datasets are often required. Although the development of hyperspectral remote sensing technology has made large numbers of HSIs easy to obtain, accurate image annotation incurs great labor and time costs [10]. Therefore, ensuring high-precision classification of HSIs with small samples has become a research hotspot.

The spectral fingerprint features contained in HSIs can serve as the main basis for classifying ground objects. Classical HSI classification algorithms use only spectral information. Among them, the K-means clustering algorithm (K-means) is one of the simplest, considering only the Euclidean distance between training samples and test samples [11]. K-nearest neighbor classification (KNN) does not require a trained model: each new sample is compared with the training dataset, and its K nearest neighbors determine the category of the unknown pixel [12]. A support vector machine (SVM) does not need a large number of training samples; it can achieve good generalization and high classification accuracy from a small number of them, which helps to address small-sample learning in classification [13,14]. However, because these algorithms consider only the spectral information in the image and ignore the spatial relationships between pixels, the spatial information is not fully utilized, and the classification results are not ideal, especially in the case of small samples.

With the development of hyperspectral imaging technology, the spatial resolution of HSIs is increasing, which provides accurate ground feature information for classification tasks. Methods that combine spectral and spatial information for HSI classification are therefore receiving extensive attention: spatial features are introduced alongside spectral features to make more comprehensive use of the information in HSIs [15]. Compared with classification based on spectral features alone, the accuracy of such joint-feature methods is significantly improved. Past studies [16,17] introduced deep learning and proposed spatial–spectral feature extraction based on a deep belief network (DBN) for image classification, but such a large network involves complex parameter selection, and inappropriate parameters seriously affect classification accuracy and computational complexity. Other researchers [18,19] proposed HSI classification methods that combine spatial–spectral information with sparse representation. After the dimensionality of the original image is reduced, the local pixels on the principal component map are reorganized and sorted, and the sparse representation of the test sample in the spatial–spectral feature kernel is obtained by supervised learning. Spatial–spectral feature extraction in this approach is relatively simple, and the sparse representation reduces information redundancy to a certain extent. However, because the algorithm applies no special coding to image edge pixels, edge pixels can be misclassified, which sometimes leads to unsatisfactory results. Although the above spatial–spectral joint classification methods use the spatial and spectral features of HSIs simultaneously, the feature information remains substantially redundant, leading to high computational complexity and long processing times.

To address the shortcomings of existing algorithms and the low classification accuracy caused by intra-class differences and the curse of dimensionality in HSIs, this paper proposes an efficient ground object classification method based on inter-class difference correction and spatial–spectral redundancy elimination (ERS–FFT). Its main innovations are as follows:

(1) This paper makes full use of the spatial information of HSIs and defines superpixels using the entropy rate superpixel segmentation (ERS) algorithm. Under the prior assumption that the samples contained in a superpixel belong to the same category, the intra-class differences of the spectral features within the superpixel are corrected, and the spatial redundancy is reduced to improve the performance of the classification algorithm.

(2) Guided by the observation that the spectral features of targets of the same category tend to fluctuate in the same way, this paper proposes a feature extraction method based on the fast Fourier transform. After the Fourier transformation of the spectral features, the low-frequency part represents the overall slow-changing trend of the spectral curve, and the high-frequency part represents its fast-changing trend. Extracting the high- and low-frequency information to represent the fluctuation trend of the spectral features further reduces the differences within classes.

The core objective of classification with small samples is to obtain the best solution from the available information. SVM performs classification by finding a separating hyperplane that keeps the training samples of different classes apart and as far as possible from the hyperplane. For linearly inseparable problems, SVM uses kernel methods to transform a problem that is linearly inseparable in a low-dimensional space into one that is linearly separable in a high-dimensional space, which makes it well suited to hyperspectral datasets. The KNN classification algorithm is suitable for sample sets with many overlapping features and is easy to use without complicated parameter settings, but it is prone to misclassification when the classes are imbalanced. For example, when one class has many samples and another has few, an unknown sample may be assigned to the large class simply because most of its K nearest neighbors come from that class. The SVM algorithm is therefore better suited to the problem addressed in this paper, and the proposed method is combined with SVM.
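The imbalance effect described for KNN can be reproduced on a toy example. The following is a minimal sketch using hypothetical two-dimensional data (not taken from the experiments): a query point sits inside a tight cluster of three samples from a small class, surrounded at a distance by twelve samples from a large class. The function name `knn_predict` and all data values are assumptions made for illustration.

```python
import numpy as np

def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    dists = np.linalg.norm(train_x - query, axis=1)
    nearest = np.argsort(dists, kind="stable")[:k]
    votes = np.bincount(train_y[nearest])
    return int(np.argmax(votes))

# Hypothetical toy data: class 1 is a tight cluster of 3 samples,
# class 0 is a ring of 12 samples at distance ~1 around that cluster.
angles = np.linspace(0.0, 2.0 * np.pi, 12, endpoint=False)
large_class = np.column_stack([np.cos(angles), np.sin(angles)])
small_class = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1]])

train_x = np.vstack([large_class, small_class])
train_y = np.array([0] * 12 + [1] * 3)
query = np.array([0.05, 0.05])  # lies inside the small cluster

pred_k3 = knn_predict(train_x, train_y, query, k=3)  # only the small cluster votes
pred_k9 = knn_predict(train_x, train_y, query, k=9)  # 3 votes for class 1, 6 for class 0
```

With K = 3 the query is assigned to the small class, whereas with K = 9 the six additional neighbors from the large class outvote it, even though the query lies inside the small cluster.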

2. Proposed Algorithm

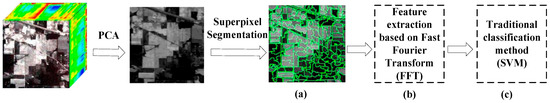

In this paper, an efficient HSI classification framework based on inter-class difference correction and spatial–spectral redundancy elimination is proposed, as shown in Figure 1. It consists of spectral feature correction based on entropy rate superpixel segmentation, spectral curve fluctuation feature extraction, and a classical classifier (SVM). Entropy rate superpixel segmentation defines the superpixel blocks using the spatial features of the HSI, and the spectral feature correction module uses a median filter to obtain the overall spectral feature of each superpixel. The spectral curve fluctuation features are the high-frequency and low-frequency values of the fast Fourier transform. The classical classifier then produces the final classification.

Figure 1. Flowchart of the overall algorithm. (a) The result of superpixel segmentation. (b) FFT. (c) SVM.

2.1. Inter-Class Difference Correction Based on Entropy Rate Superpixel Segmentation

Superpixel segmentation groups similar pixels into the same superpixel block. Each superpixel block carries boundary information, and adjacent superpixel blocks form a seamless partition of the image [20]. In HSIs, objects that are adjacent in space are highly likely to belong to the same class. Therefore, our method applies entropy rate superpixel segmentation to HSIs [21,22]. Using the boundary information of the superpixel blocks, the pixels within each block are treated as belonging to the same object. The subsequent classification algorithm then needs to classify only the superpixel blocks, which reduces the spatial redundancy in HSI classification and improves efficiency.

The input HSI can be expressed as $X \in \mathbb{R}^{m \times n \times l}$, where m and n are the spatial dimensions of the HSI and l is the number of spectral bands. The spectral characteristics of X are analyzed by principal component analysis, and the first principal component image $X_{pca} \in \mathbb{R}^{m \times n}$ is obtained. Entropy rate superpixel segmentation is then applied to $X_{pca}$. This algorithm introduces the definition of entropy from information theory into image segmentation and partitions the image into superpixels by optimizing an objective function composed of an entropy rate function and a balance function.
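The projection onto the first principal component can be sketched with plain NumPy. This is a minimal illustration under the assumption that PCA is computed from the band covariance matrix of all pixels; the function name `first_principal_component` and the random test cube are hypothetical and not part of the original method description.

```python
import numpy as np

def first_principal_component(cube):
    """Project an (m, n, l) hyperspectral cube onto its first principal component."""
    m, n, l = cube.shape
    pixels = cube.reshape(-1, l).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    # Eigen-decomposition of the l x l band covariance matrix.
    cov = centered.T @ centered / (centered.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)
    leading = eigvecs[:, -1]  # eigh returns eigenvalues in ascending order
    return (centered @ leading).reshape(m, n)

rng = np.random.default_rng(0)
cube = rng.random((6, 5, 8))  # hypothetical tiny cube with m=6, n=5, l=8
x_pca = first_principal_component(cube)
```

The resulting two-dimensional image plays the role of $X_{pca}$ and would be the input to the superpixel segmentation step. The sign of the principal component is arbitrary, which does not affect segmentation based on feature distances.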

The key to a superpixel segmentation algorithm based on the entropy rate is to obtain the objective function. The objective function can be composed of the random entropy rate F(c) and equilibrium term G(c) and is expressed as Equation (1):

where c is the edge set of the image graph. First, the image is mapped to a graph $G = (V, E)$, where V is the set of vertices and E is the set of edges. By calculating the feature distance $d(v_i, v_j)$ between pixels, the weight between vertex i and vertex j can be expressed as Equation (2):

The definition of the transition probability of a random walk model is shown in Equation (3):

where A denotes a set of combinations satisfying segmentation. Thus, the transition probability between the two vertices i and j can be obtained. According to the transition probability, F(c) and G(c) can be obtained using Equations (4) and (5):

where the weighting factor is the stationary distribution of the random walk.

where $z_c$ is the cluster distribution with its associated probability, and $N_c$ is the image topology parameter. During the random walk, F(c) and G(c) are monotonically increasing functions with diminishing growth rates [23]. Since the weight of the balance term is a positive real number, the combined objective function is also monotonically increasing with a diminishing growth rate. Segmentation proceeds by optimizing this objective function and ends when the number of superpixels reaches the preset number K.
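For reference, the entropy rate superpixel formulation of Liu et al. [21], transcribed into the notation of this section, takes the following form. This is a sketch of the standard formulation rather than a verbatim copy of Equations (1)–(5): the symbols $\lambda$ (weight of the balance term), $\sigma$ (kernel width), $\mu$ (stationary distribution), and $w_i$ (total weight at vertex i) are names assumed here, the edge set is written c throughout, and $N_c$ is taken to be the number of connected subgraphs as in [21].

```latex
% Objective: entropy rate term plus weighted balance term
\[
  \max_{c \subseteq E} \; F(c) + \lambda\, G(c), \qquad \lambda > 0
\]

% Edge weight derived from the feature distance (Gaussian similarity)
\[
  w_{i,j} = \exp\!\left(-\frac{d(v_i, v_j)^2}{2\sigma^2}\right),
  \qquad w_i = \sum_{k} w_{i,k}
\]

% Transition probability of the random walk restricted to the selected edges
\[
  p_{i,j}(c) =
  \begin{cases}
    w_{i,j} / w_i, & i \neq j,\ e_{i,j} \in c \\
    0, & i \neq j,\ e_{i,j} \notin c \\
    1 - \sum_{k:\, e_{i,k} \in c} w_{i,k} / w_i, & i = j
  \end{cases}
\]

% Entropy rate of the random walk with stationary distribution \mu
\[
  F(c) = -\sum_{i} \mu_i \sum_{j} p_{i,j}(c) \log p_{i,j}(c),
  \qquad \mu_i = \frac{w_i}{\sum_{k} w_k}
\]

% Balance term: entropy of the cluster-size distribution minus the number of clusters
\[
  G(c) = -\sum_{i} p_{z_c}(i) \log p_{z_c}(i) - N_c,
  \qquad p_{z_c}(i) = \frac{|S_i|}{|V|}
\]
```

In this form, the entropy rate term favors compact, homogeneous clusters, while the balance term favors clusters of similar size.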

The superpixels of the HSI after entropy rate segmentation can be expressed as $[S_1, S_2, \ldots, S_q]$, where q is the number of superpixels. The i-th superpixel is $S_i \in \mathbb{R}^{a \times l}$, where a is the number of pixels contained in the i-th superpixel and l is the number of spectral features per pixel. To correct the differences in the spectral characteristics of same-class targets in HSIs, the overall spectral feature $HF_i \in \mathbb{R}^{l}$ of the superpixel is obtained by passing the pixels of the superpixel through a median filter, as shown in Equation (6), where $V_{ia}$ represents the spectral feature of the a-th pixel of the i-th superpixel:

$$HF_i = \operatorname{median}\left(V_{i1}, V_{i2}, \ldots, V_{ia}\right) \quad (6)$$
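The median-based correction described above can be sketched in NumPy as follows, assuming the median is taken band by band over the pixels of each superpixel and that the segmentation is available as an integer label map with contiguous ids starting at 0. The function name `superpixel_median_features` and the toy data are hypothetical.

```python
import numpy as np

def superpixel_median_features(cube, segments):
    """Return a (q, l) array holding the band-wise median spectrum of each superpixel.

    cube     : (m, n, l) hyperspectral image
    segments : (m, n) integer map with superpixel ids 0..q-1 (assumed contiguous)
    """
    l = cube.shape[-1]
    pixels = cube.reshape(-1, l)
    ids = segments.ravel()
    q = int(ids.max()) + 1
    features = np.empty((q, l), dtype=np.float64)
    for i in range(q):
        # Median over all pixels of superpixel i, computed separately per band.
        features[i] = np.median(pixels[ids == i], axis=0)
    return features

# Hypothetical 2 x 3 image with 2 bands and two superpixels.
cube = np.array([[[1, 10], [2, 20], [9, 90]],
                 [[3, 30], [8, 80], [7, 70]]], dtype=float)
segments = np.array([[0, 0, 1],
                     [0, 1, 1]])
hf = superpixel_median_features(cube, segments)
```

Each row of `hf` then stands in for all pixels of the corresponding superpixel, so a single outlying spectrum inside a block does not shift the block's feature.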

2.2. Spectral Fluctuation Feature Extraction Based on Fast Fourier Transform

Due to the high spectral dimension of HSIs, the “Hughes phenomenon” is prone to occur during classification, which leads to a significant deterioration in classification performance. In small-sample classification, high-dimensional features have an even stronger influence on the final classification accuracy. Traditional spectral feature extraction algorithms are designed for specific data distributions. This paper instead uses the fluctuation characteristics of the spectral curve for feature extraction.

Although the spectral characteristics of targets of the same class in HSIs differ, their fluctuation characteristics are similar. After the fast Fourier transformation of the spectral curve, the low-frequency part of the spectrum represents the slow fluctuation trend of the target's spectral characteristics, and the high-frequency part represents the rapid fluctuation trend [24]. The high-frequency and low-frequency values can therefore be used as the extracted features of the target. Let the original number of spectral features of a superpixel be l and the number of extracted features be k. An l-point fast Fourier transform is performed on the spectral feature of each superpixel, and k/2 low-frequency values and k/2 high-frequency values are selected as the extracted features, which reduces the feature dimension from l to k. The extracted spectral features can be expressed as Equation (7):

where k represents the k-th point after fast Fourier transform.
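The feature extraction step can be sketched with NumPy's FFT routines. The text does not fully specify which bins are kept, so the sketch below makes explicit assumptions: the magnitude of the one-sided spectrum is used, the k/2 lowest-frequency bins and the k/2 highest-frequency bins (those nearest the Nyquist frequency) are retained, and k is even. The function name `fft_fluctuation_features` is hypothetical.

```python
import numpy as np

def fft_fluctuation_features(spectra, k):
    """Reduce (q, l) spectra to (q, k) features taken from the FFT magnitude spectrum.

    Assumption: keep the k/2 lowest-frequency and the k/2 highest-frequency bins
    of the one-sided spectrum, using magnitudes as feature values.
    """
    if k < 2 or k % 2 != 0:
        raise ValueError("k must be a positive even number")
    magnitude = np.abs(np.fft.rfft(spectra, axis=1))  # bins 0 .. l//2
    half = k // 2
    if 2 * half > magnitude.shape[1]:
        raise ValueError("k is too large for the number of bands")
    low = magnitude[:, :half]     # slow fluctuation trend
    high = magnitude[:, -half:]   # rapid fluctuation trend
    return np.concatenate([low, high], axis=1)

# Two hypothetical 16-band spectra: a flat curve and a rapidly alternating curve.
spectra = np.vstack([np.ones(16), np.tile([1.0, -1.0], 8)])
features = fft_fluctuation_features(spectra, k=4)
```

In this toy case the flat spectrum concentrates its energy in the zero-frequency bin and the alternating spectrum in the highest-frequency bin, which illustrates how the two halves of the feature vector separate slow and rapid fluctuations.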

2.3. ERS–FFT–SVM Overall Algorithm Steps

| Input: HSI dataset $X \in \mathbb{R}^{m \times n \times l}$ with m × n l-dimensional data points, number of training samples, number of superpixel blocks K |
| Output: Category of test samples |

| Step 1: Extract the first principal component of the high-dimensional hyperspectral data to form a two-dimensional image; |
| Step 2: According to the input number of superpixel blocks K, the image is segmented by the entropy rate superpixel segmentation algorithm, and K superpixel blocks are obtained; |
| Step 3: According to the number of training samples, the training samples and test samples are randomly selected from the dataset X, where the category of each training sample is known; |
| Step 4: As in the SVM algorithm, the test set is classified using the obtained superpixel blocks and the trained model. |
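Steps 3 and 4 can be illustrated with two small NumPy helpers: one draws a fixed number of random training pixels per class, and one assigns every pixel the class predicted for its superpixel. This is a sketch only; the SVM training itself is omitted, the helper names are hypothetical, and the convention that label 0 marks unlabeled background pixels is an assumption.

```python
import numpy as np

def sample_per_class(labels, n_per_class, rng):
    """Pick n_per_class random indices from each class of a flattened label vector.

    Assumption: label 0 marks unlabeled pixels and is excluded from both sets.
    """
    train_idx = []
    for cls in np.unique(labels):
        if cls == 0:
            continue
        candidates = np.flatnonzero(labels == cls)
        take = min(n_per_class, candidates.size)
        train_idx.append(rng.choice(candidates, size=take, replace=False))
    train_idx = np.concatenate(train_idx)
    test_mask = labels > 0
    test_mask[train_idx] = False  # every labeled pixel not used for training is a test pixel
    return train_idx, np.flatnonzero(test_mask)

def broadcast_to_pixels(segments, superpixel_pred):
    """Give every pixel the class predicted for the superpixel that contains it."""
    return superpixel_pred[segments]

rng = np.random.default_rng(0)
labels = np.array([0, 1, 1, 1, 2, 2, 2, 2, 0, 1])  # hypothetical ground truth
train_idx, test_idx = sample_per_class(labels, n_per_class=2, rng=rng)

segments = np.array([[0, 0, 1],
                     [2, 1, 1]])
superpixel_pred = np.array([3, 1, 2])  # hypothetical class per superpixel
pixel_pred = broadcast_to_pixels(segments, superpixel_pred)
```

Because the classifier only has to label one feature vector per superpixel, the final pixel-level map is obtained by a single indexing operation.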

3. Experimental Results and Analysis

3.1. Experimental Datasets

To verify the classification of the proposed algorithm in the case of small samples, the experiment in this paper was carried out on commonly used datasets, namely the PaviaU and Indian Pines datasets. The following is a brief introduction to each dataset.

- PaviaU dataset

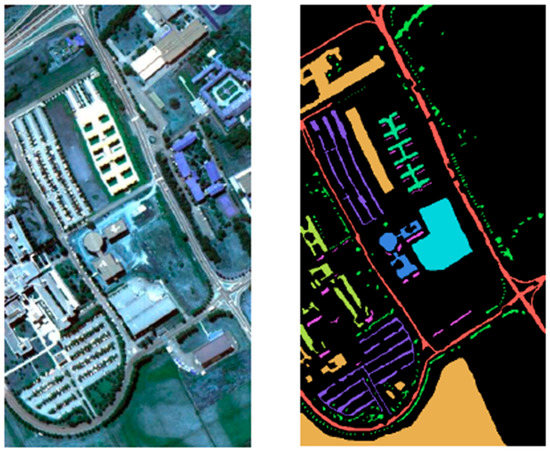

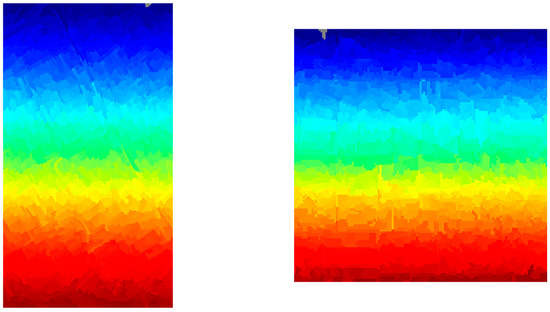

The scene covers the University of Pavia, Italy, and the image size is 610 × 340 pixels. After the noisy bands are removed, the dataset has a total of 103 spectral bands. The spatial resolution is 1.3 m, and the spectral coverage is 0.43–0.86 μm. The scene contains nine land-cover classes, including natural vegetation, urban construction materials, and background. Its pseudo-color image and ground-truth map are shown in Figure 2. The details of the dataset are shown in Table 1.

Figure 2. PaviaU dataset. Left: pseudo-color image; right: ground-truth class map.

Table 1. Features of datasets.

- Indian Pines dataset

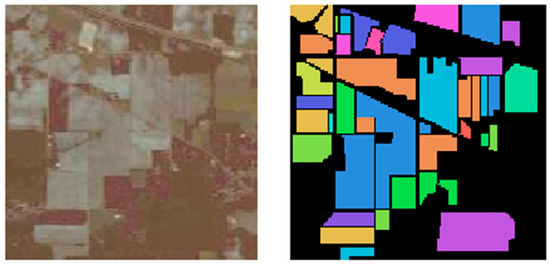

The dataset covers a farm in northwestern Indiana and was collected by the AVIRIS spectrometer in 1992. The image is 145 × 145 pixels, and the dataset contains 200 spectral bands. The spatial resolution is 20 m, the spectral coverage is 0.4–2.5 μm, and the scene contains 16 ground object categories. Its pseudo-color image and ground-truth map are shown in Figure 3, and the details are shown in Table 1.

Figure 3. Indian Pines dataset. Left: pseudo-color image; right: ground-truth class map.

3.2. Experiment Setting

To evaluate the classification performance, the proposed algorithm was compared with KNN and SVM, which use only spectral information. The parameters of each algorithm were tuned to their optimal values: the number of nearest neighbors for KNN was set to nine, the optimal parameters of SVM were determined by 10-fold cross-validation, and the input parameter K (the number of superpixel blocks) of ERS–FFT–SVM was selected from 200, 400, 600, 800, 1000, and 1200.

The evaluation indexes used in this paper include overall accuracy (OA) and the Kappa coefficient [25]. To improve the accuracy and reliability of the experiment, the average classification accuracy of each algorithm after five repetitions was calculated to obtain the final result.
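Both evaluation indexes can be computed from a confusion matrix. The following minimal sketch uses the usual definitions, with OA as the fraction of correctly classified samples and Kappa as $(p_o - p_e)/(1 - p_e)$, where $p_o$ is the OA and $p_e$ is the agreement expected by chance. The function name and the toy labels are hypothetical, and the degenerate case $p_e = 1$ is not handled.

```python
import numpy as np

def overall_accuracy_and_kappa(y_true, y_pred):
    """Return (OA, Kappa) for two equally long label vectors."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    classes = np.unique(np.concatenate([y_true, y_pred]))
    rows = np.searchsorted(classes, y_true)
    cols = np.searchsorted(classes, y_pred)
    cm = np.zeros((classes.size, classes.size), dtype=np.int64)
    np.add.at(cm, (rows, cols), 1)  # confusion matrix: rows = truth, columns = prediction
    n = cm.sum()
    oa = np.trace(cm) / n
    # Chance agreement from the row and column marginals.
    pe = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    kappa = (oa - pe) / (1.0 - pe)
    return float(oa), float(kappa)

oa, kappa = overall_accuracy_and_kappa([1, 1, 1, 2, 2, 2], [1, 1, 2, 2, 2, 2])
```

Averaging these two values over repeated random draws of the training set gives the kind of summary reported in the result tables.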

3.3. Experimental Results and Analysis

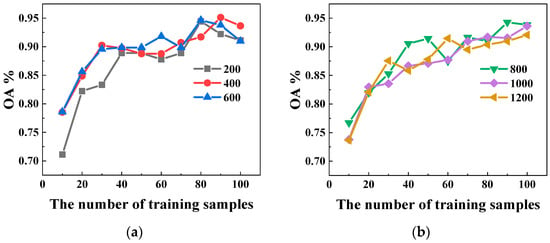

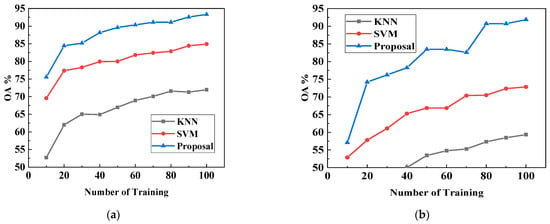

Figure 4 shows the classification results of the superpixel-based HSI classification algorithm on the PaviaU dataset. In each subgraph, the horizontal axis is the number of training samples, increasing from 10 to 100 samples per class, and the vertical axis is the overall accuracy (OA).

Figure 4. Experimental results with the PaviaU dataset.

It can be seen from Figure 4b that when the number of superpixel blocks is greater than 600, the classification accuracy increases with the number of training samples. With only 20 training samples per class, the OA of the proposed algorithm exceeds 80%. This shows that introducing superpixel segmentation, which brings in the spatial information of HSIs, effectively improves classification accuracy in the small-sample case. As the number of training samples increases, the differences in accuracy between superpixel settings shrink: with 100 training samples, the accuracies for 800 and 1000 superpixel blocks are very close. In other words, the sensitivity of classification accuracy to the number of superpixels decreases as the number of training samples increases. In Figure 4a, however, where the number of superpixel blocks is 200, 400, and 600, the classification accuracy shows a downward trend once the number of training samples exceeds 80, because the number of superpixel blocks is too small. In general, the more superpixel blocks there are, the fewer pixels each block contains; when the number of blocks is small, each block contains more pixels and may therefore contain pixels of several classes. In the segmentation result shown in Figure 5, which uses 1000 superpixel blocks, each superpixel block covers a single class of ground object, which is consistent with the assumption of the proposed algorithm that all pixels in a superpixel block belong to the same class. The number of superpixel blocks is therefore set to 1000 in this paper to ensure classification accuracy.
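One way to check the single-class assumption for a given number of superpixel blocks is to measure how often labeled pixels agree with the majority class of their block. The sketch below is an illustrative diagnostic, not a procedure from the experiments; the function name `superpixel_purity`, the toy arrays, and the convention that label 0 marks unlabeled pixels are assumptions.

```python
import numpy as np

def superpixel_purity(segments, ground_truth):
    """Fraction of labeled pixels that share the majority class of their superpixel.

    Assumption: ground_truth holds non-negative integers and 0 means unlabeled.
    """
    seg = segments.ravel()
    gt = ground_truth.ravel()
    labeled = gt > 0
    seg, gt = seg[labeled], gt[labeled]
    agree = 0
    for sp in np.unique(seg):
        counts = np.bincount(gt[seg == sp])
        agree += counts.max()  # pixels belonging to the dominant class of this block
    return agree / gt.size

segments = np.array([[0, 0, 1],
                     [0, 1, 1]])
ground_truth = np.array([[1, 1, 2],
                         [2, 2, 0]])
purity = superpixel_purity(segments, ground_truth)
```

A purity close to 1 indicates that almost every block contains a single class, whereas lower values suggest that the blocks are too large for the scene.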

Figure 5. Pseudo-color plot of the dataset after superpixel segmentation into 1000 blocks. Left: PaviaU dataset; right: Indian Pines dataset.

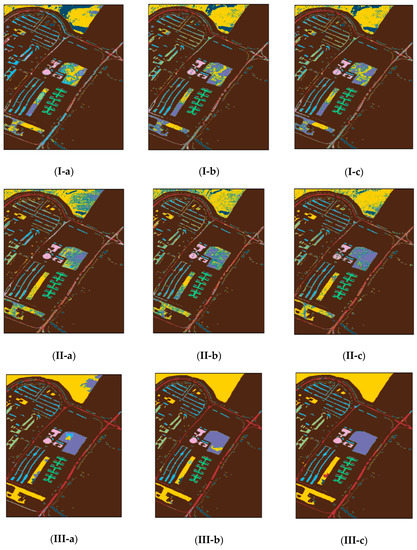

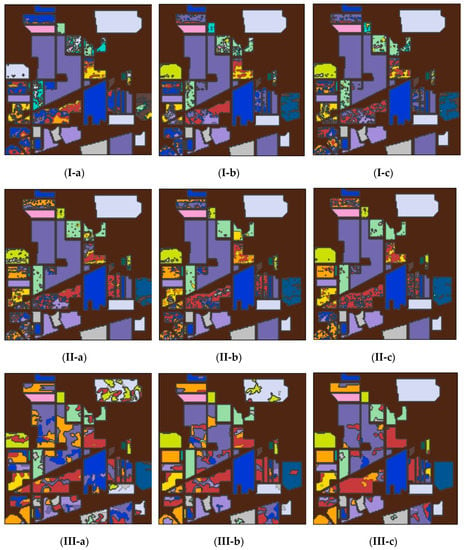

Figure 6 and Figure 7 show the classification results of PaviaU and Indian Pines when the training samples of each class are 10, 30, and 50, respectively, and the superpixel block is set to 1000. To analyze the classification results in detail, Table 2 and Table 3 provide more detailed classification OA values for training sample changes. The corresponding OA value curves are shown in Figure 8. As the number of samples increases from 10 to 100, the accuracies of different classification methods improve, which indicates that the increase in the number of samples does help to improve the classification accuracy. In addition, Figure 8 reflects that the classification accuracy using the ERS–FFT–SVM algorithm is significantly better than that of the KNN and SVM algorithms which use only spectral information. From Table 2 and Table 3, we can determine that the overall classification accuracy (OA) of ERS–FFT–SVM on the PaviaU dataset is improved by 7.99% on average compared with SVM and 21.60% on average compared with KNN in the case of small samples; in the Indian Pines dataset using ERS–FFT–SVM, accuracy is improved by 15.18% on average compared with the SVM algorithm and by 29.61% on average compared with KNN. Due to the introduction of the superpixel segmentation algorithm in the classification process, the spatial information and spectral information of HSIs are fully utilized, so the ERS–FFT–SVM algorithm shows good performance under small-sample conditions. In the experimental results with the PaviaU dataset and Indian Pines dataset, the Kappa coefficient under the ERS–FFT–SVM algorithm demonstrates that the classification results are in good agreement with the real ground objects, indicating the superior performance of the ERS–FFT–SVM algorithm.

Figure 6. PaviaU classification results. (a) KNN. (b) SVM. (c) Proposed method. (I) 10 training samples per class. (II) 30 training samples per class. (III) 50 training samples per class.

Figure 7. Indian Pines classification results. (a) KNN. (b) SVM. (c) Proposed method. (I) 10 training samples per class. (II) 30 training samples per class. (III) 50 training samples per class.

Table 2. Overall classification accuracy and Kappa coefficient of classification algorithms under different sample numbers with the PaviaU dataset (K = 1000).

Table 3. Overall classification accuracy and Kappa coefficient of classification algorithms under different sample numbers with the Indian Pines dataset (K = 1000).

Figure 8. OA comparison. (a) PaviaU. (b) Indian Pines.

4. Conclusions

In this paper, an efficient spatial–spectral joint classification method based on the correction of inter-class differences and the elimination of spatial–spectral redundancy is proposed. Superpixels constructed by ERS are used to correct inter-class differences and reduce the spatial redundancy of the data. The fluctuation characteristics of the spectral curve are extracted by FFT to reduce spectral band redundancy. Finally, these steps are combined with a support vector machine to form the complete ERS–FFT–SVM spatial–spectral joint classification method, which greatly improves classification accuracy. The experimental results on the PaviaU and Indian Pines hyperspectral datasets show that the proposed algorithm classifies ground objects considerably more accurately than classification algorithms that consider only spectral information. The improvement is particularly significant when training samples are scarce. With 10 training samples per class, the overall accuracy of ERS–FFT–SVM on the PaviaU dataset is 5.99% higher than that of SVM and 22.86% higher than that of KNN, and on the Indian Pines dataset it is 4.22% higher than that of SVM and 24.31% higher than that of KNN. The Kappa coefficient columns in Table 2 and Table 3 show that the Kappa values of ERS–FFT–SVM are higher than those of KNN and SVM for all sample numbers, which indicates that its classification results are highly consistent with the ground truth.

The proposed ERS–FFT–SVM algorithm builds on a classical hyperspectral image classification method (the SVM algorithm); it corrects the intra-class differences of spectral features within superpixels and reduces the redundancy of spatial information. Moreover, unlike traditional classification methods that use only spectral information, it fuses spatial and spectral features, which yields good classification accuracy and consistent classification results. It retains this advantage in the case of small-sample classification.

However, the proposed algorithm does not yet consider the best match between the number of training samples and the number of superpixel blocks, which makes its classification performance unstable. Moreover, only the combination with SVM has been considered, not with KNN or other classification algorithms. Future work will focus on deeper fusion of spatial–spectral features and on the applicability of other classification methods, and will clarify the relationship between the number of training samples and the number of superpixel blocks, with the aim of a more efficient and more accurate classification method for small-sample conditions. For example, if the KNN algorithm can overcome misclassification when the classes are imbalanced, or can be combined with the strengths of other algorithms, it may yield a more efficient and accurate classification algorithm.

Author Contributions

L.Z. and L.S. proposed research ideas and methods; Q.P. performed the simulations and analyzed the data; L.Z. and S.Y. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge financial support from the National Natural Science Foundation of China (Nos. 61871302, 62101406, and 62001340), the Innovation Capability Support Program of Shaanxi (Program No. 2022TD-37), and the Fundamental Research Funds for the Central Universities (No. JB211311).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern Trends in Hyperspectral Image Analysis: A Review. IEEE Access 2018, 6, 14118–14129.

- Pei, S.; Song, H.; Lu, Y. Small Sample Hyperspectral Image Classification Method Based on Dual-Channel Spectral Enhancement Network. Electronics 2022, 11, 2540.

- Sohail, M.; Wu, H.; Chen, Z.; Liu, G. Unsupervised and Self-Supervised Tensor Train for Change Detection in Multitemporal Hyperspectral Images. Electronics 2022, 11, 1486.

- Lacar, F.M.; Lewis, M.M.; Grierson, I.T. Use of Hyperspectral Imagery for Mapping Grape Varieties in the Barossa Valley, South Australia. In Proceedings of IGARSS 2001: Scanning the Present and Resolving the Future, IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No.01CH37217), Sydney, NSW, Australia, 9–13 July 2001; Volume 6, pp. 2875–2877.

- Zhuang, L.; Wang, J.; Bai, L.; Jiang, G.; Sun, S.; Yang, P.; Wang, S. Cotton Yield Estimation Based on Hyperspectral Remote Sensing in Arid Region of China. Trans. Chin. Soc. Agric. Eng. 2011, 27, 176–181.

- van der Meer, F. Analysis of Spectral Absorption Features in Hyperspectral Imagery. Int. J. Appl. Earth Obs. Geoinf. 2004, 5, 55–68.

- Zhang, P.; Lu, Q.; Hu, X.; Gu, S.; Yang, L.; Min, M.; Chen, L.; Xu, N.; Sun, L.; Bai, W.; et al. Latest Progress of the Chinese Meteorological Satellite Program and Core Data Processing Technologies. Adv. Atmos. Sci. 2019, 36, 1027–1045.

- Yu, L.; Lan, J.; Zeng, Y.; Zou, J.; Niu, B. One Hyperspectral Object Detection Algorithm for Solving Spectral Variability Problems of the Same Object in Different Conditions. J. Appl. Remote Sens. 2019, 13, 026514.

- Pal, M.; Mather, P.M. Assessment of the Effectiveness of Support Vector Machines for Hyperspectral Data. Future Gener. Comput. Syst. 2004, 20, 1215–1225.

- Wang, C.; Zhang, J.; Zhang, L.; Wei, W.; Zhang, Y. Small sample hyperspectral image classification method based on memory association learning. J. Beijing Univ. Aeronaut. Astronaut. 2021, 47, 549–557.

- Boukhdhir, A.; Lachiheb, O.; Gouider, M.S. An Improved MapReduce Design of Kmeans for Clustering Very Large Datasets. In Proceedings of the 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 17–20 November 2015; pp. 1–6.

- Li, R.; Li, S. Multimedia Image Data Analysis Based on KNN Algorithm. Comput. Intell. Neurosci. 2022, 2022, 1–8.

- Fauvel, M.; Benediktsson, J.A.; Chanussot, J.; Sveinsson, J.R. Spectral and Spatial Classification of Hyperspectral Data Using SVMs and Morphological Profiles. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3804–3814.

- Mounika, K.; Aravind, K.; Yamini, M.; Navyasri, P.; Dash, S.; Suryanarayana, V. Hyperspectral Image Classification Using SVM with PCA. In Proceedings of the 2021 6th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 7 October 2021; pp. 470–475.

- Qu, S.; Li, X.; Gan, Z. A Review of Hyperspectral Image Classification Based on Joint Spatial-Spectral Features. J. Phys. Conf. Ser. 2022, 2203, 012040.

- Guofeng, T.; Yong, L.; Lihao, C.; Chen, J. A DBN for Hyperspectral Remote Sensing Image Classification. In Proceedings of the 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 1757–1762.

- Li, T.; Sun, J.; Zhang, X.; Wang, X. A spectral-spatial joint classification method of hyperspectral remote sensing image. Chin. J. Sci. Instrum. 2016, 37, 1379–1389.

- Xiang-Fa, S.; Li-Cheng, J. Classification of Hyperspectral Remote Sensing Image Based on Sparse Representation and Spectral Information. J. Electron. Inf. Technol. 2012, 34, 268–272.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral Image Classification Using Dictionary-Based Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Liu, T.; Dai, F.; Guo, W.; Zhao, F.; Wang, J.; Wang, X. Superpixel Segmentation Algorithm Based on Local Network Modularity Increment. IET Image Process. 2022, 16, 1822–1830.

- Liu, M.-Y.; Tuzel, O.; Ramalingam, S.; Chellappa, R. Entropy Rate Superpixel Segmentation. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2097–2104.

- Tang, Y.; Zhao, L.; Ren, L. Different Versions of Entropy Rate Superpixel Segmentation for Hyperspectral Image. In Proceedings of the 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 19–21 July 2019; pp. 1050–1054.

- Zhang, Y.; Li, X.; Gao, X.; Zhang, C. A Simple Algorithm of Superpixel Segmentation with Boundary Constraint. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 1502–1514.

- Nussbaumer, H.J. The Fast Fourier Transform. In Fast Fourier Transform and Convolution Algorithms; Nussbaumer, H.J., Ed.; Springer Series in Information Sciences; Springer: Berlin/Heidelberg, Germany, 1981; pp. 80–111. ISBN 978-3-662-00551-4.

- Thompson, W.D.; Walter, S.D. A Reappraisal of the Kappa Coefficient. J. Clin. Epidemiol. 1988, 41, 949–958.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).