Multi-Objective Multi-Learner Robot Trajectory Prediction Method for IoT Mobile Robot Systems

Abstract

1. Introduction

1.1. Literature Review

1.2. The Novelty of the Study

- A multi-objective multi-learner prediction structure is proposed for trajectory prediction. It is designed to fit both the linear and the non-linear components of a trajectory while balancing accuracy and robustness. Its effectiveness is validated on several real robot trajectories against several benchmark models.

- Previous studies have typically used a single model for trajectory prediction, which limits the learning scope and cannot cope with the complex components of a trajectory. In this study, a multi-learner prediction method is proposed that uses ARMA, MLP, ENN, DESN, and LSTM. ARMA fits the linear components. MLP and ENN describe the weak non-linear components in a non-recursive and a recursive manner, respectively, while DESN and LSTM capture the strong non-linear components non-recursively and recursively, respectively. Together, these diverse learners capture trajectory features from complementary perspectives.

- Existing studies rarely consider robustness when constructing ensemble models, which makes the ensembles prone to overfitting and limits their generalization performance. To address this gap, an ensemble strategy based on multi-objective optimization is proposed. It generates ensemble weights that account for accuracy and robustness simultaneously, leading to better overall performance.

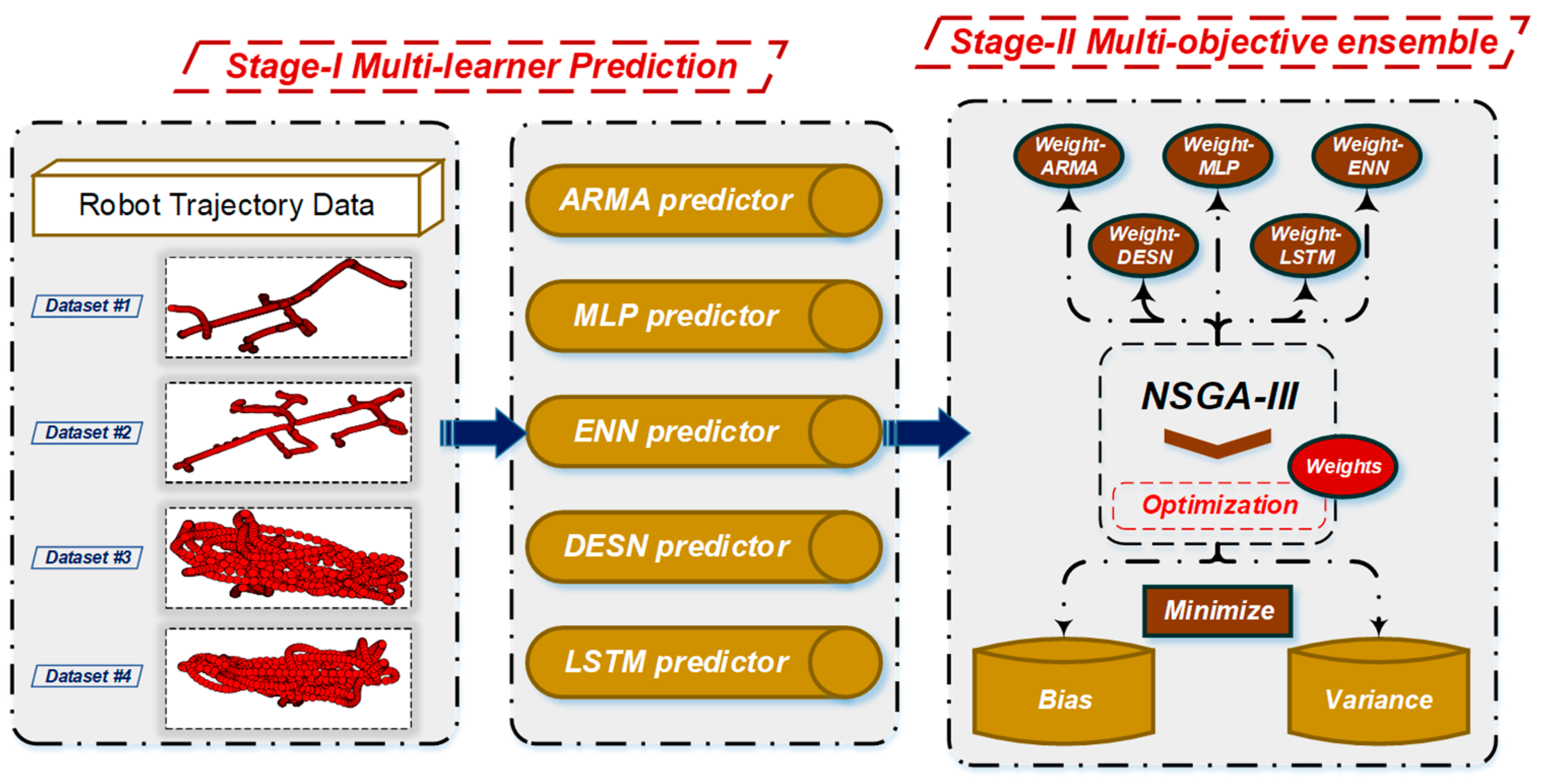

2. Methods

- Stage 1: multi-learner prediction. The trajectory dataset is divided into training and testing sets, and the trajectory is decomposed into one series per axis of an orthogonal coordinate system. The ARMA, MLP, ENN, DESN, and LSTM models are trained on each axis series in the training set and then generate forecasts over the testing set.

- Stage 2: multi-objective optimization. The forecasts of the base learners are combined into an ensemble whose weights are optimized by NSGA-III, with bias and variance as the objective functions. Applying the optimized weights to the testing set yields the ensemble forecast of each orthogonal series, and recombining these per-axis forecasts in the orthogonal coordinate system gives the final deterministic trajectory forecast.
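The two stages can be illustrated with a minimal NumPy sketch. It assumes a chronological train/test split (the 0.8 ratio is a placeholder, not the paper's setting), a linear weighted combination of the base-learner forecasts, and one plausible pair of objectives: the absolute mean forecast error as "bias" and the error variance as "variance". The paper's exact objective definitions and any weight constraints are not given in this section, so all function names and formulas here are hypothetical.

```python
import numpy as np


def split_axes_chronologically(trajectory, train_ratio=0.8):
    """Split an (n_steps, n_axes) trajectory into per-axis (train, test) series.

    Time order is preserved; train_ratio is an illustrative placeholder.
    """
    traj = np.asarray(trajectory, dtype=float)
    cut = int(len(traj) * train_ratio)
    return [(traj[:cut, k], traj[cut:, k]) for k in range(traj.shape[1])]


def ensemble_forecast(weights, learner_preds):
    """Weighted sum of base-learner forecasts for one axis.

    learner_preds has shape (n_learners, n_steps); weights has shape (n_learners,).
    """
    return np.asarray(weights, dtype=float) @ np.asarray(learner_preds, dtype=float)


def bias_variance_objectives(weights, learner_preds, actual):
    """Hypothetical objective pair to minimize: |mean error| and error variance."""
    errors = ensemble_forecast(weights, learner_preds) - np.asarray(actual, dtype=float)
    return abs(errors.mean()), errors.var()


def combine_axes(axis_forecasts):
    """Stack per-axis forecasts (e.g. x and y) back into an (n_steps, n_axes) trajectory."""
    return np.column_stack(axis_forecasts)
```

A multi-objective optimizer such as NSGA-III would then search over weight vectors, scoring each candidate with `bias_variance_objectives` on each axis, before `combine_axes` reassembles the trajectory.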

2.1. Stage 1: Multi-Learner Prediction

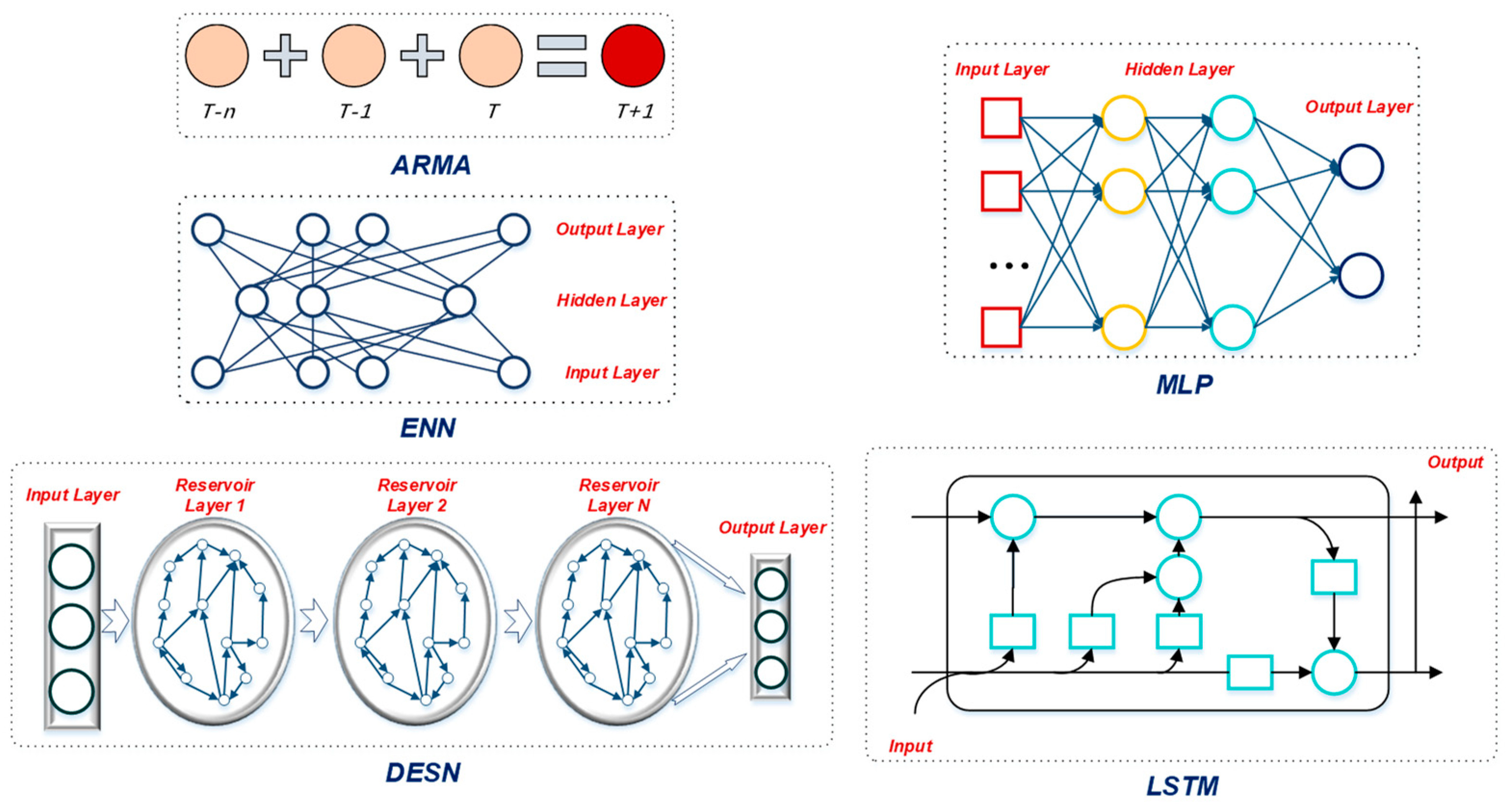

- ARMA (autoregressive moving average) is a stochastic time series model that builds a regression equation from the correlation between each value, its own past values, and past forecast errors [36].

- The MLP (multilayer perceptron) is a feedforward artificial neural network that maps input vectors to outputs through one or more hidden layers [37].

- The ENN (Elman neural network) is a simple recurrent neural network consisting of an input layer, a hidden layer, and an output layer, plus a context layer that feeds the hidden-layer outputs from the previous time step back into the hidden layer [38].

- The DESN extends the ESN (echo state network) approach to the deep learning framework by stacking multiple reservoir layers on top of each other [39].

- The LSTM (long short-term memory) model is a recurrent neural network whose gated memory cells mitigate the vanishing and exploding gradient problems during training [40].
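To make the DESN description more concrete, the following sketch stacks several randomly initialized tanh reservoirs, drives the first with the input and each deeper one with the state of the layer below, and trains only a ridge-regression readout on the concatenated states. All hyperparameters (reservoir size, depth, spectral radius, input scaling, ridge penalty, washout) are illustrative assumptions; the sketch omits refinements such as leaky integration and the adaptive reservoirs of [39], and it is not the configuration used in the paper.

```python
import numpy as np


class DeepESN:
    """Minimal deep echo state network (illustrative hyperparameters only).

    Layer 1 is driven by the input; each deeper reservoir is driven by the
    state of the layer below. Reservoir weights stay fixed; only the linear
    readout over the concatenated states is trained, by ridge regression.
    """

    def __init__(self, n_inputs=1, n_units=50, n_layers=3, spectral_radius=0.9,
                 input_scale=0.5, ridge=1e-6, seed=0):
        rng = np.random.default_rng(seed)
        self.W_in, self.W = [], []
        for layer in range(n_layers):
            fan_in = n_inputs if layer == 0 else n_units
            self.W_in.append(rng.uniform(-input_scale, input_scale, (n_units, fan_in)))
            W = rng.uniform(-0.5, 0.5, (n_units, n_units))
            # Rescale so the largest absolute eigenvalue equals spectral_radius
            W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
            self.W.append(W)
        self.n_units, self.ridge, self.W_out = n_units, ridge, None

    def _states(self, inputs):
        """Run the stack over a (T, n_inputs) sequence, starting from zero states."""
        h = [np.zeros(self.n_units) for _ in self.W]
        collected = []
        for u in np.asarray(inputs, dtype=float):
            x = u
            for i, (W_in, W) in enumerate(zip(self.W_in, self.W)):
                h[i] = np.tanh(W_in @ x + W @ h[i])
                x = h[i]  # this layer's state drives the next layer
            collected.append(np.concatenate(h))
        return np.array(collected)  # shape (T, n_layers * n_units)

    def fit(self, inputs, targets, washout=20):
        S = self._states(inputs)[washout:]  # discard the initial transient
        Y = np.asarray(targets, dtype=float)[washout:]
        # Ridge readout: W_out = (S^T S + ridge * I)^(-1) S^T Y
        A = S.T @ S + self.ridge * np.eye(S.shape[1])
        self.W_out = np.linalg.solve(A, S.T @ Y)
        return self

    def predict(self, inputs):
        return self._states(inputs) @ self.W_out


# One-step-ahead prediction of a toy sine series
t = np.arange(400)
series = np.sin(0.1 * t)
X, y = series[:-1, None], series[1:]
model = DeepESN().fit(X[:300], y[:300])
pred = model.predict(X)
```

Because the reservoirs are never trained, fitting reduces to one linear solve, which is the main practical appeal of ESN-style models compared with backpropagation-trained recurrent networks such as the ENN and LSTM.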

2.2. Stage 2: Multi-Objective Ensemble

- Generate reference points on the normalized hyperplane of the objective space.

- Normalize the population members using the ideal point and the extreme points.

- Associate each population member with its nearest reference point, and apply niche-preserving selection to keep the members evenly distributed across the reference points.

- Apply genetic crossover and mutation to generate an offspring population.
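The first three steps can be illustrated with a small NumPy sketch: structured reference points from the Das and Dennis simplex-lattice construction [43], a normalization step, and association of each member with the nearest reference line by perpendicular distance. The normalization here simply divides by each objective's range, a simplification of the hyperplane-intercept normalization of NSGA-III [42]; niche-preserving selection, crossover, and mutation are omitted. The function names are hypothetical, and a maintained library implementation (for example, pymoo) is a better choice for complete runs.

```python
import itertools

import numpy as np


def das_dennis_reference_points(n_obj, divisions):
    """Simplex-lattice reference points (Das and Dennis construction).

    Each point has non-negative coordinates that are multiples of 1/divisions
    and sum to 1; there are C(divisions + n_obj - 1, n_obj - 1) points.
    """
    slots = divisions + n_obj - 1
    points = []
    # "Stars and bars": place n_obj - 1 bars among the slots; the gaps give the counts
    for bars in itertools.combinations(range(slots), n_obj - 1):
        edges = (-1,) + bars + (slots,)
        counts = [edges[k + 1] - edges[k] - 1 for k in range(n_obj)]
        points.append(np.array(counts) / divisions)
    return np.array(points)


def normalize(objectives):
    """Simplified normalization: subtract the ideal point, divide by each objective's range.

    NSGA-III proper uses intercepts of the hyperplane through the extreme points.
    """
    F = np.asarray(objectives, dtype=float)
    ideal = F.min(axis=0)
    span = F.max(axis=0) - ideal
    span[span == 0] = 1.0  # avoid division by zero for constant objectives
    return (F - ideal) / span


def associate(norm_f, ref_points):
    """Nearest reference line (through the origin) for each member, and its perpendicular distance."""
    dirs = ref_points / np.linalg.norm(ref_points, axis=1, keepdims=True)
    proj = norm_f @ dirs.T  # scalar projection of each member onto each direction
    sq_norm = np.sum(norm_f ** 2, axis=1, keepdims=True)
    dist = np.sqrt(np.maximum(sq_norm - proj ** 2, 0.0))  # Pythagoras
    nearest = dist.argmin(axis=1)
    return nearest, dist[np.arange(len(norm_f)), nearest]
```

With two objectives (bias and variance), `das_dennis_reference_points(2, 4)` yields five evenly spaced points from (0, 1) to (1, 0); the per-reference-point member counts produced by `associate` are what the niching step uses to favour under-represented regions of the front.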

2.3. Data Description

2.4. Evaluation Metrics

3. Results and Discussions

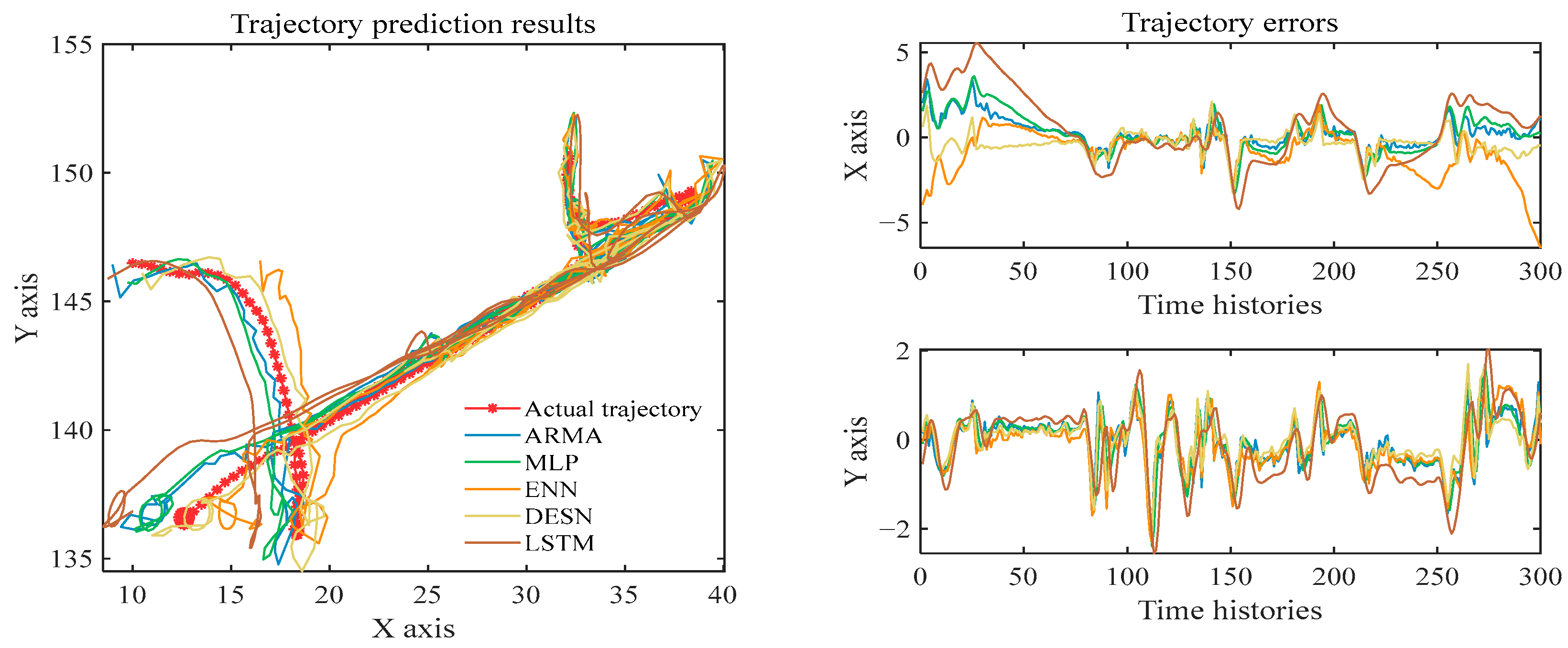

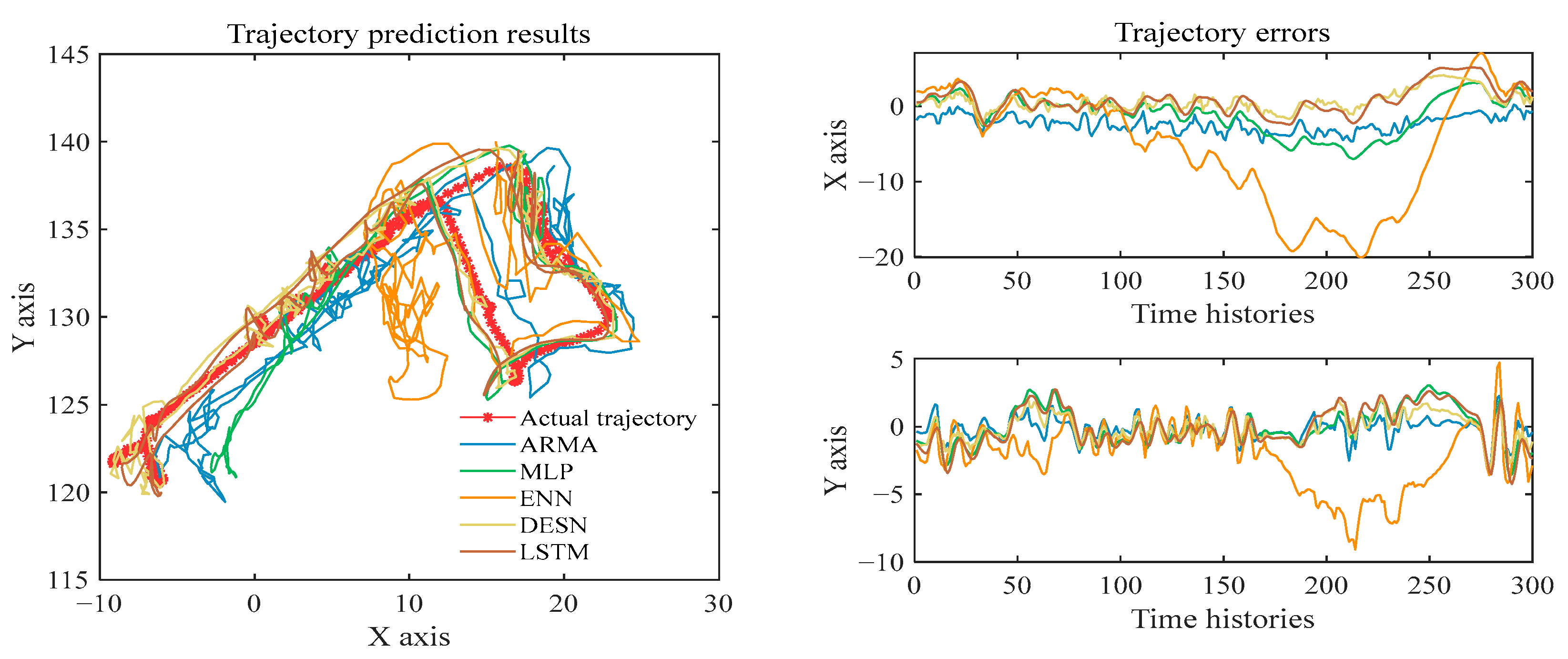

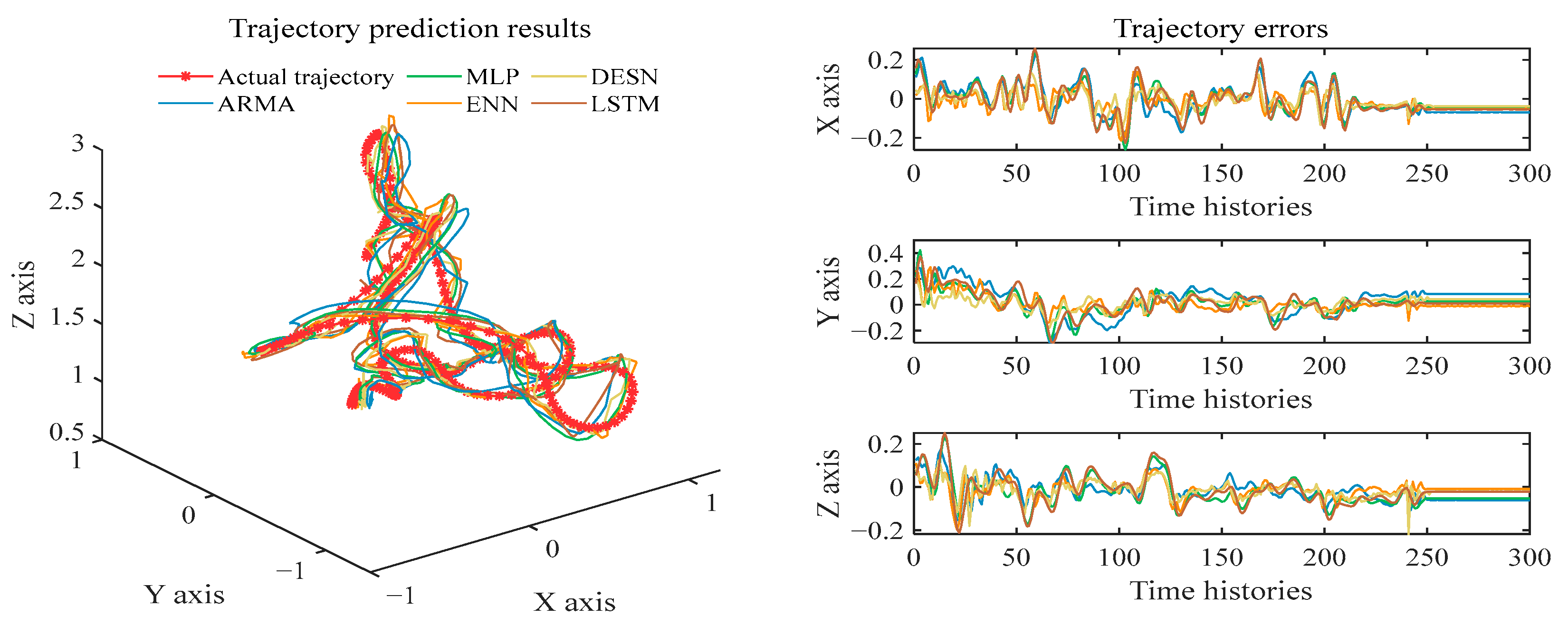

3.1. Multi-Learner Prediction

- The base learners perform quite differently on robot trajectory prediction. For instance, on dataset #1 the ADE values of the ARMA, MLP, ENN, DESN, and LSTM models are 0.455, 0.580, 0.940, 0.364, and 1.160, respectively. These differences stem from differences in model theory and parameters. Such diversity benefits the subsequent construction of the multi-objective ensemble, because ensemble learning relies on diverse base learners: when their errors differ, a weighted combination can cancel part of the individual errors and yield more accurate predictions.

- Among all the base learners, DESN has the lowest prediction error on all four datasets, and its predicted trajectory is closer to the real trajectory. On dataset #4, for example, the ADE, NLADE, and FDE of DESN are 0.057, 0.057, and 0.129, respectively, lower than those of every other base learner. This may be because the deep reservoir structure of DESN gives it a strong ability to learn sequential data such as robot trajectories.

- The performance of ENN varies considerably across datasets. On dataset #2, its prediction error is the highest of the five models, whereas on datasets #3 and #4 it is second only to DESN. This instability may be because ENN is sensitive to the data and its parameters, which makes it difficult to obtain satisfactory results on all datasets without parameter tuning.
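For reference, ADE and FDE values like those quoted above can be computed as in the following sketch, which assumes the usual definitions: ADE is the mean Euclidean distance between predicted and actual positions over the forecast horizon, and FDE is that distance at the final step. NLADE, commonly defined as ADE restricted to the non-linear segments of a trajectory, is not implemented because the criterion for a non-linear segment is not given in this section. The function name is hypothetical.

```python
import numpy as np


def displacement_errors(pred, actual):
    """Return (ADE, FDE) for one predicted trajectory.

    pred, actual: arrays of shape (n_steps, n_dims), e.g. (x, y) positions.
    ADE is the mean Euclidean distance over all steps; FDE is the distance
    at the last step.
    """
    d = np.linalg.norm(np.asarray(pred, dtype=float) - np.asarray(actual, dtype=float), axis=1)
    return d.mean(), d[-1]


# Only the last point is off, by 3 units: ADE = 3 / 3 = 1.0, FDE = 3.0
ade, fde = displacement_errors([[0, 0], [1, 1], [2, 2]], [[0, 0], [1, 1], [2, 5]])
```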

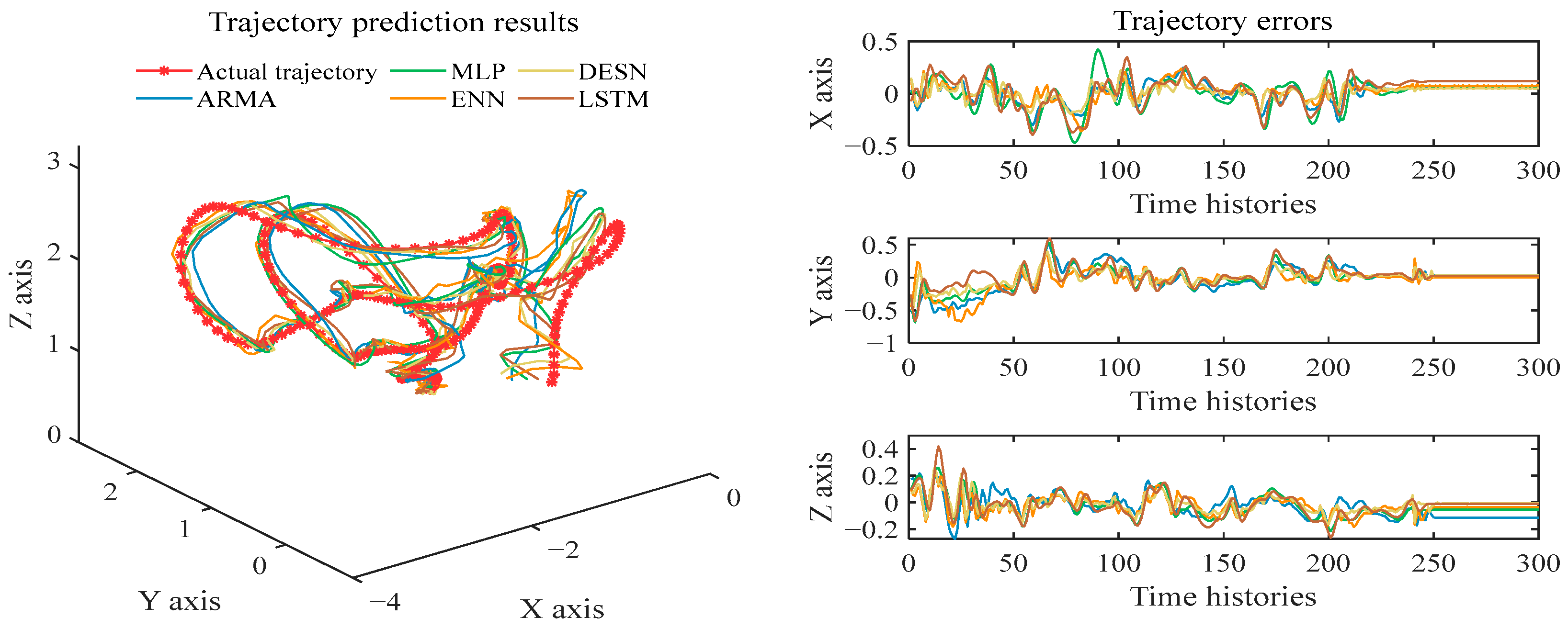

3.2. Multi-Objective Ensemble

3.3. Comparison with Other Optimization Algorithms

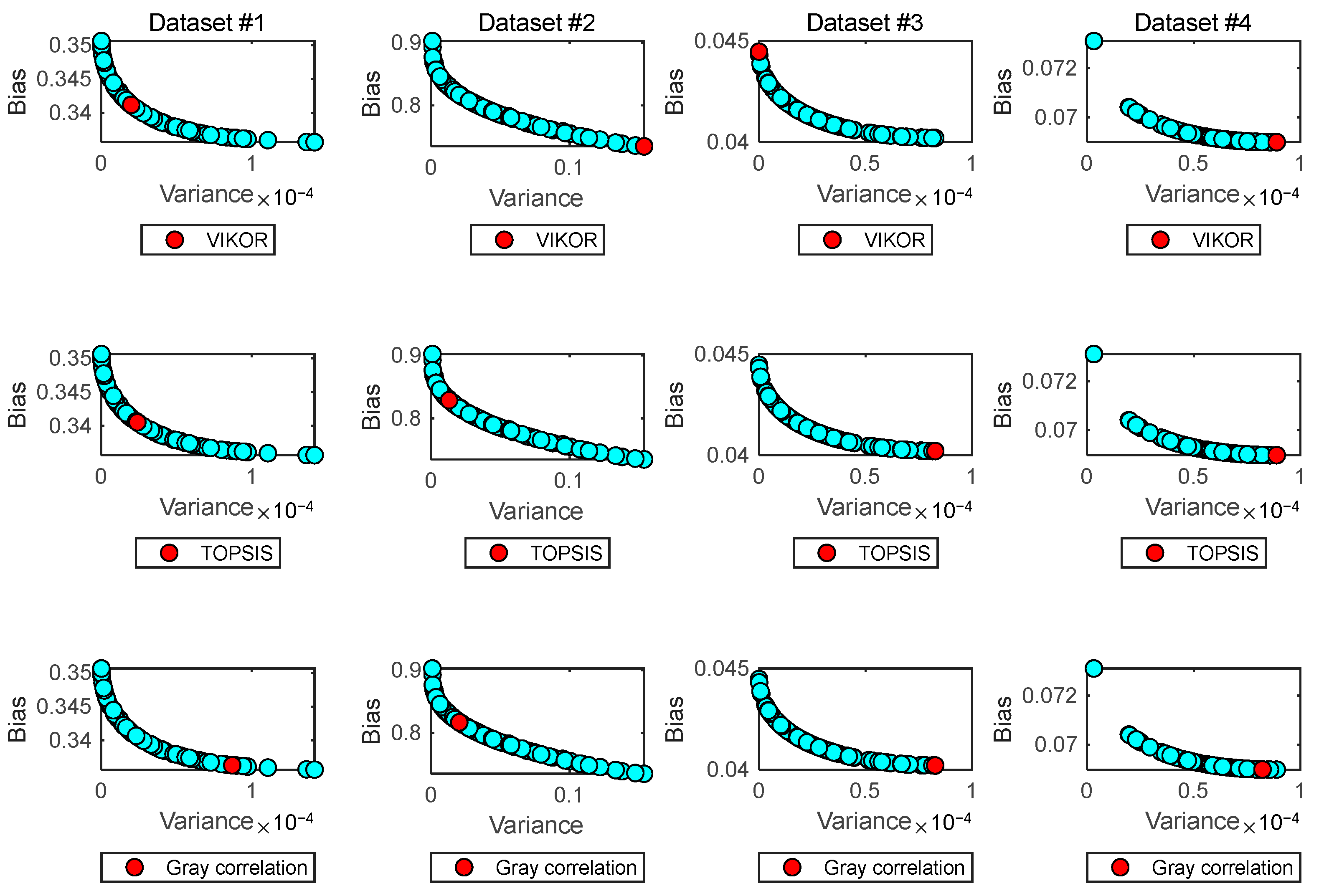

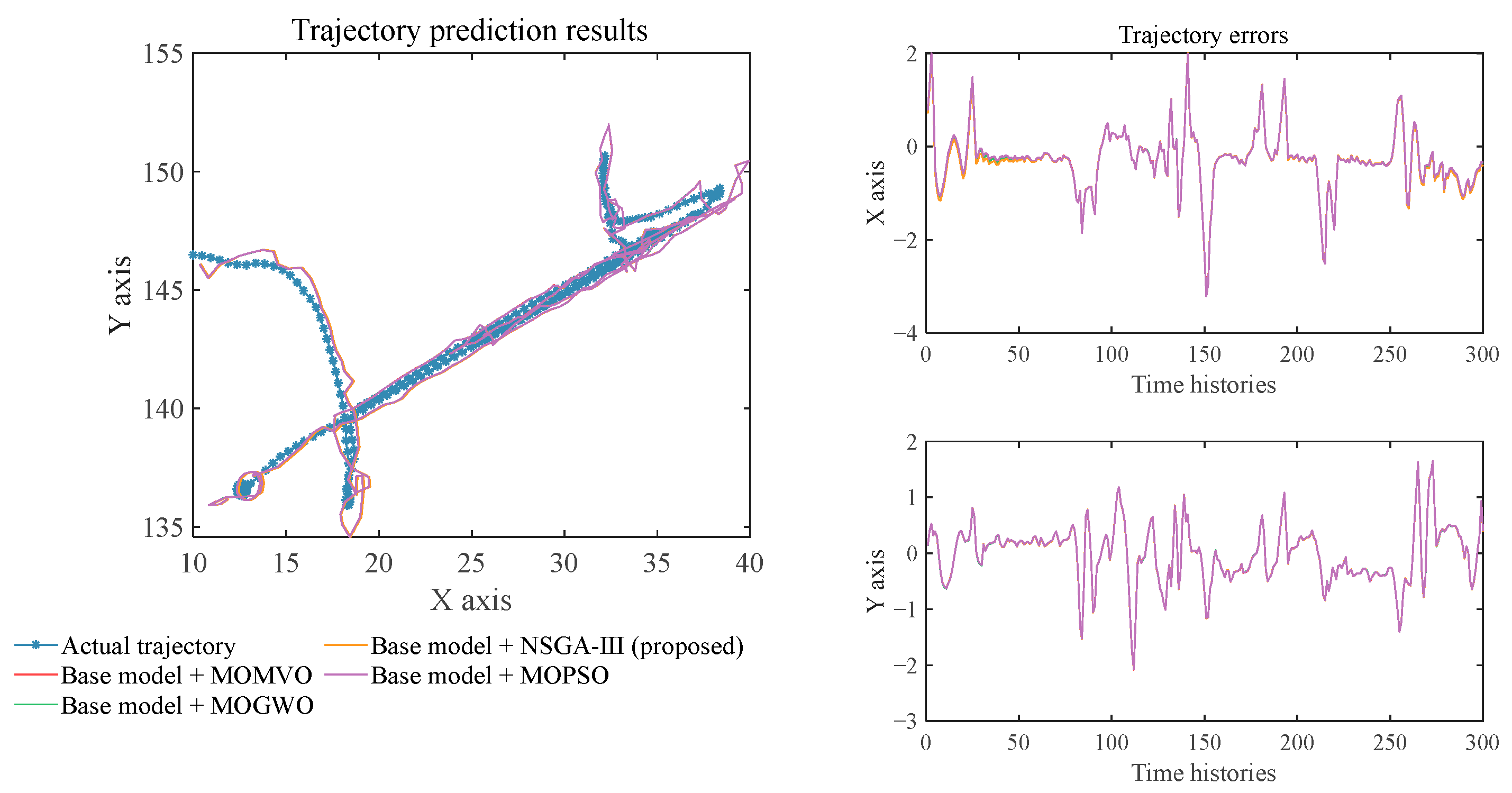

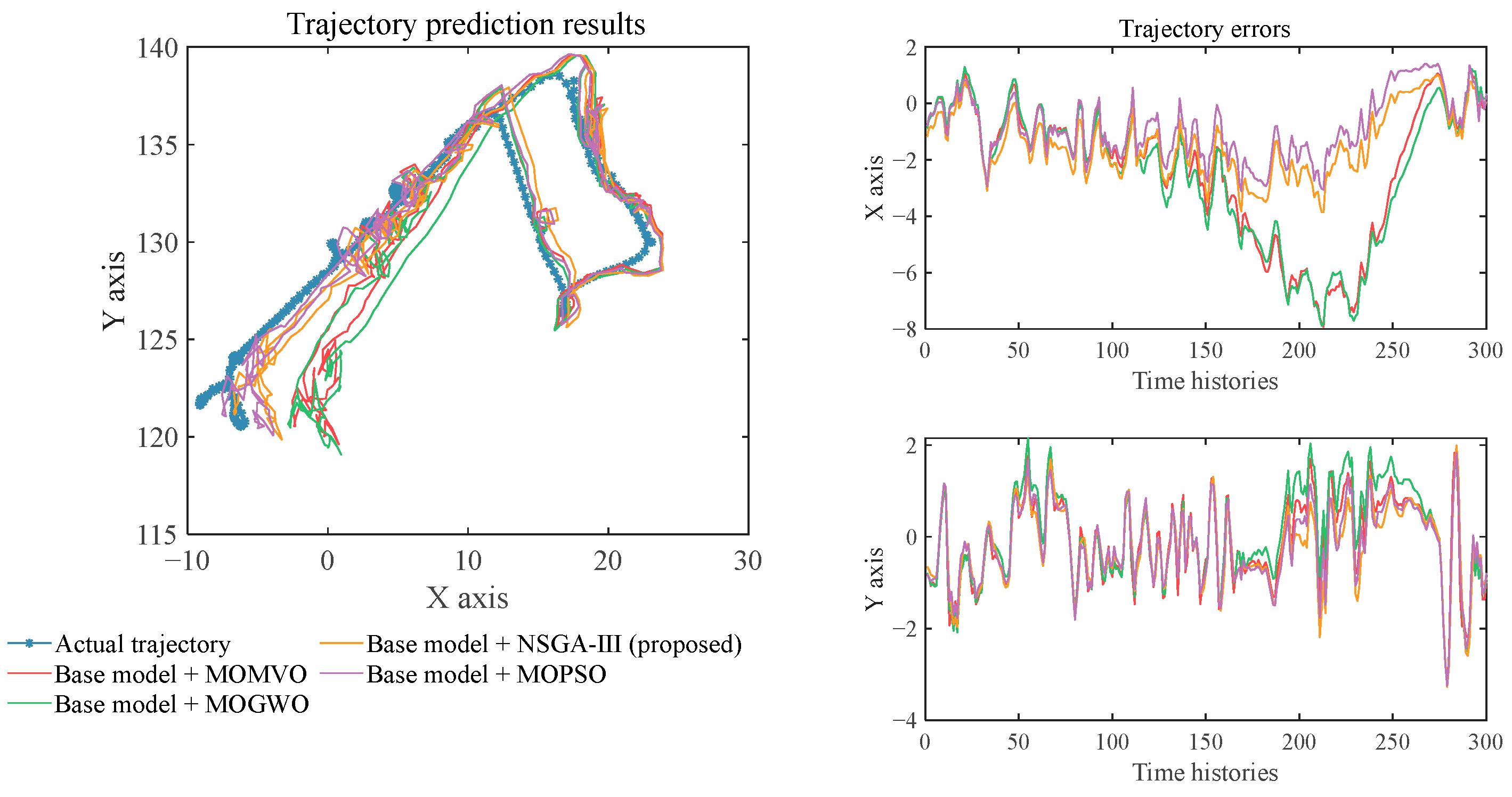

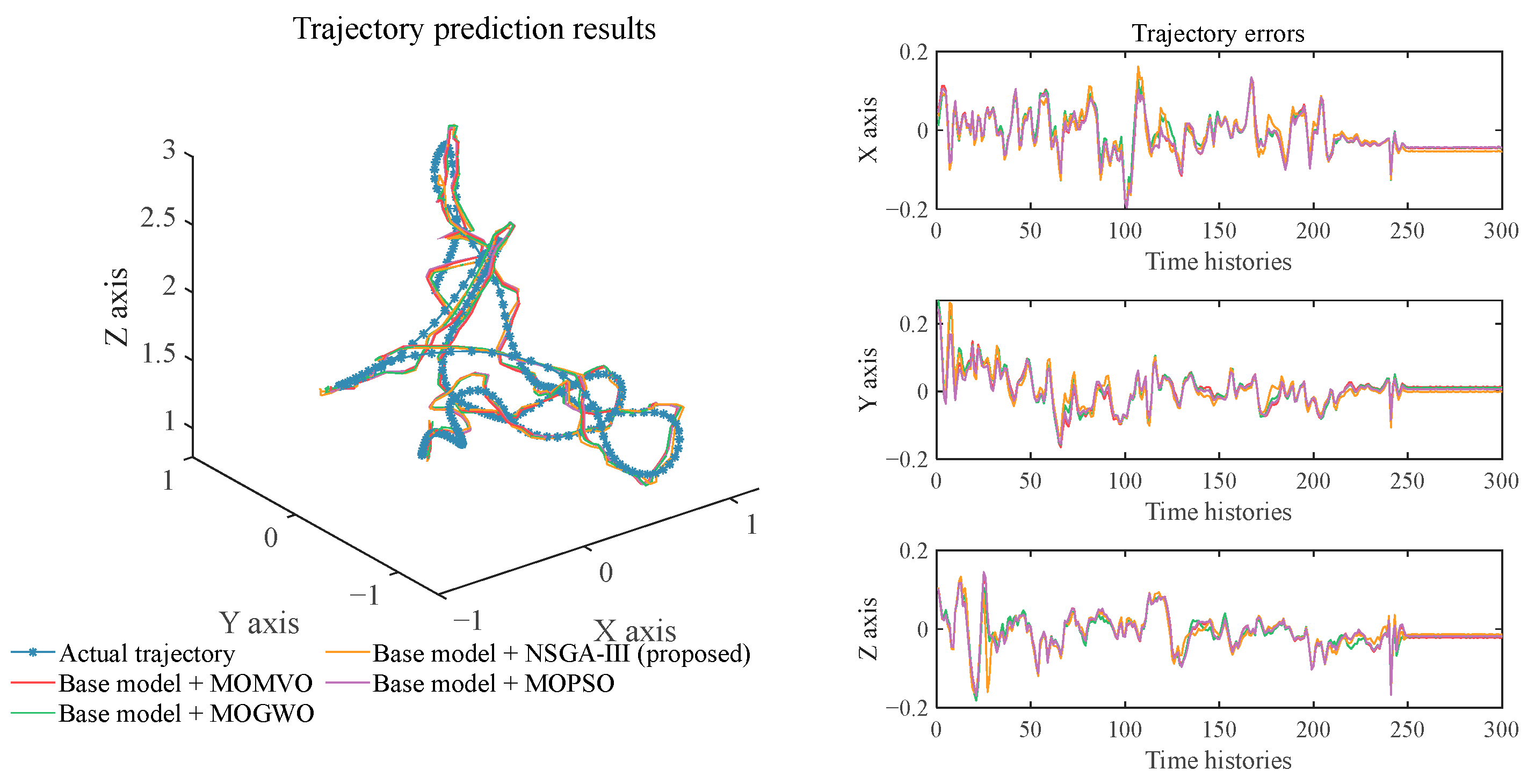

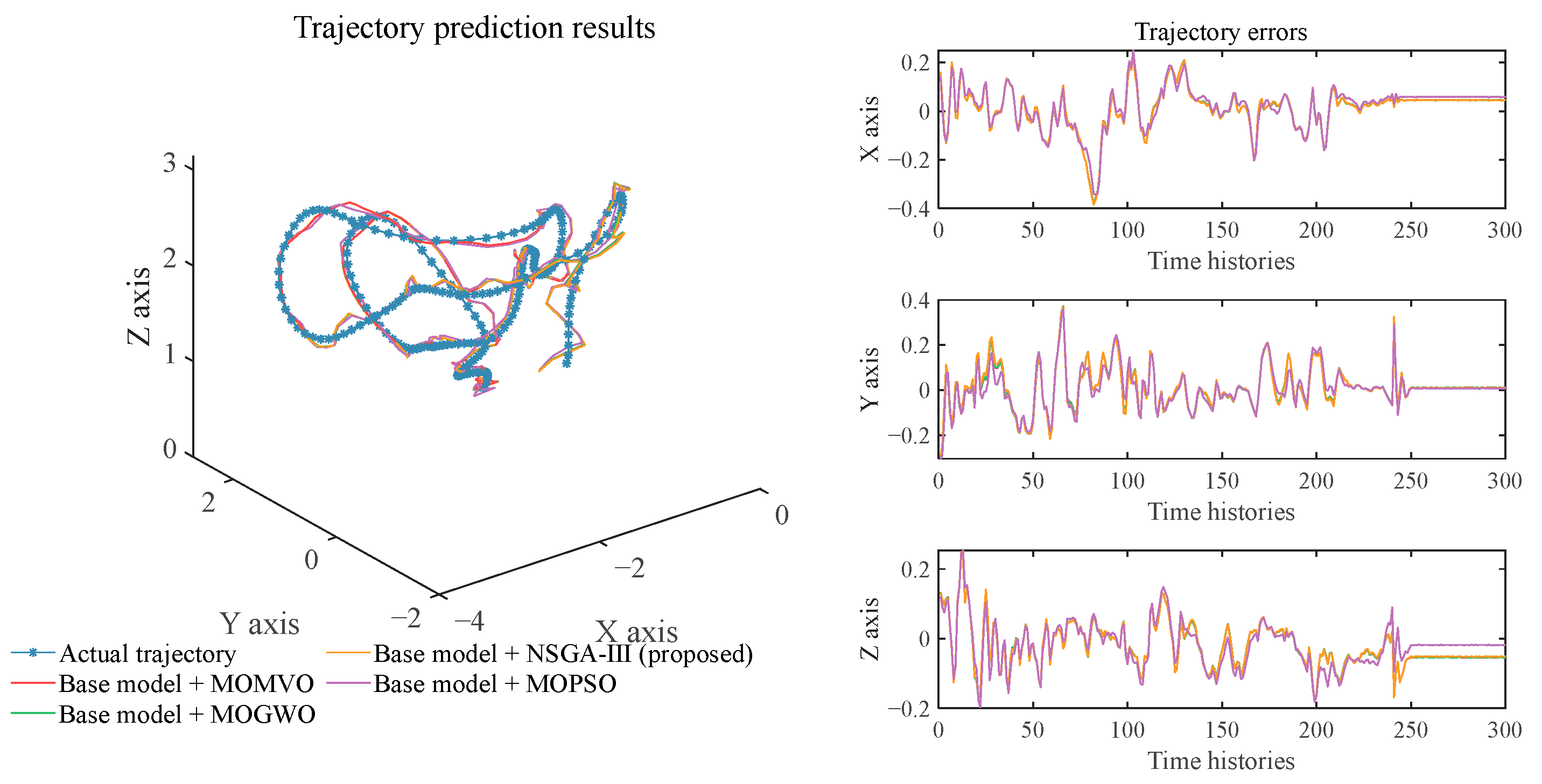

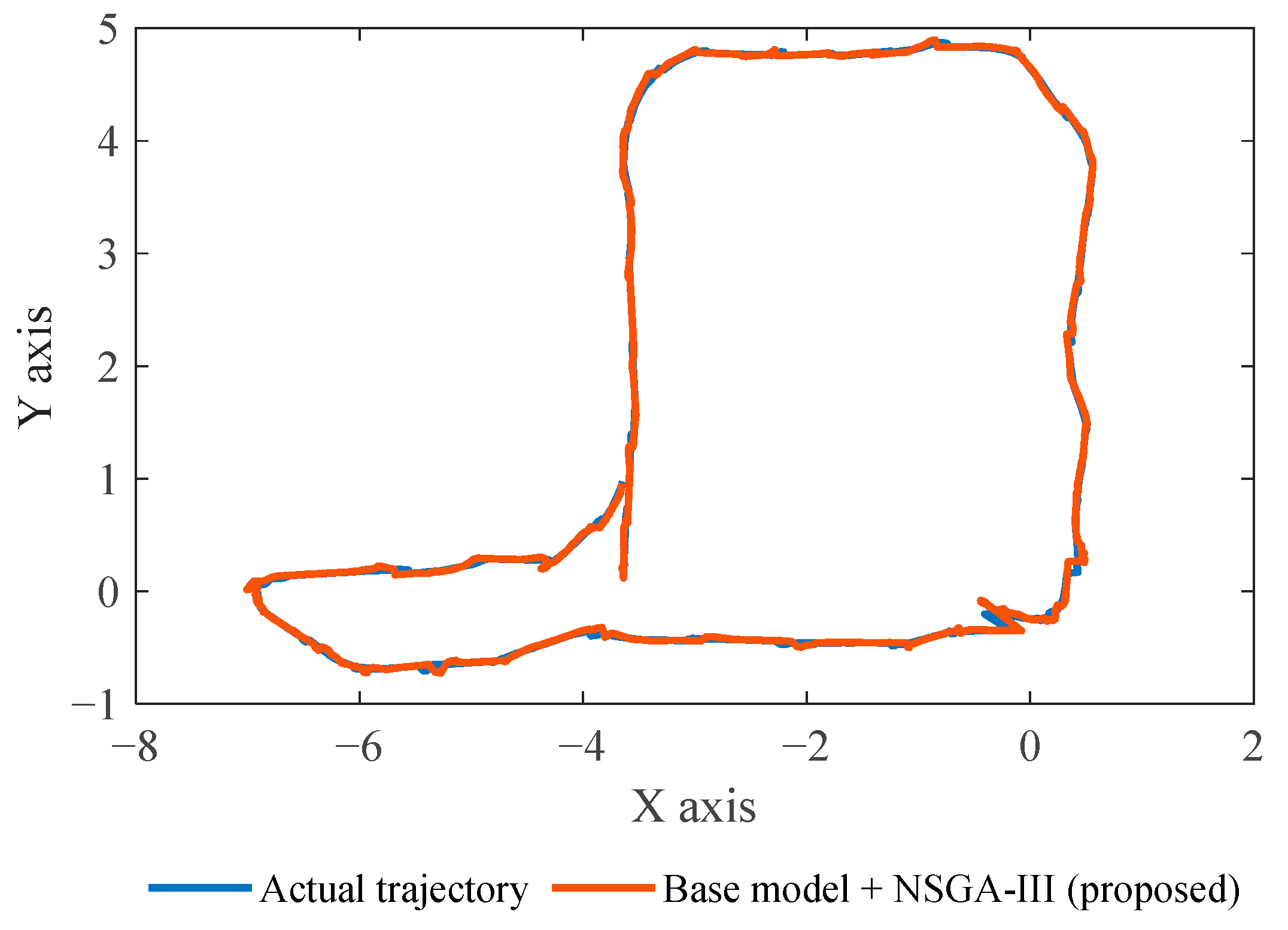

- The multi-objective optimization algorithms are generally effective for ensemble prediction of robot trajectories. On datasets #1, #3, and #4, every ensemble has lower ADE, NLADE, and FDE than the best base learner (DESN); on dataset #2, only the proposed NSGA-III ensemble improves on DESN in all three metrics. Moreover, the trajectories predicted by the optimization-based ensembles are close to the actual robot trajectories, with satisfactory accuracy. This suggests that the comparison ensembles are reasonably configured, which supports a fair evaluation of the proposed model.

- Compared with the other three optimization algorithms, the proposed model achieves the lowest prediction error. Taking dataset #2 as an example, the ADE, NLADE, and FDE are 1.534, 1.534, and 2.794 for MOMVO; 1.663, 1.663, and 2.953 for MOGWO; and 1.103, 1.103, and 1.828 for MOPSO, whereas those of the proposed MMP model are only 0.783, 0.783, and 1.425. This demonstrates the superiority of the proposed model, which achieves the lowest errors on all four datasets.

- Comparing the optimization algorithms across datasets, the differences between them are small on datasets #1, #3, and #4 (for example, ADE ranges only from 0.346 to 0.353 on dataset #1). This may be because the optimization objectives, minimizing bias and variance, define a relatively limited search space and a simple optimization problem, so that different algorithms can all approach the global optimum and produce similar predictions.
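To put these margins in proportion, the relative ADE reduction of NSGA-III over each alternative can be computed directly from the comparison table. The snippet below only re-expresses the reported values for datasets #1 and #2; the helper name is illustrative.

```python
# ADE values copied from the comparison table of optimization algorithms
ADE = {
    "#1": {"MOMVO": 0.349, "MOGWO": 0.349, "MOPSO": 0.353, "NSGA-III": 0.346},
    "#2": {"MOMVO": 1.534, "MOGWO": 1.663, "MOPSO": 1.103, "NSGA-III": 0.783},
}


def relative_reduction(baseline, proposed):
    """Fraction by which `proposed` lowers the error relative to `baseline`."""
    return (baseline - proposed) / baseline


for dataset, scores in ADE.items():
    for name in ("MOMVO", "MOGWO", "MOPSO"):
        r = relative_reduction(scores[name], scores["NSGA-III"])
        print(f"Dataset {dataset}, NSGA-III vs {name}: {100 * r:.1f}% lower ADE")
```

On dataset #2 the reductions are about 29% to 53%, whereas on dataset #1 they are at most about 2%, consistent with the observation that the algorithms differ only slightly outside dataset #2.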

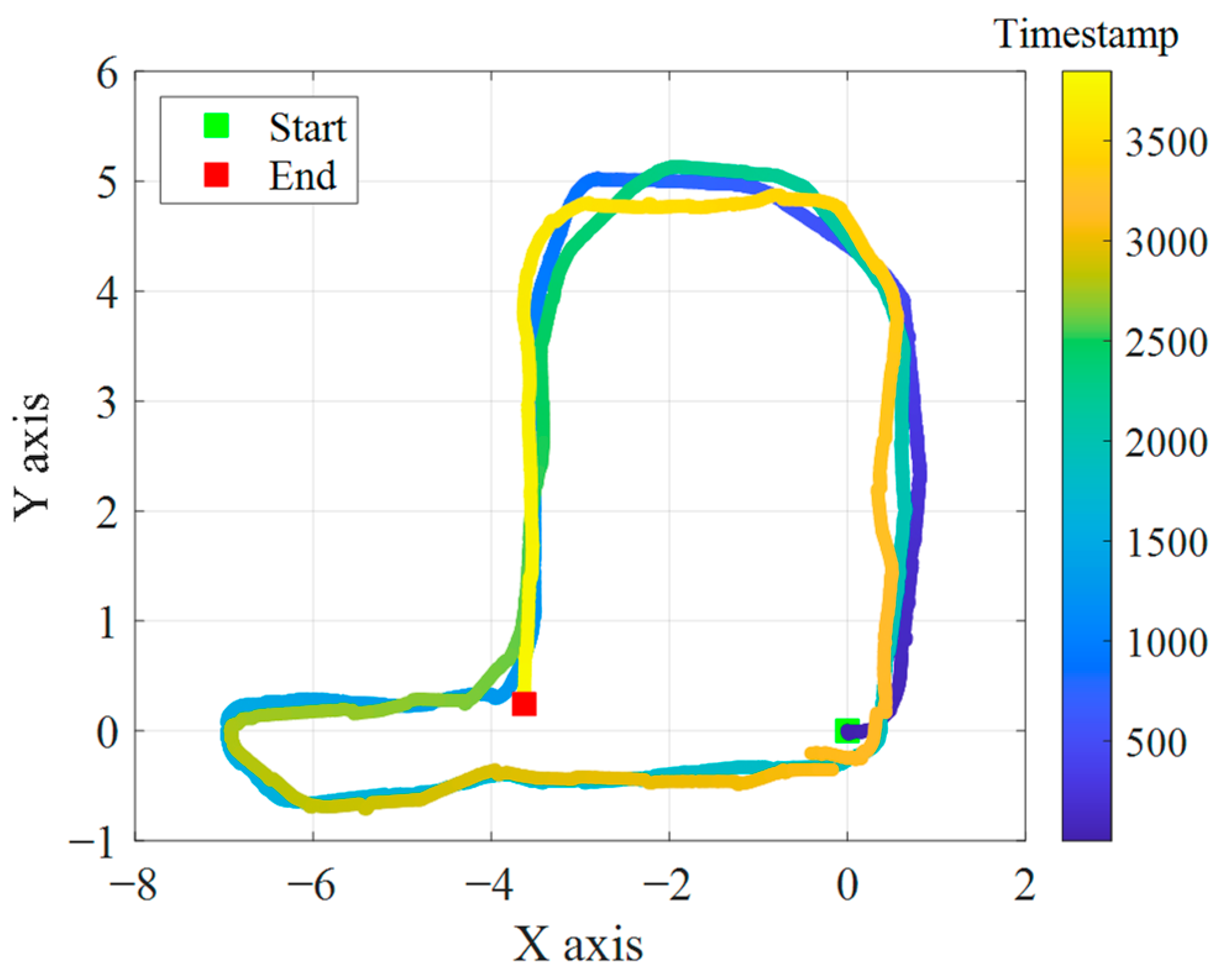

4. Additional Case for Inspection Robot

5. Generalization Analysis

6. Conclusions and Future Works

- The proposed multi-objective ensemble method effectively improves the robot trajectory prediction accuracy of the base learners. Its basic principle is to minimize the bias and variance of the model simultaneously.

- NSGA-III shows superiority in robot trajectory prediction: it significantly outperforms the other optimization algorithms on dataset #2 and slightly outperforms them on datasets #1, #3, and #4.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Al-Okby, M.F.R.; Neubert, S.; Roddelkopf, T.; Thurow, K. Mobile Detection and alarming systems for hazardous gases and volatile chemicals in laboratories and industrial locations. Sensors 2021, 21, 8128.

2. Lee, C.-T.; Sung, W.-T. Controller Design of Tracking WMR system based on deep reinforcement learning. Electronics 2022, 11, 928.

3. Thamrongaphichartkul, K.; Worrasittichai, N.; Prayongrak, T.; Vongbunyong, S. A framework of IoT platform for autonomous mobile robot in hospital logistics applications. In Proceedings of the 2020 15th International Joint Symposium on Artificial Intelligence and Natural Language Processing (iSAI-NLP), Bangkok, Thailand, 18–20 November 2020; pp. 1–6.

4. Patel, A.R.; Azadi, S.; Babaee, M.H.; Mollaei, N.; Patel, K.L.; Mehta, D.R. Significance of robotics in manufacturing, energy, goods and transport sector in internet of things (iot) paradigm. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–4.

5. Zacharia, P.T.; Xidias, E.K. AGV routing and motion planning in a flexible manufacturing system using a fuzzy-based genetic algorithm. Int. J. Adv. Manuf. Technol. 2020, 109, 1801–1813.

6. Diez-Gonzalez, J.; Alvarez, R.; Prieto-Fernandez, N.; Perez, H. Local wireless sensor networks positioning reliability under sensor failure. Sensors 2020, 20, 1426.

7. Chang, L.; Shan, L.; Jiang, C.; Dai, Y. Reinforcement based mobile robot path planning with improved dynamic window approach in unknown environment. Auton. Robot. 2021, 45, 51–76.

8. Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Lotsaris, K.; Bavelos, A.C.; Baris, P.; Michalos, G.; Makris, S. Digital twin for designing and reconfiguring human–robot collaborative assembly lines. Appl. Sci. 2021, 11, 4620.

9. Nabeeh, N.A.; Abdel-Basset, M.; Gamal, A.; Chang, V. Evaluation of production of digital twins based on blockchain technology. Electronics 2022, 11, 1268.

10. He, B.; Bai, K.-J. Digital twin-based sustainable intelligent manufacturing: A review. Adv. Manuf. 2021, 9, 1–21.

11. Havard, V.; Jeanne, B.; Lacomblez, M.; Baudry, D. Digital twin and virtual reality: A co-simulation environment for design and assessment of industrial workstations. Prod. Manuf. Res. 2019, 7, 472–489.

12. Pivarčiová, E.; Božek, P.; Turygin, Y.; Zajačko, I.; Shchenyatsky, A.; Václav, Š.; Císar, M.; Gemela, B. Analysis of control and correction options of mobile robot trajectory by an inertial navigation system. Int. J. Adv. Robot. Syst. 2018, 15, 172988141875516.

13. Duan, Z.; Liu, H.; Lv, X.; Ren, Z.; Junginger, S. Hybrid position forecasting method for mobile robot transportation in smart indoor environment. In Proceedings of the 2019 4th International Conference on Big Data and Computing, New York, NY, USA, 10 May 2019; pp. 333–338.

14. Issa, H.; Tar, J.K. Preliminary design of a receding horizon controller supported by adaptive feedback. Electronics 2022, 11, 1243.

15. Murray, B.; Perera, L.P. A data-driven approach to vessel trajectory prediction for safe autonomous ship operations. In Proceedings of the 2018 Thirteenth International Conference on Digital Information Management (ICDIM), Berlin, Germany, 24–26 September 2018; pp. 240–247.

16. Qiao, S.-J.; Han, N.; Zhu, X.-W.; Shu, H.-P.; Zheng, J.-L.; Yuan, C.-A. A dynamic trajectory prediction algorithm based on Kalman filter. Acta Electron. Sin. 2018, 46, 418.

17. Xing, Y.; Wang, G.; Zhu, Y. Application of an autoregressive moving average approach in flight trajectory simulation. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference, Washington, DC, USA, 13–17 June 2016; p. 3846.

18. Heravi, E.J.; Khanmohammadi, S. Long term trajectory prediction of moving objects using gaussian process. In Proceedings of the 2011 First International Conference on Robot, Vision and Signal Processing, Kaohsiung, Taiwan, 21–23 November 2011; pp. 228–232.

19. Liu, H.; Yang, R.; Duan, Z.; Wu, H. A hybrid neural network model for marine dissolved oxygen concentrations time-series forecasting based on multi-factor analysis and a multi-model ensemble. Engineering 2021, 7, 1751–1765.

20. Yang, R.; Liu, H.; Nikitas, N.; Duan, Z.; Li, Y.; Li, Y. Short-term wind speed forecasting using deep reinforcement learning with improved multiple error correction approach. Energy 2022, 239, 122128.

21. Seker, M.Y.; Tekden, A.E.; Ugur, E. Deep effect trajectory prediction in robot manipulation. Robot. Auton. Syst. 2019, 119, 173–184.

22. Sun, L.; Yan, Z.; Mellado, S.M.; Hanheide, M.; Duckett, T. 3DOF pedestrian trajectory prediction learned from long-term autonomous mobile robot deployment data. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 5942–5948.

23. Zhang, J.; Liu, H.; Chang, Q.; Wang, L.; Gao, R.X. Recurrent neural network for motion trajectory prediction in human-robot collaborative assembly. CIRP Ann. 2020, 69, 9–12.

24. Nikhil, N.; Tran Morris, B. Convolutional neural network for trajectory prediction. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 1–11.

25. Chen, C.; Liu, H. Medium-term wind power forecasting based on multi-resolution multi-learner ensemble and adaptive model selection. Energy Convers. Manag. 2020, 206, 112492.

26. Liu, H.; Yang, R.; Wang, T.; Zhang, L. A hybrid neural network model for short-term wind speed forecasting based on decomposition, multi-learner ensemble, and adaptive multiple error corrections. Renew. Energy 2021, 165, 573–594.

27. Kim, Y.; Hur, J. An ensemble forecasting model of wind power outputs based on improved statistical approaches. Energies 2020, 13, 1071.

28. Liu, H.; Duan, Z.; Chen, C. A hybrid multi-resolution multi-objective ensemble model and its application for forecasting of daily PM2.5 concentrations. Inf. Sci. 2020, 516, 266–292.

29. Ribeiro, M.H.D.M.; dos Santos Coelho, L. Ensemble approach based on bagging, boosting and stacking for short-term prediction in agribusiness time series. Appl. Soft Comput. 2020, 86, 105837.

30. Wang, F.; Li, Y.; Liao, F.; Yan, H. An ensemble learning based prediction strategy for dynamic multi-objective optimization. Appl. Soft Comput. 2020, 96, 106592.

31. Wang, H.; Yang, Z.; Shi, Y. Next location prediction based on an adaboost-markov model of mobile users. Sensors 2019, 19, 1475.

32. Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Pedestrian action anticipation using contextual feature fusion in stacked rnns. arXiv 2020, arXiv:2005.06582.

33. Xu, Y.; Liu, H. Spatial ensemble prediction of hourly PM2.5 concentrations around Beijing railway station in China. Air Qual. Atmos. Health 2020, 13, 563–573.

34. Weisberg, M. Robustness analysis. Philos. Sci. 2006, 73, 730–742.

35. Liu, Y.; Gong, C.; Yang, L.; Chen, Y. DSTP-RNN: A dual-stage two-phase attention-based recurrent neural network for long-term and multivariate time series prediction. Expert Syst. Appl. 2020, 143, 113082.

36. Lin, C.-Y.; Hsieh, Y.-M.; Cheng, F.-T.; Huang, H.-C.; Adnan, M. Time series prediction algorithm for intelligent predictive maintenance. IEEE Robot. Autom. Lett. 2019, 4, 2807–2814.

37. Chen, W.; Xu, H.; Chen, Z.; Jiang, M. A novel method for time series prediction based on error decomposition and nonlinear combination of forecasters. Neurocomputing 2021, 426, 85–103.

38. Zhang, Y.; Wang, X.; Tang, H. An improved Elman neural network with piecewise weighted gradient for time series prediction. Neurocomputing 2019, 359, 199–208.

39. Wang, Z.; Yao, X.; Huang, Z.; Liu, L. Deep echo state network with multiple adaptive reservoirs for time series prediction. IEEE Trans. Cogn. Dev. Syst. 2021, 13, 693–704.

40. Yan, B.; Aasma, M. A novel deep learning framework: Prediction and analysis of financial time series using CEEMD and LSTM. Expert Syst. Appl. 2020, 159, 113609.

41. Zhou, Z. Ensemble Methods: Foundations and Algorithms; Taylor & Francis, Chapman and Hall/CRC: New York, NY, USA, 2012.

42. Deb, K.; Jain, H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: Solving problems with box constraints. IEEE Trans. Evol. Comput. 2013, 18, 577–601.

43. Das, I.; Dennis, J.E. Normal-boundary intersection: A new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J. Optim. 1998, 8, 631–657.

44. Taieb, S.B.; Atiya, A.F. A bias and variance analysis for multistep-ahead time series forecasting. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 62–76.

45. Wang, C.; Zhang, S.; Xiao, L.; Fu, T. Wind speed forecasting based on multi-objective grey wolf optimisation algorithm, weighted information criterion, and wind energy conversion system: A case study in Eastern China. Energy Convers. Manag. 2021, 243, 114402.

46. Liu, H.; Yang, R. A spatial multi-resolution multi-objective data-driven ensemble model for multi-step air quality index forecasting based on real-time decomposition. Comput. Ind. 2021, 125, 103387.

47. Wang, J.; Wang, R.; Li, Z. A combined forecasting system based on multi-objective optimization and feature extraction strategy for hourly PM2.5 concentration. Appl. Soft Comput. 2022, 114, 108034.

48. Felfel, H.; Ayadi, O.; Masmoudi, F. Pareto optimal solution selection for a multi-site supply chain planning problem using the VIKOR and TOPSIS methods. Int. J. Serv. Sci. Manag. Eng. Technol. 2017, 8, 21–39.

49. Taleizadeh, A.A.; Niaki, S.T.A.; Aryanezhad, M.-B. A hybrid method of Pareto, TOPSIS and genetic algorithm to optimize multi-product multi-constraint inventory control systems with random fuzzy replenishments. Math. Comput. Model. 2009, 49, 1044–1057.

50. Wang, D.; Li, S. Lightweight optimization design of side collision safety parts for BIW based on pareto mining. China Mech. Eng. 2021, 32, 1584.

51. Saeedi, S.; Carvalho, E.D.C.; Li, W.; Tzoumanikas, D.; Leutenegger, S.; Kelly, P.H.J.; Davison, A.J. Characterizing visual localization and mapping datasets. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6699–6705.

52. Zhang, G.; Ouyang, R.; Lu, B.; Hocken, R.; Veale, R.; Donmez, A. A displacement method for machine geometry calibration. CIRP Ann. 1988, 37, 515–518.

53. Huang, C.-H. A non-linear inverse vibration problem of estimating the external forces for a system with displacement-dependent parameters. J. Sound Vib. 2001, 248, 789–807.

54. Keil, C.; Craig, G.C. A displacement-based error measure applied in a regional ensemble forecasting system. Mon. Weather. Rev. 2007, 135, 3248–3259.

55. Mirjalili, S.; Jangir, P.; Mirjalili, S.Z.; Saremi, S.; Trivedi, I.N. Optimization of problems with multiple objectives using the multi-verse optimization algorithm. Knowl. Based Syst. 2017, 134, 50–71.

56. Mirjalili, S.; Saremi, S.; Mirjalili, S.M.; Coelho, L.d.S. Multi-objective grey wolf optimizer: A novel algorithm for multi-criterion optimization. Expert Syst. Appl. 2016, 47, 106–119.

57. Mostaghim, S.; Teich, J. Strategies for finding good local guides in multi-objective particle swarm optimization (MOPSO). In Proceedings of the 2003 IEEE Swarm Intelligence Symposium. SIS’03 (Cat. No.03EX706), Indianapolis, IN, USA, 26–26 April 2003; pp. 26–33.

58. Tao, T. Research on intelligent robot patrol route based on cloud computing. In Proceedings of the 2019 International Conference on Computer Network, Electronic and Automation (ICCNEA), Xi’an, China, 27–29 September 2019; pp. 511–516.

59. Liu, H. Robot Systems for Rail Transit Applications; Elsevier: Amsterdam, Netherlands, 2020.

60. Bendre, M.; Manthalkar, R. Time series decomposition and predictive analytics using MapReduce framework. Expert Syst. Appl. 2019, 116, 108–120.

61. Zaharia, M.; Xin, R.S.; Wendell, P.; Das, T.; Armbrust, M.; Dave, A.; Meng, X.; Rosen, J.; Venkataraman, S.; Franklin, M.J. Apache spark: A unified engine for big data processing. Commun. ACM 2016, 59, 56–65.

62. Meng, X.; Bradley, J.; Yavuz, B.; Sparks, E.; Venkataraman, S.; Liu, D.; Freeman, J.; Tsai, D.; Amde, M.; Owen, S. Mllib: Machine learning in apache spark. J. Mach. Learn. Res. 2016, 17, 1235–1241.

| Dataset | Model | ADE | NLADE | FDE |
|---|---|---|---|---|
| #1 | ARMA | 0.455 | 0.455 | 0.904 |
| | MLP | 0.580 | 0.580 | 1.084 |
| | ENN | 0.940 | 0.940 | 1.262 |
| | DESN | 0.364 | 0.364 | 0.730 |
| | LSTM | 1.160 | 1.160 | 1.954 |
| #2 | ARMA | 1.485 | 1.485 | 2.487 |
| | MLP | 2.433 | 2.433 | 2.652 |
| | ENN | 4.879 | 4.879 | 7.583 |
| | DESN | 1.301 | 1.301 | 1.545 |
| | LSTM | 1.635 | 1.635 | 2.170 |
| #3 | ARMA | 0.063 | 0.063 | 0.142 |
| | MLP | 0.060 | 0.060 | 0.116 |
| | ENN | 0.034 | 0.034 | 0.079 |
| | DESN | 0.033 | 0.033 | 0.076 |
| | LSTM | 0.064 | 0.064 | 0.123 |
| #4 | ARMA | 0.096 | 0.097 | 0.216 |
| | MLP | 0.114 | 0.114 | 0.198 |
| | ENN | 0.071 | 0.071 | 0.165 |
| | DESN | 0.057 | 0.057 | 0.129 |
| | LSTM | 0.104 | 0.105 | 0.202 |

| Dataset | Optimization Method | ADE | NLADE | FDE |
|---|---|---|---|---|
| #1 | VIKOR | 0.346 | 0.346 | 0.685 |
| | TOPSIS | 0.347 | 0.347 | 0.687 |
| | Gray correlation | 0.347 | 0.347 | 0.688 |
| #2 | VIKOR | 0.783 | 0.783 | 1.425 |
| | TOPSIS | 0.946 | 0.946 | 1.700 |
| | Gray correlation | 0.916 | 0.916 | 1.668 |
| #3 | VIKOR | 0.032 | 0.032 | 0.073 |
| | TOPSIS | 0.029 | 0.029 | 0.068 |
| | Gray correlation | 0.029 | 0.029 | 0.068 |
| #4 | VIKOR | 0.052 | 0.052 | 0.119 |
| | TOPSIS | 0.052 | 0.052 | 0.119 |
| | Gray correlation | 0.051 | 0.051 | 0.118 |

| Dataset | Model | ADE | NLADE | FDE |
|---|---|---|---|---|
| #1 | Base model + MOMVO | 0.349 | 0.349 | 0.692 |
| | Base model + MOGWO | 0.349 | 0.349 | 0.693 |
| | Base model + MOPSO | 0.353 | 0.353 | 0.701 |
| | Base model + NSGA-III (proposed) | 0.346 | 0.346 | 0.685 |
| #2 | Base model + MOMVO | 1.534 | 1.534 | 2.794 |
| | Base model + MOGWO | 1.663 | 1.663 | 2.953 |
| | Base model + MOPSO | 1.103 | 1.103 | 1.828 |
| | Base model + NSGA-III (proposed) | 0.783 | 0.783 | 1.425 |
| #3 | Base model + MOMVO | 0.032 | 0.032 | 0.075 |
| | Base model + MOGWO | 0.032 | 0.032 | 0.074 |
| | Base model + MOPSO | 0.032 | 0.032 | 0.075 |
| | Base model + NSGA-III (proposed) | 0.029 | 0.029 | 0.068 |
| #4 | Base model + MOMVO | 0.056 | 0.056 | 0.126 |
| | Base model + MOGWO | 0.056 | 0.056 | 0.126 |
| | Base model + MOPSO | 0.056 | 0.056 | 0.126 |
| | Base model + NSGA-III (proposed) | 0.051 | 0.051 | 0.118 |

| Model | ADE | NLADE | FDE |
|---|---|---|---|
| ARMA | 0.034 | 0.035 | 0.055 |
| MLP | 0.028 | 0.028 | 0.035 |
| ENN | 0.023 | 0.023 | 0.033 |
| DESN | 0.022 | 0.022 | 0.031 |
| LSTM | 0.038 | 0.038 | 0.055 |
| Base model + MOMVO | 0.023 | 0.023 | 0.031 |
| Base model + MOGWO | 0.023 | 0.023 | 0.032 |
| Base model + MOPSO | 0.022 | 0.022 | 0.031 |
| Base model + NSGA-III (proposed) | 0.021 | 0.021 | 0.030 |

| Optimization algorithm | Dataset #1 | Dataset #2 | Dataset #3 | Dataset #4 |
|---|---|---|---|---|
| NSGA-III | 138.71 s | 143.53 s | 189.22 s | 177.99 s |
| MOMVO | 141.11 s | 146.78 s | 188.15 s | 180.31 s |
| MOGWO | 145.62 s | 151.25 s | 193.43 s | 184.94 s |
| MOPSO | 139.77 s | 145.83 s | 190.66 s | 182.81 s |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, F.; Zheng, L.; Duan, Z.; Xia, Y. Multi-Objective Multi-Learner Robot Trajectory Prediction Method for IoT Mobile Robot Systems. Electronics 2022, 11, 2094. https://doi.org/10.3390/electronics11132094
