Computer Vision Meets Educational Robotics

Abstract

1. Introduction

- Improve problem-solving skills by helping students understand difficult concepts more easily, conduct research, and make decisions.

- Increase self-efficacy: The machine’s natural handling promotes experimentation, discovery, and rejection and, consequently, enhances students’ self-confidence because they feel that they control the machine. This also strengthens students’ critical thinking.

- Improve computational thinking: Students acquire algorithmic thinking, breaking a large problem down into smaller ones and then solving them. Students learn how to focus on important information and discard irrelevant information.

- Increase creativity through learning by play, which transmits knowledge in a more playful form. Learning turns into a fun activity and becomes more attractive and interesting for the student.

- Increase motivation, as educational robotics enables students to engage with and persist at a particular activity.

- Improve collaboration, as team spirit and cooperation among students are promoted.

Motivation

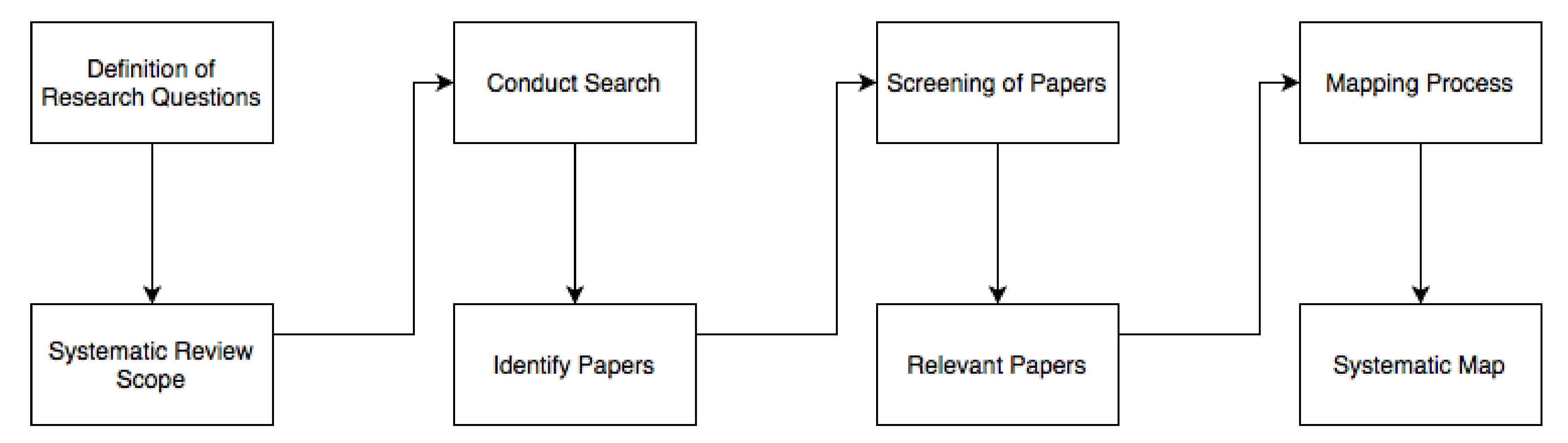

2. Research Methodology

2.1. Definition of Research Questions

- RQ1: What is the role of computer vision in educational robotics? The first research question helps the reader identify current research on computer vision in educational robotics and attempts to answer how computer vision can be used during the learning process.

- RQ2: How does computer vision benefit educational robotics’ expected learning outcomes in K-12 education? The second research question revisits educational robotics’ expected learning outcomes and investigates how computer vision benefits K-12 education.

- RQ3: How affordable and feasible is the integration of solutions that combine educational robotics and computer vision in K-12 instructional activities? The third research question aims to reveal whether integrating computer vision into educational robotics activities can be justified from a cost–benefit perspective. It also investigates the ease of access to, and the availability of, the required tools.

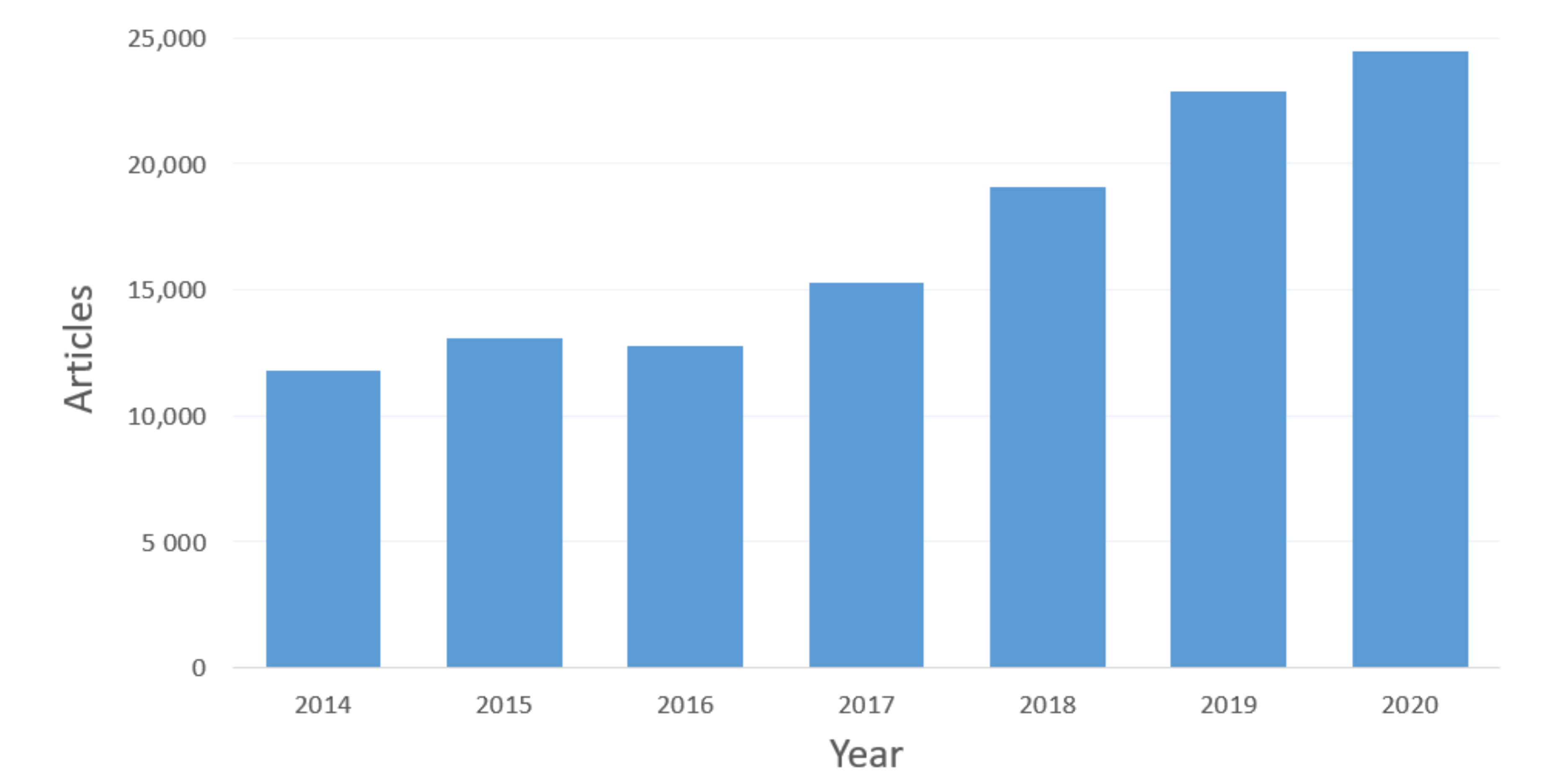

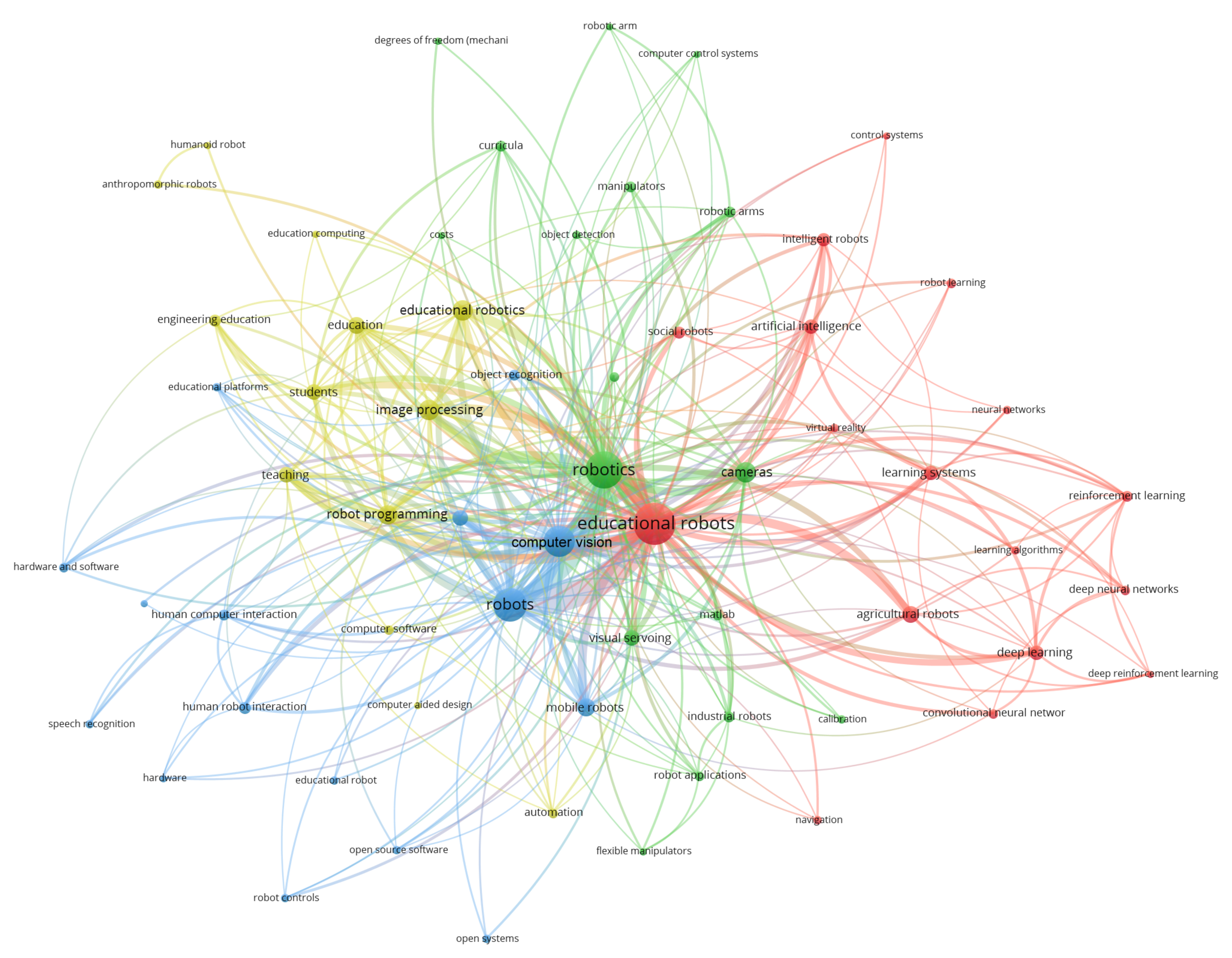

2.2. Search Approach

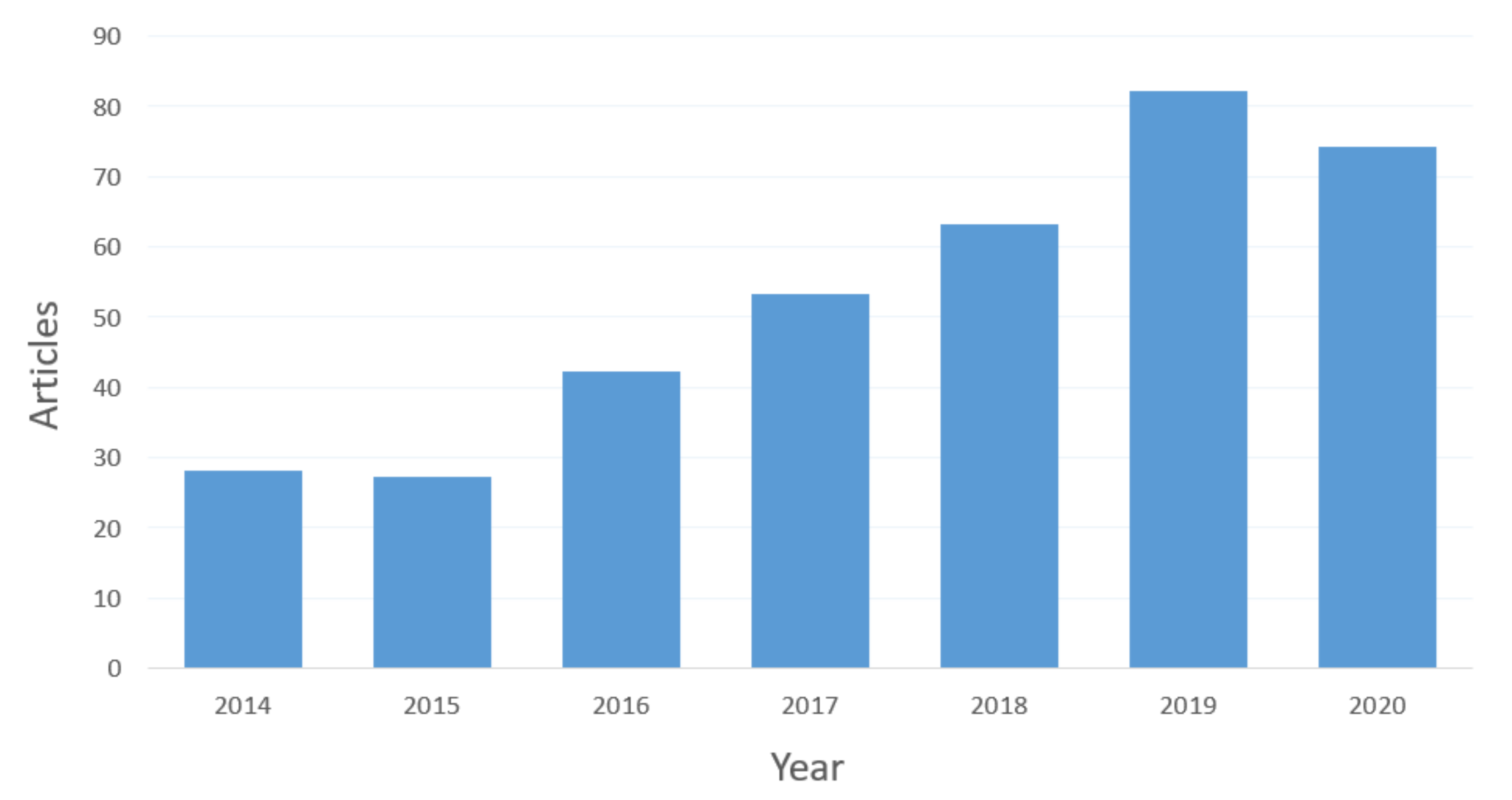

2.3. Screening of Relevant Papers

- I.C.1: Articles that present the use of computer vision in schools along with experimental outcomes.

- I.C.2: Articles that outline computer vision as an educational tool from the pre-school to the high school context.

- I.C.3: Articles that present computer vision as an assistive tool to support the educational process from pre-school to the high school context.

- E.C.1: Articles that did not mention the use of computer vision in educational robotics.

- E.C.2: Articles that outline the positive effects of computer vision in education but do not provide experimental results.

- E.C.3: Articles that are related to higher education.

- E.C.4: Articles that mention the use of robotics in education without utilizing computer vision techniques.

- E.C.5: Theses, books, or annual reports.

- E.C.6: Articles that describe teachers’ efforts on educational robotics.

- E.C.7: Articles that were not written in English.

- E.C.8: Articles published before 2014.
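The exclusion criteria above can be read as a set of independent checks applied to each candidate article. The following is a minimal sketch of that reading, not the authors' actual screening tool: the `Article` record, its field names, and the way each criterion is encoded as a predicate are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    """Hypothetical metadata record for one candidate paper."""
    title: str
    year: int
    language: str
    kind: str                      # e.g. "article", "thesis", "book", "annual report"
    education_level: str           # e.g. "pre-school", "primary", "secondary", "higher"
    uses_computer_vision: bool
    uses_robotics: bool
    has_experimental_results: bool
    focuses_on_teacher_efforts: bool = False


# One predicate per exclusion criterion; each encoding is an assumed
# interpretation of the wording in the list above.
EXCLUSION_RULES = [
    ("E.C.1", lambda a: not (a.uses_computer_vision and a.uses_robotics)),
    ("E.C.2", lambda a: not a.has_experimental_results),
    ("E.C.3", lambda a: a.education_level == "higher"),
    ("E.C.4", lambda a: a.uses_robotics and not a.uses_computer_vision),
    ("E.C.5", lambda a: a.kind in {"thesis", "book", "annual report"}),
    ("E.C.6", lambda a: a.focuses_on_teacher_efforts),
    ("E.C.7", lambda a: a.language != "English"),
    ("E.C.8", lambda a: a.year < 2014),
]


def exclusion_codes(article: Article) -> list[str]:
    """Return the codes of every exclusion criterion the article triggers."""
    return [code for code, rule in EXCLUSION_RULES if rule(article)]


def is_included(article: Article) -> bool:
    """An article passes screening only if no exclusion criterion applies."""
    return not exclusion_codes(article)


kept = Article("CV robot tutor for pre-schoolers", 2019, "English", "article",
               "pre-school", True, True, True)
dropped = Article("Robotics in a university lab", 2012, "English", "thesis",
                  "higher", False, True, False)

print(is_included(kept))
print(exclusion_codes(dropped))
```

Keeping one rule per criterion makes it possible to report why a paper was dropped, which is useful when the screening counts have to be documented.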

2.4. Mapping Process

3. Analyzing the Literature

3.1. What Is the Role of Computer Vision in Educational Robotics?

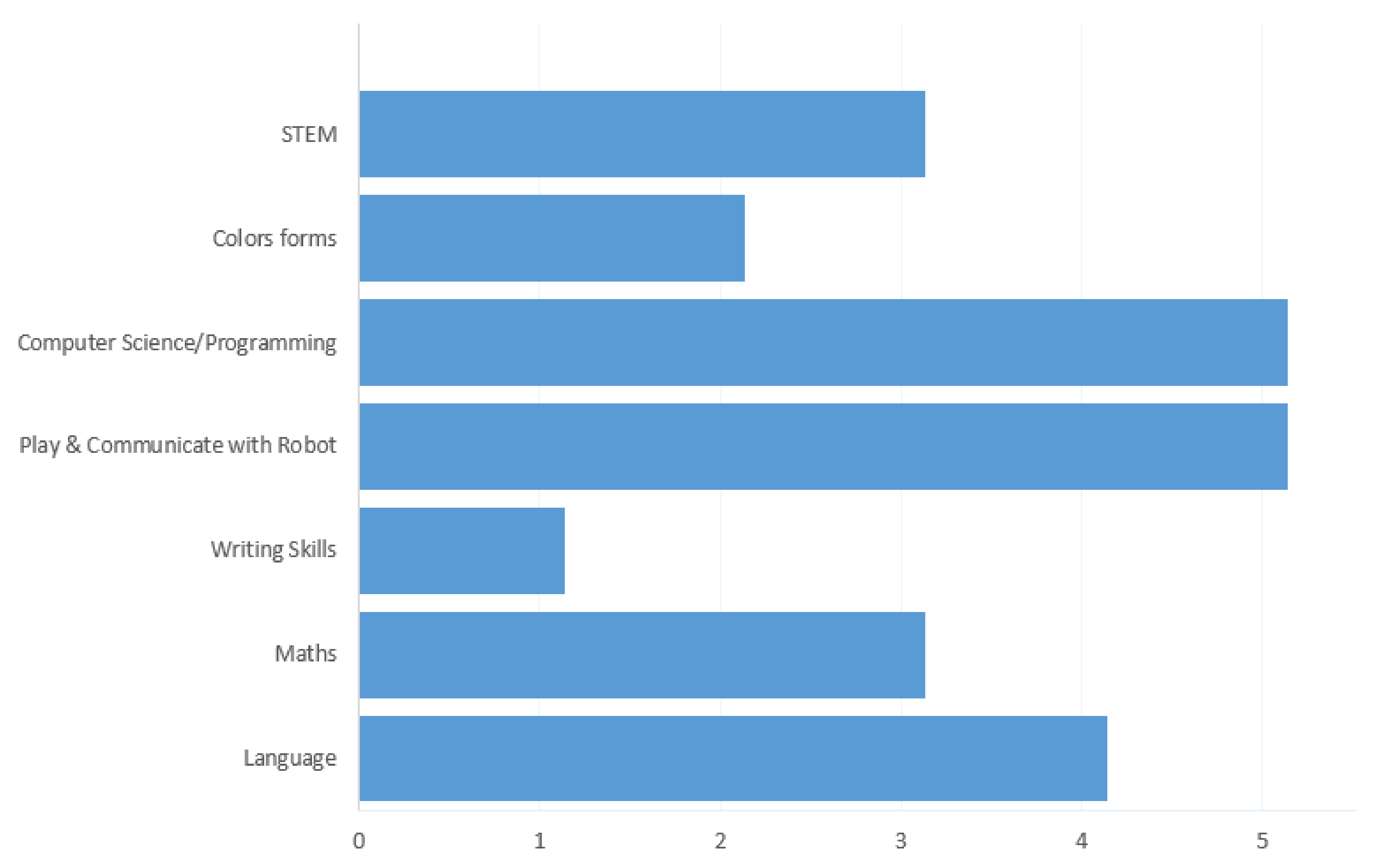

3.2. How Does Computer Vision Benefit Educational Robotics’ Expected Learning Outcomes in K-12 Education?

3.3. How Affordable and Feasible Is the Integration of Solutions That Combine Educational Robotics and Computer Vision in K-12 Instructional Activities?

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Goswami, S.; Uddin, M.S.; Islam, M.R. Implementation of Active Learning for ICT Education in Schools. Int. J. Innov. Sci. Res. Technol. 2020, 5, 455–459.

- Hegedus, S.; Moreno-Armella, L. Information and communication technology (ICT) affordances in mathematics education. Encycl. Math. Educ. 2020, 380–384.

- Qi, Y.; Pan, Z.; Zhang, S.; van den Hengel, A.; Wu, Q. Object-and-Action Aware Model for Visual Language Navigation. arXiv 2020, arXiv:2007.14626.

- Hong, Y.; Wu, Q.; Qi, Y.; Rodriguez-Opazo, C.; Gould, S. A Recurrent Vision-and-Language BERT for Navigation. arXiv 2020, arXiv:2011.13922.

- Scaramuzza, D.; Achtelik, M.; Doitsidis, L.; Fraundorfer, F.; Kosmatopoulos, E.B.; Martinelli, A.; Achtelik, M.W.; Chli, M.; Chatzichristofis, S.A.; Kneip, L.; et al. Vision-Controlled Micro Flying Robots: From System Design to Autonomous Navigation and Mapping in GPS-Denied Environments. IEEE Robot. Autom. Mag. 2014, 21, 26–40.

- Kouskouridas, R.; Amanatiadis, A.; Chatzichristofis, S.A.; Gasteratos, A. What, Where and How? Introducing pose manifolds for industrial object manipulation. Expert Syst. Appl. 2015, 42, 8123–8133.

- Evripidou, S.; Georgiou, K.; Doitsidis, L.; Amanatiadis, A.A.; Zinonos, Z.; Chatzichristofis, S.A. Educational Robotics: Platforms, Competitions and Expected Learning Outcomes. IEEE Access 2020, 8, 219534–219562.

- Benitti, F.B.V. Exploring the educational potential of robotics in schools: A systematic review. Comput. Educ. 2012, 58, 978–988.

- Toh, L.P.E.; Causo, A.; Tzuo, P.W.; Chen, I.M.; Yeo, S.H. A review on the use of robots in education and young children. J. Educ. Technol. Soc. 2016, 19, 148–163.

- Piaget, J. Part I: Cognitive development in children: Piaget development and learning. J. Res. Sci. Teach. 1964, 2, 176–186.

- Majgaard, G. Multimodal robots as educational tools in primary and lower secondary education. In Proceedings of the International Conferences Interfaces and Human Computer Interaction, Las Palmas de Gran Canaria, Spain, 22–24 July 2015; pp. 27–34.

- Shoikova, E.; Nikolov, R.; Kovatcheva, E. Conceptualizing of Smart Education. Electrotech. Electron. E+ E 2017, 52.

- Savov, T.; Terzieva, V.; Todorova, K. Computer Vision and Internet of Things: Attention System in Educational Context. In Proceedings of the 19th International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 13–14 September 2018; pp. 171–177.

- Zhong, B.; Xia, L. A systematic review on exploring the potential of educational robotics in mathematics education. Int. J. Sci. Math. Educ. 2020, 18, 79–101.

- Angel-Fernandez, J.M.; Vincze, M. Towards a definition of educational robotics. In Proceedings of the Austrian Robotics Workshop, Innsbruck, Austria, 17–18 May 2018; p. 37.

- Gabriele, L.; Tavernise, A.; Bertacchini, F. Active learning in a robotics laboratory with university students. In Increasing Student Engagement and Retention Using Immersive Interfaces: Virtual Worlds, Gaming, and Simulation; Emerald Group Publishing Limited: West Yorkshire, UK, 2012.

- Misirli, A.; Komis, V. Robotics and programming concepts in Early Childhood Education: A conceptual framework for designing educational scenarios. In Research on e-Learning and ICT in Education; Springer: New York, NY, USA, 2014; pp. 99–118.

- Alimisis, D. Educational robotics: Open questions and new challenges. Themes Sci. Technol. Educ. 2013, 6, 63–71.

- Mubin, O.; Stevens, C.; Shahid, S.; Mahmud, A.; Jian-Jie, D. A Review of the Applicability of Robots in Education. Available online: http://roila.org/wp-content/uploads/2013/07/209-0015.pdf (accessed on 1 January 2013).

- Jin, L.; Tan, F.; Jiang, S. Generative Adversarial Network Technologies and Applications in Computer Vision. Comput. Intell. Neurosci. 2020, 2020, 1459107.

- Klette, R. Concise Computer Vision; Springer: Cham, Switzerland, 2014.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer Science & Business Media: Cham, Switzerland, 2010.

- Boronski, T.; Hassan, N. Sociology of Education; SAGE Publications Limited: London, UK, 2020.

- Bebis, G.; Egbert, D.; Shah, M. Review of computer vision education. IEEE Trans. Educ. 2003, 46, 2–21.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Petersen, K.; Feldt, R.; Mujtaba, S.; Mattsson, M. Systematic mapping studies in software engineering. In Proceedings of the 12th International Conference on Evaluation and Assessment in Software Engineering (EASE), Bari, Italy, 26–27 June 2008; pp. 1–10.

- Yli-Huumo, J.; Ko, D.; Choi, S.; Park, S.; Smolander, K. Where is current research on blockchain technology?—A systematic review. PLoS ONE 2016, 11, e0163477.

- Altin, H.; Aabloo, A.; Anbarjafari, G. New era for educational robotics: Replacing teachers with a robotic system to teach alphabet writing. In Proceedings of the 4th International Workshop Teaching Robotics, Teaching with Robotics & 5th International Conference Robotics in Education, Padova, Italy, 18 July 2014; pp. 164–166.

- Wu, Q.; Wang, S.; Cao, J.; He, B.; Yu, C.; Zheng, J. Object recognition-based second language learning educational robot system for Chinese preschool children. IEEE Access 2019, 7, 7301–7312.

- Madhyastha, M.; Jayagopi, D.B. A low cost personalised robot language tutor with perceptual and interaction capabilities. In Proceedings of the 2016 IEEE Annual India Conference (INDICON), Bangalore, India, 16–18 December 2016; pp. 1–5.

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM international conference on Multimedia, Mountain View, CA, USA, 18–19 June 2014; pp. 675–678.

- Kusumota, V.; Aroca, R.; Martins, F. An Open Source Framework for Educational Applications Using Cozmo Mobile Robot. In Proceedings of the 2018 Latin American Robotic Symposium, 2018 Brazilian Symposium on Robotics (SBR) and 2018 Workshop on Robotics in Education (WRE), João Pessoa, Brazil, 6–10 November 2018; pp. 569–576.

- Rios, M.L.; Netto, J.F.d.M.; Almeida, T.O. Computational vision applied to the monitoring of mobile robots in educational robotic scenarios. In Proceedings of the 2017 IEEE Frontiers in Education Conference (FIE), Indianapolis, IN, USA, 18–21 October 2017; pp. 1–7.

- He, B.; Xia, M.; Yu, X.; Jian, P.; Meng, H.; Chen, Z. An educational robot system of visual question answering for preschoolers. In Proceedings of the 2017 2nd International Conference on Robotics and Automation Engineering (ICRAE), Shanghai, China, 29–31 December 2017; pp. 441–445.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Amanatiadis, A.; Kaburlasos, V.G.; Dardani, C.; Chatzichristofis, S.A. Interactive social robots in special education. In Proceedings of the 2017 IEEE 7th International Conference on Consumer Electronics-Berlin (ICCE-Berlin), Berlin, Germany, 3–6 September 2017; pp. 126–129.

- Chatzichristofis, S.A.; Boutalis, Y.S. CEDD: Color and Edge Directivity Descriptor: A Compact Descriptor for Image Indexing and Retrieval. In Computer Vision Systems, Proceedings of the 6th International Conference, ICVS 2008, Santorini, Greece, 12–15 May 2008; Lecture Notes in Computer Science; Gasteratos, A., Vincze, M., Tsotsos, J.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5008, pp. 312–322.

- Amanatiadis, A.; Kaburlasos, V.G.; Dardani, C.; Chatzichristofis, S.A.; Mitropoulos, A. Social robots in special education: Creating dynamic interactions for optimal experience. IEEE Consum. Electron. Mag. 2020, 9, 39–45.

- Jiménez, M.; Ochoa, A.; Escobedo, D.; Estrada, R.; Martinez, E.; Maciel, R.; Larios, V. Recognition of Colors through Use of a Humanoid Nao Robot in Therapies for Children with Down Syndrome in a Smart City. Res. Comput. Sci. 2019, 148, 239–252.

- Olvera, D.; Escalona, U.; Sossa, H. Teaching Basic Concepts: Geometric Forms and Colors on a NAO Robot Platform. Res. Comput. Sci. 2019, 148, 323–333.

- Darrah, T.; Hutchins, N.; Biswas, G. Design and development of a low-cost open-source robotics education platform. In Proceedings of the 50th International Symposium on Robotics (ISR 2018), Munich, Germany, 20–21 June 2018; pp. 1–4.

- Kaburlasos, V.; Bazinas, C.; Siavalas, G.; Papakostas, G. Linguistic social robot control by crowd-computing feedback. In Proceedings of the JSME annual Conference on Robotics and Mechatronics (Robomec), Kyushu, Japan, 2–5 June 2018; pp. 1A1–B13.

- Efthymiou, N.; Filntisis, P.P.; Koutras, P.; Tsiami, A.; Hadfield, J.; Potamianos, G.; Maragos, P. ChildBot: Multi-Robot Perception and Interaction with Children. arXiv 2020, arXiv:2008.12818.

- Vrochidou, E.; Najoua, A.; Lytridis, C.; Salonidis, M.; Ferelis, V.; Papakostas, G.A. Social robot NAO as a self-regulating didactic mediator: A case study of teaching/learning numeracy. In Proceedings of the 2018 26th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 13–15 September 2018; pp. 1–5.

- Alemi, M.; Meghdari, A.; Ghazisaedy, M. Employing humanoid robots for teaching English language in Iranian junior high-schools. Int. J. Humanoid Robot. 2014, 11, 1450022.

- Tozadore, D.; Pinto, A.; Romero, R.; Trovato, G. Wizard of Oz vs. autonomous: Children’s perception changes according to robot’s operation condition. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 664–669.

- Karalekas, G.; Vologiannidis, S.; Kalomiros, J. EUROPA: A Case Study for Teaching Sensors, Data Acquisition and Robotics via a ROS-Based Educational Robot. Sensors 2020, 20, 2469.

- Salomi, E.; Amanatiadis, A.; Christodoulou, K.; Chatzichristofis, S.A. Introducing Algorithmic Thinking and Sequencing using Tangible Robots. IEEE Trans. Learn. Technol. 2021.

- Vega, J.; Cañas, J.M. PiBot: An open low-cost robotic platform with camera for STEM education. Electronics 2018, 7, 430.

- Park, J.S.; Lenskiy, A. Mobile robot platform for improving experience of learning programming languages. J. Autom. Control Eng. 2014, 2, 265–269.

- Pachidis, T.; Vrochidou, E.; Kaburlasos, V.; Kostova, S.; Bonković, M.; Papić, V. Social robotics in education: State-of-the-art and directions. In Proceedings of the International Conference on Robotics in Alpe-Adria Danube Region, Patras, Greece, 6–8 June 2018; Springer: Cham, Switzerland, 2018; pp. 689–700.

| Primary Educational Tool | Assistive Technology | |

|---|---|---|

| Altin et al. (2014) | • | |

| Wu et al. (2019) | • | |

| Madhyastha (2016) | • | |

| Kusumota et al. (2018) | • | |

| Rios et al. (2017) | • | |

| He et al. (2017) | • | |

| Amanatiadis et al. (2017) | • | |

| Amanatiadis et al. (2020) | • | |

| Jimenez et al. (2019) | • | |

| Olvera et al. (2019) | • | |

| Darrah et al. (2018) | • | |

| Kaburlasos et al. (2018) | • | |

| Efthymiou et al. (2020) | • | |

| Vrochidou et al. (2018) | • | |

| Majgaard et al. (2015) | • | |

| Alemi et al. (2014) | • | |

| Tozadore et al. (2017) | • | • |

| Karalekas et al. (2020) | • | • |

| Evripidou et al. (2021) | • | • |

| Vega et al. (2018) | • | • |

| Park and Lenskiy (2014) | • | • |

| Problem Solving Skills | Self Efficacy | Computat. Thinking | Creativity | Motivation | Collaborat. | |

|---|---|---|---|---|---|---|

| Altin et al. (2014) | • | • | ||||

| Wu et al. (2019) | • | • | ||||

| Madhyastha (2016) | • | |||||

| Kusumota et al. (2018) | • | |||||

| Rios et al. (2017) | • | |||||

| He et al. (2017) | • | |||||

| Amanatiadis et al. (2017) | • | |||||

| Amanatiadis et al. (2020) | • | • | ||||

| Jimenez et al. (2019) | • | |||||

| Olvera et al. (2019) | • | • | ||||

| Darrah et al. (2018) | • | • | ||||

| Kaburlasos et al. (2018) | • | |||||

| Efthymiou et al. (2020) | • | • | ||||

| Vrochidou et al. (2018) | • | |||||

| Majgaard et al. (2015) | • | |||||

| Alemi et al. (2014) | • | • | • | |||

| Tozadore et al. (2017) | • | |||||

| Karalekas et al. (2020) | • | • | ||||

| Evripidou et al. (2021) | • | • | • | • | ||

| Vega et al. (2018) | • | |||||

| Park and Lenskiy (2014) | • | • | • |

| Paper | Age/Level | Area Explored/Topic(s) | Computer Vision | Robot Used |
|---|---|---|---|---|
| Altin et al. (2014) | Young children | Writing skills | Image Processing | NAO |
| Wu et al. (2019) | Pre-school children | English as a second language | Object Recognition | Kinect, Projector, PC |
| Madhyastha (2016) | Ages 14+ | French or Spanish | Image Processing, Object Recognition | A prototype robot language tutor |
| Kusumota et al. (2018) | Elementary children | Mathematical operations, spelling, directions, and questions functions | Image and Textual Processing | Cozmo |
| Rios et al. (2017) | Secondary school | Teaching robotics | Color and Image Processing | MonitoRE |
| He et al. (2017) | Pre-school children | Metacognition tutoring and geometrical thinking training; pronunciation of vocabulary in both Chinese and English | Image Processing, Object Recognition | NAO |
| Amanatiadis et al. (2017) | Children diagnosed with Autism Spectrum Condition (ASC) | Social communication and interaction skills, joint attention, response inhibition | Color Recognition and Image Processing | NAO |
| Amanatiadis et al. (2020) | ASC | Social communication and interaction skills, joint attention, response inhibition | Color Recognition and Image Processing | 2 NAOs |
| Jimenez et al. (2019) | Children diagnosed with Down syndrome | Colors, forms | Color Recognition | NAO |
| Olvera et al. (2019) | Pre-school, 3 and 4 years | Geometric forms and colors | Object and Color Recognition | NAO |
| Darrah et al. (2018) | Secondary children | Learning of computational thinking and STEM, with an emphasis on Computer Science concepts | Shape and Color Detection | Open-Source Robotics |
| Kaburlasos et al. (2018) | From 5 to 14 years old | Play and communicate with robots | Image Processing and Pattern Recognition | NAO |
| Efthymiou et al. (2020) | Elementary, 6 to 11 years | Play and communicate with robots | Image Processing, Object Recognition, Distance Speech Recognition | ChildBot (NAO, Furhat, Zeno) |
| Vrochidou et al. (2018) | K12 system (ages 16–17) | Mathematics | Camera Presenting | NAO |
| Majgaard et al. (2015) | Between 11 and 16 years old | Programming, language learning, ethics, technology and mathematics | Camera Presenting | NAO |
| Alemi et al. (2014) | Children at the age of 12 | English vocabulary | Camera Presenting | NAO |
| Tozadore et al. (2017) | Elementary, between 7 and 11 years | Interact with robot | Camera Presenting/Object Recognition | NAO |
| Karalekas et al. (2020) | K12 system (ages 16–17) | STEM teaching in sciences, engineering and programming | Using camera for watching and as a color sensor | EUROPA |
| Evripidou et al. (2021) | Pre-school children | Programming/algorithmic thinking | Camera Presenting/Fuzzy System | Bee-bot |
| Vega et al. (2018) | Pre-university children in secondary schools | STEM teaching | Color Recognition, Visualisation through camera | PiBot |
| Park and Lenskiy (2014) | Secondary school | Language programming | Camera with computer vision algorithms | Hamster robot |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sophokleous, A.; Christodoulou, P.; Doitsidis, L.; Chatzichristofis, S.A. Computer Vision Meets Educational Robotics. Electronics 2021, 10, 730. https://doi.org/10.3390/electronics10060730