Constructing Emotional Machines: A Case of a Smartphone-Based Emotion System

Abstract

1. Introduction

- (1) Factors requiring attention during the development of emotional machines.
- (2) Users’ attitudes toward emotional messages.
- (3) Effects of the developed emotion system on user behavior.

2. Literature Review

2.1. Affective Computing

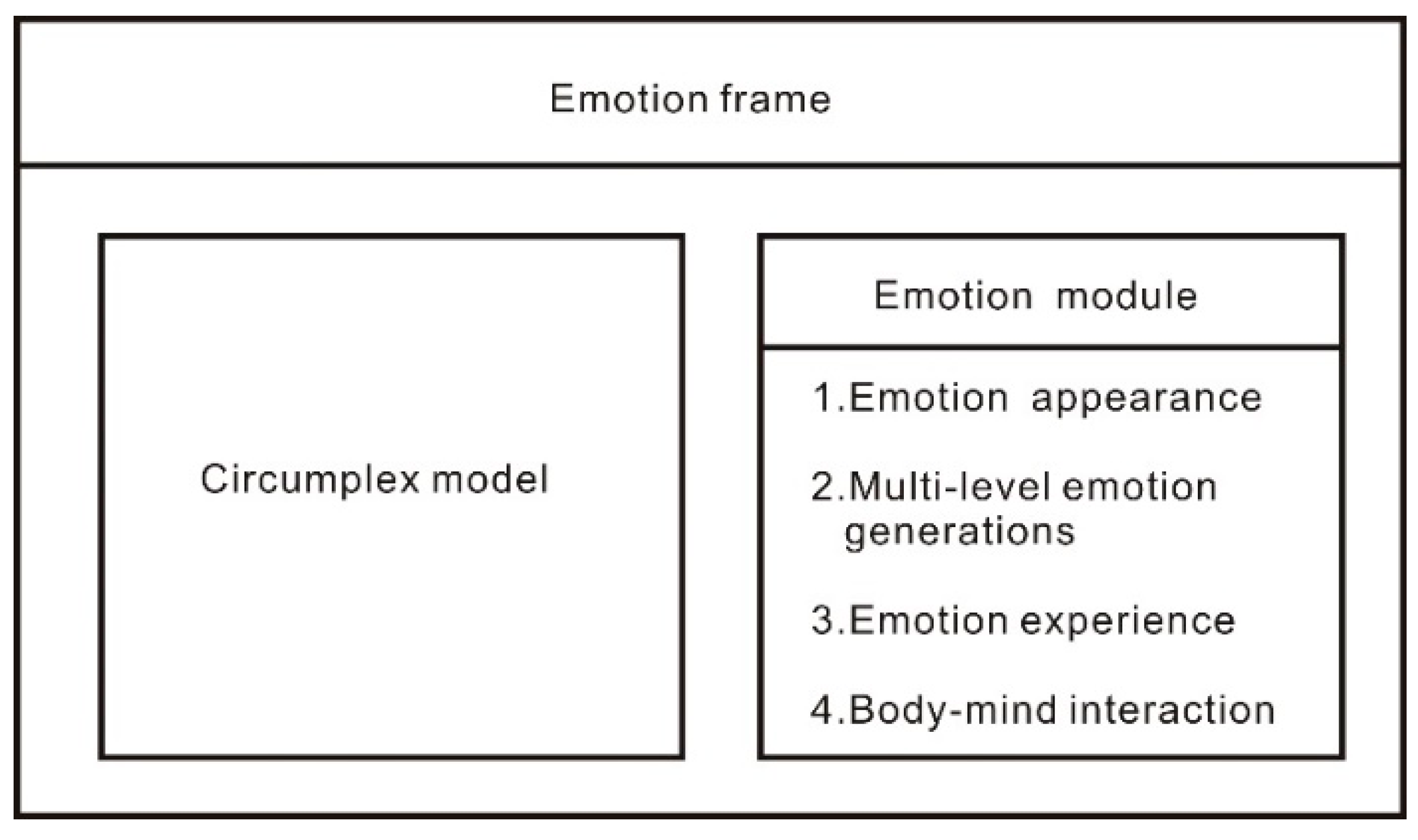

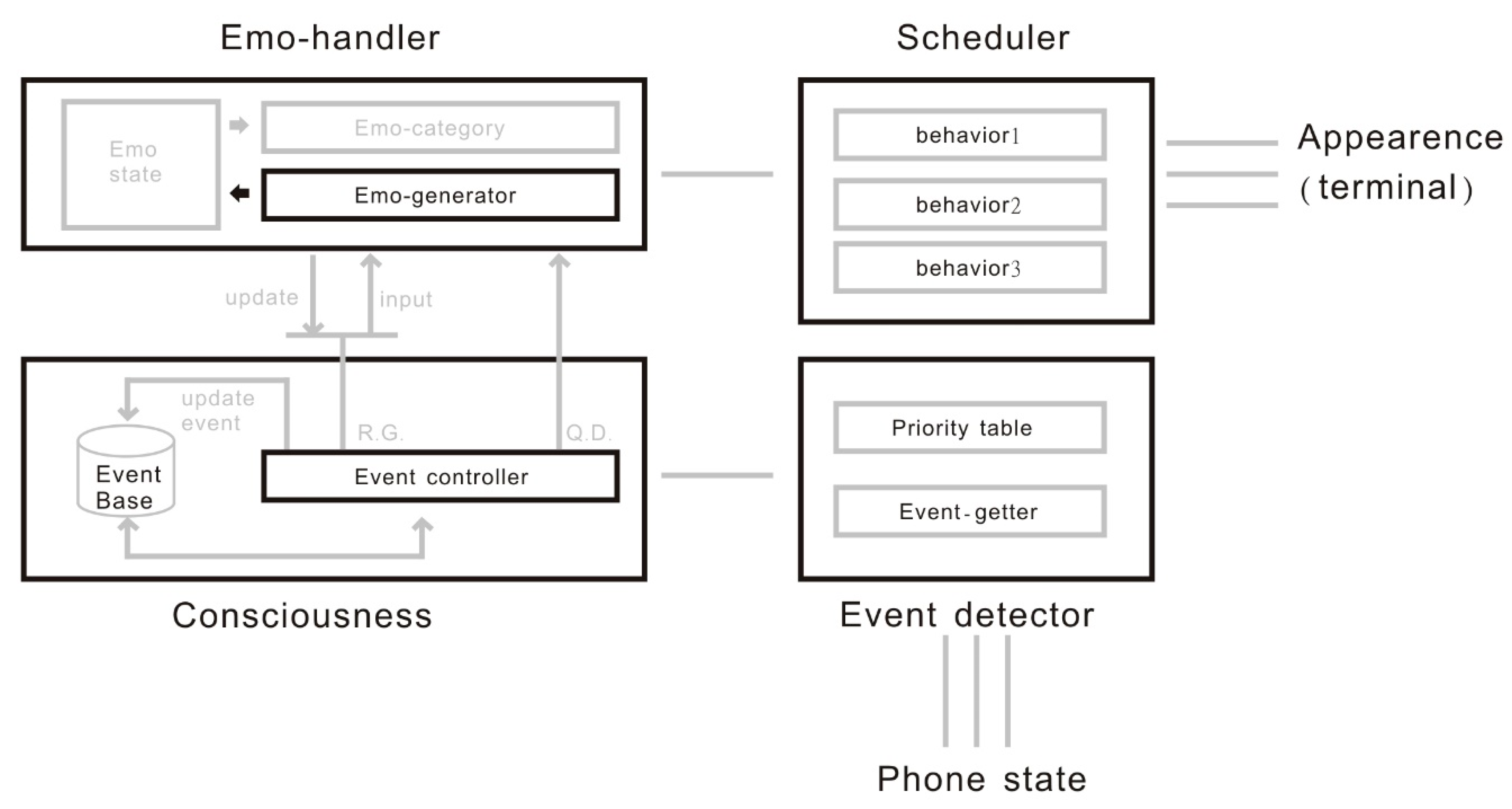

2.2. Emotion Systems

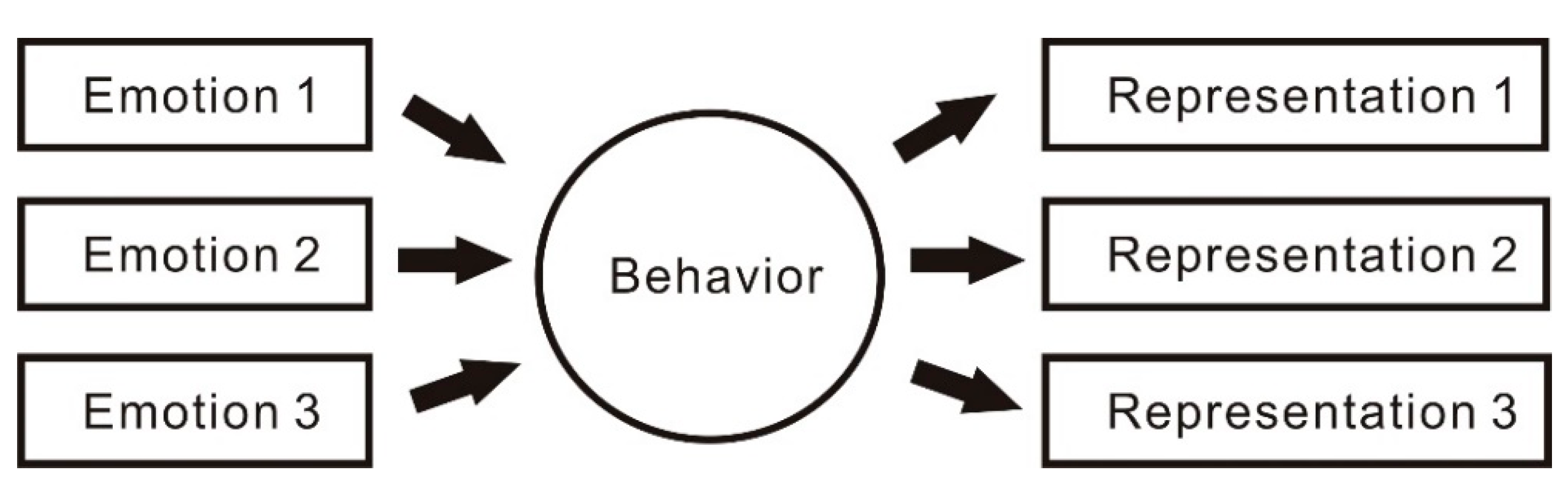

2.3. Emotional Expression

3. Research Methods

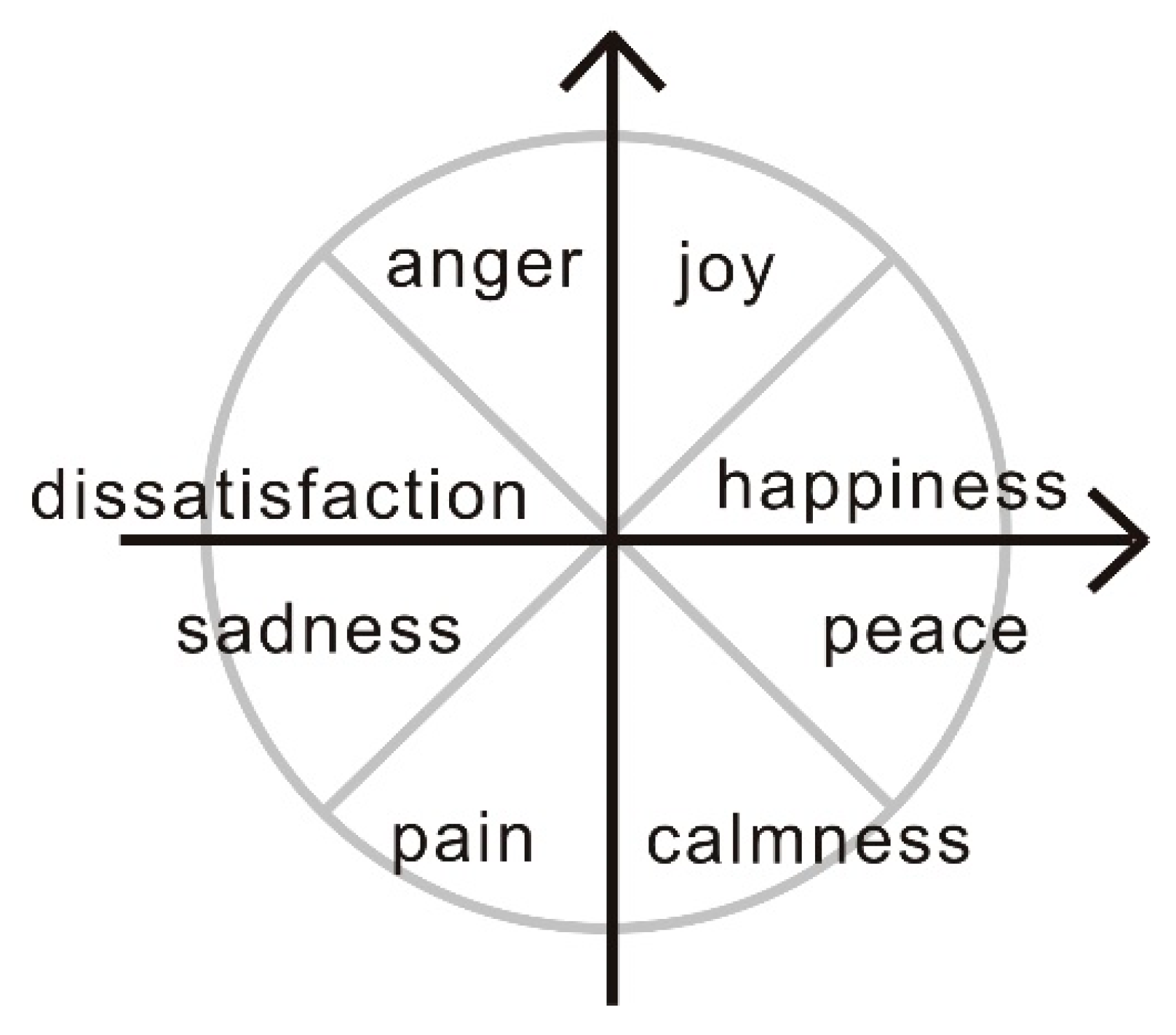

3.1. Assigning Emotions to Smartphones

3.1.1. Developing Emotional Expression

3.1.2. Developing Emotional Representation

3.1.3. Principles for Multidimensional Emotion Generation

3.1.4. Developing Emotional Experiences

3.1.5. Developing Psychophysical Interactionism

3.1.6. Method for Detecting Smartphone Conditions

3.2. Assigning Emotions to Messages

3.3. Developing a Crucial Notification Mechanism

3.4. Research Tools

3.4.1. System Usability Scale

3.4.2. Research Questionnaire

4. Research Results and Analysis

4.1. Reliability Analysis

4.2. Descriptive Statistics

4.2.1. Existence of Emotions in Machines

4.2.2. Attention Paid to Emotional Messages

4.2.3. Interest in Emotional Messages

4.2.4. Effects of Emotional Messages

4.2.5. System Usability

4.2.6. View Rate of Crucial Notifications and Instruction Execution Rate

4.3. Experimental Results

4.3.1. Factors Influencing the Participants’ Perception of Emotions in Machines

4.3.2. Participants’ Attitudes toward Emotional Messages

4.3.3. Effects of Crucial Notifications on User Behavior

4.3.4. Research Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Items | Descriptions | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|---|
| 1 | I think that I would like to use this system frequently. | 1 | 2 | 3 | 4 | 5 |
| 2 | I found the system to be unnecessarily complex. | 1 | 2 | 3 | 4 | 5 |
| 3 | I thought the system was easy to use. | 1 | 2 | 3 | 4 | 5 |
| 4 | I think that I would need the support of a technician to use this system. | 1 | 2 | 3 | 4 | 5 |
| 5 | I found the various functions in this system to be well integrated. | 1 | 2 | 3 | 4 | 5 |
| 6 | I think that excessive inconsistency exists in this system. | 1 | 2 | 3 | 4 | 5 |
| 7 | I believe that most people would learn to use this system very quickly. | 1 | 2 | 3 | 4 | 5 |
| 8 | I found the system to be very cumbersome to use. | 1 | 2 | 3 | 4 | 5 |
| 9 | I felt very confident using the system. | 1 | 2 | 3 | 4 | 5 |
| 10 | I had to learn many things before I could begin using this system. | 1 | 2 | 3 | 4 | 5 |
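The ten items above are the standard System Usability Scale (SUS) items. The conventional SUS scoring rule (Brooke's procedure) turns one participant's ten 1-to-5 responses into a 0-to-100 score: odd-numbered, positively worded items contribute `response − 1`, even-numbered, negatively worded items contribute `5 − response`, and the sum is multiplied by 2.5. The sketch below assumes that conventional rule; the function name `sus_score` is hypothetical and this is not the authors' analysis code.

```python
def sus_score(responses: list[int]) -> float:
    """Return the 0-100 SUS score for one participant's ten 1-5 responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be on the 1-5 scale")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items (1, 3, 5, 7, 9) are positively worded.
            total += response - 1
        else:
            # Even items (2, 4, 6, 8, 10) are negatively worded.
            total += 5 - response
    # Each item contributes 0-4, so the raw sum is 0-40; scale it to 0-100.
    return total * 2.5


if __name__ == "__main__":
    # A respondent who answers 3 (neutral) to every item scores 50.0.
    print(sus_score([3] * 10))
```

Because every item contributes between 0 and 4 points, a fully neutral respondent lands exactly at the midpoint of the scale, which makes 50 a convenient sanity check.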

| Items | Descriptions | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|---|
| 1 | When the smartphone expresses emotions, I feel as if the smartphone is alive. | 1 | 2 | 3 | 4 | 5 |
| 2 | Irrespective of whether the smartphone has emotions, I have the same view of the smartphone. | 1 | 2 | 3 | 4 | 5 |
| 3 | I care about the emotions of the smartphone. | 1 | 2 | 3 | 4 | 5 |
| 4 | Every time I turn on the smartphone, I often observe the emotions of the smartphone. | 1 | 2 | 3 | 4 | 5 |
| 5 | Even if I see the system’s emotion icon, I may not always check its emotions. | 1 | 2 | 3 | 4 | 5 |
| 6 | After closing other apps, if I see the agent of the system, I pay attention to the emotions of the smartphone. | 1 | 2 | 3 | 4 | 5 |
| 7 | I turn on the smartphone specifically to check emotional messages. | 1 | 2 | 3 | 4 | 5 |
| 8 | When I think about the emotions of the smartphone, I do not turn on the smartphone. | 1 | 2 | 3 | 4 | 5 |
| 9 | Sometimes, I turn on the smartphone simply to check the agent’s emotions. | 1 | 2 | 3 | 4 | 5 |
| 10 | I pay attention to the messages and emotional statements of the system. | 1 | 2 | 3 | 4 | 5 |
| 11 | I am not interested in the messages and emotional statements of the system. | 1 | 2 | 3 | 4 | 5 |
| 12 | I am curious about the emotional state of the system. | 1 | 2 | 3 | 4 | 5 |
| 13 | After reading the message of the system, I think about what makes the smartphone produce such emotions. | 1 | 2 | 3 | 4 | 5 |
| 14 | Even if I read the messages of the system, I do not understand the reason why the mobile smartphone produces such emotions. | 1 | 2 | 3 | 4 | 5 |
| 15 | After reading the information of the system, I can understand why the smartphone has negative or positive emotions. | 1 | 2 | 3 | 4 | 5 |
| 16 | The information provided by the system indicates the ways in which I should avoid using my smartphone. | 1 | 2 | 3 | 4 | 5 |
| 17 | The information provided by the system does not cause me to change the way I use my smartphone. | 1 | 2 | 3 | 4 | 5 |
| 18 | I adjust how I use my smartphone according to the information provided by the system and guess the emotions that the smartphone may generate. | 1 | 2 | 3 | 4 | 5 |
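In the 18-item questionnaire above, every third item (2, 5, 8, 11, 14, 17) is negatively worded. The article does not state how these items were scored, so the sketch below assumes the common practice of reverse-coding them (`6 − response` on a 1-to-5 scale) before averaging. It groups items by the four research orientations in the order implied by the descriptive-statistics tables (Q1–Q3, Q4–Q9, Q10–Q12, Q13–Q18). The names `REVERSED_ITEMS`, `DIMENSIONS`, and `dimension_means` are hypothetical.

```python
# Negatively worded items; reverse-coding them is an assumption, not stated in the source.
REVERSED_ITEMS = {2, 5, 8, 11, 14, 17}

# Item groupings implied by the order of the descriptive-statistics tables.
DIMENSIONS = {
    "Existence of Emotions in Machines": range(1, 4),
    "Attention Paid to Emotional Messages": range(4, 10),
    "Interest in Emotional Messages": range(10, 13),
    "Effects of Emotional Messages": range(13, 19),
}


def dimension_means(responses: dict[int, int]) -> dict[str, float]:
    """Average one participant's 1-5 responses per dimension after reverse-coding."""
    scored = {
        item: (6 - value if item in REVERSED_ITEMS else value)
        for item, value in responses.items()
    }
    return {
        name: sum(scored[i] for i in items) / len(items)
        for name, items in DIMENSIONS.items()
    }


if __name__ == "__main__":
    # Answering "Agree" (4) to everything: reversed items count as 2, pulling each mean down.
    answers = {item: 4 for item in range(1, 19)}
    for name, mean in dimension_means(answers).items():
        print(f"{name}: {mean:.2f}")
```

The example shows why reverse-coding matters: a respondent who agrees with every statement, including the contradictory ones, ends up near the middle of each dimension rather than at the top.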


| Event Content | Positive/Negative Emotion (Valence) | Intensity of Emotion (Arousal) |
|---|---|---|
| Power < 25% | −4 | −4 |
| RAM usage > 75% | −4 | −4 |
| Call notification | 0 | 1 |
| Charging | 1 | 2 |
| Power < 50% while sleeping and charging | 2 | 3 |
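Each event above carries a valence (positive or negative emotion) and an arousal (intensity) value, which places it on a two-dimensional valence–arousal plane in the spirit of Russell's circumplex model of affect. The source does not say how simultaneous events are combined, so the sketch below assumes a simple rule: sum the active events' values on each axis, clamp the result to a symmetric −4 to 4 range, and name the resulting quadrant. The combination rule, the clamping bound, the quadrant labels, and the names `Event`, `EVENTS`, `combine`, and `quadrant` are all illustrative assumptions rather than the authors' method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    valence: int  # positive/negative emotion
    arousal: int  # intensity of emotion


# Values copied from the event table above; dictionary keys are hypothetical.
EVENTS = {
    "power_below_25": Event("Power < 25%", -4, -4),
    "ram_above_75": Event("RAM usage > 75%", -4, -4),
    "call": Event("Call notification", 0, 1),
    "charging": Event("Charging", 1, 2),
    "sleep_charging": Event("Power < 50% while sleeping and charging", 2, 3),
}

LIMIT = 4  # assumed symmetric bound for each axis


def clamp(x: int) -> int:
    """Keep a summed value inside [-LIMIT, LIMIT]."""
    return max(-LIMIT, min(LIMIT, x))


def combine(active: list[Event]) -> tuple[int, int]:
    """Sum active events per axis, then clamp (assumed combination rule)."""
    valence = clamp(sum(e.valence for e in active))
    arousal = clamp(sum(e.arousal for e in active))
    return valence, arousal


def quadrant(valence: int, arousal: int) -> str:
    """Name the circumplex quadrant; the labels are illustrative."""
    if valence == 0 or arousal == 0:
        return "neutral"
    if valence > 0:
        return "excited" if arousal > 0 else "relaxed"
    return "stressed" if arousal > 0 else "sad"


if __name__ == "__main__":
    state = combine([EVENTS["power_below_25"], EVENTS["charging"]])
    print(state, quadrant(*state))  # (-3, -2) sad
```

Summing lets a positive event such as charging partly offset a critical battery level, while clamping stops two critical events from pushing the state beyond the scale used in the tables.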

| Event ID | Name | Valence | Arousal | Quick and Dirty |
|---|---|---|---|---|
| 1 | Battery > 75% | 3 | 2 | false |
| 2 | Battery 50~75% | 1 | 1 | false |
| 3 | Battery 25~50% | −1 | −2 | false |
| 4 | Battery < 25% | −4 | −4 | true |
| 5 | RAM > 75% | −4 | −4 | true |
| 6 | RAM 50~75% | −1 | 2 | false |
| 7 | RAM 25~50% | 1 | 1 | false |
| 8 | RAM < 25% | 3 | 2 | false |
| 9 | Storage > 90% | −4 | −4 | false |
| 10 | Storage 70~90% | −1 | −2 | false |
| 11 | Storage 40~70% | 1 | 1 | false |
| 12 | Storage < 40% | 3 | 2 | false |
| 13 | Network increased | −1 | −1 | false |
| 14 | Network decreased | 1 | 1 | false |
| 15 | Temperature good | 1 | 1 | false |
| 16 | Overheat | −1 | −1 | true |
| 17 | Charging | 1 | 2 | true |
| 18 | Call incoming | 0 | 1 | true |
| 19 | Sleep-negative charging | 2 | 3 | true |
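The table above quantizes battery, RAM, and storage readings into four bands each, and every band is an event with fixed valence and arousal values. As a minimal sketch of that lookup, the code below maps raw percentages to event IDs 1 to 12. It reads the last battery and RAM bands as below 25%, which is what the preceding 25~50 bands imply, and it assumes the RAM and storage figures are percentages in use. Boundary handling (for example, whether exactly 50% belongs to the 50~75 or the 25~50 band) is not specified in the source, so the comparisons in `bucket` are an assumption. The names `bucket`, `detect_events`, and `QUICK_AND_DIRTY` are hypothetical, and how the real system reads these values from the phone is not shown.

```python
# Event IDs marked "true" in the Quick and Dirty column of the table above.
QUICK_AND_DIRTY = {4, 5, 16, 17, 18, 19}


def bucket(value: float, thresholds: tuple[float, float, float], first_id: int) -> int:
    """Map a percentage to one of four consecutive event IDs.

    With thresholds (high, mid, low), boundary handling assumed:
      value > high          -> first_id
      mid <= value <= high  -> first_id + 1
      low <= value < mid    -> first_id + 2
      value < low           -> first_id + 3
    """
    high, mid, low = thresholds
    if value > high:
        return first_id
    if value >= mid:
        return first_id + 1
    if value >= low:
        return first_id + 2
    return first_id + 3


def detect_events(battery_pct: float, ram_pct: float, storage_pct: float) -> list[int]:
    """Return the battery, RAM, and storage event IDs for one reading."""
    return [
        bucket(battery_pct, (75, 50, 25), first_id=1),  # IDs 1-4
        bucket(ram_pct, (75, 50, 25), first_id=5),  # IDs 5-8, RAM in use (assumed)
        bucket(storage_pct, (90, 70, 40), first_id=9),  # IDs 9-12, storage used (assumed)
    ]


if __name__ == "__main__":
    ids = detect_events(battery_pct=20, ram_pct=80, storage_pct=50)
    flagged = [i for i in ids if i in QUICK_AND_DIRTY]
    print(ids, flagged)  # [4, 5, 11] [4, 5]
```

A single `bucket` helper works for all three resources because each uses four ordered bands; only the thresholds and the first event ID differ.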

| Cronbach’s Alpha | Internal Consistency |
|---|---|
| α ≥ 0.9 | Excellent |
| 0.9 > α ≥ 0.8 | Good |
| 0.8 > α ≥ 0.7 | Acceptable |
| 0.7 > α ≥ 0.6 | Questionable |
| 0.6 > α ≥ 0.5 | Poor |
| 0.5 > α | Unacceptable |
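For a scale of k items, Cronbach's alpha is α = k/(k − 1) · (1 − Σσᵢ²/σ_X²), where σᵢ² is the variance of item i and σ_X² is the variance of respondents' total scores. The sketch below computes α from raw item scores and then applies the interpretation bands in the table above. It is a generic textbook implementation, not the authors' analysis script, and the names `cronbach_alpha`, `interpret`, and `BANDS` are hypothetical.

```python
from statistics import pvariance

# Cut-offs from the table above, checked from the highest band down.
BANDS = [
    (0.9, "Excellent"),
    (0.8, "Good"),
    (0.7, "Acceptable"),
    (0.6, "Questionable"),
    (0.5, "Poor"),
]


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Each respondent's total score across all k items.
    totals = [sum(scores) for scores in zip(*items)]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(pvariance(scores) for scores in items)
    # Population vs. sample variance cancels in the ratio, so either works.
    return k / (k - 1) * (1 - item_var / total_var)


def interpret(alpha: float) -> str:
    """Label an alpha value with the internal-consistency band above."""
    for cutoff, label in BANDS:
        if alpha >= cutoff:
            return label
    return "Unacceptable"


if __name__ == "__main__":
    # Two items answered by four respondents (toy data, not from the study).
    alpha = cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]])
    print(alpha, interpret(alpha))  # 0.75 Acceptable
```

Applied to the reported coefficients, `interpret` reproduces the labels in the reliability tables: 0.914 maps to Excellent, 0.790 to Acceptable, and 0.807 to Good.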

| Cronbach’s Alpha | Internal Consistency |
|---|---|
| 0.914 | Excellent |

| Research Orientation | Cronbach’s Alpha | Internal Consistency |
|---|---|---|
| Existence of Emotions in Machines | 0.790 | Acceptable |
| Attention Paid to Emotional Messages | 0.792 | Acceptable |
| Interest in Emotional Messages | 0.707 | Acceptable |
| Effects of Emotional Messages | 0.751 | Acceptable |
| System Usability | 0.807 | Good |

| Mean | Max | Min | SD | SE |
|---|---|---|---|---|
| 3.6 | 5 | 2 | 0.6 | 0.1 |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Q1 | 0 | 0 | 32 (25%) | 68 (54%) | 24 (19%) |
| Q2 | 0 | 20 (16%) | 72 (58%) | 24 (19%) | 8 (6%) |
| Q3 | 0 | 8 (6%) | 40 (32%) | 60 (48%) | 16 (12%) |
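Each item row in these tables sums to 124 responses (for Q1, 32 + 68 + 24), and the printed percentages appear to be truncated rather than rounded (32/124 = 25.8% is shown as 25%). The sketch below recomputes the percentage column and a per-item mean from the raw counts under that reading. The truncation convention, the 1-to-5 coding of the response categories, and the function name `summarize` are assumptions; the per-item means it produces are not the dimension-level figures in the summary rows.

```python
def summarize(counts: list[int]) -> tuple[int, list[int], float]:
    """Summarize one Likert item.

    counts: number of responses for Strongly Disagree .. Strongly Agree,
    coded 1 .. 5 (assumed coding).
    """
    n = sum(counts)
    # Integer division truncates, matching the percentages as printed (assumption).
    percents = [100 * c // n for c in counts]
    mean = sum(code * c for code, c in enumerate(counts, start=1)) / n
    return n, percents, mean


if __name__ == "__main__":
    n, percents, mean = summarize([0, 0, 32, 68, 24])  # Q1 row
    print(n, percents, round(mean, 2))  # 124 [0, 0, 25, 54, 19] 3.94
```

Recomputing the percentages this way is also a quick consistency check on the extracted tables, since any cell whose printed percentage disagrees with its count stands out immediately.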

| Mean | Max | Min | SD | SE |
|---|---|---|---|---|
| 3 | 5 | 1 | 0.8 | 0.1 |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Q4 | 0 | 36 (29%) | 40 (32%) | 28 (22%) | 20 (16%) |
| Q5 | 8 (6%) | 24 (19%) | 40 (32%) | 32 (25%) | 20 (16%) |
| Q6 | 8 (6%) | 12 (9%) | 28 (22%) | 48 (38%) | 28 (22%) |
| Q7 | 28 (22%) | 28 (22%) | 40 (32%) | 20 (16%) | 8 (6%) |
| Q8 | 4 (3%) | 16 (12%) | 48 (38%) | 48 (38%) | 8 (6%) |
| Q9 | 48 (38%) | 28 (22%) | 28 (22%) | 16 (12%) | 4 (3%) |

| Mean | Max | Min | SD | SE |
|---|---|---|---|---|
| 3.5 | 5 | 1 | 0.8 | 0.2 |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Q10 | 12 (9%) | 16 (12%) | 24 (19%) | 56 (45%) | 16 (12%) |
| Q11 | 4 (3%) | 12 (9%) | 40 (32%) | 68 (54%) | 0 |
| Q12 | 8 (6%) | 12 (9%) | 28 (22%) | 48 (38%) | 28 (22%) |

| Mean | Max | Min | SD | SE |
|---|---|---|---|---|
| 3.5 | 5 | 1 | 0.7 | 0.1 |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Q13 | 4 (3%) | 12 (9%) | 16 (12%) | 56 (45%) | 36 (29%) |
| Q14 | 0 | 16 (12%) | 32 (25%) | 56 (45%) | 20 (16%) |
| Q15 | 4 (3%) | 16 (12%) | 8 (6%) | 60 (48%) | 36 (29%) |
| Q16 | 4 (3%) | 8 (6%) | 36 (29%) | 60 (48%) | 16 (12%) |
| Q17 | 4 (3%) | 40 (32%) | 32 (25%) | 40 (32%) | 8 (6%) |
| Q18 | 12 (9%) | 28 (22%) | 24 (19%) | 44 (35%) | 16 (12%) |

| Mean | Max | Min | SD | SE |
|---|---|---|---|---|
| 4.1 | 5 | 2 | 0.5 | 0.1 |

| Item | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Q1 | 0 | 4 (3%) | 48 (38%) | 56 (45%) | 16 (12%) |
| Q2 | 0 | 0 | 24 (19%) | 68 (54%) | 32 (25%) |
| Q3 | 0 | 0 | 20 (16%) | 68 (54%) | 36 (29%) |
| Q4 | 0 | 0 | 16 (12%) | 40 (32%) | 68 (54%) |
| Q5 | 0 | 8 (6%) | 44 (35%) | 48 (38%) | 24 (19%) |
| Q6 | 0 | 4 (3%) | 32 (25%) | 48 (38%) | 40 (32%) |
| Q7 | 0 | 0 | 16 (12%) | 52 (41%) | 56 (45%) |
| Q8 | 0 | 4 (3%) | 28 (22%) | 36 (29%) | 56 (45%) |
| Q9 | 0 | 0 | 12 (9%) | 52 (41%) | 60 (48%) |
| Q10 | 0 | 0 | 16 (12%) | 48 (38%) | 60 (48%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, H.-C.K.; Ma, Y.-C.; Lee, M. Constructing Emotional Machines: A Case of a Smartphone-Based Emotion System. Electronics 2021, 10, 306. https://doi.org/10.3390/electronics10030306


Chicago/Turabian style: Lin, Hao-Chiang Koong, Yu-Chun Ma, and Min Lee. 2021. "Constructing Emotional Machines: A Case of a Smartphone-Based Emotion System" Electronics 10, no. 3: 306. https://doi.org/10.3390/electronics10030306
