Classification of Microscopic Laser Engraving Surface Defect Images Based on Transfer Learning Method

Abstract

1. Introduction

2. Related Works

2.1. Machine Learning Methods

2.2. Deep Learning Methods

3. Proposed Method

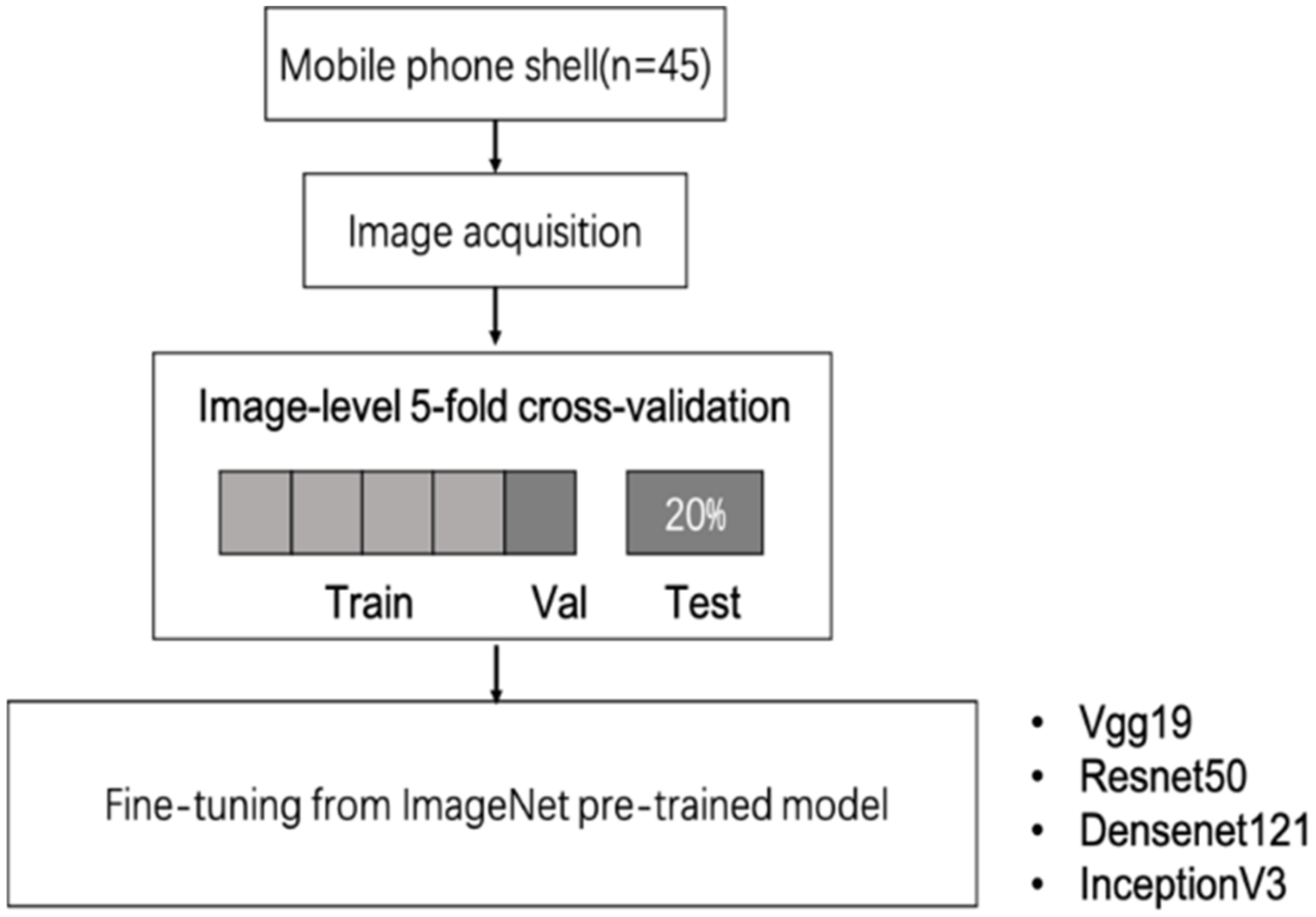

3.1. Overview of Method

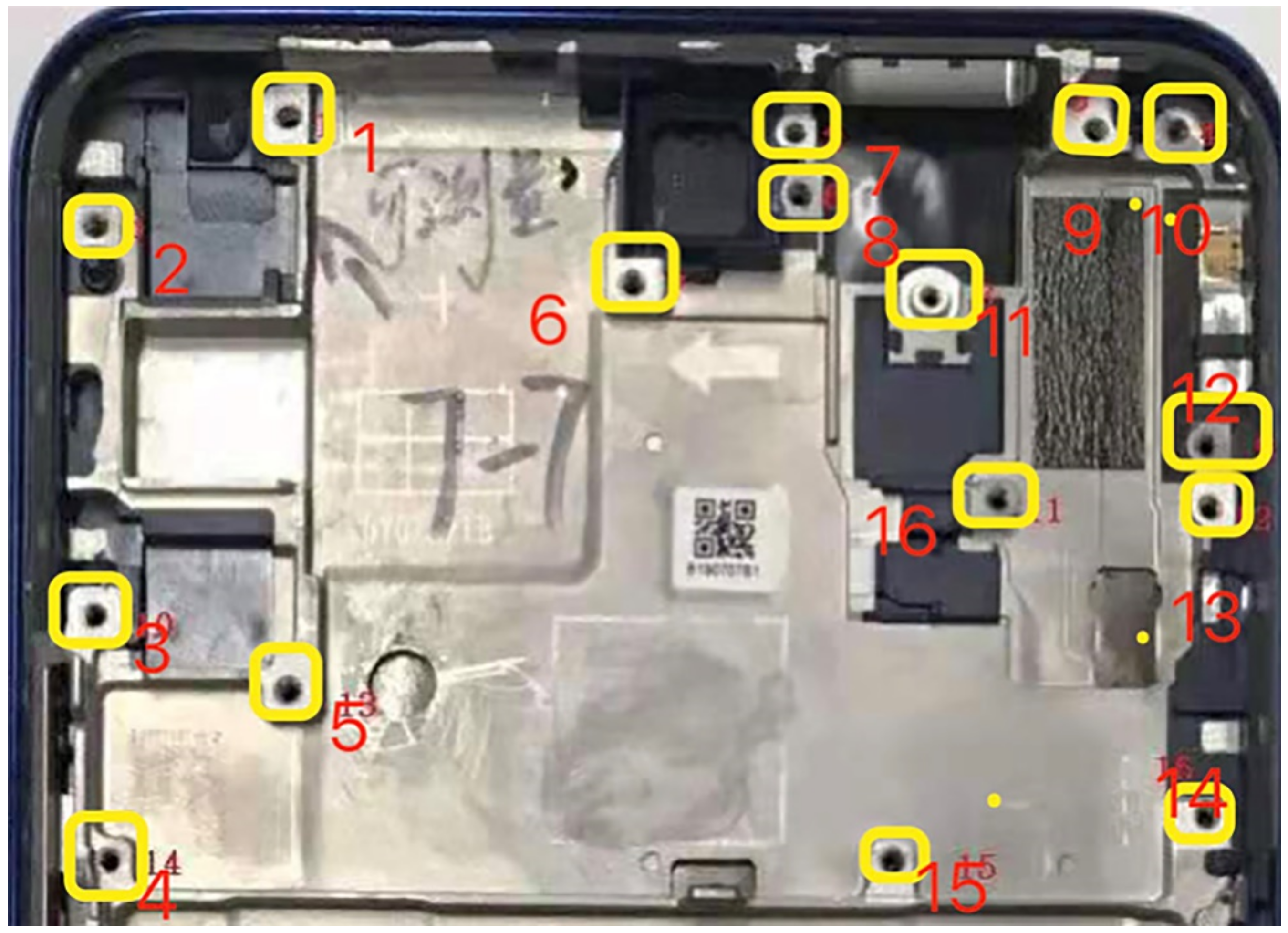

3.2. Dataset

3.3. CNN Models

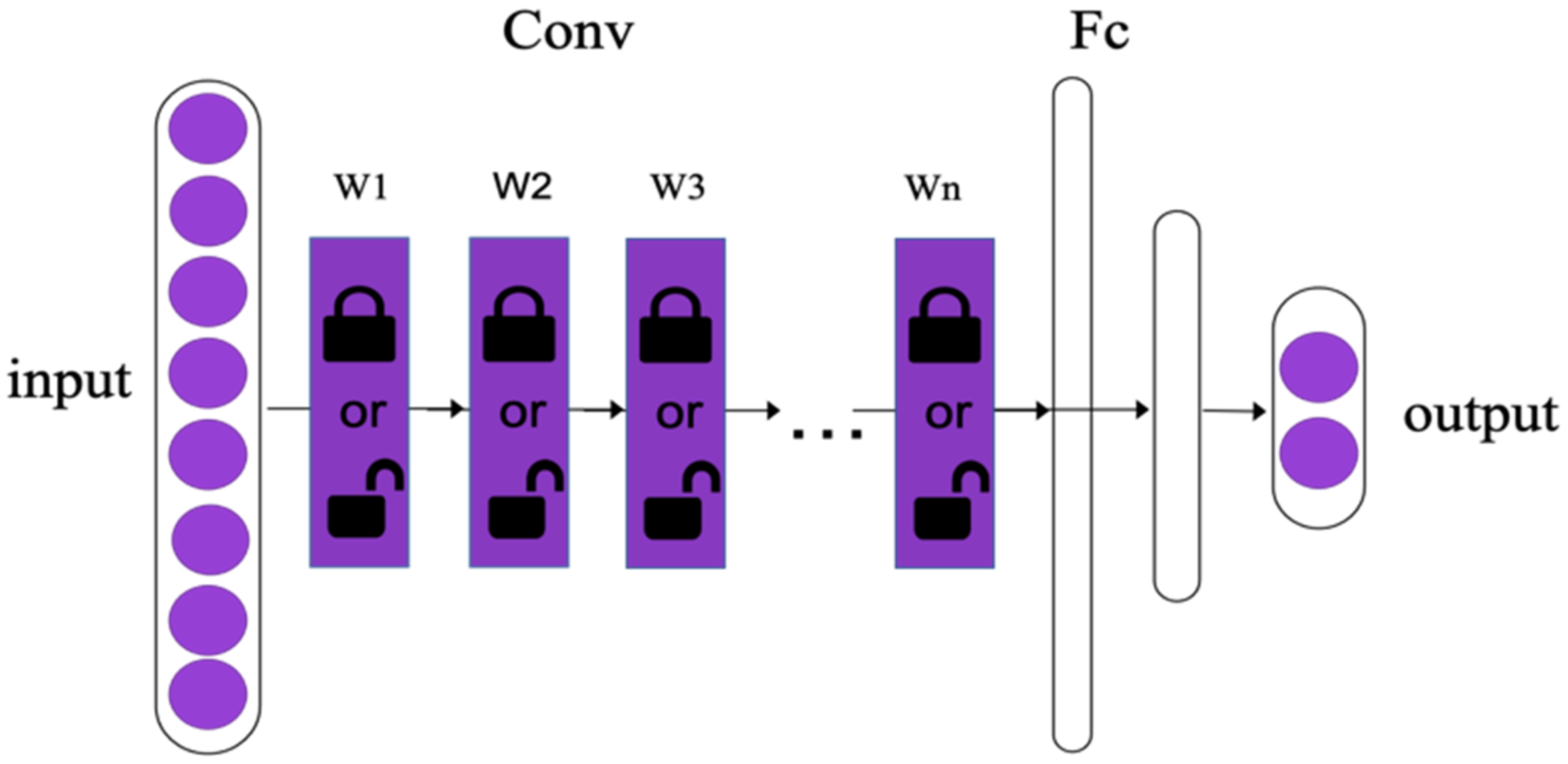

3.4. Transfer-Learning

3.5. General Configuration

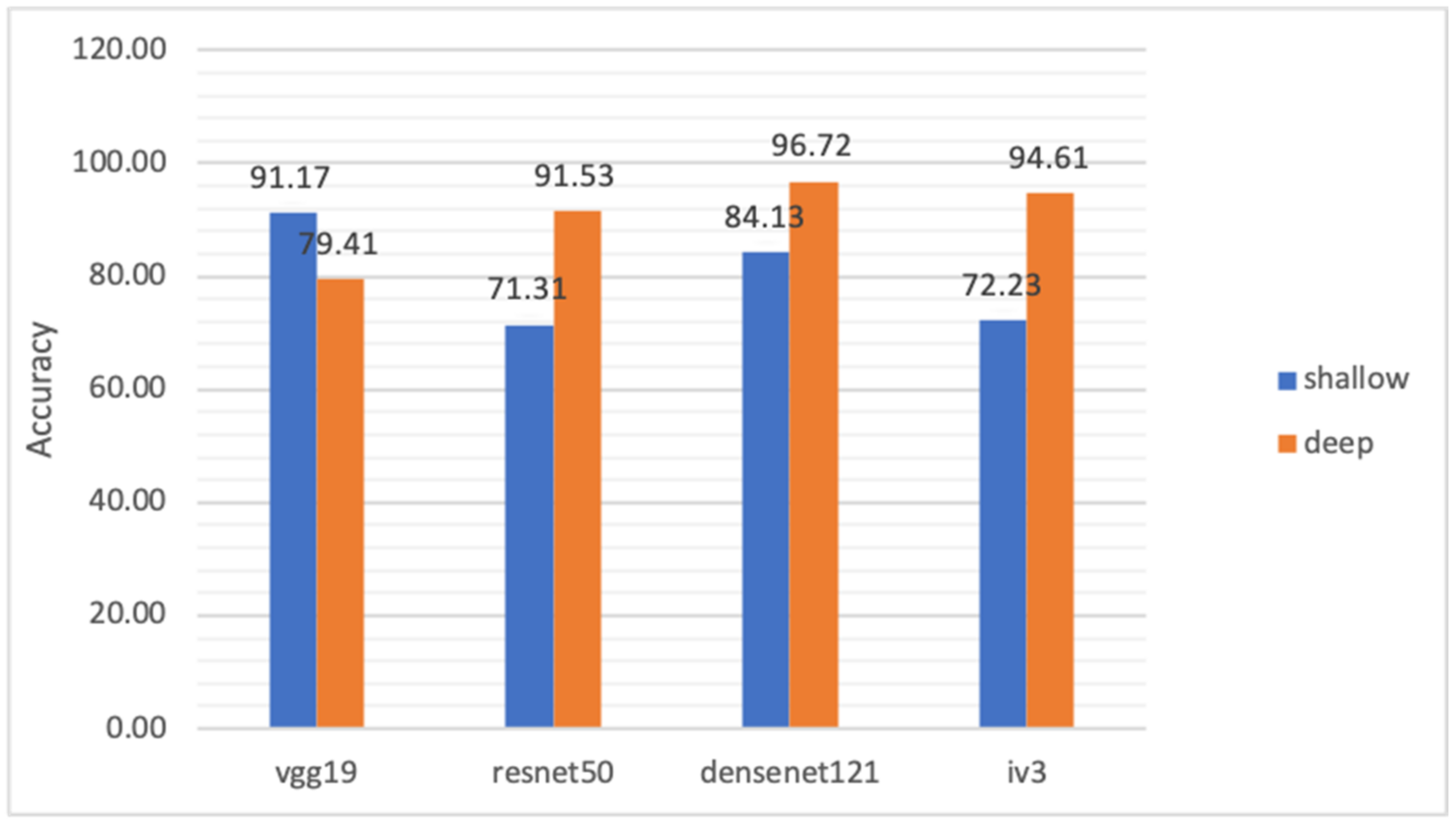

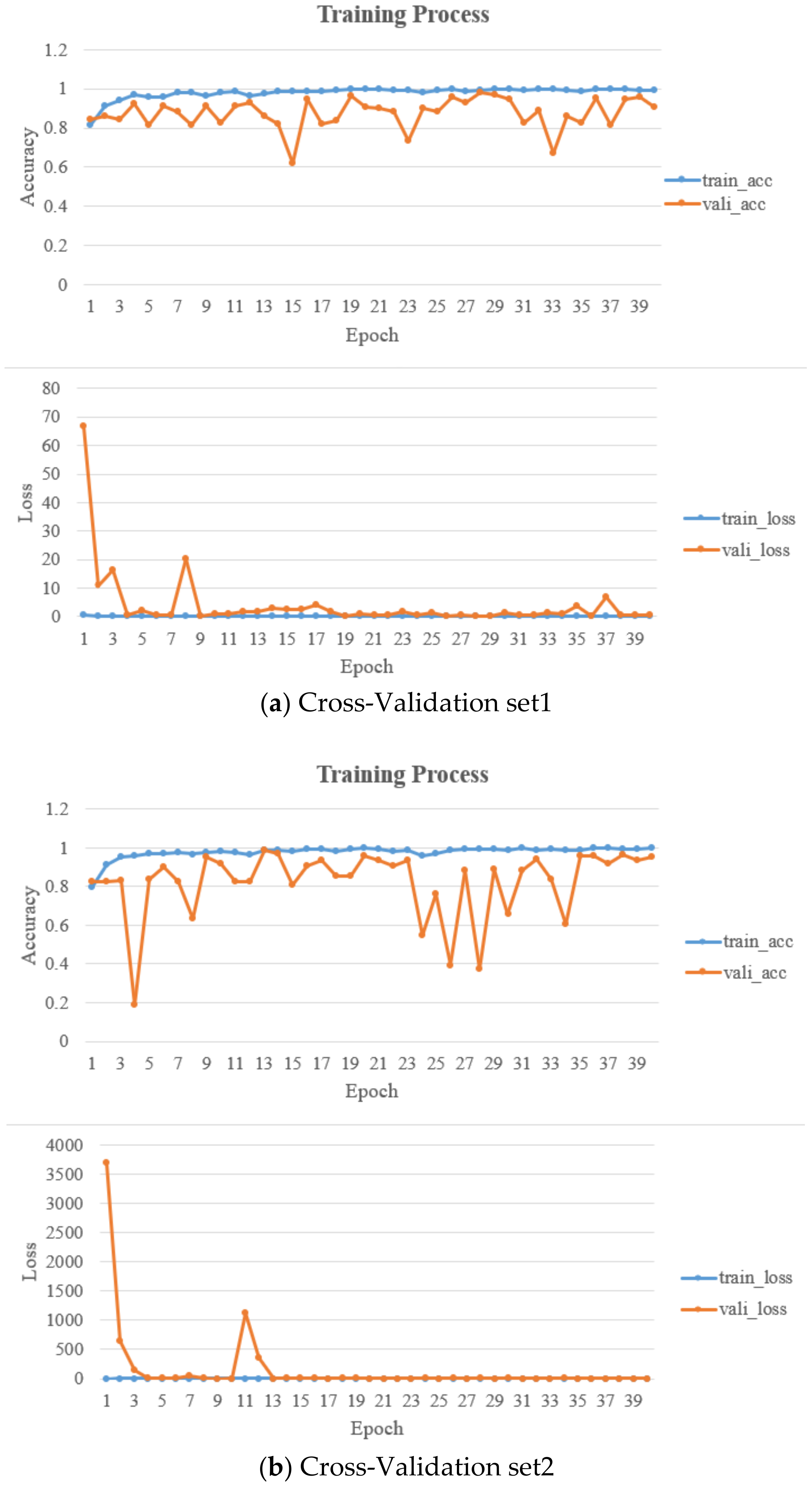

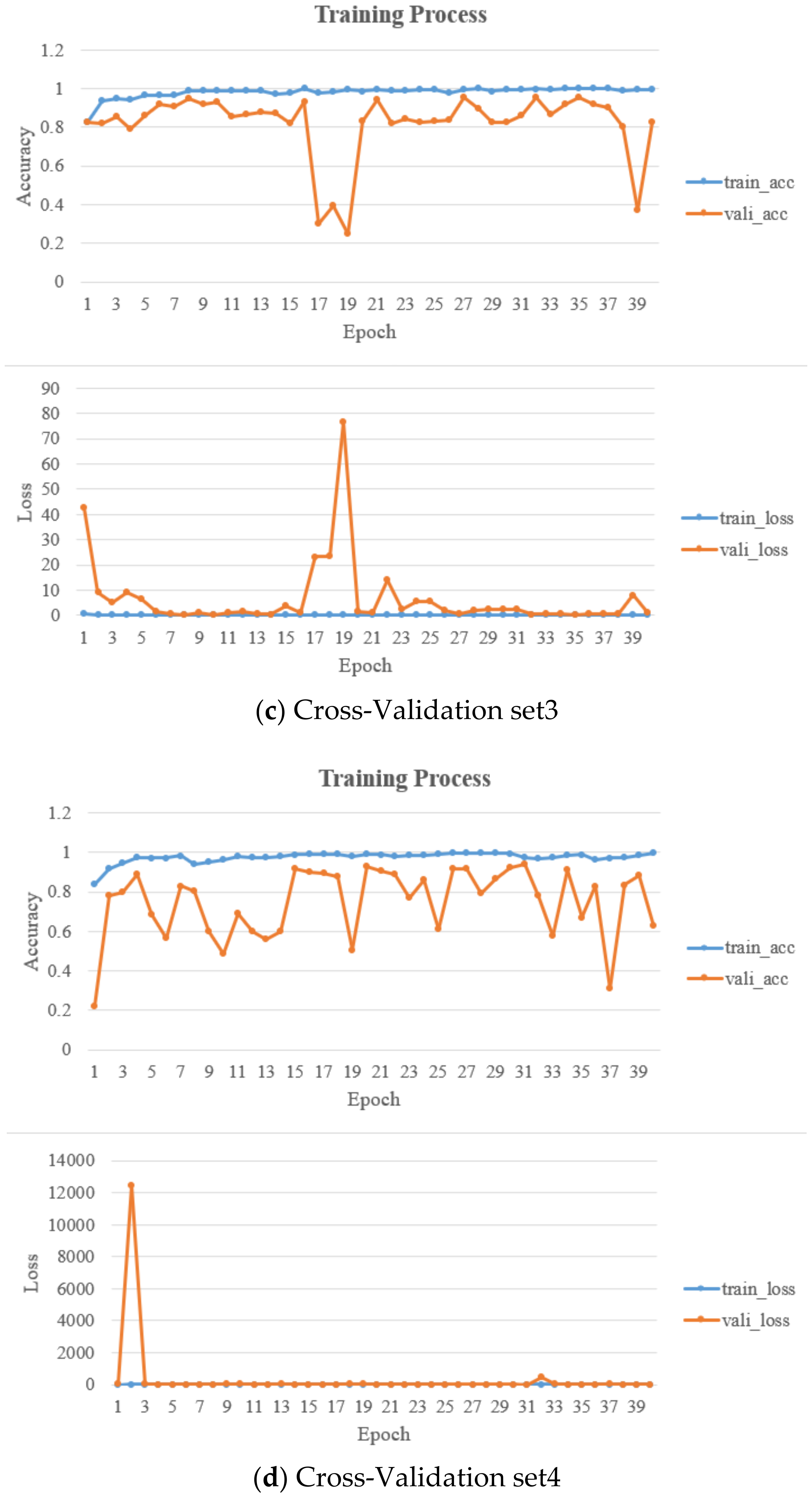

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | convolutional neural networks |
| FFT | fast Fourier transform |
| GLCM | gray-level co-occurrence matrix |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| LBP | local binary pattern |
| LVQ | learning vector quantization |
| MLP | multilayer perceptron |
| PCB | printed circuit board |
| SVM | support vector machine |


| Hyperparameter | Learning Rate | Decay | Batch Size | Optimizer | Loss |
|---|---|---|---|---|---|
| Value | 0.001 | 0.9 | 32 | Adam | Binary cross-entropy |
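The loss named in the table can be made concrete with a short sketch. The block below records the table's values in a plain dictionary and computes mean binary cross-entropy by hand. The names `CONFIG` and `binary_cross_entropy`, the clipping constant `eps`, and the sample labels and probabilities are assumptions made for illustration; they are not taken from the paper. The table does not say what the decay value of 0.9 applies to, so the sketch only stores it.

```python
import math

# Values copied from the hyperparameter table; the key names are illustrative.
CONFIG = {
    "learning_rate": 0.001,
    "decay": 0.9,  # the table does not state what this decay applies to
    "batch_size": 32,
    "optimizer": "Adam",
    "loss": "binary_crossentropy",
}


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over paired labels and probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip the probability so log(0) never occurs (eps is an assumed value).
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical labels and predicted probabilities, for illustration only.
labels = [1, 0, 1, 0]
probs = [0.9, 0.1, 0.8, 0.3]
loss = binary_cross_entropy(labels, probs)
print(f"loss = {loss:.4f}")
```

Confident, correct predictions drive the loss toward zero, while a confident wrong prediction is penalized heavily, which is why the probabilities are clipped before taking the logarithm.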

| Fine-Tuning | Recall | Precision | F1-Score | Accuracy |
|---|---|---|---|---|
| VGG19 FT, shallow | 96.03 | 83.47 | 86.53 | 91.17 |
| VGG19 FT, deep | 91.84 | 72.94 | 77.83 | 79.41 |
| ResNet50 FT, shallow | 86.32 | 65.42 | 68.32 | 71.31 |
| ResNet50 FT, deep | 93.31 | 88.15 | 87.12 | 91.53 |
| DenseNet121 FT, shallow | 91.31 | 82.13 | 73.14 | 84.13 |
| DenseNet121 FT, deep | 97.15 | 92.03 | 91.14 | 96.72 |
| InceptionV3 FT, shallow | 87.42 | 67.76 | 83.04 | 72.23 |
| InceptionV3 FT, deep | 92.13 | 88.56 | 86.31 | 94.61 |
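For readers who want to see how the four reported quantities relate, the following is a minimal sketch of the standard binary definitions of recall, precision, F1-score, and accuracy, computed from confusion-matrix counts. The function name `classification_metrics` and the counts are hypothetical and are not the paper's data. The table does not state how the metrics were averaged across classes, so this sketch should not be expected to reproduce its rows.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return recall, precision, F1 and accuracy as percentages."""
    recall = tp / (tp + fn)        # share of actual positives that were found
    precision = tp / (tp + fp)     # share of predicted positives that are correct
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    values = {
        "recall": recall,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
    }
    return {name: round(100 * value, 2) for name, value in values.items()}


# Hypothetical confusion-matrix counts, for illustration only.
metrics = classification_metrics(tp=90, fp=10, fn=5, tn=95)
for name, value in metrics.items():
    print(f"{name:<10} {value:6.2f}")
```

Recall and precision can diverge sharply when false positives and false negatives are unbalanced, which is one reason to report F1 alongside accuracy.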

| Method | Accuracy (%) |
|---|---|
| LBP + SVM | 83.03 |
| GLCM + SVM | 78.79 |
| VGG19 | 91.17 |
| ResNet50 | 91.53 |
| DenseNet121 | 96.72 |
| InceptionV3 | 94.61 |
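The size of the gap between the hand-crafted baselines and the CNN models is easier to read as differences in percentage points. The short sketch below ranks the methods using the accuracy values from the table and reports each gain over LBP + SVM. Choosing LBP + SVM as the reference point, and the variable names, are assumptions made for illustration.

```python
# Accuracy values (%) copied from the comparison table.
accuracy = {
    "LBP + SVM": 83.03,
    "GLCM + SVM": 78.79,
    "VGG19": 91.17,
    "ResNet50": 91.53,
    "DenseNet121": 96.72,
    "InceptionV3": 94.61,
}

# Reference point chosen for this sketch: the stronger hand-crafted baseline.
baseline = accuracy["LBP + SVM"]

# Sort from most to least accurate.
ranked = sorted(accuracy.items(), key=lambda item: item[1], reverse=True)

for method, acc in ranked:
    gain = acc - baseline
    print(f"{method:<12} {acc:6.2f}  ({gain:+.2f} points vs LBP + SVM)")
```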

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Li, Z.; Hao, R.; Wang, X.; Du, X.; Yan, B.; Ni, G.; Liu, J.; Liu, L.; Liu, Y. Classification of Microscopic Laser Engraving Surface Defect Images Based on Transfer Learning Method. Electronics 2021, 10, 1993. https://doi.org/10.3390/electronics10161993
