Abstract

Levels of Automation (LOA) provide a method for describing the authority granted to automated system elements to make individual decisions. However, these levels are technology-centric and provide little insight into overall system operation. The current research discusses an alternate classification scheme, referred to as the Level of Human Control Abstraction (LHCA). LHCA is an operator-centric framework that classifies a system’s state based on the required operator inputs. The framework consists of five levels, each requiring less granularity of human control: Direct, Augmented, Parametric, Goal-Oriented, and Mission-Capable. An analysis of several existing systems illustrates the presence of each of these levels of control and shows that many existing systems support states corresponding to multiple LHCAs. It is suggested that as the granularity of human control is reduced, the required human attention and cognitive resources decrease. Therefore, designing systems that permit the user to select among LHCAs during system control may facilitate human-machine teaming and improve the flexibility of the system.

1. Introduction

The vision of humans working effectively in a team with Artificially Intelligent Agents (AIAs) was clearly stated over 60 years ago [1]. Since that time, it has been demonstrated that automation can be incorporated into systems to moderate human workload [2], enhance situation awareness [3], and improve team performance [4]. Further, artificial intelligence technologies and automation have been incorporated to help control systems including nuclear power plants, aircraft, and, more recently, automobiles. However, these systems often fall short of creating an interactive human–AIA team, typically placing the human operator in a supervisory role. In this role, the operator is required to recognize anomalies, assume control under time pressure, and apply their skills and knowledge to save the system in the direst of circumstances [5].

The supervisory role is not a desirable role for the human, as operators in this role: (1) suffer vigilance decrements over time [6], reducing their ability to detect anomalies; (2) do not practice the skills necessary for system recovery and thus experience skill atrophy or fail to acquire the requisite skills [7]; and (3) do not necessarily have an adequate mental model of the situation to permit them to recover the system within the requisite time. Nevertheless, systems that place the operator in a supervisory role are designed time and again, often leading to mishaps and occasionally loss of life [8]. Although one may cite many reasons why we continue to implement such systems, one potential reason is that the commonly applied design frameworks fail to lead the designer to fully appreciate the complexity of human–AIA interaction.

One of the most frequently cited frameworks in the human-automation interaction literature is the “Levels of Automation” (LOA) framework proposed by Sheridan and Verplank in 1978 [9]. This work considered the design of a tele-operated undersea welding robot. Following a traditional Systems Engineering framework [10], these researchers decomposed the tasks which needed to be performed by the human–robot team into elemental tasks. This analysis led the researchers to the realization that some of these tasks required control loops with a duration that was shorter than the time required for the human to receive visual information from the remote location, issue a control command, transmit the command to the robot, and for the robot to execute the control command. Thus, they provided the ten levels of automation for any “single elemental decisive step” or decision, with the understanding that steps requiring fast control loops must be automated near Level 10 within tele-robotic systems while slower control loops could be automated near Level 1. The authors updated these levels of automation over the years, most recently publishing the updated list shown in Table 1 in 2011.

Table 1.

Sheridan and Verplank’s Levels of Automation in Man-Computer Decision Making [11].
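Sheridan and Verplank's timing argument can be made concrete with simple arithmetic. The sketch below is a hypothetical illustration, not their procedure: it sums the latency components named in the text (receiving visual information, issuing a command, transmitting it, and executing it) and checks whether a human in the loop can close a loop with a given required period. All latency values and example loop periods are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HumanLoopLatency:
    """Hypothetical latency components (seconds) of one tele-operated control cycle."""
    receive_visual: float    # remote imagery reaches the operator
    issue_command: float     # operator perceives, decides, and issues a command
    transmit_command: float  # command travels to the remote robot
    execute_command: float   # robot carries out the command

    def total(self) -> float:
        return (self.receive_visual + self.issue_command
                + self.transmit_command + self.execute_command)


def human_can_close_loop(required_period_s: float, latency: HumanLoopLatency) -> bool:
    """True if one human-in-the-loop cycle fits within the loop's required period."""
    return latency.total() <= required_period_s


# Illustrative values only, chosen as exact binary fractions so the sum is exact.
latency = HumanLoopLatency(receive_visual=0.5, issue_command=0.75,
                           transmit_command=0.5, execute_command=0.25)
print(latency.total())                      # 2.0
print(human_can_close_loop(0.05, latency))  # False: candidate for automation near Level 10
print(human_can_close_loop(10.0, latency))  # True: could remain near Level 1
```

Loops that fail this check are the ones the text suggests must be automated near Level 10; loops that pass could be left closer to Level 1.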

When applied during system design, this framework is often coupled with task-based allocation rules in which individual decisions or tasks are allocated to the human or machine based upon their respective capabilities [12,13]. A number of authors have proposed similar frameworks that describe the level of automation in different tasks and domains [14,15,16,17,18,19,20,21,22]. Parasuraman and colleagues suggested that tasks should be further decomposed during automation allocation decisions using a four-stage model composed of sensory processing, perception/working memory, decision making, and response selection [23]. However, allocating different levels of automation to each decision, and to each mental processing stage within each decision, produces a large number of potential system configurations that the operator must understand and maintain awareness of during system operation.
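The growth in configurations can be quantified with a simple count. Assuming, for illustration, that each of the four processing stages of every decision can independently be set to any of ten levels, there are 10^(4n) possible configurations for n decisions, as the short sketch below computes.

```python
def configuration_count(levels: int, stages: int, decisions: int) -> int:
    """Distinct automation configurations, assuming every processing stage of
    every decision can independently be assigned any of `levels` levels."""
    return levels ** (stages * decisions)


# Ten levels (Table 1) crossed with the four processing stages.
for n in (1, 2, 5):
    print(n, configuration_count(levels=10, stages=4, decisions=n))
# 1 10000
# 2 100000000
# 5 100000000000000000000
```

Even a system with only five automated decisions would, under this assumption, admit 10^20 distinct configurations.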

The Society of Automotive Engineers has adopted a taxonomy for classifying the automation of driving tasks [24]. The six levels in this taxonomy involve increasing levels of automation, in which increasing numbers of tasks are assumed by the automation. Interestingly, the first five levels assume that the human is active in the control loop of the vehicle, although human involvement is safety-critical in only the first four levels. This framework does not specifically focus on reducing operator workload, attention, or other human cognitive limitations; thus, its impact on human performance is unclear. Unlike the LOA framework, however, it addresses automation at the system level rather than the decision level.

An alternate framework, referred to as the “Continuum of Control”, has been proposed within the tele-robotics literature by Milgram and colleagues [25]. This taxonomy segments the control of robots into five levels, as shown in Table 2. At the lowest level, the operator remains completely in the loop, making every decision and controlling every motion performed by the robot. Level 2 describes “a master–slave control system, where all actions of the master are initiated by the human operator [and] are mimicked by the slave manipulator”. That is, the human operator communicates exactly how the robot should move, and the robot then determines the servo inputs required to achieve that movement. In Level 3, the human directs the motion of the robot through a command language. At Level 4, the human only supervises, monitoring and applying direction or redirection as required. Milgram does not clearly define Level 5, but Vagia describes this level as “the human gets out of the loop” [26]. While Milgram’s continuum of control is not clearly defined, the overall concept of progressively automating control appears in numerous other publications.

Table 2.

Milgram and colleagues’ Continuum of Control.

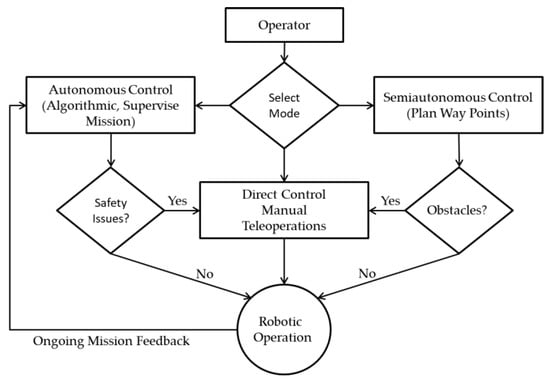

Although not intended as a framework, the control structure described by Chen and colleagues, shown in Figure 1, allows the human operator to exercise different levels of control depending upon their needs [27]. For example, such a control scheme might enable the human operator to balance their workload against their need for precise control. Three control loops are shown within this figure. In the first loop, the human performs all control. In the second loop, the robot performs the inner control loops while the operator exercises higher-level control. In the third loop, the robot exercises all control autonomously. This control scheme further illustrates that autonomous control requires safety considerations, such as obstacle avoidance, to be included in the automation. These safety considerations, when automated reliably, relieve the operator from monitoring the system’s performance and reduce operator workload.

Figure 1.

Control logic based on the tele-operation graphic provided by Chen and colleagues.

Chen’s model is somewhat consistent with Rasmussen’s abstraction, or means-ends, hierarchy [28]. Rasmussen proposes that human work can be structured in a hierarchy extending from physical structures to the more abstract concepts that are formed to achieve a high-level human goal. Each goal within each layer of this structure can be conceptualized as a control loop that encapsulates the control performed at the lower levels of the abstraction hierarchy to achieve the higher-level goal.

Hollnagel discusses designing human interaction with automation through the application of his Contextual Control Model (COCOM), which conceptualizes the operator as operating in one of four control modes: “scrambled”, “opportunistic”, “tactical”, and “strategic”. In this model, the tactical and strategic modes serve as outer loops for the others, and the strategic mode operates on a longer time scale [29].

A more concrete example of conceptualizing human–AIA interaction is provided by Johnson and colleagues [30]. The system discussed within this publication can be envisioned as a series of concentric control loops. The inner loops represent a larger number of shorter duration, more precisely specified tasks and the outer loops represent longer duration, more abstract, and less precisely specified tasks. In this study, users were able to interact with an AIA at one of four “treatment” levels. The lowest level consists of 11 independent commanded actions, with each of the other levels consisting of fewer available commanded actions, where these actions encapsulate multiple actions at the lower level. The fourth level provided a single command that could be issued by the human operator. Within this structure, when the operator provided a command at a higher level, the AIA was required to determine the appropriate lower-level actions.
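The idea that a single abstract command encapsulates many lower-level actions, which the AIA must then determine, can be sketched as a recursive expansion over a command hierarchy. The command names and structure below are hypothetical and are not the actions used in Johnson and colleagues' study; they only show how issuing a command at a higher level shifts the choice of lower-level actions to the agent.

```python
# Hypothetical command hierarchy: each abstract command maps to the
# lower-level commands the agent must select to carry it out.
HIERARCHY: dict[str, list[str]] = {
    "complete_mission": ["survey_area", "return_home"],
    "survey_area": ["goto_waypoint_a", "scan", "goto_waypoint_b", "scan"],
    "return_home": ["goto_home", "land"],
    "goto_waypoint_a": ["turn", "move_forward"],
    "goto_waypoint_b": ["turn", "move_forward"],
    "goto_home": ["turn", "move_forward"],
}


def expand(command: str) -> list[str]:
    """Recursively expand a command into primitive actions. Commands with no
    entry in HIERARCHY are treated as primitives the robot executes directly."""
    if command not in HIERARCHY:
        return [command]
    actions: list[str] = []
    for sub_command in HIERARCHY[command]:
        actions.extend(expand(sub_command))
    return actions


# One outer-loop command stands in for nine inner-loop actions.
print(expand("complete_mission"))
print(expand("scan"))  # a primitive expands to itself
```

An operator who issues `scan` directly works in an inner loop; one who issues `complete_mission` leaves every intermediate choice to the agent.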

Although much of the reviewed literature illustrates human control of automated systems by conceptualizing the control structure as a hierarchy, this concept does not appear to have been clearly stated or explored as a framework for human–AIA team design. Perhaps this is not surprising, as automation has traditionally not been conceptualized as capable of completing the full perceptual cycle to exert control [31,32]. However, the decreasing costs of sensors and actuators, together with more robust artificial intelligence engines, give automated systems the ability to perceive events within the world, decide upon actions in response to these events, and enact these actions. These innovations have thus enabled the proliferation of artificial agents as defined by Weis [33]. In an earlier paper, we suggested an alternate framework, referred to as the Level of Human Control Abstraction (LHCA), to describe the level of control a user must exercise in the control of a system [34]. In the current research, we explore the concept of human–agent teaming through this hierarchical control structure. By applying this framework to automation within manned and unmanned aircraft systems, we explore whether human interaction with existing systems can be described using this conceptual structure.

2. LHCA Method

We begin by reviewing and defining the LHCA framework and then describe the method used to analyze it.

2.1. Framework Definition

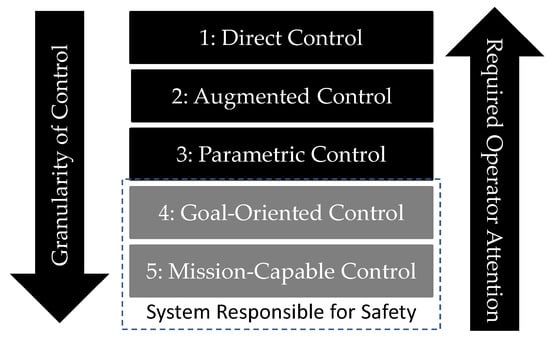

The LHCA framework is intended to provide a human-centric, rather than a system-centric, perspective. The framework comprises five control levels: (1) Direct, (2) Augmented, (3) Parametric, (4) Goal-Oriented, and (5) Mission-Capable. The five levels are shown and described in Table 3. The framework focuses on the cognitive tasks that the human operator relinquishes and that become the responsibility of the automation. Specifically, it recognizes that as higher levels of automation are applied in the system, the user exercises less granular control, which reduces the attention and other cognitive resources required to control the vehicle. This relationship is depicted in Figure 2. This tradeoff is particularly important in multi-tasking environments where the operator cannot dedicate sufficient cognitive resources to granular control of the vehicle. In systems where the operator can control the LHCA, they may prioritize some activities over others, allocating more of their attention to the details of one task than another. For example, while operating a vehicle, the operator may choose to allocate less attention to vehicle control by commanding the system to operate at a higher LHCA. The automation then assumes this control, and the operator, now relying more on the automation for a period of time, can devote less attention and fewer cognitive resources to the control task. This frees the operator to focus on alternate tasks or, perhaps, to control multiple vehicles. However, the ability of the operator to provide granular or low-level control of a system has been associated with functional gains and operational flexibility [35]. Granular control permits the operator to devise new solutions to the challenges they encounter during system operation [36].

We might, therefore, expect that the ability to assume granular control of the vehicle enables functional gains and improves the flexibility of the system to overcome a wider variety of circumstances within a mission, consistent with Ashby’s Law of Requisite Variety [37]. Systems having variable LHCA permit the operator to exercise granular control when necessary, while allowing the operator to select more abstract control to monitor additional systems or perform alternate tasks.

Table 3.

Proposed Levels of Human Control Abstraction framework.

Figure 2.

Tradeoff between the granularity of operator control and required operator attention.

2.2. Evaluation Method

This research was conducted in three phases. The first phase investigated the general application of automation within systems and its impact on the operators of these systems. This investigation led to the observation that the framework applies not to systems as a whole but to states within each system. As a result, application rules are proposed.

In the second phase, we evaluated the cognitive tasks associated with each LHCA for specific systems; the results are described in Section 3.2. In this phase, a general framework was developed for objectively evaluating the granularity of control, as well as the cognitive resources required to control a system. Specifically, these objective measures were applied, together with a high-level task analysis of UAV control, to describe the necessary cognitive functions. These cognitive functions were representative of the functions within this domain, and they influenced both the granularity of control and the cognitive resources required of the human operator. When considering the granularity of control, the number of cognitive tasks performed by the operator was evaluated. When considering cognitive resources, it was assumed that more frequent or additional psychomotor tasks would lead to higher workload and place higher demands on attention and cognitive resources. This is a common assumption within the workload literature, provided these tasks require the same cognitive resources [38]. However, it is recognized that if these tasks do not rely upon the same cognitive resources, this assumption is not necessarily accurate [39].

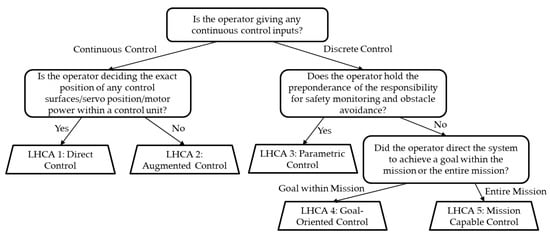

The third phase began with the development of a decision tree to categorize systems according to the general framework developed in the first two phases. However, it was quickly realized that these systems operate in multiple LHCA states. Therefore, the method was modified to categorize discrete LHCA states in which the systems could operate. As shown in Figure 3, the resulting decision tree was applied to assess whether the operator provided continuous control or discrete control, which differentiated LHCA 1 and 2 from LHCA 3, 4, and 5. Additional questions, as shown, further differentiated the LHCA. Once developed, this decision tree was applied to a number of existing systems to classify the LHCAs supported by each system. This application required a detailed examination of documentation surrounding the automated capabilities of each system. This produced the classification in Section 3.3. Further analysis of these systems and their capabilities led to general observations regarding the application and utility of LHCA.

Figure 3.

Decision tree applied to classify system states into one of the five Levels of Human Control Abstraction.
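Because the questions in Figure 3 are not reproduced in the text, the sketch below reconstructs the decision tree from the criteria the paper names elsewhere: continuous versus exclusively discrete control separates LHCA 1 and 2 from LHCA 3 to 5; whether the operator sets the exact control-surface and engine settings separates Direct from Augmented control (Section 3.3); the question of whether the operator holds the preponderance of responsibility for safety monitoring and obstacle avoidance identifies Parametric control (Section 3.3); and whether the operator issues goals after the mission begins separates Goal-Oriented from Mission-Capable control (Sections 3.2.4 and 3.2.5). The exact wording and ordering of the questions in Figure 3 may differ, and the field names are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class LHCA(IntEnum):
    DIRECT = 1
    AUGMENTED = 2
    PARAMETRIC = 3
    GOAL_ORIENTED = 4
    MISSION_CAPABLE = 5


@dataclass(frozen=True)
class StateAnswers:
    """Answers to the reconstructed decision-tree questions for ONE system state."""
    continuous_control: bool = False        # continuous (not exclusively discrete) operator inputs
    operator_sets_actuators: bool = False   # inputs fix exact control-surface and engine settings
    operator_owns_safety: bool = False      # operator holds preponderance of safety monitoring/obstacle avoidance
    operator_sets_goals_mid_mission: bool = False  # operator issues goals after the mission begins


def classify(state: StateAnswers) -> LHCA:
    """Walk the reconstructed tree from the root question to a leaf LHCA."""
    if state.continuous_control:
        return LHCA.DIRECT if state.operator_sets_actuators else LHCA.AUGMENTED
    if state.operator_owns_safety:
        return LHCA.PARAMETRIC
    if state.operator_sets_goals_mid_mission:
        return LHCA.GOAL_ORIENTED
    return LHCA.MISSION_CAPABLE


# Non-augmented fly-by-wire: continuous inputs passed straight to the actuators.
print(classify(StateAnswers(continuous_control=True, operator_sets_actuators=True)).name)  # DIRECT
# Auto-GCAS recovery: discrete, preset parameters; operator still owns mid-air avoidance.
print(classify(StateAnswers(operator_owns_safety=True)).name)  # PARAMETRIC
```

Because LHCA is assigned per state, a system that supports several states would be classified once for each state, yielding one row of Table 4.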

3. LHCA Application and Results

The results of the LHCA analysis are presented in three sections. First, we explain the development of application rules. We then discuss the results of applying the framework to the control of a UAV and the implications for the allocation of tasks between the human and system as a function of LHCA. Finally, we discuss the results of applying this framework to a family of real-world systems.

3.1. Application Rules

In developing the LHCA, two special circumstances arose that required rules to aid selection of the proper level.

- Rule 1: The LHCA is a state and is determined instantaneously.

A system can support multiple LHCAs and can transition between these states as required. These transitions can be initiated by the human operator or by the system. An operator-initiated transition occurs when the operator is flying an aircraft with continuous control inputs, at either LHCA 1 or 2, and activates the aircraft autopilot, which transitions the aircraft to Parametric Control, LHCA 3. An example of a system-initiated transition is the activation of an F-16’s Automatic Ground Collision Avoidance System (Auto-GCAS). During normal flying conditions, with the autopilot disabled, the operator’s control inputs generate signals that are provided to the control surface actuators. However, if the onboard Auto-GCAS detects an impending ground collision, the aircraft transitions to LHCA 3, Parametric Control. The system then commands the control surfaces to level off the aircraft at a safe altitude and maintain safe flight parameters.
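Rule 1 implies that LHCA should be recorded as a time-varying state rather than a fixed property of the system. The sketch below is a hypothetical logging structure, not part of the framework itself: it records each transition with its time and initiator and reports the LHCA in effect at any moment. The flight timeline and its timestamps are invented to mirror the autopilot and Auto-GCAS examples above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Initiator(Enum):
    OPERATOR = "operator"
    SYSTEM = "system"


@dataclass
class LhcaTimeline:
    """Hypothetical log treating LHCA as an instantaneous state (Rule 1)."""
    initial_level: int
    transitions: list[tuple[float, int, Initiator]] = field(default_factory=list)

    def transition(self, t: float, level: int, by: Initiator) -> None:
        """Record a change of LHCA at time t (seconds), initiated by `by`."""
        if not 1 <= level <= 5:
            raise ValueError("LHCA must be between 1 and 5")
        if self.transitions and t < self.transitions[-1][0]:
            raise ValueError("transitions must be recorded in time order")
        self.transitions.append((t, level, by))

    def level_at(self, t: float) -> int:
        """LHCA in effect at time t: the most recent transition at or before t."""
        level = self.initial_level
        for when, new_level, _ in self.transitions:
            if when > t:
                break
            level = new_level
        return level


# Illustrative flight (made-up times): the pilot flies with continuous inputs at
# LHCA 2, engages and later disengages the autopilot, then Auto-GCAS takes over.
flight = LhcaTimeline(initial_level=2)
flight.transition(60.0, 3, Initiator.OPERATOR)   # autopilot engaged
flight.transition(300.0, 2, Initiator.OPERATOR)  # autopilot disengaged
flight.transition(420.0, 3, Initiator.SYSTEM)    # Auto-GCAS recovery
print([flight.level_at(t) for t in (30.0, 100.0, 350.0, 430.0)])  # [2, 3, 2, 3]
```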

- Rule 2: When multiple control inputs are provided, the system LHCA is assigned based upon the control with the minimum LHCA value.

It is common for a human interface to include multiple control inputs, especially when operating at lower LHCA levels. For example, in an automobile we use the steering wheel to select direction and a combination of the gas pedal and brake to control speed. In some systems, these independent controls can be automated differently. A common example is traditional cruise control. In this example, the driver steers the automobile, an action consistent with LHCA 1. However, because cruise control is responsible for maintaining the vehicle speed at a selected value, an argument might be made that the vehicle is being operated at LHCA 3. This issue is resolved by examining the operator’s most detailed control input and assigning the LHCA based on that aspect of control. Therefore, according to this rule, even when cruise control is active, the operator is controlling the vehicle at LHCA 1, as they are directly determining the steering angle of the tires. Note that this rule follows from the human-centered nature of LHCA, because the operator’s attentional demands are primarily driven by the aspect of the vehicle with the lowest LHCA. That is, the driver must fully attend to road conditions and automobile direction to steer the vehicle; therefore, they are operating at LHCA 1.
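Rule 2 amounts to taking a minimum over the vehicle's control channels. The sketch below is illustrative; the channel names and per-channel LHCA values are assumptions chosen to match the cruise-control example, not a prescribed data model. Returning the binding channel as well shows which aspect of control drives the operator's attentional demand.

```python
def system_lhca(channel_levels: dict[str, int]) -> tuple[str, int]:
    """Rule 2: the system LHCA is the minimum LHCA over all active control
    channels. Also returns the channel that sets it (ties: first listed)."""
    if not channel_levels:
        raise ValueError("at least one control channel is required")
    channel = min(channel_levels, key=lambda name: channel_levels[name])
    return channel, channel_levels[channel]


# Cruise control engaged: speed is held at a set value (LHCA 3), but the
# driver still sets the steering angle directly (LHCA 1).
print(system_lhca({"steering": 1, "speed": 3}))  # ('steering', 1)
```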

3.2. Determination of Cognitive Tasks Associated with LHCAs

LHCA analysis is illustrated in this paper for a fixed-wing Unmanned Aerial System (UAS); analysis of additional systems is provided elsewhere [40]. To properly analyze the system state corresponding to each LHCA, an adequate system description must first be provided, as illustrated below.

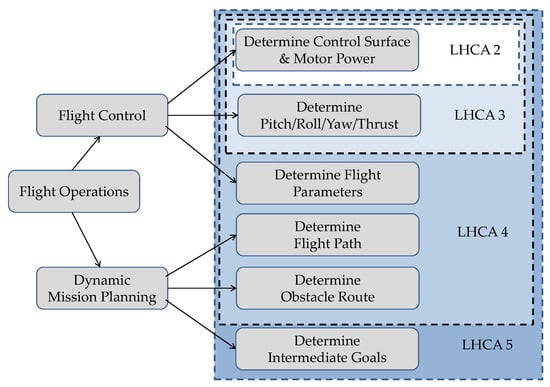

3.2.1. LHCA 1: Direct Control

In this configuration, the UAS is fully controlled by the operator using two joysticks; each joystick axis controls either a control surface (the elevator or rudder on the tail, or the ailerons on the wings) or the motor power. The operator controls the exact settings for all aspects of control using continuous control inputs, as is expected for a system controlled at LHCA 1. The cognitive tasks performed by the operator during flight operations can be separated into two categories, flight control and dynamic mission planning, as depicted in the cognitive task hierarchy in Figure 4.

Figure 4.

Depiction of Level of Human Control Abstraction (LHCA) Cognitive Task Allocation for a hypothetical Unmanned Aerial System (UAS). Tasks indicated within each LHCA grouping are allocated to the automation on the vehicle.

As shown in Figure 4, each of these tasks can be further decomposed into cognitive subtasks. Specifically, dynamic mission planning includes determining the intermediate goals, determining the flight path to achieve those goals, and determining changes in the flight path to avoid any potential obstacles. For flight control, the operator determines more detailed flight parameters to achieve the mission plan, decides upon the pitch, roll, yaw, and thrust needed to achieve them, and then translates the desired movement of the aircraft into commands to the control surfaces, adjusting the control surfaces in response to environmental conditions such as cross-winds. Note that the operator must be aware of the status of the system and all aspects of the environment while operating at LHCA 1. As such, any loss or diversion of operator attention may delay the recognition of an environmental event and increase the risk that control inputs will not be sufficient for safe flight. Additionally, the time lags inherent in tele-operation may make LHCA 1 inappropriate or unsafe for some systems.

3.2.2. LHCA 2: Augmented Control

In this configuration, the UAS is also controlled with two joysticks; however, each joystick axis controls either the thrust or the roll, pitch, or yaw rate. In this configuration, the operator does not need to translate the desired motion of the system into control surface positions and does not need to be aware of or responsive to changes in system state or environmental conditions that affect this translation. This contrasts with the Direct Control configuration because the position of the control surface is not controlled by the operator. Instead, the control surfaces are controlled by an algorithm that considers the joystick position as one of its multiple inputs. As shown in Figure 4, the human cognitive task “Determine Control Surface and Motor Power” is re-allocated to the automation when operating in Augmented Control. As certain environmental effects are now compensated for by the automation, momentary lapses or redirections of operator attention away from the control task are less likely to result in loss of control when operating at this LHCA.

3.2.3. LHCA 3: Parametric Control

The UAS is under the control of an autopilot system. The operator sets flight parameters, and the UAS adjusts the control surfaces and motor power to achieve those parameters. Therefore, the additional function “Determine Pitch/Roll/Yaw/Thrust” is allocated from the human to the automation. This function, together with the functions subsumed under LHCA 2, is under control of the automation at this LHCA. Notice that the human only physically interacts with the system intermittently under Parametric Control. Therefore, attention can be intermittently shifted to other tasks; how long it can be shifted depends on how long the operator can go between checks for potential obstacles or needed parameter changes without significantly increasing the risk of loss of control. However, the operator does assume some risk, dependent upon the reliability of the automation under the current flight conditions, that automation failure could result in loss of control. The operator is also still responsible for obstacle avoidance, as well as monitoring for other potential system failures. Thus, the risks of loss of control, collision, and failure to recover from system anomalies increase as the period between assessments increases.

3.2.4. LHCA 4: Goal-Oriented Control

In this configuration, the operator enters, mid-mission, one of many pre-programmed goals to be accomplished; for example, the operator may command the UAS to fly to a designated location and land there. As shown in Figure 4, the operator is only responsible for “Determine Intermediate Goals”, and the additional tasks of “Determine Flight Parameters” (e.g., altitude and airspeed), “Determine Flight Path”, and “Determine Obstacle Avoidance” are allocated to the automation. This configuration enables a decrease in human attention because the operator is no longer responsible for monitoring the environment. Therefore, the workload imposed upon a user by the task of controlling the vehicle will likely be similarly reduced. The operator may fully engage in another activity for substantial periods of time, receiving notice when the goal is accomplished or when status alerts are warranted. At this level of control, the operator is relying upon the system to detect problems and provide timely alerts. Thus, the risk of loss of control is determined predominantly by the reliability of the automation to control the aircraft and provide alerts early enough to give the operator time to fully understand the current situation and formulate an appropriate response.

3.2.5. LHCA 5: Mission-Capable Control

In this configuration, the operator specifies any mission parameters before takeoff. The UAS then executes the mission without further control inputs from the operator. As shown in Figure 4, the additional task of “Determine Intermediate Goals” is allocated to the automation. Similar to LHCA 4, the operator’s required attention is reduced. In fact, the operator might never interact with the vehicle after the mission begins. At this level of control, the operator is relying upon the system to not only detect and provide timely alerts, but to determine appropriate actions towards the fulfillment of each goal. Thus, the risk of loss of control is determined predominantly by the reliability of the automation to control the aircraft, properly formulate methods to achieve the goal, and provide alerts early enough to give the operator time to fully understand the current situation and formulate an appropriate response.

This example demonstrates the allocation of cognitive tasks associated with vehicle control from the operator to the system as LHCA increases. As designed, levels within this framework are generally associated with the allocation of tasks necessary for the control of a vehicle from the operator to the system, reducing the attentional and other cognitive resources the human must expend to control the system. However, the human’s knowledge of the system and environment, as well as the granularity of their control over the system, decreases with increasing LHCA.
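The allocation described in Sections 3.2.1 to 3.2.5 can be tabulated by tagging each cognitive task with the LHCA at which it passes to the automation. The sketch below uses only the six tasks named in the text (Figure 4 may decompose them further) and counts the tasks left to the operator, which is the granularity measure described in Section 2.2. The data structure and function names are illustrative assumptions.

```python
# Cognitive tasks named in Section 3.2, each tagged with the lowest LHCA at
# which it is allocated to the automation (per Sections 3.2.2 to 3.2.5).
TASK_AUTOMATED_AT: dict[str, int] = {
    "Determine Control Surface and Motor Power": 2,
    "Determine Pitch/Roll/Yaw/Thrust": 3,
    "Determine Flight Parameters": 4,
    "Determine Flight Path": 4,
    "Determine Obstacle Avoidance": 4,
    "Determine Intermediate Goals": 5,
}


def operator_tasks(lhca: int) -> list[str]:
    """Tasks still performed by the operator at the given LHCA."""
    return [task for task, level in TASK_AUTOMATED_AT.items() if level > lhca]


for lhca in range(1, 6):
    print(lhca, len(operator_tasks(lhca)))
# 1 6
# 2 5
# 3 4
# 4 1
# 5 0
```

The steep drop between LHCA 3 and LHCA 4 reflects the point at which obstacle avoidance, and with it continuous environmental monitoring, leaves the operator.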

3.3. Classification of Real-World Systems

LHCA was applied to several manned aircraft and Unmanned Aerial Systems (UAS) as shown in Table 4 using the decision tree provided in Figure 3. Additionally, the framework was applied to a commercial automotive system and an Unmanned Ground Vehicle (UGV) to investigate whether this framework would generalize to other vehicles.

Table 4.

Real-World Systems Analyzed and the LHCAs provided by each system. The presence of an “X” indicates the system supports a state with the corresponding LHCA.

The results indicate that some systems can be controlled at only one LHCA, while others permit operation at multiple LHCAs. The majority of the systems appear to operate at LHCA 3 or below. However, the UAS analyzed supported LHCAs above 3, with one system permitting operation only at LHCA 5. This system was designed to be operated by minimally trained operators in close proximity to obstacles. The manufacturer provided the highest possible level of automation because, to respond appropriately to environmental changes, the control loop must be closed faster than human operators are capable of. Further, support for LHCA 5 reduces the required operator training.

During application of the LHCA framework, a number of interesting observations were made. The most significant of these was the differentiation between direct and augmented fly-by-wire systems. Some fly-by-wire systems are controlled at LHCA 1 and others at LHCA 2. An LHCA 1 fly-by-wire system simply breaks the physical connection between the operator’s controls and the control surfaces, passing the control inputs electronically to the control surface actuators without adjusting them based on environmental data. This type of non-augmented fly-by-wire system is an example of Direct Control, LHCA 1, not Augmented Control, LHCA 2, because the operator still determines the exact position of the control surfaces and the engine settings. This lesson applies to both unmanned and manned vehicles.
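The distinction can be illustrated with two mappings from stick input to surface command. The sketch below is a hypothetical simplification: the augmented law is a plain proportional rate controller with a made-up gain and rate limit, far simpler than any real flight control law. It shows why the operator no longer determines the exact surface position at LHCA 2: with the stick centered, a gust-induced rotation produces no surface response in the direct system but an opposing surface command in the augmented one.

```python
def direct_fbw(stick: float) -> float:
    """LHCA 1 (non-augmented fly-by-wire): the normalized stick deflection in
    [-1, 1] is passed through unchanged as the normalized surface command."""
    return stick


def augmented_fbw(stick: float, measured_rate_dps: float,
                  max_rate_dps: float = 20.0, gain: float = 0.05) -> float:
    """LHCA 2 (augmented): the stick commands a body rate; a hypothetical
    proportional control law sets the surface from the rate error, clipped to [-1, 1]."""
    commanded_rate = stick * max_rate_dps
    surface = gain * (commanded_rate - measured_rate_dps)
    return max(-1.0, min(1.0, surface))


# Stick centered while a gust induces a 10 deg/s roll rate.
print(direct_fbw(0.0))           # 0.0: surfaces do not respond to the gust
print(augmented_fbw(0.0, 10.0))  # negative: surfaces oppose the disturbance
```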

Another lesson relates to LHCA determination when the allocation of responsibility may be ambiguous. As described in the open literature, the F-16 has an Auto-Ground Collision Avoidance System (Auto-GCAS), which is intended to prevent controlled flight into terrain accidents [41]. This system is designed to prevent mishaps caused by the operator’s loss of situation awareness, spatial disorientation, loss of consciousness from over-G, and gear-up landings, which are a significant source of aviation Class A mishaps [42]. In this system, the aircraft determines if a collision with the ground is imminent, presents audio and visual warnings to the operator, and rights the aircraft (i.e., wings level with heading selected based on the aircraft’s last course) if the operator does not respond.

In a scenario in which the Auto-GCAS senses an imminent ground collision and begins aircraft recovery, the LHCA classification is not obvious. However, the aircraft is using predetermined control inputs; the parameters determining the safe recovery altitude and cruising airspeed are stated within the flight manual. Therefore, the system is executing “exclusively discrete control”. Classification then requires an answer to the question: “Does the operator hold the preponderance of the responsibility for safety monitoring and obstacle avoidance?” This question may cause confusion because the operator is likely unconscious or severely disoriented when the Auto-GCAS is engaged. However, the Auto-GCAS is not able to detect and avoid mid-air collisions with other aircraft. This raises the question: “Can the operator be responsible for avoiding other aircraft while unconscious?” In this case, yes, because the Auto-GCAS is simply meeting and maintaining flight parameters until further notice and is not actively avoiding mid-air collisions. Responsibility for avoiding all obstacles not contained in the onboard terrain map on which the Auto-GCAS depends falls on the operator. Because the Auto-GCAS cannot detect and avoid mid-air collisions, and the operator may be unable to avoid them due to unconsciousness, a gap remains that presents risk. However, this risk is less than the risk presented by a nearly certain ground collision. The correct classification is therefore LHCA 3, Parametric Control, with the risk of mid-air collision accepted by aircraft designers and operators.

4. Discussion

Every system has an associated set of functions delegated to either the operator or the system to ensure effective operation. With respect to vehicle control, a number of authors have discussed systems being controlled at different levels of abstraction. The current research proposes classifying the performance of these systems in terms of the level of control abstraction employed by the human operator, i.e., the LHCA. As LHCA increases, responsibilities are reassigned from the operator to the system, and this reduction in operator responsibility directly reduces the number of required human control inputs. Correspondingly, the amount of required human attention decreases as LHCA increases. As the specificity of control inputs decreases, the operator can devote less attention to controlling the motion of the system, provided that the automation is sufficiently reliable under the current circumstances for the risk to the system to be acceptable. The decrease in human attention may correspond to a decrease in workload and an increased ability to dedicate attention to other duties. We expect the decrease in attention at higher LHCAs to reduce situation awareness with regard to the vehicle itself while permitting the operator to focus on other aspects of the mission, improving SA for higher-level tasks within the mission.

Reviewing the results yields several general observations about the different types of systems that were analyzed.

4.1. Unstable Systems

Unstable or underactuated systems, such as the DJI Phantom or Prenav multirotor UAVs, should not be operated at LHCA 1, because controlling these systems requires continuous adjustment of multiple, interdependent controls, often exceeding human capacity. Assigning the human to control a system that requires the control loop to be closed at a rate exceeding human capacity is likely to result in failure, as originally noted by Sheridan and Verplank [9]. Generally, LHCA 2 reduces the demand on the operator as compared to LHCA 1 and is therefore preferred unless direct control allows the operator to gain additional performance from the vehicle. This recommendation is made with the understanding that system failures will occur, such as the sensor failure identified as the cause of a B-2 accident in 2008 [43]. However, for a well-designed system, the risk of system failure is less than the risk imposed by a pilot’s inability to control an inherently unstable system, or a system that the inherent lag of tele-operation may render unstable.
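A design team could encode this recommendation as a filter on the control modes offered to the operator. The following is a minimal sketch under stated assumptions: `VehicleProfile`, `recommended_lhcas`, and the lag threshold `MAX_DIRECT_CONTROL_LAG_S` are hypothetical, and the 0.5 s value is illustrative only, since the text gives no numeric limit on tolerable tele-operation lag.

```python
from dataclasses import dataclass

# Hypothetical lag limit (seconds) above which direct tele-operation is treated
# as destabilizing; the text gives no number, so this value is illustrative.
MAX_DIRECT_CONTROL_LAG_S = 0.5


@dataclass(frozen=True)
class VehicleProfile:
    """Hypothetical vehicle description used only for this sketch."""
    name: str
    inherently_unstable: bool        # e.g., an underactuated multirotor UAV
    teleoperation_lag_s: float       # control-loop latency seen by the operator
    supported_lhcas: frozenset[int]  # LHCAs the control system can offer


def recommended_lhcas(vehicle: VehicleProfile) -> frozenset[int]:
    """Remove LHCA 1 (Direct) when closing the loop manually is likely to exceed
    human capacity, i.e., for unstable or lag-destabilized vehicles."""
    direct_control_unsafe = (
        vehicle.inherently_unstable
        or vehicle.teleoperation_lag_s > MAX_DIRECT_CONTROL_LAG_S
    )
    if direct_control_unsafe:
        return frozenset(level for level in vehicle.supported_lhcas if level != 1)
    return vehicle.supported_lhcas


quadrotor = VehicleProfile("multirotor UAV", True, 0.1, frozenset({1, 2, 3, 4}))
print(sorted(recommended_lhcas(quadrotor)))  # [2, 3, 4]
```

The filter only removes LHCA 1; it does not add levels the vehicle cannot support, so a vehicle offering only direct control would end up with no recommended mode, which flags a design problem rather than hiding it.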

4.2. Multiple LHCAs

Most of the systems analyzed could be operated at several LHCAs, enabling the operator to select the granularity of control inputs as appropriate. Often, increasing a system’s LHCA reduces the operator’s required attention and responsibilities but results in reduced granularity of control and reduced operational flexibility. In this context, operational flexibility refers to a system’s ability to be used as required by the operator, even outside of normal operations. At LHCA 5, the operator is not required to know what a system is doing on a moment-to-moment basis, and the system may not be able to perform tasks beyond those for which it was initially designed. In contrast, at LHCA 1, the operator has complete control over the physical elements of the system. The literature demonstrates that highly trained or motivated operators can employ systems in novel ways when given the flexibility to do so [38,44]. Providing highly granular control of the basic control surfaces therefore has the potential to permit operators to expand the operational utility of the system, increasing operational flexibility. Overall, this framework is consistent with the work of Chen and Milgram [25,27].

Another point concerns the tradeoff between an appropriate LHCA and acceptable risk. The acceptable level of risk is not a static determination; it varies with the Rules of Engagement/Employment (ROEs) and the criticality of the task. In military operations, ROEs are likely to be adjusted to accept higher risk if victory in battle hangs in the balance. In civilian emergency response, a higher LHCA may be deemed acceptable if there is no alternate means of accomplishing the task, or if the risks associated with inaction or with operating at a lower LHCA are judged to be greater than those of operating at a higher LHCA. Systems that provide a variable LHCA give the operator options to react appropriately in such scenarios.

Two additional questions are worthy of discussion. The first is: “Are the five LHCAs provided in this paper the only useful levels, or are these five the correct levels?” The answer to this question is unclear. However, we suggest that the general construct depicted in Figure 3, in which the designer considers the tradeoff between granularity of control and required operator attention during system design, is important. Also important in this tradeoff is that the system must be capable of safe operation at higher LHCAs, at least in certain well-characterized environments. The second is: “Should the system permit operation at multiple LHCAs?” Adaptive or adaptable automation has been shown to be useful in autonomous systems, permitting the operator to adjust their workload to compensate for environmental variability [11,45]. We propose that giving the operator relatively intuitive control over the granularity of control will permit better human–AIA teaming in systems that employ highly trained operators to control the system in uncertain environments. However, the utility of this functionality may depend upon the knowledge and training operators need to understand the limits and capabilities of the underlying technology, as well as their own limits and capabilities. The operator’s ability to understand their own limitations under high workload and in multi-tasking environments is important to their ability to assess the risk associated with operating a vehicle at higher LHCAs [46], and thus to select appropriate control schemes.
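The adaptable automation argued for above can be pictured as a controller that holds the set of LHCAs a system supports and lets the operator move among them. This is a minimal sketch assuming a hypothetical `AdaptableController` class; it shows only operator-initiated transitions and does not model how the operator judges risk, workload, or the limits of the automation.

```python
class AdaptableController:
    """Hypothetical controller that lets the operator choose the granularity of
    control from the LHCAs a system supports (adaptable automation)."""

    def __init__(self, supported_lhcas: set[int], initial_lhca: int) -> None:
        if not supported_lhcas <= {1, 2, 3, 4, 5}:
            raise ValueError("LHCAs range from 1 (Direct) to 5 (Mission-Capable)")
        if initial_lhca not in supported_lhcas:
            raise ValueError(f"LHCA {initial_lhca} is not supported")
        self.supported = frozenset(supported_lhcas)
        self.current = initial_lhca
        self.history: list[tuple[int, int]] = []  # (from_lhca, to_lhca) transitions

    def request(self, lhca: int) -> bool:
        """Switch to the operator-requested LHCA if the system supports it.

        Returns True on success. An unsupported request leaves the state
        unchanged, so the operator keeps a known control mode."""
        if lhca not in self.supported:
            return False
        if lhca != self.current:
            self.history.append((self.current, lhca))
            self.current = lhca
        return True


ctrl = AdaptableController({1, 2, 3}, initial_lhca=2)
ctrl.request(3)  # operator trades granularity of control for reduced attention
ctrl.request(5)  # refused: this system does not support LHCA 5
print(ctrl.current, ctrl.history)  # 3 [(2, 3)]
```

Refusing unsupported requests, rather than silently choosing the nearest level, keeps the operator aware of which control mode is active, which matters given the emphasis above on operators understanding the limits of the technology.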

4.3. Safety-Critical Considerations

All of the systems with operator safety concerns (i.e., manned systems) were limited to the first three LHCAs. This likely relates to the maturity of autonomous technologies, as well as to liability and regulation. Until system manufacturers can convince the public and regulators that their systems can be trusted with safety-critical responsibilities, systems with a greater potential for loss of human life perhaps should not be capable of operating at LHCA 4 or 5 for extended periods of time. Nevertheless, even if such systems can operate at these levels only in limited environments, this functionality may provide significant utility to the operator by improving operational flexibility, provided the operator understands these limitations.
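The observation about manned systems suggests a design rule for which LHCAs to expose to the operator. The sketch below assumes a hypothetical function `permitted_lhcas` and Boolean inputs describing the environment and the operator’s understanding of system limits; it is one possible reading of the paragraph above, not a published or regulatory rule, and it ignores the time limit implied by “extended periods of time”.

```python
def permitted_lhcas(
    supported: set[int],
    manned: bool,
    well_characterized_env: bool,
    operator_understands_limits: bool,
) -> set[int]:
    """Hypothetical rule: manned (safety-critical) systems are limited to
    LHCAs 1-3 unless the current environment is one in which LHCA 4-5 is known
    to be safe and the operator understands those limitations."""
    if not manned:
        return set(supported)
    if well_characterized_env and operator_understands_limits:
        return set(supported)
    # Default for manned systems: keep only Direct, Augmented, and Parametric.
    return {level for level in supported if level <= 3}


print(sorted(permitted_lhcas({1, 2, 3, 4, 5}, manned=True,
                             well_characterized_env=False,
                             operator_understands_limits=True)))  # [1, 2, 3]
```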

4.4. Generalizability

The current LHCA framework has been designed to classify user interaction with vehicle control systems. We believe this framework could be extended to other control systems, including systems with multiple control interfaces. An example might be a UAV with a slewable camera, where the control interfaces for both the UAV and the camera offer varying levels of automatic control. However, because the current framework is specific to the cognitive tasks necessary for vehicle control, extending this or analogous frameworks to other domains will likely require a deeper understanding of the cognitive tasks performed within each domain. Nonetheless, the general approach taken here and depicted in Figure 3 may well be extensible to other domains even if the five specific LHCAs are not.

5. Conclusions

The LHCA framework was proposed to enable the classification of vehicles and tele-robotic systems in order to support system-level trades during design. This framework is human-centric, focusing on the granularity of control the human operator must provide. The framework associates decreases in granularity with decreased requirements for human attention and other cognitive resources. Therefore, this framework focuses on the demands placed on the human within a human–AIA team.

This framework was used to classify existing piloted vehicle systems, demonstrating the broad applicability of the LHCA framework within the domains of vehicles and tele-robotics. The analysis illustrated that control states within the existing systems could be classified using this framework and that multiple systems supported multiple LHCAs, permitting the operator to trade granularity of control for cognitive effort while operating the system. The framework may also extend to systems with multiple control interfaces, such as a UAV with a slewable camera.

Some within the literature have argued against the proliferation of additional frameworks for describing the interaction of a human and automation [47,48]. Others have argued that the existing LOA framework has failed to account for human behavior and experience in operational environments [49]. LHCA is not likely to address all of the concerns associated with the LOA framework. Indeed, the proposed framework is specific to the cognitive tasks necessary for vehicle or physical device control, and extending it to other domains will likely require a deeper understanding of the cognitive tasks performed within each domain. Therefore, the primary contribution of this research is likely the clear communication of the need to consider the tradeoff between granularity of control and required human cognitive resources during the design of system interfaces, as well as the need to support dynamic, situationally aware transitions among the resulting system states. We believe that providing the ability to transition among states that embody this tradeoff can usefully support interactions between humans and artificially intelligent agents who collaborate as team members.

Author Contributions

Conceptualization, C.D.J. and M.E.M.; methodology, C.D.J., M.E.M., C.F.R. and D.R.J.; formal analysis, C.D.J.; writing—original draft preparation, C.D.J.; writing—review and editing, M.E.M., C.D.J., C.F.R. and D.R.J.; visualization, C.D.J., C.F.R. and M.E.M.; supervision, M.E.M. and C.F.R.; project administration, M.E.M.; funding acquisition, M.E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the U.S. Air Force Office of Scientific Research, Computational Cognition and Machine Intelligence Program.

Disclaimer

The views expressed in this paper are those of the authors and do not reflect the official policy or position of the United States Air Force, the Department of Defense, or the U.S. Government.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Licklider, J.C.R. Man-Computer Symbiosis. IRE Trans. Hum. Factors Electron. 1960, 1, 4–11.

- Kaber, D.B.; Endsley, M.R. The effects of level of automation and adaptive automation on human performance, situation awareness and workload in a dynamic control task. Theor. Issues Erg. Sci. 2004, 5, 113–153.

- Gorman, J.C.; Cooke, N.J.; Winner, J.L. Measuring team situation awareness in decentralized command and control environments. Ergonomics 2006, 49, 1312–1325.

- Kaber, D.B.; Riley, J.M.; Tan, K.-W.; Endsley, M.R. On the Design of Adaptive Automation for Complex Systems. Int. J. Cogn. Erg. 2001, 5, 37–57.

- Schutte, P.C. How to Make the Most of Your Human: Design Considerations for Single Pilot Operations. In Lecture Notes in Computer Science; Springer Science and Business Media LLC: Berlin, Germany, 2015; Volume 9174, pp. 480–491.

- Warm, J.S.; Parasuraman, R.; Matthews, G. Vigilance Requires Hard Mental Work and Is Stressful. Hum. Factors J. Hum. Factors Erg. Soc. 2008, 50, 433–441.

- Casner, S.; Hutchins, E.L.; Norman, D. The challenges of partially automated driving. Commun. ACM 2016, 59, 70–77.

- Vlasic, B.; Boudette, N.E. Self-Driving Tesla Was Involved in Fatal Crash, U.S. Says. The New York Times. Available online: https://www.nytimes.com/2016/07/01/business/self-driving-tesla-fatal-crash-investigation.html (accessed on 10 April 2020).

- Sheridan, T.B.; Verplank, W.L. Human and Computer Control of Undersea Teleoperators; Massachusetts Institute of Technology, Man-Machine Systems Laboratory: Cambridge, MA, USA, 1978.

- Beevis, H.; Essens, D.; Schuffel, P.J.M.D. Improving Function Allocation for Integrated Systems Design; University of Dayton Research Institute: Dayton, OH, USA, 1995; AC/243 (Panel 8) TP/7.

- Sheridan, T.B. Adaptive Automation, Level of Automation, Allocation Authority, Supervisory Control, and Adaptive Control: Distinctions and Modes of Adaptation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2011, 41, 662–667.

- Fitts, P. (Ed.) Human Engineering for an Effective Air Navigation and Traffic Control System; American Psychological Association: Washington, DC, USA, 1951.

- Price, H.E. The Allocation of Functions in Systems. Hum. Factors J. Hum. Factors Erg. Soc. 1985, 27, 33–45.

- Clough, B. Metrics, schmetrics! How the heck do you determine a UAV’s autonomy anyway. In Performance Metrics for Intelligent Systems Workshop; NIST Special Publications: Gaithersburg, MD, USA, 2002; p. 7.

- Draper, J.V. Teleoperators for advanced manufacturing: Applications and human factors challenges. Int. J. Hum. Factors Manuf. 1995, 5, 53–85.

- Endsley, M.R. The Application of Human Factors to the Development of Expert Systems for Advanced Cockpits. Proc. Hum. Factors Soc. Annu. Meet. 1987, 31, 1388–1392.

- Endsley, M.R. Level of automation effects on performance, situation awareness and workload in a dynamic control task. Ergonomics 1999, 42, 462–492.

- Endsley, M.R.; Kiris, E.O. The Out-of-the-Loop Performance Problem and Level of Control in Automation. Hum. Factors J. Hum. Factors Erg. Soc. 1995, 37, 381–394.

- Lorenz, B.; Di Nocera, F.; Röttger, S.; Parasuraman, R. The Effects of Level of Automation on the Out-of-the-Loop Unfamiliarity in a Complex Dynamic Fault-Management Task during Simulated Spaceflight Operations. Proc. Hum. Factors Erg. Soc. Annu. Meet. 2001, 45, 44–48.

- Ntuen, C.; Park, E. Human factor issues in teleoperated systems. In Proceedings of the First International Conference on Ergonomics of Hybrid Automated Systems I, Louisville, KY, USA, 15–18 August 1988; pp. 203–311.

- Proud, R.W.; Hart, J.J.; Mrozinski, R.B. Methods for determining the level of autonomy to design into a human spaceflight vehicle: A function specific approach. In Performance Metrics for Intelligent Systems; NIST Special Publications: Gaithersburg, MD, USA, 2003; p. 14.

- Riley, V. A General Model of Mixed-Initiative Human-Machine Systems. Proc. Hum. Factors Soc. Annu. Meet. 1989, 33, 124–128.

- Parasuraman, R.; Sheridan, T.; Wickens, C. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2000, 30, 286–297.

- Society of Automotive Engineers. U.S. Department of Transportation’s New Policy on Automated Vehicles Adopts SAE International’s Levels of Automation for Defining Driving Automation in On-Road Motor Vehicles. 2016. Available online: https://www.newswire.com/news/u-s-dept-of-transportations-new-policy-on-automated-vehicles-adopts-sae-5217899 (accessed on 10 April 2020).

- Milgram, P.; Rastogi, A.; Grodski, J. Telerobotic control using augmented reality. In Proceedings of the 4th IEEE International Workshop on Robot and Human Communication, Tokyo, Japan, 5–7 July 1995; pp. 21–29.

- Vagia, M.; Transeth, A.A.; Fjerdingen, S.A. A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl. Erg. 2016, 53, 190–202.

- Chen, J.Y.C.; Haas, E.C.; Barnes, M.J. Human Performance Issues and User Interface Design for Teleoperated Robots. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1231–1245.

- Rasmussen, J.; Pejtersen, A.M.; Goodstein, L.P. Cognitive Systems Engineering; John Wiley & Sons: New York, NY, USA, 1994.

- Hollnagel, E.; Bye, A. Principles for modelling function allocation. Int. J. Hum. Comput. Stud. 2000, 52, 253–265.

- Johnson, M.; Bradshaw, J.; Feltovich, P.J.; Jonker, C.; Van Riemsdijk, B.; Sierhuis, M. Autonomy and interdependence in human-agent-robot teams. IEEE Intell. Syst. 2012, 27, 43–51.

- Vernon, D.; Metta, G.; Sandini, G. A Survey of Artificial Cognitive Systems: Implications for the Autonomous Development of Mental Capabilities in Computational Agents. IEEE Trans. Evol. Comput. 2007, 11, 151–180.

- Neisser, U. Cognition and Reality: Principles and Implications of Cognitive Psychology; W.H. Freeman and Company: New York, NY, USA, 1976.

- Weiss, G. Multiagent Systems; MIT Press: Cambridge, MA, USA, 2013.

- Johnson, C.D.; Miller, M.; Rusnock, C.F.; Jacques, D.R. A framework for understanding automation in terms of levels of human control abstraction. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, Canada, 5–8 October 2017; pp. 1145–1150.

- Cox, L.C.A.; Szajnfarber, Z. System User Pathways to Change. In Disciplinary Convergence in Systems Engineering Research; Springer Science and Business Media LLC: Berlin, Germany, 2017; pp. 617–634.

- Rosenberg, N. Learning by Using. In Inside the Black Box: Technology and Economics, 1st ed.; Cambridge University Press: Cambridge, UK, 1982; pp. 120–140.

- Woods, D.D.; Hollnagel, E. Joint Cognitive Systems; Informa UK Limited: London, UK, 2006.

- McCracken, J.H.; Aldrich, T.B. Analyses of Selected LHX Mission Functions: Implications for Operator Workload and System Automation Goals; Defense Technical Information Center (DTIC): Fort Belvoir, VA, USA, 1984; p. 377.

- Wickens, C.D. Multiple resources and mental workload. Hum. Factors J. Hum. Factors Erg. Soc. 2008, 50, 449–455.

- Johnson, C.D. A Framework for Analyzing and Discussing Level of Human Control Abstraction; Air Force Institute of Technology: Wright-Patterson Air Force Base, Dayton, OH, USA, 2017.

- Norris, G. Auto-GCAS Saves Unconscious F-16 Pilot—Declassified US Air Force Video. Aviation Week. 2016. Available online: https://aviationweek.com/defense-space/budget-policy-operations/auto-gcas-saves-unconscious-f-16-pilot-declassified-usaf (accessed on 10 April 2020).

- Poisson, R.J.; Miller, M.E. Spatial Disorientation Mishap Trends in the U.S. Air Force 1993–2013. Aviat. Space Environ. Med. 2014, 85, 919–924.

- Carpenter, F.L. Summary of Facts, B-2A, U.S. Air Force Accident Investigation, S/N 89–0127. 2008. Available online: http://www.glennpew.com/Special/B2Facts.pdf (accessed on 8 April 2020).

- Cox, A. Functional Gain and Change Mechanisms in Post-Production Complex Systems; The George Washington University: Washington, DC, USA, 2017.

- Scerbo, M.W. Theoretical Perspectives on Adaptive Automation. Hum. Perform. Autom. Auton. Syst. 2019, 514, 103–126.

- McFarlane, D.C.; Latorella, K.A. The Scope and Importance of Human Interruption in Human-Computer Interaction Design. Hum. Comput. Interact. 2002, 17, 1–61.

- Kaber, D. Issues in Human–Automation Interaction Modeling: Presumptive Aspects of Frameworks of Types and Levels of Automation. J. Cogn. Eng. Decis. Mak. 2017, 12, 7–24.

- Wickens, C.D. Automation Stages & Levels, 20 Years After. J. Cogn. Eng. Decis. Mak. 2017, 12, 35–41.

- Jamieson, G.A.; Skraaning, G. Levels of Automation in Human Factors Models for Automation Design: Why We Might Consider Throwing the Baby Out With the Bathwater. J. Cogn. Eng. Decis. Mak. 2017, 12, 42–49.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).