Enabling Intelligent Data Modeling with AI for Business Intelligence and Data Warehousing: A Data Vault Case Study

Abstract

1. Introduction

2. Literature Review

2.1. Data Modeling Approaches and the Data Vault Methodology

2.2. Artificial Intelligence in Data Modeling: LLMs and Prompt Engineering

- Few-Shot Prompting—which involves providing some examples of the desired input–output pairs to the LLM so that it can adapt and provide a response based on the pattern observed in the examples provided [25];

- Contextual Prompting—which requires providing a context or background information (a few lines describing the area, domain, and why it is required to generate the output) to the LLM so that it can base the response on the context provided [26];

- Dynamic or Iterative Prompting—which refers to the practice of modifying prompts in real time based on previous responses so that performance improves [27];

- Zero-Shot Prompting—which requires asking the model to perform a task without providing any examples and relying solely on the instructions within the prompt [28];

- Instruction-Based Prompting—which refers to crafting clear, direct instructions in the prompt to specify the task that needs to be performed; it is usually used in combination with few-shot or zero-shot techniques [29];

- Chain-of-Thought Prompting—which requires the user to encourage the model to think through its reasoning process step by step and explain its thought process rather than just providing the final output, which might lead to more accurate and logical outputs [30].

- Temperature—This controls the diversity and creativity of the model-generated responses. Lower values (between 0.1 and 0.5) make the model more conservative, while higher values make it more exploratory, generating varied and less predictable responses. In this context, the temperature should be kept low, ideally as close to 0 as possible, so that the output adheres to the established rules and the risk of hallucination is reduced;

- Maximum Number of Tokens—This parameter defines the maximum length of text that can be generated in a single response. It is used to control the output length, especially when the output needs to be integrated further and a specific format is expected;

- Top-k Sampling—This restricts sampling at each generation step to the k most probable tokens. Lower values of k result in a more precise and less varied output, while higher values introduce greater diversity in the responses;

- Frequency and Presence Penalties—These are applied to regulate the tendency of the model to repeat certain phrases or introduce new concepts.
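To make the effect of temperature and top-k concrete, the following minimal sketch applies both to a toy list of token scores. It assumes the standard softmax formulation; the function names and the example logits are illustrative and are not taken from any specific LLM API.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize; all others get probability 0."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = set(ranked[:k])
    mass = sum(probs[i] for i in keep)
    return [probs[i] / mass if i in keep else 0.0 for i in range(len(probs))]

logits = [2.0, 1.0, 0.5, 0.1]  # hypothetical scores for four candidate tokens
cold = softmax_with_temperature(logits, 0.2)   # low temperature: near-deterministic
warm = softmax_with_temperature(logits, 1.5)   # high temperature: flatter distribution
narrow = top_k_filter(warm, 2)                 # only the two best tokens remain
```

With the low temperature almost all probability mass lands on the first token, which is the behavior wanted when the output must follow fixed modeling rules.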

- Hallucination, where the model generates plausible but incorrect or non-verifiable content (e.g., nonexistent table structures or invalid SQL syntax).

- Verifiability, where an output may appear structurally correct but fail to comply with formal standards or business rules upon closer inspection.

- GPT—Developed by OpenAI in 2023, this is one of the most advanced LLMs, optimized for coherent text generation, data analysis, and the automation of technical tasks. It is a versatile model that requires access through the OpenAI API, which involves operational costs. Currently in active development, it exists in multiple versions with varying costs, from smaller variants such as GPT-4o-mini to more advanced and faster versions, such as GPT-4o, which are available at higher costs [31];

- LLaMA—Developed by Meta in 2023, this open-source model is designed for users who seek greater control over their data and the ability to customize the model through fine-tuning [32];

- Gemini—Developed by Google in 2023, this model focuses on advanced text comprehension and natural language analysis. It is integrated within the Google Cloud ecosystem, enabling compatibility with BigQuery and other Google services. Its pricing is consumption-based, similar to OpenAI’s model [32].

3. Methodology

Algorithm 1. Automated Data Vault Model Generation Process

- Step 1: Construct dynamic prompt

- Step 2: Submit prompt to LLM

- Step 3: Parse generated output

- Step 4: Evaluate model quality

- Step 5: Return output and validity score

3.1. Data Vault Model Generation Process

- Context—describes the goal and modeling paradigm (e.g., “You are an expert data architect generating a Data Vault 2.0 model.”).

- Instructions—provides specific expectations for the LLM, such as table types to use, naming conventions, and required columns.

- Example—includes one or more sample input–output pairs demonstrating the expected format and logic.

- Dynamic Input—embeds the actual input DDL corresponding to the user’s source schema.
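The four prompt components listed above can be assembled with a small helper. This is a minimal sketch, not the authors' implementation: the function name `build_prompt`, the section labels, and the sample DDL strings are all hypothetical.

```python
def build_prompt(context, instructions, examples, source_ddl):
    """Assemble context, instructions, examples, and the source DDL in a fixed order."""
    rules = "\n".join(f"- {rule}" for rule in instructions)
    shots = "\n\n".join(
        f"Input:\n{src}\nOutput:\n{dst}" for src, dst in examples
    )
    sections = [
        context.strip(),
        "Instructions:\n" + rules,
        "Example:\n" + shots,
        "Source schema:\n" + source_ddl.strip(),
    ]
    return "\n\n".join(sections)

prompt = build_prompt(
    context="You are an expert data architect generating a Data Vault 2.0 model.",
    instructions=[
        "Hub tables: hub_[entity]",
        "Link tables: lnk_[entity1]__[entity2]",
    ],
    # Hypothetical input/output pair, shown only to illustrate the format.
    examples=[(
        "CREATE TABLE customers (customer_id INT, name VARCHAR(50));",
        "CREATE TABLE hub_customers (id INT, source_key VARCHAR(50));",
    )],
    source_ddl="CREATE TABLE orders (order_id INT, customer_id INT, order_date DATE);",
)
```

Only the last argument changes between runs, which is what makes the prompt dynamic while the context, rules, and examples stay fixed.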

- Hubs—representing unique business keys.

- Links—capturing relationships between hubs.

- Satellites—storing historical and descriptive attributes related to hubs or links.
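Once the LLM returns DDL, the generated tables have to be sorted into these three groups before any rule can be checked. The sketch below does this by table-name prefix, assuming the naming convention from the Appendix A prompt (`hub_`, `lnk_`, `hsat_`, `lsat_`); the regular expression and the function name are illustrative and only handle plain `CREATE TABLE` statements.

```python
import re

# Prefixes follow the naming convention given in the Appendix A prompt.
PREFIXES = {"hub_": "hub", "lnk_": "link", "hsat_": "satellite", "lsat_": "satellite"}

CREATE_RE = re.compile(
    r"CREATE\s+TABLE\s+(?:IF\s+NOT\s+EXISTS\s+)?([A-Za-z_][A-Za-z0-9_]*)",
    re.IGNORECASE,
)

def classify_tables(ddl):
    """Group table names found in a DDL script by Data Vault table type."""
    groups = {"hub": [], "link": [], "satellite": [], "unknown": []}
    for name in CREATE_RE.findall(ddl):
        lowered = name.lower()
        kind = next(
            (k for prefix, k in PREFIXES.items() if lowered.startswith(prefix)),
            "unknown",
        )
        groups[kind].append(lowered)
    return groups

# Hypothetical LLM output used to exercise the parser.
generated = """
CREATE TABLE hub_customers (id INT);
CREATE TABLE hsat_customers__main (id INT);
CREATE TABLE hub_orders (id INT);
CREATE TABLE lnk_customers__orders (id INT);
CREATE TABLE lsat_customers__orders__main (id INT);
"""
groups = classify_tables(generated)
```

Tables that land in the `unknown` group are a quick signal that the model ignored the naming rules.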

3.2. Validation and Evaluation Criteria

- Structural Integrity—which verifies that the core architectural components of a Data Vault model (hubs, links, satellites) are present and correctly configured. These rules check for structural soundness, such as the presence of unique business keys in hubs, correct cardinality and connectivity in links, and the existence of surrogate technical keys.

  - R1. Presence of all mandatory table types (hubs, links, and satellites).

  - R2. Correct linkage between link tables and their corresponding hub tables.

  - R3. Satellites must be attached to either hub or link tables where applicable.

  - P1. Absence of duplicate tables.

  - P2. Absence of duplicate columns.

  - R4. Number of satellite tables ≥ number of hub tables.

  - R5. Number of satellite link tables ≥ number of link tables.

  - R6. All descriptive attributes provided in the input must be preserved in the output model.

- Conformance to Data Vault Standards—assesses adherence to the Data Vault modeling conventions. This includes naming standards, proper placement of descriptive attributes within satellites, inclusion of mandatory metadata columns such as LoadDate, RecordSource, and hash keys, and avoidance of disallowed constructs (e.g., foreign keys defined between satellites).

  - R7. Adherence to naming conventions.

  - R8. Inclusion of mandatory columns for each type of table.

  - R9. Validation of data types and nullability constraints.

  - R10. Validation of primary key definitions.

  - R11. Validation of foreign key relationships.

  - R12. Historical data separation according to model specifications.

- Performance and Scalability—evaluates the model’s readiness for production use. This includes redundancy, fragmentation, scalability under volume growth, and the model’s adaptability to future changes.

  - R13. Appropriateness of chosen data types for performance optimization.

  - R14. Balanced ratio between the link and hub tables to ensure scalability and query efficiency.
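Several of the structural rules reduce to simple counts over table names. The following sketch checks R1, R4, R5, and the duplicate-table penalty P1. It reads R4 as comparing hub satellites with hubs, which is an interpretation; the function name and the sample table names are hypothetical.

```python
from collections import Counter

def check_structure(hubs, links, hub_sats, link_sats):
    """Return pass/fail flags for R1, R4, R5 and the duplicate names behind P1."""
    counts = Counter(hubs + links + hub_sats + link_sats)
    return {
        # R1: every mandatory table type is present.
        "R1": bool(hubs) and bool(links) and bool(hub_sats or link_sats),
        # R4: at least as many hub satellites as hubs (assumed reading of the rule).
        "R4": len(hub_sats) >= len(hubs),
        # R5: at least as many link satellites as links.
        "R5": len(link_sats) >= len(links),
        # P1: table names that occur more than once.
        "P1_duplicates": sorted(name for name, n in counts.items() if n > 1),
    }

report = check_structure(
    hubs=["hub_customers", "hub_orders"],
    links=["lnk_customers__orders"],
    hub_sats=["hsat_customers__main"],
    link_sats=["lsat_customers__orders__main"],
)
```

In this example R4 fails because `hub_orders` has no satellite, while the other checks pass.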

- = score for validation rule i;

- = weight for rule i;

- = penalty score for violation j (e.g., duplicate tables or columns);

- = penalty weight for violation j.
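The four quantities defined above suggest a weighted sum of rule scores reduced by weighted penalties. The sketch below assumes exactly that form, with rule scores and penalty scores in the range 0 to 1; both the form and the ranges are assumptions, not a restatement of the paper's equation. The factors and penalty weights are the ones reported in the weight allocation table, where each rule weight equals its factor divided by the sum of all factors (54).

```python
# Assigned factors per rule, as reported in the weight allocation table.
FACTORS = {
    "R1": 5, "R2": 5, "R3": 4, "R4": 3, "R5": 3, "R6": 5, "R7": 2,
    "R8": 4, "R9": 3, "R10": 5, "R11": 5, "R12": 5, "R13": 3, "R14": 2,
}
# Penalty weights for duplicate tables (P1) and duplicate columns (P2).
PENALTY_WEIGHTS = {"P1": 0.5, "P2": 0.2}

def rule_weights(factors):
    """Normalize factors so that the rule weights sum to 1."""
    total = sum(factors.values())
    return {rule: factor / total for rule, factor in factors.items()}

def validity_score(rule_scores, penalties):
    """Assumed form: sum(w_i * s_i) - sum(pw_j * p_j), with scores in [0, 1]."""
    weights = rule_weights(FACTORS)
    reward = sum(weights[r] * rule_scores.get(r, 0.0) for r in weights)
    cost = sum(PENALTY_WEIGHTS[p] * penalties.get(p, 0.0) for p in PENALTY_WEIGHTS)
    return reward - cost

all_passed = {rule: 1.0 for rule in FACTORS}
clean = validity_score(all_passed, {})                 # no violations
with_dup_table = validity_score(all_passed, {"P1": 1.0})  # one duplicate-table penalty
```

Under these assumptions a model that satisfies every rule scores 1.0, and a full duplicate-table penalty lowers that by 0.5.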

- : Perfect model—all rules satisfied, no penalties;

- : Very good model—minor issues or minimal duplication;

- : Acceptable model—moderate structural or rule violations;

- : Weak model—significant issues with structure or conformance;

- : Invalid model—fundamental non-compliance with Data Vault methodology.

3.3. Architecture of the Proposed Solution

4. Results and Discussion

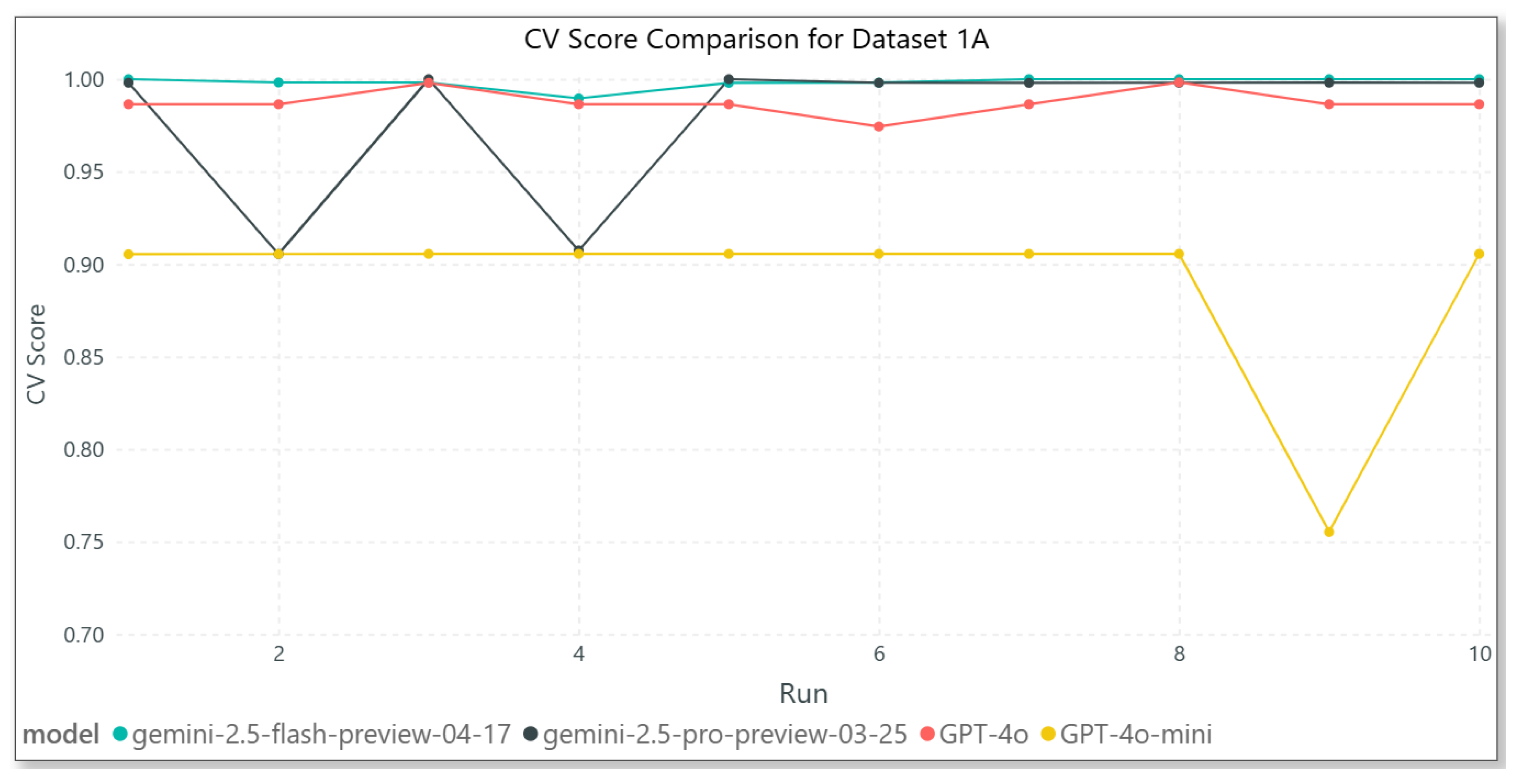

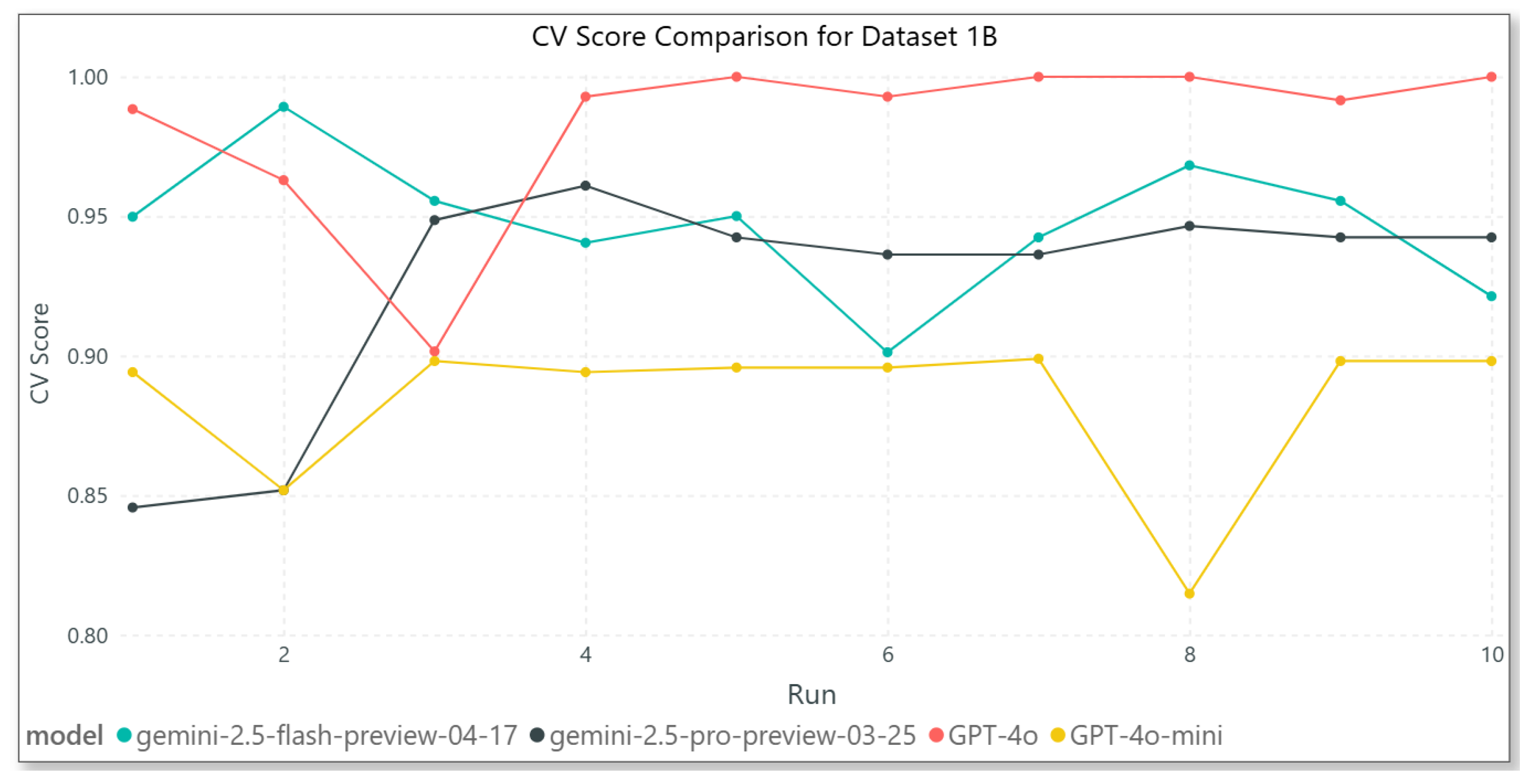

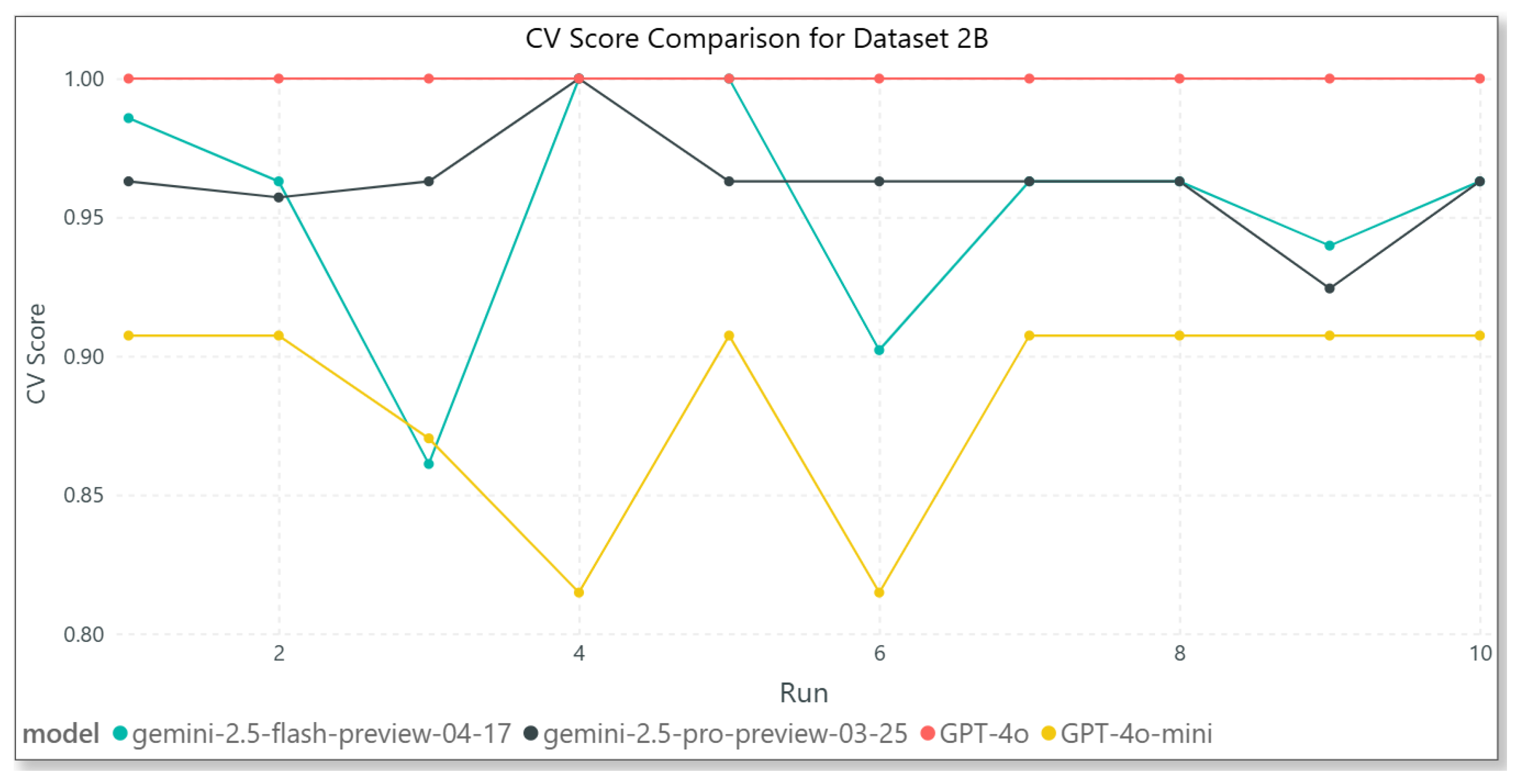

4.1. Experimental Datasets

- Transactional datasets simulate typical operational systems, such as sales, orders, and payments. These are commonly used in Business Intelligence environments for tasks like performance analysis, forecasting, and financial reporting.

- University datasets represent complex institutional systems, including students, courses, exams, and academic results. These support use cases such as curriculum management and student progress tracking.

- Category A—a moderately complex case, containing approximately 10 source tables.

- Category B—a more complex scenario, comprising approximately 16 source tables and various data inconsistencies.

4.2. LLMs Used in the Experiment

4.3. Analyzed Metrics and Model Evaluation

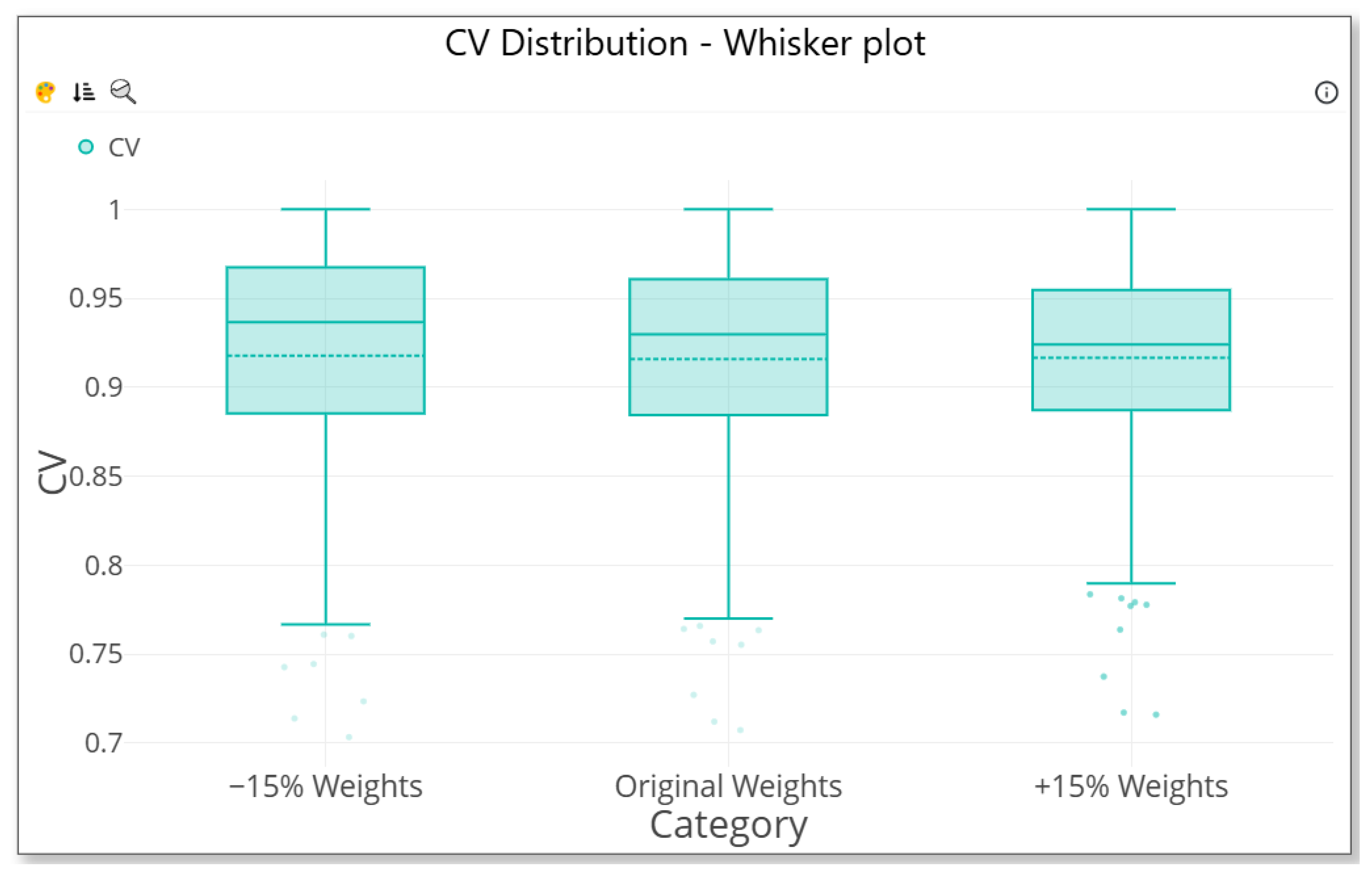

4.4. Validity Coefficient Calculation and Weight Allocation

- For a +15% increase, weights were adjusted upward and rounded up. Any weight exceeding the maximum threshold of 5 was capped accordingly.

- For a −15% decrease, weights were reduced and rounded down, with a minimum threshold of 1 enforced to preserve the influence of essential rules.
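The two adjustment rules can be written as one small function. This sketch assumes the adjustment is applied to the integer factors on the 1 to 5 scale (the cap of 5 points to that scale) before the weights are normalized again; the function name is hypothetical.

```python
import math

def adjust_factor(factor, change):
    """Scale a factor by (1 + change); round up and cap at 5 for increases,
    round down and floor at 1 for decreases."""
    scaled = factor * (1 + change)
    if change > 0:
        return min(5, math.ceil(scaled))
    if change < 0:
        return max(1, math.floor(scaled))
    return factor

increased = [adjust_factor(f, 0.15) for f in range(1, 6)]
decreased = [adjust_factor(f, -0.15) for f in range(1, 6)]
```

Because of the rounding and the two thresholds, the effective change per factor is coarser than 15%: a factor of 5 cannot rise further, and a factor of 1 cannot fall.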

4.5. Result Interpretation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

- You are an expert in Data Vault modeling. Your task is to generate a Data Vault schema that accurately represents this dataset.

- You need to use Data Vault 2.0 principles to generate the output.

- Do not include the sql prefix before code.

- Hub tables: hub_[entity]

- Hub satellite tables: hsat_[entity]__main

- Link tables: lnk_[entity1]__[entity2]

- Link satellite tables: lsat_[entity1]__[entity2]__main

- Remove unnecessary spaces in names (e.g., lnk _product should be lnk_product, h sat_column should be hsat_column)

- All tables must include: id, source_key, source_id, date_effective

- Satellite tables (hsat, lsat) must also include: date_ended

- Hub and link tables must contain only metadata columns and technical/key columns

- Satellite tables must contain only attribute columns

- Each link and hub need to be followed by a lsat or hsat table (even if there are no attribute columns, they should have at least metadata and technical columns)

- Columns ending with _sk, _id, or starting with id_ are keys, not attributes

- Use these keys to define relationships and create appropriate link tables

- Link tables need to be created based on existing hub tables.

- Do not change the granularity or the context of the data, so all necessary links that represent relationships between entities need to be created

- Very Important!!—Preserve original attribute column names from the source (DO NOT rename them)

- Columns that contain “date” in the name should be typed as date
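The column rules in this prompt are mechanical enough to verify on the generated output. The sketch below checks the mandatory columns and the key-column naming pattern exactly as the prompt states them; the function names and sample column lists are hypothetical.

```python
# Mandatory columns as listed in the prompt.
BASE_COLUMNS = {"id", "source_key", "source_id", "date_effective"}
SATELLITE_EXTRA = {"date_ended"}

def missing_columns(table_name, columns):
    """Return the mandatory columns that a generated table lacks."""
    required = set(BASE_COLUMNS)
    if table_name.lower().startswith(("hsat_", "lsat_")):
        required |= SATELLITE_EXTRA
    present = {c.lower() for c in columns}
    return sorted(required - present)

def is_key_column(column):
    """Keys end with _sk or _id, or start with id_, per the prompt."""
    name = column.lower()
    return name.endswith(("_sk", "_id")) or name.startswith("id_")

hub_gaps = missing_columns(
    "hub_customers", ["id", "source_key", "source_id", "date_effective"]
)
sat_gaps = missing_columns(
    "hsat_customers__main",
    ["id", "source_key", "source_id", "date_effective", "name"],
)
```

Here the hub is complete, while the satellite is flagged for the missing `date_ended` column.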

References

1. Golightly, L.; Chang, V.; Xu, Q.A.; Gao, X.; Liu, B.S.C. Adoption of cloud computing as innovation in the organization. Int. J. Eng. Bus. Manag. 2022, 14, 1–17.

2. Vines, A.; Tanasescu, L. An overview of ETL cloud services: An empirical study based on user’s experience. In Proceedings of the International Conference on Business Excellence, Bucharest, Romania, 23–24 March 2023; Volume 17, pp. 2085–2098.

3. Clissa, L.; Lassnig, M.; Rinaldi, L. How Big is Big Data? A comprehensive survey of data production, storage, and streaming in science and industry. Front. Big Data 2023, 6, 1271639.

4. Inmon, W.H.; Zachman, J.A.; Geiger, J.G. Data Stores, Data Warehousing, and the Zachman Framework: Managing Enterprise Knowledge; McGraw-Hill: New York, NY, USA, 2008; ISBN 0070314292.

5. Kimball, R.; Ross, M.; Thornthwaite, W.; Mundy, J.; Becker, B. Data Warehouse Lifecycle Toolkit: Practical Techniques for Building Data Warehouse and Business Intelligence Systems, 2nd ed.; Wiley: New York, NY, USA, 2008.

6. Linstedt, D. Data Vault Series 1—Data Vault Overview. The Data Administration Newsletter (TDAN). Available online: https://tdan.com/data-vault-series-1-data-vault-overview/5054 (accessed on 5 April 2025).

7. Linstedt, D. Building a Scalable Data Warehouse with Data Vault 2.0, 1st ed.; Morgan Kaufmann: Boston, MA, USA, 2016; ISBN 978-0-128-02648-9.

8. Vines, A.; Tănăsescu, L. Data Vault Modeling: Insights from Industry Interviews. In Proceedings of the International Conference on Business Excellence, Bucharest, Romania, 21–22 March 2024; Volume 18, pp. 3597–3605.

9. El-Sappagh, S.; Hendawi, A.; El-Bastawissy, A. A proposed model for data warehouse ETL processes. J. King Saud Univ.—Comput. Inf. Sci. 2011, 23, 91–104.

10. Fan, L.; Lee, C.-H.; Su, H.; Feng, S.; Jiang, Z.; Sun, Z. A New Era in Human Factors Engineering: A Survey of the Applications and Prospects of Large Multimodal Models. arXiv 2024, arXiv:2405.13426. Available online: https://arxiv.org/abs/2405.13426 (accessed on 15 May 2025).

11. Ege, D.N.; Øvrebø, H.H.; Stubberud, V.; Berg, M.F.; Elverum, C.; Steinert, M.; Vestad, H. ChatGPT as an inventor: Eliciting the strengths and weaknesses of current large language models against humans in engineering design. arXiv 2024, arXiv:2404.18479. Available online: https://arxiv.org/abs/2404.18479 (accessed on 15 May 2025).

12. Choi, S.; Gazeley, W. When Life Gives You LLMs, Make LLM-ADE: Large Language Models with Adaptive Data Engineering. arXiv 2024, arXiv:2404.13028. Available online: https://arxiv.org/abs/2404.13028 (accessed on 15 May 2025).

13. Mantri, A. Intelligent Automation of ETL Processes for LLM Deployment: A Comparative Study of Dataverse and TPOT. Eur. J. Adv. Eng. Technol. 2024, 11, 154–158.

14. Helskyaho, H.; Ruotsalainen, L.; Männistö, T. Defining Data Model Quality Metrics for Data Vault 2.0 Model Evaluation. Inventions 2024, 9, 21.

15. Inmon, W.H.; Imhoff, C.; Sousa, R. Corporate Information Factory, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2002; ISBN 978-0-471-43750-5.

16. Smith, J.; Elshnoudy, I.A. A Comparative Analysis of Data Warehouse Design Methodologies for Enterprise Big Data and Analytics. Emerg. Trends Mach. Intell. Big Data 2023, 15, 16–29.

17. Giebler, C.; Gröger, C.; Hoos, E.; Schwarz, H.; Mitschang, B. Modeling Data Lakes with Data Vault: Practical Experiences, Assessment, and Lessons Learned. In Proceedings of the 38th Conference on Conceptual Modeling (ER 2019), Salvador, Bahia, Brazil, 4–7 November 2019; Lecture Notes in Information Systems and Applications. pp. 63–77.

18. Vines, A.; Samoila, A. An Overview of Data Vault Methodology and Its Benefits. Inform. Econ. 2023, 27, 15–24.

19. Yessad, L.; Labiod, A. Comparative Study of Data Warehouses Modeling Approaches: Inmon, Kimball, and Data Vault. In Proceedings of the 2016 International Conference on System Reliability and Science (ICSRS), Paris, France, 15 November 2016; pp. 95–99.

20. Naamane, Z.; Jovanovic, V. Effectiveness of Data Vault compared to Dimensional Data Marts on Overall Performance of a Data Warehouse System. Int. J. Comput. Sci. Issues 2016, 13, 16.

21. Vines, A. Performance Evaluation of Data Vault and Dimensional Modeling: Insights from TPC-DS Dataset Analysis. In Proceedings of the 23rd International Conference on Informatics in Economy (IE 2024), Timisoara, Romania, 23–24 May 2024; Smart Innovation, Systems and Technologies. Volume 426, pp. 27–37.

22. Helskyaho, H. Towards Automating Database Designing. In Proceedings of the 34th Conference of Open Innovations Association (FRUCT), Riga, Latvia, 15–17 November 2023; pp. 41–48.

23. Ggaliwango, M.; Nakayiza, H.R.; Jjingo, D.; Nakatumba-Nabende, J. Prompt Engineering in Large Language Models. In Proceedings of the Data Intelligence and Cognitive Informatics (ICDICI 2023), Tirunelveli, India, 27–28 June 2023; pp. 387–402.

24. Lo, L.S. The CLEAR Path: A Framework for Enhancing Information Literacy Through Prompt Engineering. J. Acad. Librariansh. 2023, 49, 102720.

25. Ahmed, T.; Pai, K.S.; Devanbu, P.; Barr, E. Improving Few-Shot Prompts with Relevant Static Analysis Products. arXiv 2023, arXiv:2304.06815. Available online: https://arxiv.org/abs/2304.06815 (accessed on 20 May 2025).

26. Khattak, M.U.; Rasheed, H.; Maaz, M.; Khan, S.; Khan, F.S. MaPLe: Multi-modal Prompt Learning. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver Convention Center, Vancouver, BC, Canada, 18–22 June 2023; pp. 19113–19122.

27. Wang, B.; Deng, X.; Sun, H. Iteratively Prompt Pre-trained Language Models for Chain of Thought. arXiv 2022, arXiv:2203.08383. Available online: https://arxiv.org/abs/2203.08383 (accessed on 20 May 2025).

28. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models are Zero-Shot Reasoners. arXiv 2023, arXiv:2205.11916. Available online: https://arxiv.org/abs/2205.11916 (accessed on 20 May 2025).

29. Alhindi, T.; Chakrabarty, T.; Musi, E.; Muresan, S. Multitask Instruction-based Prompting for Fallacy Recognition. arXiv 2023, arXiv:2301.09992. Available online: https://arxiv.org/abs/2301.09992 (accessed on 20 May 2025).

30. Diao, S.; Wang, P.; Lin, Y.; Pan, R.; Liu, X.; Zhang, T. Active Prompting with Chain-of-Thought for Large Language Models. arXiv 2024, arXiv:2302.12246. Available online: https://arxiv.org/abs/2302.12246 (accessed on 20 May 2025).

31. OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774. Available online: https://arxiv.org/abs/2303.08774 (accessed on 25 April 2025).

32. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Roziere, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971. Available online: https://arxiv.org/abs/2302.13971 (accessed on 10 April 2025).

33. Hegde, C. Anomaly Detection in Time Series Data using Data-Centric AI. In Proceedings of the 2022 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 8–10 July 2022; IEEE: New York, NY, USA, 2022; pp. 1–6.

34. Chai, C.; Tang, N.; Fan, J.; Luo, Y. Demystifying Artificial Intelligence for Data Preparation. In Proceedings of the Companion of the 2023 International Conference on Management of Data (SIGMOD ’23), Seattle, WA, USA, 18–23 June 2023; pp. 13–20.

35. Hoberman, S. Data Model Scorecard: Applying the Industry Standard on Data Model Quality, 1st ed.; Technics Publications: Bradley Beach, NJ, USA, 2015; pp. 1–250. ISBN 978-1-63462-082-6.

36. Moody, D. Metrics for Evaluating the Quality of Entity Relationship Models. In Conceptual Modeling—ER ’98; Thalheim, B., Ed.; Lecture Notes in Computer Science; Springer: Berlin, Germany, 1998; Volume 1507, pp. 211–225.

37. OpenAI. Pricing—OpenAI API Documentation. Available online: https://platform.openai.com/docs/pricing (accessed on 15 April 2025).

38. Google Cloud. Generative AI Pricing—Vertex AI. Available online: https://cloud.google.com/vertex-ai/generative-ai/pricing (accessed on 5 April 2025).

| Quality Dimension | Rule | Short Rationale |
|---|---|---|
| QD1. Completeness | R1 | Ensures presence of all core table types (hubs, links, and satellites). |
| | R3 | Guarantees satellites are attached to hubs/links where required. |
| | R4 | Ensures enough satellites to store descriptive attributes. |
| | R5 | Ensures enough link satellites for proper relationship history. |
| | R6 | Verifies that all input descriptive attributes are included in the model. |
| | R12 | Confirms proper historical data separation is implemented. |
| QD2. Consistency | R2 | Ensures correct hub–link connectivity. |
| | P1 | Prevents duplicate tables that could cause ambiguity. |
| | P2 | Prevents duplicate columns that could create inconsistencies. |
| | R7 | Enforces standardized naming conventions. |
| | R8 | Ensures presence of mandatory metadata columns. |
| | R9 | Validates data types and nullability. |
| | R10 | Ensures valid primary keys are defined. |
| | R11 | Ensures valid foreign keys are defined. |
| | R13 | Uses appropriate data types for uniform implementation. |
| QD3. Flexibility | R4 | Allows for growth by accommodating new attributes. |
| | R5 | Supports scalability by maintaining proper link satellites. |
| | R12 | Historical separation allows safe integration of new sources. |
| | R14 | Maintains proper link–hub ratio for extensibility. |
| QD4. Comprehensibility | R2 | Clear connectivity improves model readability. |
| | P1 | Avoids confusion from duplicate tables. |
| | P2 | Avoids confusion from duplicate columns. |
| | R7 | Consistent naming makes the model easier to understand. |
| QD5. Maintainability | R8 | Metadata supports auditing and lineage tracking. |
| | R9 | Valid data types reduce long-term maintenance issues. |
| | R10 | Well-defined primary keys prevent model degradation. |
| | R11 | Valid foreign keys keep relationships consistent over time. |
| | R13 | Performance-oriented data types ease future scaling. |
| | R14 | Balanced link–hub ratio avoids excessive model complexity. |

| Impact | Very Low | Low | Moderate | High | Very High |
|---|---|---|---|---|---|
| Factor | 1 | 2 | 3 | 4 | 5 |

| Scenario | A. Medium Complexity | B. High Complexity |

|---|---|---|

| 1. Transactional Data | A database for an online store managing information about customers, products, orders, and order details. | A database for an e-commerce platform, including management of suppliers, discounts, and delivery providers. |

| 2. University Data | A university database managing students, courses, and professors. | An extended university system including information about exams, grades, and academic programs. |

| Case Study/Scenario | Tables |
|---|---|
| 1. Transactional Data—A (1A) | customers: Customer information; products: Product catalog; orders: Order records; order_details: Products included in orders; suppliers: Suppliers; shipments: Deliveries; payments: Payments; discounts: Order discounts; reviews: Product reviews; inventory: Available stock |
| 1. Transactional Data—B (1B) | users: Customer information; products: Product catalog; categories: Product categories; product_image: Product image storage; reviews: Product reviews; orders: Order records; order_details: Products included in orders; shipments: Deliveries; favorites: Favorite product entries; payments: Payments; discount_coupons: Discount coupons; user_coupons: User coupon allocation; platform_settings: Platform configurations; support_messages: Account message information; product_price_history: Product price history; returns: Returns |
| 2. University Data—A (2A) | students: Student list; courses: Course information; professors: Professor details; enrollments: Course enrollments; departments: Academic departments; exams: Scheduled exams; grades: Student grades; classroom: Classroom allocation; assignments: Student assignments; programs: Study programs |
| 2. University Data—B (2B) | students: Student list; courses: Course information; professors: Professor details; enrollments: Course enrollments; departments: Academic departments; exams: Scheduled exams; grades: Student grades; classrooms: Classroom allocation; assignments: Student assignments; programs: Study programs; buildings: University buildings and facilities; attendance: Student course attendance; library_books: University library book catalog; book_loans: Book loan records; schedule: Course timetable |

| Model | Input Cost (USD/1M Tokens) | Output Cost (USD/1M Tokens) |

|---|---|---|

| GPT-4o | USD 2.50/USD 1.25 * | USD 10.00 |

| Gemini 2.5 Pro | USD 1.25/USD 2.50 ** | USD 10.00/USD 15.00 ** |

| GPT-4o-mini | USD 0.15/USD 0.075 * | USD 0.60 |

| Gemini 2.5 Flash | USD 0.15 | USD 0.60 |
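The per-token prices above translate into a per-run cost once token counts are known. The sketch below uses the first price listed in each cell and ignores the alternative rates marked with asterisks, since the conditions attached to them are not reproduced here. The token counts in the example are hypothetical.

```python
# (input, output) prices in USD per 1M tokens, first value from each table cell.
PRICES_USD_PER_1M = {
    "GPT-4o": (2.50, 10.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "GPT-4o-mini": (0.15, 0.60),
    "Gemini 2.5 Flash": (0.15, 0.60),
}

def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the USD cost of one request from its token counts."""
    in_price, out_price = PRICES_USD_PER_1M[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

# Hypothetical request: 4,000 prompt tokens and 6,000 generated tokens.
costs = {model: estimate_cost(model, 4_000, 6_000) for model in PRICES_USD_PER_1M}
```

For schema generation the output is usually longer than the prompt, so the output price dominates the total.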

| Component | Rule Number | Assigned Factor | Weight |
|---|---|---|---|
| Structural Integrity | R1 | 5 | 0.092593 |
| | R2 | 5 | 0.092593 |
| | R3 | 4 | 0.074074 |
| | P1 | NA | 0.5 |
| | P2 | NA | 0.2 |
| | R4 | 3 | 0.055556 |
| | R5 | 3 | 0.055556 |
| | R6 | 5 | 0.092593 |
| Standard Compliance | R7 | 2 | 0.037037 |
| | R8 | 4 | 0.074074 |
| | R9 | 3 | 0.055556 |
| | R10 | 5 | 0.092593 |
| | R11 | 5 | 0.092593 |
| | R12 | 5 | 0.092593 |
| Performance and Scalability | R13 | 3 | 0.055556 |
| | R14 | 2 | 0.037037 |

| Dataset | LLM Used | QD1 | QD2 | QD3 | QD4 | QD5 |

|---|---|---|---|---|---|---|

| 1A | Gemini-2.5-flash-preview-04-17 | 10.00 | 8.89 | 10.00 | 10.00 | 8.33 |

| 1A | Gemini-2.5-pro-preview-03-25 | 9.67 | 8.44 | 10.00 | 10.00 | 7.67 |

| 1A | GPT-4o | 10.00 | 6.89 | 10.00 | 10.00 | 6.67 |

| 1A | GPT-4o-mini | 8.17 | 7.56 | 10.00 | 9.50 | 6.67 |

| 1B | Gemini-2.5-flash-preview-04-17 | 7.50 | 8.67 | 6.75 | 10.00 | 8.33 |

| 1B | Gemini-2.5-pro-preview-03-25 | 8.17 | 9.00 | 7.25 | 10.00 | 8.33 |

| 1B | GPT-4o | 9.83 | 9.44 | 9.25 | 10.00 | 9.67 |

| 1B | GPT-4o-mini | 8.17 | 9.00 | 9.75 | 10.00 | 10.00 |

| 2A | Gemini-2.5-flash-preview-04-17 | 9.67 | 9.11 | 8.50 | 9.50 | 8.50 |

| 2A | Gemini-2.5-pro-preview-03-25 | 10.00 | 9.89 | 8.00 | 10.00 | 8.67 |

| 2A | GPT-4o | 9.33 | 9.00 | 10.00 | 8.00 | 10.00 |

| 2A | GPT-4o-mini | 8.00 | 9.67 | 9.50 | 10.00 | 10.00 |

| 2B | Gemini-2.5-flash-preview-04-17 | 9.83 | 9.22 | 8.25 | 9.50 | 8.50 |

| 2B | Gemini-2.5-pro-preview-03-25 | 10.00 | 9.78 | 7.75 | 10.00 | 8.50 |

| 2B | GPT-4o | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 |

| 2B | GPT-4o-mini | 8.33 | 9.78 | 9.75 | 10.00 | 9.83 |

| Dataset | LLM Used | Perfect Model | Very Good Model | Acceptable Model | Weak Model | Invalid Model |

|---|---|---|---|---|---|---|

| 1A | GPT-4o | 0 | 10 | 0 | 0 | 0 |

| 1A | Gemini 2.5 Pro | 2 | 8 | 0 | 0 | 0 |

| 1A | GPT-4o-mini | 0 | 9 | 1 | 0 | 0 |

| 1A | Gemini 2.5 Flash | 5 | 5 | 0 | 0 | 0 |

| 1B | GPT-4o | 4 | 6 | 0 | 0 | 0 |

| 1B | Gemini 2.5 Pro | 0 | 9 | 1 | 0 | 0 |

| 1B | GPT-4o-mini | 0 | 9 | 1 | 0 | 0 |

| 1B | Gemini 2.5 Flash | 0 | 10 | 0 | 0 | 0 |

| 2A | GPT-4o | 5 | 5 | 0 | 0 | 0 |

| 2A | Gemini 2.5 Pro | 1 | 9 | 0 | 0 | 0 |

| 2A | GPT-4o-mini | 0 | 10 | 0 | 0 | 0 |

| 2A | Gemini 2.5 Flash | 2 | 7 | 1 | 0 | 0 |

| 2B | GPT-4o | 10 | 0 | 0 | 0 | 0 |

| 2B | Gemini 2.5 Pro | 1 | 9 | 0 | 0 | 0 |

| 2B | GPT-4o-mini | 0 | 8 | 2 | 0 | 0 |

| 2B | Gemini 2.5 Flash | 2 | 8 | 0 | 0 | 0 |
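The counts in the classification table can be aggregated per model to compare how often each LLM produced a perfect model across all four datasets. The sketch below copies the table rows verbatim and computes that share; the function name is hypothetical, and each row sums to ten generated models.

```python
from collections import defaultdict

# (dataset, model, perfect, very_good, acceptable, weak, invalid), copied from the table.
RUNS = [
    ("1A", "GPT-4o", 0, 10, 0, 0, 0), ("1A", "Gemini 2.5 Pro", 2, 8, 0, 0, 0),
    ("1A", "GPT-4o-mini", 0, 9, 1, 0, 0), ("1A", "Gemini 2.5 Flash", 5, 5, 0, 0, 0),
    ("1B", "GPT-4o", 4, 6, 0, 0, 0), ("1B", "Gemini 2.5 Pro", 0, 9, 1, 0, 0),
    ("1B", "GPT-4o-mini", 0, 9, 1, 0, 0), ("1B", "Gemini 2.5 Flash", 0, 10, 0, 0, 0),
    ("2A", "GPT-4o", 5, 5, 0, 0, 0), ("2A", "Gemini 2.5 Pro", 1, 9, 0, 0, 0),
    ("2A", "GPT-4o-mini", 0, 10, 0, 0, 0), ("2A", "Gemini 2.5 Flash", 2, 7, 1, 0, 0),
    ("2B", "GPT-4o", 10, 0, 0, 0, 0), ("2B", "Gemini 2.5 Pro", 1, 9, 0, 0, 0),
    ("2B", "GPT-4o-mini", 0, 8, 2, 0, 0), ("2B", "Gemini 2.5 Flash", 2, 8, 0, 0, 0),
]

def perfect_share(rows):
    """Fraction of generated models classified as perfect, per LLM."""
    perfect = defaultdict(int)
    total = defaultdict(int)
    for _dataset, model, *counts in rows:
        perfect[model] += counts[0]
        total[model] += sum(counts)
    return {model: perfect[model] / total[model] for model in total}

shares = perfect_share(RUNS)
```

The same loop can be pointed at any other column, for example the acceptable-model counts, by changing the index into `counts`.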

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vines, A.; Bologa, A.-R.; Bostan, A.-I. Enabling Intelligent Data Modeling with AI for Business Intelligence and Data Warehousing: A Data Vault Case Study. Systems 2025, 13, 811. https://doi.org/10.3390/systems13090811
