Development and Evaluation of an Immersive Metaverse-Based Meditation System for Psychological Well-Being Using LLM-Driven Scenario Generation

Abstract

1. Introduction

2. Literature Review

2.1. Definition and Practice of Mindfulness

2.2. Digital Technologies for Mindfulness

2.3. LLM-Based Scenario Generation

2.4. Summary

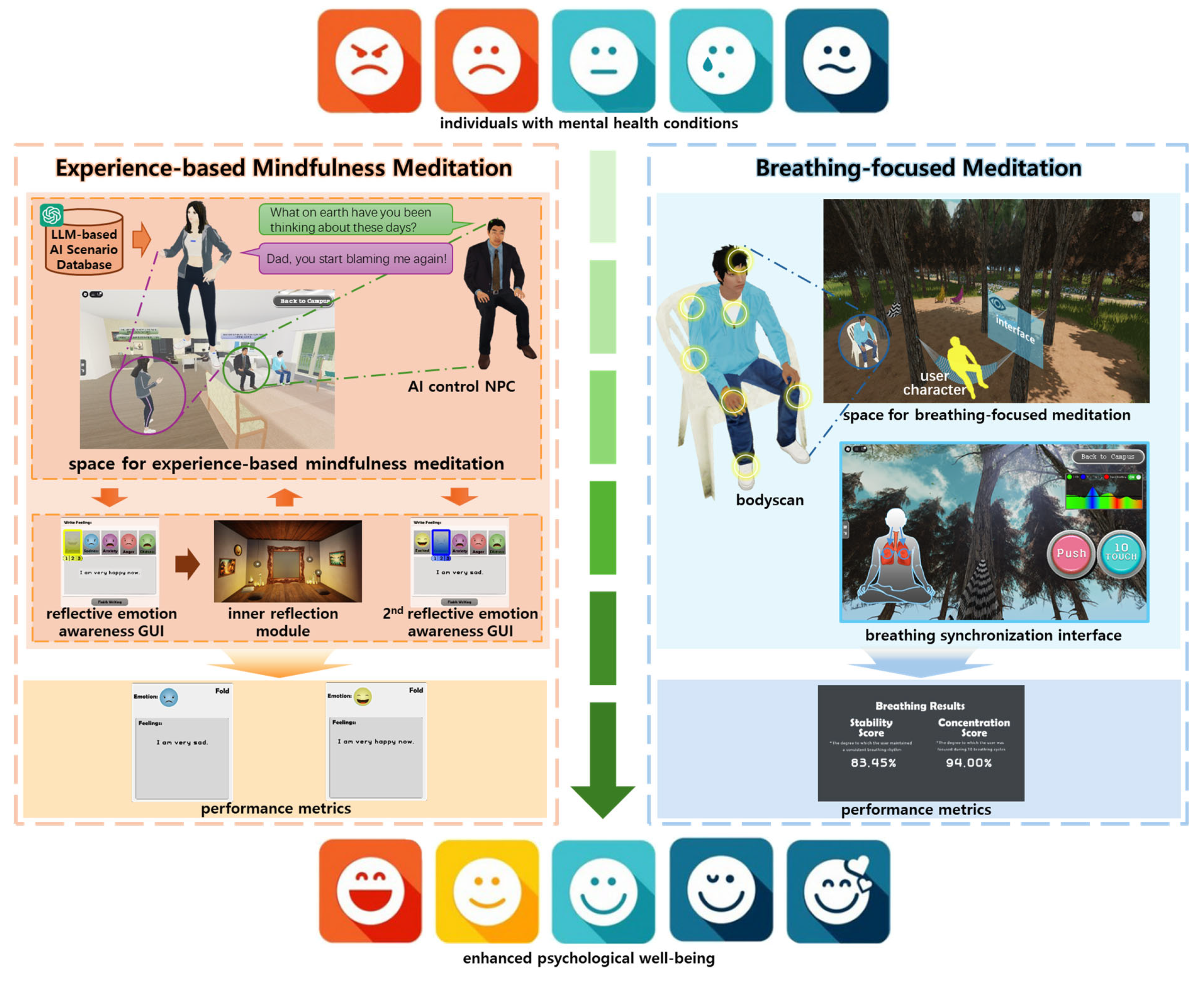

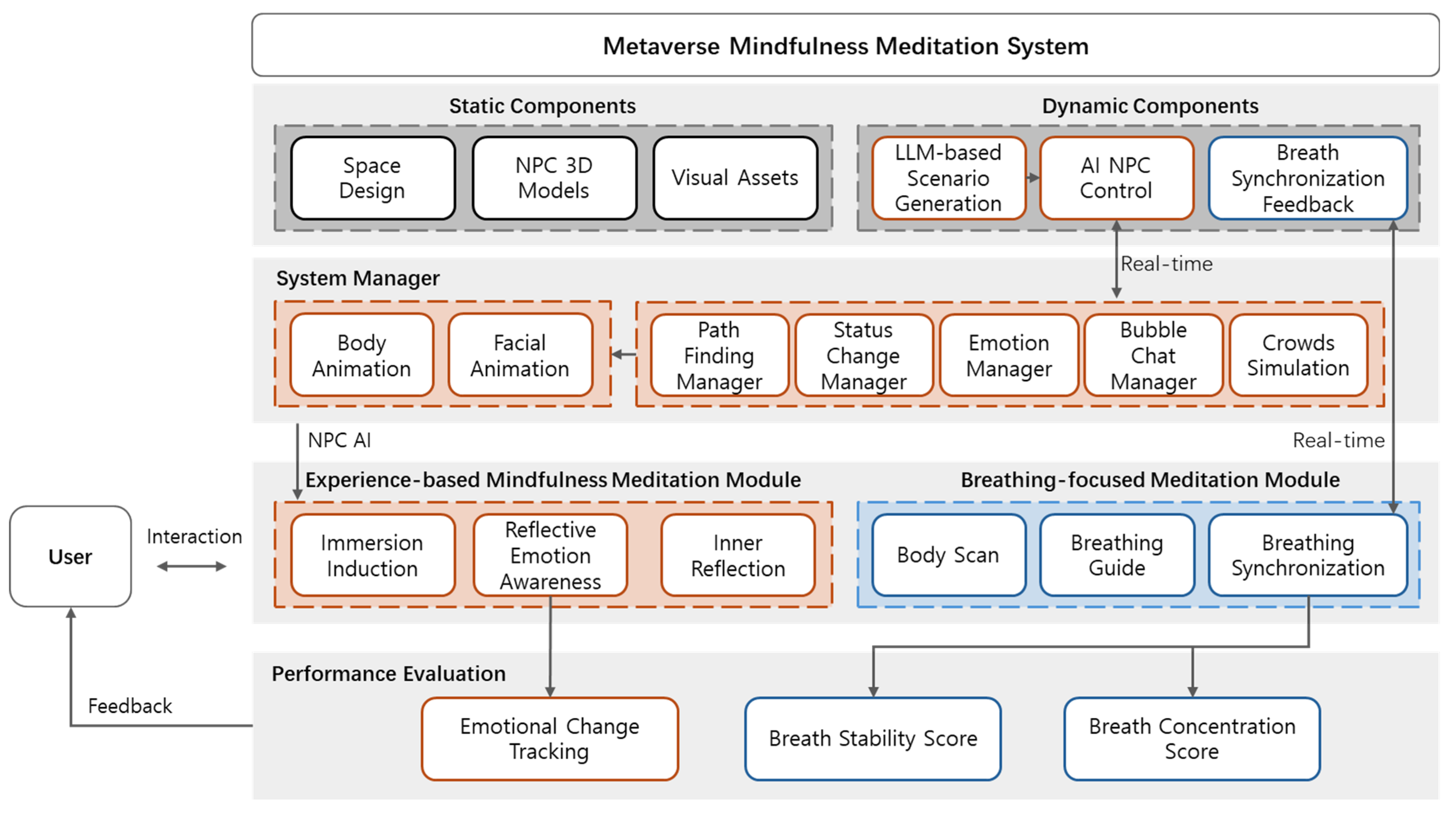

3. The Proposed Metaverse Mindfulness Meditation System

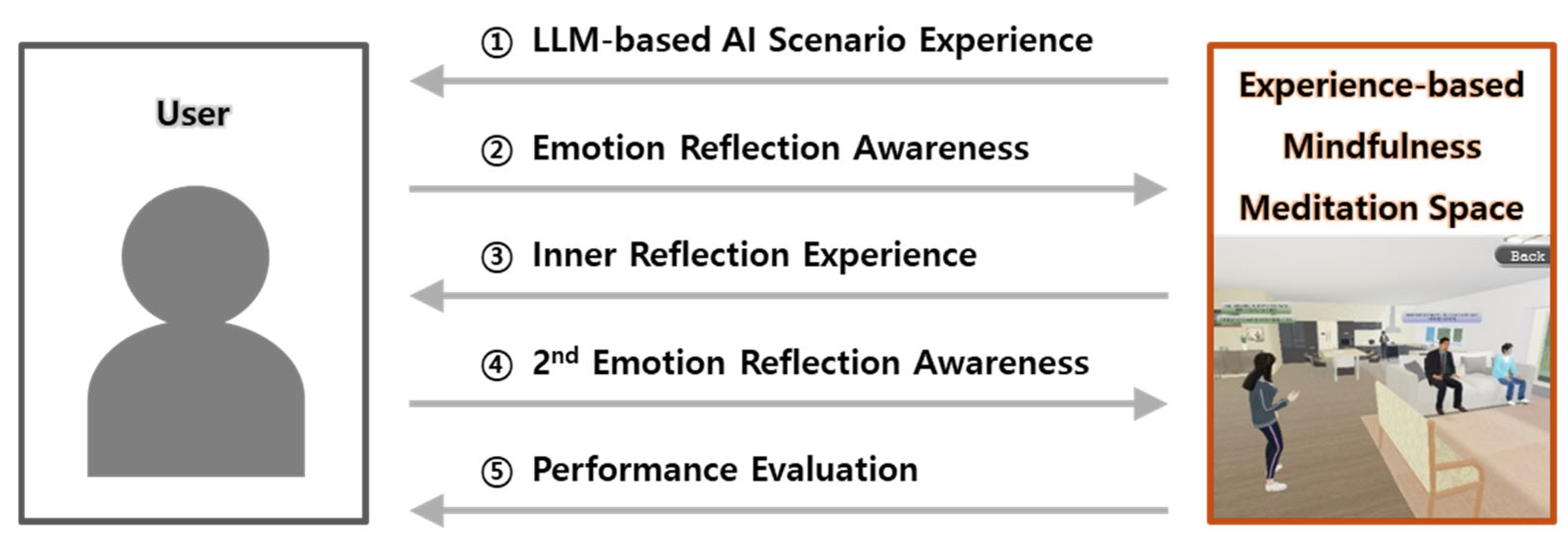

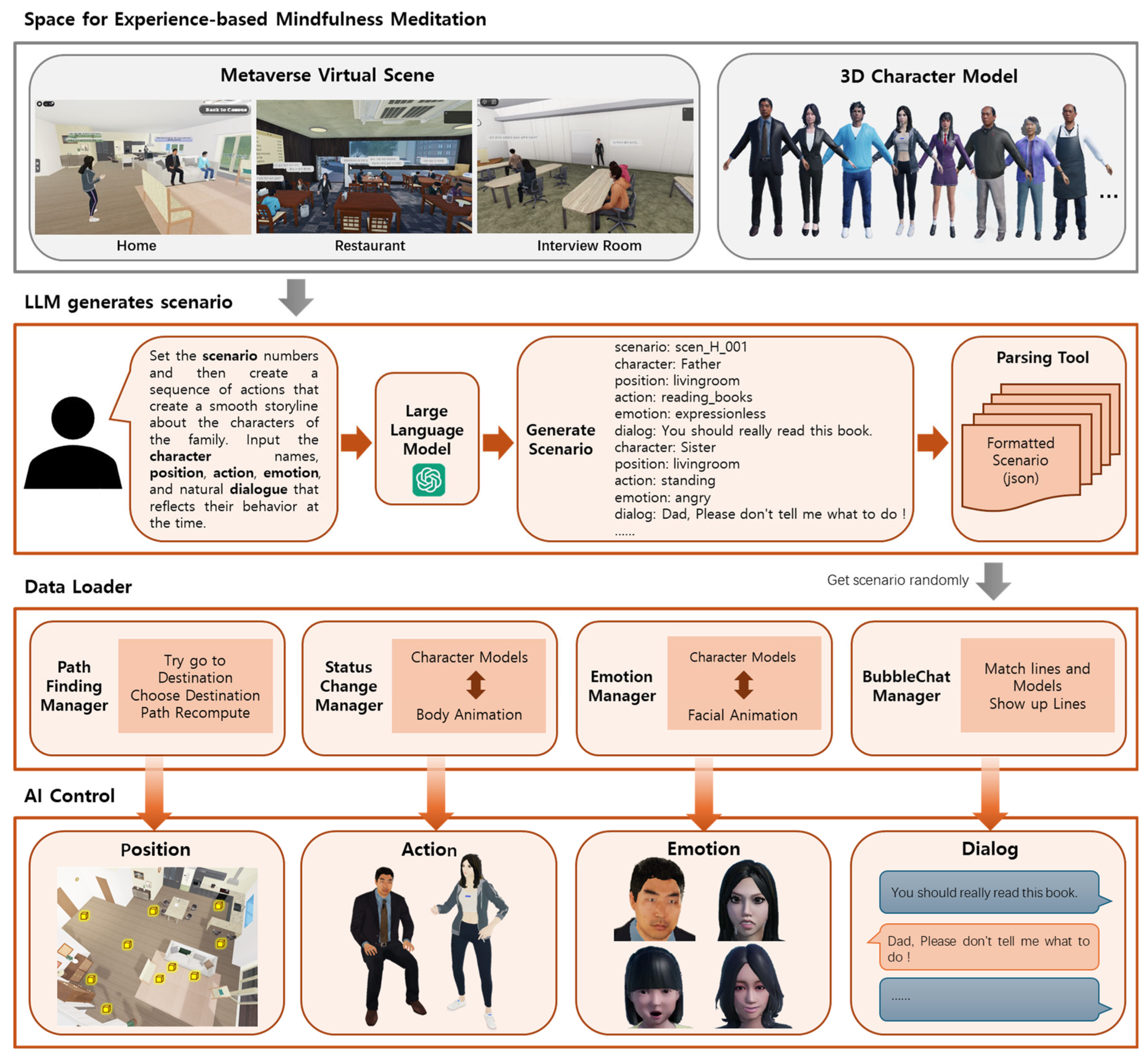

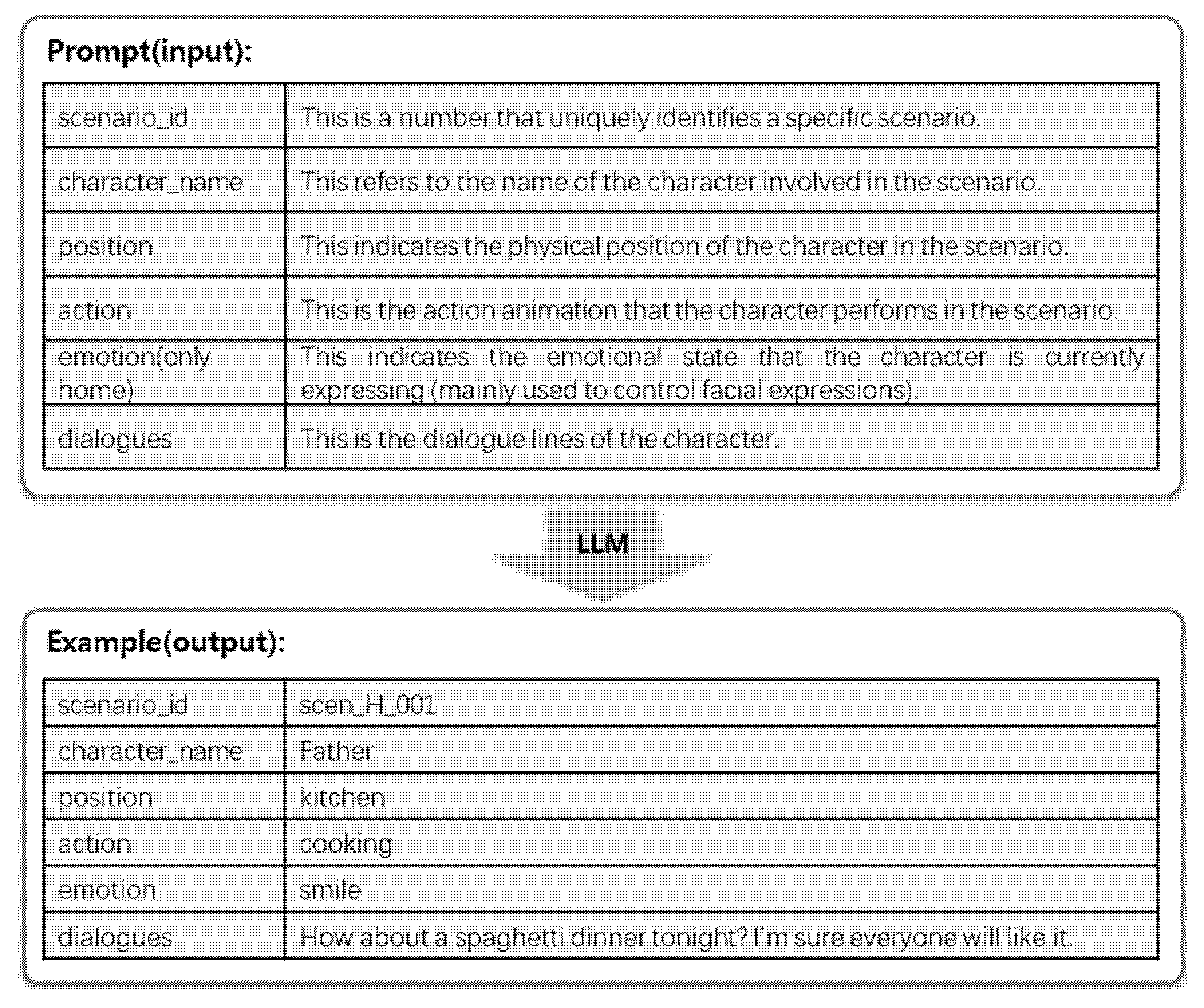

3.1. Experience-Based Mindfulness Meditation Module

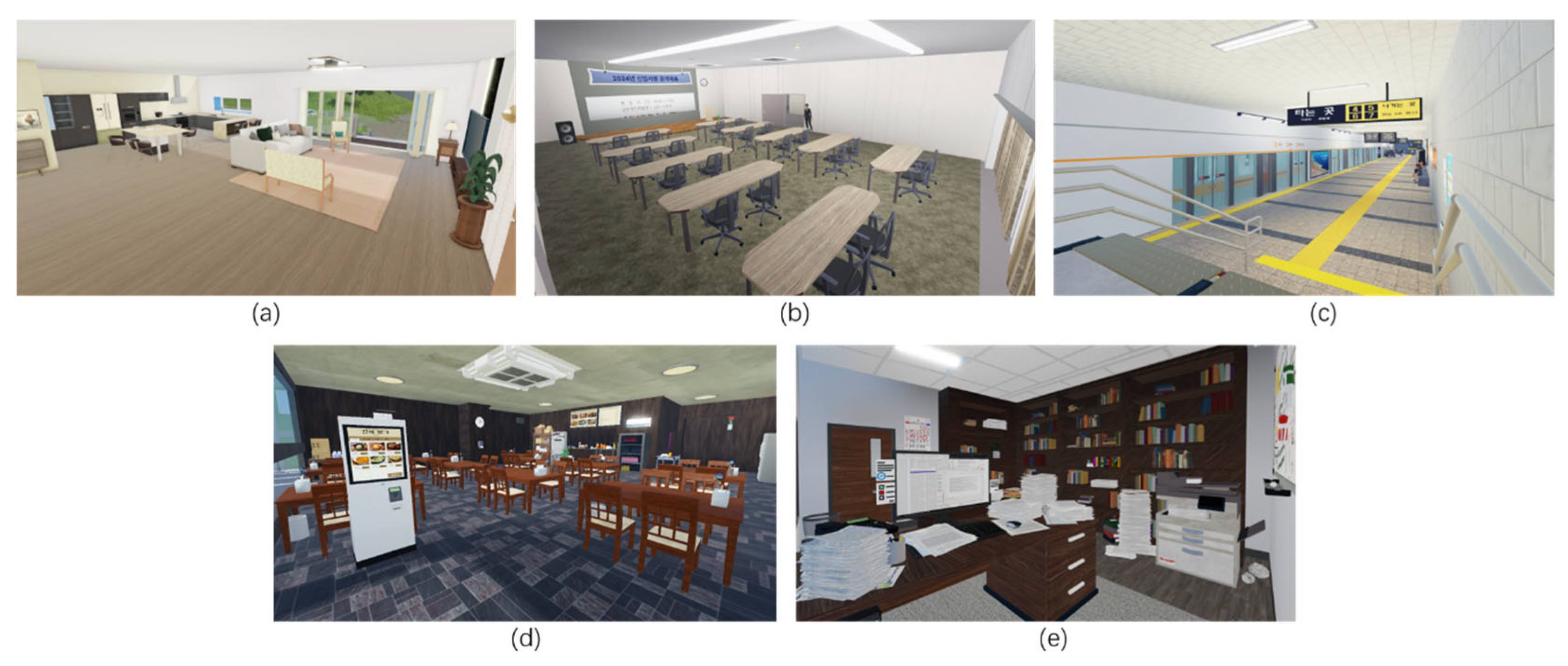

- Home: The home space replicates a typical family scene, with the living room and kitchen as the main activity areas. Scenarios generated for this space are centered around the theme of “family”, involving interactions between seven distinct familial roles, each defined with unique personality traits. To further enhance realism, the “emotion manager” system controls the facial expressions of NPCs to match the emotional context of family conversations.

- Interview Room: This space replicates the atmosphere of a real-world interview waiting area, simulating the tension and anxiety of an actual interview. The generated dialogues often reflect common pre-interview anxieties, such as nervousness, self-reminders about preparation, concerns about the interviewers, and physical stress responses.

- Subway: This environment recreates a public transit setting modeled after Korea’s Bulgwang Station and features elements resembling the Line 3 train. To increase immersion, realistic background audio simulates the ambient noise of a busy subway. The space is populated with several NPCs driven by a multi-stage crowd pathfinding system combined with collision avoidance and environmental perception algorithms, which allows users to experience rich, varied, and unpredictable interactions in a public space.

- Restaurant: Drawing inspiration from typical Korean dining establishments, this space simulates a busy restaurant. It features dining tables, refrigerators, air conditioners, kiosks, and menu boards. The seating area is structured around 10 tables, and background audio of lively conversations enhances the ambiance. AI-generated scenes give each table group its own independent conversation, simulating the concurrent conversations of a real restaurant.

- Office: This space simulates a high-pressure office environment, featuring cluttered desks, work displays, scattered documents, and standard furnishings such as bookshelves, a whiteboard, a clock, and a calendar. Unlike serene environments designed explicitly for relaxation, the office space is intended to evoke the feelings and thoughts associated with work, deadlines, and clutter.
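As a rough illustration of how an "emotion manager" like the one described for the Home space might match an NPC's facial expression to the emotional context of a generated line, the sketch below uses a plain lookup table. The emotion labels, the expression preset names, the `DialogueLine` type, and the `select_expression` function are all hypothetical; the source does not describe the actual implementation.

```python
from dataclasses import dataclass

# Hypothetical mapping from an emotion label to a facial-expression preset.
# Neither the labels nor the preset names come from the paper.
EXPRESSION_PRESETS = {
    "anger": "brows_lowered",
    "sadness": "mouth_corners_down",
    "joy": "smile",
    "anxiety": "brows_raised_tense",
}
DEFAULT_EXPRESSION = "neutral"


@dataclass
class DialogueLine:
    speaker: str  # NPC role, e.g. "mother"
    text: str     # generated utterance
    emotion: str  # emotion label assumed to accompany the generated line


def select_expression(line: DialogueLine) -> str:
    """Return the expression preset for a line, falling back to neutral."""
    return EXPRESSION_PRESETS.get(line.emotion.lower(), DEFAULT_EXPRESSION)


script = [
    DialogueLine("mother", "Why are you home so late again?", "anger"),
    DialogueLine("child", "I had to stay for a study group.", "calm"),
]
expressions = [(line.speaker, select_expression(line)) for line in script]
print(expressions)
```

A lookup with a neutral fallback keeps the NPC in a plausible state when the generator emits a label the table does not cover.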

3.1.1. Immersion Induction

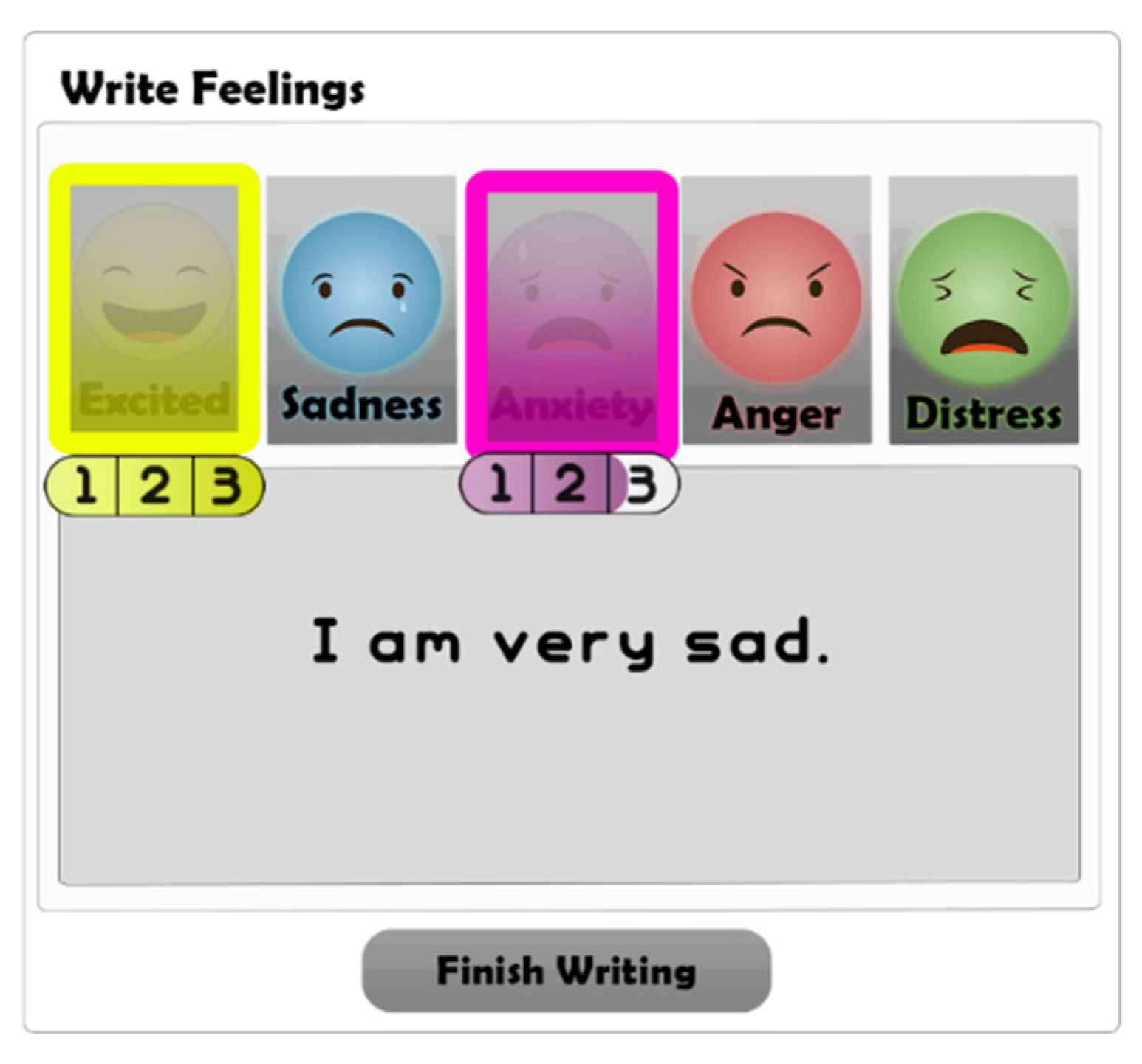

3.1.2. Reflective Emotion Awareness

3.1.3. Inner Reflection

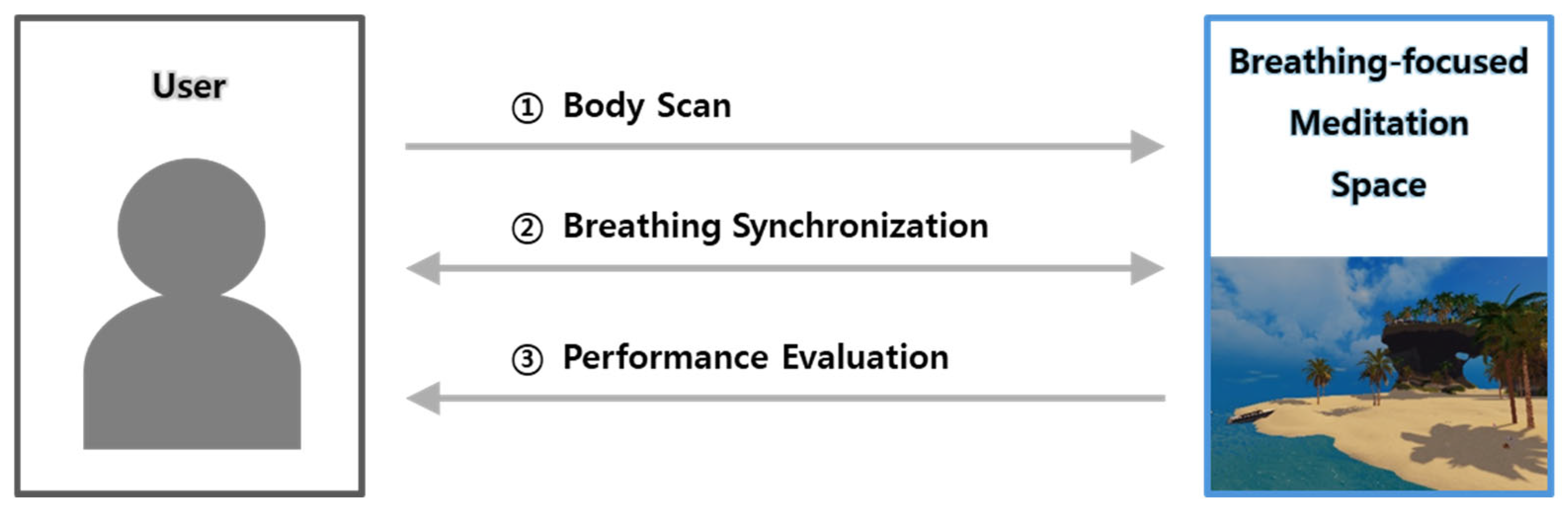

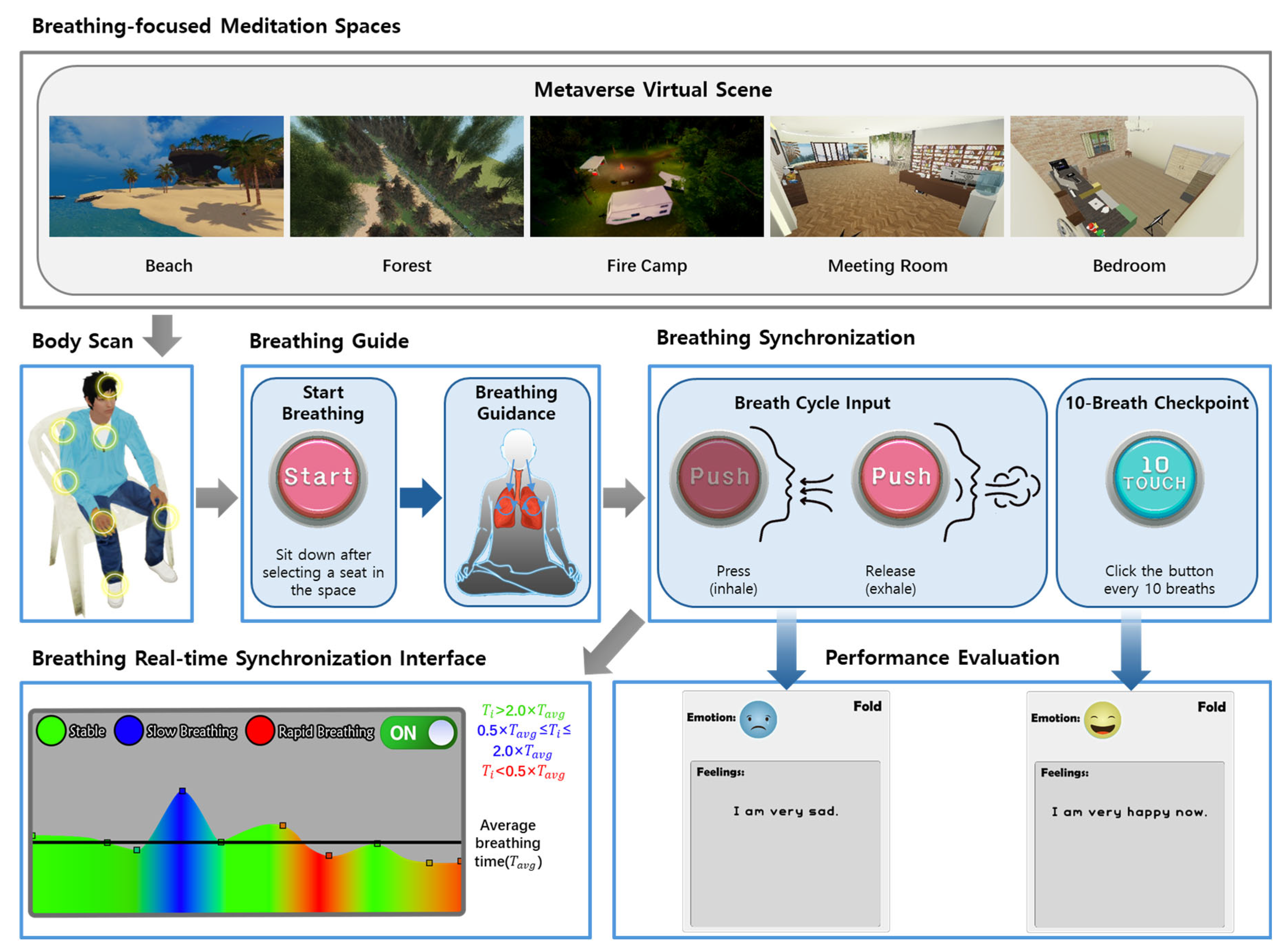

3.2. Breathing-Focused Meditation Module

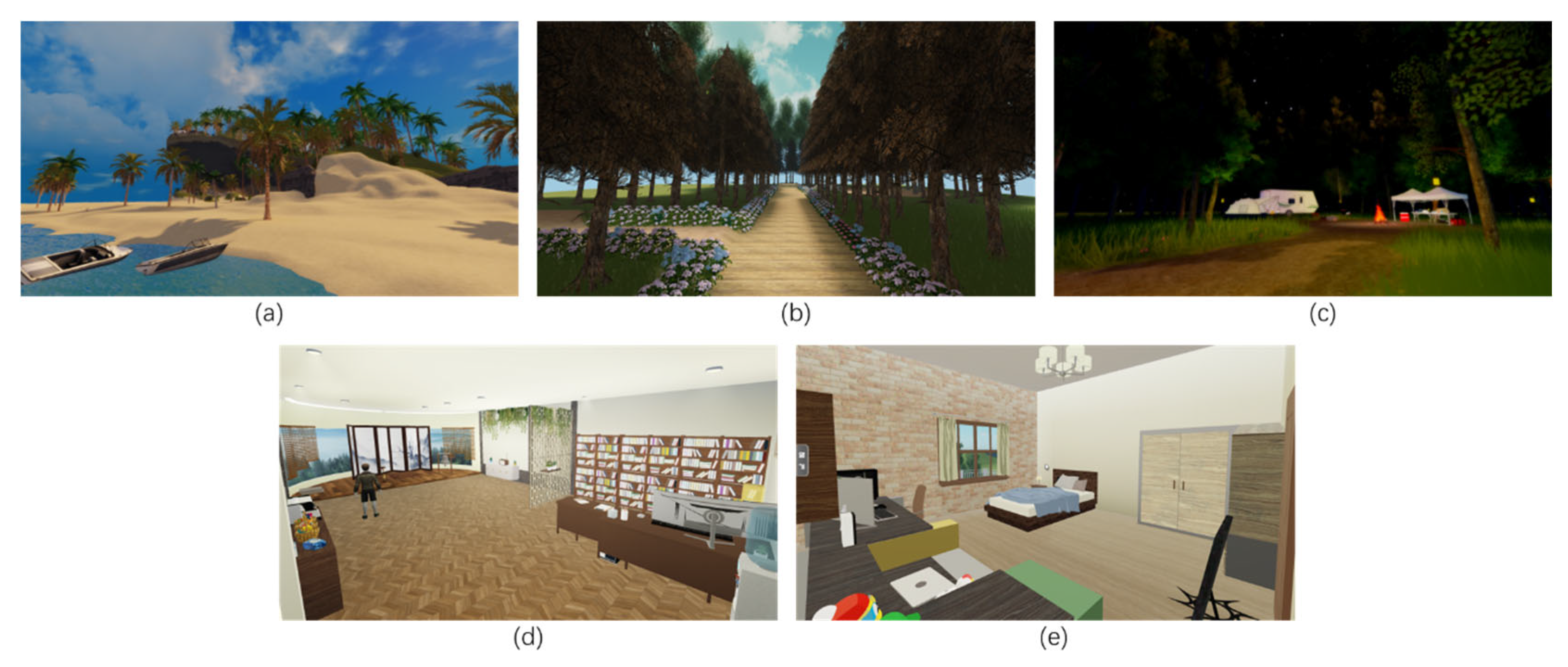

- Beach: The beach space is meticulously designed to evoke tranquility through the calming visuals and sounds of the ocean. Drawing inspiration from Korean coastal landscapes, including beaches and islands, it features realistically rendered elements, such as sand, shorelines, mountains, and seaweed. The environment dynamically shifts between a clear morning setting, offering cool shade under palm trees beneath a bright sky, and a serene afternoon ambiance characterized by the warm glow of sunset.

- Forest: This space immerses the user in a tranquil natural environment modeled after various forest trails. It features a path flanked by a lush arrangement of trees and flowers, creating a sense of peaceful enclosure. To enhance realism and sensory immersion, the space includes gently swaying plants, falling leaves, and birds, forming a vivid, layered environment that fosters relaxation and focused breathing through a serene connection with nature.

- Fire Camp: The fire camp space recreates the relaxing experience of watching a fire under a starry night sky. It has two areas with distinct moods: a natural camp with log chairs that feels close to nature, and a modern camp with an RV, camping chairs, and a grill that offers a brighter and more comfortable camping experience.

- Meeting Room: Inspired by real-world counseling rooms, this space provides a quiet, formal, and ordered environment conducive to focused meditation or reflective practice. Designed with large windows offering outdoor views, it incorporates a sense of openness while maintaining a structured setting. The aesthetics are intentionally professional and minimalist to minimize distractions.

- Bedroom: Modeled closely after the intimate environment of an actual bedroom, this space includes common personal items such as computers, beds, and dolls. The deliberate inclusion of familiar objects aims to evoke feelings of comfort, safety, and personal sanctuary. By leveraging the user’s likely association of a bedroom with rest and privacy, the space makes it easier to relax and concentrate on the meditative process of observing the breath.

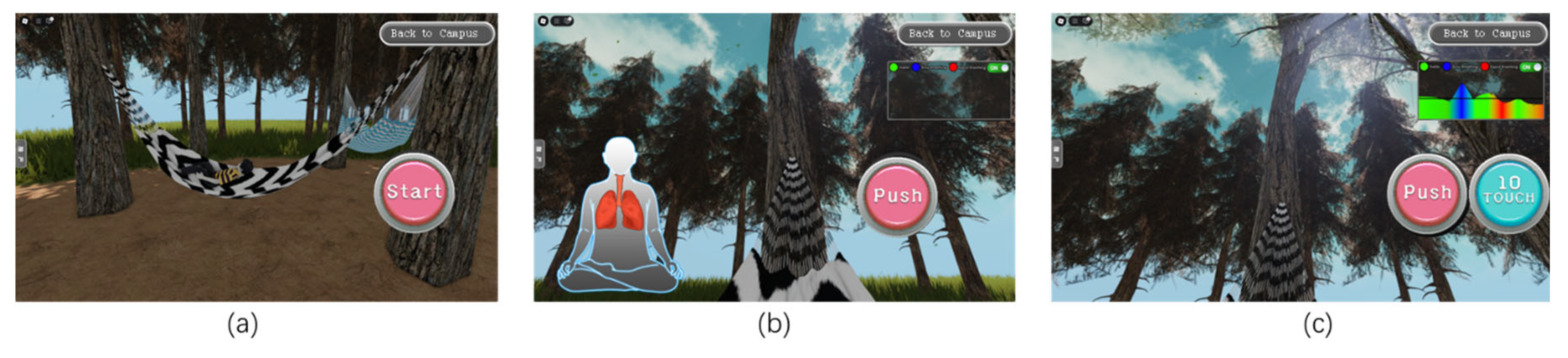

3.2.1. Body Scan

3.2.2. Breathing Guide

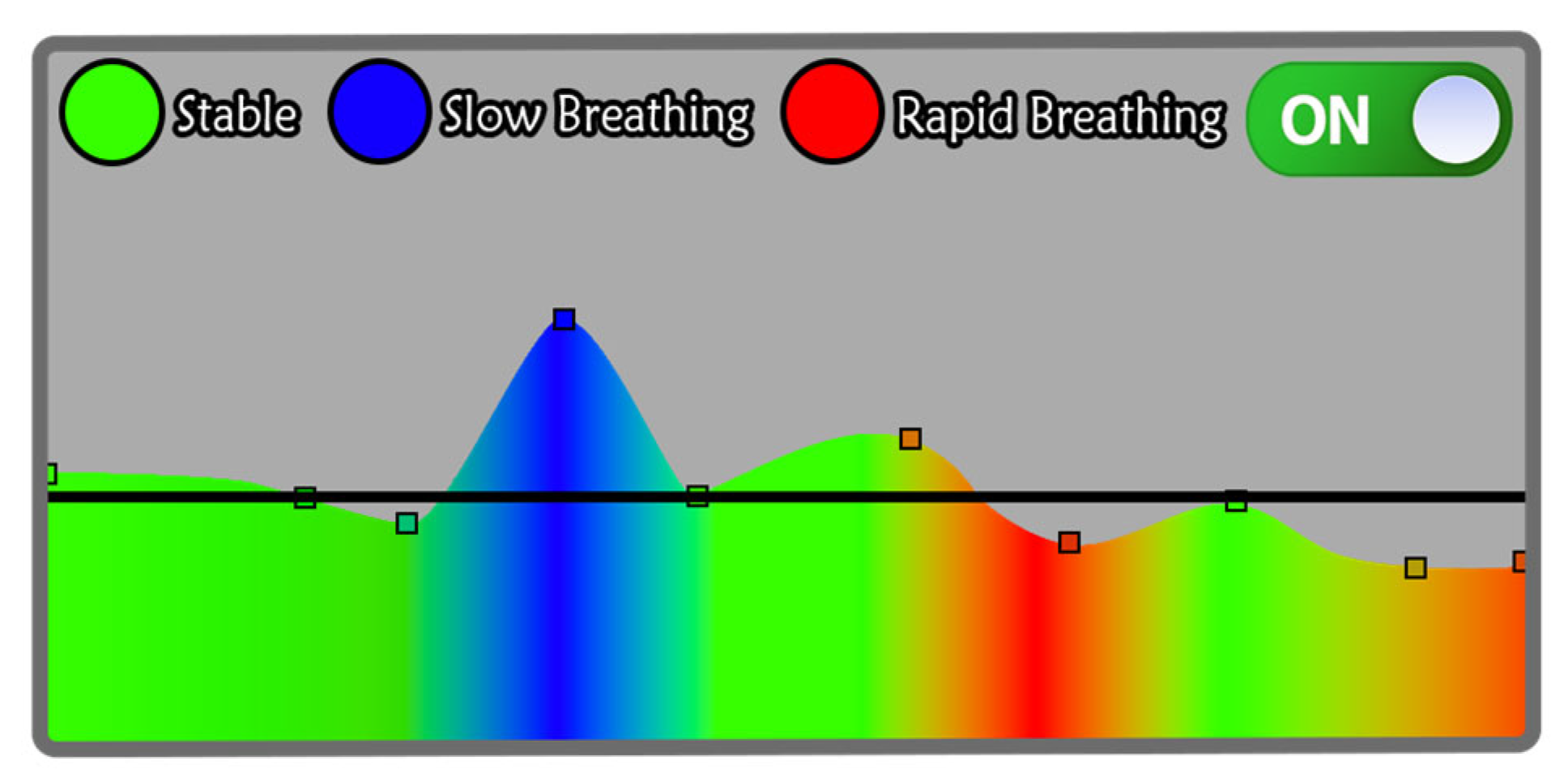

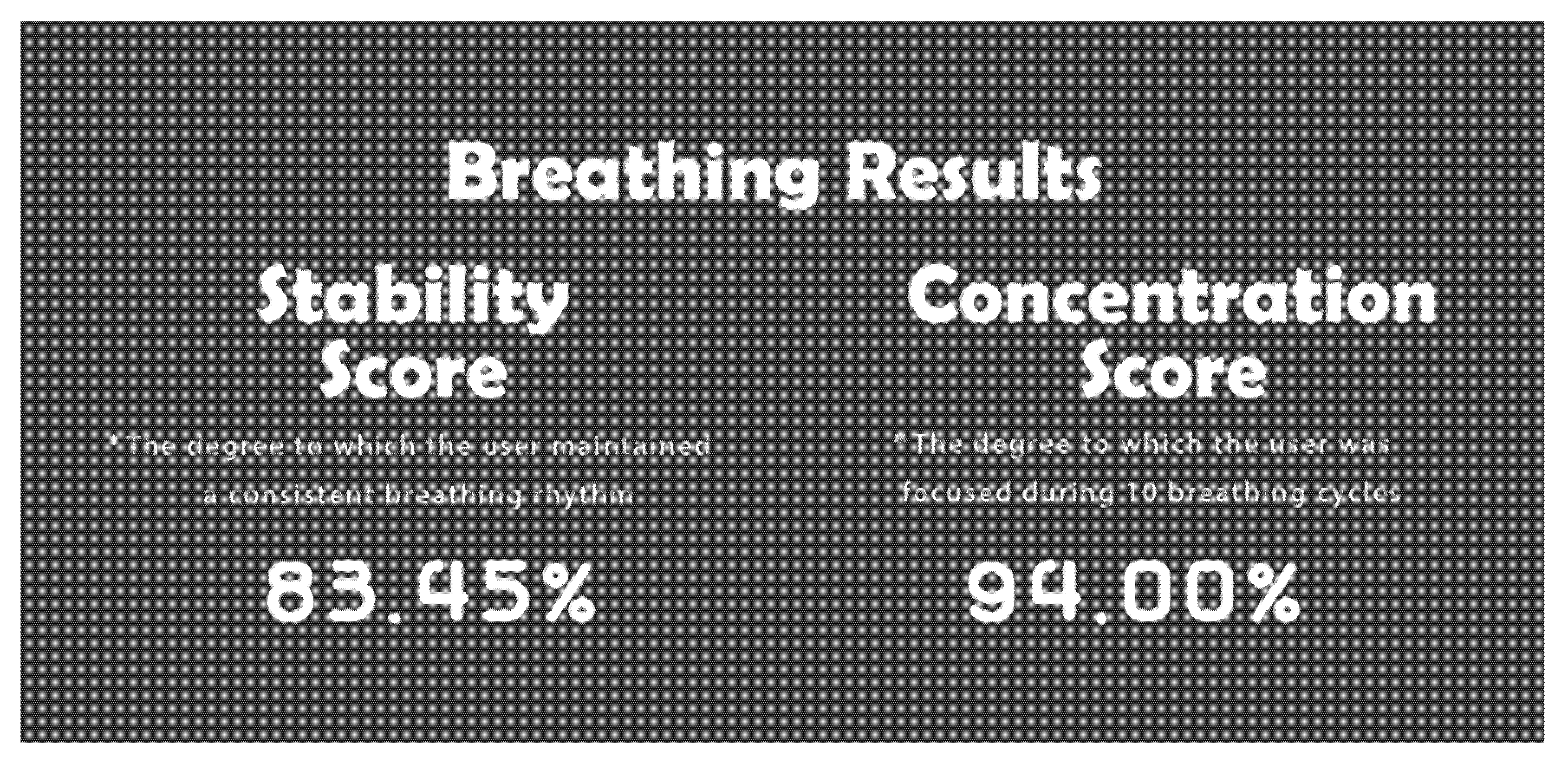

3.2.3. Breathing Synchronization

4. Experiments

4.1. Experimental Design

4.2. Evaluation Metrics

4.2.1. Questionnaire Survey

4.2.2. Data Analysis Strategy

4.3. Experimental Results

4.3.1. Measures of Psychological Distress

4.3.2. Reflecting Mindfulness

4.3.3. Psychological Flexibility

4.3.4. Self-Compassion and Self-Esteem

4.3.5. Participants’ Subjective Ratings

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| LLMs | Large Language Models |

| GPT | Generative Pre-trained Transformer |

| PSS | Perceived Stress Scale |

| PHQ-9 | Patient Health Questionnaire-9 |

| GAD-7 | Generalized Anxiety Disorder 7-item |

| BDI | Beck Depression Inventory |

| BAI | Beck Anxiety Inventory |

| FFMQ-15 | Five Facet Mindfulness Questionnaire (15-item version) |

| AAQ-II | Acceptance and Action Questionnaire-II |

| SCS-SF | Self-Compassion Scale—Short Form |

| RSES | Rosenberg Self-Esteem Scale |

| MCSA | Metaverse Content Suitability Assessment |

| AI | Artificial Intelligence |

| TMMS | The Melody of the Mysterious Stones |

| NPCs | Non-Player Characters |

| SPSS | Statistical Package for the Social Sciences (IBM SPSS Statistics) |

| GUI | Graphical User Interface |

Appendix A

Appendix A.1. Validation of Emotion Congruence for LLM-Generated Loving-Kindness Guidance

User-Selected Emotion for Guidance Script Generation

| No. | Anger | No. | Anxiety | No. | Distress | No. | Excitement | No. | Sadness |

|---|---|---|---|---|---|---|---|---|---|

| 1 | Neutral (0.72) | 19 | Approval (0.35) | 37 | Caring (0.70) | 55 | Neutral (0.74) | 73 | Neutral (0.29) |

| 2 | Caring (0.48) | 20 | Nervousness (0.35) | 38 | Neutral (0.33) | 56 | Curiosity (0.57) | 74 | Sadness (0.77) |

| 3 | Approval (0.61) | 21 | Caring (0.54) | 39 | Caring (0.61) | 57 | Curiosity (0.52) | 75 | Sadness (0.48) |

| 4 | Curiosity (0.68) | 22 | Caring (0.69) | 40 | Neutral (0.37) | 58 | Curiosity (0.66) | 76 | Sadness (0.65) |

| 5 | Neutral (0.79) | 23 | Caring (0.56) | 41 | Curiosity (0.60) | 59 | Neutral (0.61) | 77 | Sadness (0.89) |

| 6 | Neutral (0.91) | 24 | Neutral (0.63) | 42 | Neutral (0.44) | 60 | Confusion (0.55) | 78 | Sadness (0.86) |

| 7 | Curiosity (0.64) | 25 | Curiosity (0.57) | 43 | Curiosity (0.66) | 61 | Curiosity (0.57) | 79 | Sadness (0.82) |

| 8 | Curiosity (0.69) | 26 | Curiosity (0.63) | 44 | Curiosity (0.61) | 62 | Curiosity (0.73) | 80 | Curiosity (0.55) |

| 9 | Curiosity (0.70) | 27 | Curiosity (0.64) | 45 | Neutral (0.51) | 63 | Joy (0.39) | 81 | Curiosity (0.46) |

| 10 | Neutral (0.74) | 28 | Caring (0.61) | 46 | Love (0.43) | 64 | Caring (0.74) | 82 | Caring (0.71) |

| 11 | Caring (0.83) | 29 | Caring (0.70) | 47 | Caring (0.87) | 65 | Excitement (0.53) | 83 | Sadness (0.81) |

| 12 | Caring (0.65) | 30 | Neutral (0.52) | 48 | Neutral (0.79) | 66 | Neutral (0.65) | 84 | Caring (0.84) |

| 13 | Neutral (0.41) | 31 | Caring (0.51) | 49 | Neutral (0.58) | 67 | Caring (0.35) | 85 | Sadness (0.91) |

| 14 | Remorse (0.38) | 32 | Caring (0.32) | 50 | Caring (0.65) | 68 | Caring (0.76) | 86 | Sadness (0.89) |

| 15 | Caring (0.24) | 33 | Caring (0.32) | 51 | Neutral (0.75) | 69 | Joy (0.27) | 87 | Sadness (0.83) |

| 16 | Caring (0.81) | 34 | Caring (0.64) | 52 | Caring (0.64) | 70 | Excitement (0.39) | 88 | Joy (0.56) |

| 17 | Desire (0.72) | 35 | Neutral (0.65) | 53 | Caring (0.75) | 71 | Caring (0.57) | 89 | Caring (0.64) |

| 18 | Caring (0.81) | 36 | Caring (0.64) | 54 | Caring (0.71) | 72 | Caring (0.65) | 90 | Sadness (0.48) |
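One way to read the table above is to tally, for a given user-selected emotion, how often each label occurs. The sketch below does this for the Sadness column (scripts 73 to 90), with the labels copied from the table. Treating an exact label match as "congruent" is an assumption made here for illustration; the paper may apply a different criterion (for example, counting Caring as congruent with loving-kindness guidance). The function names are hypothetical.

```python
from collections import Counter

# Labels for scripts 73-90 (user-selected emotion: Sadness), copied from the table.
sadness_labels = [
    "Neutral", "Sadness", "Sadness", "Sadness", "Sadness", "Sadness",
    "Sadness", "Curiosity", "Curiosity", "Caring", "Sadness", "Caring",
    "Sadness", "Sadness", "Sadness", "Joy", "Caring", "Sadness",
]


def label_distribution(labels):
    """Count how often each label occurs."""
    return Counter(labels)


def match_rate(labels, target):
    """Fraction of labels equal to the target emotion (assumed criterion)."""
    return sum(label == target for label in labels) / len(labels)


dist = label_distribution(sadness_labels)
rate = match_rate(sadness_labels, "Sadness")
print(dist.most_common())
print(f"Exact-match rate for Sadness: {rate:.2f}")  # 11 of 18 scripts -> 0.61
```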

| Topic | Count | Name | Representation | Representative Docs |

|---|---|---|---|---|

| 1 | 78 | Feeling heart ask feel | Feeling, heart, ask, feel, mind, gently, like, just, emotions, pass | ‘Ask yourself: “Who is the one observing this star of anxiety?” Close your eyes and gently watch yourself as you feel it.’, ‘Remember, this feeling will pass. Just as there are moments when things go well, there will also be times when they do not. Do not let your emotions rise and fall with circumstances. Instead, watch calmly as they pass.’, ‘Gently imagine this excitement dispersing like a soft breeze. Instead of being swept away by fleeting emotions, find the deeper, steadier center within your heart.’ |

| 2 | 12 | Emotion remember suffering rising | Emotion, remember, suffering, rising, hold, fade, time, ask, need, remain, acknowledge | ‘Observe the anger in your heart as if you were looking at a stranger. Step back from the emotion and watch it pass, like clouds drifting across the sky.’, ‘Understand that the person or situation that made you angry is also suffering and capable of making mistakes. Remember that all emotions eventually fade with time, and anger may later leave only regret.’, ‘Quietly ask yourself where this anger truly comes from. Is it really the emotion I want to hold on to? Does it deserve to remain within me?’ |

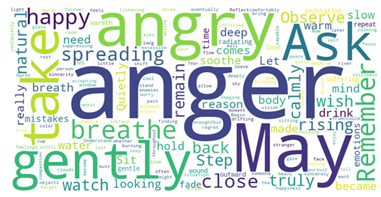

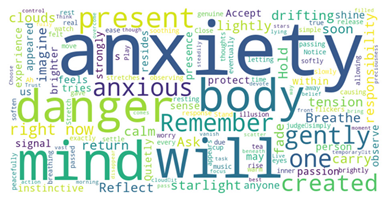

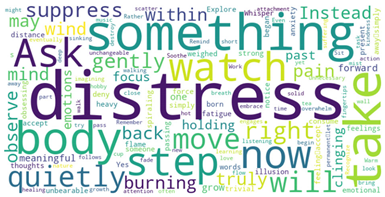

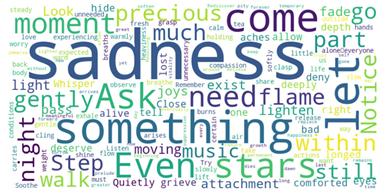

- Topic 1 (78 items): Characterized by key expressions such as “feeling, heart, ask, feel, mind, gently, like, just, emotions, pass”. The language primarily guided users toward directly experiencing and accepting their emotions through introspective reflection. Representative sentences emphasized impermanence and self-observation, such as “This emotion will eventually pass” and “Quietly close your eyes and watch deeply within your mind”.

- Topic 2 (12 items): Centered around terms like “emotion, remember, suffering, rising, hold, fade, time, ask, need, remain, acknowledge”. The content often dealt with emotions like anger and suffering, encouraging users to understand the mistakes and struggles of others while reminding them that emotions ultimately fade with time. Representative messages included “All emotions eventually disappear with time” and “Ask yourself where the root of your anger lies”.

| LLM Output | WordCloud Analysis Results |

|---|---|

| Anger |  |

| Anxiety |  |

| Distress |  |

| Excitement |  |

| Sadness |  |

Appendix A.2. Adaptive Prompt Design for Emotion- and Reflection-Driven LLM-Based Loving-Kindness Meditation Guidance

| Prompt |

|---|

| You are a compassionate therapist specializing in loving-kindness meditation and mindful emotional guidance. Your client’s current emotional state is: {user_emotion}. The client shared the following feelings: “{user_feelings}”. Based on this information, please guide the client through a short, step-by-step loving-kindness meditation session tailored to their emotion and feelings. Follow these stages for the {user_emotion} emotion:

Write 1–2 concise sentences for each stage, using a warm, gentle, and supportive tone. Do not copy these instructions into your answer—just output the meditation script. |

| User_Emotion (Input) | User_Feelings (Input) | Output |

|---|---|---|

| Anxiety | I felt nervous and kept worrying about making a mistake. | |

| Anger | My parents always scold me. I hate my family. | |
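The prompt above has two placeholders, the selected emotion and the user's free-text reflection. A minimal sketch of filling such a template is shown below. The `build_prompt` function is hypothetical, the template is abridged (the stage list is not reproduced in the source, so it is omitted here rather than guessed), and how the resulting prompt is sent to the LLM is outside the scope of this sketch.

```python
# Abridged version of the appendix prompt; the per-emotion stage list is omitted
# because it is not available in the source text.
PROMPT_TEMPLATE = (
    "You are a compassionate therapist specializing in loving-kindness "
    "meditation and mindful emotional guidance. "
    "Your client's current emotional state is: {user_emotion}. "
    'The client shared the following feelings: "{user_feelings}". '
    "Write 1-2 concise sentences for each stage, using a warm, gentle, "
    "and supportive tone."
)


def build_prompt(user_emotion: str, user_feelings: str) -> str:
    """Fill the template with the selected emotion and the user's reflection."""
    return PROMPT_TEMPLATE.format(
        user_emotion=user_emotion.strip(),
        user_feelings=user_feelings.strip(),
    )


prompt = build_prompt(
    "Anxiety",
    "I felt nervous and kept worrying about making a mistake.",
)
print(prompt)
```

Because the user's text is passed as an argument rather than spliced into the template string, braces typed by the user are not interpreted as placeholders.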

Appendix B

Ten Standardized Self-Report Questionnaires

| Questions | Never True | Very Seldom True | Seldom True | Sometimes True | Frequently True | Almost Always True | Always True |

|---|---|---|---|---|---|---|---|

| My painful experiences and memories make it difficult for me to live a life that I would value. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| I’m afraid of my feelings. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| I worry about not being able to control my worries and feelings. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| My painful memories prevent me from having a fulfilling life. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Emotions cause problems in my life. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| It seems like most people are handling their lives better than I am. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Worries get in the way of my success. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Questions | Not at All | Mildly, but it Didn’t Bother Me Much | Moderately— It Wasn’t Pleasant at Times | Severely— It Bothered Me a Lot |

|---|---|---|---|---|

| Numbness or tingling | 0 | 1 | 2 | 3 |

| Feeling hot | 0 | 1 | 2 | 3 |

| Wobbliness in legs | 0 | 1 | 2 | 3 |

| Unable to relax | 0 | 1 | 2 | 3 |

| Fear of the worst happening | 0 | 1 | 2 | 3 |

| Dizzy or lightheaded | 0 | 1 | 2 | 3 |

| Heart pounding/racing | 0 | 1 | 2 | 3 |

| Unsteady | 0 | 1 | 2 | 3 |

| Terrified or afraid | 0 | 1 | 2 | 3 |

| Nervous | 0 | 1 | 2 | 3 |

| Feeling of choking | 0 | 1 | 2 | 3 |

| Hands trembling | 0 | 1 | 2 | 3 |

| Shaky/unsteady | 0 | 1 | 2 | 3 |

| Fear of losing control | 0 | 1 | 2 | 3 |

| Difficulty in breathing | 0 | 1 | 2 | 3 |

| Fear of dying | 0 | 1 | 2 | 3 |

| Scared | 0 | 1 | 2 | 3 |

| Indigestion | 0 | 1 | 2 | 3 |

| Faint/lightheaded | 0 | 1 | 2 | 3 |

| Face flushed | 0 | 1 | 2 | 3 |

| Hot/cold sweats | 0 | 1 | 2 | 3 |

| Score | Answer |

|---|---|

| 0 | I do not feel sad. |

| 1 | I feel sad. |

| 2 | I am sad all the time and I can’t snap out of it. |

| 3 | I am so sad and unhappy that I can’t stand it. |

| 0 | I am not particularly discouraged about the future. |

| 1 | I feel discouraged about the future. |

| 2 | I feel I have nothing to look forward to. |

| 3 | I feel the future is hopeless and that things cannot improve. |

| 0 | I do not feel like a failure. |

| 1 | I feel I have failed more than the average person. |

| 2 | As I look back on my life, all I can see is a lot of failures. |

| 3 | I feel I am a complete failure as a person. |

| 0 | I get as much satisfaction out of things as I used to. |

| 1 | I don’t enjoy things the way I used to. |

| 2 | I don’t get real satisfaction out of anything anymore. |

| 3 | I am dissatisfied or bored with everything. |

| 0 | I don’t feel particularly guilty. |

| 1 | I feel guilty a good part of the time. |

| 2 | I feel quite guilty most of the time. |

| 3 | I feel guilty all of the time. |

| 0 | I don’t feel I am being punished. |

| 1 | I feel I may be punished. |

| 2 | I expect to be punished. |

| 3 | I feel I am being punished. |

| 0 | I don’t feel disappointed in myself. |

| 1 | I am disappointed in myself. |

| 2 | I am disgusted with myself. |

| 3 | I hate myself. |

| 0 | I don’t feel I am any worse than anybody else. |

| 1 | I am critical of myself for my weaknesses or mistakes. |

| 2 | I blame myself all the time for my faults. |

| 3 | I blame myself for everything bad that happens. |

| 0 | I don’t have any thoughts of killing myself. |

| 1 | I have thoughts of killing myself, but I would not carry them out. |

| 2 | I would like to kill myself. |

| 3 | I would kill myself if I had the chance. |

| 0 | I don’t cry any more than usual. |

| 1 | I cry more now than I used to. |

| 2 | I cry all the time now. |

| 3 | I used to be able to cry, but now I can’t cry even though I want to. |

| 0 | I am no more irritated by things than I ever was. |

| 1 | I am slightly more irritated now than usual. |

| 2 | I am quite annoyed or irritated a good deal of the time. |

| 3 | I feel irritated all the time. |

| 0 | I have not lost interest in other people. |

| 1 | I am less interested in other people than I used to be. |

| 2 | I have lost most of my interest in other people. |

| 3 | I have lost all of my interest in other people. |

| 0 | I make decisions about as well as I ever could. |

| 1 | I put off making decisions more than I used to. |

| 2 | I have greater difficulty in making decisions more than I used to. |

| 3 | I can’t make decisions at all anymore. |

| 0 | I don’t feel that I look any worse than I used to. |

| 1 | I am worried that I am looking old or unattractive. |

| 2 | I feel there are permanent changes in my appearance that make me look unattractive |

| 3 | I believe that I look ugly. |

| 0 | I can work about as well as before. |

| 1 | It takes an extra effort to get started doing something. |

| 2 | I have to push myself very hard to do anything. |

| 3 | I can’t do any work at all. |

| 0 | I can sleep as well as usual. |

| 1 | I don’t sleep as well as I used to. |

| 2 | I wake up 1–2 h earlier than usual and find it hard to get back to sleep. |

| 3 | I wake up several hours earlier than I used to and cannot get back to sleep. |

| 0 | I don’t get more tired than usual. |

| 1 | I get tired more easily than I used to. |

| 2 | I get tired from doing almost anything. |

| 3 | I am too tired to do anything. |

| 0 | My appetite is no worse than usual. |

| 1 | My appetite is not as good as it used to be. |

| 2 | My appetite is much worse now. |

| 3 | I have no appetite at all anymore. |

| 0 | I haven’t lost much weight, if any, lately. |

| 1 | I have lost more than five pounds. |

| 2 | I have lost more than ten pounds. |

| 3 | I have lost more than fifteen pounds. |

| 0 | I am no more worried about my health than usual. |

| 1 | I am worried about physical problems like aches, pains, upset stomach, or constipation. |

| 2 | I am very worried about physical problems and it’s hard to think of much else. |

| 3 | I am so worried about my physical problems that I cannot think of anything else. |

| 0 | I have not noticed any recent change in my interest in sex. |

| 1 | I am less interested in sex than I used to be. |

| 2 | I have almost no interest in sex. |

| 3 | I have lost interest in sex completely. |

| Questions | Never or Very Rarely True | Rarely True | Sometimes True | Often True | Very Often or Always True |

|---|---|---|---|---|---|

| When I take a shower or a bath, I stay alert to the sensations of water on my body. | 1 | 2 | 3 | 4 | 5 |

| I’m good at finding words to describe my feelings. | 1 | 2 | 3 | 4 | 5 |

| I don’t pay attention to what I’m doing because I’m daydreaming, worrying, or otherwise distracted. | 5 | 4 | 3 | 2 | 1 |

| I believe some of my thoughts are abnormal or bad, and I shouldn’t think that way. | 5 | 4 | 3 | 2 | 1 |

| When I have distressing thoughts or images, I “step back” and am aware of the thought or image without getting taken over by it. | 1 | 2 | 3 | 4 | 5 |

| I notice how foods and drinks affect my thoughts, bodily sensations, and emotions. | 1 | 2 | 3 | 4 | 5 |

| I have trouble thinking of the right words to express how I feel about things. | 5 | 4 | 3 | 2 | 1 |

| I do jobs or tasks automatically without being aware of what I’m doing. | 5 | 4 | 3 | 2 | 1 |

| I think some of my emotions are bad or inappropriate, and I shouldn’t feel them | 5 | 4 | 3 | 2 | 1 |

| When I have distressing thoughts or images, I am able just to notice them without reacting. | 1 | 2 | 3 | 4 | 5 |

| I pay attention to sensations, such as the wind in my hair or sun on my face. | 1 | 2 | 3 | 4 | 5 |

| Even when I’m feeling terribly upset, I can find a way to put it into words. | 1 | 2 | 3 | 4 | 5 |

| I find myself doing things without paying attention. | 5 | 4 | 3 | 2 | 1 |

| I tell myself I shouldn’t be feeling the way I’m feeling. | 5 | 4 | 3 | 2 | 1 |

| When I have distressing thoughts or images, I just notice them and let them go. | 1 | 2 | 3 | 4 | 5 |

| Questions | Not at All | Several Days | More Than Half the Days | Nearly Every Day |

|---|---|---|---|---|

| Feeling nervous, anxious, or on edge. | 0 | 1 | 2 | 3 |

| Not being able to stop or control worrying. | 0 | 1 | 2 | 3 |

| Worrying too much about different things. | 0 | 1 | 2 | 3 |

| Trouble relaxing. | 0 | 1 | 2 | 3 |

| Being so restless that it is hard to sit still. | 0 | 1 | 2 | 3 |

| Becoming easily annoyed or irritable. | 0 | 1 | 2 | 3 |

| Feeling afraid, as if something awful might happen. | 0 | 1 | 2 | 3 |

Metaverse Content Suitability Assessment

To respond to the growing demand for mental health services, the Industrial Artificial Intelligence Researcher Center at Dongguk University is developing metaverse-based content specifically designed for the mental well-being of the MZ generation. This survey is being conducted to assess participants’ satisfaction with and the effectiveness of the metaverse content developed for mental health management among the MZ generation.

- Gender: Male / Female

- Age Group: 10s / 20s / 30s / 40s / Other

| Questions | Very Dissatisfied | Dissatisfied | Neutral | Satisfied | Very Satisfied |

|---|---|---|---|---|---|

| Was the explanation of how to use the Breathing-focused Meditation Module content provided clearly and appropriately? | | | | | |

| Was it easy to understand and navigate the menu options in the Breathing-focused Meditation Module content? | | | | | |

| Are you satisfied with the design of the Breathing-focused Meditation Module space upon entry? | | | | | |

| Are you satisfied with the breathing guide and controls in the Breathing-focused Meditation Module? | | | | | |

| After completing the Experience-based Mindfulness Meditation Module, are you satisfied with the space design? | | | | | |

| Are you satisfied with the font size, emoji size, and overall text/screen layout while writing in the reflective emotion awareness GUI? | | | | | |

| Are you satisfied with the font size, playback speed, and voice tone of the guided narration provided throughout the Metaverse-Based Meditation System content? | | | | | |

| Questions | Not at All | Several Days | More Than Half the Days | Nearly Every Day |

|---|---|---|---|---|

| Little interest or pleasure in doing things. | 0 | 1 | 2 | 3 |

| Feeling down, depressed, or hopeless. | 0 | 1 | 2 | 3 |

| Trouble falling or staying asleep or sleeping too much. | 0 | 1 | 2 | 3 |

| Feeling tired or having little energy. | 0 | 1 | 2 | 3 |

| Poor appetite or overeating. | 0 | 1 | 2 | 3 |

| Feeling bad about yourself—or that you are a failure or have let yourself or your family down. | 0 | 1 | 2 | 3 |

| Trouble concentrating on things, such as reading the newspaper or watching television. | 0 | 1 | 2 | 3 |

| Moving or speaking so slowly that other people could have noticed, or the opposite—being so fidgety or restless that you have been moving around a lot more than usual. | 0 | 1 | 2 | 3 |

| Thoughts that you would be better off dead or of hurting yourself in some way. | 0 | 1 | 2 | 3 |

| Questions | Never | Almost Never | Sometimes | Fairly Often | Very Often |

|---|---|---|---|---|---|

| In the last month, how often have you been upset because of something that happened unexpectedly? | 0 | 1 | 2 | 3 | 4 |

| In the last month, how often have you felt that you were unable to control the important things in your life? | 0 | 1 | 2 | 3 | 4 |

| In the last month, how often have you felt nervous and stressed? | 0 | 1 | 2 | 3 | 4 |

| In the last month, how often have you felt confident about your ability to handle your personal problems? (R) | 4 | 3 | 2 | 1 | 0 |

| In the last month, how often have you felt that things were going your way? (R) | 4 | 3 | 2 | 1 | 0 |

| In the last month, how often have you found that you could not cope with all the things that you had to do? | 0 | 1 | 2 | 3 | 4 |

| In the last month, how often have you been able to control irritations in your life? (R) | 4 | 3 | 2 | 1 | 0 |

| In the last month, how often have you felt that you were on top of things? (R) | 4 | 3 | 2 | 1 | 0 |

| In the last month, how often have you been angered because of things that happened that were outside of your control? | 0 | 1 | 2 | 3 | 4 |

| In the last month, how often have you felt difficulties were piling up so high that you could not overcome them? | 0 | 1 | 2 | 3 | 4 |
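The table above marks four items with (R), meaning their option values run from 4 down to 0. A minimal scoring sketch under that reading is given below: each response is recorded as the position of the chosen option (0 = Never to 4 = Very Often), reversed items are flipped, and the values are summed. The function name and input format are assumptions for illustration, not the authors' analysis code.

```python
# 1-based positions of the items marked (R) in the table above.
REVERSED_ITEMS = {4, 5, 7, 8}
MAX_OPTION = 4  # options run from 0 (Never) to 4 (Very Often)


def score_pss(responses):
    """Sum ten responses (each 0-4), flipping the reverse-scored items."""
    if len(responses) != 10:
        raise ValueError("expected 10 responses")
    total = 0
    for position, raw in enumerate(responses, start=1):
        if raw not in range(MAX_OPTION + 1):
            raise ValueError(f"item {position}: response must be 0-4")
        total += MAX_OPTION - raw if position in REVERSED_ITEMS else raw
    return total


# A respondent who answers "Sometimes" to every item.
print(score_pss([2] * 10))  # 20
```

Answering "Never" to every item gives 16 rather than 0, because the four reversed items each contribute 4 points.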

| Questions | Strongly Disagree | Disagree | Sometimes | Agree | Strongly Agree |

|---|---|---|---|---|---|

| On the whole, I am satisfied with myself. | 1 | 2 | 3 | 4 | 5 |

| At times, I think I am no good at all. | 1 | 2 | 3 | 4 | 5 |

| I feel that I have a number of good qualities. | 5 | 4 | 3 | 2 | 1 |

| I am able to do things as well as most other people. | 1 | 2 | 3 | 4 | 5 |

| I feel I do not have much to be proud of. | 5 | 4 | 3 | 2 | 1 |

| I certainly feel useless at times. | 1 | 2 | 3 | 4 | 5 |

| I feel that I’m a person of worth, at least on an equal plane with others. | 1 | 2 | 3 | 4 | 5 |

| I wish I could have more respect for myself. | 5 | 4 | 3 | 2 | 1 |

| All in all, I am inclined to feel that I am a failure. | 5 | 4 | 3 | 2 | 1 |

| I take a positive attitude toward myself. | 5 | 4 | 3 | 2 | 1 |

| Questions | Never | Rarely | Sometimes | Often | Always |

|---|---|---|---|---|---|

| When I fail at something important to me, I become consumed by feelings of inadequacy. (R) | 5 | 4 | 3 | 2 | 1 |

| I try to be understanding and patient towards those aspects of my personality I don’t like. | 1 | 2 | 3 | 4 | 5 |

| When something painful happens, I try to take a balanced view of the situation. | 1 | 2 | 3 | 4 | 5 |

| When I’m feeling down, I tend to feel like most other people are probably happier than I am. (R) | 5 | 4 | 3 | 2 | 1 |

| I try to see my failings as part of the human condition. | 1 | 2 | 3 | 4 | 5 |

| When I’m going through a very hard time, I give myself the caring and tenderness I need. | 1 | 2 | 3 | 4 | 5 |

| When something upsets me, I try to keep my emotions in balance. | 1 | 2 | 3 | 4 | 5 |

| When I fail at something that’s important to me, I tend to feel alone in my failure. (R) | 5 | 4 | 3 | 2 | 1 |

| When I’m feeling down, I tend to obsess and fixate on everything that’s wrong. (R) | 5 | 4 | 3 | 2 | 1 |

| When I feel inadequate in some way, I try to remind myself that feelings of inadequacy are shared by most people. | 1 | 2 | 3 | 4 | 5 |

| I’m disapproving and judgmental about my own flaws and inadequacies. (R) | 5 | 4 | 3 | 2 | 1 |

| I’m intolerant and impatient towards those aspects of my personality I don’t like. (R) | 5 | 4 | 3 | 2 | 1 |

References

- McGrath, J.J.; Al-Hamzawi, A.; Alonso, J.; Altwaijri, Y.; Andrade, L.H.; Bromet, E.J.; Bruffaerts, R.; Caldas de Almeida, J.M.; Chardoul, S.; Chiu, W.T.; et al. Age of onset and cumulative risk of mental disorders: A cross-national analysis of population surveys from 29 countries. Lancet Psychiatry 2023, 10, 668–681. [Google Scholar] [CrossRef] [PubMed]

- Jayasree, A.; Shanmuganathan, P.; Ramamurthy, P.; Alwar, M.C. Types of medication non-adherence & approaches to enhance medication adherence in mental health disorders: A narrative review. Indian. J. Psychol. Med. 2024, 46, 503–510. [Google Scholar]

- Bailey, R.K.; Clemens, K.M.; Portela, B.; Bowrey, H.; Pfeiffer, S.N.; Geonnoti, G.; Riley, A.; Sminchak, J.; Lakey Kevo, S.; Naranjo, R.R. Motivators and barriers to help-seeking and treatment adherence in major depressive disorder: A patient perspective. Psychiatry Res. Commun. 2024, 4, 100200. [Google Scholar] [CrossRef]

- Deng, M.; Zhai, S.; Ouyang, X.; Liu, Z.; Ross, B. Factors influencing medication adherence among patients with severe mental disorders from the perspective of mental health professionals. BMC Psychiatry 2022, 22, 22. [Google Scholar] [CrossRef] [PubMed]

- Creswell, J.D. Mindfulness interventions. Annu. Rev. Psychol. 2017, 68, 491–516. [Google Scholar] [CrossRef]

- Hoge, E.A.; Bui, E.; Marques, L.; Metcalf, C.A.; Morris, L.K.; Robinaugh, D.J.; Worthington, J.J.; Pollack, M.H.; Simon, N.M. Randomized controlled trial of mindfulness meditation for generalized anxiety disorder: Effects on anxiety and stress reactivity. J. Clin. Psychiatry 2013, 74, 16662. [Google Scholar] [CrossRef]

- Sharma, M.; Rush, S.E. Mindfulness-based stress reduction as a stress management intervention for healthy individuals: A systematic review. J. Evid.-Based Complement. Altern. Med. 2014, 19, 271–286. [Google Scholar] [CrossRef]

- Komariah, M.; Ibrahim, K.; Pahria, T.; Rahayuwati, L.; Somantri, I. Effect of mindfulness breathing meditation on depression, anxiety, and stress: A randomized controlled trial among university students. Healthcare 2022, 11, 26. [Google Scholar] [CrossRef]

- Bringmann, H.C.; Michalsen, A.; Jeitler, M.; Kessler, C.S.; Brinkhaus, B.; Brunnhuber, S.; Sedlmeier, P. Meditation—Based lifestyle modification in mild to moderate depression—A randomized controlled trial. Depress. Anxiety 2022, 39, 363–375. [Google Scholar] [CrossRef]

- Goldin, P.R.; Gross, J.J. Effects of mindfulness-based stress reduction (MBSR) on emotion regulation in social anxiety disorder. Emotion 2010, 10, 83. [Google Scholar] [CrossRef]

- Zhang, Q.; Wang, Z.; Wang, X.; Liu, L.; Zhang, J.; Zhou, R. The effects of different stages of mindfulness meditation training on emotion regulation. Front. Human. Neurosci. 2019, 13, 208. [Google Scholar] [CrossRef] [PubMed]

- Creswell, J.D.; Myers, H.F.; Cole, S.W.; Irwin, M.R. Mindfulness meditation training effects on CD4+ T lymphocytes in HIV-1 infected adults: A small randomized controlled trial. Brain Behav. Immun. 2009, 23, 184–188. [Google Scholar] [CrossRef] [PubMed]

- Nyklíček, I. Aspects of self-awareness in meditators and meditation-naïve participants: Self-report versus task performance. Mindfulness 2020, 11, 1028–1037. [Google Scholar] [CrossRef]

- Chems-Maarif, R.; Cavanagh, K.; Baer, R.; Gu, J.; Strauss, C. Defining Mindfulness: A Review of Existing Definitions and Suggested Refinements. Mindfulness 2025, 2025, 1–20. [Google Scholar] [CrossRef]

- Kabat-Zinn, J.; Hanh, T.N. Full Catastrophe Living: Using the Wisdom of Your Body and Mind to Face Stress, Pain, and Illness; Random House Publishing Group: New York, NY, USA, 2009. [Google Scholar]

- Salzberg, S.; Jon, K.-Z. Lovingkindness: The Revolutionary Art of Happiness; Shambhala Publications: Boulder, CO, USA, 2004. [Google Scholar]

- Fredrickson, B.L.; Cohn, M.A.; Coffey, K.A.; Pek, J.; Finkel, S.M. Open hearts build lives: Positive emotions, induced through loving-kindness meditation, build consequential personal resources. J. Personal. Soc. Psychol. 2008, 95, 1045.

- Zeng, X.; Chiu, C.P.; Wang, R.; Oei, T.P.; Leung, F.Y. The effect of loving-kindness meditation on positive emotions: A meta-analytic review. Front. Psychol. 2015, 6, 1693.

- Kabat-Zinn, J. Falling Awake: How to Practice Mindfulness in Everyday Life; Hachette: London, UK, 2018.

- Keng, S.L.; Smoski, M.J.; Robins, C.J. Effects of mindfulness on psychological health: A review of empirical studies. Clin. Psychol. Rev. 2011, 31, 1041–1056.

- Marais, G.A.B.; Lantheaume, S.; Fiault, R.; Shankland, R. Mindfulness-based programs improve psychological flexibility, mental health, well-being, and time management in academics. Eur. J. Investig. Health Psychol. Educ. 2020, 10, 1035–1050.

- Frank, J.L.; Jennings, P.A.; Greenberg, M.T. Validation of the mindfulness in teaching scale. Mindfulness 2016, 7, 155–163.

- Molloy Elreda, L.; Jennings, P.A.; DeMauro, A.A.; Mischenko, P.P.; Brown, J.L. Protective effects of interpersonal mindfulness for teachers’ emotional supportiveness in the classroom. Mindfulness 2019, 10, 537–546.

- Messina, I.; Calvo, V.; Masaro, C.; Ghedin, S.; Marogna, C. Interpersonal emotion regulation: From research to group therapy. Front. Psychol. 2021, 12, 636919.

- Roy, A. Interpersonal emotion regulation and emotional intelligence: A review. Int. J. Res. Publ. Rev. 2023, 4, 623–627.

- Ghosh, S.; Mitra, B.; De, P. Towards improving emotion self-report collection using self-reflection. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- Kvamme, T.L.; Sandberg, K.; Silvanto, J. Mental Imagery as part of an ‘Inwardly Focused’ Cognitive Style. Neuropsychologia 2024, 5, 108988.

- Guendelman, S.; Medeiros, S.; Rampes, H. Mindfulness and emotion regulation: Insights from neurobiological, psychological, and clinical studies. Front. Psychol. 2017, 8, 208068.

- Salem, G.M.M.; Hashimi, W.; El-Ashry, A.M. Reflective mindfulness and emotional regulation training to enhance nursing students’ self-awareness, understanding, and regulation: A mixed method randomized controlled trial. BMC Nurs. 2025, 24, 478.

- Bakker, D.; Rickard, N. Engagement in mobile phone app for self-monitoring of emotional wellbeing predicts changes in mental health: MoodPrism. J. Affect. Disord. 2018, 227, 432–442.

- He, X.; Shi, W.; Han, X.; Wang, N.; Zhang, N.; Wang, X. The interventional effects of loving-kindness meditation on positive emotions and interpersonal interactions. Neuropsychiatr. Dis. Treat. 2015, 11, 1273–1277.

- Hadi, S.A.A.; Gharaibeh, M. The role of self-awareness in predicting the level of emotional regulation difficulties among faculty members. Emerg. Sci. J. 2023, 7, 1274–1293.

- Jerath, R.; Crawford, M.W.; Barnes, V.A.; Harden, K. Self-regulation of breathing as a primary treatment for anxiety. Appl. Psychophysiol. Biofeedback 2015, 40, 107–115.

- Zaccaro, A.; Piarulli, A.; Laurino, M.; Garbella, E.; Menicucci, D.; Neri, B.; Gemignani, A. How breath-control can change your life: A systematic review on psycho-physiological correlates of slow breathing. Front. Hum. Neurosci. 2018, 12, 353.

- Bentley, T.G.K.; D’Andrea-Penna, G.; Rakic, M.; Arce, N.; LaFaille, M.; Berman, R.; Cooley, K.; Sprimont, P. Breathing practices for stress and anxiety reduction: Conceptual framework of implementation guidelines based on a systematic review of the published literature. Brain Sci. 2023, 13, 1612.

- Balban, M.Y.; Neri, E.; Kogon, M.M.; Weed, L.; Nouriani, B.; Jo, B.; Holl, G.; Zeitzer, J.M.; Spiegel, D.; Huberman, A.D. Brief structured respiration practices enhance mood and reduce physiological arousal. Cell Rep. Med. 2023, 4, 100895.

- Jain, M.; Markan, C.M. Effect of Brief Meditation Intervention on Attention: An ERP Investigation. arXiv 2022, arXiv:2209.12625.

- Pilcher, J.J.; Byrne, K.A.; Weiskittel, S.E.; Clark, E.C.; Brancato, M.G.; Rosinski, M.L.; Spinelli, M.R. Brief slow-paced breathing improves working memory, mood, and stress in college students. Anxiety Stress Coping 2025, 2025, 528–543.

- Ditto, B.; Eclache, M.; Goldman, N. Short-term autonomic and cardiovascular effects of mindfulness body scan meditation. Ann. Behav. Med. 2006, 32, 227–234.

- Mirams, L.; Poliakoff, E.; Brown, R.J.; Lloyd, D.M. Brief body-scan meditation practice improves somatosensory perceptual decision making. Conscious. Cogn. 2013, 22, 348–359.

- Dambrun, M.; Berniard, A.; Didelot, T.; Chaulet, M.; Droit-Volet, S.; Corman, M.; Juneau, C.; Martinon, L.M. Unified consciousness and the effect of body scan meditation on happiness: Alteration of inner-body experience and feeling of harmony as central processes. Mindfulness 2019, 10, 1530–1544.

- Glissmann, C. Calm College: Testing a Brief Mobile App Meditation Intervention Among Stressed College Students. Master’s Thesis, Arizona State University, Glendale, AZ, USA, 2018.

- Headspace: Meditation & Sleep (Version 3.371.0). Headspace Inc. (Santa Monica, CA, USA). Available online: https://apps.apple.com/us/app/headspace-meditation-health/id493145008 (accessed on 5 May 2025).

- Kokkiri (Version 3.4.6). Maeum Sueop. (Seoul, Republic of Korea). Available online: https://apps.apple.com/kr/app/%EC%BD%94%EB%81%BC%EB%A6%AC-%EC%88%98%EB%A9%B4-%EB%AA%85%EC%83%81/id1439995060 (accessed on 5 May 2025).

- TIDE: Sleep, Focus, Meditation (Version 4.4.7). Guangzhou Moreless Network Technology Co., Ltd. (Guangzhou, China). Available online: https://apps.apple.com/kr/app/tide-sleep-focus-meditation/id1077776989 (accessed on 5 May 2025).

- TaoMix 2-Relax, Sleep, Focus (Version 2.10.04). MWM. (Neuilly-sur-Seine, France). Available online: https://apps.apple.com/kr/app/taomix-2-relax-sleep-focus/id1032493819 (accessed on 5 May 2025).

- Meditopia: Sleep, Meditation (Version 4.17.1). Yedi70 Yazilim ve Bilgi Teknolojileri Anonim Sirketi. (Istanbul, Turkey). Available online: https://apps.apple.com/us/app/meditopia-sleep-meditation/id1190294015 (accessed on 10 May 2025).

- Edwards, D.J.; Kemp, A.H. A novel ACT-based video game to support mental health through embedded learning: A mixed-methods feasibility study protocol. BMJ Open 2020, 10, e041667.

- Kim, H.; Choi, J.; Doh, Y.Y.; Nam, J. The melody of the mysterious stones: A VR mindfulness game using sound spatialization. In Proceedings of the CHI Conference on Human Factors in Computing Systems Extended Abstracts, New Orleans, LA, USA, 30 April–5 May 2022.

- Miner, N. Stairway to Heaven: Breathing Mindfulness into Virtual Reality. Master’s Thesis, Northeastern University, Boston, MA, USA, 2022.

- Thomma, N. Gamified Mindfulness: A Novel Approach to Nontraditional Meditation. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2025.

- Barrett, L.F.; Gross, J.; Christensen, T.C.; Benvenuto, M. Knowing what you’re feeling and knowing what to do about it: Mapping the relation between emotion differentiation and emotion regulation. Cogn. Emot. 2001, 15, 713–724.

- Yildirim, C.; O’Grady, T. The efficacy of a virtual reality-based mindfulness intervention. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Utrecht, The Netherlands, 14–18 December 2020.

- Kalantari, S.; Xu, T.B.; Mostafavi, A.; Lee, A.; Barankevich, R.; Boot, W.; Czaja, S. Using a nature-based virtual reality environment for improving mood states and cognitive engagement in older adults: A mixed-method feasibility study. Innov. Aging 2022, 6, igac015.

- Cerasa, A.; Gaggioli, A.; Pioggia, G.; Riva, G. Metaverse in mental health: The beginning of a long history. Curr. Psychiatry Rep. 2024, 26, 294–303.

- Shamim, N.; Wei, M.; Gupta, S.; Verma, D.S.; Abdollahi, S.; Shin, M.M. Metaverse for digital health solutions. Int. J. Inf. Manag. 2025, 83, 102869.

- Sindiramutty, S.R.; Jhanjhi, N.Z.; Ray, S.K.; Jazri, H.; Khan, N.A.; Gaur, L. Metaverse: Virtual Meditation. In Metaverse Applications for Intelligent Healthcare; IGI Global Scientific Publishing: Hershey, PA, USA, 2024; pp. 93–158.

- Ahuja, A.S.; Polascik, B.W.; Doddapaneni, D.; Byrnes, E.S.; Sridhar, J. The digital metaverse: Applications in artificial intelligence, medical education, and integrative health. Integr. Med. Res. 2023, 12, 100917.

- Song, Y.T.; Qin, J. Metaverse and personal healthcare. Procedia Comput. Sci. 2022, 210, 189–197.

- Del Hoyo, Y.L.; Elices, M.; Garcia-Campayo, J. Mental health in the virtual world: Challenges and opportunities in the metaverse era. World J. Clin. Cases 2024, 12, 2939.

- Ali, S.; Abdullah; Armand, T.P.T.; Athar, A.; Hussain, A.; Ali, M.; Yaseen, M.; Joo, M.; Kim, H. Metaverse in healthcare integrated with explainable AI and blockchain: Enabling immersiveness, ensuring trust, and providing patient data security. Sensors 2023, 23, 565.

- Matamala-Gomez, M.; Maselli, A.; Malighetti, C.; Realdon, O.; Mantovani, F.; Riva, G. Virtual body ownership illusions for mental health: A narrative review. J. Clin. Med. 2021, 10, 139.

- Chengoden, R.; Victor, N.; Huynh-The, T.; Yenduri, G.; Jhaveri, R.H.; Alazab, M.; Bhattacharya, S.; Hegde, P.; Maddikunta, P.K.R.; Gadekallu, T.R. Metaverse for healthcare: A survey on potential applications, challenges and future directions. IEEE Access 2023, 11, 12765–12795.

- Leandro, J.; Rao, S.; Xu, M.; Xu, W.; Jojic, N.; Brockett, C.; Dolan, B. GENEVA: GENErating and Visualizing branching narratives using LLMs. In Proceedings of the 2024 IEEE Conference on Games (CoG), Milan, Italy, 5–8 August 2024.

- Kumaran, V.; Rowe, J.; Lester, J. NARRATIVEGENIE: Generating narrative beats and dynamic storytelling with large language models. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, Lexington, KY, USA, 18–22 November 2024.

- Nasir, M.U.; James, S.; Togelius, J. Word2World: Generating stories and worlds through large language models. arXiv 2024, arXiv:2405.06686.

- Buongiorno, S.; Klinkert, L.; Zhuang, Z.; Chawla, T.; Clark, C. PANGeA: Procedural Artificial Narrative Using Generative AI for Turn-Based, Role-Playing Video Games. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, Lexington, KY, USA, 18–22 November 2024.

- Peng, X.; Quaye, J.; Rao, S.; Xu, W.; Botchway, P.; Brockett, C.; Jojic, N.; DesGarennes, G.; Lobb, K.; Xu, M.; et al. Player-driven emergence in LLM-driven game narrative. In Proceedings of the 2024 IEEE Conference on Games (CoG), Milan, Italy, 5–8 August 2024.

- Rasool, A.; Shahzad, M.I.; Aslam, H.; Chan, V.; Arshad, M.A. Emotion-aware embedding fusion in large language models (Flan-T5, Llama 2, DeepSeek-R1, and ChatGPT 4) for intelligent response generation. AI 2025, 6, 56.

- Mixamo. 2025. Available online: https://www.mixamo.com/ (accessed on 2 May 2025).

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Wilhelm, S.; Bernstein, E.E.; Bentley, K.H.; Snorrason, I.; Hoeppner, S.S.; Klare, D.; Harrison, O. Feasibility, acceptability, and preliminary efficacy of a smartphone app–led cognitive behavioral therapy for depression under therapist supervision: An open trial. JMIR Ment. Health 2024, 11, e53998.

- Braun, S.S.; Roeser, R.W.; Mashburn, A.J. Results from a pre-post, uncontrolled pilot study of a mindfulness-based program for early elementary school teachers. Pilot. Feasibility Stud. 2020, 6, 178.

- Cotter, E.W.; Hornack, S.E.; Fotang, J.P.; Pettit, E.; Mirza, N.M. A pilot open-label feasibility trial examining an adjunctive mindfulness intervention for adolescents with obesity. Pilot. Feasibility Stud. 2020, 6, 79.

- Kroenke, K.; Spitzer, R.L.; Williams, J.B.W. The PHQ-9: Validity of a brief depression severity measure. J. Gen. Intern. Med. 2001, 16, 606–613.

- Beck, A.T.; Ward, C.H.; Mendelson, M.; Mock, J.; Erbaugh, J. An inventory for measuring depression. Arch. Gen. Psychiatry 1961, 4, 561–571.

- Spitzer, R.L.; Kroenke, K.; Williams, J.B.; Löwe, B. A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch. Intern. Med. 2006, 166, 1092–1097.

- Beck, A.T.; Epstein, N.; Brown, G.; Steer, R.A. An inventory for measuring clinical anxiety: Psychometric properties. J. Consult. Clin. Psychol. 1988, 56, 893.

- Cohen, S.; Kamarck, T.; Mermelstein, R. A global measure of perceived stress. J. Health Soc. Behav. 1983, 24, 385–396.

- Bohlmeijer, E.; ten Klooster, P.M.; Fledderus, M.; Veehof, M.; Baer, R. Psychometric properties of the Five Facet Mindfulness Questionnaire (FFMQ) in a Dutch sample. Mindfulness 2011, 2, 90–95.

- Bond, F.W.; Hayes, S.C.; Baer, R.A.; Carpenter, K.M.; Orcutt, H.K.; Waltz, T.; Zettle, R.D. Preliminary psychometric properties of the Acceptance and Action Questionnaire–II: A revised measure of psychological inflexibility and experiential avoidance. Behav. Ther. 2011, 42, 676–688.

- Raes, F.; Pommier, E.; Neff, K.D.; Van Gucht, D. Construction and factorial validation of a short form of the Self-Compassion Scale. Clin. Psychol. Psychother. 2011, 18, 250–255.

- Rosenberg, M. Society and the Adolescent Self-Image; Princeton University Press: Princeton, NJ, USA, 1965.

- Kang, M. Effects of Enneagram Program for Self-Esteem, Interpersonal Relationships, and GAF in Psychiatric Patients. Ph.D. Thesis, Seoul National University, Seoul, Republic of Korea.

- Shapiro, S.S.; Wilk, M.B. An analysis of variance test for normality (complete samples). Biometrika 1965, 52, 591–611.

- Smirnov, N. Table for estimating the goodness of fit of empirical distributions. Ann. Math. Stat. 1948, 19, 279–281.

- Tsagris, M.; Alenazi, A.; Verrou, K.M.; Pandis, N. Hypothesis testing for two population means: Parametric or non-parametric test. J. Stat. Comput. Simul. 2020, 90, 252–270.

- Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83.

- Ghasemi, A.; Zahediasl, S. Normality tests for statistical analysis: A guide for non-statisticians. Int. J. Endocrinol. Metab. 2012, 10, 486.

- Akbar, Z.; Ghani, M.U.; Aziz, U. Boosting Viewer Experience with Emotion-Driven Video Analysis: A BERT-based Framework for Social Media Content. J. Artif. Intell. 2025, 1, 3–11.

- Demszky, D.; Movshovitz-Attias, D.; Ko, J.; Cowen, A.; Nemade, G.; Ravi, S. GoEmotions: A dataset of fine-grained emotions. arXiv 2020, arXiv:2005.00547.

- SamLowe. Hugging Face. Available online: https://huggingface.co/SamLowe/roberta-base-go_emotions/ (accessed on 6 September 2025).

- Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv 2022, arXiv:2203.05794.

- Barth, L.; Kobourov, S.; Pupyrev, S.; Ueckerdt, T. On Semantic Word Cloud Representation. arXiv 2013, arXiv:1304.8016.

- Schubert, E.; Spitz, A.; Weiler, M.; Geiß, J.; Gertz, M. Semantic word clouds with background corpus normalization and t-distributed stochastic neighbor embedding. arXiv 2017, arXiv:1708.03569.

- Rasool, A.; Aslam, S.; Hussain, N.; Imtiaz, S.; Riaz, W. nBERT: Harnessing NLP for emotion recognition in psychotherapy to transform mental health care. Information 2025, 16, 301.

| System Functions | Calm [42] | Headspace [43] | Kokkiri [44] | Tide [45] | Tao Mix2 [46] | Meditopia [47] | ACTing Mind [48] | TMMS [49] | Stairway to Heaven [50] | MindFlourish [51] | Our |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Foundational mindfulness training | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||
| Personalized and guided meditation | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||
| Cognitive regulation and emotional management | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||
| Tracking breathing data and feedback | ✓ | ✓ | ✓ | ✓ | |||||||
| Gamification | ✓ | ✓ | ✓ | ✓ | |||||||
| Multisensory (audiovisual) experience | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||
| Immersive virtual environment | ✓ | ✓ | ✓ |

| No. | Questionnaire | Calculation Method | Formula |
|---|---|---|---|
| 1 | Acceptance and Action Questionnaire-II (AAQ-II) | Total score calculation | |
| 2 | Beck Anxiety Inventory (BAI) | Total score calculation | |
| 3 | Beck Depression Inventory (BDI) | Total score calculation | |
| 4 | Five Facet Mindfulness Questionnaire (FFMQ-15) | Subscale score calculation | |
| 5 | GAD-7 | Total score calculation | |
| 6 | Metaverse Content Suitability Assessment (MCSA) | Subjective evaluation | N/A |
| 7 | Patient Health Questionnaire-9 (PHQ-9) | Total score calculation | |
| 8 | Perceived Stress Scale (PSS) | Total score calculation | |
| 9 | Rosenberg Self-Esteem Scale (RSES) | Total score calculation | |
| 10 | Self-Compassion Scale—Short Form (SCS-SF) | Average score calculation | |
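
The three calculation methods named in the table (total, subscale, and average score) can be sketched as follows. This is a minimal illustration, not the authors' scoring procedure: the function names, the item-to-subscale mapping, and the sample responses are hypothetical, reverse-scored items are not handled, and whether a subscale is summed or averaged depends on the instrument (a per-item mean is assumed here).

```python
from statistics import mean


def total_score(responses):
    """Total score: sum of all item responses."""
    return sum(responses)


def average_score(responses):
    """Average score: mean of all item responses."""
    return mean(responses)


def subscale_scores(responses, subscales):
    """Subscale scores: per-item mean within each subscale (assumed convention).

    `subscales` maps a subscale name to the 0-based indices of its items.
    """
    return {name: mean(responses[i] for i in idx) for name, idx in subscales.items()}


# Hypothetical responses to a six-item questionnaire on a 1-5 scale.
responses = [4, 2, 5, 3, 1, 3]
# Hypothetical item-to-subscale mapping (not taken from any real instrument).
subscales = {"A": [0, 1, 2], "B": [3, 4, 5]}

print(total_score(responses))
print(average_score(responses))
print(subscale_scores(responses, subscales))
```

A real instrument would first recode any reverse-scored items before applying these functions.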

| Characteristics | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 9 | 29.0 |
| | Female | 22 | 71.0 |
| Age | 18–19 | 1 | 3.2 |
| | 20–29 | 19 | 61.3 |
| | 30–39 | 5 | 16.1 |
| | 40–49 | 6 | 19.4 |

| Domain | Measure | Kolmogorov–Smirnov (a) Statistic | Kolmogorov–Smirnov (a) Sig. (p) | Shapiro–Wilk Statistic | Shapiro–Wilk Sig. (p) |
|---|---|---|---|---|---|
| Depression | BDI | 0.123 | 0.200 * | 0.964 | 0.364 |
| | PHQ-9 | 0.142 | 0.115 | 0.951 | 0.162 |
| Anxiety | BAI | 0.150 | 0.074 | 0.966 | 0.409 |
| | GAD-7 | 0.177 | 0.015 | 0.929 | 0.042 |
| Stress | PSS | 0.131 | 0.190 | 0.951 | 0.164 |
| Mindfulness | FFMQ-15 Aggregate | 0.098 | 0.200 * | 0.979 | 0.798 |
| | FFMQ-15 Observing | 0.155 | 0.054 | 0.968 | 0.476 |
| | FFMQ-15 Describing | 0.127 | 0.200 * | 0.929 | 0.042 |
| | FFMQ-15 Acting awareness | 0.114 | 0.200 * | 0.959 | 0.283 |
| | FFMQ-15 Nonjudging | 0.169 | 0.025 | 0.950 | 0.154 |
| | FFMQ-15 Nonreactivity | 0.143 | 0.108 | 0.965 | 0.390 |
| Psychological | AAQ-II | 0.106 | 0.200 * | 0.965 | 0.391 |
| Self-Compassion | SCS-SF | 0.170 | 0.023 | 0.807 | <0.001 |
| Self-Esteem | RSES | 0.164 | 0.033 | 0.889 | 0.004 |
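
How normality results of this kind typically feed into the choice of a pre/post test can be sketched as below. The Shapiro–Wilk p-values are copied from the table above (the SCS-SF value, printed as 0, is entered as 0.0). The 0.05 threshold and the rule "paired t-test if normality is not rejected, otherwise Wilcoxon signed-rank test" are assumptions reflecting a common convention, not a procedure stated in this section, and `choose_test` is a hypothetical helper.

```python
ALPHA = 0.05  # assumed significance threshold

# Shapiro-Wilk p-values as listed in the normality table.
shapiro_wilk_p = {
    "BDI": 0.364,
    "PHQ-9": 0.162,
    "BAI": 0.409,
    "GAD-7": 0.042,
    "PSS": 0.164,
    "FFMQ-15 Aggregate": 0.798,
    "FFMQ-15 Observing": 0.476,
    "FFMQ-15 Describing": 0.042,
    "FFMQ-15 Acting awareness": 0.283,
    "FFMQ-15 Nonjudging": 0.154,
    "FFMQ-15 Nonreactivity": 0.39,
    "AAQ-II": 0.391,
    "SCS-SF": 0.0,  # printed as 0 in the table
    "RSES": 0.004,
}


def choose_test(p_value, alpha=ALPHA):
    """Pick a paired test from a normality p-value (assumed decision rule)."""
    if p_value > alpha:
        return "paired t-test"
    return "Wilcoxon signed-rank test"


# Measures for which normality is rejected under the assumed rule.
nonparametric = [m for m, p in shapiro_wilk_p.items()
                 if choose_test(p) == "Wilcoxon signed-rank test"]
print(nonparametric)
```

Under these assumptions, the flagged measures are GAD-7, FFMQ-15 Describing, SCS-SF, and RSES, which are the same measures for which the results tables report a Z statistic rather than t.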

| Psychological Measure | Pre: Mean (SD), n = 31 | Post: Mean (SD), n = 31 | t/Z | p | Effect Size |
|---|---|---|---|---|---|
| Depression | | | | | |
| BDI | 13.10 (7.83) | 7.94 (5.78) | 4.462 | <0.001 | 0.801 (d) |
| PHQ-9 | 7.48 (5.21) | 3.42 (3.17) | 6.804 | <0.001 | 1.222 (d) |
| Anxiety | | | | | |
| BAI | 9.52 (7.27) | 6.10 (5.86) | 4.217 | <0.001 | 0.757 (d) |
| GAD-7 | 5.52 (4.58) | 1.97 (2.21) | −4.217 (Z) | <0.001 | 0.757 (r) |
| Stress | | | | | |
| PSS | 18.84 (3.91) | 16.39 (3.99) | 3.132 | 0.004 | 0.563 (d) |
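
The effect sizes in this table are numerically consistent with d = |t| / √n for the paired t-tests and r = |Z| / √n for the Wilcoxon test, with n = 31. The sketch below checks that relationship. The formulas are an inference from the reported numbers rather than something stated in this section, and `effect_size` is a hypothetical helper name.

```python
from math import sqrt

N = 31  # sample size given in the table header


def effect_size(statistic, n=N):
    """Return |statistic| / sqrt(n): d for a paired t, r for a Wilcoxon Z (assumed)."""
    return abs(statistic) / sqrt(n)


# (test statistic, reported effect size) pairs copied from the table.
reported = {
    "BDI": (4.462, 0.801),
    "PHQ-9": (6.804, 1.222),
    "BAI": (4.217, 0.757),
    "GAD-7": (-4.217, 0.757),  # Z statistic, effect size r
    "PSS": (3.132, 0.563),
}

for name, (stat, rep) in reported.items():
    print(f"{name}: computed {effect_size(stat):.3f}, reported {rep:.3f}")
```

Each computed value agrees with the reported one to three decimal places, which supports reading the d and r columns as statistic-over-root-n effect sizes.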

| Mindfulness Facet | Pre: Mean (SD), n = 31 | Post: Mean (SD), n = 31 | t/Z | p | Effect Size |
|---|---|---|---|---|---|
| Aggregate | 3.09 (0.59) | 3.35 (0.57) | −3.153 | 0.002 (one-tailed) | 0.566 (d) |
| Observing | 3.50 (1.06) | 3.31 (1.23) | −1.683 | 0.051 (one-tailed) | 0.302 (d) |
| Describing | 3.16 (1.21) | 3.51 (1.16) | −1.883 (Z) | 0.060 | |
| Acting awareness | 3.46 (1.15) | 3.87 (0.88) | −1.852 | 0.037 (one-tailed) | 0.333 (d) |
| Nonjudging | 3.32 (1.11) | 3.13 (1.25) | 0.899 | 0.188 (one-tailed) | 0.157 (d) |
| Nonreactivity | 2.59 (1.00) | 2.94 (1.15) | −1.774 | 0.043 (one-tailed) | 0.319 (d) |

| Test | Statistic | Value |
|---|---|---|
| Kaiser–Meyer–Olkin Measure of Sampling Adequacy | | 0.598 |
| Bartlett’s test of sphericity | Approximate chi-square (χ²) | 184.799 |
| | Degrees of freedom | 105 |
| | Significance (p) | <0.001 |
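
As a consistency check on the degrees of freedom: Bartlett's test of sphericity on p variables has p(p − 1)/2 degrees of freedom. Assuming the test was run on the 15 FFMQ-15 items (the same 15 items that appear in the factor loading table), this gives

$$
df = \frac{p(p-1)}{2} = \frac{15 \times 14}{2} = 105,
$$

which matches the reported value.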

| Item | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 |
|---|---|---|---|---|---|
| Nonreactivity3 | 0.838 | 0.004 | 0.027 | 0.028 | −0.183 |
| Nonreactivity1 | 0.789 | 0.031 | 0.126 | 0.252 | −0.036 |
| Observing2 | 0.650 | 0.216 | 0.080 | −0.300 | 0.060 |
| Describing1 | 0.078 | 0.931 | 0.165 | 0.049 | −0.029 |
| Describing2 | 0.059 | 0.889 | 0.328 | 0.045 | −0.006 |
| Observing3 | 0.103 | 0.583 | −0.049 | −0.540 | 0.090 |
| Acting Awareness2 | 0.174 | 0.211 | 0.886 | −0.050 | 0.095 |
| Acting Awareness3 | −0.038 | 0.150 | 0.819 | −0.081 | 0.207 |
| Acting Awareness1 | 0.222 | 0.142 | 0.529 | 0.642 | 0.014 |
| Nonjudging3 | −0.290 | 0.082 | −0.026 | 0.658 | 0.372 |
| Observing1 | −0.157 | 0.084 | 0.213 | −0.842 | 0.102 |
| Nonjudging1 | −0.205 | −0.111 | 0.023 | 0.151 | 0.814 |
| Nonjudging2 | 0.198 | 0.054 | 0.341 | 0.143 | 0.716 |
| Nonreactivity2 | 0.328 | −0.004 | −0.247 | 0.240 | −0.707 |
| Describing3 | 0.315 | 0.285 | −0.171 | −0.184 | 0.439 |
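
One common way to read a loading matrix like this is to assign each item to the factor on which its absolute loading is largest. The sketch below applies that rule to the values in the table. The rule itself is an assumption for illustration, not necessarily how the authors interpreted the factors, and `primary_factor` is a hypothetical helper.

```python
from collections import defaultdict

# Loadings on Factors 1-5, copied from the table.
loadings = {
    "Nonreactivity3": (0.838, 0.004, 0.027, 0.028, -0.183),
    "Nonreactivity1": (0.789, 0.031, 0.126, 0.252, -0.036),
    "Observing2": (0.650, 0.216, 0.080, -0.300, 0.060),
    "Describing1": (0.078, 0.931, 0.165, 0.049, -0.029),
    "Describing2": (0.059, 0.889, 0.328, 0.045, -0.006),
    "Observing3": (0.103, 0.583, -0.049, -0.540, 0.090),
    "Acting Awareness2": (0.174, 0.211, 0.886, -0.050, 0.095),
    "Acting Awareness3": (-0.038, 0.150, 0.819, -0.081, 0.207),
    "Acting Awareness1": (0.222, 0.142, 0.529, 0.642, 0.014),
    "Nonjudging3": (-0.290, 0.082, -0.026, 0.658, 0.372),
    "Observing1": (-0.157, 0.084, 0.213, -0.842, 0.102),
    "Nonjudging1": (-0.205, -0.111, 0.023, 0.151, 0.814),
    "Nonjudging2": (0.198, 0.054, 0.341, 0.143, 0.716),
    "Nonreactivity2": (0.328, -0.004, -0.247, 0.240, -0.707),
    "Describing3": (0.315, 0.285, -0.171, -0.184, 0.439),
}


def primary_factor(row):
    """Return the 1-based index of the factor with the largest absolute loading."""
    return max(range(len(row)), key=lambda j: abs(row[j])) + 1


# Group items by their primary factor.
groups = defaultdict(list)
for item, row in loadings.items():
    groups[primary_factor(row)].append(item)

for factor in sorted(groups):
    print(f"Factor {factor}: {', '.join(groups[factor])}")
```

Under this rule the items fall into groups in the same order as the table rows, and several items (for example Observing3 and Acting Awareness1) show sizeable secondary loadings on another factor.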

| Psychological Measure | Pre: Mean (SD), n = 31 | Post: Mean (SD), n = 31 | t | p | Effect Size |
|---|---|---|---|---|---|
| AAQ-II | 24.81 (10.97) | 21.90 (9.62) | 2.024 | 0.026 (one-tailed) | 0.364 (d) |

| Psychological Measure | Pre: Mean (SD), n = 31 | Post: Mean (SD), n = 31 | Z | p | Effect Size |
|---|---|---|---|---|---|
| Self-Compassion | | | | | |
| SCS-SF | 2.78 (0.76) | 3.18 (0.68) | −3.380 | 0.001 | 0.607 (r) |
| Self-Esteem | | | | | |
| RSES | 33.00 (8.91) | 36.06 (7.84) | −2.975 | 0.003 | 0.534 (r) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, A.; Lee, G.; Liu, Y.; Zhang, M.; Jung, S.; Park, J.; Rhee, J.; Cho, K. Development and Evaluation of an Immersive Metaverse-Based Meditation System for Psychological Well-Being Using LLM-Driven Scenario Generation. Systems 2025, 13, 798. https://doi.org/10.3390/systems13090798