1. Introduction

Large Language Models (LLMs), such as GPT-4, have demonstrated impressive performance across a wide range of NLP tasks, including reasoning, classification, and instruction-following [1,2].

These capabilities are largely shaped by the prompts used to query them. Prompts serve as natural language instructions that guide model behavior. However, designing effective prompts remains an open challenge: it typically involves manual trial-and-error, task-specific intuition, and considerable domain expertise [3,4]. This reliance on manual effort limits scalability and leads to inconsistent outcomes, especially in complex applications such as information retrieval and fact verification [5,6].

To address these issues, recent studies have proposed automated prompt-optimization methods. Some approaches rely on resampling or paraphrasing-based search [7], while others employ reflection-style methods that iteratively revise prompts based on performance [8] or feedback [9,10]. These methods have advanced the field by showing that prompts can be improved systematically using LLMs themselves. However, they often suffer from two core limitations: (1) a high computational cost due to the need to generate and evaluate multiple candidates per iteration, and (2) reliance on stochastic or locally greedy selection procedures, which may overlook semantically optimal edits.

A promising line of work proposes using textual gradients as a guide for prompt revision [9]. Textual gradients are natural language descriptions of prompt flaws derived from model feedback. These gradients can be interpreted as analogues of loss gradients in neural networks, pointing toward semantically corrective directions. By applying these textual gradients through discrete editing, previous methods have achieved notable gains over hand-crafted prompts.

However, these approaches still depend on evaluating a large number of candidate edits using different search and selection strategies, which can introduce inefficiency and variance into the optimization process. This repeated generation and evaluation cycle often incurs a high number of LLM calls, which increases both computational cost and latency. As a result, such methods are less suitable for scenarios that require rapid iteration or operate under limited resource budgets.

In this paper, we propose an alternative selection strategy that is both more efficient and more representative of the training signal. Instead of depending on stochastic sampling or local search algorithms, we embed all candidate textual gradients into a semantic space and compute a robust center by averaging the embeddings while excluding outliers. We then select the gradient that lies closest to this center, under the assumption that it reflects the shared corrective direction present across all candidates. This center-aware approach enables us to deterministically identify high-quality gradients without expensive multi-step evaluation.

Our method improves upon existing gradient-based prompt-optimization techniques in two key ways. First, it reduces the number of LLM calls needed during optimization by avoiding iterative search and scoring. Second, it identifies more generalizable and robust prompt edits by leveraging the semantic geometry of the feedback space. Because all revisions are expressed in natural language, the method also retains transparency in how edits are derived; we regard this as a secondary benefit rather than a primary objective.

We evaluate our approach across three datasets: TREC DL 2019 for passage relevance evaluation, LIAR for political fact-checking, and ETHOS for hate speech detection. Our experiments use multiple LLMs, including GPT-4o and GPT-4o-mini. Our results show that selecting gradients based on semantic center proximity consistently outperforms random or beam-based selection, improving both Cohen’s Kappa and Accuracy while reducing the required number of model evaluations. Qualitative analysis further reveals that our method tends to select gradients that capture broader reasoning flaws [11].

Overall, this work contributes a simple yet effective strategy for prompt optimization, highlighting semantic proximity as a practical signal for selecting natural language gradients. The results provide both empirical improvements and insights into the structure of gradient-based feedback in prompt-learning.

2. Related Work

2.1. LLM-Based Prompt Optimization

Large Language Models (LLMs) have shown remarkable performance across various natural language processing (NLP) tasks. However, their output quality remains highly sensitive to the formulation of input prompts.

This has led to extensive research into systematic prompt-engineering methods, including few-shot learning, chain-of-thought prompting, and ensemble techniques. These approaches aim to improve model performance without altering model weights, and have laid the foundation for a new research direction known as automatic prompt optimization, which seeks to automate prompt construction and refinement with minimal human intervention.

Early studies focused on white-box settings, where soft prompts are optimized via gradient-based learning [12,13]. These methods adjust continuous input embeddings and apply backpropagation using labeled data. While effective in terms of performance, they require access to model parameters and gradients, making them unsuitable for proprietary LLMs that are accessible only via APIs. Consequently, discrete prompt-editing approaches in black-box settings have been proposed.

Among these methods, RLPrompt [14] formulates prompt-editing as a sequential decision-making process, updating prompts iteratively based on reward signals. AutoHint [15] introduces guided hint generation to improve prompts automatically without model access, and PromptAgent [16] strategically plans prompt improvements through language model reasoning. However, these methods often rely on repeated sampling and local heuristics, leading to high computational costs and limited semantic interpretability [7,9]. This has motivated alternative approaches that use semantically grounded, deterministic criteria for prompt-editing.

2.2. LLMs as Prompt Optimizers: Reflection and Search Strategies

Recent studies have formalized LLM-based prompt-optimization frameworks into modular phases: initialization, updating, and search [17]. In this framework, prompts are initialized either manually or via LLM-generated demonstrations using few-shot examples. The update phase typically involves either random resampling or reflection-based feedback to revise the prompt. In the search phase, beam search or top-k sampling is commonly used to explore candidate prompts.

Among these phases, reflection plays a central role. Reflexion [18] and Self-Refine [19] propose iterative refinement strategies based on model-generated feedback.

The feedback may be explicit, in which case the model articulates its own errors, or implicit, where the feedback is inferred from past performance [10,18,19]. However, some studies suggest that such reflection mechanisms may reinforce pre-existing biases or fail to diagnose the true source of failure [20].

Furthermore, commonly used search methods such as beam search often restrict exploration to a narrow region of the prompt space, resulting in limited semantic diversity and poor generalization [9]. These limitations have prompted new research directions focused on leveraging semantic feedback representations from LLMs.

2.3. Textual Gradients and Feedback-Guided Prompt-Editing

Building on the idea of leveraging LLM feedback as optimization signals, ProTeGi [21] introduced the concept of textual gradients, where model-generated feedback is interpreted as directional guidance in semantic space. The model identifies deficiencies in a prompt and proposes edits that move in the opposite semantic direction; the resulting candidate prompts are then explored using beam search with bandit selection.

This concept has since been generalized in frameworks such as TextGrad [22], which applies gradient-like semantics to domains such as code generation, molecular design, and radiotherapy. In these systems, the user specifies an objective, and the LLM autonomously generates feedback to guide prompt-editing. Empirical studies report gains across multiple tasks, including question-answering and program synthesis.

Nevertheless, challenges remain. Feedback quality is inconsistent across tasks [10,23], and stochastic exploration methods introduce instability and hinder interpretability. ProTeGi also incorporates clustering of textual gradients to maintain diversity in corrective directions, selecting representative candidates from multiple clusters for evaluation. While this strategy can help explore a broader set of feedback signals, it still requires evaluating several candidates per iteration, resulting in higher computational cost and potential variance in performance. In contrast, our approach prioritizes stability and efficiency by first filtering out outlier gradients in the embedding space and then selecting the single candidate closest to a robust semantic center. This deterministic selection process eliminates the need for multi-candidate evaluation while preserving the interpretability of feedback edits.

Recently, Chen et al. [24] introduced embedding-based metrics to evaluate the stability of prompt outputs, proposing semantic stability as a measure of consistency across repeated executions. Their work highlights the importance of embedding representations in prompt evaluation and has inspired further research on semantic criteria for prompt and feedback selection.

In this context, we explore the use of embedding-based selection criteria for choosing feedback candidates that are close to a robust central representation, aiming to address the limitations of stochastic selection methods in prompt optimization.

2.4. Prompt-Evaluation Metrics

The evaluation of prompt quality is typically based on the outputs produced by the model. For open-ended or text generation tasks, traditional metrics such as BLEU and ROUGE are widely used to assess prompt effectiveness by quantifying the n-gram overlap between generated outputs and reference answers [7,25]. In recent years, embedding-based metrics have also been explored to capture deeper semantic relationships between outputs and references, leveraging pretrained models such as Sentence-BERT [24,26,27].

However, for binary classification and fact-checking tasks, as addressed in this work, the key evaluation criterion is the degree of agreement between model predictions and human judgments. While accuracy provides a straightforward measure of exact matches, Cohen’s kappa offers a more robust assessment by quantifying the inter-rater reliability between the model and human annotators, correcting for chance agreement. This metric is particularly valued for its ability to capture the consistency between model outputs and human annotations beyond random alignment, and has become standard practice in recent prompt evaluation and large language model alignment studies [15,23,28].

In this study, we primarily use accuracy and Cohen’s kappa to evaluate prompt effectiveness, focusing on the similarity and reliability of model predictions relative to human standards.

3. Methodology

This section presents a prompt-optimization method based on text-derived gradients. Our approach builds on the ProTeGi framework, which interprets feedback generated by LLMs as gradient signals for iterative prompt refinement. We further improve efficiency and robustness by leveraging the geometric structure of the embedding space.

Conventional text-gradient optimization methods often rely on stochastic candidate selection, which can introduce significant variance into the optimization process. To address this issue, we embed all generated gradient candidates and select the feedback closest to a robust (outlier-filtered) centroid of the embedding distribution. This deterministic selection improves stability and reduces the number of candidates that must be evaluated.

3.1. Background: Textual Gradient Optimization

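Prompt optimization can be formulated as an iterative process in which an LLM generates natural language feedback, or textual gradients, to guide prompt revision [9,22]. Given a current prompt $p_t$ and a minibatch of queries $\mathcal{B}_t = \{(x_j, y_j)\}_{j=1}^{b}$, the model produces predictions $\hat{y}_j = \mathrm{LLM}(p_t, x_j)$ that are compared against the ground truth labels $y_j$. An LLM is then prompted to produce feedback $\{g_1, \dots, g_m\}$ describing how $p_t$ should be improved:

$$\{g_1, \dots, g_m\} = \mathrm{LLM}\big(\mathrm{feedback}(p_t, \{(x_j, y_j, \hat{y}_j)\}_{j=1}^{b})\big)$$

Each gradient $g_i$ is applied to the current prompt to create a candidate update:

$$p'_i = \mathrm{LLMUpdate}(p_t, g_i), \qquad i = 1, \dots, m,$$

where the feedback and LLMUpdate templates are provided in Appendix A.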
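To make the loop in Section 3.1 concrete, the following is a minimal Python sketch of one textual-gradient step. It assumes, as in ProTeGi, that only the misclassified minibatch examples are passed to the feedback prompt, and that a step with no errors produces no candidates. `predict`, `feedback`, and `update` are hypothetical callables standing in for the LLM calls and the Appendix A templates; they are not an actual API.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Example:
    text: str
    label: str


def textual_gradient_step(
    prompt: str,
    minibatch: Sequence[Example],
    predict: Callable[[str, str], str],                            # hypothetical: LLM(p_t, x_j)
    feedback: Callable[[str, List[Tuple[Example, str]]], List[str]],  # hypothetical feedback call
    update: Callable[[str, str], str],                             # hypothetical LLMUpdate(p_t, g_i)
) -> List[str]:
    """One ProTeGi-style step: predict, collect errors, obtain gradients, apply each one."""
    # Compare each prediction with its ground-truth label and keep the mistakes.
    errors = []
    for ex in minibatch:
        pred = predict(prompt, ex.text)
        if pred != ex.label:
            errors.append((ex, pred))
    if not errors:
        return []  # assumption: nothing to correct on this minibatch
    # The feedback LLM describes how the prompt should change (the textual gradients).
    gradients = feedback(prompt, errors)
    # Each gradient is applied separately, yielding one candidate prompt per gradient.
    return [update(prompt, g) for g in gradients]


# Toy stand-ins for the LLM calls (hypothetical, for illustration only).
batch = [Example("a relevant passage", "Yes"), Example("an implicit match", "Yes")]
candidates = textual_gradient_step(
    "Is the passage relevant?",
    batch,
    predict=lambda p, x: "Yes" if "relevant" in x else "No",
    feedback=lambda p, errs: [f"The prompt misses {len(errs)} implicit case(s)."],
    update=lambda p, g: p + " Consider implicit relevance.",
)
print(candidates)
```

Passing every candidate back, rather than choosing one here, leaves selection to a separate step, which is where the center-aware criterion of Section 3.2 applies.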

3.2. ProTeGi-EMB: ProTeGi with Embedding-Based Selection

We propose ProTeGi-EMB, an extension of ProTeGi that employs center-aware, embedding-based gradient selection for prompt optimization. The original framework generates multiple textual gradients and evaluates each one by applying it to revise the prompt, which incurs high computational cost and introduces instability. Our method instead leverages the geometric structure of the embedding space for more efficient and consistent selection.

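Given textual gradients $\{g_1, \dots, g_m\}$, we embed each gradient using a sentence-embedding model $E$, such as text-embedding-3-small:

$$e_i = E(g_i), \qquad i = 1, \dots, m.$$

We compute the mean center:

$$c = \frac{1}{m} \sum_{i=1}^{m} e_i.$$

To reduce the influence of outliers, we define a robust center by averaging only the top $\alpha$ fraction of vectors most similar to $c$, where similarity is measured by cosine similarity $\cos(u, v) = \frac{u^\top v}{\lVert u \rVert \, \lVert v \rVert}$. Letting $S_\alpha \subseteq \{1, \dots, m\}$ denote the indices of these vectors,

$$c_{\text{rob}} = \frac{1}{|S_\alpha|} \sum_{i \in S_\alpha} e_i.$$

We then select the gradient closest to $c_{\text{rob}}$:

$$g^\ast = g_{i^\ast}, \qquad i^\ast = \arg\max_{i \in \{1, \dots, m\}} \cos(e_i, c_{\text{rob}}).$$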

This selection favors the gradient that reflects the most semantically central and generalizable direction, while avoiding overly specific or noisy feedback.
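The center-aware selection above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' code. It assumes the embeddings $e_i$ have already been computed (for example, with text-embedding-3-small), rounds the kept fraction $\alpha m$ up to an integer, and uses toy 2-D vectors in place of real embeddings. `robust_center` and `rank_by_center` are hypothetical helper names.

```python
import numpy as np


def _unit(x: np.ndarray) -> np.ndarray:
    """L2-normalise rows (or a single vector) so dot products equal cosine similarity."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.clip(norms, 1e-12, None)


def robust_center(emb: np.ndarray, alpha: float) -> np.ndarray:
    """Mean of the top-alpha fraction of embeddings most cosine-similar to the plain mean."""
    c = emb.mean(axis=0)                               # mean center c
    sims = _unit(emb) @ _unit(c)                       # cos(e_i, c) for every gradient
    n_keep = max(1, int(np.ceil(alpha * len(emb))))    # assumption: round alpha*m up
    keep = np.argsort(-sims, kind="stable")[:n_keep]   # index set S_alpha
    return emb[keep].mean(axis=0)                      # robust center c_rob


def rank_by_center(emb: np.ndarray, alpha: float) -> np.ndarray:
    """Gradient indices sorted by cosine similarity to the robust center, closest first."""
    sims = _unit(emb) @ _unit(robust_center(emb, alpha))
    return np.argsort(-sims, kind="stable")


emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.0, 1.0]])  # last row is an outlier
print(rank_by_center(emb, alpha=1.0))   # no filtering (plain mean): [2 1 0 3]
print(rank_by_center(emb, alpha=0.75))  # outlier excluded: [1 0 2 3]
```

In this toy example, the outlier `[0, 1]` drags the plain mean toward the third vector, which would be selected with $\alpha = 1$. With $\alpha = 0.75$, the outlier is excluded from the center and the second vector is ranked first; $g^\ast$ is the first index of the returned ranking.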

3.3. Full Procedure: Center-Aware Prompt Optimization

We summarize our full optimization pipeline in Algorithm 1, which iteratively refines the prompt by selecting textual gradients based on center-aware criteria. In each iteration, multiple feedback signals are generated from the LLM and embedded into a semantic space. A robust center is then computed from the top-$\alpha$ most central gradients, and the $K$ gradients closest to this center are each used to update the prompt separately. Here, $K$ is a tunable parameter: setting $K = 1$ applies a single update per iteration, enabling faster optimization with lower computational cost, while increasing $K$ explores multiple promising updates in parallel, thereby increasing the likelihood of selecting a higher-performing prompt. The updated prompts from these $K$ candidates are evaluated on a held-out set, and the best-performing one is selected for the next iteration. This process is repeated for a predetermined number of iterations or until convergence.

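Algorithm 1 Center-Aware Textual Gradient Optimization (ProTeGi-EMB, Top-K Extension)

Require: Initial prompt $p_0$, training data $\mathcal{D}$, embedding model $E$, top percentile $\alpha$, number of candidates $K$
Ensure: Optimized prompt $p_T$
1: for $t = 0$ to $T - 1$ do
2:   Sample minibatch $\mathcal{B}_t \subset \mathcal{D}$
3:   Use LLM to generate gradients $\{g_1, \dots, g_m\}$ from $p_t$ and $\mathcal{B}_t$
4:   Compute embeddings: $e_i = E(g_i)$ for all $i$
5:   Compute mean center: $c = \frac{1}{m} \sum_{i=1}^{m} e_i$
6:   Select top-$\alpha$ indices $S_\alpha$ based on cosine similarity to $c$
7:   Compute robust center: $c_{\text{rob}} = \frac{1}{|S_\alpha|} \sum_{i \in S_\alpha} e_i$
8:   Select $K$ gradients closest to $c_{\text{rob}}$: $\{g_{(1)}, \dots, g_{(K)}\}$
9:   For each $g_{(k)}$, generate updated prompt $p'_k = \mathrm{LLMUpdate}(p_t, g_{(k)})$
10:  Evaluate each $p'_k$ on a validation set and select the best-performing one as $p_{t+1}$
11: end for
12: return $p_T$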
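Algorithm 1 can be sketched end to end as follows. This is a hedged illustration, not the authors' implementation. The LLM and embedding calls are passed in as hypothetical callables (`generate_gradients`, `embed`, `update`, `score`). The defaults for `alpha`, `batch_size`, and `seed` are placeholders and are not the paper's settings. As in step 10, the best of the $K$ candidates replaces the current prompt without being compared against it.

```python
import random
from typing import Callable, List, Sequence

import numpy as np


def _cosine(mat: np.ndarray, vec: np.ndarray) -> np.ndarray:
    """Cosine similarity between every row of `mat` and `vec`."""
    return (mat @ vec) / (np.linalg.norm(mat, axis=1) * np.linalg.norm(vec) + 1e-12)


def center_aware_optimize(
    prompt: str,
    train: Sequence,
    generate_gradients: Callable[[str, Sequence], List[str]],  # hypothetical LLM feedback call
    embed: Callable[[List[str]], np.ndarray],                  # hypothetical embedding call
    update: Callable[[str, str], str],                         # hypothetical LLMUpdate call
    score: Callable[[str], float],                             # validation metric, e.g. Kappa
    alpha: float = 0.8,    # placeholder (the Figure 1 case study keeps the top 80%)
    k: int = 1,
    iterations: int = 3,
    batch_size: int = 32,  # placeholder
    seed: int = 0,
) -> str:
    rng = random.Random(seed)
    for _ in range(iterations):
        batch = rng.sample(list(train), min(batch_size, len(train)))       # step 2
        grads = generate_gradients(prompt, batch)                          # step 3
        if not grads:
            continue  # no feedback this round; keep the current prompt
        emb = np.asarray(embed(grads), dtype=float)                        # step 4
        center = emb.mean(axis=0)                                          # step 5
        n_keep = max(1, int(np.ceil(alpha * len(grads))))
        top = np.argsort(-_cosine(emb, center), kind="stable")[:n_keep]    # step 6
        robust = emb[top].mean(axis=0)                                     # step 7
        chosen = np.argsort(-_cosine(emb, robust), kind="stable")[:k]      # step 8
        candidates = [update(prompt, grads[i]) for i in chosen]            # step 9
        prompt = max(candidates, key=score)                                # step 10
    return prompt


# Toy run with stand-in callables (all hypothetical).
vecs = {"g_a": [1.0, 0.0], "g_b": [0.9, 0.1], "g_c": [0.8, 0.2], "g_out": [0.0, 1.0]}
best = center_aware_optimize(
    "P",
    list(range(10)),
    generate_gradients=lambda p, b: list(vecs),
    embed=lambda gs: np.array([vecs[g] for g in gs]),
    update=lambda p, g: f"{p} + {g}",
    score=len,
    alpha=0.75,
    iterations=1,
)
print(best)  # P + g_b
```

With `k=1`, `score` is called on a single candidate, so validation cost per iteration is one prompt evaluation. Larger `k` trades more evaluations for a better chance of catching the best gradient.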

4. Experimental Setup

To empirically evaluate the effectiveness of our center-based textual gradient selection strategy, we conducted a series of controlled experiments across diverse tasks and model configurations. In this section, we describe the language models used, the datasets and task formulations, the evaluation metrics, and the baseline methods used for comparison.

4.1. LLMs and Dataset

LLMs. We employed two large language models (LLMs) to evaluate our approach: GPT-4o and GPT-4o-mini. These represent different capacity configurations of OpenAI’s GPT-4 family. Both models were accessed via API, and decoding parameters were held constant throughout our experiments; in particular, the temperature was set to 0.0 to make outputs as deterministic as possible.

Datasets. We conducted experiments across three publicly available datasets, selected to cover diverse task types: information retrieval, fact-checking, and hate speech detection.

TREC DL 2019 (Passage Ranking)¹: This dataset provides predefined train and test splits, each with a sufficient number of samples. For our experiments, we randomly sampled from the training split for each experimental run. The task was formulated as a binary relevance classification problem, where top-judged passages are labeled as relevant and others as non-relevant.

LIAR²: This political fact-checking dataset includes separate train and test splits with ample data. We converted the original multi-class veracity labels into binary categories: True and False. For each experiment, the training set was randomly sampled, and evaluation was conducted on the test split.

ETHOS³: This hate speech detection dataset does not provide predefined splits, so we randomly divided the data into training and test sets prior to experimentation. Each example was labeled as either hate or non-hate speech.

For all datasets, evaluation was conducted on a fixed set of 500 test instances per dataset, randomly sampled once from the test split (or from the custom test partition for ETHOS) and held constant for all experiments, following [29]. In each experimental run, prompts were optimized using a randomly sampled subset of the training data. All experiments were repeated three times with different random samples, and we report the average results across runs to ensure robustness. The selected datasets cover a spectrum of tasks, from objective fact-based problems to more subjective and socially sensitive issues, enabling a comprehensive evaluation of the proposed method’s applicability and robustness across diverse domains. The datasets are summarized in Table 1.
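The evaluation protocol above can be sketched as follows: one fixed 500-instance test set per dataset, plus a fresh training sample for each of three runs. The seeds, the training-subset size, and the helper names are hypothetical, since the source does not specify them. For ETHOS, `pool` would be the custom test partition rather than an official split.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def fixed_test_set(pool: Sequence[T], n: int = 500, seed: int = 0) -> List[T]:
    """Sample the evaluation set once; a fixed seed keeps it identical across all experiments."""
    return random.Random(seed).sample(list(pool), n)


def training_samples(train: Sequence[T], size: int, runs: int = 3, base_seed: int = 1) -> List[List[T]]:
    """Draw a different random training subset for each repeated run (one seed per run)."""
    return [random.Random(base_seed + r).sample(list(train), size) for r in range(runs)]


test_set = fixed_test_set(range(2000))
train_runs = training_samples(range(100), size=20)
print(len(test_set), [len(r) for r in train_runs])
```

Metrics from the three runs would then be averaged, for example with `statistics.mean`.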

4.2. Evaluation Metrics

To evaluate the effectiveness of the proposed method, we measure how closely the model-generated labels align with human-annotated ground truth. As our primary evaluation metric, we used Cohen’s kappa ($\kappa$), a chance-corrected measure of inter-rater agreement. Unlike simple accuracy, Cohen’s kappa accounts for agreement that may occur by chance, making it a more reliable metric in settings such as ours, where label distributions may be imbalanced or subjective interpretation is involved. Moreover, Cohen’s kappa has been widely adopted in recent studies evaluating the alignment between large language models and human annotations. Cohen’s kappa is defined as follows:

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ is the observed agreement between the model and human labels, and $p_e$ is the expected agreement by chance.

In addition to $\kappa$, we report accuracy, which captures the overall correctness of the predictions, to provide a more complete picture of predictive performance.
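Both metrics can be computed directly from the two label sequences, as in the sketch below. It implements the standard formula with $p_e$ taken as the sum over labels of the product of the two raters’ marginal frequencies. The labels in the example are made up for illustration, and `sklearn.metrics.cohen_kappa_score` computes the same statistic. Returning NaN when $p_e = 1$ is a choice made here for the undefined case.

```python
from collections import Counter
from typing import Hashable, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of predictions that exactly match the human label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cohens_kappa(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Cohen's kappa = (p_o - p_e) / (1 - p_e) for two equal-length label sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)  # observed agreement
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    # Chance agreement: both "raters" independently choose the same label.
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if p_e == 1.0:
        return float("nan")  # undefined (0/0): both sides always use one identical label
    return (p_o - p_e) / (1 - p_e)


human = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
model = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(accuracy(human, model))                # 0.8
print(round(cohens_kappa(human, model), 3))  # 0.583
```

Here, 80% raw agreement shrinks to $\kappa \approx 0.58$ because a model matching the 40/60 label split at random would already agree 52% of the time.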

4.3. Baseline Comparison

Comparison Models. To evaluate the effectiveness of our proposed method, we compare it against three representative prompt-optimization frameworks: APE [7], OPRO [30], and ProTeGi [9]. APE (Automatic Prompt Engineering) paraphrases the initial prompt in multiple ways using an LLM and selects the best-performing candidate based on model feedback. OPRO (Optimization by PROmpting) iteratively updates prompts by following an optimization trajectory guided by the LLM’s responses, enabling progressive refinement over multiple steps. The original ProTeGi (Prompt Optimization with Textual Gradients) generates natural language gradients and applies beam search with bandit selection to identify improved prompt candidates. This framework serves as the foundation for our proposed approach.

Our method, termed ProTeGi-EMB, improves upon ProTeGi by replacing its stochastic candidate selection process with a deterministic strategy based on semantic centrality. Specifically, all feedback signals are embedded into a semantic space, and the textual gradient closest to a robust semantic center is selected, enabling more consistent and interpretable prompt edits.

For a fair comparison, all methods were run for up to three optimization iterations, and up to three prompt candidates were generated at each iteration.

Initial Prompt: For the initial prompts, we adopted task instructions that were either manually written or taken directly from the respective baseline implementations. Specifically, we used the original prompts provided in APE, OPRO, and ProTeGi, each designed to elicit binary classification behavior for the given task. These prompts serve as unmodified baselines and reflect realistic starting points for optimization.

Each initial prompt was evaluated independently, and results are reported as averages across all prompt types. A complete list of the initial prompts used in our experiments is provided in Table 2 and Appendix B.

Parameter Settings: For all baseline methods, we followed the default hyperparameters of their respective implementations. For our proposed ProTeGi-EMB, unless otherwise specified, we set the robust center threshold to and the top-K parameter to throughout all experiments.

5. Experimental Results

We evaluate the effectiveness of our proposed method through both qualitative and quantitative analyses. Our experiments are designed to answer two key questions: (1) Can semantic proximity to the embedding center serve as a reliable signal for selecting high-quality textual gradients? (2) How does our method compare to existing prompt-optimization baselines in terms of task performance?

The analysis begins with a case study and a statistical analysis to assess whether gradients closer to the center of the embedding space tend to produce better prompts. We then present comparative results across three datasets against several state-of-the-art baselines.

5.1. Effectiveness of Center-Based Selection: Case Study and Statistical Analysis

We begin our analysis with a case study using the TREC DL 2019 dataset to examine the distribution and quality of textual gradients in the embedding space.

Figure 1 shows a t-SNE visualization of the embedding vectors for all candidate gradients. Each blue point represents a single gradient, while the red X indicates the arithmetic mean of all embeddings (hereafter referred to as the center). The orange star denotes the robust center, computed as the mean of the top 80% of embeddings that are closest to the original center in cosine similarity.

The visualization reveals that while most gradients cluster around the center, several outliers lie far from the main semantic mass. This indicates that the arithmetic mean is susceptible to distortion from semantically irrelevant or noisy gradients, which limits its reliability as a central reference point. In contrast, the robust center excludes these outliers and offers a more stable and representative reference.

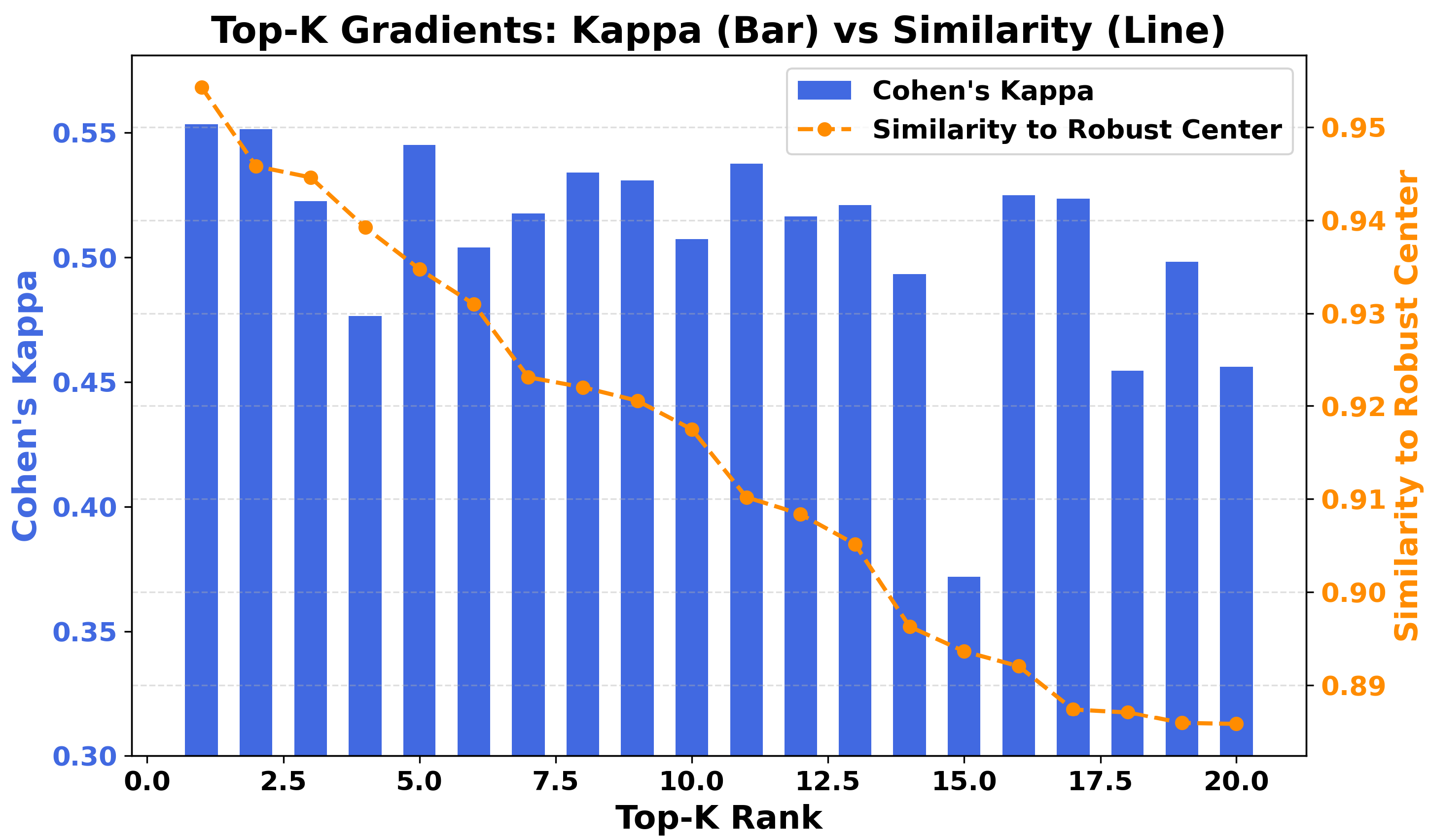

To evaluate whether proximity to this robust center correlates with better prompt quality, we examine the task performance resulting from each gradient-modified prompt. Specifically, we apply each gradient to the original prompt and measure its effectiveness using Cohen’s Kappa. As shown in Figure 2, the left y-axis (bars) indicates Kappa scores, while the right y-axis (line) represents cosine similarity to the robust center for the top 20 closest gradients. Although the highest-performing gradient appears at Top-1 in many runs, approximately 30% of the cases deviate from this pattern. The overall trend, however, is clear: as similarity decreases, performance tends to decline. Thus, semantic closeness to the robust center should be interpreted as increasing the probability of selecting a high-quality gradient, rather than guaranteeing the optimal one.

To generalize this observation beyond a single case, we conduct a broader statistical analysis across three datasets: TREC DL 2019, LIAR, and ETHOS. We perform 20 independent runs per dataset, totaling 60 experiments. In each run, all gradients are ranked by their similarity to the robust center, and we record the rank position of the highest Kappa score.

Figure 3 illustrates the cumulative probability of identifying the top-performing gradient within the top-$K$ candidates. Compared to a random ranking baseline, our method demonstrates clear advantages: in over 70% of runs, the optimal gradient is found within the top four ranks. This reinforces the probabilistic nature of our approach: semantic proximity to the center increases the odds of optimal selection but does not guarantee it.
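The statistic plotted in Figure 3 can be computed as sketched below. The sketch rests on three assumptions. First, each run yields the Kappa of every gradient-modified prompt, listed in order of similarity to the robust center. Second, every run has the same number of gradients $m$, so a uniformly random ordering places the best gradient within the top $K$ with probability $K/m$. Third, ties go to the gradient closer to the center. The Kappa values in the example are made up for illustration and are not the paper’s data.

```python
import numpy as np


def best_rank(kappas_by_center_rank) -> int:
    """1-based rank (closest-to-center first) of the highest-Kappa gradient; ties go to the closer one."""
    return int(np.argmax(kappas_by_center_rank)) + 1


def cumulative_hit_rate(best_ranks, max_k: int) -> np.ndarray:
    """Fraction of runs whose best gradient lies within the top-K, for K = 1..max_k."""
    ranks = np.asarray(best_ranks)
    return np.array([(ranks <= k).mean() for k in range(1, max_k + 1)])


def random_baseline(num_gradients: int, max_k: int) -> np.ndarray:
    """Hit rate of a uniformly random ordering: P(best within top-K) = K / m."""
    return np.minimum(np.arange(1, max_k + 1) / num_gradients, 1.0)


# Hypothetical Kappa scores for three runs, each ordered by similarity to the robust center.
runs = [
    [0.52, 0.49, 0.47, 0.30, 0.28],
    [0.41, 0.45, 0.40, 0.33, 0.31],
    [0.38, 0.36, 0.35, 0.34, 0.44],
]
ranks = [best_rank(r) for r in runs]  # [1, 2, 5]
print(cumulative_hit_rate(ranks, max_k=4))
print(random_baseline(num_gradients=5, max_k=4))
```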

5.2. Performance Comparison of Different Methods

Table 3 summarizes the performance of several prompt-optimization methods across three datasets—TREC DL 2019, LIAR, and ETHOS—using two large language models: GPT-4o and GPT-4o-mini. For each dataset, the results are averaged over three runs, where the training data is randomly sampled for each run. Evaluations are based on Cohen’s Kappa and Accuracy.

Across all datasets and model configurations, ProTeGi consistently surpasses APE and OPRO. For instance, on the LIAR dataset with GPT-4o, it achieves a Kappa of 0.457, outperforming APE (0.421) and OPRO (0.371). This demonstrates that the text gradient framework captures more task-relevant prompt refinements. Similar gains are observed on the ETHOS dataset, where ProTeGi achieves a Kappa of 0.429—higher than APE (0.393) and OPRO (0.381).

Our proposed variant, ProTeGi-EMB, enhances performance by introducing semantic filtering through center-based embedding selection. It achieves the best results across all experimental settings. For instance, on the TREC DL 2019 dataset with GPT-4o-mini, ProTeGi-EMB reaches a Kappa of 0.534 and Accuracy of 0.832—markedly higher than the vanilla ProTeGi baseline (Kappa 0.393; Accuracy 0.712). Likewise, on the LIAR dataset with GPT-4o, it attains the highest Kappa of 0.485 and Accuracy of 0.750, surpassing the next best method.

The performance gap between GPT-4o and GPT-4o-mini varies across datasets. On LIAR and ETHOS—tasks that require background knowledge and nuanced reasoning—GPT-4o demonstrates a clear advantage. For instance, in LIAR, ProTeGi-EMB achieves a Kappa of 0.485 with GPT-4o, compared to 0.448 with GPT-4o-mini. On ETHOS, the same method yields 0.448 and 0.362, respectively. These results suggest that larger models can more effectively leverage domain-specific and common-sense knowledge encoded in their parameters.

In contrast, on the TREC DL 2019 dataset, which involves passage-level binary relevance classification based primarily on lexical features, GPT-4o-mini performs surprisingly well. ProTeGi-EMB with GPT-4o-mini achieves a Kappa of 0.534, exceeding GPT-4o’s result of 0.525. This suggests that for tasks centered on surface-level lexical matching, smaller models may be sufficient and can even be preferable.

Initial prompts also perform reasonably well, particularly with GPT-4o. For instance, the baseline prompt yields a Kappa of 0.481 and Accuracy of 0.760 on TREC DL 2019, and a Kappa of 0.362 and Accuracy of 0.716 on ETHOS. Nevertheless, the improvements achieved by ProTeGi-EMB—up to 0.067 in Kappa and 0.105 in Accuracy—highlight the effectiveness of structured prompt optimization beyond scaling model size alone.

Taken together, these findings support the two key contributions of this work. First, textual gradients offer a robust framework for prompt optimization. Second, embedding-based selection significantly enhances this process by reliably identifying semantically relevant candidates.

5.3. Qualitative Analysis of Gradient Selection and Prompt Revision

To better understand how gradient selection impacts final prompt quality, we conducted a qualitative comparison between ProTeGi and our proposed ProTeGi-EMB.

Table 4 presents a representative example from the LIAR dataset, including the initial prompt, the selected textual gradients, and the resulting prompt edits for each method.

Although both methods begin with the same initial prompt, they select different gradients, resulting in distinct modifications. The gradient selected by ProTeGi focuses narrowly on a specific misinterpretation concerning a claim about ethics reform. It critiques the model’s failure to distinguish between “direct” and “indirect” involvement, leading to a factual inaccuracy regarding the speaker’s exclusivity. While this feedback is precise, it is highly context-dependent and lacks generalizability. Consequently, the revised prompt emphasizes “specific wording” and “factual accuracy,” which may be helpful in similar instances but lacks robustness across more diverse inputs.

In contrast, ProTeGi-EMB selects a gradient that is semantically closer to the robust center in the embedding space. This gradient highlights a broader reasoning flaw: GPT’s tendency to interpret statements too literally while overlooking nuance, ambiguity, and broader context. The revised prompt addresses this issue directly by instructing the model to consider “all implications and nuances” and to “account for broader interpretations.” This type of generalized guidance is more likely to improve model behavior across a wider range of examples.

This difference originates from the underlying selection mechanisms. ProTeGi generates multiple textual gradients but evaluates only a subset due to computational constraints. Beam search followed by bandit selection introduces randomness, and the final outcome can be influenced by sampling variance or noise in early evaluations. As a result, the selected gradient may not fully represent the overall distribution of feedback derived from the training data.

In contrast, ProTeGi-EMB embeds all candidate gradients and selects the one closest to the robust semantic center. Because this center is computed from the full gradient set after excluding outliers, the strategy better captures the consensus of the training data and avoids the stochastic bias introduced by selective sampling. This deterministic, globally informed process enables the model to identify gradients that are both effective and representative, leading to more reliable prompt optimization.

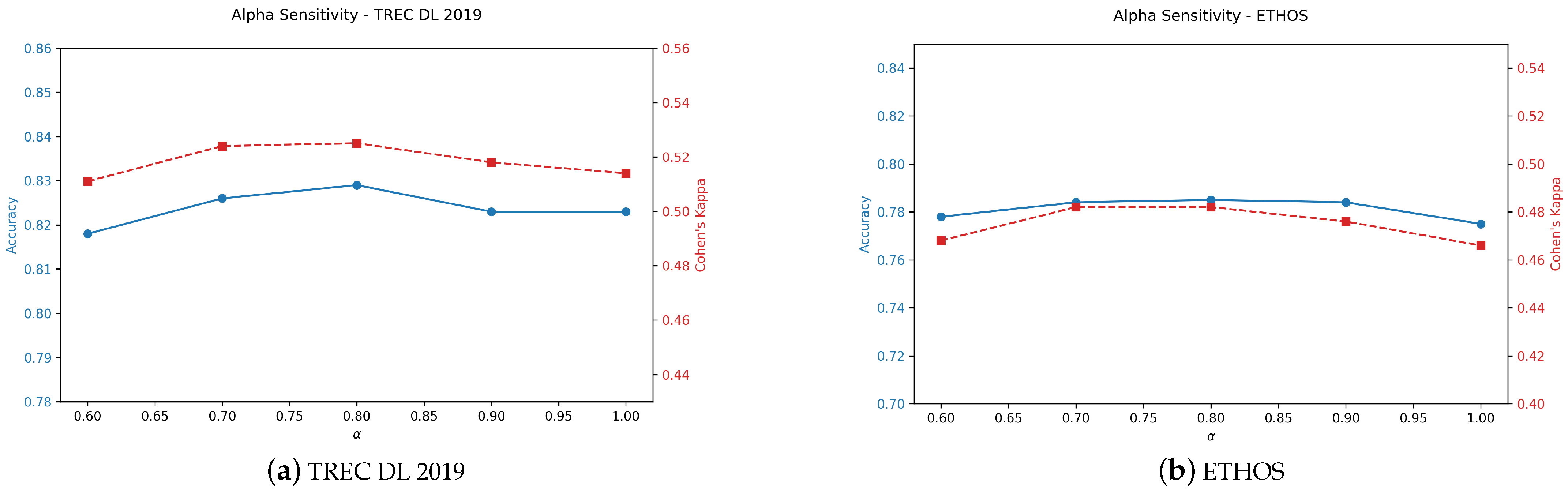

5.4. Alpha Sensitivity

To evaluate the robustness of our method with respect to the confidence threshold $\alpha$, we conducted experiments with different values of $\alpha$. Figure 4a,b present the performance variation for the TREC DL 2019 and ETHOS datasets, respectively, reporting both Accuracy and Cohen’s Kappa scores. All experiments in this section were conducted with a fixed Top-$K$ setting.

The results show that performance remains relatively stable across the full range of $\alpha$ values. Although some settings of $\alpha$ tend to produce slightly higher scores, the remaining settings yield comparable performance, indicating that the method is not overly sensitive to the specific choice of $\alpha$. The small fluctuations across settings reflect realistic variation without undermining the overall robustness of the approach.

6. Discussion

6.1. Efficient Prompt Optimization Under Resource Constraints

One of the key practical advantages of our approach lies in its efficiency when operating under limited computational or API-access budgets. Existing prompt-optimization frameworks such as APE, OPRO, and ProTeGi typically generate multiple candidates to evaluate and select among them. This process, while effective, can be costly—each candidate must be evaluated through multiple LLM API calls across several rounds.

In contrast, our method enables more efficient candidate selection by leveraging semantic embedding proximity. Since all gradient candidates are embedded once and ranked by their distance to the robust center, we can limit evaluation to only the top-$k$ candidates. As shown in Figure 3, the best-performing gradient is among the top four ranked candidates in over 70% of cases. Evaluating only this short list, or even only the single top-ranked candidate, can therefore yield competitive performance while significantly reducing the number of required API calls. Further details on the resource and complexity analysis are provided in Appendix C.
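The budget-saving step can be sketched as follows. The sketch assumes gradient embeddings are available as a NumPy array and that `evaluate` is a hypothetical callback that scores one candidate, for example by running the revised prompt on a small evaluation batch. Only the `k` candidates ranked closest to the center are scored, so the number of evaluations grows with `k` rather than with the size of the candidate pool. The mean-based center is again a simplifying assumption, and both function names are hypothetical.

```python
import numpy as np
from typing import Callable, List, Sequence, Tuple

def shortlist_top_k(embeddings: np.ndarray, k: int) -> List[int]:
    """Indices of the k candidates with highest cosine similarity to the mean center."""
    unit = embeddings / np.clip(np.linalg.norm(embeddings, axis=1, keepdims=True), 1e-12, None)
    center = unit.mean(axis=0)
    center = center / max(np.linalg.norm(center), 1e-12)
    sims = unit @ center
    # Sort by descending similarity and keep the first k indices.
    return [int(i) for i in np.argsort(-sims)[:k]]

def select_with_budget(candidates: Sequence[str], embeddings: np.ndarray,
                       evaluate: Callable[[str], float], k: int = 4) -> Tuple[str, int]:
    """Score only the top-k shortlisted candidates; return the best one and the call count."""
    best, best_score, n_evals = None, float("-inf"), 0
    for i in shortlist_top_k(embeddings, k):
        score = evaluate(candidates[i])  # hypothetical scorer, e.g. accuracy on a dev batch
        n_evals += 1
        if score > best_score:
            best, best_score = candidates[i], score
    return best, n_evals
```

With `k=1` the loop reduces to evaluating only the top-ranked candidate. This trades some chance of missing the best gradient for the smallest possible number of calls.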

This property makes our method particularly well-suited for real-world applications where cost, speed, or access limitations constrain the number of prompt variants that can be tested. The ability to achieve near-optimal performance with minimal model interaction is a substantial practical benefit, especially in large-scale or time-sensitive deployments.

6.2. Robustness and Sensitivity

Our results show that semantic proximity to the embedding center provides a strong signal for selecting high-quality textual gradients. By choosing the gradient nearest to a robust center, ProTeGi-EMB captures generalizable reasoning patterns and improves prompt performance across datasets, echoing prototype-based learning where instances closer to the class center tend to be more stable [31].

At the same time, the effectiveness of center-based selection depends on both model and dataset characteristics. On datasets dominated by lexical matching (e.g., TREC DL 2019), smaller models occasionally match or exceed larger ones, while on reasoning-intensive or subjective datasets (e.g., LIAR, ETHOS), larger models consistently perform better. This indicates that center-based selection is task-invariant in principle, but its success in practice reflects how well the underlying LLM captures semantic nuances and how closely the task aligns with the embedding space.

Bias also plays a role: LIAR gradients may cluster by political leaning, and ETHOS reflects annotator subjectivity. Moreover, LLMs inherit priors from their pretraining data, which can shape gradient generation and selection. These factors highlight that part of the observed gains may arise from dataset- or model-specific biases rather than universally generalizable reasoning.

Overall, ProTeGi-EMB consistently extracts strong gradients, while remaining sensitive to model choice, dataset structure, and inherent biases in both data and models. Despite these sensitivities, our results confirm that embedding-based center selection provides a broadly effective and reliable foundation for prompt optimization.

6.3. Isotropy of Gradient Embeddings

Beyond dataset- and model-level effects, our analysis highlights a structural property of the gradient embedding space itself. Previous studies have noted that generic sentence embedding spaces often suffer from anisotropy, where most representations collapse into a few dominant directions. In contrast, our experiments suggest that textual gradient embeddings exhibit a more isotropic distribution. As illustrated in Figure 1, the gradients are spread relatively evenly around the center, with no strong directional bias. The robust center lies close to the mean embedding, and the top-$k$ gradients form a symmetric cluster.
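One common way to check for the anisotropy discussed above is to compute the mean pairwise cosine similarity of the embeddings and the share of variance captured by the first principal component. High values of both indicate that the vectors collapse toward a few directions. The sketch below is an illustrative diagnostic under these assumptions and is not necessarily the analysis behind Figure 1. The function name is hypothetical.

```python
import numpy as np

def isotropy_diagnostics(embeddings: np.ndarray) -> tuple:
    """Return (mean pairwise cosine similarity, variance share of the top principal component)."""
    n = embeddings.shape[0]
    if n < 2:
        raise ValueError("need at least two embeddings")
    unit = embeddings / np.clip(np.linalg.norm(embeddings, axis=1, keepdims=True), 1e-12, None)
    # Average cosine similarity over all ordered pairs i != j (diagonal excluded).
    sim = unit @ unit.T
    mean_cos = (sim.sum() - np.trace(sim)) / (n * (n - 1))
    # Fraction of total variance explained by the first principal component;
    # singular values from np.linalg.svd are returned in descending order.
    centered = unit - unit.mean(axis=0)
    var = np.linalg.svd(centered, compute_uv=False) ** 2
    top1_share = var[0] / var.sum() if var.sum() > 0 else 1.0
    return float(mean_cos), float(top1_share)
```

For high-dimensional embeddings, a mean pairwise cosine near zero and a small top-component share would be consistent with the relatively even spread described above. A mean cosine close to one would indicate the anisotropy typical of raw sentence embeddings.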

This isotropy is likely linked to the generative nature of textual gradients. Because each gradient is produced as a generalized reasoning instruction in response to prediction errors, the resulting embeddings are semantically diverse yet aligned toward a shared objective. As a result, embedding-based similarity measures such as cosine similarity become more reliable in this space compared to raw sentence embeddings, which are more prone to anisotropy.

These observations indicate that the robustness of ProTeGi-EMB does not arise solely from center-based selection, but also from the structural advantages of the gradient embedding space itself. Leveraging this isotropy enhances both the stability and interpretability of our method, providing an additional explanation for the consistent gains observed across tasks.

6.4. Limitations and Future Directions

While ProTeGi-EMB demonstrates strong empirical performance and practical efficiency, several limitations remain.

First, the current framework selects only a single textual gradient: the one closest to the embedding center. This design emphasizes efficiency and interpretability but limits the diversity of feedback signals. As shown in Figure 3, semantic proximity does not always guarantee optimality, and future work may explore top-$k$ aggregation or direct synthesis from the center embedding.

Second, our method currently relies on cosine similarity as the sole metric for measuring semantic proximity. Although widely used and effective, alternative distance measures (e.g., Euclidean, Mahalanobis) may yield different behaviors. A systematic comparison would provide a more complete understanding of the robustness of center-based selection.
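The alternative measures mentioned above can be compared on the same candidate set with a short sketch. It assumes an (n, d) NumPy array of embeddings and a precomputed center vector. Because the number of candidates is often smaller than the embedding dimension, the sample covariance is then singular. The sketch therefore shrinks the covariance toward a scaled identity matrix before inversion, with the `shrinkage` weight as an assumed hyperparameter. The function name is hypothetical.

```python
import numpy as np

def distances_to_center(embeddings: np.ndarray, center: np.ndarray, shrinkage: float = 0.1):
    """Cosine, Euclidean, and (shrinkage-regularized) Mahalanobis distances to a center."""
    # Cosine distance: 1 - cosine similarity (ignores vector magnitude).
    cos_dist = 1.0 - (embeddings @ center) / (
        np.linalg.norm(embeddings, axis=1) * np.linalg.norm(center))
    # Euclidean distance: sensitive to both direction and magnitude.
    diff = embeddings - center
    eucl = np.linalg.norm(diff, axis=1)
    # Mahalanobis distance with a covariance shrunk toward a scaled identity,
    # so that the matrix stays invertible even when n < d.
    cov = np.cov(embeddings, rowvar=False)
    d = cov.shape[0]
    cov_shrunk = (1 - shrinkage) * cov + shrinkage * (np.trace(cov) / d) * np.eye(d)
    inv = np.linalg.inv(cov_shrunk)
    quad = np.einsum("ij,jk,ik->i", diff, inv, diff)
    maha = np.sqrt(np.maximum(quad, 0.0))  # guard against tiny negative round-off
    return cos_dist, eucl, maha
```

Cosine distance ignores magnitude, so a candidate pointing in the same direction as the center but with a larger norm has zero cosine distance yet a nonzero Euclidean distance. Mahalanobis distance additionally rescales each direction by the spread of the candidate pool. The three measures can therefore rank the same candidates differently.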

Finally, the method focuses on selection rather than generation. While this makes it computationally lightweight and interpretable, it lacks the dynamic exploration of new semantic directions enabled by iterative generation frameworks such as OPRO. Combining embedding-based selection with generation-based methods may offer a balanced trade-off between efficiency and adaptability.

Addressing these limitations will help improve the generalizability, robustness, and interpretability of ProTeGi-EMB in future work.

7. Conclusions

In this work, we present ProTeGi-EMB, a center-aware prompt-optimization method that selects textual gradients by identifying the candidate most semantically aligned with the consensus of all feedback signals in embedding space. Unlike prior methods that rely on beam search or bandit-based sampling, our method embeds all candidate gradients and selects the one closest to the center, an embedding-derived representation of the overall feedback signal.

Through comprehensive experiments on three diverse datasets and multiple LLMs, we demonstrated that proximity to the center is a strong indicator of gradient quality. Our proposed method, ProTeGi-EMB, consistently outperforms strong baselines such as APE, OPRO, and the original ProTeGi, while requiring fewer prompt evaluations. This efficiency makes the method especially suitable for resource-constrained settings such as API-limited environments or large-scale batch evaluations.

Qualitative analyses further revealed that our center-based selection strategy tends to favor gradients that are generalizable and aligned with broader reasoning patterns, producing human-readable edits that allow each revision step to be traced if desired. However, a key limitation remains: the reliance on pre-generated gradients, which may constrain exploration and diversity. To address this limitation, future work could investigate synthesizing gradients directly from the embedding center, for instance by leveraging pretrained decoder LMs to generate candidate edits that better reflect the semantic consensus of the pool.

Overall, our findings underscore the effectiveness and generalizability of embedding-based selection. This approach enables more efficient prompt optimization and opens up promising directions in controllable generation and feedback-driven interaction with LLMs.

Author Contributions

Conceptualization, Y.J. and J.C.; methodology, Y.J. and J.C.; formal analysis, Y.J.; investigation, Y.J.; writing, original draft preparation, Y.J.; writing, review and editing, J.C.; supervision, J.C.; project administration, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Hankuk University of Foreign Studies Research Fund (2025).

Data Availability Statement

The data presented in this study are available in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| IR | Information Retrieval |
| NLP | Natural Language Processing |
| TextGrad | Textual Gradient |
| ProTeGi | Prompt Optimization with Textual Gradients |
| ProTeGi-EMB | ProTeGi with Embedding-Based Selection |
| APE | Automatic Prompt Engineer |
| OPRO | Optimization by PROmpting |

Appendix A. Meta Prompts for Textual Gradient Optimization

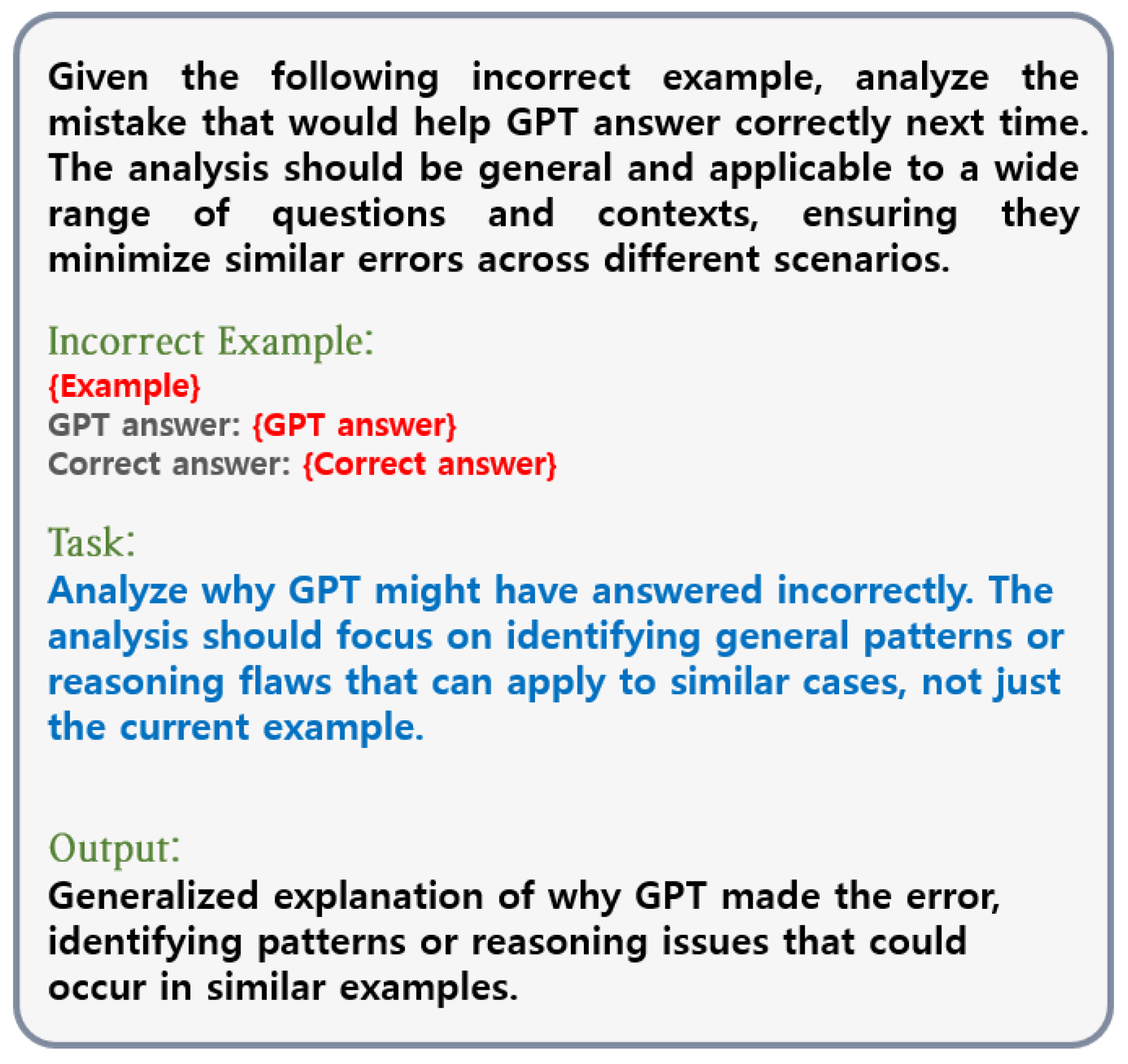

In this appendix, we provide the detailed meta prompts used at different stages of our textual gradient optimization pipeline. Our method involves two key steps requiring LLM intervention: (1) generating textual gradients based on model prediction errors, and (2) updating the current prompt using the selected gradient. For reproducibility and transparency, we include the exact prompts used during each of these stages.

Appendix A.1. Gradient Generation Prompt

Figure A1 shows the meta prompt used to extract textual gradients from the LLM. At each iteration of the optimization process, we sample a batch of training examples and identify instances where the model’s prediction does not match the gold label. For each incorrect case, we construct an input that includes the query, GPT’s incorrect answer, and the correct answer.

The LLM is then asked to analyze the cause of the error. This analysis, referred to as the textual gradient, captures generalized reasoning flaws rather than case-specific details. By focusing on broadly applicable patterns, the feedback serves as a direction for improving the prompt in a way that generalizes across future queries.
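Constructing the per-error inputs described above can be sketched as follows. The template wording, the `Example` record, and the function name are hypothetical placeholders; the exact meta prompt is the one shown in Figure A1. Label comparison is case-insensitive here, which is also an assumption.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Example:
    query: str
    gold: str   # correct answer, e.g. "yes" / "no"
    pred: str   # model's answer under the current prompt

# Hypothetical wording; the actual meta prompt is the one shown in Figure A1.
GRADIENT_TEMPLATE = (
    "Query: {query}\n"
    "Model's answer: {pred}\n"
    "Correct answer: {gold}\n"
    "Explain the general reasoning flaw that caused this error, "
    "in terms that would also apply to similar queries."
)

def build_gradient_requests(batch: List[Example]) -> List[str]:
    """Build one gradient-generation request per misclassified example in the batch."""
    return [
        GRADIENT_TEMPLATE.format(query=ex.query, pred=ex.pred, gold=ex.gold)
        for ex in batch
        # Keep only errors; case-insensitive comparison is an assumed normalization.
        if ex.pred.strip().lower() != ex.gold.strip().lower()
    ]
```

Each returned string would be sent to the LLM once. The resulting analyses form the pool of candidate textual gradients from which one is selected.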

Figure A1.

Prompt template for generating textual gradients.

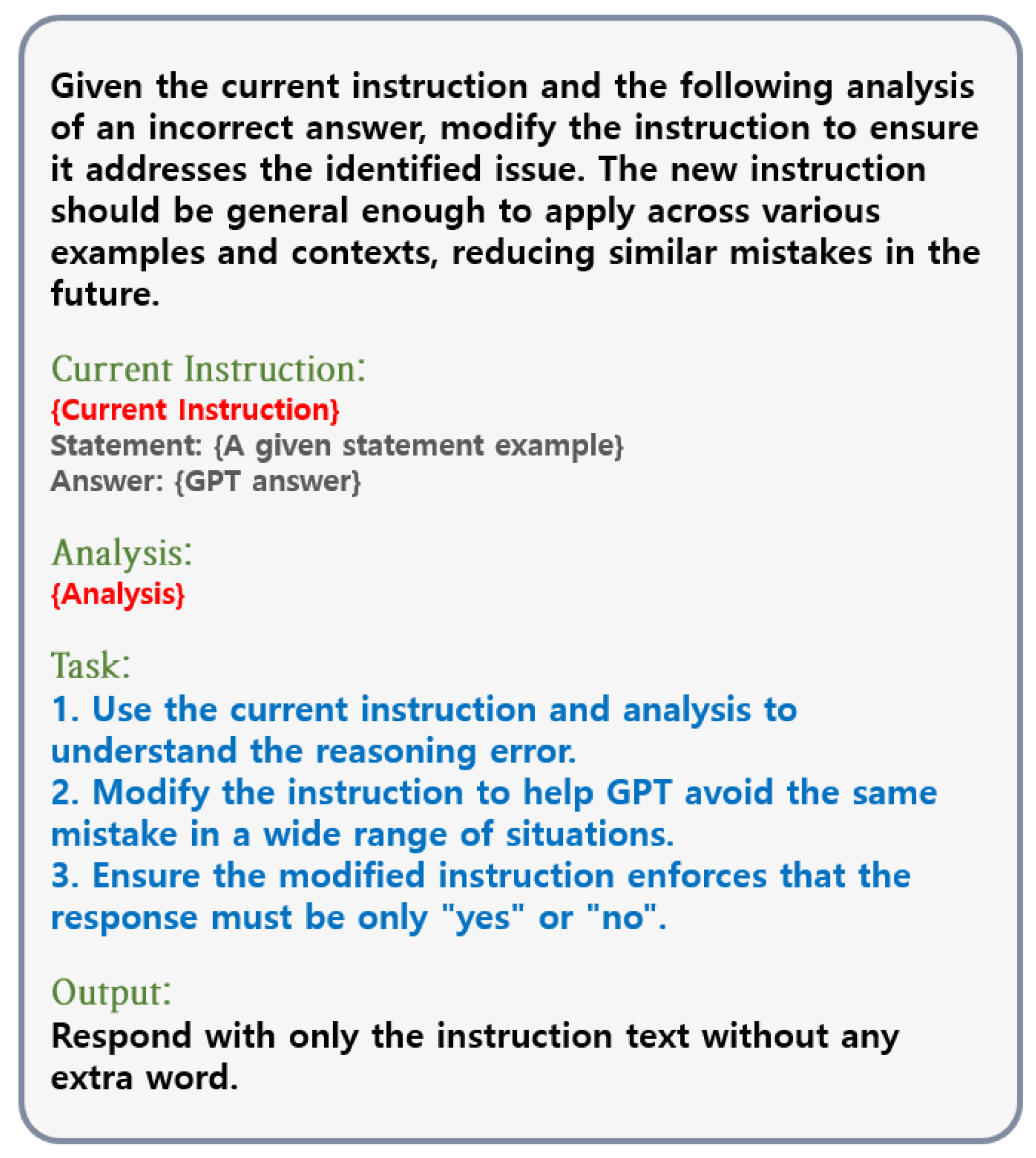

Appendix A.2. Prompt Modification Prompt

Figure A2 shows the meta prompt used to revise the current instruction based on the selected textual gradient. Once a generalized analysis is generated, we ask the LLM to modify the instruction so that it addresses the reasoning flaw identified in the analysis.

This prompt provides the LLM with the original instruction, a concrete example (including GPT’s incorrect answer), and the generalized feedback. The LLM must return an improved instruction that is generalizable and helps reduce similar mistakes in future predictions. Additionally, the revised instruction is required to constrain the output to a binary “yes” or “no” response, which is important for ensuring format consistency in evaluation tasks such as fact verification or toxicity detection.
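Because evaluation depends on this binary output format, responses are typically normalized before scoring. The following sketch uses a hypothetical `parse_binary_answer` helper that accepts a response only if it begins with the word "yes" or "no" (case-insensitive). It returns `None` otherwise, so that non-conforming outputs can be counted or handled separately. This acceptance policy is an assumption, not a description of the exact evaluation procedure.

```python
import re
from typing import Optional

# Leading whitespace, then "yes" or "no" as a whole word (so "Yesterday" is rejected).
_BINARY = re.compile(r"\s*(yes|no)\b", re.IGNORECASE)

def parse_binary_answer(text: str) -> Optional[str]:
    """Return 'yes' or 'no' if the response starts with that word, else None."""
    match = _BINARY.match(text)
    return match.group(1).lower() if match else None

print(parse_binary_answer("Yes."))            # yes
print(parse_binary_answer("The answer is no"))  # None
```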

Figure A2.

Prompt template for modifying instructions based on textual gradient analysis.

Appendix B. Prompt Examples by Dataset

This appendix provides visual illustrations of the instruction prompts used for each dataset. While our meta-prompting strategy remains consistent across tasks (as detailed in Appendix A), the specific wording and structure of the task prompts are tailored to each dataset. Figures A3–A5 show examples of the actual inputs provided to the LLMs during inference.

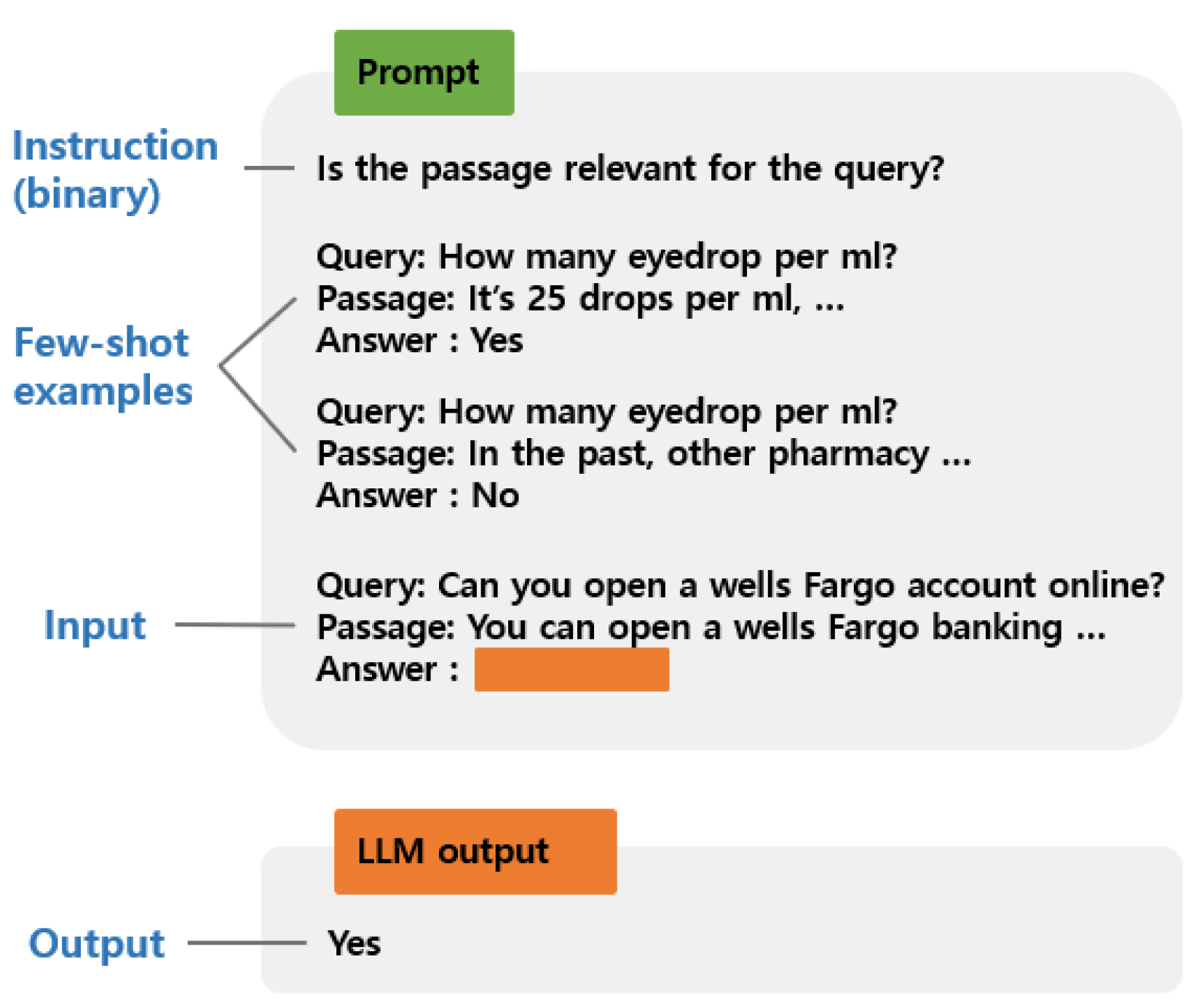

Appendix B.1. TREC DL 2019

Figure A3 shows the prompt template used for the TREC DL 2019 passage retrieval task. The model is asked to determine whether a passage is relevant to a given query. The instruction is framed in binary form (Yes/No), and few-shot examples are provided to guide the model’s response format and behavior.

Figure A3.

Prompt structure for the TREC DL 2019 dataset. The task is framed as binary passage relevance classification, with few-shot examples.

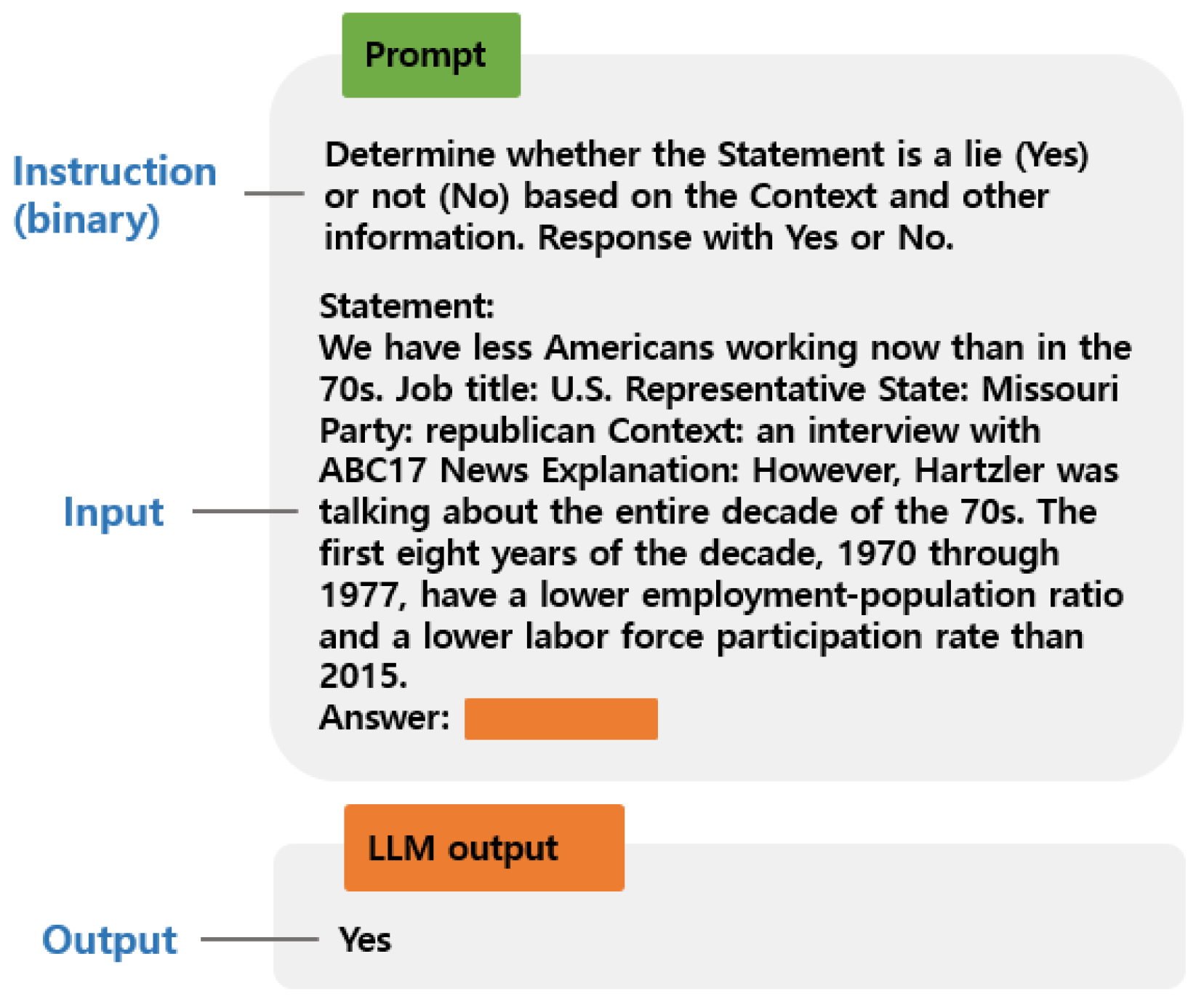

Appendix B.2. LIAR

Figure A4 presents the instruction format used in the LIAR dataset. The task is to verify the factuality of a political statement. The model is prompted to decide whether the statement is a lie based on surrounding metadata (e.g., speaker, party, context) and is required to answer with “Yes” or “No.” Unlike TREC, no few-shot examples are included, and the input is more structured.

Figure A4.

Prompt used for the LIAR dataset. The model is asked to classify whether a political statement is a lie based on contextual information.

Appendix B.3. ETHOS

Figure A5 shows the prompt used for the ETHOS hate speech detection dataset. The instruction asks whether the input text is hate speech, and the model must respond with a binary answer. No additional metadata or few-shot examples are provided in this task; the focus is entirely on the speech content itself.

Figure A5.

Prompt used for the ETHOS dataset. The model is instructed to detect hate speech in a given text and respond with a binary label.

Appendix C. Resource and Complexity Analysis

Table A1 summarizes the number of LLM calls required for different prompt-optimization methods. Since our method relies solely on API calls, no GPU resources are required. The results show that ProTeGi-EMB requires significantly fewer calls than prior approaches while maintaining competitive performance.

Table A1.

Comparison of LLM calls required for different prompt-optimization methods.

| Method | Prompt Expansion per Step | LLM Calls per Prompt | Total Steps | Total LLM Calls |
|---|---|---|---|---|
| APE [7] | 100 | 100 | 10 | 100,000 |
| OPRO [30] | 8 | 100 | 10 | 8000 |
| ProTeGi (baseline) [9] | 48 | 100 | 10 | 48,000 |
| ProTeGi-EMB (ours) | 4 | 100 | 10 | 4000 |
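The totals in Table A1 are the product of the three preceding columns (prompt expansion per step × LLM calls per prompt × total steps). The short sketch below reproduces that arithmetic and expresses each method's budget as a multiple of ProTeGi-EMB's. The helper name is hypothetical.

```python
def total_llm_calls(prompts_per_step: int, calls_per_prompt: int, steps: int) -> int:
    """Total calls = candidate prompts per step x calls per prompt x number of steps."""
    return prompts_per_step * calls_per_prompt * steps

# Settings copied from Table A1: (prompt expansion per step, calls per prompt, steps).
settings = {
    "APE": (100, 100, 10),
    "OPRO": (8, 100, 10),
    "ProTeGi": (48, 100, 10),
    "ProTeGi-EMB": (4, 100, 10),
}
totals = {name: total_llm_calls(*cfg) for name, cfg in settings.items()}
for name, total in totals.items():
    ratio = total / totals["ProTeGi-EMB"]
    print(f"{name}: {total} calls ({ratio:g}x ProTeGi-EMB)")
# APE: 100000 calls (25x ProTeGi-EMB)
# OPRO: 8000 calls (2x ProTeGi-EMB)
# ProTeGi: 48000 calls (12x ProTeGi-EMB)
# ProTeGi-EMB: 4000 calls (1x ProTeGi-EMB)
```

Since calls per prompt and the number of steps are held fixed across methods, the budget differences come entirely from how many candidate prompts each method evaluates per step.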

References

- Bubeck, S.; Chandrasekaran, V.; Eldan, R.; Gehrke, J.; Horvitz, E.; Kamar, E.; Lee, P.; Lee, Y.T.; Li, Y.; Lundberg, S.; et al. Sparks of artificial general intelligence: Early experiments with gpt-4. arXiv 2023, arXiv:2303.12712.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Reynolds, L.; McDonell, K. Prompt programming for large language models: Beyond the few-shot paradigm. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–7.

- Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A systematic survey of prompt engineering in large language models: Techniques and applications. arXiv 2024, arXiv:2402.07927.

- Lu, Y.; Bartolo, M.; Moore, A.; Riedel, S.; Stenetorp, P. Fantastically ordered prompts and where to find them: Overcoming few-shot prompt order sensitivity. arXiv 2021, arXiv:2104.08786.

- Webson, A.; Pavlick, E. Do prompt-based models really understand the meaning of their prompts? arXiv 2021, arXiv:2109.01247.

- Zhou, Y.; Muresanu, A.I.; Han, Z.; Paster, K.; Pitis, S.; Chan, H.; Ba, J. Large Language Models are Human-Level Prompt Engineers. In Proceedings of the Eleventh International Conference on Learning Representations, Online, 25–29 April 2022.

- Chang, K.; Xu, S.; Wang, C.; Luo, Y.; Liu, X.; Xiao, T.; Zhu, J. Efficient Prompting Methods for Large Language Models: A Survey. arXiv 2024, arXiv:2404.01077.

- Pryzant, R.; Iter, D.; Li, J.; Lee, Y.T.; Zhu, C.; Zeng, M. Automatic prompt optimization with “gradient descent” and beam search. arXiv 2023, arXiv:2305.03495.

- Ye, Q.; Axmed, M.; Pryzant, R.; Khani, F. Prompt engineering a prompt engineer. arXiv 2023, arXiv:2311.05661.

- Zelikman, E.; Wu, Y.; Mu, J.; Goodman, N.D. STaR: Bootstrapping Reasoning With Reasoning. Adv. Neural Inf. Process. Syst. 2022, 35, 28893–28907.

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. arXiv 2021, arXiv:2104.08691.

- Qin, C.; Joty, S. LFPT5: A unified framework for lifelong few-shot language learning based on prompt tuning of t5. arXiv 2021, arXiv:2110.07298.

- Deng, M.; Wang, J.; Hsieh, C.P.; Wang, Y.; Guo, H.; Shu, T.; Song, M.; Xing, E.P.; Hu, Z. Rlprompt: Optimizing discrete text prompts with reinforcement learning. arXiv 2022, arXiv:2205.12548.

- Sun, H.; Li, X.; Xu, Y.; Homma, Y.; Cao, Q.; Wu, M.; Jiao, J.; Charles, D. Autohint: Automatic prompt optimization with hint generation. arXiv 2023, arXiv:2307.07415.

- Wang, X.; Li, C.; Wang, Z.; Bai, F.; Luo, H.; Zhang, J.; Jojic, N.; Xing, E.P.; Hu, Z. Promptagent: Strategic planning with language models enables expert-level prompt optimization. arXiv 2023, arXiv:2310.16427.

- Ma, R.; Wang, X.; Zhou, X.; Li, J.; Du, N.; Gui, T.; Zhang, Q.; Huang, X. Are large language models good prompt optimizers? arXiv 2024, arXiv:2402.02101.

- Shinn, N.; Cassano, F.; Labash, B.; Gopinath, A.; Narasimhan, K.; Yao, S. Reflexion: Language agents with verbal reinforcement learning. arXiv 2023, arXiv:2303.11366.

- Madaan, A.; Lin, X.; Lee, R.; Yang, K.; Baral, C.; Hakkani-Tür, D.; Zaiane, O.; Liu, X. Self-Refine: Iterative Refinement with Self-Feedback. arXiv 2023, arXiv:2303.17651.

- Li, Y.; Yang, C.; Ettinger, A. When Hindsight is Not 20/20: Testing Limits on Reflective Thinking in Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; pp. 3741–3753.

- Liu, F.; Scao, T.; Xie, L.; Shelton, M.; Gundersen, O.E.; Ruder, S.; Wang, T.; Zettlemoyer, L.; Reichart, R.; Gurevych, I. ProTeGi: Prompt Tuning with Textual Gradients. arXiv 2023, arXiv:2305.16422.

- Yuksekgonul, M.; Bianchi, F.; Boen, J.; Liu, S.; Huang, Z.; Guestrin, C.; Zou, J. TextGrad: Automatic “Differentiation” via Text. arXiv 2024, arXiv:2406.07496.

- Zhu, K.; Zhao, Q.; Chen, H.; Wang, J.; Xie, X. PromptBench: A Unified Library for Evaluation of Large Language Models. arXiv 2023, arXiv:2312.07910.

- Chen, K.; Zhou, Y.; Zhang, X.; Wang, H. Prompt Stability Matters: Evaluating and Optimizing Auto-Generated Prompts. arXiv 2025, arXiv:2505.13546.

- Gao, T.; Fisch, A.; Chen, D. Making pre-trained language models better few-shot learners. arXiv 2021, arXiv:2012.15723.

- Qiang, Y.; Nandi, S.; Mehrabi, N.; Ver Steeg, G.; Kumar, A.; Rumshisky, A.; Galstyan, A. Prompt Perturbation Consistency Learning for Robust Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EACL 2024, St. Julians, Malta, 17–22 March 2024; pp. 1123–1135.

- Liang, P.; Bommasani, R.; Lee, T.; Tsipras, D.; Soylu, D.; Yasunaga, M.; Zhang, Y.; Narayanan, D.; Wu, Y.; Kumar, A.; et al. Holistic evaluation of language models. arXiv 2022, arXiv:2211.09110.

- Choi, J. Binary or Graded, Few-Shot or Zero-Shot: Prompt Design for GPTs in Relevance Evaluation. Adv. Artif. Intell. Mach. Learn. 2024, 4, 2687–2702.

- Choi, J. Efficient Prompt Optimization for Relevance Evaluation via LLM-Based Confusion Matrix Feedback. Appl. Sci. 2025, 15, 5198.

- Yang, C.; Wang, X.; Lu, Y.; Liu, H.; Le, Q.V.; Zhou, D.; Chen, X. Large language models as optimizers. arXiv 2023, arXiv:2309.03409.

- Snell, J.; Swersky, K.; Zemel, R.S. Prototypical Networks for Few-shot Learning. Adv. Neural Inf. Process. Syst. 2017, 30, 4077–4087.

- Yang, S.; Zhao, H.; Zhu, S.; Zhou, G.; Xu, H.; Jia, Y.; Zan, H. Zhongjing: Enhancing the chinese medical capabilities of large language model through expert feedback and real-world multi-turn dialogue. AAAI Conf. Artif. Intell. 2024, 38, 19368–19376.

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).