Context-Driven Recommendation via Heterogeneous Temporal Modeling and Large Language Model in the Takeout System

Abstract

1. Introduction

1. For multimodal features, TLSR employs a hybrid fusion strategy to integrate explicit contextual information. Specifically, its behavior extraction layer embeds multimodal data to represent item content attributes comprehensively and to extract explicit context, which achieves early fusion. During intermediate fusion, the context capture and context propagation layers model long- and short-term user preferences. This enables cross-temporal local feature fusion and dynamic feature fusion, which together yield a personalized context-state representation. Finally, user features are progressively fused into the final representation.

2. Empirical analysis and comparative experiments on a real-world food delivery dataset show that TLSR consistently outperforms the baseline models, both in overall prediction accuracy and in the quality of the generated Top-K recommendation lists.

3. Case studies demonstrate that TLSR can learn implicit contextual signals. Using cross-attention, TLSR performs context retrospection to uncover implicit context states. After DeepSeek-R1’s reasoning capability is integrated for open-knowledge enhancement, the reranked recommendation lists align better with the current context.

4. Ablation studies validate the effectiveness of TLSR’s hybrid fusion strategy. The results confirm that modality fusion, layer fusion, and combination fusion each play a significant role, both in helping the model learn context states and in improving its overall performance.
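The three fusion stages described in the first contribution can be pictured with a deliberately tiny sketch. It is not TLSR. Concatenation for the early and late stages is an assumed simplification. So is an element-wise mean standing in for the learned convolution and GRU layers that perform intermediate fusion. All names and vector sizes are hypothetical.

```python
from typing import List

Vector = List[float]


def early_fusion(image_emb: Vector, behavior_emb: Vector) -> Vector:
    """Early fusion (assumed form): concatenate one item's modality embeddings."""
    return image_emb + behavior_emb


def intermediate_fusion(step_vectors: List[Vector]) -> Vector:
    """Placeholder for the learned temporal layers: element-wise mean over time steps."""
    n = len(step_vectors)
    return [sum(column) / n for column in zip(*step_vectors)]


def late_fusion(context_state: Vector, user_emb: Vector) -> Vector:
    """Late fusion (assumed form): append a user embedding to the context state."""
    return context_state + user_emb


# Three time steps, each with a 2-d image embedding and a 1-d behavior embedding.
steps = [early_fusion([0.1, 0.2], [1.0]),
         early_fusion([0.3, 0.4], [0.0]),
         early_fusion([0.5, 0.6], [2.0])]
context_state = intermediate_fusion(steps)
final = late_fusion(context_state, [9.0])
print(final)  # 3 fused context features followed by 1 user feature
```

In the real model, each stage is learned rather than fixed. The point here is only the order in which the modalities, the temporal context, and the user representation meet.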

2. Literature Review

2.1. Multimodal Fusion

2.2. Recommendation System

3. Methodology

3.1. TLSR

3.1.1. Behavior Extraction Layer

3.1.2. Context Capture Layer

3.1.3. Context Propagation Layer

3.1.4. Prediction Layer

3.2. DeepSeek-R1

4. Experiments and Results

4.1. Experimental Settings

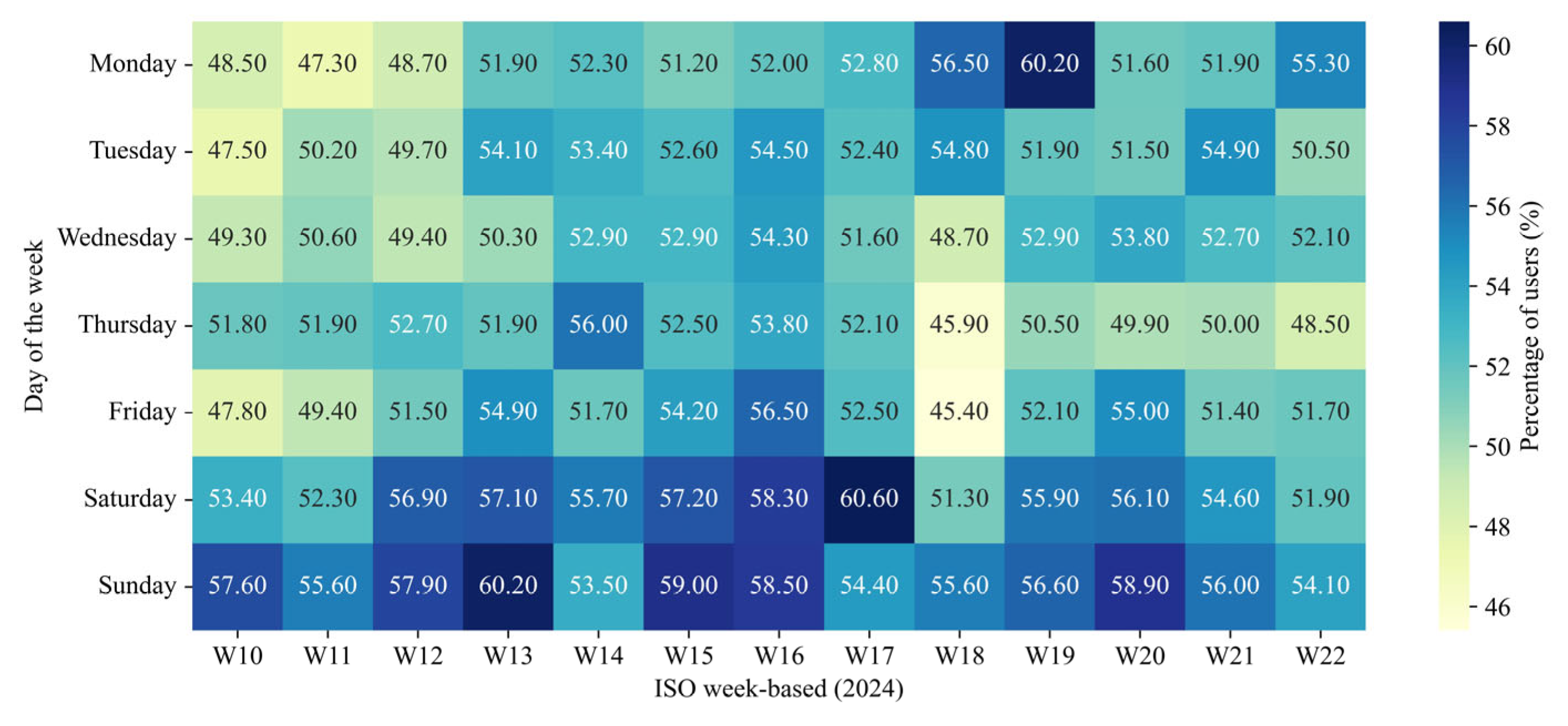

4.1.1. Dataset and Preprocessing

4.1.2. Experimental Details

4.1.3. Evaluation Metrics

4.2. Results and Analysis

4.2.1. Comparative Study (RQ1)

4.2.2. Case Study (RQ2 and RQ3)

4.2.3. Ablation Study (RQ4)

4.2.4. Sensitivity Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| SR | Sequential Recommendation |

| CARS | Context-Aware Recommendation System |

| TLSR | Time-Aware Long- and Short-Term Recommendation |

| LLM | Large Language Model |

| CNN | Convolutional Neural Network |

| Conv3d | Three-Dimensional Convolution |

| Conv2d | Two-Dimensional Convolution |

| GRU | Gated Recurrent Unit |

| BCE | Binary Cross-Entropy |

| RQ | Research Question |

| OFD | On-demand Food Delivery |

| RGB | Red, Green, Blue |

| Ap | Average Precision |

| AUC | Area Under Curve |

| ROC | Receiver Operating Characteristic |

| HR | Hit Ratio |

| MRR | Mean Reciprocal Rank |

| NDCG | Normalized Discounted Cumulative Gain |

Appendix A

Appendix A.1

| Algorithm A1: The Training Process of TLSR | |

| Input: Image feature matrix extracted by ResNet50, User behavior matrix . Output: Optimized model parameters . | |

| 1: | Initialize ; |

| 2: | for epoch=1 to do |

| 3: | ; // |

| // Training (gradient backpropagation). is the number of training batches. | |

| 4: | for to do |

| 5: | ; |

| 6: | ; |

| 7: | ; |

| 8: | ; // learning rate |

| 9: | end for |

| // Validation (no gradient backpropagation). is the number of validation batches. | |

| 10: | for to do |

| 11: | ; |

| 12: | ; |

| 13: | ; |

| 14: | end for |

| 15: | ; |

| // Model selection | |

| 16: | if then |

| 17: | ; |

| 18: | ; |

| 19: | end if |

| 20: | end for |

| 21: | return . |
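Algorithm A1 follows the usual train, validate and select pattern. Several of its symbols did not survive extraction, so the sketch below fills them with stated assumptions:

- a one-feature logistic model stands in for TLSR;
- updates use plain mini-batch gradient descent with a fixed learning rate;
- the loss is BCE, which is listed in the Abbreviations;
- the model with the lowest mean validation loss is kept.

The comments map to the step numbers of Algorithm A1 only where that correspondence is clear. This is a sketch of the control flow, not the authors' implementation.

```python
import math
import random
from typing import List, Tuple

Params = Tuple[float, float]          # hypothetical stand-in for TLSR's parameters: (w, b)
Batch = List[Tuple[float, int]]       # (feature, binary label) pairs


def predict(params: Params, x: float) -> float:
    """Toy logistic model used in place of TLSR's forward pass."""
    w, b = params
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def bce(p: float, y: int, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single prediction (clipped for numerical safety)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def train(train_batches: List[Batch], val_batches: List[Batch],
          epochs: int = 50, lr: float = 0.5) -> Params:
    params: Params = (0.0, 0.0)                   # step 1: initialize parameters
    best_params, best_val = params, math.inf
    for _ in range(epochs):                       # step 2: loop over epochs
        for batch in train_batches:               # steps 4-9: training pass
            w, b = params
            # Gradient of the mean BCE of a logistic model: (p - y) * (x, 1).
            gw = sum((predict(params, x) - y) * x for x, y in batch) / len(batch)
            gb = sum(predict(params, x) - y for x, y in batch) / len(batch)
            params = (w - lr * gw, b - lr * gb)   # step 8: gradient step with learning rate
        # Steps 10-15: validation pass, parameters are not updated.
        losses = [bce(predict(params, x), y) for batch in val_batches for x, y in batch]
        val_loss = sum(losses) / len(losses)
        if val_loss < best_val:                   # steps 16-19: keep the best model so far
            best_val, best_params = val_loss, params
    return best_params                            # step 21


random.seed(0)
data = [(x, int(x > 0)) for x in (random.uniform(-2, 2) for _ in range(200))]
train_batches = [data[i:i + 20] for i in range(0, 160, 20)]
val_batches = [data[160:]]
w, b = train(train_batches, val_batches)
print(f"selected parameters: w={w:.2f}, b={b:.2f}")
```

The function returns the parameters from the best validation epoch rather than the last one. This is the role of the model-selection block (steps 16 to 19).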

Appendix A.2

| Layer | Component | Output Shape |
|---|---|---|
| Behavior extraction layer | Multimodal feature embedding and concatenation | (100, 4, 7, 48, 2110) |
| | | (100, 7, 48, 2110) |
| | | (100, 1, 2110) |
| | Dimension permutation | (100, 48, 4, 7, 2110) |
| | | (100, 48, 7, 2110) |
| Context capture layer | Conv3d | (100, 1, 4, 7, 2110) |
| | Conv2d | (100, 1, 7, 2110) |
| | Maxpool-1, Maxpool-2, Maxpool-3, Maxpool-4 | (100, 7, 1055) |
| | Maxpool-5 | (100, 7, 422) |
| Context propagation layer | GRUs-1, GRUs-2, GRUs-3, GRUs-4 | (100, 7, 200) |
| | GRUs-5 | (100, 4, 400) |
| Prediction layer | Multi-head cross attention | (100, 1, 2110) |
| | Single-head cross attention | (100, 1, 2110) |
| | LayerNorm-1 | (100, 4, 400) |
| | LayerNorm-2 | (100, 7, 422) |
| | LayerNorm-3 | (100, 1, 2110) |
| | LayerNorm-4 | (100, 4220) |
| | Embedding | (100, 100) |
| | Linear | (100, 1) |
| | Sigmoid | (100, 1) |
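In the prediction layer, both the multi-head and single-head cross-attention rows produce an output of shape (100, 1, 2110). That is one vector per sample, which is what a single query attending over a sequence yields.

The sketch below is a minimal single-head scaled dot-product cross-attention, softmax(QK^T / sqrt(d))V as in Vaswani et al., written in pure Python. It shows why the output keeps the query's length of 1. Several details are assumptions, because the table does not say which tensors serve as query, key and value:

- the learned projections are omitted (identity);
- the dimensions are toy sizes;
- the roles assigned to the inputs are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)                                  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_attention(query: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head attention without learned projections.

    query: (n_q, d), keys: (n_k, d), values: (n_k, d_v) -> output: (n_q, d_v)
    """
    d = len(query[0])
    out = []
    for q_row in query:
        weights = softmax([dot(q_row, k_row) / math.sqrt(d) for k_row in keys])
        out.append([sum(w * v_row[c] for w, v_row in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


# One query (e.g. a context state) attending over 4 time steps of dimension 3.
q = [[1.0, 0.0, 0.0]]
k = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
v = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0], [0.0, 0.0, 0.0]]
out = cross_attention(q, k, v)
print(len(out), len(out[0]))  # → 1 3
```

The max-pooling rows are also consistent with non-overlapping windows along the last axis: 2110 / 2 = 1055 and 2110 / 5 = 422. These kernel sizes are inferred from the shapes, not a stated setting.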

Appendix B

Appendix B.1

Appendix B.2

Appendix B.3

References

- Wang, S.; Hu, L.; Wang, Y.; Cao, L.; Sheng, Q.Z.; Orgun, M. Sequential Recommender Systems: Challenges, Progress and Prospects. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; International Joint Conferences on Artificial Intelligence Organization: Montreal, QC, Canada, 2019; pp. 6332–6338.

- Thøgersen, J. The Importance of the Context. In Concise Introduction to Sustainable Consumption; Edward Elgar Publishing: Cheltenham, UK, 2023; pp. 76–87.

- Dey, A.K. Understanding and Using Context. Pers. Ubiquitous Comput. 2001, 5, 4–7.

- Sanne, C. Willing Consumers—Or Locked-in? Policies for a Sustainable Consumption. Ecol. Econ. 2002, 42, 273–287.

- Yao, W.; He, J.; Huang, G.; Cao, J.; Zhang, Y. A Graph-Based Model for Context-Aware Recommendation Using Implicit Feedback Data. World Wide Web 2015, 18, 1351–1371.

- Hartatik; Winarko, E.; Heryawan, L. Context-Aware Recommendation System Survey: Recommendation When Adding Contextual Information. In Proceedings of the 2022 6th International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Yogyakarta, Indonesia, 13–14 December 2022; pp. 7–13.

- Yuen, M.-C.; King, I.; Leung, K.-S. Temporal Context-Aware Task Recommendation in Crowdsourcing Systems. Knowl.-Based Syst. 2021, 219, 106770.

- Ma, Y.; Narayanaswamy, B.; Lin, H.; Ding, H. Temporal-Contextual Recommendation in Real-Time. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, CA, USA, 23–27 August 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 2291–2299.

- Wang, Z.; Wei, W.; Zou, D.; Liu, Y.; Li, X.-L.; Mao, X.-L.; Qiu, M. Exploring Global Information for Session-Based Recommendation. Pattern Recognit. 2024, 145, 109911.

- Kumar, C.; Kumar, M. Session-Based Recommendations with Sequential Context Using Attention-Driven LSTM. Comput. Electr. Eng. 2024, 115, 109138.

- Adomavicius, G.; Tuzhilin, A. Context-Aware Recommender Systems. In Recommender Systems Handbook; Ricci, F., Rokach, L., Shapira, B., Kantor, P.B., Eds.; Springer US: Boston, MA, USA, 2011; pp. 217–253.

- Meng, X.; Du, Y.; Zhang, Y.; Han, X. A Survey of Context-Aware Recommender Systems: From an Evaluation Perspective. IEEE Trans. Knowl. Data Eng. 2023, 35, 6575–6594.

- Dourish, P. What We Talk about When We Talk about Context. Pers. Ubiquitous Comput. 2004, 8, 19–30.

- Pagano, R.; Cremonesi, P.; Larson, M.; Hidasi, B.; Tikk, D.; Karatzoglou, A.; Quadrana, M. The Contextual Turn: From Context-Aware to Context-Driven Recommender Systems. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 249–252.

- Nimchaiyanan, W. A Hotel Hybrid Recommendation Method Based On Context-Driven Using Latent Dirichlet Allocation. Master’s Thesis, Chulalongkorn University, Bangkok, Thailand, 2018.

- Zhou, G.; Mou, N.; Fan, Y.; Pi, Q.; Bian, W.; Zhou, C.; Zhu, X.; Gai, K. Deep Interest Evolution Network for Click-Through Rate Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 17 July 2019; Volume 33, pp. 5941–5948.

- Xue, Z.; Marculescu, R. Dynamic Multimodal Fusion. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 2575–2584.

- Zhao, F.; Zhang, C.; Geng, B. Deep Multimodal Data Fusion. ACM Comput. Surv. 2024, 56, 1–36.

- Stahlschmidt, S.R.; Ulfenborg, B.; Synnergren, J. Multimodal Deep Learning for Biomedical Data Fusion: A Review. Brief. Bioinform. 2022, 23, bbab569.

- Dai, Y.; Yan, Z.; Cheng, J.; Duan, X.; Wang, G. Analysis of Multimodal Data Fusion from an Information Theory Perspective. Inf. Sci. 2023, 623, 164–183.

- Li, X.; Li, X.; Ye, T.; Cheng, X.; Liu, W.; Tan, H. Bridging the Gap between Multi-Focus and Multi-Modal: A Focused Integration Framework for Multi-Modal Image Fusion. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 1617–1626.

- Krishna, G.; Dharur, S.; Rudovic, O.; Dighe, P.; Adya, S.; Abdelaziz, A.H.; Tewfik, A.H. Modality Drop-Out for Multimodal Device Directed Speech Detection Using Verbal and Non-Verbal Features. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 8240–8244.

- Li, X.; Guo, X.; Han, P.; Wang, X.; Li, H.; Luo, T. Laplacian Redecomposition for Multimodal Medical Image Fusion. IEEE Trans. Instrum. Meas. 2020, 69, 6880–6890.

- Zhao, L.; Hu, Q.; Li, X.; Zhao, J. Multimodal Fusion Generative Adversarial Network for Image Synthesis. IEEE Signal Process. Lett. 2024, 31, 1865–1869.

- Zhu, P.; Hua, J.; Tang, K.; Tian, J.; Xu, J.; Cui, X. Multimodal Fake News Detection through Intra-Modality Feature Aggregation and Inter-Modality Semantic Fusion. Complex Intell. Syst. 2024, 10, 5851–5863.

- Gan, C.; Fu, X.; Feng, Q.; Zhu, Q.; Cao, Y.; Zhu, Y. A Multimodal Fusion Network with Attention Mechanisms for Visual–Textual Sentiment Analysis. Expert Syst. Appl. 2024, 242, 122731.

- Yu, M.; He, W.; Zhou, X.; Cui, M.; Wu, K.; Zhou, W. Review of Recommendation System. J. Comput. Appl. 2022, 42, 1898–1913. Available online: https://www.joca.cn/CN/10.11772/j.issn.1001-9081.2021040607 (accessed on 27 July 2025). (In Chinese).

- Su, X.; Khoshgoftaar, T.M. A Survey of Collaborative Filtering Techniques. Adv. Artif. Intell. 2009, 2009, 421425.

- Richardson, M.; Dominowska, E.; Ragno, R. Predicting Clicks: Estimating the Click-through Rate for New Ads. In Proceedings of the 16th International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 521–530.

- Rendle, S. Factorization Machines. In Proceedings of the 2010 IEEE International Conference on Data Mining, Sydney, NSW, Australia, 13–17 December 2010; pp. 995–1000.

- Wang, R.; Fu, B.; Fu, G.; Wang, M. Deep & Cross Network for Ad Click Predictions. In Proceedings of the ADKDD’17, Halifax, NS, Canada, 14 August 2017; ACM: Halifax, NS, Canada, 2017; pp. 1–7.

- Wu, S.; Sun, F.; Zhang, W.; Xie, X.; Cui, B. Graph Neural Networks in Recommender Systems: A Survey. ACM Comput. Surv. 2022, 55, 1–37.

- Gao, G. Review of Research on Neural Network Combined with Attention Mechanism in Recommendation System. Comput. Eng. Appl. 2024, 60, 47–60. (In Chinese).

- Rendle, S.; Freudenthaler, C.; Schmidt-Thieme, L. Factorizing Personalized Markov Chains for Next-Basket Recommendation. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 811–820.

- Kang, W.-C.; McAuley, J. Self-Attentive Sequential Recommendation. In Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), Singapore, 17–20 November 2018; IEEE: Singapore, 2018; pp. 197–206.

- Li, J.; Wang, Y.; McAuley, J. Time Interval Aware Self-Attention for Sequential Recommendation. In Proceedings of the 13th International Conference on Web Search and Data Mining, Houston, TX, USA, 3–7 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 322–330.

- Zhou, G.; Zhu, X.; Song, C.; Fan, Y.; Zhu, H.; Ma, X.; Yan, Y.; Jin, J.; Li, H.; Gai, K. Deep Interest Network for Click-Through Rate Prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1059–1068.

- Han, R.; Li, Q.; Jiang, H.; Li, R.; Zhao, Y.; Li, X.; Lin, W. Enhancing CTR Prediction through Sequential Recommendation Pre-Training: Introducing the SRP4CTR Framework. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 3777–3781.

- Wang, H.; Li, Z.; Liu, X.; Ding, D.; Hu, Z.; Zhang, P.; Zhou, C.; Bu, J. Fulfillment-Time-Aware Personalized Ranking for On-Demand Food Recommendation. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Virtual Event, QLD, Australia, 1–5 November 2021; ACM: New York, NY, USA, 2021; pp. 4184–4192.

- Wu, G.; Qin, H.; Hu, Q.; Wang, X.; Wu, Z. Research on Large Language Models and Personalized Recommendation. CAAI Trans. Intell. Syst. 2024, 19, 1351–1365. (In Chinese).

- Dai, S.; Shao, N.; Zhao, H.; Yu, W.; Si, Z.; Xu, C.; Sun, Z.; Zhang, X.; Xu, J. Uncovering ChatGPT’s Capabilities in Recommender Systems. In Proceedings of the 17th ACM Conference on Recommender Systems, Singapore, 18–22 September 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1126–1132.

- Du, Y.; Luo, D.; Yan, R.; Wang, X.; Liu, H.; Zhu, H.; Song, Y.; Zhang, J. Enhancing Job Recommendation through LLM-Based Generative Adversarial Networks. Proc. AAAI Conf. Artif. Intell. 2024, 38, 8363–8371.

- Wang, X.; Chen, H.; Pan, Z.; Zhou, Y.; Guan, C.; Sun, L.; Zhu, W. Automated Disentangled Sequential Recommendation with Large Language Models. ACM Trans. Inf. Syst. 2025, 43, 1–29.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Xu, D.; Ruan, C.; Korpeoglu, E.; Kumar, S.; Achan, K. Inductive Representation Learning on Temporal Graphs, Addis Ababa, Ethiopia. arXiv 2020, arXiv:2002.07962.

- Zhang, T.; Zhang, J.; Guo, C.; Chen, H.; Zhou, D.; Wang, Y.; Xu, A. A Survey of Image Object Detection Algorithm Based on Deep Learning. Telecommun. Sci. 2020, 36, 92–106. (In Chinese).

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

- Hu, D.; Deng, W.; Jiang, Z.; Shi, Y. A Study on Predicting Key Times in the Takeout System’s Order Fulfillment Process. Systems 2025, 13, 457.

- DeepSeek-AI; Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; et al. DeepSeek-V3 Technical Report. arXiv 2024, arXiv:2412.19437.

- DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025, arXiv:2501.12948.

- Briney, K.A. The Problem with Dates: Applying ISO 8601 to Research Data Management. J. EScience Librariansh. 2018, 7, e1147.

- Guizhou Sunshine HaiNa Eco-Agriculture Co., Ltd. Available online: https://www.yangguanghaina.com/ (accessed on 13 June 2025). (In Chinese).

- Kweon, W.; Kang, S.; Jang, S.; Yu, H. Top-Personalized-K Recommendation. In Proceedings of the ACM Web Conference 2024, Singapore, 13–17 May 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 3388–3399.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Ong, K.; Haw, S.-C.; Ng, K.-W. Deep Learning Based-Recommendation System: An Overview on Models, Datasets, Evaluation Metrics, and Future Trends. In Proceedings of the 2019 2nd International Conference on Computational Intelligence and Intelligent Systems, Bangkok, Thailand, 23–25 November 2019; Association for Computing Machinery: New York, NY, USA, 2020; pp. 6–11.

- Li, M.; Ma, W.; Chu, Z. KGIE: Knowledge Graph Convolutional Network for Recommender System with Interactive Embedding. Knowl.-Based Syst. 2024, 295, 111813.

- Zhang, Y.; Wang, Z.; Yu, W.; Hu, L.; Jiang, P.; Gai, K.; Chen, X. Soft Contrastive Sequential Recommendation. ACM Trans. Inf. Syst. 2024, 42, 1–28.

- Valcarce, D.; Bellogín, A.; Parapar, J.; Castells, P. Assessing Ranking Metrics in Top-N Recommendation. Inf. Retr. J. 2020, 23, 411–448.

- Wang, R.; Shivanna, R.; Cheng, D.; Jain, S.; Lin, D.; Hong, L.; Chi, E. DCN V2: Improved Deep & Cross Network and Practical Lessons for Web-Scale Learning to Rank Systems. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; ACM: Ljubljana, Slovenia, 2021; pp. 1785–1797.

- Tan, Y.K.; Xu, X.; Liu, Y. Improved Recurrent Neural Networks for Session-Based Recommendations. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 17–22.

- Liu, Q.; Zeng, Y.; Mokhosi, R.; Zhang, H. STAMP: Short-Term Attention/Memory Priority Model for Session-Based Recommendation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1831–1839.

- Hou, Y.; Hu, B.; Zhang, Z.; Zhao, W.X. CORE: Simple and Effective Session-Based Recommendation within Consistent Representation Space. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1796–1801.

- Li, J.; Ren, P.; Chen, Z.; Ren, Z.; Lian, T.; Ma, J. Neural Attentive Session-Based Recommendation. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1419–1428.

- Nguyen, T.; Takasu, A. NPE: Neural Personalized Embedding for Collaborative Filtering. arXiv 2018, arXiv:1805.06563.

- Zhao, W.X.; Mu, S.; Hou, Y.; Lin, Z.; Chen, Y.; Pan, X.; Li, K.; Lu, Y.; Wang, H.; Tian, C.; et al. RecBole: Towards a Unified, Comprehensive and Efficient Framework for Recommendation Algorithms. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Virtual Event Queensland, Australia, 1–5 November 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 4653–4664.

- Zhao, W.X.; Hou, Y.; Pan, X.; Yang, C.; Zhang, Z.; Lin, Z.; Zhang, J.; Bian, S.; Tang, J.; Sun, W.; et al. RecBole 2.0: Towards a More Up-to-Date Recommendation Library. arXiv 2022, arXiv:2206.07351.

- Liu, C.; Li, Y.; Lin, H.; Zhang, C. GNNRec: Gated Graph Neural Network for Session-Based Social Recommendation Model. J. Intell. Inf. Syst. 2023, 60, 137–156.

- Xuan, H.; Liu, Y.; Li, B.; Yin, H. Knowledge Enhancement for Contrastive Multi-Behavior Recommendation. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 195–203.

| Models | Accuracy | Precision | Recall | F1 | Ap |

|---|---|---|---|---|---|

| TLSR | 0.9776 | 0.8221 | 0.6749 | 0.7412 | 0.7951 |

| LR | 0.9538 | 0.5976 | 0.0926 | 0.1604 | 0.2714 |

| DCNv2 | 0.4967 | 0.0478 | 0.5057 | 0.0873 | 0.0477 |

| DIN | 0.9533 | 0.5632 | 0.0881 | 0.1524 | 0.2463 |

| GRU4Rec | 0.9224 | 0.2011 | 0.2122 | 0.2065 | 0.1405 |

| SASRec | 0.9537 | 0.5822 | 0.0947 | 0.1629 | 0.2679 |

| STAMP | 0.7531 | 0.0637 | 0.3057 | 0.1055 | 0.0751 |

| CORE | 0.9614 | 0.7953 | 0.2560 | 0.3873 | 0.4484 |

| NARM | 0.9440 | 0.3888 | 0.3070 | 0.3431 | 0.2786 |

| NPE | 0.9588 | 0.7828 | 0.1854 | 0.2998 | 0.3239 |
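The first results table reports threshold-based metrics plus average precision (Ap). The sketch below computes them from binary labels and predicted probabilities. Two settings are assumptions, because the paper's exact choices are not given here:

- the decision threshold is 0.5;
- AP takes its non-interpolated form, the mean precision at each rank that holds a positive.

As a consistency check, F1 is the harmonic mean of precision and recall. For TLSR, 2 × 0.8221 × 0.6749 / (0.8221 + 0.6749) ≈ 0.741, which matches the reported 0.7412 up to rounding.

```python
from typing import Dict, List


def classification_metrics(labels: List[int], scores: List[float],
                           threshold: float = 0.5) -> Dict[str, float]:
    preds = [int(s >= threshold) for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Average precision: mean of precision@k over the ranks k that hold a positive.
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    hits, precisions = 0, []
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / k)
    ap = sum(precisions) / len(precisions) if precisions else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "ap": ap}


m = classification_metrics([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.2])
print({name: round(value, 4) for name, value in m.items()})
```

Accuracy on its own can look strong when positives are rare. In the table, several baselines pair accuracy above 0.95 with recall below 0.1. This is why precision, recall, F1 and AP are reported alongside it.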

| Models | ||||

|---|---|---|---|---|

| TLSR | 0.8578 | 0.9290 | 0.9398 | 0.9700 |

| LR | 0.5163 | 0.6597 | 0.6769 | 0.8003 |

| DCNv2 | 0.1374 | 0.2812 | 0.2277 | 0.4375 |

| DIN | 0.5018 | 0.6456 | 0.6686 | 0.7985 |

| GRU4Rec | 0.4138 | 0.5521 | 0.5654 | 0.7203 |

| SASRec | 0.5085 | 0.6501 | 0.6754 | 0.7964 |

| STAMP | 0.3938 | 0.5257 | 0.5239 | 0.6668 |

| CORE | 0.5983 | 0.7068 | 0.7513 | 0.8370 |

| NARM | 0.5154 | 0.6352 | 0.6758 | 0.7894 |

| NPE | 0.5871 | 0.7046 | 0.7434 | 0.8363 |

| Models | ||||

|---|---|---|---|---|

| TLSR | 0.8730 | 0.8771 | 0.8898 | 0.8997 |

| LR | 0.4671 | 0.4839 | 0.5193 | 0.5596 |

| DCNv2 | 0.1055 | 0.1328 | 0.1355 | 0.2026 |

| DIN | 0.4569 | 0.4746 | 0.5096 | 0.5520 |

| GRU4Rec | 0.3670 | 0.3875 | 0.4163 | 0.4662 |

| SASRec | 0.4639 | 0.4801 | 0.5166 | 0.5558 |

| STAMP | 0.3412 | 0.3601 | 0.3866 | 0.4326 |

| CORE | 0.6043 | 0.6160 | 0.6410 | 0.6689 |

| NARM | 0.5103 | 0.5256 | 0.5517 | 0.5885 |

| NPE | 0.5736 | 0.5862 | 0.6160 | 0.6463 |
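The metric columns of the two Top-K tables are unlabeled, so the cutoffs K are not stated here. For reference, the ranking metrics in the Abbreviations (HR, MRR, NDCG) are commonly computed per test case with a single held-out positive item, then averaged over test cases. The sketch assumes that single-positive setting. The function names and sample data are hypothetical.

```python
import math
from typing import List, Sequence


def hit_ratio_at_k(ranked: Sequence[int], target: int, k: int) -> float:
    """1 if the held-out item appears in the top-k list, else 0."""
    return 1.0 if target in ranked[:k] else 0.0


def mrr_at_k(ranked: Sequence[int], target: int, k: int) -> float:
    """Reciprocal of the 1-based rank of the target within the top k (0 if absent)."""
    top = list(ranked[:k])
    return 1.0 / (top.index(target) + 1) if target in top else 0.0


def ndcg_at_k(ranked: Sequence[int], target: int, k: int) -> float:
    """With one relevant item the ideal DCG is 1, so NDCG = 1 / log2(rank + 1)."""
    top = list(ranked[:k])
    return 1.0 / math.log2(top.index(target) + 2) if target in top else 0.0


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


# Three hypothetical test cases: (ranked item IDs, held-out item).
cases = [([5, 3, 9, 1], 5), ([2, 7, 4, 8], 4), ([6, 0, 1, 3], 9)]
for k in (1, 3):
    print(k,
          mean([hit_ratio_at_k(r, t, k) for r, t in cases]),
          mean([mrr_at_k(r, t, k) for r, t in cases]),
          mean([ndcg_at_k(r, t, k) for r, t in cases]))
```

HR ignores position inside the top K. MRR and NDCG both reward placing the target higher, but NDCG discounts logarithmically rather than by 1/rank.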

| Paired Sample | W-Statistic | p-Value |

|---|---|---|

| and | 5,742,352,616.0 | 0.0000 |

| and | 11,161,300,372.0 | 0.0000 |

| and | 6,293,013,366.5 | 0.0000 |

| and | 7,308,931,681.0 | 0.0000 |

| and | 6,979,763,575.0 | 0.0000 |

| and | 5,474,380,013.0 | 0.0000 |

| and | 15,083,317,753.0 | 0.0000 |

| and | 14,264,308,243.0 | 0.0000 |

| and | 19,812,001,285.5 | 0.0000 |
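The significance table reports Wilcoxon signed-rank statistics, but the names of the compared samples are not recoverable. The pure-Python sketch below computes the rank sums of positive and negative differences (W+ and W-) for paired observations. It drops zero differences and gives tied absolute differences their average rank, which also explains the half-integer statistics in the table. It returns min(W+, W-), the two-sided convention used, for example, by SciPy's `scipy.stats.wilcoxon`. Which convention the authors used is an assumption, and the p-value step (a normal approximation for large samples) is omitted.

```python
from typing import List, Tuple


def wilcoxon_signed_rank(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Return (W_plus, W_minus, min(W_plus, W_minus)) for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]      # drop zero differences
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1                        # ranks are 1-based
        for idx in order[i:j + 1]:
            ranks[idx] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, min(w_plus, w_minus)


# Hypothetical paired measurements for two models on the same six test cases.
model_a = [3, 5, 2, 8, 4, 6]
model_b = [1, 6, 2, 5, 2, 3]
print(wilcoxon_signed_rank(model_a, model_b))  # → (14.0, 1.0, 1.0)
```

As a sanity check, W+ + W- always equals n(n + 1) / 2 for the n non-zero differences. Here that is 5 × 6 / 2 = 15.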

| Models | Total Params | GPU Memory |

|---|---|---|

| TLSR | 11,620,449 | 7691 MiB |

| LR | 3,229,804 | 669 MiB |

| DCNv2 | 3,256,780 | 693 MiB |

| DIN | 32,258,483 | 8503 MiB |

| GRU4Rec | 6,333,096 | 2417 MiB |

| SASRec | 6,560,596 | 18,283 MiB |

| STAMP | 6,340,896 | 1443 MiB |

| CORE | 6,560,697 | 18,329 MiB |

| NARM | 6,343,046 | 2413 MiB |

| NPE | 9,520,096 | 1447 MiB |

| Case | User ID | Timestamp | Top-10 Food Recommendation List | Top-10 Merchant Recommendation List |

|---|---|---|---|---|

| 1 | 3 | 1717099526 | (24200, 24238, 24215, 24241, 24243, 24239, 24267, 8745, 24902, 23684) | (1921, 175, 1972, 1874, 209, 927, 241, 1268, 408, 21) |

| 2 | 185 | 1717098093 | (9516, 9166, 581, 21230, 28172, 15431, 18818, 2688, 20533, 6309) | (887, 63, 1697, 1523, 1299, 1528, 207, 1646, 2, 203) |

| 3 | 405 | 1717111357 | (26873, 20625, 30572, 16901, 11286, 170, 22440, 2606, 3391, 2641) | (788, 25, 1785, 231, 253, 234, 457, 195, 111, 692) |

| 4 | 423 | 1717243462 | (2548, 14459, 9198, 8502, 14936, 15237, 9831, 15377, 997, 16846) | (180, 1244, 887, 15, 269, 1287, 622, 97, 1377, 1980) |
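The Timestamp column holds 10-digit integers that look like Unix epoch seconds. This is an assumption, because the unit is not stated. Under that reading, Python's standard `datetime` module can render them as ISO 8601 strings, the format advocated in the Briney reference. The UTC+8 offset is a further assumption that the platform records local time in China Standard Time.

```python
from datetime import datetime, timedelta, timezone

# Assumptions: timestamps are Unix epoch seconds; UTC+8 is the platform's local offset.
LOCAL_TZ = timezone(timedelta(hours=8))

case_timestamps = {1: 1717099526, 2: 1717098093, 3: 1717111357, 4: 1717243462}

for case, ts in case_timestamps.items():
    utc_time = datetime.fromtimestamp(ts, tz=timezone.utc)
    local_time = utc_time.astimezone(LOCAL_TZ)
    print(f"case {case}: {utc_time.isoformat()} (UTC), {local_time.isoformat()} (UTC+8)")
```

Passing an explicit `tz` keeps the conversion independent of the machine's local time zone. Without it, `fromtimestamp` would return a naive local time.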

| Case 4 | Old | New |

|---|---|---|

| Top-10 food recommendation list | (2548, 14459, 9198, 8502, 14936, 15237, 9831, 15377, 997, 16846) | (14459, 15377, 16846, 8502, 15237, 9831, 997, 2548, 9198, 14936) |

| Top-10 merchant recommendation list | (180, 1244, 887, 15, 269, 1287, 622, 97, 1377, 1980) | (1244, 622, 1377, 15, 1287, 887, 97, 180, 269, 1980) |

| Metrics | Old | New |
|---|---|---|
| | 0.8599 | 0.4539 |
| | 0.9226 | 0.9226 |
| | 0.9328 | 0.6486 |
| | 0.9564 | 0.9564 |
| | 0.8690 | 0.3362 |
| | 0.8723 | 0.3798 |
| | 0.8853 | 0.4129 |
| | 0.8930 | 0.5150 |
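In Case 4, both reranked lists are permutations of the originals: the LLM step reorders the ten candidates without adding or dropping any. When an LLM such as DeepSeek-R1 is asked to rerank, its free-text reply has to be checked before use.

The sketch below is hypothetical and is not the paper's procedure. The reply format, the function name `parse_rerank`, and the fall-back-to-original policy are all assumptions. It accepts a reranked list only if the reply is an exact permutation of the candidates, then reports how far each item moved.

```python
import re
from typing import List


def parse_rerank(reply: str, candidates: List[int]) -> List[int]:
    """Extract item IDs from an LLM reply; keep them only if they permute `candidates`."""
    ids = [int(tok) for tok in re.findall(r"\d+", reply)]
    if sorted(ids) == sorted(candidates):
        return ids
    return list(candidates)  # fall back to the original ranking


old_foods = [2548, 14459, 9198, 8502, 14936, 15237, 9831, 15377, 997, 16846]
reply = "(14459, 15377, 16846, 8502, 15237, 9831, 997, 2548, 9198, 14936)"
new_foods = parse_rerank(reply, old_foods)
# Positive values mean the item moved up the list after reranking.
moves = {item: old_foods.index(item) - new_foods.index(item) for item in old_foods}
print(new_foods)
print(moves)
```

The regular expression also captures stray numbers such as the "10" in "Top-10". The permutation check is what stops such noise from corrupting the list.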

| Models | Accuracy | Precision | Recall | F1 | Ap |

|---|---|---|---|---|---|

| TLSR | 0.9776 | 0.8221 | 0.6749 | 0.7412 | 0.7951 |

| TLSR-i | 0.9515 | 0.4690 | 0.1432 | 0.2195 | 0.2153 |

| TLSR-t | 0.9685 | 0.8744 | 0.3954 | 0.5446 | 0.6460 |

| TLSR-k | 0.9649 | 0.7468 | 0.3993 | 0.5204 | 0.5374 |

| TLSR-c | 0.9541 | 0.6947 | 0.0631 | 0.1157 | 0.2519 |

| TLSR-g | 0.9682 | 0.7660 | 0.4786 | 0.5891 | 0.6386 |

| TLSR-r | 0.9530 | 0.6514 | 0.0270 | 0.0519 | 0.2080 |

| TLSR-u | 0.9589 | 0.5922 | 0.4415 | 0.5059 | 0.4732 |

| Models | ||||

|---|---|---|---|---|

| TLSR | 0.8578 | 0.9290 | 0.9398 | 0.9700 |

| TLSR-i | 0.4767 | 0.6313 | 0.5174 | 0.6628 |

| TLSR-t | 0.7688 | 0.8738 | 0.8609 | 0.9243 |

| TLSR-k | 0.6340 | 0.7685 | 0.7295 | 0.8354 |

| TLSR-c | 0.4514 | 0.6348 | 0.5460 | 0.7214 |

| TLSR-g | 0.7798 | 0.8766 | 0.8706 | 0.9286 |

| TLSR-r | 0.3982 | 0.5838 | 0.4817 | 0.6583 |

| TLSR-u | 0.6467 | 0.7672 | 0.7313 | 0.8312 |

| Models | ||||

|---|---|---|---|---|

| TLSR | 0.8730 | 0.8771 | 0.8898 | 0.8997 |

| TLSR-i | 0.3460 | 0.3654 | 0.3885 | 0.4355 |

| TLSR-t | 0.7350 | 0.7436 | 0.7666 | 0.7872 |

| TLSR-k | 0.5875 | 0.6014 | 0.6228 | 0.6568 |

| TLSR-c | 0.3493 | 0.3729 | 0.3979 | 0.4549 |

| TLSR-g | 0.7518 | 0.7596 | 0.7816 | 0.8004 |

| TLSR-r | 0.2924 | 0.3160 | 0.3392 | 0.3963 |

| TLSR-u | 0.5961 | 0.6094 | 0.6298 | 0.6621 |

| S | L | H | ||||

|---|---|---|---|---|---|---|

| 1 | 2 | 4 | 0.6340 | 0.7685 | 0.7295 | 0.8354 |

| 3 | 1 | 4 | 0.8205 | 0.9055 | 0.9179 | 0.9597 |

| 3 | 2 | 2 | 0.7858 | 0.8833 | 0.8754 | 0.9344 |

| 3 | 2 | 4 | 0.8578 | 0.9290 | 0.9398 | 0.9700 |

| 3 | 2 | 8 | 0.8060 | 0.8917 | 0.9022 | 0.9455 |

| 3 | 3 | 4 | 0.7296 | 0.8456 | 0.8314 | 0.9046 |

| 5 | 2 | 4 | 0.8467 | 0.9207 | 0.9306 | 0.9628 |

| S | L | H | ||||

|---|---|---|---|---|---|---|

| 1 | 2 | 4 | 0.5875 | 0.6014 | 0.6228 | 0.6568 |

| 3 | 1 | 4 | 0.8269 | 0.8325 | 0.8498 | 0.8633 |

| 3 | 2 | 2 | 0.7577 | 0.7657 | 0.7872 | 0.8064 |

| 3 | 2 | 4 | 0.8730 | 0.8771 | 0.8898 | 0.8997 |

| 3 | 2 | 8 | 0.8027 | 0.8086 | 0.8276 | 0.8418 |

| 3 | 3 | 4 | 0.6900 | 0.7000 | 0.7254 | 0.7492 |

| 5 | 2 | 4 | 0.8506 | 0.8550 | 0.8708 | 0.8813 |
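The sensitivity tables vary S, L and H one at a time around (3, 2, 4). Picking the configuration from such a grid is an argmax over rows.

The sketch uses the first metric column of the second table. Its header is not recoverable, so the dictionary is named `metric_1` as a placeholder. It then prints the one-at-a-time slices around the best point.

```python
# (S, L, H) -> first metric column of the second sensitivity table.
metric_1 = {
    (1, 2, 4): 0.5875, (3, 1, 4): 0.8269, (3, 2, 2): 0.7577, (3, 2, 4): 0.8730,
    (3, 2, 8): 0.8027, (3, 3, 4): 0.6900, (5, 2, 4): 0.8506,
}
best = max(metric_1, key=metric_1.get)
print("best (S, L, H):", best, "score:", metric_1[best])

# One-at-a-time view: vary a single hyperparameter while the others stay at `best`.
for axis, name in enumerate("SLH"):
    row = {cfg[axis]: score for cfg, score in metric_1.items()
           if all(cfg[i] == best[i] for i in range(3) if i != axis)}
    print(name, dict(sorted(row.items())))
```

Each slice shows performance peaking at the middle setting, consistent with the choice of (3, 2, 4) as the default configuration.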

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, W.; Hu, D.; Jiang, Z.; Zhang, P.; Shi, Y. Context-Driven Recommendation via Heterogeneous Temporal Modeling and Large Language Model in the Takeout System. Systems 2025, 13, 682. https://doi.org/10.3390/systems13080682
