Abstract

Handling preliminary information appropriately is a critical challenge for many aspects of systems engineering design. The topic is gaining renewed visibility due to the expanding possibilities to apply AI to preliminary information to support systems design, engineering, and management. However, there are few empirical studies of the practicalities of handling immature information and there is a lack of concretely developed, empirically evaluated, and practical approaches for clarifying information maturity levels, needed to ensure such information is appropriately used. This article addresses the gap, contributing new insight into how immature information is handled in industrial practice that is derived from interviews with 15 engineering and product development professionals from 5 companies. Thematic analysis reveals how practitioners work with preliminary information and where they require support. A solution was developed to address the empirically identified needs. In 5 follow-up interviews, practitioner feedback on this concept demonstrator was supportive. The main result of this research, in addition to the insights into practice, is a practical maturity grid-based assessment system that can help the providers of preliminary information self-assess and communicate information maturity levels. The assessments may be stored alongside the information and may be aggregated and visualised in CAD, augmented reality, or a range of charts to make information maturity visible and hence allow it to be more deliberately considered and managed. Implications of this research include that managers should promote greater awareness and discussion of preliminary information’s maturity and should introduce structured processes to track and manage the maturity of key information as it is progressively developed. The detailed maturity grids presented in this article may provide a foundation for such processes and can be adapted for particular situations.

1. Introduction

“If only I had known how preliminary that data was… how good the data was […] Sometimes you look at it and you go: ‘It will probably be preliminary, and we can shift some of this stuff around.’ Then, you find out that it’s not.” (Senior Development Engineer)

Practitioners in systems engineering design projects frequently provide, receive, and work with preliminary information. They must decide when such information is ready to share, how much effort to dedicate to tasks given the maturity levels of their preliminary inputs, and how much allowance to give for the possibility of preliminary information changing. At present there is little practical support for such decisions and they are usually made in ad hoc ways. As suggested by the remark above, which was made by a participant in the interview study described in this article, significant problems can be caused if preliminary information is not handled effectively—usually manifesting as wasteful design rework.

The management of preliminary information in large-scale engineering and product development was researched intensively in the 1990s and early 2000s. To illustrate the level of interest, the seminal publication of Krishnan et al. [1] in 1997 had received more than 900 citations by the time of writing this article (Google Scholar, May 2025). Many insights have been generated, but the topic has since become less prominent in research journals, while practical challenges remain. Another look at this topic is timely today because the ongoing digitalisation of engineering work in recent decades has increased the availability and flow of preliminary information in projects [2]. While the possibility to learn from archived design information using AI is being very actively researched [3,4], it requires the quality and maturity of the input information to be well appreciated if results are to be sensible.

This article addresses the topic of preliminary information in engineering design from a practical and empirical perspective, identifying and addressing gaps in the prior literature. We report findings from an interview study and thematic analysis across five companies and contribute a concept demonstrator showing how some newly revealed needs can be addressed. In an initial feedback study, practitioners told us that making preliminary information’s maturity visible using the approach could help appreciate assumptions and knowledge gaps, assist communication, and inform better decisions. This is the first article to address these issues from an empirical perspective and to empirically evaluate a developed solution. Findings imply that managers should deploy simple processes and tools to raise awareness of key information’s maturity during design and development projects; we discuss opportunities for further work to explore how such awareness can support the management of preliminary information flow and use.

2. Research Framework and Article Overview

This research project was structured using the design research methodology (DRM) framework [5]. DRM emphasises the development of support for engineering design in a way that generates both scientific contribution and practical value, by ensuring traceability between research stages and between needs, research decisions, and result evaluation. DRM prescribes four research stages; this article is structured accordingly, as follows:

- Research Clarification—is required in DRM to formulate the research objective and preliminary success criteria. In this research, this stage was carried out using a literature study, which is discussed in Section 3.

- Descriptive Study I—is used in DRM to explore the context into which support is to be delivered and further clarify the problem factors addressed. This research addressed the stage through an empirical study to appreciate the real-world problems of dealing with immature information. The study involved the thematic analysis of 13 interviews with 15 practitioners across five companies involved in the engineering design of products and systems. It is detailed in Section 4, which also provides full detail of the research method used for this DRM phase.

- Prescriptive Study—in DRM, this stage focuses on developing support for practitioners to address the identified problems. In this research, a concept demonstrator was developed for assessing information’s maturity and making it visible, with the aim of supporting the deliberate management of preliminary information flows and decisions based on them. This is described in Section 5.

- Descriptive Study II—involves the empirical evaluation of the developed support to establish whether success criteria are met. In this research, follow-up interviews were done with five of the original study participants to gather feedback on the developed support and assess it against the success criteria established earlier. The research method and results for this DRM phase are presented in Section 6, followed by a discussion of the implications, limitations, and future work in Section 7 and Section 8.

3. Research Clarification: Literature Review

To recap, the aim of the research clarification stage in DRM is to “identify and refine a research problem that is both academically and practically worthwhile and realistic” [5] (p. 43). This was done by a literature study; findings are discussed in this section. First, Section 3.1 reviews how preliminary information is used in design and development and discusses some of the challenges involved. Second, Section 3.2 reviews the concept of information maturity, which is a key information attribute describing preliminary information’s degree of development. Third, Section 3.3 reviews prior approaches to enhancing the design and development process by improving awareness of preliminary information’s maturity. Section 3.4 then highlights the limited empirical work in this area. The specific gap addressed by the rest of this article is pinpointed in Section 3.5.

3.1. Preliminary Information in Design and Development

Preliminary information is ubiquitous in design and development projects [6], and particularly in concurrent, large-scale systems engineering design. It exists across many process phases, but the need to work with such information is greatest in the early, less-structured phases of design [7]. Here, the knowledge-intensive, one-off nature of design and development work means that process flexibility is needed so the skilled professionals can share their work-in-progress with colleagues and adapt their designs to deal with issues as they arise. For example, a draft report may be shared between colleagues before it is considered ready, perhaps with incomplete sections or draft content—the purposes for sharing it in preliminary form may include collecting input, gaining feedback, socialising the content before formal release, and allowing colleagues to consider the content as early as possible. Computer Aided Design (CAD) models of designed parts are usually shared and used to begin downstream work before they are fully finalised; for example, finite element analysis (FEA) will often be possible and useful before all details are added to a part, while manufacturing system design can usually start before all design details are known—the outline shape and size of a part is often sufficient for planning manufacturing machines in advance of the precise geometry and tool paths being finalised.

The value of sharing preliminary information flexibly may be set against the need to control its flow to avoid chaos, particularly in large-scale projects. In a stage-gate process framework [8], understanding the uncertainty around preliminary information presented for gate review may help make effective go/no-go decisions, by helping practitioners identify and challenge the fitness of the information [9] (p. 37). In a set-based framework, appreciating the uncertainties inherent in preliminary information may help to clarify the ranges in which key parameters may eventually fall [10], and hence clarify the design ranges which interfacing solutions should accept. In the structured setting of large-scale concurrent engineering, many teams must be coordinated to work in parallel, which creates significant interdependency [11]. The usual practice is to involve a range of specialists in key decisions as early as possible with the aim to improve design quality and reduce late rework, which further increases interdependency [12]. Interdependency and the dynamic nature of engineering work mean that, in many cases, not all required information is available when a particular task needs to be done [13]. The solution is that some people need to accept immature inputs or make assumptions to get started. There is consequently often pressure to release information before it is final to prevent starvation of downstream tasks and increase the degree of task overlapping, thus accelerating project completion. The likely cost of sharing preliminary information is increased effort to deal with design rework when some of that information needs to be updated [1].

Despite the obvious ubiquity of preliminary information in project work in both flexible and structured contexts, and despite the research attention over the years, commonly used engineering tools, like most Computer Aided Design (CAD) and Computer Aided Engineering (CAE) software and general office and management tools, such as productivity and planning software, do not allow for a structured expression of information maturity [6]. Product Lifecycle Management (PLM) tools like Siemens Teamcenter do allow the information’s lifecycle status to be indicated [14]—for example, a document might be flagged as in-work, under review, approved, or released—but this is not fine-grained and the tools do not assist with actually assessing the status. Another challenge is that information shared electronically lacks contextual cues, such as body language and cues gained from informal discussions, which are crucial for fully appreciating context [15]. In practice, the contextual complexities of the preliminary status of information are, therefore, often communicated through informal conversations or during meetings [9]; without documentation, there is much scope for misunderstanding the status of preliminary information received electronically from coworkers. This may result in overconfidence and potentially rework when the preliminary information is updated, or under-confidence, which leads to unnecessarily delayed commitments and potentially suboptimal designs.

3.2. Maturity as a Key Status Attribute of Preliminary Engineering Information

Handling the flow of information in projects may be enhanced by an appreciation of its attributes, such as its format, quality, and the assumptions under which it was generated. Having established the important role of preliminary information in the systems engineering design process and some of the challenges in handling such information, this section expands on the specific attribute of preliminary information of interest in this article—its maturity.

In general, the concept of reaching maturity relates to “having attained a final or desired state” [16]. The concept is widely used in the context of maturity models, such as capability maturity models [17] and technology readiness levels [18], which have been developed in many domains to provide frameworks for assessing and improving systems, capabilities, or processes towards a perceived best-practice state, referred to as a mature state [19]. Over 150 such maturity models had been developed by 2005 [20] and likely many more since. However, the concept of information maturity has attracted far less research attention than the maturity of systems, capabilities, or processes [6].

The concept of information maturity has also attracted less attention than other information context attributes such as information quality, fidelity, and uncertainty [6]. Information maturity has been expressed using an array of terms such as the degree of consensus [7], stability [21], completeness [22], and development [9,23] of the preliminary information. Many publications observe that the information’s maturity depends on the context and information consumers’ needs, including their ability to understand and interpret the information (see, for example, [24,25,26,27]). Information maturity is therefore a socio-technical attribute, dependent on how people perceive, share, and want to use that information. Thus, information that is mature enough for one purpose, consumer, and context may be considered immature in another situation [26]. Considering these issues, Brinkmann and Wynn [6] developed the following definition of information maturity, which is also adopted in this article:

“The maturity of preliminary information is its degree of development in relation to expectations of sufficiency for specific contexts and consumers” [6] (p. 2)

Brinkmann and Wynn [6] show that many constituent aspects of information’s maturity—including its completeness, precision, stability, fidelity, and so on—are closely related to other information context attributes such as information quality, uncertainty, and knowledge used when generating and interpreting the information. However, unlike these other information attributes, maturity applies specifically to preliminary information in the context of its development process. Appreciating preliminary information’s maturity is essential to consider how it can be most appropriately shared and used during systems engineering design.
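The context dependence in the definition above can be illustrated with a minimal sketch. It assumes, purely for illustration, that degree of development and each consumer's expectation of sufficiency can both be placed on a single ordinal 1 to 4 scale; the scale, the class `Expectation`, the function `is_mature_enough`, and the example consumers are hypothetical and are not taken from [6].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Expectation:
    """What one consumer needs from the information in one context (hypothetical)."""
    consumer: str
    required_level: int  # assumed 1-4 scale: minimum development level deemed sufficient


def is_mature_enough(developed_level: int, expectation: Expectation) -> bool:
    """Judge maturity relative to a consumer's expectation, not in absolute terms."""
    return developed_level >= expectation.required_level


# One preliminary CAD model at an assumed development level of 2 out of 4.
cad_model_level = 2
fea = Expectation(consumer="FEA analyst", required_level=2)
tooling = Expectation(consumer="tool-path planner", required_level=4)

print(is_mature_enough(cad_model_level, fea))      # True
print(is_mature_enough(cad_model_level, tooling))  # False
```

The same artefact is sufficient for one consumer and insufficient for another, which is the sense in which maturity is treated here as a socio-technical rather than an intrinsic attribute.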

3.3. Practical Approaches for Handling Immature Engineering Information

A range of problems in systems design and development could be alleviated by improving the awareness of information maturity. Support approaches and concepts have been proposed to achieve this. Some are generic, intended to apply to any information, while others are specific, focusing on particular information types and contexts.

In terms of generic approaches, O’Brien and Smith [22] may be among the earliest to propose assessing and managing information maturity in systems engineering design, intending to “speed the release of designs with sufficient maturity and prevent the release of designs that have not reached the required maturity” (p. 85). They propose assessing a design’s frequency of change by monitoring CAD file updates and also asking designers to manually assess the magnitude of each update on a 1–100 scale. Clarkson and Hamilton [28] write that the maturity of preliminary information determines when specific engineering design and analysis tasks are justified. They develop a support approach in which designers assess their confidence in design parameter values on a 4-point scale so that suitable tasks can be identified at each design step. Rouibah and Caskey [7] focus on early design stages in concurrent product development, pointing out that the dynamic, iterative and incremental nature of such processes means they should be organised around the evolving design data status rather than prescribed task sequences. They propose that an engineering design process should be organised around controlling key parameters’ hardness grades, which describe parameter maturity, reflecting its perceived quality and reliability and directly reflecting process stages and approvals obtained. Blanco et al. [26] highlight that information maturity assessment could support engineering design in the following two ways: (1) by optimising project schedules, such that task timings and overlapping degrees are appropriate given their input information’s maturity levels, and (2) by supporting informal communication processes, specifically, allowing earlier information sharing by establishing when preliminary information is mature enough to share and use among particular groups of colleagues. Johansson et al. [9,29] write that a common understanding of information maturity in aerospace engineering can facilitate discussions around important decisions. They develop an information maturity assessment grid comprising three maturity criteria as follows: inputs used to generate information, methods used to generate it, and the experience of the person generating the information. Drémont et al. [30] propose assessing information’s maturity in terms of the following: scope for variation; performance level versus downstream task requirements; validity duration; and sensitivity of the downstream task to potential future changes in the information. They envisage these aspects being estimated and combined with a simple formula, with the result used to monitor a design’s evolution across iterations, as well as prioritise design issues needing attention. Zou et al. [31] suggest that maturity assessment could help store, retrieve, and reuse preliminary design information while providing a proper appreciation of its context; they propose capturing key information’s maturity in IBIS-style decision rationale charts after the approach of Bracewell et al. [32]. They also suggest a Bayesian approach could be used to integrate different aspects of maturity into a single indicator, but they do not elaborate. More recently, Sinnwell et al. [33] propose that capturing information maturity can ease communication between design and manufacturing planning, and they propose doing this by assessing maturity against nine criteria. This is the most granular general assessment approach we identified.
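As one illustration of the generic approaches above, the change-monitoring idea attributed to O’Brien and Smith [22] might be sketched as follows. This is a minimal sketch under stated assumptions, not their method: the update log structure (`Update`), the function `change_summary`, the four-week window, and the example data are all hypothetical, and the text above does not say how the two indicators would be combined or thresholded.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Update:
    """One logged CAD file update (hypothetical record structure)."""
    day: date
    magnitude: int  # designer's manual rating of the update on a 1-100 scale


def change_summary(updates, as_of, window_days=28):
    """Return (updates per week, mean magnitude) over the recent window.

    The window length is an assumption made for this sketch.
    """
    start = as_of - timedelta(days=window_days)
    recent = [u for u in updates if start < u.day <= as_of]
    per_week = len(recent) / (window_days / 7)
    mean_magnitude = (
        sum(u.magnitude for u in recent) / len(recent) if recent else 0.0
    )
    return per_week, mean_magnitude


# Illustrative data only: an early large change, then smaller recent ones.
log = [
    Update(date(2025, 5, 1), 80),
    Update(date(2025, 5, 20), 30),
    Update(date(2025, 5, 27), 10),
]
per_week, mean_magnitude = change_summary(log, as_of=date(2025, 5, 30))
print(per_week, mean_magnitude)  # 0.5 20.0
```

A falling update frequency together with a falling mean magnitude would suggest that the design is stabilising, although how such indicators should be interpreted for a release decision is left open here.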

Other authors propose specific approaches for handling immature information that are tailored to particular information types and contexts. For instance, Kreimeyer et al. [34] develop an approach for optimising preliminary information transfer from design to simulation departments in an automotive manufacturer, assessing whether changes to preliminary information warrant updates to downstream tasks given the specific design features changed and considering whether the downstream task has accounted for those specific features yet. Similarly, identifying which specific parameters are shared between two disciplines helps determine which aspects of preliminary shared information should be frozen first [35]. Abualdenien and Borrmann [36] propose a method for dealing with incomplete information in building design, while building information modelling (BIM)’s established concept of levels of development provides specific guidance for assessing the maturity of different types of building elements, with standards provided by BIMForum [37]. Ebel et al. [38] show how machine learning may be used to track a CAD file’s maturity as an input for assessing project progress. Overall, the approaches discussed in this paragraph are concrete due to their focus on specific information types, but this also means they cannot offer generally applicable support for managing information maturity.

3.4. Empirical Perspectives

While the aforementioned approaches typically draw insights from knowledge of industry challenges, very few researchers have applied structured empirical methodology to the topic of preliminary information and its management. The most significant empirical study is that of Terwiesch et al. [13], who conduct an in-depth case study involving about 100 interviews in an automotive manufacturer, resulting in proposed strategies for coordinating dependent tasks depending on the precision and stability of preliminary information exchanged between them. Other examples include the work of Grebici et al. [39], who draw on case study experience to discuss how engineers need to understand uncertainty levels to develop confidence in information from peers, considering commitment levels and downstream task sensitivity, while Kreimeyer et al. [34] draw on experience in the automotive sector to examine how simulation models’ maturities evolve during product development. The maturity assessment approach of Johansson et al. [9] is based on industry workshops at Volvo Aero. In critique, the empirical aspects of these publications are presented mainly as reflections on observations and most do not detail the use of structured empirical methodologies to analyse collected data and reach the presented conclusions. While these authors’ contributions are valid and important, a study applying structured empirical methodology would add significant value by helping to reveal practitioner perspectives and validate the challenges faced when handling preliminary information.

3.5. Research Gap: Lack of Concretely Developed, Empirically Grounded, and Generally Applicable Solutions for Clarifying Information Maturity

Overall, the literature study reveals a consensus that preliminary information is essential in systems engineering design and that clarifying preliminary information’s maturity can provide many benefits for managing the process. Table 1 summarises these benefits and thereby establishes the practical importance of this research. However, detailed analysis revealed a significant gap: there is still no concretely developed and empirically evaluated approach to provide the required clarity. This research gap is made precise in Table 2, which compares the few generally applicable multidimensional approaches for assessing information maturity that exist in the prior literature. The contributions of Blanco et al. [26] and particularly Johansson et al. [9] represent the state of the art in this area, while the other listed publications are less comprehensive.

Table 1. Value of this research: the benefits of clarifying preliminary information’s maturity.

Table 2. Pinpointing the research gap: a lack of empirically grounded, comprehensively developed, and empirically evaluated solutions for clarifying preliminary information’s maturity. Contributions are sequenced chronologically, and the research gap is summarised in the final row.

Table 2 highlights that while prior publications contribute significantly to establishing needs, theory, and concepts, they do not elaborate or justify key aspects of solution concepts presented. We could not find any attempt at structured empirical evaluation of the maturity assessment concept in any publication. In other words, there are still no concrete solutions for clarifying information’s maturity and the practical viability of the whole concept therefore remains unproven.

To address the identified gap and summarise the results of the Research Clarification stage of this research, the main research objective was clarified as follows:

Research, develop, and evaluate concrete practical support for clarifying preliminary information’s maturity levels.

The other main output of the Research Clarification phase stipulated by DRM is a definition of success criteria that are achievable and measurable within the project’s scope. In this research the success criterion was defined as follows:

Practitioners should find the approach useful in improving the clarity of information maturity levels.

4. Descriptive Study I: Practitioner Interviews and Thematic Analysis

In DRM, the Descriptive Study I stage stipulates empirical research to better appreciate the practical situation and relevance of the research, as well as to pinpoint specific problems to be addressed by a support approach [5] (p. 76). In this research, with reference to the main research objective stated above, the specific objective for the phase was to explore the reality of handling immature information in design and development. A qualitative empirical method was deemed appropriate because the objective is exploratory. We also sought generalisable findings, so an interview study involving multiple companies was selected. Ultimately, the study provided practical orientation and a starting point for addressing the main research objective stated in Section 3.5. It also confirmed the value of the research by underlining the problems faced by practitioners handling preliminary information.

Companies in New Zealand were approached to recruit study participants. The scope was broad, including any company involved in systems design or engineering design. Preference was given to companies offering interviews with multiple employees, because this would help triangulate findings. The following five companies participated:

- Company C1: A well-established producer of large-scale agricultural sorting systems, including custom machines and modular solutions. Three of the approximately three hundred employees on site were interviewed.

- Company C2: A startup producing support and motion equipment for film-makers and photographers. Two of the thirteen employees participated.

- Company C3: A manufacturer of water jet propulsion systems. Five of the approximately four hundred employees participated.

- Company C4: A long-standing company in tapware and valving equipment, designed and partially manufactured in New Zealand. Four employees participated; the total number of employees is undisclosed.

- Company C5: A developer and manufacturer of home appliances and the largest company in the study. One employee was interviewed.

Employees from each company were approached to participate based on the suggestions of the initial contact in each case. We sought a mix of engineering and management personnel. Interviews were conducted one-on-one by the first author of this paper, except for two cases in which participants requested to participate in pairs. A linked interview technique [42] was used, meaning the interviewer sought to follow up on insights gained from one interview with the next in the same company. Table 3 summarises the 13 interviews and 15 participants.

Table 3. Participants in the interview study.

The interviews were semi-structured, in every case following the schedule below, with the conversation being allowed to evolve naturally following each question as recommended by Adams [43]:

- Introductions were provided to set the interviewee at ease, and a preprepared script was used to explain the interview’s purpose.

- The concept of information maturity was outlined using a preprepared script, giving the example that immature design information might be ambiguous (for example, when a concept can be interpreted in different ways) or imprecise (for example, when a numeric parameter is given as a range). The interviewee was asked for their opinion on this concept.

- The interviewee was asked whether they encountered other aspects of information maturity in their work, and how the maturity of preliminary information was communicated during the design process.

- The interviewee was asked to comment on how tasks in the design process were affected by immature input information, including how information maturity influenced the timing and effort dedicated to tasks.

- The interviewee was asked whether problems could be avoided if design information maturity was more deliberately considered during the design and development process.

All interviews were audio-recorded. Thematic analysis was subsequently applied using the approach of Braun and Clarke [44].

Specifically, interviews were first transcribed manually to deepen familiarity. In total, 18 h and 32 min of recordings were transcribed verbatim by the interviewer. The transcripts were then analysed to extract verbalisations relevant to the research objective stated at the start of this section. Initially, 601 such remarks were identified, which were narrowed to 301 of the most relevant. To further assist in familiarisation with the large volume of qualitative data, the remarks were then coded using 10 predefined categories and examined further. In the third step, themes and sub-themes related to the research question were identified inductively through several iterations of grouping and regrouping the remarks, which was done by both authors. To be included and considered generalisable, a theme needed to be mentioned by multiple interviewees and, in all but one case, across multiple companies. The resulting thematic structure, which summarises the findings, comprises three themes and ten sub-themes as illustrated in Figure 1. Towards internal validity, the second author reviewed the original transcripts and confirmed that the thematic summary accurately reflects what was said. Some adjustments were made. Finally, towards external validity, the sub-themes were set in context of prior research findings as explained in the next subsections. Some of the sub-themes are strongly supported in prior research, while others are less widely discussed. Combining the themes revealed specific needs for a practitioner support approach.
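The inclusion rule described above (multiple interviewees, and normally multiple companies) can be expressed as a simple filter over coded remarks. The following is a minimal sketch only: the function `generalisable_themes`, the theme labels, and the example remarks are hypothetical and are not the study data, and the sketch assumes participant identifiers of the form "C3E4" (company, then employee) as used for the quotes in this article. The analysis reported here was carried out manually, not with code.

```python
from collections import defaultdict


def generalisable_themes(coded_remarks, min_interviewees=2, min_companies=2):
    """Return themes mentioned by enough interviewees across enough companies.

    coded_remarks: iterable of (participant_id, theme) pairs,
    e.g. ("C3E4", "theme-b"). Identifiers are assumed to look like "C3E4".
    """
    interviewees_by_theme = defaultdict(set)
    for participant, theme in coded_remarks:
        interviewees_by_theme[theme].add(participant)

    kept = []
    for theme, people in interviewees_by_theme.items():
        companies = {p.split("E")[0] for p in people}  # "C3E4" -> "C3"
        if len(people) >= min_interviewees and len(companies) >= min_companies:
            kept.append(theme)
    return sorted(kept)


# Illustrative remarks only (not the study data).
remarks = [
    ("C1E3", "theme-a"), ("C3E3", "theme-a"),  # two people, two companies
    ("C3E4", "theme-b"), ("C3E1", "theme-b"),  # two people, one company
    ("C2E1", "theme-c"),                       # one person only
]
print(generalisable_themes(remarks))                   # ['theme-a']
print(generalisable_themes(remarks, min_companies=1))  # ['theme-a', 'theme-b']
```

The second call relaxes the company requirement, which corresponds to the single exception noted above, where a theme was retained although it was raised within one company only.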

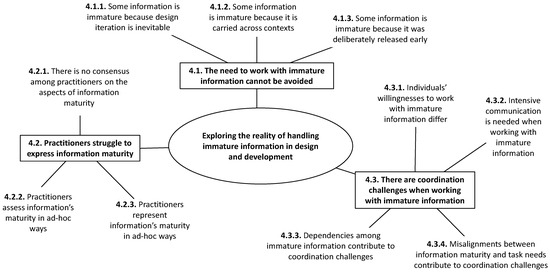

Figure 1. Themes resulting from the interview analysis provide insight into the reality of handling immature information. Numbers indicate the article sections in which themes are discussed.

The developed themes are discussed next, prior to discussing the revealed needs. As is usual for a thematic analysis, direct quotes from the interviews are included to support our interpretations.

4.1. The Need to Work with Immature Information Cannot Be Avoided

The first theme is that the need to work with immature design information is unavoidable. Participants explained that preliminary information exists in their work contexts for a range of reasons, all of which result from the need to iterate, make design changes, and work concurrently. The need to work with immature information is therefore not merely a symptom of a poorly organised process or of another problem; it is a fundamental feature of design and development practice.

4.1.1. Some Information Is Immature Because Design Iteration Is Inevitable

The iterative nature of design and development means that changes to some information are inevitable and expected [45]. Designers may have no choice but to accept changeable information and begin work, recognising that adjustments will be needed later [46]. For example,

“I get told what the parameters are, but the parameters […] are subject to change, sometimes. Like the [part’s] height. There are a few—kind of—iterations of how high that needed to be…” (C3E3)

Companies commonly try to avoid such situations by freezing selected information so that it cannot be changed in the future [47]. However, immature information cannot be reliably frozen [48]. Therefore, information updates due to changes and iterations remain unavoidable, a fact strongly emphasised by one study participant, as follows:

“Modifications happen all the time. The design freeze is still a dream. It’s not a clear design freeze as for my experience!”(C1E3)

4.1.2. Some Information Is Immature Because It Is Carried Across Contexts

Engineering design rarely starts from a clean sheet [49]; in most situations, a new project builds on a prior product generation [50] or a platform [51]. Such design reuse is highly desirable to reduce the cost and time to produce each new product and to benefit from proven design assets [52]. However, one of the challenges entailed is the need to adapt and integrate the assets. In two separate interviews, two colleagues at company C3 explained that carry-over information is accordingly a key driver of immature information early in the design process, as follows:

“We kind of knew what the [product] looked like—we have done smaller [versions of these products] before—we spent a bunch of time researching as to what we wanted to do, but basically I said: ‘Right, I am going to make this analysis model’ and I just used past [product] geometries as a starting point.”(C3E4)

“If I am working on the hydraulics, I don’t know what the [product] looks like, yet. However, I will try to make some rules about, looking at some old product we have got, so here is some rules about how we want to do that. I guess at that stage you don’t have all the information.”(C3E1)

4.1.3. Some Information Is Immature Because It Was Deliberately Released Early

Many researchers have described how preliminary engineering information can be released before it is finalised to deliberately increase overlapping design phases [1,53,54]. Our study also confirmed this practice; several participants explained that shared information may be immature due to deliberate release prior to finalisation, because it is useful even in its preliminary state, as follows:

“Rubbish information now might actually be worth more than better information in the future […] Knowledge now actually has a value. Even if it’s rough.”(C1E1)

[Early information release is] “a matter of convincing the engineer, saying: ‘Your final solution is not the problem right now. We just need to know the minimum characteristics…’”(C1E2)

“Once we know the outside dimensions from a casting perspective, we might not necessarily know all the machine details, so we are quite often being working on making some assumptions about: ‘OK, this is the overall size’—and then as the design evolves, the detail comes.”(C3E2)

4.2. Practitioners Struggle to Express Information Maturity

The second theme is that practitioners struggle to articulate the concept of information maturity, despite all participants confirming that they work with preliminary information during their everyday tasks. Participants also did not report systematically assessing information’s maturity.

4.2.1. There Is No Consensus Among Practitioners on the Aspects of Information Maturity

The concept of information maturity has no clear consensus definition in the literature, as recently shown by the review study of Brinkmann and Wynn [6]. This lack of researcher consensus is reflected in mixed practitioner appreciations of the concept; in our study, no interviewee could articulate more than three different aspects of information maturity when asked to describe the concept. In many cases, the language they used while discussing the concept suggested the described aspects were not conceptually distinct. To illustrate, some participants’ attempts to articulate the maturity concept are now described.

One interviewee described mature information as being detailed, comprehensive, and clear as follows:

“The more detailed, the more comprehensive, the more clear that information is, the easier it is to make those decisions.”(C4E3)

Another suggested that increasing the level of detail can reduce the ambiguity in preliminary information as follows:

“If you have got a lot of detail to start with, you can probably take out a lot of the ambiguity.”(C4E1)

C4E2 suggested that maturity relates to the presence of essential elements of the information as follows:

“These are the important bits. These are the like-to-haves, and these are the we-don’t-really-care abouts.”(C4E2)

Credibility of preliminary information’s sources (or one’s ability to verify information if the credibility is low) was also seen as an important contributor to information maturity as follows:

“There are some things that I might say: ‘Well, OK, I hear what you are saying but I need to do it myself.’ To revisit and ensure that the information is correct. Furthermore, similarly, there is information from sources where you just go: ‘OK, verbatim!’ ”(C4E1)

Finally, another interviewee described information’s maturity in terms of its reliability (his word), which he decomposed into the following four levels:

“It starts at guess, then working value, confident and final.”(C3E4)

4.2.2. Practitioners Assess Information’s Maturity in Ad-Hoc Ways

The second sub-theme is that practitioners assess specific information’s maturity when using that information, but the assessments are done in ad hoc ways. The study participants did not believe there was a need for more systematic approaches to information maturity assessment. For example,

“We have a fairly small office and so we know what everyone’s working on. So if they have only been working on it for a couple of days, you go: ‘Ha, yeah…’ If you know they’ve been working on it for three, four, five weeks, you go: ‘Yeah right, okay, they’ve done a few iterations. They’ve been around where they think it’s going to be. I am reasonably confident that they are not just going to change their mind…’”(C4E2)

“You would just talk to people, it’s easy. ‘You are working on this? Let us get three of us in a room—hash it out!’ I don’t think that there would be any need for a kind of CAD system or PLM system to indicate what data was preliminary because the design was moving so quickly and so fluidly…”(C3E4)

One of their colleagues, in a different interview, suggested that accurate assessments of information maturity might not be particularly important, as follows:

“We know that we are working with preliminary data at times, but we don’t necessarily have to get into the absolute detail of it. However, as long as we are directionally correct…”(C3E2)

Two interviewees observed that assessing information’s maturity is difficult due to not appreciating the endpoint or target state of full development. For example,

“Your perception of what is good is preliminary. There is no way you could ask someone in the 1950s to tell you what quality of TV they want—because they had no idea.”(C2E1)

4.2.3. Practitioners Represent Information’s Maturity in Ad-Hoc Ways

Thirdly, none of the five companies systematically represent and communicate information maturity—except for the concept of formal release status, which is provided in PLM solutions [14] but is not highly granular. To represent maturity in more granular ways, a range of ad hoc approaches were used by the study participants. One was to indicate immature design properties in an informal way in CAD models as follows:

“We make our CAD bright pink, if it has not applied any material to it yet […] we want to have accurate mass, so it’s bright pink—it stands out!”(C3E1)

Another reported approach was to deliberately avoid representing immature information at all, to ensure that only sufficiently mature aspects of a design are shared:

“We almost deliberately don’t put too many dimensions on the drawing apart from the overall size, because actually, you don’t want anyone to be able to make it actually from the drawing […] providing that initial information, but being a bit vague, too.”(C5E1)

Other participants explained that they would share preliminary information but without representing its maturity explicitly, simply presenting the immature information as though it were final and relying on contextual clues to remember or appreciate its status as follows:

“I have given it a best guess, but there is nowhere where I write: ‘This is a best guess.’ I have to keep it in my head, actually, or write it down elsewhere.”(C4E4)

“The preliminary stuff is usually really preliminary, so it’s quite obvious that you put something in there that is unknown.”(C2E1)

Only one company seemed to use a systematic approach to represent information maturity; namely, information’s formal release status in a stage-gate process. In this case, C3E1 explained that higher status implies widened visibility and indicates that a more thorough review process has been completed. This reflects the common practice also observed by Blanco et al. [26].

4.3. There Are Coordination Challenges When Working with Immature Information

As shown in Figure 1, the third and final main theme resulting from the empirical study is that practitioners face a range of challenges in handling immature information, which are described in the next subsections. The revealed challenges predominantly relate to coordination, that is, dealing with dependencies among people and activities that are entailed by sharing immature information, rather than making optimal decisions within specific tasks that produce or consume the information.

4.3.1. Individuals’ Willingness to Work with Preliminary Information Differs

One of the revealed coordination challenges is that individuals have different willingness to work with immature information. On the one hand, several interviewees expressed a preference to move forward with early, rough approximations, rather than investing excessive time in analysis and information development. C1E1 summarises this as follows:

“Fail fast! Information now! Imperfect! …Do something! Why are you drawing that up in CAD? You can go to the workshop and hack it apart with a saw, a drill and an angle grinder. Go and do something!”(C1E1)

“People are just having ideas all the time, but some ideas you can prove wrong in a conversation, some of them take some drawing, some of them take a quick CAD model, some of them take a prototype”(C2E1)

The remarks above reflect modern practice to prototype and iterate designs as a way of testing ideas, developing technology, and learning about problems and solutions [55]. However, on the other hand, another interviewee observed that some engineers have limited comfort when dealing with preliminary information, as follows:

“Quite a few engineers […] really do not cope with ambiguity and unknownness […] they just want to be told what to do…”(C5E1)

Reflecting this latter group, some participants expressed frustration with the need to work with changeable or immature input information, and they mentioned some ways of dealing with the issue, outlined as follows:

“How confident are you that this information is going to change? Furthermore, if you go: ‘Not confident at all!’ you might go: ‘Well, I will just look like I’m doing something, and then when they change it, it is not going to be too much of an effort…’”(C4E2)

“Obviously, any further work you are doing is based on that information […] if I can see that there are obvious gaps or inconsistencies, then I will clarify those before I do any further work.”(C4E3)

These differences may stem from personal preferences as well as the nature of the tasks the individuals are working on. They are likely to cause difficulties in deciding whether and when preliminary information is ready for sharing.

4.3.2. Intensive Communication Is Needed When Working with Preliminary Information

Collaborative engineering design is a highly social and consultative process [56]. In our empirical study, the importance of interpersonal communication to handling immature information was strongly emphasised by participants. For example,

“One of those kind of ambiguous parameters changes and everyone that it impacts needs to be consulted to have their input!”(C3E3)

“I can’t overemphasise the importance of collaboration and communication—and knocking down the barriers from different departments…”(C3E2)

Interviewee comments also confirmed that the needs of an information’s recipient for mature information may not match the information producer’s ability to provide it at a given point in the process, as follows:

“Typically [the manufacturing engineers] want the precision side of it, because they are going to make some very definitive choices […] to give them enough information early enough, that is always a challenge we are facing…”(C3E2)

Again emphasising the social and consultative aspects of engineering design, participants told us that such challenges are resolved by negotiating activities around immature information, considering both the producer’s and consumer’s points of view, as follows:

“It’s a personal relationship. When I go to see our production engineers, I will say: ‘I have done this. This is preliminary information’…‘I want to see it!’ Or: ‘I have done this, it’s finished. We need you to do a jig to do this.’ However, sometimes it is: ‘We have done this, I need a jig to do this, but we can change a few things…’”(C4E2)

“We would just talk it through, and he would say: ‘Can we change this? Can we change that?’ Furthermore, [I] would just write those things down and I would do those changes and would send an updated model through to him, and he would say: ‘Yes, that is good’, or ‘it needs further changes’ and then we would come up with something.”(C4E3)

4.3.3. Dependencies Among Immature Information Contribute to Process Complexity

The items of information available in a project are interdependent, because they describe related aspects of the emerging design and because they are partially derived from one another through performing tasks [57]. Therefore, the maturity levels of different pieces of information are also interdependent [57]. The complexity arising from such interdependencies was recognised by the interviewees, for example,

“[A certain type of part] can be designed preliminary on their own, but the proper nature of [those parts] and some other parts can be analysed only in the assembly context. So the complexity of analysis grows while we are still investigating some preliminary responses.”(C3E5)

Due to these dependencies, problems can also arise when immature information is incorporated into a design without traceability and without all stakeholders being aware as follows:

“I am not really sure what the inputs were, I am not sure what has changed between then and now. Maybe we have to do the whole job again. It’s a massive hassle.”(C3E4)

“If only I had known how preliminary that data was…how good the data was […] sometimes you look at it and you go: ‘It will probably be preliminary, and we can shift some of this stuff around.’ Then, you find out that it’s not.”(C4E2)

“The distinction between decisions that are made on next to no information vs. ones that are made on a lot…very little traceability of that in the system, currently. A lot of that resides within a person’s head.”(C1E1)

When immature information is updated, the resulting changes should be propagated so that all downstream decisions are revisited and their own outputs updated if necessary [58]. However, the study revealed that the necessary propagation may not happen in practice—hence, practitioners may not recognise when the maturity of certain information is reduced due to prerequisites becoming invalid as follows:

“In a theoretically perfect world, any time a design decision is made or a value changes or anything in the design changed, the information will be propagated to the entirety, instantly, and you will be able to update and adjust for all of it—and if that decision impacts on something negatively, you will get that feedback straight away. Obviously, that does not happen.”(C3E4)

4.3.4. Misaligned Information Maturity and Task Needs Contributes to Process Complexity

Finally, several participants explained problems that occur when suitably mature information is not available prior to starting a task. One is the inability to make necessary decisions:

“We know that we don’t have the information. So therefore we flounder—we meander through until either somebody makes a decision or it becomes Hobson’s choice.”(C4E1)

Another recognised issue is that insufficiently mature input information for a task may lead to poor quality outcomes and is hence likely to increase rework as follows:

“As soon as you go and base it on guesswork, your quality is shocking! […] it’s having to…being able to get the preliminary data that is based on existing fact, rather than guesswork.”(C4E2)

The study also confirmed that problems can be caused when information is developed further than needed by the downstream task.

“My experience with other engineers has been: ‘Ah, I finished the job here! Instead of checking it in, I think I can improve on this design […] because I have this luxury of time…’”(C1E3)

These comments support the concept that assessing information’s maturity and comparing it to the needs of downstream tasks could help to avoid both under- and over-refinement.

Overall, the third and final theme highlights that the necessary use of immature information contributes to multiple coordination challenges in systems engineering design. The corollary that the flow and use of preliminary information could be more deliberately managed to address these challenges was not recognised by any interviewee.

4.4. Summary of Descriptive Study I Findings: The Revealed Needs for Practitioner Support

To recap, the purpose of this phase of the research project (following the DRM framework [5]) was to confirm the practical value of the research topic, clarify the problem situation, and refine the research objective by identifying specific needs to be addressed by a support approach.

Clarifying the value of the research, every participant confirmed that they work with immature information on a daily basis and experienced problems relating to handling such information, particularly relating to coordination. Clarifying the problem situation, we observed that all participants struggled to articulate the concept of information maturity itself. While some approaches for handling immature information were mentioned, no participant described systematically tracking and managing information’s maturity to streamline processes and avoid unnecessary rework. Participants’ perceptions of the implications of working with preliminary information were focused on coordination issues rather than on decision making within individual tasks. They also mainly reflected interpersonal communication and negotiation aspects. Participants did not emphasise the systemic implications of immature information flow and how it could be managed to support task planning. This reflects the fact that the companies involved in our study, while all dealing with system-level engineering design, did not exhibit the complex, very large-scale concurrent engineering contexts where managing rework and churn is a more substantial issue. As a result, participant explanations focused on their experiences as providers and recipients of immature information rather than on system-level information flow management.

Table 4 summarises the findings of the interview study and their implications for the whole research project reported in this article. As shown in the table, each finding either supports the hypothesis that information maturity levels should be clarified or indicates a specific need towards achieving the clarification.

Table 4.

Summary of empirical findings and implications from the Descriptive Study I phase.

5. Prescriptive Study: Solution Development

In DRM, the purpose of the Prescriptive Study includes deciding what problem factors to focus on and developing a support approach to address them. Because creating support is a form of design and development, a systematic design methodology is recommended by Blessing and Chakrabarti [5], as follows. First, needs should be considered to identify the functions to be provided by the support approach. Second, alternative concepts for each function should be considered, leading to ideation and development of a support approach. Third, the researchers should perform an initial verification of the solution. In DRM, the purpose of initial verification is not to comprehensively evaluate the approach but to check that concepts are correctly implemented and to build confidence that the stated needs are met well enough to justify an empirical evaluation study (in this research, such a study was also done and is reported in Section 6).

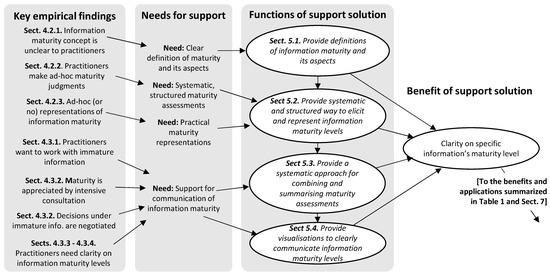

In this research, as already stated, the objective was to develop support that can provide clarity on preliminary information’s maturity level. The needs identified from the interviews and thematic analysis (Section 4 and Table 4) revealed four specific functions to be provided by a support approach, as shown in Figure 2. The functions, and the development of a practical approach to realise them, are explained next.

Figure 2.

Empirical findings led to needs for support and to the functions of a support approach that provides clarity on information maturity levels.

5.1. Need 1—The Solution Must Provide Clear Definitions of Information Maturity Aspects

Practitioners in the interview study struggled to articulate the precise meaning of information maturity (Section 4.2.1). The literature review also revealed no consensus on what the dimensions for an information maturity assessment should be (Section 3.3). Without a clear and common conceptualisation, maturity levels cannot practically be clarified.

To address this need for clear conceptualisation, an integrative literature review [59] was used to develop and systematically verify a new conceptual framework unpacking the many aspects of information maturity. This part of the solution development is reported in depth in a recent article [6] and need not be repeated here. Table 5 summarises the result, providing a decomposition of the information maturity construct that integrates all maturity dimensions found in 109 reviewed articles in the literature on engineering design, product development, building design, information systems, and management. The framework is presented as a hierarchically structured taxonomy. In overview, it combines 18 distinct aspects of information maturity from prior research and organises them into three categories: (1) aspects concerning the maturity of preliminary information’s content, including its completeness and clarity; (2) aspects relating to the information’s context, including how well it meshes with the project context and how much it is expected to change; and (3) aspects related to the information’s provenance, including the suitability of assumptions made while generating it and the degree to which it has been properly generated and validated. The foundational concept of the support approach introduced in this article is that answering each of the questions set out in Table 5 can provide a means for describing information’s maturity in a comprehensive and granular way that integrates conceptual insights from prior work on the topic. Readers are referred to Ref. [6] for full details and an explanation of the taxonomy’s conceptual foundations.

Table 5.

Taxonomy decomposing the maturity of preliminary engineering information into eighteen aspects and associated questions, structured in a three-level hierarchy. Adapted from Brinkmann and Wynn [6]. For the conceptual foundation of this taxonomy, please refer to the original work of Brinkmann and Wynn [6].

5.2. Need 2—Provide a Structured Way to Elicit and Represent Information Maturity

To recap, the second theme of the interview study revealed that practitioners do not use structured, systematic and repeatable approaches to assess or represent information maturity levels (Section 4.2). The literature review of Section 3 showed that no prior approach provides detailed solutions for these tasks, as summarised in Table 2.

Four approaches were considered to address the need for a structured, detailed and generally applicable way to assess information maturity: (1) automatic detection of information maturity levels (like refs. [38,60,61]); (2) manual assessment based on a generic scale-based approach, such as a 1–5 scale for every maturity aspect (like refs. [26,30,33]); (3) manual representation of maturity levels in a bubble diagram (like ref. [31]); and (4) a manually-completed maturity grid approach (like ref. [9]). The following disadvantages of options 1–3 were noted. While automatic detection is highly desirable, it is not clear how it would be achieved for facets of the taxonomy that are contextual in nature; to be applicable in this research, automated information maturity detection would also need to deal with any type of information developed during a design and development project (the above-listed approaches are too specific). A scale-based approach, such as assessing maturity levels on a 1–5 scale, does not indicate precisely how to determine when information reaches each maturity level. The diagram-based proposal of Zou et al. [31] is highly preliminary, untested, and would not be easy to extend for the 18 maturity attributes identified in our study. Considering these disadvantages of options 1–3, it was decided that maturity evaluations in the new approach would use a maturity grid approach, which is well-established and proven in many other domains [62,63]. A significant practical advantage of maturity grids is that they are time- and cost-effective to complete [63], as well as flexible, because they can be completed digitally or on paper.

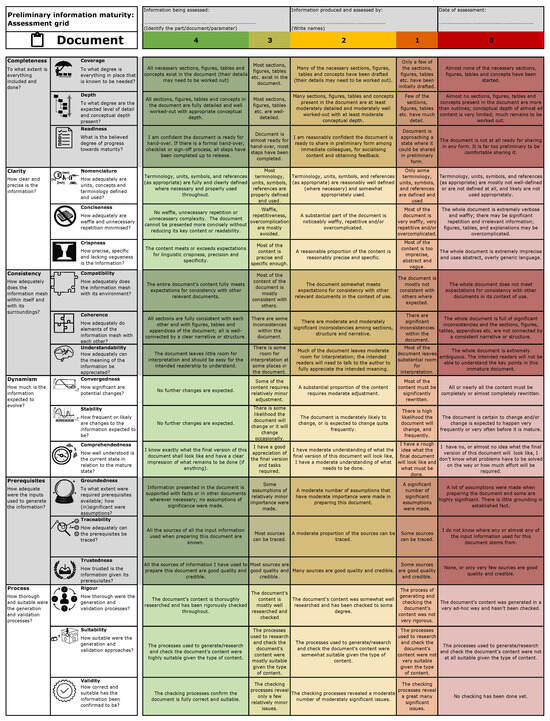

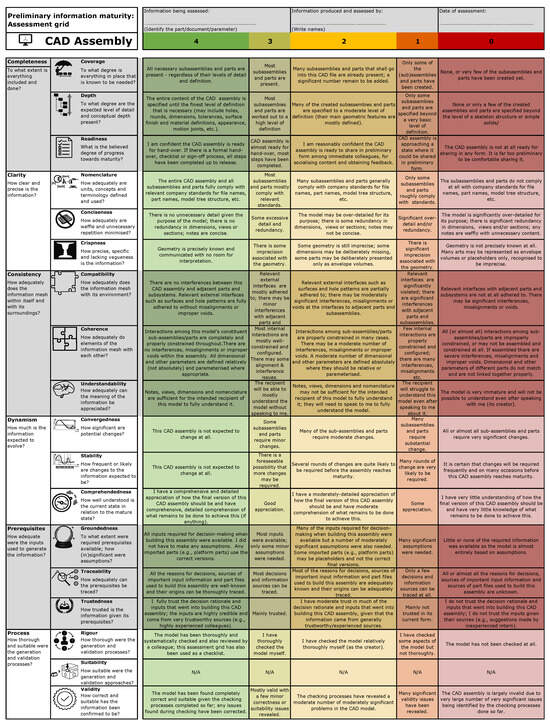

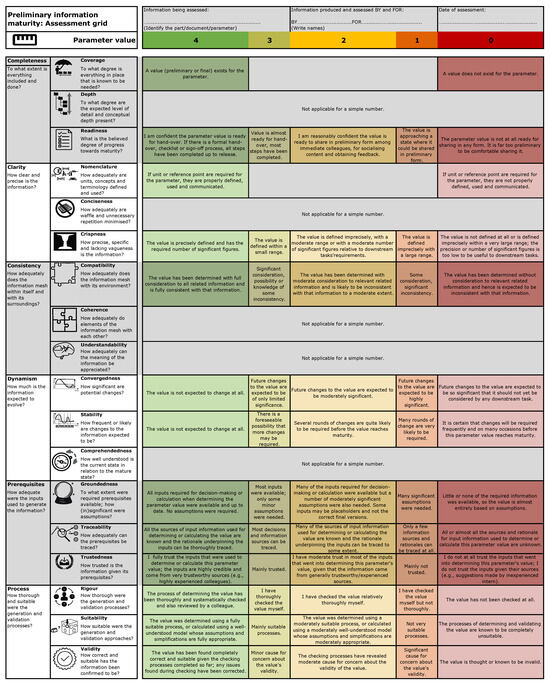

In overview, a maturity grid is a table that shows the aspects of maturity to be assessed (defined in the table row headers) against a number of discrete maturity levels that can be achieved for each aspect (in the table column headers). Maturity grids are distinguished from scale-based assessments by the precise definition of every maturity level for every aspect of maturity being assessed [63]—the definitions are written in the cells of the grid. Once a maturity grid is defined, a particular information item’s maturity may be assessed by working systematically through all the rows in the grid and, for each row, selecting the most appropriate descriptor for the current maturity state. Thus, a completed maturity grid provides detailed justification for the assessed maturity level, and by examining the descriptors, also indicates what developments are necessary to reach a higher level. An information producer may complete a grid to assess and express the status of information they are working on, while an information consumer may use the same system to define and communicate the maturity levels they require.
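
The producer and consumer roles described above can be illustrated with a short Python sketch. It assumes that maturity levels are recorded as integers per aspect; the aspect names and level values are illustrative only, and the function name `maturity_gaps` is hypothetical rather than part of the published approach.

```python
def maturity_gaps(provided, required):
    """Return aspects where the provided level falls short of the required level.

    Both arguments map an aspect name to an integer maturity level.
    Aspects the consumer did not specify are treated as unconstrained.
    """
    gaps = {}
    for aspect, needed in required.items():
        have = provided.get(aspect)
        if have is None:
            gaps[aspect] = (None, needed)  # not assessed by the producer
        elif have < needed:
            gaps[aspect] = (have, needed)
    return gaps

# Illustrative values only (not taken from the study).
producer_assessment = {"coverage": 2, "clarity": 3, "validity": 1}
consumer_requirement = {"coverage": 2, "validity": 3}

gaps = maturity_gaps(producer_assessment, consumer_requirement)
for aspect, (have, needed) in gaps.items():
    print(f"{aspect}: provided level {have}, required level {needed}")
```

Because both parties use the same grid, a shortfall can be traced back to the descriptors of the two levels involved, which indicates what further development the consumer is asking for.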

In this research, the process recommended by Maier et al. [63] informed development of new maturity grids for the specific context of preliminary engineering information. The first step was to identify the set of maturity elements to be assessed, thereby forming the row headers of the grid. The eighteen elements of our recently developed information maturity taxonomy (Table 5) were used. The second step was to define the scale for assessing each element, forming the column headers of the maturity grid. In this research, five maturity levels were allowed for, which is common practice [63]. The third and final step was to define the maturity levels themselves for each element, thereby filling all cells of the 18 × 5 grid.

In this case, because the 18 aspects of maturity in the taxonomy apply to all types of preliminary information, they are relatively abstract. To assist interpretation, three separate maturity grids were developed to concretise the assessments for three types of preliminary information commonly encountered in systems engineering design projects:

- Documents—such as test reports or project plans.

- CAD models—such as assembly models comprising multiple parts and subassemblies.

- Parameter values—such as the length of a bolt or the mass of an assembly.

It was determined that the maturity of the simplest, unstructured form of information—the parameter value—cannot meaningfully be assessed against all of the 18 aspects shown in Table 5, particularly because many of the aspects concern the information’s internal structure, which a simple number does not have. This can be appreciated by reading the descriptions in Table 5.

For each of the three information types listed above, the 18 × 5 maturity descriptors were then defined. This was straightforward for numeric parameters after the non-assessable aspects had been excluded. Specific maturity level definitions were more challenging to formulate for CAD models and documents because each dimension of a maturity assessment may be interpreted in the following two ways: (1) as the proportion of the information’s elements, such as a document’s sections or a CAD assembly’s parts, that have reached a specified level of maturity; or (2) as the degree to which full maturity is reached considering all the information’s elements. The final maturity grids are carefully worded to allow flexibility for the person performing an assessment to choose the appropriate interpretation for their specific context.

To illustrate how the maturity level definitions are worded, the five possible levels of the maturity aspect Coverage for the information type CAD assembly are as follows:

- Level 0—None, or very few of the subassemblies and parts have been created yet.

- Level 1—Only some of the subassemblies and parts have been created.

- Level 2—Many subassemblies and parts required for this CAD assembly are already present; a significant number remain to be added.

- Level 3—Most subassemblies and parts are present.

- Level 4—All necessary subassemblies and parts are present—regardless of their levels of detail and definition.
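
As a sketch of how one grid row might be held in software, the Coverage descriptors listed above can be stored as a sequence indexed by level. The data structure and the helper `describe` are assumptions made for illustration; the descriptor wording follows the list above.

```python
# Descriptors for the Coverage aspect of a CAD assembly, indexed by level 0-4.
COVERAGE_CAD_ASSEMBLY = [
    "None, or very few of the subassemblies and parts have been created yet.",
    "Only some of the subassemblies and parts have been created.",
    "Many subassemblies and parts required for this CAD assembly are already "
    "present; a significant number remain to be added.",
    "Most subassemblies and parts are present.",
    "All necessary subassemblies and parts are present, regardless of their "
    "levels of detail and definition.",
]

def describe(row, level):
    """Return the descriptor for the assessed level and, if any, the next level up."""
    if not 0 <= level < len(row):
        raise ValueError(f"level must be between 0 and {len(row) - 1}")
    current = row[level]
    target = row[level + 1] if level + 1 < len(row) else None
    return current, target

current, target = describe(COVERAGE_CAD_ASSEMBLY, 2)
print("Assessed:", current)
print("To progress:", target)
```

Returning the next descriptor alongside the assessed one reflects the point that a completed grid also indicates what is needed to reach a higher level.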

The developed maturity grids are presented in full detail in Appendix A, Figure A1, Figure A2 and Figure A3; the reader is encouraged to explore their detail as they are ready for use in a practical setting.

The grids allow items of preliminary information to be scored on each of the maturity aspects shown in Table 5. The decomposition of information maturity into the rows of the maturity grids for different types of information allows for a highly granular, justified assessment and is intended to minimise subjectivity as far as possible. The result of completing the assessment for one piece of information is 18 integer values between 0 and 4, where 4 indicates full maturity on a particular aspect and 0 indicates no maturity—meaning that the information largely does not exist yet or the value is not defined yet. Parts of the assessment may also be skipped if the corresponding maturity aspect is deemed inapplicable to the specific information and context. This allows for a wide range of use cases.
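
A completed assessment could be recorded as a simple mapping from aspect to level. The sketch below shows one possible representation, assuming that skipped aspects are stored as `None`. The aspect names are placeholders, because the taxonomy's labels are defined in Table 5 and are not reproduced here, and the function `validate_assessment` is hypothetical.

```python
from typing import Optional

MAX_LEVEL = 4  # levels run from 0 (no maturity) to 4 (full maturity)

def validate_assessment(assessment: dict[str, Optional[int]]) -> dict[str, int]:
    """Check level values and return only the aspects that were assessed.

    A value of None marks an aspect skipped as inapplicable.
    """
    assessed = {}
    for aspect, level in assessment.items():
        if level is None:
            continue  # skipped: inapplicable to this information and context
        if isinstance(level, bool) or not isinstance(level, int):
            raise TypeError(f"{aspect}: level must be an integer")
        if not 0 <= level <= MAX_LEVEL:
            raise ValueError(f"{aspect}: level {level} is outside 0..{MAX_LEVEL}")
        assessed[aspect] = level
    return assessed

# Placeholder aspect names and illustrative values.
example = {"aspect_01": 4, "aspect_02": 0, "aspect_03": None, "aspect_04": 2}
assessed = validate_assessment(example)
print(assessed)
```

Keeping skipped aspects distinct from a score of 0 matters, since 0 means the information largely does not exist yet whereas a skipped aspect makes no statement about maturity.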

The suitability of the new grids to elicit information maturity levels was initially checked by the authors by trials on a range of cases. This provided confidence to justify seeking practitioner feedback, which is discussed in Section 6.

5.3. Need 3—The Solution Must Combine and Summarise Information Maturity Assessments

The interview study revealed the need for support to clearly communicate information maturity levels (Table 4). To achieve this, the 18 values resulting from an assessment grid need to be aggregated into a smaller number of values to provide easily comprehensible summaries of information’s assessed maturity levels.

As shown in Table 2, most approaches in the literature that provide for multidimensional information maturity assessments do not consider how to aggregate those dimensions into a single value. The main exception is the formula of Drémont et al. [30], which calculates information maturity by combining the assessed scope for variation, the assessed level of design performance, the assessed time for which preliminary information is considered valid, and the sensitivity of the downstream task to changes in the information. Johansson et al. [29] provide a figure that suggests combining their three maturity aspects to obtain an overall maturity level, but the text of their article gives little detail on the aggregation approach used, so the method remains unclear. Zou et al. [31] suggest using a Bayesian approach to aggregate maturity aspects but do not provide an explanation or example of how this would work.

Because prior work offers no clear solution, a range of possible approaches was considered. The simplest would be to average the 18 assessed aspects to arrive at an overall maturity level. However, averaging is a form of compensating aggregation, in which low values in some factors may be compensated for by high values in others. Compensating aggregations are usually unsuitable for combining assessments in engineering contexts because low values are often critical and should not be easily disguised [64,65]. To illustrate in the maturity assessment context, consider a situation in which a preliminary CAD model was analysed and the analysis showed that the bulk part design would not meet strength requirements. This would reduce the CAD model’s validity according to the definition given in Table 5. Logically, it should not be possible to recover the previous maturity level by ignoring the unfavourable analysis and adding more design depth, such as chamfers, to the same design. One alternative is a non-compensating aggregation, for example, aggregating elements of the assessment by multiplication or by using the min function. Non-compensating aggregations ensure that very poor outcomes are never disguised, but a single zero renders the whole aggregated value zero, which is also unrealistic for information maturity assessments. We also considered more sophisticated aggregation schemes, specifically a fuzzy logic-based [66] or Bayesian network-based approach, both of which are appealing because maturity assessments are themselves not crisp, or crafting a mathematical function to combine the individual assessment elements based on arguments about the relationships between the 18 maturity aspects, like Zou et al. [31]. However, initial trials, some reported in Brinkmann [67], showed that these approaches were excessively complicated, would be difficult to explain to practitioners, and did not yield demonstrably better results than the simple approach ultimately selected, which is explained next.
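The contrast between compensating and non-compensating aggregation can be illustrated with a few lines of Python. The scores are hypothetical and chosen only to show the effect: 17 aspects at full maturity and one aspect at zero.

```python
from math import prod
from statistics import mean

# Hypothetical assessment: 17 aspects at level 4, one aspect at level 0.
scores = [4] * 17 + [0]

compensating = mean(scores)    # averaging: the single zero is largely hidden
non_comp_min = min(scores)     # min function: the single zero dominates
non_comp_prod = prod(scores)   # multiplication: any zero makes the product zero

print(f"mean={compensating:.2f}, min={non_comp_min}, product={non_comp_prod}")
```

The mean stays close to full maturity despite the critical zero, whereas both non-compensating variants collapse to zero. Neither extreme matches the behaviour sought for information maturity assessments.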

To reduce the undesired disguising of extremely low values, while avoiding an overall maturity assessment of zero when only one element of the assessment grid is zero, the new approach uses a simple combination of compensating and non-compensating aggregation that is easy to explain to practitioners. Specifically, leveraging the hierarchical structure of the Table 5 taxonomy, the 80-50-20 weighting scheme of Equation (1) is used to aggregate the three evaluations within each lowest-level subcategory of Table 5: the lowest of the three values is weighted at 80, the middle value at 50, and the highest at 20.

As an illustrative example, consider the subcategory completeness in Table 5 and a situation in which an information maturity assessment yielded coverage = 2, depth = 3, and readiness = 1. In this case, readiness (the lowest value) is weighted at 80, coverage at 50, and depth (the highest value) at 20. The resulting values for each pair of subcategories shown in Table 5 are then aggregated using a similar 80-20 scheme, in which the lower value of the pair is weighted at 80 and the higher value at 20. Thus, for example, results for completeness and clarity are aggregated to arrive at a result for content. Finally, content, context, and provenance values are aggregated using the 80-50-20 scheme described above to arrive at an overall maturity assessment for the information.
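The hierarchical aggregation can be sketched in Python as follows. This is a minimal sketch and not the authors' implementation: it assumes that the weighted sum is normalised by the sum of the weights so that results stay on the 0 to 4 scale, which may differ from the exact form of Equation (1). The function names and the nested-dictionary layout are also assumptions, and skipped aspects are not handled.

```python
from typing import Dict, List, Sequence

WEIGHTS_3 = (80, 50, 20)  # lowest, middle, highest value
WEIGHTS_2 = (80, 20)      # lower, higher value


def aggregate(values: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean of the sorted values, heaviest weight on the lowest.

    ASSUMPTION: dividing by the sum of the weights keeps the result on the
    0-4 scale; the normalisation used in Equation (1) may differ.
    """
    if len(values) != len(weights):
        raise ValueError("need exactly one weight per value")
    ordered = sorted(values)  # ascending, so the lowest value gets weights[0]
    return sum(w * v for w, v in zip(weights, ordered)) / sum(weights)


def overall_maturity(assessment: Dict[str, Dict[str, List[int]]]) -> float:
    """assessment maps category -> subcategory -> three aspect levels."""
    category_values = []
    for subcategories in assessment.values():
        sub_values = [aggregate(levels, WEIGHTS_3) for levels in subcategories.values()]
        category_values.append(aggregate(sub_values, WEIGHTS_2))
    return aggregate(category_values, WEIGHTS_3)


# Completeness example from the text: coverage = 2, depth = 3, readiness = 1.
completeness = aggregate([2, 3, 1], WEIGHTS_3)
```

Under the stated normalisation assumption, the completeness example evaluates to (80·1 + 50·2 + 20·3)/150 = 1.6, which is closer to the lowest assessed level than the plain average of 2.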

This scheme is justified as follows. The lowest maturity levels are most heavily weighted so that potential design problem areas have the greatest impact on the final aggregated value, for the reasons explained above. The 80-50-20 and 80-20 weightings reflect the so-called 80-20 rule familiar to most practitioners. The aggregation approach was initially tested on a range of hypothetical cases, including those with extreme differences among assessed maturity levels. It generated intuitively reasonable results. Finally, the suitability of the selected weighting factors was evaluated using sensitivity analysis. This showed that changing the weighting scheme from 80-50-20 to 90-50-10 or 70-50-30 yielded less than 2.5% change in the aggregated maturity value for the example given in the next subsection; this was deemed insignificant given that the assessments being aggregated are themselves experiential and, hence, not precise. Additionally, the empirical feedback on the approach (discussed in Section 6) did not reveal practitioner concerns about the aggregation method. If concerns were raised in a particular application context, the analytic hierarchy process [68] could be used to arrive at weightings for that context with input from domain experts.
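A sensitivity check of this kind could be scripted along the following lines. The sketch makes two assumptions that are not confirmed by the text: the weighted sum is normalised by the sum of the weights, and pairs use the same low and high weights as triples (for example 90-10 alongside 90-50-10). The assessment values are hypothetical, so the output does not reproduce the figure reported above.

```python
def aggregate(values, weights):
    """Weighted mean of sorted values; the lowest value gets the first weight."""
    ordered = sorted(values)
    return sum(w * v for w, v in zip(weights, ordered)) / sum(weights)


def overall(assessment, low, mid, high):
    """Aggregate {category: {subcategory: [levels]}} with a low-mid-high scheme.

    ASSUMPTION: pairs of subcategories use the same low and high weights.
    """
    categories = [
        aggregate([aggregate(lv, (low, mid, high)) for lv in subs.values()], (low, high))
        for subs in assessment.values()
    ]
    return aggregate(categories, (low, mid, high))


def relative_change(assessment, scheme, baseline=(80, 50, 20)):
    """Relative change in overall maturity versus the baseline scheme.

    Undefined when the baseline result is zero (all aspects at level 0).
    """
    base = overall(assessment, *baseline)
    return abs(overall(assessment, *scheme) - base) / base


# Hypothetical assessment with placeholder category and subcategory names.
example = {
    "content": {"a": [2, 3, 1], "b": [3, 3, 2]},
    "context": {"a": [4, 3, 3], "b": [2, 2, 3]},
    "provenance": {"a": [3, 4, 4], "b": [3, 2, 4]},
}

for scheme in [(90, 50, 10), (70, 50, 30)]:
    print(scheme, f"{relative_change(example, scheme):.1%}")
```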

Overall, the aggregation method explained in this subsection was deemed to function well enough to seek practitioner feedback on the entire approach, while having the significant practical advantage of simplicity.

5.4. Need 4—Provide Visualisations to Clearly Communicate Information Maturity Levels

The identified need for support to clearly communicate information maturity levels (Table 4) is additionally addressed in the concept demonstrator by visualising raw and aggregated assessment results. The prior maturity assessment approaches from the literature, summarised in Table 2, were examined to consider how results were visualised. Very little was found: the topic is either not discussed at all (in refs. [22,30,31]) or maturity levels are visualised as basic bar charts (in refs. [9,26]). Three new visualisation concepts were therefore ideated to illustrate how information maturity assessments could address envisaged use cases. The focus was set on supporting common communication and coordination tasks because coordination based on immature information had been revealed as particularly challenging in the interview study (Section 4.3.3).

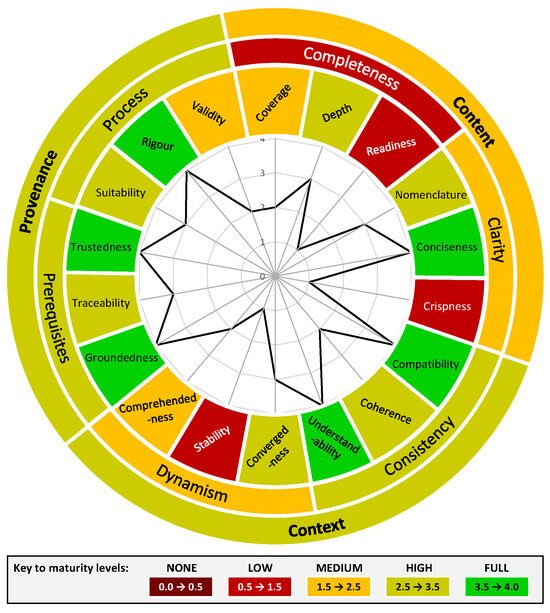

- The first visualisation was developed to highlight which maturity aspects are lagging behind others and hence guide discussions about how the maturity of key information could be improved. Called the Information Maturity Wheel, this visualisation combines detail and overview of a single maturity assessment, as shown in Figure 3. In this example, the overall maturity level of the concerned information is medium-high, with the lagging aspects being crispness, stability, and readiness.

Figure 3. The Information Maturity Wheel visualises the detail of a single information maturity assessment to communicate information’s maturity level and pinpoint aspects requiring improvement. Colours on the outer rings indicate aggregated maturity levels. The hierarchical structure and definitions reflect the taxonomy of Table 5.

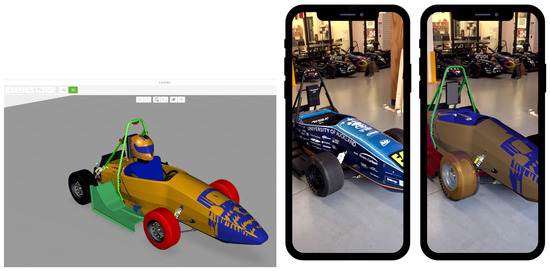

- The second visualisation was developed to aid discussions of information maturity in a design review setting as well as aid communication of design progress. Overall maturity levels of subsystems are indicated using colours in a CAD model as shown in Figure 4 (left). The same information may be overlaid onto a tangible design prototype using augmented reality as shown in Figure 4 (right); this example was created using Vuforia Studio and trialled using a Formula SAE car design.

Figure 4. (left) Visualising the aggregated design maturity of product subsystems using a traffic light colour scheme overlaid on a CAD model to support design reviews and communication. (right) Visualising the design maturity of subsystems in an evolving design by augmented reality overlay on an earlier product generation.

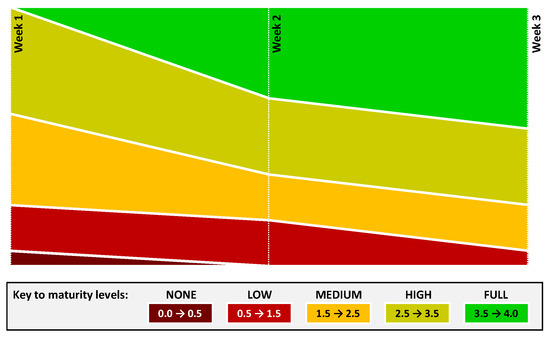

- The third visualisation was developed to assist tracking of progress on iterative engineering design tasks where traditional milestone tracking and earned value tracking can be difficult to apply due to a lack of measurable deliverables prior to design completion [69]. The visualisation summarises a series of consecutive maturity assessments in terms of the number of the 18 aspects that reached each of the five maturity levels at the time of each assessment (Figure 5). Tracking these charts for key design subsystems could indicate where targeted action may be needed on particular subsystems whose maturity progress is consistently lagging behind most others.

Figure 5. Visualising the results of successive information maturity assessments to assist design progress tracking. The colours represent the number of the 18 facets reaching the corresponding maturity level at each point in time. As a process moves forward, the green areas representing high and full maturity may be expected to progressively cover more of the plot.
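The data behind a chart of this kind can be derived by counting, for each consecutive assessment, how many aspects sit at each maturity level. The Python sketch below illustrates this with hypothetical assessment data; the function names are assumptions, and plotting is left out.

```python
from collections import Counter

LEVELS = range(5)  # maturity levels 0..4


def level_counts(assessment_levels):
    """Count how many aspects sit at each maturity level in one assessment."""
    counts = Counter(assessment_levels)
    return [counts.get(level, 0) for level in LEVELS]


def progress_series(assessments):
    """One row of per-level counts for each consecutive assessment."""
    return [level_counts(levels) for levels in assessments]


# Hypothetical sequence of three assessments, each covering 18 aspects.
assessments = [
    [0] * 6 + [1] * 8 + [2] * 4,
    [1] * 4 + [2] * 8 + [3] * 6,
    [2] * 2 + [3] * 8 + [4] * 8,
]

series = progress_series(assessments)
# Each row sums to 18; stacking the rows over time gives the plot's bands.
```

Each row could then be drawn as one time step of a stacked area or stacked bar chart, with one colour per maturity level.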


5.5. Summary of Prescriptive Study: Research Contributions of the Developed Solution

To recap, the concept demonstrator presented in this section addresses needs resulting from the empirical study. It is grounded in a conceptual framework that decomposes information maturity into well-defined constituent elements, provides detailed maturity grids to concretise the maturity assessment concept for three distinct types of commonly encountered engineering design information, aggregates assessment components into overall information maturity scores, and visualises assessment results to make information maturity visible to practitioners so that the use of preliminary information can be more deliberately managed. The presented approach thereby addresses the research gap and limitations of prior work summarised in Table 2.

6. Descriptive Study II: Practitioner Feedback

The final stage of DRM is Descriptive Study II. According to Blessing and Chakrabarti [5] (p. 36), its objectives include: (1) to determine whether research goals were adequately addressed, that is, whether success criteria are satisfied by the developed approach, (2) to inform improvement of the approach, and (3) to suggest how it could be introduced into practice. These authors recommend a multistage evaluation including a basic verification that the support technically meets needs prior to assessing practical impact of the support against the success factors. Blessing and Chakrabarti [5] recognise that the ideal evaluation may not be achievable within the scope of many research projects.

In this research, the initial verification was done as already explained in Section 5, then the assessment against success factors was approached through a second interview study with reference to the success criterion defined in Section 3.5. Five practitioners from the earlier study agreed to participate; see Table 6. They were introduced to the concept demonstrator’s functionality using a prerecorded video presentation, which summarised the first three visualisations described in this article along with an explanation of the maturity grids and assessment process.1 Then, they were asked the following questions:

Table 6. Participants in the second interview study.

- What are your thoughts about the concept that you were just shown?

- Could you imagine a system like this would be useful to you or to others? Would you use it? If yes: mainly because of what, what for, and what are the advantages? If no: why not?

- What aspects of the concept did you like best and why?

- What aspects of the concept do you think could be improved and why/how?

As before, all interviews were audio-recorded and manually transcribed for familiarisation and analysis. Comments deemed insightful were extracted and mapped against the three objectives listed by Blessing and Chakrabarti [5], which are set out at the start of this section. The next subsections summarise the findings for each objective.

6.1. Determining Whether the Success Criterion Is Satisfied

To recap from Section 3.5, the success criterion for this research was that practitioners should find the approach useful to improve clarity of information maturity levels. Most interviewees’ feedback was supportive and emphasised the value of the approach to make information maturity more visible:

“This would absolutely be something that would be beneficial for us […] this checklist to work through and being able to quickly score where each component, for example, is and then having a visual representation where all the parts are. That would be useful; I can see how I would use that pretty much straight away!” (C4E4)

“The visualisation would be a great way to keep reminding ourselves: That bit of data is not that good! So you need to be looking out for that problem…” (C3E1)

“Engineers tend to focus on the detail and go down the rabbit hole, so there has to be someone who has that high-level view […] Have we thought about that? Or the assumption on this detail, how do we know that is accurate? So, [the support approach is] a tool to make it visible to everyone, not just the project manager or this high-level-thinker…” (C3E1)

“I can see it being good for clarifying what you understand and what you don’t […] it could be good for that, particularly your CAD diagram showing colours…” (C5E1)