Abstract

Citizen science now relies heavily on digital platforms to engage the public in environmental data collection. Yet, many projects face declining participation over time. This study examines the effect of three elements of gamification—points, daily streaks, and real-time leaderboards—on student engagement, achievement, and immersion during a five-day campus-wide intervention utilising the GAME and a spatial crowdsourcing app. Employing a convergent mixed-methods design, we combined behavioural log analysis, validated psychometric scales (GAMEFULQUEST), and post-experiment interviews to triangulate both quantitative and qualitative dimensions of engagement. Results reveal that gamified elements enhanced students’ sense of accomplishment and early-stage motivation, which is reflected in significantly higher average scores for goal-directed engagement and recurring qualitative themes related to competence and recognition. However, deeper immersion and sustained “flow” were less robust with repetitive task design. While the intervention achieved only moderate long-term participation rates, it demonstrates that thoughtfully implemented game mechanics can meaningfully enhance engagement without undermining data quality. These findings provide actionable guidance for designing more adaptive, motivating, and inclusive citizen science solutions, underscoring the importance of mixed-methods evaluation in understanding complex engagement processes. While the sample size limits the statistical generalizability, this study serves as an exploratory field trial offering valuable design insights and methodological guidance for future large-scale, controlled citizen science interventions.

1. Introduction

This study investigates the effect of various gamification strategies on citizen engagement and data quality in spatial crowdsourcing, offering timely insights for enhancing participation in citizen-driven environmental and data collection initiatives. Citizen science is reshaping the landscape of data-driven research by enabling systematic involvement of non-professionals in the scientific process. This paradigm shift—fuelled by the proliferation of mobile technologies, low-cost sensors, and digital platforms—has significantly expanded the scale, resolution, and accessibility of ecological and environmental data [1,2]. In urban contexts, where environmental and infrastructural dynamics are complex and rapidly evolving, citizen science initiatives have proven effective in generating fine-grained, spatially distributed information, which is critical for monitoring public health, biodiversity, and sustainability challenges [3,4]. Beyond data collection, such initiatives embody a democratisation of science, fostering co-production of knowledge, civic empowerment, and more inclusive environmental governance frameworks [5,6]. Moreover, citizen-generated data are increasingly being recognised as valuable inputs for policy design, Sustainable Development Goals (SDG) monitoring, and global reporting mechanisms—provided that appropriate mechanisms for quality assurance, interoperability, and ethical stewardship are in place [3,7]. Taken together, these developments position citizen science as a methodological innovation and a critical infrastructural pillar for sustainability science in the present day.

Despite the widespread adoption of mobile apps and sensors, citizen science projects often face a well-documented ‘engagement–quality spiral’: as task complexity rises, sustained motivation declines, undermining data integrity [8]. Multi-year evaluations consistently reveal that participation drops sharply once the initial excitement fades, whether in biological invasions [9,10], urban biodiversity campaigns curtailed by the pandemic [11], plastic pollution monitoring networks [12], or hydrological observatories [13]. Participation, therefore, becomes dominated by a narrow nucleus of enthusiasts, leaving broad geographic and sociodemographic gaps and amplifying sampling bias [14,15]. Attrition also undermines data fidelity: declining motivation correlates with misidentifications, protocol drift, and “careless responding” artefacts that can inflate error rates by 10–15% in unattended surveys [16,17]. The result is an uneasy trade-off between coverage and credibility, especially when task complexity is high, feedback is scant, or automation displaces volunteers’ perceived sense of agency [18,19]. Mitigating this compound deficit in sustained motivation and methodological rigour is thus indispensable if citizen-generated evidence is to fulfil its scientific and policy promise.

Recent research has underscored that gamification in citizen science is most effective not when it merely attracts participants through superficial rewards, but when it strengthens their intrinsic motivations and reinforces a sense of meaningful contribution. For instance, studies of platforms such as Foldit and Eyewire consistently reveal that participants are primarily driven by the opportunity to contribute to real science, rather than by game elements themselves [20,21,22]. Nevertheless, those same game elements—such as points, rankings, and collaborative play—play a pivotal role in sustaining engagement over time by nurturing intellectual challenge, peer learning, and a sense of community identity [20,23,24]. In their study “Motivation to Participate in an Online Citizen Science Game: A Study of Foldit”, Curtis and Tinati et al. [20] emphasise that perceived progress, recognition, and interaction with both scientists and peers are critical for long-term commitment. In contrast, recent reviews highlight that poor design, unclear communication, and shallow gamification can rapidly erode motivation [25,26]. Taken together, these insights suggest that successful gamified systems must align gameplay with scientific purpose, provide adaptive motivational scaffolding, and accommodate diverse user profiles to avoid limiting participation to technically skilled or intrinsically motivated individuals.

A gameful approach to participation can enhance motivation: a growing body of evidence suggests that motivational scaffolds grounded in game design can curb attrition while supporting data-quality controls. Gamified point systems, badges, and territorial “conquest” mechanics have raised completion rates in voluntary geographic information tasks and increased spatial coverage without compromising positional accuracy [27]. Yet the empirical base remains thin: rigorous, in situ evaluations of game elements in location-dependent citizen science are scarce, short-lived, and rarely track objective error metrics over time [28]. Moreover, most projects still confine volunteers to a “contributory” role, withholding analytic feedback and thereby muting the very sense of competence and social recognition that sustains engagement [29]. Reviews of smart city apps echo this gap, noting that incentives are either absent or poorly aligned with participant profiles, resulting in rapid post-launch drop-offs [30]. Although reward-based systems, such as Social Coin [31], have demonstrated conceptual promise, their uptake in certain co-designed citizen science pilots was limited: during early co-creation and design phases, pilot stakeholders expressed low levels of interest, potentially affecting adoption and impact in subsequent deployment stages. This underscores the importance of aligning incentive models with stakeholder expectations from the outset. Addressing these gaps through theory-informed, longitudinal trials of adaptive gamification constitutes the next critical step toward resilient, high-integrity citizen science infrastructures.

A notable approach in this direction is that of Puerta-Beldarrain et al., who developed the Volunteer Task Allocation Engine (VTAE), a system that emphasises user experience and an equitable spatial distribution of tasks in the context of altruistic participation [32]. Drawing on a growing body of empirical work demonstrating that game mechanics can foster sustained, higher-quality contributions in volunteered geographic information and other participatory-sensing contexts [27,28], the present study evaluates how point rewards, daily streak bonuses, and real-time leaderboards embedded in the GREENCROWD web app influence university students’ self-reported engagement (operationalised through perceived accomplishment and immersive flow) while they gather geolocated environmental observations. University students constitute a strategically important cohort: they are digitally proficient yet chronically time-constrained, and their future professional trajectories position them to shape urban sustainability agendas. Accordingly, our investigation seeks design principles that amplify motivation without imposing inequitable or extractive workloads, thereby answering recent ethical critiques of “dark” citizen science models that covertly harvest unpaid labour [33] and complementing calls to widen participation beyond highly specialised hobbyists [34,35]. Against this backdrop, we ask whether gamification enhances the volume, subjective enjoyment, and informational value of contributions, insights that are essential for next-generation citizen observatories tasked with balancing engagement, data quality, and civic legitimacy at scale [36,37,38,39].

This study also innovates by integrating a modular gamification engine (GAME) with a real-time, privacy-preserving spatial crowdsourcing platform (GREENCROWD), enabling deployment in real-world settings with minimal configuration. This integration not only ensures practical applicability beyond controlled trials but also facilitates replication and adaptation across diverse urban and environmental contexts.

Although gamification has gained widespread traction in citizen science, empirical studies that systematically integrate game elements with location-based data collection under real-world conditions remain notably limited. Prior works have demonstrated the value of gamified platforms for enhancing user engagement in purely digital environments [40] or have explored the ethical and regulatory implications of gamification in citizen science [41]. Some efforts, such as the Biome mobile app in Japan, have analysed large-scale geolocated contributions within a gamified context [42]; yet, these studies lack experimental field control and do not assess the effect of specific game mechanics. Other projects incorporate spatial dimensions in citizen science without gamification [43] or simulate gamified forest-based interactions without actual user participation in the field [44]. To date, a critical gap exists in the literature regarding in situ field studies that purposefully combine gamification and spatial crowdsourcing, with a rigorous evaluation of user motivation, retention, and data quality. This study addresses that gap by presenting a controlled field experiment that examines the behavioural and experiential impact of gameful engagement in a location-based citizen science context.

This study, therefore, makes three interrelated contributions. First, it is one of the earliest mixed-methods examinations of how discrete game mechanics operate in situ within a location-based, urban citizen science setting populated by university students, an audience that remains under-represented in the gamification literature outside of classroom contexts [27,28]. The mixed-methods approach combines quantitative data (such as structured surveys and cumulative points) and qualitative data (such as interviews) to provide a more comprehensive and in-depth understanding of the impact of gamification on participants’ experiences. Second, by triangulating validated self-report scales with post hoc interviews, we move beyond coarse engagement metrics (e.g., total submissions) and reconstruct the underlying experiential texture of accomplishment and flow, yielding a richer account of motivational processes than survey-only or log file studies can provide. Third, the empirical insights translate into actionable design levers (optimal point weighting, streak calibration, and socially salient but ethically balanced leaderboards) that platform developers and municipal “smart city” teams can deploy to sustain participation while safeguarding data fidelity. In doing so, the work extends current debates on citizen observatories from proof-of-concept prototypes toward scalable, evidence-based frameworks for participatory urban sensing and policy co-production.

1.1. Rationale for Gamification in GREENCROWD

Gamification is increasingly used to boost engagement in citizen science. However, empirical studies caution that its effectiveness is often short-lived and context-dependent. While game elements such as points and leaderboards can generate initial motivation, their impact tends to decline over time as their novelty wears off [45]. In addition, a heavy reliance on external rewards can crowd out intrinsic motives when participants perceive the incentives as trivial or misaligned with their personal goals [46].

Recent mixed-methods work on social media moderation highlights an even sharper risk: a study of crowdsourced fake-account detection reported that shifting from a low (EUR 0.01) to a high (EUR 0.75) fixed cash reward drove a 365% surge in submissions but crashed accuracy from 45% to 16%—a phenomenon dubbed over-gamification [47]. Conversely, a hybrid schedule that began with modest cash and gradually layered points, badges, and incremental bonuses restored accuracy to 38% while extending interaction time by 26%. These findings reinforce long-standing concerns that purely quantitative incentives may prompt volunteers to prioritise speed over care, thereby undermining data integrity [48].

Gamified platforms can also skew participation toward a narrow demographic—often young, tech-savvy users—thereby limiting representativeness [49]. Excluding volunteers from early design decisions further erodes ownership and long-term commitment [49].

GREENCROWD tackles these challenges through a behaviourally informed model spanning five intertwined dimensions: base performance, spatial equity, temporal diversity, personal progress, and consistency. Mirroring the hybrid approach that mitigated over-gamification in [47], GREENCROWD dispenses rewards adaptively: small initial payouts are supplemented by points, streak bonuses, and situational boosters that respond to user behaviour and geospatial gaps. By avoiding static, one-size-fits-all incentives, the platform aims to strike a balance between motivation and data quality, broaden the participant base, and mitigate the risk of disengagement or performance gaming.
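As an illustration of how the five dimensions named above might combine into a single reward, the following Python sketch scores one submitted task. The weights, field names, and the `score_task` function are assumptions introduced here for illustration only; the text names the dimensions but does not give a formula, and the actual GAME engine may compute rewards differently.

```python
from dataclasses import dataclass

# Hypothetical weights: the paper names the five dimensions but not their values.
WEIGHTS = {
    "base_performance": 10.0,
    "spatial_equity": 5.0,
    "temporal_diversity": 3.0,
    "personal_progress": 2.0,
    "consistency": 4.0,
}


@dataclass
class TaskContext:
    """Inputs for scoring one task (all field names are illustrative)."""
    task_completed: bool           # base performance
    area_coverage: float           # 0..1, how well this area is already sampled
    slot_coverage: float           # 0..1, how well this time slot is already sampled
    improved_on_own_average: bool  # personal progress
    streak_days: int               # consecutive active days (consistency)


def score_task(ctx: TaskContext, max_streak_days: int = 5) -> float:
    """Return the points for one task under the assumed additive model."""
    if not ctx.task_completed:
        return 0.0
    points = WEIGHTS["base_performance"]
    # Under-sampled areas and time slots earn a larger booster.
    points += WEIGHTS["spatial_equity"] * (1.0 - ctx.area_coverage)
    points += WEIGHTS["temporal_diversity"] * (1.0 - ctx.slot_coverage)
    if ctx.improved_on_own_average:
        points += WEIGHTS["personal_progress"]
    # The streak bonus grows linearly and is capped at max_streak_days.
    capped = min(ctx.streak_days, max_streak_days)
    points += WEIGHTS["consistency"] * capped / max_streak_days
    return round(points, 2)
```

Under these assumed weights, a task in a sparsely sampled area earns more than the same task in a well-covered one, which is one simple way to steer contributions toward geospatial gaps without changing the base reward.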

Importantly, our solution, named GAMEDCROWD, integrates the GAME with the GREENCROWD web app, providing an experimental environment for managing spatial crowdsourcing campaigns whose modular design facilitates rapid adoption and configuration. As a result, the GAMEDCROWD platform is suitable not only for controlled experimental scenarios but also ready for deployment in real-world urban observatory programmes.

1.2. Research Objectives and Questions

Three objectives guide the work: (i) to quantify the extent to which discrete game mechanics—points, daily streak bonuses and real-time leaderboards—elevate university volunteers’ self-reported engagement, accomplishment, and immersion during location-based data collection; (ii) to uncover the motivational and behavioural pathways through which those mechanics operate; and (iii) to delineate the contextual drivers and constraints that determine whether gamification can sustain contribution volumes while safeguarding data quality in urban citizen science infrastructures. Consistent with these aims, we advance the following testable statement:

Building upon the self-determination theory (SDT), which posits that competence, autonomy, and relatedness are central to sustained motivation [50], we hypothesise that the integration of game elements (points, streaks, leaderboards) will enhance participants’ sense of accomplishment (competence), increase engagement (autonomy through voluntary participation), and support moderate immersion (relatedness via social comparison features).

Hypothesis 1.

The introduction of gamified elements (points, daily streaks, and leaderboards) into a location-based citizen science platform will significantly enhance participants’ reported sense of accomplishment and engagement, in line with SDT (competence, autonomy, and relatedness).

To probe the mechanisms and boundary conditions underlying this hypothesis, we address the following three interrelated research questions:

RQ1 How do university students experience engagement, accomplishment, and immersion while participating in a gamified, campus-wide citizen science experiment?

RQ2 What motivational and behavioural patterns arise from using points, streaks, and leaderboards during geospatial data collection?

RQ3 Which qualitative factors—such as perceived value, social drivers, or logistical barriers—influence the participation endurance and the perceived credibility of the data produced?

We investigate whether game mechanics enhance both the volume and subjective quality of citizen contributions, insights that are critical for designing next-generation participatory platforms. Given the limited sample size and the voluntary nature of participation, this study is best interpreted as a preliminary investigation—a pilot field trial designed to uncover early-stage insights into gamified spatial crowdsourcing. Its primary contribution lies in revealing methodological considerations and design opportunities to inform subsequent, larger-scale experiments with more robust statistical power.

By doing so, this study contributes to the broader discourse on digital solutions for participatory governance in smart cities, demonstrating how gamified platforms can foster active citizen involvement in data-driven urban interventions. By embedding motivational game elements within a location-based system, we explore how digital tools can enhance civic engagement, improve environmental data quality, and support more inclusive and responsive forms of urban governance.

To address the research questions outlined above, the remainder of this paper is structured as follows: Section 2 presents the related work, providing context and background for this study. Section 3 outlines the research methodology, including a detailed description of the research design and the GAMEDCROWD solution for gamified spatial crowdsourcing. Section 4 presents the results, followed by a thorough discussion in Section 5. Finally, the conclusions and future directions are discussed in Section 6.

2. Related Work

As this research spans multiple dimensions, including citizen science, rewards, gamification, and participatory platforms, the related work is organised accordingly to provide a comprehensive background.

2.1. Citizen Science and Engagement

Over the last decade, citizen science has matured from ad hoc volunteer observation to a recognised research infrastructure, particularly in environmental and urban studies. Large-scale syntheses show that citizen contributions now underpin high-resolution biodiversity, air-quality, and climate datasets that would be prohibitively costly to obtain otherwise [1]. At the same time, longitudinal audits reveal a persistent “long-tail” pattern: fewer than 10% of registrants sustain activity beyond the first month, leaving spatial and socio-demographic gaps in coverage [51]. Data-quality meta-analyses suggest that volunteer observations can match expert benchmarks when accompanied by robust protocols, training, and post hoc validation [6]. Yet, misidentifications and protocol drift rise sharply once motivation fades [16]. Consequently, contemporary work frames engagement as a multidimensional construct—involving the cognitive, affective, behavioural, and social dimensions—that must be actively engineered throughout the project life cycle [52].

2.2. Gamification

Gamification is commonly defined as “the use of game-design elements in non-game contexts” [53]. Following this definition, we refer to the deliberate integration of points, badges, leaderboards, daily streaks, and other game mechanics into platforms whose primary purpose is not entertainment. A review of 819 Web of Science (WoS) indexed research papers confirms that points, badges, and leaderboards (PBL) dominate practice, with education, health, and crowdsourcing as the primary application areas [54]. Meta-analytic evidence based on Hedges’ g [55] shows small-to-moderate positive effects on cognitive (g ≈ 0.49), motivational (g ≈ 0.36), and behavioural (g ≈ 0.25) outcomes in formal learning [56]. The heterogeneity of these effects is explained by design nuance: narrative context, balanced competition–cooperation, and personalised feedback consistently amplify impact, whereas “points-only” implementations risk novelty decay and user fatigue. These findings highlight the need for theory-led, user-centred gamification rather than bolt-on reward schemes.
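For readers unfamiliar with the effect-size metric reported above, the standard two-group form of Hedges’ g is sketched below. The group labels (1 for a gamified condition, 2 for a control) are an assumption made here for illustration, and the cited meta-analysis may use a variant of the small-sample correction.

```latex
% Standardised mean difference with small-sample correction (Hedges' g)
g = J \cdot \frac{\bar{x}_1 - \bar{x}_2}{s_p},
\qquad
s_p = \sqrt{\frac{(n_1 - 1)\,s_1^{2} + (n_2 - 1)\,s_2^{2}}{n_1 + n_2 - 2}},
\qquad
J \approx 1 - \frac{3}{4\,(n_1 + n_2) - 9}
```

Here the \bar{x}_i are group means, the s_i are group standard deviations, the n_i are group sizes, s_p is the pooled standard deviation, and J shrinks the estimate slightly when samples are small.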

2.3. Gamification in Citizen Science

While the integration of gamification into citizen science platforms is frequently promoted as a strategy to enhance engagement and data quality, empirical evidence remains limited and nuanced. Controlled field studies have shown that the deployment of game mechanics, such as progress points, leaderboards, and spatial “quests”, can increase short-term participation rates and expand the spatial and thematic coverage of contributions [27]. However, the literature consistently highlights that such effects are often transient: participant motivation typically wanes after the initial novelty effect, leading to a decline in retention and the emergence of a core group of highly engaged contributors, while the majority contribute sporadically or disengage entirely [1,15,17].

Crucially, the impact of gamification on data quality is ambivalent. On one hand, competition and feedback mechanisms can improve accuracy and learning among motivated participants [27,57]. On the other hand, evidence suggests risks associated with “quantity-over-quality” behaviours, careless or opportunistic submissions, and protocol violations, particularly when incentives are not carefully aligned with project goals or when feedback and recognition mechanisms are absent [16,33,58].

Moreover, gamified citizen science initiatives often default to a contributory model, where participants are restricted to data collection roles and rarely engage in co-design or interpretation of the collected data, i.e., they do not participate in the different stages of the citizen science loop. This limitation reduces the sense of ownership, long-term motivation, and ultimately, the sustainability and inclusivity of the projects [29,30,34]. Additionally, digital game mechanics may disproportionately attract technologically literate individuals, inadvertently exacerbating demographic biases and thereby limiting broader community involvement [29,30].

In summary, while gamification provides a valuable toolkit for fostering participation and learning in citizen science, significant challenges remain regarding the long-term retention of volunteers once the novelty fades, the design of equitable and inclusive game layers, and the implementation of robust mechanisms to safeguard data quality against “gaming the system”. Addressing these gaps will be critical for advancing the scientific and societal impact of gamified citizen science platforms.

2.4. Theoretical Foundations

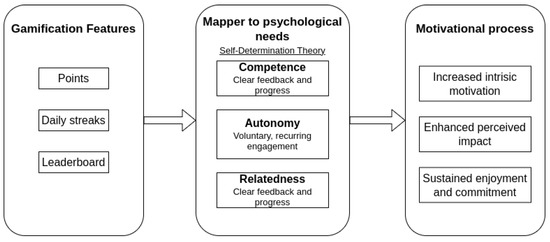

This study draws explicitly on SDT [59], a well-established behavioural framework that explains how intrinsic motivation and sustained engagement emerge from fulfilling three basic psychological needs: competence, autonomy, and relatedness.

Competence refers to participants’ perception of effectiveness and mastery over tasks. Within gamified citizen science contexts, this need can be satisfied through clear feedback mechanisms, progressive challenges, and recognition of achievements [27,57].

Autonomy is experienced when participants feel they have control and choice over their involvement. Game mechanics that allow flexibility in task selection, optional challenges, and personalised pacing directly enhance autonomy [1,60].

Relatedness pertains to feelings of social connection, belonging, and meaningful interaction with others. Leaderboards, team activities, and social comparison elements foster this connectedness by situating individual performance within a broader social context [1,27].

In this study, the gamification elements of points, daily streaks, and leaderboards were deliberately selected and designed to map directly onto these psychological needs. Specifically,

Points provide immediate, clear feedback on task performance, directly fostering a sense of competence and goal attainment.

Daily streaks encourage participants to return and sustain engagement over consecutive days, strengthening perceptions of autonomy through repeated voluntary choice.

Real-time leaderboards enable participants to socially contextualise their contributions, thereby promoting a sense of relatedness by visually connecting individual progress with that of their peers.
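The three mechanics described above can be made concrete with a short Python sketch that derives a daily streak and a leaderboard from a log of point events. The event format, the streak rule (consecutive calendar days ending today), and the alphabetical tie-break are assumptions introduced for illustration; they are not taken from the GREENCROWD implementation.

```python
from collections import defaultdict
from datetime import date, timedelta


def current_streak(active_days: set[date], today: date) -> int:
    """Count consecutive active days ending at `today` (0 if today is inactive)."""
    streak = 0
    day = today
    while day in active_days:
        streak += 1
        day -= timedelta(days=1)
    return streak


def leaderboard(events: list[tuple[str, date, int]]) -> list[tuple[str, int]]:
    """Rank users by total points, highest first; ties are broken alphabetically."""
    totals: dict[str, int] = defaultdict(int)
    for user, _day, points in events:
        totals[user] += points
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical event log: (user, day of submission, points awarded).
events = [
    ("ana", date(2025, 1, 6), 10),
    ("ana", date(2025, 1, 7), 12),
    ("ben", date(2025, 1, 7), 30),
    ("ana", date(2025, 1, 8), 5),
]

ana_days = {day for user, day, _ in events if user == "ana"}
ana_streak = current_streak(ana_days, today=date(2025, 1, 8))
ranking = leaderboard(events)
```

In this toy log, one user leads on total points while the other holds the longer streak, which shows how the two mechanics can reward different participation styles.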

This explicit theoretical grounding allows us to predict not only whether gamification affects behaviour, but also why and through which psychological mechanisms these effects might occur, providing deeper insights into the motivational dynamics underlying citizen science participation.

Recent research underscores that engagement and sustained participation in citizen science are driven by a complex interplay of motivational factors, which include but are not limited to intrinsic interest, perceived impact, and social or educational benefits [29,60]. Intrinsic motivation stems from personal interest or the desire to feel a sense of accomplishment, whereas extrinsic motivation is driven by rewards, the outcome of an action, approval, or the avoidance of disapproval. While explicit adoption of formal motivational theories such as self-determination theory is still limited in the environmental citizen science literature, empirical findings consistently reveal that autonomy, perceived competence, and social connectedness are critical for fostering long-term motivation and high-quality contributions [34,57].

Game elements in citizen science—such as optional tasks, tiered challenges, personalised feedback, and collaborative activities—are frequently aligned with these motivational drivers. Studies have shown that autonomy-supportive and competence-building activities, as well as mechanisms for recognition and social interaction, are associated with higher participant retention and improved data quality [1,27,60].

Furthermore, systematic reviews emphasise the importance of triangulating quantitative and qualitative methods—for example, combining digital logs of participant activity, structured surveys, and in-depth interviews—to distinguish between initial novelty effects and more enduring, deeper forms of engagement [1,16,57]. This mixed-methods approach is increasingly regarded as essential for evaluating both the effectiveness of game-based interventions and the underlying mechanisms of sustained motivation in citizen science contexts.

Nevertheless, a recurring gap in the literature is the lack of validated instruments specifically tailored to measure “gamefulness” and the quality of engagement in real-world citizen science. As projects grow in scale and diversify, developing robust, context-sensitive measures of participant experience—including enjoyment, immersion, sense of impact, and creative contribution—remains a critical area for future research [29,30]. To visualise this framework, Figure 1 presents a logic model that maps the relationship between gamification elements, psychological needs (as per self-determination theory), and expected behavioural outcomes.

Figure 1. Conceptual model linking gamification mechanics to psychological needs and behavioural outcomes.

3. Methodology

To conduct the study, we developed GAMEDCROWD, a custom experimental platform that combines the GREENCROWD web app with the GAME gamification engine, building on our earlier contribution to the GAME [61]. The platform was designed to support the evaluation of gamification strategies in spatial crowdsourcing and draws on insights from the authors’ prior implementations with the SOCIO-BEE app [61] and the GREENGAGE app [62]. Because the GAME exposes a RESTful API, it could also be easily integrated into third-party spatial crowdsourcing apps. The GREENCROWD experimental web app serves as a lightweight, modular interface that integrates with our gamification engine. This setup enables real-time reward computation based on user actions and contextual factors (e.g., geolocation, time, performance consistency), allowing fine-grained tracking of engagement and participation metrics. The gamification engine was purpose-built to simulate various reward conditions in controlled environments while maintaining the flexibility to deploy in real-world data collection scenarios. All user interactions were logged for subsequent analysis, and the frontend remained agnostic to specific domain content, ensuring that results are generalisable beyond the immediate use case.

3.1. Study Design

We conducted a five-day field experiment to investigate the effectiveness of gamification in spatial crowdsourcing activities within citizen science. We used quantitative and qualitative methods concurrently because (i) existing research on this topic is limited and mostly does not track changes over time, and (ii) our group of volunteer participants was relatively small: too small for strong statistical analysis, but large enough to gain deep insights into their experiences. Although brief, the five-day period was sufficient to capture fluctuations in motivation and participation, as daily, on-site tasks simulated key aspects of sustained engagement. By combining activity logs, surveys, and interviews, we identified the extent, nature, and drivers of changes in participant engagement.

Participants and Setting

We recruited participants from two classes at the University of Deusto.

- Registered users: a total of 49 users created an account with GAMEDCROWD; however, just 40 participants completed a baseline socio-demographic survey (age bracket, gender identity, study major, employment status, digital-skills self-rating, perceived disadvantage, residence postcode). Most participants (38 out of 49; 77.55%) belonged to the 18–24 years age group, classified as young adults or university-age. Only two participants fell outside this group—one in the 25–34 range (early adulthood) and one in the 45–54 range (late adulthood), each representing 2.04% of the sample. Nine users (18.37%) did not declare their age range. The interquartile range (IQR = 20–24)—which represents the middle 50% of the participants’ age distribution—confirms that most respondents were in their early twenties, aligning with the university student demographic (Table 1). In terms of gender identity, 30 participants identified as male (75%) and 10 as female (25%), indicating a gender imbalance in the sample. Importantly, no significant differences were observed across groups regarding study major, employment status, digital-skills self-rating, perceived disadvantage, or residential location, supporting the demographic homogeneity of the analytical sample.

Table 1. Distribution of participants across predefined age brackets, along with corresponding life-stage categories.

- Active contributors: seven students (15% of registrants) submitted at least one complete task set (Appendix A) during the study window; this subgroup constitutes the analytical sample for behavioural metrics.

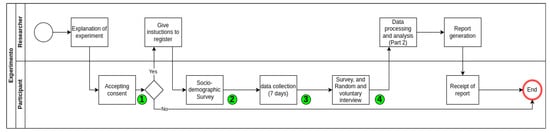

- Ethics: the protocol (Appendix B), which is depicted in Figure 2, was reviewed and approved by the Ethical Assessment Committee of the University of Deusto (Ref. ETK-61/24-25). In-app onboarding provided a study information sheet; participants gave explicit, GDPR-compliant e-consent and could withdraw at any point without receiving a penalty.

Figure 2. The ethics committee approved the protocol diagram for the experiment.

While the analytical sample for behavioural analysis was relatively small (n = 7), this is consistent with exploratory gamified studies in educational and applied contexts. For instance, Anderle et al. [63] evaluated the impact of gamified tools in small groups of 14–15 participants within a similarly short intervention period. In our study, 49 participants registered on the platform and 40 completed the baseline sociodemographic survey. However, only 7 participants completed at least one full task set during the intervention, forming the core analytical group for behavioural metrics. This limitation reflects the institutional and logistical constraints of embedding the study within scheduled university classes, where participation in daily field tasks could not be enforced. Therefore, this study is positioned as an exploratory field trial designed to generate preliminary insights into gamified spatial crowdsourcing dynamics, rather than to provide statistically generalisable results. The implications of this limitation are discussed in Section 6.

3.2. Intervention: The Gamified Experiment

We implemented the intervention over five consecutive days (Monday to Friday) on a university campus using the GREENCROWD web app. Initially, a brief in-person meeting was held to present the experiment, recruit participants, and address any questions that may have arisen. All subsequent activities, including daily tasks, reminders, data collection, and post-experiment interviews, were conducted remotely.

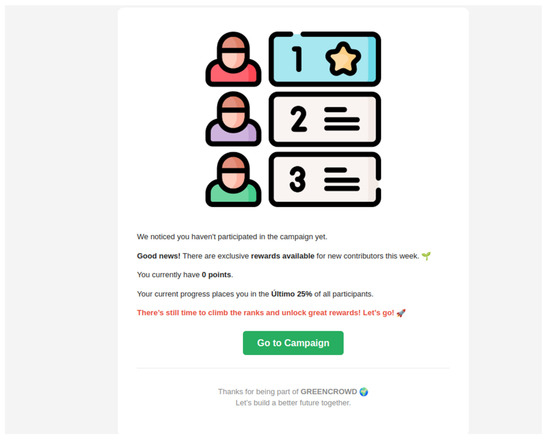

3.2.1. Daily Task Structure

Each day at 9:00 AM, three unique points of interest (POIs) were activated within each of the two designated campus areas, yielding a total of six active POIs per day. These POIs were rotated daily to maximise exposure to varied campus environments, resulting in 30 distinct POIs over the five-day intervention. Environmental diversity was operationally defined as variation in the contextual features of POIs, including surface type (natural vs. paved), the presence or absence of vegetation, pedestrian traffic intensity, and surrounding infrastructure. Participants were notified by email at the same hour (Appendix D), serving as a task reminder and motivational tool. The emails included each participant’s current position on the public leaderboard and a tailored motivational message, encouraging those in leading positions to maintain performance, and inviting inactive users to re-engage and earn more points.

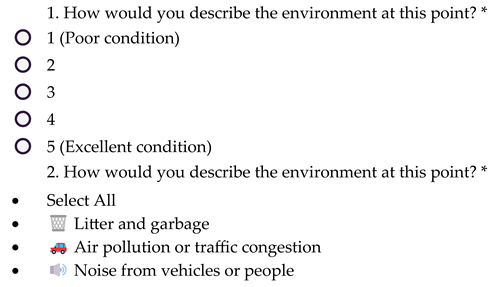

3.2.2. Micro-Task Components

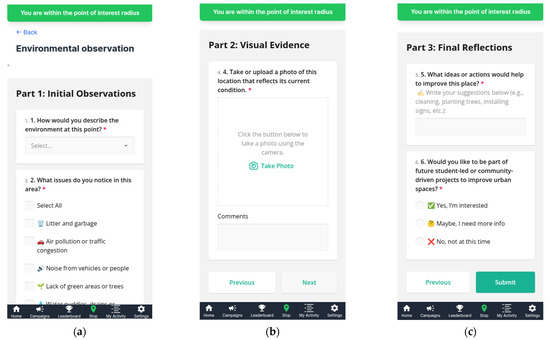

For each POI, participants were required to:

- Complete a site survey (environmental rating, issue identification, and site usage frequency).

- Upload a geo-tagged photograph reflecting current site conditions.

- Provide suggestions for site improvement and indicate willingness to participate in future student-led initiatives.

3.2.3. GAMEDCROWD Platform

GAMEDCROWD is a gamified spatial crowdsourcing platform that integrates the GREENCROWD web app with the GAME (see Section 3.2.4) and is specifically designed to facilitate spatially distributed citizen science activities in urban contexts [64]. It supports the structured collection of geolocated environmental data (e.g., site conditions, public space usage, and perceived issues), with participation encouraged by an open-source gamification engine called GAME (Goals And Motivation Engine) [65]. The web app, integrated with the gamification engine, serves as a means for both data acquisition and civic engagement, targeting university students, local communities, and research practitioners seeking to mobilise non-professional contributors in data-driven sustainability initiatives. GAMEDCROWD addresses a core challenge in citizen science, namely maintaining sustained participation over time, by embedding lightweight gamification layers (e.g., points, streaks, leaderboards) without compromising the privacy or agency of users. It is particularly suited for campus- or district-scale deployments that require fine-grained spatial resolution and structured tasks.

As an open-source platform, both the GREENCROWD web app and the GAME are licensed under a permissive model that encourages community-driven development. Developers are free to audit, modify, or extend the platform, fostering long-term sustainability and collaborative innovation. Importantly, the system is explicitly designed to protect user privacy: it does not collect any personal information such as names or email addresses. Instead, user identity and attribute management are fully delegated to a Keycloak identity and access management (IAM) system, which authenticates users and issues pseudonymous tokens that contain only the necessary claims (e.g., age range, gender identity, language preference). The GAMEDCROWD backend logic processes these tokens without storing or accessing any direct user metadata, thereby ensuring GDPR compliance and minimising ethical risks in experimental contexts.
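The token-handling idea described above can be illustrated with a minimal sketch. It assumes the pseudonymous tokens are standard JSON Web Tokens, which the text does not state explicitly. The claim names (`age_range`, `gender_identity`, `locale`) and the function name are hypothetical, and the signature is deliberately not verified, so this is not a substitute for a real token-validation step.

```python
import base64
import json

# Hypothetical claim names; the platform's actual claim keys are not given in the text.
ALLOWED_CLAIMS = {"sub", "age_range", "gender_identity", "locale"}


def read_pseudonymous_claims(token):
    """Decode the payload segment of a JWT and keep only the allowed claims.

    Illustration only: the signature is NOT verified here.
    """
    payload_b64 = token.split(".")[1]
    # Restore the base64 padding that JWT encoding strips.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    claims = json.loads(base64.urlsafe_b64decode(padded))
    return {key: value for key, value in claims.items() if key in ALLOWED_CLAIMS}


def _encode_segment(obj):
    """Build one unpadded base64url segment for the demo token below."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Demo token with a made-up pseudonymous subject and no signature.
demo_token = ".".join([
    _encode_segment({"alg": "none"}),
    _encode_segment({"sub": "a1b2c3", "age_range": "18-24", "locale": "es", "iat": 0}),
    "",
])
demo_claims = read_pseudonymous_claims(demo_token)
```

Filtering against an explicit allow-list mirrors the stated design goal that the backend only ever handles the minimum set of attributes.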

The application interface is composed of the following main components:

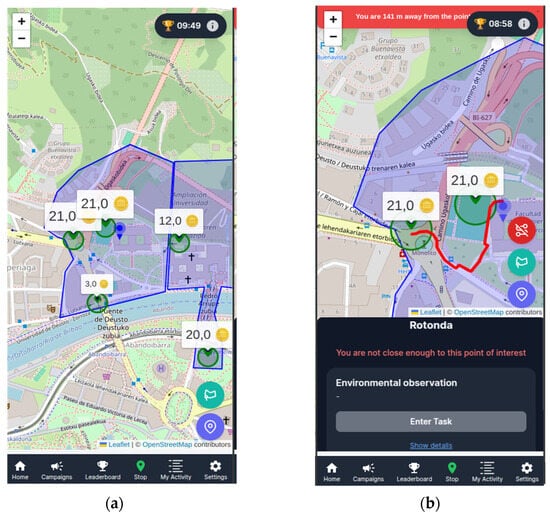

- Interactive map with POIs and dynamic scoring: after logging in, users can join any available campaign in the system. Then, the user views a map overlaid with daily POIs, each associated with specific environmental observation tasks and point values (depending on the gamification group to which users belong). These values are dynamically updated based on the time, frequency, or contextual rules defined in the campaign logic (Figure 3).

Figure 3. GREENCROWD web app’s map interface showing the active POIs and associated points rewards (a), and when the user selects a point and creates a route to reach there (b).

- Modular task workflow: in this experiment, the tasks were structured in a three-step format as follows: (i) environmental perception ratings, (ii) geotagged photo uploads, and (iii) suggestion prompts and willingness-to-engage indicators. This modularity simplifies user experience and improves data completeness (Figure 4).

Figure 4. Tasks comprised three stages: environmental perception ratings (a), geotagged photo submissions (b), and engagement intent indicators with suggestions (c).

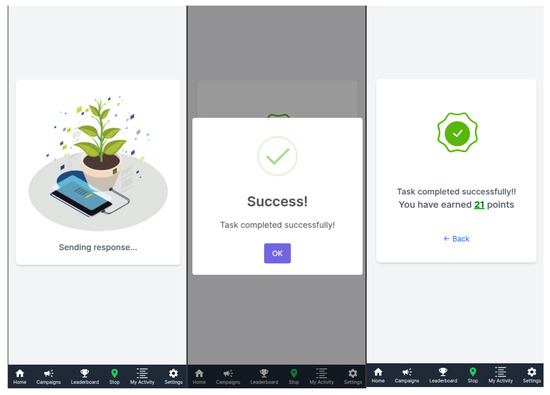

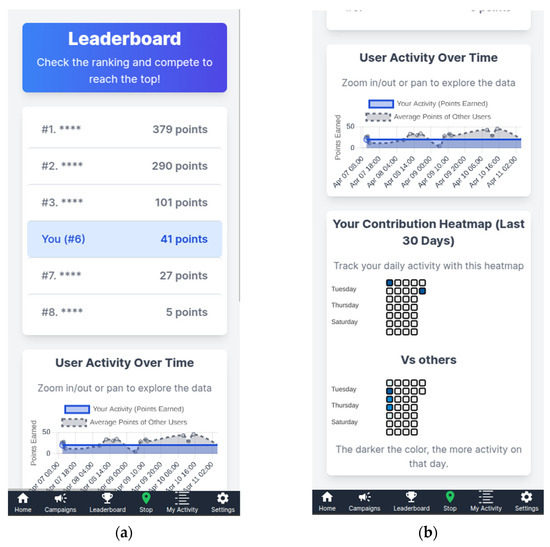

- Points, leaderboard, and feedback layer: after submitting a response, participants are shown the points earned for the completed task (Figure 5). Participants can monitor their own cumulative points and relative position on a public leaderboard. While users can see their own alias and ranking, other entries appear anonymised (e.g., “***123”), preserving participant confidentiality while still leveraging social comparison as a motivational driver (Figure 6).

Figure 5. Submitting a response to a task and achieving points for it.

Figure 6. The leaderboard anonymously displays the points of all participants, including the user’s own; individual identities are not revealed, and anonymous users are labelled with “****” (a). The activity charts below visualise the user’s performance in comparison to the collective activity of other participants (b).

- Device-agnostic and responsive design: GREENCROWD is optimised for mobile devices, supporting real-time geolocation, camera integration, and responsive layouts, thereby reducing barriers to participation in field-based conditions.

Collectively, these features establish GAMEDCROWD as a technically robust and ethically sound solution for participatory sensing. Its combination of open-source accessibility, privacy-by-design principles, and user-centred gamification enables researchers to deploy scientifically credible interventions while preserving the autonomy and trust of contributors.
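The anonymised leaderboard described among the interface components can be sketched as follows. This is a hedged illustration rather than the platform's code: the record fields (`user_id`, `alias`, `points`), the function name, and the masking rule (three asterisks plus the last three characters of an internal identifier, modelled on the “***123” example) are assumptions.

```python
def leaderboard_view(entries, viewer_id):
    """Return a ranked leaderboard in which only the viewer's alias is readable."""
    ranked = sorted(entries, key=lambda entry: entry["points"], reverse=True)
    view = []
    for rank, entry in enumerate(ranked, start=1):
        if entry["user_id"] == viewer_id:
            alias = entry["alias"]  # the viewer sees their own alias
        else:
            alias = "***" + entry["user_id"][-3:]  # assumed masking rule
        view.append({"rank": rank, "alias": alias, "points": entry["points"]})
    return view


# Made-up entries for illustration only.
demo_entries = [
    {"user_id": "u000123", "alias": "ana", "points": 40},
    {"user_id": "u000456", "alias": "ben", "points": 55},
]
demo_view = leaderboard_view(demo_entries, viewer_id="u000123")
```

Masking at render time, rather than storing masked names, keeps the ranking logic independent of who is looking at the board.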

3.2.4. GAME

Participants were randomly assigned to one of three gamification groups—Random, Static, or Adaptive—each implementing a distinct point-calculation strategy based on task completion.

- Random group: participants received a score generated by a stochastic function. If no previous point history existed, a random integer between 0 and 10 was assigned. If prior scores were available, a new score was randomly drawn from the range between the minimum and maximum historical values of previously assigned points. This approach simulates unpredictable reward schedules often found in game mechanics.

- Static group: as in the Random group, a random value between 0 and 10 was assigned when no historical data existed. Once past scores existed, however, the participant’s reward was set to the mean of the minimum and maximum previous values. This method introduces a fixed progression logic, providing more predictable feedback than random assignment while still retaining some variability.

- Adaptive group: this group received dynamically calculated scores based on five reward dimensions informed by user behaviour and task context:

  - Base points (DIM_BP): adjusted inversely according to the number of previous unique responses at the same point of interest (POI), to encourage spatial equity in data collection.

  - Location-based equity (DIM_LBE): if a POI had fewer responses than the average across POIs, a bonus equivalent to 50% of base points was granted.

  - Time diversity (DIM_TD): a bonus or penalty based on participation at underrepresented time slots, encouraging temporal coverage. This was computed by comparing task submissions during the current time window versus others.

  - Personal performance (DIM_PP): reflects the user’s behavioural rhythm. If a participant’s task submission interval improved relative to their average, additional points were awarded.

  - Streak bonus (DIM_S): rewards consistent daily participation using an exponential formula scaled by the number of consecutive participation days.

Each dimension was calculated independently and summed to produce the total reward. This adaptive method aims to reinforce behaviours aligned with the goals of spatial, temporal, and participatory balance in citizen science.
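The three point-calculation strategies can be sketched as follows. This is a minimal illustration under stated assumptions, not the GAME source code. The Random and Static rules and the 50% location bonus follow the description above. Everything else is assumed for illustration: the function names, the base value of 10 points, the `1 / (1 + n)` inverse scaling, the linear time-diversity term, the 20% personal-performance bonus, the reading of an "improved" interval as a shorter one, and the exponential streak formula with growth factor 1.5.

```python
import random


def random_points(history, rng=random):
    """Random group: integer in 0-10 at first, then within the historical min-max range."""
    if not history:
        return rng.randint(0, 10)
    return rng.randint(min(history), max(history))


def static_points(history, rng=random):
    """Static group: random integer in 0-10 at first, then the midpoint of min and max."""
    if not history:
        return rng.randint(0, 10)
    return (min(history) + max(history)) / 2


def adaptive_points(poi_responses, mean_poi_responses, slot_share, n_slots,
                    last_interval, mean_interval, streak_days,
                    base=10.0, pp_rate=0.2, streak_growth=1.5):
    """Adaptive group: five dimensions computed independently, then summed."""
    # DIM_BP: fewer previous unique responses at this POI means more points (assumed form).
    dim_bp = base / (1 + poi_responses)
    # DIM_LBE: 50% of base points when the POI is below the cross-POI average.
    dim_lbe = 0.5 * dim_bp if poi_responses < mean_poi_responses else 0.0
    # DIM_TD: bonus when the current time window holds less than an even share
    # of submissions, penalty when it holds more (assumed linear form).
    dim_td = dim_bp * (1 / n_slots - slot_share)
    # DIM_PP: bonus when the latest submission interval beats the user's average (assumed rate).
    dim_pp = pp_rate * dim_bp if last_interval < mean_interval else 0.0
    # DIM_S: exponential in consecutive participation days (assumed formula).
    dim_s = streak_growth ** streak_days - 1.0
    dims = {"DIM_BP": dim_bp, "DIM_LBE": dim_lbe, "DIM_TD": dim_td,
            "DIM_PP": dim_pp, "DIM_S": dim_s}
    dims["total"] = sum(dims.values())
    return dims


# Example: first response at an under-visited POI, evenly used time slot, no streak yet.
demo_reward = adaptive_points(poi_responses=0, mean_poi_responses=2.0,
                              slot_share=0.25, n_slots=4,
                              last_interval=10.0, mean_interval=20.0,
                              streak_days=0)
```

Returning the per-dimension values alongside the total makes it easy to show participants why a given POI is worth more, which matters if the points are meant to steer behaviour.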

Participants could view their score for each completed task in real time, as well as their cumulative ranking on a dynamic public leaderboard. While modest material prizes were awarded at the end of the intervention, their function was explicitly framed as recognition, not as the primary motivational driver. The gamification was implemented through the following:

- Points: awarded for each completed survey and photo submission. Participants could see their points both before and after each task.

- Leaderboard: a public, real-time leaderboard displayed cumulative points and fostered social comparison.

- Material prizes: at the study’s conclusion, we awarded eight material prizes, each valued at EUR 25–EUR 30, to acknowledge participants’ contributions. These included LED desk lamps with wireless chargers, high-capacity USB 3.0 drives, wireless earbuds, and external battery packs (27,000 mAh, 22.5 W). The purpose of these rewards was to acknowledge and appreciate participants’ involvement, rather than to act as the primary incentive for participation. All prizes were communicated transparently to participants before the intervention, with an emphasis on their role as a token of appreciation rather than as a driving force for competition.

3.2.5. Technical Support and Compliance

A technical support form was available throughout the experiment. Only three support requests were received, all of which were resolved within minutes. All activities and data were managed digitally; location and timestamps were logged automatically to ensure data quality and behavioural traceability.

3.2.6. Engagement Assessment

Engagement was assessed using two data sources:

- Quantitative data: task completion logs and the GAMEFULQUEST post-test scale (focusing on the accomplishment and immersion dimensions) [66].

- Qualitative data: semi-structured interviews, conducted remotely after the intervention, exploring motivation, perceptions of gamification, and the impact on engagement.

This structure ensured a robust yet feasible field experiment, with minimal logistical friction and maximised data integrity.

3.3. Data Collection and Metrics

To ensure conceptual clarity, we defined key behavioural constructs as follows:

Engagement was operationalised as the submission of at least one complete task set, where a task set refers to a predefined group of three geolocated microtasks assigned per day. Participants who completed one or more full task sets during the study period were considered behaviourally engaged.

The task completion rate was calculated as the ratio of completed tasks to the total number of tasks available to each participant during the intervention window.

Additional indicators included response time per task, spatial diversity (number of distinct POIs visited), and temporal consistency, which measured the number of days with at least one task submission. These metrics were extracted from platform logs and used to evaluate user behaviour across the different gamification conditions.
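The behavioural indicators defined above can be computed from log rows along the following lines. This is a sketch under assumptions: the log format (one `(poi_id, day)` pair per submission), the function name, and the treatment of repeated submissions for the same POI and day as a single completed task are all hypothetical. The denominator of 30 assumes that every POI activated during the five days was available to the participant.

```python
def behaviour_metrics(submissions, tasks_available):
    """Summarise one participant's log rows, given as (poi_id, day) pairs."""
    unique = set(submissions)  # count repeated submissions for a POI and day once
    return {
        "completion_rate": len(unique) / tasks_available,
        "spatial_diversity": len({poi for poi, _ in unique}),
        "temporal_consistency": len({day for _, day in unique}),
    }


# Made-up log rows for illustration only; not study data.
demo_log = [("poi_01", "Mon"), ("poi_02", "Mon"), ("poi_07", "Tue"), ("poi_07", "Tue")]
demo_metrics = behaviour_metrics(demo_log, tasks_available=30)
```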

3.4. Qualitative Analysis Protocol

To complement the behavioural metrics, qualitative insights were gathered through post-experiment semi-structured interviews. Out of the seven participants who completed at least one full task set, four agreed to participate in an interview. All interviews were conducted individually and remotely (via video call), with durations ranging from 16 to 33 min.

A thematic analysis was carried out manually due to the small number of participants. The coding process followed a deductive approach guided by the self-determination theory (SDT) framework, with codes aligned to the psychological needs of competence, autonomy, and relatedness. Predefined analytical categories were also used to align qualitative insights with behavioural data, particularly concerning engagement, motivation, and perceived impact.

Only one researcher conducted and coded the interviews. Given the limited sample, no inter-rater reliability coefficient was calculated. However, direct quotations and structured coding notes were cross-checked against survey data to ensure interpretative consistency.

4. Results and Analysis

4.1. Participant Profile: Demographics and Participation Rates

A total of seven registered participants (≈15%) engaged in at least one complete set of geo-located tasks during the five-day intervention, thus forming the analytical sample for behavioural and engagement analyses. However, only five of these participants completed the post-intervention survey (Appendix C), which assessed perceived accomplishment and immersion. The demographics of the active participants were very similar to those of the larger group, which helps confirm the reliability of our later comparisons. The overall participation rate (active contributors/registrants) was 15%, which aligns with rates reported in similar campus-based citizen science interventions [27].

4.2. Quantitative Findings

4.2.1. Distribution of Engagement, Accomplishment, and Immersion

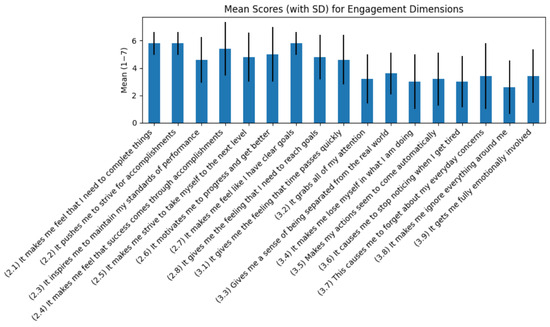

Post-experiment engagement was assessed using validated subscales (accomplishment and immersion) from the GAMEFULQUEST instrument. Figure 7 depicts the mean scores and standard deviations for each survey item across the two key engagement dimensions.

Figure 7. The bar plot of mean scores and standard deviations for all GAMEFULQUEST items shows higher accomplishment than immersion across the board.

Accomplishment (M = 5.25, median = 5.75, SD = 1.14): items reflecting a sense of goal-directedness and striving for improvement received consistently high ratings (e.g., “It motivates me to progress and get better”, “It makes me feel like I have clear goals”), while items associated with self-assessment and performance standards showed moderate variance.

Immersion (M = 3.3, median = 2.88, SD = 1.55): most participants reported only moderate immersion, with higher variability on items relating to emotional involvement and separation from the real world. Only one participant reported immersion scores near the maximum, suggesting limited flow-like engagement across the sample.

To complement individual item analysis, Table 2 presents descriptive summary statistics for the overall accomplishment and immersion subscale scores, including means, medians, standard deviations, and interquartile ranges. These provide a more straightforward overview of central tendencies and variability across participants’ reported engagement experiences.

Table 2. Descriptive statistics of perceived accomplishment and immersion scores.

Given the limited sample size, no formal statistical tests (e.g., normality, variance homogeneity) were conducted. This is consistent with the exploratory nature of the study.
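For readers who wish to reproduce this kind of descriptive summary, the following sketch computes the mean, median, sample standard deviation, and quartiles of a subscale with Python's standard library. The scores are placeholders chosen for easy arithmetic and are not the study data. The function name is hypothetical, and the quartile method (the default "exclusive" method of `statistics.quantiles`) is an assumption, since the text does not say how the interquartile ranges were computed.

```python
from statistics import mean, median, quantiles, stdev


def describe(scores):
    """Return mean, median, sample SD, and (Q1, Q3) for one subscale."""
    q1, _, q3 = quantiles(scores, n=4)  # default "exclusive" method
    return {
        "mean": mean(scores),
        "median": median(scores),
        "sd": stdev(scores),
        "iqr": (q1, q3),
    }


# Placeholder values for illustration only; NOT the participants' scores.
placeholder_scores = [2.0, 3.0, 4.0, 5.0, 6.0]
demo_summary = describe(placeholder_scores)
```

With five respondents, the quartiles depend noticeably on the interpolation method, so reporting the method alongside the values helps comparison across studies.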

4.2.2. Notable Individual Differences and Trends

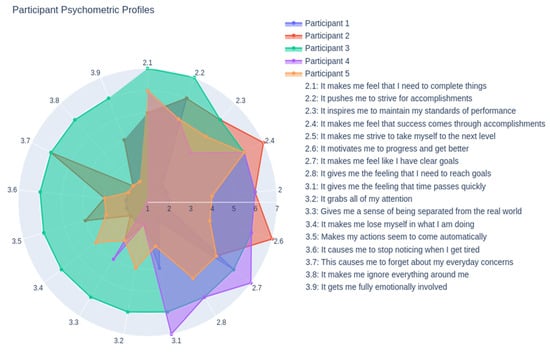

A radar plot of psychometric profiles (Figure 8) illustrates heterogeneity in how participants experienced the intervention. While accomplishment was uniformly elevated, immersion scores showed considerable spread, with some users experiencing marked “flow” and others remaining largely unaffected.

Figure 8. Radar plot of individual participant psychometric profiles, highlighting diversity in responses.

4.2.3. Association Between Task Completion and Engagement

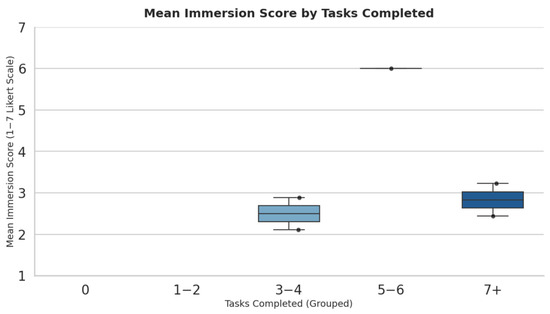

Boxplots (Figure 9 and Figure 10) visualise the relationship between the number of tasks completed and mean accomplishment or immersion scores. Due to the small sample size, only the “1–2 tasks” group is represented, so the patterns described here are tentative. Participants who completed more tasks tended to report higher accomplishment and slightly elevated immersion, though the latter dimension displayed greater variability. Outliers in both directions (i.e., highly engaged but minimally active, or vice versa) suggest that engagement is shaped not only by the quantity of participation but also by individual motivational drivers.

Figure 9. Boxplot of mean accomplishment scores by grouped task completion, with superimposed jittered data points for clarity.

Figure 10. Boxplot of mean immersion scores by grouped task completion, similarly annotated.

4.3. Qualitative Insights

To complement quantitative findings and gain a richer understanding of the participant experience, we conducted a thematic analysis of post-experiment interviews. This qualitative inquiry was designed to uncover the nuanced motivational, behavioural, and emotional pathways that shaped engagement throughout the gamified citizen science intervention. By systematically coding interview transcripts, we identified recurring themes relating to initial motivations, perceived barriers, the impact of specific gamification elements, and the broader social-emotional context of participation. The following synthesis presents the main themes and illustrative quotations and subsequently contrasts these qualitative insights with the patterns observed in quantitative data. This integrated approach provides a comprehensive account of how and why university students engaged with the platform, as well as the practical and psychological factors influencing their sustained participation.

4.3.1. Motivations for Participation

- Curiosity and novelty: several participants expressed initial curiosity about participating in a real-world experiment using a digital platform. One stated that “I wanted to see how it worked and if the platform would motivate me to do the tasks”.

- Desire to contribute: a recurring theme was the desire to contribute to environmental improvement on campus: “It felt good to think our observations could help the university or city get better”.

- Appreciation for recognition: some cited the value of being recognised or rewarded, even if modestly: “I participated more because I knew there was a leaderboard and some prizes, but not only for that”.

4.3.2. Barriers and Constraints

- Time management: all participants mentioned that balancing the experiment with their academic workload was a challenge: “Some days I just forgot or was too busy to go to the POIs”.

- Repetitiveness and task fatigue: a few noted the repetitive nature of tasks as a demotivating factor by the end of the week: “At first it was fun, but by day three it felt like doing the same thing”.

4.3.3. Perceptions of Gamification

- Leaderboard and points: most reported that seeing their position on the leaderboard was a motivator for continued participation, but only up to a point: “I liked checking if I was going up, but when I saw I couldn’t catch up, I just did it for myself”.

- Prizes as acknowledgement: participants did not view the material prizes as the main incentive, but as a positive gesture: “I would have done it anyway, but it was nice to have a little prize at the end”.

- Fairness and engagement: some voiced that the system was fair because everyone had the same opportunity each day, but also suggested ways to make the game more dynamic, such as varying tasks or giving surprise bonuses.

4.3.4. Social and Emotional Aspects

- Sense of community: two interviewees described sharing experiences with classmates, even though there was no formal team component: “We talked about it in class, comparing our scores and photos”.

- Enjoyment and frustration: while most participants described the experience as “fun” or “interesting”, minor frustrations included technical glitches and a lack of immediate feedback after submitting their work.

4.4. Integration of Results

To better understand how participants experienced gamified engagement, we synthesised quantitative and qualitative results across key dimensions. Accomplishment received relatively high scores (M = 5.25, median = 5.75, SD = 1.14), indicating that most participants felt a sense of achievement. This aligns with interview excerpts where users described the satisfaction of completing tasks and being rewarded with points. In contrast, immersion scores were lower and more variable (M = 3.3, median = 2.88, SD = 1.55), suggesting inconsistent emotional involvement. Participants explained that while tasks were initially engaging, they became repetitive over time. Table 2 presents a joint display connecting these patterns with corresponding qualitative insights.

Quantitative results established that accomplishment, reflecting goal-oriented motivation and perceived progress, was consistently high across participants, as evidenced by the elevated mean scores on the GAMEFULQUEST subscale. Interview themes strongly echo this; participants described a sense of satisfaction in completing tasks, a desire to contribute positively to their environment, and a general appreciation for being recognised through the platform’s feedback mechanisms. The leaderboard and point systems acted as immediate, visible markers of achievement, reinforcing the high accomplishment scores reported.

In contrast, immersion scores fluctuated and remained moderate. Qualitative feedback clarifies this trend: participants initially found the tasks engaging but increasingly found them repetitive by the end of the week. The lack of narrative variation or adaptive feedback contributed to diminished emotional absorption, aligning with the lower and more dispersed quantitative immersion scores.

Motivational pathways proved multifaceted. While gamification elements, such as points, leaderboards, and small prizes, generated initial enthusiasm and healthy competition, intrinsic motivators—such as personal interest, curiosity, and a sense of civic contribution—emerged as the dominant sustaining factors. Participants emphasised that while external rewards were appreciated, they were not the principal drivers of engagement. These findings support the central tenets of self-determination theory [50], particularly the importance of autonomy and competence in fostering lasting involvement.

Barriers to sustained participation, including academic workload, forgetfulness, and task repetitiveness, were reported in both data streams. Interviewed students specifically cited time constraints and the challenge of integrating participation into daily routines, which is consistent with the modest participation rate and the observed drop-off in task completion after initial days. Suggestions for improvement, such as more varied tasks and adaptive motivational messages, provide actionable directions for future platform iterations.

Overall, the triangulation of data reveals that while gamification successfully enhanced participants’ sense of accomplishment and initial engagement, its impact on deeper immersion was limited by task design and contextual constraints. The findings highlight the importance of balancing extrinsic motivators with opportunities for intrinsic satisfaction and underline the need for adaptive, user-centred design to sustain engagement in real-world citizen science settings.

5. Discussion

5.1. Interpretation of Findings

This exploratory study provides nuanced empirical insights into the behavioural and experiential effects of gamification on participant engagement, perceived accomplishment, and immersion within the context of a spatial crowdsourcing intervention in citizen science among university students. Quantitative analyses indicated that game elements, primarily points and leaderboards, were associated with an elevated sense of accomplishment, with consistently high scores on the goal orientation and performance subscales. However, immersion, conceptualised as flow-like absorption in the task, was more variable and generally moderate across the sample.

These trends align with previous findings by Koivisto and Hamari [54], who noted that the motivational impact of gamification tends to wane as the novelty fades. Similarly, Hanus and Fox [67] reported that while points and leaderboards can enhance short-term engagement, they may undermine intrinsic motivation over time. Our observation of reduced immersion by the end of the intervention resonates with these patterns. Additionally, Miller et al. [25] found that gamified citizen science environments may fail to sustain engagement when tasks become repetitive or feedback lacks perceived value, an insight echoed by participants in this study.

Although participants did not explicitly report feelings of stress or pressure related to competition, some noted that the leaderboard initially motivated them to engage but became less relevant in later stages of the intervention. For example, participants expressed that while they enjoyed tracking their progress, their behaviour was not primarily driven by outperforming others. This suggests that the competitive elements were not universally experienced as intense or stressful, but rather as supplementary motivators whose influence varied across individuals. These findings are consistent with the literature on gamification, which highlights that leaderboards can have mixed effects depending on users’ goals, perceptions of fairness, and personal orientation toward competition.

These findings align with the predictions of self-determination theory [50], which posits that engagement flourishes when activities fulfil basic psychological needs for competence, autonomy, and relatedness. The gamification mechanics employed in GAMEDCROWD appeared particularly effective in satisfying the need for competence, as reflected in participants’ reported satisfaction with progression, recognition, and achievement. Nevertheless, qualitative feedback highlighted that the repetitive structure and lack of narrative diversity limited the emergence of deep immersion. This result aligns with prior studies that emphasise the critical role of adaptive, personalised feedback and contextual variation in sustaining flow states in gamified environments.

Intrinsic motivators—such as curiosity, civic contribution, and personal interest—emerged as key sustaining factors. While extrinsic rewards initially provided a boost in engagement, most participants reported that they were secondary to the internal satisfaction of completing tasks and contributing to campus improvement. This aligns with recent meta-analyses [68], which reinforce that gamification should complement, rather than replace, intrinsic motivation.

5.2. Practical Implications

For the design of citizen science platforms, these results underscore the importance of integrating game mechanics that foster competence and provide visible, meaningful feedback, while also attending to the diversity and adaptability of task design. Leaderboards and point systems can be effective in maintaining early engagement, but sustaining long-term participation likely requires greater narrative richness and the possibility for users to shape their experience, whether through task variety, personalised feedback, or social features.

At the university level, interventions aimed at mobilising digitally literate, yet time-constrained, student populations may benefit from “lightweight” gamification layers that emphasise contribution recognition and community impact. Ensuring transparency in the reward system, minimising competition for high-value prizes, and supporting intrinsic drivers such as campus stewardship are all recommended to maximise participation and data quality.

While these trends suggest meaningful relationships between the GAMEDCROWD gamification features and participants’ reported experiences, it is important to note that the study lacked a non-gamified control group. As a result, we cannot attribute observed changes in engagement, achievement, or immersion exclusively to the intervention itself, as other external or contextual factors may have influenced participant behaviour. Nevertheless, the convergence of findings across quantitative scores, behavioural data, and qualitative feedback enhances the plausibility of the proposed mechanisms. Future studies should include a control condition to allow for more rigorous testing of causal effects.

5.3. Methodological Reflections

This study demonstrates the value of a convergent mixed-methods approach, particularly in small-N, exploratory research contexts. By triangulating psychometric instruments and semi-structured interviews, we achieved both breadth and depth of insight, thereby overcoming the limitations of any single data source. Using validated measures (e.g., GAMEFULQUEST) ensured psychometric rigour, while qualitative inquiry provided the necessary context to interpret individual variation and identify mechanisms underpinning the observed trends. This methodological pluralism is increasingly recognised as best practice in the study of complex interventions in citizen science and digital engagement [52,66].

While this study combined validated self-report instruments and post-intervention interviews to capture perceived accomplishment and emotional immersion, we recognise that engagement logs also provide valuable objective behavioural traces [69]. Although our current analysis focused on aggregated behavioural indicators—such as task completion rate, spatial diversity, and temporal consistency—future research may benefit from more granular techniques such as sequence pattern mining or log trajectory analysis. These methods have proven effective in identifying temporal engagement patterns and micro-level transitions between interaction states, offering a richer understanding of motivational dynamics and disengagement processes in asynchronous gamified environments [70,71]. When triangulated with psychometric data and qualitative insights, such multi-layered analyses can substantially deepen our understanding of engagement structures in citizen science platforms.
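As an illustration of the aggregated behavioural indicators mentioned above, the following minimal sketch computes one plausible version of each from a flat interaction log. The record layout (`LogEntry`), the function name, the sample dates, and the three operational definitions (completed over attempted tasks, distinct points of interest over all points of interest, active days over study days) are assumptions made for this example; they are not the definitions or the code used in the study.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LogEntry:
    """One task attempt in a hypothetical engagement log."""
    participant: str
    poi: str          # point-of-interest identifier (hypothetical)
    day: date         # calendar day of the attempt
    completed: bool   # True if the task was fully submitted


def indicators(entries, total_pois, study_days):
    """Aggregate per-participant indicators under the assumed definitions."""
    result = {}
    for p in sorted({e.participant for e in entries}):
        own = [e for e in entries if e.participant == p]
        done = [e for e in own if e.completed]
        result[p] = {
            # share of attempted tasks that were completed
            "completion_rate": len(done) / len(own),
            # share of all POIs with at least one completed task
            "spatial_diversity": len({e.poi for e in done}) / total_pois,
            # share of study days with at least one completed task
            "temporal_consistency": len({e.day for e in done}) / study_days,
        }
    return result


# Illustrative data only: one participant, four attempts over three days.
entries = [
    LogEntry("P1", "A", date(2025, 1, 6), True),
    LogEntry("P1", "B", date(2025, 1, 7), True),
    LogEntry("P1", "A", date(2025, 1, 8), True),
    LogEntry("P1", "C", date(2025, 1, 8), False),
]
summary = indicators(entries, total_pois=4, study_days=5)
print(summary["P1"])
```

Sequence-level techniques such as pattern mining would operate on the ordered entries rather than on these per-participant aggregates, which discard the order of events.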

5.4. Limitations

First, the small analytical sample (n = 7 for behavioural metrics; n = 5 for psychometric responses) and the restriction to a single university setting limit the generalisability of the findings. The observed effects may reflect idiosyncratic characteristics of the local context, cohort, or institutional culture. Second, potential self-selection and response bias may have inflated estimates of engagement or masked less positive experiences; for example, more motivated or tech-savvy students may have been disproportionately likely to participate and complete post-experiment surveys. Third, the near-universal digital proficiency of the sample precludes direct inference to populations with lower technology familiarity.

While 49 participants registered and 40 completed the baseline socio-demographic survey, only 7 participants submitted at least one full set of geolocated tasks, and just 5 completed the post-intervention psychometric questionnaires. This steep drop-off in participation reflects a common challenge in field-based exploratory studies, particularly when participation is voluntary and time-constrained within academic settings. Although this limits statistical generalisability, the study still offers valuable insights into early engagement dynamics and motivational pathways, which are relevant for the design of future, larger-scale interventions. We acknowledge this limitation and have framed our findings accordingly within an exploratory scope.
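The attrition reported above can be expressed as a simple participation funnel. The sketch below uses only the four counts stated in the text (49, 40, 7, and 5); the stage labels and the function name are illustrative choices.

```python
# Stage counts as reported in the text; the labels are shorthand for this example.
funnel = [
    ("registered", 49),
    ("baseline survey", 40),
    ("submitted tasks", 7),
    ("post-intervention questionnaire", 5),
]


def retention(stages):
    """Return (stage, count, share of first stage, share of previous stage)."""
    start = stages[0][1]
    previous = start
    rows = []
    for name, count in stages:
        rows.append((name, count, count / start, count / previous))
        previous = count
    return rows


rows = retention(funnel)
for name, count, overall, step in rows:
    print(f"{name:32s} n={count:2d}  overall={overall:6.1%}  step={step:6.1%}")
```

Read this way, roughly one in seven registrants (7 of 49) submitted a full set of geolocated tasks, and about one in ten (5 of 49) completed the psychometric questionnaire.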

Furthermore, the short duration of the intervention (five days) limits the ability to observe longer-term engagement patterns, and no statistical control was applied for demographic variables such as gender, study major, or socio-economic background, owing to the small sample size.

Inferential statistics were not applied because of the contextual variability within each gamification condition. Future studies with larger and more stable samples should explore non-parametric methods to estimate group-level behavioural differences more robustly.
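One non-parametric option for small groups is an exact permutation test on the difference in group means. The sketch below is offered only as an example of this family of methods, not as the analysis planned by the authors; the function name and the sample values are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_test(a, b):
    """Exact two-sided permutation test for a difference in group means.

    Enumerates every way of splitting the pooled values into groups of the
    original sizes and returns the share of splits whose absolute mean
    difference is at least as large as the observed one.
    """
    pooled = list(a) + list(b)
    observed = abs(mean(a) - mean(b))
    n = len(pooled)
    extreme = total = 0
    for idx in combinations(range(n), len(a)):
        chosen = set(idx)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(n) if i not in chosen]
        # small tolerance guards against floating-point ties
        if abs(mean(group_a) - mean(group_b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical tasks-per-participant counts under two conditions.
p_value = permutation_test([1, 2, 3], [4, 5, 6])
print(p_value)
```

Exhaustive enumeration is feasible only for small groups, because the number of splits grows combinatorially; larger samples would call for random resampling or a rank-based test instead.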

5.5. Future Research

Future research should pursue larger-scale, longitudinal studies across multiple campuses or community contexts to assess the robustness and sustainability of gamification effects over time. Comparative designs, including non-gamified control groups, will be critical for disentangling the specific drivers of engagement and data quality. Investigating adaptive gamification—where feedback, challenges, and rewards evolve in response to user behaviour and preferences—represents a promising direction for maximising both inclusivity and effectiveness. Finally, exploring the intersection of gamification with social, ethical, and equity concerns remains vital as citizen science platforms scale and diversify. In contexts where the crowdsourced topic directly affects participants’ daily lives, such as noise pollution, air quality crises, or local infrastructure issues, intrinsic motivation tends to be higher. In such cases, gamification may shift from stimulating interest to sustaining engagement and enhancing data quality.

Furthermore, future research should examine gamification strategies for push-based task models, where the system actively assigns tasks to users rather than relying on voluntary selection. This shift alters the motivational dynamics, requiring mechanisms that reward punctuality, responsiveness, and reliability rather than exploration or initiative. Designing adaptive, context-sensitive incentives for push scenarios—such as urgency bonuses, streak-based rewards, or reputation systems—may be crucial to maintaining engagement and ensuring high-quality data in time-sensitive or location-critical citizen science applications.

Future studies should consider incorporating specific measures to capture the emotional impact of competition, such as stress, frustration, or disengagement, particularly in leaderboard-based feedback. Implementing optional or user-configurable competitive elements may help accommodate different motivational profiles and mitigate unintended adverse effects. Additionally, future studies should assess whether gamified participation leads to sustained real-world behavioural change, such as increased environmental awareness, reporting habits, or civic involvement beyond the platform.

6. Conclusions

This study provides timely empirical evidence regarding the impact of gamification on engagement, accomplishment, and immersion in spatial crowdsourcing. Our mixed-methods field experiment with university students reveals that carefully integrating game elements, such as points, daily streak bonuses, and real-time leaderboards, can substantially enhance participants’ sense of accomplishment and goal-directed engagement. However, the translation of these mechanics into deeper, immersive “flow” experiences remains limited, highlighting the challenge of sustaining emotional and cognitive absorption over time in repetitive, real-world data collection settings.

The central hypothesis, that introducing game elements would produce significant gains in engagement and perceived accomplishment relative to expectations for non-gamified activities, finds partial support. Quantitative and qualitative results demonstrate that gamified feedback mechanisms boost initial participation and motivation, particularly by fulfilling needs for competence and recognition (RQ1, RQ2). However, these effects are modulated by intrinsic motives, such as the desire to contribute to campus or community, and are tempered by barriers, including task fatigue (RQ3). The sustainability of engagement thus appears to be contingent upon a dynamic balance between extrinsic and intrinsic drivers, as well as the diversity and adaptability of platform design.

For research, these findings underscore the value of mixed-methods evaluation in unpacking not just the “how much” but also the “why” and “for whom” of gamification impacts. Methodological pluralism—combining psychometric assessment with qualitative insights—proves critical for understanding both the affordances and the boundaries of gamification in citizen science. Future studies should extend these insights to more diverse populations and longer-term interventions, with a focus on adaptive, user-centred mechanics.

For practice, our results offer clear guidance to designers of citizen science platforms and of digital interventions aimed at tech-savvy users. Game mechanics should be deployed not merely as superficial add-ons, but as thoughtfully integrated features that reinforce competence, provide transparent and meaningful feedback, and recognise contributions equitably. Attention must also be paid to minimising barriers, refreshing task variety, and cultivating a sense of community, all of which are essential for maintaining both engagement and data quality at scale.

Ultimately, while gamification holds considerable promise for broadening participation and enhancing the user experience in spatial crowdsourcing, its effectiveness depends on nuanced design, contextual sensitivity, and ongoing evaluation. As digital citizen observatories continue to proliferate in smart cities and academic contexts alike, evidence-based gamification strategies will be indispensable for transforming episodic volunteering into sustainable, impactful civic engagement.

Author Contributions

Conceptualization, F.V.-B., D.L.-d.-I., M.E., C.O.-R. and Z.K.; methodology, F.V.-B., D.L.-d.-I. and C.O.-R.; software, F.V.-B.; validation, F.V.-B., D.L.-d.-I., M.E., C.O.-R., Z.K. and K.S.; formal analysis, F.V.-B.; investigation, F.V.-B., M.E. and C.O.-R.; resources, D.L.-d.-I. and M.E.; data curation, F.V.-B.; writing – original draft preparation, F.V.-B. and D.L.-d.-I.; writing – review and editing, F.V.-B., D.L.-d.-I., M.E., C.O.-R., Z.K. and K.S.; visualisation, F.V.-B.; supervision, D.L.-d.-I. and C.O.-R.; project administration, F.V.-B. and D.L.-d.-I.; funding acquisition, D.L.-d.-I. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the DEUSTEK5 project (Grant ID IT1582-22) and the Basque University System’s A-grade Research Team Grant.

Data Availability Statement

The dataset generated and analysed during this study is publicly available at: https://doi.org/10.5281/zenodo.15387354 (accessed on 14 May 2025). The gamification engine implemented in the study is accessible at: https://github.com/fvergaracl/game (accessed on 14 May 2025).

Acknowledgments

We sincerely thank all the university students who participated in the field experiment and contributed their time and insights to this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SDG | Sustainable Development Goals |
| VTAE | Volunteer Task Allocation Engine |
| SDT | Self-determination theory |
| GDPR | General Data Protection Regulation |
| POI | Point of interest |
| WoS | Web of Science |
| PBL | Points, badges, and leaderboards |
| GAMEFULQUEST | Gameful Experience Questionnaire (validated psychometric scale) |
| IQR | Interquartile range (statistical measure) |
| M | Mean (statistical average) |
| SD | Standard deviation (statistical measure) |
| RQ | Research question |
| IAM | Identity and access management |
| QUAN | Quantitative (in mixed-methods research design) |
| QUAL | Qualitative (in mixed-methods research design) |

Appendix A. Assigned Task at Each Point of Interest

Part 1: Initial Observations

Appendix B. Experiment Protocol: Evaluation of Gamification Impact in GREENCROWD

1. Justification and Ethical Considerations

Rationale for the Experiment:

As part of the previously approved project, we propose the inclusion of a complementary experiment to evaluate the impact of the GREENCROWD gamification strategy on user participation in collaborative tasks. The experiment will be integrated in a controlled and structured manner, fully aligned with established ethical principles.

Why is this experiment being added?

The primary objective is to empirically validate, through simulation and statistical analysis, the effect of the various dimensions of the gamification system on participant behaviour. This study aims to:

- Understand how reward mechanisms (base points, geolocation equity, time diversity, personal performance, and participation streaks) influence participant motivation.

- Identify whether some aspects of the system may generate unintended effects (e.g., inequality in reward distribution or demotivation among specific participant profiles).

- Enhance the quality and fairness of the system before its large-scale implementation.

Added Value:

This experiment contributes:

- A systematic analysis of participatory behaviour under different reward conditions.

- Quantitative evidence on the effectiveness of the system’s design.

- A scientific foundation for adjusting or scaling the gamification model, in alignment with principles of fair and inclusive participation.

Ethical Risk Assessment:

No significant additional ethical risks are anticipated. The following considerations are addressed:

- Voluntary participation: for real participants, explicit informed consent will be obtained in accordance with the approved protocol.

- Privacy and anonymity: the experiment may utilise simulated or anonymised data. GREENCROWD does not collect email addresses, only a unique participant identifier. If real data is used, all approved safeguards (anonymisation, pseudonymisation, and restricted access) will be applied.

- Right to withdraw: participants may withdraw at any time by referencing their unique ID, after which all associated data will be deleted.

- Data scope: no additional sensitive data will be collected, and the original data processing purpose remains unchanged.

- Use of results: the results are solely for scientific evaluation and system improvement, with no individual negative consequences.

- No adverse consequences: the gamification system does not impact access to external resources or services. Any modifications will be based on fairness and equity.

2. Overview of GREENCROWD Data Collection

a. Consent Form: Participants are presented with a clear and comprehensive consent form that allows withdrawal at any time under the same conditions.

b. Socio-Demographic Survey: Participants complete the same socio-demographic questionnaire as in the main project.

c. Data Collection: Only data generated during active application use is collected (no passive tracking).

d. Post-Experiment Engagement Survey: To measure engagement with the gamified platform, participants complete the following validated questionnaire (GAMEFULQUEST, adapted for the GREENCROWD context):

3. Engagement Questionnaire: GAMEFULQUEST (Accomplishment and Immersion Dimensions)

Instructions:

“Please indicate how much you agree with the following statements, regarding your feelings while using GREENCROWD as a tool for [language learning/data collection, adapt as needed].”

Each question is answered using a 7-point Likert scale:

(1) Strongly disagree, (2) Disagree, (3) Somewhat disagree, (4) Neither agree nor disagree, (5) Somewhat agree, (6) Agree, (7) Strongly agree.
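For reference, a dimension score on such a scale is commonly computed as the mean of the item responses for that dimension. The sketch below assumes this convention and integer responses coded 1 to 7 as listed above; the function name and the sample responses are hypothetical and do not come from the study data.

```python
from statistics import mean

LIKERT_MIN, LIKERT_MAX = 1, 7  # scale bounds as given in the instructions above


def dimension_score(responses):
    """Mean of one participant's item responses for a single dimension.

    Raises ValueError if the list is empty or any response falls outside
    the 7-point scale.
    """
    if not responses:
        raise ValueError("at least one item response is required")
    for r in responses:
        if not (isinstance(r, int) and LIKERT_MIN <= r <= LIKERT_MAX):
            raise ValueError(f"response out of range: {r!r}")
    return mean(responses)


# Hypothetical responses to four items of one dimension.
score = dimension_score([6, 5, 7, 6])
print(score)
```

Rejecting out-of-range values up front keeps coding errors from silently shifting the dimension mean.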

Accomplishment

- It makes me feel that I need to complete things.

- It pushes me to strive for accomplishments.

- It inspires me to maintain my standards of performance.

- It makes me feel that success comes through accomplishments.