Measures of Effectiveness Analysis of an Advanced Air Mobility Post–Disaster Response System

Abstract

1. Introduction

2. Methods

2.1. Measures of Effectiveness

| | = system of interest (or candidate) effectiveness |
| | = weight of the ith MOE |
| i | = 1 … n, the position of the measure |
| | = the alternative’s score on the ith measure |
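The definitions above describe a weighted additive model. As a sketch only, and assuming the symbols $E$ for effectiveness, $w_i$ for the weight of the ith MOE, and $x_i$ for the alternative's score on the ith measure (these symbols are illustrative and are not taken from the source), the relation can be written as:

```latex
E = \sum_{i=1}^{n} w_i \, x_i , \qquad i = 1, \dots, n
```

In weighted additive models the weights are commonly normalized so that $\sum_{i=1}^{n} w_i = 1$; whether this study applies that normalization is not stated in the definitions above.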

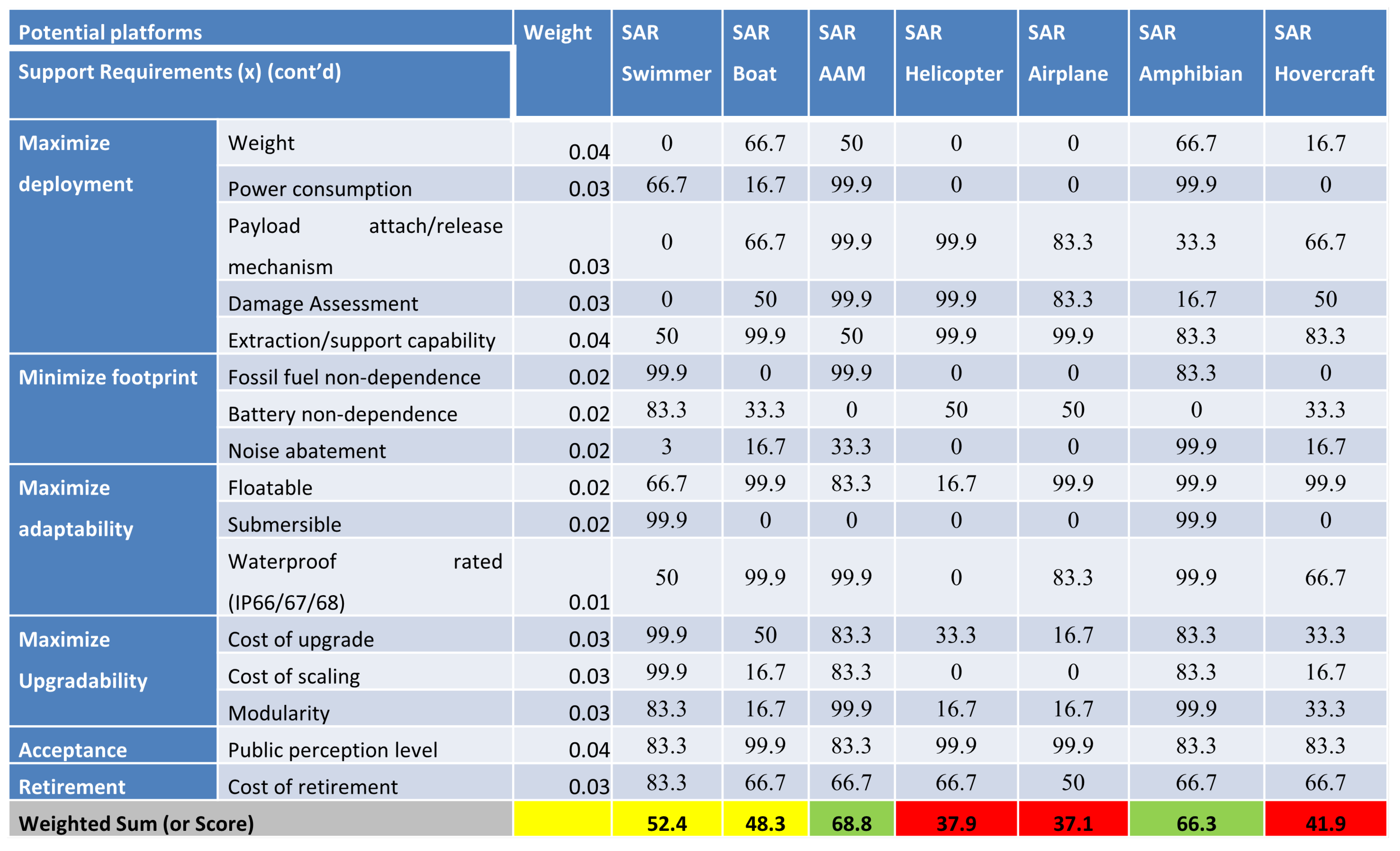

2.2. The Pugh Matrix—Concept Selection

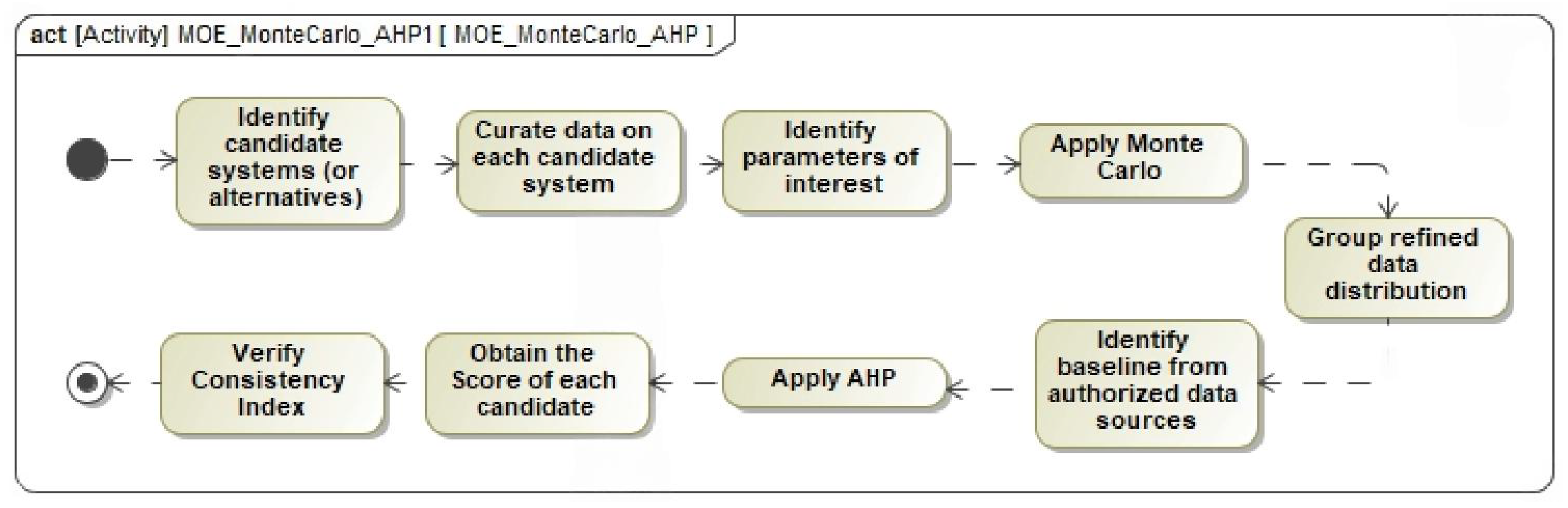

2.3. The Monte Carlo–Analytical Hierarchical Hybrid Process (MC–AHHP)

3. Results

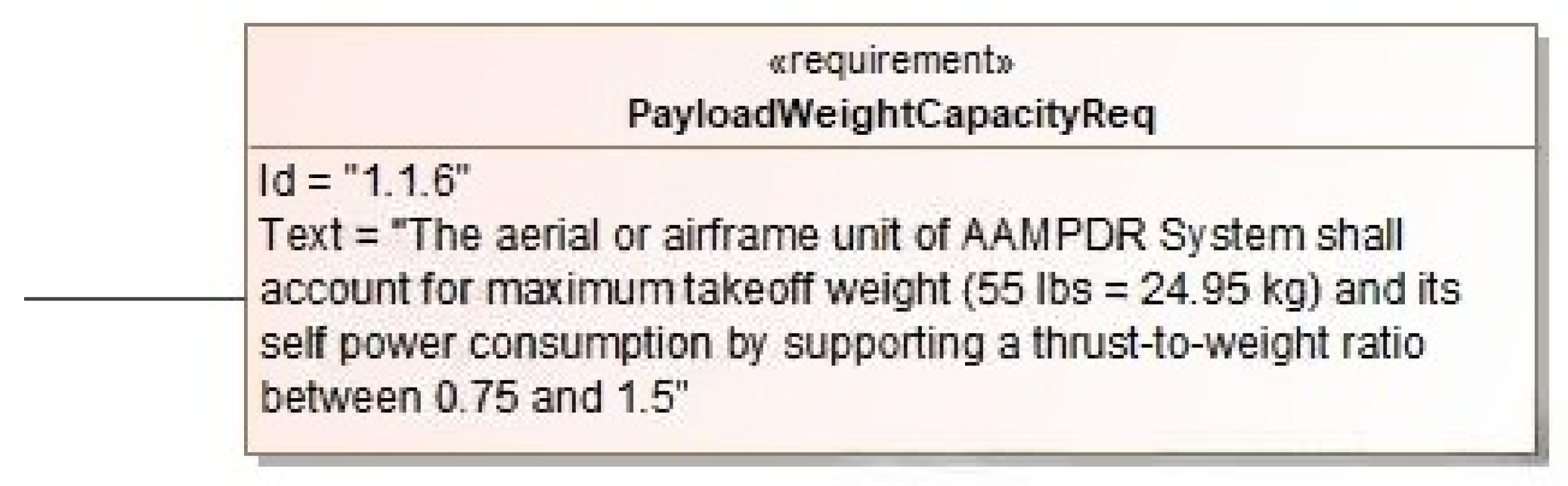

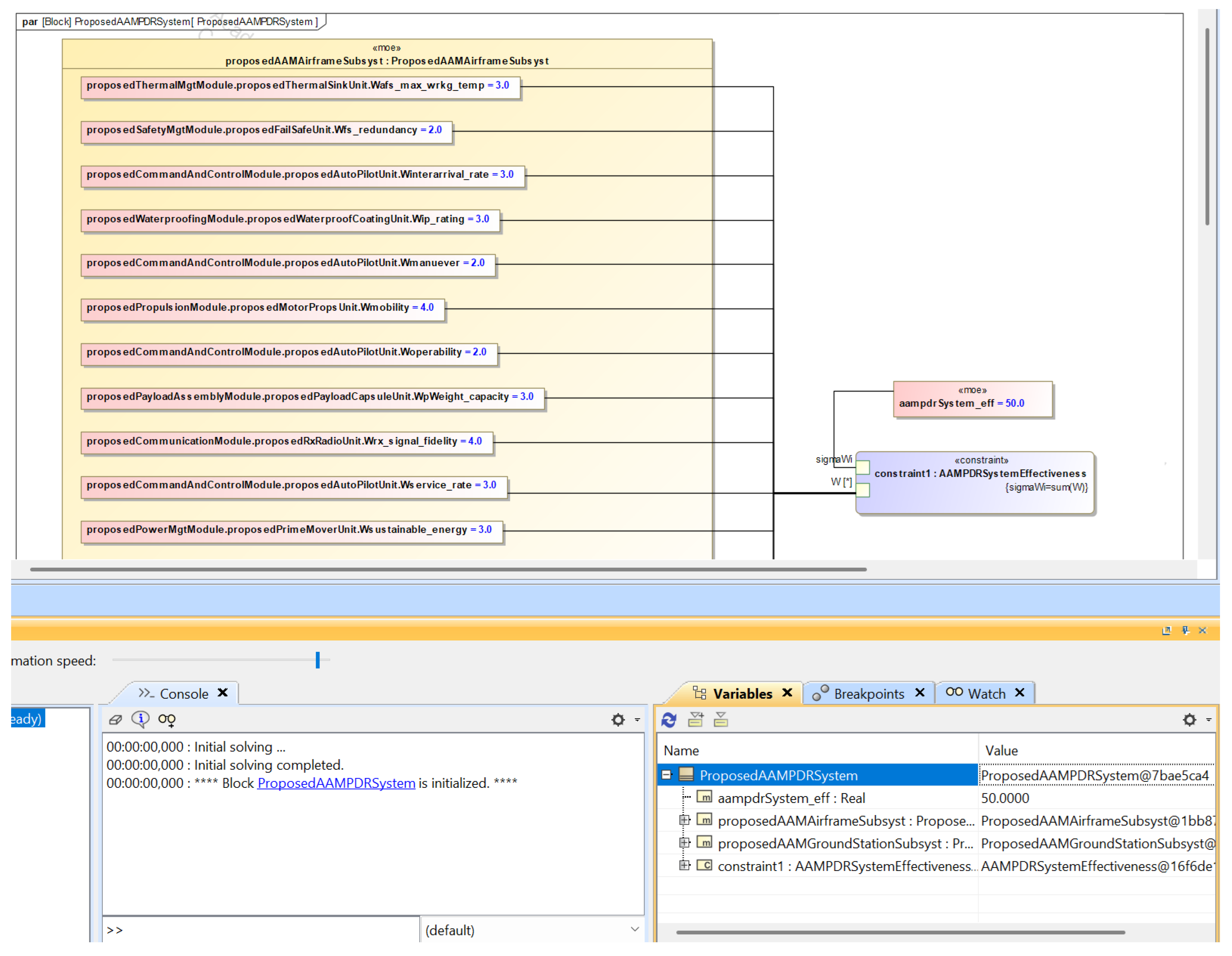

3.1. Measures of Effectiveness (SysML)

| | = AAMPDR system effectiveness |
| | = the weight of the ith MOE |
| i | = 1 … n, the position of the measure |
| | = the alternative’s score on the ith measure |

- = the weight assigned to the AAM airframe subsystem maximum working temperature monitored by the thermal management module⁴.

- = the weight assigned to the AAM system fail-safe redundancy unit under the safety management module.

- = the weight assigned to the AAM airframe subsystem measure of interarrival rate under the allocation of the command and control module.

- = the weight assigned to the AAM airframe measure of water.
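The four weighted measures listed above can be combined into a single effectiveness value with a weighted sum. The sketch below assumes that form; the `Measure` class, the `effectiveness` function, and every weight and score in it are hypothetical placeholders chosen for illustration, not values reported by the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    name: str
    weight: float  # relative importance of this MOE (hypothetical value)
    score: float   # alternative's normalized score on this MOE, in [0, 1]


def effectiveness(measures):
    """Weighted additive effectiveness: the sum of weight * score over all MOEs."""
    total_weight = sum(m.weight for m in measures)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1, got {total_weight}")
    return sum(m.weight * m.score for m in measures)


# Hypothetical weights and scores for the four measures named in the list above.
measures = [
    Measure("maximum working temperature", 0.25, 0.80),
    Measure("fail-safe redundancy", 0.40, 0.90),
    Measure("interarrival rate", 0.20, 0.60),
    Measure("water", 0.15, 0.70),
]

score = effectiveness(measures)
print(f"effectiveness = {score:.3f}")
```

Rejecting weight sets that do not sum to 1 is a design choice of this sketch; it keeps effectiveness values comparable across alternatives.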

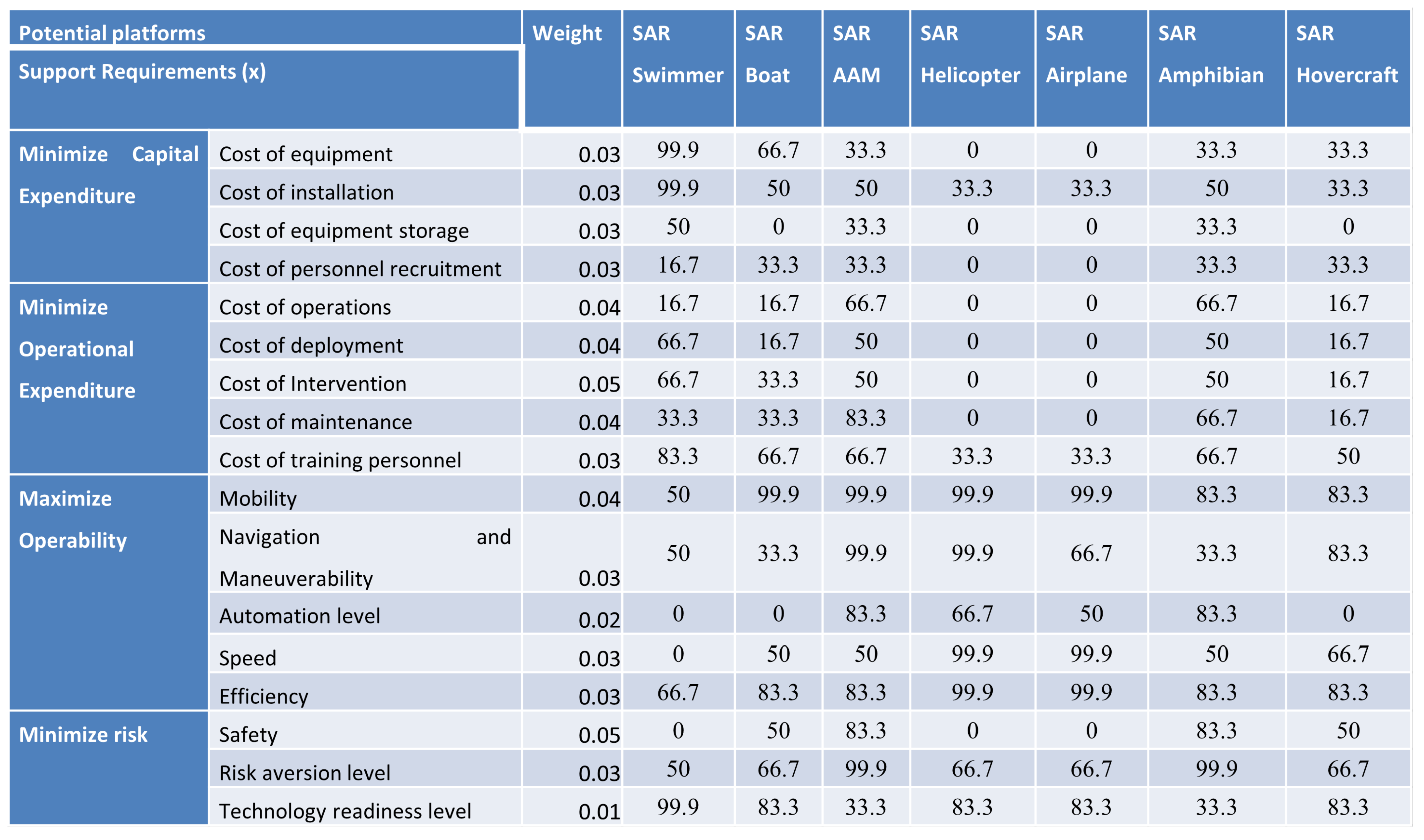

3.2. Pugh Matrix Analysis

- To provide an emergency response team with support,

- To visually assess the level of damage to affected areas,

- To support ER workers in locating survivors and casualties by providing real-time imagery and live video feed of affected residential areas,

- To deliver on-demand and critical intervention in the form of medical aid and food packs to casualties and survivors,

- To map the affected area in addition to assessing it and upload the map onto a database,

- To develop the device or means such that it shall be operable in water due to the flooding that accompanies tropical cyclones.

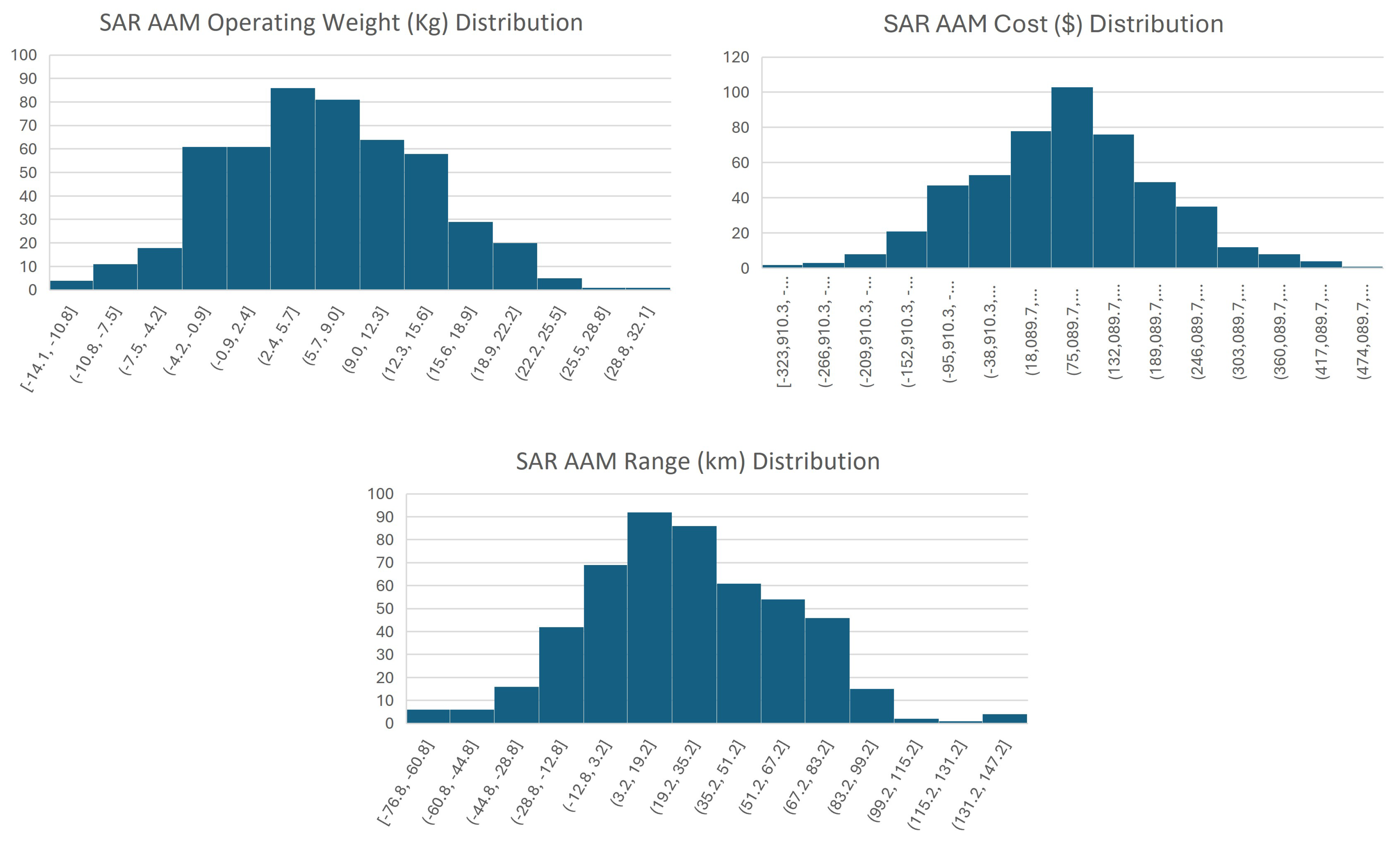

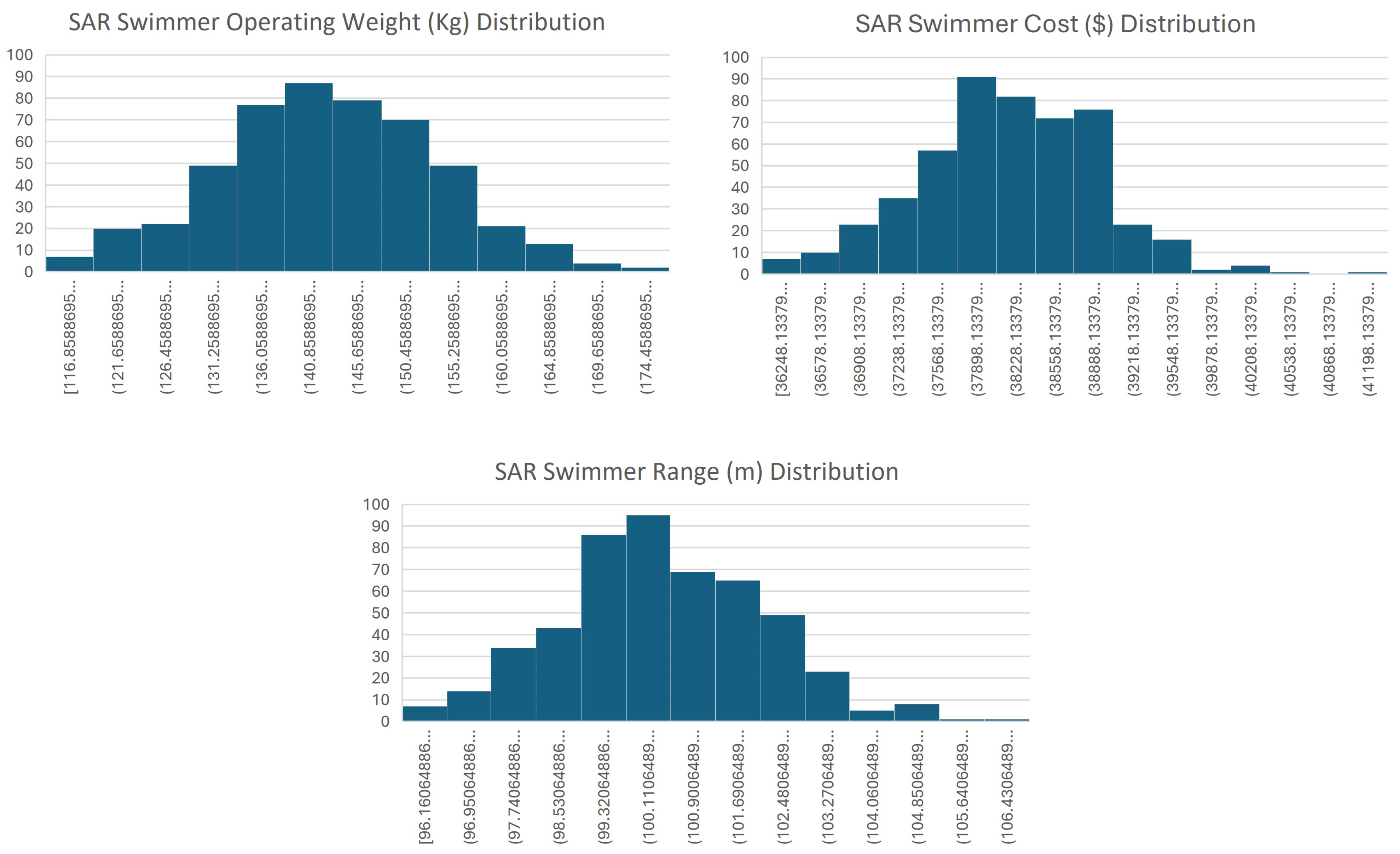

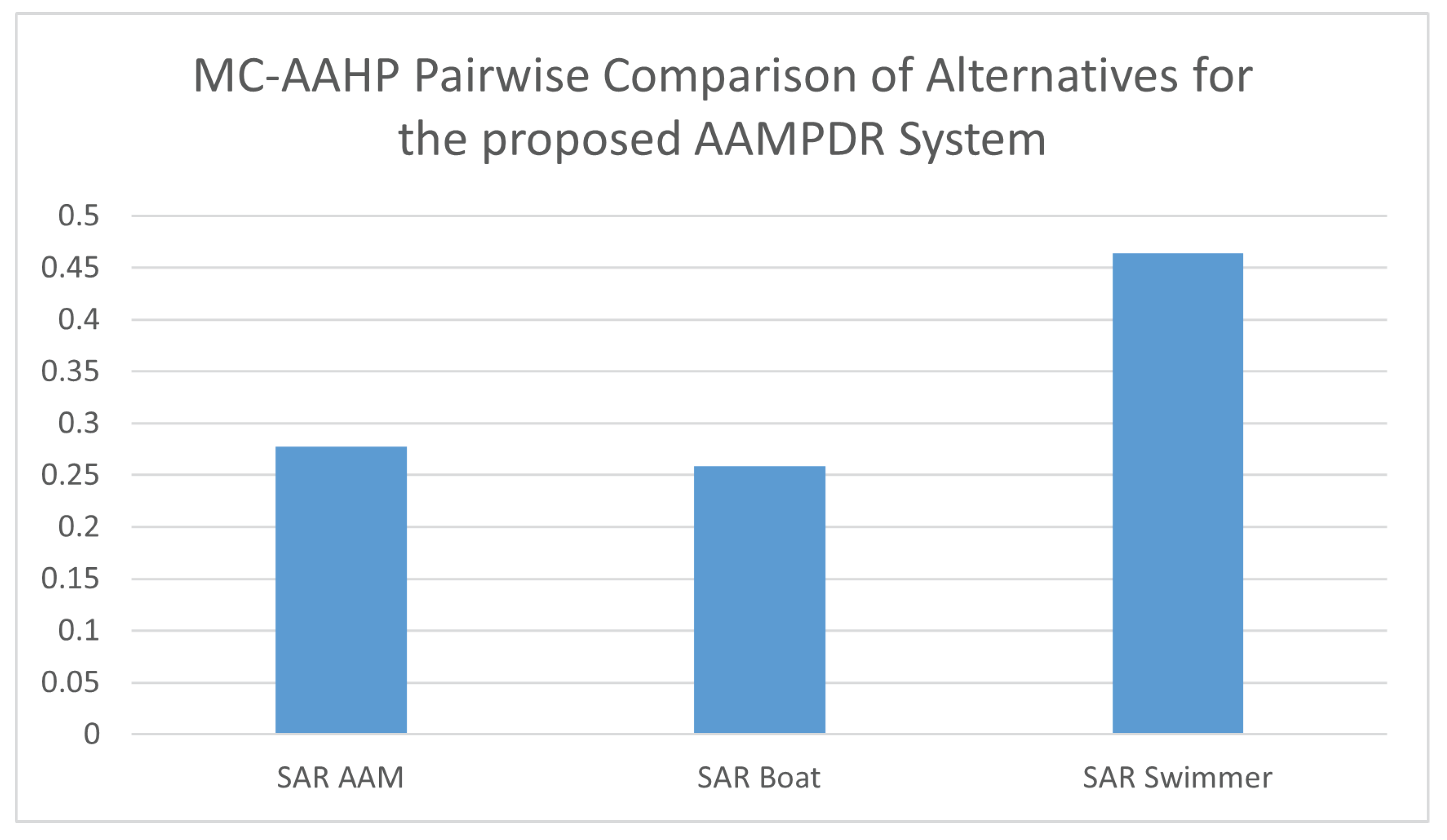

3.3. The Monte Carlo–Analytical Hierarchical Hybrid Process (MC–AHHP) Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAM | Advanced Air Mobility |
| AAMPDR | Advanced Air Mobility Post-Disaster Response |
| AHP | Analytical Hierarchy Process |
| FAA | Federal Aviation Administration |
| FEMA | Federal Emergency Management Agency |
| MC–AHHP | Monte Carlo–Analytical Hierarchical Hybrid Process |
| MCDM | Multi-criteria Decision Making |
| MCM | Monte Carlo Method |
| NASA | National Aeronautics and Space Administration |
| NOAA | National Oceanic and Atmospheric Administration |
| SAR | Search and Rescue |
| SysML | Systems Modeling Language |
| UAV | Unmanned Aerial Vehicle |
| WUTA | Weighted Utility Additive Theory |

| Note | Text |
|---|---|
| 1 | |
| 2 | Readers should note an implicit assumption of this study: Gaussian noise is introduced into the Monte Carlo segment of the MC–AHHP process, owing to the pseudo-randomness of the generated numbers. |
| 3 | Refer to [19] for the details on the requirements for the AAMPDR system. |
| 4 | Refer to [19] for the complete logical architecture SysML artifacts depicting the different levels of decomposition for the proposed AAMPDR system. These include (but are not limited to) the thermal, safety, and command and control management modules. |
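Note 2 mentions Gaussian noise in the Monte Carlo segment of the MC–AHHP process. The sketch below shows one way such a step could look; it is not the authors' procedure. It assumes that zero-mean Gaussian noise is added to each upper-triangle judgment of the SA/SB/SS concept-selection matrix from this article, that judgments are clipped to the 1/9 to 9 preference scale, and that weights come from row geometric means. The function names, `sigma`, `trials`, and `seed` are all hypothetical.

```python
import numpy as np


def geometric_mean_weights(a):
    """Priority weights from the row geometric means of a pairwise matrix."""
    g = np.exp(np.log(a).mean(axis=1))
    return g / g.sum()


def monte_carlo_ahp(matrix, sigma=0.5, trials=2000, seed=0):
    """Perturb the judgments with Gaussian noise and tally the top-ranked candidate."""
    base = np.asarray(matrix, dtype=float)
    n = base.shape[0]
    rng = np.random.default_rng(seed)
    upper = np.triu_indices(n, k=1)  # indices of the judgments above the diagonal
    wins = np.zeros(n, dtype=int)
    weight_sum = np.zeros(n)
    for _ in range(trials):
        # Assumed noise model: additive, zero-mean, clipped to the 1/9..9 scale.
        noisy = base[upper] + rng.normal(0.0, sigma, size=len(upper[0]))
        noisy = np.clip(noisy, 1.0 / 9.0, 9.0)
        sample = np.ones((n, n))
        sample[upper] = noisy
        sample[(upper[1], upper[0])] = 1.0 / noisy  # keep the matrix reciprocal
        w = geometric_mean_weights(sample)
        wins[np.argmax(w)] += 1
        weight_sum += w
    return wins / trials, weight_sum / trials


# Concept-selection judgments for SA, SB, SS as tabulated in this article.
matrix = [[1, 1 / 8, 7],
          [8, 1, 9],
          [1 / 7, 1 / 9, 1]]

win_freq, mean_w = monte_carlo_ahp(matrix)
for name, f, w in zip(["SA", "SB", "SS"], win_freq, mean_w):
    print(f"{name}: top-ranked in {f:.1%} of trials, mean weight {w:.3f}")
```

The win frequencies show how often each candidate ranks first under the perturbed judgments, which is one simple way to read ranking robustness from the simulation.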

References

1. Kroese, D.P.; Brereton, T.; Taimre, T.; Botev, Z.I. Why the Monte Carlo method is so important today. Wiley Interdiscip. Rev. Comput. Stat. 2014, 6, 386–392.

2. Vargas, L.G. An overview of the analytic hierarchy process and its applications. Eur. J. Oper. Res. 1990, 48, 2–8.

3. Friedenthal, S.; Moore, A.; Steiner, R. A Practical Guide to SysML: The Systems Modeling Language, 3rd ed.; Morgan Kaufmann: Burlington, MA, USA, 2014; pp. 1–599. ISBN 978-0-12-800202-5.

4. Green, J.M. Establishing system measures of effectiveness. In Proceedings of the 2nd Biennial National Forum on Weapon System Effectiveness, Laurel, MD, USA, 27–29 March 2001; pp. 1–5.

5. Cilli, M.V.; Parnell, G.S. 4.3.1 Systems Engineering Tradeoff Study Process Framework. In INCOSE International Symposium; Wiley Online Library: Hoboken, NJ, USA, 2014; pp. 313–331.

6. Anyanhun, A.I.; Anzagira, A.; Edmonson, W.W. Intersatellite communication: An MBSE operational concept for a multiorbit disaggregated system. IEEE J. Miniaturization Air Space Syst. 2020, 1, 6–65.

7. Reed, C.M.; Fenwick, A.J. A consistent multi-user framework for assessing system performance. arXiv 2010, arXiv:1011.2048.

8. Rosenbloom, E.S. A probabilistic interpretation of the final rankings in AHP. Eur. J. Oper. Res. 1996, 96, 371–378.

9. Momani, A.M.; Ahmed, A.A. Material handling equipment selection using hybrid Monte Carlo simulation and analytic hierarchy process. Int. J. Ind. Manuf. Eng. 2011, 5, 2177–2182.

10. Chakraborty, S.; Banik, D. Design of a material handling equipment selection model using analytic hierarchy process. Int. J. Adv. Manuf. Technol. 2006, 28, 1237–1245.

11. Reddy, J.N. An Introduction to Continuum Mechanics, 2nd ed.; Cambridge University Press: New York, NY, USA, 2006; pp. 1–449. ISBN 978-1-107-02543-1.

12. Karande, P.; Chakraborty, S. Material Handling Equipment Selection Using Weighted Utility Additive Theory. J. Ind. Eng. 2013, 2013, 268708.

13. Sproles, N. The difficult problem of establishing measures of effectiveness for command and control: A systems engineering perspective. Syst. Eng. 2001, 4, 145–155.

14. FAA. NAS System Engineering Manual Version 3.1 Section 4.6. Federal Aviation Administration (FAA). 3.1. June 2006, pp. 1–20. Available online: https://web.archive.org/web/20080916144301/http://www.faa.gov/about/office%5Forg/headquarters%5Foffices/ato/service%5Funits/operations/sysengsaf/seman/SEM3.1/Section%204.6.pdf (accessed on 25 March 2025).

15. NASA; Hirshorn, S.R.; Voss, L.D.; Bromley, L.K. NASA Systems Engineering Handbook, 6th ed.; National Aeronautics and Space Administration (NASA): Washington, DC, USA, 2017; pp. 1–297. Available online: https://web.archive.org/web/20240207045729/https://www.nasa.gov/wp-content/uploads/2018/09/nasa_systems_engineering_handbook_0.pdf (accessed on 25 March 2025).

16. Taylor, B.W. Introduction to Management Science, 13th ed.; Pearson: London, UK, 2019; pp. 1–836. ISBN 9780137503933. Available online: https://web.archive.org/web/20230312185505/https://www.pearson.com/en-us/subject-catalog/p/introduction-tomanagement-science/P200000006423/9780137503933 (accessed on 25 March 2025).

17. Portner, B.; Crabtree, B.; Kern, J. SMC Systems Engineering Primer & Handbook—Concepts, Processes, and Techniques, 2nd ed.; Space & Missile Systems Center U.S. Air Force: El Segundo, CA, USA, 2004; pp. 1–262. Available online: https://web.archive.org/web/20240630224910/https://www.acqnotes.com/Attachments/SMC%20System%20Engineering%20Handbook.pdf (accessed on 25 March 2025).

18. FAA. Part 107 Airspace Authorizations—How to Request a Part 107 Airspace Authorizations; Technical Report; Federal Aviation Administration (FAA). Available online: https://web.archive.org/web/20221123205841/http://web.archive.org/screenshot/https://www.faa.gov/uas/commercial_operators/part_107_airspace_authorizations (accessed on 25 March 2025).

19. Olanipekun, O.A.; Montalvo, C.J.; Walker, S.; Wade, J.T.; Lohar, B.R. An Advanced Air Mobility Post–Disaster Response System—An Exemplar SysML Model; Springer Nature: Berlin/Heidelberg, Germany, 2025.

20. Katie, B.; Coast Guard. Coast Guard Height and Weight Requirements. July 2023. Available online: https://web.archive.org/web/20240219043642/https://www.operationmilitarykids.org/coast-guard-height-and-weight-requirements/ (accessed on 30 July 2023).

21. International Maritime Organization (IMO). Regulation 23—Rescue Boats. August 2024. Available online: https://web.archive.org/web/20240831204949/https://www.imorules.com/GUID-CFD93744-4BAA-4C9A-92F2-D223E93A9577.html (accessed on 25 March 2025).

22. Patterson, M.D.; Antcliff, K.R.; Kohlman, L.W. A Proposed Approach to Studying Urban Air Mobility Missions Including an Initial Exploration of Mission Requirements. In Proceedings of the AHS International 74th Annual Forum & Technology Display, Phoenix, AZ, USA, 14–17 May 2018; pp. 1–19. Available online: https://web.archive.org/web/20230326200349/https://ntrs.nasa.gov/citations/20190000991 (accessed on 25 March 2025).

23. BBC. Rescue Drone Trial Hopes to Save Lives at Sea—BBC News. November 2022. Available online: https://web.archive.org/web/20221107181554/https://www.youtube.com/watch?v=hVMrz0fw4MM (accessed on 22 November 2022).

24. Naval Helicopter Association Historical Society. HH-60 J Jayhawks. March 2025. Available online: https://web.archive.org/web/20240705042238/https://www.nhahistoricalsociety.org/coast-guard-hh-60j-jayhawk-helicopter/ (accessed on 25 March 2025).

25. United States Coast Guard (USCG). Medium Range Surveillance Aircraft. March 2010. Available online: https://web.archive.org/web/20101201015024/http://uscg.mil/acquisition/mrs/features.asp (accessed on 25 March 2025).

26. Robot Platform. Aquatic Robots. August 2012. Available online: https://web.archive.org/web/20191223003911/https://www.robotplatform.com/knowledge/Classification_of_Robots/aquatic_robots.html (accessed on 22 November 2022).

27. BBC. Surviving Hurricane Ian: ‘You Have to Swim or Drown’. September 2022. Available online: https://web.archive.org/web/20221020172248/https://www.bbc.com/news/av/world-us-canada-63082360 (accessed on 22 November 2022).

| Standard Preference Level | Index |
|---|---|
| Equally preferred | 1 |
| Equally to moderately preferred | 2 |
| Moderately preferred | 3 |
| Moderately to strongly preferred | 4 |
| Strongly preferred | 5 |
| Strongly to very strongly preferred | 6 |
| Very strongly preferred | 7 |
| Very strongly to extremely preferred | 8 |
| Extremely preferred | 9 |

| Concept Selection | | | | |
|---|---|---|---|---|
| Candidates | 1 | 2 | 3… | n |
| 1 | | | | |
| 2 | | | | |
| 3 | | | | |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| n | | | | |

| n | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|
| RI | 0 | 0.58 | 0.90 | 1.12 | 1.24 | 1.32 | 1.41 | 1.45 | 1.51 |

| Concept Selection | | | |
|---|---|---|---|
| Candidates | SA | SB | SS |
| SA | 1 | 1/8 | 7 |
| SB | 8 | 1 | 9 |
| SS | 1/7 | 1/9 | 1 |
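The pairwise judgments for SA, SB, and SS, together with the random index (RI) table above, are the inputs to a standard AHP priority and consistency calculation. The sketch below uses the principal-eigenvector method, which may differ from the procedure the authors applied; the function name `ahp_priorities` and the variable names are hypothetical.

```python
import numpy as np

# Random index (RI) by matrix size n, copied from the RI table above.
RANDOM_INDEX = {2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24,
                7: 1.32, 8: 1.41, 9: 1.45, 10: 1.51}


def ahp_priorities(matrix):
    """Return (weights, lambda_max, consistency_ratio) for a pairwise matrix."""
    a = np.asarray(matrix, dtype=float)
    n = a.shape[0]
    eigvals, eigvecs = np.linalg.eig(a)
    k = np.argmax(eigvals.real)           # principal (largest real) eigenvalue
    lam = eigvals[k].real
    w = np.abs(eigvecs[:, k].real)        # principal eigenvector, sign removed
    w = w / w.sum()                       # normalize weights to sum to 1
    ci = (lam - n) / (n - 1)              # consistency index
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0       # consistency ratio
    return w, lam, cr


# Concept-selection judgments for SA, SB, SS from the table above.
matrix = [[1, 1 / 8, 7],
          [8, 1, 9],
          [1 / 7, 1 / 9, 1]]

weights, lam, cr = ahp_priorities(matrix)
for name, w in zip(["SA", "SB", "SS"], weights):
    print(f"{name}: {w:.3f}")
print(f"lambda_max = {lam:.3f}, CR = {cr:.3f}")
```

A consistency ratio above the commonly used 0.1 threshold suggests the judgments may need revisiting; how this study handles that case is not stated in the tables.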

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Olanipekun, O.A.; Montalvo, C.J.; Walker, S.G. Measures of Effectiveness Analysis of an Advanced Air Mobility Post–Disaster Response System. Systems 2025, 13, 512. https://doi.org/10.3390/systems13070512
