Research on Mobile Agent Path Planning Based on Deep Reinforcement Learning

Abstract

1. Introduction

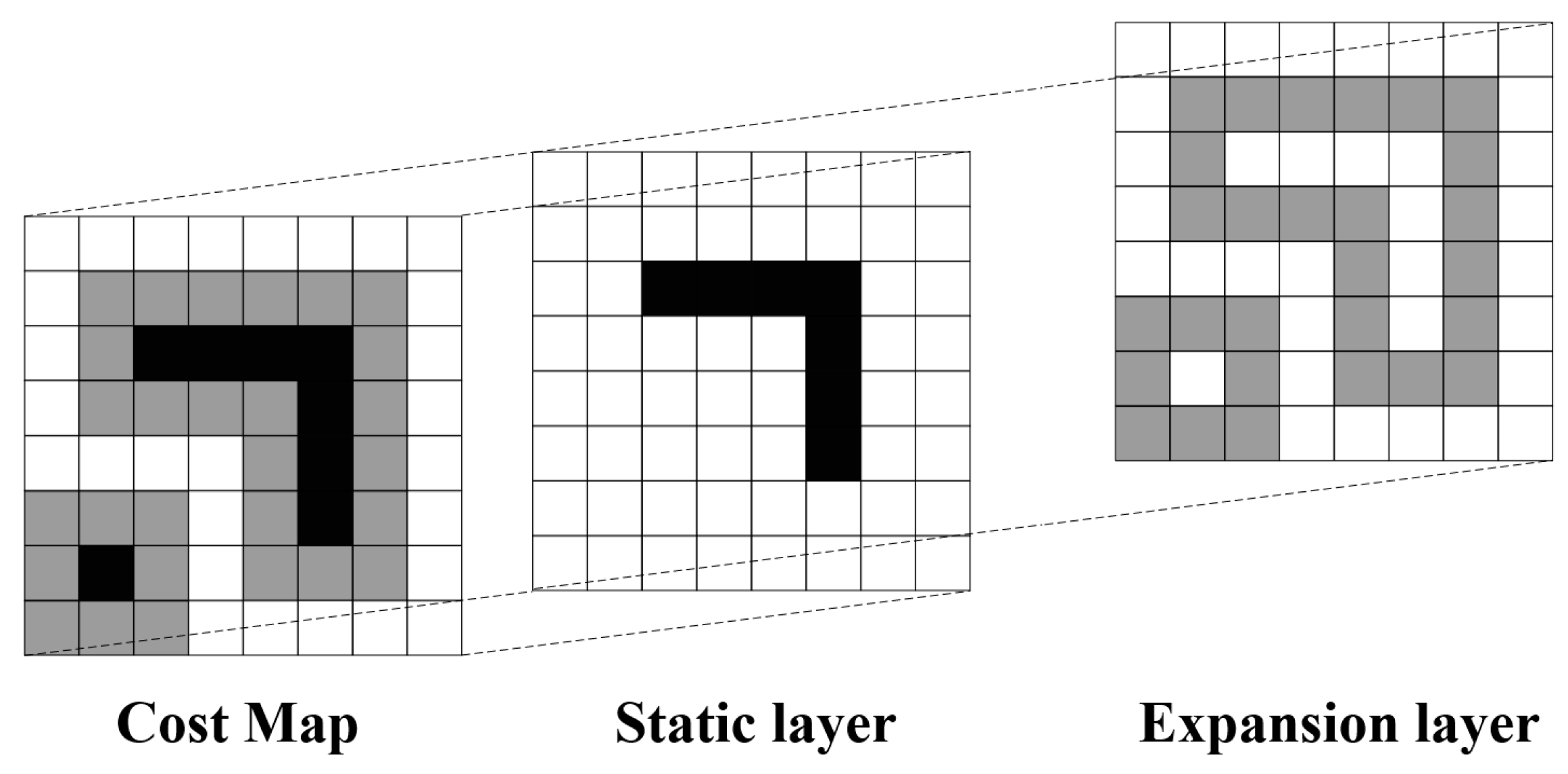

- Environmental Modeling: Constructing a 3D scenario in Gazebo in which the mobile agent operates. After the environment is mapped with Simultaneous Localization and Mapping (SLAM), LiDAR scans are used to build grid maps, which are then processed with an inflation algorithm to improve path safety;

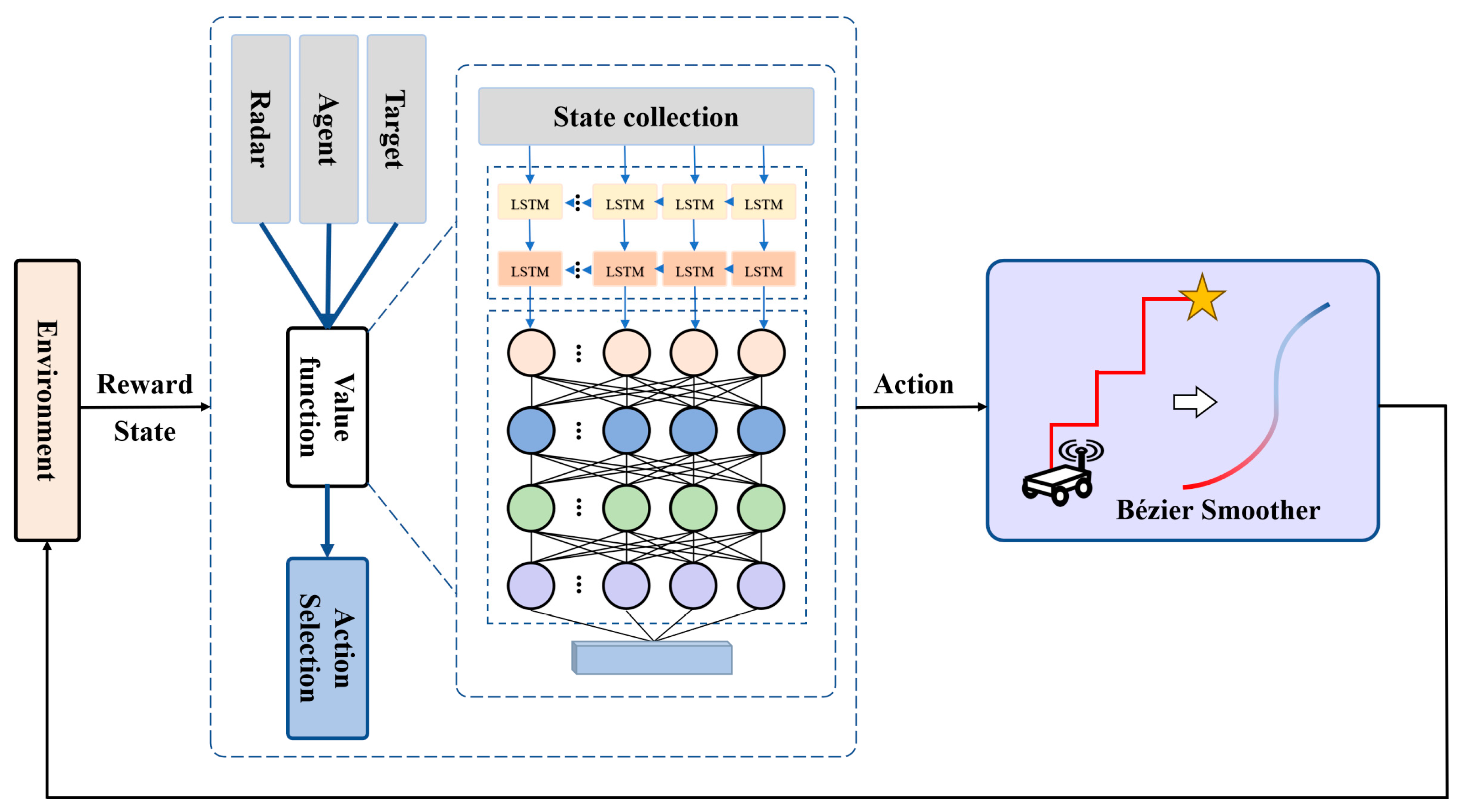

- Path-Planning Framework: Integrating long short-term memory (LSTM) networks and a heuristic reward mechanism with the double deep Q-network (DDQN) to plan paths for the mobile agent;

- Trajectory Optimization: Refining the autonomous decision-making model by using Bézier curves to smooth the trajectories produced by the DDQN agent's interaction with the environment, with validation through simulations in Gazebo and RViz under the Robot Operating System (ROS).

2. Path-Planning Algorithm

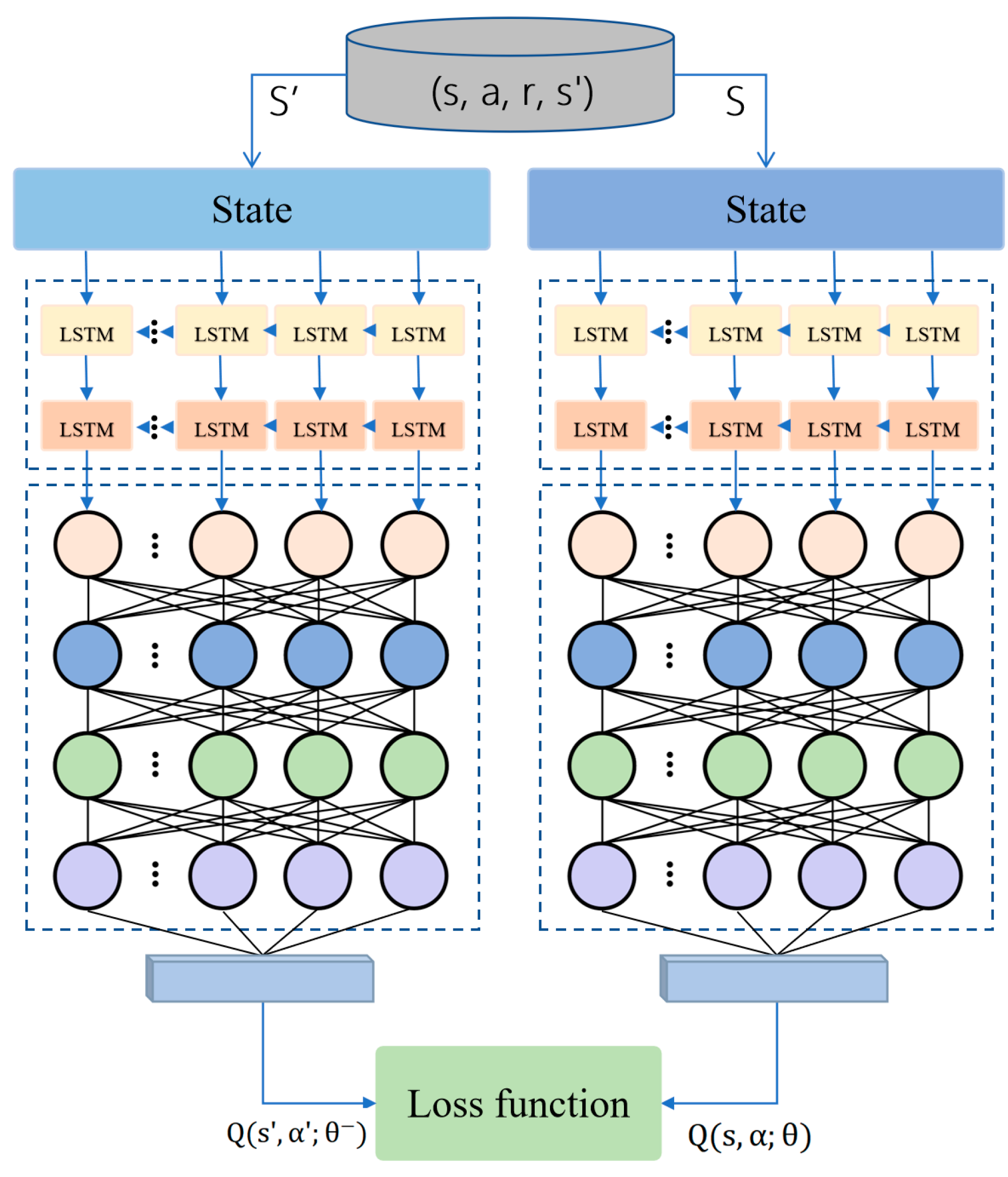

2.1. DDQN Method

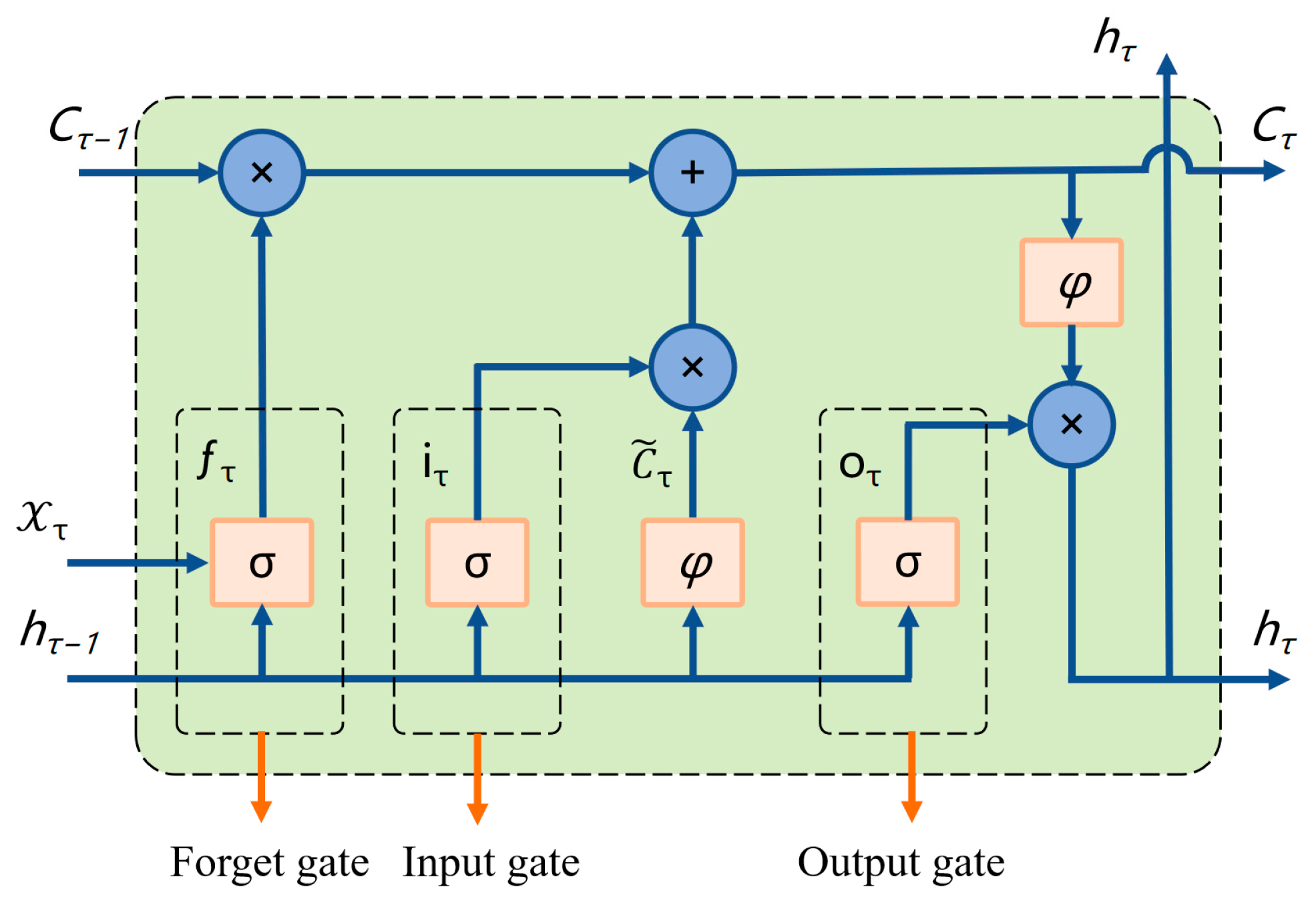
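DDQN differs from standard DQN in how the bootstrap target is formed: the online network selects the next action and the target network evaluates it. The sketch below computes that target for a single transition. It is a minimal illustration, not the authors' implementation: Q-values are plain Python lists standing in for network outputs, and the discount factor `gamma` is an illustrative assumption.

```python
from typing import Sequence


def ddqn_target(reward: float, done: bool,
                q_online_next: Sequence[float],
                q_target_next: Sequence[float],
                gamma: float = 0.99) -> float:
    """Double DQN regression target y for one transition (s, a, r, s')."""
    if done:
        # Terminal step (e.g. goal reached or collision): nothing to bootstrap.
        return reward
    # 1) The online network selects the greedy next action ...
    best = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    # 2) ... and the target network evaluates that action.
    return reward + gamma * q_target_next[best]


def dqn_target(reward: float, done: bool,
               q_target_next: Sequence[float], gamma: float = 0.99) -> float:
    """Standard DQN target for comparison: max over the target network."""
    if done:
        return reward
    return reward + gamma * max(q_target_next)
```

For example, with reward 1.0, `gamma = 0.9`, online next-state values [1.0, 3.0, 2.0] and target next-state values [5.0, 0.5, 4.0], DQN bootstraps from the largest target value and returns 5.5, whereas DDQN evaluates the online network's choice (action 1) and returns 1.45. Decoupling selection from evaluation in this way is what reduces the overestimation bias of plain DQN.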

2.2. LSTM
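The contributions list states that LSTM networks are integrated with DDQN so that the agent can draw on temporal context. For reference, one time step of a standard LSTM cell is sketched below in plain Python. The hidden size, the layout of the weights (`W`, `U`, `b` as dictionaries keyed by gate) and the helper `run_sequence` are illustrative assumptions; the paper's actual network architecture and input features are not reproduced here.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GATES = ("i", "f", "o", "g")  # input, forget and output gates, plus candidate


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: Matrix, v: Vector) -> Vector:
    """Dense matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              W: Dict[str, Matrix], U: Dict[str, Matrix],
              b: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """One step of a standard LSTM cell.

    For each gate k: W[k] is H x D (input weights), U[k] is H x H
    (recurrent weights) and b[k] has length H.
    """
    def pre(k: str) -> Vector:
        # Pre-activation W_k x + U_k h_prev + b_k
        return [wx + uh + bias for wx, uh, bias
                in zip(matvec(W[k], x), matvec(U[k], h_prev), b[k])]

    i = [sigmoid(z) for z in pre("i")]    # how much new information to write
    f = [sigmoid(z) for z in pre("f")]    # how much of the old cell state to keep
    o = [sigmoid(z) for z in pre("o")]    # how much of the cell state to expose
    g = [math.tanh(z) for z in pre("g")]  # candidate values to write
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def run_sequence(seq: List[Vector], hidden: int,
                 W: Dict[str, Matrix], U: Dict[str, Matrix],
                 b: Dict[str, Vector]) -> Vector:
    """Feed a sequence of inputs and return the final hidden state."""
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in seq:
        h, c = lstm_step(x, h, c, W, U, b)
    return h
```

The forget gate `f` is what lets the cell carry information across many steps: when it stays close to 1, the previous cell state passes through almost unchanged, which is the property that makes an LSTM useful for summarizing a history of observations before the Q-values are estimated.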

2.3. Bézier Curve Smoothing Optimization
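A Bézier curve of degree n with control points P0, ..., Pn has the Bernstein form B(t) = sum over i of C(n, i) (1 - t)^(n - i) t^i Pi for t in [0, 1], and it can be evaluated stably by de Casteljau's algorithm (repeated linear interpolation). The sketch below smooths a list of planner waypoints by treating all of them as control points of a single curve. This is a simplifying assumption for illustration; the paper's exact segmentation and degree are not given here, and `bezier_smooth` is a hypothetical helper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def de_casteljau(ctrl: List[Point], t: float) -> Point:
    """Evaluate the Bezier curve defined by ctrl at parameter t in [0, 1]."""
    pts = list(ctrl)
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(pts) > 1:
        pts = [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
               for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
    return pts[0]


def bezier_smooth(waypoints: List[Point], samples: int = 20) -> List[Point]:
    """Sample one Bezier curve that uses every waypoint as a control point."""
    if len(waypoints) < 2:
        return list(waypoints)
    samples = max(samples, 2)  # need at least the two endpoints
    return [de_casteljau(waypoints, k / (samples - 1)) for k in range(samples)]
```

With the waypoints (0, 0), (1, 0), (1, 1), (2, 1) and `samples=5`, the curve starts and ends exactly at the first and last waypoints and passes through (1.0, 0.5) at t = 0.5. Because interior control points are only approached, not visited, the smoothed path can cut corners; in practice it should be re-checked against the inflated cost map or built from shorter piecewise segments.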

2.4. LB-DDQN Path-Planning Algorithm

3. Design of Path Planning for Mobile Agents Based on the LB-DDQN Approach

3.1. ROS Physics Engine Validation

3.2. Cost Map
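The introduction notes that the SLAM grid map is processed with an inflation algorithm to keep paths away from obstacles. The sketch below illustrates one common form of inflation, modeled on the cost convention of the ROS `costmap_2d` inflation layer: occupied cells are lethal (254), cells within the robot's inscribed radius are 253, and cost then decays exponentially out to the inflation radius. The function name, its parameters and the brute-force distance search are illustrative assumptions, not the paper's configuration.

```python
import math
from typing import List

FREE = 0
INSCRIBED = 253  # within the robot's inscribed radius of an obstacle
LETHAL = 254     # cell that contains an obstacle


def inflate(grid: List[List[int]], resolution: float, inscribed_radius: float,
            inflation_radius: float, scaling: float) -> List[List[int]]:
    """Turn a 0/1 occupancy grid into an inflated cost grid.

    resolution is metres per cell; both radii are in metres. The distance to
    the nearest obstacle is found by brute force, which is adequate for a
    sketch but too slow for large maps.
    """
    obstacles = [(r, c) for r, row in enumerate(grid)
                 for c, occupied in enumerate(row) if occupied]
    costs = [[FREE] * len(row) for row in grid]
    if not obstacles:
        return costs
    for r, row in enumerate(grid):
        for c in range(len(row)):
            cells = min(math.hypot(r - orow, c - ocol) for orow, ocol in obstacles)
            d = cells * resolution
            if cells == 0:
                cost = LETHAL
            elif d <= inscribed_radius:
                cost = INSCRIBED
            elif d <= inflation_radius:
                # Exponential decay of cost outside the inscribed radius.
                cost = int((INSCRIBED - 1) * math.exp(-scaling * (d - inscribed_radius)))
            else:
                cost = FREE
            costs[r][c] = cost
    return costs
```

A larger `scaling` value makes cost fall off faster, letting the planner pass closer to obstacles; a smaller one keeps paths farther away at the price of longer routes.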

3.3. Spatial Design and Reward Functions
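The contributions list describes the reward as heuristic. A common shape for such a reward combines a terminal bonus for reaching the goal, a terminal penalty for collisions, and a dense term that rewards progress toward the goal at every step. The sketch below is a hypothetical example of that shape only: `RewardConfig`, `heuristic_reward` and every constant are placeholders, not the values or terms used in the paper.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class RewardConfig:
    # All values are hypothetical placeholders, not the paper's settings.
    goal_reward: float = 100.0
    collision_penalty: float = -100.0
    progress_weight: float = 10.0
    step_penalty: float = -0.1
    goal_tolerance: float = 0.2  # metres


def heuristic_reward(prev_pos: Point, pos: Point, goal: Point, collided: bool,
                     cfg: Optional[RewardConfig] = None) -> Tuple[float, bool]:
    """Return (reward, episode_done) for one step of the agent."""
    cfg = cfg if cfg is not None else RewardConfig()
    if collided:
        return cfg.collision_penalty, True
    d_now = math.dist(pos, goal)
    if d_now <= cfg.goal_tolerance:
        return cfg.goal_reward, True
    d_prev = math.dist(prev_pos, goal)
    # Heuristic term: positive when this step reduces the distance to the goal,
    # plus a small per-step penalty that discourages wandering.
    return cfg.progress_weight * (d_prev - d_now) + cfg.step_penalty, False
```

The dense progress term is what makes such a reward "heuristic": without it, the agent would receive feedback only at the rare goal or collision events, which typically slows DDQN training considerably in sparse-reward navigation tasks.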

4. Experiment and Performance Analysis

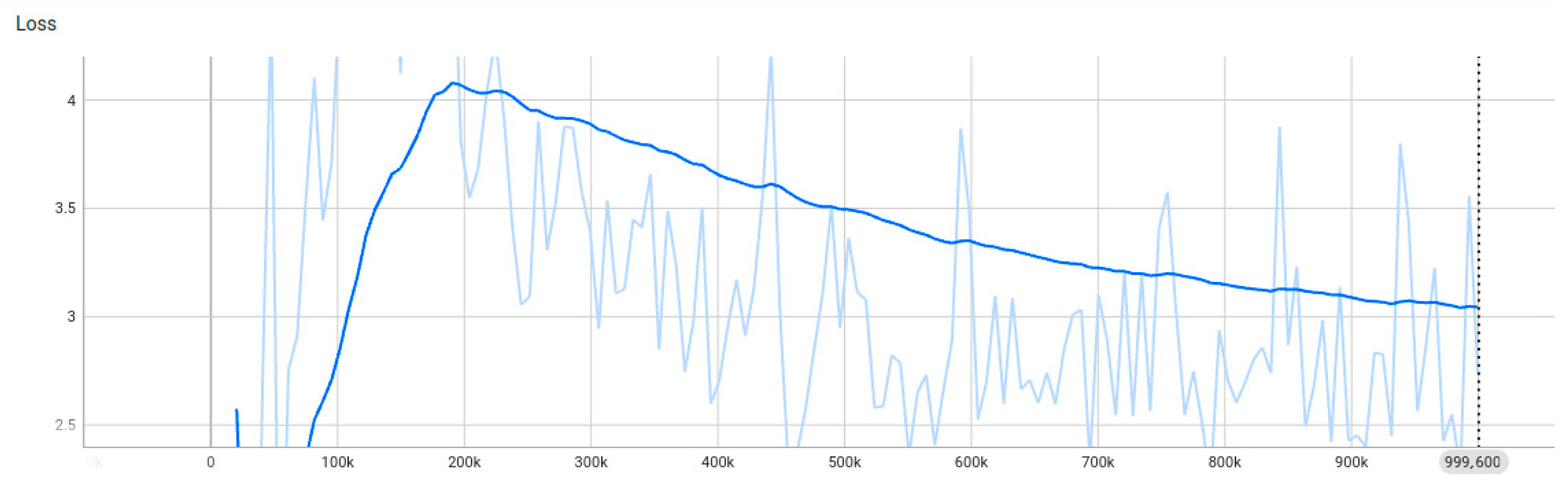

4.1. Performance Analysis

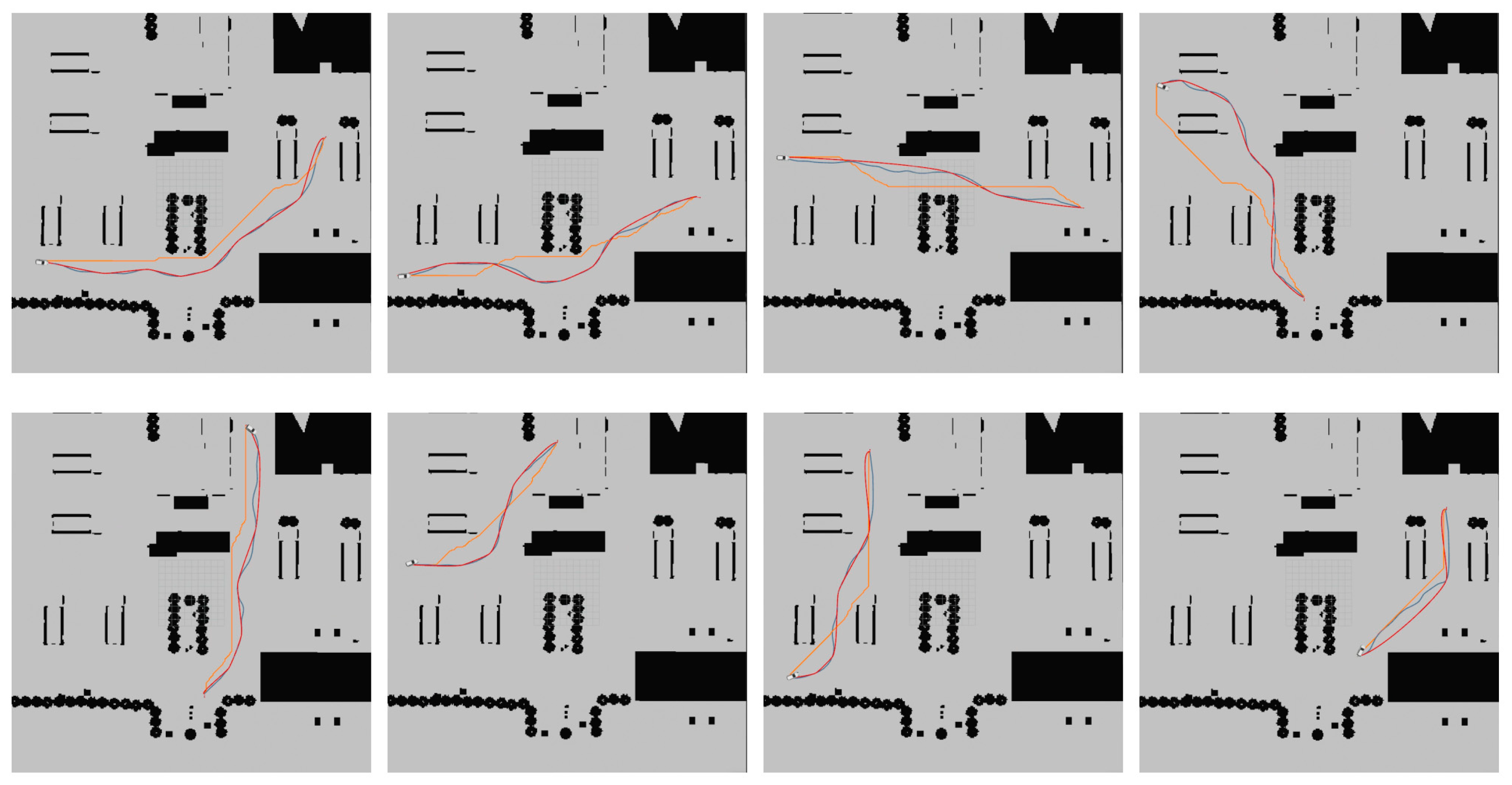

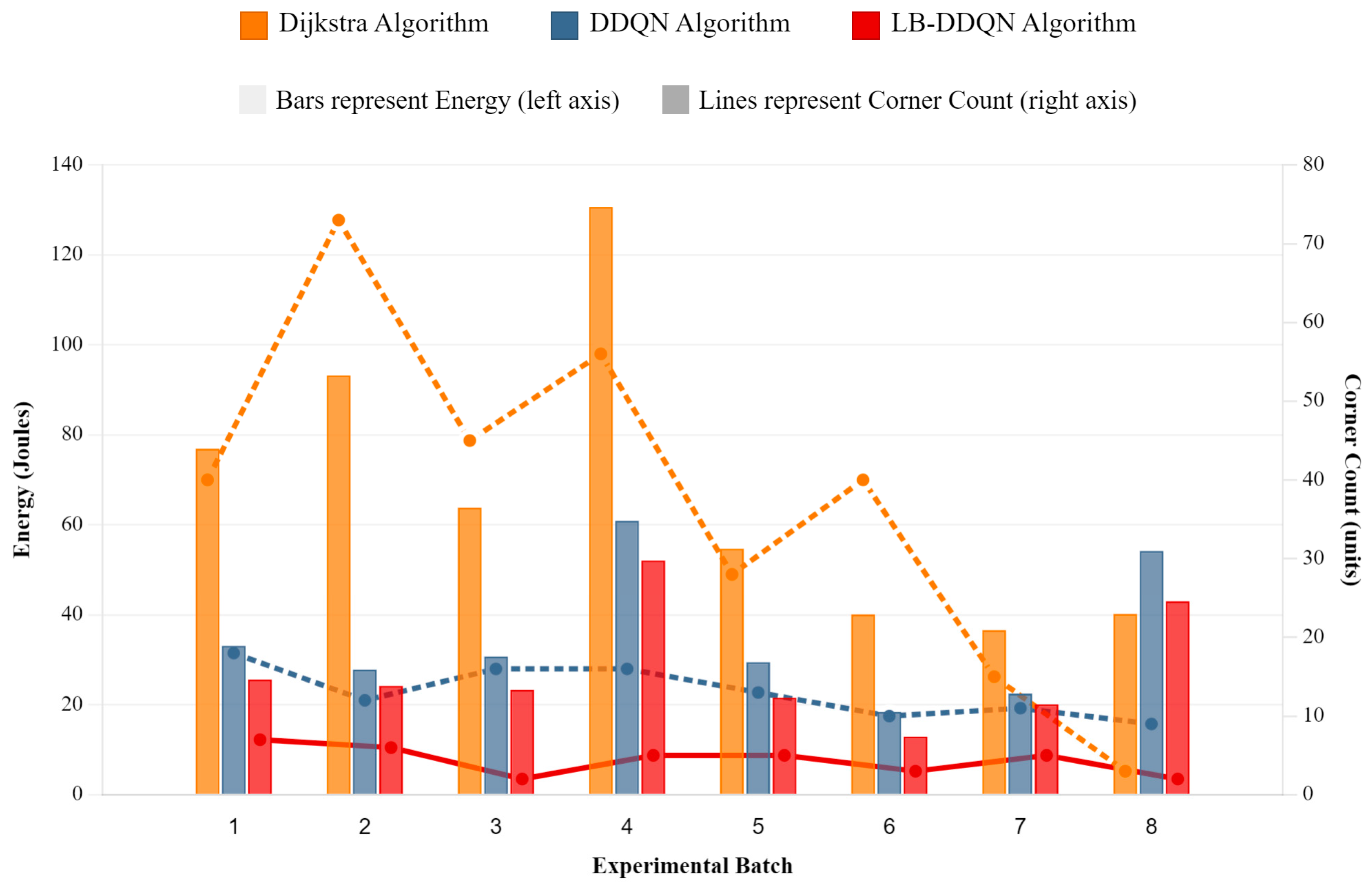

4.2. Comparative Experiment

4.2.1. Comprehensive Evaluation of Safety Performance

4.2.2. Comprehensive Evaluation of Planning Energy Efficiency

4.3. Model Deployment

5. Conclusions

6. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | | | | |
|---|---|---|---|---|
| Dijkstra | 1.905 | 1.018 | 1.571 | 0.211 |
| DDQN | 0.410 | 0.098 | 0.218 | 0.097 |
| LB-DDQN | 0.330 | 0.072 | 0.210 | 0.022 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jin, S.; Zhang, X.; Hu, Y.; Liu, R.; Wang, Q.; He, H.; Liao, J.; Zeng, L. Research on Mobile Agent Path Planning Based on Deep Reinforcement Learning. Systems 2025, 13, 385. https://doi.org/10.3390/systems13050385