Abstract

The system of systems (SoS) is essential for integrating independent systems to meet increasing service demands. However, its growing complexity leads to disruptions that are difficult to predict and mitigate, making resilience analysis a critical aspect of SoS development. Resilience reflects an SoS’s ability to absorb and adapt to disruptions, yet its quantitative assessment remains challenging due to diverse stakeholders. To address this, we propose a model-based approach leveraging ArchiMate for SoS visualization and quantitative modeling, combined with a resilience indicator derived from model simulations. This indicator identifies critical constituent systems (CSs) and informs resilient design strategies. Using Mobility as a Service (MaaS) as a case study, we demonstrate how the proposed method can be implemented in a specific SoS.

1. Introduction

An SoS is a set of systems or system elements that interact to provide a unique capability that none of the constituent systems (CSs) can accomplish on their own [1]. This concept is increasingly appearing in critical infrastructure and services, such as cross-regional electrical grid systems, globalized logistics systems, and multi-modal transportation networks. Its characteristics include the operational independence of individual systems, the managerial independence of the systems, geographical distribution, emergent behavior, and evolutionary development [2]. Conventional safety engineering methods primarily focus on predicting and preventing potential risks [3]. However, in an SoS, anticipating all potential disruptions and identifying structural risks is challenging because of its inherent complexity and emergent behavior. Moreover, because of interdependence, a minor error can be magnified and result in significant consequences. A notable example is the 2021 Suez Canal blockage, where a single vessel obstruction disrupted the global logistics SoS. This disruption led to worldwide supply chain breakdowns, halted production across multiple industries, and resulted in an estimated economic loss of USD 15 to 17 billion [4]. Therefore, evaluating resilience in the SoS design is crucial in ensuring its dependability [5].

Resilience refers to the ability to absorb and adapt in a changing environment [6,7]. “Absorb” reflects the remaining performance of the system after a disruption, while “adapt” describes the response and recovery process from this lowest level to a stable performance level. Resilience has been widely studied in various domains, including the organizational, social, economic, and engineering domains [8]. Resilience is an emergent structural property, meaning that its evaluation must consider not only the system’s ability to respond to specific disruptions but also the overall structure of the SoS [9]. Therefore, model-based approaches are well suited for identifying structural risks and assessing resilience. One significant barrier to SoS resilience evaluation is the involvement of independent and diverse stakeholders. Unlike traditional systems with closely coordinated stakeholders, SoS stakeholders are highly autonomous and dispersed, often operating without direct awareness of one another. This necessitates a resilience indicator that considers stakeholder interests and conflicts [10].

To address these challenges, we propose a model-based SoS resilience evaluation approach that incorporates multistakeholder perspectives to support resilience-oriented design. This approach extends workshop research [11]. The key contributions of this study are as follows:

1. An SoS modeling and simulation approach is proposed based on ArchiMate, an enterprise architecture (EA) modeling language that facilitates a shared understanding among stakeholders. Furthermore, we introduce a quantification of model elements and the relationships between them, supporting simulation-based resilience evaluation.

2. A stakeholder-centric resilience metric is proposed. This metric takes the interests of four stakeholder categories into account: users, SoS organizers, CS providers, and society. A cooperative game among these stakeholders is employed to determine the consensus on the resilience metric.

3. A case study on MaaS is implemented. We apply the proposed approach to develop an SoS model for MaaS and evaluate its resilience. Through this evaluation, we identify resilience bottlenecks within the MaaS, providing insights for resilience improvement.

2. Related Works

2.1. SoS Modeling and Simulation

Early SoS research focused primarily on defining SoS and delineating its boundaries in relation to a single system [12,13]. In the late 20th century, some scholars began exploring the architectural aspects of SoS, attempting to employ specific terms and graphical elements to depict the constituents of SoS and provide fundamental principles for constructing overall architectures [2,14,15]. As the concept of SoS gradually crystallized, spurred by advancements in information technology and driven by evolving demands, mature frameworks for SoS emerged. The initial efforts were observed in the military domain, where the United States Department of Defense introduced the Department of Defense Architecture Framework (DoDAF) as a foundational approach to SoS modeling. DoDAF excels in modeling mission-critical systems with detailed capability, operational, and technical views, making it well suited to structured, defense-oriented SoSs; however, its complexity and specialization may limit its flexibility and adaptability in open, enterprise-level contexts. Subsequently, a series of SoS modeling and simulation approaches evolved from DoDAF, leading to frameworks such as the Ministry of Defence Architecture Framework (MODAF) and the Unified Architecture Framework (UAF) [16,17,18,19]. In the field of software engineering, modeling languages such as UML [20] and SysML [21,22] have also been employed for modeling SoSs that are closely tied to information technology, owing to their mature notations and simulation capabilities. For cross-industry SoSs, High-Level Architecture (HLA) provides a universal standard for interaction, aiding in the establishment of heterogeneous SoS models [23].
Furthermore, certain frameworks from the EA domain, such as the Zachman Framework [24] and The Open Group Architecture Framework (TOGAF) [25], along with modeling tools like Business Process Model and Notation (BPMN) [26], have been utilized to guide the modeling of SoS from perspectives related to requirements and management. Among these, TOGAF enables holistic and flexible modeling of complex organizational systems, facilitating mutual understanding among cross-domain stakeholders by explicitly linking business objectives, operational processes, and technical architectures.

Alternative approaches originate from systems engineering. One prominent category is continuous modeling techniques, exemplified by System Dynamics (SD), which is commonly used for modeling physical SoS. For instance, Watson [27,28] utilized SD to model ecological SoS to analyze fluctuations in biological populations. Kumar [29] employed bond graphs to model autonomous vehicles and simulated the model using SD based on physical differential equations. Furthermore, Zhou [30] utilized the Functional Resonance Analysis Method (FRAM) to model healthcare-related SoSs, encompassing simulation of inherent uncertainties. Another category of methods is based on discrete events and states, which are better suited for simulating IT-related SoS. Jamshidi [31] provides a detailed account of employing Discrete Event System Specification (DEVS) for constructing SoS models. Petri nets [18,32,33] and Markov chains [34,35] have also been utilized to model and simulate SoSs involving intricate dynamic networks. Zhao [36] combined both continuous and discrete models to simulate microgrid SoS belonging to Cyber–Physical Systems (CPSs). A third category involves agent-based models (ABMs), which are inherently more stochastic than discrete methods and are often used to simulate social SoS. Andrade [37] utilized an ABM to simulate the occurrence and response of wildfires, highlighting the construction of a more resilient wildfire-response SoS. Martin [38] combined system dynamics and agent-based modeling to simulate and analyze SoSs constituted by human societies and ecological systems. Tan [39] proposes a dynamic agent-based model for quantifying the seismic resilience of oil storage tanks. Lastly, certain graph theory models have also been utilized for modeling and simulating SoS. For instance, Kumar [40] employed a multilevel tree model based on bond graphs to analyze an intelligent transportation system. 
Xu [41,42] investigated the resilience of heterogeneous SoS using a network model. Jiang [43] used a hypergraph model to model and analyze heterogeneous robot groups. Farid [44] used a hetero-functional graph to model and quantify the resilience of energy–water SoSs. In addition, some studies integrate multiple modeling approaches for a more comprehensive SoS representation; for example, Joannou employed rich pictures and causal loop diagrams to develop conceptual models, adopted DoDAF and SysML for static architectural modeling, used Simulink for dynamic simulation, and finally applied the resulting model to water supply resilience evaluation [45].

The architecture and simulation models discussed above each have their own advantages and disadvantages and are tailored to specific application domains; these are summarized in Table 1. For the SoS feature explored in this study, namely multiple stakeholder perspectives, many of these approaches pose a steep learning curve for stakeholders without an engineering background, leading to difficulties in understanding and potential communication barriers. The EA modeling language ArchiMate is well suited to this study’s objective of incorporating multiple stakeholders in modeling. It facilitates the creation of intuitive visual models, enabling stakeholders to quickly comprehend the SoS structure and the significance of resilience. Furthermore, compared with models that use no specific modeling language, ArchiMate offers general semantics and a platform for communication among stakeholders from different domains, especially business managers and shareholders with decision-making authority. Finally, ArchiMate provides a framework for iteration, so employing it will also facilitate further research into resilience assurance across the whole SoS life cycle [46]. Therefore, in Section 3.1 and Section 3.2, we construct a static SoS model and a quantitative simulation based on ArchiMate.

Table 1.

Comparison of architecture and simulation models for SoS modeling.

2.2. Resilience Engineering

Resilience in the field of engineering has been studied systematically since the inception of the Resilience Engineering Association at its first conference in 2004. Research has since shifted from defining resilience to developing modeling and measurement techniques [47]. Quantitative approaches to SoS resilience analysis can be classified into four categories based on how evaluation results are presented.

Indirect methods: These methods do not define resilience directly but instead use related quantifiable indicators, such as uncertainty [48] and criticality [49], to identify bottlenecks within the SoS for resilience design. Additionally, the resilience matrix proposed by Linkov incorporates detailed indicators in four domains (physical, information, cognitive, and social) across the four stages of resilience (prepare, absorb, recover, and adapt), and has led to a series of derived methods for evaluating resilience guidelines [50,51]. These methods consider survival and recovery capacity in detail and are easy to implement in engineering practice, but they lack an overall and complete evaluation of the SoS.

Probability methods: These methods consider both the likelihood of risk occurrence and the effectiveness probability of response measures. Based on models such as Bayesian networks [52,53,54,55] and state trees [56], they provide probabilistic interpretations or accident distributions of the resilience of the entire SoS. These methods can effectively illustrate the SoS’s ability to respond to risks but are heavily dependent on historical data and predefined risk scenarios, making them less effective in assessing unforeseen disruptions and black swan events. Additionally, they primarily focus on individual risk probabilities, often neglecting the complex interdependencies among system components and the adaptive capacity of SoS in dynamic environments.

Indicator methods: These methods use directly measurable indicators related to disruption response to evaluate resilience. Typical indicators include response and recovery time [57,58] and the number of operational CSs (both after failure and recovery) [59], among others. These methods heavily depend on the selection of measurable indicators, which may introduce bias and fail to capture the true resilience of the system, as easily quantifiable metrics do not always correspond to the system’s actual ability to withstand and recover from disruptions.

Performance methods: These methods measure resilience by calculating the loss of SoS performance during a disruption. For instance, the mission success rate is used as a performance indicator for resilience in military SoS [60], electric power is used to gauge the resilience of power infrastructure SoS [61], and population size is used to evaluate the resilience of ecological SoS [27]. Defining performance is crucial for these methods, particularly when dealing with multiple stakeholders with differing requirements within the SoS. There have also been studies attempting to integrate indicator methods and performance methods into a single metric [23]. However, these methods struggle to accommodate diverse stakeholder priorities and offer limited guidance for resilience improvements.

Compared to the aforementioned methods, the proposed resilience evaluation approach offers the advantages summarized in Table 2. In Section 3.3, this study proposes a resilience quantification approach based on performance methods. First, a disruption model independent of risk analysis is introduced. Second, a multistakeholder consensus-based evaluation of resilience is incorporated. Finally, an importance coefficient is introduced to highlight bottlenecks and guide resilience-oriented design.

Table 2.

The proposed resilience evaluation approach compared to existing methods.

3. Methodology

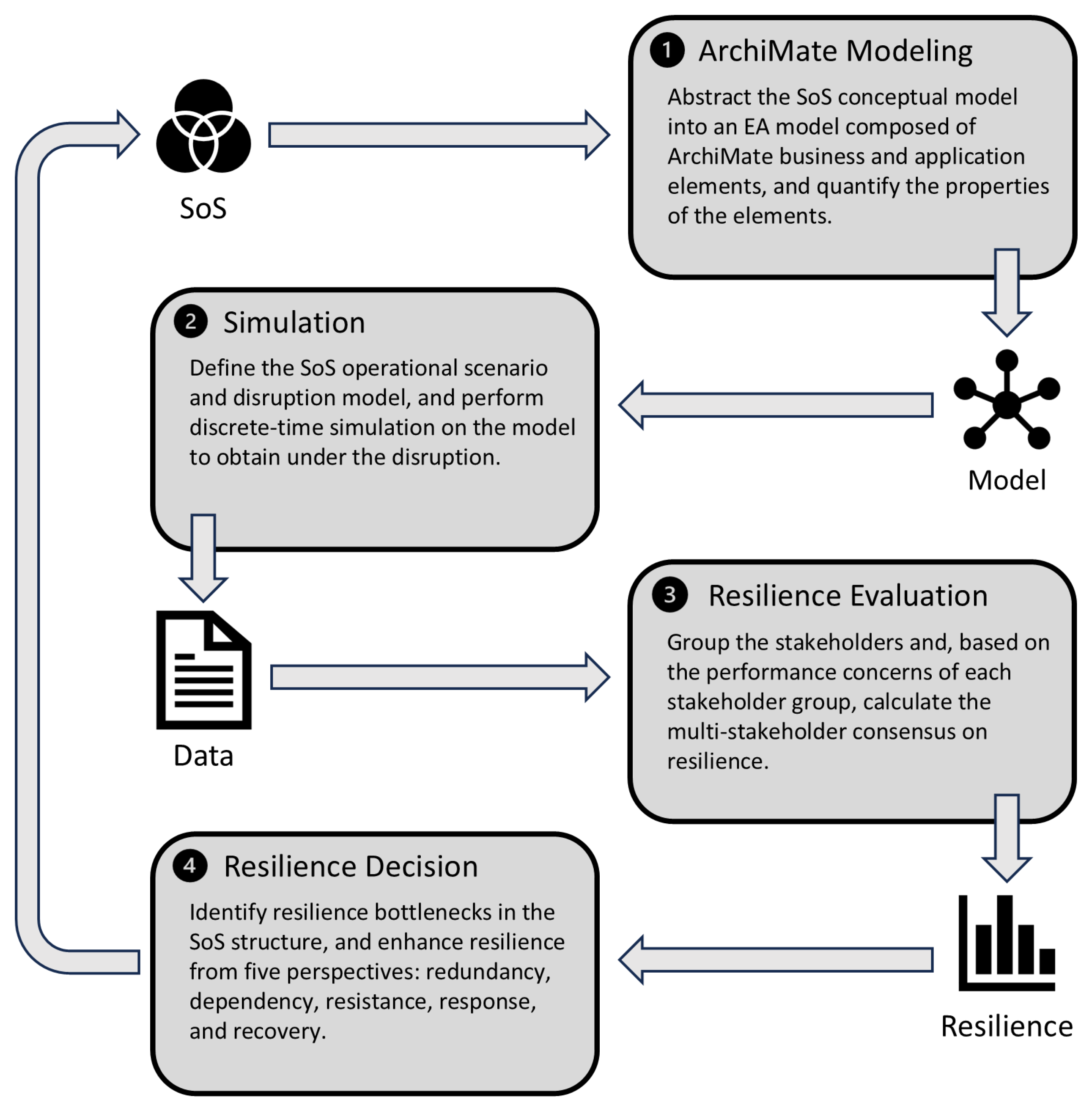

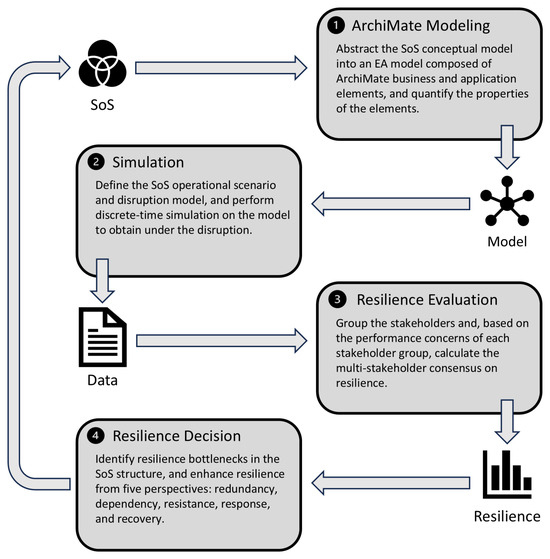

The proposed framework, as illustrated in Figure 1, consists of ArchiMate modeling, simulation, resilience evaluation, and resilience-informed decision making. This method has three key objectives: (1) to provide quantitative metrics for evaluating the resilience of the SoS, (2) to identify critical CS services, and (3) to offer recommendations for resilience-oriented design. In the SoS life cycle defined in ISO 21839 [62], the proposed framework facilitates resilience evaluation to support SoS design in the development phase. During the utilization phase, the proposed indicators remain applicable for resilience monitoring. When changes occur in the SoS environment or internal structure (e.g., legal amendments or the addition or removal of CSs), the SoS undergoes an iterative phase for resilience reevaluation and redesign. Table 3 summarizes the variables and parameters introduced in this section along with their definitions.

Figure 1.

Overview of the proposed approach.

Table 3.

Definitions of identifiers in the methodology section.

3.1. ArchiMate Modeling

The EA tool ArchiMate offers a standardized modeling language for describing the operation of business processes, organizational structures, information flows, IT systems, and technical infrastructure. Built upon the TOGAF framework, it facilitates the end-to-end process from requirements analysis to organizational design and iterative improvements [63]. The process of modeling the SoS using the TOGAF framework includes the development of the SoS conceptual model, the business architecture model, the application architecture model, and the technology architecture model. This research employs its business architecture and application architecture to establish a description of the static structure of the SoS and the relationships among its CSs.

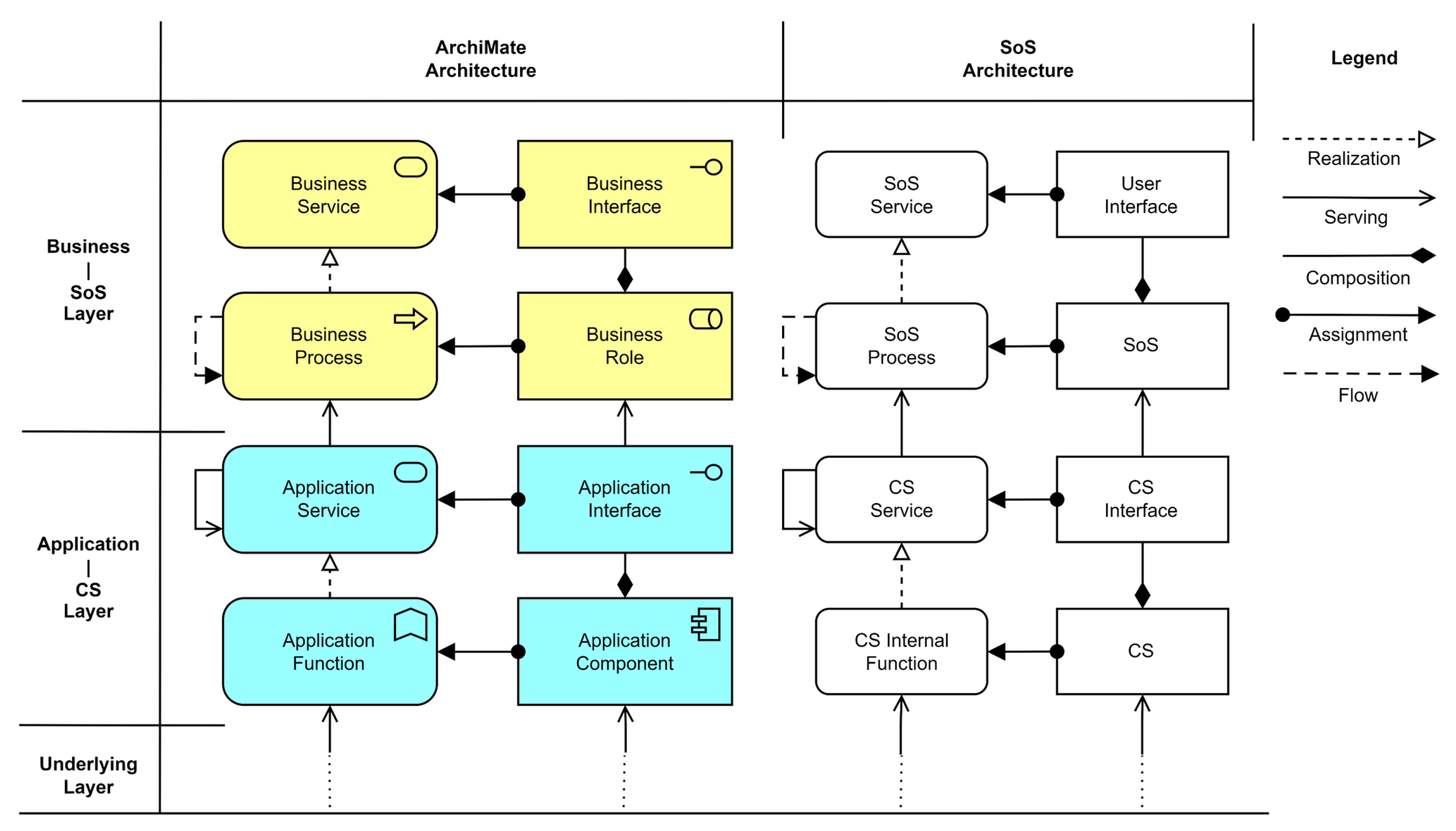

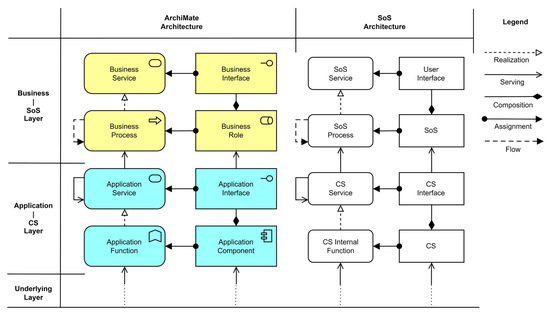

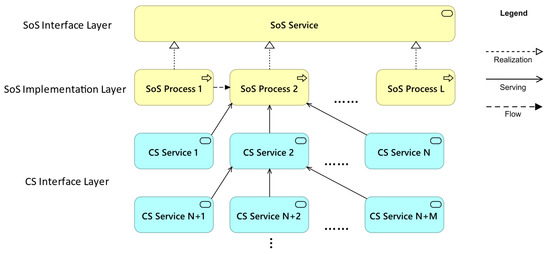

As illustrated in Figure 2, the real-world SoS is abstracted into a conceptual SoS architecture and matched with the concepts in the ArchiMate architecture. The three layers of ArchiMate’s core architecture (the business layer, application layer, and technology layer) correspond to the SoS layer, the CS layer, and the system elements layer in the SoS architecture, respectively. Each layer consists of an interface layer that provides external services and an implementation layer that handles internal functionalities. This research focuses on modeling the relationship between CSs and the SoS; therefore, the internal composition of CS functions and their lower-level elements is omitted from the illustration, even though ArchiMate provides corresponding concepts for them. Table 4 provides definitions for key ArchiMate elements used in this study. The application layer defines the composition and interoperability of CSs, while the business layer expands the model to include additional stakeholders such as users, society, and SoS organizers. Unlike conventional models that focus solely on the flat, structural design of SoS, this model adopts a multidimensional perspective, capturing both its internal configuration and its broader socio-technical interactions.

Figure 2.

Correspondence between the SoS architecture and the ArchiMate architecture.

Table 4.

Definitions of elements in ArchiMate [63].

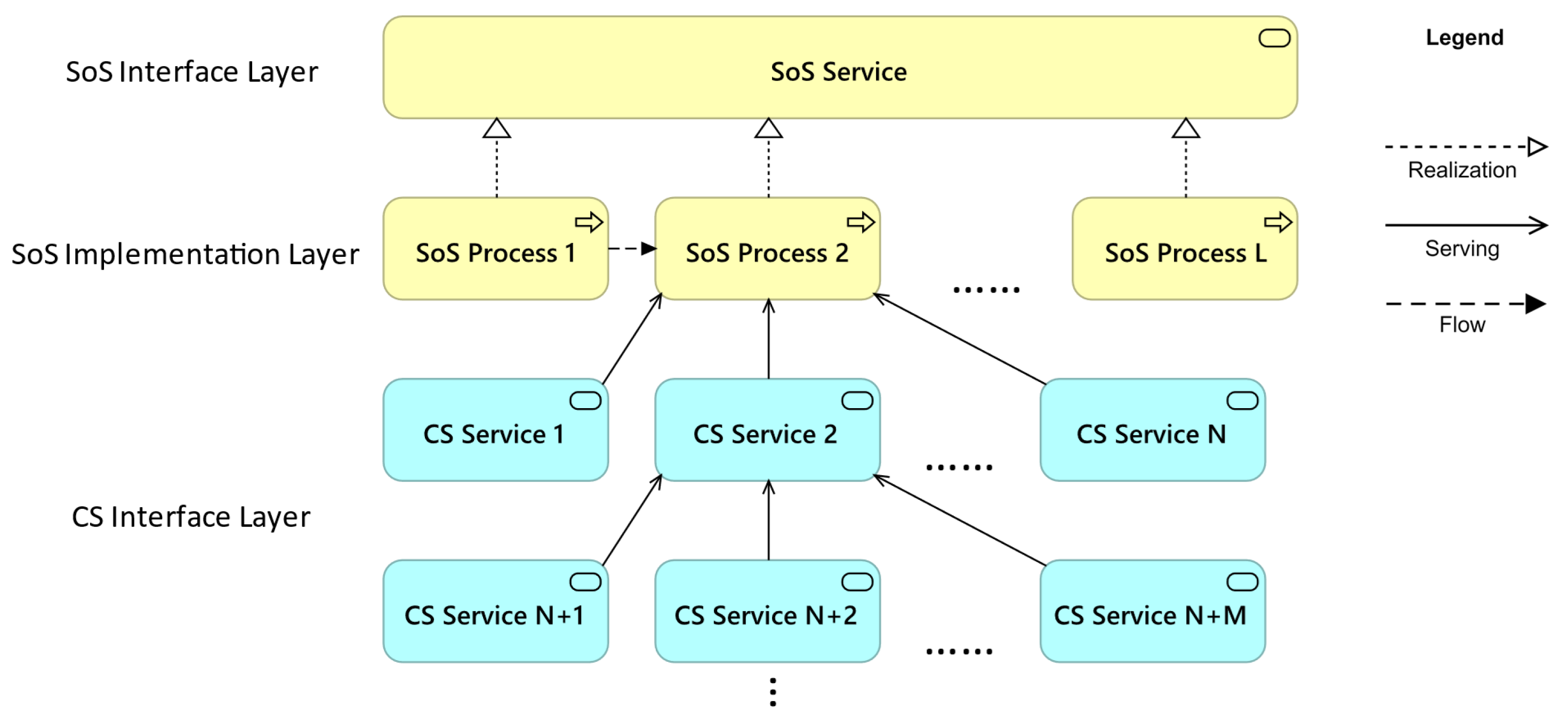

Following the correspondence in Figure 2, an SoS can be represented as the ArchiMate model depicted in Figure 3. The model comprises four key elements: two nodes (the SoS process and the CS service) and two directed edges representing the flow and serving relationships. The implementation of an SoS service follows a sequential execution of SoS processes, each requiring a certain amount of time to complete. When a specific SoS process is triggered, it utilizes the corresponding CS service, which may include information technology services (e.g., data provisioning), socio-technical services (e.g., transportation), or production and market services (e.g., inventory management). This operational logic serves as the foundation for the simulation detailed below. To enable quantitative simulation, key properties have been defined for these elements.

Figure 3.

An example of an SoS–ArchiMate model.

The flow relationship depicts the movement of users between the business processes and does not possess any inherent properties.

The serving relationship denotes that an element delivers its functionality to another element. It has one property, the serving dependency, which quantifies the degree of dependency of the target on the source in a serving relationship. This property is the answer to the following question: “How much source service is required when a target service is requested?”. It is defined as the product of request probability and absence tolerance, and it ranges from 0 to 1. Request probability quantifies the frequency at which the source service is requested when the target service is needed. Absence tolerance quantifies the degree to which the target service can tolerate the unavailability of the source service. Both are quantified through a structured evaluation conducted among service-related stakeholders, using a quantitative rating table (see Table 5). For instance, if Service A frequently requires Service B, then the request probability of this serving relationship is 0.8. If Service A can tolerate a moderate absence of Service B, then the absence tolerance of this serving relationship is 0.6. The serving dependency is the product of the two, resulting in 0.48. In other words, when there are 100 requests for Service A, 80 requests are made for Service B.
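The arithmetic of the example above can be written as a minimal sketch. The function and argument names are illustrative assumptions and are not part of the tooling described in this paper.

```python
def serving_dependency(request_probability: float, absence_tolerance: float) -> float:
    """Product of the two stakeholder ratings, each expected to lie in [0, 1]."""
    for name, value in (("request_probability", request_probability),
                        ("absence_tolerance", absence_tolerance)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return request_probability * absence_tolerance


# Worked example from the text: Service A frequently requires Service B (0.8)
# and tolerates a moderate absence of Service B (0.6).
sd = serving_dependency(0.8, 0.6)
print(round(sd, 2))
```

Because both ratings are bounded by 0 and 1, their product is also bounded by 0 and 1, which matches the stated range of the serving dependency.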

Table 5.

Evaluation of request probability and absence tolerance.

CS service represents an explicitly defined, externally accessible application behavior provided by the CS. A CS service has two properties: CS Service Self Capacity and CS Service Real Capacity.

CS Service Self Capacity quantifies the maximum number of user requests processed per unit time, assuming unlimited external resources and constrained solely by the system’s inherent capacity. It is formally defined in Equation (1).

Equation (1) involves three quantities. One is the steady-state demand (in the absence of disruptions) for a CS service; this parameter ensures supply–demand equilibrium for services before disruptions occur. Another is determined by stakeholder-defined dependability requirements in the presence of potential CS risks and is expressed as a percentage. The last represents the impact of unexpected events on the performance of the CS service, also quantified as a percentage: 0% signifies a complete loss of CS service performance, while 100% indicates no impact on CS service performance. The specific disruption model is detailed in Section 3.2.

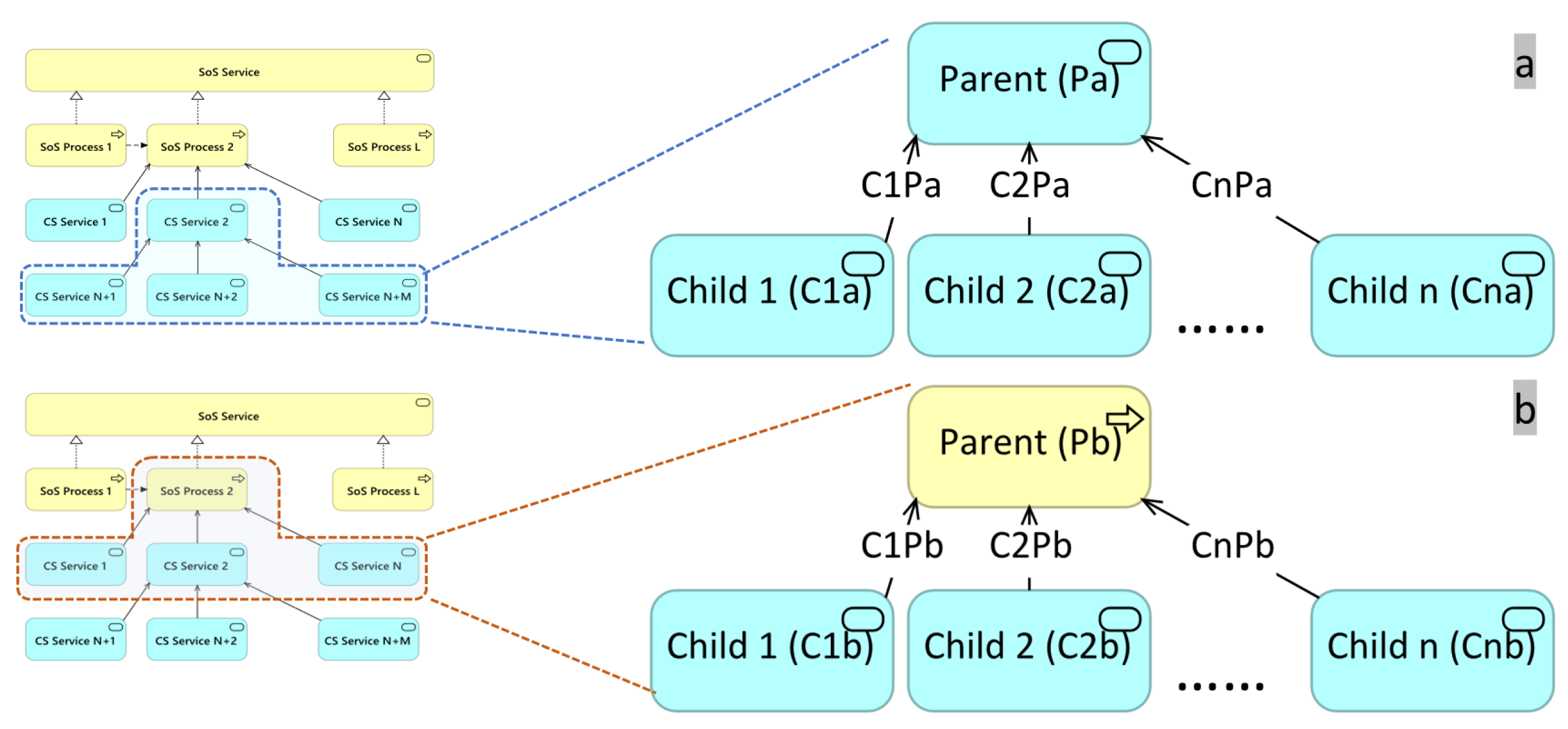

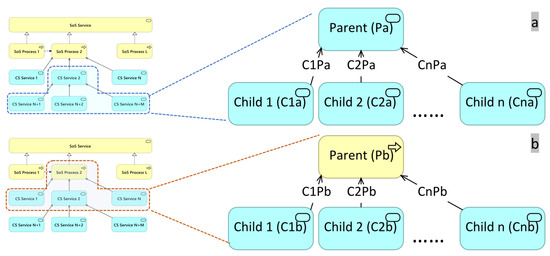

CS Service Real Capacity quantifies the number of requests that can be fulfilled per unit time, determined by both the service’s inherent capacity and the real capacity of the services it depends on. Figure 4a illustrates the scenario where a CS service relies on multiple CS services: the parent element depends on several child elements, each connected to it by a serving relationship with its own serving dependency. CS Service Real Capacity is formally defined in Equation (2), which indicates that it depends on the weakest point: either the service’s own self capacity or the service capacity of one of its dependencies.

Figure 4.

A parent element relies on other child CS services. (a) A scenario where the parent element is a CS service and depends on other CS services. (b) A scenario where the parent element is the SoS process and depends on other CS services.

The SoS process represents a sequence of business behaviors that achieves a specific result, such as a defined set of products or business services. It has two properties: SoS process regular time and SoS process capacity.

SoS process regular time quantifies the time required to complete the target SoS process in the absence of disruptions. It is derived from the average time taken to complete the SoS process.

SoS process capacity quantifies the number of requests that can be fulfilled for the current process, determined by the real capacity of the CS services on which the process depends. Figure 4b shows the scenario where a parent SoS process relies on multiple CS services. SoS process capacity is determined by Equation (3). Similar to the computation of CS Service Real Capacity, Equation (3) signifies that SoS process capacity depends on the weakest service capability among the CS services the process relies on.
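The weakest-point logic described for Equations (2) and (3) can be sketched as follows. The exact form of the equations is not reproduced here, so this sketch rests on an assumption: a child service with real capacity c and serving dependency d can back c / d requests of its parent. The class, field, and function names are hypothetical, and the dependency graph is assumed to be acyclic.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class CSService:
    name: str
    self_capacity: float  # requests per unit time, limited only by the service itself
    # (child service, serving dependency) pairs; hypothetical data layout
    children: List[Tuple["CSService", float]] = field(default_factory=list)


def real_capacity(service: CSService) -> float:
    """Weakest-point capacity of a CS service (assumed form of Equation (2))."""
    limits = [service.self_capacity]
    for child, dependency in service.children:
        if dependency > 0:
            # Assumption: a child with real capacity c backs c / dependency parent requests.
            limits.append(real_capacity(child) / dependency)
    return min(limits)


def process_capacity(services: List[Tuple[CSService, float]]) -> float:
    """Weakest-point capacity of an SoS process (assumed form of Equation (3))."""
    limits = [real_capacity(s) / d for s, d in services if d > 0]
    return min(limits) if limits else float("inf")


# Illustrative numbers only.
data = CSService("data", self_capacity=100.0)
booking = CSService("booking", self_capacity=120.0, children=[(data, 0.5)])
payment = CSService("payment", self_capacity=50.0)

print(real_capacity(booking))                              # limited by its own capacity
print(process_capacity([(booking, 1.0), (payment, 0.5)]))  # limited by payment
```

In this example the data service could back 200 booking requests, so the booking service is limited by its own capacity, while the process as a whole is limited by the payment service.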

3.2. Simulation

Resilience is highly context-dependent, influenced not only by the objective structure but also by the operational scenario and the nature of disruptive events [5]. Therefore, defining the operational scenario and disruption model is essential for resilience simulation.

Assumption of operational scenario: The user agent represents the source of service requests as well as the entity being served. Based on requirement analysis, the number of users generated per unit time can be determined. The generated agents simulate request behavior within the SoS, beginning with the initial SoS process and sequentially executing each subsequent process until completion. Each agent is assumed to be in one of three states. If the SoS process is available, meaning that it has remaining capacity (as defined in Equation (3)), then the agent enters the running state and occupies capacity at the current time step. Otherwise, the agent enters the blocking state. Upon completion of an SoS process, the agent transitions to the ready state in preparation for the next SoS process. Given the configured number of users generated per unit time, the steady-state demand in Equation (1) can be determined through steady-state simulation. In the steady-state simulation, disruptions are absent, ensuring a perfectly balanced supply and demand for services.

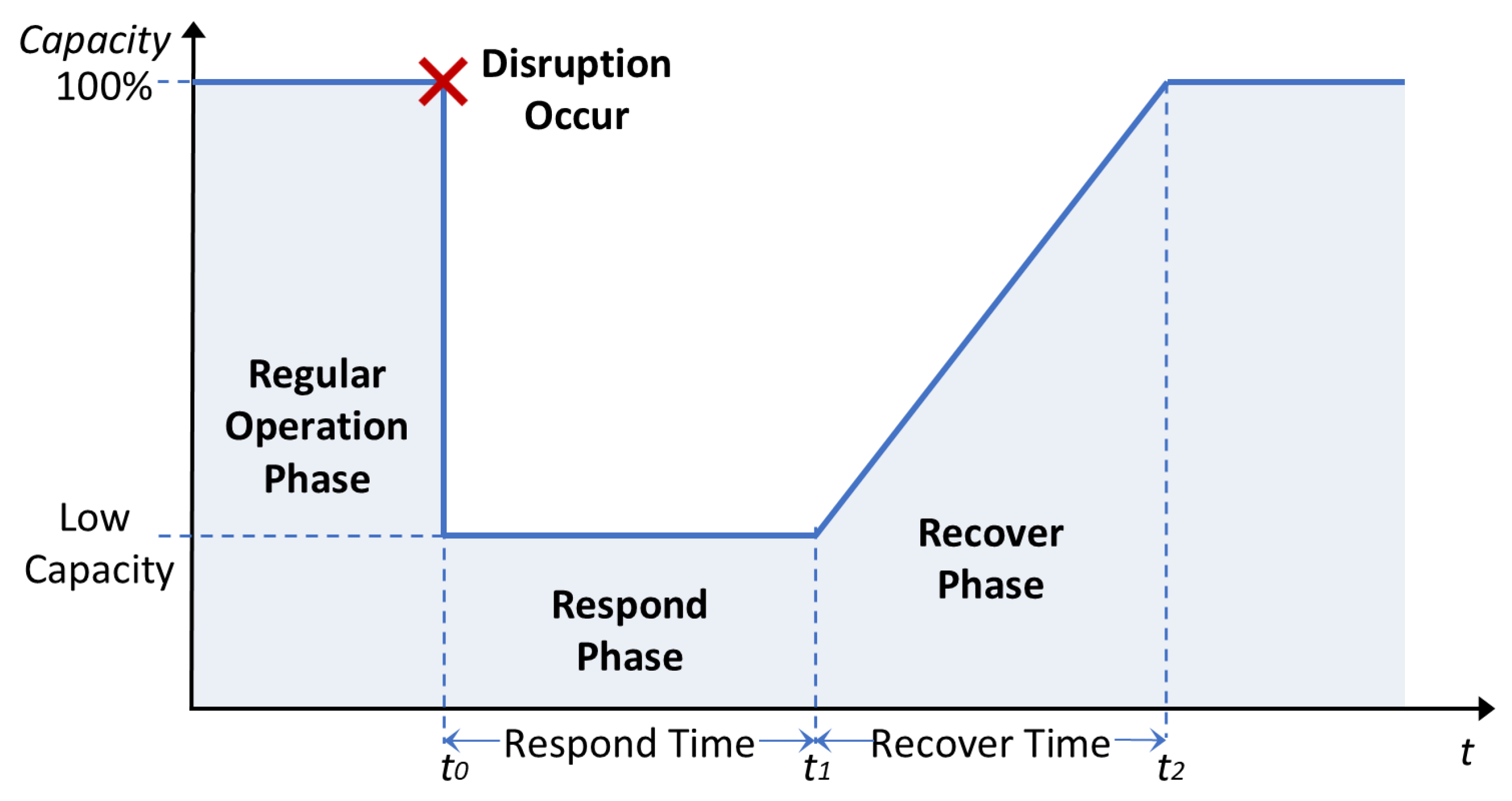

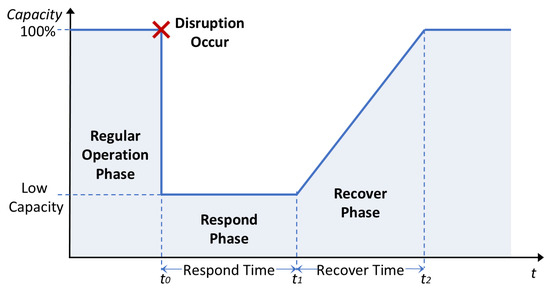

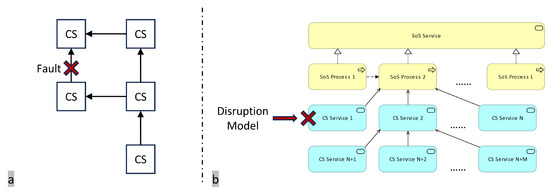

Assumption of disruption model: The disruption model captures the impact of disruptive events on CS services, as illustrated in Figure 5. Disruptive events can originate from any CS function or from the external environment. This study does not examine the causes of these events but rather focuses on their impact on CS services, as resilience primarily concerns how the failure of a CS function affects the integrity of the SoS. At the time the disruption occurs, the capacity of the affected CS service immediately drops to a low level, which indicates the CS service’s resistance capability to disruption. After some delay, the affected CS service starts to recover; the time interval between the disruption and the start of recovery reflects the CS service’s response capability to disruption. Recovery ends when the CS service fully recovers its original capacity; the time interval between the start of recovery and full recovery quantifies the CS service’s recovery capability following a disruption. The specific values of the low capacity level, the response interval, and the recovery interval need to be set in the simulation.

Figure 5.

The disruption model assumed in this study.
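A minimal sketch of the three-parameter disruption model is given below. It returns the fraction of nominal capacity that a CS service retains at a given time. The linear recovery ramp is an assumption made for illustration, since the shape of the recovery segment is not specified in the text, and all names are hypothetical.

```python
def capacity_factor(t: float, t_disrupt: float, low_level: float,
                    response_time: float, recovery_time: float) -> float:
    """Fraction of nominal capacity at time t (1.0 means unaffected)."""
    t_recover_start = t_disrupt + response_time
    t_recovered = t_recover_start + recovery_time
    if t < t_disrupt or t >= t_recovered:
        return 1.0                      # before the disruption or after full recovery
    if t < t_recover_start:
        return low_level                # response phase: capacity stays at the low level
    # Recovery phase. Assumption: capacity ramps back linearly.
    progress = (t - t_recover_start) / recovery_time
    return low_level + (1.0 - low_level) * progress


# Illustrative parameters: disruption at t = 10, capacity drops to 25%,
# recovery starts 5 time units later and takes 10 time units.
for t in (9, 10, 15, 20, 25):
    print(t, capacity_factor(t, t_disrupt=10, low_level=0.25,
                             response_time=5, recovery_time=10))
```

Multiplying this factor by the undisrupted capacity of the affected service gives a time-varying capacity that a simulation can consume at each time step.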

Generally, the three aforementioned parameters of the disruption model can be determined by the following three methods.

- Risk-informed method: Risk knowledge can be acquired using various mature techniques, such as fault tree analysis. If sufficient risk knowledge is available, then the parameters of the disruption model at the CS service level can be determined through calculations or simulations at the system or component level. However, this approach may result in a reactive design paradigm, which is not conducive to macro-level resilient design [5].

- History-informed method: If ample historical data on CS service disruptions are available, then the parameters of the disruption model can be directly inferred from representative cases. However, this approach is often inadequate for predicting unknown scenarios.

- Requirement-informed method: Resilience engineering emphasizes the macro-level capacity to withstand disruptions. From this perspective, defining the parameters of the disruption model based on top–down requirements is a reasonable approach. This approach entails determining the extent of disruption that stakeholders expect the SoS to withstand while maintaining sufficient resilience and ensuring acceptable stakeholder losses. In this case, the disruption model serves as a benchmark similar to those used in software performance testing.

The ArchiMate language itself does not include a dedicated simulation tool, but JArchi, a scripting plug-in for the Archi modeling tool, supports model computation and batch processing. Based on JArchi, we developed a discrete-time simulation program suitable for the model established in Section 3.1 (ArchiMate Version: 4.10.0, JArchi Version: 1.9.0) [64]. In the simulation, the state of the user agent and the properties of the CS change over time steps according to the equations in Section 3.1 and the settings in Section 3.2. The simulation procedure is shown in Algorithm 1. Specifically, the disruption model is iteratively applied to each CS service to evaluate the resilience of the SoS, as represented by the loop in lines 3–15. During the simulation of a CS service disruption, user agents are instantiated at each time step (line 6), the SoS’s current performance is computed (line 7), the next state of each user agent is determined (lines 8–10), and the capacity utilization of each CS service is assessed (line 11).

The simulation output for each CS service disruption comprises the following elements: the planned execution time for each user; the actual execution time for each user; the available service capacity; the service utilization for each CS service; the SoS process at each simulation time; the number of active users within the entire SoS; and the number of queued users within the entire SoS.

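| Algorithm 1 Simulation for SoS model. | |
|---|---|
| Require: SoS model (topology and properties) | |
| Ensure: Performance-related data | |
| 1: | Disruption Setting: low capacity level, response interval, recovery interval |
| 2: | User Setting: user-related parameters (e.g., number of users generated per unit time) |
| 3: | for each CS service in SoS Model do |
| 4: | Load Disruption to the CS service |
| 5: | for i = 1, 2, 3, ... do |
| 6: | Put the newly generated agents in ActiveAgentArray. |
| 7: | Traversal calculation of CS Service Real Capacity and SoS process capacity. |
| 8: | for each agent in ActiveAgentArray do |
| 9: | Function: StateMachine(agent) |
| 10: | end for |
| 11: | Traversal calculation of available service capacity and service utilization. |
| 12: | Store the data of time i |
| 13: | end for |
| 14: | Save the data of the CS service disruption in file format. |
| 15: | end for |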
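The inner loop of Algorithm 1 (lines 5–13) can be illustrated with a simplified Python sketch. This is not the JArchi program referenced above. As a simplifying assumption, each SoS process is given a single time-varying capacity through a hypothetical `capacity_at(process, step)` callback, which stands in for the capacity propagation of Equations (2) and (3), and agents that are already running keep their slot even if capacity drops. All names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKING = "blocking"


@dataclass
class Agent:
    process: int = 0            # index of the current SoS process
    remaining: int = 0          # time steps left in the current process
    state: State = State.READY
    waited: int = 0             # time steps spent in the blocking state


def simulate(regular_times: List[int],
             capacity_at: Callable[[int, int], int],
             users_per_step: int,
             arrival_steps: int,
             max_steps: int = 10_000) -> Tuple[Dict[str, List[int]], List[Agent]]:
    """Run one scenario; regular_times holds positive step counts per process."""
    active: List[Agent] = []
    finished: List[Agent] = []
    history: Dict[str, List[int]] = {"running": [], "blocked": []}
    last = len(regular_times)
    for step in range(max_steps):
        if step < arrival_steps:
            active.extend(Agent() for _ in range(users_per_step))
        elif not active:
            break
        free = [capacity_at(p, step) for p in range(last)]
        for agent in active:                 # running agents keep their slot
            if agent.state is State.RUNNING:
                free[agent.process] -= 1
        for agent in active:                 # state machine for waiting agents
            if agent.state is State.RUNNING:
                continue
            if free[agent.process] > 0:
                free[agent.process] -= 1
                agent.state = State.RUNNING
                agent.remaining = regular_times[agent.process]
            else:
                agent.state = State.BLOCKING
                agent.waited += 1
        history["running"].append(sum(a.state is State.RUNNING for a in active))
        history["blocked"].append(sum(a.state is State.BLOCKING for a in active))
        for agent in active:                 # advance time by one step
            if agent.state is State.RUNNING:
                agent.remaining -= 1
                if agent.remaining <= 0:
                    agent.process += 1
                    agent.state = State.READY
        finished.extend(a for a in active if a.process == last)
        active = [a for a in active if a.process < last]
    return history, finished


regular_times = [1, 2]
for target in range(len(regular_times)):     # outer loop: disrupt each element in turn
    def disrupted(p: int, t: int, target: int = target) -> int:
        return 0 if (p == target and t < 2) else 2
    history, done = simulate(regular_times, disrupted, users_per_step=1, arrival_steps=3)
    print(target, sum(a.waited for a in done), history["blocked"])
```

The outer `for target` loop mirrors lines 3–15 of Algorithm 1 by applying one disruption at a time, and the recorded `history` plays the role of the per-step data stored in line 12.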

3.3. Resilience Evaluation

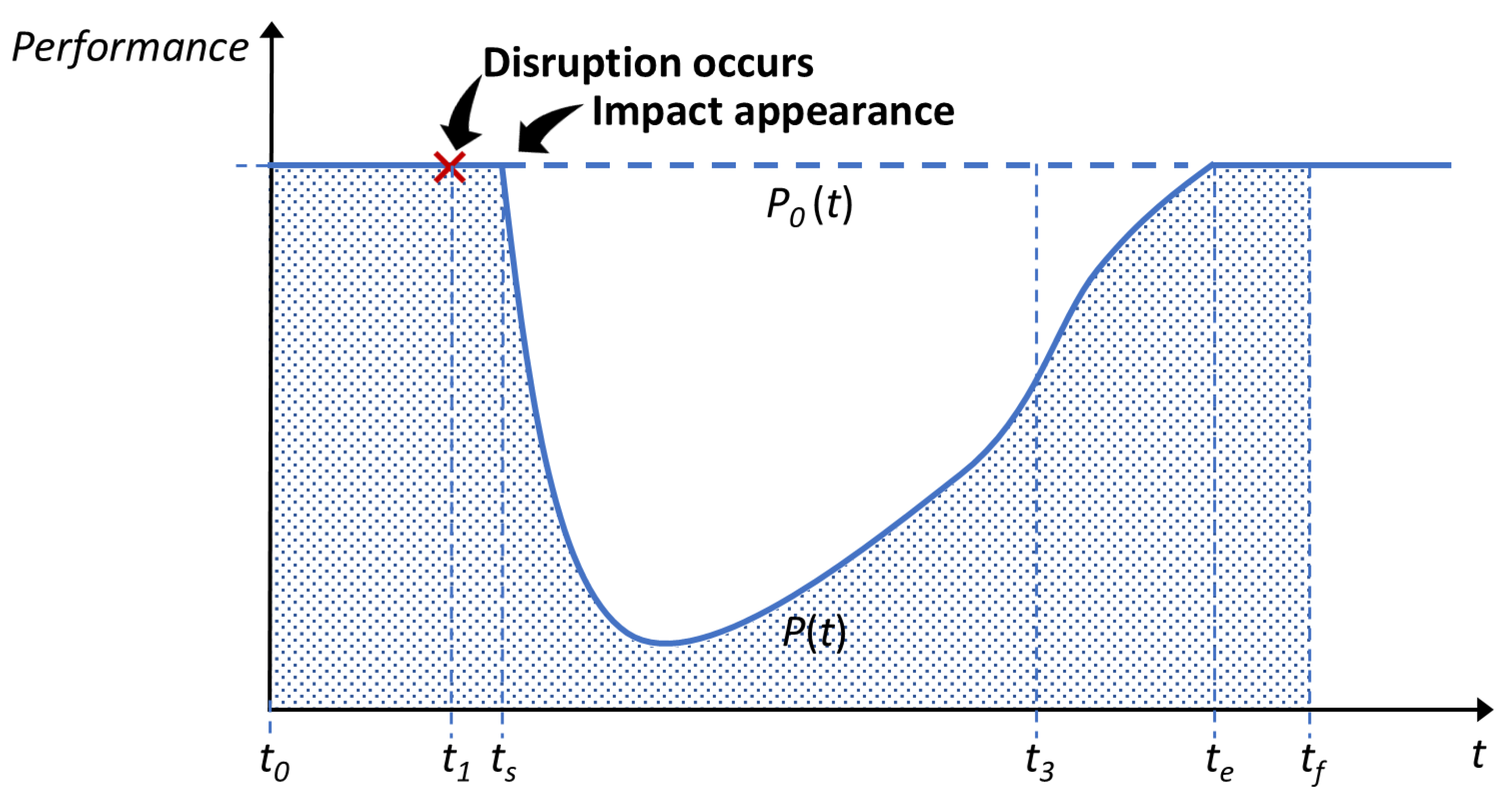

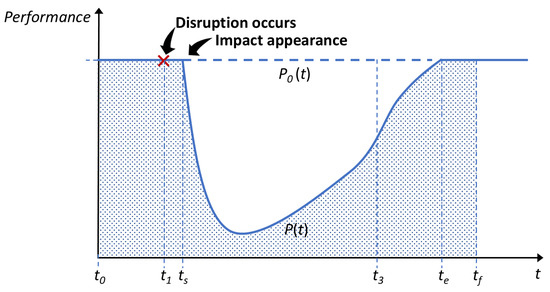

The performance method, which is currently the mainstream approach for resilience evaluation, employs a characteristic indicator as defined in Equation (4). Resilience is quantified based on the disrupted performance curve and the regular performance curve [61].

In this equation, one time parameter denotes the time at which the disruption impact becomes evident, while the other represents the duration required for the SoS to restore its regular performance following the disruption. Figure 6 illustrates the two performance curves defined in Equation (4).

Figure 6.

Performance curve under the disruption state and normal operation.
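A performance-based indicator of this kind is commonly computed as the ratio of the areas under the disrupted and regular performance curves over the evaluation window. The sketch below assumes that form, assumes both curves are sampled at the same unit time steps, and uses a simple rectangle rule; the function name and sample values are illustrative.

```python
from typing import Sequence


def resilience(disrupted: Sequence[float], regular: Sequence[float]) -> float:
    """Ratio of the areas under the disrupted and regular performance curves."""
    if len(disrupted) != len(regular):
        raise ValueError("both curves must cover the same time steps")
    total_regular = sum(regular)
    if total_regular <= 0:
        raise ValueError("regular performance must be positive over the window")
    return sum(disrupted) / total_regular


# Illustrative window: performance drops from 10 to 4, then recovers.
regular_curve = [10, 10, 10, 10, 10, 10]
disrupted_curve = [10, 4, 4, 7, 10, 10]
print(resilience(disrupted_curve, regular_curve))
```

A value of 1 means the disruption caused no performance loss within the window, and lower values indicate a larger cumulative loss.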

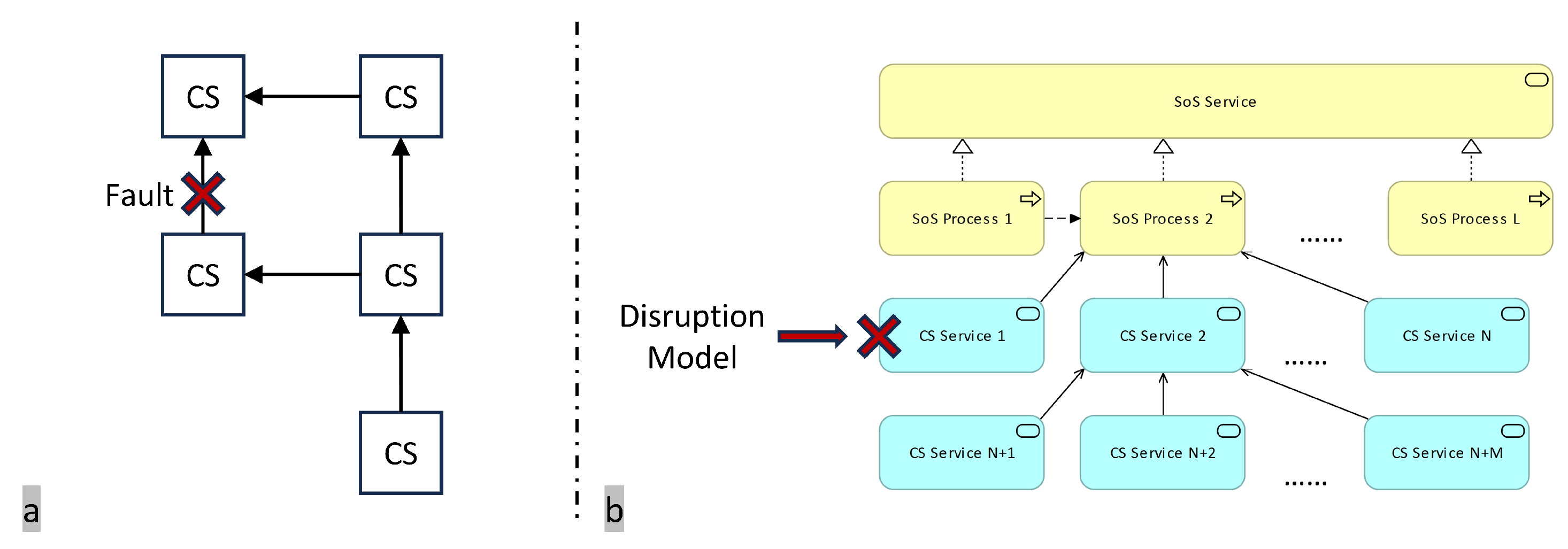

Building on Equation (4), Watson proposed a method for evaluating the resilience of SoS, as formulated in Equation (5) [27]. He developed a physical flow graph model for the SoS, depicted in Figure 7a, where nodes represent CSs and edges represent flows (e.g., energy flow within an ecological chain). By sequentially disrupting each individual edge (k edges in total) and analyzing the corresponding performance curves, he derived the overall resilience of the SoS. The time parameter in Equation (5) denotes the time required for full recovery.

Figure 7.

(a) A fault occurrence in Watson’s physical flow graph model. (b) A disruption model is introduced in the ArchiMate model.

This study further explores this resilience definition from the perspective of the established ArchiMate model and a multistakeholder approach.

First, we introduce the disruption model as mentioned in Section 3.2. The term “fault” in Equation (5) denotes the disruption of an edge in the graphical structure, which implies the complete failure of the source CS. However, as a system, the CS is neither atomic nor strictly binary in terms of functionality; that is, it is not simply either working or not working. Instead, it operates through three phases: disruption, response, and recovery. Therefore, we introduce the disruption model, whose parameters express survival and recovery capability, to simulate more detailed scenarios, as shown in Figure 7b. Resilience is defined in Equation (6), which quantifies the resilience of the SoS when CS service i experiences a disruption. Because the two time parameters shown in Figure 6 vary across different disruptions, we take the disruption occurrence time as the starting time, assuming a uniform disruption occurrence time in the simulation, and define the latest end time across all disruptions as the end time, so that all resilience computations use consistent time intervals.

Second, we introduce multiple stakeholders. In Equation (5), performance is defined in a singular manner. This is more characteristic of general systems, where stakeholders typically have closer relationships and more aligned objectives. In contrast, stakeholders in an SoS are often diverse, decentralized, and concerned with broader societal impacts and interests, resulting in a more heterogeneous definition of performance. However, considering the performance objectives of all individual stakeholders is infeasible. A feasible approach is to group stakeholders with similar interests [65]. Based on stakeholder theory, the SoS should consider internal stakeholders who focus on profit, such as CS service providers and the SoS organizer, and external stakeholders who prioritize Quality of Service (QoS), namely users and society, since these four stakeholder groups play a crucial role in SoS decision making [66]. The simulation outputs listed at the end of Section 3.2 can be used to calculate the performance indicators of each stakeholder group; they are summarized in Table 6.

Table 6. Stakeholders’ interests and the simulation data related to these interests.

Using Equation (6), the resilience indicators corresponding to the above performance indicators are derived, denoted as , , , and . The overall resilience indicator reflects the consensus among the four stakeholder groups. Equations (7) and (8) define a basic consensus process, where denotes the set of the four stakeholder groups, and represents the cost incurred by the corresponding stakeholder group when adjusting their resilience evaluation to reach consensus. This parameter is determined based on loss estimation in accordance with real-world conditions. This optimization determines the overall resilience indicator by minimizing the total adjustment cost incurred by all stakeholder groups when aligning their individual resilience evaluations to a consensus value.
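To make the idea of a minimum-cost consensus concrete, here is a minimal Python sketch. It assumes, as a simplification, that the objective is the cost-weighted sum of absolute adjustments between each group's evaluation and a single consensus value; Equations (7) and (8) may include further terms or constraints not captured here. Under that assumption the minimizers are the weighted medians of the evaluations, which can form an interval rather than a single point. The function names and sample numbers are hypothetical.

```python
def total_cost(evaluations, costs, consensus):
    """Cost-weighted sum of absolute adjustments needed to reach `consensus`."""
    return sum(c * abs(r - consensus) for r, c in zip(evaluations, costs))


def consensus_interval(evaluations, costs):
    """Return (low, high): every value in this range minimizes total_cost."""
    pairs = sorted(zip(evaluations, costs))
    half = sum(costs) / 2.0
    cumulative = 0.0
    low = None
    for value, cost in pairs:
        cumulative += cost
        if low is None and cumulative >= half:
            low = value  # smallest weighted median
        if cumulative > half:
            return low, value  # largest weighted median
    raise ValueError("costs must contain at least one positive entry")


# Hypothetical evaluations from four stakeholder groups with equal unit costs.
evaluations = [0.90, 0.80, 0.95, 0.85]
print(consensus_interval(evaluations, [1, 1, 1, 1]))
```

With equal costs the sketch returns an interval of equally cheap consensus values, which is one way a solution can fail to be unique.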

The solution of this consensus process is not unique. To ensure fairness in consensus formation, we introduce the Shapley value from cooperative game theory. We construct a cooperative game model . The player set is the set . T denotes any coalition composed of members of . The characteristic function equals the optimal value of Equations (9)–(11) below. Equation (9) identifies the solution most favorable to coalition T among the solutions of Equation (7), i.e., the one with the minimal total adjustment cost for T. Therefore, an additional constraint (10) is introduced, ensuring that the sum of all stakeholders’ resilience evaluation adjustments equals the optimal value of Equation (7), denoted as .

The Shapley value distributes benefits and costs according to marginal contributions and satisfies several desirable axiomatic properties, such as efficiency, symmetry, linearity, and the null player condition, which guarantees fairness in the allocation process [67,68]. As defined in Equation (12), the Shapley value assigned to stakeholder i is computed based on the characteristic function . It quantifies the resilience adjustment cost allocated to each stakeholder.
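The standard Shapley formula can be sketched in a few lines of Python. The example below enumerates all coalitions, which is practical for four players. The characteristic function used here is a toy stand-in (the squared sum of hypothetical unit costs), not the optimization of Equations (9)–(11), and the player labels are illustrative.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, characteristic):
    """Shapley value of each player for a characteristic function on frozensets."""
    n = len(players)
    values = {}
    for player in players:
        others = [p for p in players if p != player]
        total = 0.0
        for size in range(n):
            # weight of a coalition of this size that excludes `player`
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = frozenset(coalition)
                marginal = characteristic(without | {player}) - characteristic(without)
                total += weight * marginal
        values[player] = total
    return values


# Toy characteristic function (hypothetical): squared sum of unit costs.
unit_cost = {"organizer": 1.0, "providers": 2.0, "users": 1.0, "society": 0.5}


def toy_cost(coalition):
    return sum(unit_cost[p] for p in coalition) ** 2


phi = shapley_values(list(unit_cost), toy_cost)
print(phi)
```

The allocations sum to the value of the grand coalition (efficiency), and players with identical unit costs receive identical allocations (symmetry).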

By incorporating the Shapley value into the consensus process, the optimization problem formulated in Equations (13)–(15) determines the resilience evaluation by minimizing the total weighted adjustment cost across all stakeholder groups while ensuring that each stakeholder’s individual adjustment cost equals their allocated Shapley value , thereby maintaining a fair distribution of resilience adjustments.

Third, we introduce the importance coefficient. In Equation (5), the resilience of the SoS under each fault scenario is evaluated, and the overall resilience is computed as the average of these evaluations. As a result, disruptions with significant losses are given the same weight as those with minor losses. However, resilience refers to the capacity to withstand low-frequency, high-impact disruptions [5]. Therefore, the overall resilience indicator should account for the significance of critical CS services. Here, we introduce the coefficients , as shown in Equation (16), to quantify the relative importance of each CS service, where m denotes the total number of CS services. Under this definition, CS services with lower resilience are assigned higher importance weights.

The overall resilience of the SoS is defined as and shown in Equation (17).
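The weighting idea can be illustrated with a short Python sketch. Equations (16) and (17) are not reproduced here, so the sketch assumes one simple scheme with the stated property: each weight is proportional to the resilience loss (one minus resilience) of the corresponding CS service, and the overall resilience is the weighted sum. The service labels and values are hypothetical.

```python
def importance_weights(resilience_by_service):
    """Weights proportional to resilience loss (assumed form, sums to 1)."""
    losses = {name: 1.0 - r for name, r in resilience_by_service.items()}
    total_loss = sum(losses.values())
    if total_loss == 0:
        # no service causes any loss: fall back to uniform weights
        m = len(losses)
        return {name: 1.0 / m for name in losses}
    return {name: loss / total_loss for name, loss in losses.items()}


def overall_resilience(resilience_by_service):
    """Importance-weighted resilience across all disruption scenarios."""
    weights = importance_weights(resilience_by_service)
    return sum(weights[name] * r for name, r in resilience_by_service.items())


# Hypothetical consensus resilience values for three CS services.
sample = {"AS1": 0.95, "AS2": 0.80, "AS3": 0.90}
weights = importance_weights(sample)
ranking = sorted(weights, key=weights.get, reverse=True)
print(ranking, overall_resilience(sample))
```

Because low-resilience services receive larger weights, the weighted result is never above the plain average, and sorting by weight gives an importance ranking of the services.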

3.4. Resilience Decision

The purpose of this section is to demonstrate the significance of the proposed resilience evaluation method, namely providing a basis for decision making in the SoS resilience design phase. Three key questions arise in this phase:

- Q1: Among multiple SoS design options, which design option is preferable?

- Q2: Which CSs are the bottlenecks that need improvement when a design option lacks resilience?

- Q3: What measures should be taken based on identified bottlenecks?

For Q1, serves as an indicator for assessing the resilience of multiple design options, providing a quantitative basis for decision making.

For Q2, quantifies the criticality of CS service i. A larger value suggests that a disruption in CS service i would result in more significant losses for the SoS. This helps prioritize resilience measures by identifying critical CS services.

For Q3, it is first important to distinguish reliability from resilience. Reliability focuses on the system by predicting and mitigating risk-related factors (e.g., probability of failure and impact). Resilience, however, is not about avoiding failure but about ensuring system quality by monitoring and improving the system’s leading indicators (e.g., survival and recovery capability) [69]. Specifically, these indicators are related to robustness, self-healing capability, redundancy, and decentralization [70]. Table 7 shows the corresponding properties of these indicators in the SoS EA model established in this paper. Resilience engineers should consider potential disruptions and prepare the corresponding properties of critical CS services during the SoS design phase, and design monitoring mechanisms to ensure the resilience of the SoS during runtime.

Table 7. The leading indicators of resilience and corresponding improvement measures on critical CS services.

4. Case Study: Mobility as a Service

4.1. MaaS Introduction

The definition of MaaS, along with the organizational structure (including processes and supplier integration) used in this section, follows the Brussels MaaS trial report. The parameters in the model are only sample data derived from the assumptions in Section 3.2 [71]. MaaS seamlessly integrates mobility services and manages the provision of transport-related services, such as route planning, navigation, transportation, ticketing, and payment. Currently, the main operational mode of MaaS is the integration of existing mapping services, transportation companies, and payment companies. These companies operate independently and join MaaS through agreements to increase their profits through cooperation. Therefore, MaaS can be seen as an SoS, and the customers, MaaS organizers, integrated service providers, and society can be viewed as four stakeholder groups. MaaS is divided into six seamless business processes:

- Log in: MaaS verifies user identity and payment account authentication.

- Plan: An information integration process. MaaS provides users with travel options by integrating map data, vehicle availability, and transportation schedules.

- Order: A booking integration process. MaaS offers users a one-stop ticketing service by consolidating ticketing systems from various transportation platforms.

- Authenticate: MaaS verifies passengers’ identities upon boarding.

- Travel: A transportation integration process. MaaS combines various transportation modes to provide users with seamless travel and navigation services.

- Pay: A payment integration process. MaaS supports multiple payment methods and internally distributes revenue among different CSs.

The actual implementation of each business process involves multiple service providers, including transportation service providers, such as public transport, shared mobility, and taxis, as well as comprehensive service providers, such as integrated journey planners, mobility data aggregators, and online payment platforms. These providers are modeled as CSs that deliver services.

4.2. Method Implementation

4.2.1. Step 1: MaaS Modeling

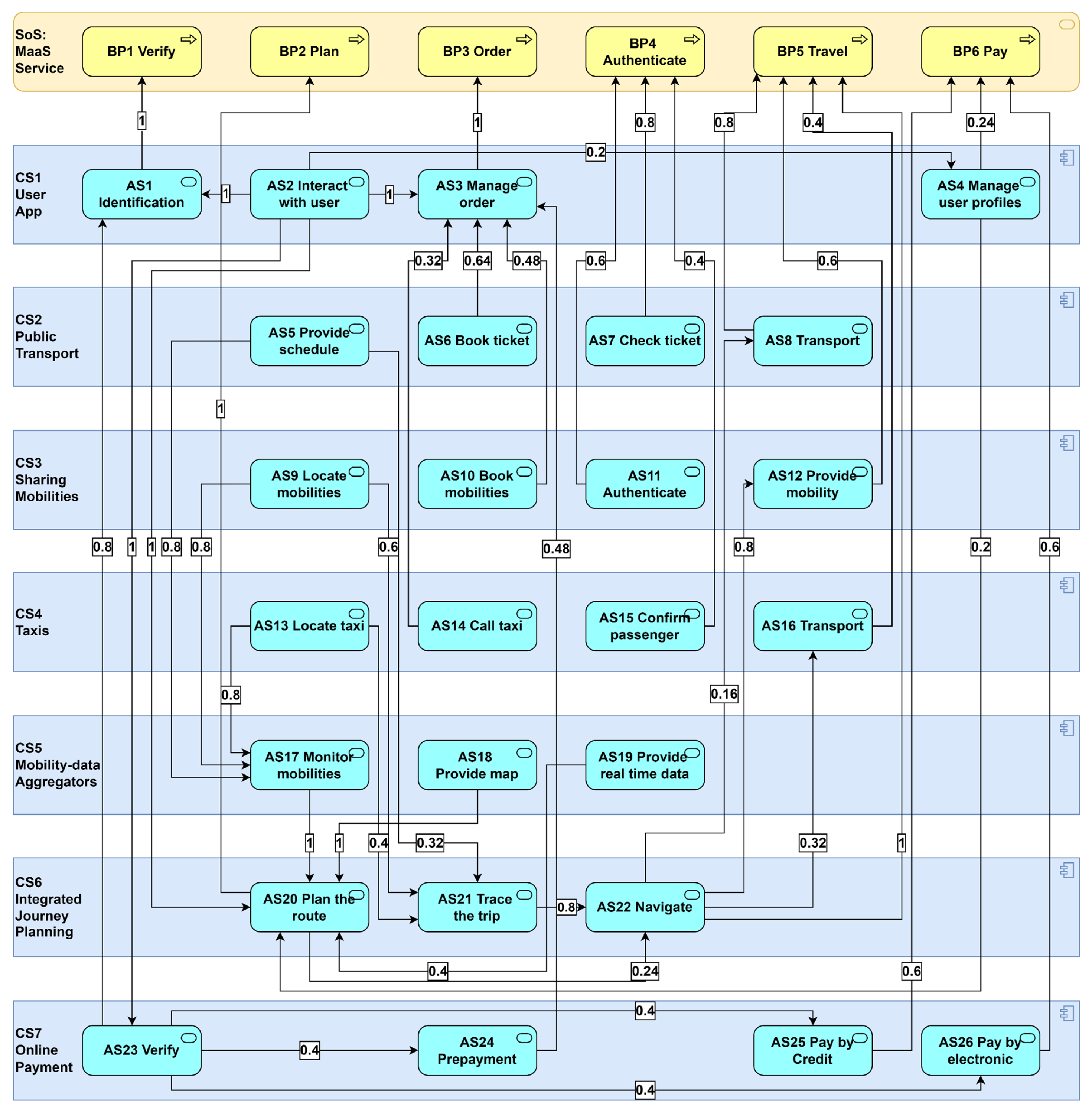

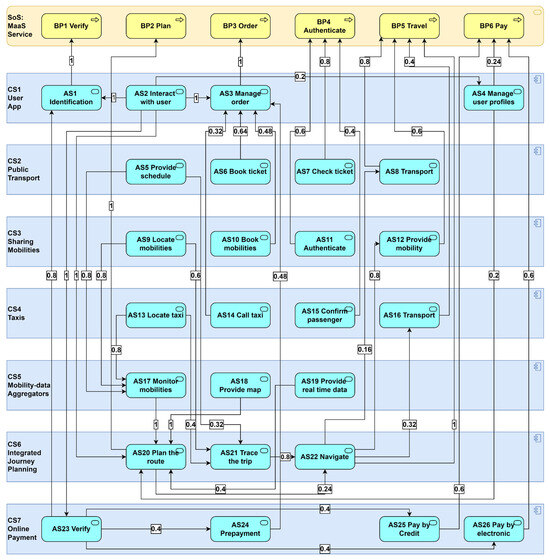

The MaaS ArchiMate model, as depicted in Figure 8, has been developed based on the approach presented in Section 3.1. In this model, users sequentially trigger business processes (BP in the figure) within MaaS. Each business process depends on the support of multiple application services (AS in the figure) provided by CSs. The value assigned to the serving relationship in Figure 8 represents its parameter , which is empirically determined based on Table 5.

Figure 8. MaaS ArchiMate model.

4.2.2. Step 2: MaaS Simulation

We have established a fundamental resilience evaluation scenario. In a given region’s MaaS, the demand is set at 20 user orders per unit of time. Each CS function provides a corresponding number of services based on this scenario, with an additional 20% redundancy to accommodate unforeseen events as per protocol. Furthermore, based on stakeholder requirements, MaaS must demonstrate sufficient resilience even when each CS function undergoes a certain level of disruption (, , and ). In this context, the model parameters serve as assumptions to illustrate the methodology; in practice, they can be determined through various methods, including risk analysis (e.g., applying Failure Modes and Effects Analysis (FMEA) to assess potential degradation of order service AS3 due to network attacks), historical data (e.g., analyzing statistical data on decreased performance of public transportation service AS8 caused by vehicle deceleration during heavy rain), and stakeholder requirements (e.g., ensuring service continuity even in the event of a complete failure of the credit card system, AS25). The parameter settings for this scenario are presented in Table 8. If a CS service fails to meet demand after experiencing disruption, reinforcing the relevant SoS structure and enhancing the corresponding CS service properties becomes necessary. The simulation of the MaaS model was conducted using Archi version 4.10.0 and jArchi 1.1.0.

Table 8. Parameters and assumptions setting for MaaS simulation.

4.2.3. Step 3: MaaS Resilience Evaluation

The foundation for resilience evaluation lies in identifying performance indicators for the four stakeholder groups.

MaaS organizers, serving as decision-makers, designers, and investors, focus on the overall service capacity of the SoS. This capacity, defined as the maximum number of users that can be served, is determined by the sum of the service capacities of all business processes (), as expressed in Equation (18).

CS providers enter into contracts with MaaS to offer their independent services and primarily focus on profitability. The utilization rate of CS services can, to some extent, reflect their turnover and expenses. Therefore, Equation (19), which represents the average utilization rate of all CS services, serves as the performance indicator for CS providers. The number ‘26’ denotes the total number of CS services in MaaS. The numerator represents the number of services being utilized, while the denominator represents the total number of services provided.

Users prioritize the punctuality of MaaS. For the stakeholder group of users, Equation (20), which represents the average punctuality rate, serves as their performance indicator. The set denotes users who complete their SoS service at time t. The numerator corresponds to the planned duration of the user’s itinerary, while the denominator represents the actual travel time.

For society, MaaS should strive to minimize congestion. The congestion rate, as defined in Equation (21), serves as the performance indicator. The denominator represents the total number of users, while the numerator denotes the number of users whose service is operating as scheduled.
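The four performance indicators described above can be sketched in Python as follows. This is a minimal reading of the verbal descriptions of Equations (18)–(21), not a transcription of the equations; the function names, input structures, and sample numbers are hypothetical, and all inputs are assumed to be non-empty with non-zero denominators.

```python
def service_capacity(bp_capacities):
    """Organizer view: sum of the service capacities of all business processes."""
    return sum(bp_capacities)


def average_utilization(used, provided):
    """CS provider view: mean of (services used / services provided) per CS service."""
    ratios = [u / p for u, p in zip(used, provided)]
    return sum(ratios) / len(ratios)


def average_punctuality(planned, actual):
    """User view: mean of (planned duration / actual travel time) per finished user."""
    ratios = [p / a for p, a in zip(planned, actual)]
    return sum(ratios) / len(ratios)


def on_schedule_rate(on_schedule_users, total_users):
    """Society view: share of users whose service is operating as scheduled."""
    return on_schedule_users / total_users


# Hypothetical snapshot at one time step.
print(service_capacity([24, 24, 24]))
print(average_utilization([20, 10], [24, 20]))
print(average_punctuality([30, 20], [30, 40]))
print(on_schedule_rate(15, 20))
```

Evaluating each function at every simulation step yields one performance time series per stakeholder group, which can then be fed into the resilience definition of Equation (6).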

Based on the performance definitions of the four stakeholder groups, as given in Equations (18)–(21), the resilience evaluation for CS service i after experiencing a disruption can be determined using Equation (6). This yields the resilience indicators , , , and . The results are presented in the first four columns of Table 9. The subcolumn labeled “Rank” indicates the ranking of CS services based on their importance from the perspective of the corresponding stakeholder group’s performance indicator.

Table 9. Resilience result and CS service importance ranking.

Then, the four stakeholder resilience indicators are used as inputs to the consensus model based on cooperative game theory, as defined in Equations (7)–(15). The consensus resilience among all stakeholders is calculated for each disruption, with the results recorded in the “Value” subcolumn of the consensus resilience column in Table 9. The importance weight of each CS service, derived using Equation (16), is recorded in its own subcolumn. Finally, the consensus evaluations of all disruptions and their corresponding weights are used as inputs to Equation (17), resulting in a MaaS resilience value of 0.9079.

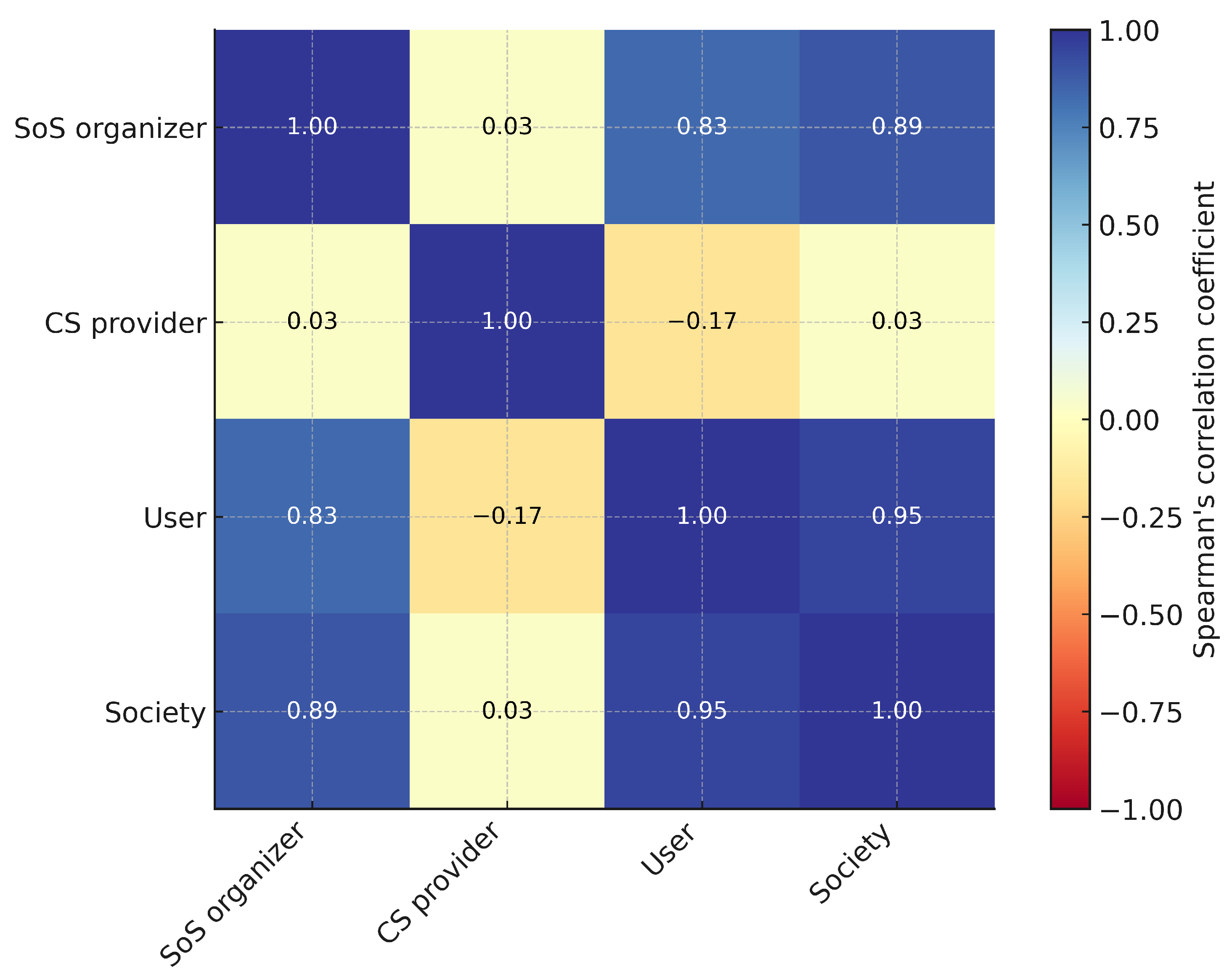

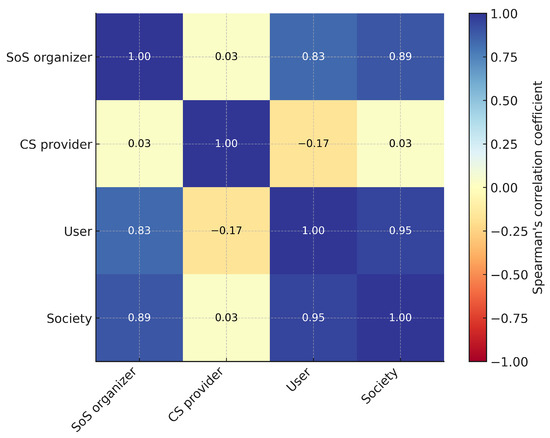

In this dataset, the resilience rankings obtained from the CS providers’ indicator differ significantly from those of the other stakeholder groups. To further illustrate the divergence in resilience assessments among stakeholder groups, this study calculates the Spearman correlation coefficient for the resilience data in Table 9, with the results presented in Figure 9. At a significance level of , the resilience evaluations by SoS organizers, users, and society exhibit a significant positive correlation with one another. However, the evaluations by CS providers do not show a significant rank correlation with those of the other stakeholders. This indicates a discrepancy between CS providers and the other stakeholder groups in terms of resilience assessment. The primary reason for this divergence lies in the performance metric used for CS providers, which is service utilization and therefore incorporates redundancy costs. In contrast, the performance metrics for the other stakeholder groups do not account for resilience costs.

Figure 9. Spearman’s correlation coefficient between resilience of MaaS evaluated by different stakeholder performance indicators.
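For readers who want to reproduce this kind of comparison, Spearman's coefficient can be computed as the Pearson correlation of ranks. The Python sketch below uses only the standard library and assigns average ranks to ties; it does not perform the significance test, and the sample vectors are hypothetical rather than taken from Table 9.

```python
from math import sqrt


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2.0 + 1.0
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical resilience values per CS service from two stakeholder views.
organizer_view = [0.90, 0.80, 0.95, 0.70]
user_view = [0.50, 0.40, 0.60, 0.30]
print(spearman(organizer_view, user_view))
```

Because the coefficient depends only on ranks, two stakeholder groups can correlate perfectly even when their resilience values sit on very different scales.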

4.2.4. Step 4: MaaS Resilience Decision

In response to the three questions posed in Section 3.4, the answers are as follows:

A1: The resilience of the MaaS model was evaluated to be 0.9079. This metric can be compared with other models to assess its relative superiority or inferiority.

A2: In Table 9, CS services are ranked in descending order of , highlighting the relative importance of each CS service. “AS2 Interact with user” is identified as the most critical CS service in the MaaS model and should be prioritized for resilience enhancement. If, after improvement, the resilience still fails to meet stakeholder requirements, further reinforcement should be carried out based on the updated rankings.

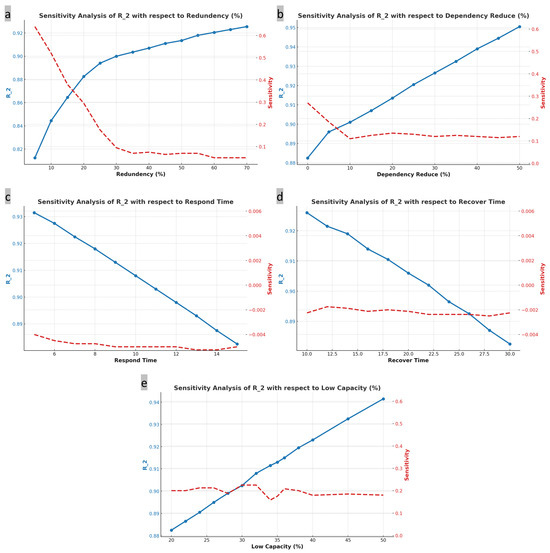

A3: To enhance the resilience of the MaaS model, the following measures based on Table 7 are applied as examples to the critical CS service “AS2 Interact with user.” The term “Original” represents the state of MaaS before any improvements were implemented.

- Original: MaaS before any improvements, with parameters set as in Table 8.

- Method 1: Redundancy was increased from 20% to 50% by scaling up servers.

- Method 2: The dependency parameter of the relationships relying on AS2 was reduced by 25% by adding local servers.

- Method 3: Response time was reduced from 15 to 9 by implementing network congestion monitoring and attack detection mechanisms.

- Method 4: Recovery time was reduced from 30 to 15 through team security skills training.

- Method 5: Low capacity was increased from 20% to 30% by adding firewalls.

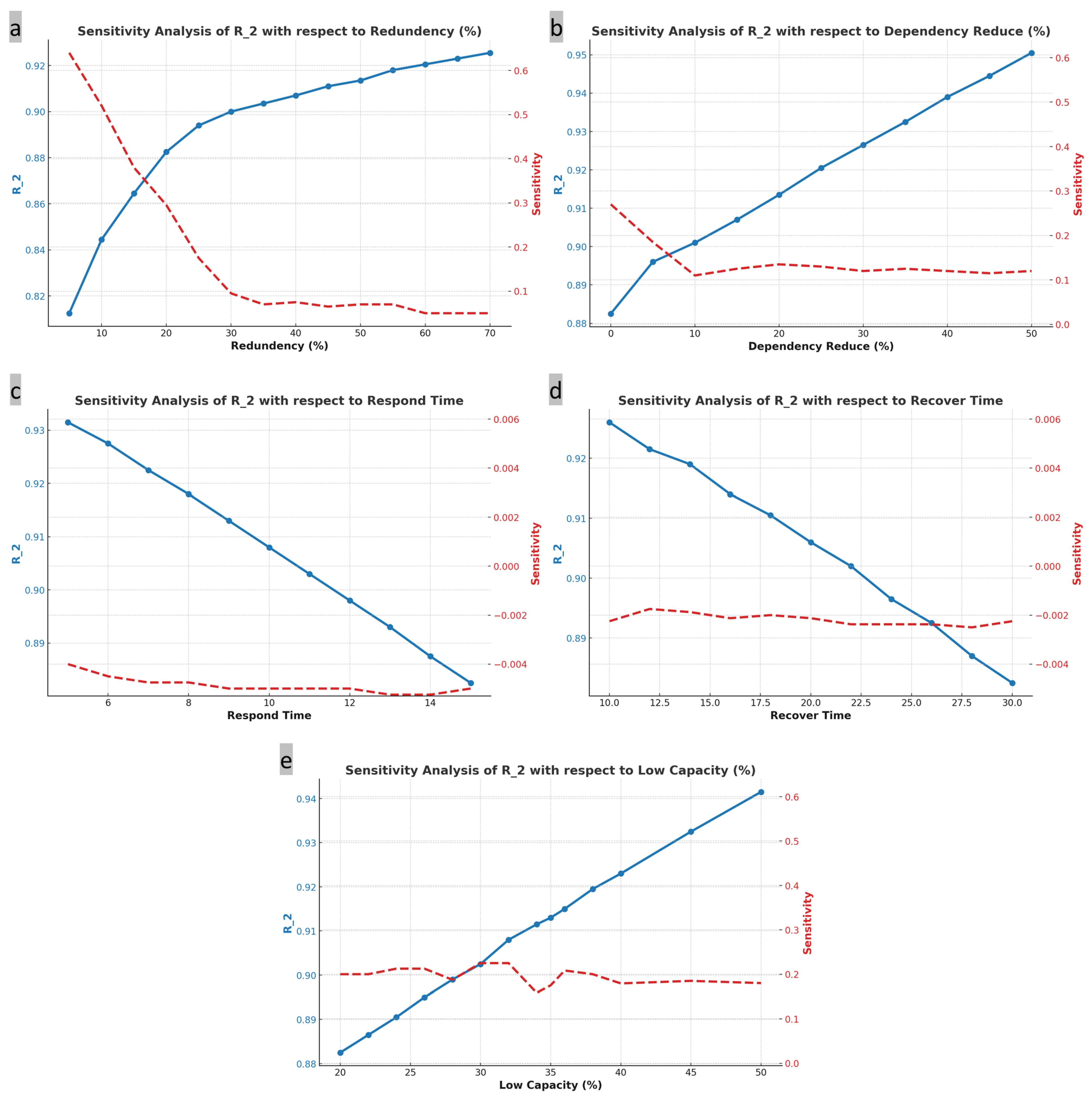

The improvement results are presented in Table 10. Methods 1 through 5 all enhanced SoS resilience and lowered the importance ranking of AS2. Furthermore, we conducted a one-at-a-time sensitivity analysis on the five parameters corresponding to the proposed measures; the results are shown in Figure 10. First, the fact that the proposed resilience indicator responds to changes in all five parameters offers preliminary evidence of its conceptual soundness. More specifically, for the redundancy parameter, introducing redundancy at earlier stages yields a more pronounced improvement in resilience. As redundancy continues to increase, the marginal benefits gradually diminish, ultimately stabilizing into a steady linear relationship between redundancy increments and resilience gains. Reducing the dependency on AS2, represented by the parameter of the relationships that rely on AS2, results in a linear improvement in resilience. When AS2 is no longer relied upon by the SoS, disruptions occurring within AS2 have minimal impact on the overall performance of the SoS. Similarly, the three parameters related to the disruption model (response time, recovery time, and low capacity) exhibit a linear relationship with the resilience of AS2 after experiencing a disruption. Specifically, reducing the response and recovery times, as well as increasing the low capacity, consistently improves resilience. This is because variations in these parameters under the disruption model are linearly associated with performance losses. It should be noted, however, that evaluating the effectiveness of resilience measures requires consideration of additional factors such as cost, feasibility, and legal constraints. Therefore, future research could incorporate these aspects, implement multi-parameter sensitivity analysis, and explore more concrete resilience-enhancing decisions.

Table 10. SoS resilience after enhancing AS2 using the five methods.

Figure 10. One-at-a-time sensitivity analysis on the five parameters corresponding to the proposed measures. (a) Sensitivity with respect to redundancy. (b) Sensitivity with respect to dependency reduction. (c) Sensitivity with respect to response time. (d) Sensitivity with respect to recovery time. (e) Sensitivity with respect to low capacity.
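A one-at-a-time sensitivity analysis of this kind can be sketched in Python as follows. The `toy_resilience` function is a hypothetical closed-form stand-in for the simulation: it assumes a capacity drop held for the response time, followed by a linear recovery, all within a fixed horizon. The parameter names and baseline values are illustrative only.

```python
def toy_resilience(low_capacity, response_time, recovery_time, horizon=60.0):
    """Stand-in model: 1 minus the normalized area lost during the disruption."""
    # full drop during response, triangular loss during linear recovery
    loss = (1.0 - low_capacity) * (response_time + recovery_time / 2.0)
    return 1.0 - loss / horizon


def one_at_a_time(model, baseline, sweeps):
    """Vary each parameter alone while holding the others at baseline values."""
    results = {}
    for name, values in sweeps.items():
        results[name] = [(v, model(**{**baseline, name: v})) for v in values]
    return results


baseline = {"low_capacity": 0.2, "response_time": 15, "recovery_time": 30}
sweeps = {
    "response_time": [15, 12, 9],
    "recovery_time": [30, 20, 15],
    "low_capacity": [0.2, 0.25, 0.3],
}

for name, curve in one_at_a_time(toy_resilience, baseline, sweeps).items():
    print(name, [(v, round(r, 4)) for v, r in curve])
```

In this stand-in, resilience is linear in each disruption parameter, which mirrors the linear trends the text reports for those three parameters. A real study would replace `toy_resilience` with a call into the simulation.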

5. Conclusions

The approach proposed in this study enables the quantitative evaluation of SoS resilience, with the evaluation outcomes serving as a basis for SoS design. Firstly, the proposed SoS model, based on ArchiMate, facilitates communication among stakeholders and enhances the comprehension of non-specialist stakeholders. Secondly, the introduced quantified ArchiMate model and simulation assist engineers in testing resilience during the design phase. Finally, the SoS performance is evaluated from a multistakeholder perspective, incorporating the viewpoints of users, SoS organizers, CS providers, and society to ensure a comprehensive assessment of resilience.

MaaS was implemented as a case study to demonstrate the proposed resilience evaluation approach. The evaluation identified key CS services within MaaS and highlighted potential improvement directions. Enhancing MaaS resilience metrics can be achieved by strengthening the robustness, redundancy, and decentralization of its critical services. Furthermore, constructing a diverse, flattened, and low-coupling MaaS architecture can help balance resilience costs among stakeholders, further contributing to resilience enhancement. However, additional research is required to further analyze and identify specific resilience measures.

Building upon this foundation, further development is needed to establish a systematic approach for SoS resilience design. Open topics include specific methodologies for deriving disruption models from risk analysis, historical data, and stakeholder requirements; dynamic modeling techniques for structural adaptation, including the introduction or decommissioning of CS services; ways of ensuring resilience during the operational phase of the SoS lifecycle, together with measures to take when inconsistencies with the original design arise; and the modeling of emergent behaviors through the inclusion of constituent system and user agents, enriched state variability, stochastic factors, and dynamic relationships. Finally, a critical need remains for further validation of the proposed method through its application to a broader range of real-world SoS instances, particularly in comparison with conventional dependability design methodologies.

Author Contributions

Conceptualization, H.Z. and Y.M.; methodology, H.Z.; software, H.Z.; investigation, H.Z. and Y.M.; resources, H.Z. and Y.M.; writing—original draft preparation, H.Z.; writing—review and editing, H.Z.; supervision, Y.M.; project administration, Y.M.; funding acquisition, Y.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JSPS KAKENHI Grant Number 22K04618.

Data Availability Statement

Dataset available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- ISO/IEC/IEEE 21841:2019; Systems and Software Engineering—Taxonomy of Systems of Systems. International Organization for Standardization: Geneva, Switzerland, 2019.

- Sage, A.P.; Cuppan, C.D. On the systems engineering and management of systems of systems and federations of systems. Inf. Knowl. Syst. Manag. 2001, 2, 325–345.

- IEC 31010:2019; Risk Management—Risk Assessment Techniques. International Electrotechnical Commission: Geneva, Switzerland, 2019.

- Lee, J.M.Y.; Wong, E.Y.C. Suez Canal blockage: An analysis of legal impact, risks and liabilities to the global supply chain. In MATEC Web of Conferences; EDP Sciences: Les Ulis, France, 2021; Volume 339, p. 01019.

- Uday, P.; Marais, K. Designing resilient systems-of-systems: A survey of metrics, methods, and challenges. Syst. Eng. 2015, 18, 491–510.

- ISO 22300:2021; Security and Resilience—Vocabulary. International Organization for Standardization: Geneva, Switzerland, 2021.

- Chatterjee, A.; Layton, A. Mimicking nature for resilient resource and infrastructure network design. Reliab. Eng. Syst. Saf. 2020, 204, 107142.

- Hosseini, S.; Barker, K.; Ramirez-Marquez, J.E. A review of definitions and measures of system resilience. Reliab. Eng. Syst. Saf. 2016, 145, 47–61.

- Hynes, W.; Trump, B.D.; Kirman, A.; Haldane, A.; Linkov, I. Systemic resilience in economics. Nat. Phys. 2022, 18, 381–384.

- Muller, G. Are stakeholders in the constituent systems SoS aware? Reflecting on the current status in multiple domains. In Proceedings of the 2016 11th System of Systems Engineering Conference (SoSE), Kongsberg, Norway, 12–16 June 2016; pp. 1–5.

- Zhang, H.; Matsubara, Y.; Takada, H. A Quantitative Approach for System of Systems’ Resilience Analyzing Based on ArchiMate. In International Conference on Computer Safety, Reliability, and Security; Springer: Berlin/Heidelberg, Germany, 2023; pp. 47–60.

- Shenhar, A.J. 2.5.1 A New Systems Engineering Taxonomy. In INCOSE International Symposium; Wiley Online Library: Hoboken, NJ, USA, 1995; Volume 5, pp. 723–732.

- Gorod, A.; Sauser, B.; Boardman, J. System-of-systems engineering management: A review of modern history and a path forward. IEEE Syst. J. 2008, 2, 484–499.

- Kotov, V. Systems of systems as communicating structures. Object-Oriented Technology and Computing Systems Re-Engineering; Horwood Publishing Limited: Chichester, UK, 1997; pp. 141–154.

- Maier, M.W. Architecting principles for systems-of-systems. Syst. Eng. J. Int. Counc. Syst. Eng. 1998, 1, 267–284.

- Hause, M. The Unified Profile for DoDAF/MODAF (UPDM) enabling systems of systems on many levels. In Proceedings of the 2010 IEEE International Systems Conference, San Diego, CA, USA, 5–8 April 2010; pp. 426–431.

- Li, L.; Dou, Y.; Ge, B.; Yang, K.; Chen, Y. Executable System-of-Systems architecting based on DoDAF meta-model. In Proceedings of the 2012 7th International Conference on System of Systems Engineering (SoSE), Genoa, Italy, 16–19 July 2012; pp. 362–367.

- Zhiwei, C.; Tingdi, Z.; Jian, J.; Yaqiu, L. System of systems architecture modeling and mission reliability analysis based on DoDAF and Petri net. In Proceedings of the 2019 Annual Reliability and Maintainability Symposium (RAMS), Orlando, FL, USA, 28–31 January 2019; pp. 1–6.

- Agarwal, P.; Estanguet, R.; Leblanc, F.; Ernadote, D. Architecting System of Systems for Resilience using MBSE. In Proceedings of the 2023 18th Annual System of Systems Engineering Conference (SoSe), Lille, France, 14–16 June 2023; pp. 1–7.

- Nadira, B.; Bouanaka, C.; Bendjaballah, M.; Djarri, A. Towards an UML-based SoS Analysis and Design Process. In Proceedings of the 2020 International Conference on Advanced Aspects of Software Engineering (ICAASE), Constantine, Algeria, 28–30 November 2020; pp. 1–8.

- Ingram, C.; Fitzgerald, J.; Holt, J.; Plat, N. Integrating an upgraded constituent system in a system of systems: A SysML case study. In INCOSE International Symposium; Wiley Online Library: Hoboken, NJ, USA, 2015; Volume 25, pp. 1193–1208.

- Cloutier, R.; Sauser, B.; Bone, M.; Taylor, A. Transitioning systems thinking to model-based systems engineering: Systemigrams to SysML models. IEEE Trans. Syst. Man, Cybern. Syst. 2014, 45, 662–674.

- Nan, C.; Sansavini, G. A quantitative method for assessing resilience of interdependent infrastructures. Reliab. Eng. Syst. Saf. 2017, 157, 35–53.

- Bondar, S.; Hsu, J.C.; Pfouga, A.; Stjepandić, J. Agile digital transformation of System-of-Systems architecture models using Zachman framework. J. Ind. Inf. Integr. 2017, 7, 33–43.

- Goldschmid, J.; Gude, V.; Corns, S. SoS Explorer Application with Fuzzy-Genetic Algorithms to Assess an Enterprise Architecture–A Healthcare Case Study. Procedia Comput. Sci. 2021, 185, 55–62.

- Falcone, A.; Garro, A.; D’Ambrogio, A.; Giglio, A. Using BPMN and HLA for SoS engineering: Lessons learned and future directions. In Proceedings of the 2018 IEEE International Systems Engineering Symposium (ISSE), Rome, Italy, 1–3 October 2018; pp. 1–8.

- Watson, B.C.; Chowdhry, A.; Weissburg, M.J.; Bras, B. A new resilience metric to compare system of systems architecture. IEEE Syst. J. 2021, 16, 2056–2067.

- Watson, B.C.; Morris, Z.B.; Weissburg, M.; Bras, B. System of system design-for-resilience heuristics derived from forestry case study variants. Reliab. Eng. Syst. Saf. 2023, 229, 108807.

- Kumar, P.; Merzouki, R. Power Consumption Modeling of a System of Systems applied to a Platoon of Autonomous Vehicles. In Proceedings of the 2023 18th Annual System of Systems Engineering Conference (SoSe), Lille, France, 14–16 June 2023; pp. 1–6.

- Zhou, Z.; Matsubara, Y.; Takada, H. Developing Reliable Digital Healthcare Service Using Semi-Quantitative Functional Resonance Analysis. Comput. Syst. Sci. Eng. 2023, 45, 35–50.

- Jamshidi, M. Systems of Systems Engineering: Principles and Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Rao, M.; Ramakrishnan, S.; Dagli, C. Modeling and simulation of net centric system of systems using systems modeling language and colored Petri-nets: A demonstration using the global earth observation system of systems. Syst. Eng. 2008, 11, 203–220.

- Abdelgawad, M.; Ray, I. Resiliency Analysis of Mission-Critical System of Systems Using Formal Methods. In Proceedings of the IFIP Annual Conference on Data and Applications Security and Privacy, San Jose, CA, USA, 15–17 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 153–170.

- Wang, H.; Fei, H.; Yu, Q.; Zhao, W.; Yan, J.; Hong, T. A motifs-based Maximum Entropy Markov Model for realtime reliability prediction in System of Systems. J. Syst. Softw. 2019, 151, 180–193.

- Sun, H.; Yang, M.; Zio, E.; Li, X.; Lin, X.; Huang, X.; Wu, Q. A simulation-based approach for resilience assessment of process system: A case of LNG terminal system. Reliab. Eng. Syst. Saf. 2024, 249, 110207.

- Zhao, B.; Wang, X.; Lin, D.; Calvin, M.M.; Morgan, J.C.; Qin, R.; Wang, C. Energy management of multiple microgrids based on a system of systems architecture. IEEE Trans. Power Syst. 2018, 33, 6410–6421.

- Andrade, S.R.; Hulse, D.E. Evaluation and Improvement of System-of-Systems Resilience in a Simulation of Wildfire Emergency Response. IEEE Syst. J. 2022, 17, 1877–1888.

- Martin, R.; Schlüter, M. Combining system dynamics and agent-based modeling to analyze social-ecological interactions—An example from modeling restoration of a shallow lake. Front. Environ. Sci. 2015, 3, 66.

- Tan, X.; Xiao, S.; Yang, Y.; Khakzad, N.; Reniers, G.; Chen, C. An agent-based resilience model of oil tank farms exposed to earthquakes. Reliab. Eng. Syst. Saf. 2024, 247, 110096.

- Kumar, P.; Merzouki, R.; Bouamama, B.O. Multilevel modeling of system of systems. IEEE Trans. Syst. Man, Cybern. Syst. 2017, 48, 1309–1320.

- Xu, R.; Liu, J.; Li, J.; Yang, K.; Zio, E. TSoSRA: A task-oriented resilience assessment framework for system-of-systems. Reliab. Eng. Syst. Saf. 2024, 248, 110186.

- Xu, R.; Ning, G.; Liu, J.; Li, M.; Li, J.; Yang, K.; Lou, Z. CSoS-STRE: A combat system-of-system space-time resilience enhancement framework. In Frontiers of Engineering Management; Springer: Berlin/Heidelberg, Germany, 2025; pp. 1–21.

- Jiang, J.; Lakhal, O.; Merzouki, R. A Resilience Implementation Framework of System-of-Systems based on Hypergraph Model. In Proceedings of the 2022 17th Annual System of Systems Engineering Conference (SOSE), Rochester, NY, USA, 7–11 June 2022; pp. 487–492.

- Farid, A.M. A Hetero-functional Graph Resilience Analysis for Convergent Systems-of-Systems. arXiv 2024, arXiv:2409.04936.

- Joannou, D.; Kalawsky, R.; Saravi, S.; Rivas Casado, M.; Fu, G.; Meng, F. A model-based engineering methodology and architecture for resilience in systems-of-systems: A case of water supply resilience to flooding. Water 2019, 11, 496.

- Zhang, H.; Matsubara, Y. Consensus Based Resilience Assurance for System of Systems. IEEE Access 2025, 13, 20203–20217.

- Patriarca, R.; Bergström, J.; Di Gravio, G.; Costantino, F. Resilience engineering: Current status of the research and future challenges. Saf. Sci. 2018, 102, 79–100.

- Zhou, Z.; Matsubara, Y.; Takada, H. Resilience analysis and design for mobility-as-a-service based on enterprise architecture modeling. Reliab. Eng. Syst. Saf. 2023, 229, 108812.

- Ed-daoui, I.; Itmi, M.; Hami, A.E.; Hmina, N.; Mazri, T. A deterministic approach for systems-of-systems resilience quantification. Int. J. Crit. Infrastructures 2018, 14, 80–99.

- Wells, E.M.; Boden, M.; Tseytlin, I.; Linkov, I. Modeling critical infrastructure resilience under compounding threats: A systematic literature review. Prog. Disaster Sci. 2022, 15, 100244.

- Wood, M.D.; Wells, E.M.; Rice, G.; Linkov, I. Quantifying and mapping resilience within large organizations. Omega 2019, 87, 117–126.

- Hosseini, S.; Al Khaled, A.; Sarder, M. A general framework for assessing system resilience using Bayesian networks: A case study of sulfuric acid manufacturer. J. Manuf. Syst. 2016, 41, 211–227. [Google Scholar] [CrossRef]

- Yodo, N.; Wang, P. Resilience modeling and quantification for engineered systems using Bayesian networks. J. Mech. Des. 2016, 138, 031404. [Google Scholar] [CrossRef]

- Sun, J.; Bathgate, K.; Zhang, Z. Bayesian network-based resilience assessment of interdependent infrastructure systems under optimal resource allocation strategies. Resilient Cities Struct. 2024, 3, 46–56. [Google Scholar] [CrossRef]

- Jiao, T.; Yuan, H.; Wang, J.; Ma, J.; Li, X.; Luo, A. System-of-Systems Resilience Analysis and Design Using Bayesian and Dynamic Bayesian Networks. Mathematics 2024, 12, 2510. [Google Scholar] [CrossRef]

- Li, J.; Wang, T.; Shang, Q. Probability-based seismic resilience assessment method for substation systems. Struct. Infrastruct. Eng. 2021, 18, 71–83. [Google Scholar] [CrossRef]

- Francis, R.; Bekera, B. A metric and frameworks for resilience analysis of engineered and infrastructure systems. Reliab. Eng. Syst. Saf. 2014, 121, 90–103.

- Dessavre, D.G.; Ramirez-Marquez, J.E.; Barker, K. Multidimensional approach to complex system resilience analysis. Reliab. Eng. Syst. Saf. 2016, 149, 34–43.

- Chen, Z.; Hong, D.; Cui, W.; Xue, W.; Wang, Y.; Zhong, J. Resilience Evaluation and Optimal Design for Weapon System of Systems with Dynamic Reconfiguration. Reliab. Eng. Syst. Saf. 2023, 237, 109409.

- Uday, P.; Chandrahasa, R.; Marais, K. System importance measures: Definitions and application to system-of-systems analysis. Reliab. Eng. Syst. Saf. 2019, 191, 106582.

- Ouyang, M.; Wang, Z. Resilience assessment of interdependent infrastructure systems: With a focus on joint restoration modeling and analysis. Reliab. Eng. Syst. Saf. 2015, 141, 74–82.

- ISO/IEC/IEEE 21839:2019; Systems and Software Engineering—System of Systems (SoS) Considerations in Life Cycle Stages of a System. International Organization for Standardization: Geneva, Switzerland, 2019.

- Josey, A.; Lankhorst, M.; Band, I.; Jonkers, H.; Quartel, D. An introduction to the ArchiMate® 3.0 specification. White Paper from The Open Group; The Open Group: San Francisco, CA, USA, 2016.

- Beauvoir, P. Archi—Scripting for Archi. Available online: https://www.archimatetool.com/blog/2018/07/02/jarchi/ (accessed on 10 April 2025).

- Chen, V.Z.; Zhong, M.; Duran, P.; Sauerwald, S. Multistakeholder Benefits: A Meta-Analysis of Different Theories. Bus. Soc. 2023, 62, 612–645.

- Chen, V.Z.; Duran, P.; Sauerwald, S.; Hitt, M.A.; van Essen, M. Multistakeholder Agency: Stakeholder Benefit Alignment and National Institutional Contexts. J. Manag. 2021, 49, 839–865.

- Pérez-Castrillo, D.; Wettstein, D. Bidding for the surplus: A non-cooperative approach to the Shapley value. J. Econ. Theory 2001, 100, 274–294.

- Winter, E. The Shapley value. In Handbook of Game Theory with Economic Applications; Elsevier: Amsterdam, The Netherlands, 2002; Volume 3, pp. 2025–2054.

- Madni, A.M.; Jackson, S. Towards a conceptual framework for resilience engineering. IEEE Syst. J. 2009, 3, 181–191.

- Lecointre, G.; Aish, A.; Améziane, N.; Chekchak, T.; Goupil, C.; Grandcolas, P.; Vincent, J.F.; Sun, J.S. Revisiting Nature’s “Unifying Patterns”: A Biological Appraisal. Biomimetics 2023, 8, 362.

- Crist, P.; Deighton-Smith, R.; Fernando, M.; McCarthy, O.; Sohu, V.; Mulley, C.; Geier, T. Developing Innovative Mobility Solutions in the Brussels-Capital Region; Technical Report; OECD: Paris, France, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).