1. Introduction

The emergence of data fundamentally transformed the business landscape [

1]. With the widespread adoption of artificial intelligence (AI) technologies across industries, data are increasingly viewed not as a cost but as a valuable asset for companies [

2]. Unlike traditional intangible assets, data vary widely in origin, possess immense capacity, and are produced at a high speed, which makes them a critical resource for developing effective business strategies [

3]. Moreover, data are highly regarded as a corporate asset because their intangible nature enables simultaneous use across departments [

4]. The utilization of data spans decision-making support, cost reduction, and public service provision, which has led to growing expectations regarding their potential value [

5]. As a result, businesses are increasingly seeking ways to leverage data to create value, gain deeper insights into their operations, and enhance both decision-making and performance [

6]. To facilitate the transfer and transaction of intangible assets—such as data, patents, and brands—valuation is actively used to determine their monetary worth for purposes including finance technology, investment, litigation, and taxation.

However, existing research presents several notable limitations. Although factors such as data quality, availability, and timeliness are recognized as critical in determining data value [

7], key problems remain unresolved. First, the specific mechanism that links data quality to economic value has not been clearly defined [

8]. Second, much of the research concentrates on structured data while often neglecting complex forms such as unstructured data [

9]. Third, data valuation studies tend to be confined to specific industries or use cases, which limits their generalizability [

10]. Fourth, the dynamic process of data creation, processing, and utilization is insufficiently addressed in these studies [

11]. Together, these shortcomings hinder companies from fully leveraging their data assets and understanding the true value they provide to stakeholders. Hence, there is a pressing need to develop a data valuation model that aligns with how companies use data and accounts for the various factors influencing data value. Understanding the determinants of data value is crucial for effective data valuation [

6]. By clarifying these determinants, organizations can optimize their data assets more strategically, which improves data-driven decision-making and correct prioritization of data management and investment decisions [

12]. In other words, identifying these factors allows companies to align data more directly with business objectives, which allows them to concentrate resources on high-value data.

This study addresses the outlined challenges by proposing a more effective approach to generating and securing value from data. The main objective is to identify the factors that determine the essential value of data and to quantify the impact of these factors. Securing these essential value factors is critical for accurate data valuation, and this study can provide a framework for recognizing and extracting these factors. By distinguishing between essential value factors and value-of-use factors, this study proposes a new approach to data valuation that can help companies manage and utilize data more effectively. Furthermore, this methodology enables a quantitative assessment of how data value factors can vary across different data types, and it provides insights into how data can generate business value in the future. The valuation model developed here lays the foundation for treating data as an asset and will support companies in creating effective strategies for managing and utilizing their data assets, which ultimately improves data-driven management practices.

This study is structured as follows:

Section 2 reviews the relevant literature on data categorization and existing approaches to data valuation, together with background research on the determinants of data value.

Section 3 presents the research framework.

Section 4 applies the proposed framework to evaluate the value of datasets used in machine learning, while

Section 5 discusses the disruptions observed during the evaluation. Finally,

Section 6 concludes the study with a summary of the findings.

2. Literature Review

2.1. Data as an Intangible Asset

2.1.1. Conceptualizing Data

Data have transformed into a critical intangible asset in modern society, and understanding their characteristics and classifications is fundamental for developing effective data management and utilization methods [

2]. Data are typically categorized into three types: structured, unstructured, and semi-structured [

13].

Structured data follow a fixed format, which allows for easy processing within database management systems (DBMSs) [

14]. Examples include financial transaction records, ERP system data, and customer information.

Unstructured data, in contrast, lack a consistent format and appear in various forms, such as text, images, and video [

14]. As the role of unstructured data grows, companies use technologies like machine learning and natural language processing to analyze and extract value from them.

Semi-structured data falls between the other two categories. They contain some structure—typically in formats such as XML and JSON—but do not follow a fixed schema [

15].

Data possess inherent characteristics that can generate new value when combined with other data sources [

16]. Additionally, metadata (which provide information about the data themselves) play a crucial role in data management and retrieval [

8]. For instance, metadata such as the creation date, revision history, and author details enhance the data utility. Data categorization is not only important for classification but also for understanding how each data type can impact corporate decision-making and strategic planning. By categorizing data, organizations can evaluate the potential value of their data assets and introduce appropriate analysis methods and management strategies [

12]. A thorough understanding of data types facilitates the discovery and utilization of the value within large datasets, such as big data. This study aims to provide a foundation for data valuation based on the classification and characteristics of these data types.

2.1.2. Data as an Intangible Market Asset

Data are increasingly recognized as a strategic asset for companies, with their value directly linked to organizational performance. The International Financial Reporting Standards (IFRS) outline three conditions for classifying something as an intangible asset: identifiability, resource control, and the potential to generate future economic benefits [

17]. When these conditions are met, data can be considered an asset. Furthermore, data are non-consumable and can be shared across various departments simultaneously, which increases their economic value [

18]. For instance, Amazon boosted sales by analyzing customer purchase and search data to build a personalized recommendation system [

9]. Similarly, Facebook leverages user data for targeted advertising, and Tencent uses multidimensional user data to provide innovative services [

19]. These examples illustrate how data can strengthen corporate competitiveness and drive market differentiation. Data also play a critical role in mergers and acquisitions (M&A) [

11]. A notable example is Microsoft’s USD 26.2 billion acquisition of LinkedIn, where LinkedIn’s high-quality user data were central to the deal [

19]. These examples highlight the potential of data assetization to enhance corporate value and offer essential information for investors and stakeholders to make decisions. Moreover, the strategic significance of data assets can be further explained by the Resource-Based View (RBV), which posits that unique and high-quality data can confer sustained competitive advantages [

20]. Additionally, value-based data governance frameworks emphasize aligning data management practices with broader organizational strategies, highlighting how the effective governance of data quality dimensions directly enhances business value [

21]. Effective Knowledge Management (KM), particularly through big data analytics, plays a critical role in transforming data assets into actionable knowledge, thereby driving innovation and strategic decision-making [

9]. This study contributes to the theoretical advancement of data valuation by integrating the Resource-Based View (RBV), which emphasizes the rarity, inimitability, and organizational embeddedness of valuable resources such as data. Furthermore, the proposed framework draws upon Knowledge Management theory to model the transformation of raw data into actionable knowledge that fuels innovation. By aligning essential and value-of-use factors with the DIKW (Data–Information–Knowledge–Wisdom) hierarchy, this study provides a structured and theory-grounded foundation for assessing data value.

2.2. Determinants of Data Value

2.2.1. Key Factors Impacting Data Value

The value of data is determined by several key factors, including their quality, availability, and timeliness [

20]. Data quality encompasses essential attributes such as accuracy, completeness, and consistency, which are critical for assessing both their reliability and accessibility. Levitin and Redman [

22] found that when data quality is low, organizations spend over 20% of their total operating costs on correcting and refining data errors. This finding highlights the direct impact of data quality on an organization’s economic performance. Furthermore, Pipino [

23] emphasized that high-quality data significantly enhance the efficiency of data-driven strategies, such as customer relationship management (CRM). Data availability refers to the ease with which data can be accessed and analyzed. It is another fundamental factor in determining data’s economic value. Miller and Mork [

24] examined cases where process automation, powered by readily available data, led to significant productivity gains in manufacturing. In addition, their research underscores the importance of data availability in optimizing corporate operations. For instance, implementing predictive maintenance with real-time data substantially reduced production-line downtime. Timeliness, or the ability to provide data promptly, is especially crucial in time-sensitive industries such as finance and logistics. Thomas and Griffin [

25] explored cases where logistics companies used real-time data to optimize delivery routes and cut fuel costs. Timeliness is particularly important in areas like supply chain management. For example, Amazon successfully reduced logistics costs and improved customer satisfaction by deploying inventory in real time through a data-driven order prediction system [

26]. These examples illustrate how the economic value of data can be maximized when both data quality and usability are effectively integrated.

Previous studies have stressed that determinants of data values such as data quality, usability, and timeliness—are essential for improving data-driven strategies and enhancing operational efficiency. However, most of these studies are confined to specific industries or types of data, and they have not fully explored the value creation mechanisms for complex data types, such as unstructured data. To address these gaps, this study introduces a comprehensive evaluation model that can identify data value determinants by industry and incorporate the value of various data types. This approach aims to increase the reliability of data valuation and enhance the effectiveness of data-driven decision-making. Recent advancements have introduced AI-driven and blockchain-based real-time data valuation methodologies, providing dynamic approaches that continuously assess data assets. AI-driven techniques leverage machine learning algorithms to adapt valuations to real-time data streams, whereas blockchain technology ensures transparent and auditable valuation processes, addressing traditional valuation challenges related to transparency and trust.

2.2.2. Data Quality Assessment in Value Evaluation

Data quality assessment is a critical element in the data valuation process and directly affects the economic value of data [

27]. ISO/IEC 25012 [

28], an international standard for data quality evaluation, identifies key factors such as accuracy, completeness, timeliness, and accessibility [

11]. These factors ensure data reliability and enhance the effectiveness of data-driven decision-making. Moreover, data quality assessment also includes technical tasks like data purification and deduplication [

29]. For example, in the financial sector, data accuracy is maintained through deduplication and precise analysis, while in healthcare, data quality management is directly linked to patient safety [

30]. This study aims to improve the reliability of the data valuation model by incorporating data quality factors.

Existing research on data quality evaluation has evolved across various industries and contexts [

16]. Yanlin and Haijun [

31] proposed a framework that defines data quality in terms of accuracy, completeness, and consistency and connects it with data availability. While these studies highlight the significant impact of data quality on data utilization and economic value, they face limitations in evaluating complex data types, such as unstructured data. Laney [

4] argued that timeliness and accessibility are essential to enhancing corporate decision-making agility, but the methodological basis linking these factors to specific economic value remains insufficient. Furthermore, Moody and Walsh [

13] proposed a model combining data quality factors and data use cases for evaluating data asset value. However, this model was industry-specific, which limits its range of applications. Nani [

32] examined the impact of data quality factors on data valuation in a big data environment by focusing on timeliness and availability. However, the study did not address how data quality evolves dynamically over time. The distinction between essential and value-of-use factors proposed in this study is theoretically supported by ISO/IEC 25012 data quality dimensions, which emphasize intrinsic aspects of data quality (e.g., accuracy and completeness) as well as contextual factors (e.g., timeliness and accessibility). Additionally, this conceptualization aligns with the Data–Information–Knowledge–Wisdom (DIKW) hierarchy, where essential value factors correspond to the foundational ‘Data’ quality, while value-of-use factors relate to the contextualized application and usefulness associated with ‘Information’ and ‘Knowledge’.

In summary, while existing research acknowledges the importance of data quality in data valuation, several limitations remain. First, the mechanisms connecting the data quality factors to an economic value have not been clearly defined. Second, there is a lack of systems for evaluating complex data types, such as unstructured data. Third, the generalizability of data valuation models is constrained by their industry-specific focus. Finally, the dynamic nature of data quality, which may change over time or according to specific application contexts, has not yet been sufficiently addressed. To overcome these limitations, this study proposes a comprehensive model that integrates data quality evaluation with data value assessment. The model will define a clear path that links data quality factors to economic value, incorporate an evaluation system for both structured and unstructured data, and enhance the model’s versatility by analyzing industry-specific data use cases. This approach seeks to establish a new framework that captures the dynamic relationship between data quality and economic value.

3. Framework

3.1. Overall Process

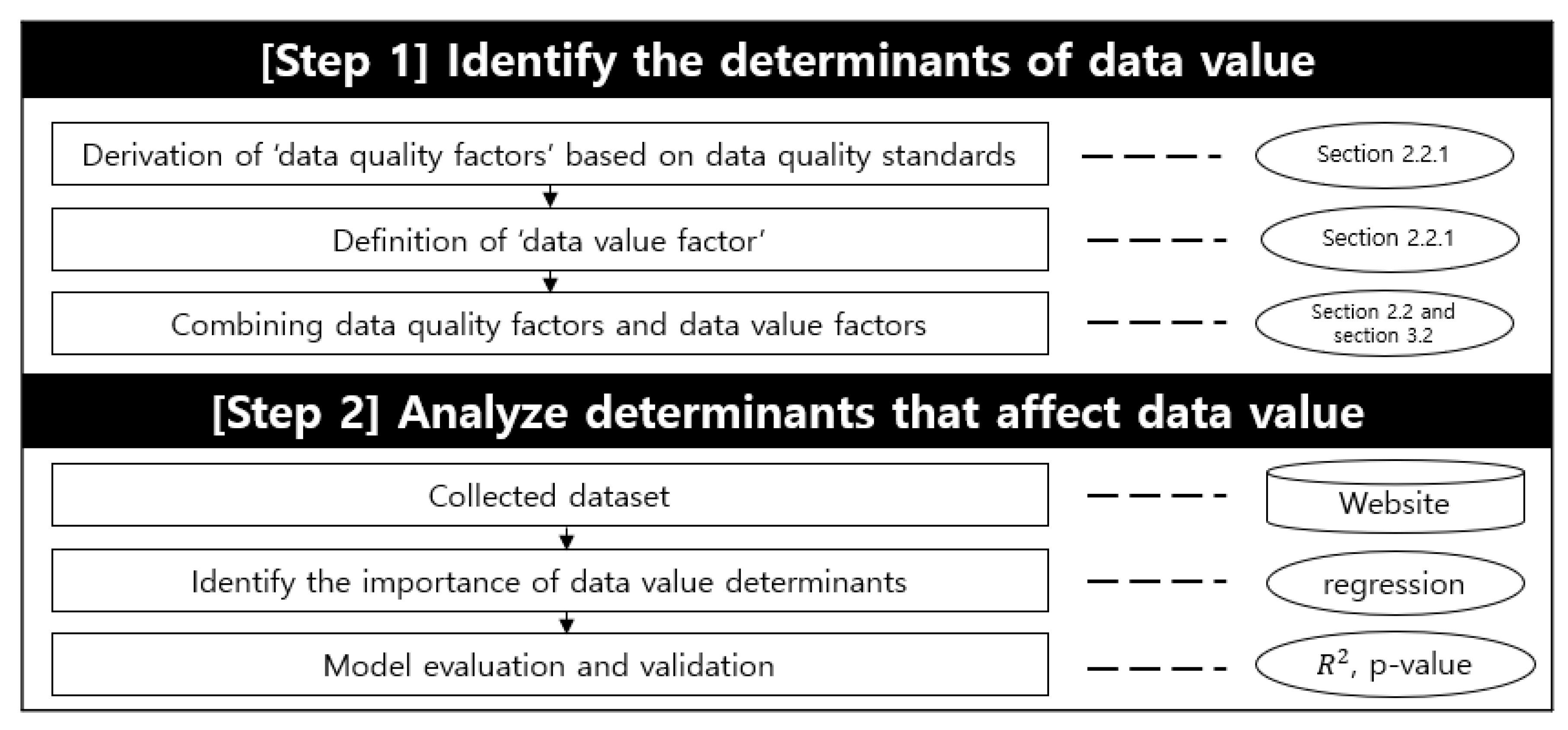

This study is grounded in the data value factor index, which allows for the evaluation of data importance based on specific objectives. The aim was to develop a framework that identifies and assigns weights to key value factors that affect the data value. Through a regression analysis, we seek to determine the relative significance of each data value determinant and identify those with the highest impact. The process outlined in this study is illustrated in

Figure 1. The research method is performed in the order of arrows for each step in the figure, and the dotted line is about the DB or methodology used or performed based on the contents of the previous literature survey. This study addresses the following research questions:

RQ1: What determinants significantly impact the value of data assets?

RQ2: How do essential and value-of-use factors differ in their effects on data value?

The corresponding hypotheses include the following:

H 1. Data accuracy and completeness positively influence data value.

H 2. Data uniqueness and timeliness significantly impact data value.

3.2. Step 1: Identify the Determinants of Data Value

The first step in evaluating data is to define the factors that affect their intrinsic value. The items selected for this study were reorganized based on a review of prior research conducted in

Section 2.2 (data value determinants and value calculation methods).

Table 1 below summarizes each factor and provides an explanation.

Completeness refers to the extent to which data were fully collected. Fewer missing values allows for broader use in analysis and thus improves their reliability. This factor reflects the intrinsic quality of the data.

Risk concerns the legal and financial exposure associated with data, including potential misuse and the need to comply with data protection regulations.

Accuracy measures how closely the data reflects the true value. This parameter can be determined by calculating the error rate (higher error rates indicate lower accuracy).

Exclusivity represents how differentiated the data are compared to other datasets that may serve the same purpose. It identifies data with unique qualities that can provide a competitive advantage.

Timeliness assesses the relevance of the data based on the time they are used. The value of data is maximized when they are both collected and utilized at the appropriate time. The data currency is also a critical factor.

Consistency measures the extent to which data can be reliably maintained over time. This number is vital for trend analysis and accurate predictions.

Usage Restrictions refer to legal or policy constraints that limit how and for whom data can be used. These restrictions affect the data’s potential scope and value. In this study, a value of 0 or 1 is used to represent whether such restrictions exist.

Accessibility measures how easily data can be integrated and accessed across various systems and applications. High compatibility with other systems increases the data’s usability and, consequently, its value.

Utility evaluates the practical applications of data and evaluates their potential across various industries and sectors. This parameter quantifies the number and range of industries that rely on the data for products, services, or solutions.

Table 1 provides a concise summary distinguishing essential and value-of-use factors, explicitly linking each factor to relevant ISO 25012 data quality dimensions, clarifying their theoretical grounding and practical relevance. The above items can be categorized into essential value factors and utilization value factors for comparison. The weight of each item can be calculated in Step 2 (

Figure 2). The essential value factors refer to elements related to the intrinsic quality of the data, which are fundamental to their characteristics. These factors serve as critical criteria to evaluate the inherent quality of the data, which affects both their reliability and accuracy. The essential value factors include completeness, accuracy, exclusivity, and consistency. Each of these factors is inherently tied to the data quality and plays a crucial role in determining its reliability. The utilization value factor, in contrast, assesses how useful the data is in practical applications, i.e., it reflects the data’s availability and utility. In other words, this factor evaluates how effective data can be applied in real-world business contexts, considering their applicability, economic value, and associated risks. Essentially, the utilization value factors evaluate how data are used and the extent of value it can generate. These factors include risk, timeliness, usage restrictions, accessibility, and utility. By measuring these factors, we can understand how data are utilized in various business scenarios. Both the essential value factors and the utilization value factors play crucial roles in assessing the overall value of data, which enables companies to manage and leverage data strategically for maximum benefit. Expert-based mapping of dataset attributes to determinants inherently involves subjective judgment, which may affect replicability. To enhance the replicability, future studies could adopt standardized evaluation guidelines, inter-rater reliability assessments, or automated attribute–determinant mapping techniques.

3.3. Step 2: Analysis of Determinants That Affect Data Value

In Step 2, a comprehensive analysis is conducted to assess the essential value and usability of data. This is achieved by quantitatively analyzing specific factors that impact the determinants of data value (

Figure 3). The process begins by structuring the collected data set based on the data value determinants (e.g., accuracy, completeness, and exclusivity) defined in Step 1. Detailed elements within the dataset are then identified. These elements include the quantity of data, data type (structured vs. unstructured), degree of labeling bias, number of similar datasets, field and scope of data usage, frequency of data use, and any ethical restrictions. Each of these elements is linked to the corresponding data value determinant. Next, data preprocessing is performed to convert each detailed element into a quantitative variable. The datasets were selected based on their extensive usage and representation of different data types (image and text). Data preprocessing means substitution for missing values, standardization, and normalization to ensure comparability. The dependent variable (number of papers) was selected to quantify practical data utilization, while independent variables were chosen based on the literature-driven importance of data value determinants.

For example, missing values are addressed through mean or median substitution, and standardization is applied to remove scale differences between variables. Subsequently, a multiple regression analysis is used to establish a functional relationship between the data value determinants and the detailed elements. This analysis statistically estimates the effect of independent variables (detailed factors) on dependent variables (e.g., the number of data use cases) while also calculating the weight of each determinant. In this context, the coefficient of each variable indicates both the direction and magnitude of its impact on the data value. To address the multicollinearity between variables, the Variance Inflation Factor (VIF) is calculated. Unnecessary variables are then optimized using stepwise regression. The regression analysis results are evaluated for model fit using R2 and adjusted R2 values. The significance of each variable is determined based on p-values, with values of 0.05 or lower regarded as statistically significant. Variables that do not meet this threshold are either excluded from the model or supplemented with additional data. The significant variables identified through this process reveal the key factors that contribute to data value formation. It is important to note potential methodological limitations, including endogeneity and omitted variable bias, inherent in regression-based analyses. To address these concerns, we performed careful variable selection based on theoretical and empirical literature, conducted robustness checks, and evaluated Variance Inflation Factors (VIF) to minimize potential bias. The analysis results are visualized through tables or graphs to provide a clear understanding of the relative importance and interactions between the determinants of data value. Finally, the primary mechanism underlying the data value formation process is derived from the regression analysis. It confirms the interaction between the determinants and highlights the significance of individual factors.

4. Application

This section presents a partial application of the proposed data valuation model to specific datasets and offers insights into its practical use. While the number of papers referencing datasets serves as a practical indicator of data value for this study, it may not comprehensively represent data’s economic or commercial value. Future studies could consider incorporating more direct business metrics, such as monetary returns or market impacts, to provide a more robust measure of data value. The objective is to identify potential challenges in applying the model and to briefly assess its utility. In this study, data were extracted from the Papers with Code platform, which serves as a repository for machine learning research papers, together with their associated code and datasets. The platform provides a valuable resource for improving research reproducibility by enabling researchers to share code and data more effectively. Although the datasets available on the platform are free and cannot be directly priced as assets, they are well suited for identifying detailed factors that impact data value, which is the topic of this study.

The proposed model functions in two main steps: identifying the determinants of data value and analyzing them to extract the key factors that influence it. Applying this model allows organizations to strengthen the strategic value of their data assets.

4.1. Case Study 1: Image Data

4.1.1. Step 1: Identifying the Determinants of Data Value

For this case study, a dataset was collected from the platform Papers with Code. The dataset includes information about data types, tasks, papers, and code repositories, benchmarking the data. Usage statistics and other essential details are also accessible (

Figure 4). The highlighted parts of the figure are the elements that can be used on the platform. This case study explores how the relative weight of each determinant may vary across different data types. Image data were selected as the first dataset for analysis, comprising a total of 2672 data points.

Next, detailed elements within the dataset were identified and linked to predefined data value determinants (

Table 2). This matching process was performed qualitatively by connecting dataset elements to relevant determinants of data value. The mapping process may differ based on the specific dataset and typically requires expert qualitative review and interpretation.

4.1.2. Step 2: Analyzing Determinants That Affect Data Value

In this step, the weight ratios of each determinant of data value were evaluated using a regression analysis.

Table 3 shows the detailed elements that were extracted from the image data. For the regression analysis, the variable papers—representing the number of datasets used in academic papers—were set as dependent variables. This decision was based on the significance of user engagement with the data. The remaining variables, including Similar Datasets, Benchmarks, Licenses, Dataset Loaders, Task, Percentage Correct, Usage, Trend, and Description, were designated as independent variables to assess their respective weights and impacts.

A regression analysis was performed to determine the weight ratio of each sub element, and the results are presented in

Figure 5. The analysis reveals the impact of each variable on the number of papers in the image data. Among the variables, Percentage Correct had the highest positive (+) effect, while similar datasets exhibited the most prominent negative (−) effect. The variables Description and Usage showed minimal influence and were found to have little effect. Statistical significance was evaluated using

p-values. All variables, except Description and Usage, were statistically significant and had a meaningful impact on the data value. However, the Description and Usage variables were not significant, which suggests that they may not be suitable determinants for measuring the data value in this context. It is important to note that the results may vary for other datasets. A summary of the findings, including the weight and statistical significance of each variable, is provided in

Table 4. The robustness of the regression model was evaluated using the adjusted R

2, Variance Inflation Factor (VIF), and model selection criteria such as Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC). For the image data model, the adjusted R

2 was 0.784, indicating that approximately 78.4% of the variance in the data value determinants was explained. The AIC and BIC values were 1210.35 and 1240.48, respectively, supporting the model’s overall fit and parsimony.

The regression analysis revealed the following key findings:

Positive Effects: The variables Percentage Correct, Task, and Trend positively influenced the number of papers. Among these, Percentage Correct had the highest impact.

Negative Effects: The variables Similar Datasets, Benchmarks, License, and Dataset Loaders negatively affected the number of papers, with Similar Datasets showing the strongest negative impact.

Minimal or No Effects: The Usage and Description variables had no significant impact on the number of papers.

In conclusion, the findings suggest that when analyzing datasets, particular emphasis should be placed on the variables Percentage Correct, Task, and Trend because these are the most influential factors that determine the data value.

4.2. Case Study 2: Text Data

To further evaluate the applicability of the model, text data were analyzed within the same dataset. Text data were collected from the “Papers with Code” website, which resulted in a total of 2708 data points.

4.2.1. Step 1: Identifying the Determinants of Data Value

In this step, the elements extractable from the dataset were mapped to the previously defined data value determinants. The process and results are analogous to those described in

Section 4.1.1.

4.2.2. Step 2: Analyze Determinants That Affect Data Value

A regression analysis was conducted to determine the weight ratio of each data value determinant, following the same methodology as outlined in

Section 4.1. Detailed values for the elements extracted from the text data were identified and categorized as dependent and independent variables to enable the regression analysis. The weight significance of each variable is summarized in

Table 5.

A regression analysis was performed to confirm the weight ratio between each sub element, and the results are presented in

Figure 6. These values illustrate the impact of each variable on the number of papers in the text data. Among these, the Percentage Correct variable exhibited the most positive (+) effect, while Similar Datasets showed the most pronounced negative (−) effect. The analysis also revealed that the influence of the Description and Usage variables was minimal, with very little impact on the data value. To assess the statistical significance of the analysis, the

p-values were calculated. All variables, except for Description and Usage, were found to be statistically significant and demonstrated a meaningful impact on the data value. Conversely, the “description” and “use” variables did not exhibit a significant effect on the collected data and produced statistically insignificant results. This finding suggests that these variables may not be appropriate for evaluating the determinants of data value. It is important to note that the results may vary depending on the data set collected and used in the analysis. The findings are summarized in

Table 6. The robustness of the regression model was evaluated using adjusted R², Variance Inflation Factor (VIF), and model selection criteria such as Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC). For the text data model, the adjusted R

2 was 0.815, explaining 81.5% of the variance in the dependent variable. The AIC and BIC values were 1102.47 and 1131.62, respectively, demonstrating strong model performance.

The regression analysis highlights that the variables Percentage Correct, Task, and Trend positively affect the number of papers, with Percentage Correct showing the highest impact. Conversely, Similar Datasets, Benchmarks, Licenses, and Dataset Loaders negatively affect the number of papers, with Similar Datasets producing the strongest negative effect. The Description and Usage variables did not significantly impact the number of papers. In conclusion, the findings suggest that when datasets are used, particular emphasis should be placed on the variables Percentage Correct, Task, and Trend to maximize their value.

5. Discussion

This study introduced a systematic and quantitative framework for data valuation to offer practical insights into effective data management and utilization. By analyzing the impact of various data value determinants, the framework provides a foundation for companies to effectively manage and optimize their data assets. The findings demonstrate that essential and utilization value factors exert distinct effects on data value formation, which aids the advancement of research in data valuation.

Key essential value factors, such as Percentage Correct, serve as critical indicators of data quality and reliability. They play a pivotal role in determining data value. The study also reveals that Percentage Correct has the strongest positive effect on the economic value of data. These findings highlight the importance of maintaining high data quality as part of a company’s data utilization strategy. For instance, during the training of machine learning models, high-quality data enhances the model performance significantly while mitigating the risks of erroneous outputs. Consequently, establishing and maintaining a robust data quality management system becomes an essential strategy for maximizing the data value.

Utilization value factors, such as Task, evaluate the applicability and versatility of data, which makes them central to transforming data into valuable business assets. The study shows that datasets with broader applicability across multiple tasks have higher value. These outcomes underscore the importance of converting data from mere storage assets to actively utilized resources. Companies can maximize the value of their data assets by exploring diverse applications and expanding the scope of data usage.

Certain factors, such as Similar Datasets and Benchmarks, negatively impact the data value. Here are more details:

Similar Datasets: Excessive similarity among datasets diminishes their uniqueness, which decreases the competitive edge of these data assets. Companies should prioritize strategies to enhance dataset originality, such as developing proprietary datasets or securing exclusive usage rights. For instance, exclusive access to unique datasets can enable companies to offer differentiated services and gain a competitive advantage in the market.

Benchmarks: Benchmarks assess data consistency, showing that a lack of standardization in data management systems undermines usability and reliability. To counter this, companies must implement standardized processes and ensure consistent data management to maintain reliability and usability.

This study holds significance as it provides a practical approach for utilizing data value evaluation results effectively.

Data Management and Investment Strategies: The study enables data management and investment strategies based on the weight of data value determinants. For example, resources can be prioritized for high-quality data, while targeted processes can be introduced to address factors with a negative impact on data value.

Prioritizing Data Development and Utilization: By analyzing the relative importance of data value determinants, companies can set clear priorities for data development and utilization. For example, the diversification of data applications can be realized by strengthening positive factors, such as Task, to maximize their contribution to the overall value.

Fair and Transparent Data Transactions: This study recommends using data valuation results to establish objective criteria for data transactions, including purchases and sales. This supports fairness and transparency in the data market and helps define the economic value of data assets with greater precision.

These findings provide critical insights for strengthening data-driven decision-making and improving data asset management. Companies can improve the efficiency of data use by analyzing the interaction between data value determinants and predicting the effects of data management policies on business performance. In addition, data valuation helps optimize resource allocation in data development and operations.

These findings are consistent with recent research highlighting the importance of dynamic, real-time data valuation for decision-making and innovation. However, our study advances this perspective by introducing a clearer distinction between essential and value-of-use factors and quantifying their respective impacts using a structured regression framework. In contrast to AI-driven valuation approaches, which often operate as black boxes, our methodology emphasizes transparency and interpretability in factor-based valuation. This study supports the development of strategies that balance short-term benefits with long-term value creation through the effective use of data. It demonstrates the practical applicability of data valuation and offers specific recommendations for improving data asset management and utilization strategies. Future research should assess the generalizability of this framework across various data types and industry contexts. Moreover, expanding the model to support real-time data valuation in dynamic environments would further enhance its practical value. Future studies should explore the adaptation of this valuation methodology to real-time data systems and dynamic environments, such as streaming data applications or IoT platforms, by integrating continuous valuation mechanisms and advanced predictive analytics.

6. Conclusions

This study proposes a systematic and quantitative framework for data valuation, offering a novel approach to assess the economic value of data during analysis and use. It distinguishes between essential value factors and utilization value factors, providing a quantitative evaluation of their impact—a significant advancement over prior research. The key findings indicate that factors such as Percentage Correct, Task, and Trend significantly increase the data value, underscoring the importance of accuracy, applicability, and timeliness. In contrast, Similar Datasets and Benchmarks reduce the data value by diminishing uniqueness and consistency. These results suggest actionable strategies for managing data assets by reinforcing high-impact factors and addressing those that weaken the value. This study establishes a strategic foundation for maximizing the economic value of data through quality improvement and more effective use.

The proposed framework provides companies with a practical tool to evaluate and manage data assets effectively. For instance, companies can establish priorities for data utilization based on the weighted significance of value determinants or devise strategies for data development and acquisition. Moreover, the framework’s data valuation results can serve as objective criteria in transactions, which improves transparency and fairness within the data market.

However, despite its valuable insights, this study has certain limitations. First, the dataset used was confined to specific types of data, which may limit the generalizability of the findings. Second, subjective judgments inherent in the evaluation of data value determinants may affect the reliability of the results. To address these limitations, future research should explore the applicability of this framework across a broader range of datasets and industries. In addition, the development of methodologies that leverage machine learning and artificial intelligence for automated, real-time data valuation in dynamic environments remains an important research goal. A potential limitation of this study is the use of academic datasets, which may restrict immediate applicability in broader industrial contexts such as healthcare, finance, and manufacturing. Future research should validate and adapt this framework to diverse industry settings to enhance its generalizability and practical utility. This study’s key methodological innovations include clearly distinguishing essential and value-of-use factors, applying a quantitative regression-based analysis, and introducing an objective valuation framework adaptable across data types and industries. Compared to the recent literature on data valuation, which often leverages machine learning for predictive insights, our framework provides a more interpretable and theory-grounded approach. By aligning data quality determinants with international standards like ISO 25012 and theoretical structures such as the DIKW hierarchy, our study offers a novel and structured foundation for transparent data value assessment.

By addressing key gaps in the existing research, this study introduces a robust framework for managing data assets more effectively. Its findings offer practical guidance for companies aiming to strengthen data-driven decision-making and build a competitive advantage. Looking forward, expanding the scope and applicability of data valuation methods will equip businesses to thrive in an increasingly data-driven economy and reinforce their position in a data-centric society.