1. Introduction

Cybersecurity decision-making is an essential aspect of digital resilience, as individuals and organizations continuously assess risks and implement protective measures against evolving cyber threats. Given the complexity of this domain, cognitive heuristics (mental shortcuts used for rapid decision-making) play a significant role in shaping security-related behaviors [1]. While heuristics can enhance efficiency in uncertain environments, they also introduce cognitive biases that may lead to security vulnerabilities [2,3]. Existing research has extensively examined the influence of specific heuristics such as the availability heuristic [4], the representativeness heuristic [5], and anchoring effects [6] on cybersecurity decision-making. However, the structured nature of heuristic-driven behaviors in cybersecurity remains an underexplored area, limiting the ability to develop targeted interventions for improving security practices.

Previous studies have largely focused on identifying isolated heuristics that affect security decisions. For instance, studies on password management have highlighted satisficing heuristics, where individuals opt for “good enough” security solutions rather than optimal measures [7,8]. Similarly, research on phishing susceptibility has shown that cognitive biases, such as trust heuristics and authority bias, play a crucial role in determining an individual’s vulnerability to social engineering attacks [9,10]. While these findings offer valuable insights, they do not fully capture how these heuristics interact to form underlying cognitive structures influencing cybersecurity behaviors.

This study addresses this research gap by employing a quantitative approach to identify latent cognitive dimensions underlying cybersecurity decision-making. Factor analysis has been used in previous research to reveal structured patterns in information security behavior [11], risk perception [12], and heuristic-driven decision-making [13]. Building on these methodologies, this study applies Exploratory Factor Analysis (EFA) followed by Confirmatory Factor Analysis (CFA) to determine whether cybersecurity decision-making can be characterized by two primary latent factors: (1) risk perception, encompassing concerns about financial, reputational, and personal protection, and (2) compliance and security, capturing adherence to security policies and operational risk mitigation.

Beyond identifying these cognitive structures, this research explores their predictive power in determining susceptibility to cyber deception. Logistic regression modeling assesses the extent to which these latent factors influence the likelihood of individuals falling victim to cyberattacks. Additionally, clustering analyses classify individuals into distinct cybersecurity decision-making profiles, revealing how heuristic reliance varies across demographic groups and security awareness levels. Previous studies have demonstrated that regulatory compliance [14] and financial investment [15] are strong predictors of cybersecurity behaviors. However, this study further investigates whether these factors moderate the impact of heuristics on decision-making, contributing to a deeper understanding of the security paradox, wherein individuals acknowledge risks but fail to act accordingly [16,17].

This research contributes to the cybersecurity literature by bridging the gap between heuristic-based decision-making theories and empirical validation of latent cognitive structures. Unlike previous studies that treat heuristics as isolated biases, this study proposes that cybersecurity heuristics function within an interrelated cognitive framework, which can be leveraged for both predictive risk assessment and targeted security training.

Based on the identified gaps, this study is guided by the following research questions:

RQ1: Can cybersecurity behaviors be organized into underlying latent cognitive dimensions (heuristic factors)?

RQ2: How do these cognitive dimensions influence individuals’ susceptibility to cyber deception (e.g., phishing and social engineering attacks)?

RQ3: What distinct decision-making profiles emerge among users based on their reliance on security heuristics and biases?

This study contributes to the cybersecurity literature in several novel ways. First, we empirically identify latent cognitive dimensions (risk perception and security compliance) that structure a wide range of security behaviors—an integrative perspective not developed in prior work. Second, we combine behavioral data with multi-stage analysis (Exploratory Factor Analysis, clustering, and association rule mining) to uncover complex patterns (e.g., the ‘security paradox’ where high risk awareness does not always translate into action). Third, we define distinct user profiles of cybersecurity decision-making based on heuristic reliance, which, to our knowledge, have not been characterized in previous studies. Together, these insights bridge cognitive psychology and cybersecurity practice, offering actionable guidance for tailored security awareness interventions.

The remainder of this paper is structured as follows:

Section 2 reviews the existing literature on heuristic-driven decision-making in cybersecurity.

Section 3 details the methodology, including survey instrument design, data collection, and statistical analyses.

Section 4 presents the findings from factor analysis, regression modeling, and clustering techniques.

Section 5 discusses the implications of the results for cybersecurity awareness and training programs.

Finally, Section 6 outlines limitations and future research directions.

3. Materials and Methods

This study aims to empirically validate the role of latent cognitive factors in shaping cybersecurity decision-making and susceptibility to social engineering attacks. Each analytical choice was guided by both theoretical frameworks and empirical considerations, ensuring that the findings could contribute meaningfully to the existing literature on cybersecurity cognition.

To address the research questions, we employ a multi-stage analytical approach combining factor analysis, predictive modeling, clustering, and association rule mining. This methodological framework ensures that findings contribute not only to theoretical models of heuristic-driven security behaviors but also to practical applications in cybersecurity awareness and risk mitigation strategies.

The research employed a quantitative, survey-based methodology to capture cybersecurity behaviors, awareness levels, and heuristic-driven decision-making patterns. A structured online questionnaire was developed to assess individual responses to cybersecurity threats, phishing susceptibility, and security habits. The survey instrument was designed based on established frameworks in cybersecurity research, drawing from studies on heuristic processing in digital security contexts [16,17,32,33,47,48,49]. The questionnaire included scenario-based assessments, multiple-choice questions, and Likert-scale items to measure respondents’ risk perceptions, decision-making biases, and familiarity with security protocols.

3.1. Survey Instrument

The use of structured questionnaires is a validated approach in cybersecurity research [50]. These questionnaires often include items that measure awareness of specific cybersecurity practices, such as the use of strong passwords and two-factor authentication. The survey was designed to capture key cognitive shortcuts that may impact cybersecurity behaviors (Appendix A). Variable encoding and measurement units are presented in Table A1 (Appendix A).

The questionnaire consisted of 24 items grouped into thematic sections. The first section (Demographics: Questions 20–24 in Appendix A) collected demographic and background information, including age, education level, and place of residence. The second section (Cybersecurity Awareness: Question 1 in Appendix A) assessed cybersecurity awareness and concern using a five-point Likert scale. This aligns with previous cybersecurity research methodologies that measure security perception and self-reported awareness, in which the five-point Likert scale is a standard instrument [50,51,52,53]. The third section (Security Decision Factors: Questions 2–3 in Appendix A) examined factors influencing cybersecurity decisions, allowing respondents to select multiple motivations for adopting security measures. Prior studies have shown that individuals prioritize cybersecurity based on perceived risk exposure and external regulatory pressure [54,55].

The fourth section (Phishing Recognition: Questions 4–9 in Appendix A) focused on phishing recognition and susceptibility. It included items designed to evaluate participants’ ability to detect homoglyph attacks, a common social engineering tactic used in phishing schemes [56]. These questions were adapted from prior experimental studies on phishing awareness and user susceptibility to deceptive URLs [38,57]. The fifth section (Social Engineering Experience: Questions 10–14 in Appendix A) investigated social engineering vulnerabilities by analyzing past experiences with manipulation tactics such as trust exploitation and urgency-based persuasion [58,59]. This approach is aligned with studies that explore affect-based decision-making in security contexts [10,60]. Finally, the sixth section (Security Behaviors: Questions 15–19 in Appendix A) examined security behaviors, such as password management practices, malware response strategies, and website security evaluation.

To ensure content validity, the survey items were designed based on well-established psychological theories, including dual-process models of decision-making and security-specific heuristics research [13]. The phishing-related questions were derived from methodologies used in cybersecurity training evaluations, while password management questions were adapted from studies on user authentication habits [61].

Given the exploratory nature of this study, face validity was ensured by aligning survey items with previous empirical research, rather than conducting a formal validation study. However, prior cybersecurity behavior studies provided a strong theoretical foundation for measuring heuristic-driven security decisions, ensuring the relevance and appropriateness of the survey items. The survey was administered online, with responses collected anonymously. Participants were instructed to answer based on their real-world experiences and perceptions of cybersecurity threats. No personally identifiable information was collected, ensuring compliance with ethical research standards.

Content validity was further supported by expert review: the questionnaire was assessed by domain experts, and a pilot study with a small subset of participants (N = 15) was conducted. This process helped refine item wording, check for comprehension, and assess initial internal consistency. Reliability testing was performed on the final dataset using Cronbach’s alpha to confirm the internal consistency of the measured constructs, ensuring that the instrument captured heuristic-driven cybersecurity decision-making in a robust manner.
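As an illustration of the reliability check, Cronbach’s alpha can be computed directly from item-level responses. The sketch below uses only the Python standard library and is not the code used in the study (the analyses were run in R); the function name `cronbach_alpha` and the toy responses are assumptions introduced here.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of columns: one list of respondent scores per item,
    all of the same length (hypothetical input layout).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("every item needs the same number of respondents")
    # Total score per respondent across all items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance(totals)
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


if __name__ == "__main__":
    # Toy data: three items answered by five respondents (illustrative only).
    toy_items = [[1, 2, 3, 4, 5], [2, 2, 3, 5, 5], [1, 3, 3, 4, 4]]
    print(round(cronbach_alpha(toy_items), 3))
```

Alpha approaches 1 when items move together and falls toward 0 when they are unrelated, which is why it is read as a measure of internal consistency.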

Given the need to uncover latent cognitive structures influencing cybersecurity behaviors, this study integrates multiple quantitative techniques:

EFA was chosen as an initial step to identify underlying dimensions within cybersecurity decision-making, as it is particularly suited for detecting unobserved cognitive patterns.

CFA was then employed to validate these dimensions and assess the robustness of the factor structure.

To understand how these latent constructs predict cybersecurity behaviors, logistic regression was used.

Clustering techniques were applied to segment individuals based on their decision-making tendencies.

Additionally, mediation analysis was performed to assess whether risk perception plays an intermediary role in cybersecurity behaviors.

Association rule mining was used to uncover behavioral patterns in cybersecurity practices.

This multi-method approach ensures a comprehensive evaluation of how heuristics shape security decisions.

Based on prior research on heuristic-driven decision-making, the study formulates the following hypotheses:

H1. Cybersecurity behaviors can be grouped into latent cognitive dimensions, particularly along risk perception and compliance-driven security tendencies.

H2. Higher levels of risk perception are associated with a lower likelihood of falling victim to cyber deception.

H3. Compliance-driven security behaviors moderate the impact of risk perception on cybersecurity decision-making.

H4. Heuristic-driven cybersecurity decision-making follows structured behavioral profiles that can be identified through clustering analysis.

These hypotheses provide a structured basis for the statistical analyses conducted in this study, ensuring that findings contribute to both theoretical and practical advancements in cybersecurity awareness and intervention strategies.

We employed Exploratory Factor Analysis (EFA) not as an end in itself, but to reveal underlying structures in participants’ security behaviors. This approach allows us to move beyond isolated observations by uncovering latent cognitive factors that group related behaviors together. Identifying such factors is important—it enables a more structured understanding of decision-making heuristics in cybersecurity, which can enhance theoretical models and targeted interventions.

3.2. Data Analysis Strategy

An initial step involved data preprocessing and examination of inter-item relationships to determine the underlying structure of cybersecurity decision-making factors. EFA was chosen to identify latent constructs within the dataset, as this technique allows for the discovery of unobserved dimensions that influence security-related behaviors. The extraction method used was maximum likelihood estimation, which provides robust parameter estimates under non-normal data conditions. To account for potential correlations between factors, an oblimin rotation was applied, allowing for non-orthogonal factor structures that better reflect cognitive decision-making processes in cybersecurity.

The suitability of the data for factor analysis was assessed using the Kaiser–Meyer–Olkin (KMO) measure, which evaluates the sampling adequacy, and Bartlett’s test of sphericity, which determines whether sufficient correlations exist among items for factor extraction. Factor retention was determined based on eigenvalues greater than one, the proportion of variance explained, and parallel analysis, ensuring that extracted factors represented meaningful constructs rather than statistical artifacts. Items with factor loadings below 0.4 were excluded from the final factor structure to maintain interpretability and construct validity.
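For reference, Bartlett’s test of sphericity compares the observed correlation matrix with an identity matrix through its determinant. The following minimal Python sketch computes the test statistic and its degrees of freedom; it is an illustration under stated assumptions, not the study’s R code, and the function names and the small example matrix are hypothetical.

```python
import math


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square statistic, degrees of freedom).

    chi2 = -(n - 1 - (2p + 5) / 6) * ln|R|, with df = p(p - 1) / 2,
    where p is the number of items and n the number of respondents.
    """
    p = len(corr)
    det = determinant(corr)
    if det <= 0:
        raise ValueError("correlation matrix must be positive definite")
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi2, p * (p - 1) // 2


if __name__ == "__main__":
    # Hypothetical 3-item correlation matrix and sample size.
    example = [[1.0, 0.5, 0.3], [0.5, 1.0, 0.4], [0.3, 0.4, 1.0]]
    print(bartlett_sphericity(example, n_obs=150))
```

A large statistic relative to the chi-square distribution with the returned degrees of freedom indicates that the items are sufficiently intercorrelated for factor extraction.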

To confirm the factor structure derived from the EFA, CFA was performed using the robust weighted least squares estimator. CFA was selected to validate the latent constructs identified in the exploratory phase, ensuring measurement reliability and construct validity. Model fit was evaluated using multiple indices, including the chi-square test, root mean square error of approximation (RMSEA), comparative fit index (CFI), and Tucker–Lewis index (TLI). The inclusion of these indices allowed for a comprehensive assessment of model adequacy, balancing statistical power and goodness-of-fit criteria.
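Of the fit indices listed, RMSEA has a simple closed form in terms of the model chi-square, its degrees of freedom, and the sample size. The sketch below shows the standard (unscaled) formula in Python; it is an assumption-laden illustration, since robust weighted least squares estimators typically report scaled variants and some software uses N rather than N − 1 in the denominator. The sample size in the example is hypothetical.

```python
import math


def rmsea(chi_square, df, n_obs):
    """Point estimate of RMSEA: sqrt(max(chi2 - df, 0) / (df * (N - 1)))."""
    if df <= 0 or n_obs <= 1:
        raise ValueError("df must be positive and n_obs greater than 1")
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n_obs - 1)))


if __name__ == "__main__":
    # chi_square and df are illustrative; n_obs = 200 is a hypothetical sample size.
    print(round(rmsea(chi_square=6.733, df=4, n_obs=200), 3))
```

The `max(..., 0)` term means that a model whose chi-square does not exceed its degrees of freedom receives an RMSEA of zero.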

Following the validation of latent decision-making factors, logistic regression analysis was employed to examine the predictive power of these factors on prior exposure to cyber deception. Logistic regression was selected due to its robustness in modeling binary outcome variables, allowing for the estimation of odds ratios that quantify the likelihood of phishing susceptibility based on cognitive heuristics. The regression model included the two extracted factors as independent variables, while the dependent variable indicated whether respondents had previously fallen victim to cyber deception.

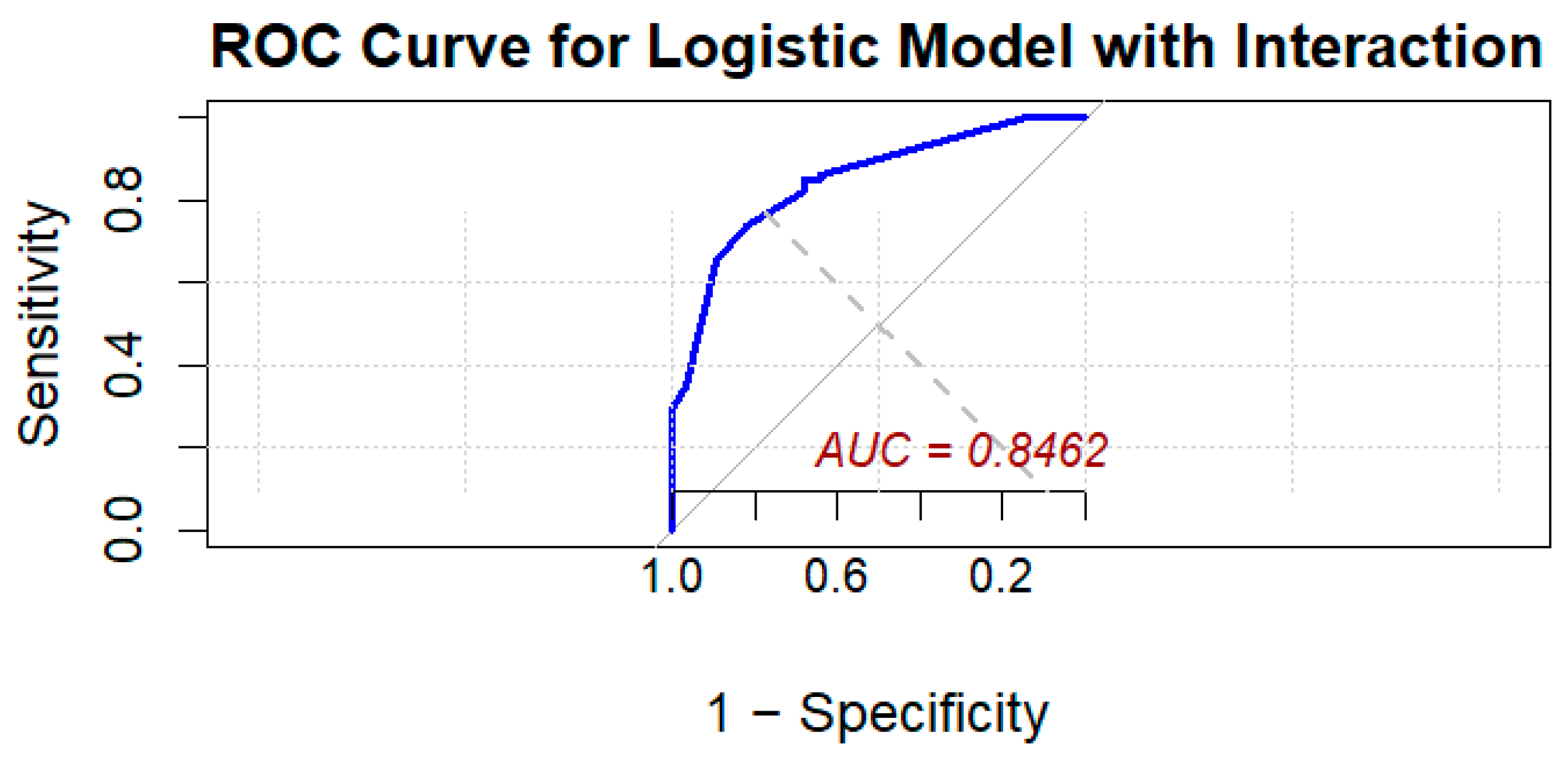

A subsequent model incorporated interaction terms to assess whether the effect of one factor on cybersecurity vulnerability depended on the level of another factor, capturing potential moderating effects in heuristic-driven security decisions. Model performance was evaluated using receiver operating characteristic (ROC) analysis, with the area under the curve (AUC) serving as the primary metric for assessing predictive accuracy. The ROC curve was generated to evaluate the discriminative power of the logistic regression model in distinguishing between users who had previously been deceived (VictimOfCyberDeception = 1) and those who had not (VictimOfCyberDeception = 0). The ROC analysis was supplemented with an optimal threshold (opt_prag), determined using the Youden index, to maximize sensitivity and specificity in risk classification.
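The ROC-based threshold selection can be sketched in a few lines. The Python example below computes the AUC as the probability that a randomly chosen deceived respondent receives a higher predicted score than a non-deceived one, and picks the cutoff that maximizes the Youden index (sensitivity + specificity − 1). It is a standard-library illustration rather than the pROC workflow used in the study; the function names and toy scores are assumptions.

```python
def auc(labels, scores):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def roc_points(labels, scores):
    """(threshold, sensitivity, specificity) for each distinct score,
    classifying a case as positive when score >= threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    for threshold in sorted(set(scores)):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
        tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
        points.append((threshold, tp / positives, tn / negatives))
    return points


def youden_threshold(labels, scores):
    """Threshold maximizing J = sensitivity + specificity - 1 (lowest on ties)."""
    return max(roc_points(labels, scores), key=lambda pt: pt[1] + pt[2] - 1)[0]


if __name__ == "__main__":
    # Toy labels (1 = previously deceived) and predicted probabilities.
    y = [0, 0, 1, 1, 0, 1]
    p = [0.10, 0.40, 0.35, 0.80, 0.20, 0.70]
    print(auc(y, p), youden_threshold(y, p))
```

The pairwise AUC definition is quadratic in sample size, which is acceptable for survey-scale data and keeps the link to the rank interpretation explicit.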

A Risk Score was computed using the predicted probabilities from the logistic regression model with interaction terms between Factor 1 (risk perception) and Factor 2 (compliance and security). The Risk Score represents the likelihood that an individual falls into the “VictimOfCyberDeception” category (previously deceived). Higher scores indicate an increased probability of heuristic-driven decision-making leading to susceptibility to phishing and social engineering tactics.
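To make the construction of the Risk Score concrete, the sketch below evaluates a logistic model with an interaction term and maps the resulting probability to a low, medium, or high band. The coefficient values and the band cutoffs are hypothetical placeholders chosen for illustration; they are not the estimates or thresholds reported in this study.

```python
import math


def risk_score(factor1, factor2, intercept, b1, b2, b12):
    """Predicted probability from logit(p) = b0 + b1*F1 + b2*F2 + b12*F1*F2."""
    linear = intercept + b1 * factor1 + b2 * factor2 + b12 * factor1 * factor2
    # Numerically stable logistic function for large |linear|.
    if linear >= 0:
        return 1.0 / (1.0 + math.exp(-linear))
    e = math.exp(linear)
    return e / (1.0 + e)


def risk_band(score, low_cut=1 / 3, high_cut=2 / 3):
    """Map a probability to a band; the cutoffs are illustrative assumptions."""
    if score < low_cut:
        return "low"
    if score < high_cut:
        return "medium"
    return "high"


# Hypothetical coefficients, for demonstration only.
EXAMPLE_COEFS = {"intercept": 0.5, "b1": -1.0, "b2": 0.2, "b12": 0.8}

if __name__ == "__main__":
    for f1, f2 in [(-1.0, 0.0), (0.0, 0.0), (1.5, -0.5)]:
        score = risk_score(f1, f2, **EXAMPLE_COEFS)
        print(f1, f2, round(score, 3), risk_band(score))
```

With an interaction term, the change in log-odds per unit of Factor 1 equals b1 + b12 × Factor 2, so the effect of risk perception depends on the respondent’s compliance score.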

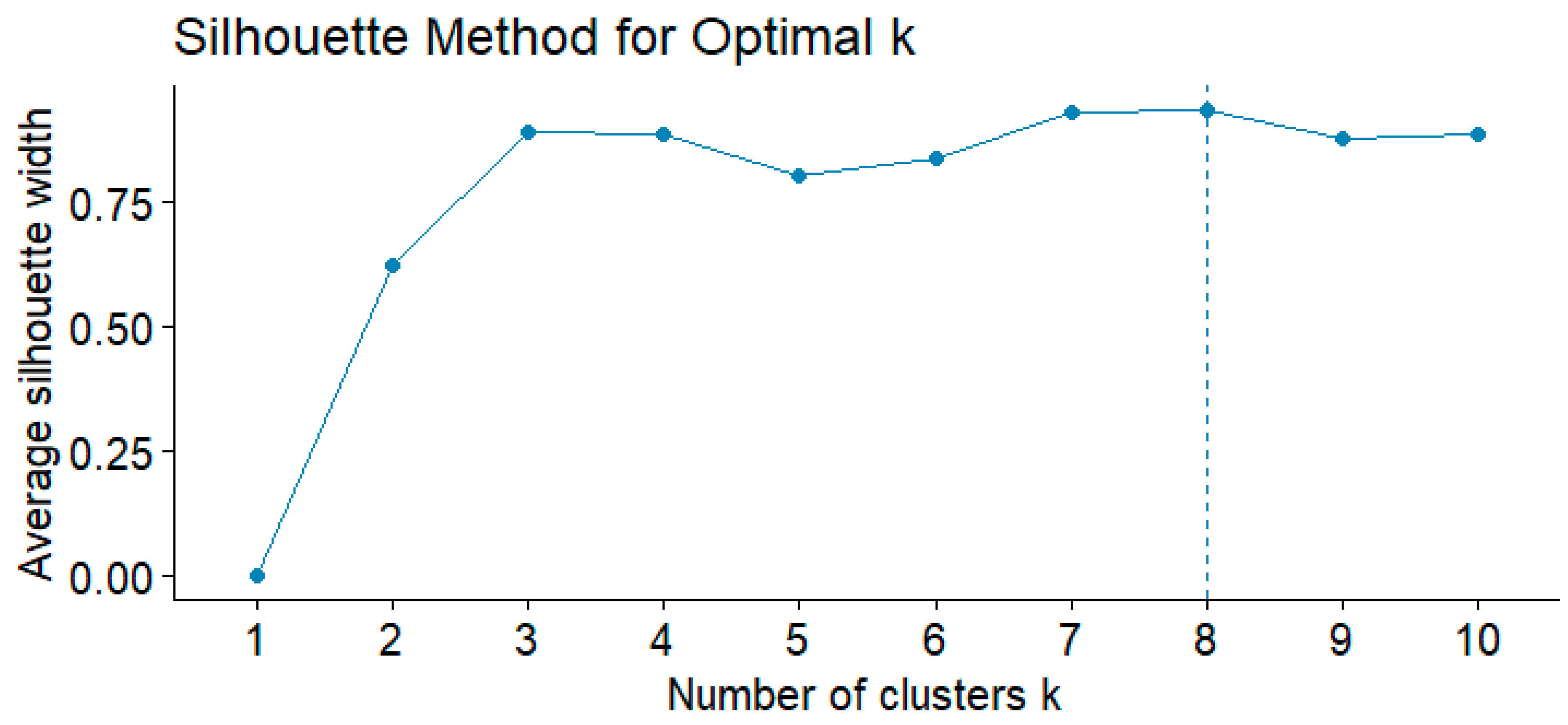

To refine the understanding of cybersecurity decision-making, a clustering analysis was performed to identify distinct security behavior profiles. K-means clustering was chosen due to its effectiveness in segmenting individuals into homogeneous groups based on security-related cognitive traits. The optimal number of clusters was determined using the Silhouette method, which balances within-cluster cohesion and between-cluster separation; on this basis, respondents’ factor scores were clustered into eight groups using k-means. Specifically, we performed clustering on the two primary factor scores derived from the EFA/CFA (the risk perception factor and the compliance and security factor). Each participant is represented as a point in this two-dimensional factor space. Clustering in this space groups together individuals with similar profiles in terms of these underlying cognitive dimensions. Using participants’ factor scores as clustering features ensures that the resulting groups reflect meaningfully different cognitive-behavioral profiles, rather than arbitrary divisions. The resulting clusters were analyzed to examine how security attitudes and behaviors varied among different groups, with special attention to differences in prior cyber deception experiences. The Kruskal–Wallis test was used to evaluate statistical differences in cybersecurity risk perception across clusters, and Dunn’s post hoc test with Bonferroni correction was applied to identify significant pairwise differences.
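The selection of the number of clusters by the Silhouette method can be illustrated with a small, self-contained example. The Python sketch below implements a basic k-means on two-dimensional points (standing in for the two factor scores) and chooses the k with the highest mean silhouette width. It is not the study’s R implementation; the function names, the random initialization, and the toy points are assumptions.

```python
import math
import random


def kmeans(points, k, seed=0, iterations=100):
    """Basic Lloyd's algorithm; returns one cluster label per point."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep the center of an empty cluster
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def mean_silhouette(points, labels):
    """Average silhouette width; singleton clusters contribute 0."""
    clusters = set(labels)
    if len(clusters) < 2:
        return 0.0
    total = 0.0
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            continue
        a = sum(own) / len(own)  # mean distance within the point's own cluster
        b = min(  # mean distance to the nearest other cluster
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in clusters if other != labels[i]
        )
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(points)


def best_k(points, candidates):
    """Candidate k with the highest mean silhouette (first one on ties)."""
    return max(candidates, key=lambda k: mean_silhouette(points, kmeans(points, k)))


if __name__ == "__main__":
    # Toy factor-score pairs forming two obvious groups (illustrative only).
    scores = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(best_k(scores, [2, 3, 4]))
```

In practice k-means is usually run with several random starts, because a single initialization can converge to a poor local solution.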

To investigate the role of cognitive heuristics in cybersecurity behavior, a mediation analysis was conducted to assess whether risk perception mediated the relationship between security behaviors (such as URL verification) and phishing susceptibility. Mediation analysis was chosen to quantify the indirect effects of cybersecurity awareness on susceptibility outcomes, allowing for a decomposition of direct and mediated pathways. The analysis employed bootstrapped mediation models to estimate the average causal mediation effect (ACME) and average direct effect (ADE), controlling for demographic covariates such as education level [62]. Additionally, a multinomial logistic regression model was used to explore whether higher education levels moderated heuristic-driven security decisions, assessing whether individuals with more formal cybersecurity knowledge were less likely to rely on cognitive shortcuts.

Finally, association rule mining with the Apriori algorithm was applied to extract behavioral patterns in cybersecurity decision-making. This approach was selected to identify recurring security behavior patterns and assess how specific actions (such as password creation habits or phishing detection strategies) correlated with cyber deception experiences. The dataset was transformed into a transaction-based format, and association rules were generated based on minimum support and confidence thresholds. Rules were ranked using lift values to determine the strongest predictive relationships between cybersecurity practices and phishing susceptibility.
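The three rule metrics mentioned above have simple definitions that a short example makes explicit. The Python sketch below evaluates support, confidence, and lift for a single candidate rule over a transaction-style dataset. It does not implement the Apriori candidate search itself, and the item names and transactions are hypothetical.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence, and lift of the rule antecedent -> consequent.

    `transactions` is a list of sets of items, one set per respondent.
    """
    antecedent, consequent = frozenset(antecedent), frozenset(consequent)
    n = len(transactions)
    count_a = sum(1 for t in transactions if antecedent <= t)
    count_c = sum(1 for t in transactions if consequent <= t)
    count_both = sum(1 for t in transactions if (antecedent | consequent) <= t)
    if count_a == 0 or count_c == 0:
        raise ValueError("antecedent and consequent must each occur at least once")
    support = count_both / n            # P(A and C)
    confidence = count_both / count_a   # P(C | A)
    lift = confidence / (count_c / n)   # P(C | A) / P(C)
    return support, confidence, lift


if __name__ == "__main__":
    # Hypothetical respondents encoded as sets of observed behaviors.
    data = [
        {"checks_url", "strong_password"},
        {"checks_url", "strong_password", "never_deceived"},
        {"weak_password", "deceived"},
        {"checks_url", "never_deceived"},
        {"weak_password", "deceived"},
    ]
    print(rule_metrics(data, {"weak_password"}, {"deceived"}))
```

A lift above 1 means the consequent occurs more often among transactions containing the antecedent than in the dataset overall, which is why lift is used for ranking.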

All statistical analyses were conducted using R. The psych package was used for factor analysis, lavaan for CFA, pROC for ROC analysis, cluster for k-means clustering, mediation for mediation modeling, and arules for association rule mining. Data visualization was performed using ggplot2, and model diagnostics were examined to ensure robustness. The methodological framework adopted in this study ensured the rigorous examination of cybersecurity decision-making by integrating psychometric validation, predictive modeling, and behavioral segmentation. By combining factor analysis, regression modeling, clustering techniques, and association rule mining, the study provided a multidimensional perspective on the cognitive heuristics underlying cybersecurity behavior.

3.3. Ethical Considerations

This study adhered to standard ethical guidelines for survey-based research, ensuring participant confidentiality and data protection. Given that the study involved an anonymous online questionnaire with no collection of personally identifiable information, formal approval from an institutional ethics committee was not required. Participants were informed about the study’s purpose and their voluntary participation, with the option to withdraw at any time. Data security measures were implemented, ensuring that responses were stored securely and accessed only by the research team.

4. Results

This section presents the findings of the study, focusing on the heuristic-driven decision-making tendencies of participants in cybersecurity contexts. The results are structured around descriptive statistics (Table A2, Table A3, Table A4, Table A5, Table A6 and Table A7, Appendix B), EFA, and key heuristic patterns influencing cybersecurity behavior.

4.1. Correlation Between Cybersecurity Decision Factors

Before performing inferential analyses, we examined the relationships among the cybersecurity decision factors using a tetrachoric correlation matrix. The results indicate moderate-to-strong correlations between several factors, particularly between regulatory compliance and data protection (r = 0.72), as well as between compliance and financial loss prevention (r = 0.48). In contrast, reputational protection shows a negligible association with operational continuity (r = −0.025), suggesting that these motivations are largely independent. The full correlation matrix is provided in Table A8 (Appendix B).

4.2. Logistic Regression: Predicting Susceptibility to Social Engineering

To determine whether cybersecurity motivations predict heuristic cyber behaviors, we performed logistic regression using susceptibility to social engineering as the dependent variable (binary: 1 = susceptible, 0 = not susceptible). The results reveal a significant negative association between regulatory compliance and susceptibility (β = −2.074, p = 0.002). This result aligns with expectations: individuals who rigorously follow security policies tend to avoid falling victim. While unsurprising, this empirical confirmation underscores the protective value of compliance. More interestingly, we observed that many users with high risk awareness still fell victim (a manifestation of the ‘security paradox’), indicating that knowledge alone is insufficient without corresponding secure action. The multi-method analysis brings this paradox into focus: it is the prioritization of compliance, rather than awareness alone, that is associated with a lower likelihood of falling victim to social engineering attacks. Data protection approaches significance (p = 0.097), suggesting a potential protective effect. Other factors, including reputational protection, financial loss prevention, and operational continuity, do not show statistically significant relationships. Full regression coefficients are provided in Table A9 (Appendix B).
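A logistic regression coefficient is easier to interpret once converted to an odds ratio, exp(β). The short Python example below performs this conversion for the compliance coefficient reported above; the function names are introduced here for illustration, and the interpretation assumes the other predictors are held constant.

```python
import math


def odds_ratio(beta):
    """Multiplicative change in the odds of the outcome per unit increase."""
    return math.exp(beta)


def percent_change_in_odds(beta):
    """Percentage change in the odds implied by a logit coefficient."""
    return (odds_ratio(beta) - 1.0) * 100.0


if __name__ == "__main__":
    beta_compliance = -2.074  # coefficient reported for regulatory compliance
    print(round(odds_ratio(beta_compliance), 3))            # about 0.126
    print(round(percent_change_in_odds(beta_compliance), 1))
```

An odds ratio of roughly 0.126 corresponds to about 87% lower odds of susceptibility for respondents who selected regulatory compliance as a motivation, compared with those who did not.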

4.3. Association Between Cybersecurity Motivations and Cybersecurity Behaviors

To evaluate whether cybersecurity decision factors influence behaviors such as URL verification and password creation, we conducted chi-square tests. The results indicate strong associations between data protection concerns and both behaviors (p < 0.001), suggesting that individuals who prioritize data protection are more likely to adopt secure practices.
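For a binary motivation crossed with a binary behavior, the chi-square test of independence reduces to a 2 × 2 table. The sketch below computes the Pearson statistic and its p-value for one degree of freedom using only the Python standard library. No continuity correction is applied (R’s `chisq.test` applies Yates’ correction to 2 × 2 tables by default), and the counts shown are hypothetical.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    Returns (statistic, p_value) with 1 degree of freedom and no
    continuity correction.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # For df = 1 the chi-square survival function equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


if __name__ == "__main__":
    # Hypothetical counts: rows = prioritizes data protection (yes / no),
    # columns = verifies URLs (yes / no).
    print(chi_square_2x2([[40, 10], [15, 35]]))
```

The closed-form p-value holds only for one degree of freedom; larger contingency tables require the general chi-square distribution.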

4.4. Impact on Perceived Cybersecurity Importance

Using Kruskal–Wallis tests, we assessed whether cybersecurity motivations influence the perceived importance of cybersecurity. The findings indicate that reputational protection (p = 0.0007) and operational continuity (p = 0.0057) significantly affect how much cybersecurity is valued. In contrast, compliance, financial loss prevention, and data protection do not significantly alter perceptions of cybersecurity importance. These results highlight that motivations linked to external consequences (such as reputation and business continuity) may drive cybersecurity prioritization more than compliance or financial concerns.
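The Kruskal–Wallis statistic is computed from ranks rather than raw values, which suits ordinal Likert ratings. The Python sketch below calculates the H statistic with the standard tie correction and returns its degrees of freedom. It is an illustrative implementation under stated assumptions, and the example ratings are hypothetical.

```python
from collections import Counter


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H with tie correction.

    `groups` is a list of lists of observations. Returns (H, df),
    where df = number of groups - 1.
    """
    counts = Counter(v for g in groups for v in g)
    n = sum(counts.values())
    # Assign each distinct value the average of the ranks it occupies.
    rank_of, next_rank = {}, 1
    for value in sorted(counts):
        t = counts[value]
        rank_of[value] = next_rank + (t - 1) / 2
        next_rank += t
    rank_term = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    tie_correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if tie_correction == 0:
        raise ValueError("all observations are identical")
    return h / tie_correction, len(groups) - 1


if __name__ == "__main__":
    # Hypothetical importance ratings (1-5) for respondents who did and did
    # not select a given motivation.
    selected = [5, 5, 4, 5, 4, 5]
    not_selected = [3, 4, 3, 2, 4, 3]
    print(kruskal_wallis_h([selected, not_selected]))
```

H is compared against a chi-square distribution with the returned degrees of freedom; the tie correction matters here because five-point ratings produce many tied ranks.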

4.5. Justification for Exploratory Factor Analysis (EFA)

These findings suggest that cybersecurity decision-making is not entirely independent but follows underlying latent structures, which EFA will help uncover. By identifying these factors, we aim to refine models of cybersecurity behavior and enhance targeted interventions for improving cybersecurity practices.

A factor analysis was conducted to assess the latent structure underlying cybersecurity decision-making factors. The Kaiser–Meyer–Olkin (KMO) test for sampling adequacy yielded a value of 0.72, suggesting the appropriateness of factor analysis. Bartlett’s test of sphericity was significant (χ² = 146.73, p < 0.001), confirming the presence of intercorrelations among variables.

The EFA using a maximum likelihood extraction method with oblimin rotation resulted in a two-factor solution explaining 61% of the total variance. Factor 1 (Table 1) predominantly loaded onto Reputation Protection (0.998) and Financial Protection (0.464), whereas Factor 2 encompassed Regulatory Compliance (0.912), Operational Continuity (0.510), and Data Protection (0.792). The communalities ranged from 0.25 to 0.99, with Reputation Protection (0.995) and Regulatory Compliance (0.847) showing the highest values, indicating strong contributions to their respective factors.

The CFA validated this structure, yielding a strong model fit with χ²(4) = 6.733, p = 0.151, RMSEA = 0.059, CFI = 0.998, and TLI = 0.994 (Table 2). The Cronbach’s alpha for the overall scale was 0.80, indicating good internal consistency. The two latent factors showed a moderate correlation (0.322), suggesting that while related, they capture distinct aspects of cybersecurity decision-making (Table 2). Factor 1 (covering “Protection against reputational damage” and “Protection against financial losses”) had a Cronbach’s alpha of 0.76. Factor 2 (covering “Compliance with regulations and standards”, “Prevention of operational and service disruptions”, and “Protection of sensitive personal or organizational data”) had a Cronbach’s alpha of 0.82 (Table A10, Appendix B).

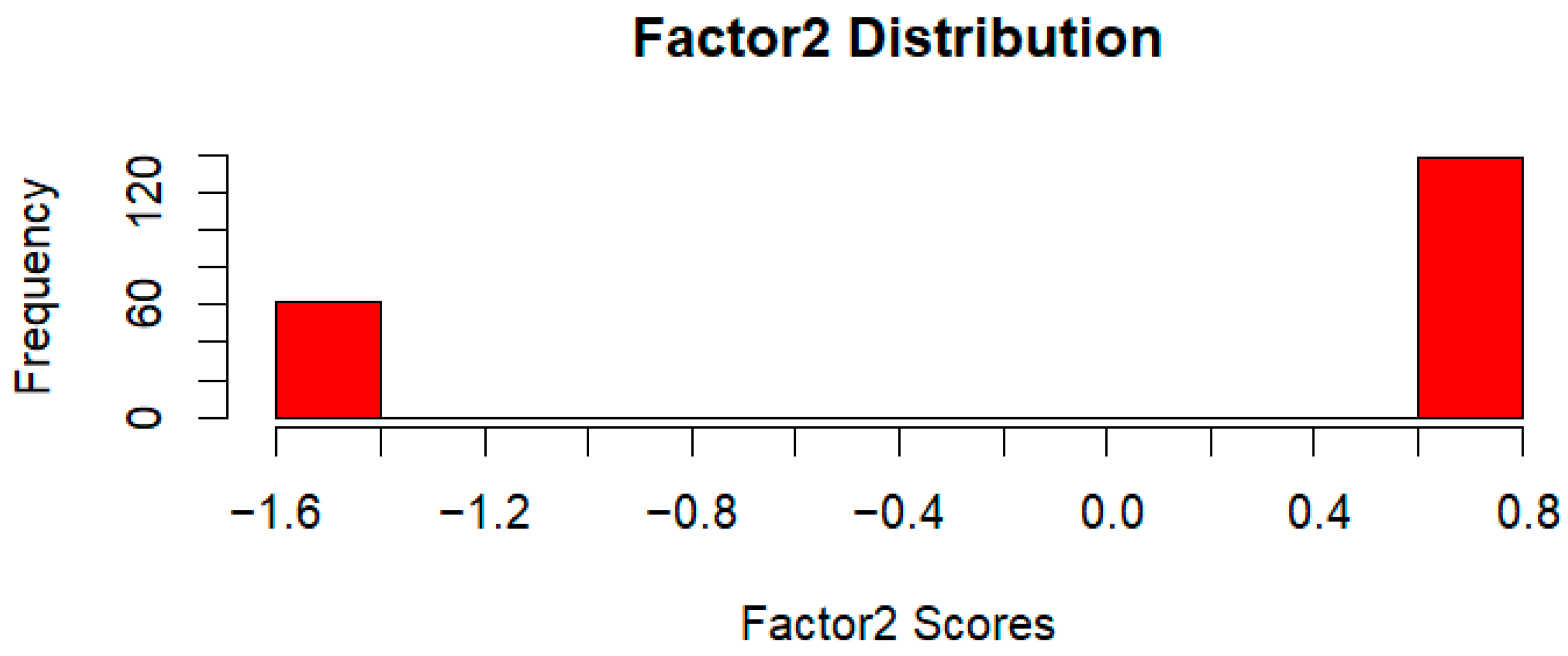

The distributions of Factor 1 and Factor 2 scores (Figure 1 and Figure 2) indicate a strong polarization among participants, with most responses clustering around extreme values. These factor scores were derived from the EFA and CFA models, where participant responses were transformed into latent constructs representing underlying cybersecurity decision-making patterns. Factor scores were computed using the regression method, ensuring that individual responses were mapped onto a standardized scale. The resulting histograms illustrate the frequency of observed scores, highlighting distinct decision-making profiles. Given the role of heuristics in security-related judgments, these polarized distributions may suggest reliance on cognitive shortcuts such as risk aversion or the availability heuristic, though further investigation is required to substantiate this interpretation.

These findings reinforce the robustness of the constructs used in studying cybersecurity decision-making and social engineering heuristics.

4.6. Risk Score Distributions and Predictive Analytics

To assess the influence of heuristic-driven decision-making on cybersecurity vulnerability, a binary logistic regression model was employed, predicting prior exposure to cyber deception (“VictimOfCyberDeception”) based on latent decision-making factors (Factor 1, Factor 2). The model yielded a strong predictive power (AUC = 0.83), suggesting that individuals exhibiting specific heuristic patterns were more likely to have been targeted by cyberattacks. The initial logistic regression model (Table A11, Appendix B) indicated that Factor 1 had a significant negative effect on cyber deception experience (Estimate = −1.0545, p < 0.001), suggesting that individuals scoring higher on Factor 1 were less likely to have been deceived. In contrast, Factor 2 did not significantly predict deception experience (p = 0.456).

To further investigate the interaction between heuristic-driven decision-making components, an interaction term (Factor 1 × Factor 2) was included in a second logistic regression model. This model demonstrated improved explanatory power, with the interaction term approaching significance (Estimate = 3.3439, p = 0.079), indicating that the relationship between Factor 1 and cyber deception experience might depend on the level of Factor 2. The area under the ROC curve (AUC) for this model was 0.8462 (Figure 3), reflecting strong predictive performance.

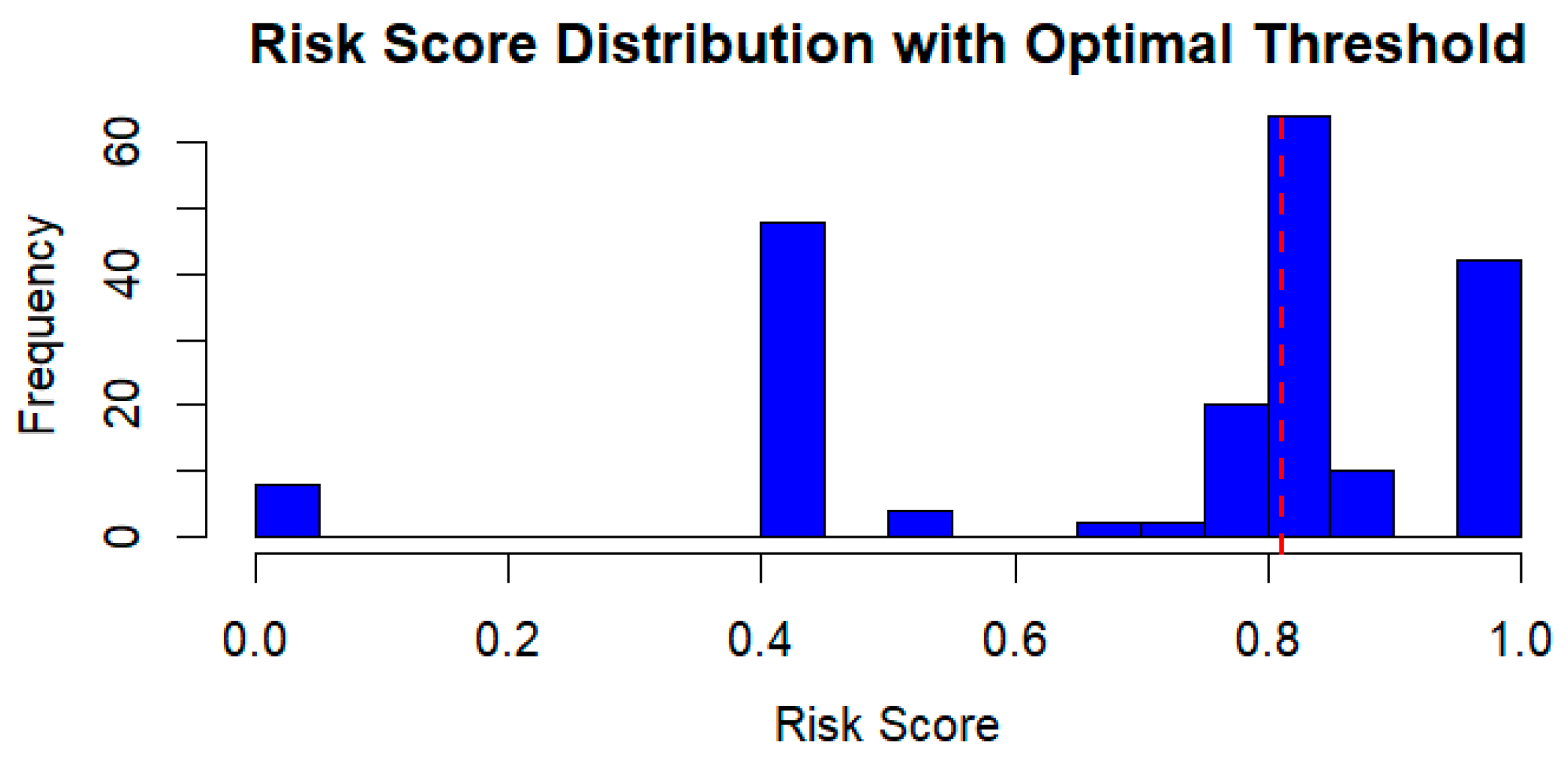

Following the CFA, we derived cybersecurity risk scores to assess individual susceptibility to security threats. The risk scores were computed from the interaction model (Table A12, Appendix B) and categorized into low, medium, and high-risk groups, ensuring a standardized mapping of participant responses onto latent constructs. The distributions of these risk scores after threshold optimization are presented in Figure 4. These distributions were obtained by computing factor scores using the regression method. The bimodal nature of the distributions suggests that participants exhibit distinct decision-making patterns, with some demonstrating a strong proactive stance towards cybersecurity, while others show a heightened vulnerability. The analysis of these scores revealed a meaningful distinction in cyber deception vulnerability, with a majority of participants falling into the high-risk category. The optimal threshold for classification was determined using ROC analysis, achieving an accuracy of 76%, a precision of 91%, and a recall of 73%. These findings underscore the role of heuristic cognitive structures in cybersecurity decision-making, highlighting how specific decision-making patterns may increase or mitigate vulnerability to cyber deception.
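The accuracy, precision, and recall figures follow directly from the confusion matrix obtained at the chosen threshold. The Python sketch below shows the calculation for a generic set of labels and risk scores; the function name, the toy data, and the threshold value are hypothetical and do not reproduce the study’s results.

```python
def classification_metrics(labels, scores, threshold):
    """Accuracy, precision, and recall when score >= threshold predicts 1."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(labels),
        # Precision and recall are undefined when their denominator is zero.
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
        "recall": tp / (tp + fn) if tp + fn else float("nan"),
    }


if __name__ == "__main__":
    # Toy labels (1 = previously deceived) and risk scores.
    y = [1, 1, 1, 0, 0]
    risk = [0.9, 0.8, 0.3, 0.6, 0.1]
    print(classification_metrics(y, risk, threshold=0.5))
```

High precision combined with lower recall, as reported above, indicates that respondents flagged as high risk were usually past victims, while some past victims fell below the threshold.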

4.7. Clustering Analysis of Security Behavior

A k-means clustering approach was employed to classify individuals based on the two extracted latent factors (Factor 1 and Factor 2), reflecting distinct patterns in cybersecurity decision-making. The optimal number of clusters was determined using the Silhouette method (Figure A1, Appendix B), which indicated that k = 8 provided the most coherent separation, balancing compactness and distinctiveness across groups. The resulting clusters were analyzed in relation to prior exposure to cybersecurity deception, demonstrating notable variations in susceptibility.

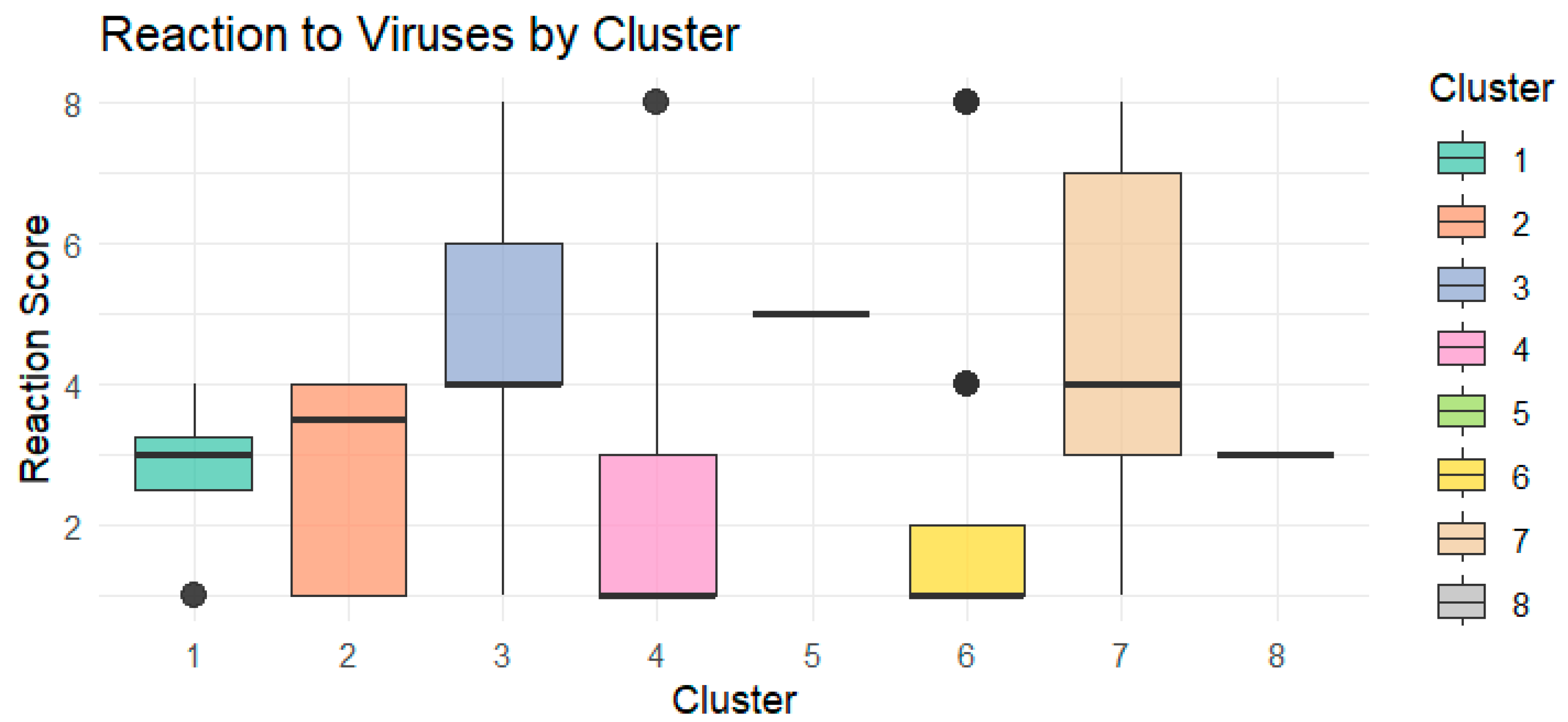

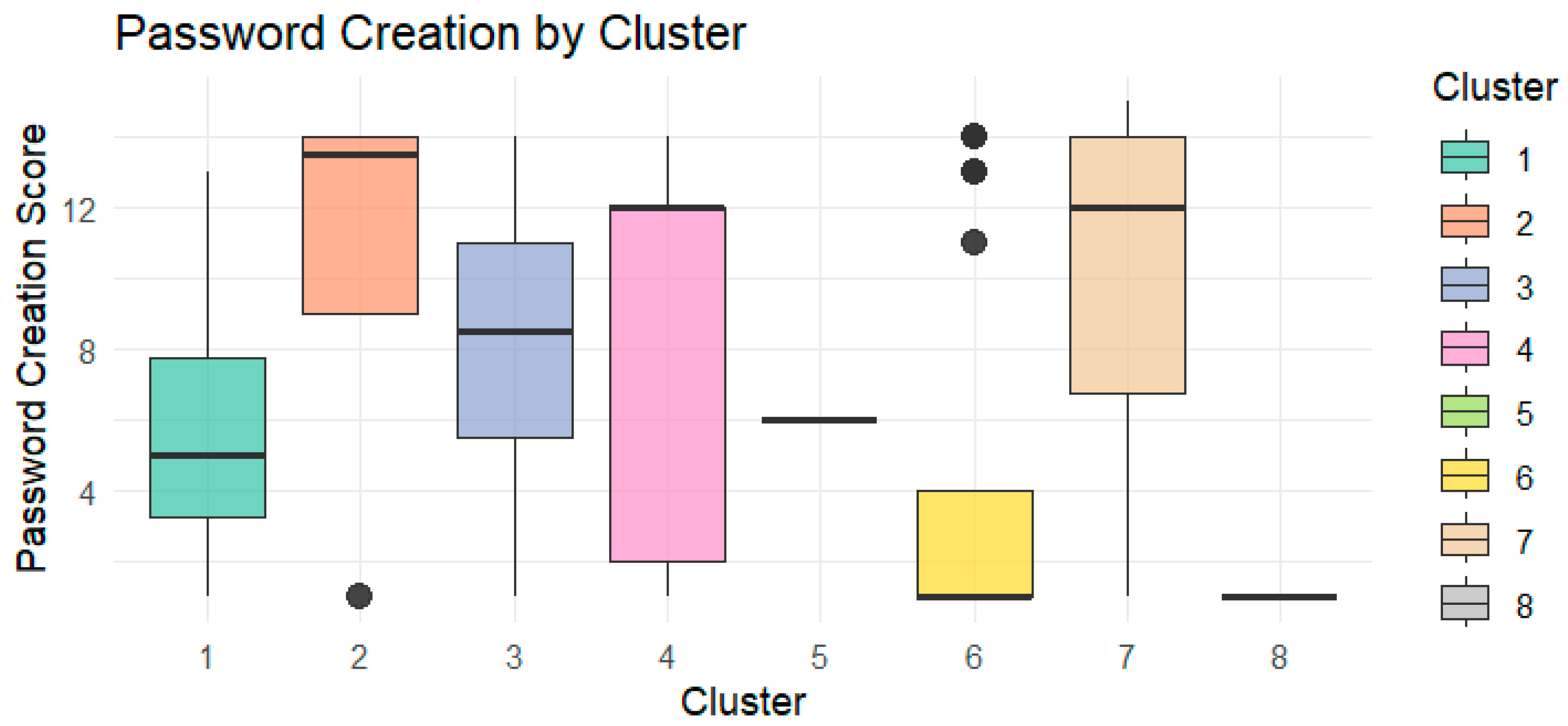

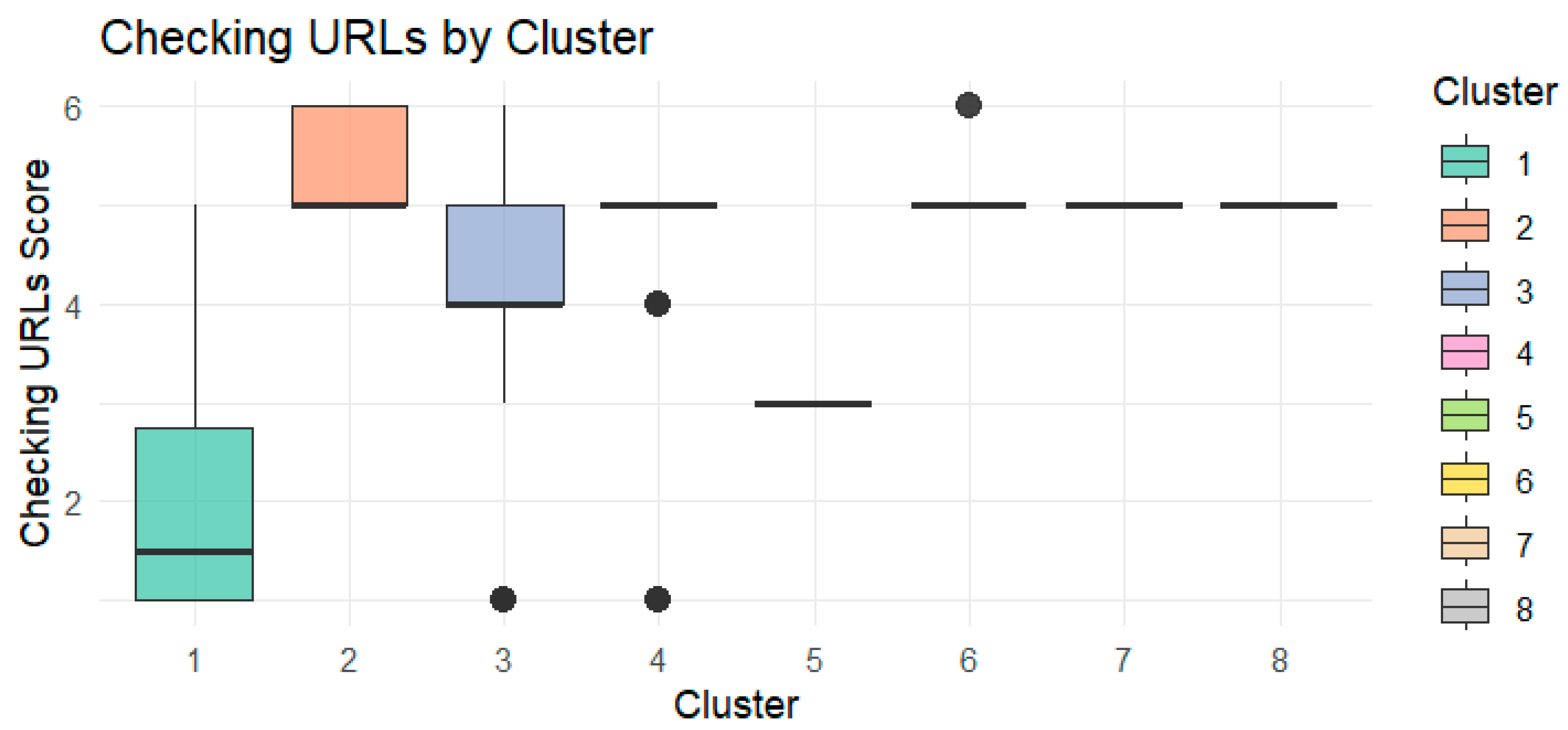

4.8. Clustering Analysis and Behavioral Insights

Reaction to Viruses (Figure A2, Appendix B): Significant variation was observed across clusters, with some groups demonstrating higher reactivity in response to security threats.

Password Creation (Figure A3, Appendix B): Marked differences emerged between clusters, with certain groups exhibiting stronger password hygiene practices.

Checking URLs (Figure A4, Appendix B): The likelihood of verifying website authenticity varied substantially, suggesting distinct levels of awareness and precaution across clusters.

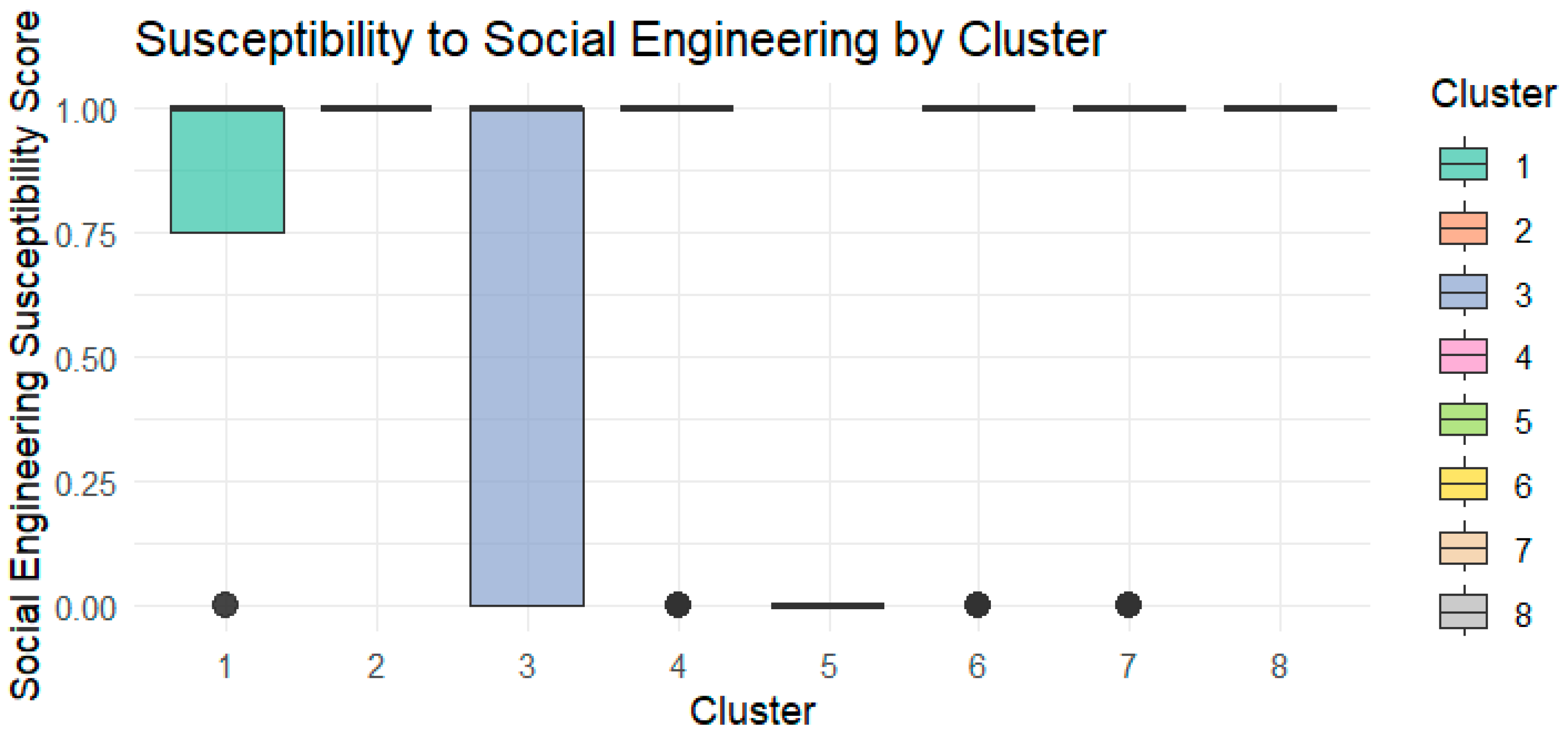

Susceptibility to Social Engineering (Figure A5, Appendix B): Some clusters displayed a markedly higher tendency to fall for social engineering tactics, aligning with lower security awareness.

These findings were further substantiated using pairwise Wilcoxon rank-sum tests, which confirmed statistically significant behavioral differences between certain clusters, particularly in URL verification (p < 0.001), password creation (p < 0.001), and reaction to virus threats (p < 0.001).

Clusters 1 and 8, for instance, contained only participants who had never been deceived, whereas Clusters 2, 4, and 6 exhibited a substantially higher proportion of individuals with prior deception experiences. This clustering pattern suggests that cognitive differences in heuristic decision-making may be associated with varying levels of susceptibility to cyber threats.

To further examine the relationship between cybersecurity risk perception and self-reported importance of security, a Kruskal–Wallis test was conducted to assess differences in risk scores across security awareness levels, measured on a Likert scale from 1 to 5. The results indicated a statistically significant effect (χ²(4) = 13.619, p = 0.0086), suggesting that perceived importance of cybersecurity influences risk assessment. A Dunn’s post hoc test with Bonferroni correction (Table A13, Appendix B) identified significant differences in risk perception between individuals rating cybersecurity importance as 3 versus 2 (p = 0.0082) and 5 versus 2 (p = 0.0475). These findings indicate that individuals with moderate (3) or very high (5) security importance ratings exhibit distinct cybersecurity risk perceptions compared to those who perceive security as less important (2). However, no significant differences emerged between the highest and lowest groups, suggesting potential nonlinearities in how security awareness translates into risk assessment.
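For readers unfamiliar with the Kruskal–Wallis test, the sketch below computes the tie-corrected H statistic and its degrees of freedom (number of groups minus one, which gives 4 for five Likert levels). The function names and the small input groups are illustrative assumptions, not the study's data, and the p-value step (comparing H against a chi-square distribution) is omitted to keep the example dependency-free.

```python
from typing import Dict, List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for t in range(i, j + 1):
            ranks[order[t]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> Tuple[float, int]:
    """Return (H, degrees of freedom) with the standard tie correction."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)

    # Sum of squared rank totals, each divided by its group size.
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * weighted - 3 * (n + 1)

    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    counts: Dict[float, int] = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction, len(groups) - 1


# Hypothetical risk scores for three importance levels.
h_stat, df = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(h_stat, df)
```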

Further analyses examined the demographic influence on cybersecurity decision-making by testing the association between cluster membership and education level as well as gender. The results confirmed significant associations in both cases, with education level showing a strong relationship with cluster assignment (χ²(14) = 48.54, p < 0.00001) and gender also exhibiting a significant effect (χ²(14) = 40.39, p = 0.0002). These findings highlight that differences in cybersecurity decision-making may be shaped not only by individual cognitive tendencies but also by broader demographic factors.
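The chi-square test of independence used for these associations can be sketched as below. The degrees of freedom equal (rows − 1) × (columns − 1), so a value of 14 would be consistent with eight clusters crossed with three categories; the three-category layout is an assumption, as the text does not list the category counts, and the contingency tables are invented.

```python
from typing import List, Tuple


def chi_square_independence(table: List[List[float]]) -> Tuple[float, int]:
    """Return (Pearson chi-square statistic, degrees of freedom) for a contingency table.

    Assumes every row and column total is positive, so all expected counts are non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Hypothetical 2 x 2 table: cluster membership (rows) by a binary attribute (columns).
stat, df = chi_square_independence([[10, 20], [20, 10]])
print(round(stat, 3), df)

# An 8 x 3 layout (eight clusters, three assumed categories) yields df = 14.
_, df_8x3 = chi_square_independence([[1, 1, 1] for _ in range(8)])
print(df_8x3)
```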

Overall, these results emphasize the structured nature of cybersecurity decision-making, which appears to be organized into distinct cognitive profiles. The relationship between cybersecurity awareness and risk perception exhibits nonlinear patterns, with notable differences particularly among those with moderate or high awareness levels. Furthermore, demographic influences suggest that educational background and gender play significant roles in shaping cybersecurity-related cognitive structures. These insights provide a foundation for targeted interventions and adaptive security strategies, considering both cognitive heuristics and demographic variations in cybersecurity behavior.

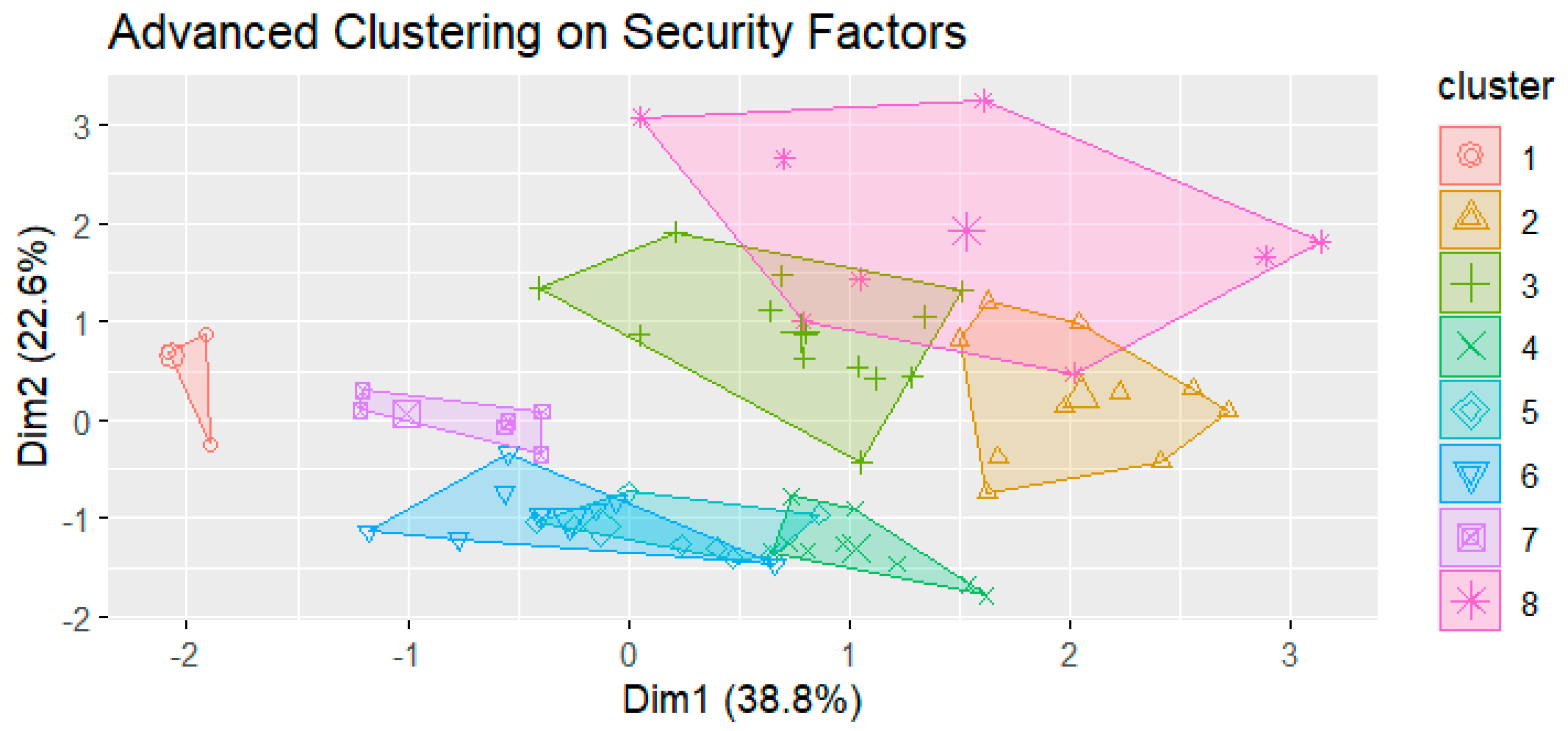

4.9. Advanced Clustering Analysis on Security Behavior Factors

To further investigate the heterogeneity in security behaviors, an advanced clustering analysis was performed using key security-related factors, specifically Factor 1, Factor 2, URL verification, password creation, and reaction to viruses. The resulting clusters, visualized in Figure 5, illustrate distinct groupings of individuals based on their security practices. To assess the significance of clustering outcomes, a series of Kruskal–Wallis tests were conducted to compare cluster differences in key behavioral factors. The results indicated statistically significant differences in URL verification scores (χ² = 67.143, p < 5.57 × 10⁻¹²), password creation practices (χ² = 42.494, p < 4.17 × 10⁻⁷), and reactions to malware threats (χ² = 67.671, p < 4.36 × 10⁻¹²), supporting the validity of the identified clusters in differentiating cybersecurity behaviors.

Furthermore, an analysis of social engineering susceptibility was performed across clusters, with a Kruskal–Wallis test revealing a significant effect (χ² = 30.143, p < 8.94 × 10⁻⁵). Post hoc pairwise Wilcoxon comparisons with Bonferroni correction highlighted specific clusters exhibiting heightened susceptibility to manipulation techniques (Table A14, Appendix B).
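To make the Bonferroni step concrete: with eight clusters there are 28 possible pairwise comparisons, and each raw p-value is multiplied by the number of comparisons performed (capped at 1). The sketch below shows this adjustment; the raw p-values are invented for illustration, and whether all 28 pairs were tested in the study is an assumption.

```python
from itertools import combinations
from typing import Dict, Tuple

Pair = Tuple[int, int]


def bonferroni(p_values: Dict[Pair, float]) -> Dict[Pair, float]:
    """Multiply each raw p-value by the number of comparisons, capping at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


# All unordered cluster pairs for clusters numbered 1..8.
cluster_pairs = list(combinations(range(1, 9), 2))
print(len(cluster_pairs))

# Hypothetical raw p-values for three comparisons only.
raw = {(1, 2): 0.001, (1, 3): 0.02, (2, 3): 0.5}
print(bonferroni(raw))
```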

To provide context beyond the statistics, we qualitatively characterized each of the eight clusters identified by the k-means analysis:

- Cluster 8, “Vigilant” (High Awareness, High Compliance): This cluster scored high on both risk perception and security compliance. Its members exhibit strong security habits (e.g., careful password management and rigorous URL checking) and, notably, none had fallen victim to prior cyber deception. They represent highly vigilant individuals.

- Cluster 6, “High-Risk” (Low Awareness, Low Compliance): This group is the mirror opposite, with low risk perception and poor compliance behaviors. Participants in Cluster 6 tended to ignore security measures (weak password practices, low reaction to threats) and had the highest incidence of past social engineering victimization. They epitomize a highly vulnerable profile.

- Cluster 4, “Aware but Passive” (High Awareness, Lower Compliance): Cluster 4 members understand cyber risks (high risk perception) but do not consistently act on that knowledge (only moderate compliance with best practices). This knowledge-action gap, a manifestation of the security paradox, means they still experienced above-average susceptibility to attacks despite knowing better.

- Cluster 1, “Compliant Rule-Followers” (Moderate Awareness, High Compliance): Individuals in Cluster 1 displayed very diligent security behavior (high policy compliance and preventive actions) even though their personal risk perception was only moderate. Interestingly, like Cluster 8, no one in Cluster 1 had been deceived previously. This suggests that strict adherence to recommended practices can protect users even if they do not feel highly concerned about security.

- Clusters 2, 3, 5, and 7, “Intermediate Profiles”: The remaining clusters fell in between these extremes, with mixed levels of awareness and compliance. For example, Cluster 2 showed moderately low compliance (skipping some security measures) coupled with low–medium risk awareness, correlating with a higher-than-average deception rate. Clusters 3 and 5 had more balanced profiles (moderate awareness and fairly good compliance on certain behaviors), resulting in moderate vulnerability. Cluster 7 was somewhat similar to Cluster 4 (relatively higher risk perception with only average compliance), though slightly more protected.

Each cluster represents a distinct cybersecurity persona, ranging from the highly vigilant to the highly vulnerable, defined by different combinations of cognitive mindset and actual practice.

4.10. Multinomial Logistic Regression Analysis of Social Engineering Susceptibility

To further explore the relationship between behavioral factors and vulnerability to social engineering, a multinomial logistic regression model was estimated with social engineering susceptibility as the dependent variable and key security factors as predictors. The model demonstrated that URL verification (β = 0.65, p = 0.0005) and password creation practices (β = 0.16, p = 0.016) were significant predictors, indicating that individuals engaging in more rigorous security behaviors exhibited lower susceptibility to social engineering attacks (Table A15, Appendix B). Conversely, reaction to security threats (β = −0.74, p < 10⁻⁶) had a strong negative association with susceptibility, suggesting that individuals who responded proactively to security incidents were less likely to be deceived by manipulative techniques.
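Logistic regression coefficients are on the log-odds scale, so exponentiating them gives odds ratios that are often easier to read. The short calculation below converts the three reported coefficients; the dictionary keys are illustrative labels, and the direction of each effect depends on how the outcome categories and predictors were coded, which is not stated here and is therefore left as an assumption.

```python
import math
from typing import Dict

# Coefficients as reported in the text; the key names are illustrative labels.
coefficients: Dict[str, float] = {
    "url_verification": 0.65,
    "password_creation": 0.16,
    "reaction_to_threats": -0.74,
}


def odds_ratio(beta: float) -> float:
    """Multiplicative change in the odds (versus the reference category) per one-unit increase."""
    return math.exp(beta)


odds_ratios = {name: odds_ratio(beta) for name, beta in coefficients.items()}
for name, value in odds_ratios.items():
    print(f"{name}: odds ratio = {value:.3f}")
```

An odds ratio above 1 means the odds of the modeled outcome category rise with the predictor, and a value below 1 means they fall, so β = −0.74 corresponds to roughly halving the odds per unit increase.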

The clustering and statistical analyses collectively reveal distinct cybersecurity behavior profiles, demonstrating that prior exposure to cyber deception, risk perception, and security attitudes vary meaningfully across clusters. The results provide empirical support for the idea that cybersecurity decision-making is influenced by complex heuristic mechanisms rather than a single factor such as risk perception alone. Future research could further investigate the cognitive biases underlying these behavioral differences and explore targeted interventions to improve cybersecurity resilience among at-risk groups.

4.11. Association Rule Mining for Cybersecurity Decision-Making

To identify patterns in cybersecurity decision-making and risk perception, an association rule mining analysis was conducted using the Apriori algorithm. The dataset consisted of key decision-making factors related to cybersecurity awareness, preventive behaviors, and susceptibility to social engineering. The extracted rules provide insights into how security practices influence risk exposure and the likelihood of being misled by fraudulent attempts.

The most relevant association rules, ranked by lift, are presented in Table 3. The results highlight the relationships between proactive security behaviors and lower susceptibility to deception, as well as the role of financial protection and compliance concerns in shaping security importance.

The results indicate that users who employ strong password creation practices and frequent URL verification are significantly less likely to be deceived by phishing attempts (lift = 1.63). This highlights the effectiveness of proactive security behaviors in reducing exposure to cyber threats. Similarly, individuals who prioritize financial security and regulatory compliance are more likely to assign high importance to cybersecurity (lift = 1.32). This finding suggests that regulatory frameworks and financial risk considerations influence security awareness and decision-making.

A notable observation is that individuals with poor security habits, such as infrequent URL verification or reusing passwords, tend to perceive cybersecurity risks as higher (lift = 1.64). This may indicate a cognitive dissonance effect, where users are aware of security threats but fail to implement protective measures. This awareness–action gap suggests the need for targeted interventions that encourage behavioral change rather than just increasing awareness.
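The lift values reported for these rules compare how often the antecedent and consequent co-occur with how often they would co-occur if they were independent: lift(A → B) = support(A ∪ B) / (support(A) × support(B)), so values above 1 indicate a positive association. The sketch below computes support, confidence, and lift for a single rule; the item names and the eight toy responses are hypothetical, not survey data, and the candidate-generation part of Apriori is not shown.

```python
from typing import Dict, Iterable, List, Set


def support(transactions: List[Set[str]], itemset: Iterable[str]) -> float:
    """Fraction of transactions that contain every item in the itemset."""
    items = set(itemset)
    return sum(1 for t in transactions if items <= t) / len(transactions)


def rule_metrics(transactions: List[Set[str]],
                 antecedent: Iterable[str],
                 consequent: Iterable[str]) -> Dict[str, float]:
    """Support, confidence, and lift for the rule antecedent -> consequent."""
    a, c = set(antecedent), set(consequent)
    supp_a = support(transactions, a)
    supp_c = support(transactions, c)
    if supp_a == 0 or supp_c == 0:
        raise ValueError("antecedent and consequent must each occur at least once")
    supp_ac = support(transactions, a | c)
    confidence = supp_ac / supp_a
    return {"support": supp_ac, "confidence": confidence, "lift": confidence / supp_c}


# Hypothetical one-hot survey responses, one set of items per respondent.
toy_responses: List[Set[str]] = [
    {"strong_password", "checks_url", "not_deceived"},
    {"strong_password", "checks_url", "not_deceived"},
    {"strong_password", "checks_url", "not_deceived"},
    {"strong_password", "deceived"},
    {"checks_url", "deceived"},
    {"deceived"},
    {"deceived"},
    {"not_deceived"},
]

rule = rule_metrics(toy_responses, {"strong_password", "checks_url"}, {"not_deceived"})
print(rule)
```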

The association rule analysis provides meaningful insights into how security behaviors relate to risk perception and vulnerability to deception. However, additional analyses can further refine these findings. Clustering techniques can be employed to identify distinct security behavior profiles, while logistic regression models could assess the predictive strength of these security habits on actual phishing susceptibility. Moreover, sequential pattern mining could explore how security habits evolve over time, offering a longitudinal perspective on cybersecurity behavior adaptation.

4.12. Mediation Analysis

The causal mediation analysis performed in this study investigates the indirect effect of URL verification practices (independent variable) on the likelihood of falling victim to phishing attempts (dependent variable), mediated by perceived risk score (mediator).

The Average Causal Mediation Effect (ACME), which quantifies the extent to which URL verification reduces phishing susceptibility through risk perception, was statistically significant. For the control group, ACME was 0.0491 (95% CI: [0.0143, 0.13], p < 0.001), and for the treated group, ACME was slightly higher at 0.0589 (95% CI: [0.0237, 0.12], p < 0.001). The average ACME across groups was 0.0540 (95% CI: [0.0190, 0.12], p < 0.001), confirming that a significant portion of the total effect is mediated by perceived risk.

The Average Direct Effect (ADE), representing the direct influence of URL verification on phishing susceptibility after accounting for the mediator, was not statistically significant. For the control group, ADE was 0.0223 (95% CI: [−0.0253, 0.04], p = 0.22), and for the treated group, ADE was 0.0321 (95% CI: [−0.0330, 0.06], p = 0.22). The average ADE was 0.0272 (95% CI: [−0.0291, 0.05], p = 0.22), indicating that URL verification does not directly reduce phishing susceptibility but operates primarily through increasing risk perception.

The total effect, which represents the combined influence of both direct and indirect pathways, was 0.0812 (95% CI: [0.0381, 0.11], p < 0.001), suggesting that verifying URLs significantly reduces phishing vulnerability. Furthermore, the proportion of the total effect mediated by risk perception was 60.52% for the control group (95% CI: [30.05%, 136%], p < 0.001) and 72.51% for the treated group (95% CI: [47.16%, 127%], p < 0.001). The average proportion mediated was 66.51% (95% CI: [39.41%, 132%], p < 0.001), indicating that risk perception plays a crucial role in explaining the impact of URL verification behavior on phishing susceptibility.
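The quantities above are linked by a simple decomposition: the total effect is the sum of the mediated effect (ACME) and the direct effect (ADE), and the proportion mediated is ACME divided by the total effect. The check below reproduces this arithmetic from the reported point estimates. Small differences from the reported percentages are expected, presumably because published proportions are usually derived from simulation draws rather than from rounded estimates.

```python
from typing import Dict

# Point estimates as reported in the text.
acme: Dict[str, float] = {"control": 0.0491, "treated": 0.0589, "average": 0.0540}
ade: Dict[str, float] = {"control": 0.0223, "treated": 0.0321, "average": 0.0272}
total_effect = 0.0812


def proportion_mediated(indirect: float, total: float) -> float:
    """Share of the total effect that passes through the mediator."""
    return indirect / total


# The average indirect and direct effects should sum to the total effect.
reconstructed_total = acme["average"] + ade["average"]
print(f"ACME + ADE = {reconstructed_total:.4f} (reported total: {total_effect:.4f})")

for group, value in acme.items():
    share = proportion_mediated(value, total_effect)
    print(f"{group}: proportion mediated = {share:.1%}")
```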

4.13. Heuristic-Driven Cybersecurity Behaviors

To evaluate respondents’ ability to identify fake URLs, participants were asked questions whose answer options included homoglyphs of the correct URL.

A large portion of respondents did not notice the replacement of the letters, indicating a low level of attention to detail, a lack of familiarity with the concept of homoglyphs, or the presence of satisficing heuristics in their decision-making process.
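A homoglyph substitution of the kind described here can be flagged programmatically by checking whether a hostname mixes writing systems. The sketch below approximates each letter's script from the first word of its Unicode character name, which is a simplification. The specific homoglyphs used in the survey are not given, so the Cyrillic example is an assumption.

```python
import unicodedata
from typing import List, Tuple


def script_of(ch: str) -> str:
    """Approximate a character's script by the first word of its Unicode name."""
    return unicodedata.name(ch, "UNKNOWN").split()[0]


def suspicious_characters(hostname: str, expected_script: str = "LATIN") -> List[Tuple[int, str, str]]:
    """Return (index, character, Unicode name) for letters outside the expected script."""
    return [
        (i, ch, unicodedata.name(ch, "UNKNOWN"))
        for i, ch in enumerate(hostname)
        if ch.isalpha() and script_of(ch) != expected_script
    ]


def looks_spoofed(hostname: str) -> bool:
    """True when at least one letter does not belong to the expected script."""
    return bool(suspicious_characters(hostname))


genuine = "example.com"
spoofed = "ex\u0430mple.com"  # U+0430 is CYRILLIC SMALL LETTER A, visually close to Latin "a"

print(looks_spoofed(genuine), looks_spoofed(spoofed))
print(suspicious_characters(spoofed))
```

This check does not catch look-alikes built entirely from ASCII characters, such as "rn" for "m" or "1" for "l", which need a separate confusables table.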

Respondents were also asked: ‘Have you ever been in a situation where someone tried to quickly gain your trust using compliments or other flattery tactics? How did you handle this situation?’ The purpose of these questions was to identify the heuristics used in respondents’ decision-making when exposed to this social engineering scenario. A total of 138 respondents indicated that they cooperated with individuals who employed such tactics, suggesting the presence of the affect heuristic in their decision-making process. In contrast, 56 respondents mentioned that they were either hesitant to cooperate with such individuals because they were unsure of the reasons behind the approach or chose not to engage at all. By analyzing possible motives behind this social interaction, these 56 respondents appear to have employed the simulation heuristic before deciding to decline cooperation.

Participants were also asked to select the cues that indicate safety while browsing a website. While certain cues, such as the padlock icon in the browser’s address bar and the use of the ‘https’ protocol, serve as indicators of the security level of the connection, some users may mistakenly interpret them as indicators of the overall security of the website and its content. Thus, the representativeness heuristic that respondents relied on in their decision-making can lead to misjudgments regarding the level of safety [31].

To identify the presence of the affect heuristic and the familiarity heuristic in decisions about sharing sensitive data, the following question was posed: ‘Have you ever provided sensitive information to acquaintances or close individuals?’ According to the collected responses, the majority of respondents indicated that their decisions regarding the sharing of sensitive information were influenced by social contexts, emotions, and the degree of familiarity with the person they were interacting with. Only 4% of respondents indicated that they had not provided sensitive data, suggesting that they were not swayed by these heuristics.

6. Conclusions

This study provides empirical evidence that heuristic-driven decision-making plays a critical role in cybersecurity behavior, particularly in the context of social engineering vulnerabilities. By identifying two key cognitive structures (risk perception, and compliance and security), this research advances the theoretical understanding of how individuals assess cyber threats and make security-related decisions. The findings indicate that while heuristics can facilitate efficient decision-making, they also contribute to systematic security errors that increase vulnerability to cyberattacks.

Our results challenge previous assumptions that cybersecurity awareness alone is sufficient to drive secure behaviors. Instead, we demonstrate that risk perception serves as a crucial intermediary, suggesting that security interventions should prioritize risk salience and cognitive reframing rather than merely increasing procedural knowledge. Moreover, the observed cognitive dissonance effect underscores the need for training programs that not only enhance awareness but also translate awareness into action.

By bridging cognitive psychology with cybersecurity research, this study lays the groundwork for targeted interventions that leverage an understanding of heuristic-driven vulnerabilities. Future research should build upon these findings by employing longitudinal designs, experimental methodologies, and cross-cultural analyses to further refine heuristic-based security models. As cyber threats continue to evolve, understanding the cognitive mechanisms that underlie security decisions will be essential for developing more effective cybersecurity strategies.