Abstract

This research investigates the potential of computational argumentation, specifically the application of the Abstract Argumentation Framework (AAF), to enhance the evaluation of deliberative quality in public discourse. It focuses on integrating AAF and its related semantics with the Discourse Quality Index (DQI), which is a reputable indicator of deliberative quality. The motivation is to overcome the DQI’s constraints using the AAF’s formal and logical features by addressing dependency on hand coding and attention to specific speech acts. This is done by exploring how the AAF can identify conflicts among arguments and assess the acceptability of different viewpoints, potentially leading to a more automated and objective evaluation of deliberative quality. A pilot study is conducted on the topic of abortion to illustrate the proposed methodology. The findings of this research demonstrate that AAF methods can improve discourse analysis by automatically identifying strong arguments through conflict resolution strategies. They also emphasise the potential of the proposed procedure to mitigate the dependence on manual coding and improve deliberation processes.

1. Introduction

Deliberative democracy presents a viable framework for mitigating political polarization by fostering inclusive, reasoned discourse and encouraging consensus-oriented decision-making. By prioritizing deliberation over adversarial debate, it facilitates mutual understanding, reduces ideological entrenchment, and enhances democratic legitimacy. However, as deliberative processes expand globally, assessing the quality of these discussions becomes a key challenge. The manner of assessing the quality of deliberation is a matter of scholarly concern. One widely recognized method for evaluating deliberative quality is the Discourse Quality Index (DQI) [1]. The DQI offers a framework to measure key indicators of deliberation, such as participation, justification, respect, and counterarguments. Despite its utility, the DQI is labor-intensive, relying heavily on human coders to annotate speech acts and interpret the quality of discourse. This introduces some form of subjectivity and dependence on manual annotation, making it time-consuming, expensive, and difficult to scale for large-scale deliberations or online discussions. Certain studies have explored automating the DQI through machine learning, such as the work by Fournier-Tombs and colleagues [2,3]. In contrast, others such as Jaramillo and Steiner introduced Deliberative Transformative Moments (DTMs) to capture discussion dynamics [4]. Systemic approaches such as the Index of the Quality of Understanding (IQU) [5] and frameworks evaluating mini-public roles have also been proposed [6]. Despite these advancements, most methods require substantial human supervision for annotation and evaluation. The DQI also presents challenges, including its reliance on manual coding, difficulty scaling for large deliberations, and lack of standardized structures to evaluate public discussions. These limitations underline the need for automated and scalable approaches to measuring deliberative quality effectively.

This research argues that computational argumentation, a multidisciplinary field, can add value to the assessment of deliberativeness. There is growing interest within artificial intelligence in addressing uncertainty and resolving conflicts in argumentation computationally and automatically, and much of this work builds on abstract argumentation [7,8]. An Abstract Argumentation Framework (AAF) is a computational mechanism that handles contentious information and brings forward claims using abstract arguments. In Dung’s framework, arguments are represented as nodes in a graph, while attacks between them are defined as directed edges. The specific content of these nodes is abstracted away, and only the graph’s topological structure is evaluated to analyze conflicting information in a dialogical manner. The AAF operates on the principle of defeasible reasoning, a non-monotonic approach that allows conclusions to be retracted as new information emerges. This mirrors real-world deliberation, where data are often incomplete or evolving. By structuring arguments as nodes and conflicts as edges, AAF semantics can handle uncertainty and help identify acceptable arguments in contentious discussions. For example, in a local government debate on renewable energy, an argumentation graph could be used to visualize the relationships between all arguments, identify conflicts, and simplify the analysis of the discussion, reducing the time required for manual evaluation. This research assumes that participants in deliberative processes can be viewed as multiagent defeasible reasoners that interact and argue with each other about an underlying deliberative topic, producing conflicting information and shaping a knowledge base.
Following this, the specific research question addressed in this research is the following: How can integrating abstract argumentation to model defeasible reasoning with the Discourse Quality Index (DQI) enhance the assessment of deliberative quality in debates on highly polarized topics?

In detail, this study addresses the challenges mentioned above by proposing a novel approach integrating the AAF with the DQI. The AAF offers a structured and computationally tractable way to model the relationships between arguments, identify conflicts, and assess the acceptability of different viewpoints. By integrating the AAF with the DQI, the aim is to improve objectivity and scalability and offer a nuanced understanding of argument dynamics in polarized debates. This research builds on recent advancements in computational argumentation and deliberative theory using a pilot analysis of a highly polarized topic, abortion, to demonstrate the potential of this integration.

The remainder of this manuscript is organized as follows: Section 2 discusses the related work on the DQI, the AAF, their semantics, and their gaps. Section 3 introduces the design of an experiment devoted to the pilot study, its data acquisition process, the production of arguments and their labeling, and the automatic procedure for conflict resolution. Section 4 presents the results, while the findings are discussed in Section 5. Section 6 summarizes this research by explaining its contribution to the body of knowledge and how it can be further extended in future work.

2. Related Work

Existing methods for measuring deliberative quality, particularly the DQI and computational argumentation approaches, are reviewed. This section highlights the key limitations of the DQI and introduces the Abstract Argumentation Framework (AAF) as a complementary method to enhance discourse analysis.

2.1. Deliberation and Deliberative Quality

Deliberation refers to carefully considering and discussing different perspectives and ideas before reaching a conclusion; it centers on individuals engaging in well-informed discussions to reach rational conclusions [9]. It involves thoughtful analysis, active listening, and open-mindedness [10,11], and it can be applied to various contexts, from local discussions to international politics. Critics have raised concerns about social inequalities and the potential for certain groups to dominate deliberative settings. Other factors, such as domination, issue polarization, and agenda setting, can also affect the quality of deliberation [12]. It is thus essential to measure the quality of these processes. Deliberative discussions involve reaching a consensus on a course of action for an underlying topic. Various mechanisms within the social sciences have been devised and applied to assess the quality of such deliberations [1,2,4,6,13]. Analyzing and assessing the quality of a deliberation process is crucial because it allows an assessment of whether decisions are well-informed, fair, and inclusive. Many deliberation studies have been evaluated with the empirical DQI method [1,14], for example, to analyze parliamentary debates [10]. Here, the emphasis is on rational, inclusive discourse where participants justify claims through validity-driven argumentation that is free from coercion. The primary focus of the DQI is on equal participation, justification, the content of justification, counterarguments, respect, and constructive politics. Still, it does not tap into the inclusion and equality of participants or into satire in deliberation. For example, one speaker may have more speaking time than others in a deliberation [15] or say something humorous that may not meet the deliberative criteria.

Recent critiques highlight the DQI’s inability to capture structural inequalities in deliberation. Mockler [16] critiques the DQI by introducing the concept of deliberative uptake, which assesses whether a participant’s ideas are acknowledged and engaged with during deliberation. Using Ireland’s Convention on the Constitution, a forum whose members include both citizens and politicians, the research reveals how structural inequalities influence deliberation dynamics, with politicians receiving greater uptake than citizens. This power asymmetry underscores the limitations of traditional metrics like the DQI in capturing inclusion and systemic biases. Bächtiger [17] notes that the DQI’s static aggregation fails to account for dynamic argumentation processes, such as retractions or evolving consensus, limiting its relevance in asynchronous or large-scale deliberations. Further critiques focus on scalability and automation challenges. Fournier-Tombs and MacKenzie demonstrated that machine learning adaptations such as DQI 2.0 remain constrained by their dependency on labeled datasets and human expertise and struggle with contextual nuances, such as the identification of ‘constructive politics’ in social media debates [3]. Despite leveraging Large Language Models (LLMs), AQuA [18] inherits the DQI’s conceptual blind spots, such as neglecting conflict resolution. In 2014, Deliberative Transformative Moments (DTMs) were introduced [4] as an extension of the DQI designed for small-group deliberations; this work revealed that personal narratives, such as those shared by Colombian ex-combatants, can elevate or diminish deliberation quality. By focusing on predefined indicators like justification and respect, the DQI neglects the emotional and narrative dimensions of discourse, which are particularly salient in post-conflict settings [4].

The rise of online deliberation platforms has further strained existing metrics. A 2015 systematic review of digital deliberation [19] found that most studies prioritized platform design over process analysis, leaving a vacuum in tools to assess asynchronous, large-scale discussions. For example, the Index of the Quality of Understanding (IQU) [20], developed to analyze local political debates in Zurich, quantified participation rates but struggled to differentiate between substantive contributions and superficial engagement. Klinger and Russmann noted that while the IQU captured the quantity of deliberation, for example, through word counts or topic frequency, it inadequately measured the quality of reasoning or inclusivity [20]. These limitations underscore a broader challenge: though rigorous in controlled settings, existing indices lack the flexibility to adapt to the heterogeneous nature of real-world deliberations. The Deliberative Reason Index (DRI) [21] is designed to evaluate the quality of deliberation at the level of group reasoning instead of concentrating on individual inputs. This method uses surveys before and after discussions to assess participants’ views and preferences with respect to the debated topics. It computes agreement scores, which are combined to produce an overall score for the group. Cognitive complexity (CC) [13] is another metric for assessing deliberation quality. Although it may not be a flawless indicator of deliberative quality, CC is valuable for exploring how an argument is constructed and what cognitive processes are involved when it is spoken or written. Its authors provide an equation to measure the CC of a single speech act based on nine indicators [13], which are computed using Linguistic Inquiry and Word Count (LIWC), a computerized text analysis method [22]. Other studies have also applied this measure to Twitter, Reddit, and citizen assembly deliberative datasets [23,24,25].

Efforts to automate deliberative analysis have partially addressed these issues. The DelibAnalysis framework [2], which applies machine learning to classify speech acts based on DQI criteria, demonstrated that computational tools could reduce reliance on manual annotation. By training models on UK parliamentary debates, researchers achieved moderate accuracy in detecting indicators such as ‘respect’ and ‘content of justification’ [3]. However, DelibAnalysis and its successor, DQI 2.0 [3], remain constrained by their dependency on high-quality labeled datasets and political analysts’ expertise. For instance, labeling ‘constructive politics’ in Twitter debates requires contextual knowledge of platform-specific norms, which automated systems struggle to generalize [25]. Recent advancements like AQuA [18], which integrates Large Language Models (LLMs) with deliberative indices, show promise in scaling analysis but are complex due to subjectivity and the limitations of relying solely on expert annotations (often scarce) or non-expert inputs. Bächtiger et al. (2022) analyzed decades of deliberative research, emphasizing the need to reframe deliberation as a performative, distributed process [17]. Their critique highlights flaws in the original DQI, such as overlooking participation equality and real-time interactivity. They critique its rigid aggregation methods and systematic biases, arguing against applying uniform DQI metrics across contexts such as parliamentary versus online debates and advocating interdisciplinary approaches to enhance measurement.

2.2. Computational and Abstract Argumentation

Alongside advances in deliberative theory, the Abstract Argumentation Framework [8] has emerged as a strong paradigm for modeling defeasible reasoning [26,27,28,29]. This reasoning is non-monotonic, following a type of logic where, in a nutshell, conclusions can be revised based on new information [30,31,32,33]. In other words, defeasible reasoning is a form of non-monotonic reasoning that makes it possible to draw conclusions when only partial information is available, and humans achieve this by using default knowledge [29]. However, if new data or evidence become available that contradict the preconditions on which the default knowledge is based, any conclusions drawn using that knowledge can be retracted [26]. There has been a rapid increase in research on computational argumentation and its systems [34,35,36], which help compute the dialectical status of arguments in a dialogical structure with conflicts and implement non-monotonic reasoning in practice. On the one hand, monotonic reasoning includes arguments whose conclusions cannot be retracted in light of other arguments with contradicting findings. The term ‘monotonic’ refers to the fact that the set of conclusions can only grow as more arguments are considered. On the other hand, in non-monotonic reasoning, conclusions brought forward by specific arguments can be rejected in the light of further information supported by different arguments; thus, the cardinality of the set of conclusions can be reduced [37,38].

In practice, the reasoning followed in a deliberative process is defeasible because human deliberation is inherently provisional. In other words, arguments are advanced tentatively and are subject to revision as new information emerges. For example, a policymaker might initially argue that ‘year-round schooling improves educational outcomes’ (Argument A) but retract this position if confronted with evidence that ‘summer programs mitigate learning loss’ (Argument B). This iterative process mirrors the dialectical nature of deliberative democracy, where consensus is forged through the clash and synthesis of opposing viewpoints. In the case of dialogues for deliberation, human reasoners use default knowledge to create their arguments. Since these reasoners interact, the conclusions supported by each argument can contradict the ones supported by other arguments; thus, retractions are possible. Formally, ‘An argument is a tentative inference that links one or more premises to a conclusion’ [39]. Various types of arguments can be formalized, including forecast and mitigating arguments [40]. ‘Forecast arguments are tentative defeasible inferences: they can be seen as justified claims and definitions similar to the argument’. ‘Mitigating arguments question the validity of a forecast argument, whether that argument’s premises or the conclusion are valid, and whether any uncertainties appear’. They help to identify conflicts between arguments. In 1995, Dung proposed the definition of the Abstract Argumentation Framework and showed how it can help determine the acceptability of arguments and resolve conflicts independently of the context of application and of the arguments’ internal structure [8]. Formally, an argumentation framework is a pair AF = (AR, attacks), where AR is a set of arguments, and attacks is a binary relation on AR, that is, attacks ⊆ AR × AR. An example of a framework is given by the argumentation graph of Figure 1.

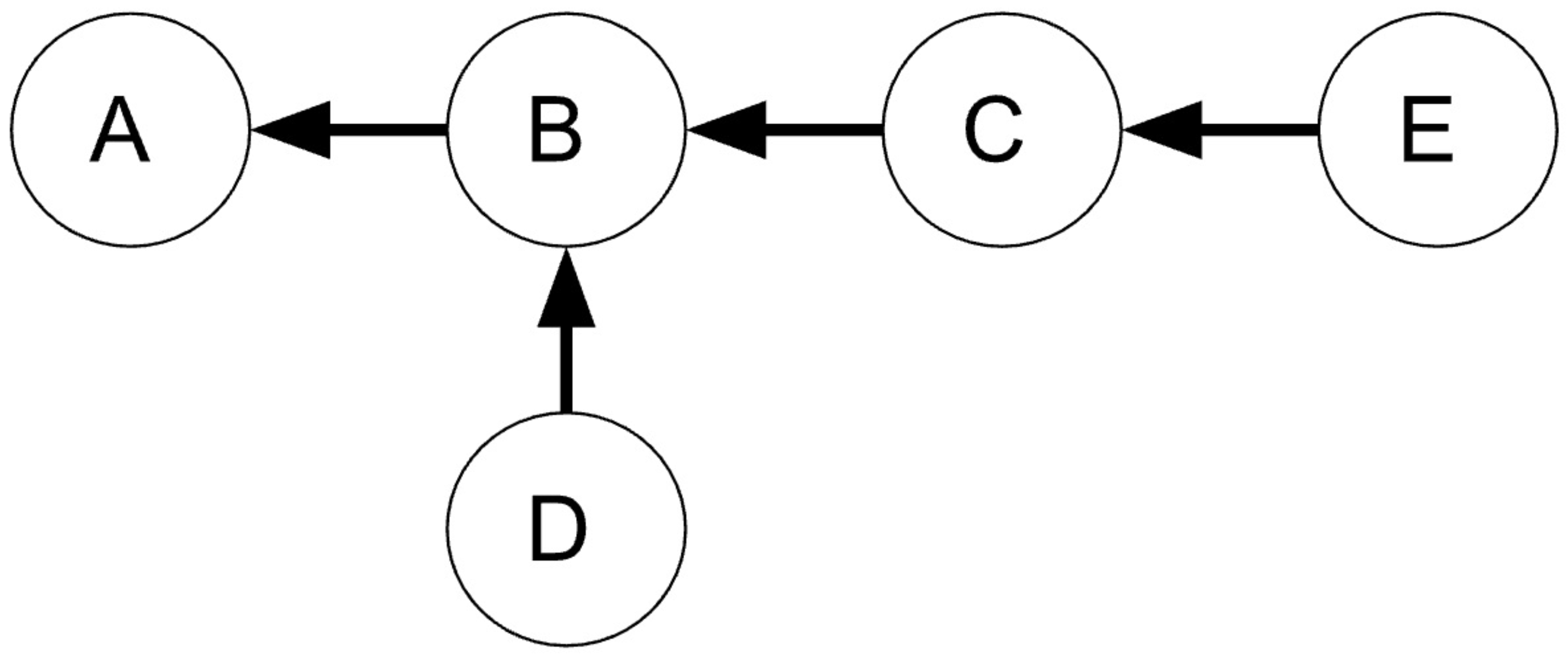

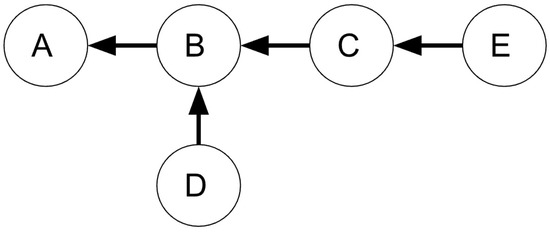

Figure 1. Arguments and reinstatement in an argumentation framework.

A set of arguments S is admissible if and only if it is conflict-free and can defend itself. Let (AR, attacks) be an argumentation framework, and let S ⊆ AR. The set S is said to be conflict-free if there are no arguments A and B in S such that A attacks B. In the literature of argumentation theory, three types of conflict are usually formalized: rebutting, undermining, and undercutting [41,42]. A rebutting attack occurs when an argument negates the conclusion of another argument [26,43]. In an undermining attack, an argument is attacked on one of its premises by another argument whose conclusion negates that premise [26,43]. An undercutting attack occurs when an argument uses a defeasible inference rule and is attacked by arguing that a particular case does not allow for the application of that rule [44]. Once an argumentation framework is shaped, its topological structure is examined to extract conflictual information and to create conflict-free partitions of acceptable arguments [45,46]. Acceptability semantics can be defined in terms of extensions [8] following different principles:

- An extension can ‘stand together’ (conflict-free principle).

- An extension can ‘stand on its own’ if it can counterattack all the attacks it receives (defence principle).

- An extension through counterattacking its attacks can defend an attacked argument. In other words, the extension reinstates such an argument and should belong to it (reinstatement principle) [47].

Various acceptability and extension-based semantics have been proposed for abstract argumentation [48,49,50,51]. Given an argumentation framework (A, R) and a set S ⊆ A, we have the following:

- S is conflict-free if (a, b) ∉ R for all a, b ∈ S.

- a ∈ A is acceptable w.r.t. S (or equivalently S defends a) if for each b ∈ A with (b, a) ∈ R, there is c ∈ S with (c, b) ∈ R.

- The characteristic function of (A, R) is defined by F(S) = {a ∈ A | a is acceptable w.r.t. S}.

- S is admissible if S is conflict-free and S ⊆ F(S).

- S is a complete extension of (A, R) if it is conflict-free and a fixed point of F.

- S is the grounded extension of (A, R) if it is the minimal (w.r.t. ⊆) fixed point of F.

- S is a preferred extension of (A, R) if it is a maximal (w.r.t. ⊆) complete extension.

- S is a stable extension of (A, R) if it is conflict-free, and for each a ∉ S, there is b ∈ S with (b, a) ∈ R.
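The definitions above translate almost line by line into code. The following is a minimal Python sketch, not an implementation used in this study: it enumerates all subsets of the arguments, so it is only practical for small frameworks. The function names and the three-argument toy framework (`a` and `b` attack each other, and `b` attacks `c`) are illustrative assumptions.

```python
from itertools import combinations


def conflict_free(S, R):
    """S is conflict-free if no member of S attacks another member of S."""
    return not any((a, b) in R for a in S for b in S)


def defends(S, a, A, R):
    """a is acceptable w.r.t. S: every attacker b of a is attacked by some c in S."""
    return all(any((c, b) in R for c in S) for b in A if (b, a) in R)


def F(S, A, R):
    """Characteristic function: all arguments acceptable w.r.t. S."""
    return frozenset(a for a in A if defends(S, a, A, R))


def subsets(A):
    """All subsets of A, smallest first (exponential; toy frameworks only)."""
    items = sorted(A)
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            yield frozenset(combo)


def admissible(S, A, R):
    return conflict_free(S, R) and S <= F(S, A, R)


def complete_extensions(A, R):
    return [S for S in subsets(A) if conflict_free(S, R) and F(S, A, R) == S]


def grounded_extension(A, R):
    """Least fixed point of F, reached by iterating F from the empty set."""
    S = frozenset()
    while True:
        nxt = F(S, A, R)
        if nxt == S:
            return S
        S = nxt


def preferred_extensions(A, R):
    comp = complete_extensions(A, R)
    return [S for S in comp if not any(S < T for T in comp)]


def stable_extensions(A, R):
    return [S for S in subsets(A)
            if conflict_free(S, R)
            and all(any((b, a) in R for b in S) for a in A - S)]


# Hypothetical toy framework: a <-> b, b -> c.
args = frozenset({"a", "b", "c"})
attacks = {("a", "b"), ("b", "a"), ("b", "c")}
print(grounded_extension(args, attacks))    # empty: nothing is unattacked
print(preferred_extensions(args, attacks))  # {b} and {a, c}
```

In this toy framework the mutual attack leaves the grounded extension empty, while the preferred and stable semantics each return the two competing positions {b} and {a, c}, which illustrates how the choice of semantics changes what counts as accepted.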

Below is an example of a discussion on whether kids should attend school during the summer, which clarifies the formal concepts presented so far. Consider the argumentation framework with arguments A to E and the attacks depicted in Figure 1:

- Argument A: Kids should be in school year-round, even in the summer.

- Argument B: Kids should not have a year-round school and should spend summers off.

- Argument C: Kids should not spend summer off. They should participate in educational activities like summer camp.

- Argument D: Kids should spend part of the summer off and part of the summer in academic programs.

- Argument E: Kids should have summers entirely off, but schools should provide voluntary summer programs for those who need or want them.

In Figure 1, Argument A has a counterargument presented by Argument B, which, taken alone, makes Argument A unacceptable. However, Argument B is attacked by Arguments C and D, Argument E attacks Argument C, and Argument E has no arguments against it. Argument E is therefore accepted, and Argument C, which it attacks, is rejected. Argument D receives no attack either, so it is accepted; because D attacks B, B is rejected and is no longer a reason against A. Hence, Argument A is reinstated, and Arguments A, D, and E should be accepted. This example emphasizes the concept of reinstatement in abstract argumentation [52] and anticipates the issues that exist behind it [53]. These issues led to the design of other semantics not based on the concept of extension [54,55]. Many types of argumentation semantics exist. One is ranking-based semantics, which rank each argument based on the notion of attacks [56]. Ranking-based semantics evaluate arguments based on their strength and acceptability [54]. In 2001, Besnard and Hunter proposed a categorizer function that assigns a value to each argument based on the values of its attackers [57]. Let (A, R) be an AF, and let R⁻(a) = {b ∈ A | (b, a) ∈ R} denote the set of direct attackers of a. The categorizer function Cat: A → (0, 1] is defined as follows:

$$
Cat(a) =
\begin{cases}
1 & \text{if } R^{-}(a) = \emptyset \\
\dfrac{1}{1 + \sum_{b \in R^{-}(a)} Cat(b)} & \text{otherwise}
\end{cases}
$$

The categorizer semantics associate to any (A, R) a ranking ⪰ on A such that, for all a, b ∈ A, a ⪰ b if and only if Cat(a) ≥ Cat(b). This approach assigns strength values to arguments and evaluates them on a graded scale. It provides a more detailed assessment and opens new possibilities for understanding and evaluating arguments, offering a more comprehensive view than traditional extension-based semantics. Broadly, the more attacks an argument receives, the lower its ranking; the fewer attacks, the higher its ranking [54].
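As an illustration, the categorizer can be computed by fixed-point iteration, where each argument a receives the value 1 / (1 + sum of the values of its direct attackers) and unattacked arguments receive 1. The Python sketch below is an assumption-laden example and not the procedure used in this study: the function name, tolerance, and iteration cap are hypothetical, and the attack relation is read from the textual description of the summer-school example (B attacks A, C and D attack B, E attacks C).

```python
def categorizer(A, R, tol=1e-12, max_iter=10_000):
    """Iterate Cat(a) = 1 / (1 + sum of Cat(b) over direct attackers b of a)."""
    if not A:
        return {}
    attackers = {a: [b for b in A if (b, a) in R] for a in A}
    cat = {a: 1.0 for a in A}  # starting point; unattacked arguments stay at 1
    for _ in range(max_iter):
        new = {a: 1.0 / (1.0 + sum(cat[b] for b in attackers[a])) for a in A}
        if max(abs(new[a] - cat[a]) for a in A) < tol:
            return new
        cat = new
    return cat  # best estimate if the tolerance was not reached


# Attack relation assumed from the description of the summer-school example.
A = {"A", "B", "C", "D", "E"}
R = {("B", "A"), ("C", "B"), ("D", "B"), ("E", "C")}

cat = categorizer(A, R)
# Sort by decreasing value, breaking ties alphabetically.
ranking = sorted(A, key=lambda a: (-cat[a], a))
print(ranking)  # ['D', 'E', 'A', 'C', 'B']
```

Under these assumptions, D and E score 1, C scores 1/2, B scores 1/(1 + 1/2 + 1) = 0.4, and A scores 1/1.4 ≈ 0.714. The graded ranking therefore agrees with the extension-based reading (A, D, and E come out on top) while also ordering the rejected arguments C and B.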

2.3. Gaps in the Literature

The DQI relies heavily on labor-intensive manual annotation, requiring expert coders to label speech acts for indicators such as justification or respect [1]. While semi-automated frameworks like DQI 2.0 [3] and AQuA [18] leverage machine learning, they inherit the DQI’s dependency on high-quality labeled datasets and political analysts’ contextual expertise. This dependency limits scalability, particularly in large-scale or asynchronous deliberations such as those on social media, where manual coding becomes impractical. Such constraints highlight the urgent need for computational methods to reduce human bias and automate the structural analysis of argumentation. Traditional DQI metrics adopt static aggregation, averaging scores across speech acts without capturing evolving argument dynamics [17]. This overlooks non-monotonic reasoning processes, such as the retraction of arguments, which are central to real-world deliberation. For instance, the DQI fails to model how new evidence might negate prior claims, a gap underscored by critiques of its rigidity in asynchronous debates [16]. Computational argumentation frameworks, such as Dung’s AAF [8], address this by resolving conflicts dynamically through the modeling of defeasible reasoning, enabling updates to argument acceptability. Such frameworks are often modular, using abstract or structured arguments and defining a notion of attack to model their interactions and form a knowledge base [58]. Such a knowledge base can be elicited, and its conflicts and inconsistencies can be resolved, producing a dialectical status for each argument through defeasible principles that mirror provisional human reasoning. Finally, the accepted arguments might be accrued if a unique inference must be produced.

The DQI’s focus on individual speech acts obscures systemic power imbalances in deliberative settings. As demonstrated in Ireland’s Constitutional Convention, politicians’ arguments received a disproportionate uptake compared to citizens [16], yet DQI metrics lack the tools to quantify such disparities. Similarly, indices like the DTM [4] reveal that the DQI neglects emotional or narrative dimensions that are critical in post-conflict contexts. The AAF’s topic-agnostic conflict detection and resolution offers a formal mechanism to evaluate argument interactions independently of content, mitigating biases tied to participant status or cultural context. The DQI’s reliance on predefined indicators, such as ‘common good’ justifications, assumes universal applicability, yet its Eurocentric design struggles in culturally heterogeneous deliberations [17]. For example, ‘respect’ in online debates may align with platform-specific norms invisible to manual coders [25]. The AAF bypasses this by abstracting arguments into nodes and attacks, decoupling evaluation from semantic content. This formal approach enables cross-context analysis while preserving Habermasian ideals of rationality [10]. Existing deliberative metrics operate in disciplinary silos: the DQI prioritizes procedural norms from political theory, while computational tools like AQuA focus on technical scalability [18]. Few studies bridge these paradigms, leaving a vacuum in frameworks that balance qualitative depth with computational rigor. By integrating the AAF’s ranking-based semantics [54] and extension-based semantics [8] with the DQI’s established indicators, this research proposes a hybrid model automating conflict resolution while retaining contextual nuance, thus advancing deliberative theory into computationally controllable domains. A critical yet underexplored limitation of the DQI is its inability to provide real-time feedback during live deliberations. 
Traditional DQI application is retrospective, analyzing discourse post hoc rather than offering actionable insights to participants or moderators during the process [17]. This gap is particularly acute in digital platforms like Reddit or real-time citizen assemblies, where dynamic adjustments prompting users to justify claims or address counterarguments could enhance deliberative quality iteratively. Recent studies note that static metrics fail to guide in situ improvements, risking stagnation in polarized debates [25]. Integrating the AAF’s computational semantics, which are particularly suited for defeasible reasoning [8], could enable updates to argument acceptability as new evidence emerges. For instance, rebuttals or undercutting attacks could dynamically alter argument rankings, which would be visualized as conflict hot spots for moderators. This non-monotonic logic central to defeasible reasoning allows conclusions to be retracted or revised during deliberation and could be used in real-world dialectics [26].
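The non-monotonic behaviour described above can be sketched in a few lines of Python by recomputing the grounded extension each time a new argument enters the discussion. This is only an illustrative sketch under stated assumptions: the function names, the event format (an argument name plus the list of arguments it attacks), and the three-step scenario are hypothetical and are not part of the proposed tool.

```python
def grounded(arguments, attacks):
    """Grounded extension: iterate the characteristic function from the empty set."""
    accepted = set()
    while True:
        defended = {
            a for a in arguments
            if all(any((c, b) in attacks for c in accepted)
                   for b in arguments if (b, a) in attacks)
        }
        if defended == accepted:
            return accepted
        accepted = defended


def replay(events):
    """Re-evaluate acceptability after each (argument, targets) event."""
    arguments, attacks, history = set(), set(), []
    for name, targets in events:
        arguments.add(name)
        attacks.update((name, target) for target in targets)
        history.append(grounded(arguments, attacks))
    return history


# Hypothetical live discussion: A is stated, B rebuts A, then C undercuts B.
history = replay([("A", []), ("B", ["A"]), ("C", ["B"])])
print(history)  # A accepted, then retracted, then reinstated alongside C
```

In this scenario A is accepted at first, retracted once B arrives, and reinstated when C defeats B, which is the kind of status change that a moderator-facing view could surface during a live deliberation.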

3. Design and Methods

An empirical study was designed to integrate abstract argumentation with the Discourse Quality Index (DQI). In particular, the hypothesis tested is that integrating AAF with the DQI supports the scalability and automation of deliberative analysis, reducing reliance on only qualitative, subjective interpretation. This hypothesis addresses the limitations in deliberative analysis, such as reliance on human coders [1] and scaling quality measures to larger datasets [9], introducing a more objective and quantitative method. We conducted a pilot study combining the AAF’s framework with the DQI’s contextual depth to investigate the feasibility of creating a robust and scalable evaluation framework. In detail, computational argumentation theory, with its quantitative abstract version described in the previous section, can be complemented by the qualitative discourse quality index in discriminating arguments built by multiple human reasoners. The topic of abortion was selected as the focus of this pilot study due to its highly polarized and ethically complex nature, making it an ideal case for evaluating deliberative quality in contentious discussions. Deliberative processes on abortion often involve deeply conflicting viewpoints, requiring participants to justify, counter, and defend arguments with varying degrees of reasoning complexity. Additionally, abortion provides a rich and diverse set of arguments for analysis. Furthermore, debates surrounding abortion are not only philosophical and ethical but also political and legal, ensuring that the deliberation process encapsulates a broad spectrum of argumentative structures. By selecting a topic that is socially relevant and widely debated, this study ensures that the deliberation captured in the pilot study reflects real-world argumentative complexity, thereby offering a strong empirical basis for testing the effectiveness of the AAF in measuring deliberative quality. 
The following section introduces a pilot study built over different phases. It describes the use of the DQI in conjunction with the computational AAF presented by Dung in his seminal work [8].

3.1. Definition of Pilot Study

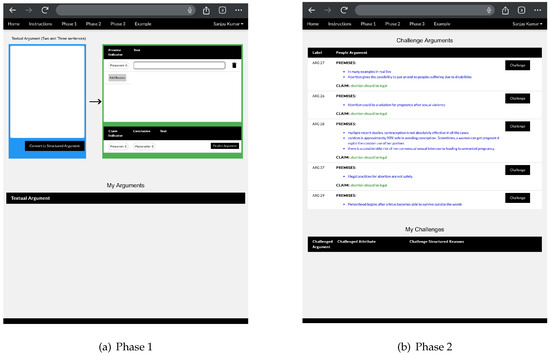

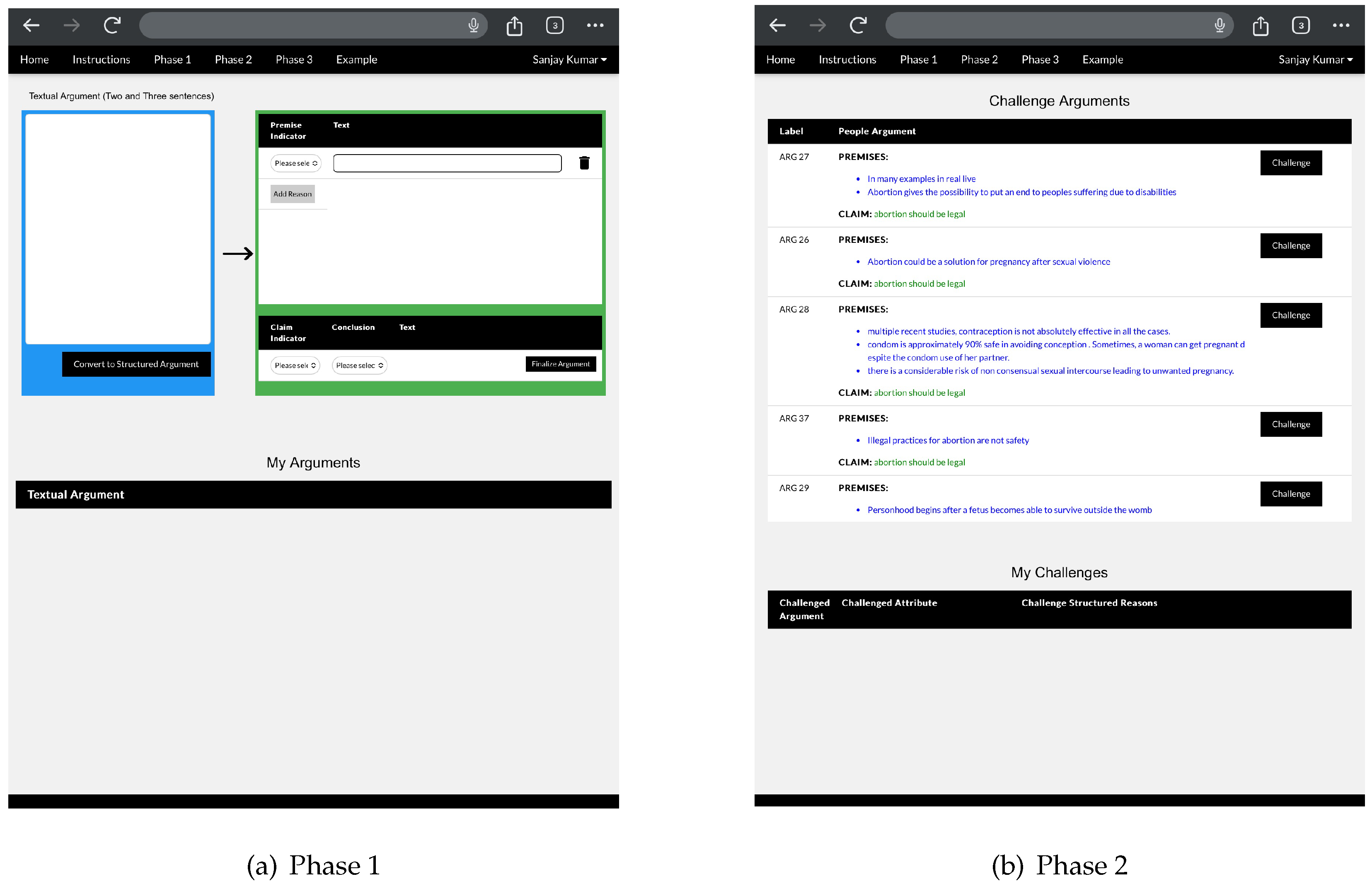

Our approach draws on the discourse ethics proposed by Habermas, highlighting the democratic essence of deliberation, in which each participant has an equal opportunity to contribute and justify their perspectives while respecting opposing viewpoints [10]. To create a structured, scalable, and user-friendly approach to deliberation, a custom web-based interface tool was developed for this study (Figure A1 and Figure A2 in Appendix A). Traditional deliberation analysis relies on manually coded speech transcripts, which are prone to subjectivity, time constraints, and inefficiencies. The web-based tool was designed to mitigate these challenges by allowing participants to submit, challenge, and respond to arguments in a time-constrained environment, ensuring precise tracking of argumentation structures. This interface facilitated automatic data structuring, where each argument and counterargument was systematically stored, making it easier to apply AAF-based computational analysis. Additionally, the tool enabled real-time visualization of each argument and its structure, helping to represent attacks and counterarguments dynamically, which would be impractical in a purely manual setting. The pilot study follows a sequential approach with phases, as suggested in [17] (p. 87). Additionally, as indicated in [59], splitting deliberations into several phases is ideal. That work provides a formal model of deliberation dialogues with eight stages: open, inform, propose, consider, revise, recommend, confirm, and close. Nevertheless, it does not provide a formal argument evaluation. Another formal model outlines a democratic policy deliberation process with two phases. The first phase establishes acceptable proposal criteria, while the second phase generates and assesses proposals based on these criteria [60]. These criteria and proposals are evaluated using preferred semantics [8].
This pilot study follows a sequential deliberation process, where participants first constructed their arguments, responded to counterarguments, and finally challenged the counterarguments. This structured approach ensured that the deliberation followed a logical progression, allowing for a more precise evaluation of argument strength, counterargument integration, and overall deliberative quality. By employing a controlled yet dynamic deliberative setting, the study tested the viability of integrating AAF ranking-based semantics with the DQI, demonstrating its potential to enhance deliberative assessment by providing an automated, scalable, and structured argumentation analysis.

An ethics application for the pilot study was submitted to the Research Ethics Committee (REC) of Dublin City University, Ireland, and was approved (DCU-FHSS-2022-031). Participants were recruited by posting study advertisements on university walls and mailing lists. While it is best in most cases if research participants are genuine volunteers, this study is rather long, and finding volunteers can be difficult. For this reason, a financial reward in the form of vouchers was set up as an incentive to attract participants. The REC approved a reward of 40 euros for the pilot study (between 3 and 4 h). A consent form and a study information document, including specific instructions, were emailed to each participant two days before the pilot study. On the day of the study, a live demo of the web-based interface was first given so that participants could clear up any doubts. Participants were all given the same tablet model (Samsung Galaxy Tab A7 10.4 [9.75 × 6.20 × 0.28 in]) to interact with the designed web interface. A total of 13 participants took part in the study, with a balanced male-to-female ratio of 7:6. The group included undergraduates, graduates, and academic experts. They generated over 200 arguments, of which 170 were deemed relevant for analysis, while 30 were excluded due to their brevity or repetition.

3.2. Definition of a Computational Argumentation Model

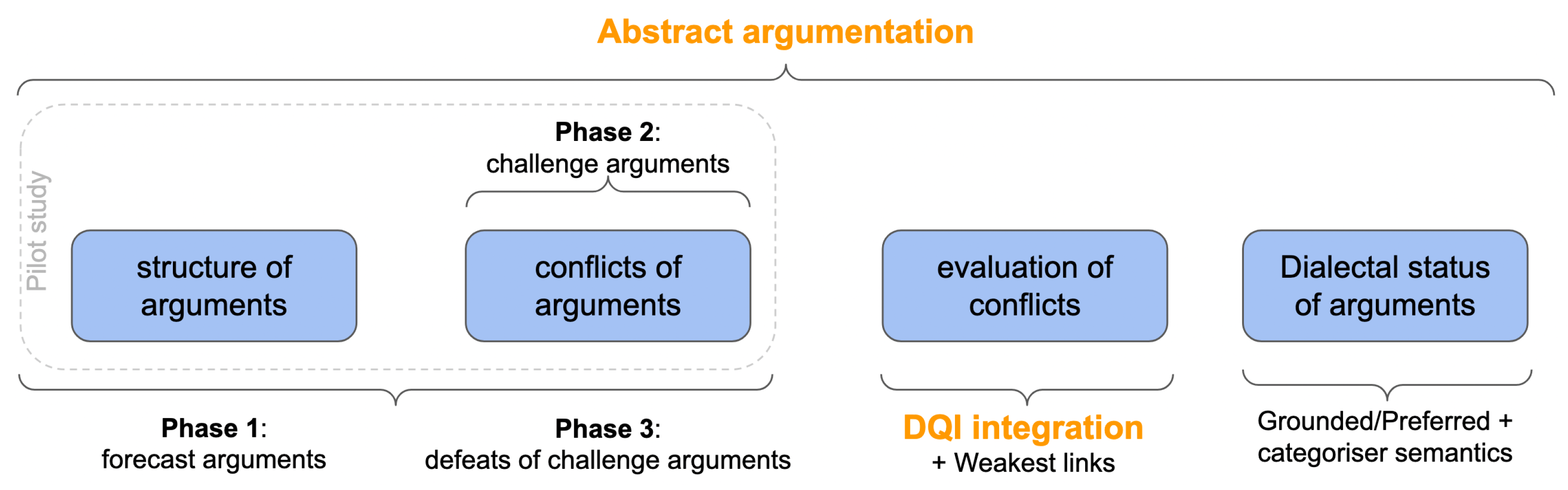

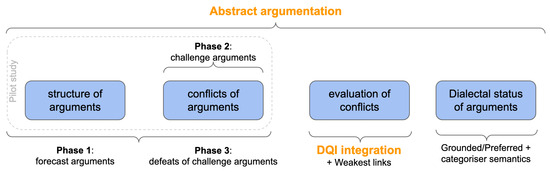

In line with argumentation-based systems that often feature multilayers [39,61,62], this research presents a modular framework, as depicted in Figure 2.

Figure 2.

A modular argument-based process that integrates computational abstract argumentation and the Discourse Quality Index.

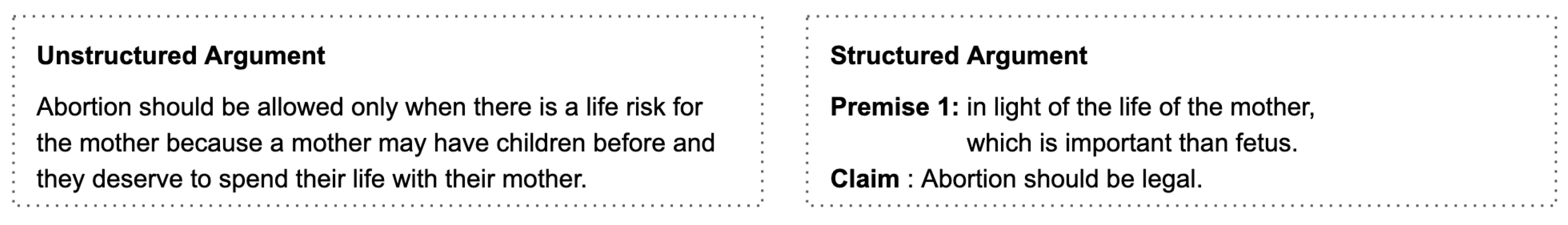

3.3. Pilot Study Phase 1: Definition of the Structure of Arguments

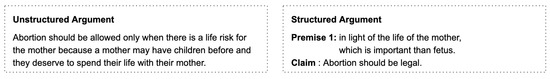

Usually, an argument comprises one claim and one or more premises that support it. Formally, this structure can be referred to as a forecast argument, and, as explained in Section 2.2, it is a tentative defeasible inference that links premises to claims. In the real world, however, arguments rarely follow this format: a claim and its premises are typically written or spoken together. Hence, existing research focuses on extracting claims and premises from natural language text, finding their relations, and giving an argument a suitable structure for further analysis [63]. An example of an unstructured argument versus a structured one can be seen in Figure 3. Natural language arguments help us understand their subjective meaning and context, facilitating a human assessment of their DQI value. Most research studies focus on the dimensions and factors of deliberation quality but not on the structure of arguments or dialogues. For these reasons, the web interface was developed to let participants present their natural (unstructured) arguments. However, to prepare arguments for subsequent computations, a tool was added to convert such natural arguments into structured ones. In detail, participants were asked to perform the conversion using lexical discourse indicators such as ‘because’, ‘although’, and ‘however’, which were used to label the arguments in the persuasive essays dataset [64] and which help relate premises to their claims. In a nutshell, unstructured arguments are raw and natural, allowing participants to express them subjectively. In contrast, structured arguments are built with existing linguistic indicators that facilitate the identification of premises and provide a clear link to one of the defined claims; in this case, whether abortion should be legal or not. Participants could create as many forecast arguments as they wished.

Figure 3.

An illustration of unstructured argument (left) and its structured version (right).

3.4. Pilot Study Phase 2: Definition of Challenge Arguments

The second phase is designed to create challenges, which help reveal which arguments are in conflict. These challenge arguments are referred to as mitigating arguments. As mentioned in Section 2.2, mitigating arguments question the validity of a forecast argument, that is, whether its premises or conclusion are valid and whether any uncertainties appear. They therefore help to identify conflicts between arguments. Conflicts are sometimes referred to as attacks, challenges, or counterarguments. This pilot study considers only undercutting attacks, as explained in Section 2.2. In Figure A1 (Appendix A), part b indicates that participants can challenge any forecast argument. In detail, the forecast arguments produced in Phase 1 are presented in a randomly ordered list so that participants do not all receive the same initial set, mitigating potential unknown biases. In the DQI, the counterargument indicator represents attacks: each argument or dialogue is assigned a value based on how the participant regards the argument they are countering. To determine this value, experts must examine the context and assign a code according to the DQI approach. The AAF has a significant advantage here. In the example of Figure 1, Argument B attacks Argument A, and this relation is represented without reference to context or topic: visually, it is clear that B has challenged A. Our deliberation process tries to minimize the time field experts must spend identifying which participant challenged which argument. Additionally, it allows participants to challenge as many arguments as they want.

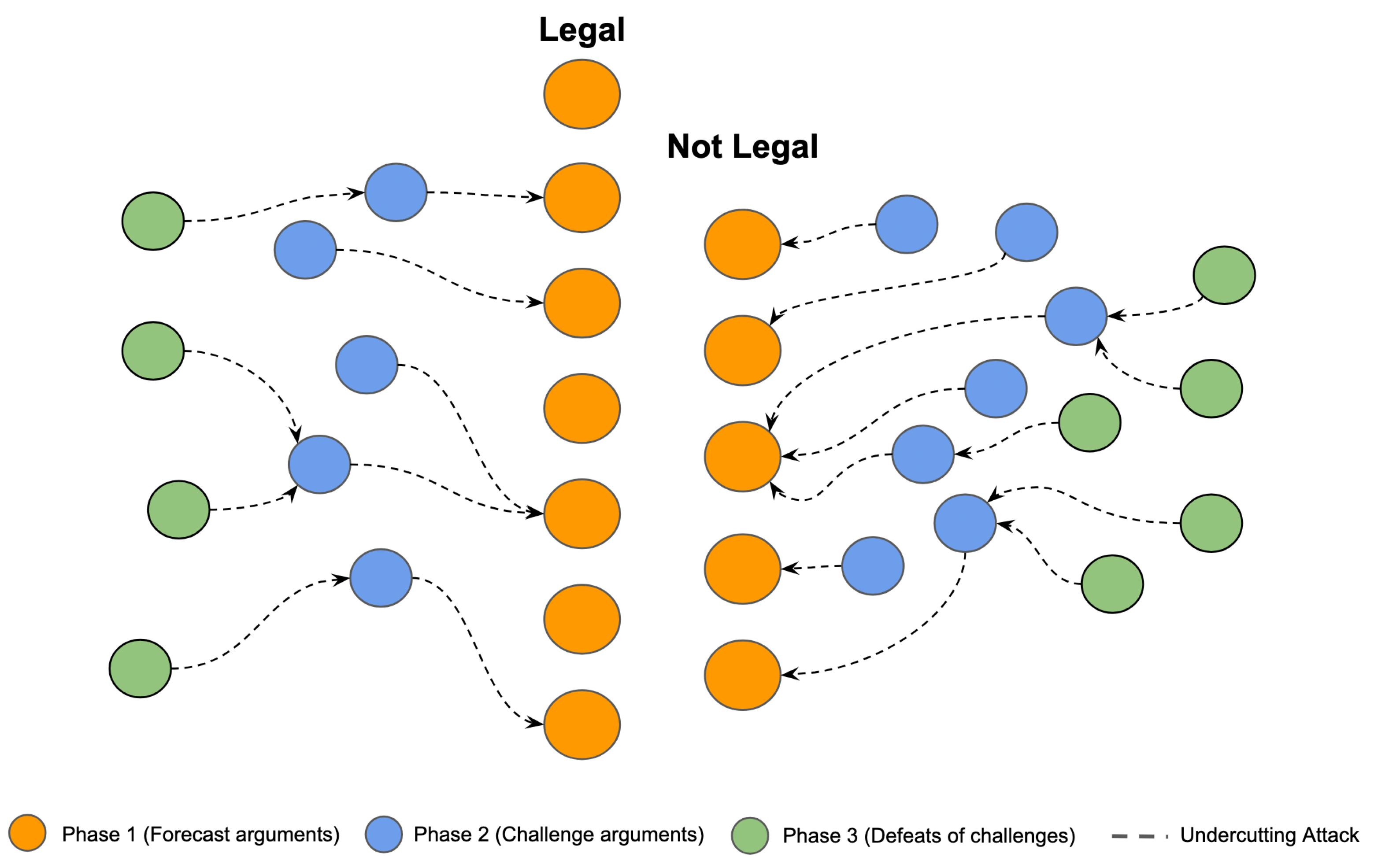

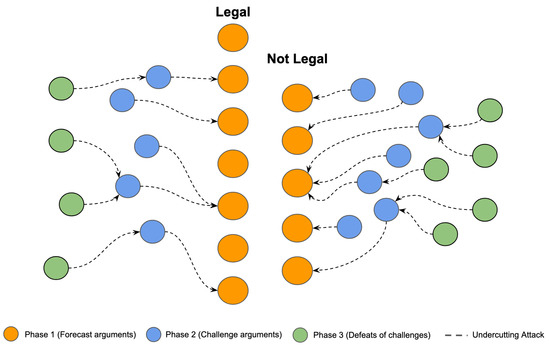

3.5. Pilot Study Phase 3: Definition of Defeats for Challenge Arguments

In the first two phases of the study, participants focused on generating arguments (forecast) and counterarguments (mitigating), essentially establishing a structured dialogue format. In the third phase, the participants were tasked with defeating the counterarguments presented in Phase 2, which were again presented in a random order. This process of argumentation and refutation was limited to one round to avoid an endless cycle of challenges. In this phase, participants could undermine the validity of counterarguments by nullifying, countering, or questioning them. This approach created a dialogical argumentation framework that could be evaluated using abstract argumentation semantics, as depicted in Figure 4. Importantly, this deliberation process allowed participants to refute counterarguments against others’ arguments, fostering a more collaborative and dynamic discussion. The data collected throughout these three phases included structured and unstructured textual arguments and an argumentation graph, providing a multifaceted view of the deliberation process. This study primarily focused on how Abstract Argumentation Frameworks (AAFs) can streamline the evaluation of deliberation quality while adhering to the fundamental dimensions of deliberation.

Figure 4.

Visual illustration of an Abstract Argumentation Framework (AAF) across three pilot study phases with the types of arguments and attacks.

3.6. Computation of Metrics for Deliberation Quality and Coding Procedure

As discussed in Section 2.3, the DQI helps measure the quality of a deliberation. This research study primarily focuses on the original DQI and its six indicators: participation, justification, content of justification, respect, counterargument, and constructive politics. Table 1 depicts the rubric for annotators with the rules to calculate each of these six indicators, with each indicator value ranging from 0 to 3 [65]. The DQI value of an argument is calculated by summing its six numerical indicators. The DQI of a dialogue is the average of its arguments’ DQI scores. For this pilot study, arguments were annotated using the DQI guidelines developed in [1]. These guidelines have been well established in the field for the last two decades and effectively evaluate the deliberation quality of any deliberation dataset. The pilot study consisted of three phases, moderated by the first author so that the deliberation process ran smoothly. The first author was also in charge of coding the data collected from participants. The DQI scores were calculated individually for each argument produced over the three phases. The chains of arguments, starting from forecast arguments (Phase 1) and continuing through challenge arguments (Phase 2) to defeats of challenges (Phase 3), can be seen as a multiparty dialogue. Phase 1 arguments represent the start of the dialogue, while Phase 3 arguments indicate its end.
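The scoring rule described above (sum the six indicator codes per argument, then average over the arguments of a dialogue) can be sketched as follows. This is a minimal illustration, not the coding tool used in the study; the indicator key names, the function names, and the example codes are hypothetical.

```python
from statistics import mean

# Hypothetical key names for the six DQI indicators named in the text.
INDICATORS = (
    "participation",
    "justification",
    "content_of_justification",
    "respect",
    "counterargument",
    "constructive_politics",
)


def argument_dqi(codes: dict[str, int]) -> int:
    """Sum the six indicator codes assigned to one argument."""
    missing = [name for name in INDICATORS if name not in codes]
    if missing:
        raise ValueError(f"missing indicator codes: {missing}")
    return sum(codes[name] for name in INDICATORS)


def dialogue_dqi(arguments: list[dict[str, int]]) -> float:
    """Average the per-argument DQI scores of one dialogue."""
    return mean(argument_dqi(codes) for codes in arguments)


# Illustrative (made-up) codes for a forecast argument and a challenge.
forecast = dict(zip(INDICATORS, (1, 2, 1, 1, 0, 0)))
challenge = dict(zip(INDICATORS, (1, 3, 2, 2, 2, 1)))
print(argument_dqi(forecast), argument_dqi(challenge))
print(dialogue_dqi([forecast, challenge]))
```

Keeping the per-argument score separate from the dialogue average mirrors the two levels at which the text reports DQI values.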

Table 1.

Discourse Quality Index (DQI) codebook for annotating arguments [1].

3.7. Dialectical Status of Arguments and the Weakest Link

This phase was built to evaluate the arguments formed in the previous three sequential phases. Such arguments are part of an overall formal argumentation framework (graph) in Dung’s terms [8]. Analyzing an argumentation graph with semantics can lead to more effective decision making independent of the topic and its context. In detail, this study primarily focused on the claims of forecast arguments to find which prominent point of view emerges: whether abortion should be legal or not. To find a set of acceptable arguments in the argumentation graph, grounded and preferred semantics [8] were adopted among all the arguments produced over the three phases. This will produce one or more conflict-free extensions (set of arguments). While this extension-based approach can successfully identify acceptable arguments, it cannot assign a strength to each one. Consequently, ranking-based semantics [54] were adopted for this purpose. They involve a computational mechanism to evaluate argument strength dynamically by assigning numerical values rather than binary acceptability. Unlike extension-based semantics, which classify arguments as acceptable or not, ranking-based approaches enable a graded differentiation between arguments. This method can support a more nuanced understanding of deliberative discourse by evaluating the presence of arguments and their relative influence within the discussion. Given that deliberative processes include evolving and often conflicting arguments, ranking semantics can enhance the precision of argument evaluation, ensuring a more scalable, objective, and automated approach to deliberative quality assessment. The justification for using ranking semantics also aligns directly with the research hypothesis that integrating the AAF with the DQI improves the scalability and automation of deliberative analysis. 
This method captures the hierarchical structure of argumentation, where stronger arguments sustain fewer successful attacks, while weaker arguments are refuted more frequently. By ranking arguments based on their interactions and levels of attack, ranking-based semantics provide a more detailed and dynamic evaluation than static DQI indicators. This approach can reduce dependence on human coders, mitigating biases that arise from interpretative subjectivity. Additionally, ranking semantics introduce context-independent evaluation, making them particularly useful in large-scale online deliberations, where manual annotation is impractical. By integrating the AAF with the DQI, the study proposes a computationally robust method that overcomes traditional deliberative analysis limitations and enables more transparent and systematic argument evaluation. In detail, DQI values have been used as a weight indicator in ranking-based semantics to remove the weakest attacks (links) from the argumentative graph. For example, if Argument A has been assigned a DQI score of 12 and a second Argument B has been assigned a DQI score of 10, then the attack from A to B is preserved, while B to A is removed. This strategy helps refine an argumentation framework by integrating the DQI, which can act as a weight to remove the weakest attacks and, thus, the arguments they start from. Also, it helps shape dialogues that can help improve the deliberation quality and help sustain democracy [66].
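As a rough sketch of the two steps described above, the code below first drops every attack whose source has a lower DQI score than its target, then ranks the remaining graph with the h-categorizer function, Cat(a) = 1 / (1 + sum of Cat(b) over the attackers b of a), computed by fixed-point iteration. The function names, the tie rule (attacks between equally scored arguments are kept), and the tolerance are assumptions made for illustration; the study's actual implementation is not shown in the text.

```python
def prune_weak_attacks(attacks, dqi):
    """Keep an attack only if its source scores at least as high as its target.

    Assumption: ties are kept, since the text does not say how they are handled.
    """
    return {(src, dst) for src, dst in attacks if dqi[src] >= dqi[dst]}


def categorizer(arguments, attacks, tol=1e-9, max_iter=1000):
    """Approximate h-categorizer strengths by fixed-point iteration."""
    if not arguments:
        return {}
    attackers = {a: [] for a in arguments}
    for src, dst in attacks:
        attackers[dst].append(src)
    strength = {a: 1.0 for a in arguments}
    for _ in range(max_iter):
        updated = {
            a: 1.0 / (1.0 + sum(strength[b] for b in attackers[a]))
            for a in arguments
        }
        if max(abs(updated[a] - strength[a]) for a in arguments) < tol:
            return updated
        strength = updated
    return strength


# The example from the text: A (DQI 12) and B (DQI 10) attack each other.
attacks = {("A", "B"), ("B", "A")}
dqi = {"A": 12, "B": 10}
pruned = prune_weak_attacks(attacks, dqi)
ranks = categorizer(["A", "B"], pruned)
print(pruned)  # only the attack from A to B survives
print(ranks)   # A is unattacked (1.0); B has one full-strength attacker (0.5)
```

Pruning before ranking means that an argument with a low DQI score cannot lower the rank of a better-scored argument, which is one reading of the weakest-link strategy.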

4. Results

Participants produced 200 arguments across the three phases, but only 170 were deemed relevant to the topic under consideration (abortion) and retained. The remaining 30 arguments were discarded because they were too short (six words or fewer) or repeated. Table 2 lists, for each phase, the number of arguments produced, the number of participants, the average number of arguments per participant and its standard deviation, the average word count per argument and its standard deviation, whether the arguments supported the legal claim (or not), and the duration of the phase.
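The exclusion rule above can be illustrated with a small filter. This is only a sketch: the text does not say how repetition was judged, so exact matching after lower-casing and whitespace normalization is an assumption, and the function name and example sentences are hypothetical.

```python
def filter_arguments(texts, min_words=7):
    """Drop arguments of six words or fewer and repeated arguments.

    Assumption: two arguments count as repeated when they are identical
    after lower-casing and collapsing whitespace.
    """
    seen = set()
    kept = []
    for text in texts:
        normalized = " ".join(text.lower().split())
        if len(normalized.split()) < min_words:
            continue  # too short (six words or fewer)
        if normalized in seen:
            continue  # repetition of an earlier argument
        seen.add(normalized)
        kept.append(text)
    return kept


# Made-up inputs: one short argument, one valid argument, one repetition.
raw = [
    "Abortion is simply wrong.",
    "Abortion should be legal because bodily autonomy is a basic right.",
    "abortion should be legal because bodily  autonomy is a basic right.",
]
print(filter_arguments(raw))
```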

Table 2.

Descriptive statistics for arguments grouped by phase.

These results show that the highest number of arguments (76) was recorded in Phase 2, where participants actively challenged the initial forecast arguments presented in Phase 1. The data indicate that the structured deliberation phases influenced how actively participants contributed, as evidenced by the increase in the average number of arguments per participant from Phase 1 (4.08) to Phase 2 (5.85); with 13 participants, the latter figure corresponds to the 76 arguments recorded in Phase 2. This pattern aligns with existing deliberation research, suggesting that participants engage more actively in counterargumentation than in initial argument formulation [1,3]. Additionally, the word count per argument decreased over the phases, suggesting possible convergence.

Table 3 shows an overview of the DQI scores for each phase of the deliberation process. These data do not reflect the time spent on the qualitative analysis: coding each argument and understanding its context and relation to the topic of abortion was a time-consuming process that took more than four weeks. In the ‘participation’ category, since there were no interruptions in any phase, all arguments received code 1, highlighting equal participation in every deliberation stage. Tables A1–A5 (Appendix A) list examples of arguments coded differently for the other DQI categories (‘level of justification’, ‘content of justification’, ‘respect’, ‘counterarguments’, and ‘constructive politics’).

Table 3.

DQI scores descriptive statistics grouped by phase.

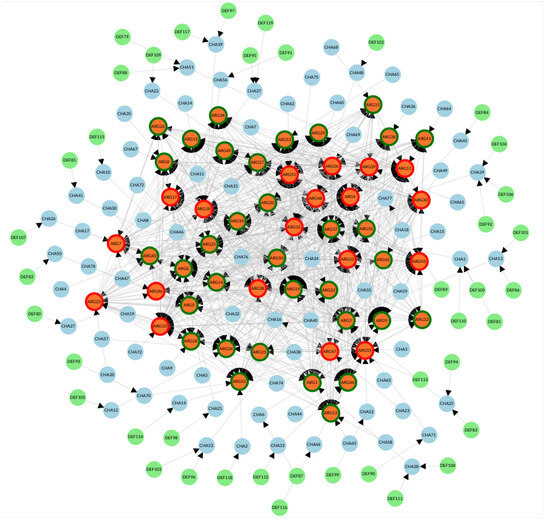

Phase 1 produced arguments with low DQI values, while Phases 2 and 3 both produced the arguments with the highest DQI values. The reason is that, during data coding, a common trend emerged in Phase 1 whereby arguments did not respond to any other argument, so their code for counterarguments was zero. Additionally, the average DQI value and its standard deviation were significantly higher for Phase 3 than for the previous two phases. This is explained by the fact that, by Phase 3, participants had already seen the initial random set of forecast arguments (from Phase 1) and had counterattacked them (in Phase 2), refining their knowledge of the topic. This led to the composition of new counterarguments with higher precision and justificatory content. Once DQI scores had been produced for each argument and each phase, abstract argumentation theory was applied, and an Abstract Argumentation Framework (AAF) was produced (Figure 5).

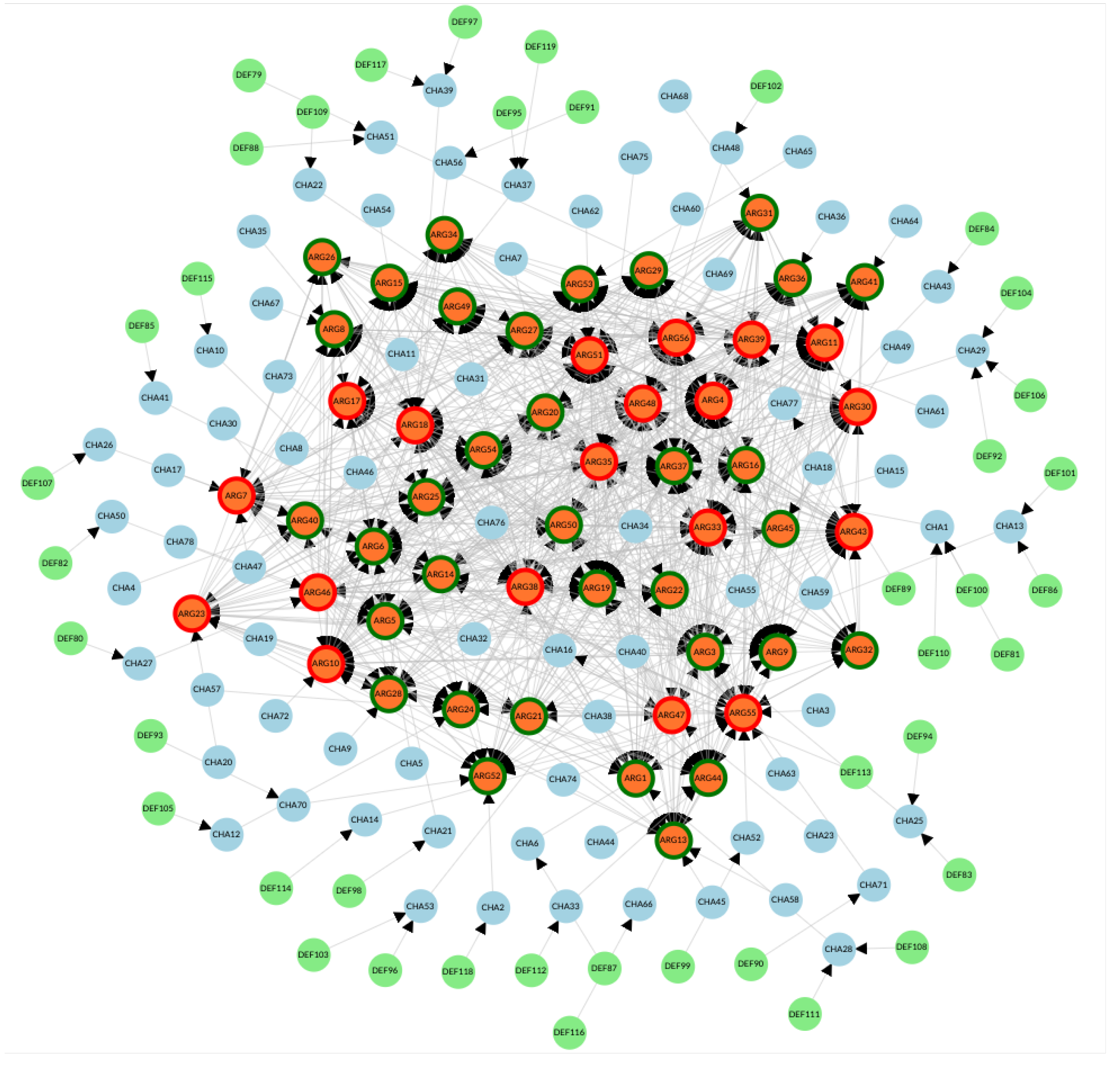

Figure 5.

Argumentation graph containing all the arguments produced in the pilot study, with color-coded nodes representing different arguments. The border on the orange node represents claims of forecast arguments. Blue nodes represent challenge arguments, while green nodes are defeats of challenge arguments.

The grounded, preferred, and categorizer semantics were executed at this stage, as described in Section 2.2. In particular, a unique grounded extension was produced. This is a sceptical point of view containing all the accepted arguments, starting from the unattacked ones, regardless of their claim (legality of abortion or not). It coincides with the single computed preferred extension, which contains 13 accepted arguments (Table 4). This extension represents a maximal conflict-free point of view on abortion, in which the arguments succeeded in attacking all their attackers. In detail, the arguments of this set are those presented in Table A8 (Appendix A), and, strikingly, they all support the legality of abortion as the prominent claim. These arguments contained an average of 58 words, and the categorizer semantics and DQI scores assigned them relatively high rank values. Table A6 (Appendix A) lists some dialogues containing chains of arguments (including forecasts, challenges, and defeats). The forecast arguments in such dialogues (those containing a claim) were ranked low by the categorizer semantics and were also assigned lower DQI scores. In contrast, Table A7 (Appendix A) lists some dialogues containing arguments with high DQI scores and categorizer ranks. Notably, these are single forecast arguments and not chains containing challenges or defeats of challenges. Hence, intuitively, they were the strongest arguments.
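For readers unfamiliar with the grounded semantics, the following sketch computes a grounded extension by repeatedly accepting arguments whose attackers have all been defeated and then marking everything the accepted arguments attack as defeated. The three-argument chain is a made-up stand-in for one forecast, one challenge, and one defeat of a challenge; it is not data from the pilot study, and the function name is hypothetical.

```python
def grounded_extension(arguments, attacks):
    """Return the grounded extension of the framework (arguments, attacks)."""
    attackers = {a: set() for a in arguments}
    for src, dst in attacks:
        attackers[dst].add(src)
    accepted, defeated = set(), set()
    changed = True
    while changed:
        changed = False
        # Accept every argument whose attackers are all already defeated
        # (unattacked arguments qualify immediately).
        for a in arguments:
            if a not in accepted and a not in defeated and attackers[a] <= defeated:
                accepted.add(a)
                changed = True
        # Any argument attacked by an accepted argument is defeated.
        for src, dst in attacks:
            if src in accepted and dst not in defeated:
                defeated.add(dst)
                changed = True
    return accepted


# Hypothetical chain: defeat D attacks challenge C, which attacks forecast F.
arguments = ["F", "C", "D"]
attacks = {("C", "F"), ("D", "C")}
print(sorted(grounded_extension(arguments, attacks)))
```

In this chain the unattacked defeat D is accepted first, which defeats the challenge C and thereby reinstates the forecast F. When a graph contains no attack cycles, as in this example, the grounded extension is also the only preferred extension.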

Table 4.

Descriptive statistics for the thirteen arguments accepted by the grounded/preferred semantics.

On the one hand, although extension-based semantics, including the grounded and preferred semantics, abstracted away the relative differences between arguments, they allowed for the identification of the most prominent point of view: abortion should be legal. On the other hand, integrating ranking-based semantics and DQI scores was essential to combine the quantitative, automatic identification of stronger arguments with the penalization of qualitatively weaker ones. The categorizer semantics made the evaluation process more efficient, contributing to theoretical and practical advancements in deliberation analysis. As this study’s findings indicate, a ranking-based evaluation of arguments can enhance deliberation quality assessment by providing a systematic approach to measuring argument strength and influence. This structured ranking process facilitates decision making by identifying the most persuasive and resilient arguments, reducing the ambiguity inherent in subjective manual coding. Moreover, this method enables deliberative analysis to adapt to evolving arguments, providing a more holistic view of discourse quality. By integrating computational argumentation techniques with established deliberative measures, this research presents a framework that improves the scalability, reliability, and objectivity of discourse quality evaluation. These contributions emphasize the value of bridging computational models with deliberative democratic theory, reinforcing the potential of the AAF and ranking semantics as tools for the future of deliberation analysis.

5. Discussion

The study’s findings support the growing consensus that computational tools can substantially complement established normative frameworks such as the DQI in analyzing deliberative quality [1,3,10]. Specifically, computational and abstract argumentation’s ability to model non-monotonic reasoning where arguments may be refined or retracted as new evidence surfaces enhances our understanding of how ‘real-world’ discussions evolve (see also [3,8]). Traditional DQI analysis, while illuminating key indicators such as justification and respect, often involves substantial human annotation. This can limit scalability, as coding hundreds or thousands of arguments for large-scale debates or online forums is resource-intensive [1,2]. By employing an AAF to detect conflicts automatically (via ‘arguments’ and ‘attacks’), researchers can expedite the identification of central arguments that are either most vigorously challenged or most resilient over multiple rounds of rebuttals. This allows for reducing the argument set, which can then help conduct a faster qualitative analysis, for example, using DQI-based indicators. Moreover, the pilot showed that combining the AAF with the DQI allowed for a more transparent exploration of polarized discussions on ethically sensitive issues like abortion. The iterative nature of the pilot’s three-phase design permitted a nuanced view of how arguments progressed from initial claims to multilayered debates, mirroring findings by Mockler and Bächtiger et al. on how reiterations of discourse can enrich deliberative depth [16,17]. Many participants who began Phase 1 with relatively superficial or unilateral positions ended Phase 3 by acknowledging at least some validity in opposing viewpoints—a transformation that was readily observed in both the DQI scoring (improved justification and constructive politics) and the AAF’s mapping of fewer successful ‘attacks’ on these arguments.

The multiphase deliberation system implemented in this research is in line with the Structured Dialogical Design (SDD) consensus-building process [67,68]. Both aim to consolidate varied viewpoints into a clear, analytical, and rational representation that can support strategic decision making and planning. It is also aligned with the Issue-based Information System (IBIS), an argumentation-based approach to clarifying wicked problems, which are difficult to solve due to the degree of inconsistency and incompleteness of the available information [69,70].

In fact, the proposed argument-based solution, via its computational semantics, tries to resolve contradictions among pieces of evidence in a dialogical structure [71], and it is open to multiple possible solutions to the underlying deliberation topics.

However, important limitations remain. First, the pilot study was the first of its kind, and its sample size (thirteen participants) restricts the generalizability of the results. Future research involving bigger and more diverse populations must validate the observed patterns and potential scalability [2,20]. Second, the requirement to structure arguments via lexical indicators (such as ‘however’ or ‘because’) may have constrained natural expression and deterred spontaneous interchange, pointing to a need for more flexible or automated extraction of argumentative structures via natural language processing, which is an avenue recognized by Fournier-Tombs and MacKenzie [3]. Third, while the DQI codebook covers critical dimensions of deliberation quality, it may not capture emerging elements such as affective or emotional framing, which can significantly shape real-world debates (as suggested in [4,18]). Despite these limitations, the combined DQI–AAF method demonstrates notable promise in producing a more holistic account of deliberation. By bridging a well-established normative tool (DQI) with a formal, topic-agnostic model of argument relations (AAF), the pilot study offers preliminary evidence that the synergy between qualitative discourse indicators and computational structural analysis can improve the consistency and richness of deliberative assessments. This resonates with the call from Bächtiger et al. [17] to explore interdisciplinary solutions for advancing deliberation studies, especially as online and hybrid forums become increasingly central to political decision making. The pilot results reinforce the value of uniting traditional, context-aware evaluation methods like the DQI with computational argument mapping. 
Such hybrid approaches could significantly enhance our ability to evaluate large-scale deliberations, identify particularly robust or problematic points of contention, and ultimately guide facilitators and policymakers toward fostering more informed, respectful, and solution-oriented public discourse.

6. Conclusions

This study demonstrates that converging the DQI with the AAF enhances deliberation research’s analytical depth and practical scalability. By systematically mapping arguments as nodes and attacks as edges, the AAF approach captures debates’ evolving, non-monotonic nature, a process often overlooked in static, manually coded evaluations. Meanwhile, the DQI-established indicators offer a rigorous qualitative lens, shedding light on key discursive dimensions such as justification, respect, and counterarguments. Taken together, these tools reveal not only which arguments withstand rebuttal but also how the norms of deliberative democracy are enacted or strained under complex, ethically charged discussions, as demonstrated in the pilot case of abortion.

Theoretically, this research bridges computational argumentation and deliberative democracy, advancing frameworks for analyzing evolving discourse [8,17]. Practically, policymakers can leverage AAF–DQI integration to identify robust arguments in contentious debates, fostering inclusive decision making. For instance, visualizing argument graphs could guide moderators in prioritizing rebuttals or highlighting consensus-building proposals. In summary, this study underscores the potential of interdisciplinary methods to address longstanding challenges in deliberation analysis. By uniting AAF computational rigor with DQI normative depth, we pave the way for scalable, objective, and context-sensitive tools to evaluate and ultimately enhance the quality of democratic discourse.

Building on these findings and limitations, future studies should aim to expand and refine the DQI–AAF across multiple fronts. First, investigating larger, more heterogeneous participant samples and a broader array of deliberation topics would test the generalizability and resilience of the proposed hybrid framework. Second, integrating advanced natural language processing could automatically detect discourse indicators and map argument structures in near real time, thus reducing human coding workloads. Third, incorporating novel metrics such as affective or narrative elements would help capture the emotional underpinnings of debate, broadening the scope beyond purely rational argumentation. Finally, longitudinal evaluations of live deliberations, where participants receive immediate feedback on their argument quality or structural positioning within the discussion, could significantly advance the practical utility of this approach. Implementing these enhancements allows the DQI and AAF to evolve into a robust, adaptable instrument for measuring and improving deliberative quality in academic and policy-focused arenas.

Author Contributions

Conceptualization, S.K., J.S. and L.L.; methodology, S.K. and L.L.; software, S.K.; validation, S.K. and L.L.; formal analysis, S.K. and L.L.; investigation, S.K. and L.L.; resources, J.S. and L.L.; data curation, S.K.; writing—original draft preparation, S.K. and L.L.; writing—review and editing, S.K., J.S. and L.L.; visualization, S.K.; supervision, J.S. and L.L.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was conducted with the financial support of the Research Ireland Centre for Research Training in Digitally Enhanced Reality (d-real) under Grant No. 18/CRT/6224. For the purpose of Open Access, the author has applied for a CC BY public copyright licence to any Author Accepted Manuscript version arising from this submission.

Data Availability Statement

The materials supporting this study’s findings are available upon request from the corresponding author, Sanjay K. The data are not publicly available due to restrictions, e.g., their containing information that could compromise the privacy of research participants.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

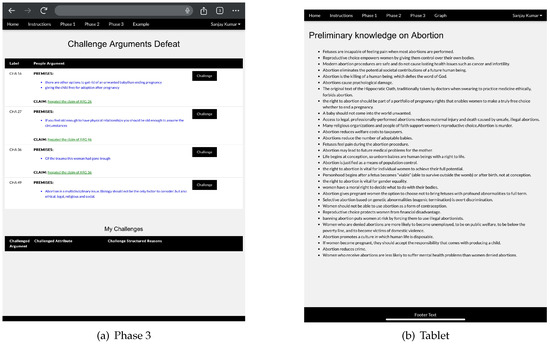

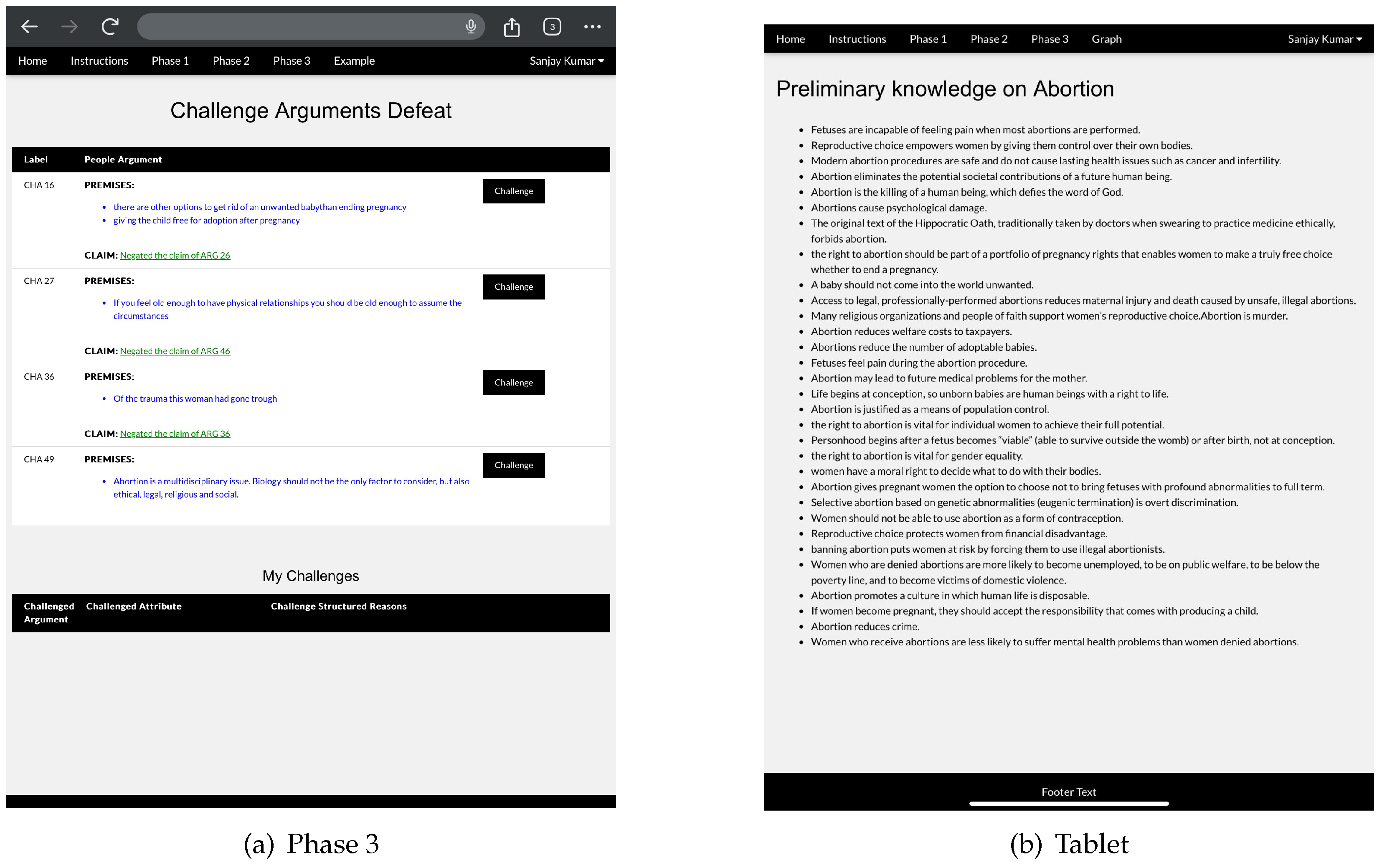

Figure A1.

Web interface for Phase 1 (a) and Phase 2 (b) of the pilot study.

Figure A2.

Web interface for Phase 3 (a) and homepage (b) of the pilot study.

Table A1.

Examples of raw natural language arguments and their assigned DQI code for the category ‘level of justification’ grouped by phase (Phase 1: forecast arguments; Phase 2: challenge arguments; Phase 3: defeats of challenge arguments).

| Phase | Category Code | Argument Text |
|---|---|---|
| 1 | 0 | Abortion should be not legal given that the kid can live a good and healthy life. |
| 1 | 1 | Abortion should be not legal, as abortion is a temporary solution to the lack of sex education. |
| 1 | 2 | Abortion should be not legal because how we can stop the birth of a child who even did not come in the universe yet? Furthermore, let us suppose the expected child is unhealthy and disabled; then, would it be justified to be smashed? Why we are so selfish and make choices about the things which are not even under our control? |
| 1 | 3 | Abortion should be legal; assuming that women should be allowed equality with men, there can only be one fair conclusion. In view of the generally held position that women should be equal to men, abortion access must be free, safe, and legal, since a society in which half its population run the risk of being forced to use their bodies as incubation units for the unborn can hardly be said to be an equal one in light of the fundamental injustice of being forced to lose control of your body due to circumstances beyond your control. |
| 2 | 0 | For example, health conditions. |
| 2 | 1 | Due to the possibility that a child can be adopted by a structured family, then this argument cannot be considered valid. |
| 2 | 2 | Whereas every religion is a complete code and conduct of life, a society should follow its religion to make a better and sensible society, because if there will be no rules and regulation, then there will be no difference between humans and animals. |
| 2 | 3 | In light of abortion being a multidisciplinary issue, biology should not be the only factor to consider but also ethical, legal, religious, and social. |
| 3 | 0 | Because it is unknown when life starts, then killing might not even happen. |
| 3 | 1 | Researchers found that there is a considerable risk of non-consensual sexual intercourse leading to unwanted pregnancy. |
| 3 | 2 | Because each child has his/her own story. Each child carries their own luck and life story; moreover, parents should not be so predictable at this early stage to take this extreme step of killing them. |
| 3 | 3 | For example, sex education includes talking about contraceptive methods. Furthermore, sex education does not encourage sexual activities but ensures that the parties involved in the sexual act are aware of what is happening. |

Table A2.

Examples of raw natural language arguments and their assigned DQI code for the category ‘content of justifications’ grouped by phase (Phase 1: forecast arguments; Phase 2: challenge arguments; Phase 3: defeats of challenge arguments).

| Phase | Category Code | Argument Text |
|---|---|---|
| 1 | 0 | Abortion should be not legal given that the kid can live a good and healthy life. |
| 1 | 1 | Abortion should be legal due to the fact that the baby will be in their belly and not the man’s one. |
| 1 | 2 | Abortion should be not legal because how we can stop the birth of a child who even did not come in the universe yet? Furthermore, let us suppose the expected child is unhealthy and disabled; then, would it be justified to be smashed? Why we are so selfish and make choices about the things which are not even under our control? |
| 1 | 3 | Abortion should be legal, since an extremely unhuman fetus might be growing inside a woman because giving birth to such a fetus might be extremely unfair for the newborn itself. |
| 2 | 0 | Given that men and women are not possibly equal on this topic. |
| 2 | 1 | In view of conception of a baby in the majority of the cases is the willingness by both parents. |
| 2 | 2 | Assuming that the kid can also live a disadvantaged life if the parents are not mentally, physically, and financially prepared. |
| 2 | 3 | Because abortion is all about the baby’s health and condition and the choices of parents to adopt the child or not. Of course, if there will be the matter of mother’s health, we should prefer the health of the mother, but in this abortion case, only the baby is involved and the parents’ choices about keeping the baby or not involved. |
| 3 | 0 | Because it is unknown when life starts, then killing might not even happen. |
| 3 | 1 | In light of this argument, it is a cop-out. Just because equality is hard or maybe even impossible, this is no reason to be resigned to an injustice and not even attempt to resolve it. |
| 3 | 2 | Because each child has his/her own story. Each child carries their own luck and life story; moreover, parents should not be so predictable at this early stage to take this extreme step of killing them. |
| 3 | 3 | For the reason that women are the ones most profoundly affected by this issue, given that it is clear that if one gender is being denied bodily autonomy while another is not, then this can only be a gender equality issue. |

Table A3.

Examples of raw natural language arguments and their assigned DQI code for the category ‘respect’ grouped by phase (Phase 1: forecast arguments; Phase 2: challenge arguments; Phase 3: defeats of challenge arguments).

| Phase | Category Code | Argument Text |
|---|---|---|
| 1 | 0 | Abortion should be not legal; furthermore, according to my belief, everyone should bring their own food. |
| 1 | 1 | Abortion should be not legal; as shown in different medical studies, abortion processes have life-long complications. |
| 1 | 2 | Abortion should be legal for the reason that religions should not impact the right to abortion, given that only psychological and biological reasons should have an impact. |
| 2 | 0 | Given that men and women are not possibly equal on this topic. |
| 2 | 1 | For the reason that abortion should be a last-resort practice, not just another contraceptive practice. |
| 2 | 2 | Because there are other options to get rid of an unwanted baby than ending pregnancy, for example, giving the child up for adoption after pregnancy. |
| 3 | 0 | Because abortion can cause life-long injuries. |
| 3 | 1 | Researchers found that there is a considerable risk of non-consensual sexual intercourse leading to unwanted pregnancy. |
| 3 | 2 | Due to the adoption industry being highly exploitative, adoption is not always a pleasant outcome. In addition, saying a woman should just give the child up for adoption overlooks the stresses, strains, and the burdens both physical and financial that having a baby do to a woman; furthermore, this overlooks the life and health of the mother who has to subject her body to pregnancy, as there may be complications that only termination can resolve. |

Table A4.

Examples of raw natural language arguments and their assigned DQI code for the category ‘counterargument’ grouped by phase (Phase 1: forecast arguments; Phase 2: challenge arguments; Phase 3: defeats of challenge arguments).

| Phase | Category Code | Argument Text |
|---|---|---|
| 1 | 0 | No counterargument was addressed in Phase 1. |
| 1 | 1 | No counterargument was addressed in Phase 1. |
| 1 | 2 | No counterargument was addressed in Phase 1. |
| 1 | 3 | No counterargument was addressed in Phase 1. |
| 2 | 0 | For example, we do not stop treatment of a patient even if they are found with some very critical illness. |
| 2 | 1 | As the chances of an unwanted child being happy are low. |
| 2 | 2 | In view of conception of a baby in the majority of the cases is the willingness by both parents. |
| 2 | 3 | Whereas every religion is a complete code and conduct of life, a society should follow its religion to make a better and sensible society, because if there will be no rules and regulations, then there will be no difference between humans and animals. |
| 3 | 0 | Because it is unknown when life starts, then killing might not even happen. |
| 3 | 1 | As at the very early stages, the fetus cannot feel anything. It is not a child yet. |
| 3 | 2 | As the mother is the one mostly affected when having an unwanted child. |
| 3 | 3 | Because women do not always choose to have sex, as some suffer abuse; furthermore, there are men who abandon their kids even after birth, leaving the responsibility to the woman. |

Table A5.

Examples of raw natural language arguments and their assigned DQI code for the category ‘constructive politics’ grouped by phase (Phase 1: forecast arguments; Phase 2: challenge arguments; Phase 3: defeats of challenge arguments).

| Phase | Category Code | Argument Text |
|---|---|---|
| 1 | 0 | Abortion should be legal, assuming that there are risks for anyone involved, in the present or future, or violent conception. |
| 1 | 1 | Abortion should be not legal, given that the kid can live a good and healthy life. |
| 1 | 2 | Abortion should be legal, assuming that banning abortion puts women at risk by forcing them to use illegal abortionists. |
| 2 | 0 | Whereas an abortion can lead to psychological problems, forcing a pregnancy can also lead to a wide range of problems, including psychological issues; for example, an unwanted or even a wanted pregnancy can lead to health issues in certain circumstances, even potentially leading to the death of the mother. Given that more severe problems can arise from forcing a pregnancy than allowing a woman to choose if she wants to be pregnant, the argument that abortions could lead to problems is invalid. |
| 2 | 1 | As the chances of an unwanted child being happy are low. |
| 2 | 2 | Because there are other options to get rid of an unwanted baby than ending pregnancy, for example, giving the child up for adoption after pregnancy. |
| 3 | 0 | As decisions as to whether someone gets to live should not be about fairness. |
| 3 | 1 | Because each child has his/her own story. Each child carries their own luck and life story; moreover, parents should not be so predictable at this early stage to take this extreme step of killing them. |
| 3 | 2 | For example, sex education includes talking about contraceptive methods; furthermore, sex education does not encourage sexual activities but ensures that the parties involved in the sexual act are aware of what is happening. |

Table A6.

An illustration of dialogues that ranked low according to the categorizer semantics, with number of attacks and description of the internal structure of arguments (ARG is a forecast argument of Phase 1, attacked by Phase 2 challenge arguments (CH), which are in turn attacked by Phase 3 defeats (DEF)).

| Dialog Labels | Claim | Dialog Text | Attacks | Ranking (Categorizer) |
|---|---|---|---|---|
| ARG 55, CH 3, CH 6, DEF 87, CH 14, DEF 114, CH 52, DEF 99, CH 63, CH 66, DEF 116, CH 74 | Abortion should be not legal | ARG 55: As abortion is a temporary solution to the lack of sex education. CH 3: Since the evidence is there is a considerable risk of non-consensual sexual intercourse leading to unwanted pregnancy. CH 6: In light of women being informed about prevention and not sex education. DEF 87: For example, sex education includes talking about contraceptive methods; furthermore, sex education does not encourage sexual activities but ensures that the parties involved in the sexual act are aware of what is happening. CH 14: For example, health conditions. DEF 114: Because there are always chances of infection and life-long injuries. CH 52: This can be seen from woman with very low income raising 4 or 5 kids, sometimes from different fathers. DEF 99: As there is still the possibility of giving the child up for adoption. CH 63: In view of abortion and security education are not mutually exclusive. CH 66: Assuming that the woman chose to have sex and was responsible, birth control is not 100% effective; furthermore, not every pregnant woman chose to have sex. Better sex education cannot do much for rape victims. DEF 116: Because women do not always choose to have sex, as some suffer abuse; furthermore, there are men who abandon their kids even after birth, leaving the responsibility to the woman. CH 74: Assuming that an instructor might have a very peculiar teaching style while teaching sex education, therefore, sex education cannot be considered a valid solution to abortion. | 11 | 0.16 |
| ARG 22, CH 32, CH 34, CH 40, CH 53, DEF 96, DEF 103 | Abortion should be legal | ARG 22: In light of the life of the mother being more important than the fetus. CH 32: Assuming that a woman has a few days left of life because of a rare cancer, then her life cannot be considered more important than the fetus she is carrying. CH 34: Is supported by God. CH 40: Because abortion is all about the baby’s health and condition and the choices of parents to adopt the child or not. Of course, if there will be the matter of the mother’s health, we should prefer the health of the mother, but in this abortion case, only the baby is involved, and parents’ choices about keeping the baby or not are involved. CH 53: As the mother’s life usually is not threatened by a pregnancy, just her lifestyle; in addition, the baby’s life is more important than the mother’s lifestyle. DEF 96: As mothers who cannot afford having a child can get sick due to poverty. DEF 103: Given that while the cases where the mother’s health is threatened are a minority of cases, those cases still occur and are valid; moreover, it is very flippant to disregard how much a woman’s life changes after having a child. Imagine a society in which men were arbitrarily forced to become pregnant with no agency in the matter. | 6 | 0.23 |
| ARG 54, CH 8, CH 46, CH 76 | Abortion should be legal | ARG 54: Because of unwanted pregnancy, the child would be disadvantaged in terms of mental and financial aspects, for example, if the parents are not mentally prepared to give birth and raise their kid, this one would not have decent care and attention. Furthermore, an unstable material situation would stop the child from having extracurricular activities, which could lead to a lack of self-confidence. CH 8: As the government can support unwanted babies to have a good life away from their families. CH 46: Assuming that no one is prepared for that. CH 76: Assuming that if they got pregnant it was meant to be, and for some reason, they should go through this experience together. | 3 | 0.25 |
| ARG 35, CH 1, DEF 81, DEF 100, DEF 110, CH 60, CH 75 | Abortion should be not legal | ARG 35: Given that the kid can live a good and healthy life. CH 1: As the chances of an unwanted child being happy are low. DEF 81: Because each child has his/her own story. Each child carries their own luck and life story; moreover, parents should not be so predictable at this early stage to take this extreme step of killing them. DEF 100: Because happiness is a complex multilevel feeling. DEF 110: Because every human being carries his/her own life story and because the woman does not always choose to have sex, and some suffer abuse and get pregnant; furthermore, there are men who abandon their children even after birth, leaving the responsibility to the woman. CH 60: Assuming that the kid can also live a disadvantaged life if the parents are not mentally, physically, and financially prepared. CH 75: Given that this overlooks the life and health of the mother who has to subject her body to pregnancy; furthermore, it ignores the many babies that are born unviable, living only minutes after birth. | 6 | 0.31 |

Table A7.

Illustration of some dialogues that ranked high according to the categorizer semantics, with number of attacks and description of the internal structure of arguments.

| Dialog Labels | Claim | Dialog Text | Attacks | Ranking (Categorizer) |
|---|---|---|---|---|
| ARG 32 | Abortion should be legal | ARG 32: Since the vast majority, upwards of 90% of abortions, take place so early in the pregnancy that only a pill-based intervention is necessary, in light of this, concerns over the invasiveness of the procedure or any pain felt by the fetus are irrelevant. Furthermore, decisions to have an abortion later in the pregnancy are almost always due to medical necessity. Because of this, outlawing abortion will mean blocking access to a safe, minimally invasive and often vitally necessary procedure and put the lives of those who carry children at risk. | 0 | 1.0 |
| ARG 50 | Abortion should be legal | ARG 50: As some women would look for alternatives to terminate their pregnancy, for example, by getting an illegal abortion in someone’s home without the necessary hygienic measures for example, leading to infections; in addition to threatening the respective women’s lives and health, this can be seen from the time abortion was still illegal. | 0 | 1.0 |
| ARG 30 | Abortion should be not legal | ARG 30: For example, if a woman goes for abortion due to any reason, then the child who lived in the mother’s stomach for a time is his/her fault, because he or she does not know his or her mistake, in addition to all these mothers who did suffer pain and bleeding being totally disgraceful; moreover, that child is innocent and, we cannot stop any birth by force which itself looks unethical. | 0 | 1.0 |
| ARG 43 | Abortion should be not legal | ARG 43: Due to having free contraception in some countries, maybe inferred abortion should only be available in some cases in those countries. | 0 | 1.0 |
| ARG 34 | Abortion should be legal | ARG 34: As society does not support women who decide to have a baby and cannot afford to do it. | 0 | 1.0 |
| ARG 56 | Abortion should be not legal | ARG 56: Because they have fought for this right for so long. But when it comes to pregnancy, it has another life involved. | 0 | 1.0 |
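Two of the reported rankings can be checked by hand. The sketch below assumes the standard h-categorizer definition, Cat(a) = 1 / (1 + sum of Cat(b) over the attackers b of a), which this appendix does not restate. It also assumes that CH 8, CH 46, and CH 76 each attack ARG 54 directly and are themselves unattacked, since no DEF labels appear in that dialogue. The function name `categorizer` and the dictionary layout are illustrative choices, not the authors' implementation.

```python
def categorizer(attackers, tol=1e-9, max_iter=1000):
    """Approximate h-categorizer scores by fixed-point iteration.

    attackers maps each argument label to the list of labels attacking it.
    Assumed definition: Cat(a) = 1 / (1 + sum(Cat(b) for b attacking a)).
    """
    # Collect every label, including attackers that have no entry of their own.
    labels = set(attackers)
    for attacking in attackers.values():
        labels.update(attacking)

    scores = {label: 1.0 for label in labels}
    for _ in range(max_iter):
        updated = {
            label: 1.0 / (1.0 + sum(scores[b] for b in attackers.get(label, ())))
            for label in labels
        }
        # Stop once no score moves by more than the tolerance.
        if max(abs(updated[l] - scores[l]) for l in labels) < tol:
            return updated
        scores = updated
    return scores


# Hypothetical attack structure: three unattacked challenges against ARG 54,
# and ARG 32 with no attackers at all.
graph = {
    "ARG 54": ["CH 8", "CH 46", "CH 76"],
    "ARG 32": [],
}
scores = categorizer(graph)
print(round(scores["ARG 54"], 2), round(scores["ARG 32"], 2))
```

Under these assumptions ARG 54 scores 1/(1+3) = 0.25 and an unattacked argument such as ARG 32 scores 1.0, which agrees with the values reported in Tables A6 and A7. Dialogues that include defeats cannot be reproduced this way, because the tables list the labels involved but not the individual attack edges.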