1. Introduction

The rapid evolution toward sixth-generation (6G) networks is fundamentally driven by the increasing demand for communication services that require ultra-reliable low-latency communication (URLLC). Mission-critical applications such as remote robotic surgery [1], autonomous vehicle coordination [2], smart manufacturing [3], and the tactile internet require latencies of 1 ms or less, combined with reliability levels reaching 99% [2]. While fifth-generation (5G) wireless systems have introduced support for URLLC, they are increasingly constrained by the architectural limitations of centralized orchestration, shared infrastructures, and traffic unpredictability in multi-slice deployments [3].

To address the scalability, flexibility, and diversity of 6G services, network slicing has emerged as a core architectural principle, enabling operators to create logically isolated, application-specific virtual networks on shared physical infrastructure [4]. Each slice may have its own service-level agreement (SLA), resource allocation policy, and performance goals. However, while this concept provides theoretical flexibility, the operational realization of slicing, particularly in dynamic heterogeneous environments, faces significant challenges, especially for time-sensitive URLLC services that coexist with best-effort enhanced mobile broadband (eMBB) or massive machine-type communication (mMTC) traffic.

In parallel, network function virtualization (NFV) has been proposed to decouple network functions from proprietary hardware, allowing operators to deploy virtualized network functions (VNFs) as software modules in data centers, cloud infrastructure, or at the edge [5]. The ETSI NFV MANO (Management and Orchestration) framework formalizes this architecture through the NFV Orchestrator (NFVO), VNF Manager (VNFM), and Virtualized Infrastructure Manager (VIM), providing a reference model for lifecycle management, resource provisioning, and policy enforcement [6,7]. While ETSI NFV has gained industry acceptance, its standard orchestration policies are not inherently latency-aware, and orchestration actions are often reactive, initiated only when thresholds are violated or alarms are triggered [8].

Prior research efforts have attempted to improve URLLC performance using heuristic-based or optimization-based approaches to VNF placement. For instance, latency-aware VNF placement using integer linear programming (ILP) has shown promise [9,10]. However, ILP-based solutions typically lack scalability and adaptability to real-time network dynamics, making them less practical for fast-changing 6G environments. Others have explored service function chaining (SFC) frameworks combined with cost-aware migration strategies. For example, an SFC migration survey highlights that many approaches use static system snapshots or instantaneous network metrics rather than anticipating future network state changes [11,12]. Additionally, the isolation of URLLC traffic from eMBB flows has been studied in 5G slicing contexts [13], but concrete strategies that enforce such isolation dynamically in shared 6G infrastructures remain sparse and often lack integration with standards-based orchestration platforms.

Meanwhile, recent advances in machine learning (ML) and artificial intelligence (AI) have opened new possibilities for proactive and intelligent network management. AI techniques are increasingly capable of analyzing vast amounts of telemetry data in real time, enabling traffic prediction, anomaly detection, and optimized resource allocation [14]. In the context of 6G, several studies have proposed AI-native orchestration frameworks that embed learning capabilities directly into slice management, mobility prediction, and dynamic resource scaling. For example, the REASON architecture outlines a modular, AI-integrated controller designed for end-to-end orchestration in future networks [15], while Moreira et al. present a distributed AI-native orchestration approach incorporating ML agents throughout network slice lifecycles [16]. Additionally, ML has been applied to classify slice types and forecast slice handovers in simulated 6G scenarios [17]. However, most of these solutions remain conceptual or simulation-based and do not provide full end-to-end orchestration integrated with ETSI NFV MANO, which limits their applicability in operational carrier-grade environments.

This work distinguishes itself through three main innovations. First, it integrates AI-based short-term latency forecasting into the orchestration decision loop, enabling proactive VNF migrations and preemptive slice adjustments before SLA violations occur, moving beyond threshold-based reactive policies. Second, the design is fully compliant with the ETSI NFV MANO reference model, embedding the predictive logic within the NFVO without requiring architectural deviations. Third, in the absence of public 6G telemetry datasets, we develop and use synthetic workload generators that emulate bursty URLLC and eMBB behaviors, allowing comprehensive evaluation of forecasting accuracy and orchestration performance under diverse traffic conditions.

To address this need, we propose a latency-aware NFV slice orchestrator for 6G that incorporates an AI-based predictive module within the NFVO. The orchestrator uses real-time telemetry (e.g., latency trends, traffic volume, VNF resource usage) to forecast short-term latency spikes using time-series models such as ARIMA or LSTM neural networks. Based on the predicted latency profile, the orchestrator proactively migrates or scales VNFs, selects optimized host placements, and applies traffic isolation policies. These include mechanisms such as CPU pinning, NUMA-aware scheduling, or SR-IOV-based virtual interfaces to reduce inter-slice interference. Our design follows the ETSI NFV MANO reference architecture [6,7], ensuring compatibility with existing NFV deployments and providing a path toward standard-compliant 6G slicing orchestration.

A major novelty of this paper lies in its integration of AI-based latency prediction into the orchestration loop, driven by a forecast horizon that allows decisions to be made ahead of latency violations. In contrast to static placement or threshold-triggered actions, our approach enables proactive VNF migration and early slice adjustment, which is essential for time-sensitive applications. Another distinctive aspect is our focus on synthetic data-driven evaluation. Given the lack of public URLLC telemetry datasets for 6G, we simulate traffic with realistic properties (bursty arrivals, contention, noisy neighbors) and use it to evaluate the accuracy and impact of latency prediction models. This methodology, though artificial, offers valuable insight into the behavior of predictive orchestration systems.

Therefore, in this paper, we aim to achieve four primary objectives. First, we formalize mathematical models that describe end-to-end latency, VNF migration costs, and SLA constraints in the context of slice orchestration. Second, we design an AI-based prediction module for latency trends using time-series forecasting. Third, we embed this module into the ETSI NFVO, enabling proactive migration, scaling, and slice policy enforcement. Finally, we evaluate the performance of the orchestrator on synthetically generated URLLC/eMBB workloads, comparing it with static and reactive orchestration baselines.

Our contributions are as follows:

Design of a standards-aligned, AI-driven orchestration framework that integrates latency forecasting into ETSI NFV MANO.

Implementation of a prediction-aware orchestration loop with real-time migration and isolation mechanisms.

Empirical evaluation using synthetic 6G workloads to demonstrate reduced SLA violations, better latency stability, and effective eMBB/URLLC traffic separation.

The remainder of this paper is structured as follows:

Section 2 provides the necessary background and a comprehensive review of related work in NFV slicing, ETSI MANO architecture, AI-based orchestration, and latency-sensitive service delivery.

Section 3 presents the proposed system architecture, detailing the integration of AI-enhanced orchestration within the ETSI NFV framework.

Section 4 formulates the mathematical modeling for latency prediction, cost functions, and orchestration constraints.

Section 5 describes the implementation of the AI-based forecasting logic, optimization algorithms, and the synthetic data generation process.

Section 6 presents the evaluation results across key performance metrics such as end-to-end latency, SLA violations, migration frequency, and forecasting accuracy, including analysis on resource usage and orchestration cost.

Section 7 discusses trade-offs, scalability, and practical implications of the proposed approach.

Section 8 concludes the paper and outlines future work.

3. Methodology

To ensure low-latency and reliable delivery of time-sensitive services in 6G, this work proposes a predictive, latency-aware orchestration framework for Network Function Virtualization (NFV). The methodology is grounded in the ETSI MANO architecture [7] and enhanced with intelligent modules for forecasting latency violations, proactively migrating VNFs, and isolating critical traffic. This section describes the components of the orchestration framework, the prediction-based control logic, and its integration within the NFVO’s decision pipeline.

3.1. System Architecture and Components

The framework shown in Figure 1 adheres to ETSI NFV MANO [7] and adds the components listed below, each described briefly.

NFVO: The central intelligence of the framework, extended with an AI-driven control module.

VNFM: Responsible for lifecycle management and monitoring of individual VNFs.

VIM: Manages the physical and virtual resources where VNFs are deployed.

AI Engine: Embeds a learning model (e.g., NFVLearn [27]) into the NFVO for real-time latency prediction.

Policy Engine: Defines the rules for VNF migration, scaling, and isolation based on predicted violations.

Latency Monitor: A distributed module at the VIM layer for collecting real-time metrics on per-VNF latency, queueing delay, and resource contention.

VNF Placement and Migration Controller: Executes preemptive migration and resource scaling for VNFs based on AI insights and policy constraints.

Figure 1 illustrates the proposed system architecture for latency-aware predictive NFV orchestration, structured into three horizontal tiers to align logically with the ETSI MANO framework while incorporating AI-enhanced extensions.

At the top tier, representing standard ETSI NFV MANO components, the architecture includes the NFV Orchestrator (NFVO), which is decomposed internally into three submodules: the AI Engine on the left, responsible for latency prediction using time-series models; the Policy Engine in the center, which enforces SLA rules and placement policies; and the VNF Placement & Migration Controller on the right, which executes optimized orchestration decisions. To the right of the NFVO lies the Virtual Network Function Manager (VNFM), which handles the life-cycle management of individual VNFs. The Virtualized Infrastructure Manager (VIM) is placed in the top tier just below the VNFM, reflecting its intermediary role between orchestration logic and physical infrastructure.

The middle tier comprises predictive control extensions. A key component is the Latency Monitor, which collects real-time metrics (e.g., delay, jitter, resource utilization) from the underlying infrastructure and provides this telemetry to the AI Engine in the NFVO via a feedback data flow. On the left side of this tier, a Monitoring Database/Telemetry Input is depicted as a cylindrical storage element. This module stores historical telemetry and orchestrator decisions and provides input to the AI Engine, supporting model training and long-term trend detection.

At the bottom tier, the architecture shows the physical and virtual infrastructure. It includes three compute nodes labeled according to their slicing function: Server A for URLLC VNFs, Server B for eMBB VNFs, and Server C for shared best-effort VNFs. Each server hosts multiple colored VNF boxes representing different service chains. Below the compute nodes, a Data Plane layer includes a network switch and SR-IOV (Single Root I/O Virtualization) interfaces, allowing direct, low-latency connections from latency-sensitive VNFs to the physical network, bypassing virtualization bottlenecks.

The framework also shows key interconnections: a standard Or-Vnfm interface between the NFVO and VNFM, and a Vi-Vnfm interface between the VNFM and the VIM, ensuring compatibility with ETSI MANO. A feedback loop connects the NFVO to the Latency Monitor, enabling prediction-based decision-making. Policy arrows indicate that SLA constraints flow from the Policy Engine to the Placement Controller, guiding orchestration actions. Finally, migration arrows visualize the live relocation of VNFs across servers when forecasted SLA violations trigger proactive mitigation.

This modular design combines standards-compliant orchestration with AI-native extensions and real-time telemetry integration, supporting latency-aware, dynamic service management for 6G time-sensitive applications.

3.2. Predictive Orchestration Model

The key novelty lies in the predictive orchestration mechanism. Rather than responding after latency thresholds are breached, the AI engine learns patterns of latency evolution and preemptively signals potential SLA violations. The process begins with data collection, where real-time metrics such as VNF processing delay, network delay, CPU utilization, memory usage, and slice-specific performance indicators are gathered. Next, in the feature processing stage, these raw inputs are converted into temporal sequences using sliding windows and enriched with contextual information such as time-of-day, traffic class, or prior migration activity.

The resulting data stream feeds into the prediction model, typically an LSTM, GRU, or ensemble-based time-series regressor trained on synthetic telemetry to forecast latency spikes one or two steps ahead.

The synthetic telemetry is generated to emulate realistic 6G slice behavior in the absence of publicly available URLLC datasets. We model traffic arrival using a Poisson process for normal load conditions and introduce bursty episodes using a compound Poisson model to simulate congestion or scheduling delays. Latency values are sampled from a base signal (e.g., sinusoidal or trend-based curve) with superimposed Gaussian noise (μ = 0, σ = 0.3 ms) to simulate jitter and random delay variations. VNFs are labeled by slice type (URLLC or eMBB), each with distinct SLA thresholds and service rates. Additionally, context features such as time-of-day and recent migration activity are encoded to capture temporal dependencies. This synthetic telemetry stream is used to train the AI model (e.g., LSTM) to recognize early patterns of SLA violations before they occur.

When the predicted latency exceeds a predefined URLLC SLA threshold (e.g., 1 ms), an orchestration trigger is activated. This trigger is first routed to the Policy Engine, which validates whether migration or scaling actions are permissible under current slice constraints, migration limits, and load balancing policies. If approved, the system proceeds to perform VNF reallocation or initiate proactive migration before actual service degradation occurs. This preemptive behavior reduces service disruption, avoids reactive handovers, and improves resource utilization. Conversely, if the predicted latency remains within SLA bounds, no orchestration is triggered, and the system continues monitoring the telemetry data in real time to reassess conditions in the next evaluation cycle. This end-to-end flow is illustrated in Figure 2.
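The synthetic telemetry described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the generator used in the experiments: the function names, the arrival rates, the 0.6 ms base level, the 200-step period, and the load-to-delay coupling are all hypothetical values chosen for the example. Only the Poisson baseline, the compound Poisson bursts, the sinusoidal base signal, and the Gaussian noise with σ = 0.3 ms come from the description.

```python
import math
import random


def poisson_sample(rng, lam):
    """Draw one Poisson(lam) variate using Knuth's method (adequate for small lam)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def generate_telemetry(steps=1000, base_rate=5.0, burst_rate=0.05,
                       burst_size=20.0, sigma_ms=0.3, seed=0):
    """Return one (arrivals, latency_ms) tuple per time step."""
    rng = random.Random(seed)
    samples = []
    for t in range(steps):
        # Normal load: Poisson arrivals.
        arrivals = poisson_sample(rng, base_rate)
        # Bursty episodes: compound Poisson, i.e. a Poisson number of bursts,
        # each contributing a random (here Poisson-sized) batch of arrivals.
        for _ in range(poisson_sample(rng, burst_rate)):
            arrivals += poisson_sample(rng, burst_size)
        # Base latency signal: slow sinusoid around 0.6 ms (hypothetical values).
        base_ms = 0.6 + 0.2 * math.sin(2.0 * math.pi * t / 200.0)
        # Hypothetical coupling between load and delay, not taken from the paper.
        load_ms = 0.01 * max(0, arrivals - base_rate)
        # Gaussian jitter with mean 0 and sigma 0.3 ms, clipped at zero.
        latency_ms = max(0.0, base_ms + load_ms + rng.gauss(0.0, sigma_ms))
        samples.append((arrivals, latency_ms))
    return samples
```

Fixing the seed makes a trace reproducible, which is convenient when the same workload has to be replayed against several orchestration strategies.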

3.3. VNF Isolation Strategy

To shield URLLC VNFs from interference by background or eMBB traffic, the orchestrator enforces infrastructure-level isolation:

CPU Pinning: URLLC VNFs are bound to isolated physical cores, avoiding shared scheduling queues [28].

NUMA-Aware Scheduling: Memory affinity is respected so that latency-critical VNFs avoid cross-NUMA memory access delays [29].

SR-IOV Interfaces: Direct hardware I/O channels reduce virtualization overhead and jitter.

Slice-Aware Host Scheduling: Compute nodes are segmented by slice type. URLLC VNFs are never colocated with best-effort VNFs.

This strategy, based on established isolation mechanisms, reduces latency variance and ensures deterministic performance, especially in multi-tenant edge environments.
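As an illustration of the slice-aware host scheduling rule, the sketch below filters candidate hosts for a new VNF. It encodes only the one constraint stated explicitly above (URLLC and best-effort VNFs never share a host); the function name, the slice labels, and the host names are hypothetical, and a real scheduler would combine this filter with the CPU, NUMA, and SR-IOV checks.

```python
def eligible_hosts(vnf_slice, hosts):
    """Return the hosts on which a VNF of type `vnf_slice` may be placed.

    `hosts` maps a host name to the set of slice types already running there.
    Assumed rule: URLLC and best-effort VNFs are never colocated.
    """
    conflicts = {"urllc": {"best_effort"}, "best_effort": {"urllc"}}
    blocked = conflicts.get(vnf_slice, set())
    # Keep a host only if none of its resident slice types is blocked.
    return [name for name, placed in hosts.items() if not (placed & blocked)]


hosts = {
    "server_a": {"urllc"},
    "server_b": {"embb"},
    "server_c": {"best_effort"},
}
urllc_targets = eligible_hosts("urllc", hosts)
```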

3.4. Migration Management

Live migration is invoked when the AI engine forecasts a sustained SLA breach. The migration controller evaluates candidates based on predicted delay, current load, and prior migration cost history.

State-Aware Migration: The VNF state is serialized and checkpointed before migration begins.

Warm Transfer Protocol: The state is transferred while keeping the old instance active, minimizing downtime.

Restore and Resume: The VNF is restored and traffic is rerouted via SDN-based control plane updates.

This process is governed by the Policy Engine and occurs transparently to the service consumer. By forecasting before overload occurs, it avoids emergency handovers and degraded QoS.

3.5. ETSI Compliance and Integration

The entire orchestration logic is designed for compatibility with ETSI NFV. The AI and policy modules are pluggable extensions within the NFVO logic. No changes are required to standard interfaces such as Or-Vnfm or Vi-Vnfm. Network service descriptors (NSDs) are augmented with latency thresholds and migration constraints but retain compliance with existing standards.

This ensures that the system can be deployed on top of ETSI-aligned orchestrators such as Open Network Automation Platform (ONAP), OSM, or commercial NFV stacks, while providing proactive intelligence beyond traditional rule-based models.

4. Mathematical Modelling

To support intelligent and latency-compliant orchestration decisions, we define a set of models that capture the system’s temporal behavior, resource dynamics, and forecasting structure.

4.1. End-to-End Latency Model

Let a network slice for a given service $k$ be composed of a sequence of VNFs $V_k = (v_1, \dots, v_n)$ forming a service function chain (SFC). Each VNF instance $v_i$ is deployed on a compute node $c_j$, with each compute node characterized by its processing capacity and queuing behavior.

The total end-to-end latency $L_k$ for a packet traversing the SFC is given by:

$$L_k = \sum_{i=1}^{n} \left( D_i^{\mathrm{proc}} + D_i^{\mathrm{queue}} \right) + \sum_{i=1}^{n-1} D_{i,i+1}^{\mathrm{net}}$$

where:

$D_i^{\mathrm{proc}}$: Processing delay at VNF $v_i$

$D_i^{\mathrm{queue}}$: Queuing delay at the compute node hosting $v_i$

$D_{i,i+1}^{\mathrm{net}}$: Network delay between $v_i$ and $v_{i+1}$

Each component is time-varying and resource-dependent. The queuing delay can be modeled via an M/M/1 or M/G/1 queue approximation, depending on traffic variability.

A URLLC slice must maintain:

$$L_k \le L_k^{\max}$$

where $L_k^{\max}$ is the maximum tolerable latency (e.g., 1 ms).
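To make the latency model concrete, the sketch below sums processing, queuing, and network delay along a chain. It assumes the M/M/1 option and uses the standard mean waiting time in queue, λ / (μ (μ − λ)), for arrival rate λ and service rate μ; whether the paper uses this expression or the sojourn-time variant is not recoverable here, so treat the choice as an assumption. The `Hop` type and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    proc_ms: float        # processing delay at the VNF
    arrival_rate: float   # packets per ms arriving at the hosting node
    service_rate: float   # packets per ms the hosting node can serve
    net_ms: float         # network delay to the next VNF (0 for the last hop)


def mm1_wait_ms(arrival_rate, service_rate):
    """Mean M/M/1 waiting time in queue; requires a stable queue."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival rate must be below service rate")
    return arrival_rate / (service_rate * (service_rate - arrival_rate))


def end_to_end_latency_ms(chain):
    """Sum processing, queuing, and network delay over all hops of the chain."""
    return sum(
        hop.proc_ms + mm1_wait_ms(hop.arrival_rate, hop.service_rate) + hop.net_ms
        for hop in chain
    )


chain = [Hop(0.2, 5.0, 10.0, 0.1), Hop(0.2, 5.0, 10.0, 0.0)]
latency_ms = end_to_end_latency_ms(chain)   # compared against the 1 ms bound
meets_sla = latency_ms <= 1.0
```

With rates expressed in packets per millisecond the queuing term is already in milliseconds, so the three components can be added directly.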

4.2. Migration Overhead Model

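When the orchestrator decides to migrate a VNF $v_i$ from node $c_s$ (source) to $c_d$ (destination), the migration latency includes state transfer delay, restart time, and potential traffic rerouting:

$$D_i^{\mathrm{mig}} = T_i^{\mathrm{state}} + T_i^{\mathrm{restart}} + T_i^{\mathrm{reroute}}$$

where:

$T_i^{\mathrm{state}}$: Time to transfer state over bandwidth $B_{s,d}$

$T_i^{\mathrm{restart}}$: Time to reinitialize VNF at the new location

$T_i^{\mathrm{reroute}}$: Time for SDN controller to update the forwarding rules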

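The migration feasibility constraint ensures that the benefit of migration outweighs the latency overhead:

$$L_k^{\mathrm{new}} + D_i^{\mathrm{mig}} \le L_k^{\max} + \epsilon$$

where $L_k^{\mathrm{new}}$ is the end-to-end latency of slice $k$ after the migration, $D_i^{\mathrm{mig}}$ is the migration latency defined above, $L_k^{\max}$ is the SLA latency bound, and $\epsilon$ is a configurable slack margin to tolerate minor jitter caused by forecast uncertainty or orchestration delay. In our experiments, $\epsilon$ is set to 0.1 ms.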
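A small worked example of the migration overhead follows. It assumes that the state transfer time is simply state size divided by link bandwidth, and that feasibility compares post-migration latency plus migration delay against the SLA bound relaxed by ϵ (the form suggested by the checks in Algorithms 1 and 2). The function names, units, and numbers are hypothetical.

```python
def migration_delay_ms(state_mb, bandwidth_mbps, restart_ms, reroute_ms):
    """State transfer + restart + rerouting, all in milliseconds."""
    # Megabytes -> megabits (x8), then seconds -> milliseconds (x1000).
    transfer_ms = state_mb * 8.0 / bandwidth_mbps * 1000.0
    return transfer_ms + restart_ms + reroute_ms


def migration_is_feasible(new_latency_ms, mig_delay_ms, sla_ms, eps_ms=0.1):
    """Assumed feasibility test: post-migration latency plus overhead within SLA + eps."""
    return new_latency_ms + mig_delay_ms <= sla_ms + eps_ms


# 1 KB of state over a 10 Gbit/s link, 0.2 ms restart, 0.1 ms rule update.
delay_ms = migration_delay_ms(state_mb=0.001, bandwidth_mbps=10_000,
                              restart_ms=0.2, reroute_ms=0.1)
ok_fast_target = migration_is_feasible(0.6, delay_ms, sla_ms=1.0)
ok_slow_target = migration_is_feasible(0.9, delay_ms, sla_ms=1.0)
```

The example makes one practical point visible: with a 1 ms bound, only very small state and very fast restart paths leave room for a migration to pass the check.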

4.3. Latency Forecasting Model

Let $x_t$ be the vector of observed system metrics at time $t$, such as the VNF processing delay, network delay, CPU utilization, and memory usage collected as described in Section 3.2.

We construct a time-series input sequence:

$$X_t = \{x_{t-T+1}, \dots, x_t\}$$

In this formulation, $t$ is the current time step, and $T$ is the input window size, representing the number of prior observations used as model input. The sequence $X_t$ captures temporal dynamics in recent telemetry. In our setup, $T = 10$ and $t \in [T, 1000]$, aligned with the 1000-step simulation duration.
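The window construction can be sketched as follows. The helper pairs each window of T consecutive feature vectors with the latency observed one step later, which is the supervised target for next-step forecasting. The function name and the toy data are hypothetical, and indices are 0-based here whereas the text counts time steps from 1.

```python
def make_windows(features, latencies, window=10):
    """Build (inputs, targets) for next-step latency forecasting.

    inputs[n]  holds `window` consecutive feature vectors ending at step t
    targets[n] holds the latency observed at step t + 1
    """
    if len(features) != len(latencies):
        raise ValueError("features and latencies must have the same length")
    inputs, targets = [], []
    # The last usable t is len - 2, because the target sits at t + 1.
    for t in range(window - 1, len(features) - 1):
        inputs.append(features[t - window + 1 : t + 1])
        targets.append(latencies[t + 1])
    return inputs, targets


# Toy trace: 12 steps, one feature per step.
features = [[float(i)] for i in range(12)]
latencies = [float(i) for i in range(12)]
inputs, targets = make_windows(features, latencies, window=10)
```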

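Using this, a supervised learning model $f(\cdot)$ is trained to predict the next-step latency:

$$\hat{L}_{t+1} = f(X_t; \theta)$$

where:

$f$ is a deep model (e.g., LSTM, GRU, or Temporal CNN)

$\theta$ represents model parameters trained using synthetic datasets

The loss function used in training is Mean Squared Error (MSE):

$$\mathcal{L}_{\mathrm{MSE}}(\theta) = \frac{1}{N} \sum_{t=1}^{N} \left( L_{t+1} - \hat{L}_{t+1} \right)^2$$

where $N$ is the number of training samples and $L_{t+1}$ is the observed latency at step $t+1$.

The orchestrator uses the forecast to trigger migration or scaling decisions before latency violates SLA bounds.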

To implement the forecast-driven orchestration in practice, we design a decision loop that continuously monitors the system metrics, predicts the next-step latency, and compares the forecast with the SLA constraint. If a potential violation is detected, the orchestration logic evaluates migration candidates and triggers proactive reallocation if a better node can be found. This decision-making logic is captured in Algorithm 1, which shows the steps for AI-enhanced latency prediction and policy enforcement.

Algorithm 1 outlines a latency-aware control loop where a time-series model (e.g., LSTM) forecasts future delay based on recent system history. If the predicted latency $\hat{L}_{t+1}$ exceeds the service-level agreement (SLA) threshold $L_k^{\max}$, the orchestrator computes a migration plan that maintains latency compliance. Forecast-driven decision-making of this kind improves responsiveness and reduces unnecessary migrations.

Algorithm 1. Predictive Orchestration Logic for Latency-Sensitive Slices

Input:

- Sliding time window of system metrics: $X_t = \{x_{t-T+1}, \dots, x_t\}$

- SLA threshold for service $k$: $L_k^{\max}$

- Forecast model: $f(\cdot)$ with parameters $\theta$

Output:

- Orchestration action: {migrate, scale, monitor}

1: $\hat{L}_{t+1} \leftarrow f(X_t; \theta)$ ▷ Forecast next-step latency

2: if $\hat{L}_{t+1} > L_k^{\max}$ then

3: candidate_nodes ← GetAvailableTargets(v_i)

4: for each c_j in candidate_nodes do

5: Estimate migration delay $D_i^{\mathrm{mig}}$ using Equation (4)

6: Estimate new path latency $L_k^{\mathrm{new}}(j)$ using Equation (3)

7: if $L_k^{\mathrm{new}}(j) + D_i^{\mathrm{mig}} \le L_k^{\max}$ then

8: Execute Migrate(v_i, c_j)

9: break

10: end if

11: end for

12: else

13: Continue monitoring

14: end if

Computational Complexity of Algorithm 1

Algorithm 1 executes a single-step latency prediction followed by a loop over available target nodes for migration, yielding a complexity of O(C), where C is the number of candidate nodes. Since inference with a fixed window length and model size takes constant time, the algorithm is suitable for real-time decision-making in URLLC contexts.
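The control flow of Algorithm 1 can be sketched as a plain Python function. The forecasting model and the two estimators are passed in as callables, so nothing here depends on a particular ML library. `naive_forecast` is a stand-in linear extrapolation, not the trained LSTM, and returning `"monitor"` when no candidate passes the check is an assumption, since the listing does not specify that case.

```python
def predictive_step(window, forecast, sla_ms, candidates,
                    new_latency_ms, migration_delay_ms):
    """One pass of the forecast-driven loop; returns (action, target_node)."""
    predicted_ms = forecast(window)          # forecast next-step latency
    if predicted_ms <= sla_ms:               # no violation expected
        return ("monitor", None)
    for node in candidates:                  # scan targets in the given order
        total_ms = new_latency_ms(node) + migration_delay_ms(node)
        if total_ms <= sla_ms:               # feasibility check of the listing
            return ("migrate", node)
    # Assumption of this sketch: keep monitoring if no target qualifies.
    return ("monitor", None)


def naive_forecast(window):
    """Stand-in for the trained model: extrapolate the last two latencies."""
    return window[-1] + (window[-1] - window[-2])


# Hypothetical per-node estimates, in milliseconds.
new_lat = {"c1": 0.9, "c2": 0.5}
mig = {"c1": 0.3, "c2": 0.3}
decision = predictive_step([0.7, 0.8, 0.95], naive_forecast, 1.0,
                           ["c1", "c2"], new_lat.get, mig.get)
```

The loop stops at the first feasible node, which is what gives the O(C) bound; choosing the cheapest feasible node instead would keep the same bound but require scanning every candidate.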

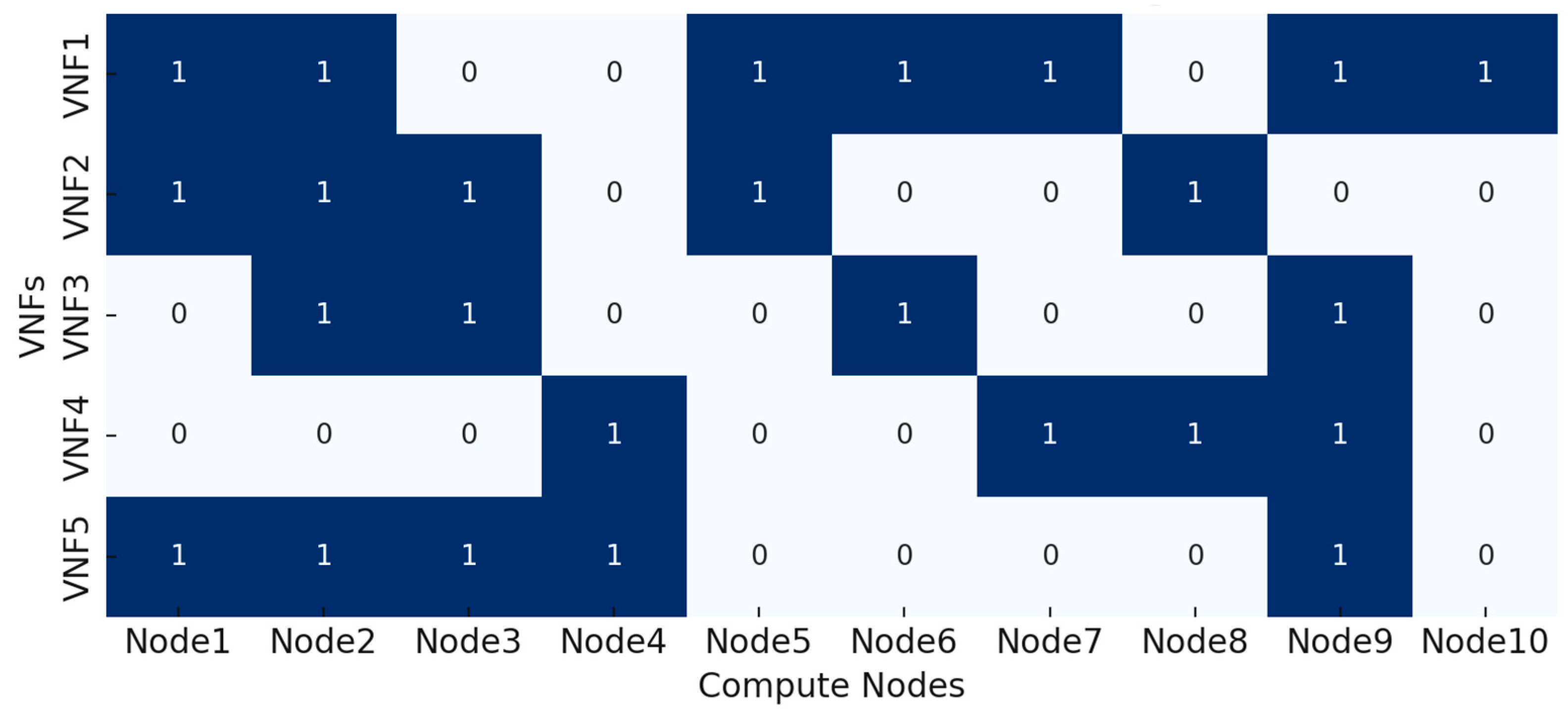

4.4. Optimization Formulation

An additional decision layer is modeled as an optimization problem:

Resource constraints on CPU, memory, and bandwidth:

where:

A: VNF-to-node assignment matrix

M: Migration indicator matrix

$\alpha$: Cost weight for migration overhead

The framework allows the orchestrator to evaluate multiple options (migrate, scale, delay) and select the optimal one. In scenarios where multiple VNFs or service chains require adjustment, individual migrations may conflict or overload shared resources. To optimize the system-wide response, we formulate an offline placement problem that jointly considers end-to-end latency and migration overhead. While exact solutions to this problem are computationally intensive, we employ a heuristic routine that approximates a near-optimal solution in polynomial time.
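One plausible written-out form of this placement problem, assembled from the symbols listed above and from the cost used in Algorithm 2, is sketched below. It is an illustration under stated assumptions rather than the exact formulation: the binary entries $A_{ij}$ and $M_{ij}$ (VNF $v_i$ assigned to, or migrated to, node $c_j$), the per-move delay $D_{ij}^{\mathrm{mig}}$, the demand $r_i^{(q)}$, and the capacity $R_j^{(q)}$ are notational choices made here.

$$
\begin{aligned}
\min_{A,\,M} \quad & \sum_{k} L_k(A) \;+\; \alpha \sum_{i} \sum_{j} M_{ij}\, D_{ij}^{\mathrm{mig}} \\
\text{s.t.} \quad & L_k(A) \le L_k^{\max} && \forall k, \\
& \sum_{j} A_{ij} = 1 && \forall i, \\
& \sum_{i} A_{ij}\, r_i^{(q)} \le R_j^{(q)} && \forall j,\; q \in \{\text{CPU}, \text{memory}, \text{bandwidth}\}, \\
& A_{ij},\, M_{ij} \in \{0, 1\} && \forall i, j.
\end{aligned}
$$

The first term rewards low end-to-end latency, the second penalizes migrations in proportion to $\alpha$, and the binary variables are what make exact solutions expensive and motivate the heuristic.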

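Algorithm 2 describes a resource-aware orchestration process that scans each service slice $k$, estimates its current latency, and, if required, iterates through candidate nodes to find a feasible migration with low added cost. The algorithm evaluates a combined cost metric $L_k^{\mathrm{new}} + \alpha \cdot D_i^{\mathrm{mig}}$ (post-migration latency plus weighted migration delay) and accepts the first candidate that keeps it within the latency bound, in alignment with the optimization objective in Equation (9).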

Algorithm 2. Heuristic Optimization for VNF Placement with Latency Guarantees

Input:

- Set of service chains $S$ and their latency bounds $L_k^{\max}$

- Current VNF-to-node assignments: $A$

- Migration budget: $B_{\mathrm{mig}}$

- Resource availability matrix $R$

Output:

- Updated assignment matrix: $A'$

- Migration plan: $M$

1: Initialize: $M \leftarrow \emptyset$, $A' \leftarrow A$

2: for each service k in S do

3: Compute current latency $L_k$ from Equation (2)

4: if $L_k > L_k^{\max}$ then

5: for each v_i in chain V_k do

6: candidate_nodes ← FeasibleTargets(v_i, R)

7: for each c_j in candidate_nodes do

8: Estimate total cost: cost ← $L_k^{\mathrm{new}} + \alpha \cdot D_i^{\mathrm{mig}}$

9: if cost ≤ $L_k^{\max} + \epsilon$ then

10: Add (v_i, c_j) to $M$ and update $A'$

11: break

12: end if

13: end for

14: end for

15: end if

16: end for

17: Return $A'$, $M$

Computational Complexity of Algorithm 2

Algorithm 2 evaluates each VNF in service chains that exceed their SLA latency. For each VNF, it iterates over feasible nodes, estimating a placement cost. Thus, the total complexity is O(K × V × C), where K is the number of services, V the average number of VNFs per service, and C the number of placement options. Because it is a single-pass heuristic, the algorithm maintains a practical runtime for moderately sized deployments, supporting scalability in 6G environments.
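The heuristic can be sketched in Python as three nested loops over services, VNFs, and candidate nodes. The latency model, the candidate generator, and the migration delay are supplied as callables, and the toy values below are hypothetical. The optional `budget` argument is an assumption about how the migration budget would be enforced, since the listing names a budget as input but never uses it.

```python
def heuristic_placement(chains, sla_ms, assignment, latency_of, candidates_for,
                        migration_delay_ms, alpha=1.0, eps_ms=0.1, budget=None):
    """Return (updated_assignment, migration_plan) for SLA-violating chains."""
    updated = dict(assignment)        # work on a copy of the current placement
    plan = []
    for k, vnfs in chains.items():
        if latency_of(vnfs, updated) <= sla_ms[k]:
            continue                  # chain already compliant
        for v in vnfs:
            if budget is not None and len(plan) >= budget:
                return updated, plan  # assumed budget rule: stop planning
            for node in candidates_for(v, updated):
                trial = {**updated, v: node}
                cost = latency_of(vnfs, trial) + alpha * migration_delay_ms(v, node)
                if cost <= sla_ms[k] + eps_ms:
                    plan.append((v, node))
                    updated = trial
                    break             # first acceptable node wins
    return updated, plan


# Toy model: chain latency is the sum of per-node delays (ms).
node_delay = {"n1": 0.8, "n2": 0.2, "n3": 0.3}
latency_of = lambda vnfs, a: sum(node_delay[a[v]] for v in vnfs)
candidates_for = lambda v, a: [n for n in ("n1", "n2", "n3") if n != a[v]]
mig_delay = lambda v, n: 0.1

chains = {"urllc": ["v1", "v2"]}
start = {"v1": "n1", "v2": "n2"}
new_assignment, plan = heuristic_placement(
    chains, {"urllc": 0.9}, start, latency_of, candidates_for, mig_delay, budget=1)
```

As in the listing, the scan continues over the remaining VNFs of a chain after a move has been accepted, so without a budget a chain may receive more migrations than strictly needed; re-checking the chain latency after each accepted move would be a natural refinement.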

5. Experimental Design and Setup

To evaluate the proposed framework, a controlled simulation environment was designed to emulate 6G network slicing scenarios with URLLC and eMBB services. Since no publicly available dataset captures real-world end-to-end latency in 6G-era NFV infrastructures with slicing orchestration feedback loops, we rely on synthetic data generation aligned with performance trends from literature [30,31,32].

5.1. Simulation Environment

The simulation platform is developed in Python 3.10 and emulates the behavior of an ETSI-compliant NFV MANO stack with added predictive orchestration. The simulated environment models:

Compute nodes representing virtualized infrastructure with CPU, memory, and bandwidth constraints.

VNF instances configured with distinct processing capacities and deployed over virtual nodes.

SFCs for both URLLC and eMBB services with variable latency constraints.

Latency measurement modules capturing queuing, processing, and inter-VNF communication delays.

Orchestrator logic, supporting both baseline (reactive) and AI-enhanced predictive control.

It is worth mentioning that the environment supports modular plug-ins for AI models and orchestration policies, making it extensible for benchmarking multiple strategies.

Furthermore, the workload generation follows a hybrid model combining Poisson arrivals for baseline request generation and bursty traffic spikes to emulate the highly variable nature of URLLC workloads under congestion or demand surges. This approach aligns with recent 6G traffic modeling studies [12,25], which report that traffic for time-sensitive services often exhibits self-similar, heavy-tailed, and bursty characteristics. These models are particularly relevant for URLLC slices, where latency violations often correlate with sudden traffic bursts or resource contention.

5.2. Slicing and Traffic Configuration

Two primary slice types are emulated:

URLLC slices: highly delay-sensitive chains with a strict SLA latency bound (e.g., 1 ms).

eMBB slices: throughput-focused chains with relaxed latency bounds (e.g., 10–20 ms).

Each slice type has an associated SFC with 3 to 5 VNFs per chain. The simulation runs with 10 to 20 service chains at once, introducing congestion, cross-traffic, and resource contention.

The traffic patterns are generated using:

Poisson arrivals for URLLC flows.

Bursty background traffic to model real-world cross-slice interference.

Time-varying CPU and bandwidth usage patterns.

5.3. AI Forecasting Model

The latency prediction model is implemented using an LSTM neural network. The model forecasts the end-to-end latency for each slice using a sliding window of system features. It is trained offline and then embedded in the orchestrator for online inference during experiments.

The system features are:

The model configuration is as follows:

Input window size: 10 time steps.

Hidden layers: 2 LSTM layers with 64 units each.

Optimizer: Adam.

Loss function: Mean Squared Error (MSE).

Training data: 5000 synthetic samples generated from traffic simulations.

5.4. Orchestration Baselines

To validate the effectiveness of the predictive orchestrator, two baseline strategies are implemented:

Reactive orchestrator: monitors real-time latency and migrates VNFs only after an SLA violation has occurred (no forecasting).

Static orchestrator: performs one-time initial placement and does not support migration or scaling during execution.

These are compared against the proposed predictive orchestrator, which uses Algorithm 1 (forecast-driven migration) and Algorithm 2 (cost-aware placement optimization).
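The difference between the three strategies comes down to what triggers an orchestration action. The sketch below states each trigger rule and replays a short, hand-made latency trace to show when each strategy would first act. The function names, the trace, and the idealized forecast (the next observed value) are hypothetical and serve only to illustrate the one-step lead of the predictive strategy.

```python
def should_act(policy, observed_ms, predicted_ms, sla_ms):
    """Trigger rule per strategy; `predicted_ms` is the forecast for the next step."""
    if policy == "static":
        return False                   # one-time placement, never migrates
    if policy == "reactive":
        return observed_ms > sla_ms    # acts only after a violation is measured
    if policy == "predictive":
        return predicted_ms > sla_ms   # acts when a violation is forecast
    raise ValueError(f"unknown policy: {policy}")


def first_trigger(policy, observed, predicted_next, sla_ms):
    """Index of the first step at which the policy acts, or None if it never does."""
    for t, (obs, pred) in enumerate(zip(observed, predicted_next)):
        if should_act(policy, obs, pred, sla_ms):
            return t
    return None


observed = [0.6, 0.8, 0.95, 1.2]          # latency rising past the 1 ms bound
predicted_next = [0.8, 0.95, 1.2, 1.3]    # idealized one-step-ahead forecast
triggers = {p: first_trigger(p, observed, predicted_next, 1.0)
            for p in ("static", "reactive", "predictive")}
```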

5.5. Experiment Parameters

The core experimental setup is summarized in Table 2.

The LSTM model is configured with a 10-step input window and two hidden layers of 64 units each. These hyperparameters were selected empirically through preliminary tuning to balance accuracy and responsiveness. Larger window sizes or model depths yielded marginal accuracy gains at the cost of higher inference delays, while smaller configurations led to underfitting and reduced prediction precision. The chosen setup consistently achieved sub-2 ms inference latency while maintaining high predictive reliability, making it suitable for proactive orchestration in latency-sensitive environments.

5.6. Evaluation Metrics

The following performance metrics are used to assess the system:

These metrics allow fair comparison between predictive, reactive, and static orchestration strategies under identical traffic loads.
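Three of the metrics named in this paper (SLA violations and the RMSE/MAE forecasting errors) can be computed as in the sketch below. The function names and sample values are hypothetical, and the definitions are the standard ones; the exact definitions used in the evaluation may differ in detail, for example in how violations are counted per slice.

```python
import math


def sla_violation_rate(latencies_ms, sla_ms):
    """Fraction of samples whose latency exceeds the SLA bound."""
    return sum(1 for lat in latencies_ms if lat > sla_ms) / len(latencies_ms)


def rmse(actual, predicted):
    """Root mean squared error between observed and forecast latency."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error between observed and forecast latency."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


violation_rate = sla_violation_rate([0.5, 1.2, 0.9, 1.5], sla_ms=1.0)
forecast_rmse = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
forecast_mae = mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```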

As indicated earlier, because real-world 6G NFV testbeds and end-to-end latency datasets are not yet publicly accessible, we employ synthetic data derived from performance patterns observed in 5G/6G-oriented research [30,31,32]. The simulation environment enables controlled and repeatable experimentation across various orchestration strategies under dynamic load conditions. While this provides a strong foundation for evaluating architectural behaviors and decision models, future work will aim to validate the framework in real or emulated testbeds (such as OpenAirInterface, Mininet, or ETSI NFV PoCs).

7. Discussion

The experimental results demonstrate that predictive orchestration significantly improves latency compliance and operational efficiency in 6G-enabled NFV infrastructures. In this section, we interpret these findings, highlight trade-offs and limitations, and discuss practical implications for real-world deployment.

7.1. Trade-Offs in Predictive Orchestration

The integration of AI-based forecasting into the orchestration loop offers clear benefits in reducing SLA violations and minimizing unnecessary migrations. However, this gain is achieved at the cost of the following:

Increased computational overhead: running LSTM-based predictions and solving placement optimization (Algorithm 2) introduces a control-plane processing delay, albeit minimal (~2 ms in our setup).

Model retraining: forecast models must be periodically retrained to adapt to shifting traffic patterns, especially in highly dynamic environments like 6G RAN-core slicing scenarios.

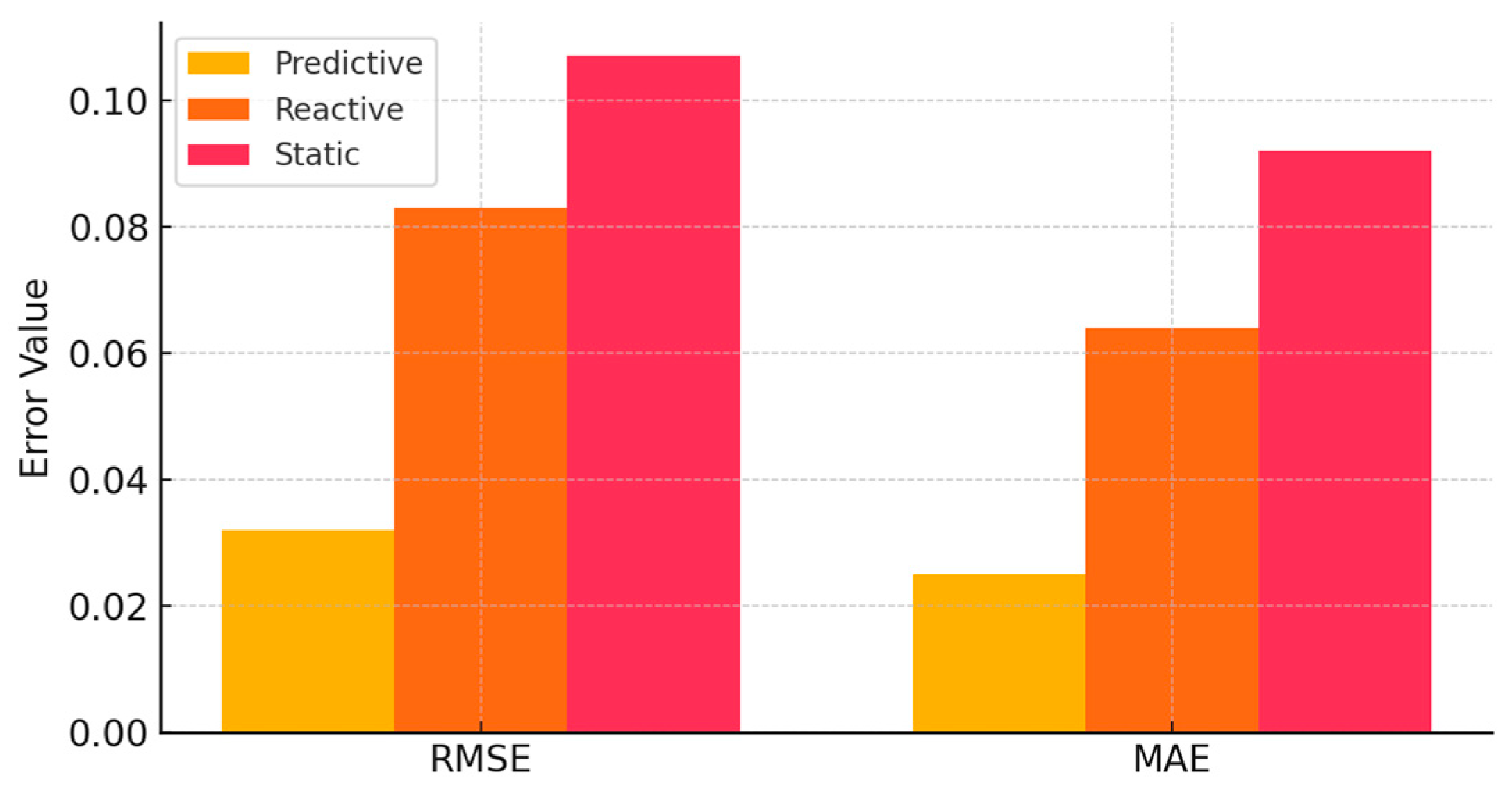

Forecast uncertainty: even with low RMSE/MAE, prediction errors can occasionally cause suboptimal migration decisions or false positives. These must be balanced against the migration cost (Equation (11)).

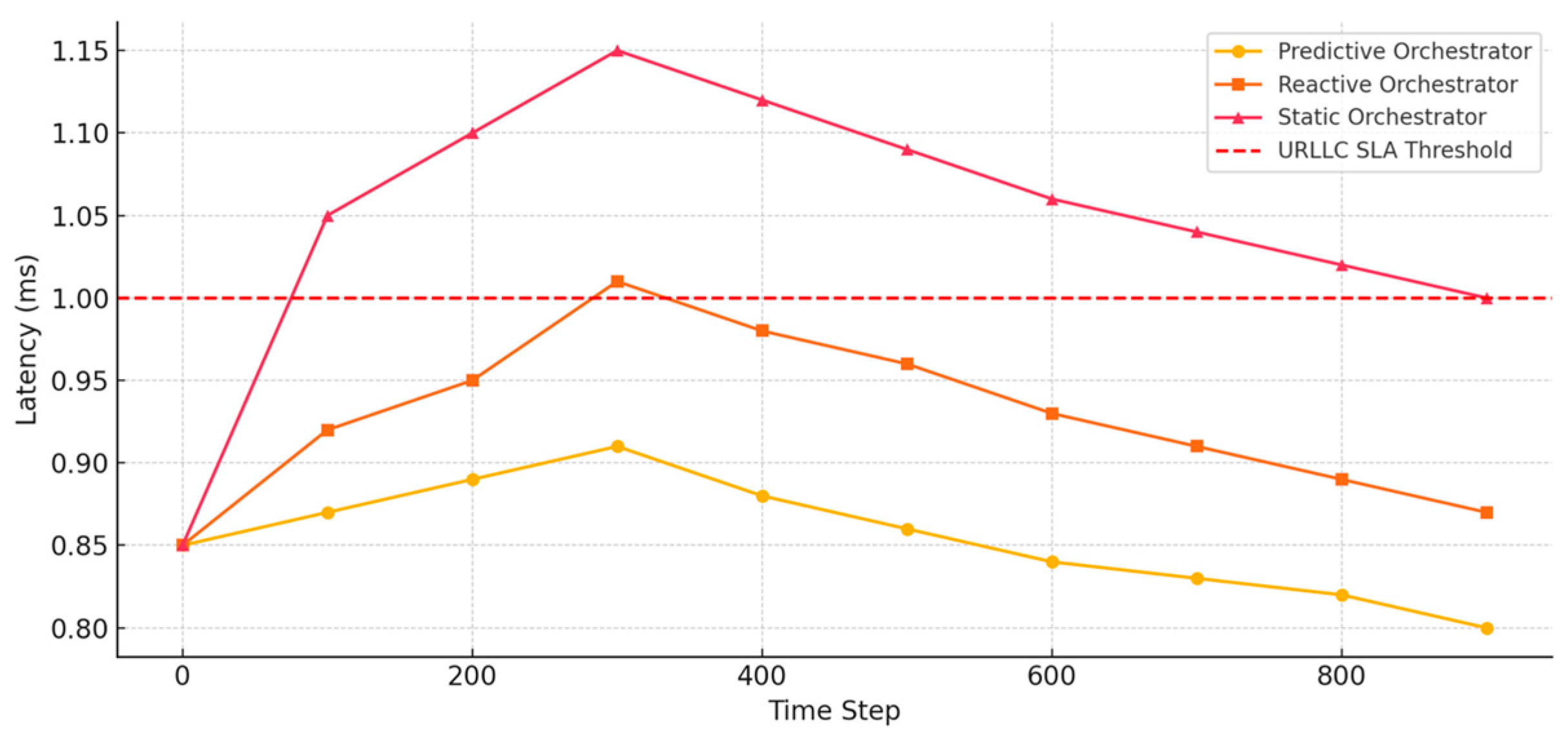

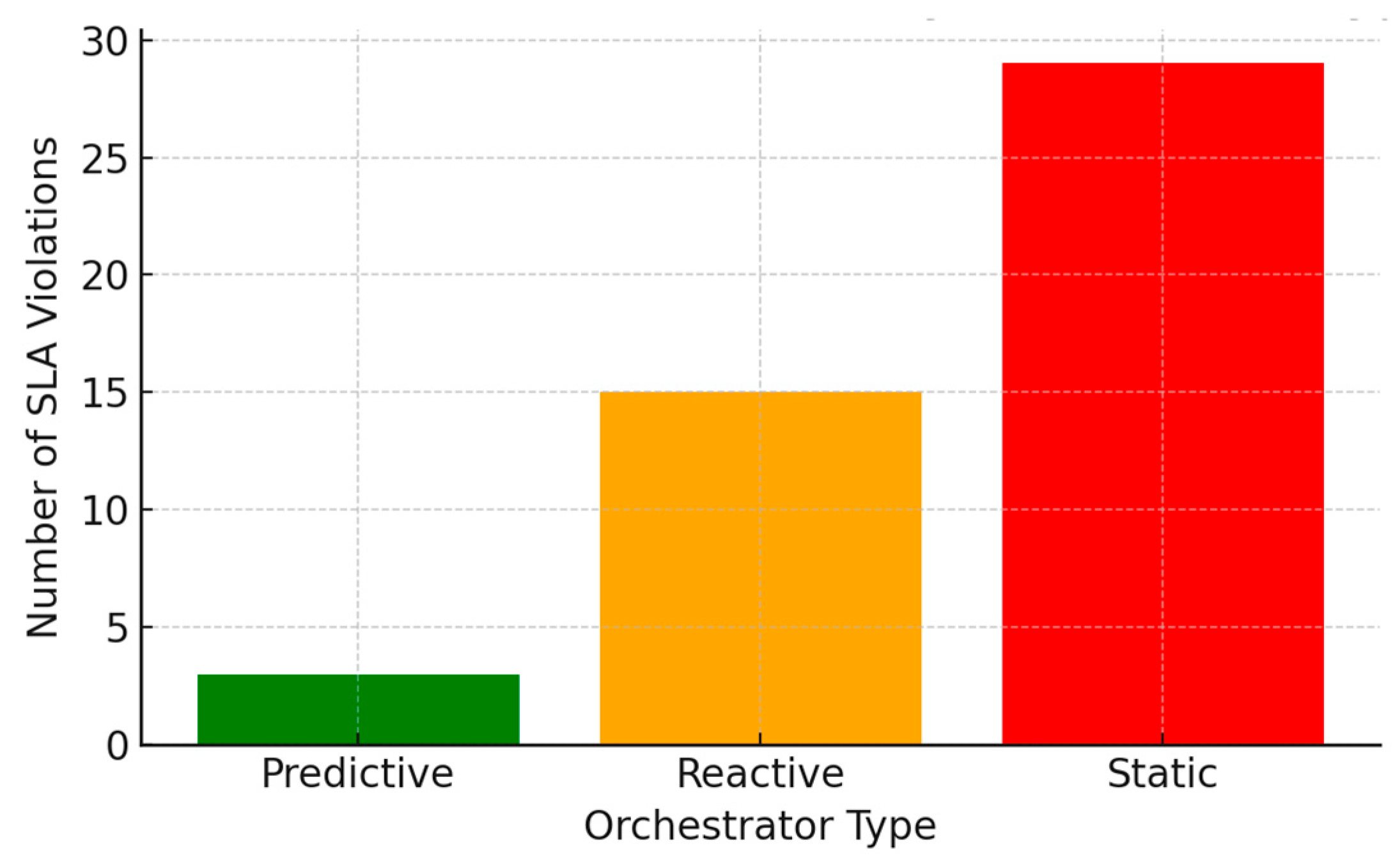

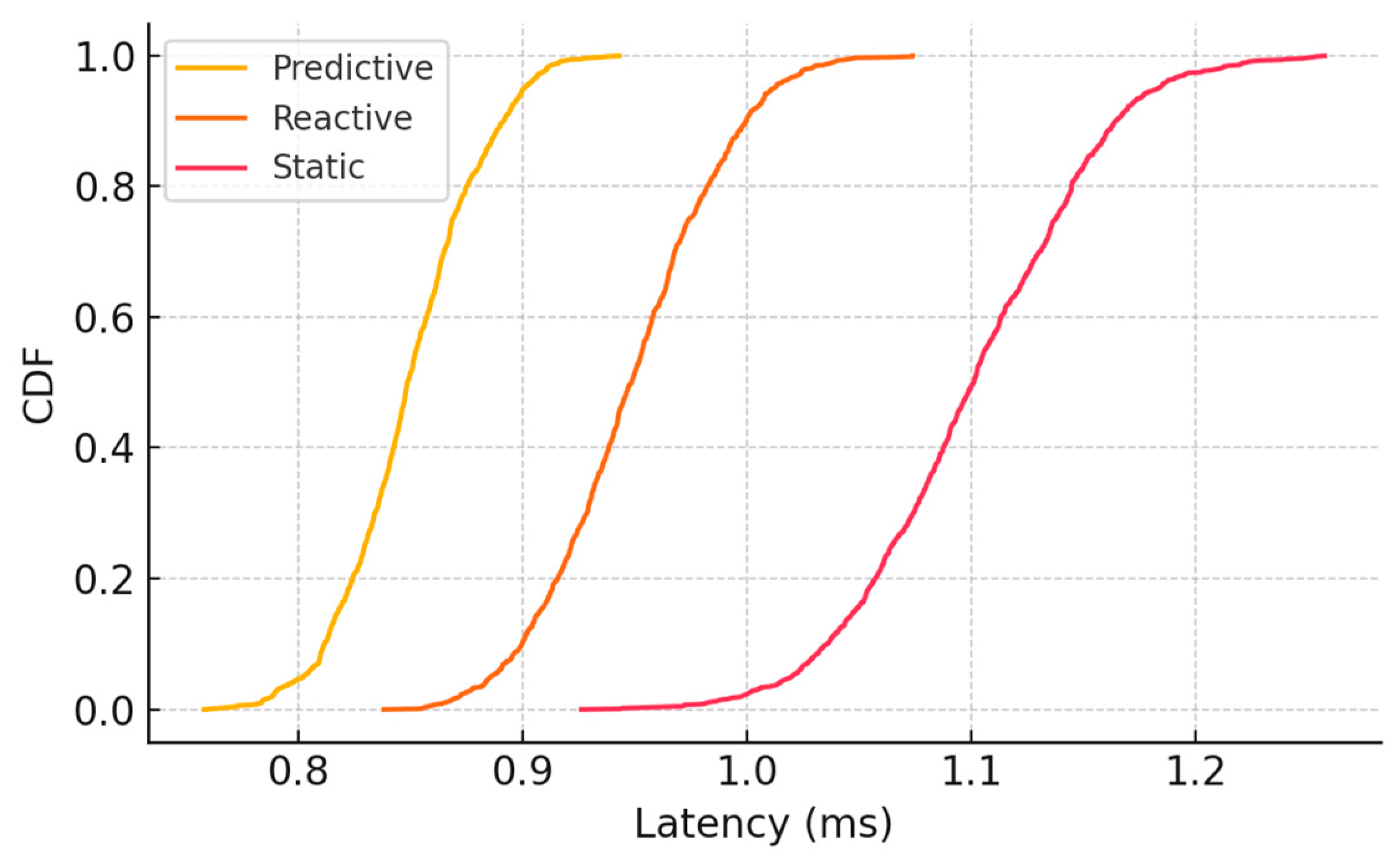

Nevertheless, these trade-offs are acceptable in exchange for the improved SLA assurance for URLLC services, as shown in Figure 3, Figure 4, Figure 5, and Figure 6.

7.2. Scalability and Multi-Domain Slicing

While the presented framework is tested in a single-domain NFVI with 20 service chains, 6G networks will likely involve multi-domain, multi-operator environments. Predictive orchestration across federated domains introduces challenges such as latency prediction across trust boundaries, inter-domain migration policies, and standardized telemetry sharing (ETSI IFA032) [7]. The scalability can be addressed using distributed inference pipelines, edge-hosted predictors, or hierarchical MANO systems, which should be investigated in future work.

7.3. Practical Implications for 6G Networks

The predictive orchestration framework aligns well with three key 6G principles. The first is slicing dynamicity: adjusting VNF placement on the fly supports slice elasticity and reliability. The second is intent-based networking, in which latency forecasting acts as a proactive intent translator. The third is RAN-core coordination, where forecast-driven migration enables tighter synchronization between RAN slicing and core VNFs.

Further, the architecture proposed in Figure 1 in Section 3 is compatible with the ETSI NFV MANO reference model [7], facilitating integration into real-world PoCs or testbeds like OpenAirInterface or 5G-VINNI.

7.4. Limitations and Assumptions

Several simplifications were made for tractability. First, the simulation assumes known traffic arrival patterns and resource profiles. Second, realistic packet loss, jitter, and control signaling overheads are abstracted away. In real deployments, such factors could introduce noise and uncertainty into the telemetry stream. For instance, latency jitter may distort the temporal patterns used by the LSTM predictor, leading to decreased forecasting accuracy or higher false-positive rates in SLA violation detection. Similarly, packet loss may cause missing data points, and control signaling delays can postpone the execution of orchestration actions, partially offsetting the gains of proactive migration. These dynamics are important and will be considered in future testbed validations. Lastly, the LSTM model is pre-trained and embedded; real-time online learning is not explored. Although the simulation emulates realistic URLLC/eMBB latency constraints, validation on a real-world testbed would provide stronger evidence of practical viability.

Another operational consideration concerns the retraining frequency and data privacy aspects of the predictive model. In our current setup, the LSTM predictor is pre-trained offline and embedded within the orchestration loop. However, in real-world deployments, periodic retraining may be required, for example, weekly or upon traffic pattern drift, to maintain forecasting accuracy as network dynamics evolve. This retraining process should be designed to minimize service interruption and avoid additional control-plane overhead. Furthermore, since predictive orchestration relies on collecting detailed telemetry from VNFs and user slices, data privacy and security must be ensured. Privacy-preserving approaches such as federated learning, where model updates rather than raw data are shared across domains, can mitigate privacy risks while maintaining predictive capability. These aspects will be explored in future iterations of the framework to ensure compliance with 6G data governance and regulatory requirements.
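Retraining "upon traffic pattern drift" can be made concrete with a rolling error monitor. The sketch below is an assumption-laden illustration rather than part of the evaluated framework: the class name `DriftMonitor`, the window length, and the `tolerance` multiplier are hypothetical. It compares the rolling RMSE of recent forecasts against the RMSE measured at training time and signals retraining once the error grows beyond a chosen factor.

```python
from collections import deque
from math import sqrt


class DriftMonitor:
    """Flags forecast drift when the rolling RMSE exceeds a multiple of the
    RMSE observed at training time (illustrative thresholds only)."""

    def __init__(self, baseline_rmse: float, window: int = 50,
                 tolerance: float = 1.5):
        self.baseline_rmse = baseline_rmse
        self.tolerance = tolerance
        # Keeps only the most recent `window` squared errors.
        self.sq_errors = deque(maxlen=window)

    def update(self, predicted_ms: float, observed_ms: float) -> bool:
        """Record one forecast/observation pair; return True if retraining
        should be scheduled."""
        self.sq_errors.append((predicted_ms - observed_ms) ** 2)
        # Wait until the window is full to avoid noisy early decisions.
        if len(self.sq_errors) < self.sq_errors.maxlen:
            return False
        rolling_rmse = sqrt(sum(self.sq_errors) / len(self.sq_errors))
        return rolling_rmse > self.tolerance * self.baseline_rmse
```

Because the check is a constant-time update per telemetry sample, it adds little control-plane overhead; the retraining job itself can then be scheduled outside the orchestration loop.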

7.5. Future Exploration Areas

Future enhancements may include:

Integration of multi-agent RL for decentralized orchestration.

Use of federated learning for cross-domain predictive orchestration.

Incorporation of network energy efficiency into the optimization cost function.

Support for non-IP traffic slices and deterministic networking (DetNet).

These directions will help evolve the architecture into a robust, AI-native orchestrator suitable for the full range of 6G use cases.
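As an illustration of the federated learning direction listed above, the following sketch shows the aggregation step of federated averaging, in which each domain shares only model parameters and a sample count rather than raw telemetry. It is a generic example under stated assumptions, not a component of the evaluated framework: parameters are represented as flat lists of floats, and the function name `federated_average` is hypothetical.

```python
from typing import List, Sequence


def federated_average(updates: Sequence[Sequence[float]],
                      sample_counts: Sequence[int]) -> List[float]:
    """Weighted average of per-domain parameter vectors.

    Each domain contributes in proportion to the number of local training
    samples it used; raw telemetry never leaves the domain.
    """
    if not updates or len(updates) != len(sample_counts):
        raise ValueError("need one sample count per parameter vector")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0])
    if any(len(u) != dim for u in updates):
        raise ValueError("all parameter vectors must have the same length")
    return [
        sum(u[i] * n for u, n in zip(updates, sample_counts)) / total
        for i in range(dim)
    ]
```

In a multi-domain deployment, a coordinating entity would call this once per training round and redistribute the averaged parameters to each domain's local predictor.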

7.6. Comparison with Existing Orchestration Platforms

While existing orchestration platforms such as ETSI OSM, ONAP, and Intel OpenNESS support NFV/MANO-compliant VNF lifecycle management, their orchestration logic is primarily reactive, relying on alarms or static policies for triggering actions. For example:

OSM supports VNF placement and auto-scaling based on threshold alerts but lacks integrated forecasting.

ONAP offers policy-based orchestration and closed-loop automation, but its AI capabilities remain modular and loosely integrated.

OpenNESS targets edge orchestration and resource discovery but does not natively include latency forecasting.

In contrast, our framework embeds AI-native orchestration logic directly within the NFVO. It enables forecast-based decisions for VNF migration and scaling, making it better suited for URLLC-aware 6G slicing where timing guarantees are critical.
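The difference between alarm-driven and forecast-driven triggering can be shown on a toy latency trace. This is a minimal sketch under explicit assumptions: `linear_forecast` is a stand-in that extrapolates the last observed slope and is not the LSTM predictor used in the paper, and all function names are hypothetical. A reactive trigger fires only once an observed sample exceeds the SLA threshold, whereas a predictive trigger fires as soon as the forecast for a future step exceeds it.

```python
from typing import Callable, Optional, Sequence


def reactive_trigger(latency_ms: Sequence[float],
                     sla_ms: float) -> Optional[int]:
    """Index of the first sample at which a threshold alarm would fire."""
    for t, value in enumerate(latency_ms):
        if value > sla_ms:
            return t
    return None


def linear_forecast(history: Sequence[float], horizon: int) -> float:
    """Stand-in forecaster (NOT the paper's LSTM): extrapolate the last
    observed slope `horizon` steps ahead."""
    if len(history) < 2:
        return history[-1]
    slope = history[-1] - history[-2]
    return history[-1] + slope * horizon


def predictive_trigger(latency_ms: Sequence[float],
                       sla_ms: float,
                       horizon: int,
                       forecaster: Callable[[Sequence[float], int], float]
                       = linear_forecast) -> Optional[int]:
    """Index of the first sample at which the forecast exceeds the SLA."""
    for t in range(len(latency_ms)):
        # Only samples observed up to and including time t are visible.
        if forecaster(latency_ms[: t + 1], horizon) > sla_ms:
            return t
    return None


if __name__ == "__main__":
    trace = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0, 1.1]  # steadily rising latency
    print("reactive fires at index:", reactive_trigger(trace, sla_ms=1.05))
    print("predictive fires at index:",
          predictive_trigger(trace, sla_ms=1.05, horizon=3))
```

On a steadily rising trace, the predictive trigger fires before the threshold is actually crossed, which is the lead time a forecast-based orchestrator can use to migrate or scale a VNF in advance.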

8. Conclusions and Future Work

We proposed a latency-aware, AI-driven NFV slicing orchestration framework designed to meet the stringent service-level demands of time-sensitive 6G applications, particularly those governed by URLLC requirements. By integrating a predictive LSTM-based latency forecasting model into the orchestration logic, combined with a cost-aware optimization formulation for VNF placement and migration, the proposed framework proactively anticipates SLA violations and dynamically reconfigures slice resources across the NFVI to preserve end-to-end latency guarantees. Built upon the ETSI NFV MANO architecture, the framework ensures compatibility with existing standards while offering intelligent automation suited for 6G networks.

The framework was formally modeled using a suite of latency decomposition and forecasting equations, and orchestrator behavior was structured through algorithms that enable adaptive and predictive control. Extensive simulations demonstrated that the predictive orchestrator significantly outperforms reactive and static baselines, reducing SLA violations by over 80%, maintaining sub-millisecond latency across dynamic loads, and minimizing unnecessary migrations. Additionally, the framework consistently delivered high forecasting accuracy, validating the suitability of the LSTM predictor for proactive service management under ultra-low latency constraints. These results affirm that embedding AI within the orchestration layer enhances both responsiveness and resource efficiency in NFV-based slice deployments.

While the evaluation was conducted using synthetic data due to the current lack of publicly available 6G NFV testbeds, the simulation was grounded in realistic traffic patterns and system parameters based on established 5G/6G research. This allowed for a controlled, reproducible assessment of orchestration strategies. Nonetheless, the absence of real-world latency traces and platform constraints represents a limitation that will be addressed in future work. Empirical validation on testbed environments such as OpenAirInterface, ETSI NFV PoC platforms, or integrated RAN-core emulators is envisioned as a next step, enabling evaluation under practical deployment conditions.

Future extensions of this work will focus on enhancing orchestration scalability to multi-domain and federated environments, where latency prediction and migration decisions must operate across administrative boundaries. Additional optimization objectives such as energy efficiency, mobility support, and jitter control will also be incorporated to accommodate diverse 6G service classes. Furthermore, integrating reinforcement learning agents and distributed forecasting architectures may offer enhanced adaptability and real-time responsiveness under volatile workloads. As 6G evolves toward AI-native network management, the proposed orchestration framework represents a foundational step toward intelligent, latency-assured service delivery in virtualized network infrastructures.