GPTs and the Choice Architecture of Pedagogies in Vocational Education

Abstract

1. Introduction

Introduction to GPTs and Their Potential

2. Materials and Methods

2.1. Limitations of the Study

2.2. Participants

- What is your role at the College and subject specialism?

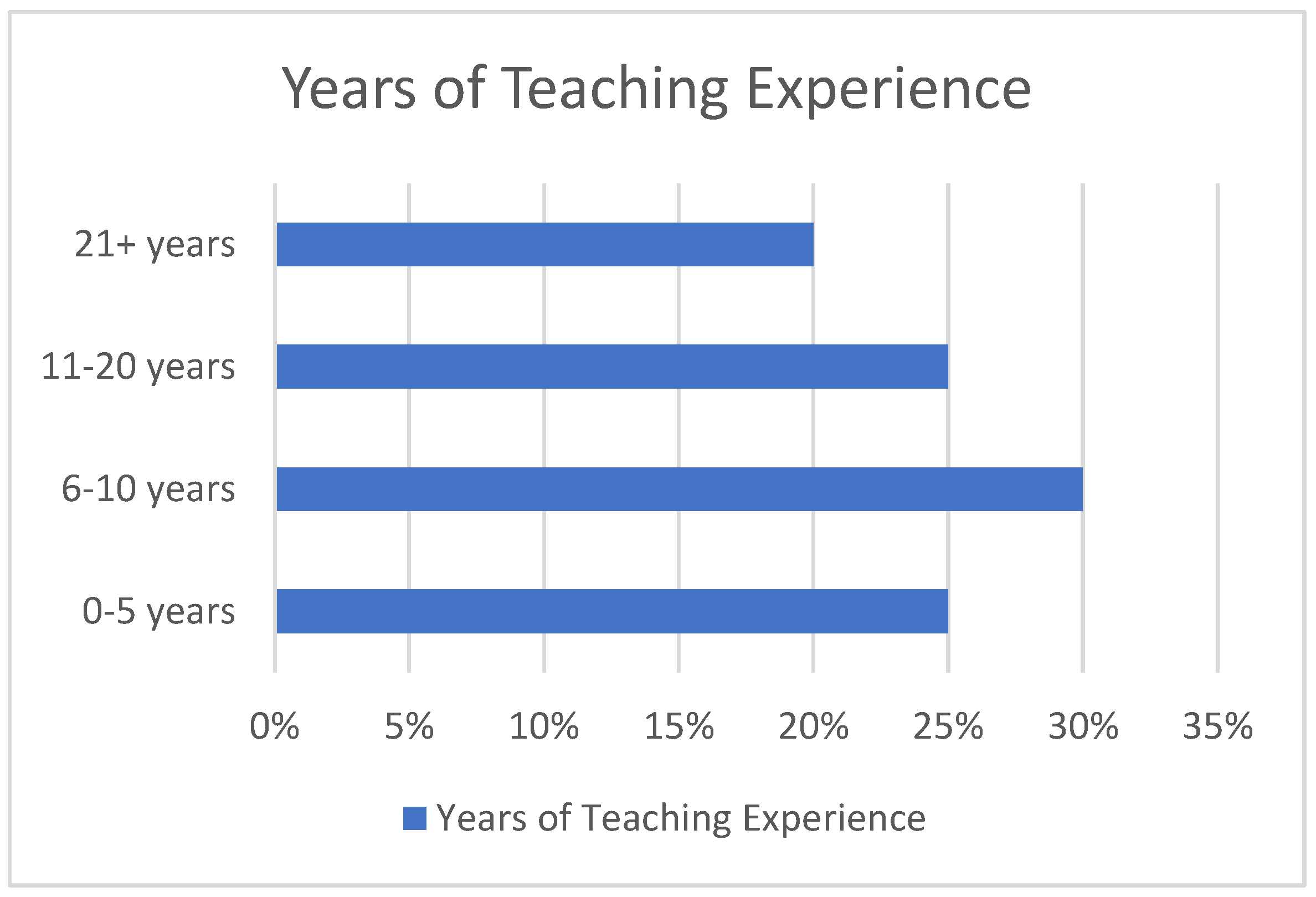

- How many years teaching experience do you have?

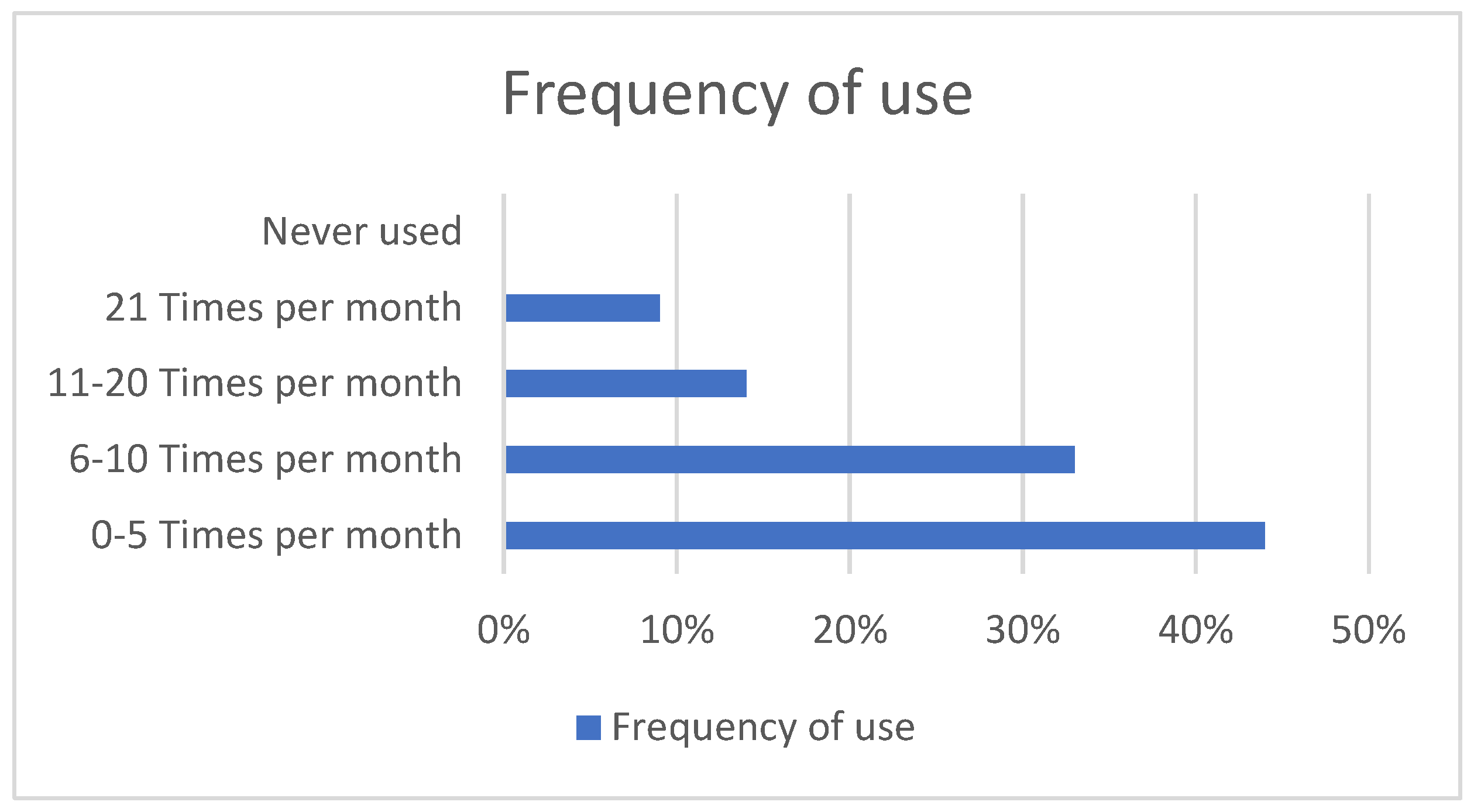

- How often do you use artificial intelligence tools (such as TeacherMatic, Gemini, ChatGPT, etc.) for work each month?

- Which is the AI tool that you use most frequently?

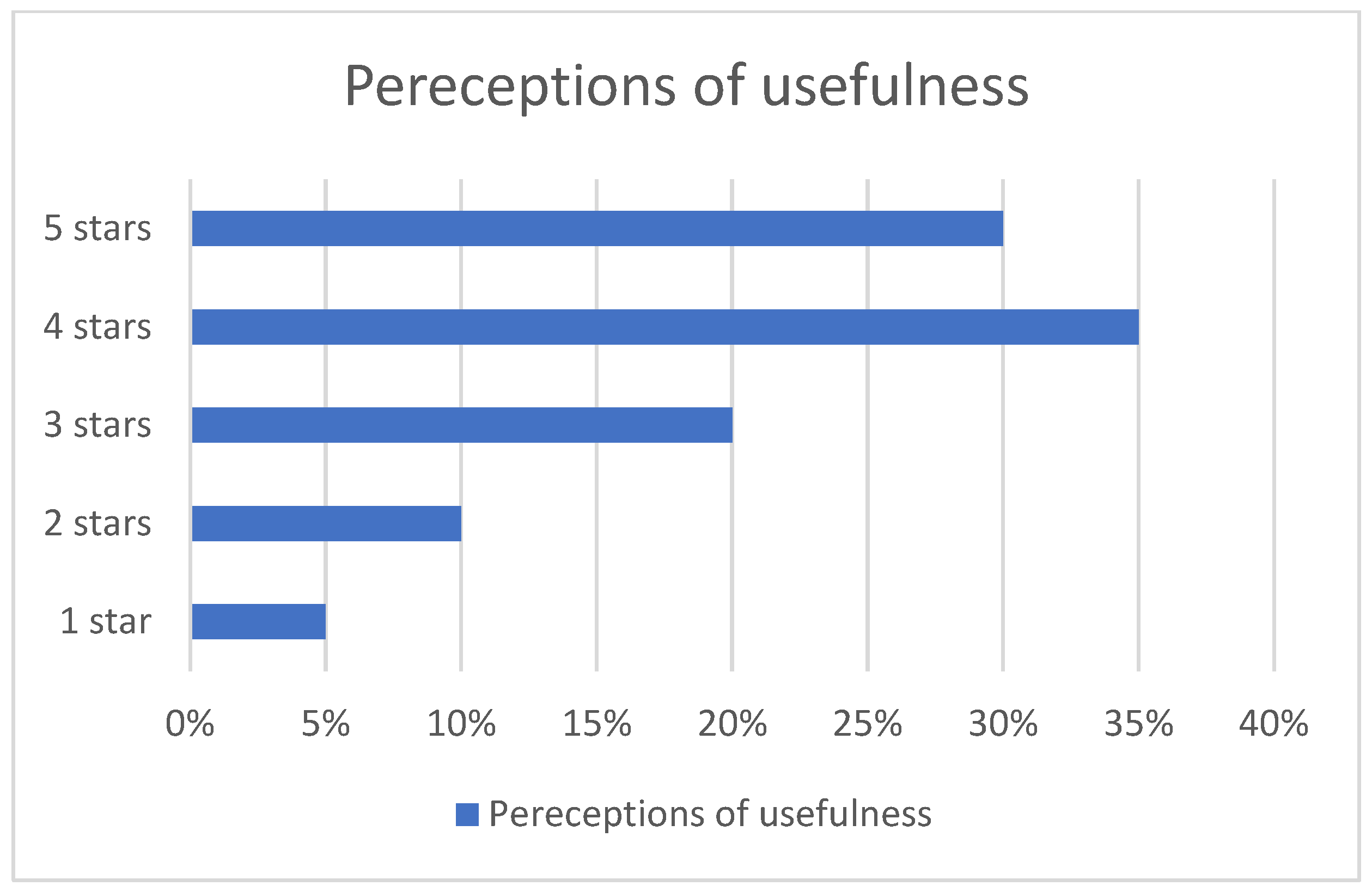

- On a scale of 1–5 Stars (1 being ‘Not at all useful’ and 5 being ‘Extremely useful’), how would you rate these tools in terms of supporting your workload?

- Which functions of these AI tools do you use most frequently? (Select up to three.)

- How often do you need to adapt the output created by AI?

- In general, do you think AI tools are worthwhile for educators?

- Is there anything else you would like to share about your experience with using AI tools in education?

3. Results

3.1. Key Quantitative Findings and Descriptive Results

- Total responses: 60

3.1.1. AI Use Frequency (Monthly)

3.1.2. Usefulness Ratings (1–5)

- Q. How helpful do teachers find AI for supporting workload?

3.1.3. Tasks Delegated to GPT/AI Systems

- Cross-tabulation of usefulness by frequency of AI use

- Correlation between teaching experience and AI adoption

- Participant case examples and illustrative quotes

3.1.4. Cross-Tabulation and Group Comparisons

- 0–5: n = 16, mean usefulness ≈ 4.00, SD ≈ 1.06

- 6–10: n = 12, mean usefulness ≈ 4.08, SD ≈ 0.67

- 11–20: n = 3, mean usefulness ≈ 4.33, SD ≈ 1.15

- 21+: n = 1, mean usefulness = 5.0

- Never used: n = 1 (no usefulness rating)
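The group summaries above report a count, a mean usefulness rating and a standard deviation for each band. As a minimal sketch of how such summaries might be reproduced from per-respondent survey records, the Python below groups (band, rating) pairs and computes n, mean and SD for each band. The record layout, the function name `summarise_by_group` and the toy records are hypothetical and are not taken from the study. The sketch assumes the reported SDs are sample (n − 1) standard deviations, and it counts a respondent with no rating (as in the "Never used" band) in n while excluding them from the mean and SD.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Optional


def summarise_by_group(
    records: list[tuple[str, Optional[int]]],
) -> dict[str, dict[str, Optional[float]]]:
    """Return n, mean and sample SD of usefulness ratings for each band.

    Hypothetical helper: n counts every respondent in the band; a rating
    of None (no rating given) is excluded from the mean and SD.
    """
    ratings_by_band: dict[str, list[Optional[int]]] = defaultdict(list)
    for band, rating in records:
        ratings_by_band[band].append(rating)

    summary: dict[str, dict[str, Optional[float]]] = {}
    for band, ratings in ratings_by_band.items():
        rated = [r for r in ratings if r is not None]
        summary[band] = {
            "n": len(ratings),
            "mean": mean(rated) if rated else None,
            # statistics.stdev divides by n - 1 and needs at least two values.
            "sd": stdev(rated) if len(rated) >= 2 else None,
        }
    return summary


if __name__ == "__main__":
    # Hypothetical toy records (band label, 1-5 rating); not the study's data.
    toy_records = [("A", 4), ("A", 5), ("A", 3), ("B", 5), ("C", None)]
    for band, stats in summarise_by_group(toy_records).items():
        print(band, stats)
```

Leaving the SD undefined for a band with fewer than two ratings mirrors the single-response band above, for which only a mean is reported.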

3.1.5. Influence of Extent of Teaching Experience on Perceptions of GPTs
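The results outline lists a correlation between teaching experience and AI adoption, but the coefficient used is not specified. Because both variables are collected as ordered response bands, a rank-based measure such as Spearman's rho is one reasonable choice, and the Python below sketches it under that assumption. The function names `average_ranks` and `spearman_rho` are hypothetical. Bands are assumed to be coded as ordinal integers (lowest band = 1, next = 2, and so on). Tied values, which are common in banded survey data, receive the mean of their ranks.

```python
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values 1..n in ascending order; ties share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the last sorted position tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        # Sorted positions i..j correspond to ranks i+1..j+1; use their mean.
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when either variable is constant")
    return cov / (var_x * var_y) ** 0.5


if __name__ == "__main__":
    # Hypothetical ordinal codes, not the study's data:
    # experience band (1 = lowest) and monthly-use band (1 = lowest).
    experience = [1, 1, 2, 3, 4, 2]
    use = [3, 2, 2, 1, 1, 3]
    print(f"Spearman's rho = {spearman_rho(experience, use):.3f}")
```

In Python 3.12 and later, the standard library also offers a Spearman option via `statistics.correlation(x, y, method="ranked")`; the explicit version is kept here so the ranking of tied responses is visible.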

3.1.6. Illustrative Anonymized Comments from Open-Ended Questions

- “The end result is only ever as good as the prompts you put into whichever AI generator you are using.”

- “Some features of TeacherMatic are used more frequently than others. It’s good that there is a ‘favourites’ option.”

- “There is a general expectation that they should be better than they are. (e.g., produce a scheme of work or lesson plan perfectly first time).”

- “Please do not utilise A.I in education just to save time on teaching: use it to enhance learning experiences.”

- “They are useful in the current teaching environment. But they shouldn’t have to be if the workload was balanced correctly. If we continue to have to use it, will it de-skill teachers [?]. Will we run the risk of lesson be created by AI and the teacher not knowing or understanding how or if it meets the needs of learners [?]. Meaning that lesson are used inappropriately.”

- “Often it is sold as reducing your workload but I’m not sure. Often the quality or robustness of the product it gives you requires more work to make it effective. I worry the impact it will have on student teachers and the lessons they will lose in their early career as they use AI.”

3.1.7. Choice Architecture and GPTs: Interpretation of the Data

4. Discussion

Recommendations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| VE | Vocational Education |
| GPT | Generative Pre-trained Transformers |
| AIE | Artificial Intelligence in Education |
| CPD | Continuing Professional Development |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Scott, H.; Dwight, A. GPTs and the Choice Architecture of Pedagogies in Vocational Education. Systems 2025, 13, 872. https://doi.org/10.3390/systems13100872
