2.1. AI-Powered Smart Home Technologies

In this study, AI-powered smart homes are defined as residential environments equipped with Internet of Things (IoT) devices and intelligent systems that utilize AI to automate tasks, optimize energy use, enhance security, and personalize user experiences [14,15]. These technologies include devices such as voice assistants, security systems, and smart appliances, all of which rely on AI algorithms for adaptive decision-making.

Smart home technology has seen significant adoption over the last few years, driven by advances in the IoT, wireless communication, and AI. A smart home is an application of IoT that includes smart TVs, security cameras, smart locks, smart lighting, and much more [14]. By connecting electronic devices through IoT, these technologies give households more control over their homes. Additionally, the integration of AI into these smart technologies has further enhanced user experiences, because AI can analyze user behavior and thereby offer more personalized experiences [16] that match household preferences and habits. Unlike traditional technology, where users play a key role in its functionality, AI-powered technologies rely on machine learning (ML) algorithms. Notably, AI-powered security systems can enhance home security by employing facial recognition and behavior analysis to detect potential threats, alert households to unusual activity, and reduce false alarms.

In addition to security systems, another example of AI-powered smart home services is voice assistants, such as Amazon Echo (Alexa) and Apple HomePod (Siri), which are among the most popular technologies in home ecosystems [2]. Beyond simple tasks, such as providing weather conditions, Alexa, for example, can serve as a centralized unit for smart home ecosystems, allowing users to automate many tasks, such as controlling lighting in the home, adjusting thermostats, and initiating routines (e.g., locking the door).

Smart home technologies continue to evolve, and AI is becoming an integral part of the smart home environment, thus shaping its future. Concerning security, AI can enhance home security by analyzing security camera footage to help detect unusual activity. However, the vast amounts of personal data that AI-powered IoT devices generate and analyze daily, along with Internet technology in general, raise serious security concerns [17]. AI-powered IoT devices expose households to serious cybersecurity risks [18], leaving their systems vulnerable to malicious actors [19] and potentially putting their privacy at significant risk.

Within the wider AI ecosystem, smart homes provide a distinctive context because they embed AI and IoT directly into the household environment, shaping daily routines and personal security practices. In contrast, other AI domains, such as smart cities, healthcare, or autonomous vehicles, operate at larger infrastructural or institutional levels, often governed by stricter regulations and collective oversight. This comparison highlights both the uniqueness of smart homes, where individual trust and cybersecurity awareness are central, and their relevance to broader AI adoption challenges, where user confidence and risk management are equally critical [1,2,20,21].

From a systemic viewpoint, smart homes embody autonomous capabilities where devices interact dynamically with users and their environment. This interaction fosters adaptive responses to security threats, promotes household resilience, and stabilizes routines. By embedding AI within everyday infrastructures, smart homes reflect systemic qualities of flexibility and robustness, making their study especially relevant for understanding larger socio-technical transitions [15,22,23].

From a systems theory perspective, smart homes can be understood as autonomous socio-technical subsystems whose functionality depends on user trust and cybersecurity awareness. This perspective highlights their capacity for self-regulation and continuity under uncertainty, offering a theoretical lens for explaining how micro-level adoption drivers connect to macro-level systemic stability.

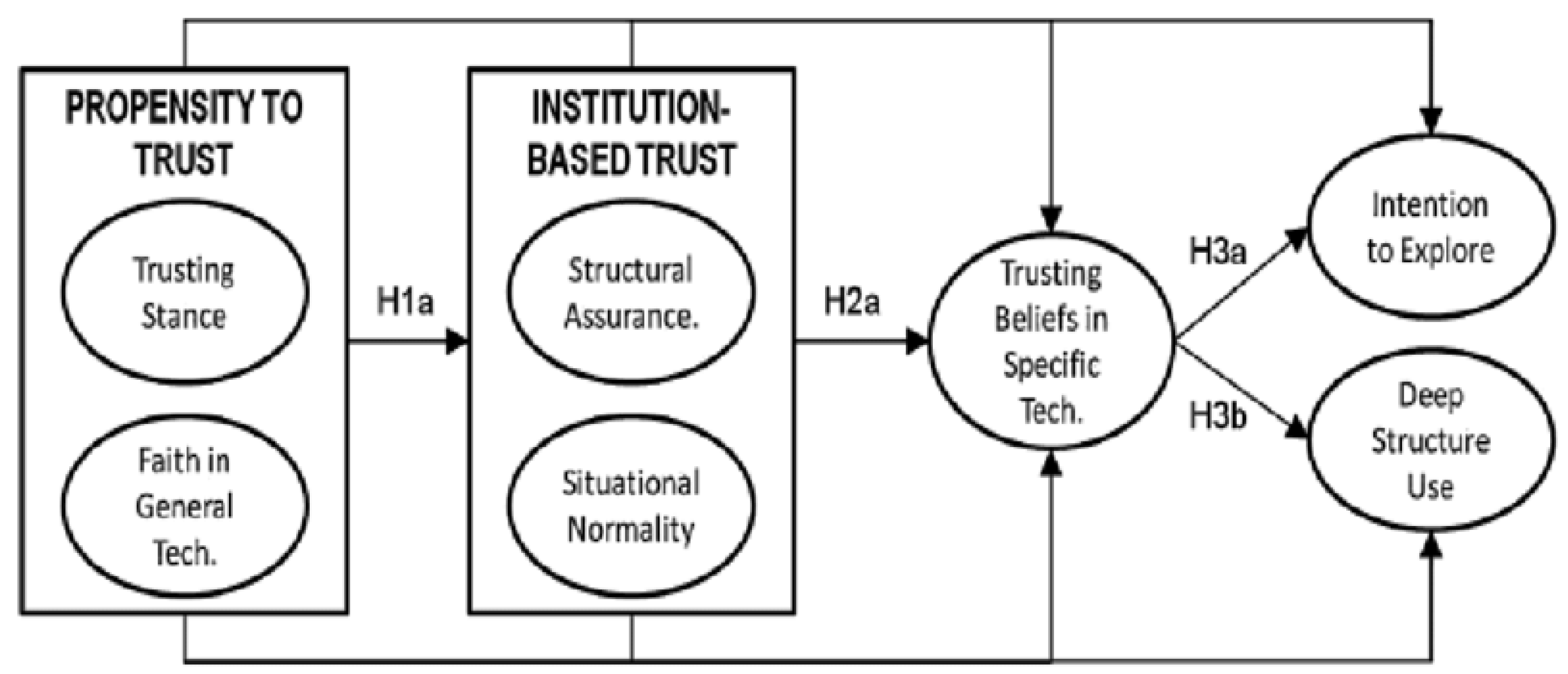

2.2. Human Trust in AI-Powered Technologies

In this study, human trust in AI is conceptualized as the willingness of individuals to rely on AI systems for decision-making and everyday functions, despite uncertainties about outcomes [24,25]. In the context of this research, human trust in AI refers specifically to users’ confidence in the reliability, security, and privacy of AI-powered smart home devices.

Trust influences both the intention to use technology and its ongoing use [24], and it plays a crucial role in the adoption of smart home technology. Household trust perception tends to be a determining factor in the decision to adopt and use AI-powered IoT devices [26]. However, AI-powered technologies pose a threat to trust [27] by threatening households’ security and privacy.

The literature on users’ acceptance of technology has emphasized that perceived usefulness, ease of use, trust, and privacy determine users’ behavior toward technology adoption [28,29]. Trust is seen as an essential factor in adopting new technology [30]. Within the AI literature, a recent systematic review of user trust in AI shows that users’ knowledge of AI, prior experience with it, demographic factors, and socio-ethical factors (fairness and transparency) positively influence users’ trust in AI-powered technologies [31]. However, many users lack knowledge about cyberattacks and cannot tell when their devices are being exploited.

From a different angle, the growing integration of IoT into daily life presents another trust challenge: it brings about significant privacy and data security challenges, causing users to hesitate to share data that typically would not raise any privacy concerns [6]. From a technology perspective, this could limit the effectiveness of IoT and AI-powered services, because IoT relies heavily on the data shared by users to provide optimal services. From the human perspective, users who refuse to share essential data may miss out on enhanced services, which could also negatively impact IoT adoption. In fact, growing evidence from prior research suggests that the current AI-powered approach overlooks the broader implications of AI for individuals and society, such as ethical considerations and privacy, by focusing solely on a technical perspective [32,33]. Privacy concerns and users’ control over their personal information significantly influence their decisions about sharing data [34]. Trust, security, and privacy are among the top user concerns regarding the adoption of IoT [35].

Despite their growing popularity, IoT and smart home devices raise critical cybersecurity challenges due to numerous vulnerabilities [36]. These risks arise from the interaction of constant connectivity, cloud-based data processing, limited user control over the data, and security mismanagement [37]. While AI-powered IoT devices, such as those provided by Amazon, bring convenience to homeowners, they are vulnerable due to their continuous listening mode and reliance on internet-based services for functionality, exposing them to various cybersecurity risks [8]. This includes the potential for these devices to be exploited, thus putting homeowners at risk. For example, attackers can exploit insecure Wi-Fi configurations to gain unauthorized access to devices and manipulate their functionality. The Mirai botnet is a well-known case in which compromised IoT devices were hijacked and used to carry out a massive Distributed Denial-of-Service (DDoS) attack [38]. Another issue is that many of these devices are poorly secured (e.g., with default passwords), which makes them easier to exploit over time [2,36].

Concerning data privacy, voice assistant technology faces real security risks, such as voice spoofing, where commands can be initiated to manipulate these devices without user acknowledgment [39]. Additionally, resold or rented devices may raise privacy concerns, as they may retain user data. A report on smart homes has shown that 40.8% of homeowners had at least one vulnerable device, which puts the entire home at risk [40]. As a consequence, many industrial and academic studies have discussed the importance of cybersecurity awareness in overcoming the challenges and concerns posed by AI-powered technology, thereby empowering IoT users with the necessary knowledge for protecting their devices and data [41,42,43]. Cybersecurity awareness is a key solution for addressing users’ concerns by offering this knowledge.

Cybersecurity awareness must incorporate best security practices to mitigate the risks posed by these technologies. For example, with IoT, it is recommended that users change default passwords, enable encryption, update firewalls, and meet other requirements to ensure privacy and tackle security challenges.
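As a rough illustration of how the practices listed above could be turned into a simple checklist, the following Python sketch audits a device's settings against three of them. It is only a sketch under stated assumptions: the `DeviceConfig` fields, the `audit_device` helper, and the list of default passwords are hypothetical names introduced for this example and do not come from the cited studies or from any vendor's API.

```python
from dataclasses import dataclass

# Hypothetical factory-default passwords, for illustration only.
DEFAULT_PASSWORDS = {"admin", "password", "12345", "0000"}


@dataclass
class DeviceConfig:
    """Illustrative settings for one smart home device (all fields are assumptions)."""
    name: str
    password: str
    encryption_enabled: bool
    firewall_up_to_date: bool


def audit_device(device: DeviceConfig) -> list[str]:
    """Return the recommended practices that this device does not yet follow."""
    findings = []
    if device.password.lower() in DEFAULT_PASSWORDS:
        findings.append("change default password")
    if not device.encryption_enabled:
        findings.append("enable encryption")
    if not device.firewall_up_to_date:
        findings.append("update firewall")
    return findings


# Two made-up devices: one left on factory settings, one hardened.
camera = DeviceConfig("camera", "admin", encryption_enabled=False, firewall_up_to_date=True)
lock = DeviceConfig("smart lock", "c0rrect-h0rse-battery", encryption_enabled=True, firewall_up_to_date=True)

for device in (camera, lock):
    print(device.name, audit_device(device))
```

In this made-up example, the camera is flagged for its default password and disabled encryption, while the lock produces no findings. A real audit would need device-specific checks that this sketch does not attempt to model.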

Trust also carries systemic implications. High levels of user trust strengthen the stability of the adoption ecosystem, while distrust may destabilize it. Trust can be seen as a systemic stabilizer that maintains continuity, resilience, and adaptation within socio-technical systems such as AI-powered smart homes [31,44,45,46].

2.4. Role of Cybersecurity Awareness

Cybersecurity Awareness (CSA) is defined as an individual’s knowledge, perception, and proactive behavior regarding potential cyber risks and the protective measures required to mitigate them [55,56]. It encompasses both the cognitive understanding of security threats, such as privacy risks, data breaches, or unauthorized access, and behavioral practices, including updating software, using strong passwords, and adjusting device privacy settings. In the context of AI-powered smart homes, CSA refers specifically to household users’ ability to recognize vulnerabilities in smart devices and adopt preventive actions that safeguard personal data. Clarifying CSA in this way highlights its dual role as both a cognitive factor influencing trust and a behavioral driver of adoption.

Numerous technological solutions have been developed to mitigate cybersecurity threats linked to IoT. Several studies have examined this issue from a technical angle, including proposing a detection system that uses deep learning techniques to safeguard the IoT environment. Diro and Chilamkurti [57] emphasize the importance of cryptographic mechanisms [58]. Data privacy is an essential component of IoT security; however, it is also one of the major challenges associated with IoT [59,60]. This is due to the lack of household awareness of passive data collection and the challenges in managing such matters [61]. Dang et al. [62] propose an authentication scheme to provide additional protection in the cloud server in IoT.

Concerning the security challenges associated with IoT, privacy is consistently linked to CSA. Privacy is defined as the user’s right to control access to their personal data [63]. However, privacy takes on a new dimension in AI-powered smart home devices, as these constantly collect and passively process personal information, such as voice recordings and behavior patterns, which can pose a threat to user privacy [10]. When users understand the extent and sensitivity of this data, their privacy concerns increase, leading them to explore security features and adopt safe practices. Thus, CSA influences how privacy concerns translate into actions. Privacy concerns drive users to put more effort into understanding and managing their privacy [64]. While both expert and non-expert users may worry about privacy, those with higher cybersecurity awareness are more likely to respond to technical signals, such as permission settings [65].

Another important aspect that influences the protection of the user’s privacy and the adoption of AI-powered home technologies is the role of information security policies (ISPs). Typically, these set the standard for how personal data is collected, stored, and shared. In a smart home, ISPs are included within the user agreement or app permissions. However, many users are unaware of them [10] and accept them without fully understanding the implications for their privacy and security. This lack of awareness can negatively impact the level of trust and result in slow adoption. Awareness of ISPs is crucial for effective cybersecurity practices [66].

Although AI-powered smart home technologies are introduced for users’ convenience, their integration with home networks introduces security risks, such as unauthorized surveillance or data breaches. Additionally, vulnerabilities in smart homes, such as weak passwords or unsecured communication protocols, can often be exploited. Users’ understanding of potential vulnerabilities is essential for them to take preventive action [67]. In other words, user awareness and understanding of these vulnerabilities can strengthen their overall cybersecurity stance.

The rapid development of IoT makes it a prime target for cybercriminals and exposes it to additional security threats [68]. In addition to technical vulnerabilities, many studies have found that there are non-technical reasons behind cybersecurity attacks in IoT. These include a lack of awareness of cybersecurity [69], as well as poor compliance with ISPs and recommendations [70]. The number of non-technical users of the smart home is on the rise, yet most of them lack an understanding of CSA and privacy [71]. While recent studies have shown that households may have CSA, they can lack knowledge regarding the risks they are exposed to by using IoT. In recognition of the increased security risk, the U.S. Federal Bureau of Investigation (FBI) has issued many public safety alerts, warning households that cybercriminals exploit vulnerabilities in IoT devices such as smart cameras and routers [72,73]. Households, especially those that are not technically inclined, need to pay extra attention to safeguarding their privacy and improving their overall security, particularly with regard to CSA.

Theoretically, CSA has been incorporated into several theories to examine different phenomena. For example, Ng et al. [55] extended the Health Belief Model (HBM) by incorporating CSA as a factor in studying users’ computer security behavior in terms of adopting security measures. CSA was found to be significant in shaping users’ decisions to take proactive action. They incorporated it into a broader framework that related CSA to human behavior in an information security context. Moreover, the Technology Acceptance Model (TAM) suggests that perceived usefulness and perceived ease of use drive individuals’ behavioral intention [28,74]. In this regard, CSA could enhance perceived ease of use and usefulness by providing users with the confidence to adopt secure systems.

Furthermore, the Unified Theory of Acceptance and Use of Technology (UTAUT) emphasizes that facilitating conditions are significant determinants of intention [75]. Awareness of cybersecurity aligns with facilitating conditions and potentially enhances users’ trust, while positively impacting both intention and actual behavior. Protection Motivation Theory (PMT) asserts that an individual’s awareness of threats, as well as the perceived efficacy of coping mechanisms, significantly influences their protective behavior [76,77]. This leads us to believe that cybersecurity awareness has high potential to drive technology adoption.

From the above, it can be seen that users need to be aware of potential threats and employ safeguards in order to stay secure and reduce the probability and the impact of security incidents. According to CNSS [78], safeguards are “protective measures and controls prescribed to meet the security requirements … may include security features, management constraints, personnel security, and security of physical structures, areas, and devices” (p. 172). Applying security safeguards in smart homes involves both technical mechanisms (e.g., encryption) and intelligent user behavior (e.g., updating device firmware, reviewing privacy settings, and ensuring strong passwords).

We believe CSA is likely to affect intention and actual behavior when adopting AI-powered smart home technologies. In our view, users with high CSA could be more critical of technologies that do not provide a strong security solution. Those users are more likely to act as savvy consumers who carefully assess the security and privacy features of technologies and whether these meet the desired standards before adoption. Their critical view reflects selective adoption, rather than full acceptance or rejection of those technologies. This assumption is supported by the idea that CSA significantly influences users’ decision-making processes [56,79].

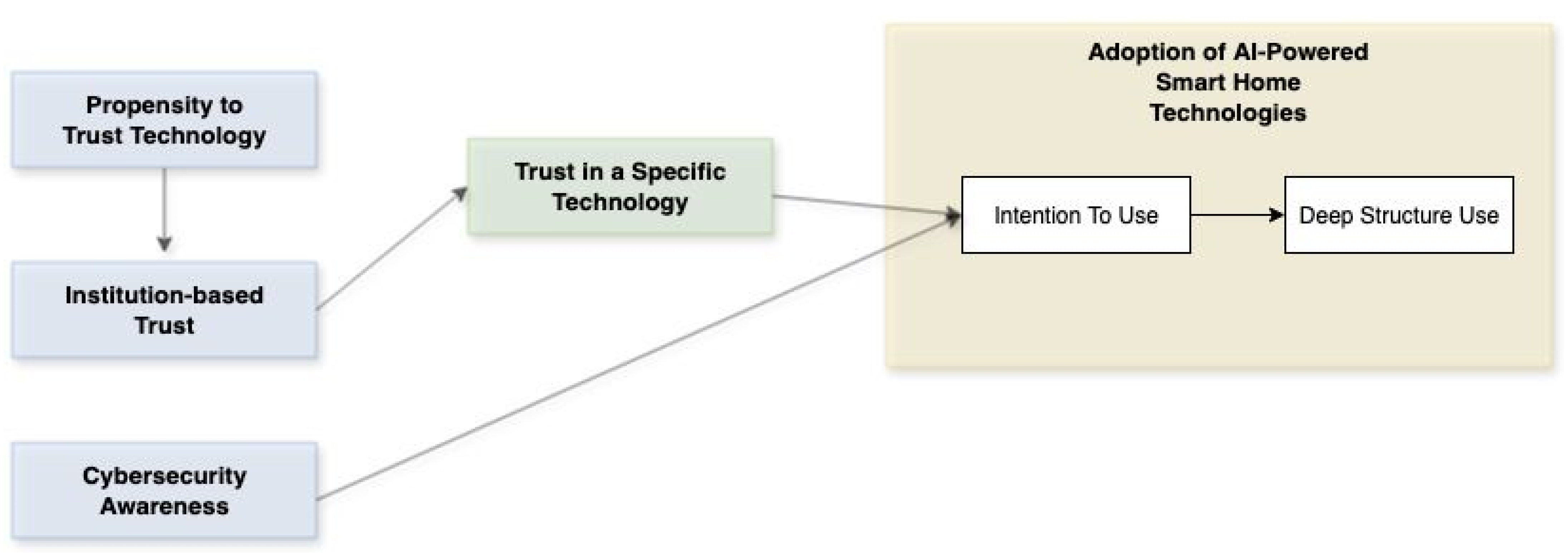

We hold that CSA could be a meaningful factor for TTM, as it offers a way to capture how users’ security knowledge affects both their intention to use AI-powered smart home technologies and their actual adoption behavior. Considering all the above, we argue that CSA is a valuable addition to TTM, as it could influence the adoption of AI-powered smart homes. Each of TTM’s constructs (PPT, SAT, and TST) highlights a different and important aspect of trust, while CSA adds a layer concerning security threats. For example, in the context of adopting smart home technology, a user with a high PPT may adopt it without question, while one who relies on SAT might do so based on its certifications or compliance with regulations. A user with high CSA would go beyond that by looking at how well those technologies handle potential cybersecurity risks. This leads us to believe that intention and actual behavior in adopting AI-powered smart home technologies may be influenced by CSA levels.

Table 1 demonstrates the relation of cybersecurity awareness to TTM’s constructs. Hence, we hypothesize:

H4. Cybersecurity Awareness will positively affect individuals’ intention to explore the technology in the adoption of AI-powered smart home technologies.

The relationship between intention and deep structure use is influenced by the level of trust users develop in a specific technology. Those who find it functional, helpful, and reliable will develop an intention to explore its features. The intention to use the system leads to deep structure use, where users move beyond basic operations and engage in more advanced functionalities. They gain the necessary confidence to try complex features through their direct experience with the technology, which forms their trusting beliefs. Through this process, intention functions as a motivational link that converts trust into practical technology usage with value [47,80].

Dinger et al. [81] state that intention to explore and deep structure use are post-adoptive behaviors that are influenced by both emotional and cognitive factors. Users develop trusting cognitive beliefs about technology’s helpfulness, capability, and reliability through their emotional experiences of both positive and negative events. Positive emotions both build trust and boost user willingness to use the technology, which shows that emotions can motivate users beyond logical evaluation. The effective use of information technology depends on the fundamental interaction between emotional and cognitive processes. Moqbel et al. [82] identify two essential components of technology engagement after adoption: user intention and deep structure use. Intention reflects the user’s deliberate choice to continue using the system and therefore helps predict future actions. The system’s advanced features enable deep structure use, which allows users to achieve meaningful outcomes that go beyond basic functionality. The willingness to use technology defines intention, whereas deep structure use demonstrates how well one uses the technology.

A strong intention often leads to deeper usage, as motivated users are more likely to explore and apply complex functionalities. These constructs, together, help explain both the readiness to use a technology and the extent of its actual use. Therefore, we hypothesize the following:

H5. Individuals’ positive intention behavior significantly enhances deep structure use in the adoption of AI-powered smart home technologies.

Cybersecurity awareness enhances not only individual protection but also systemic resilience. By equipping users with knowledge and proactive behaviors, CSA reduces the overall vulnerability of interconnected devices, thereby contributing to the adaptive capacity and stability of smart home ecosystems [83,84].