1. Introduction

Artificial intelligence (AI) has witnessed over sixty years of development, leading to transformative applications that significantly impact various aspects of our lives. The core objective of AI is to emulate and augment human intelligence, resulting in the creation of intelligent machines [1,2]. As AI systems rapidly evolve, their integration becomes increasingly prevalent in governance [3]. Government and agency managers grapple with decision-making challenges involving vast amounts of data, necessitating the consideration of rational choices coupled with human experiential insights [1,3].

The ability of managers to navigate rational decisions is pivotal to their tactical and strategic choices, particularly within the domain of e-government. AI technology’s growing significance in modern enterprises is chiefly attributed to its proven capacity to alleviate administrative burdens, enabling data-driven decisions instead of relying solely on intuition [4]. While previous studies assert that AI can engage in rational thinking and actions [5,6,7], others take a more cautious view [8,9,10,11]. Their skepticism centers on AI’s ability to surmount obstacles to practical reasoning.

Studies on the Jordanian e-government’s use of AI are nascent, focusing on the expected impacts and theoretical aspects [12]. There is substantial room for an empirical study of natural and AI applications, especially in government sectors [8,9,13]. AI holds immense potential across various government sectors, encompassing education, healthcare, transportation, telecommunications, infrastructure, data protection, finance, policymaking, legal systems, and research and development; governments are therefore compelled to consider and incorporate AI to enhance the efficiency of their decision-making processes [10,14]. This study focuses specifically on machine learning (ML), the most commonly used technique within AI. Machine learning, a subfield of artificial intelligence, enables machines to improve their performance by learning from available data, without the need for explicit programming [15,16,17]. Supervised machine learning (Sup_Lea) and unsupervised machine learning (Uns_Lea) constitute the two main dimensions of ML: the former extracts knowledge from observed outcomes, and the latter discovers insights from data without predetermined results [15,16,18].
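To make the distinction between the two dimensions concrete, the following minimal Python sketch contrasts them on toy data. Everything in it is an illustrative assumption rather than part of the study: the data values, the labels, and the helper names `fit_centroids`, `predict`, and `two_means` are hypothetical, and a nearest-centroid rule and a one-dimensional two-cluster k-means stand in for the richer algorithms used in practice.

```python
from statistics import mean

# Hypothetical toy data: (monthly service requests, label).
# The labels are only available in the supervised setting.
labeled = [(1.0, "low"), (2.0, "low"), (3.0, "low"),
           (8.0, "high"), (9.0, "high"), (10.0, "high")]


def fit_centroids(samples):
    """Supervised: learn one centroid per known label."""
    groups = {}
    for x, label in samples:
        groups.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in groups.items()}


def predict(centroids, x):
    """Assign x to the label whose centroid is nearest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def two_means(values, iterations=10):
    """Unsupervised: 1-D k-means with k = 2; no labels are used.

    Assumes the input contains at least two distinct values.
    """
    lo, hi = min(values), max(values)
    for _ in range(iterations):
        near_lo = [v for v in values if abs(v - lo) <= abs(v - hi)]
        near_hi = [v for v in values if abs(v - lo) > abs(v - hi)]
        lo, hi = mean(near_lo), mean(near_hi)
    return sorted(near_lo), sorted(near_hi)


centroids = fit_centroids(labeled)               # learned from observed outcomes
prediction = predict(centroids, 7.5)             # classify a new observation
clusters = two_means([x for x, _ in labeled])    # structure found without labels
print(prediction, clusters)
```

The supervised half needs the labels to learn anything, whereas the unsupervised half recovers the same two groups from the raw values alone.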

Furthermore, there is a growing need for a comprehensive understanding of AI-based applications, including associated problems and limitations [14,15]. Budget allocation prioritizes maintaining legacy systems, and there is a notable gap in contextual ML knowledge among Jordanian government employees, potentially affecting citizen trust and satisfaction with public services [12,19,20].

In tackling the issue of insufficient contextual awareness within ML solutions, this study advocates for a sustainable approach that merges human expertise with machine capabilities, particularly within the e-government domain. Integrating these two pillars enriches decision-making processes and fosters a holistic perspective that extends into administrative science studies. This aligns with Simon’s decision-making model [21]. This study aims to determine how ML best supports decision-making, identifying suitable ML techniques for rational decision-making (RDM) and emphasizing the importance of incorporating diverse perspectives and sustainable practices into our methodologies. By incorporating sustainability principles such as stakeholder engagement, continuous improvement, collaborative decision-making, and knowledge sharing, we can ensure that our decision-making processes are not only more inclusive and equitable but also sustainable in the long term.

The primary research question of this study is as follows: “What is the effect of using machine learning (supervised learning and unsupervised learning) on rational decision-making, with trust as a mediator in this relationship?” To address it, this study seeks insights into the impact of supervised and unsupervised ML on rational decision-making in the context of Jordanian e-government. It also investigates the impact of ML on trust, the influence of trust on RDM, and the mediating role of trust in the relationship between ML and RDM. In doing so, this study aims to clarify the nuanced dynamics between machine learning, trust, and rational decision-making in the specific context of Jordanian e-government transformation, contributing to a deeper understanding of the effective utilization of AI technologies in this domain.

The remaining sections of this document are organized as follows: Initially, a comprehensive literature review is provided, followed by a discussion on the conceptual framework of the study and the development of hypotheses accordingly. Following this, the study methodology is explained, and the data analysis outcomes are then introduced. The next section delves into a discussion of the findings and explores their implications. Lastly, the document concludes with a discussion on limitations, future study prospects, and final conclusions.

2. Related Work

The literature review used Scopus, Web of Science, and Google Scholar databases. This study focused on literature published within the last 10 years to ensure that the information on the machine learning variable was up to date. However, for theories and concepts related to rational decision-making, older works were also included to provide a comprehensive understanding. Keywords such as “machine learning”, “decision-making”, “e-government”, “artificial intelligence”, and “public sector” were employed to identify relevant studies.

2.1. Machine Learning

Machine learning, particularly in supervised and unsupervised forms, supports decision-making processes across various domains, including e-government [3,5,14,20]. Supervised machine learning has been widely adopted across various domains because it can make predictions from labeled historical data. In finance, for example, supervised learning models are used to predict stock prices, detect fraudulent transactions, and assist in credit scoring, providing more accurate insights for risk management and investment strategies [22]. In healthcare, these models help diagnose diseases by analyzing medical images, improving the accuracy and speed of diagnoses, particularly in complex cases like cancer detection [23]. E-commerce platforms also benefit from supervised learning by implementing recommendation systems that suggest products based on user behavior, enhancing customer experience and boosting sales [24]. Additionally, in public administration, supervised learning aids in optimizing resource allocation and improving service delivery in e-government systems [14]. Sen et al. [25] offered a classification framework for machine learning algorithms, providing a comprehensive guide for researchers and practitioners. This framework helps to understand the types of supervised machine learning algorithms applicable in digital transformation, aiding decision-makers in the e-government context. These studies contribute to a deeper comprehension of the role that supervised machine learning plays in decision-making processes. Merkert et al. [18] and Wang et al. [26] further contributed to the understanding of machine learning algorithms. Wang et al. [26] focused on multivariate forecasting and deep feature learning, presenting a novel anomaly detection framework for smart manufacturing support, while Merkert et al. [18] examined the utilization of machine learning in decision support systems, offering insights into various algorithms and their practical implications. Both studies explored the application of machine learning in decision support, establishing a foundation for understanding the relationship between ML and RDM.

The analysis aimed to identify significant correlations between supervised machine learning and rational decision-making in e-government systems. Numerous studies, including those reviewed above, have explored the relationship between supervised machine learning and aspects of decision-making such as accuracy, efficiency, and transparency in various domains [27,28,29]. The findings consistently demonstrate a significant positive correlation between the use of supervised machine learning and enhanced decision-making quality, suggesting that, when decision-making processes incorporate supervised learning models, the outcomes tend to be more rational and data-driven. This relationship has been observed across various domains, including healthcare, finance, and public policy, where supervised learning models have been employed to predict outcomes, classify data, and personalize services [27,30]. Unlike previous studies, our study focuses specifically on the e-government context, where the integration of supervised machine learning can address the unique challenges public administrators face. In e-government, decision-makers must navigate complex and dynamic environments with diverse data sources and stakeholder needs. Supervised machine learning provides tools to process vast amounts of data efficiently and generate various alternatives, ensuring that decisions are grounded in empirical evidence and are more rational [25,29]. Based on these findings, we propose the following study hypothesis:

Hypothesis 1. “Supervised machine learning has a significant positive impact on rational decision-making in e-government systems”.

Recent advancements in unsupervised machine learning have been driven by the development of novel algorithms, theoretical progress, and the increasing availability of online data and cost-effective computing resources. These advancements have led to the widespread application of unsupervised learning techniques across various sectors, including science, technology, and commerce, heralding a new era of evidence-based decision-making across diverse domains [

31]. Unsupervised machine learning algorithms, such as those used for pattern discovery, anomaly detection, and data exploration, have proven particularly valuable. For example, pattern discovery algorithms like clustering can uncover hidden structures within data, such as grouping customers based on their purchasing behavior. This capability allows businesses to identify market segments and tailor their marketing strategies, thereby advancing decision-making related to market segmentation [

32]. Anomaly detection algorithms are also crucial, as they can identify unusual patterns or outliers in data, which may indicate potential issues. In cybersecurity, for instance, these algorithms can detect suspicious activities in network traffic, enabling a quick response to security threats and supporting informed decision-making to mitigate risks [

33]. Additionally, data-exploration techniques like dimensionality reduction help visualize high-dimensional data and identify important features. This process aids decision-makers in understanding the underlying relationships within the data and making informed decisions based on the insights gained [

34].
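As a small illustration of the anomaly detection idea described above, the sketch below flags values that lie far from the mean of a series. It is a deliberately simple z-score rule, not one of the methods used in the cited studies; the function name `flag_anomalies`, the threshold of 2.0, and the login counts are hypothetical choices made for this example.

```python
from statistics import mean, pstdev


def flag_anomalies(values, threshold=2.0):
    """Return the values whose z-score exceeds the threshold.

    No labels are needed: the rule relies only on the distribution
    of the data itself, which is what makes it unsupervised.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical daily login counts for an online public service.
daily_logins = [100, 102, 98, 101, 99, 103, 97, 100, 250]
anomalies = flag_anomalies(daily_logins)
print(anomalies)
```

A single extreme value inflates both the mean and the standard deviation, so production systems usually prefer more robust statistics; the sketch only shows the principle.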

The analysis explored the relationship between unsupervised machine learning and rational decision-making within e-government systems. Numerous studies have investigated how unsupervised machine learning techniques, such as clustering, anomaly detection, and data exploration, improve decision-making by uncovering hidden patterns, detecting anomalies, and simplifying complex datasets [

26,

27]. The findings consistently show a significant positive correlation between unsupervised learning methods and enhanced decision-making, suggesting that when these techniques are employed, decision-makers can gain deeper insights and make more informed, rational choices [

26]. This positive relationship has been observed across various sectors [

32,

33], where unsupervised learning techniques are applied to analyze unstructured or high-dimensional data [

34]. These techniques help identify trends, segment populations, and highlight outliers, all essential for making data-driven decisions.

Our study focuses on the e-government context, where unsupervised machine learning can address the unique challenges of managing diverse and often unstructured data sources [

27]. Public administrators in e-government systems must deal with vast amounts of data, ranging from citizen feedback to service usage patterns, often without predefined categories or labels [

5]. In e-government, unsupervised learning can enhance the quality of decision-making by enabling the discovery of previously unknown relationships within the data, identifying emerging trends, and detecting anomalies that could indicate potential risks or opportunities [

28]. Integrating unsupervised machine learning in e-government systems thus contributes to a more adaptive, evidence-based, and rational decision-making process, ultimately leading to better outcomes for citizens [

27,

34]. Based on these findings, we hypothesize the following:

Hypothesis 2. “Unsupervised machine learning has a significant positive impact on rational decision-making in e-government systems”.

2.2. Rational Decision-Making

Rational decision-making is a pivotal element in human behavior, permeating various disciplines such as economics, psychology, and management [35]. This literature review juxtaposes insights from seminal works with recent studies, delving into diverse dimensions of rational decision-making. Herbert Simon’s groundbreaking work in “Administrative Behavior” laid the groundwork by introducing the concept of bounded rationality [35,36,37], which highlights the inherent cognitive limitations that individuals face when making decisions. This departure from the classical economic model of complete rationality has had profound implications across various disciplines [38,39].

Simon’s insights have influenced subsequent research by shifting the focus toward understanding how individuals make decisions under constraints rather than assuming perfect rationality. This approach acknowledges that decision-makers often operate with limited information, time, and cognitive resources, leading to satisficing rather than optimizing behavior [

40]. Furthermore, Simon’s ideas have permeated fields beyond economics, including psychology and management. His emphasis on bounded rationality has led to a more nuanced understanding of human behavior in organizational settings, where decision-makers must navigate complex environments with imperfect information [

41].

Overall, Simon’s contributions have enriched our understanding of decision-making processes, highlighting the importance of considering cognitive limitations and contextual factors in rational decision-making. Julmi’s [42] exploration of “Rational Decision-Making in Organizations” shifted the focus toward the application of rational decision-making in organizational settings. This study scrutinized how organizational structures and decision-making frameworks either facilitate or impede rational choices, shedding light on the practical implications of rational decision-making theories. In contrast, Power et al. [43] centered their comprehensive examination on the impact of information asymmetry on rational decision-making. Their study underscored how unequal access to information can lead to suboptimal decisions and highlighted the critical need to address information disparities to foster truly rational decision-making processes. Cao et al. [44] addressed a gap in the study by Power et al. [43] by exploring the role of technology in rational decision-making. Their study investigated how advancements in artificial intelligence and machine learning influence decision-making processes. The findings provide contemporary insights into the integration of technology and its impact on rational decision-making, making this work the most relevant to our study.

Acciarini et al. [

45] concentrated on the ethical dimensions of rational decision-making. These researchers introduced a moral layer to the discourse. Their investigation delved into the ethical considerations individuals encounter when engaging in rational decision-making, underscoring the pivotal role of moral reasoning in the realm of rational decision-making. In contrast, Bag et al. [

46] explored the interplay between emotion and rational decision-making. Their study delved into how emotional states can both augment and hinder the rational decision-making process. This study offers a nuanced perspective on the often-overlooked emotional dimensions in decision-making.

The theoretical landscape of rational decision-making is diverse, reflecting the interdisciplinary nature of this field. From the classical economic model to bounded rationality, prospect theory, decision heuristics, behavioral economics, and institutional analyses, each theory contributes to a nuanced understanding of decision processes [

47]. Recognizing the complexities inherent in decision-making, scholars continue to refine and integrate these theories, fostering a comprehensive framework that addresses the dynamic nature of human choices across various contexts.

2.3. The Mediating Role of Trust between Machine Learning and Rational Decision-Making

Integrating machine learning (ML) into rational decision-making processes has garnered significant attention across various domains, including finance and healthcare, as well as within government sectors. The focus of government entities on adopting ML technologies has accelerated their implementation in public administration, enhancing the efficiency, transparency, and effectiveness of policymaking and service delivery [

21,

34]. However, the successful implementation of ML systems relies not only on their technical capabilities but also on the trust users place in these systems. This is why we explore the mediating role of trust in utilizing ML to support rational decision-making [

48].

Trust plays a crucial role in the acceptance and utilization of ML systems. This study suggests that individuals are more likely to rely on ML-generated insights and recommendations when they perceive the system as reliable, competent, and transparent. Moreover, trust acts as a mediator between the perceived usefulness of ML technology and users’ willingness to adopt it for decision-making [

48,

49].

Ferrario and Viganò [

49] presented a multilayer trust model in human–AI interactions, highlighting factors, such as transparency, explainability, and accountability. Several factors influence the development of trust in ML systems. Users are more likely to trust ML algorithms that produce accurate and reliable results consistently. Additionally, transparent and explainable ML models instill confidence in users by providing insights into the decision-making process and underlying algorithms. Furthermore, establishing accountability mechanisms can enhance trust by ensuring that ML systems are held responsible for their decisions and actions [

49].

Araujo et al. [

50] investigated public perceptions of automated decision-making by AI, exploring the various factors that influence trust. Bag et al. [

46] proposed an integrated AI framework for B2B marketing decision-making, leveraging AI technologies to enhance knowledge creation and firm performance. Cao et al. [

44] explored managers’ attitudes toward using AI for decision-making in organizations, underscoring the importance of trust in AI systems. Despite its importance, trust in ML systems is not always easily established or maintained. Challenges like algorithmic bias, data privacy concerns, and a lack of interpretability can undermine users’ trust in ML technologies. Moreover, the black-box nature of some ML algorithms poses challenges to understanding how decisions are made, leading to skepticism and distrust among users. Understanding the mediating role of trust is crucial for successfully implementing ML systems in decision-making contexts. Organizations must prioritize building trust in ML technologies through transparency, accountability, and ethical practices. Additionally, further study is needed to explore the complex interplay between trust, perceived usefulness, and adoption intentions in diverse settings and user populations [

44,

46,

49].

Transparency is crucial for establishing trust in e-government services, especially as these services increasingly adopt machine learning (ML) and artificial intelligence (AI) systems [

50,

51]. The complexity of these technologies might slow the adoption process among users. Therefore, governments need to communicate clearly about how these technologies are utilized, focusing on transparency in data usage, decision-making processes, and algorithmic fairness [

52]. Key transparency components in e-government include clear policies on data usage and privacy, ensuring that citizens are informed about how their data are collected, stored, and utilized [

51]. Additionally, explaining the decision-making processes of AI and ML systems is essential, as it enables users to understand the rationale behind automated decisions [

51,

52,

53].

Spalević et al. [

51] and Ogunleye [

52] both explored the enhancement of e-government services through AI, with Spalević et al. [

51] emphasizing the importance of legally grounded transparency in building trust. Their study suggested that clear legal frameworks are vital for guiding the ethical use of AI, ensuring that citizens are well-informed about how AI systems are integrated into public services. Similarly, Ogunleye [

52] highlighted the role of AI in improving e-government service delivery, arguing that transparency in data science and ML processes is essential for building trust. This study suggested that transparent data practices and explainable AI models can lead to more effective and trusted e-government services.

Chohan and Akhter [

53] examined how AI can enhance the value of e-government services, emphasizing the potential of AI to improve service delivery, efficiency, and citizen engagement. Their study also underscored the importance of transparency in AI-based e-government services in ensuring trust and successful implementation. Additionally, Satti and Rasool [

54] focused on the role of technology in enhancing government transparency and accountability, highlighting that transparent governance, supported by technology, is crucial for establishing citizen trust, particularly with the introduction of new AI and ML technologies.

Janssen et al. [

55] put forth a decision-making framework for e-government utilizing AI and machine learning techniques, specifically focusing on data governance. Ingrams et al. [

56] examined citizen perceptions of AI in government decision-making, shedding light on the significant role of trust. The literature review underscores the potential benefits and challenges associated with applying machine learning in decision support systems, with a particular emphasis on factors such as data quality and interpretability. Trust in AI systems is highlighted as a crucial aspect in the literature [

48,

49], and the exploration of trust extends to e-government decision-making [

50,

55,

56]. On this basis, we propose the third and fourth hypotheses:

Hypothesis 3. “Machine learning has a significant positive impact on trust levels in e-government systems”.

Hypothesis 3a. “Supervised machine learning has a significant positive impact on trust levels in e-government systems”.

Hypothesis 3b. “Unsupervised machine learning has a significant positive impact on trust levels in e-government systems”.

Hypothesis 4. “Trust has a significant positive impact on rational decision-making in e-government systems”.

Trust as a mediating variable that might affect the relationship between ML and RDM builds on the literature, suggesting that trust plays a crucial mediating role in AI systems [

48] and considering the potential influence of trust on decision-making. Therefore, we hypothesize the following:

Hypothesis 5. “Trust plays a mediating role in the relationship between machine learning and rational decision-making in e-government systems”.

Hypothesis 5a. “Trust plays a mediating role in the relationship between supervised machine learning and rational decision-making in e-government systems”.

Hypothesis 5b. “Trust plays a mediating role in the relationship between unsupervised machine learning and rational decision-making in e-government systems”.
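The mediation structure stated in Hypotheses 5, 5a, and 5b can be written in the standard linear regression form below. This is a generic sketch, not the estimated model of this study: the symbols X (use of ML), M (trust), and Y (RDM), the intercepts, and the coefficients a, b, c, and c' are notation introduced here for illustration only.

```latex
\begin{align}
M &= i_1 + aX + \varepsilon_1 \\
Y &= i_2 + c'X + bM + \varepsilon_2 \\
\text{indirect effect} &= ab, \qquad c = c' + ab
\end{align}
```

In this notation, a corresponds to Hypothesis 3, b to Hypothesis 4, and a nonzero product ab to the mediating role of trust; c is the total effect of X on Y and c' is the direct effect that remains once trust is included.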

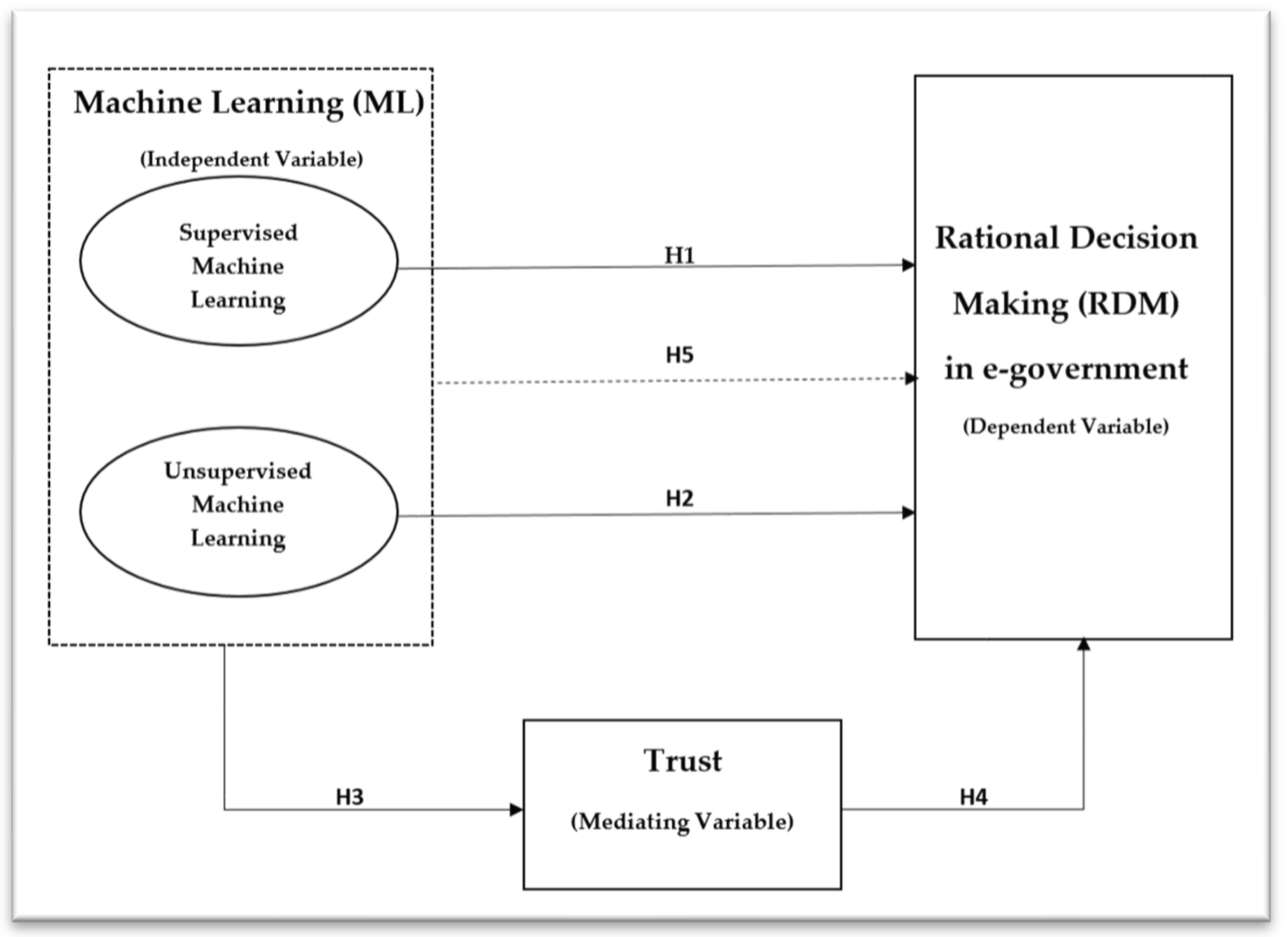

The objective of this study was to examine and analyze the relationships among the variables ML (machine learning), RDM (rational decision-making) in the e-government context, and trust, as illustrated by the conceptual framework presented in Figure 1.

3. Research Methodology

The concepts of machine learning (ML) and rational decision-making are increasingly intertwined with the evolution of e-government systems. Machine learning, a subset of artificial intelligence, enables systems to learn from data, identify patterns, and make predictions. In the context of e-government, these capabilities are crucial for processing large volumes of data, automating routine tasks, and enhancing the precision and efficiency of decision-making processes. Rational decision-making, which involves logical, systematic, and data-driven approaches, is a foundational element of effective governance. In e-government systems, the integration of ML can enhance rational decision-making by providing data-driven insights, optimizing resource allocation, and improving service delivery to citizens. The principal objective of this study is to construct a conceptual framework that provides a thorough understanding of the influence of machine learning on rational decision-making, specifically within the context of e-government. This study explores how machine learning technologies can enhance the decision-making processes of government entities by improving efficiency, accuracy, and responsiveness to citizen needs. A key aspect of this investigation is the role of trust as a mediating variable in the relationship between machine learning and rational decision-making, as trust is fundamental to citizen engagement and the successful adoption of e-government services. The primary data for this study were collected while utilizing the most suitable methodologies for data collection and sampling strategies.

3.1. Sample and Data Collection

Middle-level management employees were purposefully selected due to their engagement in diverse tasks across various departments utilizing machine learning technology. The choice of middle-level management is intentional, given their tendency to make more rational day-to-day decisions. The Ministry of Digital Economy and Entrepreneurship (MoDEE) was chosen as the study site, being the ideal entity for adopting new technologies like artificial intelligence and machine learning. MoDEE serves as the umbrella organization for the Jordanian e-government, representing the technical arm of the government. The selected respondents are employees who work in the digital transformation directorate and are most familiar with using machine learning techniques in decision-making processes. They are responsible for implementing the executive plan for Jordan’s AI strategy of 2023–2027, which includes various projects centered on enhancing decision-making through ML.

Jordan’s e-governance efforts have advanced significantly since the e-government program was established in 2001, focusing on digitizing public administration, improving service delivery, and enhancing transparency. The government has developed a range of e-services and invested in digital infrastructure, though challenges like digital literacy gaps, cybersecurity threats, and regional disparities remain. Looking forward, Jordan aims to deepen digital transformation through smart government initiatives, leveraging technologies like artificial intelligence and blockchain. The focus on capacity building and cybersecurity is crucial for achieving the country’s vision of an efficient, inclusive, and resilient digital government by 2025.

This study aims to contribute significantly by exploring the role of machine learning (ML) and trust in rational decision-making (RDM) within the Jordanian government. This is a unique endeavor, as no similar study has been conducted in Jordan. According to the 2023 human resources report of MoDEE, the total staff population was 245. The researchers utilized a quantitative study methodology, employing an electronically structured questionnaire as the means of collecting data. The survey strategy was chosen for its versatility in combining quantitative and qualitative aspects of business studies.

The online survey was deemed appropriate due to the dispersed nature of the target population, which presented challenges in time, cost, and potential nonresponse. The population comprised employees who work on MoDEE premises and employees who work across various ministries and institutes, lead the digital transformation process, and report to the Head of the Digital Transformation Program at MoDEE. This approach enabled communication with respondents who may have been difficult to reach through conventional methods. The study adhered to ethical principles by ensuring voluntary participation, maintaining the confidentiality of responses, and respecting respondents’ rights to privacy and anonymity. Before distributing the questionnaire, the researchers obtained permission from the MoDEE authorities.

Collaboration with a reputable organization such as MoDEE, as recommended by Sekaran and Bougie [

57], was established to enhance response rates. MoDEE distributed the survey link to its staff, accompanied by a participant information sheet explaining the study’s nature. The survey took place over three months (October–December) in the fall semester of 2023. The responses were gathered and systematically documented directly in a private database. To determine the sample size, a formula recommended by Sekaran and Bougie [

57] was applied. This formula adjusts for a finite population to prevent unnecessary data collection, ensures a balanced and practical sample size, and maintains high statistical confidence at a 95% confidence level and a 5% margin of error. The formula is as follows:
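A commonly used finite-population form that matches the symbols defined here is given below as a sketch. It is assumed rather than taken from the source, and the exact expression applied by the authors may differ.

```latex
\[
n = \frac{N Z^{2} p q}{e^{2}(N - 1) + Z^{2} p q}
\]
```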

where n = sample size, N = population size, Z = Z-value (based on the desired confidence level, e.g., 1.96 for 95%), p = estimated proportion of the population that has the attribute of interest, q = 1 − p (proportion without the attribute), and e = margin of error.

With a population size of 245, the ideal sample size was calculated to be 152. This study employed a convenience sampling method. To allow for potential nonresponses and unusable data, 163 questionnaires were distributed, more than the calculated sample size. Of these 163 questionnaires, 141 were usable for analysis, representing an 86.5% response rate.
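The formula itself is not reproduced in this text. As a rough illustration only, a commonly used finite-population form that matches the symbols defined above is n = N·Z²·p·q / (e²·(N − 1) + Z²·p·q). The sketch below assumes this form and assumes p = 0.5 (the most conservative choice); the function name `finite_population_sample_size` is hypothetical. Under these assumptions the result for N = 245 is about 150, close to but not identical to the 152 reported above, so the authors may have used a slightly different variant or a lookup table.

```python
import math


def finite_population_sample_size(N, Z=1.96, p=0.5, e=0.05):
    """Sample size for a finite population (assumed form, not confirmed by the source).

    n = N * Z^2 * p * q / (e^2 * (N - 1) + Z^2 * p * q), with q = 1 - p.
    """
    q = 1.0 - p
    numerator = N * Z**2 * p * q
    denominator = e**2 * (N - 1) + Z**2 * p * q
    # Round up, since a fractional respondent is not possible.
    return math.ceil(numerator / denominator)


# Population of 245 staff, 95% confidence level, 5% margin of error.
n = finite_population_sample_size(245)
print(n)
```

Distributing more questionnaires than this figure, as the study did, is the usual way to absorb nonresponse without falling below the target.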

The demographic characteristics of the respondents can be seen in Table 1 below:

3.2. Variable Measurement

This study adopted a quantitative and cross-sectional model, employing a questionnaire comprising four sections and 46 items: demographic information, the machine learning scale, the rational decision-making scale, and the trust scale. This study utilized a five-point Likert scale, categorizing responses based on importance or the degree of agreement. The scale ranges from strong agreement at one extreme to strong disagreement at the other, with intermediate points. Each point on the scale carries a corresponding score, with (1) indicating the least agreement and (5) indicating the most agreement for each response.

The questionnaire used in the study was structured to assess the impact of machine learning on rational decision-making within Jordanian e-government. It was developed through extensive literature research and collaboration with subject matter experts in artificial intelligence. The questionnaire had a total of 46 items in four main sections. Demographics (Section A) collects basic demographic information to understand the profile of respondents (age, education level, experience period, and gender). Rational decision-making (Section B) measures the respondents’ approach to decision-making, focusing on rationality and systematic processes. It contains 10 items adapted from established studies [58,59], evaluating aspects of logical decision-making, the consideration of alternatives, and the use of data for making informed decisions. Machine learning (Sections C-1 and C-2) features 30 items that assess the understanding of both supervised and unsupervised machine learning [1,3,27,29,60,61] and its effect on the accuracy of data analysis [23,27,29], understanding patterns [5,26,27,33], evaluating potential risks and uncertainties [28,33], and generating alternatives [25,29]. Trust (Section D) evaluates the level of trust that respondents have in machine learning technologies as a mediator for rational decision-making. It includes six items derived from relevant literature, measuring trust in the accuracy, reliability, and benefits of machine learning technologies in digital transformation [62]. The items in each section were carefully chosen and adapted from validated sources to ensure they accurately captured the constructs being studied.

To ensure clarity and understanding among respondents, two rounds of pretesting were conducted. Initially, the questionnaire was reviewed by academic researchers experienced in questionnaire design, and subsequently, it was piloted with artificial intelligence experts. The validity of the questionnaire tool was assessed through face and construct validity. Face validity involves evaluating the clarity, linguistic quality, and alignment of paragraphs with their intended dimensions. Specialized arbitrators in machine learning and academic experts in business administration were consulted, and their recommendations were incorporated into the final questionnaire.

Given that the questionnaire was initially developed in English, a translation process was carried out from English to Arabic and vice versa to verify the content’s accuracy. The Arabic version underwent evaluation to ensure clarity and intelligibility, with specialists examining the material after amendments. The revised Arabic version was then pretested with 33 respondents before distributing the surveys to the larger population.

3.3. Data Analysis

In this study, we used SPSS v25 and AMOS v23 to analyze the collected sample, combining multiple regression and confirmatory factor analysis to test our hypotheses. AMOS was used for the analysis involving the mediating variable because it allows for exploration, provides tools for assessing model fit, and offers a clear visualization of the relationships in the model. Here, trust is examined as a mediating variable between machine learning and rational decision-making. Multiple regression in SPSS is considered a contemporary and effective alternative to traditional analysis tools, featuring advancements such as confirmatory analysis, exploration of nonlinear impacts, and the examination of mediating and moderating effects [63]. Numerous scholars in the field have advocated for using multiple regression statistical methods to explore mediation effects, drawing on both primary and secondary data [64]. We therefore considered multiple regression using SPSS to be the most suitable method for our study.

Confirmatory factor analysis (CFA), conducted in AMOS, was employed to establish a measurement model for all self-rating scales through a convergent validity test. The modification index was then used to select variables for refinement [65]. We prioritized removing components with the highest modification index values until the desired goodness of fit was attained. Although most goodness-of-fit indicators exceeded the specified cutoff thresholds, a few factor loadings fell below the minimum requirement of 0.5. Consequently, these items were excluded to ensure the validity of our data framework. All remaining components of the observed variables had factor loadings exceeding the critical point of 0.5, confirming their validity [66].

4. Results

4.1. Testing Goodness-of-Fit, Model Validity, Reliability, and Correlation Coefficients

Initially, the reliability of the scale and the validity of the questionnaire were assessed using Cronbach’s alpha coefficient. As suggested by Sekaran and Bougie [57], Cronbach’s alpha values exceeding 0.70 indicate high internal consistency in measuring variables, contributing to increased reliability. Cronbach’s internal consistency is a recognized method for gauging the stability of a study instrument. It evaluates the homogeneity of the tool’s statements by measuring the extent to which the items within the test relate to each other and to the scale as a whole. Cronbach’s alpha coefficients were employed to evaluate the measurement reliability, and the construct correlation was used to assess the validity of the sample [67].

Table 2 presents the reliability coefficients (Cronbach’s alpha) for the various dimensions and fields of the study, ranging between 0.730 and 0.962. All these values are considered high, demonstrating strong internal consistency and indicating acceptability for practical application. It is worth noting that most studies consider a reliability coefficient acceptance threshold of 0.70 [67].
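For readers who want to see how such a coefficient is obtained, the following is a minimal sketch of the standard Cronbach’s alpha formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the response data are hypothetical and are not taken from the study, which computed its coefficients in SPSS.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of item columns; each column holds one score per
    respondent, in the same respondent order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    total_variance = variance(totals)
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical five-point Likert responses: 3 items, 6 respondents.
responses = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [5, 5, 2, 4, 1, 4],
]
alpha = cronbach_alpha(responses)
# 0.70 is the acceptance threshold cited in the text.
print(f"alpha = {alpha:.3f}, acceptable = {alpha >= 0.70}")
```

Alpha rises when items move together across respondents, which is why it is read as a measure of internal consistency.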

Next, factor analysis was employed to identify principal components and assess whether the chosen factors in the study adequately capture the variables, as well as to determine the relationship between the questionnaire’s factors and the variables. Hair et al. [64] emphasized that exploratory factor analysis (EFA) is utilized for data exploration, indicating the necessary number of factors for better data representation. EFA assigns each measured or observed variable to a factor based on its estimated factor loading. A key characteristic of EFA is that factors are derived solely from statistical outcomes, devoid of theoretical assumptions, and the naming of factors occurs after the factor analysis. Essentially, EFA allows analysis without prior knowledge of the existing factors or the allocation of variables to constructs.

Exploratory factor analysis (EFA) was employed to reduce the observed variables and identify relationships between them. The principal component analysis (PCA) technique, along with Promax with Kaiser normalization rotation, was used to extract factors. The analysis, applied to the machine learning items, revealed two subdimensions measured with 30 items. All item saturations (loadings) ranged from 0.410 to 0.843, as shown in Table 3, surpassing the threshold of 0.4. The rotation classified the questionnaire items into two factors. For the two factors, the value of the matrix determinant is 0.013, exceeding 0, which indicates that there is no multicollinearity problem between the elements of the variable.

Exploratory factor analysis revealed the rotation matrix for items related to rational decision-making, measured with 10 items. The EFA demonstrated that all item saturations (loadings) fall within the range of 0.54–0.82, as shown in Table 4, surpassing the threshold of 0.4. Through orthogonal rotation, the questionnaire items were categorized into one factor. Notably, the matrix determinant value was calculated to be 0.011, exceeding 0, indicating the absence of a multicollinearity issue among the elements of the variable.

Exploratory factor analysis also revealed the rotation matrix for the six trust-related items. The EFA demonstrated that all item saturations (loadings) fall within the range of 0.647–0.820, surpassing the threshold of 0.4, as illustrated in Table 5. Through orthogonal rotation, the questionnaire items were categorized into one factor. Notably, the matrix determinant value was calculated to be 0.041, exceeding 0, indicating the absence of a multicollinearity issue among the elements of the variable.

To determine the absolute model fit index, a goodness-of-fit test was conducted to assess the alignment of the data sample with the connecting path map of the overall framework. Subsequently, we assessed the validity of the measurement model based on established reliability and validity tests. The goodness-of-fit evaluation, crucial for determining how well the model aligns with the dataset’s variance–covariance structure, indicated a favorable fit for both the CFA measurement and structural models.

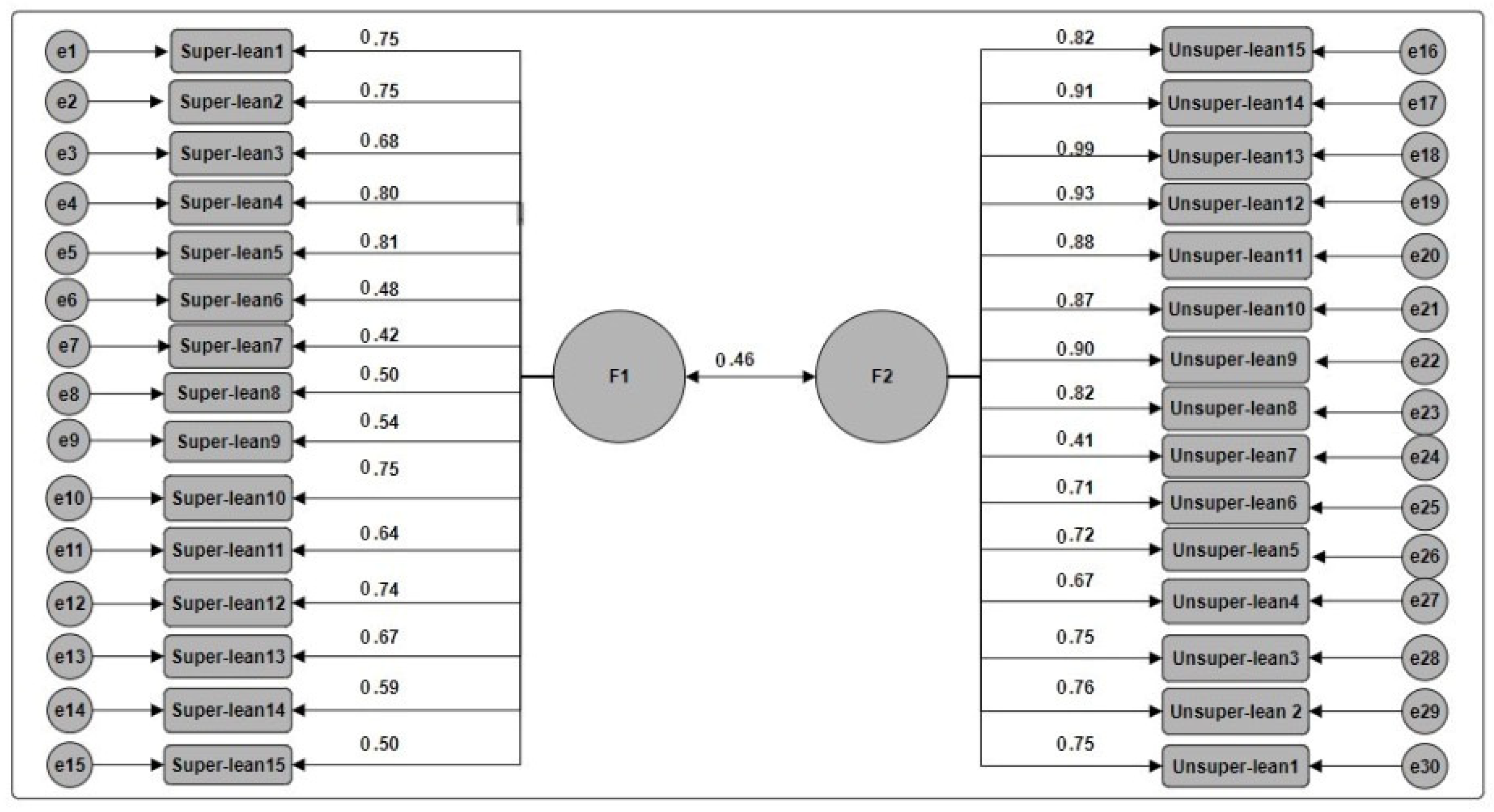

In addition, to ensure the construct validity of the variable measurement items and their alignment with the respective constructs, confirmatory factor analysis (CFA) was performed. This process involved examining the saturation values (factor loadings) of each measurement item to confirm that they adequately represent the underlying constructs. This study used various fit indices and statistical indicators to evaluate how well each measurement aligns with the theoretical model and the overall study measures [67]. The primary goal of conducting CFA was to verify the validity of the proposed model, which includes latent variables and the indicators used to measure them. Construct validity is considered satisfactory if the standard regression weights (factor loadings) of the items are greater than 0.40. This threshold indicates that the measurement items have a strong and meaningful relationship with their corresponding constructs. For instance, Figure 2 depicts the confirmatory factor analysis for machine learning (both supervised and unsupervised), illustrating how well the items align with the latent variables.

The Kaiser–Meyer–Olkin (KMO) results for all three variables (ML as the independent variable, trust as the mediating variable, and RDM as the dependent variable) are above the 0.50 threshold, as indicated in Table 6. This implies that the sample size employed in this study is deemed sufficient. Additionally, the chi-square result of Bartlett’s test is deemed satisfactory, with a significance level of 0.000. Table 6 also shows that the matrix determinant value is 0.013, surpassing 0, signifying the absence of a multicollinearity issue among the variable elements.

For the machine learning factor, the Kaiser–Meyer–Olkin (KMO) test yielded a value of 0.84, surpassing the threshold of 0.50. This suggests that the sample size in the study is adequate to accurately measure the variable. Bartlett’s test produced a value of 5145.632 with a significance level of 0.000, indicating a significant relationship between the sub-elements of the variable. Similarly, for the rational decision-making factor, the KMO test resulted in a value of 0.80, exceeding 0.50, indicating an adequate sample size for precise variable measurement. Bartlett’s test yielded a value of 1190.304 with a significance level of 0.000, implying a significant relationship between the subelements of the variable. Regarding the trust factor, the KMO test produced a value of 0.824, surpassing 0.50, indicating a sufficient sample size for accurate variable measurement. Bartlett’s test resulted in a value of 435.701 with a significance level of 0.000, suggesting a significant relationship between the subelements of the variable.
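Bartlett’s test statistic is tied to the determinant of the item correlation matrix through the standard approximation χ² = −[(n − 1) − (2p + 5)/6] · ln|R|, with p(p − 1)/2 degrees of freedom. The sketch below applies it to the rounded trust-scale figures from the text (six items, determinant 0.041), assuming n = 141 usable responses; the function name is hypothetical, and the result is only in the same range as the reported 435.701 because the inputs are rounded. The p-value would additionally require a chi-square distribution function, which is omitted here.

```python
import math


def bartlett_sphericity_chi2(n, p, determinant):
    """Bartlett's test statistic from the correlation-matrix determinant.

    n: number of respondents, p: number of items,
    determinant: |R| of the item correlation matrix.
    Returns (chi_square, degrees_of_freedom).
    """
    chi_square = -((n - 1) - (2 * p + 5) / 6) * math.log(determinant)
    degrees_of_freedom = p * (p - 1) // 2
    return chi_square, degrees_of_freedom


# Rounded figures for the trust scale; n = 141 is an assumption.
chi2, df = bartlett_sphericity_chi2(n=141, p=6, determinant=0.041)
print(f"chi-square = {chi2:.1f}, df = {df}")
```

A determinant close to 1 drives the statistic toward zero (items barely correlated), while a determinant close to 0 signals items so strongly intercorrelated that factor extraction becomes unstable.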

4.2. Statistical Assumption

Diagnostic tests are crucial in determining data adequacy for drawing conclusions, representing a vital requirement for researchers [57]. The skewness and kurtosis tests indicate that the data distribution is normal, with skewness values between −2 and 2 and kurtosis values between −7 and 7, making the data suitable for further statistical analysis. Examining variance inflation factors (VIF) and tolerance values confirms the absence of multicollinearity among the independent variables, with tolerance values exceeding 0.10 and VIF values remaining below 10, indicating no significant overlap between the variables. Additionally, the Durbin–Watson test results, with values ranging between 1.660 and 1.922, indicate no significant autocorrelation among residuals, confirming that the independence of errors is maintained in the analysis.
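Two of these diagnostics are simple enough to sketch directly. The example below computes moment-based skewness and excess kurtosis and the Durbin–Watson statistic; the function names and all data are hypothetical. SPSS applies small-sample corrections to skewness and kurtosis, so its values would differ slightly from these uncorrected moment estimates.

```python
def moments_skew_kurtosis(values):
    """Moment-based skewness and excess kurtosis of a sample."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return skewness, excess_kurtosis


def durbin_watson(residuals):
    """Durbin-Watson statistic; values near 2 suggest no first-order autocorrelation."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(r ** 2 for r in residuals)
    return numerator / denominator


# Hypothetical scale scores, checked against the ranges used in the text.
scores = [1, 2, 3, 4, 5]
skew, kurt = moments_skew_kurtosis(scores)
normal_enough = -2 <= skew <= 2 and -7 <= kurt <= 7

# Hypothetical regression residuals.
residuals = [0.5, -0.3, 0.2, -0.4, 0.1, 0.3, -0.2]
dw = durbin_watson(residuals)
print(skew, kurt, normal_enough, round(dw, 3))
```

The Durbin–Watson statistic ranges from 0 to 4, so the reported values of 1.660 to 1.922 sit close to the no-autocorrelation midpoint of 2.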

4.3. Hypotheses Testing

Researchers employed SPSS for data analysis and used AMOS software to conduct mediation analysis. This study consists of five main hypotheses with subhypotheses. The findings in this section are based on the standardized coefficient Beta, which quantifies the strength of the relationship between an independent variable and the dependent variable. For both direct and indirect effects, an effect is considered significant when the confidence interval (typically set at 95%) does not include zero between its lower (LLCI) and upper (ULCI) limits.

The analysis shows that machine learning in both categories (supervised and unsupervised) positively affects rational decision-making in the context of e-government, specifically as tested in Jordanian e-government. This is supported by the analysis results shown in Table 7, where the values support H1 and H2. For H1, Beta = 0.770 and p = 0.00 (p < 0.05). Furthermore, LLCI/ULCI is between 0.759 and 1.005, indicating significance, as 0 is excluded from this interval. The values also confirm H2, with Beta = 0.869 and p = 0.00 (p < 0.05); LLCI/ULCI is between 0.693 and 0.840, indicating significance, as 0 is excluded from this interval. Thus, hypotheses H1 and H2 were accepted, which indicates that supervised/unsupervised machine learning positively impacts rational decision-making in e-government.

The results shown in Table 7 also support H3 and its subhypotheses. For H3, Beta = 0.917 and p = 0.00 (p < 0.05), and the LLCI/ULCI range falls between 0.823 and 0.953; therefore, hypothesis H3 is accepted, whereby machine learning positively impacts trust levels in e-government. Both subhypotheses (H3a and H3b) were supported by the values of Beta (0.795 and 0.862, respectively, at p = 0.00, p < 0.05) and of LLCI/ULCI, as shown in Table 7, indicating significance, as zero was excluded from the interval. Thus, subhypotheses H3a and H3b are accepted, which indicates that supervised/unsupervised machine learning positively impacts trust levels in e-government.

In Table 7, the values confirm H4, with Beta = 0.949 and p = 0.00 (p < 0.05). Furthermore, LLCI/ULCI is between 1.02 and 1.13, indicating significance, as 0 is excluded from this interval. Thus, hypothesis H4 is accepted, which indicates that trust positively impacts rational decision-making in e-government.

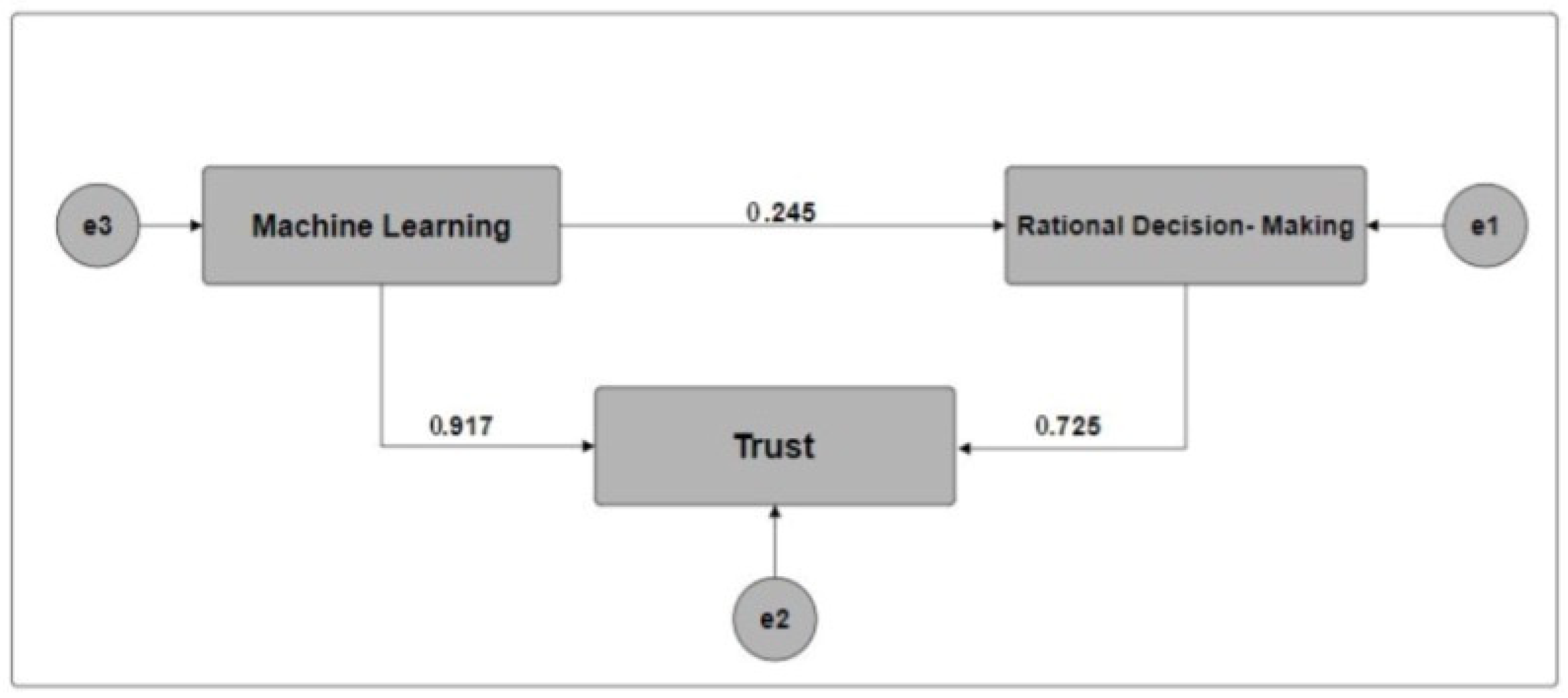

The values of the direct and indirect impact in Table 8 show that the direct effect of the independent variable on the dependent variable was 0.245, the effect of the independent variable on the mediator was 0.917, and the direct effect of the mediator on the dependent variable was 0.725. These effects are illustrated in Figure 3 and are expressed as standardized values. All of these effects (coefficients) were statistically significant [68]: their significance levels were all less than 0.05 and, indeed, less than 0.001, which is denoted by the symbol (**). Because all paths were statistically significant, there is an indirect effect through the mediating variable, which indicates that trust partially mediates the relationship between machine learning and rational decision-making in e-government. This leads to hypothesis H5 being accepted.
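The arithmetic behind this conclusion can be sketched with the product-of-coefficients approach, in which the indirect effect is the product of the two mediated paths and the total effect adds the direct path. The helper names are hypothetical, and the study obtained its estimates in AMOS rather than by hand. With the standardized coefficients from Table 8, the product is about 0.665, in line with the indirect effect of 0.664 cited in the Discussion.

```python
def mediation_effects(a, b, c_prime):
    """Decompose a simple mediation model X -> M -> Y.

    a: effect of X on the mediator M
    b: effect of M on Y, controlling for X
    c_prime: direct effect of X on Y
    """
    indirect = a * b
    total = c_prime + indirect
    return indirect, total


def is_significant(llci, ulci):
    """An interval that excludes zero indicates a significant effect."""
    return llci > 0 or ulci < 0


# Standardized coefficients reported in Table 8.
indirect, total = mediation_effects(a=0.917, b=0.725, c_prime=0.245)
print(f"indirect = {indirect:.3f}, total = {total:.3f}")

# H4 bounds from the text, used here only to show the zero-exclusion check.
print(is_significant(1.02, 1.13))
```

Mediation is called partial rather than full because the direct path (0.245) remains non-zero alongside the indirect path.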

5. Discussion

Integrating machine learning into e-government systems has introduced a new dimension to decision-making processes, particularly in the context of rational decision-making (RDM). This study aimed to explore the impact of ML on RDM within the Jordanian e-government sector, specifically focusing on the mediating role of trust.

The findings provide compelling evidence that both supervised and unsupervised ML techniques significantly enhance decision-making outcomes, especially when trust is present as a mediating factor. The results demonstrated that ML has a positive effect on RDM. This finding is similar to those of Sharma et al. [2], Al-Mushayt [5], Kureljusic and Metz [15], Merkert et al. [18], Loukili et al. [24], and Alexopoulos et al. [28], who indicated that AI, especially ML, is essential for the improvement and acceleration of decision-making processes in various domains. The analysis revealed that supervised machine learning has a substantial impact on rational decision-making, accounting for 59.2% of the variance (R² = 0.592). This indicates that nearly 60% of the variance in rational decision-making within the Jordanian e-government, as facilitated by middle management, can be explained by the application of supervised learning models. The remaining 40% is influenced by other factors not covered within the scope of this study, such as individual decision-makers’ experiences or external pressures.
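The R² figures quoted in this section can be related to the standardized Beta values reported in Section 4.3. Assuming each hypothesis was tested with a single-predictor regression (an assumption, since the model specification is not stated in the text), R² equals the square of the standardized Beta. The sketch below applies that identity; small differences in the third decimal from the reported R² values are expected because the Betas are rounded.

```python
# Standardized Beta values reported for each hypothesis in Section 4.3.
betas = {
    "H1": 0.770,
    "H2": 0.869,
    "H3": 0.917,
    "H3a": 0.795,
    "H3b": 0.862,
    "H4": 0.949,
}

# With one predictor, R^2 is the square of the standardized Beta.
r_squared = {name: beta ** 2 for name, beta in betas.items()}

for name, value in r_squared.items():
    print(f"{name}: R^2 = {value:.3f}")
```

Reading R² this way also makes clear that it describes shared variance between two questionnaire scales, not a proportion of individual decisions.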

Usama et al. [34] and Alloghani et al. [27] focused on unsupervised machine learning in decision-making, and their findings are similar to ours: unsupervised machine learning showed an even larger impact, explaining 75.5% of the variance in rational decision-making (R² = 0.755). This strong correlation suggests that unsupervised learning techniques, which include clustering and anomaly detection, are particularly effective in uncovering patterns that guide rational decisions in e-government environments. Therefore, policymakers and decision-makers in e-government should prioritize the integration of machine learning techniques in future projects within the Jordanian e-government. Special attention should be given to investing in unsupervised machine learning, given its strong correlation with rational decision-making.

Consequently, the Jordanian e-government should aim to expand the implementation of ML techniques in decision-making processes to improve decision quality, generate more alternatives, and accelerate the rational decision-making cycle. This approach leverages the interest and engagement of middle management in using machine learning to enhance their work.

Furthermore, the findings clearly indicate that both supervised and unsupervised ML techniques significantly contribute to building trust within the e-government framework. The R² value of 0.840 confirms that ML accounts for 84% of the variance in trust levels, indicating a robust relationship between these variables. R² values of 0.631 and 0.742 indicate that supervised ML explains 63.1% of the variance in trust, while unsupervised ML accounts for 74.2%. This finding underscores the importance of incorporating ML into e-government initiatives, as fostering trust is crucial for the successful implementation and adoption of digital technologies in public administration. Ferrario and Viganò [49] highlighted e-trust in their study, and Janssen et al. [55] found similar results, namely that AI builds higher levels of trust. These findings provide empirical evidence that using ML techniques can help enhance trust levels, as ML is a systematic, data-driven process with high accuracy levels. It is consistent and objective, unlike human decisions, and improves transparency. In terms of speed and efficiency, ML systems can process and analyze large volumes of data much faster than humans, enabling quick decision-making.

Furthermore, the findings indicate that trust levels play a significant role in enhancing rational decision-making (RDM) within the e-government framework. The R² value of 0.901 confirms that trust levels account for about 90% of the variance in RDM, indicating a robust relationship between these variables. This finding highlights the critical role of trust in the success of decisions made in e-government initiatives. These results can be compared with previous studies by Yu and Li [48] and Abu-Shanab [62].

The findings from this study underscore the critical role of trust as a mediator between machine learning (ML) and rational decision-making (RDM) in e-government contexts. The significant indirect effect of 0.664, combined with the direct impact of 0.245, illustrates how trust enhances the relationship between ML and RDM. This aligns with similar studies highlighting trust’s essential role in the adoption and effectiveness of advanced technologies. For instance, studies have consistently shown that trust in technology can influence its acceptance and the decision-making processes it supports [50,51,53]. In the context of e-government, trust can mitigate skepticism regarding new technologies, facilitating their integration and improving their impact on decision-making. Trust not only enhances the impact of ML but also plays a crucial role in ensuring that the decisions made are rational and well-informed. This finding highlights the importance of cultivating trust within government entities that adopt ML technologies, as it can significantly amplify the benefits of these technologies in decision-making processes.

6. Implications

This study’s principal conclusions assert that investing in machine learning (ML) will fortify and enrich the rational decision-making (RDM) of the Jordanian e-government in the digital transformation process and underscore the critical role of trust as a mediator. This study makes noteworthy contributions to the evolution of decision-making theories, particularly within the e-government domain. This study advances our theoretical understanding of the intricate interplay between technological advancements and decision-making capabilities in government settings. Furthermore, the findings bridge technology (ML) and decision sciences, offering insights into the nuanced relationship between technological progress and decision-making processes. This integration is pivotal for developing comprehensive theories that encapsulate the evolving dynamics of decision-making in the digital age. The confirmation of trust as a mediator in the connection between machine learning (ML) and rational decision-making (RDM) contributes significantly to the broader conversation about the role of trust in influencing decision-making processes. This is especially relevant in environments shaped by technological advancements. The uniqueness of this study lies in its theoretical contribution, as it addresses a gap in the existing literature by empirically assessing the mediating role of trust between ML and RDM in the government sector. Notably, this study is groundbreaking in Jordan, being the first of its kind and thus making a noteworthy contribution to the existing body of knowledge.

On the practical side, for practitioners in the e-government sector in Jordan, this study suggests strategic investments in ML technologies to augment decision-making processes during digital transformation initiatives. This involves the thoughtful adoption and integration of ML tools that align with the specific needs and challenges of the e-government. Recognizing the pivotal role of trust as a mediator, practitioners should prioritize initiatives that cultivate and sustain trust among stakeholders. Transparent communication, ethical AI practices, and measures to address algorithmic bias and data privacy concerns are imperative in this context. In the workforce, the implications extend to the need for tailored training programs. Government officials and decision-makers must be equipped with the necessary skills to harness ML technologies effectively, ensuring they can leverage these tools for informed and rational decision-making. Moreover, this study suggests developing a comprehensive framework for systematically integrating ML into decision-making processes within the e-government sector. Such a framework should consider the specific characteristics of the government context, ensuring alignment with organizational goals and ethical standards.

In summary, these implications underscore the significance of informed ML investments and the critical role of trust in shaping decision-making processes in the dynamic landscape of Jordanian e-government transformation. These insights provide a foundation for future study, policy development, and strategic planning in technology-driven governance.

7. Conclusions, Future Research, and Limitations

Our study of the relationship between ML and RDM with the mediating impact of trust within the e-government of Jordan yielded valuable insights. Integrating machine learning (ML) into e-government systems has significantly impacted rational decision-making (RDM) within the Jordanian e-government sector, with trust emerging as a crucial mediator in this relationship. This study demonstrated that ML techniques, both supervised and unsupervised, substantially enhance decision-making outcomes when trust is present, thereby underscoring the pivotal role of trust in leveraging ML for improved governance. The findings align with those of previous studies that highlighted the efficacy of ML in enhancing decision-making processes across various domains. These results advocate for the prioritization of ML integration in future e-government projects in Jordan, with a particular emphasis on unsupervised ML due to its strong correlation with improved decision-making. This study confirms that trust levels clearly contribute to the variance in RDM, illustrating the critical role of trust in enhancing the effectiveness of ML applications.

For the Jordanian e-government sector, this study recommends strategic investments in ML technologies to enhance decision-making processes during digital transformation initiatives. This includes adopting ML tools that align with the specific needs and challenges of the e-government sector. Emphasizing the role of trust, practitioners should focus on initiatives that build and maintain trust among stakeholders. Transparent communication, ethical AI practices, and measures to address algorithmic bias and data privacy concerns are crucial.

Research on machine learning in decision-making is still at an early stage of development and primarily focuses on theoretical aspects rather than empirical studies. While there is potential for extensive study on machine learning applications and challenges, particularly within the government sector, little attention has been paid to the rapid technological changes worldwide and their synchronization with the slow adoption of technology within the government sector. This study faces several limitations, including the early stage of AI studies in the Jordanian government, which primarily examine expected effects rather than practical implementations. Furthermore, implementing AI-based systems is a time-, cost-, and resource-intensive process. Without proper validation during training, machine learning models may suffer from overfitting, fitting noise rather than relevant patterns and thereby failing to generalize. Additionally, human resistance to change and a lack of trust in technologies are significant barriers to adopting new technologies, further limiting the effectiveness of machine learning in decision-making processes. Responses to questionnaires may be biased due to factors such as social desirability bias, respondent mood, or misunderstanding of questions. Finally, decision-making processes can be complex and influenced by many factors, including emotions, cognitive biases, and situational context.

In conclusion, this study contributes to the understanding of ML’s impact on rational decision-making and the mediating role of trust and lays the groundwork for future research endeavors. It urges researchers to explore the multifaceted relationships between ML, decision-making, and trust in varied contexts, fostering a richer comprehension of these dynamics for both theoretical advancements and practical applications.