4.1. Performance Comparison of Credit Scoring Models

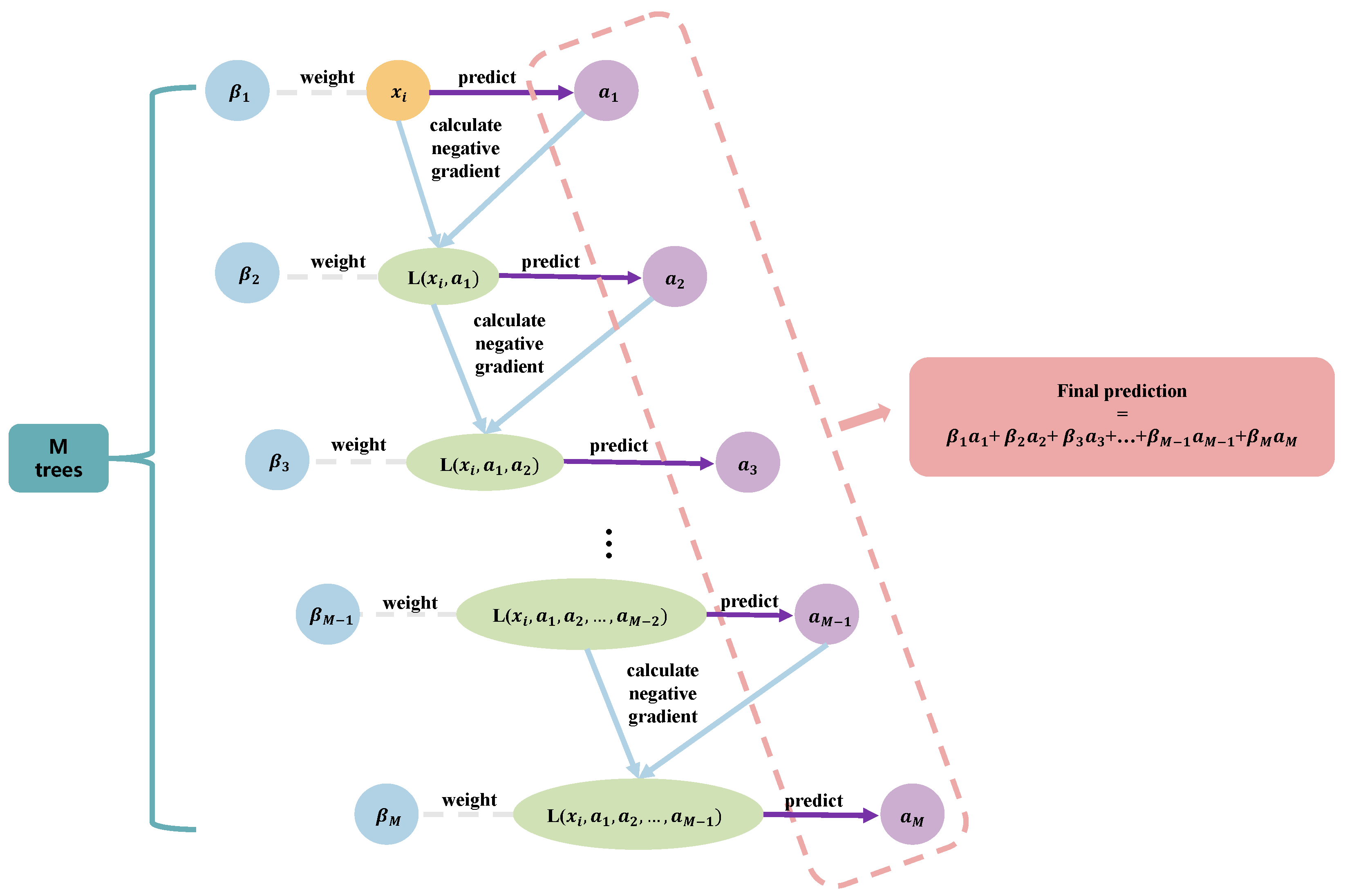

In this study, we embedded the GHM loss function into XGBoost to handle imbalanced data. The optimal parameter values were selected by computing the mean squared error of the model under different parameter values. In the final configuration, the gradient density was estimated over 7 intervals, and the harmonic parameter used for sample-weight calculation was set to 0.65. When applying the model to each dataset, we further optimized the algorithm according to the complexity of that dataset. To mitigate the risk of overfitting, the parameters for each dataset were adjusted as shown in Table 2.
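The GHM weighting step can be illustrated with a minimal NumPy sketch. It follows the general GHM idea for classification (gradient norm g = |p − y|, equal-width intervals on [0, 1], and a weight inversely proportional to the number of samples sharing a sample's interval) applied to the logistic loss that XGBoost minimizes. This is not the authors' implementation: the function names are hypothetical, and treating the harmonic parameter (0.65 above) as an exponent `alpha` that tempers the weights is an assumption, since its exact role is not specified in this section.

```python
import numpy as np


def ghm_weights(grad_norm, bins=7, alpha=1.0):
    """Gradient-harmonizing sample weights in the spirit of GHM (sketch).

    grad_norm: per-sample gradient norms g = |p - y|, assumed to lie in [0, 1].
    bins:      number of equal-width intervals used to estimate gradient density.
    alpha:     HYPOTHETICAL exponent that tempers the weights (alpha=1 -> plain GHM).
    """
    g = np.asarray(grad_norm, dtype=float)
    n = g.size
    # Map each sample to one of `bins` equal-width intervals on [0, 1].
    inner_edges = np.linspace(0.0, 1.0, bins + 1)[1:-1]
    idx = np.clip(np.digitize(g, inner_edges), 0, bins - 1)
    counts = np.bincount(idx, minlength=bins)
    n_valid = np.count_nonzero(counts)  # number of non-empty intervals
    # Weight ~ 1 / gradient density; dividing by n_valid keeps the mean weight
    # at 1 when alpha == 1, so the overall loss scale is preserved.
    w = n / (counts[idx] * n_valid)
    return w ** alpha


def ghm_logistic_grad_hess(margin, y, bins=7, alpha=1.0):
    """GHM-reweighted gradient and Hessian of the logistic loss w.r.t. raw margins."""
    p = 1.0 / (1.0 + np.exp(-np.asarray(margin, dtype=float)))
    y = np.asarray(y, dtype=float)
    grad = p - y            # first derivative of the log loss
    hess = p * (1.0 - p)    # second derivative of the log loss
    # The weights are treated as constants (not differentiated), as in GHM.
    w = ghm_weights(np.abs(grad), bins=bins, alpha=alpha)
    return w * grad, w * hess


# Toy usage on synthetic, imbalanced labels (about 5% positives).
rng = np.random.default_rng(0)
y_toy = (rng.random(1000) < 0.05).astype(float)
g_toy, h_toy = ghm_logistic_grad_hess(rng.normal(size=1000), y_toy, bins=7, alpha=0.65)
```

To use this inside XGBoost's native training loop, the two arrays could be returned from a custom objective passed as `obj=` to `xgboost.train`, which receives the raw margin predictions and the training `DMatrix` (labels via `get_label()`); the authors' actual integration may differ.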

To compare the efficacy of the different learning techniques and to confirm that XGBoost-B-GHM performs best among the imbalanced learning models, we used the receiver operating characteristic (ROC) curve as the comparison metric. The ROC curve is widely used in signal detection theory to describe the tradeoff between the hit rate and the false alarm rate of a classifier [40]. In our credit scoring work, the aim is to predict whether a bank user will default, so the samples fall into two distinct classes (positive and negative), and the task can be modeled as binary classification. Taking negative samples as 0 and positive samples as 1, and denoting the actual value as $Y$ and the predicted value as $\hat{Y}$, pairing each predicted value with its actual value yields four possible outcomes, TP, FP, TN, and FN, as shown in Table 3. TP (True Positive) means that the applicant is correctly predicted to be a good applicant (both the predicted and the actual values are good). FP (False Positive) means that the applicant is wrongly predicted to be good when they are actually bad. TN (True Negative) means that the applicant is correctly predicted to be bad (both the predicted and the actual values are bad), while FN (False Negative) means that the applicant is wrongly predicted to be bad when they are actually good. The horizontal axis of the ROC curve is the FPR (False Positive Rate) and the vertical axis is the TPR (True Positive Rate). FPR is the proportion of all negative samples that are wrongly predicted as positive (FP), while TPR is the proportion of all positive samples that are correctly predicted as positive (TP):

$$\mathrm{FPR} = \frac{FP}{\#N} = \frac{FP}{FP + TN}, \qquad \mathrm{TPR} = \frac{TP}{\#P} = \frac{TP}{TP + FN}$$

where $\#$ denotes a count, i.e., $\#N = FP + TN$ is the number of negative samples and $\#P = TP + FN$ is the number of positive samples. In addition, the area under the ROC curve (AUC) is an important indicator in ROC analysis. AUC ranges from 0 to 1; the closer it is to 1, that is, the farther the ROC curve lies above the diagonal reference line, the better the classifier performs. In our experiment, for each large dataset, the final ROC curve was obtained by averaging over 40 repeated runs to reduce randomness and improve robustness.
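The FPR/TPR definitions and the AUC can be made concrete with a short NumPy sketch. It sweeps the decision threshold over every distinct score and integrates the resulting curve with the trapezoidal rule. The function names are hypothetical, and `mean_roc` shows only one plausible way (vertical averaging on a common FPR grid) to combine repeated runs such as the 40 repeats mentioned above; the averaging scheme actually used is not specified.

```python
import numpy as np


def roc_points(y_true, scores):
    """Return (FPR, TPR) arrays, sweeping the threshold over all distinct scores."""
    y = np.asarray(y_true, dtype=int)
    s = np.asarray(scores, dtype=float)
    n_pos = np.sum(y == 1)  # #P = TP + FN
    n_neg = np.sum(y == 0)  # #N = FP + TN
    # Start above the largest score so that the curve begins at (0, 0).
    thresholds = np.concatenate(([np.inf], np.unique(s)[::-1]))
    fpr, tpr = [], []
    for t in thresholds:
        pred_pos = s >= t
        tp = np.sum(pred_pos & (y == 1))
        fp = np.sum(pred_pos & (y == 0))
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return np.array(fpr), np.array(tpr)


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


def mean_roc(runs, grid_size=101):
    """Vertically average (FPR, TPR) curves from repeated runs (assumed scheme)."""
    grid = np.linspace(0.0, 1.0, grid_size)
    tprs = [np.interp(grid, fpr, tpr) for fpr, tpr in runs]
    return grid, np.mean(tprs, axis=0)


fpr, tpr = roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc_trapezoid(fpr, tpr))  # 0.75
```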

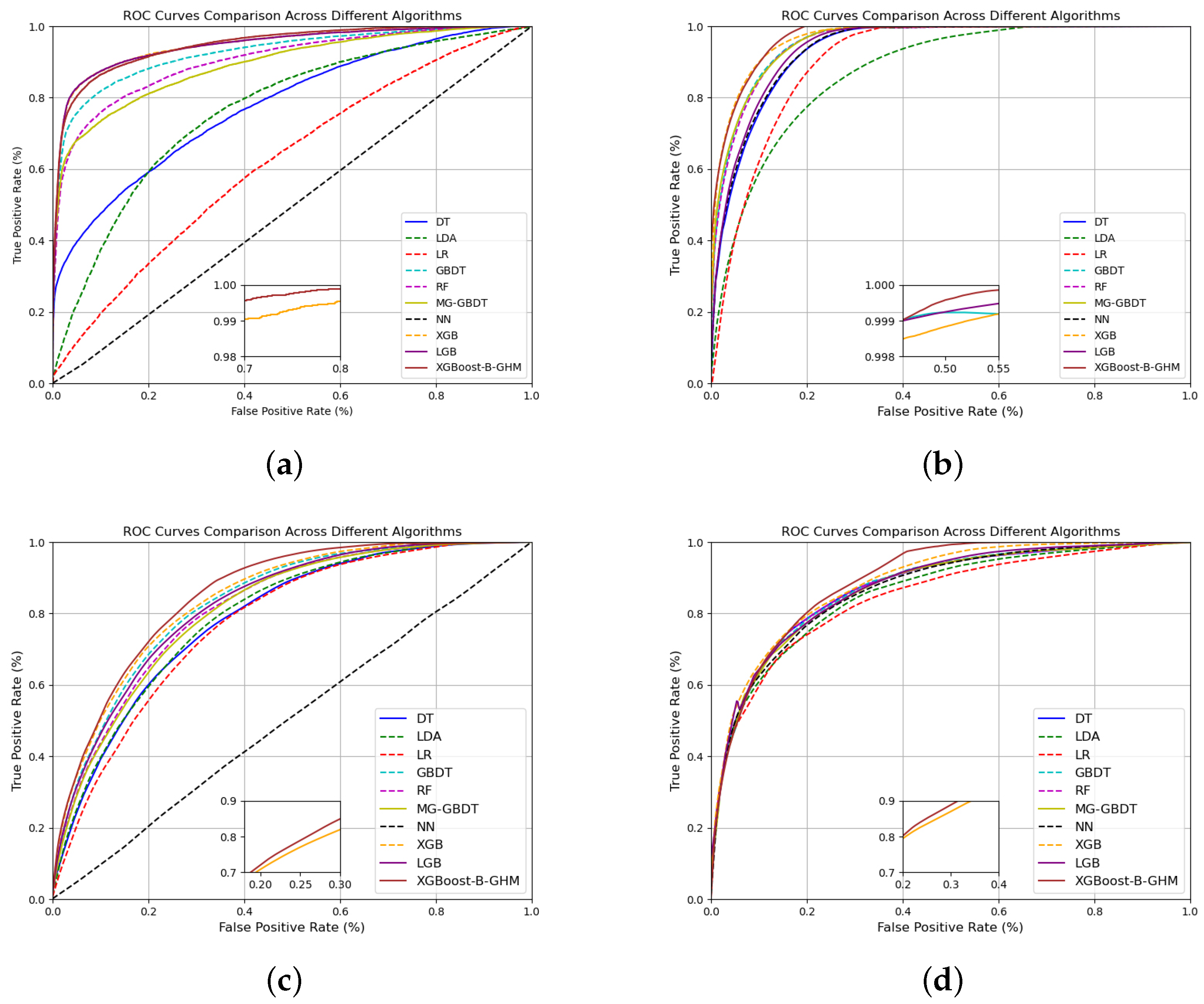

Figure 4 shows the ROC curves of the different credit scoring models on the four large datasets: Bankfear, Fannie, Give, and LC. As Figure 4 makes clear, XGBoost-B-GHM outperforms all other models on all four datasets, especially on LC. The fine-grained visualization shows that the ROC curves of XGB and XGBoost-B-GHM rise at very similar rates; XGB ranks second in classification performance, behind only XGBoost-B-GHM. Compared with MG-GBDT, the advanced ensemble model proposed by Liu et al. [14], our model achieves better classification performance and more accurate results while retaining the good interpretability of a hierarchical organization. Figure 4a,c shows that NN has the worst classification performance, which may be caused by the extreme imbalance of the data. NN is an instance-based learning method whose classification decision depends entirely on the nearest-neighbor samples in the training dataset. In an imbalanced dataset, the minority class has far fewer samples than the majority class, so majority-class samples are denser in feature space and more likely to be the nearest neighbors of any query point, which puts NN at a great disadvantage in classification accuracy. The two linear algorithms, LR and LDA, suffer from similar problems. These results show that the boosting-based algorithms perform much better than the baseline models, and that it is necessary to handle imbalanced data explicitly.
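The nearest-neighbor argument above can be checked on a deliberately simple toy example: a leave-one-out k-NN majority vote on a 1-D grid in which every fifth point belongs to the minority class. The data and the function name are hypothetical and only illustrate the mechanism; they do not reproduce the experiments in Figure 4.

```python
import numpy as np


def knn_predict_loo(x, y, k=5):
    """Leave-one-out k-NN majority vote on 1-D points (toy illustration)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=int)
    d = np.abs(x[:, None] - x[None, :])   # pairwise distances
    np.fill_diagonal(d, np.inf)           # a point may not vote for itself
    nn = np.argsort(d, axis=1, kind="stable")[:, :k]
    votes = y[nn].mean(axis=1)            # fraction of minority-class neighbors
    return (votes > 0.5).astype(int)


# Minority points (label 1) are every 5th point on a regular grid, so each
# minority sample is surrounded by majority samples (4:1 imbalance).
x = np.arange(100)
y = (x % 5 == 0).astype(int)
pred = knn_predict_loo(x, y, k=5)
minority_recall = pred[y == 1].mean()      # share of minority points recovered
majority_recall = 1 - pred[y == 0].mean()  # share of majority points recovered
print(minority_recall, majority_recall)
```

Every minority point is outvoted by its majority neighbors, so the minority recall is zero even though the majority class is classified perfectly, which is the failure mode described for NN above.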

To further demonstrate the superiority of the model, in addition to the ROC curve we adopted accuracy (Acc), precision (Prec), recall (Rec), the Brier score (BS), and the H-measure (HM) as comparison metrics.

Accuracy is the most basic evaluation metric: it is the proportion of all samples whose class is predicted correctly, and it is calculated as

$$\mathrm{Acc} = \frac{TP + TN}{TP + FP + TN + FN}$$

The higher the accuracy, the better the overall prediction performance of the model. However, accuracy is not always a reliable indicator when the dataset is class-imbalanced: if only 5% of applicants default, a model that labels every applicant as non-defaulting already reaches 95% accuracy while identifying no defaulters. In this study, the GHM loss function is used to address class imbalance; it makes the model pay balanced attention to samples of different classes, which significantly improves the model's accuracy.

Precision and accuracy sound similar, but they are different concepts. Precision is the proportion of samples predicted as positive that are actually positive. It measures the reliability of the model's positive predictions and is calculated as

$$\mathrm{Prec} = \frac{TP}{TP + FP}$$

Recall is the proportion of actually positive samples that are correctly predicted as positive. It measures how completely the model identifies the positive samples (it equals the TPR) and is calculated as

$$\mathrm{Rec} = \frac{TP}{TP + FN}$$

According to Equations (15) and (17), the higher the precision and recall, the stronger the predictive capability of the model. However, there is often a tradeoff between the two: an improvement in one metric is often accompanied by a decline in the other, so both must be considered together.
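The three formulas above, and the accuracy trap on imbalanced data, can be reproduced from confusion-matrix counts with the plain-Python sketch below. The function names are hypothetical, and returning 0.0 when a precision or recall denominator is zero is an assumed convention.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def acc_prec_rec(tp, fp, tn, fn):
    """Accuracy, precision and recall from confusion-matrix counts.

    Precision/recall are returned as 0.0 when their denominator is zero
    (a common convention, assumed here).
    """
    acc = (tp + tn) / (tp + fp + tn + fn)
    prec = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    return acc, prec, rec


# Imbalance trap: 95 negatives, 5 positives, and a model that predicts "negative" for all.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100
print(acc_prec_rec(*confusion_counts(y_true, y_pred)))  # (0.95, 0.0, 0.0)
```

High accuracy with zero precision and recall is exactly why accuracy alone is not used to compare the models on imbalanced credit data.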

The Brier score also measures the difference between the algorithm's predicted values and the true values; it is the mean squared error of the probabilistic predictions on the test samples:

$$\mathrm{BS} = \frac{1}{N}\sum_{i=1}^{N}\left(\hat{p}_i - \mathbb{1}(y_i = 1)\right)^2$$

where $N$ is the number of samples, $\hat{p}_i$ is the predicted probability that sample $i$ is positive, and $\mathbb{1}(\cdot)$ denotes the indicator function. A small Brier score therefore means that the predicted probabilities are close to the actual outcomes, i.e., the model's predictions are more accurate; conversely, a large Brier score indicates poor predictive performance.
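The Brier score formula translates directly into a few lines of NumPy. This is a minimal sketch with a hypothetical function name; `p_pos` is assumed to hold the predicted probability of the positive class for each sample.

```python
import numpy as np


def brier_score(y_true, p_pos):
    """Mean squared difference between predicted P(y=1) and the indicator 1(y=1)."""
    indicator = (np.asarray(y_true) == 1).astype(float)
    p = np.asarray(p_pos, dtype=float)
    return float(np.mean((p - indicator) ** 2))


# Confident, mostly correct probabilities give a small score.
print(brier_score([1, 0, 1, 0], [0.9, 0.2, 0.6, 0.1]))
```

A constant prediction of 0.5 yields 0.25 regardless of the labels, which is a useful reference point when reading the BS columns in the tables.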

Table 4 shows the performance comparison of the credit scoring models on the Bankfear dataset. According to Table 4, XGBoost-B-GHM outperforms the baseline credit scoring models introduced at the beginning of Section 3.2 on three of the five metrics, and it also shows better predictive performance than the advanced models (MG-GBDT, XGB, and LGB). XGBoost-B-GHM scores closest to 1 on the accuracy, AUC, and precision metrics, indicating higher predictive accuracy and reliability. Because of the tradeoff between precision and recall, it is difficult for one model to achieve the highest value on both. Therefore, although the precision of XGBoost-B-GHM is not the highest among all the models, it offers the best overall combination of precision and recall. It is worth noting, however, that XGBoost-B-GHM did not outperform XGB and LGB on the BS metric, although it still scores better on BS than the other benchmark credit scoring models. Thus, although the model performs well in many respects, it may not dominate on every dataset or task, which is consistent with the fact that every model has its own applicable scenarios and limitations; its hyperparameter selection and tuning can also be optimized further.

Among the fifteen models compared, LR performed worst in accuracy, AUC, and recall, NN performed worst in precision, and AdaBoost performed worst in BS. This indicates that the predictive performance of the benchmark credit scoring models is relatively low; LR and LDA had the worst overall performance. Because Bankfear is a large dataset whose sample size is sufficient for training machine learning models, data sparsity can be ruled out as a cause. The poor performance of LDA and LR is therefore mainly attributable to their linear nature. In addition, compared with more advanced ensemble learning methods, LDA is less effective at processing large-scale datasets and cannot fully learn the dataset's features, which reduces its prediction accuracy.

Table 5 shows the performance comparison of the credit scoring models on the LC dataset. As Table 5 shows, XGBoost-B-GHM achieved the best results on every evaluation metric. This notable result suggests, on the one hand, that the parameter settings for the LC dataset are close to optimal and, on the other hand, that the model markedly improves the classification performance of its base model. MG-GBDT and XGB also show good classification and prediction performance. Because XGB has lower training complexity than XGBoost-B-GHM, it can serve as an effective alternative to it. The results in Table 5 also show that KNN is nearly the worst-performing model on the LC dataset, which is similar, though not identical, to the results on the Bankfear dataset. As with the ROC curves, the extreme class imbalance leads to poor classification and prediction performance for KNN.

Table 6 displays the performance comparison of various credit scoring models on the Fannie dataset. As can be observed from

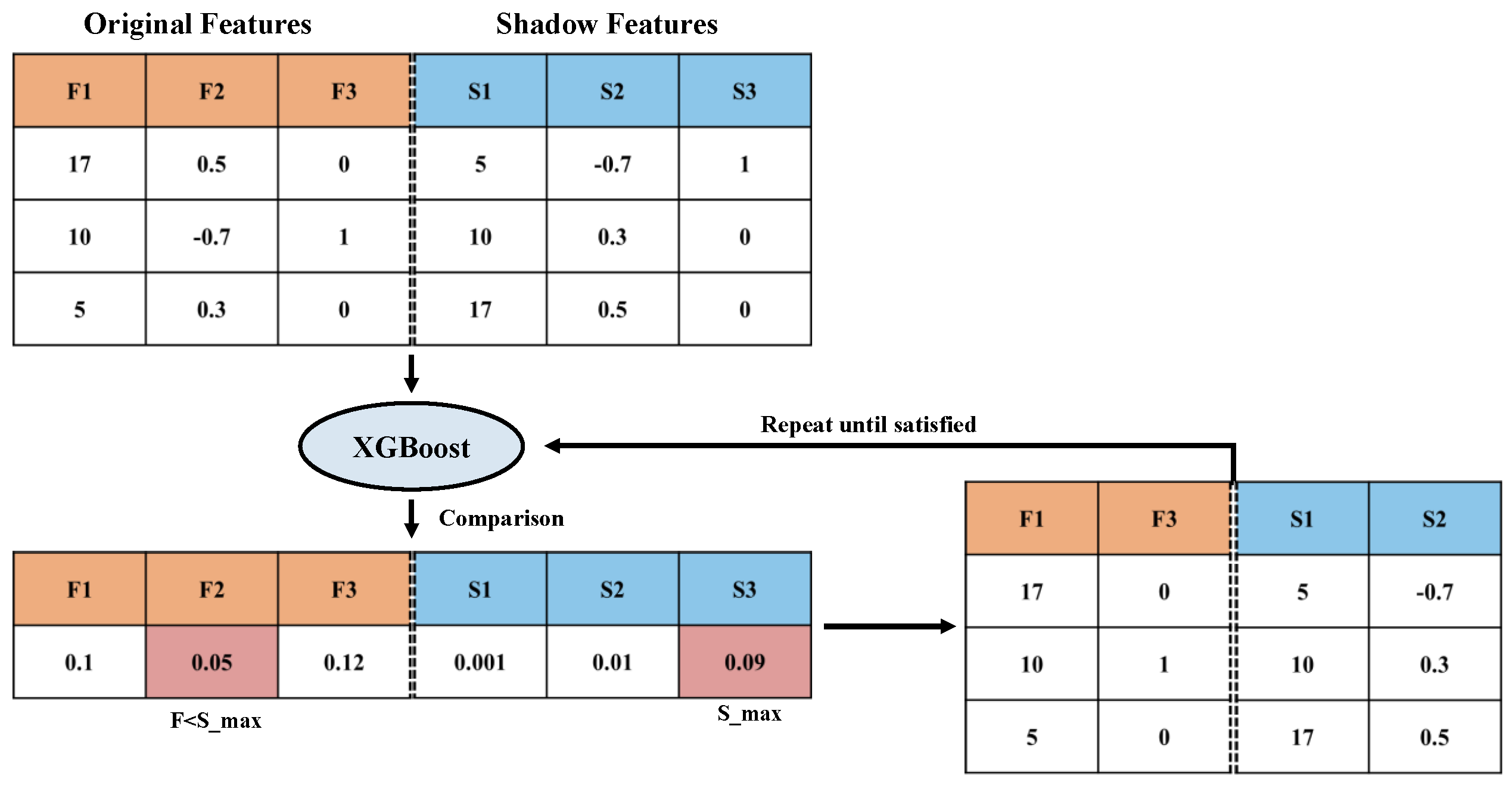

Table 6, compared with the performance of MG-GBDT, XGB, and LGB, the overall performance of the XGBoost-B-GHM has been greatly improved, especially in accuracy, AUC, and recall rate, and the XGBoost-B-GHM has achieved the best results. In the precision score, MG-GBDT is better than the XGBoost-B-GHM, and, in the Brier score, all the models except AdaBoost, KNN, and NN are better than the new model. This result may be related to the characteristics of the Fannie dataset. Boruta is an effective method for feature selection, but it may remove some features that are critical to the model performance under different hyperparameter settings for different datasets. At the same time, hyperparameter adjustment is also crucial. The Brier score can be reduced by tuning the parameters without affecting the overall performance.

Table 7 reveals the performance comparison of the credit scoring models on the Give dataset. Similar in some respects to the results on the Bankfear and Fannie datasets, XGBoost-B-GHM achieves the best results on three metrics: accuracy, AUC, and recall. On precision, XGB is significantly higher than all the other models, and LGB's Brier score is better than that of XGBoost-B-GHM. XGB's precision is 25% higher than that of XGBoost-B-GHM, but its recall is 86.7% lower, a considerable gap. The proposed model, XGBoost-B-GHM, thus shows a large improvement over the original XGBoost algorithm. Considering the other performance aspects, LGB may be an effective alternative to XGBoost-B-GHM.

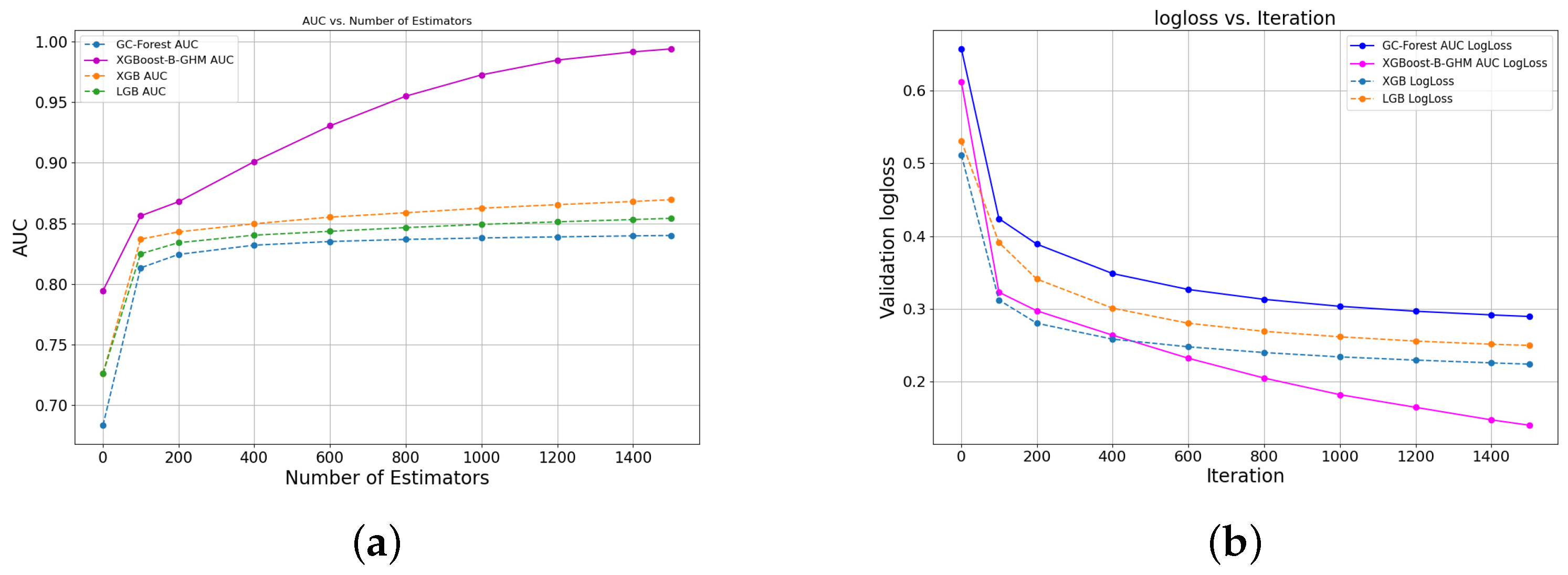

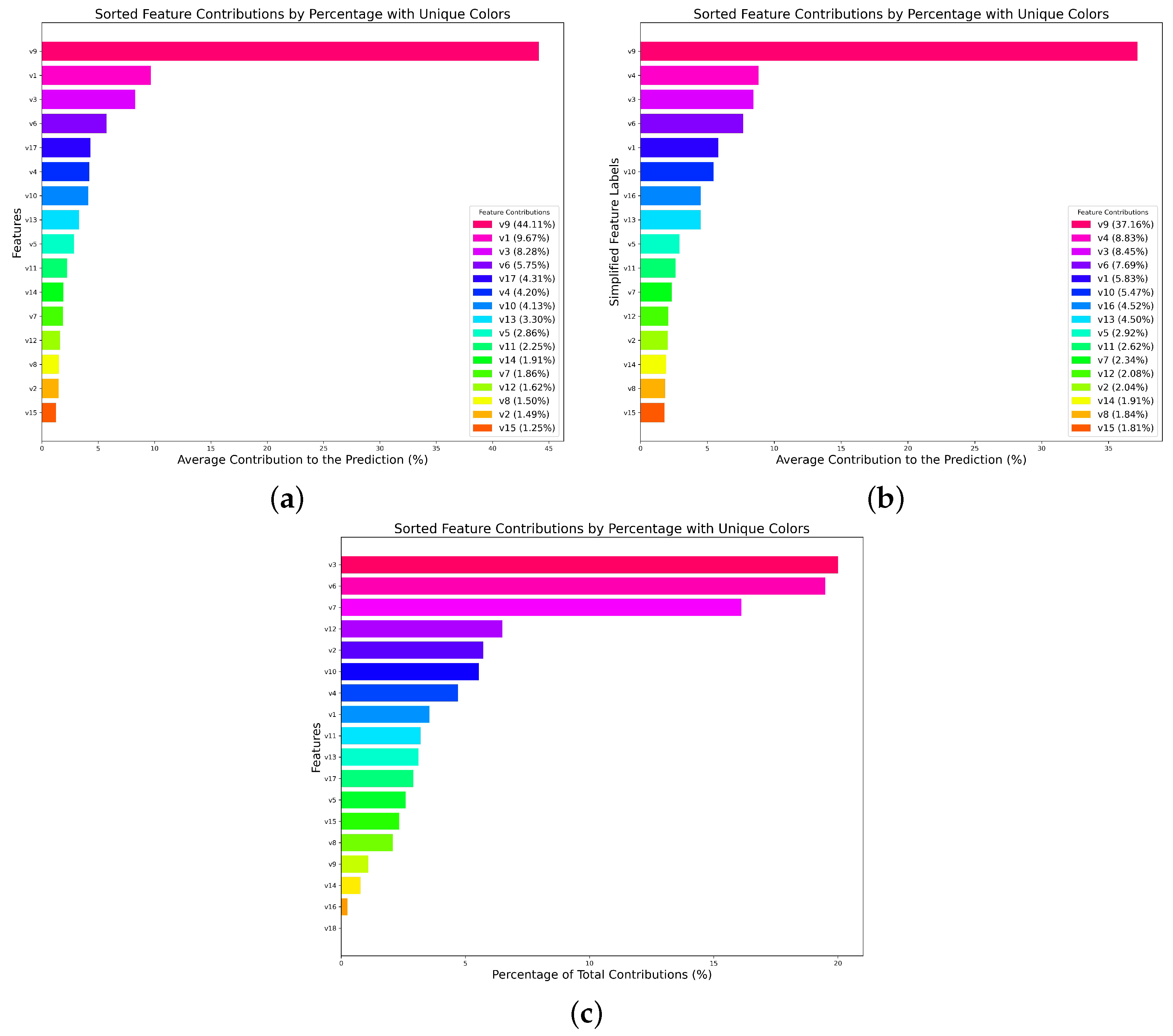

4.3. AUC and Loss Curves of Ensemble Models

Because several boosting models performed competitively on the ROC curves, we further compared the AUC and loss curves of MG-GBDT, XGB, LGB, and XGBoost-B-GHM. AUC accounts for the model's performance at all possible thresholds, so it gives a more complete picture of the model's classification capability than accuracy or recall at a single threshold. Moreover, when tuning parameters it is important to observe how the model's metrics change during training, so the validation loss is also included in our visualization.

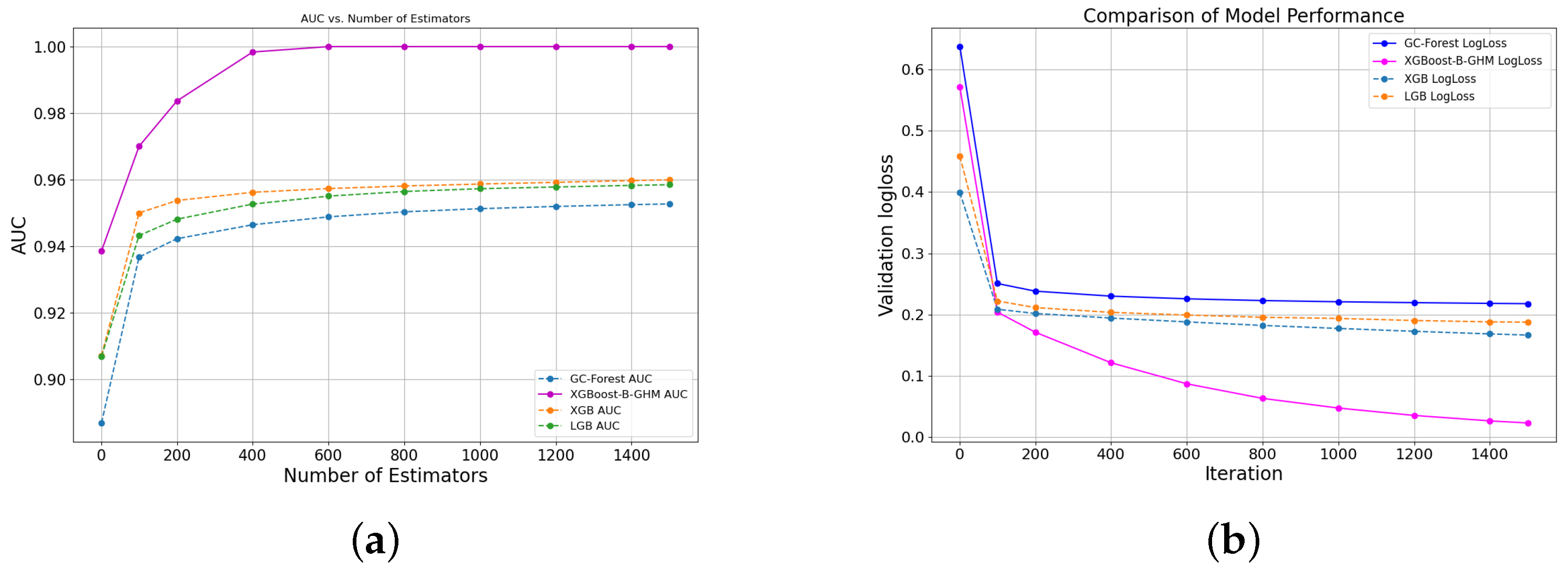

Figures 5, 6, 7, and 8 show the AUC and loss curves of the four advanced ensemble models on the four credit scoring datasets, where GC-Forest represents the core algorithm of the MG-GBDT model and Boruta-XGB represents the core algorithm of XGBoost-B-GHM.

Figure 5 shows the AUC and loss curves on the LC dataset. The overall trends of the two curves and the gaps between the models are similar to those on the Bankfear dataset, but performance on the LC dataset is even better. The AUC curve of XGBoost-B-GHM converges to 1 after 400 iterations. LGB and XGB perform similarly, with XGB slightly higher than LGB; both converge to 0.96 after 400 iterations, and GC-Forest performs worst. In the comparison of the ensemble loss curves in Figure 5b, XGBoost-B-GHM has the smallest prediction error, while the relative performance of XGB and LGB is the opposite of that on their AUC curves. This indicates that XGBoost-B-GHM is the best approach for credit scoring on the LC dataset.

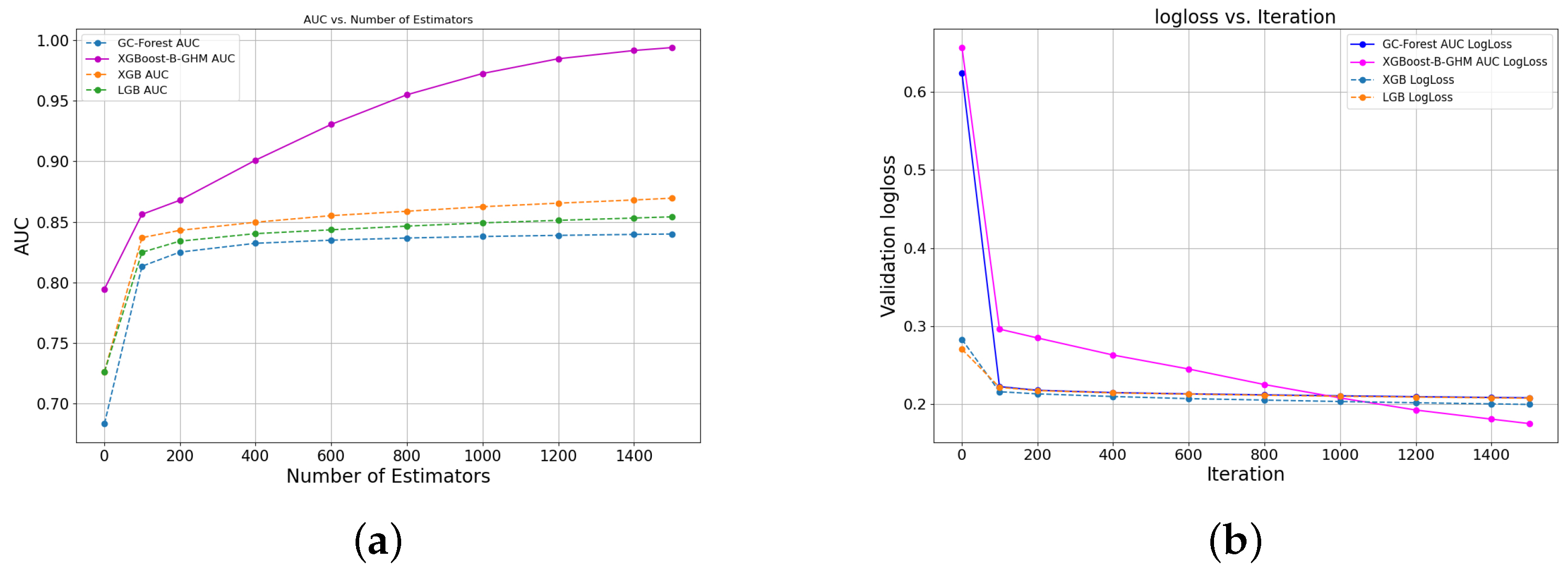

Figure 6 presents the results on the Bankfear dataset: Figure 6a shows the AUC curves of the four ensemble methods, and Figure 6b shows their loss curves. After 200 iterations, the slope of the AUC curve of XGBoost-B-GHM increases significantly, and its performance becomes far superior to that of the other three ensemble models. After 400 iterations, the prediction error of XGBoost-B-GHM decreases significantly; unlike the other three ensemble models, however, the loss curve of the new model is still trending downward after 1000 iterations and needs more iterations to stabilize, leveling off only after 1400 iterations.

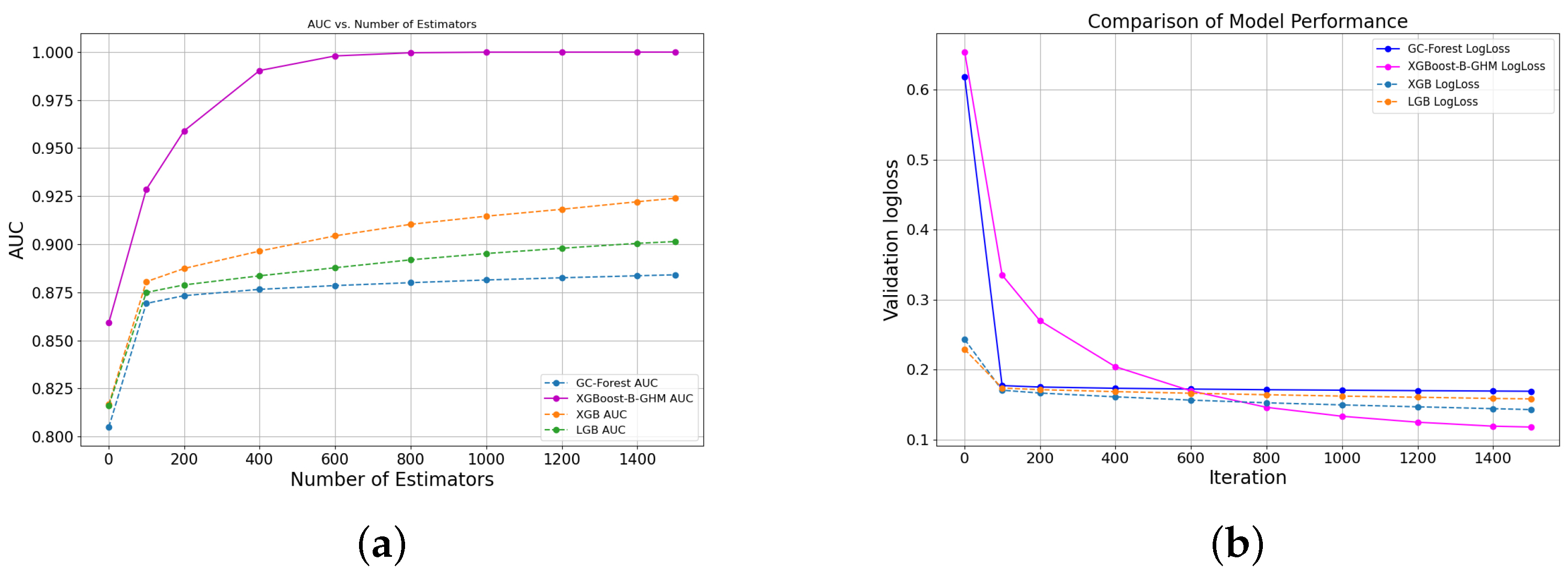

Figure 7 presents the AUC and loss curves on the Fannie dataset. In Figure 7a, the best AUC curve on the Fannie dataset is again provided by XGBoost-B-GHM, and the ranking of the other three ensemble models is unchanged: XGB is best, LGB second, and GC-Forest worst, with a large gap between these three models and XGBoost-B-GHM. As depicted in Figure 7b, the prediction error of XGBoost-B-GHM is large when the number of iterations is small, and after 1000 iterations it reaches the lowest converged loss. In addition, the converged losses of LGB and GC-Forest are similar, and the fine-grained comparison plot shows that the loss of LGB is slightly lower than that of GC-Forest.

Figure 8 shows the AUC and loss curves on the Give dataset. Figure 8a,b shows that XGBoost-B-GHM again obtains the best converged AUC and converged loss. XGB obtains the second-best converged AUC and loss, but its AUC remains significantly lower than that of XGBoost-B-GHM.

On all four datasets, the loss of XGBoost-B-GHM drops sharply at the beginning of training, indicating that the learning rate is set appropriately and that the gradient-based optimization is making progress. After a certain number of iterations, the loss decreases steadily until it stabilizes. On the Give dataset, the prediction error of XGBoost-B-GHM after 100 iterations is much smaller than that of the other models; on the Bankfear dataset, the same holds after 400 iterations. After more than 1000 iterations, the prediction error of the proposed model on both the LC and Fannie datasets gradually levels off. Figures 7b and 8b also show that the loss curves of XGB and LGB are similar, because both are built on the gradient boosting framework with similar loss functions and optimization objectives. XGBoost-B-GHM embeds the GHM loss function in XGB and attains smaller errors than both XGB and LGB, which further confirms the strong performance of GHM-embedded XGB in handling imbalanced data.
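Statements such as "the loss levels off after 1000 iterations" can be made reproducible with an explicit stopping rule applied to the recorded validation-loss curve. The sketch below uses a hypothetical criterion (net relative change of at most `rel_tol` over a `window` of iterations); this section does not state how stability was judged, so both the rule and its default values are assumptions.

```python
def plateau_iteration(losses, window=100, rel_tol=1e-3):
    """Return the first iteration i whose loss differs from the loss `window`
    iterations later by at most rel_tol * |losses[i]|, or None if the curve
    never settles. The criterion itself is a hypothetical choice."""
    for i in range(len(losses) - window):
        start, end = losses[i], losses[i + window]
        if abs(start - end) <= rel_tol * abs(start):
            return i
    return None


# Synthetic validation-loss curve: linear decrease for 500 iterations, then flat.
curve = [1.0 - 0.001 * i for i in range(500)] + [0.5] * 1000
print(plateau_iteration(curve))  # 500
```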

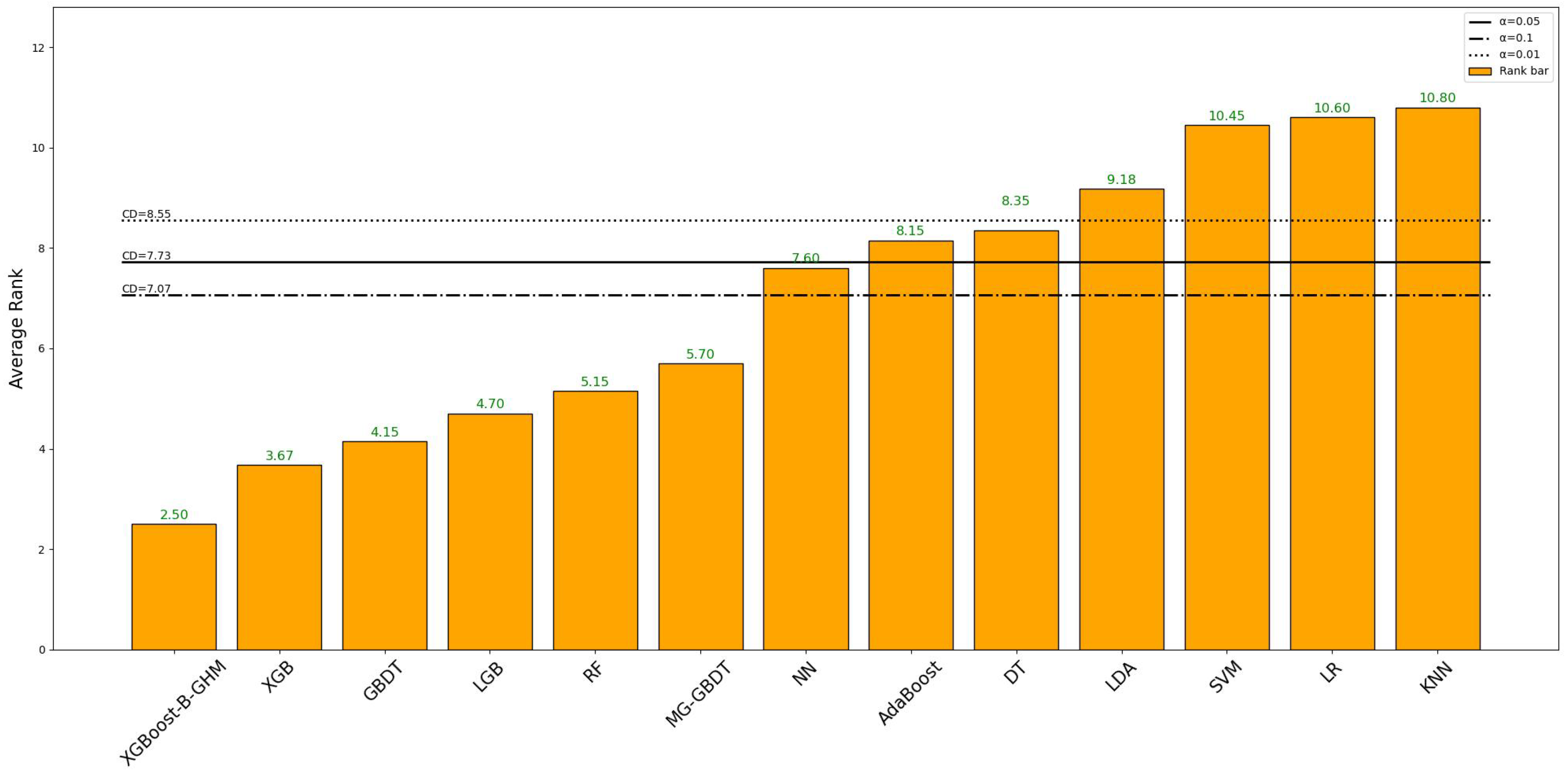

4.6. Significance Test

To test whether the performance differences among the models in the experiment are statistically significant, a statistical significance test is conducted. Because we cannot make simple assumptions about the population distribution of large datasets, we adopted a non-parametric test, the Friedman test, rather than a parametric hypothesis test. The Friedman statistic is calculated as follows:

$$\chi_F^2 = \frac{12N}{k(k+1)}\left[\sum_{j=1}^{k} R_j^2 - \frac{k(k+1)^2}{4}\right]$$

where $R_j$ denotes the mean rank of the $j$-th method, $k$ denotes the number of methods, and $N$ denotes the number of datasets or experiments. The Friedman statistic approximately follows a chi-squared distribution with $k-1$ degrees of freedom; its value is 29.8479, with a $p$-value of 0.0029, as shown in Table 18. This $p$-value is less than each significance level $\alpha$ considered (0.1, 0.05, and 0.01), so the null hypothesis can be rejected and the scores of the methods are considered significantly different. Because the null hypothesis is rejected, the Nemenyi post-hoc test is performed. In the Nemenyi test, two methods are significantly different if the difference between their mean ranks reaches the critical difference (CD), which is calculated as

$$CD = q_\alpha \sqrt{\frac{k(k+1)}{6N}}$$

In the above formula, $\alpha$ denotes the significance level. In this experiment, we set $\alpha$ to 0.1, 0.05, and 0.01 to calculate the CD at each significance level, and $q_\alpha$ is the critical value obtained from the corresponding statistical table, with $q_\alpha = 2.9480$. Using Equation (18), the CD values at these significance levels are 7.07, 7.73, and 8.55, respectively.
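The Friedman statistic and the Nemenyi critical difference can be computed from a matrix of per-dataset scores with the NumPy sketch below. The function names are hypothetical; ranks are assigned so that rank 1 is the best (highest) score and ties share the average rank, and the toy score matrix is illustrative, not the paper's data.

```python
import numpy as np


def average_ranks(scores):
    """Mean rank R_j of each method (column) over the N datasets (rows).

    Rank 1 is the best (highest) score; tied scores share the average rank.
    """
    scores = np.asarray(scores, dtype=float)
    ranks = np.empty_like(scores)
    for r, row in enumerate(scores):
        for j, v in enumerate(row):
            ranks[r, j] = 1 + np.sum(row > v) + (np.sum(row == v) - 1) / 2
    return ranks.mean(axis=0)


def friedman_chi2(mean_ranks, n):
    """Friedman statistic; approximately chi-squared with k - 1 degrees of freedom."""
    R = np.asarray(mean_ranks, dtype=float)
    k = R.size
    return 12 * n / (k * (k + 1)) * (np.sum(R ** 2) - k * (k + 1) ** 2 / 4)


def nemenyi_cd(q_alpha, k, n):
    """Critical difference of the Nemenyi post-hoc test."""
    return q_alpha * np.sqrt(k * (k + 1) / (6 * n))


# Illustrative scores (rows = 4 datasets, columns = 3 methods); not the paper's data.
toy = [[0.91, 0.88, 0.80],
       [0.85, 0.86, 0.79],
       [0.93, 0.90, 0.81],
       [0.89, 0.87, 0.83]]
R = average_ranks(toy)
print(R, friedman_chi2(R, n=len(toy)))
```

The p-value can then be obtained from the chi-squared survival function with k - 1 degrees of freedom (for example `scipy.stats.chi2.sf(stat, k - 1)`), and two methods differ significantly when their mean ranks differ by at least the CD returned by `nemenyi_cd`.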

Figure 11 depicts the average rank, over all metrics on the four datasets, of every credit scoring model tested in this paper. The three horizontal lines, from top to bottom, show the CD values calculated at significance levels of 0.01, 0.05, and 0.1, respectively. Figure 11 shows that XGBoost-B-GHM has an average rank of 2.5, the best among all 13 models. The experimental results show that the ensemble models perform much better than the single models, that tree-based structures are better suited to imbalanced data, and that the resulting performance improvement is significant. XGB performs better than LGB on imbalanced data and is a reliable alternative to XGBoost-B-GHM. SVM and LR, which are linear classifiers in their basic form, perform poorly on the imbalanced datasets. KNN ranks lowest, indicating that it may be unsuitable for imbalanced data, although it may perform better on other datasets.