Defining Complex Adaptive Systems: An Algorithmic Approach

Abstract

1. Introduction

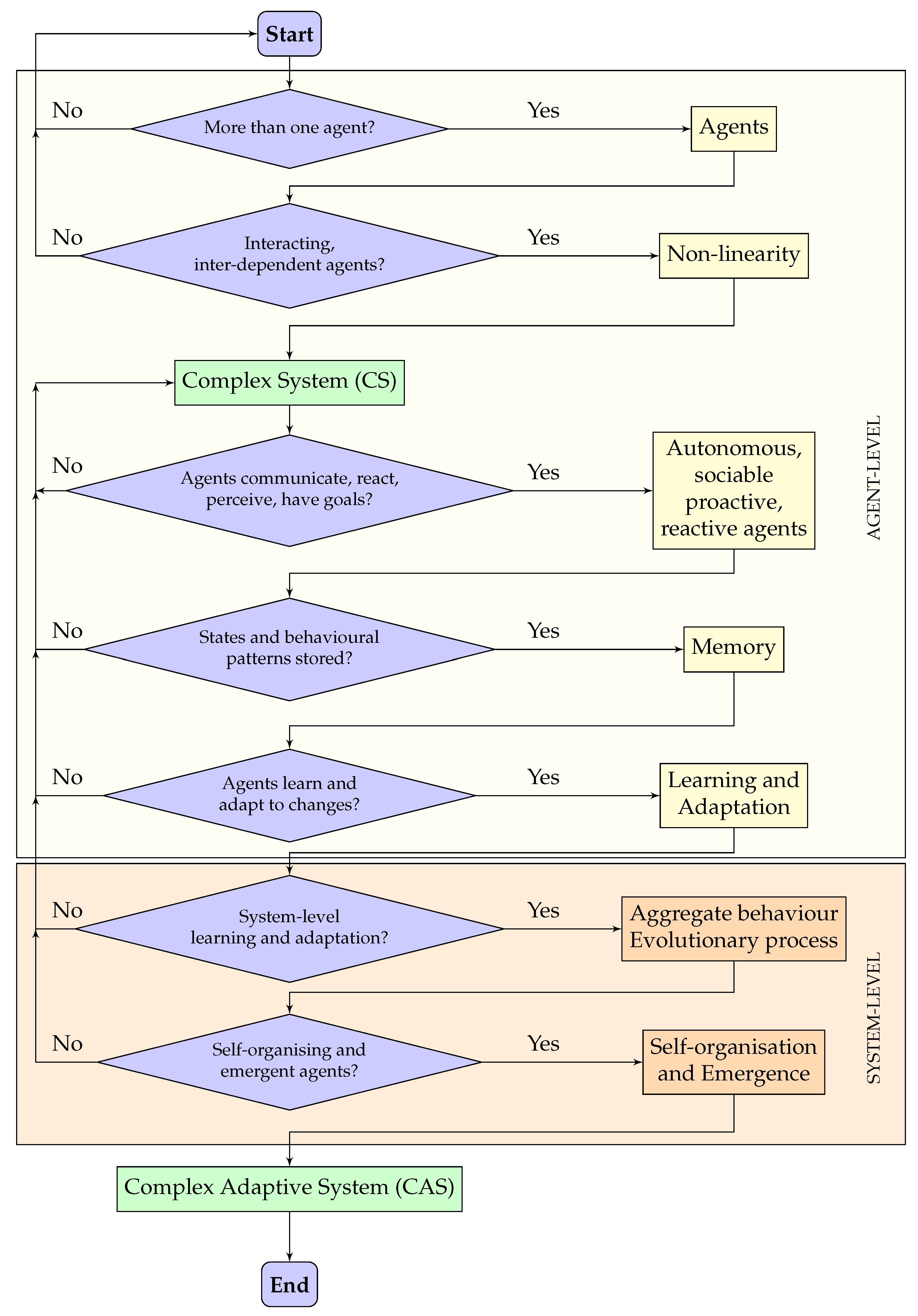

- A clear delineation between a CS and a CAS, providing minimal agent properties to meet a CS definition and an ordered set of properties to meet a full CAS definition;

- A robust algorithmic definition of a CAS that can act as a basis for an auditing tool that can determine whether a system is a CAS;

- An exploration of the proposed definition through two case studies.

2. Review of CAS Definitions

2.1. Systematic Review Methodology

2.2. Reviewed Definitions

- A CS comprises several interconnected elements interacting dynamically;

- These interactions are nonlinear, exhibiting rich behavioural patterns and competition;

- Because CSs constantly change and evolve, they do not hold equilibrium conditions; reaching equilibrium would make the system flat;

- Individual elements in CSs do not have knowledge of the behaviour of the whole system.
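The nonlinearity property listed above can be made concrete with a superposition check. A linear interaction satisfies f(a + b) = f(a) + f(b) and f(k·a) = k·f(a); a nonlinear one generally does not. The Python sketch below is only an illustration: `is_additive` and the two interaction rules are hypothetical and are not taken from the reviewed definitions. Because it samples random inputs, a failed sample shows that a rule is not linear, while passing every sample is only evidence of linearity.

```python
import math
import random


def is_additive(f, trials=100, tol=1e-9, seed=0):
    """Heuristic superposition test: f(a + b) == f(a) + f(b) and f(k * a) == k * f(a).

    A failed sample shows f is not linear; passing all samples is only evidence.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        a, b, k = rng.uniform(-10, 10), rng.uniform(-10, 10), rng.uniform(-5, 5)
        if not math.isclose(f(a + b), f(a) + f(b), rel_tol=tol, abs_tol=tol):
            return False
        if not math.isclose(f(k * a), k * f(a), rel_tol=tol, abs_tol=tol):
            return False
    return True


# Hypothetical interaction rules between two agents (illustrative only).
def proportional_response(signal):
    return 0.5 * signal  # response scales with the input: linear


def saturating_response(signal):
    return math.tanh(signal)  # response saturates for large inputs: nonlinear


print(is_additive(proportional_response))  # True
print(is_additive(saturating_response))    # False
```

In a system of many agents, such saturating or threshold-like responses are one way small changes in one agent's input can produce disproportionate effects elsewhere.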

2.3. Analysis

- What are the minimal agent properties required to define a CS or a CAS?

- What are the minimal properties for a system to be considered a CS?

- What are the minimal properties for a system to be considered a CAS?

- How can a CS and a CAS be differentiated?

2.3.1. Complexity and Hierarchy

2.3.2. Self-Organisation and Emergence

2.3.3. Minimal CS and CAS Properties

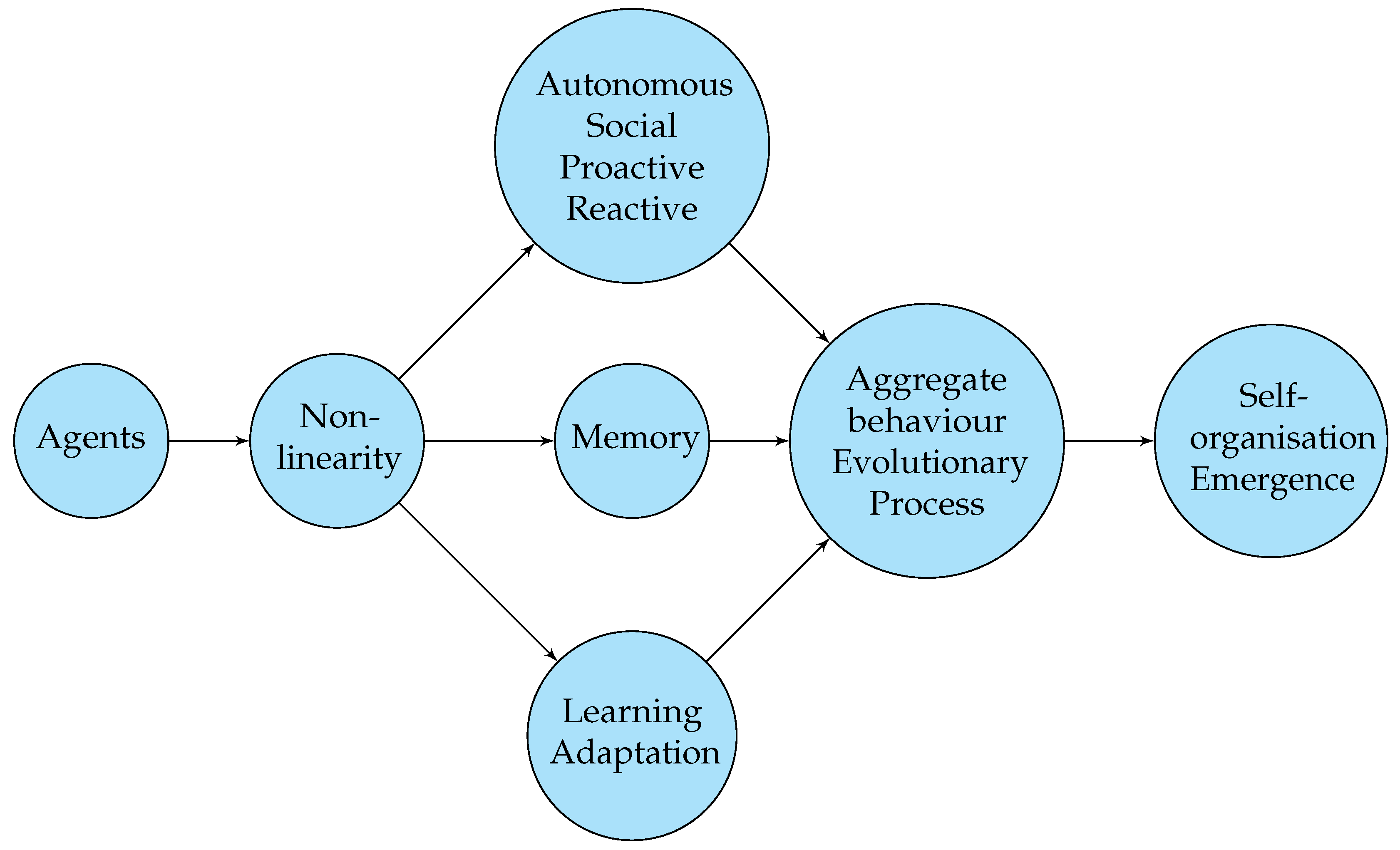

3. A Novel CAS Definition

3.1. First Stage: Complex System

3.2. Second Stage: Complex Adaptive System

3.2.1. Autonomous, Proactive, Reactive Agents with Social Ability

- Autonomous: Agents are defined to be autonomous if they perform their operations without any internal or external intervention. They are fully independent, and there is no central authority;

- Reactive: Agents perceive the environment in which they reside and respond to the changes in a timely manner;

- Proactive: Agents intend to act and have goals to be achieved, exhibiting goal-oriented behaviour;

- Social ability: Agents can communicate with one another and use this to act, react, and make decisions towards their goals, while remaining responsible for their own actions.
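The four agent properties listed above can be illustrated with a minimal Python sketch. The `Agent` class, the scalar "level" that agents observe, and the rule of closing half the gap to a goal are all illustrative assumptions rather than part of the definition; the point is only to show where each property appears in code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent showing where each of the four properties appears."""

    name: str
    goal: float  # proactive: the level this agent is trying to reach
    inbox: list[float] = field(default_factory=list)  # messages from peers

    def tell(self, other: Agent, observation: float) -> None:
        """Social ability: pass an observation to another agent."""
        other.inbox.append(observation)

    def decide(self, observed_level: float) -> float:
        """Return the adjustment this agent chooses to make this step.

        Reactive: the result depends on what the agent currently observes.
        Proactive: the adjustment is directed at the agent's own goal.
        Autonomous: the rule lives inside the agent; no central controller
        computes or overrides the decision.
        """
        # Combine the agent's own observation with any peer reports.
        reports = [observed_level, *self.inbox]
        self.inbox.clear()
        estimate = sum(reports) / len(reports)
        # Close half of the gap between the estimated level and the goal.
        return 0.5 * (self.goal - estimate)


a = Agent("a", goal=10.0)
b = Agent("b", goal=4.0)
b.tell(a, 6.0)          # b shares what it sees with a
print(a.decide(2.0))    # 3.0  (estimate (2 + 6) / 2 = 4, gap 6, half is 3)
print(b.decide(6.0))    # -1.0 (no reports, gap -2, half is -1)
```

Keeping the decision rule inside each agent, with peers influencing it only through messages, is what separates this sketch from a centrally controlled simulation.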

3.2.2. Memory

3.2.3. Learning and Adaptation

3.2.4. Aggregate Behaviour and Evolutionary Process

3.2.5. Self-Organisation and Emergence
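The two-stage structure of Sections 3.1 and 3.2.1 to 3.2.5 lends itself to an ordered checklist: a system is first tested against the CS properties, and only then against the CAS properties in section order. The Python sketch below assumes that an analyst has already judged each property as demonstrated or not, and that the audit stops at the first property that fails; the property names, the `Verdict` enum, and the `audit` function are hypothetical and do not reproduce the authors' algorithm or auditing tool.

```python
from __future__ import annotations

from enum import Enum


class Verdict(Enum):
    NOT_CS = "not a complex system"
    CS = "complex system, but not a CAS"
    CAS = "complex adaptive system"


# Stage 1 (Section 3.1): properties required for a Complex System.
CS_PROPERTIES = [
    "interconnected_interdependent_agents",
    "nonlinearity",
]

# Stage 2 (Section 3.2): CAS properties, in the order of Sections 3.2.1 to 3.2.5.
CAS_PROPERTIES = [
    "autonomous_proactive_reactive_social_agents",
    "memory",
    "learning_and_adaptation",
    "aggregate_behaviour_and_evolutionary_process",
    "self_organisation_and_emergence",
]


def audit(evidence: dict[str, bool]) -> tuple[Verdict, str | None]:
    """Return the verdict and the first property that failed (None if all hold).

    `evidence` maps property names to an analyst's judgement; a property that
    is missing from the mapping is treated as not demonstrated.
    """
    for prop in CS_PROPERTIES:
        if not evidence.get(prop, False):
            return Verdict.NOT_CS, prop
    for prop in CAS_PROPERTIES:
        if not evidence.get(prop, False):
            return Verdict.CS, prop
    return Verdict.CAS, None


# Hypothetical evidence, not taken from either case study.
hypothetical = {p: True for p in CS_PROPERTIES + CAS_PROPERTIES}
hypothetical["memory"] = False
verdict, failed = audit(hypothetical)
print(verdict.name, failed)  # CS memory
```

Reporting the first failing property, rather than only a yes/no answer, indicates how far a system progresses along the ordered definition.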

4. Case Study 1: Clinical Commissioning Groups (CCGs)

4.1. Complex System

4.1.1. Interconnected and Interdependent Agents

4.1.2. Nonlinearity

4.2. Complex Adaptive System

4.2.1. Autonomy of Agents

4.2.2. Memory

4.2.3. From Learning and Adaptation to Self-Organisation and Emergence

4.2.4. Results

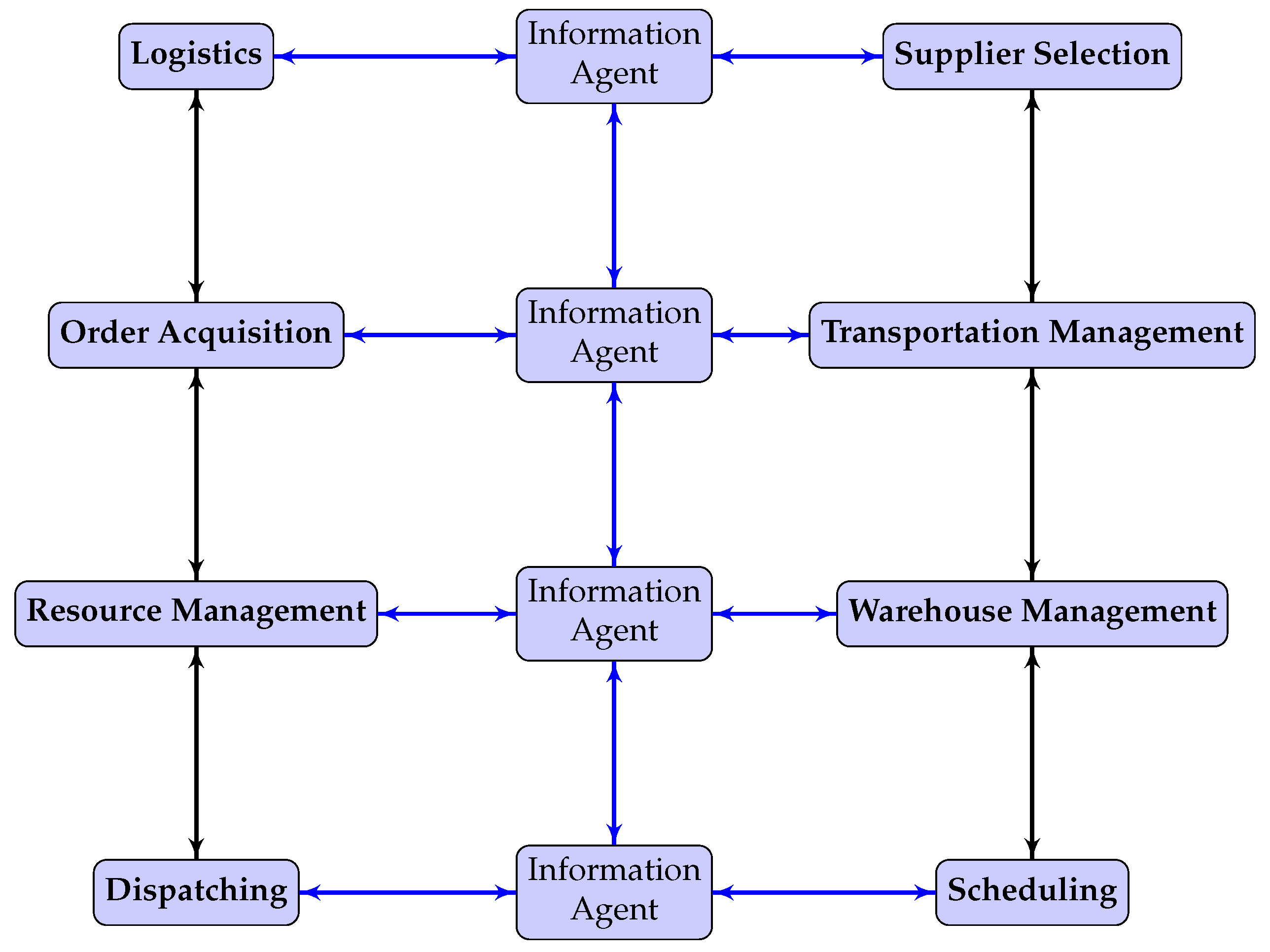

5. Case Study 2: Supply Chain Management

5.1. Complex System

5.1.1. Interconnected and Interdependent Agents

5.1.2. Nonlinearity

5.2. Complex Adaptive System

5.2.1. Autonomy of Agents

5.2.2. Memory

5.2.3. From Learning and Adaptation to Self-Organisation and Emergence

5.2.4. Results

5.3. Further Examples

6. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zimmerman, B.; Lindberg, C.; Plsek, P. A complexity science primer: What is complexity science and why should I learn about it. In Adapted From: Edgeware: Lessons From Complexity Science for Health Care Leaders; VHA Inc.: Dallas, TX, USA, 1998.

2. Rouse, W.B. Health care as a complex adaptive system: Implications for design and management. Bridge-Wash.-Natl. Acad. Eng. 2008, 38, 17.

3. Mitchell, M. Complex systems: Network thinking. Artif. Intell. 2006, 170, 1194–1212.

4. Abbott, R.; Hadžikadić, M. Complex adaptive systems, systems thinking, and agent-based modeling. In Advanced Technologies, Systems, and Applications; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–8.

5. Hodiamont, F.; Jünger, S.; Leidl, R.; Maier, B.O.; Schildmann, E.; Bausewein, C. Understanding complexity—The palliative care situation as a complex adaptive system. BMC Health Serv. Res. 2019, 19, 1–14.

6. Hughes, G.; Shaw, S.E.; Greenhalgh, T. Rethinking integrated care: A systematic hermeneutic review of the literature on integrated care strategies and concepts. Milbank Q. 2020, 98, 446–492.

7. Jagustović, R.; Zougmoré, R.B.; Kessler, A.; Ritsema, C.J.; Keesstra, S.; Reynolds, M. Contribution of systems thinking and complex adaptive system attributes to sustainable food production: Example from a climate-smart village. Agric. Syst. 2019, 171, 65–75.

8. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report EBSE 2007-001, Keele University and Durham University Joint Report; Scientific Research: Atlanta, GA, USA, 2007.

9. Martín-Martín, A.; Thelwall, M.; Orduna-Malea, E.; Delgado López-Cózar, E. Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: A multidisciplinary comparison of coverage via citations. Scientometrics 2021, 126, 871–906.

10. Cilliers, P. Complexity and Postmodernism: Understanding Complex Systems; Routledge: London, UK, 2002.

11. Ladyman, J.; Lambert, J.; Wiesner, K. What is a complex system? Eur. J. Philos. Sci. 2013, 3, 33–67.

12. Simon, H.A. The architecture of complexity. In Facets of Systems Science; Springer: Berlin/Heidelberg, Germany, 1991; pp. 457–476.

13. Rudall, B.H.; Wallis, S.E. Emerging order in CAS theory: Mapping some perspectives. Kybernetes 2008, 37, 1016–1029.

14. Chan, S. Complex adaptive systems. In ESD.83 Research Seminar in Engineering Systems; MIT: Cambridge, MA, USA, 2001; Volume 31, pp. 1–19.

15. Holland, J.H. Complex adaptive systems. Daedalus 1992, 121, 17–30.

16. Plsek, P.E.; Greenhalgh, T. The challenge of complexity in health care. BMJ 2001, 323, 625–628.

17. Baryannis, G.; Woznowski, P.; Antoniou, G. Rule-Based Real-Time ADL Recognition in a Smart Home Environment. In Proceedings of the Rule Technologies. Research, Tools, and Applications: 10th International Symposium on Rules and Rule Markup Languages for the Semantic Web (RuleML 2016), Stony Brook, NY, USA, 6–9 July 2016; Alferes, J.J., Bertossi, L., Governatori, G., Fodor, P., Roman, D., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9718, pp. 325–340.

18. Dorri, A.; Kanhere, S.S.; Jurdak, R. Multi-agent systems: A survey. IEEE Access 2018, 6, 28573–28593.

19. Wooldridge, M.; Jennings, N.R. Intelligent agents: Theory and practice. Knowl. Eng. Rev. 1995, 10, 115–152.

20. Franklin, S.; Graesser, A. Is it an Agent, or just a Program?: A Taxonomy for Autonomous Agents. In International Workshop on Agent Theories, Architectures, and Languages; Springer: Berlin/Heidelberg, Germany, 1996; pp. 21–35.

21. Castle, C.J.; Crooks, A.T. Principles and Concepts of Agent-Based Modelling for Developing Geospatial Simulations; Centre for Advanced Spatial Analysis (UCL): London, UK, 2006.

22. Camazine, S.; Deneubourg, J.; Franks, N.; Sneyd, J.; Theraulaz, G.; Bonabeau, E. Self-Organization in Biological Systems; Princeton University Press: Princeton, NJ, USA, 2001.

23. Amaral, L.A.; Ottino, J.M. Complex networks: Augmenting the framework for the study of complex systems. Eur. Phys. J. B 2004, 38, 147–162.

24. Funtowicz, S.; Ravetz, J.R. Emergent complex systems. Futures 1994, 26, 568–582.

25. Holden, L.M. Complex adaptive systems: Concept analysis. J. Adv. Nurs. 2005, 52, 651–657.

26. Siegenfeld, A.F.; Bar-Yam, Y. An introduction to complex systems science and its applications. Complexity 2020, 2020, 6105872.

27. Dooley, K. Complex adaptive systems: A nominal definition. Chaos Netw. 1996, 8, 2–3.

28. Tarvid, A. Complex Adaptive Systems and Agent-Based Modelling. In Agent-Based Modelling of Social Networks in Labour–Education Market System; Springer: Berlin/Heidelberg, Germany, 2016; pp. 23–38.

29. Carmichael, T.; Hadžikadić, M. The fundamentals of complex adaptive systems. In Complex Adaptive Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 1–16.

30. NHS Commissioning Board. Clinical Commissioning Group Governing Body Members: Role Outlines, Attributes and Skills; NHS Commissioning: Leeds, UK, 2012.

31. Fox, M.S.; Chionglo, J.F.; Barbuceanu, M. The Integrated Supply Chain Management System; Department of Industrial Engineering, University of Toronto: Toronto, ON, Canada, 1993.

32. Ladyman, J.; Wiesner, K. What Is a Complex System? Yale University Press: New Haven, CT, USA, 2020.

33. Sumpter, D.J.; Beekman, M. From nonlinearity to optimality: Pheromone trail foraging by ants. Anim. Behav. 2003, 66, 273–280.

34. Carpendale, J.I.M.; Frayn, M. Autonomy in ants and humans. Behav. Brain Sci. 2016, 39, e95.

35. Van Bilsen, A.; Bekebrede, G.; Mayer, I. Understanding Complex Adaptive Systems by Playing Games. Inform. Educ. 2010, 9, 1–18.

36. Alibrahim, A.; Wu, S. Modelling competition in health care markets as a complex adaptive system: An agent-based framework. Health Syst. 2020, 9, 212–225.

37. Braz, A.C.; de Mello, A.M. Circular economy supply network management: A complex adaptive system. Int. J. Prod. Econ. 2022, 243, 108317.

38. Keshavarz, N.; Nutbeam, D.; Rowling, L.; Khavarpour, F. Schools as social complex adaptive systems: A new way to understand the challenges of introducing the health promoting schools concept. Soc. Sci. Med. 2010, 70, 1467–1474.

39. Long, Q.; Li, S. The Innovation Network as a Complex Adaptive System: Flexible Multi-agent Based Modeling, Simulation, and Evolutionary Decision Making. In Proceedings of the 2014 Fifth International Conference on Intelligent Systems Design and Engineering Applications, Hunan, China, 15–16 June 2014; pp. 1060–1064.

40. Svítek, M.; Skobelev, P.; Kozhevnikov, S. Smart City 5.0 as an urban ecosystem of Smart services. In Service Oriented, Holonic and Multi-Agent Manufacturing Systems for Industry of the Future: Proceedings of SOHOMA 2019 9; Springer: Berlin/Heidelberg, Germany, 2020; pp. 426–438.

41. Rittel, H.W.J.; Webber, M.M. Dilemmas in a general theory of planning. Policy Sci. 1973, 4, 155–169.

42. Francalanza, E.; Borg, J.; Constantinescu, C. Approaches for handling wicked manufacturing system design problems. Procedia CIRP 2018, 67, 134–139.

43. Papadakis, E.; Baryannis, G.; Petutschnig, A.; Blaschke, T. Function-Based Search of Place Using Theoretical, Empirical and Probabilistic Patterns. ISPRS Int. J. Geo-Inf. 2019, 8, 92.

44. Omar, M.; Baryannis, G. Semi-automated development of conceptual models from natural language text. Data Knowl. Eng. 2020, 127, 101796.

| Term | Definition | Sources |
|---|---|---|
| Complexity | Involves the formation of inter-relationships among multiple interacting elements. | [1] |
| Hierarchy | One of the pivotal building blocks of a CS, representing the relationships among subsystems. | [12] |
| | Decomposition of a CAS into a hierarchy of subsystems is not suitable because of the potential for information loss during the interactions among subsystems. | [2] |
| Self-organisation | The ability of CSs to “develop or change internal structure spontaneously and adaptively in order to cope with, or manipulate, their environment”. | [10] |
| Emergence | An unpredictable situation resulting from the outcome of the relationships among agents in a CAS, in which the evolution of the system cannot be described. | [1] |
| | A characteristic of systems exhibiting “downward causation”, representing an increase in complexity. | [11] |
| Complicated | A system containing large numbers of components that have limited ability to respond to changes in the environment. | [23] |
| Complex | A system containing large numbers of components that are able to adapt, self-govern, and emerge. | [23] |

| Term | Definition |
|---|---|
| Hierarchy and Complex Systems | A hierarchical organisation of agents is not a prerequisite for a Complex System. |
| Complex System | A system that contains multiple interconnected and interdependent interacting agents that exhibit nonlinear behaviour. |
| From Complex to Complex Adaptive Systems | Complexity is a necessary but insufficient condition for a Complex Adaptive System, as memory, learning and adaptation, and self-organisation and emergence must also be supported to achieve adaptive behaviour. |
| Memory | The ability of an agent to store patterns of behaviour dynamically, retaining or discarding patterns based on how frequently they occur. |
| Learning and Adaptation | An agent learns as a result of its own actions, the actions of other agents, and changes to the environment or agents’ structures. Adaptation is observed when an agent changes its behaviour or strategies as a result of learning. |
| Aggregate Behaviour | The amalgamation of individual agents’ interactions; this takes place at the system level, which is referred to as the macroscopic level [10]. |
| Evolutionary Process | Leads a CAS to develop over time based on learning and adaptation taking place at the system level [10]. |
| Self-organisation and Emergence | The ability of agents to organise themselves through memory, learning, and adaptation at both the agent and system levels, which leads them to exhibit properties and behaviours at the system level that are not apparent at the agent level. |
| Complex Adaptive System | A Complex System in which agents are autonomous, proactive, and reactive; have social ability; have memory; can learn and adapt; show aggregate behaviour; show evolutionary processes; and show self-organisation and emergence. |
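The Memory definition in the table above (storing behaviour patterns dynamically and retaining or discarding them by frequency) can be sketched as a bounded frequency store. In this Python sketch, the `FrequencyMemory` class, its fixed capacity, and the rule of evicting the least frequent pattern are assumptions made for illustration. The `preferred` method gives a toy analogue of the Learning and Adaptation row: when the most frequent remembered pattern changes, the behaviour the agent would reuse changes with it.

```python
from __future__ import annotations

from collections import Counter


class FrequencyMemory:
    """Bounded store of behaviour patterns that keeps frequent ones, drops rare ones."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.counts: Counter[str] = Counter()

    def observe(self, pattern: str) -> None:
        """Record one occurrence of `pattern`, evicting the rarest if memory is full."""
        if pattern not in self.counts and len(self.counts) >= self.capacity:
            # Discard the least frequently observed pattern to make room.
            rarest = min(self.counts, key=self.counts.__getitem__)
            del self.counts[rarest]
        self.counts[pattern] += 1

    def preferred(self) -> str | None:
        """The most frequent retained pattern, or None if nothing is stored."""
        if not self.counts:
            return None
        return self.counts.most_common(1)[0][0]


memory = FrequencyMemory(capacity=2)
for p in ["cooperate", "cooperate", "defect", "wait"]:
    memory.observe(p)
print(dict(memory.counts))  # {'cooperate': 2, 'wait': 1}
print(memory.preferred())   # cooperate

for _ in range(3):
    memory.observe("wait")
print(memory.preferred())   # wait
```

Evicting before inserting ensures a newly observed pattern always gets a chance to accumulate occurrences; evicting after inserting would let a full memory reject every new pattern immediately.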

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ahmad, M.A.; Baryannis, G.; Hill, R. Defining Complex Adaptive Systems: An Algorithmic Approach. Systems 2024, 12, 45. https://doi.org/10.3390/systems12020045
