Constant Companionship Without Disturbances: Enhancing Transparency to Improve Automated Tasks in Urban Rail Transit Driving

Abstract

1. Introduction

Research Gap and Motivation

- A transparency design framework is proposed that provides continuous feedback, explanatory notes, and advance predictions for the different automation states encountered in urban rail transit driving.

- The impact of different transparency levels on task performance is revealed through simulated driving trials using multidimensional metrics: situational awareness (SA), trust in automation (TiA), sense of agency (SoA), workload, takeover performance, and visual behavior.

- The advantages and limitations of each transparency level are clarified by combining experimental data with participant interviews, providing practical guidance for optimizing urban rail transit interfaces.

2. Methods

2.1. Participants

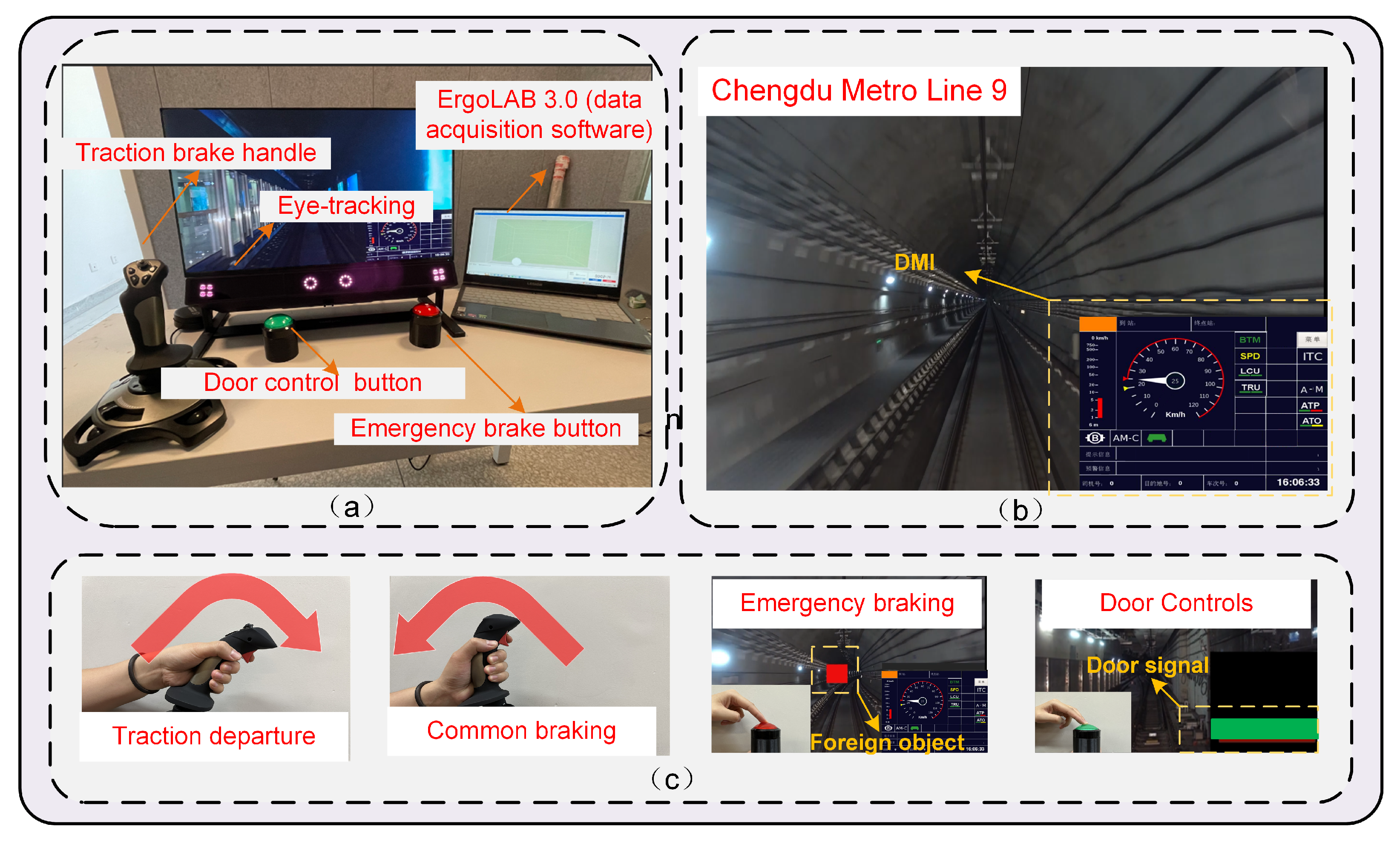

2.2. Experiment Environment

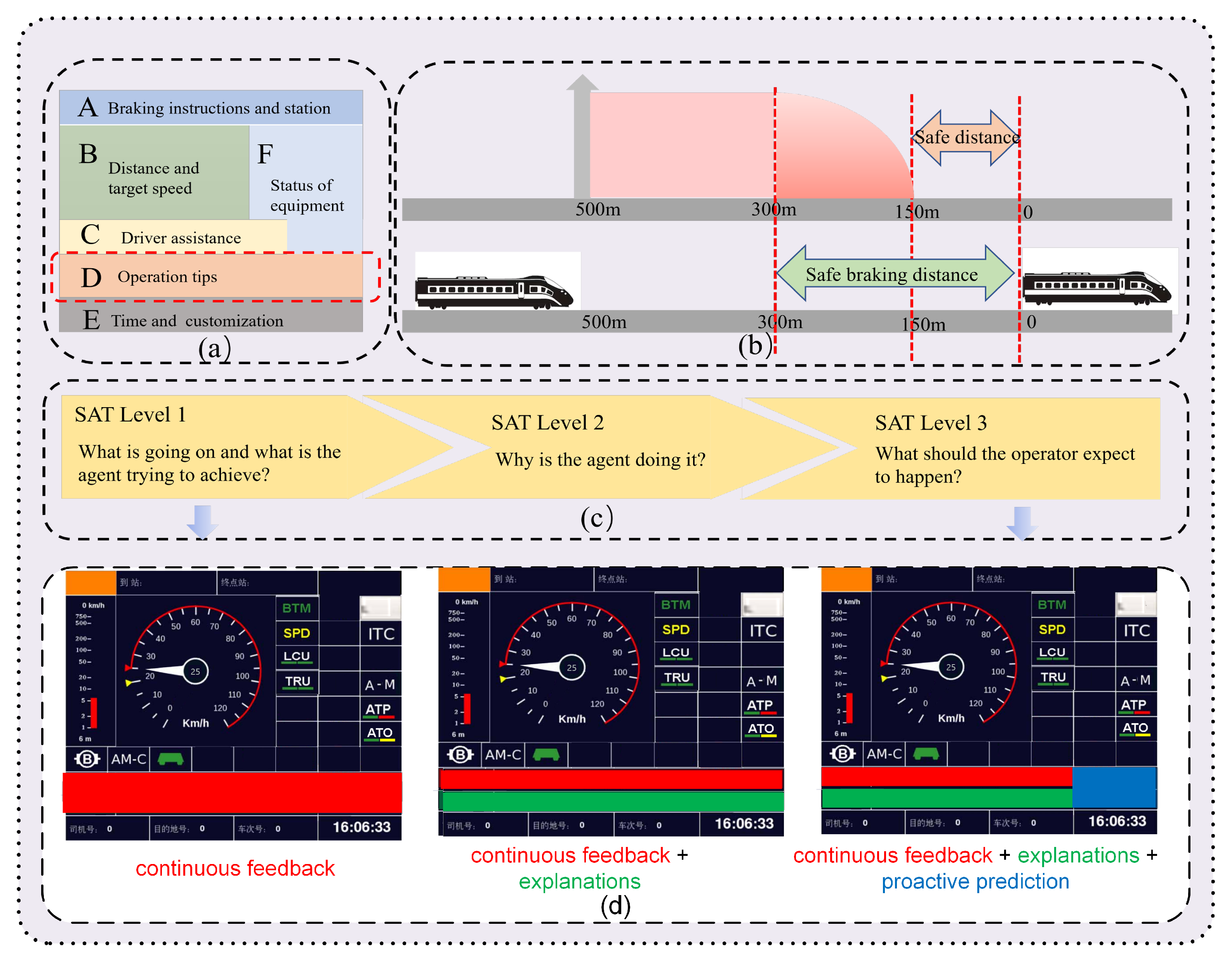

2.3. Interface Design

2.4. Scenario

2.5. Measurement

2.5.1. Situational Awareness Global Assessment Technique

2.5.2. Operational Performance Indicators

- Common brake task response time: the time between the system issuing the deceleration command and the participant pulling the handle backward by more than 10% [67];

- Emergency brake response time: the time between the system issuing the stopping command and the participant pressing the brake button;

- Unexpected brake response time: the time from when a foreign object appears in the tunnel to when the participant presses the brake button.
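The three response-time indicators above can be illustrated with a minimal sketch. It assumes a hypothetical log format (timestamps in seconds, handle displacement expressed as a fraction of full backward travel); the function names and the interpretation of "10%" as a fraction of handle travel are assumptions, not details taken from the experiment software.

```python
from typing import Optional, Sequence, Tuple

# Assumption: "more than 10%" refers to backward handle displacement
# as a fraction of full travel (0.0 = neutral, 1.0 = fully pulled).
HANDLE_THRESHOLD = 0.10


def common_brake_response_time(
    command_time: float,
    handle_samples: Sequence[Tuple[float, float]],
    threshold: float = HANDLE_THRESHOLD,
) -> Optional[float]:
    """Time from the deceleration command to the first handle sample
    whose backward displacement exceeds the threshold.

    handle_samples holds (timestamp_s, displacement_fraction) pairs in
    chronological order. Returns None if the threshold is never crossed.
    """
    for timestamp, displacement in handle_samples:
        if timestamp >= command_time and displacement > threshold:
            return timestamp - command_time
    return None


def button_response_time(
    onset_time: float, press_times: Sequence[float]
) -> Optional[float]:
    """Time from event onset (stopping command or foreign object
    appearing) to the first brake-button press at or after onset."""
    later_presses = [t for t in press_times if t >= onset_time]
    return min(later_presses) - onset_time if later_presses else None


# Hypothetical example data.
samples = [(10.0, 0.00), (10.5, 0.05), (11.2, 0.12), (11.5, 0.40)]
common_rt = common_brake_response_time(10.0, samples)
emergency_rt = button_response_time(20.0, [18.0, 21.5, 22.0])
```

The same button-based function covers both the emergency and the unexpected brake indicators, since they differ only in what defines the onset time.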

2.5.3. Questionnaires

- Fatigue levels were measured using the Karolinska Sleepiness Scale (KSS), a nine-point Likert scale ranging from 1 (very alert) to 9 (very sleepy). Scores below 3 indicate a state of alertness, while scores above 7 indicate extreme drowsiness [68];

- Workload was measured using the NASA Task Load Index (NASA-TLX), requiring participants to rate their workloads across the dimensions of mental demand, physical demand, temporal demand, performance, effort, and frustration [69];

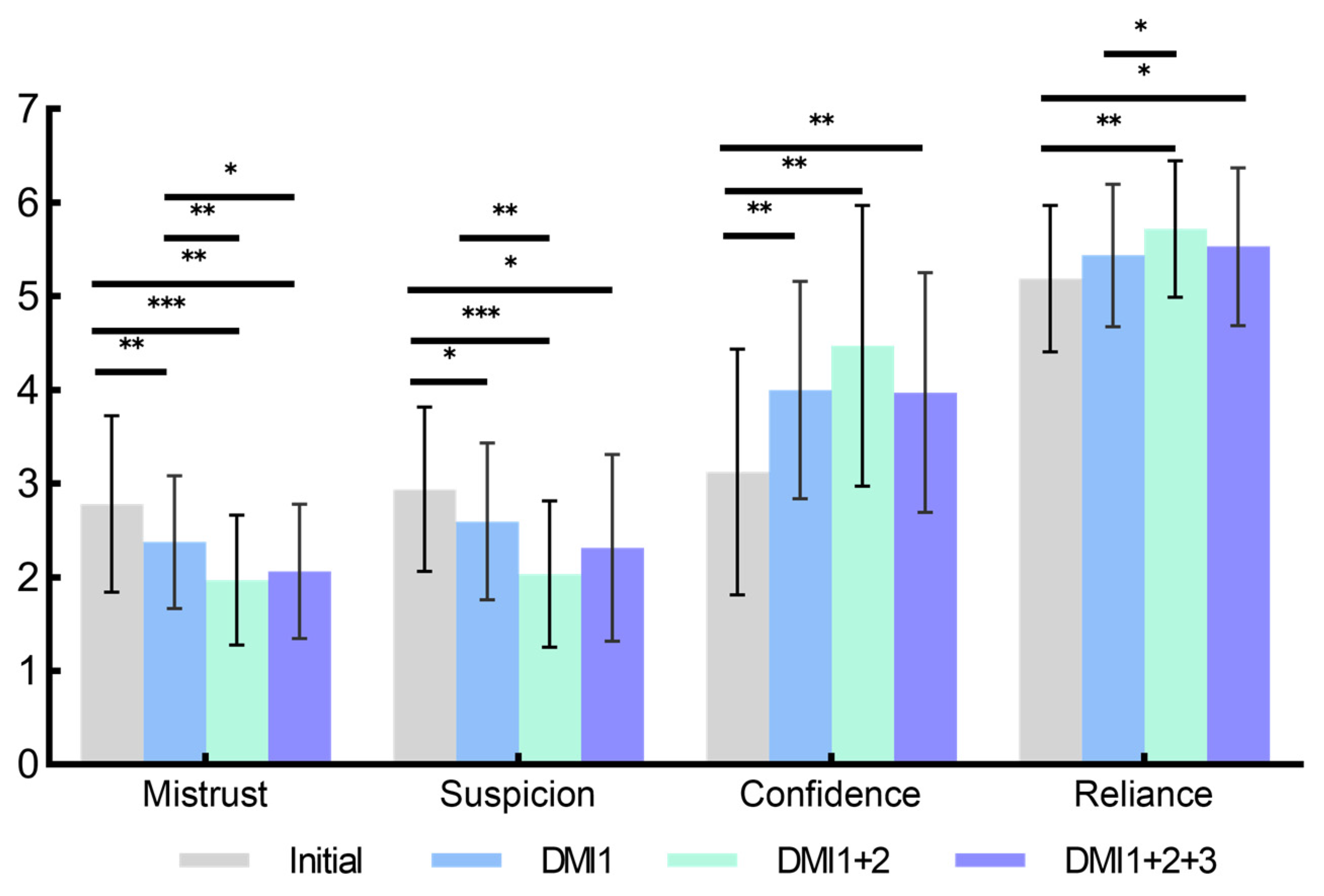

- TiA was assessed using a seven-point Likert scale questionnaire based on the study of Jian et al. [70], encompassing four trust-related items: mistrust (“the system behaves in an underhanded manner”), suspicion (“I am suspicious of the system’s intended action or outputs”), confidence (“I am confident in the system”), and reliance (“the system is reliable”);

- SoA was evaluated using a seven-point Likert scale questionnaire designed to assess the perceived degree of control during the task [71];

- Acceptance was assessed using a seven-point Likert scale questionnaire comprising nine items: (1) useful to useless, (2) pleasant to unpleasant, (3) bad to good, (4) nice to annoying, (5) effective to redundant, (6) stimulating to displeasing, (7) aiding to no help, (8) unwelcome to welcome, and (9) enhancing vigilance to sleep-inducing. Scores for items 1, 2, 4, 5, 7, and 9 were reversed during calculation [72];

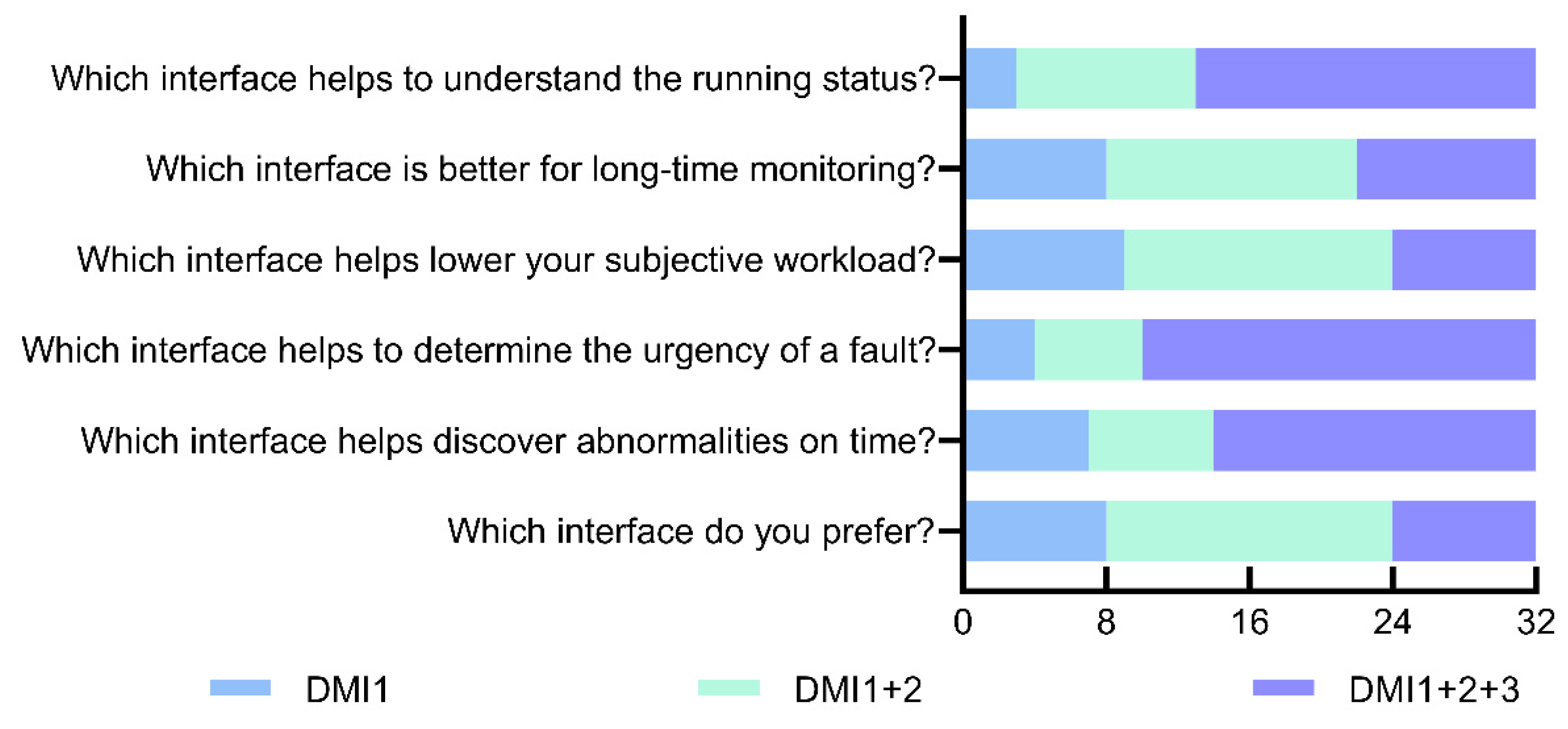

- Interface preference was collected using a questionnaire based on the study of Wu et al. [73] and encompassed six aspects: preference for overall design; timeliness of alarm detection; judgment of alarm urgency; workload introduced by judging alarm levels; support for prolonged monitoring; and support for parameter trend perception. Participants ranked all DMIs in order of preference based on the questionnaire after completing all tests.
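Two of the scoring rules described above can be sketched in code. The sketch assumes that acceptance items are coded 1 to 7, that a reversed item is scored as 8 minus the rating, and that the overall acceptance score is the mean of the nine adjusted items; the text specifies only which items are reversed, so the coding and aggregation are assumptions. The KSS labels follow the thresholds stated above, and the "intermediate" label is a placeholder for the unlabelled middle range.

```python
from typing import Sequence

# Items reversed during calculation, as listed in the text.
REVERSED_ITEMS = {1, 2, 4, 5, 7, 9}


def acceptance_score(ratings: Sequence[int]) -> float:
    """Mean acceptance over nine seven-point items after reversal.

    Assumption: ratings are coded 1..7 and reversal maps r to 8 - r.
    """
    if len(ratings) != 9:
        raise ValueError("expected nine item ratings")
    if any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("ratings must lie between 1 and 7")
    adjusted = [
        8 - r if item in REVERSED_ITEMS else r
        for item, r in enumerate(ratings, start=1)
    ]
    return sum(adjusted) / len(adjusted)


def kss_state(score: int) -> str:
    """Classify a Karolinska Sleepiness Scale score (1..9)."""
    if not 1 <= score <= 9:
        raise ValueError("KSS scores range from 1 to 9")
    if score < 3:
        return "alert"
    if score > 7:
        return "extremely drowsy"
    return "intermediate"  # placeholder label, not from the text
```

With this coding, a participant who gives the most favourable answer on every item (1 on reversed items, 7 on the others) receives the maximum score of 7.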

2.5.4. Visual Behavior Indicators

2.6. Procedure

- Information gathering: Upon arrival, participants were provided with an informed consent form for the experiment. They then filled out basic demographic information, including age, gender, and visual acuity. They also completed a questionnaire to report their experiences with daily driving tasks and their perceptions of the initial TiA and SoA based on the baseline DMI design;

- Training: Participants received training to ensure their familiarity with the operation of the simulated driving platform and the practical tasks of the experiment, and they were subsequently tested to assess their proficiency;

- Fatigue confirmation and eye tracker calibration: Before the experiment began, participants completed the KSS questionnaire to confirm that their fatigue score was below 3. We then helped them calibrate the eye tracker using a five-point method;

- Experimental test: Participants engaged in tasks based on the GoA-2 mode that involved both supervising automated driving and performing manual tasks. During each driving test, participants completed two SAGAT measurements and encountered five non-routine events. SAGAT queries and non-routine events were randomly ordered to avoid memory effects, and participants were not informed of their frequency. The experimental tasks were not interrupted even if participants did not answer SAGAT questions or failed to handle a fault correctly. A railway training expert remotely monitored and evaluated compliance with operating procedures;

- Single questionnaire and intergroup breaks: After each trial, participants filled out the NASA-TLX, TiA, SoA, and acceptance questionnaires. They were then given at least one hour of rest so that they could recover and their subsequent KSS score was again below 3, maintaining adequate alertness for the next phase of the experiment;

- Post-experiment interview: After completing three trials, participants underwent semi-structured interviews in conjunction with the interface preference questionnaire to gather feedback on their actual operational experience and interface preferences. These interviews provided insights into their subjective perceptions and preferences.
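The fatigue gate and the random ordering of probes and events in the procedure above could be implemented along the following lines. This is a sketch only: the slot labels are placeholders (the five non-routine events are not named here), and the seeding scheme is an assumption introduced so that a schedule can be reproduced.

```python
import random
from typing import List, Optional

KSS_START_LIMIT = 3  # participants start only with a KSS score below 3


def ready_to_start(kss_score: int) -> bool:
    """Fatigue gate applied before each trial."""
    return kss_score < KSS_START_LIMIT


def build_schedule(seed: Optional[int] = None) -> List[str]:
    """Randomly order two SAGAT probes and five non-routine events.

    Labels are hypothetical placeholders; a fixed seed reproduces the
    same order, which helps when auditing a session afterwards.
    """
    rng = random.Random(seed)
    slots = ["SAGAT probe"] * 2 + [
        f"non-routine event {i}" for i in range(1, 6)
    ]
    rng.shuffle(slots)
    return slots


schedule = build_schedule(seed=1)
```

Using a dedicated `random.Random` instance keeps the schedule independent of any other random draws in the experiment software.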

3. Results

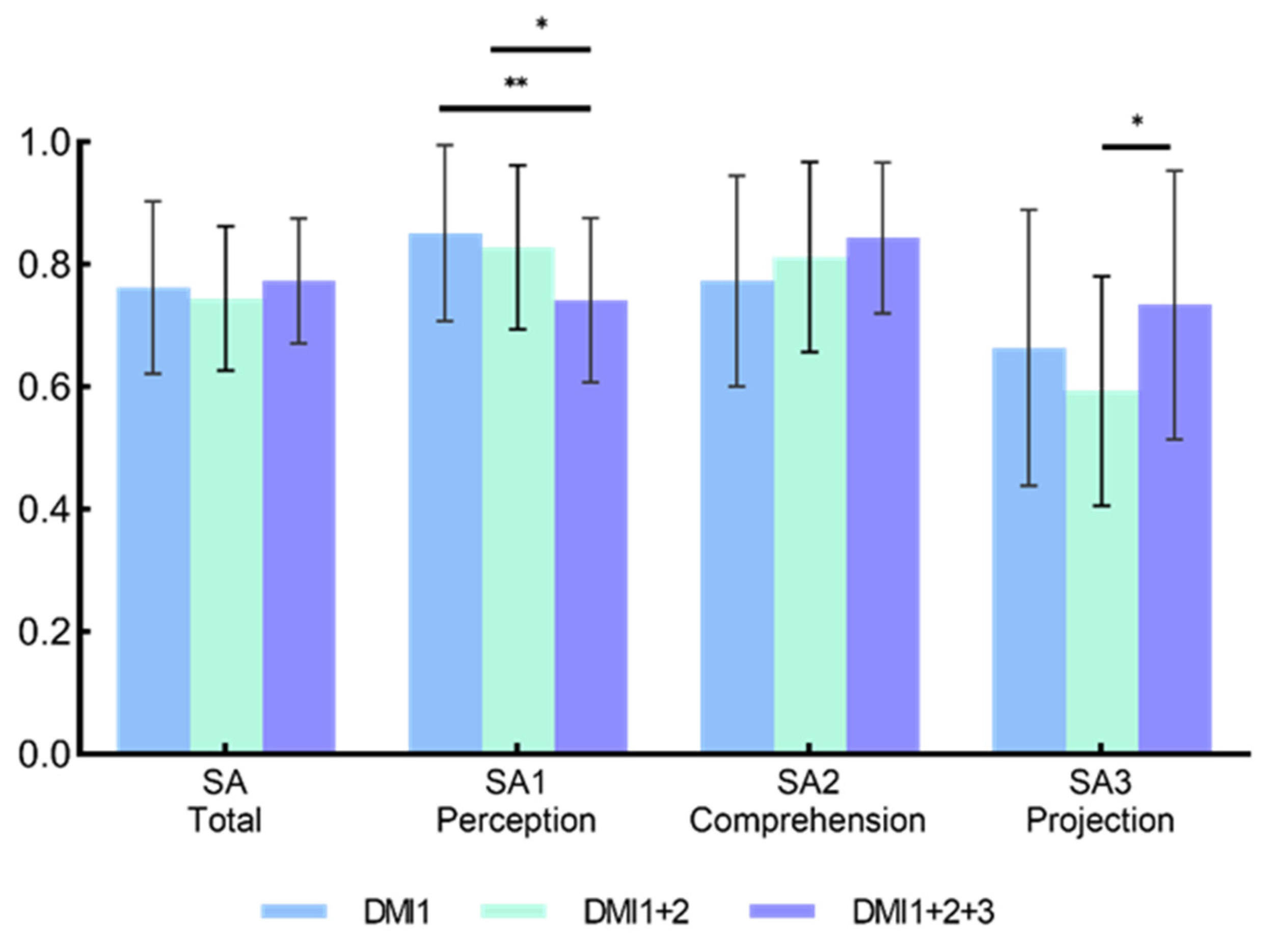

3.1. Situational Awareness

3.2. Operational Performance

3.3. Questionnaire Results

3.3.1. Trust in Automation

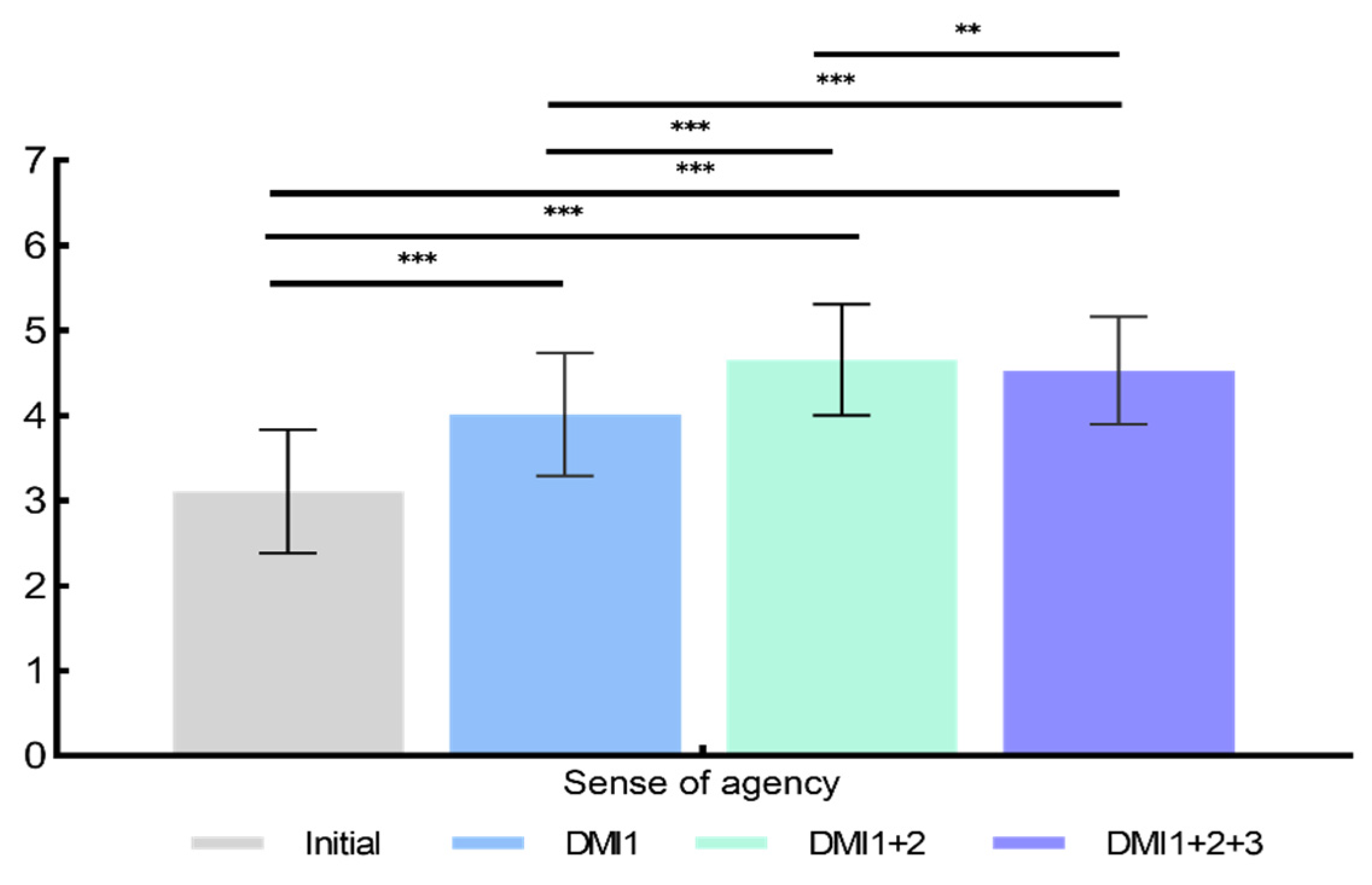

3.3.2. Sense of Agency

3.3.3. Acceptance

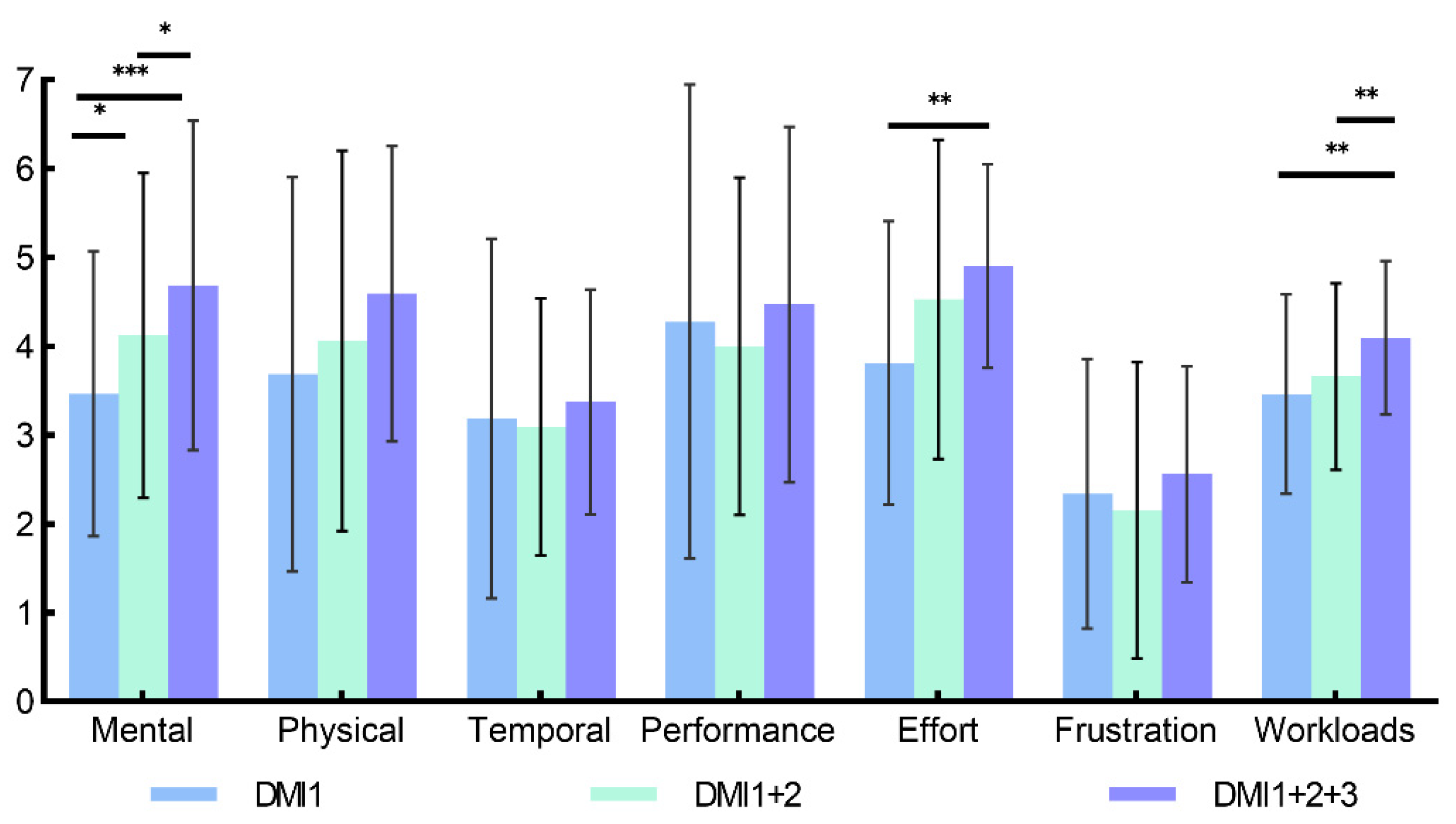

3.3.4. NASA-TLX

3.3.5. Interface Preferences

3.4. Visual Behavior

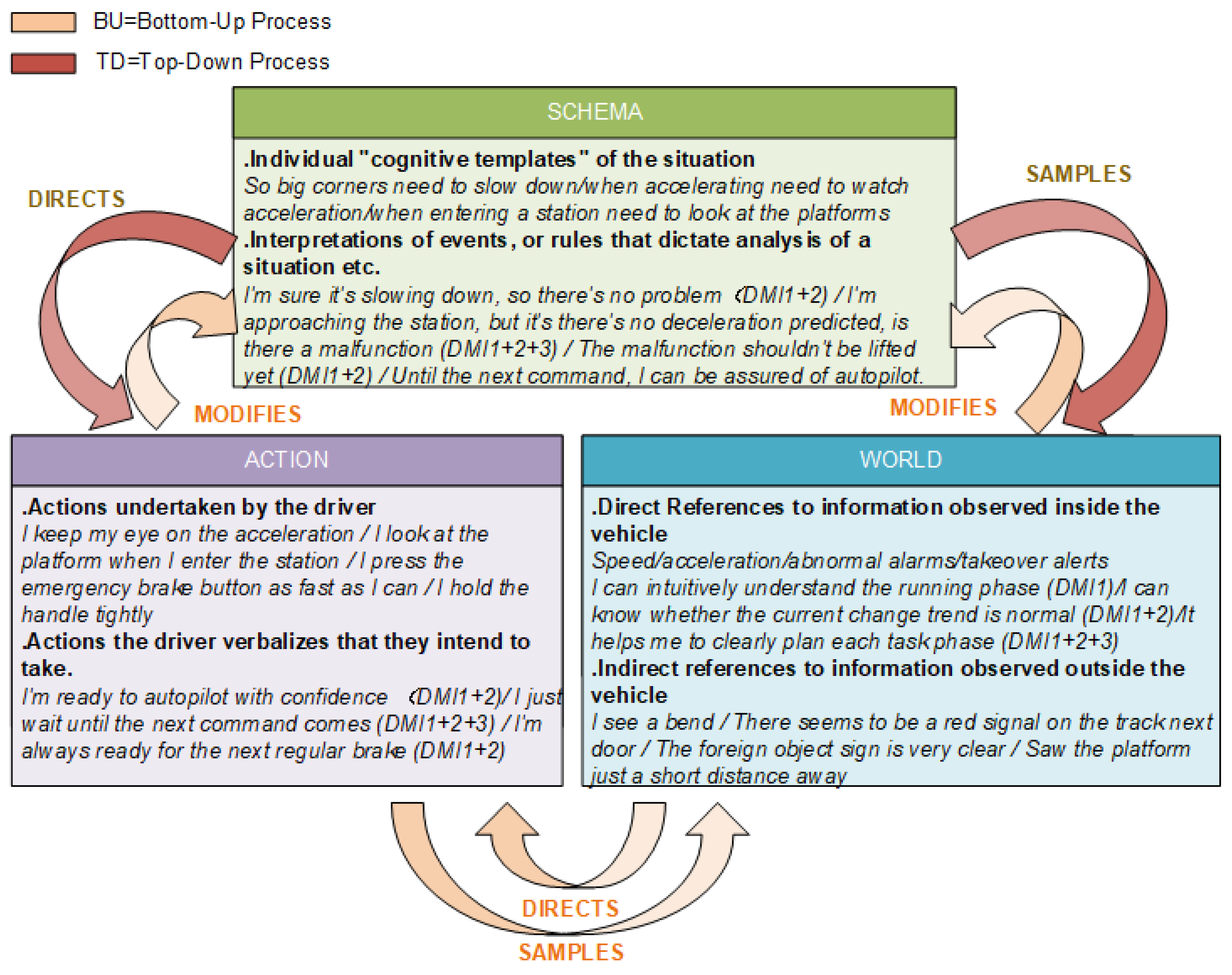

4. Discussion

4.1. Benefits of Appropriate Transparency

4.2. Advantages of High Transparency

4.3. Limitations of High Transparency

- Excessive and non-intuitive perceptual elements: Current designs include multiple elements—such as speed, distance, and time—that require the driver to switch cognitive focus and perform additional calculations, thus hindering the efficiency of higher-level SA. One possible way to solve this problem is to choose generic perceptual element types and show the deviation between the current condition and the planned condition [110].

- Continuous, indiscriminate information input leading to cognitive conflict: Some elements in the high-transparency design, such as station distance and track junction position, can be intuitively observed by the driver and do not require predictive information. Displaying all information simultaneously leads to sensory overload, limiting the effective generation of SA [111]. To address this shortcoming, goal-directed task analysis can be used to mitigate cognitive overload and enhance SA generation by prioritizing and filtering information based on the importance of the task [112].
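The two remedies suggested above, showing deviations from the plan and filtering elements by task importance, can be illustrated with a small sketch. Everything in it is hypothetical: the element names, the importance ranks, and the rule of hiding directly observable elements are illustrative assumptions rather than the interface logic evaluated in this study.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class DisplayElement:
    name: str
    task_importance: int       # hypothetical rank, higher = more important
    directly_observable: bool  # driver can see it without the display


def deviation(current: float, planned: float) -> float:
    """Signed deviation of the current value from the planned value."""
    return current - planned


def select_elements(
    elements: Sequence[DisplayElement], max_shown: int
) -> List[str]:
    """Drop directly observable elements, then keep the most important."""
    candidates = [e for e in elements if not e.directly_observable]
    ranked = sorted(candidates, key=lambda e: e.task_importance, reverse=True)
    return [e.name for e in ranked[:max_shown]]


# Hypothetical element set for one driving phase.
elements = [
    DisplayElement("station distance", 2, True),
    DisplayElement("track junction position", 1, True),
    DisplayElement("speed deviation", 3, False),
    DisplayElement("arrival time deviation", 2, False),
    DisplayElement("energy use", 1, False),
]
shown = select_elements(elements, max_shown=2)
speed_deviation = deviation(current=62.0, planned=60.0)
```

In a goal-directed task analysis, the importance ranks would come from the information requirements identified for each driver goal rather than from fixed constants.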

4.4. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Moccia, L.; Allen, D.W.; Laporte, G.; Spinosa, A. Mode boundaries of automated metro and semi-rapid rail in urban transit. Public Transp. 2022, 14, 739–802.

- Su, S.; Wang, X.; Cao, Y.; Yin, J. An Energy-Efficient Train Operation Approach by Integrating the Metro Timetabling and Eco-Driving. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4252–4268.

- Diab, E.; Shalaby, A. Metro transit system resilience: Understanding the impacts of outdoor tracks and weather conditions on metro system interruptions. Int. J. Sustain. Transp. 2020, 14, 657–670.

- Yuan, W.; Frey, H.C. Potential for metro rail energy savings and emissions reduction via eco-driving. Appl. Energy 2020, 268, 114944.

- Bai, Y.; Cao, Y.; Yu, Z.; Ho, T.K.; Roberts, C.; Mao, B. Cooperative Control of Metro Trains to Minimize Net Energy Consumption. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2063–2077.

- Song, H.; Liu, J.; Schnieder, E. Validation, verification and evaluation of a Train to Train Distance Measurement System by means of Colored Petri Nets. Reliab. Eng. Syst. Saf. 2017, 164, 10–23.

- Hasanzadeh, S.; Zarei, S.F.; Najafi, E. A Train Scheduling for Energy Optimization: Tehran Metro System as a Case Study. IEEE Trans. Intell. Transp. Syst. 2023, 24, 357–366.

- Amendola, A.; Barbareschi, M.; De Simone, S.; Mezzina, G.; Moriconi, A.; Saragaglia, C.L.; Serra, D.; De Venuto, D. A real-time vital control module to increase capabilities of railway control systems in highly automated train operations. Real-Time Syst. 2023, 59, 636–661.

- Carvajal-Carreño, W.; Cucala, A.P.; Fernández-Cardador, A. Fuzzy train tracking algorithm for the energy efficient operation of CBTC equipped metro lines. Eng. Appl. Artif. Intell. 2016, 53, 19–31.

- Rjabovs, A.; Palacin, R. The influence of system design-related factors on the safety performance of metro drivers. Proc. Inst. Mech. Eng. Part F J. Rail Rapid Transit 2016, 231, 317–328.

- Niu, K.; Fang, W.; Song, Q.; Guo, B.; Du, Y.; Chen, Y. An Evaluation Method for Emergency Procedures in Automatic Metro Based on Complexity. IEEE Trans. Intell. Transp. Syst. 2021, 22, 370–383.

- Kim, H. Trustworthiness of unmanned automated subway services and its effects on passengers’ anxiety and fear. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 158–175.

- Zhao, J.; Yang, F.; Guo, Y.; Ren, X. A CAST-Based Analysis of the Metro Accident That Was Triggered by the Zhengzhou Heavy Rainstorm Disaster. Int. J. Environ. Res. Public Health 2022, 19, 10696.

- Hyde, P.; Ulianov, C.; Liu, J.; Banic, M.; Simonovic, M.; Ristic-Durrant, D. Use cases for obstacle detection and track intrusion detection systems in the context of new generation of railway traffic management systems. Proc. Inst. Mech. Eng. Part F J. Rail Rapid Transit 2022, 236, 149–158.

- Wang, A.; Guo, B.; Du, H.; Bao, H. Impact of Automation at Different Cognitive Stages on High-Speed Train Driving Performance. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24599–24608.

- Naweed, A.; Balakrishnan, G. Understanding the visual skills and strategies of train drivers in the urban rail environment. Work 2014, 47, 339–352.

- Jansson, A.; Olsson, E.; Kecklund, L. Acting or reacting? A cognitive work analysis approach to the train driver task. In Rail Human Factors; Routledge: London, UK, 2017; pp. 40–49.

- Andreasson, R.; Jansson, A.A.; Lindblom, J. The coordination between train traffic controllers and train drivers: A distributed cognition perspective on railway. Cogn. Technol. Work 2019, 21, 417–443.

- Wohleber, R.W.; Stowers, K.; Barnes, M.; Chen, J.Y.C. Agent transparency in mixed-initiative multi-UxV control: How should intelligent agent collaborators speak their minds? Comput. Hum. Behav. 2023, 148, 107866.

- Carsten, O.; Martens, M.H. How can humans understand their automated cars? HMI principles, problems and solutions. Cogn. Technol. Work 2019, 21, 3–20.

- Wohleber, R.W.; Calhoun, G.L.; Funke, G.J.; Ruff, H.; Chiu, C.Y.P.; Lin, J.; Matthews, G. The Impact of Automation Reliability and Operator Fatigue on Performance and Reliance. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 211–215.

- Pokam, R.; Debernard, S.; Chauvin, C.; Langlois, S. Principles of transparency for autonomous vehicles: First results of an experiment with an augmented reality human–machine interface. Cogn. Technol. Work 2019, 21, 643–656.

- Vössing, M.; Kühl, N.; Lind, M.; Satzger, G. Designing Transparency for Effective Human-AI Collaboration. Inf. Syst. Front. 2022, 24, 877–895.

- Naweed, A. Investigations into the skills of modern and traditional train driving. Appl. Ergon. 2014, 45, 462–470.

- van de Merwe, K.; Mallam, S.; Nazir, S. Agent Transparency, Situation Awareness, Mental Workload, and Operator Performance: A Systematic Literature Review. Hum. Factors 2022, 66, 180–208.

- Ososky, S.; Sanders, T.; Jentsch, F.; Hancock, P.; Chen, J.Y.C. Determinants of system transparency and its influence on trust in and reliance on unmanned robotic systems. In Unmanned Systems Technology XVI; SPIE: Bellingham, WA, USA, 2014; p. 90840E.

- Chen, J.Y.C.; Lakhmani, S.G.; Stowers, K.; Selkowitz, A.R.; Wright, J.L.; Barnes, M. Situation awareness-based agent transparency and human-autonomy teaming effectiveness. Theor. Issues Ergon. Sci. 2018, 19, 259–282.

- Bhaskara, A.; Duong, L.; Brooks, J.; Li, R.; McInerney, R.; Skinner, M.; Pongracic, H.; Loft, S. Effect of automation transparency in the management of multiple unmanned vehicles. Appl. Ergon. 2021, 90, 103243.

- Roth, G.; Schulte, A.; Schmitt, F.; Brand, Y. Transparency for a Workload-Adaptive Cognitive Agent in a Manned–Unmanned Teaming Application. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 225–233.

- Skraaning, G.; Jamieson, G.A. Human Performance Benefits of The Automation Transparency Design Principle: Validation and Variation. Hum. Factors 2019, 63, 379–401.

- Guznov, S.; Lyons, J.; Pfahler, M.; Heironimus, A.; Woolley, M.; Friedman, J.; Neimeier, A. Robot Transparency and Team Orientation Effects on Human–Robot Teaming. Int. J. Hum. Comput. Interact. 2020, 36, 650–660.

- Wright, J.L.; Chen, J.Y.C.; Barnes, M.J.; Hancock, P.A. The Effect of Agent Reasoning Transparency on Complacent Behavior: An Analysis of Eye Movements and Response Performance. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2017, 61, 1594–1598.

- Wright, J.L.; Chen, J.Y.C.; Lakhmani, S.G. Agent Transparency and Reliability in Human–Robot Interaction: The Influence on User Confidence and Perceived Reliability. IEEE Trans. Hum. Mach. Syst. 2020, 50, 254–263.

- Cohen-Lazry, G.; Borowsky, A.; Oron-Gilad, T. The impact of auditory continual feedback on take-overs in Level 3 automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2020, 75, 145–159.

- Tinga, A.M.; van Zeumeren, I.M.; Christoph, M.; van Grondelle, E.; Cleij, D.; Aldea, A.; van Nes, N. Development and evaluation of a human machine interface to support mode awareness in different automated driving modes. Transp. Res. Part F Traffic Psychol. Behav. 2023, 92, 238–254.

- Wohleber, R.W.; Stowers, K.; Chen, J.Y.C.; Barnes, M. Effects of agent transparency and communication framing on human-agent teaming. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 3427–3432.

- Sonoda, K.; Wada, T. Displaying System Situation Awareness Increases Driver Trust in Automated Driving. IEEE Trans. Intell. Veh. 2017, 2, 185–193.

- Jansson, A.; Olsson, E.; Erlandsson, M. Bridging the gap between analysis and design: Improving existing driver interfaces with tools from the framework of cognitive work analysis. Cogn. Technol. Work 2006, 8, 41–49.

- Körber, M.; Prasch, L.; Bengler, K. Why Do I Have to Drive Now? Post Hoc Explanations of Takeover Requests. Hum. Factors 2017, 60, 305–323.

- Koo, J.; Kwac, J.; Ju, W.; Steinert, M.; Leifer, L.; Nass, C. Why did my car just do that? Explaining semi-autonomous driving actions to improve driver understanding, trust, and performance. Int. J. Interact. Des. Manuf. (IJIDeM) 2015, 9, 269–275.

- Tinga, A.M.; Cleij, D.; Jansen, R.J.; van der Kint, S.; van Nes, N. Human machine interface design for continuous support of mode awareness during automated driving: An online simulation. Transp. Res. Part F Traffic Psychol. Behav. 2022, 87, 102–119.

- Ruijten, P.A.M.; Terken, J.M.B.; Chandramouli, S.N. Enhancing Trust in Autonomous Vehicles through Intelligent User Interfaces That Mimic Human Behavior. Multimodal Technol. Interact. 2018, 2, 62.

- Liu, W.; Li, Q.; Wang, Z.; Wang, W.; Zeng, C.; Cheng, B. A Literature Review on Additional Semantic Information Conveyed from Driving Automation Systems to Drivers through Advanced In-Vehicle HMI Just Before, During, and Right After Takeover Request. Int. J. Hum. Comput. Interact. 2023, 39, 1995–2015.

- Lu, Z.; Zhang, B.; Feldhütter, A.; Happee, R.; Martens, M.; De Winter, J.C.F. Beyond mere take-over requests: The effects of monitoring requests on driver attention, take-over performance, and acceptance. Transp. Res. Part F Traffic Psychol. Behav. 2019, 63, 22–37.

- Pipkorn, L.; Tivesten, E.; Dozza, M. It’s about time! Earlier take-over requests in automated driving enable safer responses to conflicts. Transp. Res. Part F Traffic Psychol. Behav. 2022, 86, 196–209.

- Marti, P.; Jallais, C.; Koustanaï, A.; Guillaume, A.; Mars, F. Impact of the driver’s visual engagement on situation awareness and takeover quality. Transp. Res. Part F Traffic Psychol. Behav. 2022, 87, 391–402.

- Chen, K.-T.; Chen, H.-Y.W.; Bisantz, A. Adding visual contextual information to continuous sonification feedback about low-reliability situations in conditionally automated driving: A driving simulator study. Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 25–41.

- Ou, Y.-K.; Huang, W.-X.; Fang, C.-W. Effects of different takeover request interfaces on takeover behavior and performance during conditionally automated driving. Accid. Anal. Prev. 2021, 162, 106425.

- Gold, C.; Damböck, D.; Lorenz, L.; Bengler, K. “Take over!” How long does it take to get the driver back into the loop? Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2013, 57, 1938–1942.

- Stjernström, R.; Borälv, E.; Olsson, E.; Sandblad, B. User-Centred Design of a Train Driver Display; Department of Information Technology, Uppsala University: Uppsala, Sweden, 2001.

- Panou, K.; Tzieropoulos, P.; Emery, D. Railway driver advice systems: Evaluation of methods, tools and systems. J. Rail Transp. Plan. Manag. 2013, 3, 150–162.

- Saeed, T.U.; Alabi, B.N.T.; Labi, S. Preparing Road Infrastructure to Accommodate Connected and Automated Vehicles: System-Level Perspective. J. Infrastruct. Syst. 2021, 27, 06020003.

- He, R.; Ai, B.; Zhong, Z.; Yang, M.; Chen, R.; Ding, J.; Ma, Z.; Sun, G.; Liu, C. 5G for Railways: Next Generation Railway Dedicated Communications. IEEE Commun. Mag. 2022, 60, 130–136.

- Getty, D.J.; Swets, J.A.; Pickett, R.M.; Gonthier, D. System Operator Response to Warnings of Danger: A Laboratory Investigation of the Effects of the Predictive Value of a Warning on Human Response Time. J. Exp. Psychol. Appl. 1995, 1, 19–33.

- Tijerina, L.; Blommer, M.; Curry, R.; Swaminathan, R.; Kochhar, D.S.; Talamonti, W. An Exploratory Study of Driver Response to Reduced System Confidence Notifications in Automated Driving. IEEE Trans. Intell. Veh. 2016, 1, 325–334.

- Wang, S.; Liu, Y.; Li, S.; Liu, Z.; You, X.; Li, Y. The effect of two-stage warning system on human performance along with different takeover strategies. Int. J. Ind. Ergon. 2023, 97, 103492.

- Wright, J.L.; Chen, J.Y.C.; Barnes, M.J.; Hancock, P.A. Agent Reasoning Transparency’s Effect on Operator Workload. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 249–253.

- Lyons, J.B.; Havig, P.R. Transparency in a Human-Machine Context: Approaches for Fostering Shared Awareness/Intent. In Proceedings of the Virtual, Augmented and Mixed Reality. Designing and Developing Virtual and Augmented Environments, Heraklion, Greece, 22–27 June 2014; Shumaker, R., Lackey, S., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 181–190.

- Tausch, A.; Kluge, A. The best task allocation process is to decide on one’s own: Effects of the allocation agent in human–robot interaction on perceived work characteristics and satisfaction. Cogn. Technol. Work 2022, 24, 39–55.

- Dunn, N.; Williamson, A. Driving monotonous routes in a train simulator: The effect of task demand on driving performance and subjective experience. Ergonomics 2012, 55, 997–1008.

- Olsson, N. A validation study comparing performance in a low-fidelity train-driving simulator with actual train driving performance. Transp. Res. Part F Traffic Psychol. Behav. 2023, 97, 109–122.

- Zhang, W.; Wang, C.; Chen, N. Design and implementation of on-board human-machine interface for CBTC system. Railw. Signal. Commun. 2016, 52, 80–82.

- Wang, Y.; Sheng, K.; Niu, P.; Chu, C.; Li, M.; Jia, L. A comprehensive analysis method of urban rail transit operation accidents and safety management strategies based on text big data. Saf. Sci. 2024, 172, 106400.

- Endsley, M.R.; Selcon, S.J.; Hardiman, T.D.; Croft, D.G. A Comparative Analysis of Sagat and Sart for Evaluations of Situation Awareness. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 1998, 42, 82–86.

- Golightly, D.; Ryan, B.; Dadashi, N.; Pickup, L.; Wilson, J.R. Use of scenarios and function analyses to understand the impact of situation awareness on safe and effective work on rail tracks. Saf. Sci. 2013, 56, 52–62.

- Rose, J.; Bearman, C.; Naweed, A.; Dorrian, J. Proceed with caution: Using verbal protocol analysis to measure situation awareness. Ergonomics 2019, 62, 115–127.

- Zhou, H.; Kamijo, K.; Itoh, M.; Kitazaki, S. Effects of explanation-based knowledge regarding system functions and driver’s roles on driver takeover during conditionally automated driving: A test track study. Transp. Res. Part F Traffic Psychol. Behav. 2021, 77, 1–9.

- Åkerstedt, T.; Gillberg, M. Subjective and Objective Sleepiness in the Active Individual. Int. J. Neurosci. 1990, 52, 29–37.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

- Jian, J.-Y.; Bisantz, A.M.; Drury, C.G. Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 2000, 4, 53–71.

- Haggard, P.; Tsakiris, M. The Experience of Agency: Feelings, Judgments, and Responsibility. Curr. Dir. Psychol. Sci. 2009, 18, 242–246.

- Van Der Laan, J.D.; Heino, A.; De Waard, D. A simple procedure for the assessment of acceptance of advanced transport telematics. Transp. Res. Part C Emerg. Technol. 1997, 5, 1–10.

- Wu, X.; Yuan, X.; Li, Z.; Song, F.; Sang, W. Validation of “alarm bar” alternative interface for digital control panel design: A preliminary experimental study. Int. J. Ind. Ergon. 2016, 51, 43–51.

- Kim, S.; van Egmond, R.; Happee, R. How manoeuvre information via auditory (spatial and beep) and visual UI can enhance trust and acceptance in automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2024, 100, 22–36.

- Fu, R.; Liu, W.; Zhang, H.; Liu, X.; Yuan, W. Adopting an HMI for overtaking assistance—Impact of distance display, advice, and guidance information on driver gaze and performance. Accid. Anal. Prev. 2023, 191, 107204.

- Rjabovs, A.; Palacin, R. Investigation into Effects of System Design on Metro Drivers’ Safety-Related Performance: An Eye-Tracking Study. Urban Rail Transit 2019, 5, 267–277.

- Koo, B.-Y.; Jang, M.-H.; Kim, Y.-C.; Mah, K.-C. Changes in the subjective fatigue and pupil diameters induced by watching LED TVs. Optik 2018, 164, 701–710.

- McConkie, G.W.; Rayner, K. The span of the effective stimulus during a fixation in reading. Percept. Psychophys. 1975, 17, 578–586.

- Jap, B.T.; Lal, S.; Fischer, P. Comparing combinations of EEG activity in train drivers during monotonous driving. Expert Syst. Appl. 2011, 38, 996–1003.

- Ma, R.H.Y.; Morris, A.; Herriotts, P.; Birrell, S. Investigating what level of visual information inspires trust in a user of a highly automated vehicle. Appl. Ergon. 2021, 90, 103272.

- Taylor, S.; Wang, M.; Jeon, M. Reliable and transparent in-vehicle agents lead to higher behavioral trust in conditionally automated driving systems. Front. Psychol. 2023, 14, 1121622.

- Hartwich, F.; Witzlack, C.; Beggiato, M.; Krems, J.F. The first impression counts—A combined driving simulator and test track study on the development of trust and acceptance of highly automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 522–535.

- Gegoff, I.; Tatasciore, M.; Bowden, V.; McCarley, J.; Loft, S. Transparent Automated Advice to Mitigate the Impact of Variation in Automation Reliability. Hum. Factors 2023, 66, 2008–2024.

- Zanatto, D.; Bifani, S.; Noyes, J. Constraining the Sense of Agency in Human-Machine Interaction. Int. J. Hum. Comput. Interact. 2023, 40, 3482–3493.

- Byun, J.; Kim, H.-R.; Lee, H.-E.; Kim, S.-E.; Lee, J. Factors associated with suicide ideation among subway drivers in Korea. Ann. Occup. Environ. Med. 2016, 28, 31.

- Aylward, K.; Weber, R.; Lundh, M.; MacKinnon, S.N.; Dahlman, J. Navigators’ views of a collision avoidance decision support system for maritime navigation. J. Navig. 2022, 75, 1035–1048.

- Ma, S.; Zhang, W.; Yang, Z.; Kang, C.; Wu, C.; Chai, C.; Shi, J.; Zeng, Y.; Li, H. Take over Gradually in Conditional Automated Driving: The Effect of Two-stage Warning Systems on Situation Awareness, Driving Stress, Takeover Performance, and Acceptance. Int. J. Hum. Comput. Interact. 2021, 37, 352–362.

- Winkler, S.; Werneke, J.; Vollrath, M. Timing of early warning stages in a multi stage collision warning system: Drivers’ evaluation depending on situational influences. Transp. Res. Part F Traffic Psychol. Behav. 2016, 36, 57–68.

- Li, J.; Guo, J.; Wijnands, J.S.; Yu, R.; Xu, C.; Stevenson, M. Assessing injury severity of secondary incidents using support vector machines. J. Transp. Saf. Secur. 2022, 14, 197–216.

- Stanton, N.A.; Walker, G.H. Exploring the psychological factors involved in the Ladbroke Grove rail accident. Accid. Anal. Prev. 2011, 43, 1117–1127.

- Xu, L.; Guo, L.; Ge, P.; Wang, X. Effect of multiple monitoring requests on vigilance and readiness by measuring eye movement and takeover performance. Transp. Res. Part F Traffic Psychol. Behav. 2022, 91, 179–190.

- Callari, T.C.; Moody, L.; Mortimer, M.; Stefan, H.; Horan, B.; Birrell, S. “Braking bad”: The influence of haptic feedback and tram driver experience on emergency braking performance. Appl. Ergon. 2024, 116, 104206.

- Vantrepotte, Q.; Berberian, B.; Pagliari, M.; Chambon, V. Leveraging human agency to improve confidence and acceptability in human-machine interactions. Cognition 2022, 222, 105020.

- Jamieson, G.A.; Skraaning, G.; Joe, J. The B737 MAX 8 Accidents as Operational Experiences with Automation Transparency. IEEE Trans. Hum. Mach. Syst. 2022, 52, 794–797.

- Kraus, J.; Scholz, D.; Stiegemeier, D.; Baumann, M. The More You Know: Trust Dynamics and Calibration in Highly Automated Driving and the Effects of Take-Overs, System Malfunction, and System Transparency. Hum. Factors 2019, 62, 718–736.

- Körber, M.; Baseler, E.; Bengler, K. Introduction matters: Manipulating trust in automation and reliance in automated driving. Appl. Ergon. 2018, 66, 18–31.

- Crawford, J.R.; Goh, Y.M.; Hubbard, E.M. Generalised Anxiety Disorder and Depression on Implicit and Explicit Trust Tendencies Toward Automated Systems. IEEE Access 2021, 9, 68081–68092.

- Ha, T.; Kim, S.; Seo, D.; Lee, S. Effects of explanation types and perceived risk on trust in autonomous vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2020, 73, 271–280.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

- Ulahannan, A.; Thompson, S.; Jennings, P.; Birrell, S. Using Glance Behaviour to Inform the Design of Adaptive HMI for Partially Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4877–4892.

- Scerbo, M.W. Theoretical perspectives on adaptive automation. In Automation and Human Performance: Theory and Applications; CRC Press: Boca Raton, FL, USA, 2018; pp. 37–63.

- Tatasciore, M.; Bowden, V.; Loft, S. Do concurrent task demands impact the benefit of automation transparency? Appl. Ergon. 2023, 110, 104022.

- Vagia, M.; Transeth, A.A.; Fjerdingen, S.A. A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl. Ergon. 2016, 53, 190–202.

- Selkowitz, A.R.; Lakhmani, S.G.; Chen, J.Y.C. Using agent transparency to support situation awareness of the Autonomous Squad Member. Cogn. Syst. Res. 2017, 46, 13–25.

- van de Merwe, K.; Mallam, S.; Nazir, S.; Engelhardtsen, Ø. Supporting human supervision in autonomous collision avoidance through agent transparency. Saf. Sci. 2024, 169, 106329.

- Neisser, U. Cognition and Reality; W. H. Freeman: San Francisco, CA, USA, 1976.

- Plant, K.L.; Stanton, N.A. Why did the pilots shut down the wrong engine? Explaining errors in context using Schema Theory and the Perceptual Cycle Model. Saf. Sci. 2012, 50, 300–315.

- Plant, K.L.; Stanton, N.A. The process of processing: Exploring the validity of Neisser’s perceptual cycle model with accounts from critical decision-making in the cockpit. Ergonomics 2015, 58, 909–923.

- Revell, K.M.A.; Richardson, J.; Langdon, P.; Bradley, M.; Politis, I.; Thompson, S.; Skrypchuck, L.; O’Donoghue, J.; Mouzakitis, A.; Stanton, N.A. Breaking the cycle of frustration: Applying Neisser’s Perceptual Cycle Model to drivers of semi-autonomous vehicles. Appl. Ergon. 2020, 85, 103037.

- Sharma, A.; Nazir, S.; Ernstsen, J. Situation awareness information requirements for maritime navigation: A goal directed task analysis. Saf. Sci. 2019, 120, 745–752.

- Cohen, M.A.; Dennett, D.C.; Kanwisher, N. What is the bandwidth of perceptual experience? Trends Cogn. Sci. 2016, 20, 324–335.

- Endsley, M.R. A survey of situation awareness requirements in air-to-air combat fighters. Int. J. Aviat. Psychol. 1993, 3, 157–168.

- Yoshida, M.; Shimizu, E.; Sugomori, M.; Umeda, A. Regulatory Requirements on the Competence of Remote Operator in Maritime Autonomous Surface Ship: Situation Awareness, Ship Sense and Goal-Based Gap Analysis. Appl. Sci. 2020, 10, 8751.

| GoA Level | Driving Mode | Door Closure | Setting Train in Motion | Stopping Train | Operation in Case of Disruption |
|---|---|---|---|---|---|
| GoA-0 | Run on sight (ROS) | Driver | Driver | Driver | Driver |
| GoA-1 | Automatic train protection (ATP) | Driver | Driver | Driver | Driver |
| GoA-2 | Semi-automatic train operation (STO) | Driver | Automatic | Automatic | Driver |
| GoA-3 | Automatic train operation (ATO) | Attendant | Automatic | Automatic | Attendant |
| GoA-4 | Unattended train operation (UTO) | Automatic | Automatic | Automatic | Automatic |

| Operational Performance | DMI1 Mean | DMI1 SD | DMI1+2 Mean | DMI1+2 SD | DMI1+2+3 Mean | DMI1+2+3 SD |
|---|---|---|---|---|---|---|
| Common braking (s) | 1.66 | 0.35 | 1.68 | 0.40 | 1.10 | 0.32 |
| Emergency braking (s) | 1.51 | 0.43 | 1.43 | 0.42 | 0.77 | 0.30 |
| Unexpected braking (s) | 1.41 | 0.31 | 1.39 | 0.39 | 1.52 | 0.40 |
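As a reading aid for the response-time table above, the following minimal Python sketch expresses each mean under DMI1+2+3 as a percentage change relative to DMI1. The dictionary name `mean_response_s` and the helper `percent_change` are illustrative names introduced here, not part of the study. The figures are purely descriptive and say nothing about statistical significance.

```python
# Mean response times in seconds, copied from the table above.
# Tuple order: (DMI1, DMI1+2, DMI1+2+3).
mean_response_s = {
    "Common braking": (1.66, 1.68, 1.10),
    "Emergency braking": (1.51, 1.43, 0.77),
    "Unexpected braking": (1.41, 1.39, 1.52),
}


def percent_change(baseline: float, value: float) -> float:
    """Relative change of `value` with respect to `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


# Compare the highest transparency level (DMI1+2+3) with the baseline (DMI1).
change_vs_dmi1 = {
    task: round(percent_change(means[0], means[2]), 1)
    for task, means in mean_response_s.items()
}

for task, pct in change_vs_dmi1.items():
    print(f"{task}: {pct:+.1f}% relative to DMI1")
```

A negative value indicates a shorter mean response time than under DMI1, and a positive value indicates a longer one.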

| Questionnaire | Item | DMI1 Mean | DMI1 SD | DMI1+2 Mean | DMI1+2 SD | DMI1+2+3 Mean | DMI1+2+3 SD |
|---|---|---|---|---|---|---|---|
| TiA | Mistrust | 2.38 | 0.71 | 1.97 | 0.69 | 2.06 | 0.72 |
| TiA | Suspicion | 2.59 | 0.84 | 2.03 | 0.78 | 2.31 | 1.00 |
| TiA | Confidence | 4.00 | 1.16 | 4.47 | 1.50 | 3.97 | 1.28 |
| TiA | Reliance | 5.44 | 0.76 | 5.72 | 0.73 | 5.53 | 0.84 |
| SoA | Total | 4.02 | 0.72 | 4.66 | 0.65 | 4.53 | 0.63 |
| Acceptance | Average | 4.05 | 0.55 | 4.46 | 0.54 | 4.22 | 0.49 |
| NASA-TLX | Mental demand | 3.47 | 1.61 | 4.13 | 1.83 | 4.69 | 1.86 |
| NASA-TLX | Physical demand | 3.69 | 2.22 | 4.06 | 2.14 | 4.59 | 1.66 |
| NASA-TLX | Temporal demand | 3.19 | 2.02 | 3.09 | 1.44 | 3.38 | 1.26 |
| NASA-TLX | Performance | 4.28 | 2.67 | 4.00 | 1.90 | 4.47 | 2.00 |
| NASA-TLX | Effort | 3.81 | 1.60 | 4.53 | 1.80 | 4.91 | 1.15 |
| NASA-TLX | Frustration | 2.34 | 1.52 | 2.16 | 1.67 | 2.56 | 1.22 |
| NASA-TLX | Workload (average) | 3.46 | 1.12 | 3.66 | 1.05 | 4.10 | 0.86 |
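The "Workload (average)" row above appears to be the unweighted mean of the six NASA-TLX dimension means. The short Python sketch below checks that assumption against the tabulated values. The variable names are illustrative, and the unweighted scoring is inferred from the numbers rather than stated in this section.

```python
from statistics import mean

# NASA-TLX dimension means copied from the table above.
# Tuple order: (DMI1, DMI1+2, DMI1+2+3).
tlx_dimension_means = {
    "Mental demand": (3.47, 4.13, 4.69),
    "Physical demand": (3.69, 4.06, 4.59),
    "Temporal demand": (3.19, 3.09, 3.38),
    "Performance": (4.28, 4.00, 4.47),
    "Effort": (3.81, 4.53, 4.91),
    "Frustration": (2.34, 2.16, 2.56),
}

# Reported "Workload (average)" row, same column order.
reported_workload = (3.46, 3.66, 4.10)

conditions = ("DMI1", "DMI1+2", "DMI1+2+3")

# Unweighted mean across the six dimensions for each interface condition.
computed_workload = tuple(
    mean(values[i] for values in tlx_dimension_means.values())
    for i in range(len(conditions))
)

for name, computed, reported in zip(conditions, computed_workload, reported_workload):
    # Agreement to within rounding at two decimal places.
    matches = abs(computed - reported) < 0.005
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}, match={matches}")
```

Under this assumption the computed and reported averages agree to two decimal places for all three conditions. The same check cannot be applied to the SD column, because the SD of the averaged score depends on per-participant data that the table does not provide.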

| Visual Behavioral Indicators | DMI1 Mean | DMI1 SD | DMI1+2 Mean | DMI1+2 SD | DMI1+2+3 Mean | DMI1+2+3 SD |
|---|---|---|---|---|---|---|
| Total saccade count | 158.46 | 42.23 | 180.64 | 60.32 | 251.61 | 65.71 |
| Total fixation count | 400.07 | 105.45 | 450.57 | 104.43 | 737.96 | 153.90 |
| Total fixation duration (s) | 94.97 | 33.79 | 104.67 | 35.69 | 179.37 | 53.97 |
| Average fixation duration (s) | 0.23 | 0.06 | 0.22 | 0.05 | 0.24 | 0.05 |
| Average pupil diameter (mm) | 3.76 | 0.76 | 3.81 | 0.59 | 3.75 | 0.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, T.; Zhi, J.; Yu, D.; Li, R.; He, S.; Wu, W.; Jing, C. Constant Companionship Without Disturbances: Enhancing Transparency to Improve Automated Tasks in Urban Rail Transit Driving. Systems 2024, 12, 576. https://doi.org/10.3390/systems12120576
