Abstract

With the accelerating digitization of human society and the application of innovative technologies to emerging media, popular social media platforms are inundated with fresh news and multimedia content from sources of varying reliability. This abundance of circulating and accessible content has made it harder to separate true information from false or fake information. As has been shown, most unwanted content is created automatically by bots (automated accounts supported by artificial intelligence), and it is difficult for authorities and the respective media platforms to combat the proliferation of such malicious, pervasive, and artificially intelligent entities. In this article, we propose using content originating from automated accounts (bots) to compete with a harmful rumor and reduce the speed at which it propagates on a given social media platform. Using differential equations and dynamical systems, we model the underlying relationship between circulating contents that relate to the same topic and are of relative interest to the respective online communities. We studied the proposed model qualitatively and quantitatively and found that peaceful coexistence could be obtained under certain conditions, and that improving the attractiveness and visibility of the controlled social bots’ content has a significant impact on the long-term behavior of the system, depending on the control parameters.

1. Introduction

In the era of artificial intelligence applied to communication, emerging media such as social media have given rise to a new, exceptionally active, and powerful public opinion. In parallel with the “COVID-19 epidemic”, from which a new norm has emerged, human societies are facing an “information epidemic” [1], which is characterized by the profound transformation and growing complexity of global public opinion. Social bots, as the main tools used in creating and propagating all sorts of content, act behind the scenes in a large number of popular social media spaces, significantly influencing the dynamics of international public opinion [2,3]. Topics such as social bots [4], fake news [5], misinformation [6], social media manipulation [7], information propaganda [8], and political communication algorithms [9] have increasingly become research hotspots in academia. According to a report released by the Oxford Internet Institute (University of Oxford), by the end of 2020, the number of countries using social bots to conduct computational propaganda in social media spaces had reached 81, and this number has shown a continuous growth trend [10]. The social media ecosystem is thus changing from being completely dominated by humans to a state of “humans + social bots” interaction and symbiosis.

As an important part of social networks, social bots imitate human behavior to make themselves look like real human users and participate in online discussions on social network platforms. They have gradually evolved from communication intermediaries in information dissemination into independent or autonomous communicators. They shape the social interaction behavior and relationship patterns of online user groups on the basis of information diffusion and dissemination, and have become important tools in public opinion manipulation. European and American scholars generally attach negative connotations to this new actor in the social network ecosystem. While even the friendly face or positive side of this new “entity” has recently been persistently and harshly criticized, the integration of algorithms and information shows signs of positive feedback [11,12]. More and more researchers have found that social bots can not only drive the diffusion of negative information [13] but can also facilitate the diffusion of positive content [14]. In other words, social bots should mainly be considered neutral technical tools that use algorithms to spread ideology and infiltrate social networks; at the same time, they are political entities aiming to manipulate social opinion via propaganda [15], and thus have both technical and social attributes. These dual attributes enable bots to interfere deeply in ongoing communication via various important nodes in social networks and then to connect and integrate social network resources by controlling smart virtual agent identities. Thus, in the effort to tackle falsehood and misleading content proliferating on popular social media platforms, social bots can be used to fuel conversation, inject positive narratives (ideas, comments, etc.), and broadcast authoritative real information on targeted social network platforms, for example for social security purposes.

In this regard, social bots, the “double-edged sword,” would then join the right side and help reduce the spread of rumors and fake news by expanding the spread of real information and restoring the truth. For a better and healthier coexistence of people and social bots in online social environments, at least for the sake of international public opinion (on subjects of importance such as public health, social wellbeing, etc.), we propose in this investigation to focus on the dissemination of actual information itself, explore how to use social bots to support or refresh authoritative or high-credibility information, and discuss challenges related to spreading rumors or disinformation, with the aim of reducing their manipulative effects. To achieve this goal, we consider a holistic or systemic approach to disinformation and information dissemination in social networks in which social bots are used to support authoritative or high-credibility information in order to overcome circulating negative or distorted narratives and content. Analyzing the underlying relationship between the propagation dynamics of information and related disinformation in social networks would provide solid evidence of the importance of relying on social bots to combat negative propaganda and manipulation concealed in distorted circulating content. In particular, our approach considers the interactions that occur when content related to a specific topic of interest published by social bots (high-credibility or authoritative information) competes with related rumors or fake news on a given social network platform, as in an ecosystem where interacting species share resources (number of views, comments, reposts, etc.).

We use a two-species cooperative and competitive model system based on a modified Lotka–Volterra model to describe the interactions that determine the dynamics of the system when the propagating rumor competes with the related authoritative information posted using controlled social bots in a closed environment. In this approach, species one (social-bot-originated content supporting high-credibility information) and species two (rumor, false information, or disinformation) affect each other’s evolution positively (cooperation) when one of the two drives people to crosscheck other related content, and negatively (competition) when one of the two directly convinces the public (no need to crosscheck other related content), at different rates depending on control parameters and other related factors. We model the negative effects of competition using nonlinear terms, which have the advantage of being more realistic because they take into account possible lags in the interacting species’ assimilation efficiency or harvesting time [16,17,18].

In the qualitative analysis, we show that the proposed model system can be locally asymptotically stable around its fixed points under certain conditions. Furthermore, the results of numerical simulations show that the dynamics of the interactions are directly affected by species behavior and abilities (strength), the attractiveness of the information or rumor in terms of ideas and concepts or closeness to the targeted public’s interest, the visibility on the platform, etc., with respect to resource consumption, that is, the number of views, likes, reposts, comments, and so forth. This suggests that adopting a different strategy (in web marketing, for example) to support the presence of high-credibility information on the respective platform, optimizing existing countermeasures and security layers, or improving the community’s awareness may help clear up the disinformation being fed to the community and boost the diffusion of informative and non-harmful content on given social media platforms, regardless of whether the originators are humans or automated machines.

2. Literature Review

2.1. The Connotation and Characteristics of Social Bots

There are four categories with respect to the current computational propaganda (bots as information disseminators) drivers in the world: government agencies, political parties, business companies, and social groups. As many related studies show, 76% of the investigated countries have confirmed that government agencies/politicians and political parties use computational propaganda in political competition and propaganda. This means that computational propaganda is usually in the hands of politicians with sufficient resources and power to speak [19], and social media has become an important factor in interfering in online elections.

Regarding the disseminated content, social bots are extensively used for four main motives and overall goals: supporting the authorities, attacking competitors, suppressing dissidents, and creating differences.

As for the scale of the dissemination, it can be divided into three levels. At the first level, only a small team is needed, and activity is limited to the primary stage of domestic or political election operations [1] (including Argentina, Germany, Italy, the Netherlands, Greece, Spain, Sweden, South Africa, etc.). At the second level, bots provide the equivalent of a full-time staff and a variety of strategic tools coordinated for the intermediate stage of long-term control of public opinion; in some cases, this may even influence individuals overseas (including Austria, Brazil, Czech Republic, Indonesia, Kenya, Malaysia, Mexico, South Korea, Thailand, and other countries). At the third level, this powerful tool is used to advance the dissemination of political ideas at home and abroad with a large number of organized team members and R&D operation investments (including the United States, the United Kingdom, Russia, Australia, Egypt, India, Israel, Ukraine, Saudi Arabia, the Philippines, and other countries) [20].

From a social influence standpoint, the impact of social bots on the public opinion sphere is more complex and profound than that of traditional propaganda. On the one hand, they rely on algorithms to generate public knowledge more efficiently, support network mobilization, and use social media to narrow the gap in public political participation; on the other hand, they may be used to create political anxiety and panic, manipulate public opinion across borders, and tear apart social consensus, which has a negative impact on society.

Communication contents and characteristics of social bots are given in Table 1.

Table 1.

Communication contents and characteristics of social bots.

2.2. Social Bots Behavior across Social Networks

With Web 3.0’s new features enabling advanced control and security, many online social groups are widely distributed across the social media space [23]. Scholars often associate social bots with fake news and computational propaganda. They argue that social bots can automatically generate information and disseminate it among human users in the process of online opinion formation and propagation. The characteristics and behaviors of social bots in social networks have mainly been studied from three perspectives: human behavior imitation, information propagation, and interaction with human users.

Being good at imitating human users is a distinctive feature of social bots. They try to imitate all online activities of human users and make themselves look similar to humans, including behaviors such as the expansion of influence, the penetration in online discussions, and the building of social relationship networks [24]. Social bots shape the behavior and relationship patterns of social interactions among online user groups based on information dissemination and diffusion, and achieve large dissemination in online communities [25].

In facilitating information diffusion, social bots play key roles in producing and disseminating new information or content of interest to the public. It is noteworthy that social bots facilitate the diffusion of both negative [13] and positive information [14]. Currently, scholars have focused on the spread of negative information because of the alarming malicious abuse of social bots. For example, social bots implement manipulation strategies, post or share misleading information used as bait, and actively generate and proliferate politically biased information.

As for interacting with human users, social bots can automatically generate messages and communicate with human users on respective social network platforms either through direct conversations [26] or indirect interactions [27]; they work hard to shape or dress themselves as trustworthy entities or figures that can influence or even change the behavior of human users [28]. Currently, social bots have successfully infiltrated social networks by engaging in interactive activities with humans, with a penetration success rate of 80% on Facebook [29].

Social bots’ main behaviors in information dissemination are given in Table 2.

Table 2.

Social bots’ main behavior in information dissemination.

2.3. The Impact of Social Bots on Social Media

As a new “pseudo-human species” active in the virtual network, social bots are affecting more and more information dissemination dynamics with their personification (human-like) characteristics; they may truly become an “invisible hand” that ends up shaping real human society via propaganda and manipulation [15]. In recent years, scholars have primarily investigated the influence of social bots on social networks, focusing on public opinion manipulation [34], the spread of disinformation [35], and influence on public perception [36].

For the purpose of manipulating public opinion, social bots are being intensively utilized in orienting trending topics and public opinion in favor of a given opinion. Online commentary related to the US presidential election [37] and the Brexit vote [38] illustrates that online discussions in the political arena manipulated by social bots can affect voting outcomes [36]. In addition, social bots can also be used to interfere with international politics and, to some extent, influence political discussion networks in other countries [39].

Social bots, or botnets, are mostly automated accounts created on popular social media platforms and used to disseminate false information through political figures, celebrities, and influencers, regardless of whether they are social marketers or not. They use a number of strategies to spread false information at a rapid pace, thereby expanding their reach among users [40]. Examples include amplifying disinformation at the earliest stages, creating many original tweets, replacing and hijacking tags, targeting influencers with replies and the like, injecting content into conversations, and even disguising geographic locations with the addresses of real people [41].

Comments published by social bots can influence public perception by increasing the uncertainty of public opinion on a given issue [42]. Scholars have experimentally demonstrated that information published by social bots can effectively change people’s opinions, influence their perceptions, and even make the public question long-standing consensus [43]. Hence, individuals with malicious intentions use this powerful tool to target certain groups of people for various agendas. In particular, social bots are employed to troll and to propagate disinformation, fake news, and hate campaigns, among other things, poisoning minds and threatening social harmony and wellbeing [37].

3. Model Formulation

In this section, we formulate the proposed interaction model and describe the model parameters and mathematical implications.

Let S(t) and R(t), respectively, represent the number of views (for example) at time t of the social bot content and of the related circulating rumor or harmful content. S and R contain related concepts and ideas of relative interest to the readership, and an increase in views of S would likely drive readers to check, or at least recommend, R to the virtual community without knowing that the latter is unreliable or harmful. This is what makes rumors or fake news insidious and difficult to spot for most people with average cognitive ability. In particular, given that people often do not take time to reflect on or analyze what they read or watch online, some ideas or concepts carried by unverified allegations could have damaging effects on the mind. We formalize this phenomenon using a Holling type I functional response with a constant positive effect for practical purposes, assuming that the majority of people would check, comment on, recommend, or read the related contents of interest. If we consider that malicious individuals or entities pursue strategic objectives when propagating rumors, then it is logical to conceive that R could possess attractive concepts or ideas that penetrate deeply into the human mind and are difficult to dissipate, such as false hope (leading to bias). For that reason, we propose to model the negative effect of competition occurring between S and R using a Holling type II functional response, which lets the competitors defend themselves (through the respective content attractiveness, the level of credibility of their source, their freshness, their interest to the online community, etc.) and benefit from their innate strength with respect to each species’ presence on a respective platform (number of views, comments, reposts, etc.) or saturation. The interaction is modeled by the following system:

where α and β are, respectively, the natural growth coefficients of S and R, representing the rate of increase in size or view count per unit of time. The coefficient a1 represents the positive feedback capturing the effect of cooperation as described earlier. The coefficients a2 and b1 are the respective competition parameters, expressed as the per capita decrease in views when targeted readers choose to exclusively view or consume S or R content. Parameters a3 and b2 formalize the respective assimilation efficiency due to the relative time needed before counting the negative feedback and each species’ intrinsic ability [44]. Parameters a3 and b2 could be adjusted according to the data at hand or the expected results in a controlled situation.
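To make the structure described above concrete, the following minimal Python sketch integrates a two-species system of this general kind. The right-hand side is an assumption made for illustration only and is not the article's exact system: it combines logistic growth with a hypothetical saturation level `N`, a linear (Holling type I) cooperation term, and saturating (Holling type II) competition terms. The names `alpha`, `beta`, `a1`, `a2`, `a3`, `b1`, `b2`, and `N` mirror the symbols listed in the figure captions, but their placement in the equations here is hypothetical, and the numerical values are illustrative.

```python
from typing import Callable, List, Tuple

State = Tuple[float, float]


def make_rhs(alpha: float, beta: float, a1: float, a2: float, a3: float,
             b1: float, b2: float, N: float) -> Callable[[State], State]:
    """Build an ASSUMED right-hand side (illustrative, not the published model).

    S: views of bot-supported content, R: views of the rumor.
    Logistic growth with capacity N, cooperation a1*S*R (Holling type I),
    and saturating competition terms (Holling type II).
    """
    def rhs(state: State) -> State:
        S, R = state
        dS = alpha * S * (1.0 - S / N) + a1 * S * R - a2 * S * R / (1.0 + a3 * R)
        dR = beta * R * (1.0 - R / N) + a1 * S * R - b1 * S * R / (1.0 + b2 * S)
        return dS, dR
    return rhs


def rk4(rhs: Callable[[State], State], state: State,
        dt: float, steps: int) -> List[State]:
    """Integrate with the classical fourth-order Runge-Kutta scheme."""
    def shift(s: State, k: State, factor: float) -> State:
        return s[0] + factor * k[0], s[1] + factor * k[1]

    trajectory = [state]
    for _ in range(steps):
        k1 = rhs(state)
        k2 = rhs(shift(state, k1, 0.5 * dt))
        k3 = rhs(shift(state, k2, 0.5 * dt))
        k4 = rhs(shift(state, k3, dt))
        state = (
            state[0] + dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            state[1] + dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]),
        )
        trajectory.append(state)
    return trajectory


if __name__ == "__main__":
    # Illustrative parameter values chosen so that competition outweighs cooperation.
    rhs = make_rhs(alpha=1.6, beta=2.18, a1=0.01, a2=0.032, a3=0.023,
                   b1=0.197, b2=0.0329, N=7.0)
    S_end, R_end = rk4(rhs, (0.8, 0.04), dt=0.01, steps=5000)[-1]
    print(f"S(50) = {S_end:.4f}, R(50) = {R_end:.4f}")
```

Keeping the right-hand side behind `make_rhs` means that a different functional form can be swapped in without touching the integrator.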

4. Preliminaries

In this section, we discuss the existence and characteristics of the system and positive solutions within the planar space.

will increase views only if , which signifies the following.

has a lower and upper bound on the phase plane of the system for . Furthermore, as , we have

We set the following.

At max, when

Similarly,

As and and possess lower and upper bounds, we can conclude, based on the Lipschitz continuity, that all positive solutions of the system are defined and unique [45].

From (4) and (5), the divergence of the vector field could be zero for some sets of parameter values, so the Bendixson–Dulac criterion does not rule out closed orbits. Together with the boundedness of solutions, the Poincaré–Bendixson theorem therefore allows for at least one closed orbit on the phase plane of the system, and for a given set of parameter values, the periodic motion of the solution curves could give birth to a limit cycle in the neighborhood of the positive coexistence equilibrium point. Furthermore, the gradient of the divergence (the direction of the maximum slope) in terms of flux or vector movement does not have a definite sign. This means that there exist sets of parameter values for which the slope would be positive, negative, or zero. This is an indication of the rich dynamical behavior of the system for different sets of interactions.

5. Existence of Equilibrium States

The isoclines of the system are given by

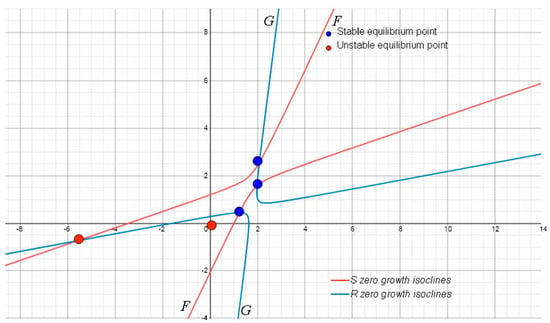

where F and G are, respectively, the zero-growth isocline equations of S and R. A graphical representation of F and G is given in Figure 1, where it can be observed that the system admits at least five distinct solutions, and depending on the parameter values, any of them could be stable except for the origin.

Figure 1.

Graph of F and G showing three positive solutions and one negative equilibrium point.

The system could reach a steady state in five cases.

Case 1: When only the competition terms are equal to zero, this would be the case when and possess strong similarities and people can almost confuse them; in this case, the relationship governing the dynamics of the system would be exclusively mutually beneficial for both. The system will admit given that holds as unique positive coexistence equilibrium.

Case 2: When only the competition and cooperative terms are equal to zero, would then be the unique positive equilibrium of the system provided , , and . In this scenario, would naturally benefit from cooperation while can only thrive at a higher density. This could be assimilated into the strategy of respective social media platforms and the authority to promote over the trending rumor.

Case 3: When only competition and cooperative terms are equal to zero, would be the unique positive equilibrium point of the system provided ,and . In this scenario, would naturally suffer or benefit from competition, while can only benefit from cooperation. This could be the case for strong similarity between interactive species with respect to the content or concept they deliver to the virtual community.

Case 4: When only cooperative terms are equal to zero, the unique positive equilibrium point (equilibrium state) of the system is given by as long as . In this case, competition is intensive and there would be no gain from the strong presence of or on a given social network platform. The interacting species can only benefit from the debate if they are attractive enough and present very good concepts or ideas that nourish the online community’s mind.

Case 5: The steady-state or coexistence equilibrium corresponds to the most interesting and practical case, where both cooperative and competitive interactions occur: both S and R will increase or decrease as a function of the control parameters and with respect to the attractiveness of each entity and other related factors. This equilibrium can be computed using an iterative method, such as the Newton–Raphson method, as follows.

If we set

where are initial guesses, we will then have

It follows that

Each of these equilibrium points could behave differently according to the situation at hand and the parameter value. Based on the stability theory, the ideal condition to satisfy for the system to be stable is that the respective unique positive equilibrium point of the system should be the only attracting spot for trajectories initiating at the origin. This is the requirement for traveling curves to approach asymptotically or to orbit around the given coexistence equilibrium.
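The Newton–Raphson iteration mentioned in Case 5 can be sketched as follows. This is a generic two-variable solver under stated assumptions, not the authors' implementation: the function name `newton_2d`, the finite-difference Jacobian, and the tolerances are choices made for illustration, and the two linear isoclines in the usage example are a hypothetical stand-in rather than the isoclines of the system studied here.

```python
from typing import Callable, Tuple

Func = Callable[[float, float], float]


def newton_2d(F: Func, G: Func, x0: float, y0: float,
              tol: float = 1e-10, max_iter: int = 50,
              h: float = 1e-6) -> Tuple[float, float]:
    """Solve F(x, y) = 0 and G(x, y) = 0 by Newton-Raphson iteration.

    The Jacobian is approximated with central finite differences, so only
    the two isocline functions need to be supplied.
    """
    x, y = x0, y0
    for _ in range(max_iter):
        f, g = F(x, y), G(x, y)
        if abs(f) + abs(g) < tol:
            return x, y
        # Central-difference approximation of the partial derivatives.
        fx = (F(x + h, y) - F(x - h, y)) / (2.0 * h)
        fy = (F(x, y + h) - F(x, y - h)) / (2.0 * h)
        gx = (G(x + h, y) - G(x - h, y)) / (2.0 * h)
        gy = (G(x, y + h) - G(x, y - h)) / (2.0 * h)
        det = fx * gy - fy * gx
        if det == 0.0:
            raise ZeroDivisionError("singular Jacobian; try another initial guess")
        # Solve J * (dx, dy) = (f, g) with Cramer's rule, then update.
        dx = (f * gy - fy * g) / det
        dy = (fx * g - gx * f) / det
        x, y = x - dx, y - dy
    raise RuntimeError("Newton-Raphson did not converge")


if __name__ == "__main__":
    # Hypothetical linear isoclines of a simple competition model.
    F = lambda s, r: 1.0 - s - 0.5 * r
    G = lambda s, r: 1.0 - 0.5 * s - r
    print(newton_2d(F, G, 0.5, 0.5))
```

Because the method converges only locally, different initial guesses can lead to different equilibria, which is consistent with the system admitting several of them.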

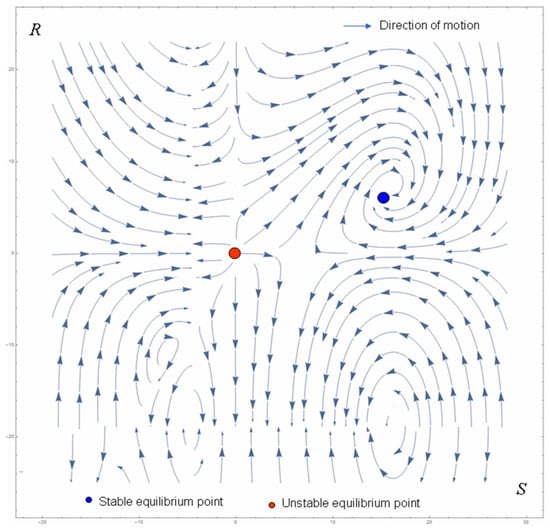

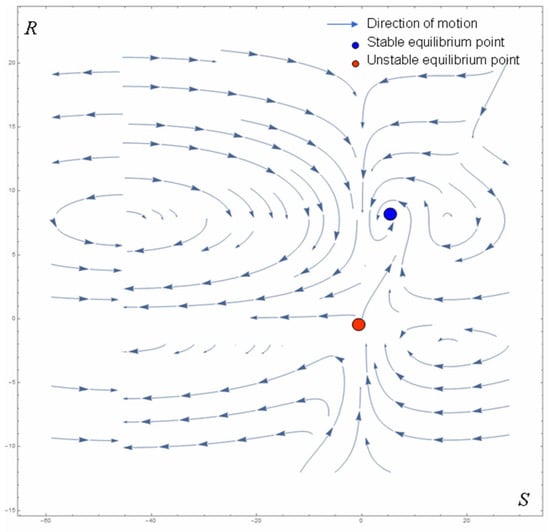

The other positive points must be repelling or at least unreachable, as portrayed in Figure 2 and Figure 3 in which a unique stable equilibrium point is shown in blue. Note that the origin is always unstable (in red), while the other points could be unstable or exhibit semi-stability or neutral stability.

Figure 2.

Phase trajectories showing a unique stable positive equilibrium point. The origin is a source node.

Figure 3.

Phase trajectories showing a unique positive equilibrium point in blue, unstable nodes, and the origin behaving as a source node.

6. Model Stability Analysis

In this section, we study the stability conditions around fixed points of the system and analyze the outcome of interactions occurring between S and R.

The linearization of the system gives

Based on the Routh–Hurwitz criterion, we can analyze the system’s evolution over time around all equilibrium points by evaluating the sign of the roots of the characteristic polynomial. The characteristic equation is given by

where

The roots of equation (11) are given by

The system is stable according to the Routh Hurwitz criterion only if and . This could be satisfied in the following case:

and

Furthermore, if we take case , then we will have

By solving (15), we obtain and . The coexistence equilibrium point could have three different dynamical behaviors depending on sign and value. When approaching the steady-state equilibrium, traveling curves will exhibit an inward spiral motion in case (stable) and an outward motion if (unstable). Trajectories will orbit around the steady-state equilibrium in uniform motion for (neutrally stable). In practical terms, when S and R coexist on a given social media platform, interactions occur as online users read, comment, repost, react, etc.; these interactions will have different outcomes depending on key parameters and many other factors related to the information’s proximity to the interacting individual’s biases, interests, awareness, educational background, etc. The coexistence of S and R could last for a longer or shorter time: one of the interacting entities could dominate and occupy more space in the community’s mind or mobilize more attention in ongoing related discussions, or both could thrive and appear periodically in conversations for a relative period. It would be interesting to investigate the determinants that drive people to be attracted to S or R, including when and for what reason, but these are outside the scope of this investigation.
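For a planar system, the stability cases discussed above can be read off the trace and determinant of the Jacobian evaluated at an equilibrium. The sketch below assumes the standard form of the characteristic polynomial of a 2x2 matrix, λ² − (trace)λ + (determinant) = 0, for which the Routh–Hurwitz conditions reduce to trace < 0 and determinant > 0. The function names and the returned labels are illustrative and are not taken from the article.

```python
def is_routh_hurwitz_stable(j11: float, j12: float,
                            j21: float, j22: float) -> bool:
    """Routh-Hurwitz test for a 2x2 Jacobian: trace < 0 and determinant > 0."""
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    return trace < 0.0 and det > 0.0


def classify_2x2(j11: float, j12: float, j21: float, j22: float,
                 eps: float = 1e-12) -> str:
    """Classify the equilibrium of the linearized system from its Jacobian."""
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    disc = trace * trace - 4.0 * det  # sign decides real vs complex roots
    if det < -eps:
        return "saddle"
    if abs(det) <= eps:
        return "degenerate"
    if abs(trace) <= eps:
        # Purely imaginary roots: closed orbits in the linearized system only.
        return "center (neutrally stable)"
    kind = "spiral" if disc < 0.0 else "node"
    return ("stable " if trace < 0.0 else "unstable ") + kind


if __name__ == "__main__":
    print(classify_2x2(-0.1, 1.0, -1.0, -0.1))  # complex roots, negative real part
    print(classify_2x2(0.0, 1.0, -1.0, 0.0))    # purely imaginary roots
```

Note that a center of the linearized system does not by itself settle the behavior of the nonlinear system, which is why the neutrally stable case calls for the further analysis that follows.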

Let us analyze the local behavior of the system around as an example. For this case, we set . It follows that

Furthermore, when we vary , which is considered as a bifurcation parameter (applying bifurcation theory), we obtain

The Hopf bifurcation theorem requires to be hyperbolic, meaning that the following conditions must be satisfied: . It follows that

If (18) could be satisfied, then traveling orbits would have enough velocity to traverse the imaginary axis (transversality condition).

Expanding , we obtain

If we set

and rewrite (19), we obtain

If we set

then

If we can ensure (21) is met, then would be hyperbolic. Assuming that the genericity condition is fulfilled for a given parameter value and with the sign of depending on the sign, we can conclude that will behave as a center for closed curves that are traveling the phase plane and approaching this coexistence equilibrium. This would be considered as an ideal scenario in which and coexist peacefully and interact.
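As a numerical illustration of the conditions usually checked for a Hopf bifurcation (a purely imaginary pair of eigenvalues at the critical parameter value, and a nonzero crossing speed of the real part, that is, transversality), the sketch below uses the textbook normal form x' = μx − y − x(x² + y²), y' = x + μy − y(x² + y²) instead of the system studied here. The bifurcation parameter `mu` and all function names are hypothetical and serve only to show how the two checks can be carried out.

```python
import cmath
from typing import Tuple


def eigenvalues_2x2(j11: float, j12: float,
                    j21: float, j22: float) -> Tuple[complex, complex]:
    """Roots of lambda^2 - trace*lambda + det = 0 for a 2x2 matrix."""
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def normal_form_jacobian(mu: float) -> Tuple[float, float, float, float]:
    """Jacobian at the origin of the Hopf normal form (swapped-in example)."""
    return mu, -1.0, 1.0, mu


def real_part(mu: float) -> float:
    """Real part of the leading eigenvalue as a function of the parameter."""
    lam, _ = eigenvalues_2x2(*normal_form_jacobian(mu))
    return lam.real


def crossing_speed(mu_c: float, h: float = 1e-6) -> float:
    """Central-difference estimate of d(Re lambda)/d(mu) at mu_c."""
    return (real_part(mu_c + h) - real_part(mu_c - h)) / (2.0 * h)


if __name__ == "__main__":
    lam_plus, lam_minus = eigenvalues_2x2(*normal_form_jacobian(0.0))
    print("eigenvalues at mu = 0:", lam_plus, lam_minus)
    print("crossing speed at mu = 0:", crossing_speed(0.0))
```

For the system in this article, the same two checks would be applied to the Jacobian evaluated at the coexistence equilibrium, with the chosen control parameter playing the role of `mu`.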

7. Results and Discussion

In this section, we carry out computer simulations to illustrate the behavior of the system when S and R are interacting. In all scenarios, we assume that the model parameters are positive constants. The coefficients α and β formalize the intrinsic growth rate or per capita increase in views and the number of reposts or comments, etc., for S and R across respective social media platforms. As for the underlying mutual relationship occurring when a rumor shares the same interest with related true information, coefficient a1 could be deduced or computed from the gain that each interacting species obtains, in terms of its presence on respective platforms, when people share, comment on, like, or read it. Similarly, a2 and b1 are the competitive interaction coefficients that capture the negative feedback resulting from the interaction when online readers or the audience only read or comment on the content presented in either S or R. Parameters a3 and b2 mainly model the assimilation of captured resources (using ecology or biology concepts), here views (comments, likes, reposts, etc.). These two coefficients could also be considered as control parameters to be adjusted according to the expected outcome in a controlled environment, as stipulated earlier. We tested different scenarios by slightly varying the initial conditions and the control parameter values to analyze the sensitivity of the system to small perturbations and the outcome of the underlying relationship.

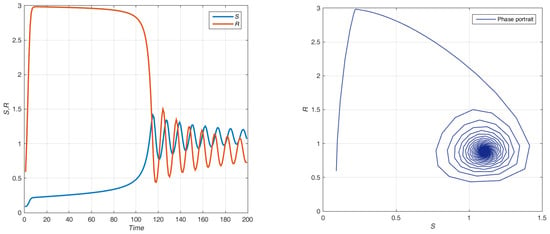

Figure 4 displays the dynamics of the interaction when S wins the competition and invades the respective platform: most interacting individuals read, repost, and comment on S, while significantly fewer people do so with R. Because of R’s high attractiveness, as determined by β = 2.18, and the advantage of cooperation a1 = 0.51, it remains relatively competitive and does not vanish. This is one of the optimal situations, where S contains true information released by the authority, or approved social bots fulfill their mission of dissipating falsehood or at least minimizing the harmful effects of non-verified information containing insidious ideas in the community’s mind. However, to reach this ideal goal, social media platforms, together with the authority, should work hand in hand when managing respective social bot activities by providing the right content at the right time via the right node (which could be challenging to achieve). Using social influencers or online celebrities with a good reputation (prestige or high credibility) could be an alternative way to accelerate the diffusion of verified content. This will be reflected in the model parameter values, as S will, for example, significantly increase its views and related comments. Depending on the control parameter, it will reach the saturation threshold at different speeds. The neutrality of algorithms (artificial intelligence or social bots) when they are used to spread misinformation increases the challenge of managing online content and maintaining safer online activities (a burden for system governance). Social media platforms should play the social bot card to enhance their content and security management and participate more intelligently in combating disinformation. This result implies that leaning on social bots to enhance social media management may ease part of the burden of traditional management and control.

Figure 4.

S and R interaction dynamic time series and phase space for the following: S(0) = 0.8; R(0) = 0.04; α = 1.6; a1 = 0.51; a2 = 0.032; a3 = 0.023; b1 = 0.197; β = 2.18; b2 = 0.0329; and N = 7.

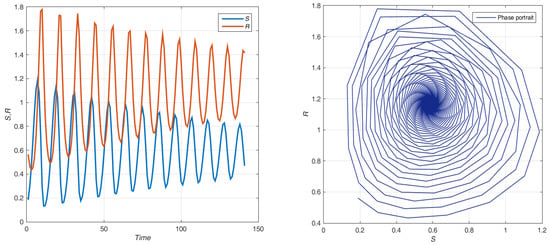

In Figure 5, R increases its views at high speed because of S’s weakness in attracting people, with α = 0.8. However, S manages to make its way back into the competition in the end and gets the community’s attention. This suggests strengthening the related real or true information at the expense of propagating rumors and fake news. Social media platforms and authorities could use social bots to improve the presence of related true or verified information and content, that is, S, on the platform via existing tools and methods, such as rankings or web marketing. Therefore, in the future, social bots could be used to produce new content or refresh positive circulating content and become the norm in disseminating high-credibility information. Of course, automated authoring is not only about turning data into information and then publishing it; it should particularly be about making machines an extension of humans. This also means that we should give full play to the dual advantages of social bots (artificial intelligence) and manual (human intelligence) review, eliminate the management lag brought about by the rapid development of artificial intelligence, and ensure the sustainable development of social networks (ecosystem).

Figure 5.

S and R interaction dynamic time series and phase space for S(0) = 0.09; R(0) = 0.6; α = 0.8; a1 = 0.7657; a2 = 0.223; a3 = 0.088; b1 = 0.5329; β = 1.9863; b2 = 0.0831; and N = 7.

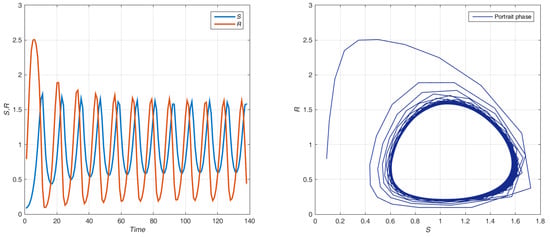

Figure 6 displays the case where S is clearly overpowered even though it possesses a considerably greater (more than double) per capita growth rate, α = 1.88, compared to β = 0.79 for R. The steady state equilibrium is asymptotically stable, and all trajectories in the positive quadrant of the phase plane orbit around and converge to this point. In this situation, the trending rumor invades the platform, has the strongest presence, and receives the most attention in terms of reposts, comments, and so on. To avoid this undesirable outcome, one could optimize the social bots’ online activities to drive more comments or reposts by using, for instance, the capacity of this powerful tool to create multiple automated accounts and generate large numbers of virtual followers very rapidly; this would also boost its assimilation efficiency in ecological terms (or positive feedback in strengthening online presence). Here, a2/a3 is relatively greater than b1/b2. We derive from this result that, via artificial intelligence, high-credibility or authoritative information with scientific support or proof can be efficiently pushed toward social media users to increase knowledge and raise awareness, to say the least. In addition, these combined efforts and technologies would help restore public trust in mainstream media and build healthier and more democratic social environments.

Figure 6.

Peaceful coexistence of S and R when R dominates, time series and phase space for S(0) = 0.19; R(0) = 0.56; α = 1.88; a1 = 0.78; a2 = 0.478; a3 = 0.288; b1 = 0.06; β = 0.79; b2 = 0.0631; and N = 7.

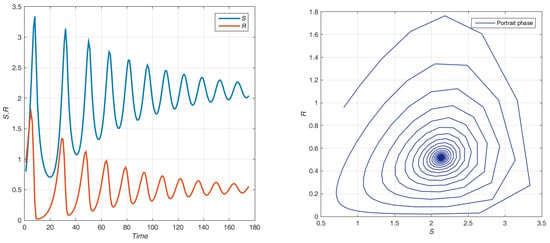
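The comparison of a2/a3 with b1/b2 mentioned above can be checked by simple arithmetic. The following is a minimal sketch that only computes the two ratios from the parameter values listed in the captions of Figures 4–8; the names `FIGURE_PARAMS`, `competition_ratios`, and `summarize` are illustrative and do not come from the original study, and the sketch does not simulate the model itself.

```python
# Parameter values copied from the captions of Figures 4-8.
# Only the four parameters entering the two ratios are listed.
FIGURE_PARAMS = {
    "Figure 4": {"a2": 0.032, "a3": 0.023, "b1": 0.197, "b2": 0.0329},
    "Figure 5": {"a2": 0.223, "a3": 0.088, "b1": 0.5329, "b2": 0.0831},
    "Figure 6": {"a2": 0.478, "a3": 0.288, "b1": 0.06, "b2": 0.0631},
    "Figure 7": {"a2": 0.18, "a3": 0.088, "b1": 0.5329, "b2": 0.0831},
    "Figure 8": {"a2": 0.3, "a3": 0.02, "b1": 0.27, "b2": 0.03},
}


def competition_ratios(params):
    """Return the pair (a2/a3, b1/b2) for one parameter set."""
    return params["a2"] / params["a3"], params["b1"] / params["b2"]


def summarize(all_params):
    """Map each figure name to (a2/a3, b1/b2, whether a2/a3 > b1/b2)."""
    rows = {}
    for name, params in all_params.items():
        ratio_a, ratio_b = competition_ratios(params)
        rows[name] = (ratio_a, ratio_b, ratio_a > ratio_b)
    return rows


if __name__ == "__main__":
    for name, (ratio_a, ratio_b, a_larger) in summarize(FIGURE_PARAMS).items():
        relation = ">" if a_larger else "<="
        print(f"{name}: a2/a3 = {ratio_a:.3f} {relation} b1/b2 = {ratio_b:.3f}")
```

With the caption values, a2/a3 exceeds b1/b2 for the parameter sets of Figures 6 and 8, while the opposite holds for Figures 4, 5, and 7.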

Figure 7 illustrates one of the desirable scenarios, in which the system admits periodic orbits: a supercritical bifurcation occurs in the vicinity of the unique positive equilibrium point as the eigenvalues of the linearized system cross the imaginary axis. This is an ideal outcome for cases in which a concept or idea propagates and can be used to orient the community toward the discovery of the truth or of a given idea. Some rumors could present the same concept linking to the truth. Others could just be a slight distortion of reality (truth), which is consistent with the theoretical analyses presented earlier. It can be observed that social bots can play the role of real human users and actively communicate with other users by virtue of their anthropomorphic characteristics. They could disseminate true information in targeted conversations where false allegations (misinformation, disinformation, and rumor) have been detected, on the basis that some distorted views of reality have the potential to trigger users’ thinking and judgment on a particular topic or to shake their belief or trust in the authority. This would help maintain the stability of online public opinion on sensitive issues, for instance.

Figure 7.

Time series and phase portrait of the interaction when a limit cycle arises around the steady state equilibrium for S(0) = 0.09; R(0) = 0.8; α = 0.85; a1 = 0.77; a2 = 0.18; a3 = 0.088; b1 = 0.5329; β = 2.09; b2 = 0.0831; and N = 6.

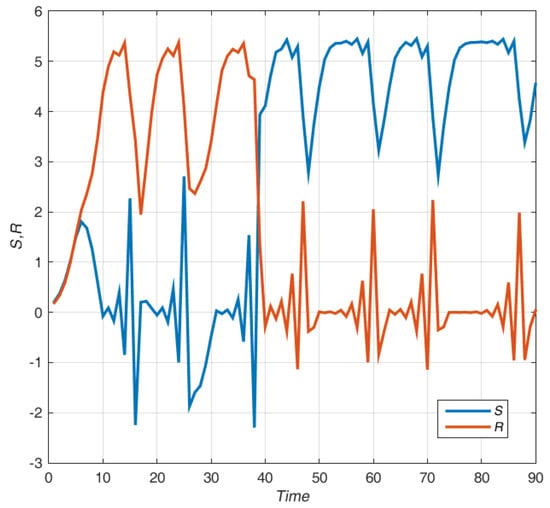
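The stability statements used for Figures 6 and 7 rest on the eigenvalues of the Jacobian at the positive equilibrium. As a minimal sketch of the standard trace-determinant test for a planar system, the helper below classifies an equilibrium from the four Jacobian entries. The function name `classify_equilibrium` and the example matrices are hypothetical; they are not derived from the model or parameter values of this article.

```python
def classify_equilibrium(j11, j12, j21, j22, tol=1e-12):
    """Classify a planar equilibrium from its 2x2 Jacobian entries.

    Uses the trace-determinant test: the eigenvalues solve
    lambda^2 - trace * lambda + det = 0.
    """
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    disc = trace * trace - 4.0 * det

    if det < -tol:
        # Real eigenvalues of opposite sign.
        return "saddle (unstable)"
    if abs(det) <= tol:
        return "degenerate (zero eigenvalue)"
    # From here on det > 0.
    if abs(trace) <= tol:
        # Purely imaginary pair: the linear test is inconclusive.
        return "center of the linearization"
    kind = "spiral" if disc < 0 else "node"
    stability = "asymptotically stable" if trace < 0 else "unstable"
    return f"{stability} {kind}"


if __name__ == "__main__":
    # Hypothetical Jacobian [[mu, -1], [1, mu]] with eigenvalues mu +/- i:
    # the pair crosses the imaginary axis as mu passes through zero.
    for mu in (-0.1, 0.0, 0.1):
        print(mu, classify_equilibrium(mu, -1.0, 1.0, mu))
```

In this illustrative family, the equilibrium changes from an asymptotically stable spiral to an unstable spiral as the eigenvalue pair crosses the imaginary axis. Whether the bifurcation is supercritical, so that a stable limit cycle appears, cannot be read from the linearization alone and requires the nonlinear terms of the model.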

In Figure 8, the system is chaotic: one of the two competing contents exhibits exponential growth for three cycles, benefiting from significantly stronger competition with respect to a2/a3 vs. b1/b2, before collapsing when facing a situation with scarce resources. Its competitor wins the debate starting from the fourth cycle. This is one of the unwanted outcomes to avoid. It could arise when S and R possess relatively equal strength in capturing the attention of the community. This dynamic is evidence of the system’s sensitivity to small perturbations and especially to initial conditions. To avoid this outcome, one could slightly vary the initial conditions and stabilize the system.

Figure 8.

Time series of the interaction when the steady state equilibrium is unstable, and the positive solution exhibits chaotic behavior for S(0) = 0.218; R(0) = 0.19; α = 1.8; a1 = 0.45; a2 = 0.3; a3 = 0.02; b1 = 0.27; β = 1.854; b2 = 0.03; and N = 14.

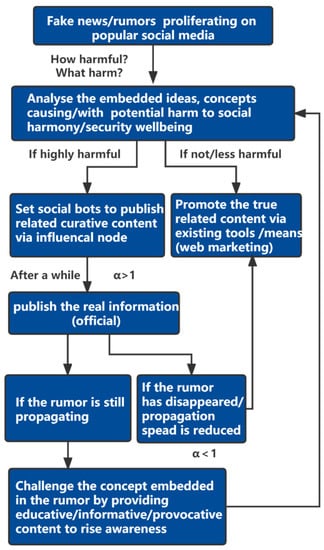

An overview is given in Figure 9 to illustrate how the proposed method could be used for combating fake news and disinformation on popular social networks.

Figure 9.

Overview of the proposed method of using social bots for combating fake news and disinformation.

8. Conclusions

In this article, a two-species cooperative and competitive interaction system was proposed to model the underlying interactions occurring on a social media platform between a circulating rumor and the related information published using automated accounts or social bots. By applying stability theory and qualitatively studying the dynamics of the proposed system, we found that peaceful coexistence is possible and that the system can exhibit complex dynamical behavior. The results show that adapting S’s competitiveness via control parameters may enhance the diffusion of the truth and boost the targeted online community’s awareness and capacity for discernment in an effort to combat fake news and falsehoods proliferating on popular social media platforms. To support this overall goal, it is better to optimize the presence of S on the respective platforms by better conveying the main trending ideas or concepts and using the right web marketing techniques. This would strengthen authoritative content and reduce the presence or effect of R on the platform.

Author Contributions

Conceptualization, Y.Z. and W.S.; methodology, Y.H.K.; software, Y.H.K.; formal analysis, W.S.; writing—original draft, W.S. and Y.H.K.; writing—review and editing, Y.Z. and Y.S.; visualization, W.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Social Science Foundation of China: 22BXW038; Doctoral Innovation Funding Project of Hebei: CXZZBS2022124.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this research article.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).