1. Introduction

Traditional fuzzy control models have achieved remarkable success in making inferences for single-source fuzzy information systems. They have been increasingly applied to intelligent driving [

1,

2,

3], intelligent medical [

4,

5,

6], intelligent factories [

7,

8,

9], and various other fields. It is believed that information fusion enhances the descriptive ability of objects through the use of data redundancy and complementarity [

10,

11,

12]. A Fuzzy Inference System (FIS) could be improved by combining information fusion theory with fuzzy control theory to enable the system to perform better in terms of control and decision-making tasks than before. Motivated by this, we examine a fuzzy inference model that is driven by multi-source fuzzy data and look at how multi-source fuzzy data affect the precision of the inference model.

The fuzzy set (FS) and the rough set (RS) are two commonly used methods for describing fuzzy data or knowledge in a different way. As far back as 1965, scholar Zade was the first to propose the fuzzy set theory [

13]. Its essence is to mine the decision-making value of fuzzy data by constructing membership functions and performing fuzzy set operations. Zadeh’s fuzzy set is modeled as:

where

represents the membership degree of

in set

A, and “+” represents the component connection symbol in a fuzzy set; see [

13]. Rough set theory was first proposed by Pawlak in 1982 [

14]. The core idea is to use equivalence relation

R to construct the equivalence class of object

x in universe

U. The approximation set composed of equivalent objects is called the lower approximation set, denoted as:

see [

15]. In contrast, the approximation set composed of equivalent objects and similar objects is called the upper approximation set, denoted as:

see [

15]. A key aspect of using fuzzy set theory to describe fuzzy knowledge lies in modeling membership functions [

16]. There are four candidates for membership functions: bell-shaped curve function [

17], S-shaped curve function [

18], Z-shaped curve function [

19], and

-shaped curve function [

20]. Theoretically, the bell-shaped curve function is most widely used because it follows the law of large numbers and the central limit theorem [

21,

22].

Unfortunately, bell-shaped curve functions do not directly work for fuzzy systems involving multiple sources, for two reasons. First, it requires the function input to be a continuous and accurate real number. Second, it requires data from different sources to be analyzed using the same metrics so that comparability of data can be ensured. Our solution to these two problems is to use interval values to represent fuzzy data (such as measurement errors, degrees, spatial and temporal distances, emotions, etc.) and then to normalize heterogeneous fuzzy data to exact real numbers using interval normalization. Upon normalization, these real numbers will all be subjected to the same quantization factor (mean and variance). Additionally, we propose an adaptive membership function model that considers the dynamics of a multi-source environment, such as different information sources joining and leaving the system. With the aid of the membership function model, not only are we able to represent the overall distribution of data from multiple sources, but we are also able to adjust the membership value of the input variables to accommodate dynamic changes in the data. Combining the normalization method with the adaptive membership function, we develop a Gaussian-shaped Fuzzy Inference System (GFIS) driven by multi-source fuzzy data.

Our article makes two contributions to this literature. The first contribution is to propose an interval normalization method that is based on the normalization idea of Z-scores [

23], and describe the meaning of fuzzy data by the standard deviations of each data value from the mean. The interval normalization method can be formalized as:

where

is an interval. The greatest challenge and innovation of our method lies in calculating mean

and variance

for fuzzy data. In this work, we present formal models for mean and variance of fuzzy data, and we develop an approach for normalizing fuzzy data (interval value) with these formal models. The interval-value representation and normalization of fuzzy data are the premise of designing the fuzzy model of input variables in GFIS.

The second contribution of this article consists of deriving a Gaussian-shaped membership function for the input variable in GFIS. This function can be used to analyze normal-distributed data, and we denote it as:

where

m is the number of information sources. A significant advantage of the proposed membership function lies in its ability to be adapted to the change of information sources. As a general rule, membership functions based on Gaussian distributions require dynamic mean and variance parameters to be specified as hyperparameters. When function variables are normalized in advance, we can ensure that the mean value of function variables is always 0 and that variance increases linearly with the number of information sources available. Therefore, we do not have to incur large computational costs to obtain the hyperparameters of Gaussian-shaped membership functions. Additionally, a Gaussian-shaped membership function can handle dynamic changes in information sources effectively. It is important to note that the GFIS, which comprises the normalization method and Gaussian-shaped membership function, is thus applicable to multi-source fuzzy environments.

The rest of the article is organized as follows:

Section 2 provides a selected literature overview.

Section 3 introduces preliminaries.

Section 4 outlines the methodology proposed.

Section 5 details the experimental design and

Section 6 reports the experimental results. In

Section 7, we present the conclusions.

2. Related Work

Due to its smoothness and ability to reflect people’s thinking characteristics, the Gaussian membership function has been widely used. Below, we provide a brief description of several Gaussian membership function methods and their applications in the literature. It is worth mentioning that our method (among the three alternatives discussed) is the only one that takes into account both data heterogeneity and fuzzy data fusion.

Hosseini et al. presented a Gaussian interval type-2 membership function and applied it to the classification of lung CAD (Computer Aided Design) [

24]. Type-2 interval membership functions extend type-1 interval membership functions. In the type-2 fuzzy system, the membership of the type-1 fuzzy set is also characterized by a fuzzy set, which can improve the ability to deal with uncertainties. However, there are additional theoretical and computational challenges associated with the Gaussian interval type-2 membership function.

In Kong et al.’s study, a Gaussian membership function combined with a neural network model was designed to help diagnose automobile faults [

25]. The system has been proven to perform better in terms of reasoning accuracy than either a network model or a fuzzy inference model alone. However, it relies on a single source of information to derive fuzzy inferences from multivariable data.

Li et al. proposed a Gaussian fuzzy inference model for multi-source homogeneous data [

26]. The model uses three indicators (center representation, population, and density function) to describe single-source information and adopts a mixed Gaussian model to represent multi-source fusion information. This model does not address the heterogeneity and fuzziness of multi-source data. In addition, the model fails to account for differences between variables in a multidimensional dataset in terms of magnitude and measurement standards.

In previous research, different fuzzy inference models and membership functions were proposed for various practical applications. We propose a Gaussian fuzzy control inference model to solve the fuzzy inference problem in a multi-source fuzzy environment. Our model can improve medical CAD diagnostic accuracy by fusing X-ray images from different institutions. For automobile fault diagnosis, our model can describe each diagnosis parameter with fuzzy data. By further modeling these fuzzy parameters with fuzzy sets, we enhance fuzzy systems’ ability to cope with uncertainty. The fuzzy normalization model for multi-source data fusion enables us to map multivariate data to a dimensionless distribution space to ensure that the data metrics are aligned.

3. Preliminary

The Fuzzy Inference System (FIS), also known as a fuzzy system, is a software application that utilizes fuzzy set theory and fuzzy inference technology to process fuzzy information. In order to illustrate FIS’s application scenarios, let us take car following as an example. Due to the fact that the driver’s behavior is fuzzy and uncertain during the process of controlling the car, it can be difficult to accurately describe the driver’s behavior. When a car follows another car, it is necessary to maintain a safe distance in order to ensure the safety of drivers. FIS can be used to control the distance between a car and the one it is following. Specifically, based on the driver’s experience, the fuzzy rules of FIS for car following are summarized as follows. When a driver believes that the relative distance is far greater than the safe distance and the relative speed is fast, he or she accelerates appropriately. This will make the difference between the relative and safe distances as small as possible.

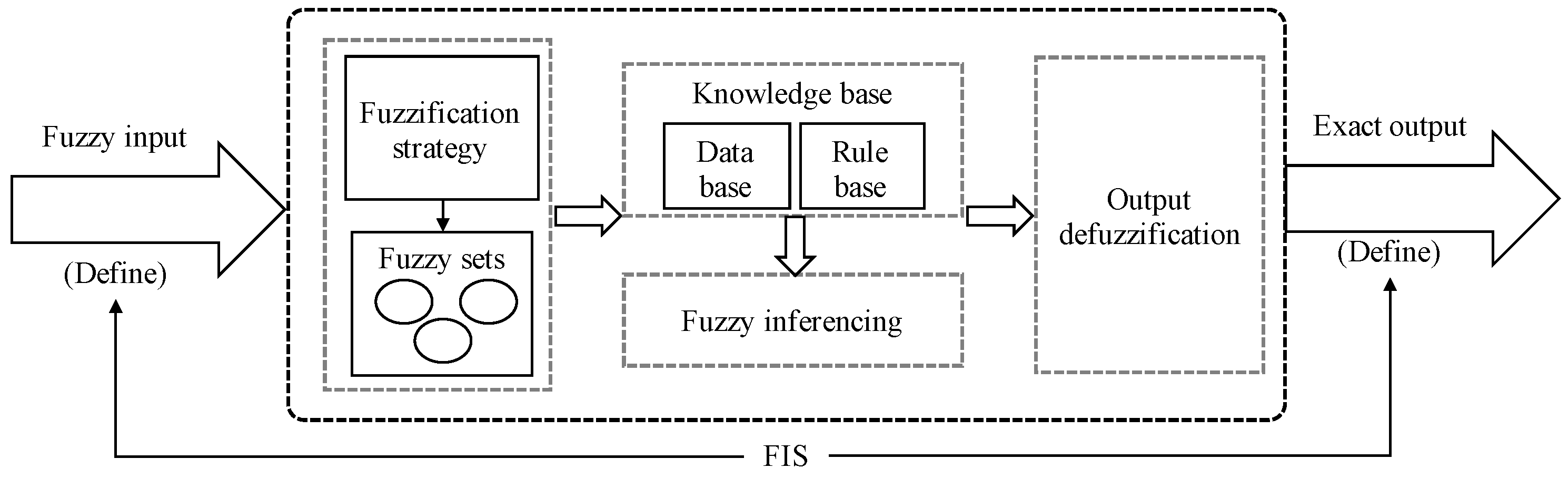

The classical FIS consists of five basic components: the definition of inputs and outputs; the construction of fuzzification strategies; the construction of knowledge bases; the design of fuzzy inference mechanisms; and the defuzzification of output. See

Figure 1 for details.

(1) The definition of inputs and outputs

In an FIS, the inputs and outputs correspond to the observation variables and operation variables, respectively. Definition of inputs and outputs includes determining parameters, variable numbers, data formats, etc. An FIS that has one input variable is called a single variable FIS and an FIS that has more than one input variable is called a multivariable FIS. FIS driven by multi-source fuzzy data encounters challenges in normalizing heterogeneous fuzzy data due to the fact that the traditional method of normalizing data fails in this situation.

(2) The construction of fuzzification strategies

Fuzzification is the process of assigning each input variable to a fuzzy set with a certain degree of membership. An input variable can be either an exact value or fuzzy data with noise [

27]. It is therefore necessary to consider the format of input variables when developing a strategy for fuzzification. In particular, fuzzy data are typically presented in discrete nominal formats or in aggregate interval-value formats, which makes mathematical fitting of membership functions challenging. In this paper, we use interval normalization to convert heterogeneous fuzzy data into continuous and exact values. We also use a math function to derive the membership function for fuzzy data.

(3) The construction of knowledge bases

In a knowledge base, there are two parts: a database and a rule base, respectively [

28]. Among the features in the database are membership functions, scale transformation factors, and fuzzy set variables. The rule base contains some fuzzy control conditions and fuzzy logic relationships.

(4) The design of fuzzy inference mechanisms

Fuzzy inference uses fuzzy control conditions and fuzzy logics to predict the future status of operating variables. This is the core of an FIS. In an FIS, syllogisms [

29,

30,

31] are commonly used to make inferences, which can be expressed as follows:

Truth: IF is , ⋯, and is , THEN y is B.

Condition: is , ⋯, and is .

The inference result: y is .

According to the FIS, truth is represented by fuzzy implication relations, denoted as . The inference result is derived from the combination of fuzzy conditions and fuzzy logics.

(5) The defuzzification of output

In general, the result derived from the FIS is a fuzzy value or a set, which must be deblurred to identify a clear control signal or a decision output. Most commonly used defuzzification methods include the maximum membership [

32], the weighted average [

33], and the center of gravity [

34].

Maximum Membership Given

k FIS submodels, the output of each FIS submodel is

with the membership degree of

. The final output of the FIS model is given by:

where

see [

32].

Weighted Average Given

k FIS submodels, the output of each FIS submodel is

with the membership degree of

. The final output of the FIS model is:

as

k is the number of sub FIS models, see [

33].

Center of Gravity Given

k FIS submodels, the output of each FIS submodel is

with the membership degree of

. The final output of the FIS model is:

as

k is the number of FIS submodels, see [

34].

5. Experiments

5.1. Data Description

To illustrate our method, three datasets are retrieved from the University of California Irvine (UCL) Machine Learning Repository to conduct the effectiveness and efficiency analysis.

Table 1 summarizes the details of the three datasets.

The Wine dataset [

38] consists of three grape varieties with 13 chemical components. Thirteen chemical components are taken as the 13 dimensions of an input parameter (

) and three grape varieties are taken as the three categories of an output parameter (

). GFIS is used for inferencing the category of grape based on the 13 kinds of chemical components. The fuzzy rule is that, if

, then

with the weight

,

. Our proposed membership function is used to calculate the weight of each sub-T–S model output. Finally, we perform the

K-means algorithm to cluster and explain the final inference results.

-Distance [

41] is used as the distance formula. By calculating the clustering precision, we assess the effectiveness of the proposed normalization method and the membership function. The more precise the clustering, the more effective the proposed methods. The User dataset [

39] consists of four user varieties with five kinds of study data. Five kinds of study data are taken as the five dimensions of an input parameter and four user varieties are taken as the four categories of an output parameter. The Climate dataset [

40] consists of two kinds of climate varieties with 18 kinds of climate data. Eighteen climate data are taken as the 18 dimensions of an input parameter and two kinds of climate varieties are taken as the two categories of an output parameter. To fully verify the effectiveness of the proposed methods, the same fuzzy inference program is conducted on the two datasets, respectively. Due to the lack of a public database containing multi-source fuzzy data, we refer to [

42,

43] to preprocess the original datasets in order to obtain target datasets:

Step 1: Let

denote an original dataset. Construct an interval-valued dataset, denoted as:

where

is the standard deviation of the i-th attribute in the same class and and is a noise condition factor. In the benchmark analysis, we set

Step 2: Let the number of information sources be m. Construct a multi-source interval-valued dataset by copying each piece of data m times.

Step 3: Get a random number

r from a normal distribution

. If

,

otherwise

Take a test dataset with two attributes and two categories as an example.

The original test data are: category 1

and category 2

. The mean and standard deviation of the first attribute of Category 1 are

and

The mean and standard deviation of the first attribute of Category 2 are

and

Similarly, the standard deviations of the two attributes of Category 2 are and .

Let . The interval value data set is: Category 1 and Category 2 .

In the end, we can construct a multi-source dataset by following steps 2 and 3.

5.2. Experimental Settings

We performed all experiments using Pycharm on MacOS 12.1 with an Intel Core i7 2.6GHz processor and 16 GB RAM. Three different experiments were conducted to illustrate the impact of multi-source fuzzy data on fuzzy inference accuracy. Three kinds of experiments are described briefly below:

- (1)

Experiment 1 is conducted on the original dataset. The original dataset refers to the dataset which has not been processed to remove noise (fuzzy processing).

- (2)

Experiment 2 is conducted on the normalized dataset. The normalized dataset is obtained by fuzzifying (noise addition) and normalizing the original dataset.

- (3)

Experiment 3 is conducted on the fusion dataset. Fusion data are obtained by summing normalized data from different information sources, denoted as:

where

is the

i-th fusion data and

is the

i-th normalized data from the

j-th information source.

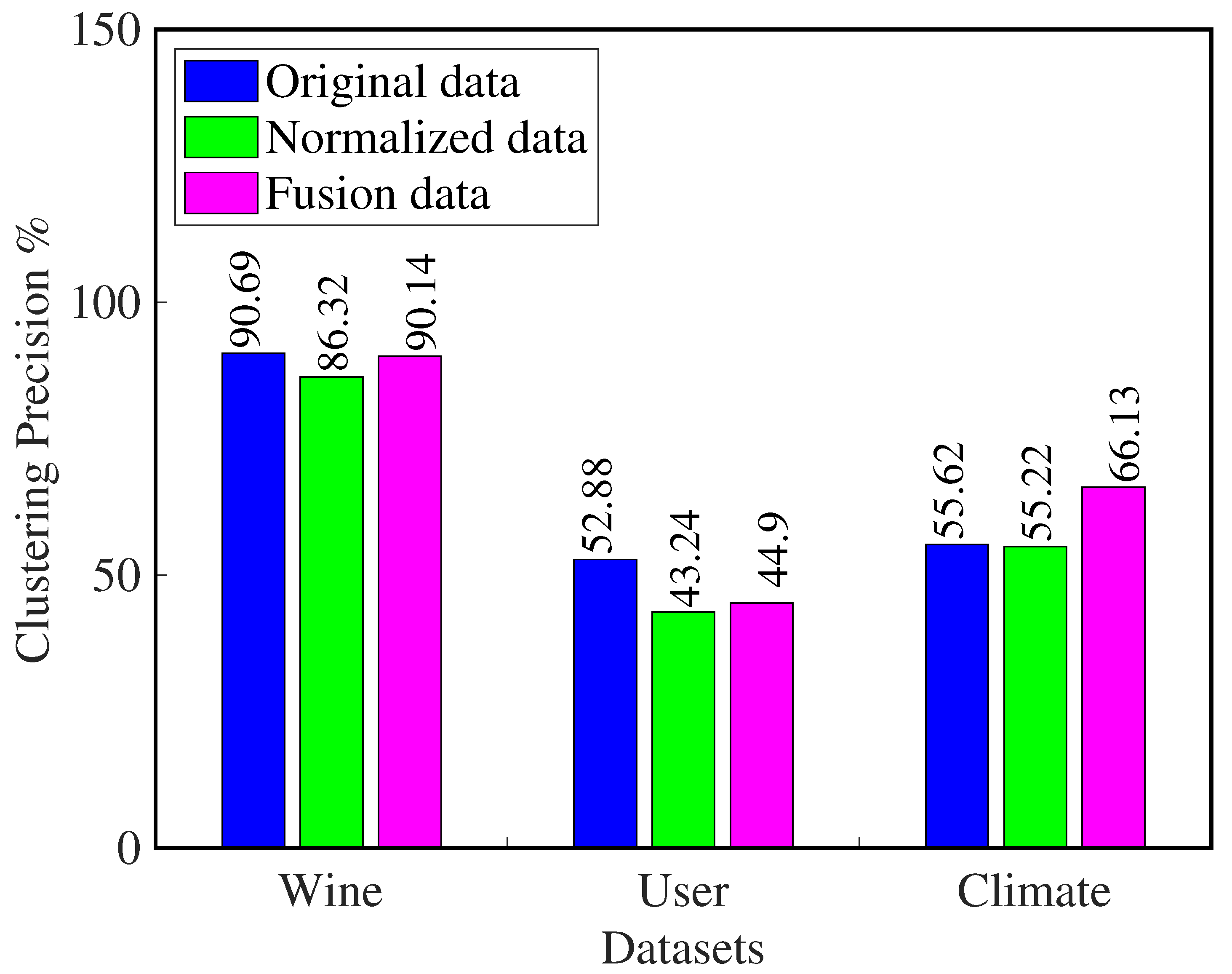

5.3. Performance Measurement

In this subsection, we evaluate the effectiveness and efficiency of the proposed methodology. In our study, the dataset goes through four stages of state: original data, interval-valued data, normalized data, and fusion data. Since the input parameters of GFIS must be exact numerical values, we only perform GFIS inference on the original, normalized, and fusion datasets. For the purpose of defuzzying and interpreting the inference results, the K-means algorithm is used to cluster the inference results, with each cluster indicating a decision category. Clustering results are compared with the actual decision categories to obtain the clustering precision, which reflects the effectiveness of the proposed normalization method and the membership function. The proposed method becomes more effective as clustering precision increases.

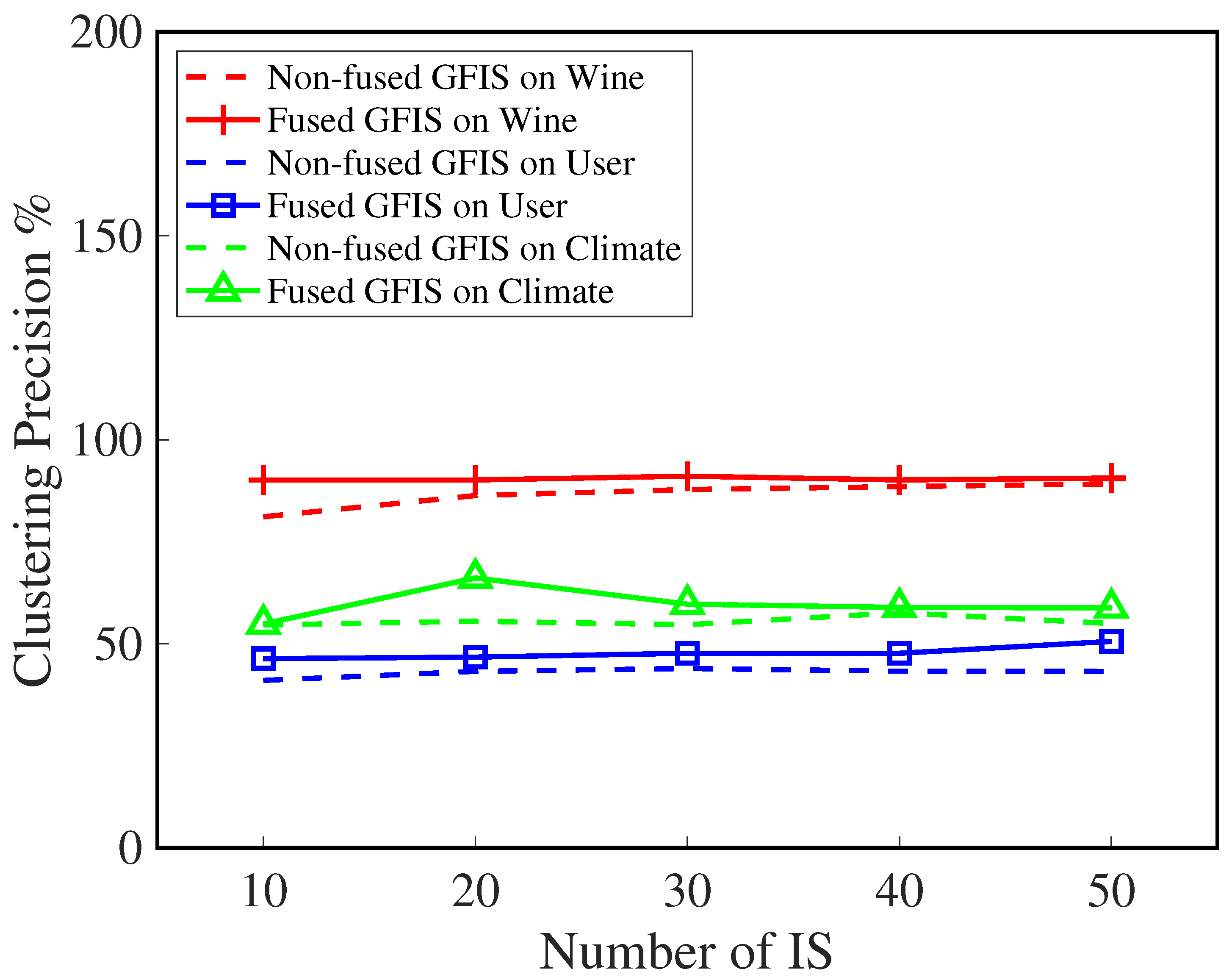

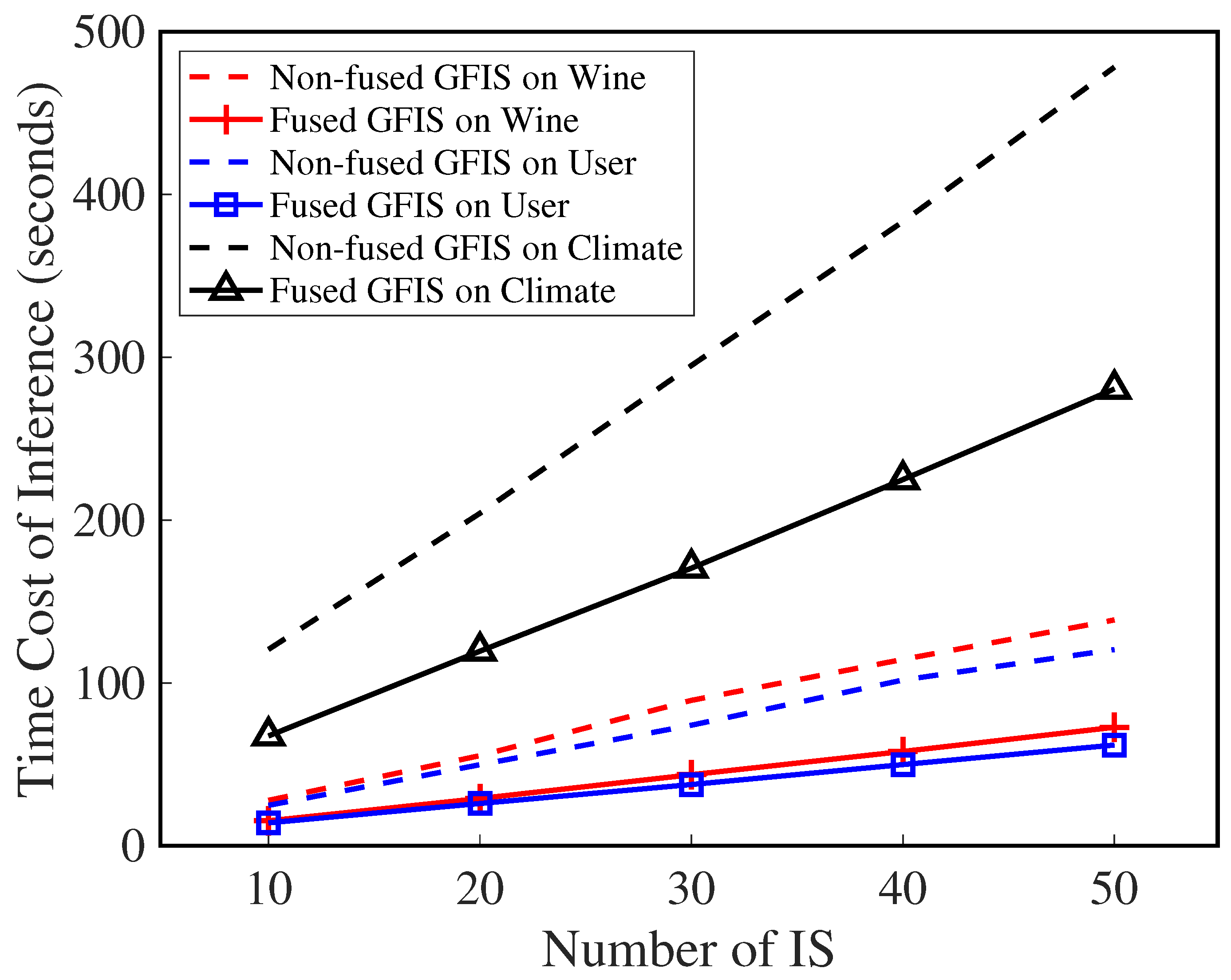

In a multi-source environment, GFIS inference on normalized data are performed independently for each information source, and the weighted average is used to indicate the final GFIS inference. However, the difference is that the GFIS inference of fusion data is to fuse the data of all information sources first and then perform GFIS inference on the fusion data. To facilitate memory, the GFIS inference experiment conducted on normalized data is called Non-fused GFIS, while the GFIS inference experiment on fusion data is called Fused GFIS. In Non-fused GFIS, the clustering precision of inference results is denoted as:

where IS stands for information sources. In Fused GFIS, the clustering precision of inference results is expressed as:

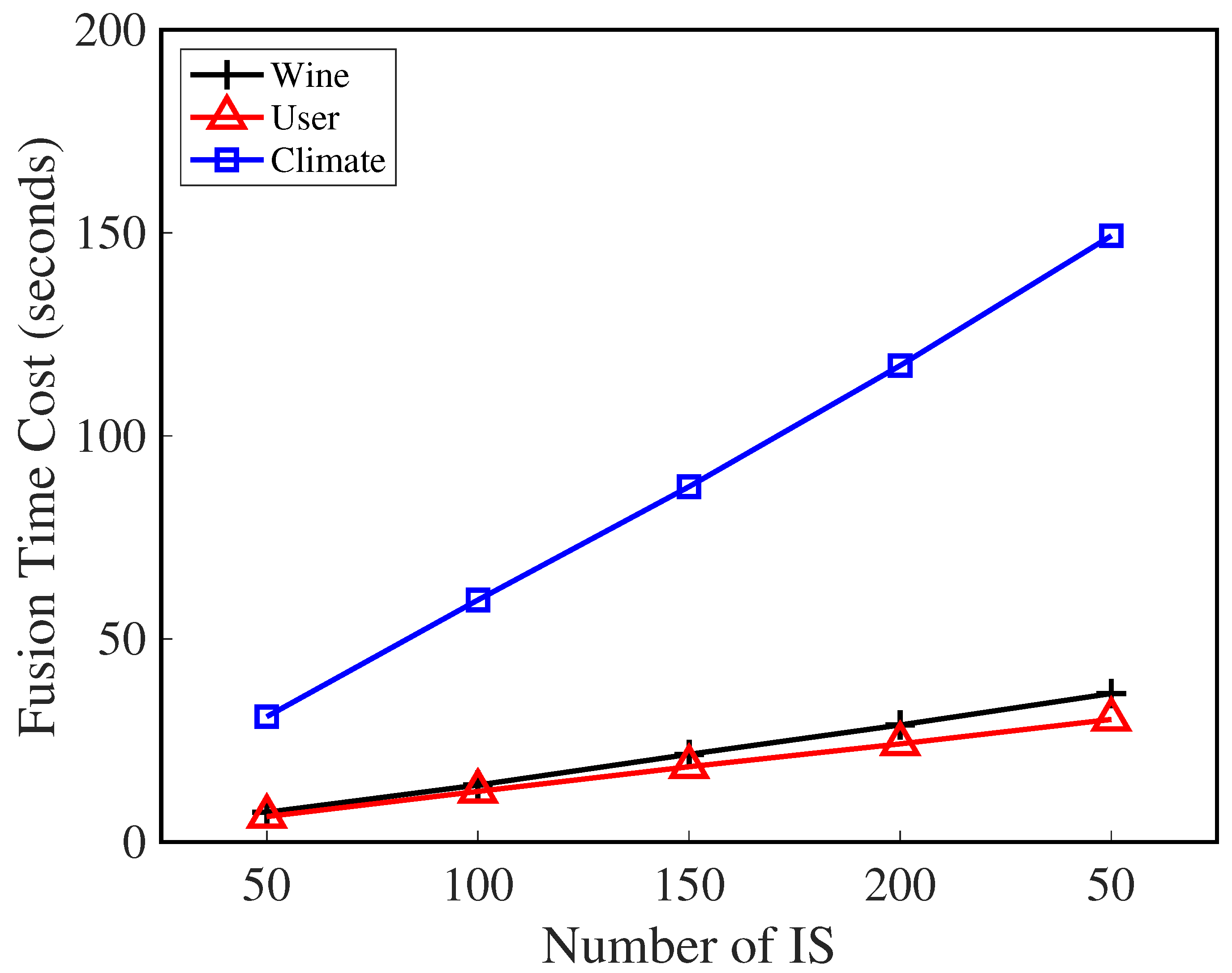

We have used K-means clustering to quantify the precision of inference results for different GFIS models. We are now ready to test the operating efficiency of the normalization method, the fusion method, and two types of GFIS models. Additionally to accuracy, operating efficiency is an equally crucial metric for evaluating models or methods, as it tells us how much it costs to operate them. A high level of operating efficiency indicates a high level of availability and feasibility.

Our fusion method is based on the addition law of normal distribution; see Equation (

18). Through the transformation of data distribution space, we guarantee that the normalized data from each information source satisfy the standard normal distribution

and the fusion data from

m independent information sources meet the normal distribution

. We need to calculate the sum of each object with respect to the sources of information as well as the variance of the fusion data according to the normal distribution formula. Thus, the proposed fusion method has a total time cost equal to the sum of the two calculation steps.

7. Conclusions

In this article, we develop a new Gaussian-shaped fuzzy inference system that is suitable for multi-source fuzzy environments. To achieve this goal, our first step is to normalize multi-source fuzzy data to remove the heterogeneity of multi-source data. In order to obtain the fused data of an object, we sum the normalized descriptions of it from different information sources. We then propose an adaptive membership function for the fusion data, which provides the basis for granulating the input for GFIS and designing its inference rules. We also propose a novel fuzzy inference framework by integrating the normalization method and adaptive membership function with the T–S model.

We conducted extensive experiments on three benchmark datasets to evaluate the effectiveness of the proposed methods. Three main conclusions can be drawn from the experimental results. First, the normalization of interval-value data can slightly reduce the clustering accuracy of the original data since it scales the distances and adds some noise. Second, the Fused GFIS model’s inference precision is significantly higher than that of the Non-fused GFIS model. This is due to the fact that data fusion can remove some of the errors from different sources of information. Third, with an adaptive membership function, the proposed GFIS can handle fuzzy inferences of incremental data more efficiently.

There are some limitations to the proposed GFIS, which helps us identify future research directions. First, all data variables should be independent. Second, all data variables have to follow the law of large numbers and the central limit theorem. Third, when applying GFIS, it is necessary to select appropriate fuzzy rules and inference logic.