Abstract

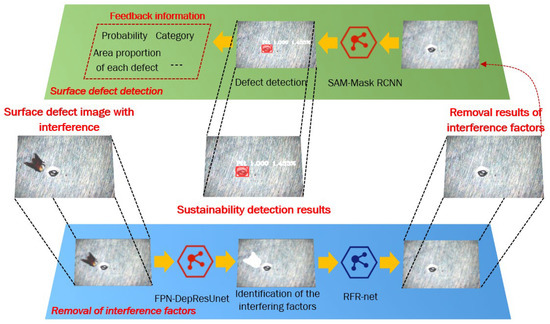

When surface defects are detected in an industrial cutting environment, the defects are easily contaminated and covered by interference factors (such as chips and coolant residue) on the machined surface. These interference factors hinder the sustainable detection of surface defects, and detecting surface defects in their presence remains a difficult problem in the detection field. To solve this problem, a sustainable detection method for surface defects is proposed. The method is divided into two steps: the identification and removal of interference factors, and the detection of surface defects. First, a new FPN-DepResUnet model is constructed by modifying the Unet model in three respects and is used to identify the interference factors in the image. Compared with the Unet model, the mean pixel accuracy (MPA) of the FPN-DepResUnet model increases by 5.77%, reaching 94.82%. The interference factors are then removed using the RFR-net model, which performs point-to-point repair of the interference regions by finding high-quality pixels similar to the interference region in the rest of the image. Combining the FPN-DepResUnet model with the RFR-net model removes the negative effects of the interference factors. On this basis, the SAM-Mask RCNN model is proposed for efficient defect detection on the clean surface images. Compared with the Mask RCNN model, the MAP of the proposed SAM-Mask RCNN model increases by 2.00%, reaching 94.62%. Furthermore, the inspection results provide a variety of surface defect information, including the defect types, the number of pixels in each defect region, and the proportion of the entire image occupied by each defect region. This enables predictive maintenance and control of the machined surface quality during machining.

1. Introduction

Zero Defect Manufacturing (ZDM) has been described as one of today’s most promising strategies. Its goal is to reduce and mitigate defects in manufacturing processes or, in other words, to eliminate defective parts during production [1,2]. Surface defect detection supports the realization of this strategy. It is widely used in industrial quality inspection and is a key means of controlling product quality [3,4,5]. By detecting surface defects during production, the damage caused by an uncontrolled machining process can be remedied in advance, preventing the production of defective parts [6].

Surface defect detection mainly consists of locating defects and identifying their types. Many studies on surface defect detection exist, but most do not consider the impact of the complex environment in the actual manufacturing process. For example, a production cutting environment contains many disturbances, such as coolant residue and debris. Under the influence of such interference factors, existing methods detect defects in surface images with low accuracy. Therefore, the main contribution of this paper is a sustainable surface defect detection method. It restores the detection process that is otherwise interrupted by interference factors, which gives it practical value for industrial applications.

The method is divided into two steps: the identification and removal of interference factors, and the detection of surface defects. First, the constructed FPN-DepResUnet model and the RFR-net model are used to identify and remove the interference factors in the surface images, respectively. Then, the surface defects are detected using the proposed SAM-Mask RCNN model. The FPN-DepResUnet model and the SAM-Mask RCNN model proposed in this paper have novel structures. All models are validated experimentally, and the results demonstrate the effectiveness of the proposed method. The structure of the research content of this paper is shown in Figure 1.

Figure 1.

Structure of the research content of this paper.

This paper is organized as follows. In Section 2, some advanced defect detection methods for cutting surfaces are briefly reviewed. In Section 3, an identification method for interference factors based on the FPN-DepResUnet model is proposed. In Section 4, the RFR-net model is used to remove the interference factors in the surface images. In Section 5, a surface defect detection method based on the SAM-Mask RCNN model is proposed. Finally, the conclusions are provided in Section 6.

2. Related Works

With continued research on artificial intelligence, existing work on surface defect detection focuses mainly on deep learning. The deep learning method was proposed by Hinton et al. [7] and was first applied successfully to the classical image classification task. In the existing defect detection literature, there are three types of detection methods: classification, target detection, and segmentation [8,9].

For defect detection based on deep learning classification networks, Tabernik et al. [10] designed a segmentation network and used it to classify defect images. The designed network consists of two parts, a segmentation network and a decision network, which are trained separately. The network achieves 99.9% classification accuracy with a training set of only 399 samples (50 of which are defective). Zhang et al. [11] proposed a cost-sensitive residual convolutional neural network (CS-ResNet). This model addresses the problems caused by unbalanced datasets and by the misclassification of real and fake defects. It improves the ResNet network in two ways: first, it gives greater weight to the small number of true defects to account for class imbalance; second, it optimizes CS-ResNet by minimizing a weighted cross-entropy loss function. Ma et al. [12] proposed an improved DenseNet network to identify the surface defects of polymerized lithium-ion batteries, with a classification accuracy of more than 99%.

For defect detection based on deep learning target detection networks, the difference from classification networks is that it is necessary to determine not only whether the image contains defects and which categories they belong to, but also the location and size of each defect (its bounding rectangle). Network structures of this type mainly include Faster R-CNN [13], YOLO (You Only Look Once) [14], and SSD (Single Shot Multibox Detector) [15]. Zhao et al. [16] combined the SE network with the SSD network and proposed a new SE-SSD model. This network addresses the problem that large and small defects cannot both be detected well at the same time in fabric defect detection. The model is based on the SSD network, with the SE module added after its convolution operations. The SE module increases the weight that the model assigns to feature channels containing defect information, which increases the accuracy of fabric defect detection. Zheng et al. [17] focused on the problem that wafer surface defects are easily confused with the background and are difficult to detect. They proposed a wafer surface defect detection method based on background subtraction and Faster R-CNN, in which the interference of the background is eliminated by an image differencing operation. Experimental results show that the method improves the mAP by 5.2% compared with the original Faster R-CNN. Yin et al. [18] used the YOLO V3 network to detect damage defects in sewage pipelines and obtained an mAP of 85.37%.

For defect detection based on deep learning pixel segmentation networks, the method not only realizes the functions of the two approaches above but also obtains the accurate shape and position of the defects through the segmentation network, which allows a reliable judgment of the surface quality. Uzen et al. [19] proposed a method based on the Depth-wise Squeeze and Excitation Block-based Efficient-Unet (DSEB-EUNet) for automatic surface defect detection. The proposed model consists of a Unet network with a depth-wise squeeze and excitation block added to its skip connections. Feng et al. [20] used an improved Encoder-Decoder network to detect surface defects of hydropower dams. This network combines the features of the Encoder–Decoder (Unet) through pixel-by-pixel addition of feature maps, which improves the accuracy of defect segmentation. Han et al. [21] used a Faster R-CNN network to detect images that may contain defects; the defects in these images are then segmented using the Unet network.

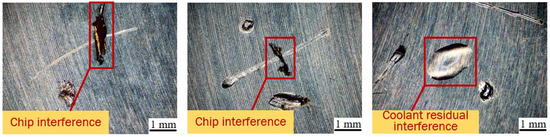

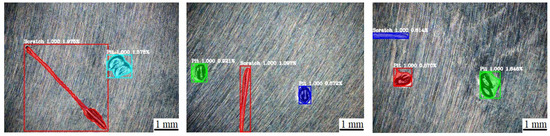

The above detection methods achieve good results, but they ignore the adverse effects of interference factors in the actual cutting environment. In an actual industrial cutting environment, mechanical parts are easily stained, and cut surfaces are easily contaminated by interference factors (coolant residue or chips). These interference factors can cover the defect location and hinder the sustainable detection of the surface. Surface defect images covered by interference factors are shown in Figure 2.

Figure 2.

Surface defect images covered by interference factors (coolant residue or chips).

A review of the literature shows that there are few studies on the sustainable detection of surface defects. Gyimah et al. [22] proposed a Robustly Completed Local Binary Pattern (RCLBP) framework for surface defect detection. The framework establishes a denoising technique based on a Non-Local (NL) means filter with wavelet thresholding, which filters the noise in the image while preserving texture and edges. The experimental results show that the framework is noise-resistant and can be applied to surface defect detection under changing light. However, its accuracy and robustness can still be improved. Yang et al. [23] proposed an anti-interference roughness detection method based on deep learning. This method combines a CNN model, the CBAM Res Net semantic segmentation model, and the PConv Net image inpainting model. The experimental results show that its surface roughness detection accuracy is 90.0% under the influence of interference factors. However, the method depends on a regular texture structure, and its detection accuracy is poor. Deng et al. [24] used a YOLO V2 network to detect crack defects on concrete surfaces. Coating interference was added to the acquired images to train the network to detect real defects against complex backgrounds. The mAP of this method is 77%. Sun et al. [25] proposed a surface defect detection system for printed fabric based on an accelerated robust feature algorithm. The system detects white silk, spot, and wrinkle defects on fabric products. A two-way unique matching method is used to reduce the interference of mismatched points and to locate defects precisely. The experimental results show that the system detects surface defects of printed fabrics with 98% accuracy.

The above defect detection methods still need improvement in two respects. First, most studies consider only texture interference or noise interference in the image, which does not meet the needs of practical industrial application scenarios. Second, the use of image processing techniques can easily distort the processed surface image and adapts poorly to new conditions. Therefore, to promote the realization of the ZDM strategy, research on sustainable defect detection suited to actual industrial production is necessary. The main idea of this paper is first to identify and remove the interference factors on the surface, and then to detect the surface defects. The Unet model is selected to identify the interference factors because it has a simple structure, fast running speed, and high detection accuracy. Borrowing the idea of “portrait repair”, this paper selects the RFR-net model to remove the interference factors because it is among the existing models with better repair quality. Finally, the Mask RCNN model is selected to detect surface defects because it is simpler and more flexible than traditional methods and has higher segmentation accuracy.

In this paper, the authors conduct an in-depth study based on the Unet model and the Mask RCNN model. First, the shortcomings of both models in practical industrial applications are analyzed. On this basis, corresponding solutions are proposed to optimize and improve the structures of the two models. Finally, the two newly constructed models are tested on the dataset. The test results show that both have higher detection accuracy and better practical value, which also demonstrates the novelty and effectiveness of the proposed sustainable detection method.

3. Identification of the Interfering Factors in the Surface

The primary objective of sustainable surface defect detection is to eliminate interference factors without affecting the feature information of the rest of the image. To accomplish this, the first step is to identify the location of the interference factors in the image. In this section, the Unet model is selected for this purpose because it offers high accuracy, a simple structure, and the ability to retain detailed information while segmenting images.

The encoder of the Unet model [26] uses traditional convolution layers, which may lead to issues such as a large number of convolution operations, heavy computation, and a single activation function. A large number of parameters also easily leads to overfitting on tasks with small sample sizes. To improve the detection accuracy of the Unet model, this section modifies it in three ways. First, the traditional convolutions in the backbone feature extraction network are replaced by depth-separable convolutions to reduce the number of network parameters. Second, the Feature Pyramid Network (FPN) [27] is introduced to optimize the skip connections of the model and improve its segmentation accuracy. Third, the residual structure [9] is introduced to avoid vanishing or exploding gradients as the model becomes deeper.

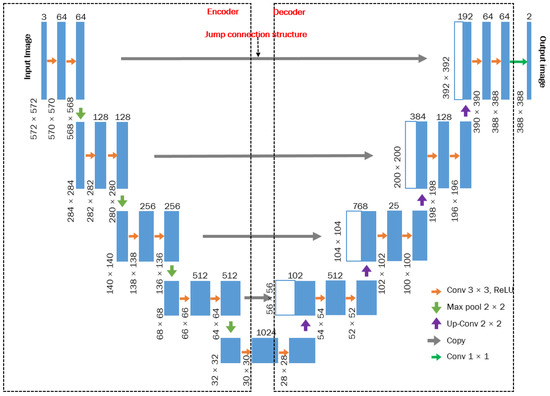

3.1. Unet Model

The Unet model comprises two symmetric components, the encoder and the decoder, which complement each other, as depicted in Figure 3. The encoder, which consists of convolution and max-pooling layers, extracts the feature information of the target image. The decoder integrates the effective feature layers extracted by the encoder by up-sampling and stacking them through feature fusion. In addition, Unet uses skip connections to pass the feature maps extracted by the encoder to the decoder at the corresponding scale, which strengthens the feature extraction ability of the model and yields high segmentation accuracy. Finally, based on the feature recognition results, a category label is assigned to each pixel in the image.

Figure 3.

The Structure of the Unet model.

3.2. FPN-DepResUnet Model

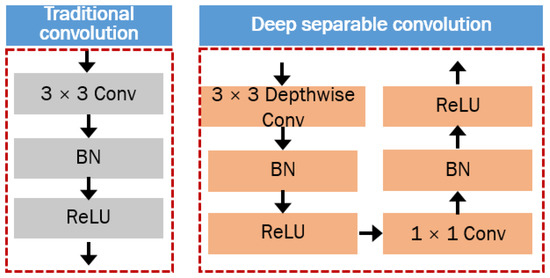

3.2.1. Depth-Separable Convolution

In traditional convolution, each convolution kernel spans all channels of the input and sums their responses into a single output channel. Therefore, for inputs with many channels, it is difficult for traditional convolution to extract detailed features from each individual channel. In depth-separable convolution, each channel of the input is convolved with its own kernel. Depth-separable convolution effectively reduces the number of parameters and plays a key role in streamlining the model. The network structures of traditional convolution and depth-separable convolution are shown in Figure 4. Depth-separable convolution consists of a depth-wise convolution block with a 3 × 3 kernel followed by an ordinary 1 × 1 convolution block, which adjusts the number of channels. Batch Normalization (BN) keeps the distribution of feature values in each network layer as stable as possible through normalization. ReLU is the most widely used activation function; it introduces nonlinearity between the feature layers. The combination of BN + ReLU suppresses gradient explosion during training and speeds up training.

Figure 4.

The network structure of traditional convolution and depth-separable convolution.
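The parameter saving described above can be checked with simple arithmetic. The following is a minimal sketch, assuming bias-free layers and hypothetical channel widths (64 input and 128 output channels are illustrative values, not taken from this paper); it compares one standard 3 × 3 convolution with a 3 × 3 depth-wise convolution followed by a 1 × 1 convolution.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    # Every output channel has one k x k kernel per input channel.
    return k * k * c_in * c_out


def depth_separable_params(c_in, c_out, k=3):
    """Weights in a k x k depth-wise convolution plus a 1 x 1 convolution."""
    depthwise = k * k * c_in   # one k x k kernel per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that adjusts the channel count
    return depthwise + pointwise


# Hypothetical channel widths, chosen only for illustration.
c_in, c_out = 64, 128
std = standard_conv_params(c_in, c_out)
sep = depth_separable_params(c_in, c_out)
print(f"standard: {std} weights, depth-separable: {sep} weights")
print(f"ratio: {sep / std:.3f}")
```

For a single layer the ratio is 1/c_out + 1/k². The reduction for a complete model depends on which layers are replaced and on the other components of the network, so it will generally differ from this per-layer figure.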

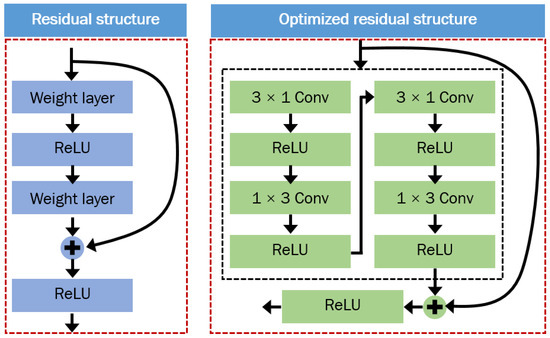

3.2.2. Residual Structure

As the network depth increases, model training accumulates more and more error, which reduces performance. Therefore, this section adds a residual structure to compensate for this decline. The residual structure not only mitigates performance degradation but also reduces the likelihood of gradient problems and improves the ability of the model to segment interference factors in the image. Figure 5 illustrates the residual structure, which consists of shortcut connections and identity mappings. In practical industrial applications, a single layer of residual blocks may not suffice. Therefore, to improve the performance of the model in segmenting interference factors, the residual structure is optimized: the two 3 × 3 convolution layers are replaced with a sequence of 3 × 1, 1 × 3, 3 × 1, and 1 × 3 convolutions.

Figure 5.

Residual Structure and Optimized Residual Structure.
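One way to see the effect of replacing two 3 × 3 convolutions with the sequence 3 × 1, 1 × 3, 3 × 1, 1 × 3 is to compare weight counts and receptive fields. The sketch below assumes stride 1, no dilation, no bias, and an equal, hypothetical number of channels (64) in every layer; it is an illustration and not the configuration used in this paper.

```python
def stack_params(kernels, channels):
    """Total weights of a stack of convolutions with equal in/out channels."""
    return sum(kh * kw * channels * channels for kh, kw in kernels)


def stack_receptive_field(kernels):
    """Receptive field (height, width) of stacked stride-1 convolutions."""
    height = 1 + sum(kh - 1 for kh, _ in kernels)
    width = 1 + sum(kw - 1 for _, kw in kernels)
    return height, width


original = [(3, 3), (3, 3)]
factorized = [(3, 1), (1, 3), (3, 1), (1, 3)]
channels = 64  # hypothetical width, for illustration only

for name, kernels in (("two 3x3", original), ("3x1/1x3 sequence", factorized)):
    print(name, stack_params(kernels, channels), stack_receptive_field(kernels))
```

Under these assumptions both stacks cover the same 5 × 5 receptive field, while the factorized stack uses 12·C² weights instead of 18·C² and contains four layers instead of two.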

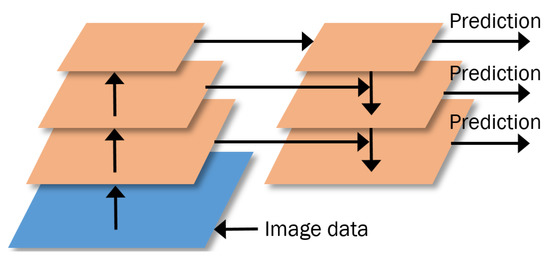

3.2.3. Feature Pyramid Network (FPN)

In deep learning networks, shallow feature maps obtained after fewer convolutions carry weak semantic information but strong contour and texture detail. Deep feature maps obtained after more convolutions carry strong semantic information but weak contour and texture detail. Although the skip connections in the Unet model merge the two kinds of feature maps by channel concatenation (Concat), the two connected feature maps differ greatly in semantic information. This difference results in low segmentation accuracy on some datasets. To address this problem, this section uses FPN to optimize the skip connections.

FPN fuses feature maps from different scales of the encoding structure. In the orientation of Figure 6, the encoding structure applies convolution and down-sampling from top to bottom. The feature fusion part then combines information from bottom to top, that is, from the deep layers to the shallow layers. The structure of FPN is shown in Figure 6.

Figure 6.

The structure of FPN (Top-down level convolution and down-sampling).

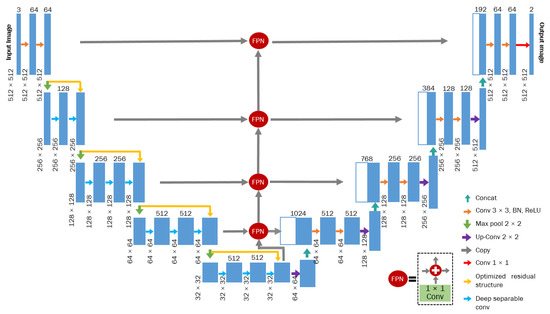

The FPN-DepResUnet model proposed in this section combines the lightweight nature of depth-separable convolution, the training stability of the residual structure, and the multi-layer feature fusion of FPN. It thereby addresses problems such as the incomplete extraction of interference-factor features from images and the tendency of the model to overfit during training. To simplify model construction and improve generality, the Unet model constructed in this section differs somewhat from the traditional Unet model: the decoder performs 2× up-sampling and then feature fusion. This ensures that the final feature layer has the same height and width as the input image. The structure of the FPN-DepResUnet model is shown in Figure 7.

Figure 7.

The structure of the FPN-DepResUnet model (FPN, depth-separable convolution, and residual structures are introduced).

3.3. Model Training and Discussion of Results

3.3.1. Model Training

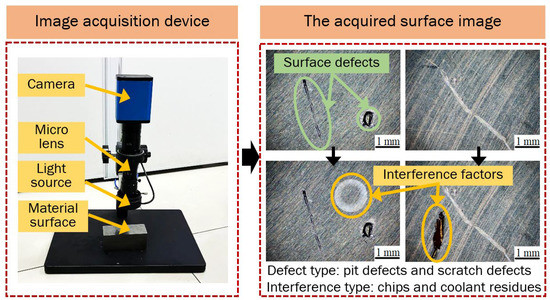

This paper uses a large number of cutting surfaces, obtained experimentally, as the basis for the study. During the cutting experiments, surface defects are often covered by interference factors from the environment. First, a large number of superalloy parts are produced in milling experiments. The workpiece material is GH4169, the cutting tool is a ceramic tool, and the cutting mode is high-speed dry milling, a clean cutting mode. The machine tool is a CNC machining center from DMG (Germany). Then, images of the cut surfaces are acquired with a purpose-built image acquisition device composed of an industrial camera, a micro lens, and light sources. As shown in Figure 8, the parts are placed on the workbench and the surface images of different parts are collected. Each surface location is imaged twice: once without environmental interference and once covered with environmental interference factors. This paper assumes that the interference factors are the chips and coolant residue commonly present in the cutting environment, and that the defect types are pits and scratches. The interference factors cover less than 40% of each surface image. Two datasets are thus obtained: surface defect images with interference and surface defect images without interference. The images in these datasets serve as training and testing data for the deep learning models. The network models are trained and tested in the PyCharm development environment. Each image is 640 × 480 pixels and covers an actual field of view of 6.425 mm × 4.819 mm. The acquired surface images are shown in Figure 8.

Figure 8.

The image acquisition device and the acquired two types of surface images (no-interference surface images and surface images with interference factors).
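The stated image size (640 × 480 pixels) and field of view (6.425 mm × 4.819 mm) imply a physical scale of roughly 10 µm per pixel in both directions. The short sketch below works this out and converts a pixel count to a physical area; the function names are hypothetical helpers introduced only for this illustration.

```python
IMAGE_WIDTH_PX, IMAGE_HEIGHT_PX = 640, 480
FOV_WIDTH_MM, FOV_HEIGHT_MM = 6.425, 4.819


def pixel_scale_um(fov_mm, pixels):
    """Physical length covered by one pixel, in micrometres."""
    return fov_mm / pixels * 1000.0


def pixels_to_area_mm2(pixel_count):
    """Physical area, in square millimetres, covered by a number of pixels."""
    pixel_width_mm = FOV_WIDTH_MM / IMAGE_WIDTH_PX
    pixel_height_mm = FOV_HEIGHT_MM / IMAGE_HEIGHT_PX
    return pixel_count * pixel_width_mm * pixel_height_mm


print(f"horizontal scale: {pixel_scale_um(FOV_WIDTH_MM, IMAGE_WIDTH_PX):.3f} um/pixel")
print(f"vertical scale:   {pixel_scale_um(FOV_HEIGHT_MM, IMAGE_HEIGHT_PX):.3f} um/pixel")
# Area of a hypothetical 1500-pixel region, for illustration.
print(f"1500 pixels cover about {pixels_to_area_mm2(1500):.4f} mm^2")
```

With this scale, a pixel count obtained from a segmentation result can be reported as a physical area on the machined surface.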

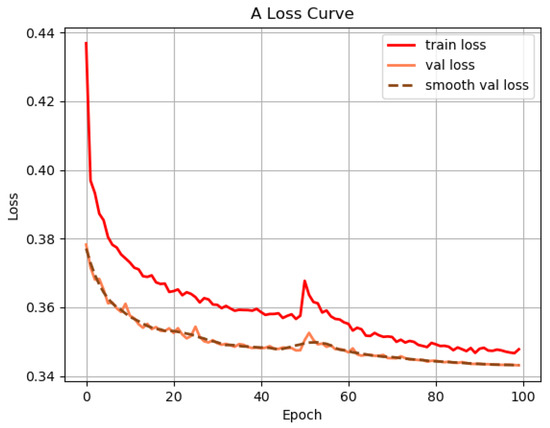

Segmentation is treated as a binary problem, in which the interference factors are the foreground and everything else is the background. To reduce the risk of overfitting, data augmentation is applied to the original images, including horizontal flipping, vertical flipping, and Gaussian noise. A total of 1000 images are randomly selected from the two datasets and augmented to 5000 images, of which 4500 form the training set and the remaining 500 form the test set. Before training, the labelme tool is used to label the interference regions in the defect images. Adam is selected as the optimizer, with an initial learning rate of 4 × 10⁻⁴. The loss curves of the FPN-DepResUnet model are shown in Figure 9.

Figure 9.

Loss curve of FPN-DepResUnet model.
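The augmentation operations named above (horizontal flipping, vertical flipping, and Gaussian noise) and the 4500/500 split can be sketched as follows. This is a minimal illustration on grayscale images stored as nested lists; the noise level `sigma`, the random seed, and the function names are assumptions, and the exact combination of operations used to reach 5000 images is not specified in the text.

```python
import random


def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vflip(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def add_gaussian_noise(image, sigma=5.0, rng=None):
    """Add zero-mean Gaussian noise and clip to the 8-bit range [0, 255]."""
    rng = rng or random.Random()
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in image]


def split_dataset(items, n_train, seed=0):
    """Shuffle the items and split them into training and test subsets."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


image = [[10, 20, 30], [40, 50, 60]]
print(hflip(image))
print(vflip(image))
print(add_gaussian_noise(image, sigma=5.0, rng=random.Random(42)))

train_ids, test_ids = split_dataset(range(5000), n_train=4500)
print(len(train_ids), len(test_ids))
```

For a segmentation task, a flip applied to an image must also be applied to its label mask so that the two stay aligned, whereas noise is added to the image only.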

3.3.2. Results and Discussion

This section uses three indicators to evaluate the models: Mean Intersection over Union (MIoU) and Mean Pixel Accuracy (MPA), two indicators commonly used to evaluate segmentation performance, and the number of parameters (Params). Ablation experiments are also performed to illustrate the efficacy of the proposed FPN-DepResUnet model. The models in the ablation experiments are Unet, Dep-Unet, DepRes-Unet, and FPN-DepResUnet. Dep-Unet replaces the traditional convolution layers of Unet with depth-separable convolution; DepRes-Unet adds the residual structure to Dep-Unet; and FPN-DepResUnet adds the FPN structure to DepRes-Unet. The experimental results are shown in Table 1.

Table 1.

Analysis of ablation test results.
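For reference, the Mean Intersection over Union and Mean Pixel Accuracy indicators can be computed from a confusion matrix of per-pixel labels. The sketch below uses their standard definitions on a small made-up example (class 0 as background, class 1 as interference factor); the data are illustrative and are not results from this paper.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def mean_iou(cm):
    """Mean over classes of TP / (TP + FP + FN)."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        if tp + fp + fn > 0:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious)


def mean_pixel_accuracy(cm):
    """Mean over classes of correctly classified pixels / pixels of that class."""
    accs = [cm[c][c] / sum(cm[c]) for c in range(len(cm)) if sum(cm[c]) > 0]
    return sum(accs) / len(accs)


# Flattened per-pixel labels of a tiny made-up example.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=2)
print(cm, mean_iou(cm), mean_pixel_accuracy(cm))
```

In this example each class has three correctly labeled pixels, one false positive, and one false negative, so the per-class IoU is 3/5 and the per-class pixel accuracy is 3/4.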

Compared with the Unet model, the MIoU and MPA of the Dep-Unet model increase by 2.03% and 1.80%, respectively, and Params decreases by 45.48%, which verifies that depth-separable convolution reduces the number of parameters. The MIoU and MPA of the DepRes-Unet model increase by 3.66% and 3.15%, respectively, and Params decreases by 33.11%, which verifies that the residual structure improves the feature extraction ability of the backbone network. The MIoU and MPA of the FPN-DepResUnet model increase by 5.86% and 5.77%, respectively, and Params decreases by 29.90%, which shows that FPN improves the segmentation performance of the model. This analysis shows that the three improvements proposed in this section all improve the detection performance of the model. In testing, the model takes 0.85 s to process a single surface image.

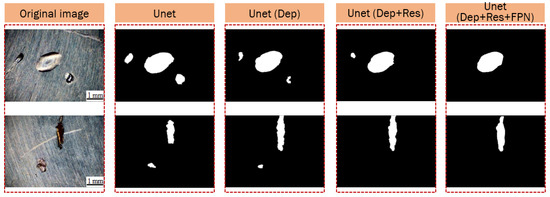

The identification results of the four models for interference factors are shown in Figure 10. The Unet model has lower identification accuracy than Dep-Unet, DepRes-Unet, and FPN-DepResUnet: it produces identification errors, and the edges of the interference factors are identified incompletely. The identification accuracy of FPN-DepResUnet is considerably higher than that of Unet, Dep-Unet, and DepRes-Unet. The results also show that each of the three optimizations of the Unet model improves segmentation, and that together they effectively avoid the influence of image noise on the recognition of interference factors. These experiments demonstrate the effectiveness of the FPN-DepResUnet model proposed in this paper.

Figure 10.

The results of four models (Unet, Dep-Unet, DepRes-Unet, and FPN-DepResUnet) for the recognition of interference factors in images.

4. Elimination of Interference Factors

With the continuing expansion of deep learning applications, some scholars have used deep learning to repair old photographs and damaged portraits [28,29,30]. Borrowing the idea of portrait restoration, this paper applies it to eliminate interference regions in the image. Pixel point-to-point repair is performed by finding high-quality feature points elsewhere in the image that are similar to the interference regions. This avoids image distortion and forms an efficient serial mechanism of repair followed by detection.

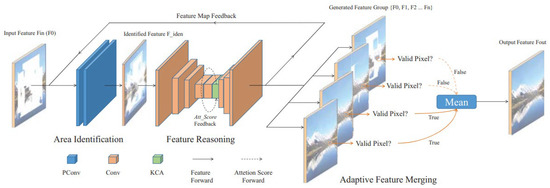

The Recurrent Feature Reasoning network (RFR-net) [30] is an image restoration network proposed by Jingyuan Li et al. The structure of the network is shown in Figure 11.

Figure 11.

RFR-net structure [30].

4.1. RFR Network

The work in [30] has two main innovations. ① A recurrent feature reasoning (RFR) module is proposed. Unlike the one-shot filling used in many previous works, RFR fills the missing region cyclically and gradually: each recurrence further fills the region, or predicts more reasonable repair values, based on the feature map filled in the previous recurrence. After repeated cycles, the results enter the feature fusion stage. ② A Knowledge Consistent Attention (KCA) mechanism is proposed. KCA guides the patch-swap process through the adaptive fusion of the attention scores from two adjacent recurrences.
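The difference between one-shot filling and cyclic, gradual filling can be illustrated with a deliberately simple toy. The sketch below is not RFR-net: it replaces the learned feature reasoning with a plain average of already-known 4-neighbours, and only shows how a hole shrinks from its boundary inward over several rounds, with each round building on the values filled in the previous one. All names are hypothetical.

```python
def progressive_fill(image, mask, max_rounds=100):
    """Fill masked pixels round by round from their known 4-neighbours.

    image: 2D list of floats; mask: 2D list of bools, True = missing pixel.
    Returns the filled image and the number of rounds that were needed.
    """
    h, w = len(image), len(image[0])
    img = [row[:] for row in image]
    missing = [row[:] for row in mask]
    rounds = 0
    while any(any(row) for row in missing) and rounds < max_rounds:
        updates = {}
        for y in range(h):
            for x in range(w):
                if not missing[y][x]:
                    continue
                known = [img[ny][nx]
                         for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                         if 0 <= ny < h and 0 <= nx < w and not missing[ny][nx]]
                if known:
                    updates[(y, x)] = sum(known) / len(known)
        if not updates:
            break  # nothing left that touches a known pixel
        # Apply all updates at once so that one round fills one boundary layer.
        for (y, x), value in updates.items():
            img[y][x] = value
            missing[y][x] = False
        rounds += 1
    return img, rounds


# A 5 x 5 constant image with a 3 x 3 hole in the centre.
image = [[10.0] * 5 for _ in range(5)]
mask = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
filled, rounds = progressive_fill(image, mask)
print(rounds, filled[2][2])
```

In this example the outer ring of the hole is filled in the first round and the centre pixel in the second, since the centre has no known neighbours until the first round has finished.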

4.2. Network Training and Discussion of Results

A total of 1000 images are selected from the two datasets, of which 900 are used as the training set and 100 as the test set. Training uses the original images together with interference “mask” images selected from the NVIDIA irregular mask dataset. The inputs of the training process are surface images and “mask” images, and the outputs are surface images. Transfer learning is introduced during training so that the RFR-net model effectively extracts high-quality features from texture images. The basic idea of transfer learning is as follows. First, a pre-trained model with good generalization ability, trained on a large dataset (such as ImageNet), is selected together with its weights. Then, some convolution-layer parameters are frozen, and the network is retrained in the target domain. Finally, all convolution-layer parameters are unfrozen, and the network is fine-tuned on the target dataset. The RFR-net model is initially trained and then fine-tuned starting from the pre-trained weights: the initial training runs for 50,000 iterations and the fine-tuning for 30,000 iterations.

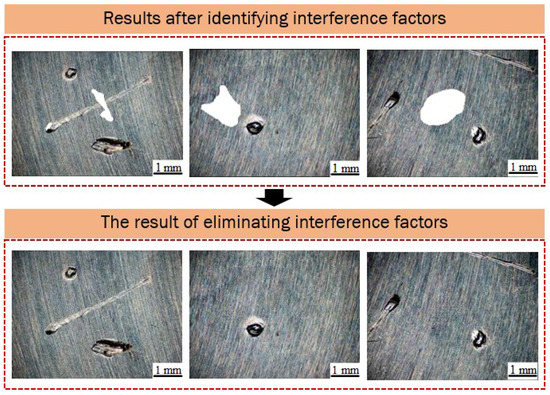

In this paper, the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) are used to evaluate the removal accuracy of the model. The higher the PSNR, the less distorted the surface image is after the interference factors are removed. SSIM ranges over [0, 1]; a larger value likewise indicates a less distorted image. The evaluation results are shown in Table 2. An ideal PSNR is generally considered to lie in the range 30 dB–50 dB, and an ideal SSIM in the range 0.9–1. Compared with the original images, the 100 repaired images have an average PSNR of 32.82 dB and an average SSIM of 0.917, so the repair quality of the RFR-net model is good. In testing, the model takes 1.18 s to process a single surface image. The results of removing interference factors with the RFR-net model are shown in Figure 12. They show that the RFR-net model removes interference factors in a targeted way, guided by the interference identification results of the Unet-based model. In the resulting surface images, the adverse effects of interference factors segmenting and covering the defects are avoided.

Table 2.

Evaluation results of RFR-net model.
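As a reference for the PSNR indicator, the sketch below computes it from its standard definition, PSNR = 10·log10(MAX² / MSE), for 8-bit grayscale images stored as nested lists. The tiny example images are made up for illustration; SSIM involves local means, variances, and covariances and is not reproduced here.

```python
import math


def mean_squared_error(reference, restored):
    """Mean squared pixel difference between two images of equal size."""
    diffs = [(a - b) ** 2
             for row_a, row_b in zip(reference, restored)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = mean_squared_error(reference, restored)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Made-up 2 x 2 example: one pixel differs by 10 gray levels.
reference = [[100, 100], [100, 100]]
restored = [[110, 100], [100, 100]]
print(f"PSNR = {psnr(reference, restored):.2f} dB")
```

Because PSNR depends only on the mean squared error, a small number of large local errors and many small errors can give the same value, which is one reason it is usually reported together with SSIM.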

Figure 12.

The results of removing interference factors based on the RFR-net model.

5. Surface Defect Detection Based on SAM-Mask RCNN Model

Through the above research, the FPN-DepResUnet model can be used to identify and locate different interference regions in the image and obtain the “mask” results of the interference regions. Further, RFR-net is used to remove the interference regions in the image with the “mask” result as the input. The removal process is such that the pixels in the interference regions are filled with high-quality features similar to those in the rest of the image. The continuous filling process eliminates the negative effects of environmental disturbances on the surface defect detection process. Based on the acquired clean surface images, this section conducts defect detection research.

Surface defect detection in the cutting process must accurately detect not only the type and location of each defect but also its region contour. From the detected contour, the number of pixels in each defect and the area proportion of the defect regions can be calculated. This information is fed back quickly to the machining staff. Based on the feedback (the frequency and area ratio of defects) from multiple inspection results, the staff can take measures in advance to adjust the current machining process, such as changing the tool. This prevents greater damage to the surface being machined and enables predictive maintenance and control of surface machining quality. Based on the above analysis, Mask RCNN [31] is selected as the surface defect detection network in this paper.
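The feedback quantities mentioned above (pixel count per defect type and its proportion of the whole image) follow directly from a segmentation result. The sketch below assumes, for simplicity, that the result has been flattened into a single label map in which 0 is background, 1 is a pit, and 2 is a scratch; these label IDs and the function name are hypothetical, and an instance segmentation model would in practice return one mask per detected defect.

```python
from collections import Counter


def defect_statistics(label_map, class_names):
    """Pixel count and image proportion for each defect class in a label map."""
    counts = Counter(value for row in label_map for value in row)
    total = sum(counts.values())
    stats = {}
    for class_id, name in class_names.items():
        pixels = counts.get(class_id, 0)
        stats[name] = {"pixels": pixels, "proportion": pixels / total}
    return stats


# Hypothetical label IDs: 0 = background, 1 = pit, 2 = scratch.
class_names = {1: "pit", 2: "scratch"}
label_map = [
    [0, 1, 1, 0],
    [0, 0, 2, 0],
]
for name, values in defect_statistics(label_map, class_names).items():
    print(f"{name}: {values['pixels']} px, {values['proportion']:.1%} of image")
```

Tracking these values over successive inspections gives the defect frequency and area ratio on which the feedback to the machining staff is based.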

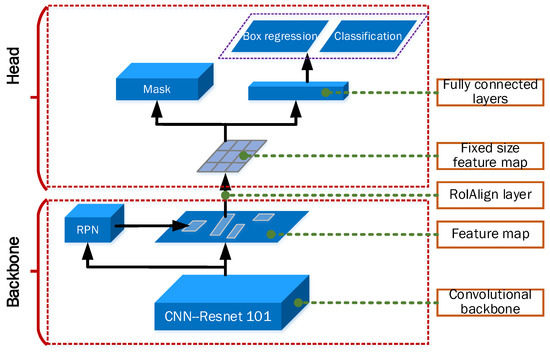

5.1. Mask RCNN Model

The model structure of Mask RCNN is shown in Figure 13. Mask RCNN is a small and flexible general-purpose object instance segmentation framework. It can not only detect the targets in the image, but also provide a high-quality segmentation result for each detected target. The whole network structure consists of two parts: the Backbone for feature extraction, and the Head for classification, bounding-box regression, and “mask” prediction for each ROI. In the backbone part, Mask RCNN uses Resnet101 as the backbone feature extraction network. The four effective feature layers it extracts are used to construct the Feature Pyramid Network (FPN). FPN realizes multi-scale fusion of the feature maps extracted by the backbone feature extraction network. The Region Proposal Network (RPN) is used to help the network obtain region proposals, which indicate the regions of the image that may contain a defect target. RoIAlign is used to deal with the problem that the mask in the detection results is not aligned with the defect region in the original image. The head part classifies each ROI obtained by the network, predicts the bounding-box regression, and predicts the “mask”.

Figure 13. The structure of Mask RCNN model.

5.2. SAM-Mask RCNN Instance Segmentation Module

This section improves the segmentation structure of the Mask RCNN model for defect segmentation. First, the shortcoming of the Mask RCNN instance segmentation model is analyzed. The Mask RCNN instance segmentation model uses a simple deconvolution operation to recover the mask of the defect. This approach can easily lose defect edge features, which results in inaccurate defect identification. To address this problem, this section proposes a Mask RCNN instance segmentation model based on the serial attention mechanism (SAM-Mask RCNN instance segmentation model). The defect feature information is enhanced at the channel level and at the spatial level to reduce the loss of defect information in the convolution operation.

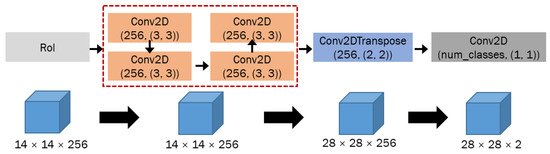

5.2.1. Mask RCNN Instance Segmentation Model

Defect segmentation separates the defect from the background to better identify the shape and position of the defect. The running process and the number of layers of the segmentation model of Mask RCNN are shown in Figure 14. First, the feature map obtained from the RoIAlign layer is convolved four times, and then the mask is obtained after the deconvolution operation. Finally, the number of channels in the mask is adjusted by convolution to match the number of target classes. Although Mask RCNN can recover the category of each pixel, its ability to recognize defect edges is poor. The reason is the reduced resolution and the loss of detail after the deconvolution operation.
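The sequence described above (four convolutions, one deconvolution, one channel-adjusting convolution) can be traced with standard output-size arithmetic. The sketch below is only an illustration: the 14 × 14 × 256 input, the 3 × 3 convolutions with padding 1, the 2 × 2 stride-2 deconvolution, and the 1 × 1 final convolution are assumptions taken from the commonly used Mask R-CNN head configuration, not values stated in this paper, and `num_classes=3` is a placeholder.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a standard convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a transposed convolution (deconvolution)."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_mask_head(roi_size=14, channels=256, num_classes=3):
    """Return the (channels, height, width) shape after each stage."""
    shapes = [(channels, roi_size, roi_size)]  # output of RoIAlign (assumed)
    size = roi_size

    # Four 3x3 convolutions with padding 1 keep the spatial size unchanged.
    for _ in range(4):
        size = conv_out(size, kernel=3, padding=1)
        shapes.append((channels, size, size))

    # One 2x2 deconvolution with stride 2 doubles the spatial size.
    size = deconv_out(size, kernel=2, stride=2)
    shapes.append((channels, size, size))

    # A 1x1 convolution maps the channels to one mask per class.
    size = conv_out(size, kernel=1)
    shapes.append((num_classes, size, size))
    return shapes
```

Under these assumptions, each ROI ends as a small fixed-size mask per class that must be resized back to the ROI in the original image, which may help explain why fine edge detail is hard to recover.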

Figure 14. The running process and number of layers of the segmentation model of Mask RCNN.

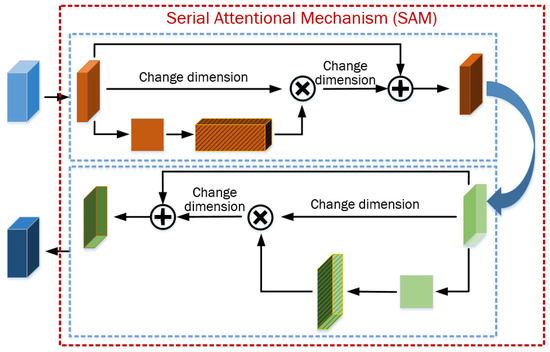

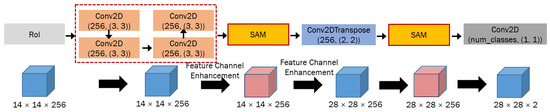

5.2.2. SAM-Mask RCNN Instance Segmentation Model

The attention mechanism can effectively improve the detection performance of deep networks. The purpose of introducing the attention mechanism in the model is to assign a weight to each feature point of the feature layer, and to automatically enhance the original feature layer based on these weights. Attention mechanisms include the channel attention mechanism [32] and the spatial attention mechanism [33]. Used alone, the channel attention mechanism and the spatial attention mechanism each enhance the feature layer from a single perspective only and ignore other factors in the actual computation process. Therefore, this section proposes a Serial Attention Mechanism (SAM). SAM integrates the channel attention mechanism and the spatial attention mechanism so that they work together serially. Specifically, before deconvolution, the feature layer is first fed into the SAM for channel feature enhancement. After deconvolution, the feature layer is fed into the SAM again for feature recovery enhancement. Within the SAM, the channel attention mechanism uses the correlation between the category features of different channels to reinforce features and improve the classification accuracy. The spatial attention mechanism models the connection between different local features and improves the classification accuracy between features. The structure and operational process of SAM are shown in Figure 15. The running process and number of layers of the SAM-Mask RCNN instance segmentation model are shown in Figure 16.
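The idea of applying channel attention and spatial attention in series can be illustrated with a deliberately simplified, parameter-free sketch. It is not the SAM structure of Figure 15 and not the modules of [32] or [33], which use learned weights: here the channel weight is assumed to be the sigmoid of each channel's global average, and the spatial weight the sigmoid of the cross-channel mean at each position. All function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(features):
    """Rescale each channel by a weight derived from its global average.

    features -- list of C channels, each an H x W nested list of floats
    """
    enhanced = []
    for channel in features:
        values = [v for row in channel for v in row]
        weight = sigmoid(sum(values) / len(values))
        enhanced.append([[v * weight for v in row] for row in channel])
    return enhanced


def spatial_attention(features):
    """Rescale each spatial position by a weight shared across channels."""
    num_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    weights = [
        [
            sigmoid(sum(features[c][i][j] for c in range(num_channels)) / num_channels)
            for j in range(width)
        ]
        for i in range(height)
    ]
    return [
        [[features[c][i][j] * weights[i][j] for j in range(width)] for i in range(height)]
        for c in range(num_channels)
    ]


def serial_attention(features):
    """Apply channel attention first, then spatial attention, in series."""
    return spatial_attention(channel_attention(features))
```

The output keeps the shape of the input, so a block of this kind could be placed before and after the deconvolution without changing the surrounding layer sizes.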

Figure 15. The structure and operational process of SAM.

Figure 16. The running process and number of layers of the SAM-Mask RCNN instance segmentation model.

5.3. Model Training and Discussion of Results

5.3.1. Model Training

The training process uses 1000 images selected from the dataset of interference-free surface defect images. The labelme tool is used to label defects in the images before training. To increase the robustness of the model, data augmentation is used to expand the training data. The total number of augmented images is 10,000. Among them, 9000 form the training set and 1000 form the test set. The training process also introduces pre-trained weights with good generalization. The loss curves of the SAM-Mask RCNN instance segmentation model are shown in Figure 17.
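The expansion and the 9000/1000 split described above can be sketched as follows. The paper does not state which augmentation operations were used, so the flips and the 90° rotation below are assumptions chosen only for illustration, and all function names are hypothetical. In practice the same geometric operation would also have to be applied to each labelme annotation mask.

```python
import random


def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vflip(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rot90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image):
    """Return the original image plus three assumed geometric variants."""
    return [image, hflip(image), vflip(image), rot90(image)]


def train_test_split(samples, test_fraction=0.1, seed=0):
    """Shuffle reproducibly and split into (train, test) lists."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Usage: 10,000 sample ids split into 9000 training and 1000 test samples.
train_ids, test_ids = train_test_split(list(range(10000)))
```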

Figure 17. Loss curves of the SAM-Mask RCNN instance segmentation model.

5.3.2. Results and Discussion

Two widely used indicators are selected to evaluate the model: MIoU and MPA. To verify the superiority of the proposed SAM-Mask RCNN instance segmentation model, the common segmentation algorithms Mask RCNN, Unet, and DeepLab V3+ are also trained with the same dataset, experimental environment, and hyperparameters. These popular segmentation algorithms are used by many researchers as baselines for evaluating proposed algorithms. After training, the two evaluation indexes are calculated for each of the four models. The model evaluation results are shown in Table 3. Compared with the other three networks, the SAM-Mask RCNN instance segmentation model has maximum improvements of 4.74% and 3.39% in the MIoU index and the MPA index, respectively. The defect detection results of the SAM-Mask RCNN instance segmentation model are shown in Figure 18. After calculation and testing, the detection speed of the model for a single surface image is 0.94 s. The output of the test results includes the category, probability, and area proportion of each defect. The test results show that the model identifies the edges of the defect regions accurately. The detection results can also provide cutting process guidance for the cutting staff. When the proportion of the defect region in the surface image starts to increase, this may indicate that the cutting tool has a large amount of wear or damage, in which case the cutting staff should replace the tool with a new one. If, however, the tool does not show much wear or breakage, the increase indicates that the current cutting process parameters do not match the material being cut. In that case, the cutting staff should adjust the cutting process parameters, such as the cutting speed and the feed per tooth.
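The two indicators can be computed from a per-pixel confusion matrix. The sketch below uses the standard definitions of mean intersection over union (MIoU) and mean pixel accuracy (MPA); it is assumed, not confirmed by the text, that the paper follows these definitions, and the function names are hypothetical.

```python
def confusion_matrix(truth, prediction, num_classes):
    """Count pixels by (true class, predicted class) from flat label lists."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, prediction):
        matrix[t][p] += 1
    return matrix


def mean_iou(matrix):
    """Average of TP / (TP + FP + FN) over classes that appear."""
    num_classes = len(matrix)
    ious = []
    for c in range(num_classes):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp
        fp = sum(matrix[r][c] for r in range(num_classes)) - tp
        denominator = tp + fp + fn
        if denominator:
            ious.append(tp / denominator)
    return sum(ious) / len(ious)


def mean_pixel_accuracy(matrix):
    """Average per-class accuracy: correct pixels / true pixels of the class."""
    accuracies = []
    for c, row in enumerate(matrix):
        total = sum(row)
        if total:
            accuracies.append(row[c] / total)
    return sum(accuracies) / len(accuracies)


# Usage on eight pixels with two classes (0 = background, 1 = defect).
truth = [0, 0, 0, 0, 1, 1, 1, 1]
prediction = [0, 0, 0, 1, 1, 1, 1, 0]
cm = confusion_matrix(truth, prediction, num_classes=2)
```

Because both indicators average over classes, a small defect class counts as much as the background, which is useful when defects occupy only a small part of the image.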

Table 3. Comparison results of the two evaluation indicators.

Figure 18. Defect detection results of SAM-Mask RCNN model.

This paper integrates interference factors removal and defect detection to achieve sustainable detection of surface defects. The identification and removal of the interference factors are achieved using the FPN-DepResUnet model and the RFR-net model, respectively. On this basis, the SAM-Mask RCNN model is used to effectively detect and provide information feedback on the clean defect images. This provides guidance for the predictive maintenance of the surface quality.

6. Conclusions

ZDM is one of the main goals of advanced manufacturing. In the aerospace, railway, automobile, and other vital industries, there is an increasing need to monitor the surface quality of production parts to ensure zero defects. Surface defect detection provides technical support to achieve this goal and has been studied by many scholars. However, the problem of surface defect detection affected by environmental interference factors (chips or coolant residue) in the cutting process is rarely studied and remains a difficult point in existing research. In addition, these interference factors are complex and variable, with different shapes, which also brings challenges to the research process. Interference factors hinder the sustainable detection of surface defects. To solve this problem, this paper proposes a sustainable detection method for surface defects. The method is divided into two steps: one is the identification and removal of interference factors; the other is the detection of surface defects.

- Identification and elimination of interfering factors: The Unet model is used as the research basis to identify the interfering factors. First, the shortcomings of the Unet model are analyzed. Then, the structure of the Unet model is optimized from three aspects of parameter number, training performance, and feature information fusion, and a new FPN-DepResUnet model is constructed. The effectiveness of each optimization aspect of the proposed FPN-DepResUnet model is verified by ablation experiments. Compared with the Unet model, MIoU and MAP of the FPN-DepResUnet model increased by 5.86% and 5.77%, and Params reduced by 29.90%. Accurate identification of the interference factors is achieved by the FPN-DepResUnet model. Furthermore, the interfering factors are removed using the RFR-net model.

- Detection of the surface defects: Based on the above research, clean surface images are obtained. The SAM-Mask RCNN model is constructed to address the poor segmentation of defect edges by the Mask RCNN model. Then, the SAM-Mask RCNN model is used to perform effective defect detection on the surface image and to feed back the detection information. The proposed SAM-Mask RCNN model has an accuracy of 94.62% for defect detection. Compared with the other traditional segmentation models (such as Mask RCNN, Unet, and DeepLab V3+), the SAM-Mask RCNN model has maximum improvements of 4.74% and 3.39% in the MIoU index and the MPA index, respectively. The feedback information includes the defect type, the number of pixels in the defect regions, and the area ratio of the defect regions. This feedback information can also provide cutting process guidance for the cutting staff.

The method proposed in this paper solves the problem of detecting defects on surfaces with interference factors and achieves the goal of sustainable detection of surface defects. The method proposed in this paper can maintain a high accuracy of 94.62% for surface defect detection under the influence of complex environmental interference factors. The study in this paper still has some shortcomings. In this paper, the study assumes that there are only two major interfering factors in the environment. However, there will be some other interference factors in the actual production environment, for example, dust. In addition, this paper also assumes that the detection time of a single image within 3 s can meet the application requirements of industrial production. These shortcomings are also problems and challenges to be faced and overcome in the future research process. Further research results based on this paper will provide strong technical support for the active control of machining surface quality, which is conducive to the realization of the ZDM strategy.

Author Contributions

Conceptualization, W.C. and B.Z.; methodology, W.C. and B.Z.; investigation, W.C.; resources, Q.Z.; data curation, H.S. and C.H.; writing—original draft preparation, L.L.; writing—review and editing, J.L.; funding acquisition, B.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Project of China (2022YFB4601403), National Natural Science Foundation of China (No. 52175336), National Natural Science Foundation of China (No. 52275464), and Scientific Research Project for National High-level Innovative Talents of Hebei Province Full-time Introduction (2021HBQZYCXY004).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Psarommatis, F.; May, G.; Dreyfus, P.-A.; Kiritsis, D. Zero defect manufacturing: State-of-the-art review, shortcomings and future directions in research. Int. J. Prod. Res. 2020, 58, 1–17.

2. Powell, D.; Magnanini, M.-C.; Colledani, M.; Myklebust, O. Advancing zero defect manufacturing: A state-of-the-art perspective and future research directions. Comput. Ind. 2022, 136, 103596.

3. Wang, R.-J.; Liang, F.-L.; Mou, X.-W.; Chen, L.-T.; Yu, X.-Y.; Peng, Z.-J.; Chen, H.-Y. Development of an Improved YOLOv7-Based Model for Detecting Defects on Strip Steel Surfaces. Coatings 2023, 13, 536.

4. Su, H.; Zhang, J.-B.; Zhang, B.-H.; Zou, W. Review of research on the inspection of surface defect based on visual perception. Comput. Integr. Manuf. Syst. 2021, 29, 1–31.

5. Munoz-Escalona, P.; Maropoulos, P.-G. A geometrical model for surface roughness prediction when face milling Al 7075-T7351 with square insert tools. J. Manuf. Syst. 2015, 36, 216–223.

6. Wen, X.; Shan, J.-R.; He, Y.; Song, K.-C. Steel Surface Defect Recognition: A Survey. Coatings 2023, 13, 17.

7. Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

8. Li, Q.-Y.; Luo, Z.-Q.; Chen, H.-B.; Li, C.-J. An Overview of Deeply Optimized Convolutional Neural Networks and Research in Surface Defect Classification of Workpieces. IEEE Access 2022, 10, 26443–26462.

9. Lavadiya, D.-N.; Dorafshan, S. Deep learning models for analysis of non-destructive evaluation data to evaluate reinforced concrete bridge decks: A survey. Eng. Rep. 2023, e12608.

10. Tabernik, D.; Sela, S.; Skvarc, J.; Skocaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2020, 31, 759–776.

11. Zhang, H.; Jiang, L.-X.; Li, C.-Q. CS-ResNet: Cost-sensitive residual convolutional neural network for PCB cosmetic defect detection. Expert Syst. Appl. 2021, 185, 115673.

12. Ma, L.; Xie, W.; Zhang, Y. Blister defect detection based on convolutional neural network for polymer lithium-ion battery. Appl. Sci. 2019, 9, 1085–1096.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 28, 91–99.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Seattle, WA, USA, 27–30 June 2016; pp. 779–788.

15. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

16. Zhao, H.-Q.; Zhang, T.-S. Fabric Surface Defect Detection Using SE-SSDNet. Symmetry 2022, 14, 2373.

17. Zheng, J.-B.; Zhang, T. Wafer Surface Defect Detection Based on Background Subtraction and Faster R-CNN. Micromachines 2023, 14, 905.

18. Yin, X.; Chen, Y.; Bouferguene, A.; Zaman, H.; Al-Hussein, M.; Kurach, L. A deep learning-based framework for an automated defect detection system for sewer pipes. Autom. Constr. 2020, 109, 102967.

19. Uzen, H.; Turkoglu, M.; Aslan, M.; Hanbay, D. Depth-wise Squeeze and Excitation Block-based Efficient-Unet model for surface defect detection. Vis. Comput. 2022, 39, 1745–1764.

20. Feng, C.-C.; Zhang, H.; Wang, H.-R.; Wang, S.; Li, Y.-L. Automatic pixel-level crack detection on dam surface using deep convolutional network. Sensors 2020, 20, 2069.

21. Han, H.; Gao, C.-Q.; Zhao, Y.; Liao, S.-S.; Tang, L.; Li, X.-D. Polycrystalline silicon wafer defect segmentation based on deep convolutional neural networks. Pattern Recognit. Lett. 2020, 130, 234–241.

22. Gyimah, N.-K.; Girma, A.; Mahmoud, M.-N.; Nateghi, S.; Homaifar, A.; Opoku, D. A Robust Completed Local Binary Pattern (RCLBP) for Surface Defect Detection. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Electronic Network, Melbourne, Australia, 17–20 October 2021; pp. 1927–1934.

23. Yang, J.-Z.; Zou, B.; Guo, G.-Q.; Chen, W.; Wang, X.-F.; Zhang, K.-H. A study on the roughness detection for machined surface covered with chips based on deep learning. J. Manuf. Process. 2022, 84, 77–87.

24. Deng, J.-H.; Lu, Y.; Lee, V.-C.-S. Imaging-based crack detection on concrete surfaces using You Only Look Once network. Struct. Health Monit. 2020, 20, 484–499.

25. Sun, N.; Cao, B.-T. Real-Time Image Defect Detection System of Cloth Digital Printing Machine. Comput. Intell. Neurosci. 2022, 2022, 5625945.

26. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

27. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.-M.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

28. Wan, Z.-Y.; Zhang, B.; Chen, D.-D.; Zhang, P.; Chen, D.; Liao, J.; Wen, F. Bringing Old Photos Back to Life. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 2744–2754.

29. Lahiri, A.; Jain, A.-K.; Agrawal, S.; Mitra, P.; Biswas, P.-K. Prior Guided GAN Based Semantic Inpainting. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 13693–13702.

30. Li, J.-Y.; Wang, N.; Zhang, L.-F.; Du, B.; Tao, D.-C. Recurrent Feature Reasoning for Image Inpainting. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 7757–7765.

31. He, K.-M.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

32. Wang, Q.-L.; Wu, B.-G.; Zhu, P.-F.; Li, P.-H.; Zuo, W.-M.; Hu, Q.-H. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

33. Fu, J.; Liu, J.; Tian, H.-J.; Li, Y.; Bao, Y.-J.; Fang, Z.-W.; Lu, H.-Q. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 15–19 June 2019; pp. 3141–3149.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).