The Role of ChatGPT and AI Chatbots in Optimizing Antibiotic Therapy: A Comprehensive Narrative Review

Abstract

1. Introduction

2. Materials and Methods

- Search Strategy

- AI Technologies: “chatbot*”, “conversational agent*”, “artificial intelligence”, “AI”, “ChatGPT”, “LLMs”, and names of specific AI systems (e.g., “Bard AI”, “Claude AI”).

- Antibiotic Therapy: “antibiotic therapy”, “antibiotic prescribing”, “antimicrobial stewardship”, “antibiotic stewardship”, “antibiotherapy”.

- Clinical Context: “error reduction”, “medication errors”, “prescribing errors”, “adherence”, “clinical decision support”, “decision-making”, “accessibility”, “education”, “patient education”, “health education”, “resource-limited settings”, “developing countries”.

- Search Formulas used:

1. SCOPUS: TITLE-ABS-KEY(chatbot* OR “conversational agent*” OR “artificial intelligence” OR AI OR ChatGPT) AND TITLE-ABS-KEY(“antibiotic therapy” OR “antibiotic prescribing” OR “antimicrobial stewardship” OR “antibiotic stewardship”) AND TITLE-ABS-KEY(“error reduction” OR “medication errors” OR “prescribing errors” OR adherence OR “clinical decision support” OR “decision-making” OR accessibility OR education OR “patient education” OR “health education” OR “resource-limited settings” OR “developing countries”).

2. Web of Science: TS = (chatbot* OR “conversational agent*” OR “artificial intelligence” OR ChatGPT OR LLMs OR “Bard AI” OR “Claude AI” OR “Siri” OR “Alexa” OR “Google Assistant” OR “Microsoft Copilot” OR “Anthropic Claude” OR “IBM Watson” OR “Jasper AI” OR “Perplexity AI” OR “Replika”) AND TS = (“antibiotic therapy” OR “antibiotic prescribing” OR “antimicrobial stewardship” OR “antibiotic stewardship” OR “antibiotherapy”).

3. PubMed: (chatbot* OR “conversational agent*” OR “artificial intelligence” OR ChatGPT OR LLMs OR “Bard AI” OR “Claude AI” OR “Siri” OR “Alexa” OR “Google Assistant” OR “Microsoft Copilot” OR “Anthropic Claude” OR “IBM Watson” OR “Jasper AI” OR “Perplexity AI” OR “Replika”) AND (“antibiotic therapy” OR “antibiotic prescribing” OR “antimicrobial stewardship” OR “antibiotic stewardship” OR “antibiotherapy”).

4. Google Scholar: (“chatbot*” OR “conversational agent*” OR “artificial intelligence” OR “AI” OR “ChatGPT” OR “LLMs” OR “Bard AI” OR “Claude AI”) AND (“antibiotic therapy” OR “antibiotic prescribing” OR “antimicrobial stewardship” OR “antibiotic stewardship” OR “antibiotherapy”) AND (“error reduction” OR “medication errors” OR “prescribing errors” OR “clinical decision support” OR “patient education”).
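The search formulas above all follow one pattern: terms within a concept group are joined with OR, and the groups are joined with AND. A minimal sketch of that composition is given below, assuming straight double quotes for phrases (database interfaces may not treat typographic quotes as phrase delimiters). The function names `quote`, `or_group`, `scopus_query`, and `wos_query` are illustrative and not part of the original methods, and the term lists are abbreviated subsets of the groups listed above.

```python
def quote(term: str) -> str:
    """Wrap multi-word or hyphenated terms in double quotes; leave single words bare."""
    return f'"{term}"' if (" " in term or "-" in term) else term


def or_group(terms) -> str:
    """Join the terms of one concept group with OR inside parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def scopus_query(*groups) -> str:
    """Combine concept groups with AND, using the Scopus TITLE-ABS-KEY field code."""
    return " AND ".join("TITLE-ABS-KEY" + or_group(g) for g in groups)


def wos_query(*groups) -> str:
    """Combine concept groups with AND, using the Web of Science TS (topic) field tag."""
    return " AND ".join("TS = " + or_group(g) for g in groups)


# Abbreviated term groups taken from the search strategy (illustrative subset).
AI_TERMS = ["chatbot*", "conversational agent*", "artificial intelligence", "AI", "ChatGPT"]
THERAPY_TERMS = ["antibiotic therapy", "antibiotic prescribing",
                 "antimicrobial stewardship", "antibiotic stewardship"]

if __name__ == "__main__":
    print(scopus_query(AI_TERMS, THERAPY_TERMS))
    print(wos_query(AI_TERMS, THERAPY_TERMS))
```

Keeping the term groups in one place and generating each database's syntax from them makes it easier to see where the four formulas differ, for example that the clinical-context group was applied in SCOPUS and Google Scholar but not in Web of Science or PubMed.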

- Inclusion and Exclusion Criteria

- Rationale for Design and Data Synthesis

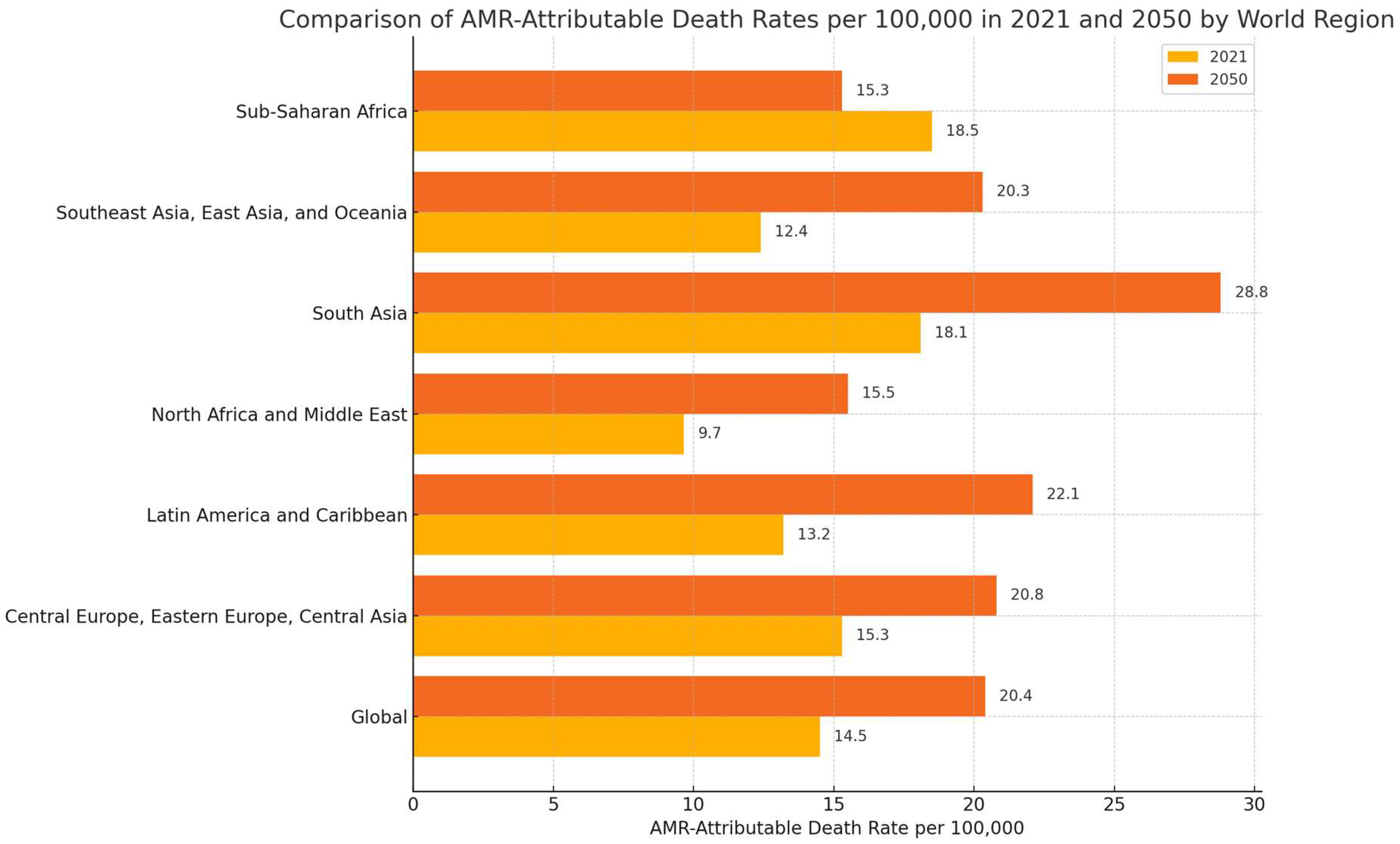

3. Current Trends in Antimicrobial Resistance: Recent Data and the Need for Innovative Solutions

4. AI-Based Chatbots: From Design Principles to Practical Applications

4.1. What Are AI-Based Chatbots?

4.2. How Do These Models Work?

4.3. Capabilities and Limitations of AI-Based Chatbots

4.4. Practical Applications of AI-Based Chatbots in Healthcare

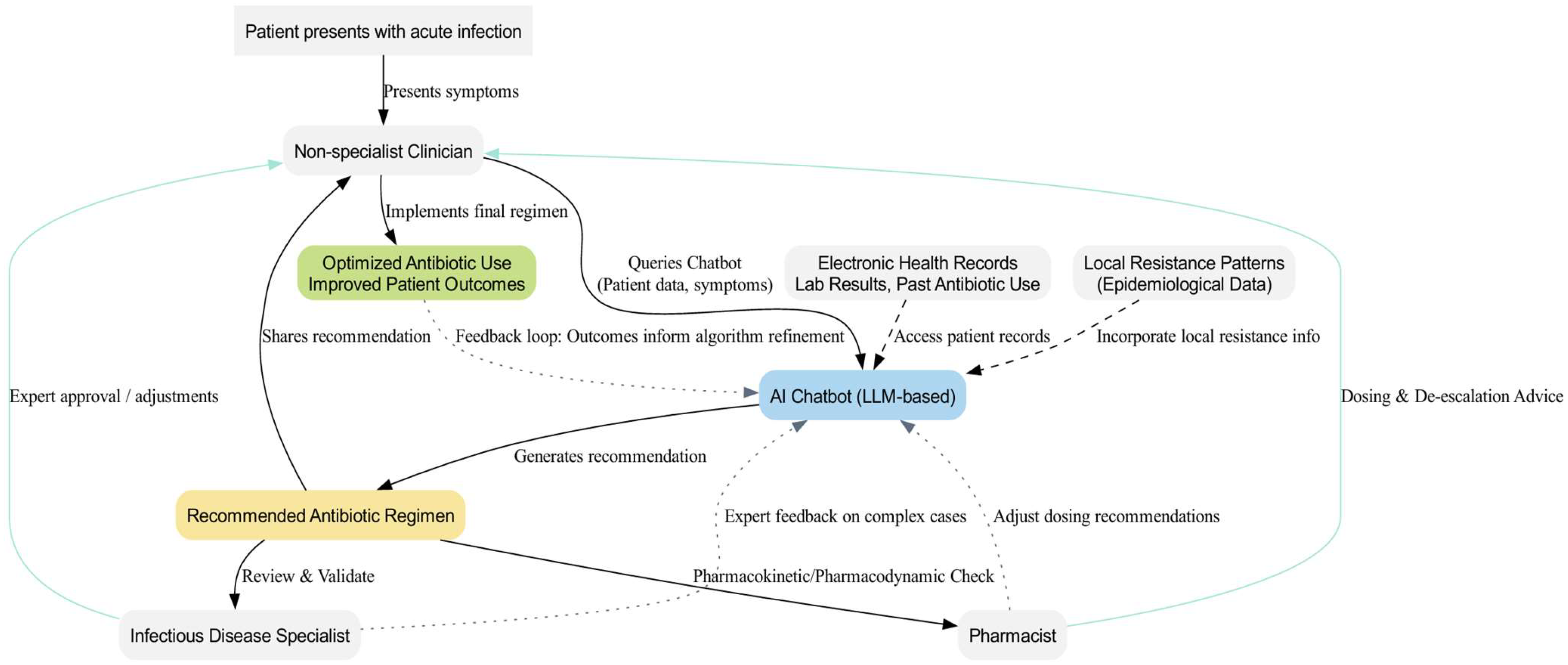

5. The Use of AI-Based Chatbots in Antibiotic Therapy

6. Benefits of Chatbots in Optimizing Antibiotic Therapy

7. Beyond Chatbots: Other AI Applications in Optimizing Antibiotic Therapy

8. Challenges and Limitations

Proposed Strategies to Address AI Chatbot Limitations

- Algorithmic Bias. Training datasets that underrepresent certain patient populations can produce skewed recommendations and exacerbate health disparities. To reduce bias, AI chatbots need the following:
  - Dataset Expansion: Collaborations across diverse institutions to include various demographics and clinical contexts.
  - Regular Testing: Frequent evaluations with representative patient cohorts.
  - Feedback Loops: Clinicians and pharmacists flag questionable outputs, prompting updates to training processes.

- Unsafe Advice/Missed Clinical Nuances. Chatbots can overlook key patient factors or propose outdated therapies, underscoring the need for human oversight. Suggested fixes are as follows:
  - Safety Checks: Automated alerts for allergies, interactions, or guideline mismatches.
  - Specialist Review: Infectious disease experts or pharmacists approve final suggestions, especially in high-stakes scenarios.
  - Contextual Prompts: Structured reminders for comorbidities, patient age, and recent antibiotic history.

- Hallucinations and Misinformation. When chatbots confidently provide incorrect information, major clinical risks arise. Mitigation approaches include the following:
  - Model Refinement: Carefully crafted prompts or limiting response scope.
  - Step-by-Step Reasoning: Documenting the model’s reasoning to spot errors.
  - Validation Layers: Cross-checking outputs against trusted sources (antibiograms, guidelines).

- Data Privacy and Confidentiality. Compliance with regulations like GDPR and HIPAA is essential. Protective methods include the following:
  - Federated Learning: Training models locally at each institution without centralizing sensitive data.
  - Differential Privacy: Introducing controlled “noise” to prevent re-identification.
  - Secure Enclaves: Using encrypted, access-controlled environments for AI model tuning.
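The controlled "noise" mentioned under Differential Privacy can be illustrated with the Laplace mechanism, one standard way of releasing an aggregate statistic with an epsilon-differential-privacy guarantee. The sketch below is illustrative only and is not drawn from any of the reviewed studies: the record layout, the function names `laplace_noise` and `private_count`, and the epsilon value are assumptions, and the data are synthetic.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution centred on zero with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`.

    A counting query changes by at most 1 when a single patient record is
    added or removed (sensitivity 1), so Laplace noise with scale 1/epsilon
    gives epsilon-differential privacy for this one release.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Synthetic, hypothetical isolate records; no real patient data.
    rng = random.Random(42)
    isolates = [{"resistant": i % 4 == 0} for i in range(200)]
    noisy = private_count(isolates, lambda r: r["resistant"], epsilon=0.5, rng=rng)
    print(f"Noisy count of resistant isolates: {noisy:.1f}")
```

Smaller values of epsilon add more noise and give stronger privacy at the cost of accuracy. A production system would use a vetted differential-privacy library and a cryptographically secure noise source rather than Python's `random` module.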

9. Conclusions

10. Future Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial Intelligence in Healthcare: Past, Present and Future. Stroke Vasc. Neurol. 2017, 2, 230–243.

- ChatGPT. Available online: https://openai.com/chatgpt/overview/ (accessed on 21 November 2024).

- Gemini: Chat to Inspire Your Ideas. Available online: https://gemini.google.com (accessed on 21 November 2024).

- Meet Claude. Available online: https://www.anthropic.com/claude (accessed on 21 November 2024).

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Voice Mode FAQ | OpenAI Help Center. Available online: https://help.openai.com/en/articles/8400625-voice-mode-faq (accessed on 21 November 2024).

- Zhang, Y.; Sun, S.; Galley, M.; Chen, Y.-C.; Brockett, C.; Gao, X.; Gao, J.; Liu, J.; Dolan, B. DialoGPT: Large-Scale Generative Pre-Training for Conversational Response Generation. arXiv 2020, arXiv:1911.00536.

- Hoy, M.B. Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Med. Ref. Serv. Q. 2018, 37, 81–88.

- Ruksakulpiwat, S.; Kumar, A.; Ajibade, A. Using ChatGPT in Medical Research: Current Status and Future Directions. J. Multidiscip. Healthc. 2023, 16, 1513–1520.

- Omarov, B.; Narynov, S.; Zhumanov, Z. Artificial Intelligence-Enabled Chatbots in Mental Health: A Systematic Review. Comput. Mater. Contin. 2022, 74, 5105–5122.

- Grassini, E.; Buzzi, M.; Leporini, B.; Vozna, A. A Systematic Review of Chatbots in Inclusive Healthcare: Insights from the Last 5 Years. Univers. Access Inf. Soc. 2024, 1–9.

- Casu, M.; Triscari, S.; Battiato, S.; Guarnera, L.; Caponnetto, P. AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications. Appl. Sci. 2024, 14, 5889.

- Laumer, S.; Maier, C.; Gubler, F. Chatbot Acceptance in Healthcare: Explaining User Adoption of Conversational Agents for Disease Diagnosis. In Proceedings of the 27th European Conference on Information Systems (ECIS), Stockholm, Sweden, 8–14 June 2019. Research Papers.

- Webster, P. Virtual Health Care in the Era of COVID-19. Lancet 2020, 395, 1180–1181.

- Zeng, F.; Liang, X.; Chen, Z. New Roles for Clinicians in the Age of Artificial Intelligence. BIO Integr. 2024, 1, 113–117.

- Abavisani, M.; Khoshrou, A.; Karbas Foroushan, S.; Sahebkar, A. Chatting with Artificial Intelligence to Combat Antibiotic Resistance: Opportunities and Challenges. Curr. Res. Biotechnol. 2024, 7, 100197.

- Howard, A.; Hope, W.; Gerada, A. ChatGPT and Antimicrobial Advice: The End of the Consulting Infection Doctor? Lancet Infect. Dis. 2023, 23, 405–406.

- Sarink, M.J.; Bakker, I.L.; Anas, A.A.; Yusuf, E. A Study on the Performance of ChatGPT in Infectious Diseases Clinical Consultation. Clin. Microbiol. Infect. 2023, 29, 1088–1089.

- De Vito, A.; Geremia, N.; Marino, A.; Bavaro, D.F.; Caruana, G.; Meschiari, M.; Colpani, A.; Mazzitelli, M.; Scaglione, V.; Venanzi Rullo, E.; et al. Assessing ChatGPT’s Theoretical Knowledge and Prescriptive Accuracy in Bacterial Infections: A Comparative Study with Infectious Diseases Residents and Specialists. Infection 2024, 1–9.

- Maillard, A.; Micheli, G.; Lefevre, L.; Guyonnet, C.; Poyart, C.; Canouï, E.; Belan, M.; Charlier, C. Can Chatbot Artificial Intelligence Replace Infectious Diseases Physicians in the Management of Bloodstream Infections? A Prospective Cohort Study. Clin. Infect. Dis. 2024, 78, 825–832.

- Naghavi, M.; Vollset, S.E.; Ikuta, K.S.; Swetschinski, L.R.; Gray, A.P.; Wool, E.E.; Aguilar, G.R.; Mestrovic, T.; Smith, G.; Han, C.; et al. Global Burden of Bacterial Antimicrobial Resistance 1990–2021: A Systematic Analysis with Forecasts to 2050. Lancet 2024, 404, 1199–1226.

- Murray, C.J.L.; Ikuta, K.S.; Sharara, F.; Swetschinski, L.; Robles Aguilar, G.; Gray, A.; Han, C.; Bisignano, C.; Rao, P.; Wool, E.; et al. Global Burden of Bacterial Antimicrobial Resistance in 2019: A Systematic Analysis. Lancet 2022, 399, 629–655.

- Cascella, M.; Montomoli, J.; Bellini, V.; Bignami, E. Evaluating the Feasibility of ChatGPT in Healthcare: An Analysis of Multiple Clinical and Research Scenarios. J. Med. Syst. 2023, 47, 33.

- Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

- Shah, N.H.; Entwistle, D.; Pfeffer, M.A. Creation and Adoption of Large Language Models in Medicine. JAMA 2023, 330, 866–869.

- Wojcik, G.; Ring, N.; McCulloch, C.; Willis, D.S.; Williams, B.; Kydonaki, K. Understanding the Complexities of Antibiotic Prescribing Behaviour in Acute Hospitals: A Systematic Review and Meta-Ethnography. Arch. Public Health 2021, 79, 134.

- Harun, M.G.D.; Sumon, S.A.; Hasan, I.; Akther, F.M.; Islam, M.S.; Anwar, M.M.U. Barriers, Facilitators, Perceptions and Impact of Interventions in Implementing Antimicrobial Stewardship Programs in Hospitals of Low-Middle and Middle Countries: A Scoping Review. Antimicrob. Resist. Infect. Control 2024, 13, 8.

- Serban, G.P.R. Consumul de Antibiotice, Rezistența Microbiană și Infecții Asociate Asistenței Medicale în România—2018 [Antibiotic Consumption, Microbial Resistance and Healthcare-Associated Infections in Romania, 2018]. Available online: https://insp.gov.ro/download/CNSCBT/docman-files/Analiza%20date%20supraveghere/infectii_asociate_asistentei_medicale/Consumul-de-antibiotice-rezistenta-microbiana-si-infectiile-asociate-asistentei-medicale-Romania-2018.pdf (accessed on 30 November 2024).

- Hassoun-Kheir, N.; Guedes, M.; Ngo Nsoga, M.-T.; Argante, L.; Arieti, F.; Gladstone, B.P.; Kingston, R.; Naylor, N.R.; Pezzani, M.D.; Pouwels, K.B.; et al. A Systematic Review on the Excess Health Risk of Antibiotic-Resistant Bloodstream Infections for Six Key Pathogens in Europe. Clin. Microbiol. Infect. 2024, 30, S14–S25.

- European Centre for Disease Prevention and Control. Antimicrobial Resistance in the EU/EEA (EARS-Net). In Annual Epidemiological Report for 2023; ECDC: Solna, Sweden, 2023.

- Ajulo, S. Global Antimicrobial Resistance and Use Surveillance System (GLASS) Report 2022, 1st ed.; World Health Organization: Geneva, Switzerland, 2022; ISBN 978-92-4-006270-2.

- Langford, B.J.; Soucy, J.-P.R.; Leung, V.; So, M.; Kwan, A.T.H.; Portnoff, J.S.; Bertagnolio, S.; Raybardhan, S.; MacFadden, D.R.; Daneman, N. Antibiotic Resistance Associated with the COVID-19 Pandemic: A Systematic Review and Meta-Analysis. Clin. Microbiol. Infect. 2023, 29, 302–309.

- Luyt, C.-E.; Bréchot, N.; Trouillet, J.-L.; Chastre, J. Antibiotic Stewardship in the Intensive Care Unit. Crit. Care 2014, 18, 480.

- Hyun, D.Y.; Hersh, A.L.; Namtu, K.; Palazzi, D.L.; Maples, H.D.; Newland, J.G.; Saiman, L. Antimicrobial Stewardship in Pediatrics: How Every Pediatrician Can Be a Steward. JAMA Pediatr. 2013, 167, 859–866.

- Donà, D.; Barbieri, E.; Daverio, M.; Lundin, R.; Giaquinto, C.; Zaoutis, T.; Sharland, M. Implementation and Impact of Pediatric Antimicrobial Stewardship Programs: A Systematic Scoping Review. Antimicrob. Resist. Infect. Control 2020, 9, 3.

- Apisarnthanarak, A.; Kwa, A.L.-H.; Chiu, C.-H.; Kumar, S.; Thu, L.T.A.; Tan, B.H.; Zong, Z.; Chuang, Y.C.; Karuniawati, A.; Tayzon, M.F.; et al. Antimicrobial Stewardship for Acute-Care Hospitals: An Asian Perspective. Infect. Control Hosp. Epidemiol. 2018, 39, 1237–1245.

- Godman, B.; Egwuenu, A.; Haque, M.; Malande, O.O.; Schellack, N.; Kumar, S.; Saleem, Z.; Sneddon, J.; Hoxha, I.; Islam, S.; et al. Strategies to Improve Antimicrobial Utilization with a Special Focus on Developing Countries. Life 2021, 11, 528.

- The Five Ds of Outpatient Antibiotic Stewardship for Urinary Tract Infections. Available online: https://journals.asm.org/doi/epdf/10.1128/cmr.00003-20?src=getftr&utm_source=scopus&getft_integrator=scopus (accessed on 9 December 2024).

- Kumar, N.R.; Balraj, T.A.; Kempegowda, S.N.; Prashant, A. Multidrug-Resistant Sepsis: A Critical Healthcare Challenge. Antibiotics 2024, 13, 46.

- Shum, H.; He, X.; Li, D. From Eliza to XiaoIce: Challenges and Opportunities with Social Chatbots. Front. Inf. Technol. Electron. Eng. 2018, 19, 10–26.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

- Weizenbaum, J. ELIZA—A Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM 1966, 9, 36–45.

- Bhattacharya, P.; Prasad, V.K.; Verma, A.; Gupta, D.; Sapsomboon, A.; Viriyasitavat, W.; Dhiman, G. Demystifying ChatGPT: An In-Depth Survey of OpenAI’s Robust Large Language Models. Arch. Comput. Methods Eng. 2024, 31, 4557–4600.

- Bansal, G.; Chamola, V.; Hussain, A.; Guizani, M.; Niyato, D. Transforming Conversations with AI—A Comprehensive Study of ChatGPT. Cogn. Comput. 2024, 16, 2487–2510.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Gao, L.; Biderman, S.; Black, S.; Golding, L.; Hoppe, T.; Foster, C.; Phang, J.; He, H.; Thite, A.; Nabeshima, N.; et al. The Pile: An 800GB Dataset of Diverse Text for Language Modeling. arXiv 2020, arXiv:2101.00027.

- Kumar, V.; Srivastava, P.; Dwivedi, A.; Budhiraja, I.; Ghosh, D.; Goyal, V.; Arora, R. Large-Language-Models (LLM)-Based AI Chatbots: Architecture, In-Depth Analysis and Their Performance Evaluation. In Proceedings of the Recent Trends in Image Processing and Pattern Recognition, Bidar, India, 16–17 December 2016; Santosh, K., Makkar, A., Conway, M., Singh, A.K., Vacavant, A., Abou el Kalam, A., Bouguelia, M.-R., Hegadi, R., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 237–249.

- Leiter, C.; Zhang, R.; Chen, Y.; Belouadi, J.; Larionov, D.; Fresen, V.; Eger, S. ChatGPT: A Meta-Analysis after 2.5 Months. Mach. Learn. Appl. 2024, 16, 100540.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. In Proceedings of the 36th Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9.

- Tenney, I.; Das, D.; Pavlick, E. BERT Rediscovers the Classical NLP Pipeline. arXiv 2019, arXiv:1905.05950.

- Dai, Z.; Yang, Z.; Yang, Y.; Carbonell, J.; Le, Q.V.; Salakhutdinov, R. Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context. arXiv 2019, arXiv:1901.02860.

- Clark, K.; Khandelwal, U.; Levy, O.; Manning, C.D. What Does BERT Look At? An Analysis of BERT’s Attention. arXiv 2019, arXiv:1906.04341.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 1–38.

- Maynez, J.; Narayan, S.; Bohnet, B.; McDonald, R. On Faithfulness and Factuality in Abstractive Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J., Eds.; Association for Computational Linguistics, 2020; pp. 1906–1919.

- Ahmad, M.A.; Yaramis, I.; Roy, T.D. Creating Trustworthy LLMs: Dealing with Hallucinations in Healthcare AI. arXiv 2023, arXiv:2311.01463.

- Islam, A.; Chang, K. Real-Time AI-Based Informational Decision-Making Support System Utilizing Dynamic Text Sources. Appl. Sci. 2021, 11, 6237.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A Guide to Deep Learning in Healthcare. Nat. Med. 2019, 25, 24–29.

- Berner, E.S.; La Lande, T.J. Overview of Clinical Decision Support Systems. In Clinical Decision Support Systems; Springer: New York, NY, USA, 2007; pp. 3–22. ISBN 978-0-387-38319-4.

- Frangoudes, F.; Hadjiaros, M.; Schiza, E.C.; Matsangidou, M.; Tsivitanidou, O.; Neokleous, K. An Overview of the Use of Chatbots in Medical and Healthcare Education. In Proceedings of the Learning and Collaboration Technologies: Games and Virtual Environments for Learning, Virtual, 24–29 July 2021; Zaphiris, P., Ioannou, A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 170–184.

- Huang, R.S.; Benour, A.; Kemppainen, J.; Leung, F.-H. The Future of AI Clinicians: Assessing the Modern Standard of Chatbots and Their Approach to Diagnostic Uncertainty. BMC Med. Educ. 2024, 24, 1133.

- Pinto-de-Sá, R.; Sousa-Pinto, B.; Costa-de-Oliveira, S. Brave New World of Artificial Intelligence: Its Use in Antimicrobial Stewardship—A Systematic Review. Antibiotics 2024, 13, 307.

- Chang, A.; Chen, J.H. BSAC Vanguard Series: Artificial Intelligence and Antibiotic Stewardship. J. Antimicrob. Chemother. 2022, 77, 1216–1217.

- Peiffer-Smadja, N.; Dellière, S.; Rodriguez, C.; Birgand, G.; Lescure, F.-X.; Fourati, S.; Ruppé, E. Machine Learning in the Clinical Microbiology Laboratory: Has the Time Come for Routine Practice? Clin. Microbiol. Infect. 2020, 26, 1300–1309.

- Vandenberg, O.; Durand, G.; Hallin, M.; Diefenbach, A.; Gant, V.; Murray, P.; Kozlakidis, Z.; van Belkum, A. Consolidation of Clinical Microbiology Laboratories and Introduction of Transformative Technologies. Clin. Microbiol. Rev. 2020, 33, 10–1128.

- Feretzakis, G.; Loupelis, E.; Sakagianni, A.; Kalles, D.; Martsoukou, M.; Lada, M.; Skarmoutsou, N.; Christopoulos, C.; Valakis, K.; Velentza, A.; et al. Using Machine Learning Techniques to Aid Empirical Antibiotic Therapy Decisions in the Intensive Care Unit of a General Hospital in Greece. Antibiotics 2020, 9, 50.

- Fanelli, U.; Pappalardo, M.; Chinè, V.; Gismondi, P.; Neglia, C.; Argentiero, A.; Calderaro, A.; Prati, A.; Esposito, S. Role of Artificial Intelligence in Fighting Antimicrobial Resistance in Pediatrics. Antibiotics 2020, 9, 767.

- Oonsivilai, M.; Mo, Y.; Luangasanatip, N.; Lubell, Y.; Miliya, T.; Tan, P.; Loeuk, L.; Turner, P.; Cooper, B.S. Using Machine Learning to Guide Targeted and Locally Tailored Empiric Antibiotic Prescribing in a Children’s Hospital in Cambodia. Wellcome Open Res. 2018, 3, 131.

- Coelho, J.R.; Carriço, J.A.; Knight, D.; Martínez, J.-L.; Morrissey, I.; Oggioni, M.R.; Freitas, A.T. The Use of Machine Learning Methodologies to Analyse Antibiotic and Biocide Susceptibility in Staphylococcus aureus. PLoS ONE 2013, 8, e55582.

- Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical Considerations of Using ChatGPT in Health Care. J. Med. Internet Res. 2023, 25, e48009.

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56.

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. 2021, 54, 1–35.

- HIPAA vs. GDPR Compliance: What’s the Difference? Available online: https://www.onetrust.com/blog/hipaa-vs-gdpr-compliance/ (accessed on 2 December 2024).

- Office for Civil Rights (OCR). Health Information Privacy. Available online: https://www.hhs.gov/hipaa/index.html (accessed on 9 December 2024).

- General Data Protection Regulation (GDPR)—Legal Text. Available online: https://gdpr-info.eu/ (accessed on 9 December 2024).

- Zhou, J.; Müller, H.; Holzinger, A.; Chen, F. Ethical ChatGPT: Concerns, Challenges, and Commandments. Electronics 2024, 13, 3417.

- Center for Devices and Radiological Health. Artificial Intelligence and Machine Learning in Software as a Medical Device. FDA. 2024. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device (accessed on 3 December 2024).

- European Commission; Joint Research Centre. AI Watch: Defining Artificial Intelligence: Towards an Operational Definition and Taxonomy of Artificial Intelligence; Publications Office: Luxembourg, 2020.

- Regulation—2017/746—EN—Medical Device Regulation—EUR-Lex. Available online: https://eur-lex.europa.eu/eli/reg/2017/746/oj (accessed on 9 December 2024).

- European Union. Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on Medical Devices, Amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and Repealing Council Directives 90/385/EEC and 93/42/EEC (Text with EEA Relevance). Off. J. Eur. Union 2017, 117, 1–175.

- Gerke, S.; Minssen, T.; Cohen, G. Chapter 12—Ethical and Legal Challenges of Artificial Intelligence-Driven Healthcare. In Artificial Intelligence in Healthcare; Bohr, A., Memarzadeh, K., Eds.; Academic Press: Cambridge, MA, USA, 2020; pp. 295–336. ISBN 978-0-12-818438-7.

- Alam, K.; Kumar, A.; Samiullah, F.N.U. Prospectives and Drawbacks of ChatGPT in Healthcare and Clinical Medicine. In AI and Ethics; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–7.

- Sujan, M.; Smith-Frazer, C.; Malamateniou, C.; Connor, J.; Gardner, A.; Unsworth, H.; Husain, H. Validation Framework for the Use of AI in Healthcare: Overview of the New British Standard BS30440. BMJ Health Care Inform. 2023, 30, e100749.

- Sheller, M.J.; Edwards, B.; Reina, G.A.; Martin, J.; Pati, S.; Kotrotsou, A.; Milchenko, M.; Xu, W.; Marcus, D.; Colen, R.R.; et al. Federated Learning in Medicine: Facilitating Multi-Institutional Collaborations without Sharing Patient Data. Sci. Rep. 2020, 10, 12598.

- Rieke, N.; Hancox, J.; Li, W.; Milletarì, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The Future of Digital Health with Federated Learning. NPJ Digit. Med. 2020, 3, 119.

- Chen, I.Y.; Joshi, S.; Ghassemi, M. Treating Health Disparities with Artificial Intelligence. Nat. Med. 2020, 26, 16–17.

| Study Reference (Author, Year) | Study Design and Setting | Infection Type | Primary Outcomes | Key Limitations | Implications |
|---|---|---|---|---|---|
| Maillard et al., 2023 [20] | Prospective cohort study, tertiary hospital | Bloodstream infections (BSIs) | 64% adequate empirical therapies, 36% optimal definitive therapies | Inadequate source control in some cases, long treatment durations | Useful as a supplementary tool, requires oversight |
| De Vito et al., 2024 [19] | Comparative study, single center | Various bacterial infections (BSIs, pneumonia, etc.) | 70% accuracy in theoretical questions, limitations in resistance mechanism recognition | Older antibiotic preferences, limited guideline alignment | Promising in education, unsuitable for complex decisions |
| Sarink et al., 2023 [18] | Retrospective analysis, tertiary hospital | Positive blood cultures | Mean accuracy 2.8/5, highest for blood cultures | Ambiguous recommendations, occasional factual inaccuracies | Cannot replace clinicians, serves as diagnostic aid |
| Howard et al., 2023 [17] | Qualitative exploratory research, single center | General antimicrobial advice | Recognized contraindications inconsistently; proposed harmful recommendations | Failures in situational awareness, inconsistent inference | Needs human supervision, risk of dangerous advice |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Antonie, N.I.; Gheorghe, G.; Ionescu, V.A.; Tiucă, L.-C.; Diaconu, C.C. The Role of ChatGPT and AI Chatbots in Optimizing Antibiotic Therapy: A Comprehensive Narrative Review. Antibiotics 2025, 14, 60. https://doi.org/10.3390/antibiotics14010060
