1. Introduction

Magnetoresistive sensors are essential components in high-density information storage and position/speed monitoring technologies [1]. Their performance is influenced not only by the field dependence of the material parameters but also by the geometric configuration of the device [2]. Narrow band-gap semiconductors such as InSb have attracted considerable interest due to their high carrier mobilities, which provide superior sensitivity and extended frequency response beyond the 10–20 kHz range typical of Si Hall sensors [3].

Permalloy is widely employed in spin-valve read heads because of its negligible magnetostriction, which effectively minimizes stress-induced anisotropy [4,5]. In ferromagnetic metals like Permalloy, electrical resistivity is strongly influenced by spin-dependent scattering processes. The likelihood of such scattering depends on spin orientation and magnetic ordering [6], giving rise to the anisotropic magnetoresistance (AMR) effect. When the magnetization is aligned by an external field, spin disorder is reduced, resulting in lower electron scattering and decreased resistivity. In contrast, at zero field, a demagnetized multi-domain state enhances spin-disorder scattering, leading to higher resistivity. Temperature introduces additional effects: as it rises, thermal spin disorder increases the resistivity, and near the Curie temperature ($T_C$), critical spin fluctuations produce a pronounced peak [7]. This phenomenon, often described as enhanced spin-disorder scattering in the critical region, is analogous to the Curie–Weiss behavior of the magnetic susceptibility. Above $T_C$, the susceptibility follows the Curie–Weiss law, reflecting the loss of long-range ferromagnetic order. Below $T_C$, the spontaneous magnetization decreases progressively with temperature and vanishes at $T_C$ through a second-order phase transition. This temperature dependence, which is often approximated by mean-field models, provides the theoretical framework for interpreting our high-temperature magnetotransport data.

In practice, extending magnetoresistivity characterization to elevated temperatures is challenging: measurements may be limited by instrument constraints and affected by thermal drift, contact-resistance changes, or irreversible modifications in ultrathin films (e.g., oxidation or interdiffusion). Moreover, the high-temperature response reflects multiple competing scattering mechanisms, including spin-disorder contributions that grow with temperature and can become strongly non-linear near the Curie region. These factors make straightforward extrapolation beyond the experimentally accessible range unreliable and motivate data-driven surrogate models for robust high-temperature prediction.

Despite significant advances in magnetoresistive technology, accurately predicting material properties such as magnetoresistivity at elevated temperatures remains a challenge [8]. Traditional experimental methods often face limitations when extending observations beyond experimentally feasible conditions [9]. In recent years, machine learning (ML) has emerged as a powerful tool to address these challenges, enabling data-driven predictions that extend beyond the limits of experimental data [10,11,12,13].

The novelty of the present work is to treat high-temperature magnetoresistivity prediction as a supervised learning problem based on the full field-dependent curves, rather than predicting only a few scalar summaries. We benchmark multiple regression models under a unified evaluation protocol and then use the best-performing model to extrapolate magnetoresistivity to temperatures above the measurement limit. Importantly, the extrapolated temperature dependence is also used to estimate the Curie temperature, and the predicted behavior is checked for physical consistency using normalized representations (e.g., trends versus $T/T_C$). This combined curve-level prediction, extrapolation, and $T_C$ estimation provides a device-relevant surrogate modeling workflow that reduces the need for technically challenging high-temperature experiments.

This study leverages machine learning models, including Support Vector Regression (SVR), Random Forest Regression (RFR), and Neural Networks (NN), to analyze and predict the magnetoresistive behavior of single-layer Permalloy films. With these models, we aim to generate accurate predictions of magnetoresistivity at temperatures exceeding 350 °C, a range that is typically inaccessible with conventional experimental setups [14,15]. Integrating ML models into this approach not only improves predictive accuracy but also enables more precise estimation of critical material properties such as the Curie temperature $T_C$ [16,17]. These predictive insights are expected to support the application of Permalloy in next-generation technologies, including high-density information storage and spintronic devices.

2. Materials and Methods

2.1. Experimental Techniques

The 5 nm-thick Permalloy films were deposited by magnetron sputtering under high-vacuum conditions onto a 3-inch single-crystal Si wafer with its native SiO$_2$ layer preserved (prepared in-house by Recep Sahingoz, Yozgat Bozok University, Yozgat, Türkiye). The as-deposited films were 50–60 mm long. The samples were thoroughly cleaned with acetone and alcohol prior to further processing to remove surface impurities.

The films were then cut into smaller pieces for measurement. Electrical contacts were made by coating the sample corners with silver paint and attaching copper wires [18]. Resistance was measured using the Van der Pauw technique: a constant in-plane current (10–100 mA) was supplied by a constant-current source, and the corresponding voltage was measured with a digital voltmeter. The magnetic field was generated by Helmholtz coils driven by a bipolar power supply.

Magnetic measurements were performed using the Lake Shore Hall Effect Measurement System (HEMS) in 30 steps over the temperature range of 25 °C to 350 °C [

19]. Hall coefficients and resistance at each temperature were obtained by reversing the current direction and using all possible contact configurations [

20,

21]. Temperature-dependent magnetic measurements were carried out on

-thick

(Permalloy) films deposited under vacuum on

[

22,

23]. An external magnetic field of was applied to the sample at each temperature. Resistance changes with applied magnetic field and temperature were measured at each temperature, as shown in

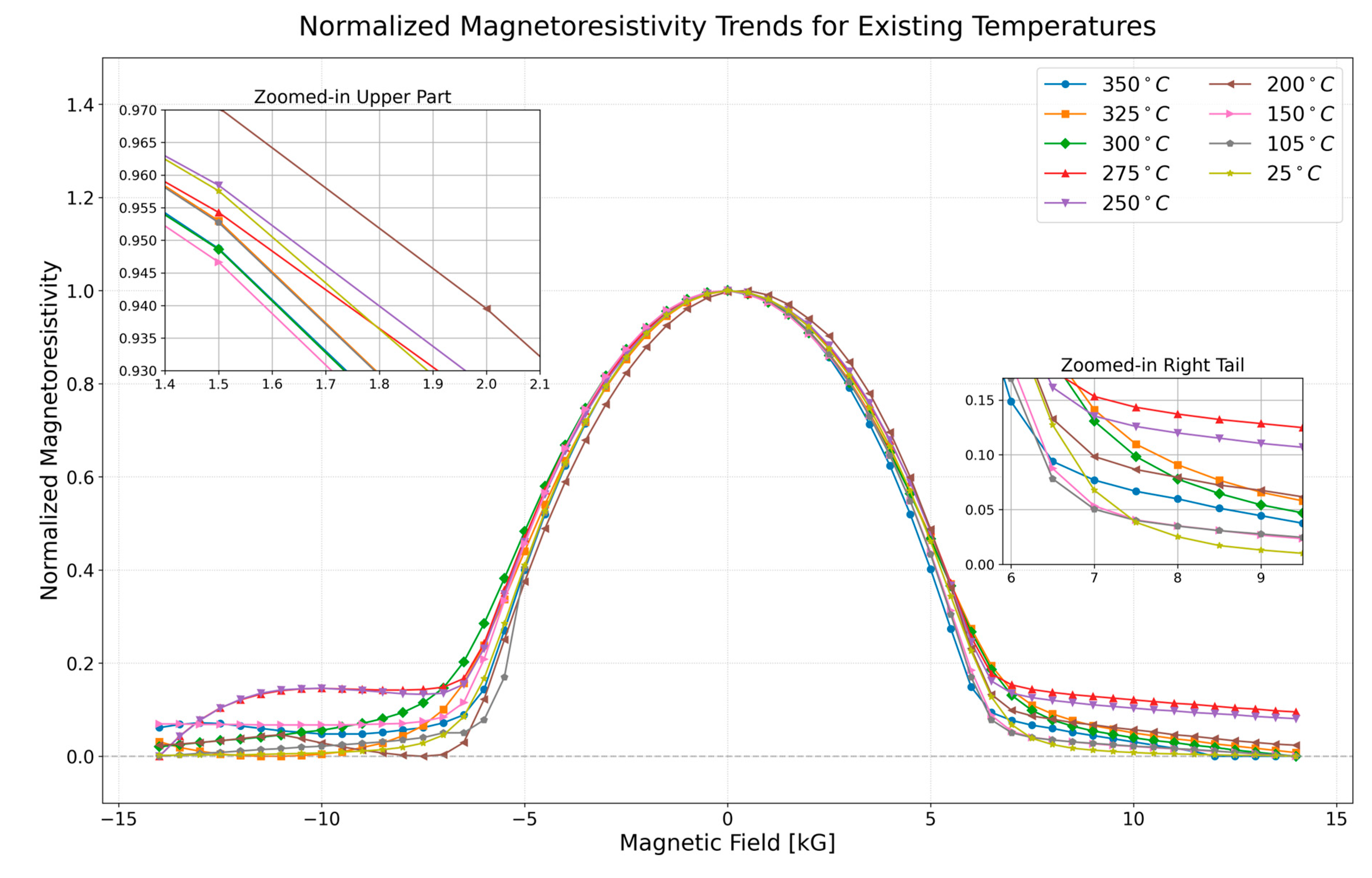

Figure 1.

In Figure 1, the temperature was held constant while each curve was recorded. For example, the magnetoresistance was measured by sweeping the magnetic field while the temperature was fixed at 250 °C [24]. The normalized graphs show that the magnetoresistivity differs considerably between temperatures [25]. Changes in resistivity are known to arise from the material's composition and geometry and from external factors such as temperature, stress, and pressure, as discussed in similar studies [26]. Separate field sweeps were taken at each temperature and are presented in the same graph [27].

The magnetization of a ferromagnet decreases with increasing temperature and vanishes at $T_C$, where the material becomes paramagnetic; above $T_C$, the susceptibility follows the Curie–Weiss law [9]. At a fixed sample temperature, the field-induced resistance change is attributed to changes in the Hall mobility and carrier density [5].

In the absence of a magnetic field, the apparent carrier density $n$ decreases with increasing temperature from 25 °C to 350 °C, whereas the Hall mobility $\mu_H$ increases from 250 °C to 350 °C [28]. These characteristics differ from those of ordinary metals, in which the carrier density is approximately constant and the Hall mobility typically decreases with increasing temperature owing to phonon scattering. Instead, this behavior arises from the interplay of the ordinary and anomalous Hall effects (AHEs) in ferromagnetic Permalloy. When the AHE contribution (which scales with the magnetization) dominates, it counteracts the ordinary Hall voltage, resulting in an apparent overestimation of the carrier density.

As the temperature rises and the magnetization falls, the AHE contribution weakens, exposing the intrinsic carrier density, which therefore appears to decline. The increase in the Hall mobility is mainly attributed to the remaining carriers experiencing reduced scattering in the near-paramagnetic regime, even though phonon scattering increases.

In summary, the observed decrease in carrier density and increase in Hall mobility at elevated temperatures are explained by the weakening of the anomalous Hall contribution and by the prevailing scattering mechanisms, rather than by actual variations in carrier density or reduced phonon scattering.

2.2. Machine Learning Methodology

This study applies several machine learning (ML) models, including Support Vector Regression (SVR), Random Forest Regression (RFR), Decision Tree Regression, Neural Networks (NN), Gaussian Process Regression (GPR), and Linear Regression, to predict magnetoresistivity and estimate critical temperatures such as the Curie temperature $T_C$. These models were selected to compare simple baselines with methods that can capture non-linear relationships and extend predictions beyond the experimental temperature range. This section details the architecture, key parameters, and configuration of each model, as well as the role of synthetic data generation.

SVR captures non-linear dependencies in the data using a radial basis function (RBF) kernel. The model is configured with three key hyperparameters: the regularization parameter ($C$), the kernel coefficient ($\gamma$, inversely related to the kernel width), and the $\epsilon$-insensitive margin. All three were selected by systematic tuning using grid searches with cross-validation. A low $C$ (e.g., 1) was observed to underfit (high bias, producing smoother predictions that missed the curvature), whereas a very high $C$ (e.g., 100) overfit the noise (high variance, capturing small fluctuations that hurt generalization); the selected intermediate $C$ gave the best balance between bias and variance on the validation sets. For the kernel coefficient, a larger $\gamma$ (such as 1.0) made the SVR local, fitting individual points tightly but failing to generalize (similar to overfitting), while a much smaller $\gamma$ (e.g., 0.01) made it global (almost linear). The selected intermediate $\gamma$ allowed the SVR to capture the broad non-linear trend without chasing high-frequency noise. The parameter $\epsilon$, which controls the tolerance for the fit error, was set to a small value relative to the normalized resistivity range (~0–1), so that minor deviations are ignored and robustness improves. A significantly larger $\epsilon$ (e.g., 0.1) slightly degraded accuracy, because the model ignored small but systematic variations, while a smaller $\epsilon$ (e.g., 0.001) did not noticeably improve accuracy but made training slower. With these optimized hyperparameters, the SVR achieved prediction accuracy almost on par with RFR.
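The tuning procedure described above can be sketched with scikit-learn's `GridSearchCV`. This is a minimal illustration, not the authors' script: the toy data (temperature `T`, field `H`, and a Gaussian-like normalized target), the input standardization, the 5-fold split, and the MAE scoring are assumptions, and the grid simply reuses the example values quoted in the text.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Hypothetical toy data: inputs (T in °C, H in arbitrary field units) and a
# normalized resistivity with a Gaussian-like field profile plus small noise.
rng = np.random.default_rng(0)
T = rng.uniform(25, 350, 200)
H = rng.uniform(-1.0, 1.0, 200)
y = (1.0 - 0.3 * T / 350) * np.exp(-H**2 / 0.1) + rng.normal(0, 0.01, 200)
X = np.column_stack([T, H])

# Standardize inputs so that a single gamma acts on comparable scales.
pipe = make_pipeline(StandardScaler(), SVR(kernel="rbf"))
param_grid = {
    "svr__C": [1, 10, 100],              # regularization strength
    "svr__gamma": [0.01, 0.1, 1.0],      # RBF kernel coefficient (inverse width)
    "svr__epsilon": [0.001, 0.01, 0.1],  # half-width of the epsilon-insensitive tube
}
search = GridSearchCV(
    pipe,
    param_grid,
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
    scoring="neg_mean_absolute_error",
)
search.fit(X, y)
best_params = search.best_params_   # selected (C, gamma, epsilon)
```

`search.best_score_` holds the negated cross-validated MAE of the selected combination; on real data the grid would be refined around the best cell.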

RFR is an ensemble learning technique that constructs 400 decision trees trained on bootstrapped subsets of the data and features. Each tree makes its own prediction, and the ensemble output is the average of the predictions of all trees. This averaging preserves complex non-linear interactions between magnetoresistivity and magnetic field while reducing model variance and the risk of overfitting. Combined with the noise-augmented training scheme described later in this section, the relatively large number of estimators ($n_{\text{estimators}} = 400$) keeps the model stable against noisy inputs.
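A minimal scikit-learn sketch of the averaging step follows, on hypothetical toy data. Note that `RandomForestRegressor` draws a bootstrap sample per tree but subsamples features per split (via `max_features`), which is how "subsets of features" is interpreted here; the `max_features=0.5` setting is an assumption, not a value from the paper.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Hypothetical toy data: columns are (T in °C, H), target is a Gaussian-like curve.
rng = np.random.default_rng(1)
X = np.column_stack([rng.uniform(25, 350, 300), rng.uniform(-1, 1, 300)])
y = np.exp(-X[:, 1] ** 2 / 0.1) * (1 - 0.3 * X[:, 0] / 350)

# 400 trees, each fit on a bootstrap sample; max_features=0.5 subsamples
# features at each split (assumed setting).
rf = RandomForestRegressor(n_estimators=400, max_features=0.5, random_state=0)
rf.fit(X, y)

X_new = np.array([[200.0, 0.0], [300.0, 0.5]])
per_tree = np.stack([tree.predict(X_new) for tree in rf.estimators_])  # (400, 2)
ensemble = per_tree.mean(axis=0)  # averaging the trees reproduces rf.predict
```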

In contrast, decision tree regression partitions the input data with a single hierarchical structure by iteratively selecting optimal feature thresholds. This methodology offers high interpretability and clearly defined decision logic, making it suitable for exploratory analysis. However, on small or moderately sized datasets it is particularly prone to overfitting, capturing noise rather than the true signal. Consequently, while a decision tree may perform well on the training data, it often generalizes poorly to unseen data. Notwithstanding this limitation, decision tree regression remains a valuable baseline for comparing the performance of more robust ensemble methods such as random forests.
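The overfitting tendency can be illustrated with a fully grown `DecisionTreeRegressor` on a small, noisy toy curve (hypothetical data, not the measured dataset): with distinct inputs, an unlimited-depth tree reproduces its training targets exactly, while its score on held-out points is lower.

```python
import numpy as np
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Hypothetical noisy Gaussian-like field sweep
rng = np.random.default_rng(2)
H = rng.uniform(-1, 1, 150).reshape(-1, 1)
y = np.exp(-H[:, 0] ** 2 / 0.1) + rng.normal(0, 0.05, 150)

H_tr, H_te, y_tr, y_te = train_test_split(H, y, test_size=0.3, random_state=0)

# Default max_depth=None: the tree keeps splitting until every leaf is pure,
# i.e., it memorizes the training noise.
tree = DecisionTreeRegressor(random_state=0).fit(H_tr, y_tr)
r2_train = r2_score(y_tr, tree.predict(H_tr))
r2_test = r2_score(y_te, tree.predict(H_te))
```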

To learn non-linear mappings from magnetic field to magnetoresistivity, the NN was implemented as a deep feedforward network. The enhanced NN architecture consists of four dense layers: an input layer with 512 neurons, hidden layers with 256 and 128 neurons, and a final output layer. Dropout layers with rates of 0.4 and 0.3 are used to reduce overfitting, and all hidden layers use the ReLU activation function to introduce non-linearity. To stabilize training, batch normalization is applied after the first dense layer. The model is trained with the Adam optimizer at a learning rate of 0.0001 to minimize the mean squared error loss. Adaptive learning-rate reduction (factor = 0.6, patience = 150) and early stopping (patience = 200) are used to avoid overfitting and improve generalization.
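The architecture described above maps naturally onto Keras. The sketch below assumes TensorFlow/Keras, two input features (temperature and field), and dropout placed after the 512- and 256-neuron layers; the text gives the dropout rates but not their exact placement. `X_train`, `y_train`, `X_val`, and `y_val` are hypothetical arrays, so the training call is left commented out.

```python
from tensorflow import keras


def build_mr_network(n_features: int = 2) -> keras.Model:
    """Feedforward regressor: 512 -> 256 -> 128 -> 1 (placement of dropout assumed)."""
    model = keras.Sequential([
        keras.Input(shape=(n_features,)),
        keras.layers.Dense(512, activation="relu"),
        keras.layers.BatchNormalization(),   # after the first dense layer
        keras.layers.Dropout(0.4),
        keras.layers.Dense(256, activation="relu"),
        keras.layers.Dropout(0.3),
        keras.layers.Dense(128, activation="relu"),
        keras.layers.Dense(1),               # linear output: magnetoresistivity
    ])
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4), loss="mse")
    return model


callbacks = [
    keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.6, patience=150),
    keras.callbacks.EarlyStopping(monitor="val_loss", patience=200,
                                  restore_best_weights=True),
]
model = build_mr_network()
# model.fit(X_train, y_train, validation_data=(X_val, y_val),
#           epochs=5000, callbacks=callbacks)
```

The large patience values only make sense with a generous epoch budget, since the learning rate is reduced and training stopped based on validation loss.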

GPR offers a probabilistic modeling approach that provides not only point predictions but also measures of uncertainty. Hyperparameters were optimized with up to 30 restarts to avoid local minima in the likelihood landscape. The model was trained on normalized targets and regularized with an additive noise term. GPR interpolated well within the training range and supplied predictive uncertainty in the extrapolated region, which makes the method useful for computing confidence intervals in sparse or noisy regions.
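A scikit-learn sketch of this setup follows. The kernel choice (a scaled anisotropic RBF plus a `WhiteKernel` noise term), the input rescaling, and the toy data are assumptions; `n_restarts_optimizer=30` and `normalize_y=True` mirror the restarts and normalized targets mentioned above. The comparison at the end shows the predictive standard deviation growing when the model is queried beyond the training temperatures.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel

# Hypothetical toy data on (T, H); temperature rescaled by 350 °C
rng = np.random.default_rng(3)
T = rng.uniform(25, 350, 60)
H = rng.uniform(-1, 1, 60)
X = np.column_stack([T / 350.0, H])
y = (1 - 0.3 * T / 350) * np.exp(-H**2 / 0.1) + rng.normal(0, 0.01, 60)

kernel = (ConstantKernel(1.0)
          * RBF(length_scale=[1.0, 0.3], length_scale_bounds=(1e-2, 1e2))
          + WhiteKernel(noise_level=1e-3))       # additive noise term
gpr = GaussianProcessRegressor(kernel=kernel, normalize_y=True,
                               n_restarts_optimizer=30, random_state=0)
gpr.fit(X, y)

X_in = np.array([[200 / 350, 0.0]])    # inside the measured range
X_out = np.array([[700 / 350, 0.0]])   # well beyond 350 °C
mean_in, std_in = gpr.predict(X_in, return_std=True)
mean_out, std_out = gpr.predict(X_out, return_std=True)
```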

Linear Regression serves as a baseline model for evaluating the linearity of the relationship between temperature, magnetic field, and magnetoresistivity. While computationally efficient, it is insufficient to capture the complex, non-linear interactions in the dataset, highlighting the need for more advanced techniques.
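Why a linear baseline struggles here can be seen on a single idealized, symmetric Gaussian-like field sweep at fixed temperature (a toy curve, not measured data): an ordinary least-squares fit of the curve against the field finds essentially zero slope, so the $R^2$ score is close to zero.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score

H = np.linspace(-1, 1, 101).reshape(-1, 1)   # symmetric field sweep
y = np.exp(-H[:, 0] ** 2 / 0.1)              # Gaussian-like MR curve at fixed T

lin = LinearRegression().fit(H, y)
r2_lin = r2_score(y, lin.predict(H))         # ~0: a straight line cannot follow the peak
```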

Synthetic data generation plays a critical role in enhancing the robustness and generalizability of the ML models applied in this study. Gaussian noise is added to the experimental data to simulate the variability often encountered in real-world measurements. In addition, synthetic magnetoresistivity values are computed for temperatures beyond the experimental range using a magnetization model whose parameters are fitted to the experimental data. These model predictions are further smoothed with a Gaussian filter to minimize abrupt variations while retaining key trends. This approach ensures that the ML models are trained on a broader spectrum of conditions, facilitating improved extrapolation and predictive accuracy for unseen temperature and magnetic field values. After augmentation, synthetic data made up 50% of the training pool.
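The augmentation steps can be sketched as follows. The paper's magnetization model and fitted parameter values are not reproduced in this text, so the sketch assumes a reduced-magnetization law $m(T) = (1 - T/T_C)^{\beta}$ (with $T$ in kelvin) and a hypothetical rule that rescales the hottest measured curve by the model's magnetization ratio; `augment`, `magnetization_model`, and all numerical values are illustrative. With four measured curves, two noisy copies, and two extrapolated curves, synthetic rows make up 50% of the pool, matching the fraction stated above.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def magnetization_model(T_celsius, Tc_kelvin, beta):
    """Assumed reduced-magnetization law m(T) = (1 - T/Tc)**beta, T in kelvin."""
    t = (np.asarray(T_celsius, dtype=float) + 273.15) / Tc_kelvin
    return np.clip(1.0 - t, 0.0, None) ** beta


def augment(H, rho_exp, T_exp, T_new, Tc_kelvin, beta,
            n_noisy=2, noise_sd=0.01, smooth_sigma=2.0, seed=0):
    """Stack measured, noise-perturbed, and extrapolated curves into one pool.

    rho_exp has shape (len(T_exp), len(H)); returns flat arrays
    (T, H, rho, is_synthetic).
    """
    rng = np.random.default_rng(seed)
    curves = [(T, c, False) for T, c in zip(T_exp, rho_exp)]
    # 1) Gaussian noise added to randomly chosen measured curves
    for i in rng.choice(len(T_exp), size=n_noisy, replace=False):
        curves.append((T_exp[i], rho_exp[i] + rng.normal(0.0, noise_sd, H.size), True))
    # 2) curves above the measured range: rescale the hottest measured curve by
    #    the model's magnetization ratio (assumed rule), then Gaussian-smooth it
    m_ref = magnetization_model(T_exp[-1], Tc_kelvin, beta)
    for T in T_new:
        scaled = rho_exp[-1] * magnetization_model(T, Tc_kelvin, beta) / m_ref
        curves.append((T, gaussian_filter1d(scaled, sigma=smooth_sigma), True))
    T_all = np.concatenate([np.full(H.size, T, dtype=float) for T, _, _ in curves])
    H_all = np.tile(H, len(curves))
    rho_all = np.concatenate([c for _, c, _ in curves])
    synth = np.concatenate([np.full(H.size, s) for _, _, s in curves])
    return T_all, H_all, rho_all, synth


# Hypothetical usage with four toy "measured" curves
H = np.linspace(-1, 1, 51)
T_exp = np.array([25.0, 150.0, 250.0, 350.0])
rho_exp = np.array([(1 - 0.2 * T / 350) * np.exp(-H**2 / 0.1) for T in T_exp])
T_pool, H_pool, rho_pool, is_synth = augment(
    H, rho_exp, T_exp, [400.0, 450.0], Tc_kelvin=850.0, beta=0.4)
```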

The dataset consists of 300 measurements collected over a temperature range of 25 °C to 350 °C across the applied magnetic-field range. These data points are divided into 70% for training, 15% for validation, and 15% for testing. Cross-validation is performed to ensure model generalizability, and hyperparameters are optimized for SVR, RFR, NN, and GPR. Although the dataset is modest, the magnetoresistivity exhibits a structured response: at each temperature it is approximately Gaussian-like in the intermediate field range, while showing clear and systematic deviations in the high-field tails. Moreover, its temperature evolution follows a physically constrained trend that can be approximated by a simple closed-form temperature law, which supports stable learning and enables estimation of $T_C$. This structure reduces the amount of data required to learn robust patterns, but the tail discrepancies indicate that a single simple parametric form is insufficient across the full field range. Therefore, ML is employed to learn a global surrogate model that captures both the Gaussian-like core and the non-Gaussian tails, and to support extrapolation beyond the experimental temperature limit.
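A minimal sketch of the 70/15/15 partition of the 300 measurements using shuffled indices; the random seed and the index-based approach are assumptions, since the text does not specify how the split was drawn.

```python
import numpy as np


def split_70_15_15(n_samples, seed=0):
    """Shuffle indices and split them 70/15/15 into train/validation/test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = round(0.70 * n_samples)
    n_val = round(0.15 * n_samples)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = split_70_15_15(300)   # 210 / 45 / 45 indices
```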

Although many regression algorithms exist, we intentionally focus on a compact set of widely used approaches that cover complementary modeling strategies. This supports the main aim of the paper, which is a controlled comparison under identical preprocessing, data splitting, and evaluation metrics. For reproducibility, all hyperparameters and training settings are reported so that readers can regenerate the results. The next section presents the comparative performance and discusses the predictive behavior of each method.

3. Results and Discussion

The behavior of the MR data, as shown in Figure 2, follows an almost perfect Gaussian pattern in the central and intermediate regions of the magnetic field range. This consistency underscores the quality of the dataset and the robustness of the machine learning models. However, in the tail regions, discrepancies between the magnetoresistivity curves at different temperatures become more pronounced, which is a common feature of magnetic systems as they approach magnetic saturation.

As magnetic saturation effects dominate in the tail regions, the magnetoresistive sensitivity is reduced, leading to the observed deviations. These deviations do not undermine the overall fit or predictive accuracy of the models in the primary and intermediate regions but rather highlight the physical limitations and complexities of magnetoresistive behavior at extreme magnetic field values. This transition from regular Gaussian behavior to saturation effects provides valuable insights into the material’s magnetoresistive properties, which are critical for understanding its performance across different regimes.

To extend the understanding of the material's properties beyond the experimentally measured range, ML techniques were employed to analyze the magnetoresistivity dataset as a function of temperature and magnetic field. The dataset, comprising measurements across 25–350 °C and the full applied-field range, was used to train several ML regression models [10], which were then used to predict magnetoresistivity beyond the experimental limits [8].

The ML models were evaluated using the Mean Absolute Error (MAE), the Mean Squared Error (MSE), and the $R^2$ score to assess predictive accuracy [29]. To summarize the temperature-wise robustness of the two best-performing models, we report the average and standard deviation of their scores across the temperature steps listed in Table 1 and Table 2. Over this range, the Random Forest Regressor achieves a higher average $R^2$ score than the Support Vector Regressor and, consistently, a lower average MSE (Table 1). Since the magnetoresistivity curves are dominated by a Gaussian-like profile, even small gains in overall agreement are valuable for preserving the full curve shape; therefore, Random Forest is adopted as the preferred model for high-temperature extrapolative predictions beyond the experimental window [30,31].
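The temperature-wise summary described above (scores computed per temperature step, then averaged with a standard deviation) can be sketched with scikit-learn's metric functions. The helper name `per_temperature_scores` is hypothetical, and the population standard deviation (`np.std` with its default `ddof=0`) is an assumption.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score


def per_temperature_scores(T, y_true, y_pred):
    """Compute MAE, MSE and R^2 separately for each temperature step,
    then summarize each metric as (mean, standard deviation)."""
    T, y_true, y_pred = (np.asarray(a, dtype=float) for a in (T, y_true, y_pred))
    scores = {"MAE": [], "MSE": [], "R2": []}
    for t in np.unique(T):
        m = T == t
        scores["MAE"].append(mean_absolute_error(y_true[m], y_pred[m]))
        scores["MSE"].append(mean_squared_error(y_true[m], y_pred[m]))
        scores["R2"].append(r2_score(y_true[m], y_pred[m]))
    return {k: (float(np.mean(v)), float(np.std(v))) for k, v in scores.items()}


# Toy usage: perfect predictions at 100 °C, one error of 1 at 200 °C
summary = per_temperature_scores(
    T=[100, 100, 100, 200, 200, 200],
    y_true=[1, 2, 3, 1, 2, 3],
    y_pred=[1, 2, 3, 1, 2, 4],
)
```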

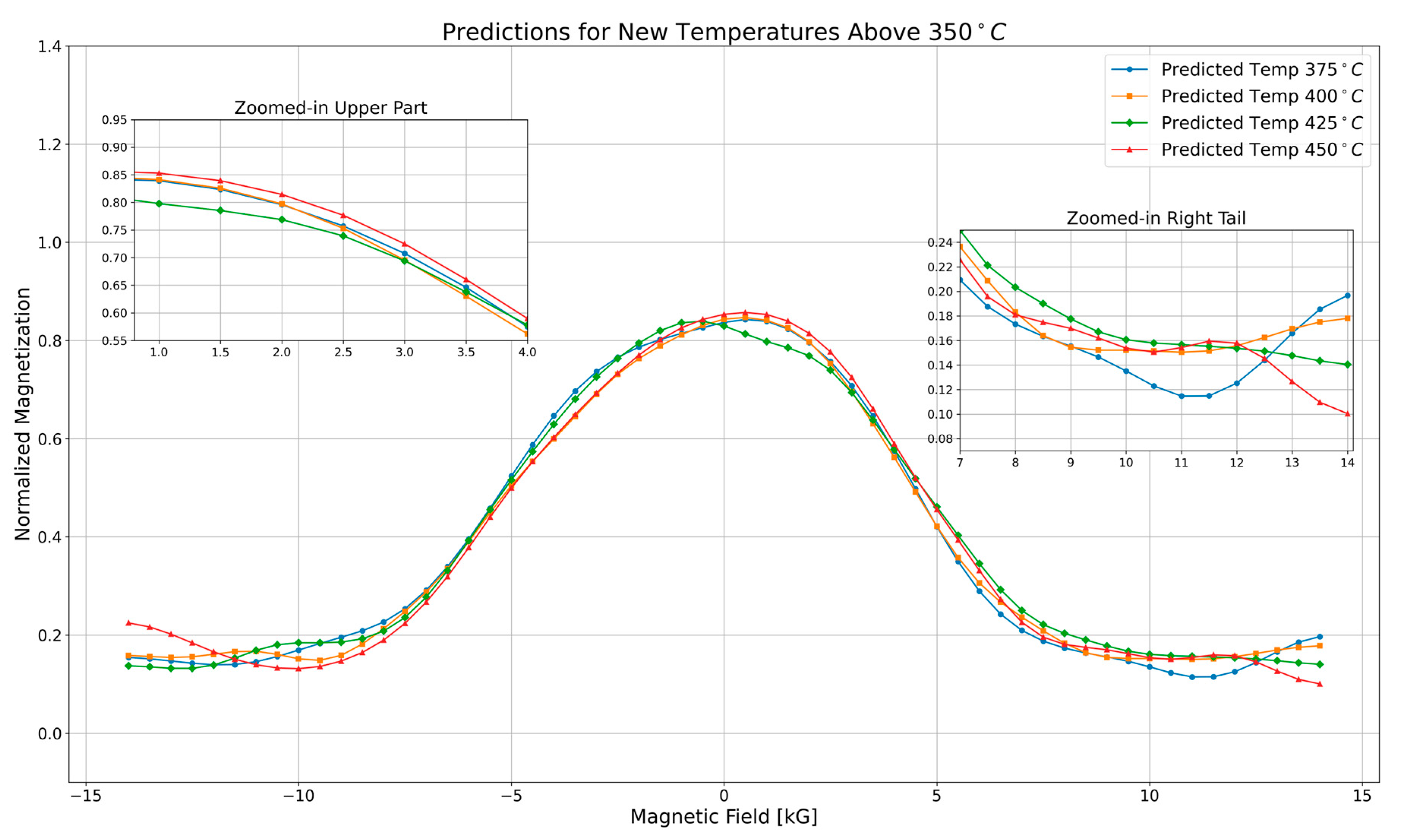

The predictions plot in Figure 2 illustrates the variation of the normalized magnetization with the magnetic field at four temperatures above 350 °C. Each curve represents a specific temperature and follows a Gaussian-like profile, peaking at zero magnetic field and decreasing symmetrically as the field strength increases. As the temperature increases, the magnetization decreases, as expected from thermal agitation reducing magnetic alignment. The 450 °C curve (red) consistently exhibits the lowest values, while the lowest-temperature curve (blue) maintains the highest values across the field range.

The zoomed-in upper region, near the peak, gives a closer look at how the magnetization decreases with increasing magnetic field. The curves follow a smooth downward trend, with higher-temperature curves lying below lower-temperature ones. This gradual separation confirms the expected temperature-dependent decline in magnetization, with no abrupt changes in behavior.

The zoomed-in right tail highlights the behavior at high magnetic fields. In this region, the magnetization values are generally low, but temperature-driven variations cause slight deviations from the Gaussian shape. The 450 °C curve (red) reaches the lowest values, reflecting stronger thermal effects at higher temperatures. Despite these differences, all curves level off at low values, suggesting a reduced sensitivity to the magnetic field at elevated temperatures, where thermal fluctuations dominate.

Using the refined models, magnetoresistivity was predicted at four temperatures significantly higher than 350 °C, up to 450 °C. These predictions provide insights into the behavior of the material at temperatures that are challenging to reach under standard laboratory conditions [32]. The plots reveal that as the temperature increases beyond 350 °C, the predicted magnetoresistivity continues to follow the expected trend, indicating the models' capability to extrapolate beyond the experimentally available data [33].
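The sketch below shows how a full field sweep could be queried from a trained Random Forest at a temperature above 350 °C. It is illustrative only: the training pool (including synthetic curves at 400 °C and 450 °C) and the target temperature are hypothetical. Because a Random Forest averages leaf values drawn from its training targets, its predictions always stay within the range of those targets; predictions above the measured window therefore depend on the training pool covering those temperatures, which is the role of the synthetic curves described in Section 2.2.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Hypothetical pool: measured temperatures plus synthetic ones above 350 °C
T_pool = np.repeat([25.0, 150.0, 250.0, 350.0, 400.0, 450.0], 51)
H_pool = np.tile(np.linspace(-1, 1, 51), 6)
rho_pool = (1 - 0.4 * T_pool / 450) * np.exp(-H_pool**2 / 0.1)

rf = RandomForestRegressor(n_estimators=400, random_state=0)
rf.fit(np.column_stack([T_pool, H_pool]), rho_pool)

# Predict a full field sweep at a target temperature beyond the measured window
H_grid = np.linspace(-1, 1, 201)
T_target = 425.0
rho_pred = rf.predict(np.column_stack([np.full_like(H_grid, T_target), H_grid]))
```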

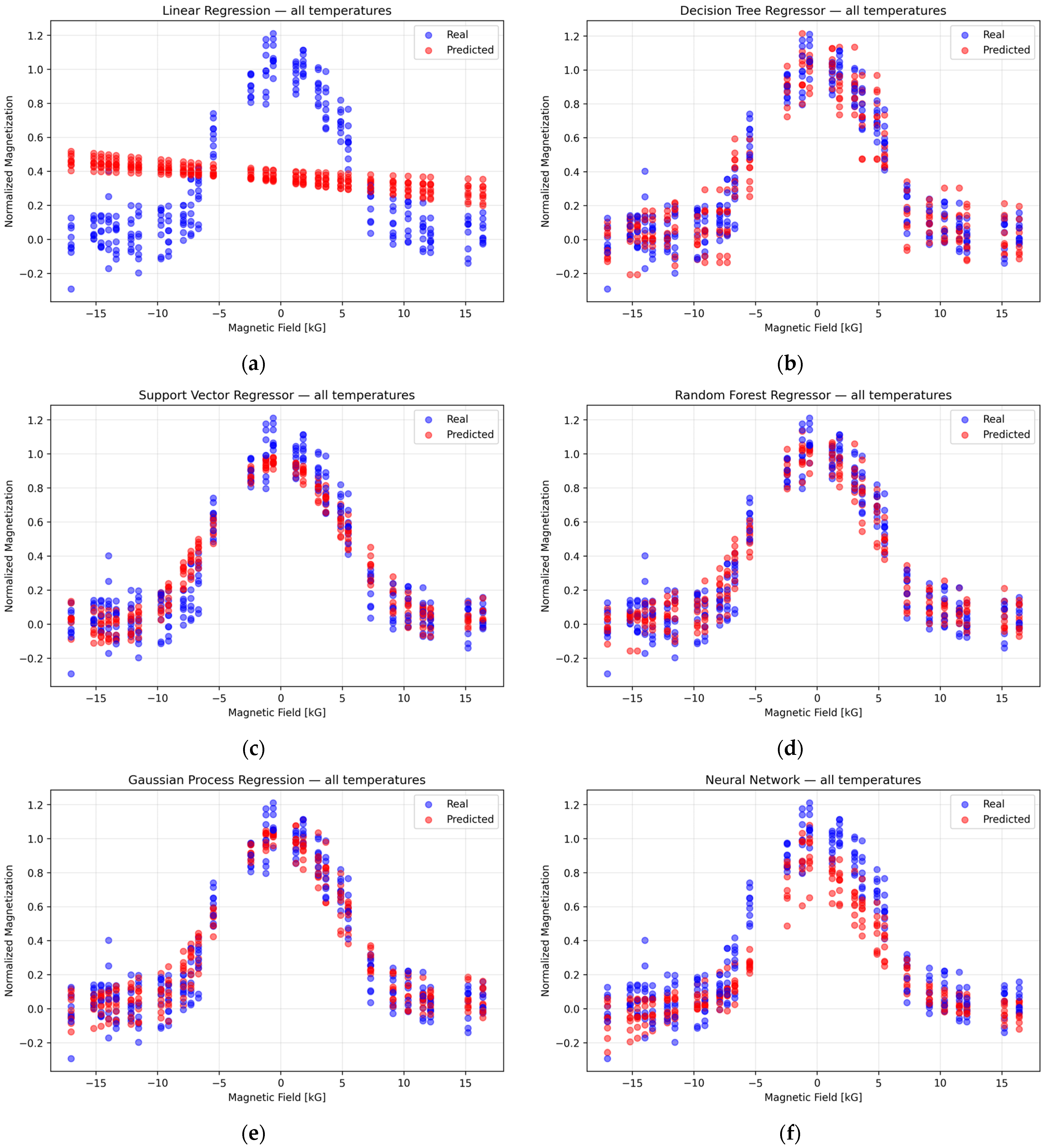

Figure 3 compares real and predicted values for the different ML models at a fixed temperature. The RFR model provides the most accurate predictions: its scatter plot shows strong alignment between predicted and actual values, while the other models show larger deviations, indicating the superior predictive power of the Random Forest model in this scenario [34,35,36].

Importantly, the Random Forest Regression (RFR) model did not merely achieve high predictive accuracy; it also captured meaningful physical trends inherent in the data. Specifically, the model reproduced the tendency of increasing temperature to raise the material's baseline resistivity, a consequence of enhanced scattering at higher $T$, while a higher magnetic field lowers the resistivity by suppressing magnetic scattering. In other words, it reflected the well-known magnetoresistive behavior in which an external field reduces the resistivity (negative magnetoresistance) by aligning the magnetic moments and thereby reducing spin-disorder scattering [37].

These trends are consistent with established physics: near $T_C$, where the spins become disordered, one typically observes a strong negative magnetoresistance (a significant drop in resistivity under an applied field), whereas at low temperatures, deep in the ferromagnetic phase with ordered spins, the magnetoresistive effect is much weaker [37].

The key point is that the RFR's predictions respect known physical behavior (e.g., no unphysical spikes or trends), indicating that the model learned underlying dependencies rather than spurious correlations. In effect, the RFR behaves like an implicit phenomenological model of resistivity as a function of temperature and field. Its learned mapping can be interpreted as capturing separate contributions of temperature and magnetic field, for instance:

$$\rho(T,H) \approx \rho_0(T) + \rho_{\mathrm{sd}}(T,H),$$

where $\rho_0(T)$ is a baseline resistivity that increases with temperature (reflecting temperature-dependent scattering processes), and $\rho_{\mathrm{sd}}(T,H)$ is a field-dependent component associated with spin-disorder scattering, which decreases as the field $H$ increases.

Physically, the baseline term $\rho_0(T)$ represents the resistivity in the absence of magnetic scattering (e.g., due to lattice or impurity scattering), while the spin-disorder term $\rho_{\mathrm{sd}}(T,H)$ represents the additional resistivity from spin disorder, which is suppressed by an external magnetic field [38]. This form is analogous to Matthiessen's rule, in which the total resistivity is approximately the sum of independent contributions, and mirrors what is observed in magnetoresistive materials.
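A short numerical illustration of one consequence of the additive form $\rho(T,H) \approx \rho_0(T) + \rho_{\mathrm{sd}}(T,H)$: the field-induced change $\rho(T,0) - \rho(T,H)$ depends only on $\rho_{\mathrm{sd}}$, so swapping in a different baseline $\rho_0(T)$ leaves it unchanged. The component functions below are hypothetical toy models (arbitrary units), not fits to the measured data.

```python
def rho_total(T, H, rho0, rho_sd):
    """Matthiessen-like additive form: rho(T, H) = rho0(T) + rho_sd(T, H)."""
    return rho0(T) + rho_sd(T, H)


def rho0_a(T):
    return 20.0 + 0.05 * T          # toy baseline rising with temperature


def rho0_b(T):
    return 35.0 + 0.02 * T          # a different toy baseline


def rho_sd(T, H):
    # Toy spin-disorder term: grows with T, suppressed as |H| increases
    return 2.0 * (T / 350.0) / (1.0 + (H / 0.2) ** 2)


T, H = 300.0, 0.5
delta_a = rho_total(T, 0.0, rho0_a, rho_sd) - rho_total(T, H, rho0_a, rho_sd)
delta_b = rho_total(T, 0.0, rho0_b, rho_sd) - rho_total(T, H, rho0_b, rho_sd)
# delta_a and delta_b agree: the baseline cancels in the field-induced change
```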

Near $T_C$, the spin-disorder contribution $\rho_{\mathrm{sd}}$ is large (random spins increase the resistivity), but a sufficiently strong magnetic field can align the spins and drastically reduce this part of the resistivity [37]. Far below $T_C$, the spins are mostly aligned even at zero field, so $\rho_{\mathrm{sd}}$ is inherently small and an applied field yields only a minor resistivity change, consistent with the weaker magnetoresistance observed at low $T$.

Although this two-component form was not explicitly imposed in the modeling, the predictions of the RFR (and, to a lesser extent, the SVR) are consistent with it, reflecting the underlying physical relationship captured from the training data. This consistency helps explain the model's high predictive accuracy and the fact that its predictions conform to established physical laws of magnetoresistivity, lending credibility to its use in analyzing and predicting magnetoresistive behavior.

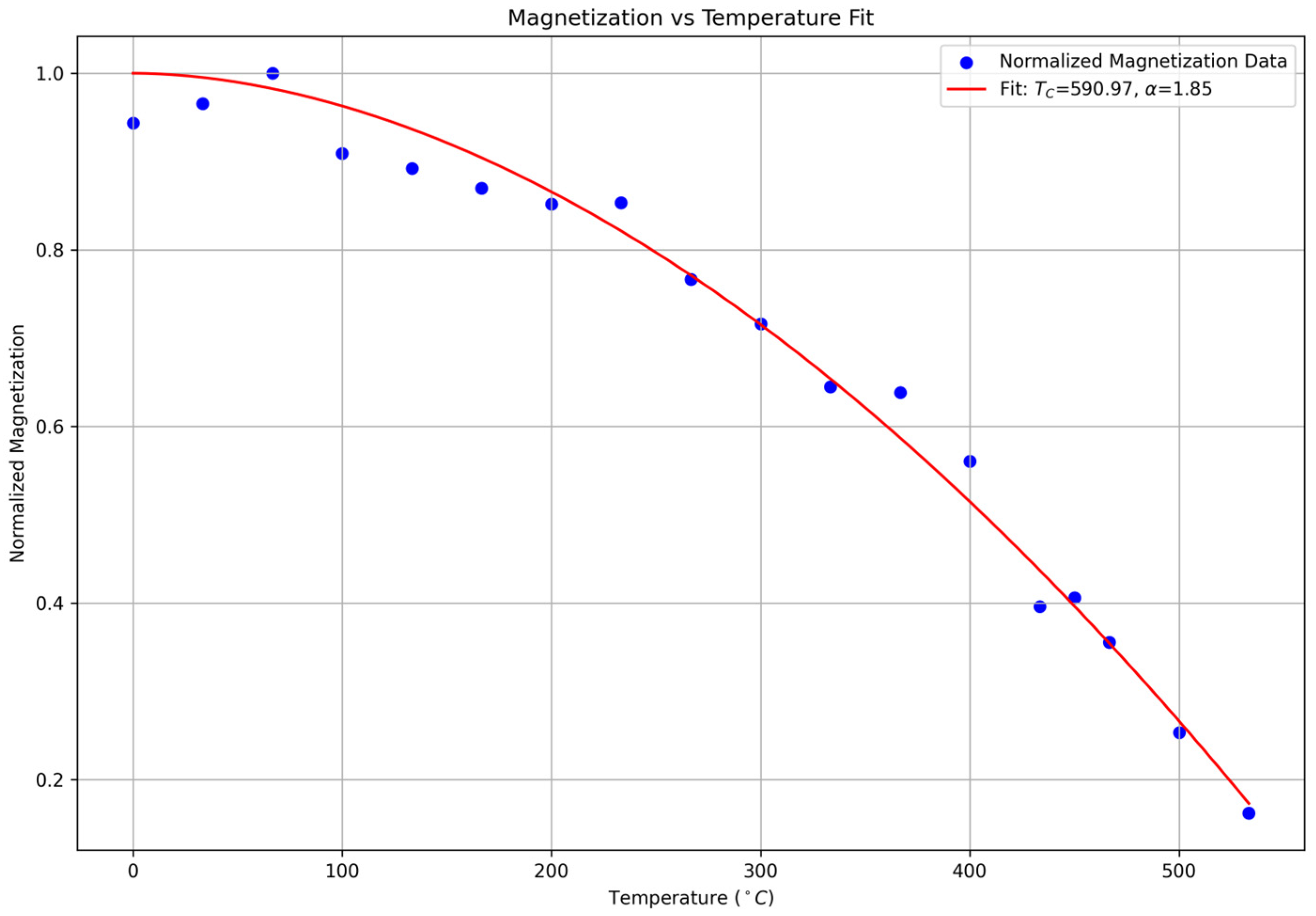

After RFR was identified as the best-performing model, predictions were extended to temperatures above 350 °C, beyond the experimental range. $T_C$ was then determined by fitting a temperature-scaling law, with one additional fitting parameter, to the predicted magnetoresistivity data (Figure 4). The resulting $T_C$ estimate supports both the methodology and the quality of the data and model predictions: it agrees closely with the $T_C$ of Permalloy reported in recent studies [17], underscoring the ability of the RFR model to predict critical material properties.
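The fitting step can be sketched with `scipy.optimize.curve_fit`. Because the fitted equation is not reproduced in this text, the sketch assumes a power law $y(T) = A\,(1 - T/T_C)^{\beta}$ with temperatures in kelvin, applied to a hypothetical, noise-free quantity $y(T)$ standing in for a value extracted from the predicted curves; the true $T_C = 870$ K used to generate the toy data is arbitrary. The lower bound on $T_C$ keeps the base of the power positive over the data range.

```python
import numpy as np
from scipy.optimize import curve_fit


def reduced_law(T_K, A, Tc, beta):
    """Assumed scaling law y(T) = A * (1 - T/Tc)**beta, with T in kelvin."""
    return A * np.clip(1.0 - T_K / Tc, 1e-12, None) ** beta


# Hypothetical stand-in for a quantity extracted from the predicted curves
T_K = np.linspace(25.0, 500.0, 40) + 273.15
y = reduced_law(T_K, 1.0, 870.0, 0.4)

popt, pcov = curve_fit(
    reduced_law, T_K, y,
    p0=(1.0, 900.0, 0.5),
    bounds=([0.1, T_K.max() + 1.0, 0.05], [10.0, 2000.0, 2.0]),
    x_scale="jac",   # rescale parameters (Tc ~ 1e3, beta ~ 0.4) for the TRF solver
)
A_fit, Tc_fit, beta_fit = popt
Tc_fit_celsius = Tc_fit - 273.15
```

With real predictions, the residual noise and the distance between the highest available temperature and $T_C$ largely control how well $T_C$ is constrained; `pcov` gives a first estimate of that uncertainty.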

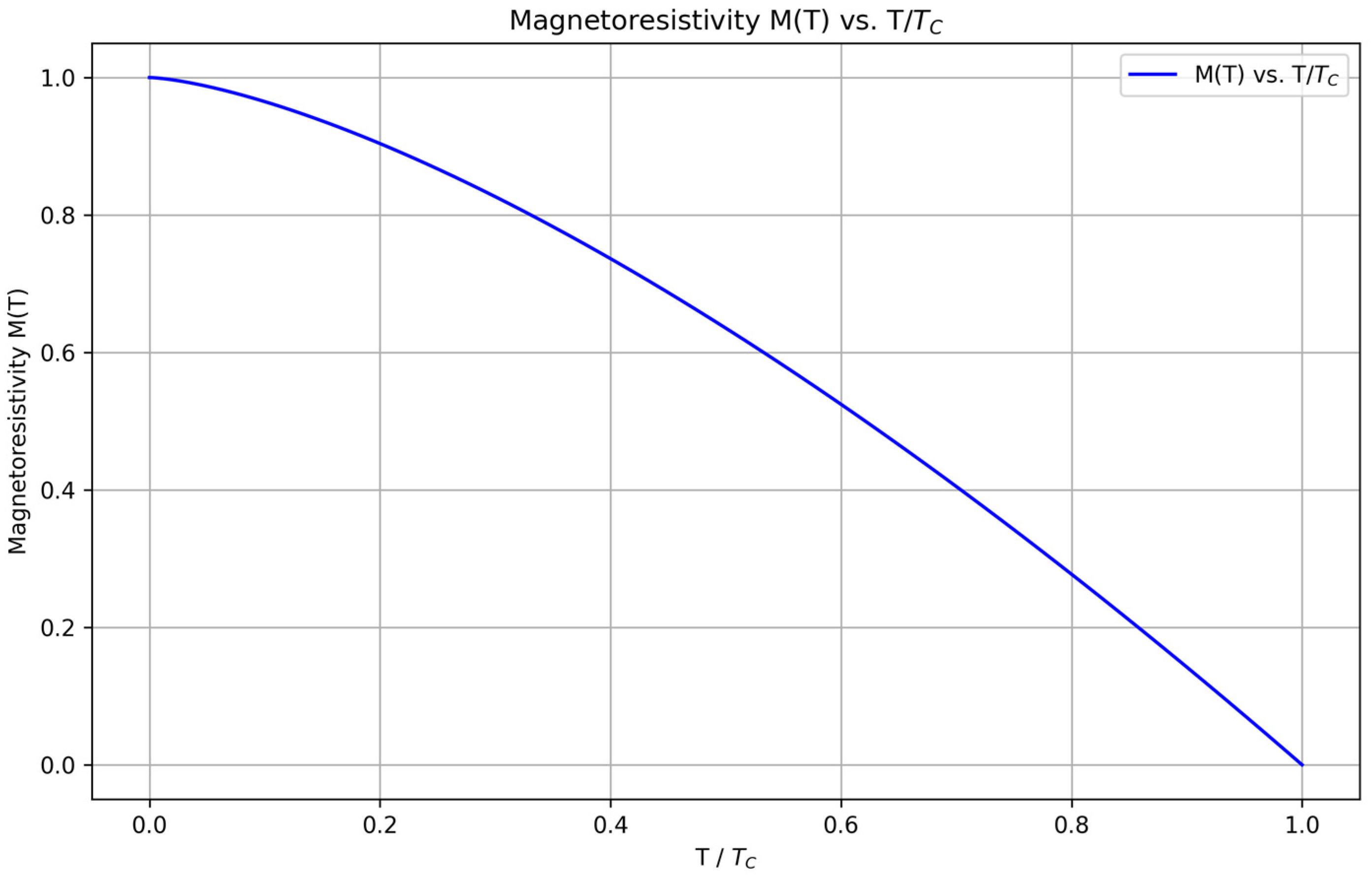

Figure 5 shows the normalized magnetoresistivity as a function of the normalized temperature $T/T_C$ [39,40]. This plot is particularly significant because it compares well with theoretical models and existing literature, indicating that the ML predictions align closely with known physical behavior [40,41]. The curve shows a smooth decline in magnetoresistivity with increasing normalized temperature, consistent with theoretical expectations, which further supports the model's accuracy in capturing the material's intrinsic properties [36].
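Constructing the normalized axes can be sketched as below. Converting to kelvin before forming $T/T_C$ is required for a physically meaningful reduced temperature; dividing the magnetoresistivity by its maximum is an assumed normalization, since the text does not state which reference value was used, and the `Tc_celsius=580.0` in the usage line is an arbitrary placeholder.

```python
import numpy as np


def normalize_curve(T_celsius, rho, Tc_celsius):
    """Return (T/Tc, rho/max(rho)); temperatures are converted to kelvin first."""
    T_K = np.asarray(T_celsius, dtype=float) + 273.15
    t = T_K / (Tc_celsius + 273.15)
    rho = np.asarray(rho, dtype=float)
    return t, rho / rho.max()


# Toy usage with a placeholder Tc
t, r = normalize_curve([25.0, 200.0, 350.0], [1.20, 1.05, 0.90], Tc_celsius=580.0)
```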

Overall, this analysis provided improved predictive models for the material's magnetoresistive behavior and critical temperature transitions [24]. The Random Forest Regression model, in particular, showcased the potential of machine learning for accurately forecasting material properties beyond experimental temperature limits [11]. The extension of predictions to elevated temperatures underscores the effectiveness of these ML models in offering insights into material behavior under conditions that are challenging to replicate experimentally. This study highlights the significant role that machine learning can play in advancing our understanding of complex material properties and in aiding the development of new technologies [42].