Emotion Recognition from Realistic Dynamic Emotional Expressions Cohere with Established Emotion Recognition Tests: A Proof-of-Concept Validation of the Emotional Accuracy Test

Abstract

Highlights

- Recognition of posed, enacted and spontaneous expressions was positively related.

- Individual differences were consistent across the three emotion recognition tests.

- Participants most enjoyed the test with real emotional stories (EAT).

1. Introduction

1.1. Assessing Individual Differences in Emotion Recognition

1.2. The Current Research

2. Method

2.1. Participants

2.2. Measures

2.3. Procedure

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

1. Noteworthy is the classical, dyadic version of the empathic accuracy paradigm (e.g., Ickes et al. 1990; Stinson and Ickes 1992). One limitation of this original version is that it was used with new target individuals each time, so there was no standard test to use across studies. Here, we focus our discussion on a more recent version of the paradigm, which involves a standard set of target individuals used across studies, making each finding directly comparable to previous findings obtained with the same stimulus set.

2. The Emotional Accuracy Test discussed in the present manuscript is identical to the recognition test originally described in Israelashvili et al. (2020a), which referred to accurate emotion recognition.

3. Readers should note that some emotion categories in these tasks (GERT, RMET) do not have a prototypical expression (e.g., playful). Nonetheless, we refer to them as prototypical since we presume that resemblance with prototypical (rather than idiosyncratic) representations of emotional expressions guided the production (GERT) and the selection (RMET) of all emotional stimuli included in these tests.

4. This inclusion criterion was not preregistered for Study 1. Our decision to nevertheless apply it was primarily informed by reviewers’ comments about the need to report only reliable data. Importantly, all patterns of findings reported in this manuscript remain the same (or stronger) when the excluded participants are included in the analyses.

5. Participants also completed the Interpersonal Reactivity Index (IRI; Davis 1983) and the Ten-Item Personality Inventory. We also asked whether participants had had life experiences similar to those described in the videos and assessed their empathic responses toward the person in the video by eliciting written support messages. These measures were collected for research questions not addressed in the present manuscript. Here, we focus on the measures and analyses directly relevant for testing our hypotheses, as specified in the preregistration of the current study.

References

- Baron-Cohen, Simon, Sally Wheelwright, Jacqueline Hill, Yogini Raste, and Ian Plumb. 2001. The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry 42: 241–51.

- Barrett, Lisa Feldman, Ralph Adolphs, Aleix Martinez, Stacy Marsella, and Seth Pollak. 2019. Emotional expressions reconsidered: Challenges to inferring emotion in human facial movements. Psychological Science in the Public Interest 20: 1–68.

- Brown, Casey L., Sandy J. Lwi, Madeleine S. Goodkind, Katherine P. Rankin, Jennifer Merrilees, Bruce L. Miller, and Robert W. Levenson. 2018. Empathic accuracy deficits in patients with neurodegenerative disease: Association with caregiver depression. American Journal of Geriatric Psychiatry 26: 484–93.

- Connolly, Hannah L., Carmen E. Lefevre, Andrew W. Young, and Gary J. Lewis. 2020. Emotion recognition ability: Evidence for a supramodal factor and its links to social cognition. Cognition 197: 104166.

- Davis, Mark. 1983. Measuring Individual Differences in Empathy: Evidence for a Multidimensional Approach. Journal of Personality and Social Psychology 44: 113–26.

- DeRight, Jonathan, and Randall S. Jorgensen. 2015. I just want my research credit: Frequency of suboptimal effort in a non-clinical healthy undergraduate sample. The Clinical Neuropsychologist 29: 101–17.

- Eckland, Nathaniel S., Teresa M. Leyro, Wendy Berry Mendes, and Renee J. Thompson. 2018. A multi-method investigation of the association between emotional clarity and empathy. Emotion 18: 638.

- Elfenbein, Hillary Anger, Abigail A. Marsh, and Nalini Ambady. 2002. Emotional intelligence and the recognition of emotion from facial expressions. In The Wisdom in Feeling: Psychological Processes in Emotional Intelligence. Edited by Lisa Feldman Barrett and Peter Salovey. New York: Guilford Press, pp. 37–59.

- Elfenbein, Hillary Anger, Maw Der Foo, Judith White, Hwee Hoon Tan, and Voon Chuan Aik. 2007. Reading your counterpart: The benefit of emotion recognition accuracy for effectiveness in negotiation. Journal of Nonverbal Behavior 31: 205–23.

- Eyal, Tal, Mary Steffel, and Nicholas Epley. 2018. Perspective mistaking: Accurately understanding the mind of another requires getting perspective, not taking perspective. Journal of Personality and Social Psychology 114: 547.

- Fanelli, Daniele, and John P. A. Ioannidis. 2013. US studies may overestimate effect sizes in softer research. Proceedings of the National Academy of Sciences of the United States of America 110: 15031–36.

- Fischer, Agneta H., and Antony S. R. Manstead. 2016. Social functions of emotion and emotion regulation. In Handbook of Emotions, 4th ed. Edited by Lisa Feldman Barrett, Michael Lewis and Jeannette M. Haviland-Jones. New York: The Guilford Press, pp. 424–39.

- Halberstadt, Amy G., Susanne A. Denham, and Julie C. Dunsmore. 2001. Affective social competence. Social Development 10: 79–119.

- Hall, Judith A., and Marianne Schmid Mast. 2007. Sources of accuracy in the empathic accuracy paradigm. Emotion 7: 438–46.

- Hall, Judith A., Susan A. Andrzejewski, and Jennelle E. Yopchick. 2009. Psychosocial correlates of interpersonal sensitivity: A meta-analysis. Journal of Nonverbal Behavior 33: 149–80.

- Hampson, Elizabeth, Sari M. van Anders, and Lucy I. Mullin. 2006. A female advantage in the recognition of emotional facial expressions: Test of an evolutionary hypothesis. Evolution and Human Behavior 27: 401–16.

- Ickes, William, Linda Stinson, Victor Bissonnette, and Stella Garcia. 1990. Naturalistic social cognition: Empathic accuracy in mixed-sex dyads. Journal of Personality and Social Psychology 59: 730–42.

- Israelashvili, Jacob, Disa Sauter, and Agneta Fischer. 2019a. How Well Can We Assess Our Ability to Understand Others’ Feelings? Beliefs About Taking Others’ Perspectives and Actual Understanding of Others’ Emotions. Frontiers in Psychology 10: 1080.

- Israelashvili, Jacob, Ran R. Hassin, and Hillel Aviezer. 2019b. When emotions run high: A critical role for context in the unfolding of dynamic, real-life facial affect. Emotion 19: 558.

- Israelashvili, Jacob, Disa Sauter, and Agneta Fischer. 2020a. Different faces of empathy: Feelings of similarity disrupt recognition of negative emotions. Journal of Experimental Social Psychology 87: 103912.

- Israelashvili, Jacob, Disa Sauter, and Agneta Fischer. 2020b. Two facets of affective empathy: Concern and distress have opposite relationships to emotion recognition. Cognition and Emotion 34: 1112–22.

- Jones, Catherine R. G., Andrew Pickles, Milena Falcaro, Anita J. S. Marsden, Francesca Happé, Sophie K. Scott, Disa Sauter, Jenifer Tregay, Rebecca J. Phillips, Gillian Baird, et al. 2011. A multimodal approach to emotion recognition ability in autism spectrum disorders. Journal of Child Psychology and Psychiatry 52: 275–85.

- Kenny, David A. 2013. Issues in the measurement of judgmental accuracy. In Understanding Other Minds: Perspectives from Developmental Social Neuroscience. Oxford: Oxford University Press, pp. 104–16.

- Lee, Jerry W., Patricia S. Jones, Yoshimitsu Mineyama, and Xinwei Esther Zhang. 2002. Cultural differences in responses to a Likert scale. Research in Nursing & Health 25: 295–306.

- Lewis, Gary J., Carmen E. Lefevre, and Andrew W. Young. 2016. Functional architecture of visual emotion recognition ability: A latent variable approach. Journal of Experimental Psychology: General 145: 589–602.

- Mackes, Nuria K., Dennis Golm, Owen G. O’Daly, Sagari Sarkar, Edmund J. S. Sonuga-Barke, Graeme Fairchild, and Mitul A. Mehta. 2018. Tracking emotions in the brain–revisiting the empathic accuracy task. NeuroImage 178: 677–86.

- Nelson, Nicole L., and James A. Russell. 2013. Universality revisited. Emotion Review 5: 8–15.

- Oakley, Beth F., Rebecca Brewer, Geoffrey Bird, and Caroline Catmur. 2016. Theory of mind is not theory of emotion: A cautionary note on the Reading the Mind in the Eyes Test. Journal of Abnormal Psychology 125: 818.

- Olderbak, Sally, Oliver Wilhelm, Gabriel Olaru, Mattis Geiger, Meghan W. Brenneman, and Richard D. Roberts. 2015. A psychometric analysis of the reading the mind in the eyes test: Toward a brief form for research and applied settings. Frontiers in Psychology 6: 1503.

- Ong, Desmond, Zhengxuan Wu, Zhi-Xuan Tan, Marianne Reddan, Isabella Kahhale, Alison Mattek, and Jamil Zaki. 2019. Modeling emotion in complex stories: The Stanford Emotional Narratives Dataset. IEEE Transactions on Affective Computing.

- Peter, Paul, Gilbert A. Churchill, Jr., and Tom J. Brown. 1993. Caution in the use of difference scores in consumer research. Journal of Consumer Research 19: 655–62.

- Rauers, Antje, Elisabeth Blanke, and Michaela Riediger. 2013. Everyday empathic accuracy in younger and older couples: Do you need to see your partner to know his or her feelings? Psychological Science 24: 2210–17.

- Rimé, Bernard. 2009. Emotion Elicits the Social Sharing of Emotion: Theory and Empirical Review. Emotion Review 1: 60–85.

- Rimé, Bernard, Batja Mesquita, Stefano Boca, and Pierre Philippot. 1991. Beyond the emotional event: Six studies on the social sharing of emotion. Cognition & Emotion 5: 435–65.

- Russell, James A. 1994. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin 115: 102–41.

- Salovey, Peter, and John D. Mayer. 1990. Emotional Intelligence. Imagination, Cognition and Personality 9: 185–211.

- Sauter, Disa A., and Agneta H. Fischer. 2018. Can perceivers recognize emotions from spontaneous expressions? Cognition & Emotion 32: 504–15.

- Scherer, Klaus R., and Ursula Scherer. 2011. Assessing the ability to recognize facial and vocal expressions of emotion: Construction and validation of the emotion recognition Index. Journal of Nonverbal Behavior 35: 305–26.

- Scherer, Klaus R., Elizabeth Clark-Polner, and Marcello Mortillaro. 2011. In the eye of the beholder? Universality and cultural specificity in the expression and perception of emotion. International Journal of Psychology 46: 401–35.

- Schlegel, Katja, Didier Grandjean, and Klaus R. Scherer. 2012. Emotion recognition: Unidimensional ability or a set of modality- and emotion-specific skills? Personality and Individual Differences 53: 16–21.

- Schlegel, Katja, Didier Grandjean, and Klaus R. Scherer. 2014. Introducing the Geneva emotion recognition test: An example of Rasch based test development. Psychological Assessment 26: 666–72.

- Schlegel, Katja, Thomas Boone, and Judith A. Hall. 2017. Individual differences in interpersonal accuracy: A multi-level meta-analysis to assess whether judging other people is one skill or many. Journal of Nonverbal Behavior 41: 103–37.

- Schlegel, Katja, Tristan Palese, Marianne Schmid Mast, Thomas H. Rammsayer, Judith A. Hall, and Nora A. Murphy. 2019. A meta-analysis of the relationship between emotion recognition ability and intelligence. Cognition and Emotion 34: 329–51.

- Schmitt, Neal. 1996. Uses and abuses of coefficient alpha. Psychological Assessment 8: 350–53.

- Shipley, Walter C. 1940. A self-administering scale for measuring intellectual impairment and deterioration. The Journal of Psychology 9: 371–77.

- Stinson, Linda, and William Ickes. 1992. Empathic accuracy in the interactions of male friends versus male strangers. Journal of Personality and Social Psychology 62: 787–97.

- Sze, Jocelyn A., Madeleine S. Goodkind, Anett Gyurak, and Robert W. Levenson. 2012. Aging and Emotion Recognition: Not Just a Losing Matter. Psychology and Aging 27: 940–50.

- Ta, Vivian, and William Ickes. 2017. Empathic Accuracy: Standard Stimulus Paradigm (EA-SSP). In The Sourcebook of Listening Research. Available online: http://dx.doi.org/10.1002/9781119102991.ch23 (accessed on 1 May 2021).

- Tang, Yulong, Paul L. Harris, Hong Zou, Juan Wang, and Zhinuo Zhang. 2020. The relationship between emotion understanding and social skills in preschoolers: The mediating role of verbal ability and the moderating role of working memory. European Journal of Developmental Psychology, 1–17.

- Wagner, Hugh. 1990. The spontaneous facial expression of differential positive and negative emotions. Motivation and Emotion 14: 27–43.

- Wilhelm, Oliver, Andrea Hildebrandt, Karsten Manske, Annekathrin Schacht, and Werner Sommer. 2014. Test battery for measuring the perception and recognition of facial expressions of emotion. Frontiers in Psychology 5: 404.

- Wilhelm, Peter, and Meinrad Perrez. 2004. How is my partner feeling in different daily-life settings? Accuracy of spouses’ judgments about their partner’s feelings at work and at home. Social Indicators Research 67: 183–246.

- Zaki, Jamil, Niall Bolger, and Kevin Ochsner. 2008. It takes two: The interpersonal nature of empathic accuracy. Psychological Science 19: 399–404.

- Zhou, Haotian, Elizabeth A. Majka, and Nicholas Epley. 2017. Inferring perspective versus getting perspective: Underestimating the value of being in another person’s shoes. Psychological Science 28: 482–93.

| Task | Stimuli | Emotional Cues | Emotional Expression | Basis of Accuracy ³ | Choice Options |
|---|---|---|---|---|---|
| RMET | Static pictures | Eyes (nonverbal) | Posed | Prototypical expression | Four (select one) |
| GERT | Dynamic videos | Voice, body and face (nonverbal) | Reenacted | Prototypical expression | Fourteen (select one) |
| EAT | Dynamic videos | Words, voice, facial and body movements (verbal and nonverbal) | Spontaneous | Targets’ emotions | Ten (select all applicable, rate each using 0–6 scale) |
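The EAT row above differs from the other two tasks in that accuracy is anchored to the targets’ own emotion ratings on a 0–6 scale rather than to a single correct label. A minimal sketch of how such a score could be computed is given below. The scoring rule (mean absolute difference between perceiver and target ratings), the function name, and the emotion labels are all assumptions made for illustration; this section does not state the scoring procedure actually used.

```python
def mean_absolute_difference(perceiver, target, scale=(0, 6)):
    """Hypothetical accuracy score: average absolute gap between a perceiver's
    ratings and the target's own ratings. Lower values mean closer agreement.

    Both arguments map emotion labels to ratings on the given scale.
    """
    lo, hi = scale
    if set(perceiver) != set(target):
        raise ValueError("perceiver and target must rate the same emotions")
    for ratings in (perceiver, target):
        for emotion, value in ratings.items():
            if not lo <= value <= hi:
                raise ValueError(f"{emotion!r} rating {value} is outside {lo}-{hi}")
    return sum(abs(perceiver[e] - target[e]) for e in target) / len(target)


# Illustrative ratings only (three made-up labels instead of the ten options).
target = {"sadness": 5, "anger": 2, "fear": 0}
perceiver = {"sadness": 4, "anger": 2, "fear": 3}
score = mean_absolute_difference(perceiver, target)
print(score)
```

Because the score is a difference measure, a perceiver who rates every emotion exactly as the target did would obtain 0, and larger values indicate poorer agreement.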

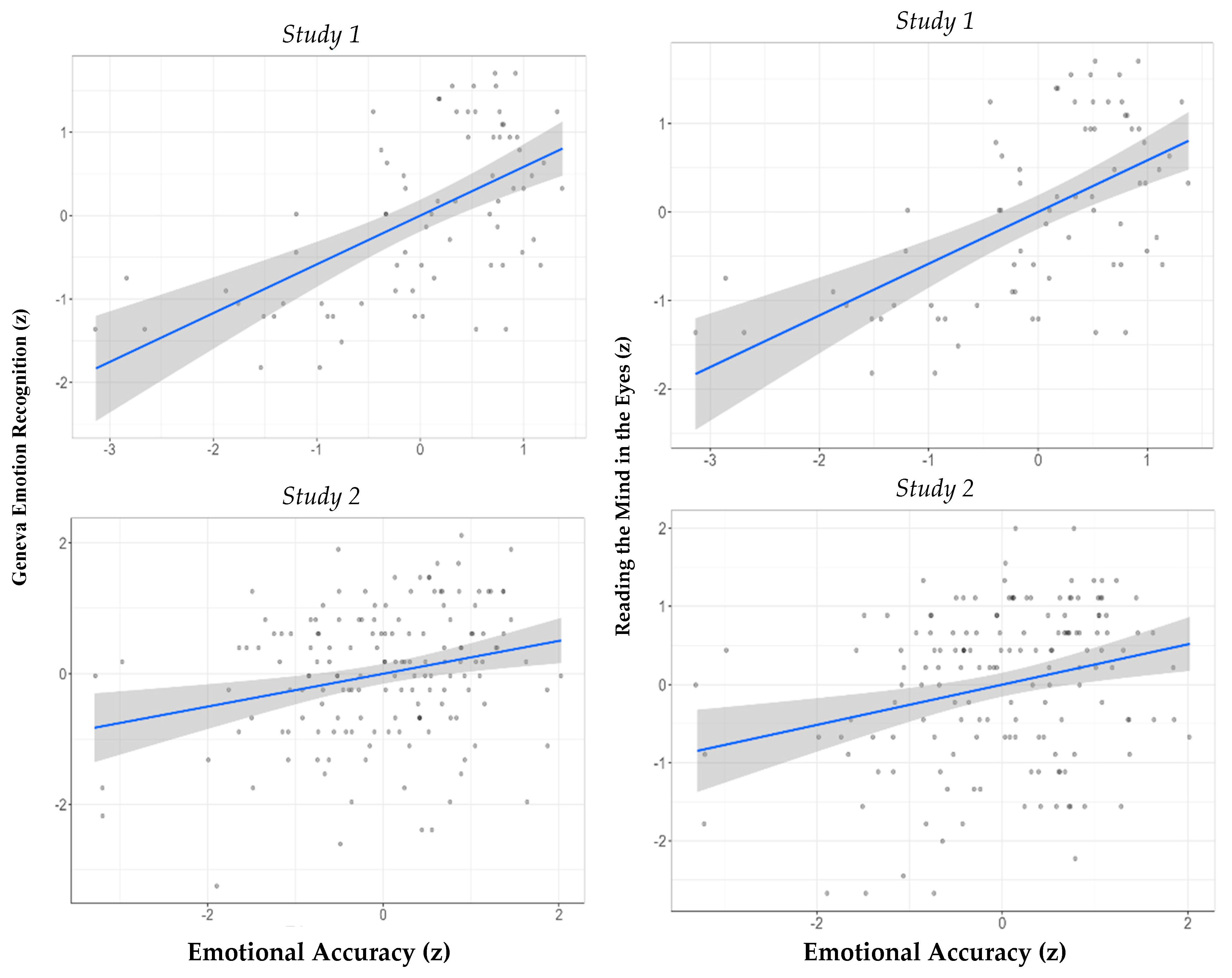

Study 1 (N = 74; USA, MTurk)

| | Pearson’s r: EAT | Pearson’s r: GERT | Pearson’s r: RMET | Spearman’s rho: EAT | Spearman’s rho: GERT | Spearman’s rho: RMET |
|---|---|---|---|---|---|---|
| GERT | 0.59 *** (0.41, 0.72) | | | 0.55 *** (0.37, 0.69) | | |
| RMET | 0.60 *** (0.43, 0.73) | 0.65 *** (0.49, 0.76) | | 0.55 *** (0.37, 0.69) | 0.65 *** (0.49, 0.77) | |
| Verbal IQ | 0.31 *** (0.09, 0.51) | 0.37 *** (0.15, 0.55) | 0.45 *** (0.24, 0.61) | 0.39 *** (0.18, 0.57) | 0.34 *** (0.12, 0.53) | 0.45 *** (0.25, 0.62) |

Study 2 (N = 157; UK, Prolific)

| | Pearson’s r: EAT | Pearson’s r: GERT | Pearson’s r: RMET | Spearman’s rho: EAT | Spearman’s rho: GERT | Spearman’s rho: RMET |
|---|---|---|---|---|---|---|
| GERT | 0.25 ** (0.10, 0.39) | | | 0.22 ** (0.07, 0.36) | | |
| RMET | 0.26 ** (0.11, 0.40) | 0.34 *** (0.19, 0.47) | | 0.25 ** (0.10, 0.39) | 0.25 ** (0.10, 0.39) | |
| Verbal IQ | 0.15 (−0.01, 0.30) | 0.33 *** (0.18, 0.46) | 0.29 *** (0.14, 0.43) | 0.04 (−0.12, 0.20) | 0.24 ** (0.09, 0.38) | 0.25 ** (0.10, 0.39) |
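The parenthesized pairs in the correlation tables read as interval estimates around each coefficient. The sketch below shows one common way to obtain such an interval for a Pearson correlation, the Fisher z-transformation. That the reported intervals are 95% confidence intervals, and that they were computed this way, are assumptions; the function name is hypothetical.

```python
import math


def fisher_ci(r, n, z_crit=1.96):
    """Approximate confidence interval for a Pearson correlation.

    Transforms r to Fisher's z, builds a normal-theory interval with
    standard error 1 / sqrt(n - 3), and transforms the bounds back.
    z_crit = 1.96 corresponds to a two-sided 95% interval.
    """
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


# Study 2, EAT with GERT: r = 0.25, N = 157 (values taken from the table).
lower, upper = fisher_ci(0.25, 157)
print(round(lower, 2), round(upper, 2))
```

For this Study 2 coefficient the sketch gives bounds close to the tabled (0.10, 0.39). Small discrepancies for other cells would be expected if the tabled values were computed from unrounded coefficients or by a different method such as bootstrapping.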

| Study | EAT | GERT | RMET |
|---|---|---|---|
| Study 1 | 4.77 a (1.21) | 3.85 b (1.85) | 4.16 b (1.59) |
| Study 2 | 4.09 a (1.41) | 4.07 a (1.53) | 3.78 b (1.56) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Israelashvili, J.; Pauw, L.S.; Sauter, D.A.; Fischer, A.H. Emotion Recognition from Realistic Dynamic Emotional Expressions Cohere with Established Emotion Recognition Tests: A Proof-of-Concept Validation of the Emotional Accuracy Test. J. Intell. 2021, 9, 25. https://doi.org/10.3390/jintelligence9020025