Abstract

The relative value of specific versus general cognitive abilities for the prediction of practical outcomes has been debated since the inception of modern intelligence theorizing and testing. This editorial introduces a special issue dedicated to exploring this ongoing “great debate”. It provides an overview of the debate, explains the motivation for the special issue and the two types of submissions solicited, and briefly illustrates how differing conceptualizations of cognitive abilities demand different analytic strategies for predicting criteria, and how these strategies can yield conflicting findings about the real-world importance of general versus specific abilities.

1. Introduction to the Special Issue

“To state one argument is not necessarily to be deaf to all others.”—Robert Louis Stevenson [1] (p. 11).

Measuring intelligence with the express purpose of predicting practical outcomes has played a major role in the discipline since its inception [2]. The apparent failure of sensory tests of intelligence to predict school grades led to their demise [3,4]. The Binet-Simon [5] was created with the practical goal of identifying students with developmental delays in order to track them into different schools as universal public education was instituted in France [6]. The Binet-Simon is considered the first “modern” intelligence test because it succeeded in fulfilling its purpose and, in doing so, served as a model for all the tests that followed it. Hugo Münsterberg, a pioneer of industrial/organizational psychology [7], used, and advocated the use of, intelligence tests for personnel selection [8,9,10]. Historically, intelligence testing comprised a major branch of applied psychology because it was widely practiced in schools, the workplace and the military [11,12,13,14], as it is today [15,16,17,18].

For as long as psychometric tests have been used to chart the basic structure of intelligence and predict criteria outside the laboratory (e.g., grades, job performance), there has been tension between emphasizing general and specific abilities [19,20,21]. Insofar as the basic structure of individual differences in cognitive abilities is concerned, these tensions have largely been resolved by integrating specific and general abilities into hierarchical models. In the applied realm, however, debate remains.

This state of affairs may seem surprising, as from the 1980s to the early 2000s, research findings consistently demonstrated that specific abilities were relatively useless for predicting important real-world outcomes (e.g., grades, job performance) once g was accounted for [22]. This point of view is perhaps best characterized by the moniker “Not Much More Than g” (NMMg) [23,24,25,26]. Nonetheless, even during the high-water mark of this point of view, there were occasional dissenters who explicitly questioned it [27,28,29] or conducted research demonstrating that sometimes specific abilities did account for useful incremental validity beyond g [30,31,32,33]. Furthermore, when surveys explicitly asked about the relative value of general and specific abilities for applied prediction, substantial disagreement was revealed [34,35]. Since the apogee of NMMg, there has been a growing revival of using specific abilities to predict applied criteria (e.g., [20,36,37,38,39,40,41,42,43,44,45,46,47,48,49]). Recently, there have been calls to investigate the applied potential of specific abilities (e.g., [50,51,52,53,54,55,56,57]), and personnel selection researchers are actively reexamining whether specific abilities have value beyond g for predicting performance [58]. The research literature supporting NMMg cannot be denied, however, and the point of view it represents retains its allure for interpreting many practical findings (e.g., [59,60]). The purpose of this special issue is to continue the “great debate” about the relative practical value of measures of specific and general abilities.

We solicited two types of contributions for the special issue. The first type of invitation was for nonempirical theoretical, critical or integrative perspectives on the issue of general versus specific abilities for predicting real-world outcomes. The second type was empirical and inspired by Bliese, Halverson and Schriesheim’s [61] approach: We provided a covariance matrix and the raw data for three intelligence measures from a Thurstonian test battery and school grades in a sample of German adolescents. Contributors were invited to analyze the data as they saw fit, with the overarching purpose of addressing three major questions:

- Do the data present evidence for the usefulness of specific abilities?

- How important are specific abilities relative to general abilities for predicting grades?

- To what degree could (or should) researchers use different prediction models for each of the different outcome criteria?

In asking contributors to analyze the same data according to their own theoretical and practical viewpoint(s), we hoped to draw out assumptions and perspectives that might otherwise remain implicit.

2. Data Provided

We provided a covariance matrix of the relationships between scores on three intelligence tests from a Thurstonian test battery and school grades in a sample of 219 German adolescents and young adults who were enrolled in a German middle, high or vocational school. The data were gathered directly at the schools or at a local fair for young adults interested in vocational education. A portion of these data were the basis for analyses published in Lang and Lang [62].

The intelligence tests came from the Wilde Intelligence Test, a test rooted in Thurstone’s work in the 1940s that was developed in Germany in the 1950s, originally to select civil service employees. The test is widely used in Europe owing to its long history and is now available in a revised version. The most recent iteration of this battery [63] includes a recommendation for a short form that consists of the three tests that generated the scores included in our data. The first test (“unfolding”) measures figural reasoning, the second consists of a relatively complex number-series task (and thus also measures reasoning), and the third comprises verbal analogies. All three tests are speeded, meaning that missing responses are somewhat related to performance on the tests.

Grades in Germany are commonly rated on a scale ranging from very good (6) to poor (1). Poor is rarely used in the system and sometimes combined with insufficient (2), and thus rarely appears in the data supplied. The scale is roughly equivalent to the American grading system of A to F. The data include participants’ sex, age, and grades in Math, German, English and Sports.

We originally provided the data as a covariance matrix and aggregated raw data file but also shared item data with interested authors. We view them as fairly typical of intelligence data gathered in school and other applied settings.

3. Theoretical Motivation

We judged it particularly important to draw out contributors’ theoretical and practical assumptions because different conceptualizations of intelligence require different approaches to data analysis in order to appropriately model the relations between abilities and criteria. Alternatives to models of intelligence rooted in Spearman’s original theory have existed almost since the inception of that theory (e.g., [64,65,66,67,68]), but have arisen with seemingly increasing regularity in the last 15 years (e.g., [69,70,71,72,73,74]). Unlike some other alternatives (e.g., [75,76,77,78,79]), most of these models do not cast doubt on the very existence of a general psychometric factor, but they do differ in its interpretation. These theories intrinsically offer differing outlooks on how g relates to specific abilities and, by extension, how to model relationships among g, specific abilities and practical outcomes. We illustrate this point by briefly outlining how the two hierarchical factor-analytic models most widely used for studying abilities at different strata [73] demand different analytic strategies to appropriately examine how those abilities relate to external criteria.

The first type of hierarchical conceptualization is the higher-order (HO) model. In this family of models, the pervasive positive intercorrelations among scores on tests of specific abilities are taken to imply a “higher-order” latent trait that accounts for them. Although HO models (e.g., [80,81]) differ in the number and composition of their ability strata, they ultimately posit a general factor that sits atop their hierarchies. Thus, although HO models acknowledge the existence of specific abilities, they also treat g as a construct that accounts for much of the variance in those abilities and, by extension, whatever outcomes those narrower abilities are predictive of. By virtue of the fact that g resides at the apex of the specific ability hierarchies in these models, those abilities are ultimately “subordinate” to it [82].

A second family of hierarchical models consists of the bifactor or nested-factor (NF) models [30]. Typically, in this class of models a general latent factor associated with all observed variables is specified, along with narrower latent factors associated with only a subset of observed variables (see Reise [83] for more details). In the context of cognitive abilities assessment, this general latent factor is usually treated as representing g, and the narrower factors interpreted as representing specific abilities, depending upon the content of the test battery and the data analytic procedures implemented (e.g., [84]). As a consequence, g and specific ability factors are treated as uncorrelated in NF models. Unlike in HO models, these factors are not conceptualized as existing at different “levels”, but instead are treated as differing along a continuum of generality. In the NF family of models, the defining characteristic of the abilities is breadth, rather than subordination [82].
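As a compact sketch of the contrast between the two families, their structural assumptions can be written as follows. The notation is an illustrative assumption and is not taken from the cited sources: $x_j$ is the score on test $j$, $s_k$ is the specific factor to which test $j$ belongs, $g$ is the general factor, $\lambda_j$, $\gamma_k$, $a_j$ and $b_j$ are loadings, and $\varepsilon_j$ and $\zeta_k$ are residual terms.

```latex
% Higher-order (HO) model: g reaches test j only through the specific factor s_k
x_j = \lambda_j s_k + \varepsilon_j, \qquad s_k = \gamma_k g + \zeta_k
\;\Longrightarrow\;
x_j = \lambda_j \gamma_k \, g + \lambda_j \zeta_k + \varepsilon_j

% Nested-factor (NF) / bifactor model: g and s_k both load on test j directly
x_j = a_j \, g + b_j \, s_k + \varepsilon_j, \qquad \operatorname{Cov}(g, s_k) = 0
```

In the HO form, the part of a specific ability that is independent of g is carried by the disturbance $\zeta_k$; in the NF form, $s_k$ is defined from the outset as orthogonal to g.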

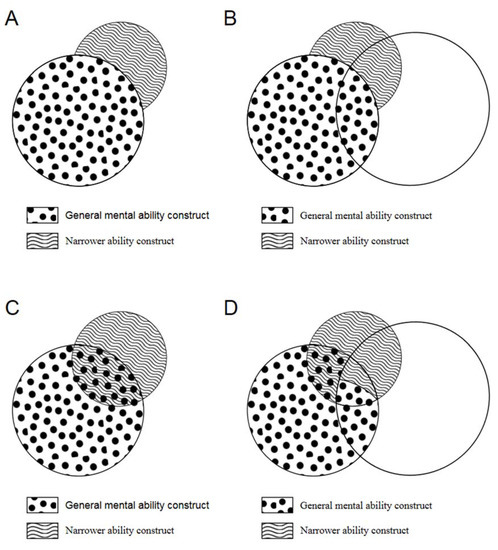

Lang et al. [20] illustrated that whether an HO or NF model is chosen to conceptualize individual differences in intelligence has important implications for analyzing the proportional relevance of general and specific abilities for predicting outcomes. When an HO model is selected, variance that is shared among g, specific abilities and a criterion will be attributed to g, as g is treated as a latent construct that accounts for variance in those specific abilities. As a consequence, only variance that is not shared between g and specific abilities is treated as a unique predictor of the criterion. This state of affairs is depicted in terms of predicting job performance with g and a single specific ability in panels A and B of Figure 1. In these scenarios, a commonly adopted approach is hierarchical regression, with g scores entered in the first step and specific ability scores in the second. In these situations, specific abilities typically account for a small amount of variance in the criterion beyond g [19,20].
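The two-step logic described above can be sketched directly from a correlation matrix. This is a minimal illustration, not the analysis of any cited study: the correlation values are hypothetical (they are not the special-issue data), and `r_squared` is an illustrative helper name.

```python
import numpy as np

def r_squared(R, predictors, criterion):
    """Squared multiple correlation of `criterion` on `predictors`,
    computed from a correlation matrix R."""
    Rxx = R[np.ix_(predictors, predictors)]   # predictor intercorrelations
    rxy = R[predictors, criterion]            # predictor-criterion correlations
    beta = np.linalg.solve(Rxx, rxy)          # standardized regression weights
    return float(rxy @ beta)

# Hypothetical correlations (assumed values for illustration only).
# Variable order: g score, specific-ability score, criterion (e.g., a grade).
R = np.array([
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
])
G, S, Y = 0, 1, 2

r2_step1 = r_squared(R, [G], Y)       # step 1: g only
r2_step2 = r_squared(R, [G, S], Y)    # step 2: g plus the specific ability
delta_r2 = r2_step2 - r2_step1        # increment credited to the specific ability

print(f"R2 (g only)        = {r2_step1:.4f}")
print(f"R2 (g + specific)  = {r2_step2:.4f}")
print(f"Delta R2 (specific) = {delta_r2:.4f}")
```

Because g is entered first, all criterion variance that g shares with the specific ability is credited to g, so the increment for the specific ability is small even though its zero-order correlation with the criterion is .40.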

Figure 1. This figure depicts a simplified scenario with a single general mental ability (GMA) measure and a single narrow cognitive ability measure. As shown in Panel A, higher-order models attribute all shared variance between the GMA measure and the narrower cognitive ability measure to GMA. Panel B depicts the consequence of this type of conceptualization: Criterion variance in job performance jointly explained by the GMA measure and the narrower cognitive ability measure is solely attributed to GMA. Nested-factors models, in contrast, do not assume that the variance shared by the GMA measure and narrower cognitive ability measure is wholly attributable to GMA and instead distribute the variance across the two constructs (Panel C). Accordingly, as illustrated in Panel D, criterion variance in job performance jointly explained by the GMA measure and the narrower cognitive ability measure may be attributable to either the GMA construct or the narrower cognitive ability construct. Adapted from Lang et al. [20] (p. 599).

When an NF model is selected to conceptualize individual differences in intelligence, g and specific abilities are treated as uncorrelated, necessitating a different analytic strategy than the traditional incremental validity approach when predicting practical criteria. Depending on the composition of the test(s) being used, suitable data-analytic approaches include (a) explicitly using a bifactor method to estimate g and specific abilities and then predicting criteria from the resultant latent variables [33]; (b) extracting g from test scores first and then using the residuals, which represent specific abilities, to predict criteria [37]; and (c) using relative-importance analyses to ensure that variance shared among g, specific abilities and the criterion is not automatically attributed to g [20,44,47]. This final strategy is depicted in panels C and D of Figure 1. When an NF perspective is adopted, and the analyses are properly aligned with it, results often show that specific abilities can account for substantial variance in criteria beyond g and are sometimes even more important predictors than g [19].
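One widely used form of relative-importance analysis is general dominance analysis, which averages each predictor's incremental R² over every possible order of entry. The sketch below uses it as a stand-in for the relative-importance strategy; the cited studies may have used other variants (for example, relative weights). The correlation values are hypothetical, and the function names are illustrative.

```python
from itertools import permutations

import numpy as np

def r_squared(R, predictors, criterion):
    """Squared multiple correlation from a correlation matrix R."""
    if not predictors:
        return 0.0                            # no predictors explain no variance
    Rxx = R[np.ix_(predictors, predictors)]
    rxy = R[predictors, criterion]
    return float(rxy @ np.linalg.solve(Rxx, rxy))

def general_dominance(R, predictors, criterion):
    """Average incremental R2 of each predictor across all entry orders."""
    orders = list(permutations(predictors))
    weights = {p: 0.0 for p in predictors}
    for order in orders:
        entered = []
        for p in order:
            before = r_squared(R, entered, criterion)
            entered.append(p)
            after = r_squared(R, entered, criterion)
            weights[p] += (after - before) / len(orders)
    return weights

# Hypothetical correlations (assumed values for illustration only).
# Variable order: g score, specific-ability score, criterion.
R = np.array([
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
])
G, S, Y = 0, 1, 2

weights = general_dominance(R, [G, S], Y)
total_r2 = r_squared(R, [G, S], Y)

print(f"g weight        = {weights[G]:.4f}")
print(f"specific weight = {weights[S]:.4f}")
print(f"total R2        = {total_r2:.4f}")
```

The weights sum to the full-model R², and no predictor is privileged by order of entry. With these assumed correlations, the specific ability is credited with roughly a third of the explained variance, whereas entering it after g in a hierarchical regression would credit it with far less.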

The HO and NF conceptualizations are in many ways only a starting point for thinking about how to model relations among abilities of differing generality and practical criteria. Other approaches in (or related to) the factor-analytic tradition that can be used to explore these associations include the hierarchies of factor solutions method [73,85], behavior domain theory [86], and formative measurement models [87]. Other treatments of intelligence that reside outside the factor analytic tradition (e.g., [88,89]) and treat g as an emergent phenomenon represent new challenges (and opportunities) for studying the relative importance of different strata of abilities for predicting practical outcomes. The existence of these many possibilities for modeling differences in human cognitive abilities underscores the need for researchers and practitioners to select their analytic techniques carefully, in order to ensure those techniques are properly aligned with the model of intelligence being invoked.

4. Editorial Note on the Contributions

The articles in this special issue were solicited from scholars who have demonstrated expertise in the investigation of not only human intelligence but also cognitive abilities of differing breadth and their associations with applied criteria. Consequently, we believe this collection of papers both provides an excellent overview of the ongoing debate about the relative practical importance of general and specific abilities, and substantially advances this debate. As editors, we have reviewed these contributions through multiple iterations of revision, and in all cases the authors were highly responsive to our feedback. We are proud to be the editors of a special issue that consists of such outstanding contributions to the field.

Author Contributions

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stevenson, R.L. An Apology for Idlers and Other Essays; Thomas B. Mosher: Portland, ME, USA, 1916.

- Danziger, K. Naming the Mind: How Psychology Found Its Language; Sage: London, UK, 1997.

- Sharp, S.E. Individual psychology: A study in psychological method. Am. J. Psychol. 1899, 10, 329–391.

- Wissler, C. The correlation of mental and physical tests. Psychol. Rev. 1901, 3, i–62.

- Binet, A.; Simon, T. New methods for the diagnosis of the intellectual level of subnormals. L’Annee Psychol. 1905, 12, 191–244.

- Schneider, W.H. After Binet: French intelligence testing, 1900–1950. J. Hist. Behav. Sci. 1992, 28, 111–132.

- Benjamin, L.T. Hugo Münsterberg: Portrait of an applied psychologist. In Portraits of Pioneers in Psychology; Kimble, G.A., Wertheimer, M., Eds.; Erlbaum: Mahwah, NJ, USA, 2000; Volume 4, pp. 113–129.

- Kell, H.J.; Lubinski, D. Spatial ability: A neglected talent in educational and occupational settings. Roeper Rev. 2013, 35, 219–230.

- Kevles, D.J. Testing the Army’s intelligence: Psychologists and the military in World War I. J. Am. Hist. 1968, 55, 565–581.

- Moskowitz, M.J. Hugo Münsterberg: A study in the history of applied psychology. Am. Psychol. 1977, 32, 824–842.

- Bingham, W.V. On the possibility of an applied psychology. Psychol. Rev. 1923, 30, 289–305.

- Katzell, R.A.; Austin, J.T. From then to now: The development of industrial-organizational psychology in the United States. J. Appl. Psychol. 1992, 77, 803–835.

- Sackett, P.R.; Lievens, F.; Van Iddekinge, C.H.; Kuncel, N.R. Individual differences and their measurement: A review of 100 years of research. J. Appl. Psychol. 2017, 102, 254–273.

- Terman, L.M. The status of applied psychology in the United States. J. Appl. Psychol. 1921, 5, 1–4.

- Gardner, H. Who owns intelligence? Atl. Mon. 1999, 283, 67–76.

- Gardner, H.E. Intelligence Reframed: Multiple Intelligences for the 21st Century; Hachette UK: London, UK, 2000.

- Sternberg, R.J. (Ed.) North American approaches to intelligence. In International Handbook of Intelligence; Cambridge University Press: Cambridge, UK, 2004; pp. 411–444.

- Sternberg, R.J. Testing: For better and worse. Phi Delta Kappan 2016, 98, 66–71.

- Kell, H.J.; Lang, J.W.B. Specific abilities in the workplace: More important than g? J. Intell. 2017, 5, 13.

- Lang, J.W.B.; Kersting, M.; Hülsheger, U.R.; Lang, J. General mental ability, narrower cognitive abilities, and job performance: The perspective of the nested-factors model of cognitive abilities. Pers. Psychol. 2010, 63, 595–640.

- Thorndike, R.M.; Lohman, D.F. A Century of Ability Testing; Riverside: Chicago, IL, USA, 1990.

- Murphy, K. What can we learn from “Not Much More than g”? J. Intell. 2017, 5, 8.

- Olea, M.M.; Ree, M.J. Predicting pilot and navigator criteria: Not much more than g. J. Appl. Psychol. 1994, 79, 845–851.

- Ree, M.J.; Earles, J.A. Predicting training success: Not much more than g. Pers. Psychol. 1991, 44, 321–332.

- Ree, M.J.; Earles, J.A. Predicting occupational criteria: Not much more than g. In Human Abilities: Their Nature and Measurement; Dennis, I., Tapsfield, P., Eds.; Erlbaum: Mahwah, NJ, USA, 1996; pp. 151–165.

- Ree, M.J.; Earles, J.A.; Teachout, M.S. Predicting job performance: Not much more than g. J. Appl. Psychol. 1994, 79, 518–524.

- Bowman, D.B.; Markham, P.M.; Roberts, R.D. Expanding the frontier of human cognitive abilities: So much more than (plain) g! Learn. Individ. Differ. 2002, 13, 127–158.

- Murphy, K.R. Individual differences and behavior in organizations: Much more than g. In Individual Differences and Behavior in Organizations; Murphy, K., Ed.; Jossey-Bass: San Francisco, CA, USA, 1996; pp. 3–30.

- Stankov, L. g: A diminutive general. In The General Factor of Intelligence: How General Is It? Sternberg, R.J., Grigorenko, E.L., Eds.; Erlbaum: Mahwah, NJ, USA, 2002; pp. 19–37.

- Gustafsson, J.-E.; Balke, G. General and specific abilities as predictors of school achievement. Multivar. Behav. Res. 1993, 28, 407–434.

- LePine, J.A.; Hollenbeck, J.R.; Ilgen, D.R.; Hedlund, J. Effects of individual differences on the performance of hierarchical decision-making teams: Much more than g. J. Appl. Psychol. 1997, 82, 803–811.

- Levine, E.L.; Spector, P.E.; Menon, S.; Narayanan, L. Validity generalization for cognitive, psychomotor, and perceptual tests for craft jobs in the utility industry. Hum. Perform. 1996, 9, 1–22.

- Reeve, C.L. Differential ability antecedents of general and specific dimensions of declarative knowledge: More than g. Intelligence 2004, 32, 621–652.

- Murphy, K.R.; Cronin, B.E.; Tam, A.P. Controversy and consensus regarding the use of cognitive ability testing in organizations. J. Appl. Psychol. 2003, 88, 660–671.

- Reeve, C.L.; Charles, J.E. Survey of opinions on the primacy of g and social consequences of ability testing: A comparison of expert and non-expert views. Intelligence 2008, 36, 681–688.

- Coyle, T.R. Ability tilt for whites and blacks: Support for differentiation and investment theories. Intelligence 2016, 56, 28–34.

- Coyle, T.R. Non-g residuals of group factors predict ability tilt, college majors, and jobs: A non-g nexus. Intelligence 2018, 67, 19–25.

- Coyle, T.R.; Pillow, D.R. SAT and ACT predict college GPA after removing g. Intelligence 2008, 36, 719–729.

- Coyle, T.R.; Purcell, J.M.; Snyder, A.C.; Richmond, M.C. Ability tilt on the SAT and ACT predicts specific abilities and college majors. Intelligence 2014, 46, 18–24.

- Coyle, T.R.; Snyder, A.C.; Richmond, M.C. Sex differences in ability tilt: Support for investment theory. Intelligence 2015, 50, 209–220.

- Coyle, T.R.; Snyder, A.C.; Richmond, M.C.; Little, M. SAT non-g residuals predict course specific GPAs: Support for investment theory. Intelligence 2015, 51, 57–66.

- Kell, H.J.; Lubinski, D.; Benbow, C.P. Who rises to the top? Early indicators. Psychol. Sci. 2013, 24, 648–659.

- Kell, H.J.; Lubinski, D.; Benbow, C.P.; Steiger, J.H. Creativity and technical innovation: Spatial ability’s unique role. Psychol. Sci. 2013, 24, 1831–1836.

- Lang, J.W.B.; Bliese, P.D. I–O psychology and progressive research programs on intelligence. Ind. Organ. Psychol. 2012, 5, 161–166.

- Makel, M.C.; Kell, H.J.; Lubinski, D.; Putallaz, M.; Benbow, C.P. When lightning strikes twice: Profoundly gifted, profoundly accomplished. Psychol. Sci. 2016, 27, 1004–1018.

- Park, G.; Lubinski, D.; Benbow, C.P. Contrasting intellectual patterns predict creativity in the arts and sciences: Tracking intellectually precocious youth over 25 years. Psychol. Sci. 2007, 18, 948–952.

- Stanhope, D.S.; Surface, E.A. Examining the incremental validity and relative importance of specific cognitive abilities in a training context. J. Pers. Psychol. 2014, 13, 146–156.

- Wai, J.; Lubinski, D.; Benbow, C.P. Spatial ability for STEM domains: Aligning over 50 years of cumulative psychological knowledge solidifies its importance. J. Educ. Psychol. 2009, 101, 817–835.

- Ziegler, M.; Dietl, E.; Danay, E.; Vogel, M.; Bühner, M. Predicting training success with general mental ability, specific ability tests, and (Un) structured interviews: A meta-analysis with unique samples. Int. J. Sel. Assess. 2011, 19, 170–182.

- Lievens, F.; Reeve, C.L. Where I–O psychology should really (re)start its investigation of intelligence constructs and their measurement. Ind. Organ. Psychol. 2012, 5, 153–158.

- Coyle, T.R. Predictive validity of non-g residuals of tests: More than g. J. Intell. 2014, 2, 21–25.

- Flynn, J.R. Reflections about Intelligence over 40 Years. Intelligence 2018. Available online: https://www.sciencedirect.com/science/article/pii/S0160289618300904?dgcid=raven_sd_aip_email (accessed on 31 August 2018).

- Reeve, C.L.; Scherbaum, C.; Goldstein, H. Manifestations of intelligence: Expanding the measurement space to reconsider specific cognitive abilities. Hum. Resour. Manag. Rev. 2015, 25, 28–37.

- Ritchie, S.J.; Bates, T.C.; Deary, I.J. Is education associated with improvements in general cognitive ability, or in specific skills? Devel. Psychol. 2015, 51, 573–582.

- Schneider, W.J.; Newman, D.A. Intelligence is multidimensional: Theoretical review and implications of specific cognitive abilities. Hum. Resour. Manag. Rev. 2015, 25, 12–27.

- Krumm, S.; Schmidt-Atzert, L.; Lipnevich, A.A. Insights beyond g: Specific cognitive abilities at work. J. Pers. Psychol. 2014, 13, 117–122.

- Wee, S.; Newman, D.A.; Song, Q.C. More than g-factors: Second-stratum factors should not be ignored. Ind. Organ. Psychol. 2015, 8, 482–488.

- Ryan, A.M.; Ployhart, R.E. A century of selection. Annu. Rev. Psychol. 2014, 65, 693–717.

- Gottfredson, L.S. A g theorist on why Kovacs and Conway’s Process Overlap Theory amplifies, not opposes, g theory. Psychol. Inq. 2016, 27, 210–217.

- Ree, M.J.; Carretta, T.R.; Teachout, M.S. Pervasiveness of dominant general factors in organizational measurement. Ind. Organ. Psychol. 2015, 8, 409–427.

- Bliese, P.D.; Halverson, R.R.; Schriesheim, C.A. Benchmarking multilevel methods in leadership: The articles, the model, and the data set. Leadersh. Quart. 2002, 13, 3–14.

- Lang, J.W.B.; Lang, J. Priming competence diminishes the link between cognitive test anxiety and test performance: Implications for the interpretation of test scores. Psychol. Sci. 2010, 21, 811–819.

- Kersting, M.; Althoff, K.; Jäger, A.O. Wilde-Intelligenz-Test 2: WIT-2; Hogrefe, Verlag für Psychologie: Göttingen, Germany, 2008.

- Brown, W. Some experimental results in the correlation of mental abilities. Br. J. Psychol. 1910, 3, 296–322.

- Brown, W.; Thomson, G.H. The Essentials of Mental Measurement; Cambridge University Press: Cambridge, UK, 1921.

- Thorndike, E.L.; Lay, W.; Dean, P.R. The relation of accuracy in sensory discrimination to general intelligence. Am. J. Psychol. 1909, 20, 364–369.

- Tryon, R.C. A theory of psychological components—An alternative to “mathematical factors”. Psychol. Rev. 1935, 42, 425–445.

- Tryon, R.C. Reliability and behavior domain validity: Reformulation and historical critique. Psychol. Bull. 1957, 54, 229–249.

- Bartholomew, D.J.; Allerhand, M.; Deary, I.J. Measuring mental capacity: Thomson’s Bonds model and Spearman’s g-model compared. Intelligence 2013, 41, 222–233.

- Dickens, W.T. What Is g? Available online: https://www.brookings.edu/wp-content/uploads/2016/06/20070503.pdf (accessed on 2 May 2018).

- Kievit, R.A.; Davis, S.W.; Griffiths, J.; Correia, M.M.; Henson, R.N. A watershed model of individual differences in fluid intelligence. Neuropsychologia 2016, 91, 186–198.

- Kovacs, K.; Conway, A.R. Process overlap theory: A unified account of the general factor of intelligence. Psychol. Inq. 2016, 27, 151–177.

- Lang, J.W.B.; Kersting, M.; Beauducel, A. Hierarchies of factor solutions in the intelligence domain: Applying methodology from personality psychology to gain insights into the nature of intelligence. Learn. Individ. Differ. 2016, 47, 37–50.

- Van Der Maas, H.L.; Dolan, C.V.; Grasman, R.P.; Wicherts, J.M.; Huizenga, H.M.; Raijmakers, M.E. A dynamical model of general intelligence: The positive manifold of intelligence by mutualism. Psychol. Rev. 2006, 113, 842–861.

- Campbell, D.T.; Fiske, D.W. Convergent and discriminant validation by the multitrait-multimethod matrix. Psychol. Bull. 1959, 56, 81–105.

- Gould, S.J. The Mismeasure of Man, 2nd ed.; W. W. Norton & Company: New York, NY, USA, 1996.

- Howe, M.J. Separate skills or general intelligence: The autonomy of human abilities. Br. J. Educ. Psychol. 1989, 59, 351–360.

- Schlinger, H.D. The myth of intelligence. Psychol. Record 2003, 53, 15–32.

- Schönemann, P.H. Jensen’s g: Outmoded theories and unconquered frontiers. In Arthur Jensen: Consensus and Controversy; Modgil, S., Modgil, C., Eds.; The Falmer Press: New York, NY, USA, 1987; pp. 313–328.

- Johnson, W.; Bouchard, T.J. The structure of human intelligence: It is verbal, perceptual, and image rotation (VPR), not fluid and crystallized. Intelligence 2005, 33, 393–416.

- McGrew, K.S. CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence 2009, 37, 1–10.

- Humphreys, L.G. The primary mental ability. In Intelligence and Learning; Friedman, M.P., Das, J.R., O’Connor, N., Eds.; Plenum: New York, NY, USA, 1981; pp. 87–102.

- Reise, S.P. The rediscovery of bifactor measurement models. Multivar. Behav. Res. 2012, 47, 667–696.

- Murray, A.L.; Johnson, W. The limitations of model fit in comparing the bi-factor versus higher-order models of human cognitive ability structure. Intelligence 2013, 41, 407–422.

- Goldberg, L.R. Doing it all bass-ackwards: The development of hierarchical factor structures from the top down. J. Res. Personal. 2006, 40, 347–358.

- McDonald, R.P. Behavior domains in theory and in practice. Alta. J. Educ. Res. 2003, 49, 212–230.

- Bollen, K.; Lennox, R. Conventional wisdom on measurement: A structural equation perspective. Psychol. Bull. 1991, 110, 305–314.

- Kievit, R.A.; Lindenberger, U.; Goodyer, I.M.; Jones, P.B.; Fonagy, P.; Bullmore, E.T.; Dolan, R.J. Mutualistic coupling between vocabulary and reasoning supports cognitive development during late adolescence and early adulthood. Psychol. Sci. 2017, 28, 1419–1431.

- Van Der Maas, H.L.; Kan, K.J.; Marsman, M.; Stevenson, C.E. Network models for cognitive development and intelligence. J. Intell. 2017, 5, 16.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).